CNN-Based Automatic Detection of Beachlines Using UAVs for Enhanced Waste Management in Tailings Storage Facilities

Abstract

1. Introduction

- Maintaining a minimum beach width of 200 m adjacent to the dams with a slope towards the reservoir;

- Ensuring a 0.5 m elevation difference between the dam crest and the beach surface, as well as its uniform growth;

- Limiting the formation of one dam section to no longer than three weeks;

- Controlling the sequence of beach formation for every other section of the TSF.
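The first three requirements above are numeric, so they can be expressed as a simple check. The following is a minimal sketch, not the operator's actual procedure: the `SectionStatus` record, its field names, and the reading of the 0.5 m elevation difference as a minimum are all assumptions made for illustration. The fourth requirement (the formation sequence across sections) is not modelled.

```python
from dataclasses import dataclass
from typing import List

# Thresholds taken from the list above.
MIN_BEACH_WIDTH_M = 200.0
CREST_TO_BEACH_M = 0.5      # assumption: interpreted here as a minimum difference
MAX_FORMATION_DAYS = 21     # three weeks


@dataclass
class SectionStatus:
    """Hypothetical per-section measurements (names are illustrative)."""
    beach_width_m: float
    crest_to_beach_m: float
    formation_days: int


def violated_rules(status: SectionStatus) -> List[str]:
    """Return a description of every numeric rule the section fails."""
    issues = []
    if status.beach_width_m < MIN_BEACH_WIDTH_M:
        issues.append("beach width below 200 m")
    if status.crest_to_beach_m < CREST_TO_BEACH_M:
        issues.append("crest-to-beach elevation difference below 0.5 m")
    if status.formation_days > MAX_FORMATION_DAYS:
        issues.append("section formation longer than three weeks")
    return issues


ok_section = SectionStatus(beach_width_m=250.0, crest_to_beach_m=0.6, formation_days=14)
bad_section = SectionStatus(beach_width_m=150.0, crest_to_beach_m=0.3, formation_days=30)
print(violated_rules(ok_section))
print(violated_rules(bad_section))
```

In such a setup, the beach width would be the quantity supplied by the automatic beachline detection, while the other two values would come from surveying and scheduling records.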

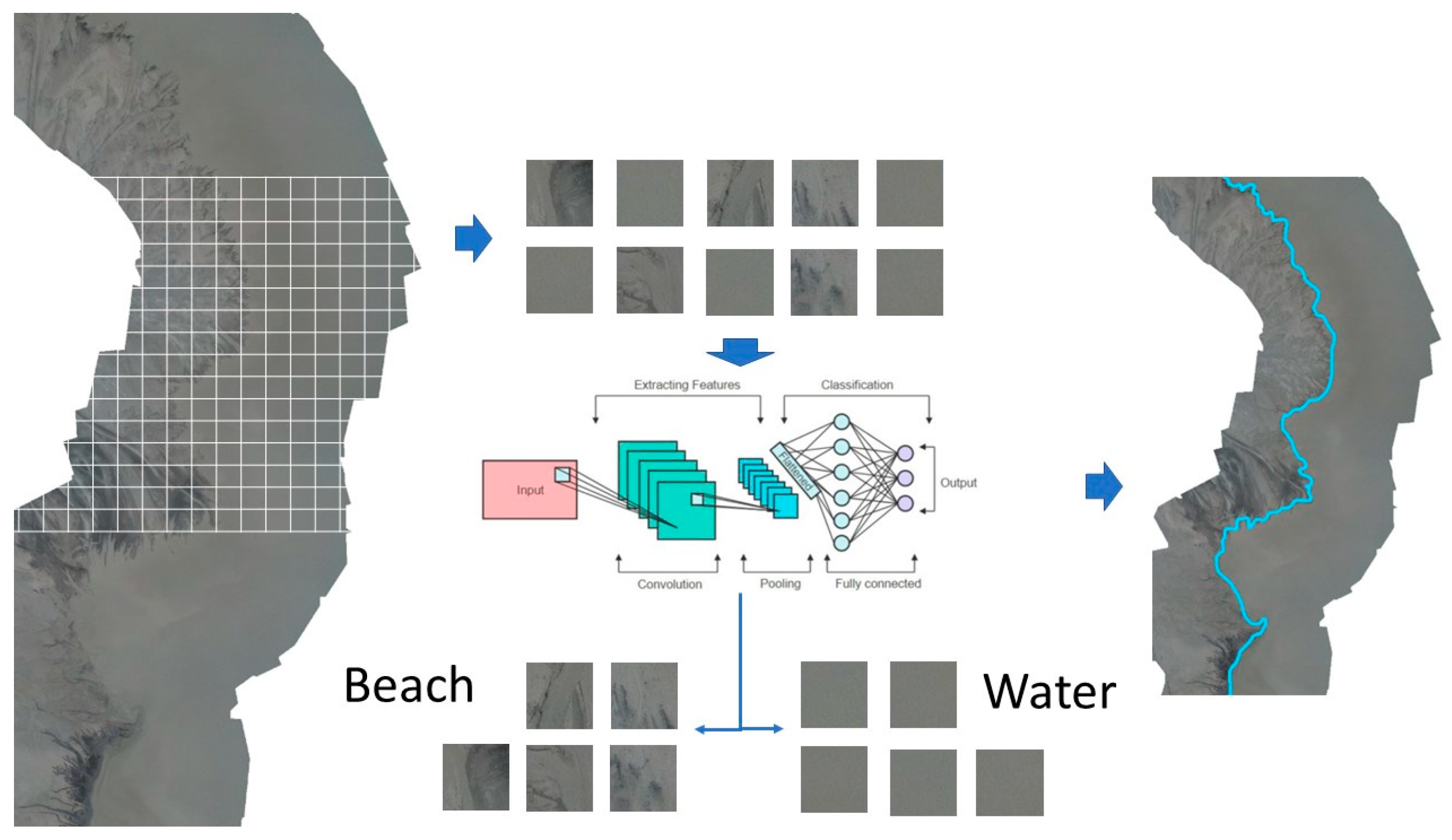

2. Materials and Methods

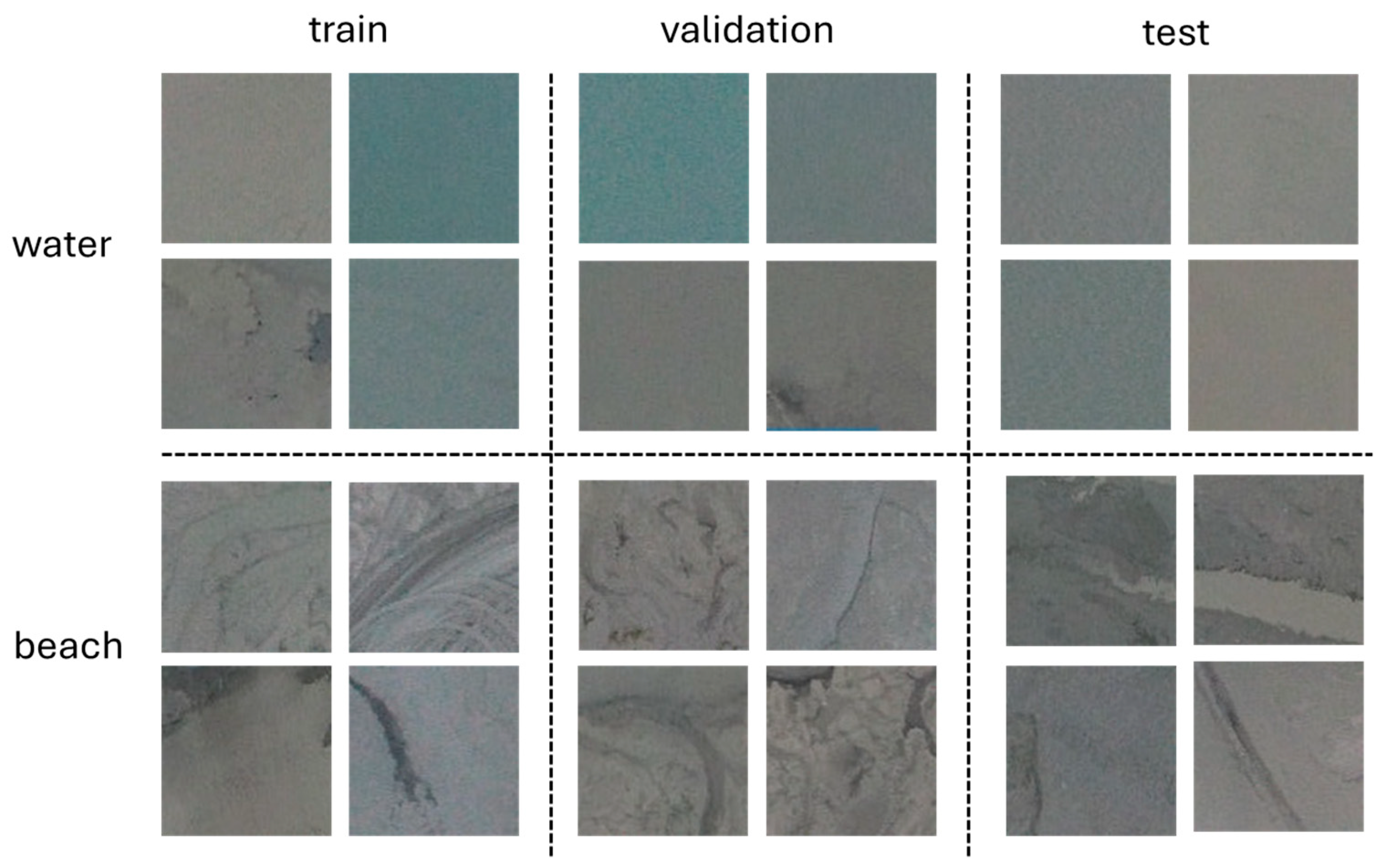

Data Collection and Preparation

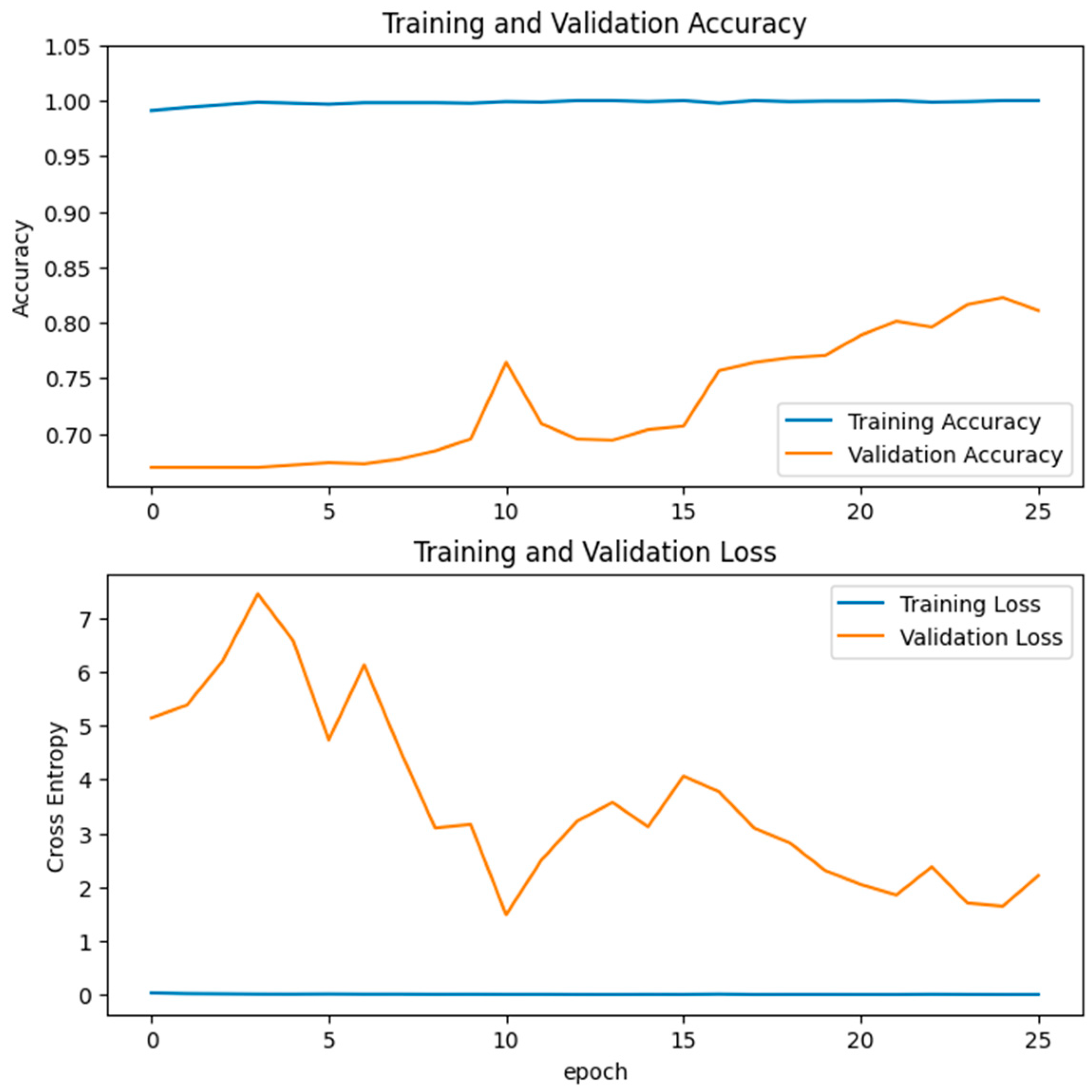

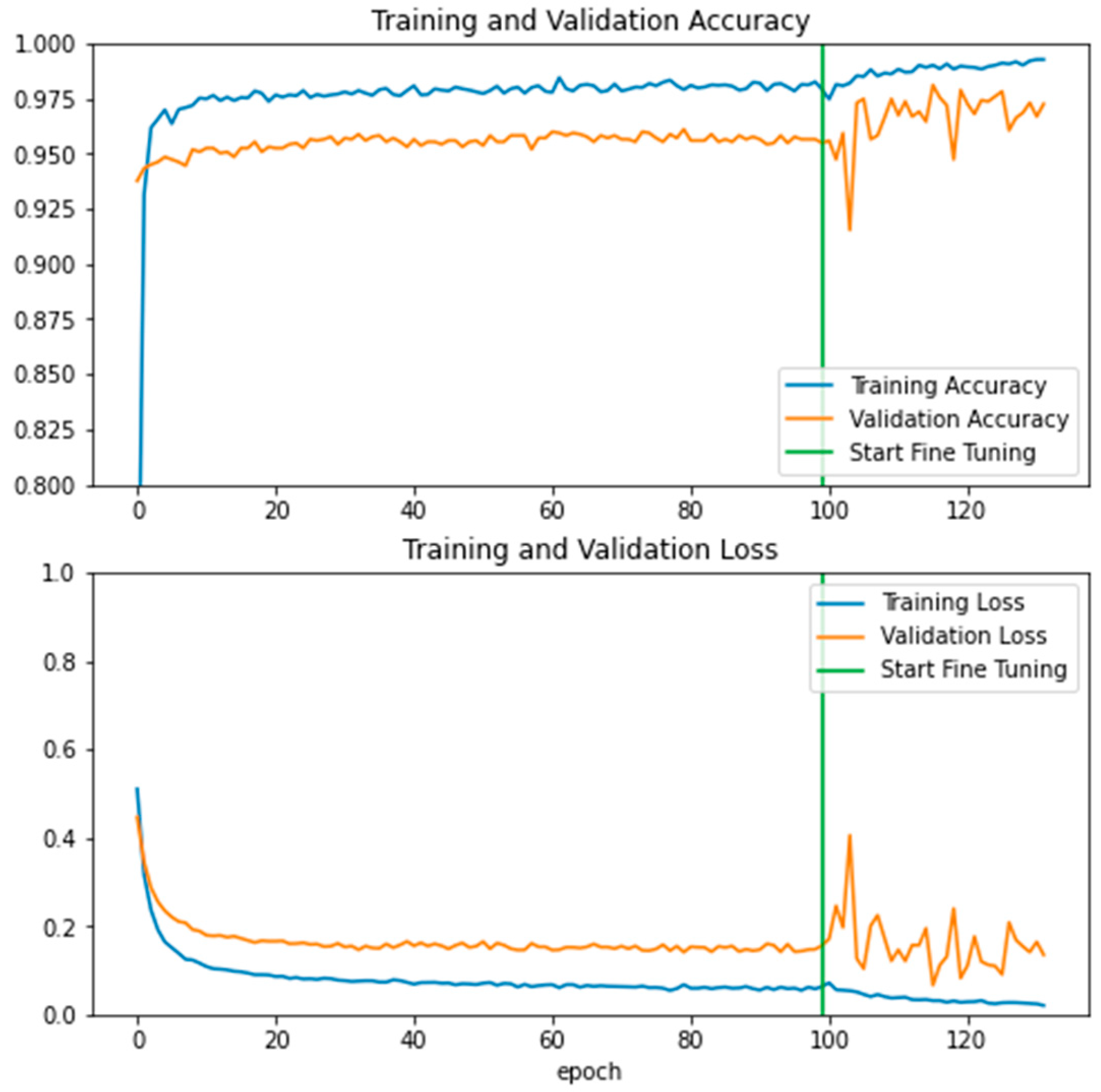

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Layer Type | Output Shape | Number of Parameters |
|---|---|---|
| Input layer | (100, 100, 3) | 0 |
| Sequential | (100, 100, 3) | 0 |
| True Divide | (100, 100, 3) | 0 |
| Subtract | (100, 100, 3) | 0 |
| Functional (MobileNetV2) | (4, 4, 1280) | 2,257,984 |
| Global Average Pooling 2D | (1280) | 0 |
| Dropout | (1280) | 0 |
| Dense | (1) | 1,281 |
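The shapes and parameter counts in the layer table can be checked by hand. The sketch below assumes the standard MobileNetV2 layout (five stride-2 stages, each halving the spatial size and rounding up) and reads the True Divide and Subtract rows as the usual rescaling of pixel values to [-1, 1]; both readings are assumptions, since the table itself does not state them. The backbone parameter count is taken from the table rather than derived.

```python
import math


def feature_map_side(input_side: int, stride2_stages: int = 5) -> int:
    """Spatial size after repeated stride-2 downsampling with 'same'-style padding."""
    side = input_side
    for _ in range(stride2_stages):
        side = math.ceil(side / 2)
    return side


def dense_params(in_features: int, units: int) -> int:
    """Weights plus one bias per output unit."""
    return in_features * units + units


def rescale(pixel: float) -> float:
    """Assumed preprocessing: divide by 127.5, then subtract 1 (maps 0..255 to -1..1)."""
    return pixel / 127.5 - 1.0


BACKBONE_PARAMS = 2_257_984  # taken from the table, not computed here

side = feature_map_side(100)             # 100 -> 50 -> 25 -> 13 -> 7 -> 4
head_params = dense_params(1280, 1)      # 1280 pooled features feeding one output unit
total_params = BACKBONE_PARAMS + head_params

print(side, head_params, total_params)
print(rescale(0.0), rescale(255.0))
```

The single output unit of the dense layer is consistent with a binary beach/water classifier, and the head contributes only 1,281 of the roughly 2.26 million parameters.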

| | beach | water |
|---|---|---|
| beach | 348 | 3 |
| water | 39 | 665 |
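Summary metrics follow directly from the four counts in the confusion matrix. The sketch below assumes that rows are the true classes and columns the predicted classes; the table does not say which axis is which, so per-class precision and recall would swap under the opposite convention. Overall accuracy does not depend on that choice.

```python
LABELS = ("beach", "water")

# Counts copied from the confusion matrix; the row = true, column = predicted
# orientation is an assumption.
MATRIX = [
    [348, 3],
    [39, 665],
]


def accuracy(matrix):
    """Share of samples on the diagonal."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def recall(matrix, k):
    """Diagonal count divided by the row total for class k."""
    return matrix[k][k] / sum(matrix[k])


def precision(matrix, k):
    """Diagonal count divided by the column total for class k."""
    return matrix[k][k] / sum(row[k] for row in matrix)


print(f"accuracy: {accuracy(MATRIX):.4f}")
for k, name in enumerate(LABELS):
    print(f"{name}: precision={precision(MATRIX, k):.4f}, recall={recall(MATRIX, k):.4f}")
```

Of the 1055 samples, 1013 lie on the diagonal, which gives an accuracy of about 96.0%. Most of the errors fall in a single off-diagonal cell (39 samples), so the two classes are not confused symmetrically.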


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Anufriiev, S.; Stefaniak, P.; Koperska, W.; Stachowiak, M.; Skoczylas, A.; Stefanek, P. CNN-Based Automatic Detection of Beachlines Using UAVs for Enhanced Waste Management in Tailings Storage Facilities. Appl. Sci. 2025, 15, 5786. https://doi.org/10.3390/app15105786
