Abstract

Sarcasm often arises from subtle contrasts between literal meaning and speaker intention. As online communication increasingly includes voice-based content, detecting sarcasm across speech and text becomes both more important and more complex. Existing methods usually focus on generic multimodal fusion but often miss how sarcasm manifests differently in each modality. We propose a model that explicitly encodes audio signals into the textual representation space, allowing prosodic cues to inform language understanding. To extract relevant features at different levels, we use a multi-scale convolutional architecture. Experiments show consistent gains over prior models on both text and speech sarcasm detection tasks.

1. Introduction

Sarcasm is a distinctive form of emotional expression, often characterized by a discrepancy between linguistic content and true intent. With the rapid development of social networks, people increasingly enjoy sharing their daily lives and engaging in discussions on various social platforms. These discussions generate a vast amount of multimodal data. Compared to unimodal data, multimodal data provide a more comprehensive set of information [1,2,3].

Current research indicates that a central challenge in multimodal sarcasm detection is identifying the similarities between modalities and effectively extracting and merging their information when processing cross-modal data [4].

Previous research on multimodal sarcasm detection has primarily concentrated on the image and text modalities [5], with limited exploration of audio and text. Thus, understanding how to semantically align audio and text and effectively utilize their latent sarcastic features remains a key issue [6].

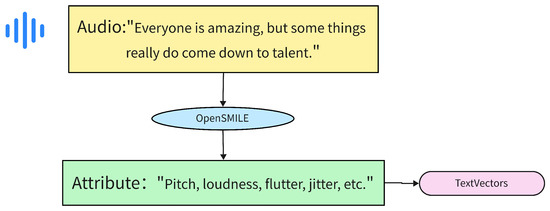

To address these challenges, this paper presents a solution that utilizes critical audio information as attributes for text, enhancing the continuity between text and audio through effective text feature extraction methods, as illustrated in Figure 1. In this framework, OpenSMILE, an open-source toolkit for extracting acoustic features such as pitch, loudness, and jitter, is utilized to process raw audio signals into structured attribute representations.

Figure 1. Audio attributes converted to text vectors.

This paper introduces a Multimodal Multi-Scale Convolutional Sarcasm Neural Network (MMCSNN) designed to tackle the sarcasm detection task. It processes data across different modalities and their dependencies, effectively integrating text and audio features, and captures multi-scale information to improve sarcasm detection performance.

The experimental results on a publicly available dataset [7] suggest that our model makes more effective use of multimodal signals, leading to a clear boost in sarcasm detection accuracy.

In summary, this work introduces a multimodal sarcasm detection framework that emphasizes the integration of audio attributes into text feature space, supported by a multi-scale convolutional architecture. The proposed model aims to enhance the cross-modal interaction and achieve more robust and accurate sarcasm detection. Extensive experiments validate its effectiveness across various benchmarks.

The main contributions of this work are as follows:

- We incorporate audio-based cues as a central element in the sarcasm detection process, enhancing the interaction between audio and textual features. By embedding these audio attributes into the model, we enable closer alignment between spoken tone and linguistic content, which helps to capture sarcastic expressions more reliably.

- We apply multi-scale convolutional neural networks to extract global features from both text and audio at different scales. Multi-scale convolution captures both local and global information, improving feature robustness [8]. This not only enhances the representation ability of audio features but also boosts overall model performance through multi-scale feature fusion.

- We conduct a thorough experimental analysis. The results show that the proposed method significantly outperforms the existing baseline methods across multiple evaluation metrics, validating the effectiveness of the Multimodal Multi-Scale Convolutional Sarcasm Neural Network in sarcasm detection.

2. Related Work

2.1. Sarcasm Detection in Pure Text

In the early stages of sarcasm detection, most approaches were based on text and relied heavily on experts from various fields [9], thus lacking generalizability. Deep learning methods have since been trained on large-scale corpora to capture the high-dimensional semantic information of sarcastic sentences [10,11,12]. However, these text-based methods are often limited by the ambiguity of textual sarcasm, because text alone does not fully capture the nuances that are often conveyed through voice tone or contextual cues [13].

Veale proposed a multimodal sarcasm detection method based on TextCNN, while Ghosh utilized Twitter data and incorporated user information and temporal data as inputs to CNN and LSTM models [9]. Tay employed a neural network model based on the attention mechanism for sarcasm recognition [14]. Additionally, BERT has been used for embedding context and various emotions for sarcasm recognition in text [15]. These works demonstrated some progress but still relied heavily on text for sarcasm recognition. In contrast, multimodal approaches aim to overcome these limitations by incorporating audio and contextual information [16].

2.2. Audio-Based Sarcasm Detection

In academic research, studies on sarcasm detection based purely on audio data are relatively scarce. Most of the research focuses on the analysis of audio feature extraction methods. There are also common tools for extracting audio features, such as OpenSMILE and VGGish. OpenSMILE (Open-Source Speech and Music Interpretation by Large-Space Extraction) can extract various audio features, including pitch, phoneme, and energy [17], and is widely used in emotion analysis [18]. VGGish is a pre-trained audio feature extraction model that uses Mel spectrograms as input and extracts 128-dimensional feature vectors through a deep learning network [19].

Although these methods can yield certain effects, they still show limitations in some domains. Current approaches struggle to address the issue of feature redundancy. For example, OpenSMILE can provide sufficient acoustic features, but it cannot accurately identify information relevant to sarcasm, and it may even introduce noise that reduces model efficiency. In addition, considering the problem of multimodal integration, how to accurately identify which acoustic features are helpful for sarcasm detection remains a major challenge. To address this problem, Table 1 compares several audio feature extraction tools, focusing on the issue of feature redundancy and emphasizing the importance of making more targeted feature selections.

Table 1. Comparison of audio feature extraction tools.

Additionally, we acknowledge the importance of adversarial robustness in multimodal learning. Recent studies [20] have explored the vulnerabilities of deep models under adversarial perturbations, which may inspire future extensions of this work.

2.3. Multimodal Sarcasm Detection

In multimodal sarcasm detection, the core task is to utilize information from different modalities (such as text, audio, images, etc.) to analyze the interaction between data from each modality, thereby effectively extracting the implicit sarcastic information and better predicting sarcasm labels [21,22]. Compared to unimodal approaches, multimodal applications are more complex, handling a greater variety of feature data [23], and modalities may either coordinate or interfere with each other.

Previous studies have highlighted the individual roles of each modality in multimodal sarcasm detection [24]. For instance, Rosso et al. discussed these contributions in detail [25]. Cai et al. proposed a simple hierarchical-attention-based fusion neural network [26,27]. Liang et al. studied emotional relationships within and across modalities, proposing an interactive cross-modal interaction graph [28]. Liu enhanced the connection between image and text modalities [29].

This paper focuses on audio–text multimodal sarcasm detection, which, to our knowledge, has received limited attention in the field. We propose the use of audio attributes as integral features to bridge the gap between text and audio modalities, enhancing the overall performance of sarcasm detection. By incorporating a multi-scale convolutional architecture, our model captures both local and global feature interactions, enabling more robust and accurate sarcasm detection across modalities.

3. Methodology

3.1. Overall Framework

The architecture of the proposed Multimodal Multi-Scale Convolutional Sarcasm Neural Network (MMCSNN) is composed of three core modules: feature extraction, feature fusion, and integration.

During the feature extraction process, the MMCSNN model derives different feature representations from multiple modalities, including text, audio, and auxiliary audio attributes. For different types of text, corresponding pre-trained models are used to obtain better feature performance. Since the importance of features varies across modalities, OpenSMILE is used to extract audio descriptors and encode them in a text-like embedding format to enhance cross-modal interaction. By incorporating these audio cues, the alignment between text and audio modalities is improved, providing richer resources for sarcasm cues.

The fusion module mainly consists of two parts. On one hand, it filters redundant information and enhances important signals in each modality to preserve subtle indicators of sarcasm. On the other hand, multi-scale convolution layers with different kernel sizes are applied to strengthen the correlation between modalities [30,31]. Finally, a fully connected layer with batch normalization and activation functions is used to transmit the fused features to the classifier and generate the final output.

3.2. Feature Extraction Layer

3.2.1. Text Feature Representation

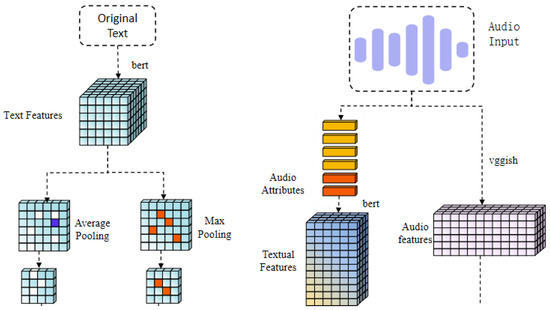

In this study, as shown in Figure 2, we employ a pre-trained BERT model to obtain the semantic representation of the input text. The initial step involves encoding the raw text using a tokenizer to produce text vectors that are compatible with BERT’s input requirements. This encoding step is not merely a transformation of the text data but a crucial step in ensuring that the text information can be effectively captured and passed on in multimodal tasks. Since BERT is capable of modeling deep semantic relationships through multiple layers of contextualization, text encoding becomes the foundation for improving sarcasm detection performance.

Figure 2. Feature extraction layer.

The text sequence is passed into the pre-trained BERT model, which outputs a three-dimensional tensor called the last hidden state with the shape (batch_size, sequence_length, feature_dim).

Since the information in this tensor is overly redundant, a dual-pooling operation is applied to better capture the semantic content within the sequence. This process helps to retain the features most related to sarcasm, resulting in the final feature vector:

Here, the pooled quantities are the hidden states of the individual tokens (indexed by i), and concat denotes the concatenation operation between the pooled vectors.
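The dual-pooling step can be illustrated with a minimal NumPy sketch. The text does not say which two pooling operations are used, so this example assumes mean pooling and max pooling over the token dimension. The function name `dual_pool` and the `attention_mask` argument are hypothetical.

```python
import numpy as np

def dual_pool(last_hidden_state, attention_mask):
    """Concatenate masked mean pooling and masked max pooling over tokens.

    ASSUMPTION: "dual pooling" is taken to mean mean + max pooling.
    last_hidden_state: (batch_size, sequence_length, feature_dim)
    attention_mask:    (batch_size, sequence_length), 1 = real token, 0 = padding
    returns:           (batch_size, 2 * feature_dim)
    """
    mask = attention_mask[:, :, None].astype(last_hidden_state.dtype)
    # Mean over real tokens only.
    summed = (last_hidden_state * mask).sum(axis=1)
    counts = np.clip(mask.sum(axis=1), 1.0, None)
    mean_pooled = summed / counts
    # Max over real tokens only: padding positions are pushed to -inf.
    masked = np.where(mask > 0, last_hidden_state, -np.inf)
    max_pooled = masked.max(axis=1)
    return np.concatenate([mean_pooled, max_pooled], axis=-1)

# Placeholder tensor standing in for BERT's last hidden state.
hidden = np.random.default_rng(0).normal(size=(2, 5, 8))
mask = np.array([[1, 1, 1, 0, 0], [1, 1, 1, 1, 1]])
features = dual_pool(hidden, mask)
print(features.shape)  # (2, 16)
```

Under this assumption the pooled vector has twice the hidden size, so a 768-dimensional encoder would yield a 1536-dimensional text feature.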

3.2.2. Audio Feature Representation

In this study, as shown in Figure 3, the pre-trained VGGish model is used to extract audio features. Since audio data can be affected by various types of noise, data augmentation techniques such as adding random noise and random cropping are applied to the audio data to enhance the model’s robustness [32].

Figure 3. Feature integration layer.

First, the audio data undergo noise addition and cropping operations. The noise is Gaussian noise with a mean of 0, and the cropping operation randomly selects a segment of the audio signal:

Here, the scaling coefficient represents the noise amplitude, noise is Gaussian noise with a mean of 0, the start index is the random initial position of the cropped segment, and sr is the sample rate of the audio.
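A minimal sketch of this augmentation follows. The noise amplitude, the crop duration, and all names (`augment`, `noise_amplitude`, `crop_seconds`) are assumptions for illustration, since the text does not give the values used.

```python
import numpy as np

def augment(audio, sr, noise_amplitude=0.005, crop_seconds=3.0, rng=None):
    """Add zero-mean Gaussian noise, then randomly crop a fixed-length segment.

    ASSUMPTION: the amplitude and crop duration are illustrative defaults.
    """
    if rng is None:
        rng = np.random.default_rng()
    # Additive zero-mean Gaussian noise scaled by the noise amplitude.
    noisy = audio + noise_amplitude * rng.normal(0.0, 1.0, size=audio.shape)
    crop_len = int(crop_seconds * sr)
    if len(noisy) <= crop_len:
        return noisy  # clip is already shorter than the crop window
    # Random initial position of the cropped segment.
    start = int(rng.integers(0, len(noisy) - crop_len + 1))
    return noisy[start:start + crop_len]

sr = 16000
audio = np.sin(2 * np.pi * 220 * np.arange(5 * sr) / sr)  # 5 s placeholder tone
clip = augment(audio, sr, rng=np.random.default_rng(0))
print(clip.shape)  # (48000,)
```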

Next, the Mel spectrogram of the augmented audio data is extracted and converted into a log-spectrogram [33]. These processes help to capture the time–frequency features of the audio while reducing noise interference:

Here, S is the Mel spectrogram, nfft is the window size for the FFT (Fast Fourier Transform), the hop size is the stride between successive frames, and nmels is the number of Mel bands.
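The log-Mel computation can be sketched in plain NumPy as below. This is a generic construction under assumed settings (`n_fft=400`, `hop_length=160`, `n_mels=64`, a Hann window, natural log with a small offset), not the exact front end used in the paper or by VGGish.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(sr, n_fft, n_mels):
    # Triangular filters with centers equally spaced on the mel scale.
    hz_points = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2))
    fft_freqs = np.linspace(0.0, sr / 2.0, n_fft // 2 + 1)
    bank = np.zeros((n_mels, n_fft // 2 + 1))
    for m in range(n_mels):
        left, center, right = hz_points[m], hz_points[m + 1], hz_points[m + 2]
        rising = (fft_freqs - left) / (center - left)
        falling = (right - fft_freqs) / (right - center)
        bank[m] = np.maximum(0.0, np.minimum(rising, falling))
    return bank

def log_mel_spectrogram(audio, sr, n_fft=400, hop_length=160, n_mels=64, eps=1e-6):
    """Return a (n_frames, n_mels) log-Mel spectrogram; requires len(audio) >= n_fft."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(audio) - n_fft) // hop_length
    frames = np.stack([audio[i * hop_length:i * hop_length + n_fft] * window
                       for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2   # (n_frames, n_fft // 2 + 1)
    mel = power @ mel_filterbank(sr, n_fft, n_mels).T  # (n_frames, n_mels)
    return np.log(mel + eps)                           # eps avoids log(0)

sr = 16000
audio = np.random.default_rng(0).normal(size=sr)  # one second of placeholder audio
log_mel = log_mel_spectrogram(audio, sr)
print(log_mel.shape)  # (98, 64)
```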

To further process the sarcastic signals in audio, the log-Mel spectrogram is combined with VGGish, which helps to improve the overall quality of the features and produces the final audio-level feature vector:

3.2.3. Audio Descriptive Attribute Feature Representation

There exists a large amount of sarcasm-related information in audio that can be further explored, but current research mainly focuses on clues within the audio itself, without establishing connections between audio and text. Therefore, this paper introduces the concept of audio attributes. Through audio attributes, a bridge is built between audio and textual modalities to enhance synchronization of information across them.

As shown in Figure 3, we preliminarily extract a large number of sarcasm-related acoustic features using OpenSMILE. These features help the model to understand the relationship between changes in audio signals and the semantic content in text, and they are crucial for capturing subtle emotional variations in audio.

The audio attribute signals selected in this work include two levels of descriptors. On one hand, low-level descriptors such as F0, shimmer, and jitter reflect fine-grained fluctuations in audio signals. On the other hand, high-level features like HNR can be used as more direct indicators to detect sarcastic speech.

However, due to the different dimensional ranges across modalities, it is necessary to process the extracted features to make them suitable for our model framework. In this study, we scale all attributes to a fixed range to ensure that each dimension contributes properly. After normalization, the audio attribute features become a kind of textual representation that can describe audio signals, thereby improving cross-modal interaction and enhancing the model’s ability to analyze multimodal data.
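As an illustration of this step, the sketch below scales each attribute to [0, 1] with min-max normalization and serializes the result as a short text string. Everything specific here is hypothetical: the per-attribute ranges, the choice of min-max scaling, and the string template are assumptions, since the text does not state how the attributes are rendered as text.

```python
def min_max_scale(value, low, high):
    """Scale a raw attribute to [0, 1] given an assumed value range, clipping outliers."""
    if high == low:
        return 0.0
    scaled = (value - low) / (high - low)
    return min(1.0, max(0.0, scaled))

# HYPOTHETICAL ranges; in practice they would be estimated from the training set.
RANGES = {"F0": (50.0, 500.0), "jitter": (0.0, 0.1), "HNR": (0.0, 30.0)}

def attributes_to_text(raw):
    """Render normalized attributes as a text-like string (template is an assumption)."""
    parts = []
    for name, value in raw.items():
        low, high = RANGES[name]
        parts.append(f"{name} {min_max_scale(value, low, high):.2f}")
    return ", ".join(parts)

description = attributes_to_text({"F0": 275.0, "jitter": 0.02, "HNR": 15.0})
print(description)  # F0 0.50, jitter 0.20, HNR 0.50
```

A string of this kind can then be tokenized like any other sentence, which is what lets a text encoder consume the acoustic descriptors.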

In this study, we select seven acoustic attributes (F0, loudness, shimmer, jitter, HNR, F1, and F2) as the basis for audio feature extraction. These attributes were chosen because they jointly capture both low-level prosodic variations and high-level vocal characteristics, which are essential for modeling subtle emotional cues in sarcastic speech. F0 and jitter primarily describe pitch and its fine-grained cycle-to-cycle fluctuations, shimmer describes the corresponding cycle-to-cycle amplitude fluctuations, and HNR reflects speech signal periodicity and noisiness. The F1 and F2 formant frequencies provide information about vocal tract resonances and articulation style. Compared to traditional features such as MFCCs, these selected attributes offer a more direct and interpretable representation of emotional and prosodic nuances, which are critical in sarcasm understanding.

To facilitate downstream multimodal fusion, the output of the BERT encoder is further refined through a batch normalization and activation layer, and subsequently projected to a fixed dimensionality of 128 via a linear transformation. The resulting audio-attribute embedding vector is 128-dimensional and serves as the final representation used in cross-modal integration.

Unlike conventional multimodal fusion strategies that treat text and audio features independently until the final integration stage, our approach embeds audio-attribute vectors into the same semantic space as text. By encoding the attribute features via a BERT encoder, the audio cues are aligned with the token-level semantics of textual inputs. This unified representation enables fine-grained cross-modal interactions, allowing the model to associate prosodic variations in audio with contextual semantics in text. This design not only enhances fusion interpretability but also highlights the novelty of leveraging attribute-aware alignment in sarcasm detection [34].

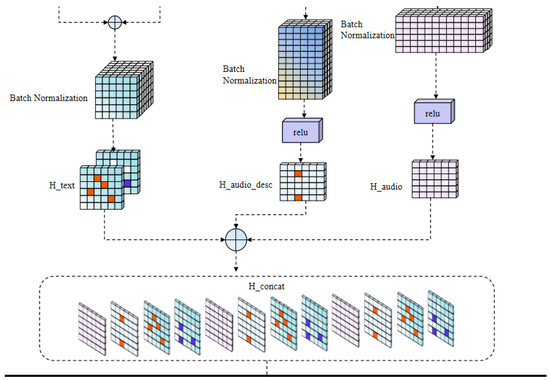

3.3. Fusion Layer

The fusion layer mainly consists of two parts. One part is a feature enhancement unit, which further strengthens the features from different modalities. The other part is a multi-scale convolutional layer, which considers modality features from multiple scales, allowing the model to capture both detailed and global information, enhancing its cross-modal capability (Figure 4).

Figure 4. Fusion layer.

3.3.1. Input Feature Processing Layer

First, each unimodal input is passed through its own linear transformation, followed by normalization. The modality types include text, audio, and audio descriptors.

After these operations, the features from different modalities are fused into a unified multimodal feature vector:
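A minimal sketch of this input processing is given below. It makes several assumptions that the text does not confirm: layer normalization as the normalization step, concatenation as the fusion operation, a shared projection size of 128, and a 1536-dimensional text input. The random weights stand in for learned parameters.

```python
import numpy as np

rng = np.random.default_rng(0)

def linear(x, out_dim):
    # Randomly initialized projection, standing in for learned weights.
    w = rng.normal(scale=0.02, size=(x.shape[-1], out_dim))
    return x @ w

def layer_norm(x, eps=1e-5):
    # ASSUMPTION: the unspecified "normalization" is taken to be layer normalization.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

# Placeholder unimodal features for a batch of 4 samples (dimensions are illustrative).
text = rng.normal(size=(4, 1536))   # pooled text features
audio = rng.normal(size=(4, 128))   # VGGish-style audio features
attrs = rng.normal(size=(4, 128))   # audio-attribute embeddings

# Each modality gets its own projection and normalization before fusion.
projected = [layer_norm(linear(m, 128)) for m in (text, audio, attrs)]
fused = np.concatenate(projected, axis=-1)  # ASSUMPTION: fusion by concatenation
print(fused.shape)  # (4, 384)
```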

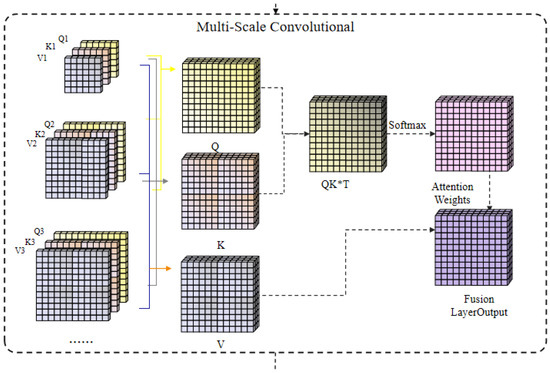

3.3.2. Multi-Scale Convolutional Layer

Our method introduces a dynamic and adaptive multi-scale convolutional structure. By avoiding the use of a fixed convolutional kernel size, the model becomes more flexible when capturing multimodal features at different levels of granularity.

Here, Conv refers to a group of convolution operations with varying kernel sizes.
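The idea of a group of convolutions with varying kernel sizes can be sketched as follows. This is a simplified one-dimensional, single-channel version with random kernels of sizes 3, 5, and 7. The actual layer shapes, channel counts, and how the branches are merged are not specified here, so those details are assumptions.

```python
import numpy as np

def conv1d_same(x, kernel):
    """'Same'-padded 1D cross-correlation of a (length,) signal with an odd-sized kernel."""
    pad = len(kernel) // 2
    padded = np.pad(x, pad)  # zero padding on both sides
    windows = np.lib.stride_tricks.sliding_window_view(padded, len(kernel))
    return windows @ kernel

def multi_scale_conv(x, kernels):
    # One branch per kernel size; branch outputs are stacked as channels.
    return np.stack([conv1d_same(x, k) for k in kernels], axis=0)

rng = np.random.default_rng(0)
fused = rng.normal(size=384)                            # placeholder fused feature vector
kernels = [rng.normal(size=k) / k for k in (3, 5, 7)]   # random stand-ins for learned kernels
out = multi_scale_conv(fused, kernels)
print(out.shape)  # (3, 384)
```

Each branch sees a different receptive field over the same input, so small kernels respond to local patterns while larger ones aggregate wider context.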

Then, to make the newly fused features suitable for the traditional attention mechanism, we apply the ReLU function to activate Q and K. This operation further enhances the alignment between modalities and helps to obtain the most informative elements from different perspectives.

The attention mechanism then computes the similarity between the transformed Q and K, and the attention weights are applied:
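One way to read this step is standard scaled dot-product attention with ReLU applied to Q and K first. The sketch below follows that reading. The scaling by the square root of the feature size, the softmax, and the value matrix `v` are assumptions borrowed from conventional attention rather than details stated in the text.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def softmax(x, axis=-1):
    shifted = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)

def relu_attention(q, k, v):
    """Scaled dot-product attention with ReLU-activated queries and keys."""
    q, k = relu(q), relu(k)
    scores = q @ k.T / np.sqrt(q.shape[-1])  # similarity between Q and K
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
q = rng.normal(size=(6, 16))
k = rng.normal(size=(6, 16))
v = rng.normal(size=(6, 16))
attended, weights = relu_attention(q, k, v)
print(attended.shape, weights.shape)  # (6, 16) (6, 6)
```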

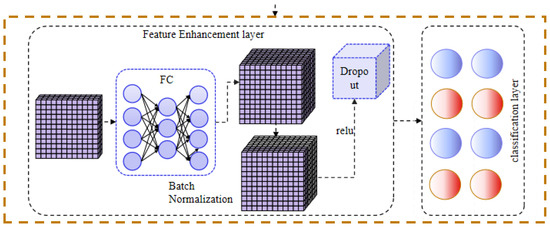

3.4. Feature Integration Layer

After passing through the multi-scale convolutional layers, the features already contain rich sarcastic signals. However, before generating the final prediction, additional processing is still required. The feature integration layer is designed to further refine these features. This module mainly consists of two parts: a feature enhancement layer, which further strengthens the features, and a final classification layer, which produces the final prediction result (Figure 5).

Figure 5. Feature integration layer.

Feature Enhancement Layer

To better model the relationships among the fused features, this layer applies a fully connected transformation, followed by batch normalization, ReLU activation, and dropout. These steps increase the model’s expressiveness, reduce overfitting, and promote better generalization.

The transformation is given by

Here, the learnable parameters are the weights and biases of the fully connected layer.

3.5. Final Classification Layer

After the feature enhancement process, the resulting features now contain both global-scale and fine-grained focused information, which can effectively support accurate sarcasm recognition.

Therefore, by applying the sigmoid activation function, the output is mapped into the range of [0, 1], making it suitable for the final sarcasm detection.
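The enhancement and classification steps can be sketched together as below, following the order described above (fully connected layer, batch normalization, ReLU, dropout, then a sigmoid output). The layer sizes, the dropout rate of 0.3, the 0.5 decision threshold, and the random weights are all assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def batch_norm(x, eps=1e-5):
    # Normalizes each feature over the batch (batch statistics, no learned scale or shift).
    return (x - x.mean(axis=0)) / np.sqrt(x.var(axis=0) + eps)

def dropout(x, rate, training):
    if not training or rate == 0.0:
        return x  # dropout is disabled at inference time
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)  # inverted dropout keeps the expected activation

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def enhance_and_classify(x, w1, b1, w2, b2, rate=0.3, training=False):
    h = dropout(relu_bn(x, w1, b1), rate, training)
    prob = sigmoid(h @ w2 + b2).ravel()        # probability of the sarcastic class
    return prob, (prob >= 0.5).astype(int)     # ASSUMPTION: 0.5 decision threshold

def relu_bn(x, w, b):
    # Fully connected layer -> batch normalization -> ReLU.
    return np.maximum(batch_norm(x @ w + b), 0.0)

x = rng.normal(size=(8, 384))                       # placeholder fused features
w1, b1 = rng.normal(scale=0.05, size=(384, 64)), np.zeros(64)
w2, b2 = rng.normal(scale=0.05, size=(64, 1)), np.zeros(1)
probs, labels = enhance_and_classify(x, w1, b1, w2, b2)
print(probs.shape, labels.shape)  # (8,) (8,)
```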

4. Experiment Settings

4.1. Dataset and Evaluation Metrics

We evaluate our model on the publicly available MCSh dataset (Multimodal Chinese Sarcasm and Humor), which contains sarcastic and humorous content collected primarily from stand-up comedy performances. Each sample includes a textual transcription and the corresponding video clip. As is typical of stand-up comedy, the speaking style is predominantly humorous and sarcastic, and the topics mainly revolve around entertainment and social commentary. The textual transcriptions are provided with the original dataset and are assumed to be manually annotated, ensuring the alignment between audio and text.

For this study, we further process the original data by segmenting the video content and extracting audio tracks for each instance. The resulting dataset is split into training, validation, and test subsets, with 4,899 samples used for training, 612 for validation, and 613 for testing.

Each sample is annotated with a binary label, where 0 denotes non-sarcastic content and 1 indicates sarcasm. A detailed breakdown of the dataset statistics is presented in Table 2.

Table 2. Statistics of the multimodal sarcasm dataset.

Based on previous work, accuracy (Acc), precision (Pre), recall (Rec), and F1-score (F1) are used as evaluation metrics. Additionally, due to the imbalanced distribution of the MCSh dataset, macro-average precision (Macro Pre) is also used as an evaluation metric to comprehensively assess the experimental results.
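For reference, these metrics can be computed from binary predictions as in the sketch below. The labels in the example are made up for illustration and are not results from the paper. The function name `binary_metrics` is hypothetical.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, positive-class precision/recall/F1, and macro-averaged precision and F1."""
    def prf(positive):
        tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
        fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
        fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
        pre = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * pre * rec / (pre + rec) if pre + rec else 0.0
        return pre, rec, f1

    acc = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    pre1, rec1, f1_1 = prf(1)   # sarcastic class
    pre0, _, f1_0 = prf(0)      # non-sarcastic class
    return {
        "Acc": acc, "Pre": pre1, "Rec": rec1, "F1": f1_1,
        # Macro averages weight both classes equally, which matters under class imbalance.
        "Macro Pre": (pre0 + pre1) / 2, "Macro F1": (f1_0 + f1_1) / 2,
    }

# Made-up labels: 1 = sarcastic, 0 = non-sarcastic.
y_true = [1, 1, 1, 0, 0, 1, 0, 1]
y_pred = [1, 0, 1, 0, 1, 1, 0, 1]
scores = binary_metrics(y_true, y_pred)
print({name: round(value, 4) for name, value in scores.items()})
```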

4.2. Hyperparameter Settings

We adopt bert-base-chinese as the text encoder to extract semantic representations from the input text. For the audio modality, VGGish is employed to capture acoustic features, while handcrafted audio attributes are derived using OpenSMILE (version 3.0).

A summary of the remaining training hyperparameters is provided in Table 3.

Table 3. Hyperparameters.

4.3. Baseline Comparison

We compare the proposed method against several representative baseline models commonly used in sarcasm detection tasks. The core characteristics of each model are briefly summarized as follows:

- TextCNN [35]: Applies convolutional neural networks to textual data for sentence-level classification, leveraging n-gram patterns to capture semantic cues.

- TextCNN-LSTM: Integrates CNNs with long short-term memory units. CNNs handle local feature extraction, while LSTMs model long-range dependencies to improve overall classification performance.

- BERT: A bidirectional-transformer-based model pre-trained on large-scale corpora, known for its effectiveness in semantic representation and downstream language understanding tasks.

- VGGish: An audio feature extraction model based on Convolutional Neural Networks (CNNs), which extracts high-dimensional time–frequency features from log-Mel spectrograms of the audio through hierarchical convolution operations.

- MFCC [36]: A classical acoustic feature extraction method with strong interpretability, widely used in tasks such as speech recognition and speaker identification.

- OpenL3: A self-supervised learning-based audio representation model, suitable for open-domain multimodal scenarios.

- BERT+VGGish: A dual-stream architecture that integrates textual and acoustic information. The textual branch uses the BERT pre-trained language model to extract word-level or sentence-level semantic features, while the acoustic branch uses VGGish to capture prosodic features.

- TextCNN+VGGish: A multimodal framework that combines local text patterns with global acoustic features.

- Wav2Vec2.0 + BERT + CMA [37]: A multimodal fusion model that combines audio and text embeddings extracted from pre-trained Wav2Vec2.0 and BERT encoders. The two modality-specific representations are then aligned via a cross-modal attention (CMA) mechanism to capture interaction patterns for sarcasm detection.

5. Experimental Results and Analysis

5.1. Main Experimental Results

Table 4 presents the performance comparison between our proposed Multimodal Multi-Scale Convolutional Sarcasm Neural Network (MMCSNN) and several competitive baselines on the MCSh dataset. The baselines include models based on single modalities (text or audio) and multimodal fusion strategies.

Table 4. Main experimental results of unimodal and multimodal models on the MCSh dataset. Bold values indicate the best results in each column.

In the single-text modality, BERT achieves the best performance due to its strong contextual understanding.

For the audio-only modality, the combination of OpenL3 and SVM achieves excellent results, indicating that audio cues such as pitch and tonal variation play a crucial role in sarcasm detection.

After fusing the two modalities, a significant performance improvement can be observed, which demonstrates that combining textual and audio features indeed enhances sarcasm detection capabilities. Among them, our proposed MMCSNN model outperforms all the baseline methods across all the metrics, achieving an accuracy of 86.88%, an F1-score of 88.20%, and a Macro F1 of 86.17%.

These results directly reflect the advantages of our model from a quantitative perspective. Further analysis of its structural improvements is provided in Section 5.2.

To further strengthen the comparison, we additionally incorporate a recent multimodal baseline: Wav2Vec2.0+BERT with cross-modal attention (CMA). This model leverages pre-trained encoders for both audio and text, followed by attention-based cross-modal fusion. As shown in Table 4, this method achieves strong performance (F1 = 83.20) but still lags behind our proposed MMCSNN across all the key metrics.

5.2. Multimodal Results Analysis

As shown in Table 4, multimodal models that integrate both textual and audio features show a clear performance improvement over traditional unimodal methods.

This is because traditional unimodal models, such as text-based approaches like TextCNN and BERT, although capable of capturing syntactic and semantic structures effectively, often struggle to identify the hidden sarcastic cues embedded in audio signals.

On the other hand, audio-only models like VGGish can perceive tonal variations, but the lack of contextual information limits their ability to achieve strong results.

In contrast, the excellent performance of our proposed MMCSNN model across all the evaluation metrics demonstrates that the key to sarcasm detection lies in cross-modal reasoning—specifically the analysis of semantic and acoustic shifts across modalities.

To address this, we introduce the concept of audio attributes, improving the model’s ability to detect tonal changes related to sarcasm and further enhancing the integration of multimodal features.

In addition, the use of a dynamic multi-scale convolutional layer allows the model to perform deeper analysis under varying receptive fields, enabling it to detect more complex and globally distributed hidden cues.

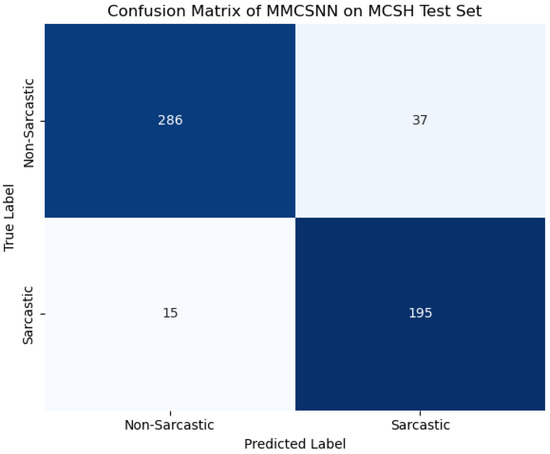

To further verify the model’s performance, a confusion matrix is plotted based on the results on the test set, as shown in Figure 6. The model achieves balanced classification across both the sarcastic and non-sarcastic categories, with relatively low false positive and false negative rates. This result highlights the model’s ability to handle subtle sarcasm cues while maintaining robustness against misclassifications.

Figure 6. Confusion matrix of MMCSNN on MCSh test set.

5.3. Robustness and Significance Verification

To further evaluate the robustness and statistical reliability of our proposed MMCSNN model, we conducted five independent training runs using different random seeds. Table 5 reports the mean and standard deviation (mean ± std) for the accuracy, F1, and Macro F1-scores of both the MMCSNN and the strong baseline BERT+VGGish.

Table 5. Performance comparison over 5 runs (mean ± std) on MCSh dataset. Bold values indicate the best results in each column.

As shown in the results, the MMCSNN consistently achieves superior performance with relatively low variance across runs, demonstrating the model’s stability under stochastic training conditions, including dropout and data augmentation. In addition, we performed paired two-tailed t-tests on the five results between the MMCSNN and BERT+VGGish. The performance improvements achieved by the MMCSNN are statistically significant across all the metrics.
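The paired test over five seeds can be sketched with the standard library alone. The per-seed scores below are invented placeholders, not values from Table 5. The critical value 2.776 is the two-tailed 5% threshold of the t distribution with 4 degrees of freedom.

```python
import math
import statistics

def paired_t_statistic(a, b):
    """Return the paired t statistic and degrees of freedom for matched samples a and b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean_diff = statistics.fmean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    return mean_diff / (sd_diff / math.sqrt(n)), n - 1

# HYPOTHETICAL per-seed F1 scores for two models trained with the same five seeds.
model_a = [88.1, 88.4, 87.9, 88.3, 88.2]
model_b = [85.0, 85.6, 84.9, 85.2, 85.3]

t_stat, dof = paired_t_statistic(model_a, model_b)
T_CRITICAL_DF4 = 2.776  # two-tailed, alpha = 0.05, 4 degrees of freedom
print(f"t = {t_stat:.2f}, df = {dof}, significant = {abs(t_stat) > T_CRITICAL_DF4}")
```

With only five paired runs the test has 4 degrees of freedom, so the difference must be large relative to its run-to-run variation to reach significance.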

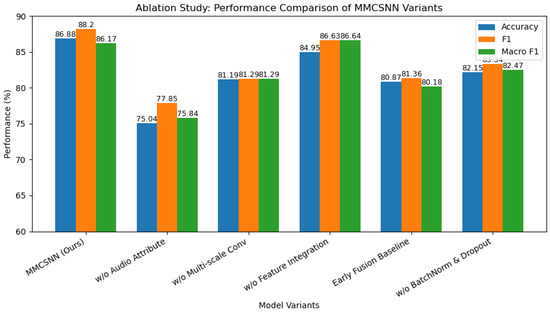

5.4. Ablation Study

To better demonstrate the contribution of each module, this paper conducts an ablation study by removing each module individually using a controlled variable approach. The experimental results are summarized in Table 6 and illustrated in Figure 7.

Table 6. Ablation study results of MMCSNN on the MCSh dataset. Bold values indicate the best results in each column.

Figure 7. Ablation study: performance comparison of MMCSNN variants.

To further investigate the impact of different audio attribute subsets, we conducted an ablation study by isolating low-level and high-level descriptor groups, and by removing individual key features. As shown in Table 7, the high-level features (HNR, F1, and F2) yielded slightly better performance compared to the low-level ones (F0, shimmer, and jitter), indicating their superior ability to capture vocal tract characteristics essential for sarcasm recognition. However, removing F0 caused the most significant drop in accuracy, suggesting its crucial role as a tonal anchor. These results validate the complementary nature of prosodic and spectral features in our attribute design.

Table 7. Effect of audio attribute subsets and individual feature removal on MMCSNN performance. Bold values indicate the best results in each column.

In addition to the ablation studies above, we further examined several architectural variations. For the multi-scale convolution module, we explored different kernel size combinations (e.g., 1–3–5, 3–5–7, and 5–7–9) during model development. Varying the kernel sizes affected performance only slightly, and the 3 × 3, 5 × 5, and 7 × 7 setting achieved the best trade-off between capturing local fine-grained cues and broader contextual information, so we adopted it as the default for our multi-scale convolutional structure.

Regarding the feature fusion strategy, we employed a late fusion approach rather than early fusion. Early fusion, which directly concatenates raw features from different modalities, often leads to scale mismatches and noisy interactions that obscure subtle sarcastic signals. In contrast, late fusion allows each modality to be deeply encoded before integration, resulting in a more semantically aligned and robust multimodal representation for sarcasm detection.

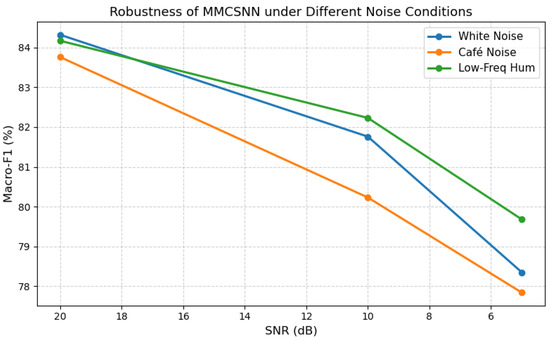

5.5. Robustness Under Noisy Conditions

To evaluate the performance of the MMCSNN in complex acoustic environments, we conducted a noise augmentation experiment. By simulating real-world usage scenarios, various types of background noise were introduced, including white noise, café background chatter, and electrical interference—common disturbances in audio inputs. Each type of noise was tested under three different signal-to-noise ratio (SNR) levels: 20 dB, 10 dB, and 5 dB.
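Mixing a noise recording into clean speech at a target SNR can be sketched as follows. The signals here are synthetic placeholders, and the helper name `mix_at_snr` is hypothetical. The sketch assumes the noise clip is at least as long as the speech clip.

```python
import numpy as np

def mix_at_snr(signal, noise, snr_db):
    """Scale `noise` so that the signal-to-noise ratio of the mixture equals `snr_db`."""
    noise = noise[:len(signal)]  # assumes the noise clip is at least as long as the signal
    signal_power = np.mean(signal ** 2)
    noise_power = np.mean(noise ** 2)
    # SNR(dB) = 10 * log10(signal_power / noise_power), solved for the required noise power.
    target_noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    scaled_noise = noise * np.sqrt(target_noise_power / noise_power)
    return signal + scaled_noise, scaled_noise

sr = 16000
rng = np.random.default_rng(0)
speech = np.sin(2 * np.pi * 220 * np.arange(sr) / sr)  # placeholder for a speech clip
white = rng.normal(size=sr)                            # white noise

mixed, scaled = mix_at_snr(speech, white, snr_db=10)
measured = 10 * np.log10(np.mean(speech ** 2) / np.mean(scaled ** 2))
print(round(float(measured), 2))  # 10.0
```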

As shown in Figure 8, the MMCSNN still performs well under all the noise conditions. Even at 5 dB, the Macro F1-score remains above 77%. It is worth noting that, at SNR levels of 10 dB and below, white noise caused a significant performance drop. This may be due to its broad spectral coverage, which can interfere with the extraction of audio attribute features.

Figure 8. Robustness of MMCSNN under different noise conditions.

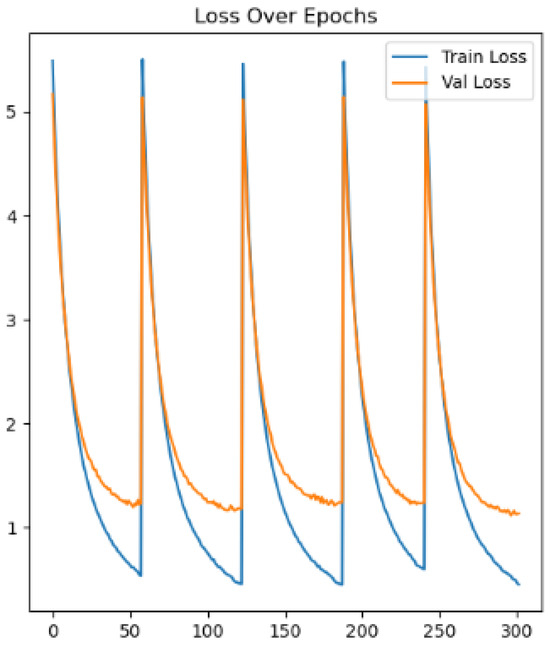

5.6. Training Convergence Analysis

To further examine the learning dynamics and ensure that the MMCSNN model is not prone to overfitting, we visualize the training and validation loss across 5-fold cross-validation in Figure 9. The results show consistent downward trends with minimal divergence between the curves, indicating stable convergence behavior and good generalization. This further verifies the model’s robustness under stochastic optimization.

Figure 9. Training and validation loss curves over 5-fold cross-validation.

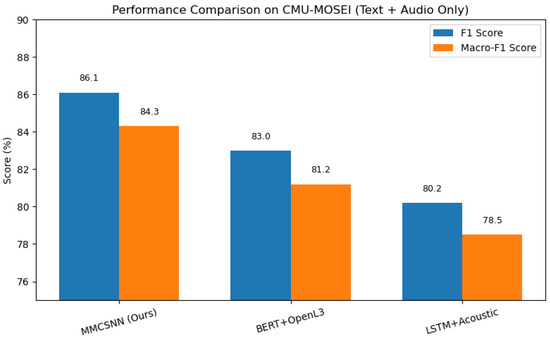

5.7. Generalization Across Datasets

To assess whether the MMCSNN can generalize beyond its original training distribution, we evaluate its performance on the CMU-MOSEI dataset. While CMU-MOSEI includes visual signals, only the text and audio modalities are used here to stay consistent with our model’s design, which excludes visual input in favor of streamlined bimodal processing.

We compare the MMCSNN with two commonly used bimodal baselines: BERT+OpenL3, which pairs contextual text embeddings with audio features from a pre-trained encoder, and LSTM+Acoustic, which combines sequential modeling of text with handcrafted prosodic descriptors. These baselines are chosen to match our two-modality setup and are widely adopted in related tasks involving sentiment and sarcasm analysis.

As reported in Figure 10, the MMCSNN reaches an F1-score of 86.1% and a Macro F1 of 84.3%, outperforming both baselines. This suggests that the model is capable of adapting to different data distributions and linguistic styles while maintaining effective cross-modal alignment.

Figure 10.

Performance comparison on CMU-MOSEI (text + audio only).

Overall, the MMCSNN improves on both baselines and transfers to a different dataset without relying on visual information, which further supports the generalization ability of the model.
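The two metrics reported in this section can differ noticeably on imbalanced data. The sketch below computes both, assuming that "F1-score" refers to the F1 of the positive (sarcastic) class and that Macro F1 is the unweighted mean of the per-class F1 values; the text does not spell out either definition, and the labels used here are made up for illustration.

```python
from typing import Hashable, Sequence


def f1_for_class(y_true: Sequence[Hashable], y_pred: Sequence[Hashable], label: Hashable) -> float:
    """F1 for one class, treating `label` as positive and every other label as negative."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def macro_f1(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Unweighted mean of per-class F1 over every label seen in the data."""
    labels = sorted(set(y_true) | set(y_pred))
    return sum(f1_for_class(y_true, y_pred, label) for label in labels) / len(labels)


# Hypothetical labels: 1 = sarcastic, 0 = non-sarcastic.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 0, 1]
print(f"positive-class F1: {f1_for_class(y_true, y_pred, 1):.3f}")
print(f"macro F1:          {macro_f1(y_true, y_pred):.3f}")
```

Macro averaging weights the sarcastic and non-sarcastic classes equally, so it is less affected by class imbalance than a single-class or accuracy-style score.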

5.8. Computational Complexity Analysis

To evaluate the practical feasibility of deploying the MMCSNN in real-world scenarios, we conducted an empirical analysis of its computational complexity. Table 8 reports the average inference time per sample and the total model size for the MMCSNN and several strong baselines. All the experiments were executed on a dual NVIDIA RTX 3090 GPU setup with a batch size of 32.

Table 8.

Comparison of inference time and model size.

Despite integrating multi-scale convolution and audio attribute modules, the MMCSNN exhibits a comparable inference time and a substantially smaller model size than the existing transformer-based multimodal models. Notably, the MMCSNN occupies only 30.2 MB, compared with 104.6 MB for BERT+VGGish and 837.6 MB for Wav2Vec2.0+BERT+CMA. This is because pre-trained encoders such as BERT and VGGish are used as frozen feature extractors and are not stored in the saved model, so the reported size covers only the components trained for the MMCSNN, which keeps the final deployable model lightweight and highly portable.

These results confirm that the MMCSNN achieves a favorable balance between performance and computational cost, making it more suitable for deployment in latency-sensitive or resource-constrained environments.
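The two quantities in Table 8 can be measured along the following lines. This is a framework-agnostic sketch with hypothetical helper names, not the measurement script used for the paper: `predict` is any callable that processes one batch, and the size estimate assumes the saved file holds only 32-bit weights and that 1 MB means 2^20 bytes, neither of which the text states.

```python
import time
from typing import Callable, Sequence


def avg_inference_ms_per_sample(
    predict: Callable[[Sequence], object],
    batches: Sequence[Sequence],
    warmup: int = 1,
) -> float:
    """Average wall-clock inference time per sample, in milliseconds."""
    if not batches or not any(len(b) for b in batches):
        raise ValueError("at least one non-empty batch is required")
    for batch in batches[:warmup]:
        predict(batch)  # warm-up runs are excluded from timing
    n_samples = 0
    start = time.perf_counter()
    for batch in batches:
        predict(batch)
        n_samples += len(batch)
    elapsed = time.perf_counter() - start
    return 1000.0 * elapsed / n_samples


def model_size_mb(num_parameters: int, bytes_per_param: int = 4) -> float:
    """Approximate on-disk size of a weights-only checkpoint (assumes float32 by default)."""
    return num_parameters * bytes_per_param / (1024 ** 2)


# Dummy predictor standing in for a model; batch size 32 matches the setup described above.
dummy_batches = [[0.0] * 32 for _ in range(10)]
print(avg_inference_ms_per_sample(lambda batch: [x * 2 for x in batch], dummy_batches))
print(model_size_mb(7_900_000))
```

Under these assumptions, a 30.2 MB checkpoint corresponds to roughly 7.9 million parameters. When timing on a GPU, the device should also be synchronized before each clock reading, since kernels run asynchronously.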

6. Conclusions and Future Work

This paper addresses the task of multimodal sarcasm detection and proposes the MMCSNN, a neural network that enriches feature information and comprehensively captures fused features through audio attributes and multi-scale convolution. The experimental results on multiple datasets show that the model exhibits strong robustness and generalization ability.

6.1. Main Contributions and Findings

The main contributions of this work are summarized as follows:

- Audio Attribute Integration: A novel attribute-based audio representation method is introduced to enhance semantic alignment between speech and text modalities. This mechanism strengthens cross-modal interaction and significantly improves sarcasm recognition performance.

- Multimodal Multi-Scale Neural Network: A multi-scale convolutional framework is developed to capture multi-level interactions between modalities. The proposed MMCSNN model outperforms competitive baselines across multiple benchmarks, demonstrating the effectiveness of hierarchical fusion for multimodal sarcasm detection.

6.2. Future Work

Future research can proceed in the following directions [38,39,40]:

- Finer-Grained Information Capture: Future research will focus on extracting more fine-grained emotional and prosodic features from both audio and text, with the aim of improving the model’s performance in handling nuanced or context-dependent sarcastic expressions.

- Multimodal Interaction Mechanism: Building upon the current audio–text fusion framework, the proposed mechanism can be extended to incorporate video-based features. This extension is expected to further enhance cross-modal interaction and enable the model to learn richer sarcasm representations from multimodal contexts.

Author Contributions

Conceptualization, H.W.; Methodology, H.W.; Software, Y.Z.; Validation, Y.Z.; Formal analysis, Y.Z.; Investigation, H.W. and H.Z.; Resources, Y.Z.; Data curation, H.Z.; Writing—original draft, Y.Z.; Writing—review & editing, H.W.; Visualization, L.Z.; Supervision, H.W.; Project administration, L.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Master’s Innovation Capacity Improvement Program of North China University of Water Resources and Electric Power, grant number NCWUYIC-202416097.

Institutional Review Board Statement

Ethical review and approval were waived for this study due to the use of publicly available anonymized datasets.

Informed Consent Statement

Not applicable.

Data Availability Statement

The MCSH dataset used in this study is publicly available at https://github.com/SYF111/MCSH (accessed on 16 May 2025).

Acknowledgments

The authors gratefully acknowledge the constructive feedback provided by the anonymous reviewers and thank their academic advisors for their continued support during the development of this work.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cui, J.; Wang, Z.; Ho, S.-B.; Cambria, E. Survey on sentiment analysis: Evolution of research methods and topics. Artif. Intell. Rev. 2023, 56, 8469–8510.

- Maynard, D.; Greenwood, M.A. Who cares about sarcastic tweets? Investigating the impact of sarcasm on sentiment analysis. In Proceedings of the LREC 2014 Proceedings, Language Resources and Evaluation Conference (LREC), Reykjavik, Iceland, 26–31 May 2014; pp. 4238–4243.

- Yue, T.; Mao, R.; Wang, H.; Hu, Z.; Cambria, E. KnowleNet: Knowledge Fusion Network for Multimodal Sarcasm Detection. Inf. Fusion 2023, 100, 101921.

- Pramanick, S.; Roy, A.; Patel, V.M. Multimodal learning using optimal transport for sarcasm and humor detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 3930–3940.

- Ghosh, A.; Veale, T. Learning to Detect Sarcasm in Multimodal Social Platforms. In Proceedings of the 2016 ACM Conf. Empirical Methods in Natural Language Processing (EMNLP), Austin, TX, USA, 1–5 November 2016; pp. 1003–1012.

- Joshi, A.; Sharma, V.; Bhattacharyya, P. Automatic sarcasm detection in speech using deep learning. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; p. 9053775.

- Kumar, A.; Sangwan, S.R.; Arora, A.; Nayyar, A. Combining Prosodic Features and Deep Neural Networks for Sarcasm Detection. In Proceedings of the IEEE Spoken Language Technology Workshop (SLT), San Diego, CA, USA, 13–16 December 2016; pp. 384–390. Available online: https://ieeexplore.ieee.org/document/7846283 (accessed on 15 May 2023).

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. EfficientViT: Multi-Scale Linear Attention for High-Resolution Dense Prediction. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–3 October 2023.

- Kovaz, D.; Kreuz, R.J.; Riordan, M.A. Distinguishing sarcasm from literal language: Evidence from books and blogging. Discourse Process. 2013, 50, 598–615.

- Kamal, A.; Abulaish, M. Cat-bigru: Convolution and attention with bi-directional gated recurrent unit for self-deprecating sarcasm detection. Cogn. Comput. 2022, 14, 91–109.

- Kumar, A.; Sangwan, S.R.; Arora, A.; Nayyar, A.; Abdel-Basset, M. Sarcasm detection using soft attention-based bidirectional long short-term memory model with convolution network. IEEE Access 2019, 7, 23319–23328.

- Zhang, M.; Zhang, Y.; Fu, G. Tweet sarcasm detection using deep neural network. In Proceedings of the COLING 2016, the 26th International Conference on Computational Linguistics: Technical Papers, Osaka, Japan, 11–16 December 2016; pp. 2449–2460.

- Bharti, S.K.; Babu, S.K.; Jena, S.K. Parsing-based sarcasm sentiment recognition in twitter data. In Proceedings of the 2015 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining, Paris, France, 25–28 August 2015; pp. 1373–1380.

- Tay, Y.; Tuan, L.A.; Hui, S.C.; Su, J. Reasoning with sarcasm by reading in-between. In Proceedings of the ACL, Melbourne, Australia, 15–20 July 2018; pp. 1010–1020.

- Liu, H.; Wei, R.; Tu, G.; Lin, J.; Liu, C.; Jiang, D. Sarcasm driven by sentiment: A sentiment-aware hierarchical fusion network for multimodal sarcasm detection. Inf. Fusion 2024, 108, 102353.

- Ghosh, A.; Veale, T. Magnets for sarcasm: Making sarcasm detection timely, contextual and very personal. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 9–11 September 2017; pp. 482–491.

- Eyben, F.; Weninger, F.; Groß, F.; Schuller, B. Recent developments in openSMILE, the Munich open-source multimedia feature extractor. In Proceedings of the 21st ACM International Conference on Multimedia, Barcelona, Spain, 21–25 October 2013; pp. 835–838.

- Eyben, F.; Wöllmer, M.; Schuller, B. openSMILE: The Munich versatile and fast open-source audio feature extractor. In Proceedings of the 18th ACM International Conference on Multimedia, Firenze, Italy, 25–29 October 2010; pp. 1459–1462.

- Hershey, S.; Chaudhuri, S.; Ellis, D.P.; Gemmeke, J.F.; Jansen, A.; Moore, R.C.; Plakal, M.; Platt, D.; Saurous, R.A.; Seybold, B.; et al. CNN architectures for large-scale audio classification. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 131–135.

- Singh, S.; Jain, A.; Sharma, V. Adversarial attack and defense in multimodal sarcasm detection. Multimed. Tools Appl. 2023, 83, 27667–27694.

- Babanejad, N.; Davoudi, H.; An, A.; Papagelis, M. Affective and contextual embedding for sarcasm detection. In Proceedings of the 28th International Conference on Computational Linguistics, Online, 8–13 December 2020; pp. 225–243.

- Wang, X.; Sun, X.; Yang, T.; Wang, H. Building a bridge: A method for image-text sarcasm detection without pretraining on image-text data. In Proceedings of the First International Workshop on Natural Language Processing Beyond Text, Online, 20 November 2020; pp. 19–29.

- Schmidt, M.; Wu, L.; Kalyan, P. Exploring Multimodal Fusion for Sarcasm Detection: The Interplay of Text and Image. IEEE Trans. Affect. Comput. 2023, 14, 567–578.

- Lu, H.; Li, H.; Liu, D. Robust speech recognition based on convolutional neural networks with auxiliary features. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 2221–2232.

- Malik, M.; Tomás, D.; Rosso, P. How challenging is multimodal irony detection? In Natural Language Processing and Information Systems; Métais, E., Meziane, F., Sugumaran, V., Manning, W., Reiff-Marganiec, S., Eds.; Springer Nature: Cham, Switzerland, 2023; pp. 18–32.

- Cai, Y.; Cai, H.; Wan, X. Multi-modal sarcasm detection in twitter with hierarchical fusion model. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 2506–2515.

- Tao, X.; Li, X.; Wu, W.; Li, J. On-device Structured and Context Partitioned Projection Networks. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 3778–3786.

- Liang, B.; Lou, C.; Li, X.; Gui, L.; Yang, M.; Xu, R. Multi-modal sarcasm detection with interactive in-modal and cross-modal graphs. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021; pp. 4707–4715.

- Liu, H.; Yang, B.; Yu, Z. A Multi-View Interactive Approach for Multimodal Sarcasm Detection in Social Internet of Things with Knowledge Enhancement. Appl. Sci. 2024, 14, 2146.

- Mallat, S. A Wavelet Tour of Signal Processing: The Sparse Way, 3rd ed.; Academic Press: Cambridge, MA, USA, 2008.

- Zeng, D.; Liu, K.; Lai, S.; Zhou, G.; Zhao, J. Relation classification via convolutional deep neural network. In Proceedings of the COLING 2014, the 25th International Conference on Computational Linguistics: Technical Papers, Dublin, Ireland, 23–29 August 2014; pp. 2335–2344. Available online: https://aclanthology.org/C14-1220 (accessed on 18 May 2025).

- Ghosh, D.S.B.; Prasad, H.K.V.C.; Prasad, N.M. Diverse Gaussian noise consistency regularization for robustness and uncertainty calibration. arXiv 2021, arXiv:2104.01231.

- Cramer, J.; Wu, H.-H.; Salamon, J.; Bello, J.P. Look, Listen, and Learn More: Design Choices for Deep Audio Embeddings. In Proceedings of the ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 3852–3856.

- Zhong, W.; Zhang, Z.; Wu, Q.; Xue, Y.; Cai, Q. A Semantic Enhancement Framework for Multimodal Sarcasm Detection. Mathematics 2024, 12, 317.

- Kim, Y. Convolutional Neural Networks for Sentence Classification. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1746–1751.

- Davis, S.; Mermelstein, P. Comparison of parametric representations for monosyllabic word recognition in continuously spoken sentences. IEEE Trans. Acoust. Speech Signal Process. 1980, 28, 357–366.

- Tsai, Y.-H.H.; Bai, S.; Liang, P.P.; Zadeh, A.; Morency, L.-P. Multimodal Transformer for Unaligned Multimodal Language Sequences. In Proceedings of the 57th Conference, Association for Computational Linguistics (ACL), Florence, Italy, 28 July–2 August 2019; pp. 6558–6569.

- Joshi, A.; Sharma, V.; Bhattacharyya, P. Harnessing context incongruity for sarcasm detection. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 2: Short Papers), Beijing, China, 26–31 July 2015; pp. 757–762.

- Chaudhari, P.; Chandankhede, C. Literature survey of sarcasm detection. In Proceedings of the 2017 International Conference on Wireless Communications, Signal Processing and Networking (WiSPNET), Chennai, India, 22–24 March 2017; pp. 2041–2046.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 7–9 July 2015; pp. 448–456.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).