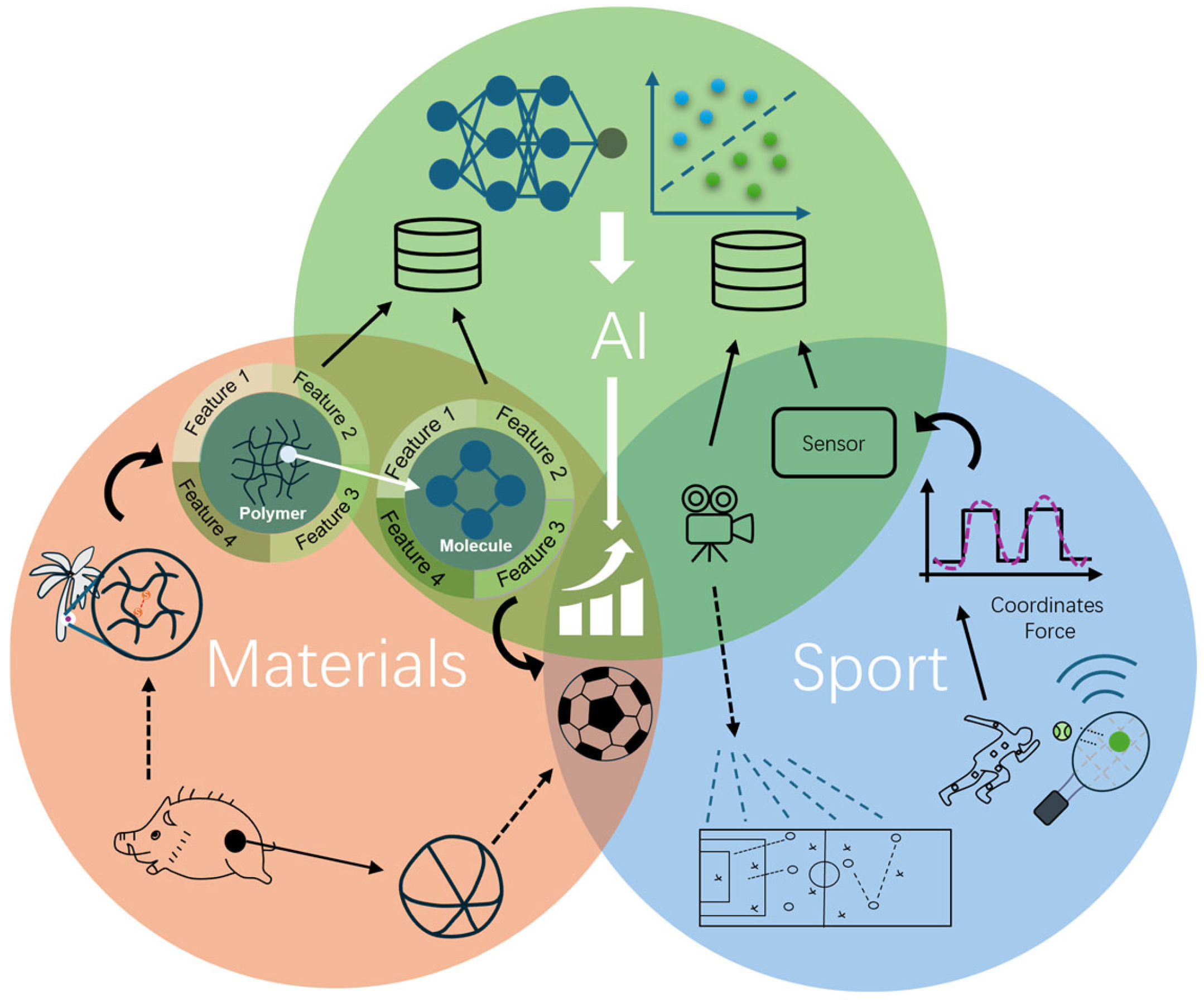

The AI-Driven Transformation in New Materials Manufacturing and the Development of Intelligent Sports

Abstract

1. The Evolution of Sports: A Synergy of Historical Development and Technological Innovation

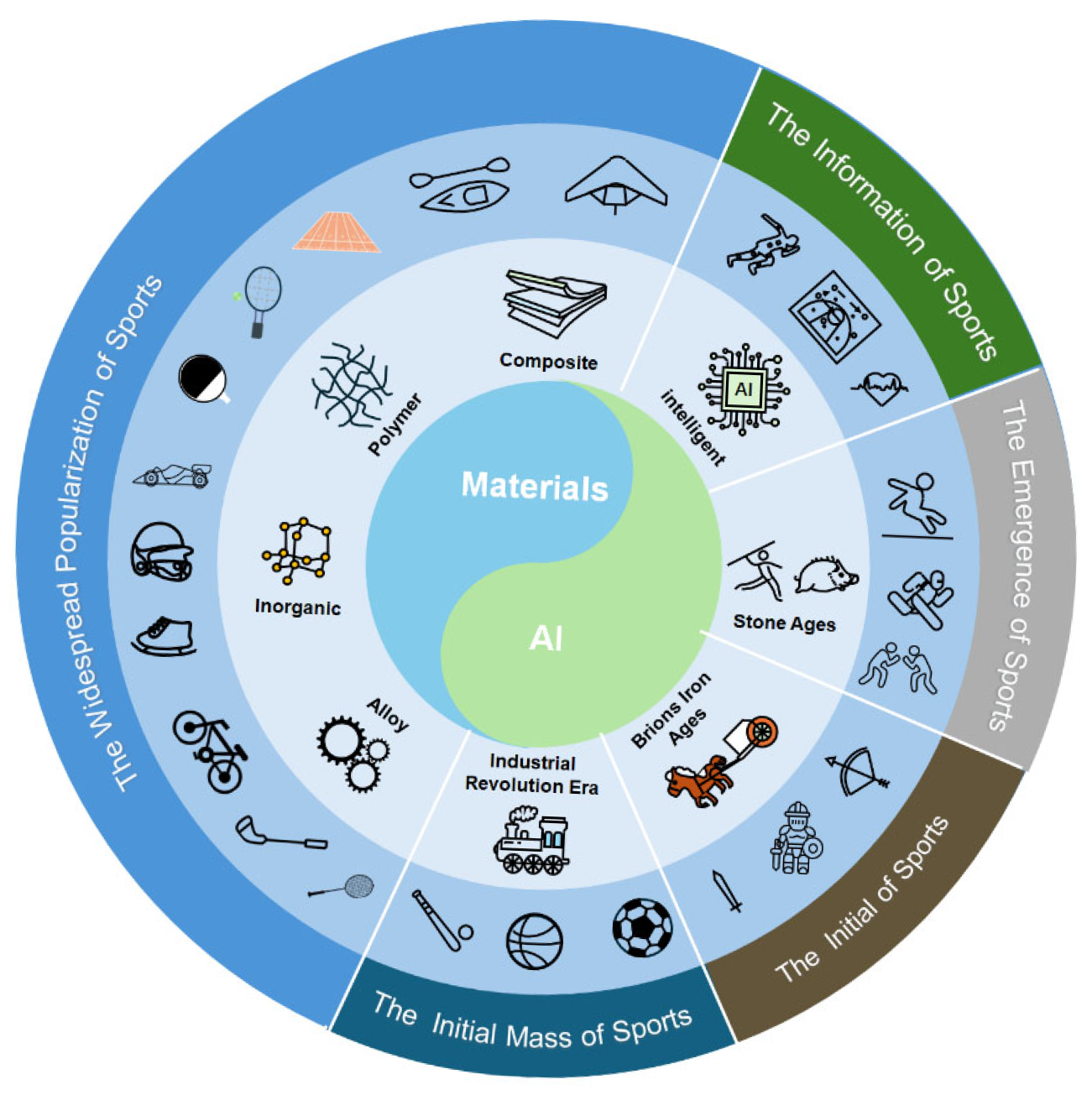

1.1. The Evolution of Sports from a Technology-Driven Perspective: From Material Innovation to Intelligent Integration

1.2. The Evolution of Sports from a Historical Development Perspective: From the Stone Age to the Intelligent Age

2. The Predicament of Material Research and Development and Driving Forces Behind the Rise of Artificial Intelligence

2.1. From Traditional Trial and Error to Intelligent Design: The Transformation of the AI Materials Research and Development Paradigm Driven by Computational Materials Science

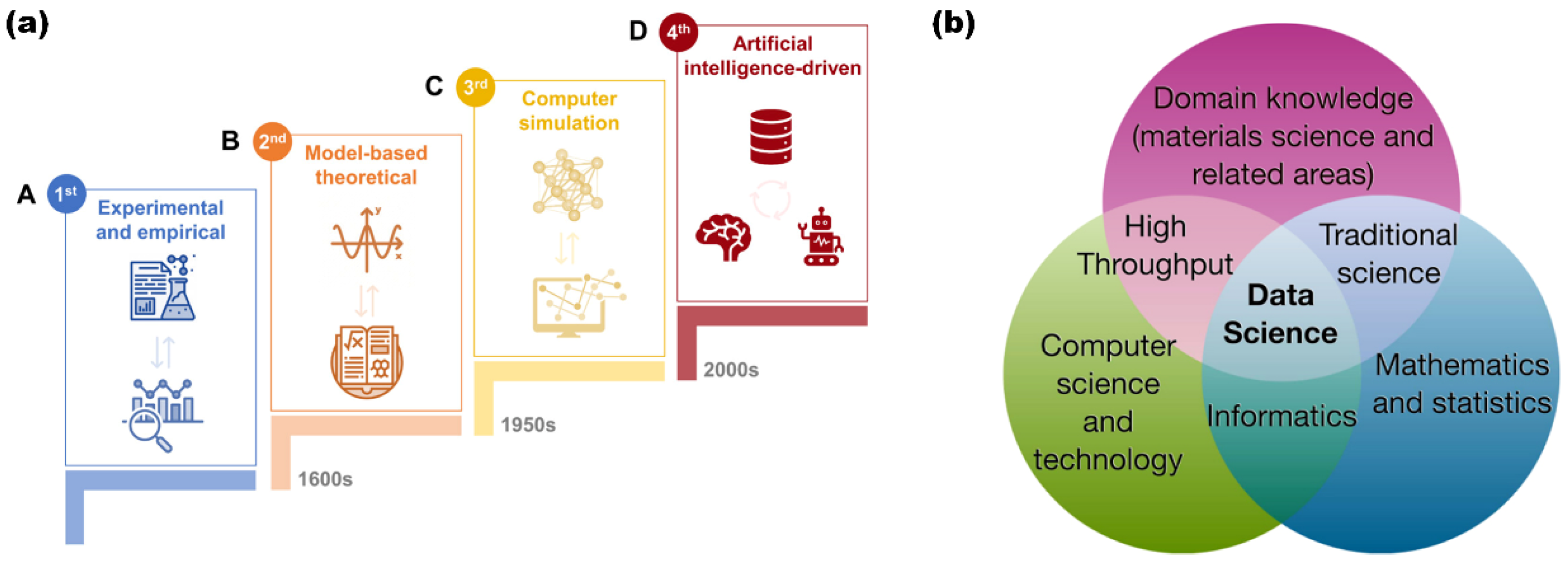

2.2. The Evolution of Computational Materials Science

2.3. Barriers to the Development of Computational Materials Science

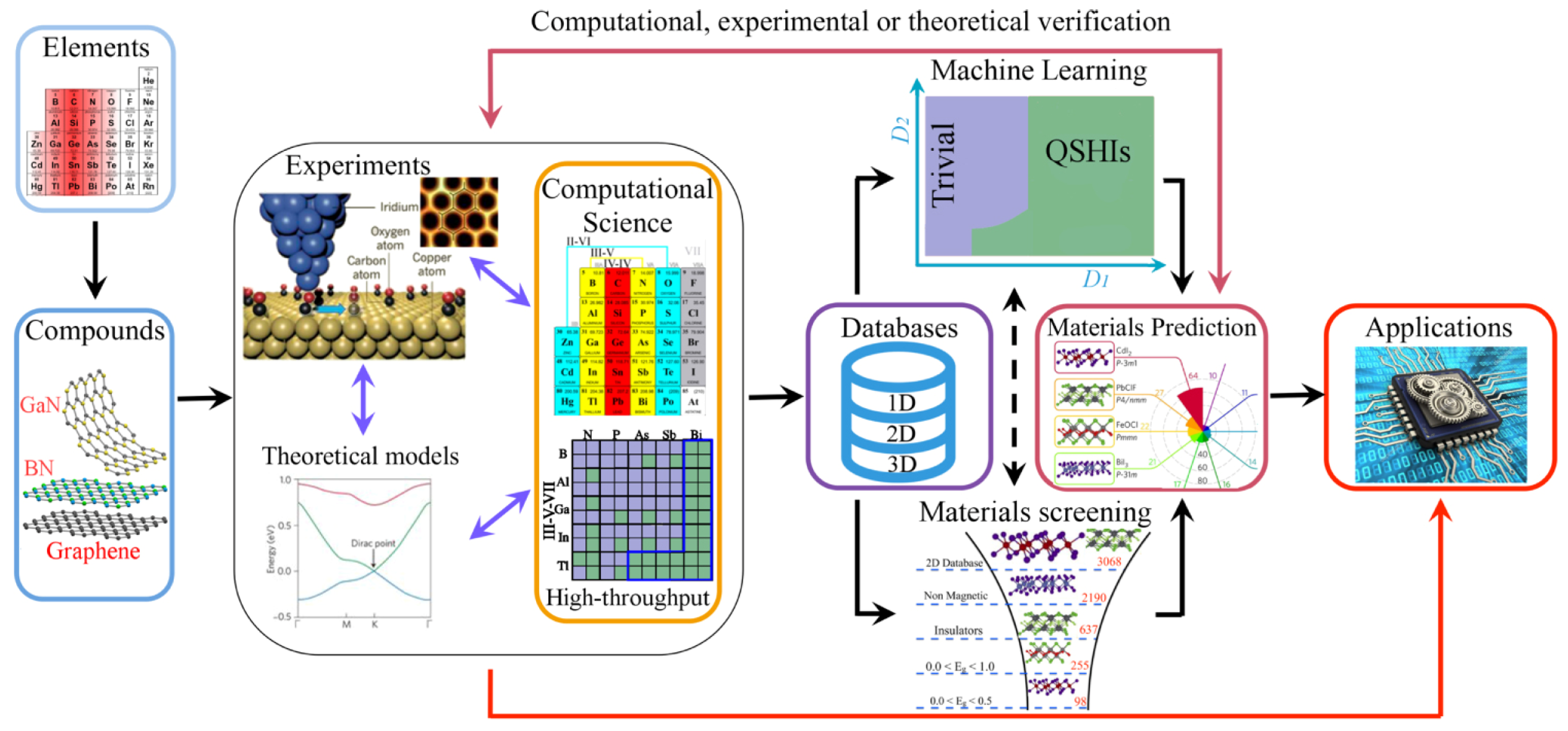

3. AI Transforms the Development of Computational Materials Science

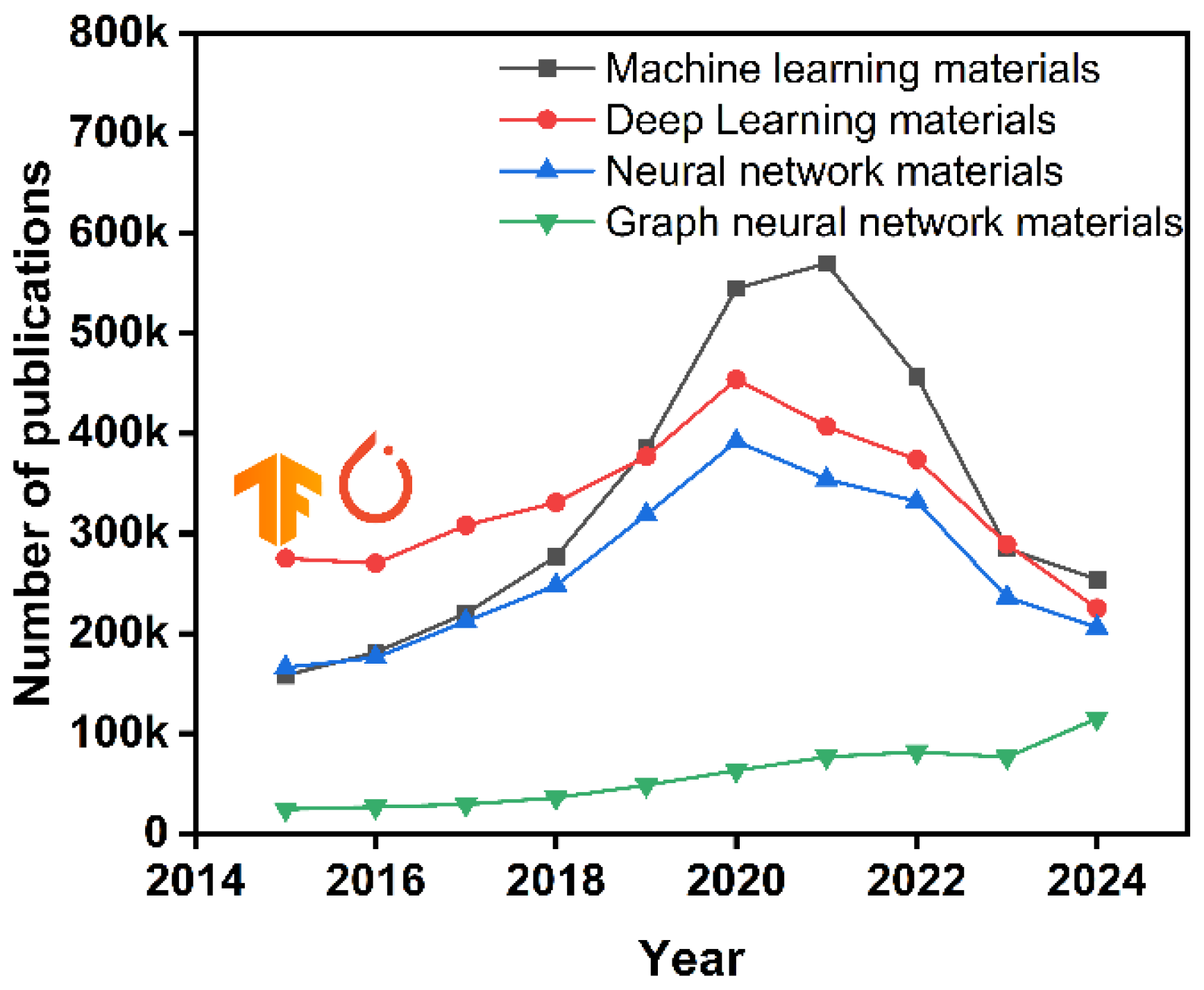

3.1. The Connection Between AI and Materials

3.2. AI in Materials Science: Current Applications and Prospects

3.3. The Model Form That Combines Materials Science and AI

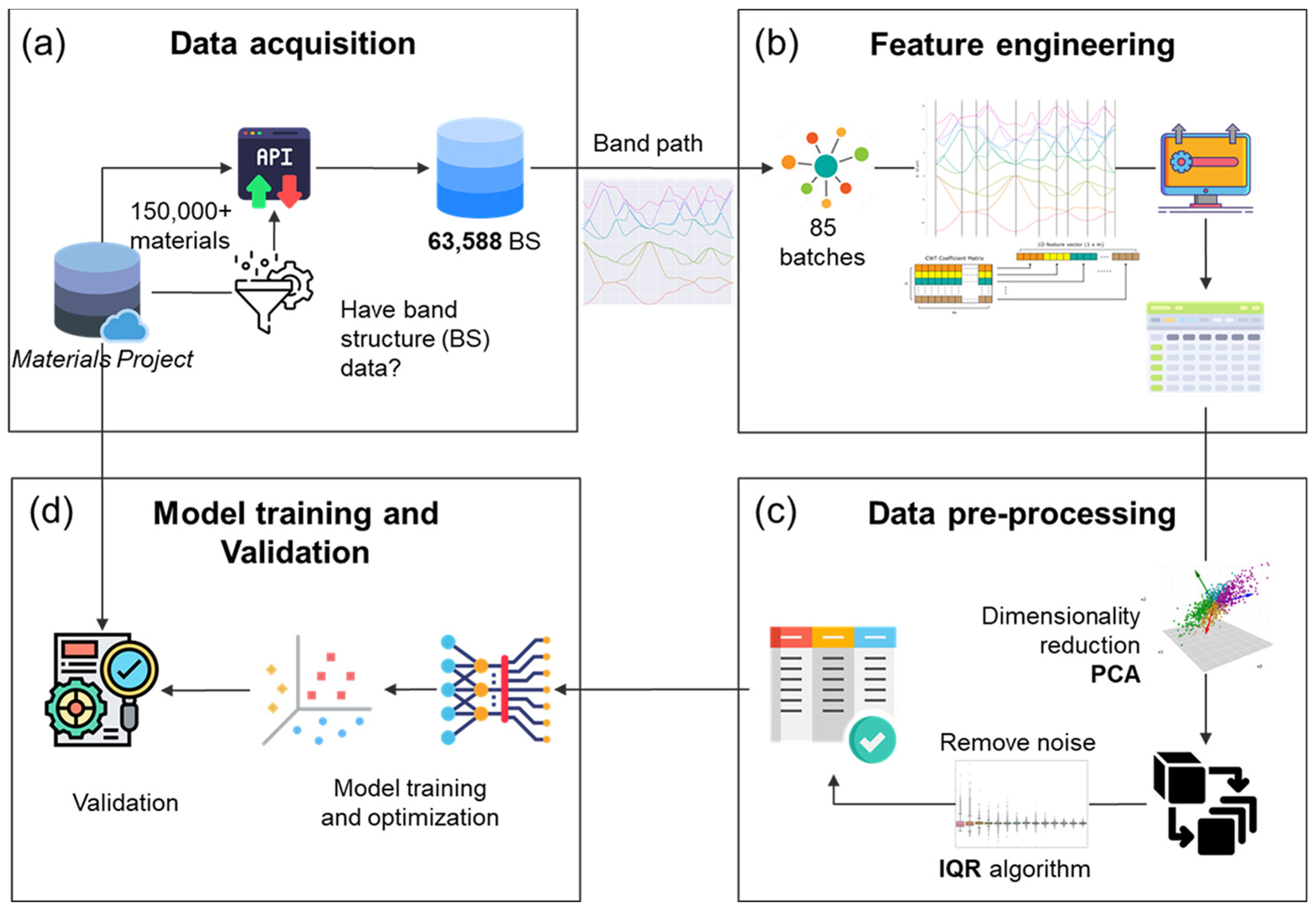

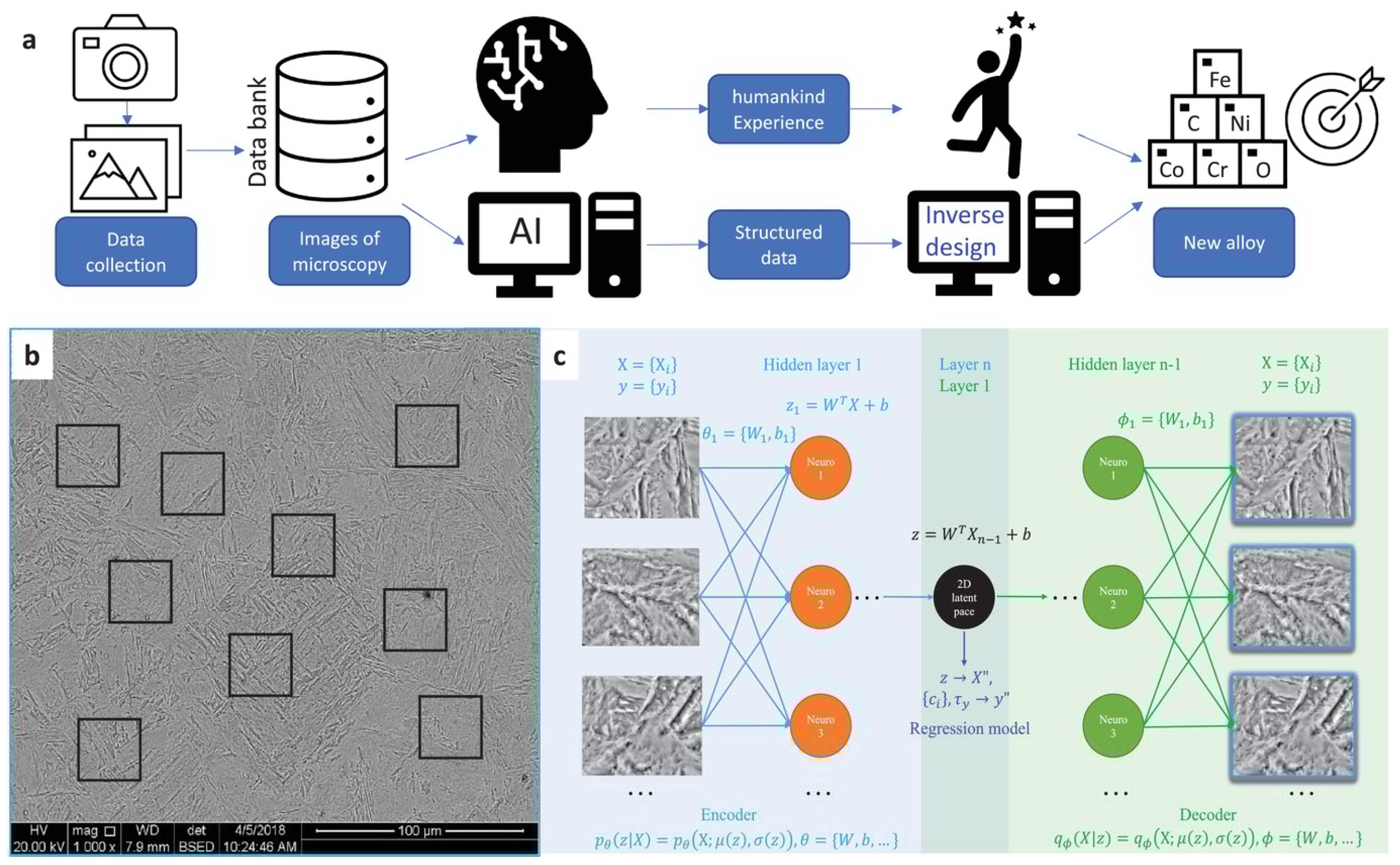

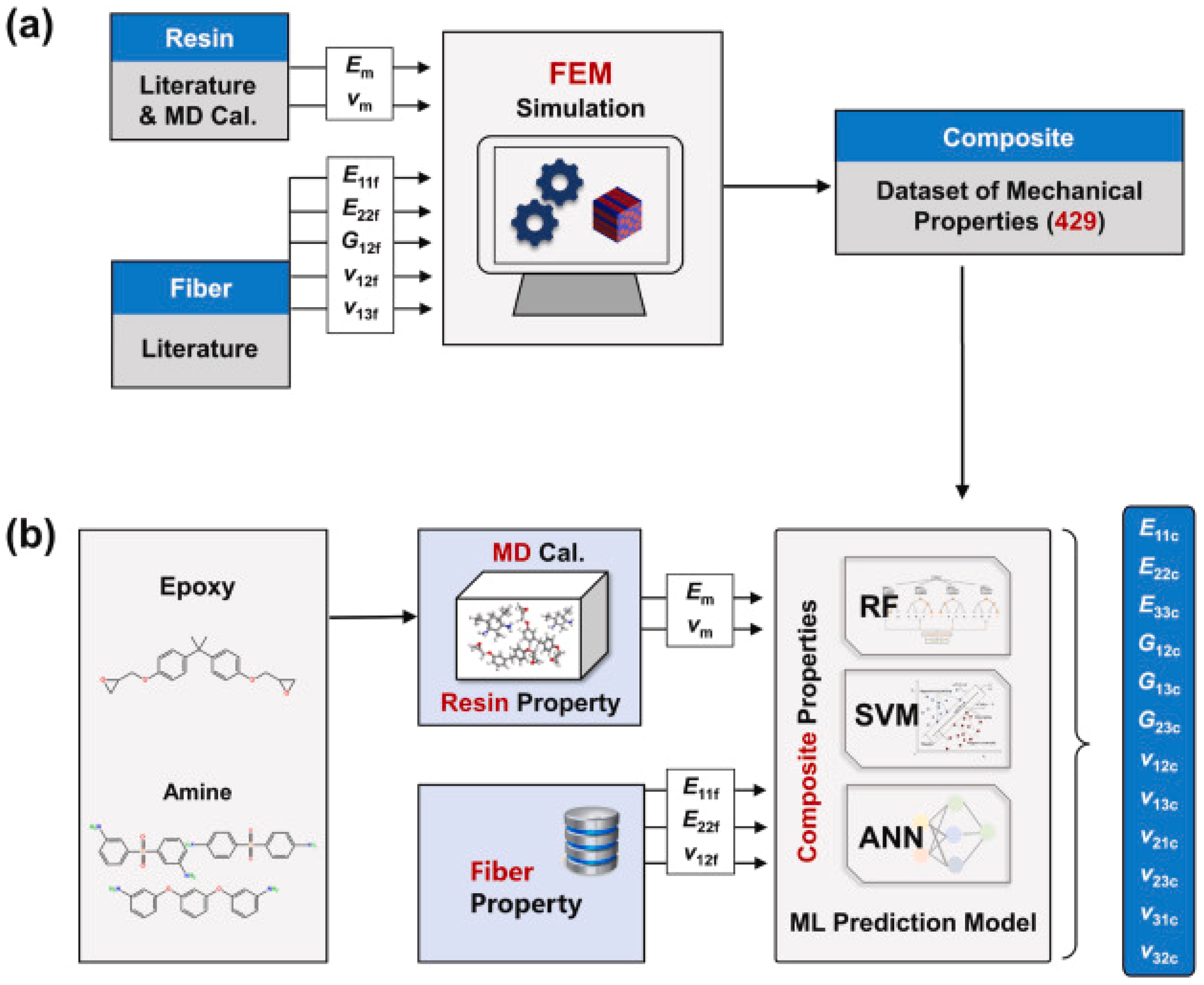

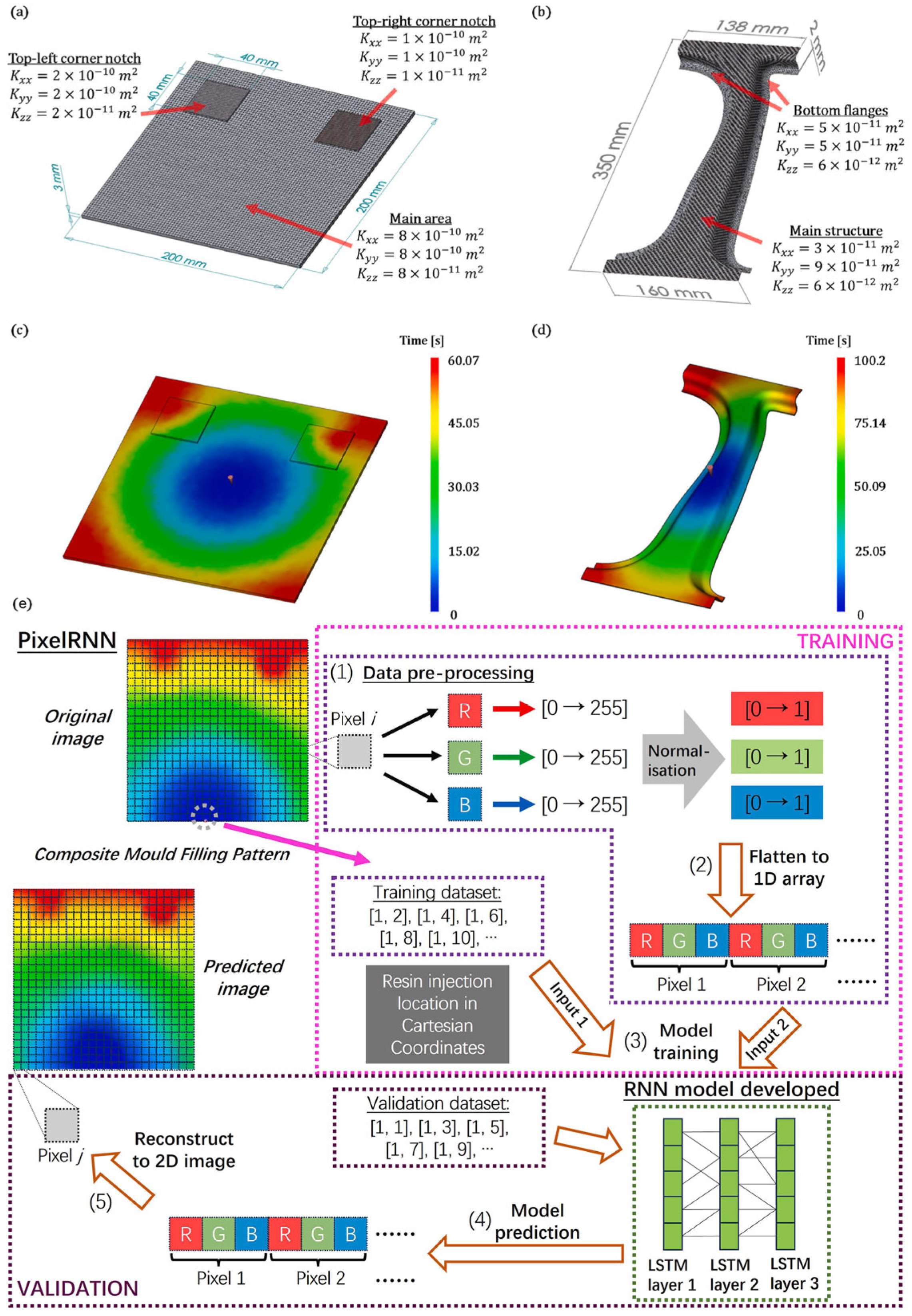

3.3.1. Machine Learning and Neural Network Data-Driven Models in Materials Science

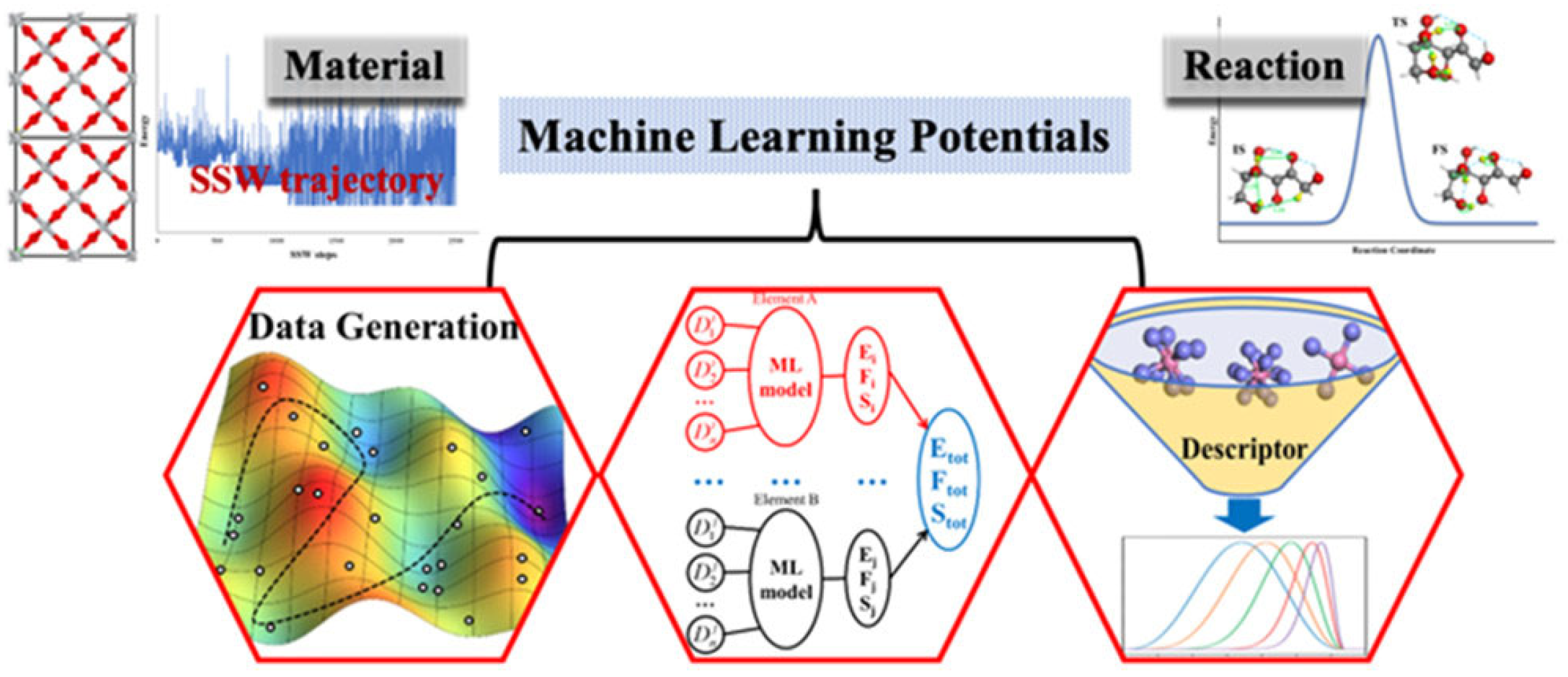

3.3.2. Machine Learning Potentials in Materials Science

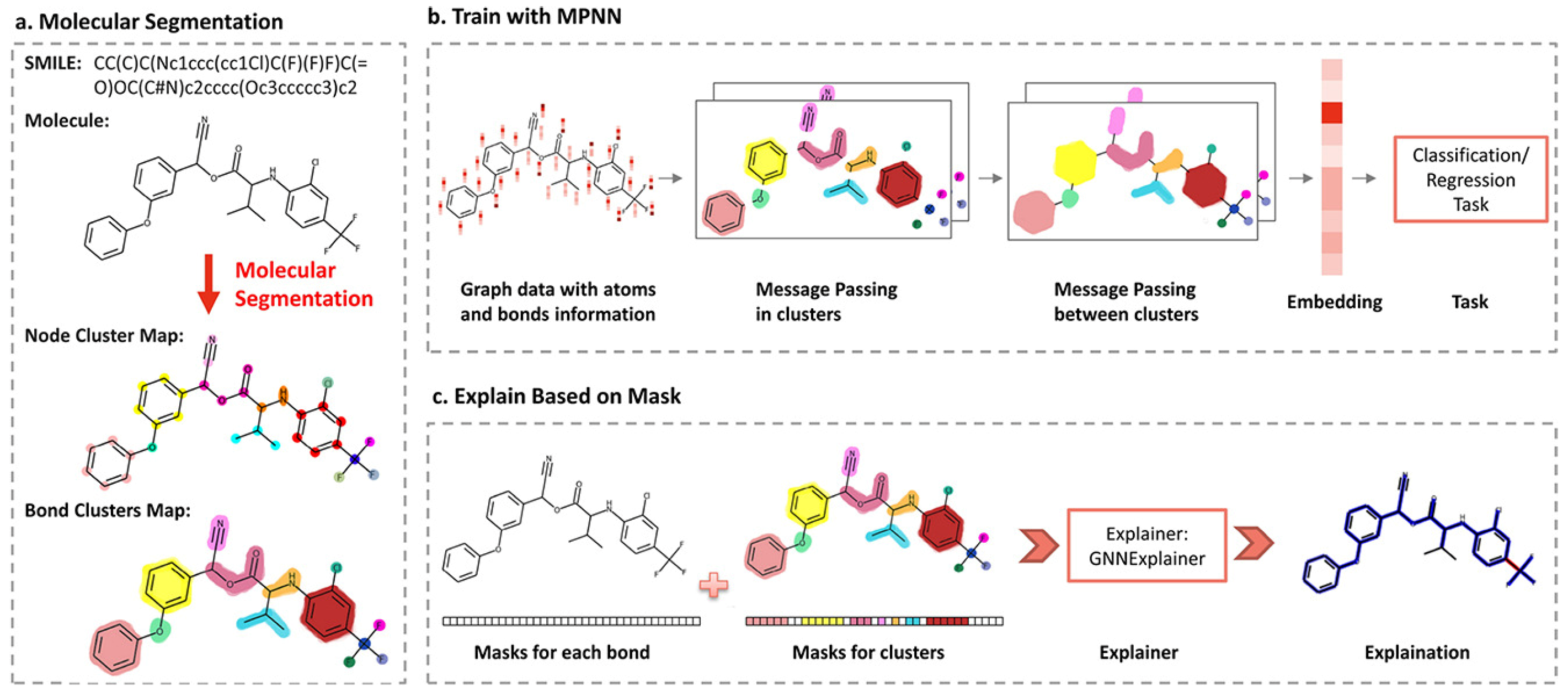

3.3.3. Graph Neural Network Based on Molecular Structure

3.3.4. The Differences and Connections Among the Three Models

4. The Application of Artificial Intelligence in the Design of Basic Materials for Sports

4.1. Piezoelectric Materials

4.2. Polymer Materials

4.3. Ceramic Materials

4.4. Metallic Materials

4.5. Composite Materials

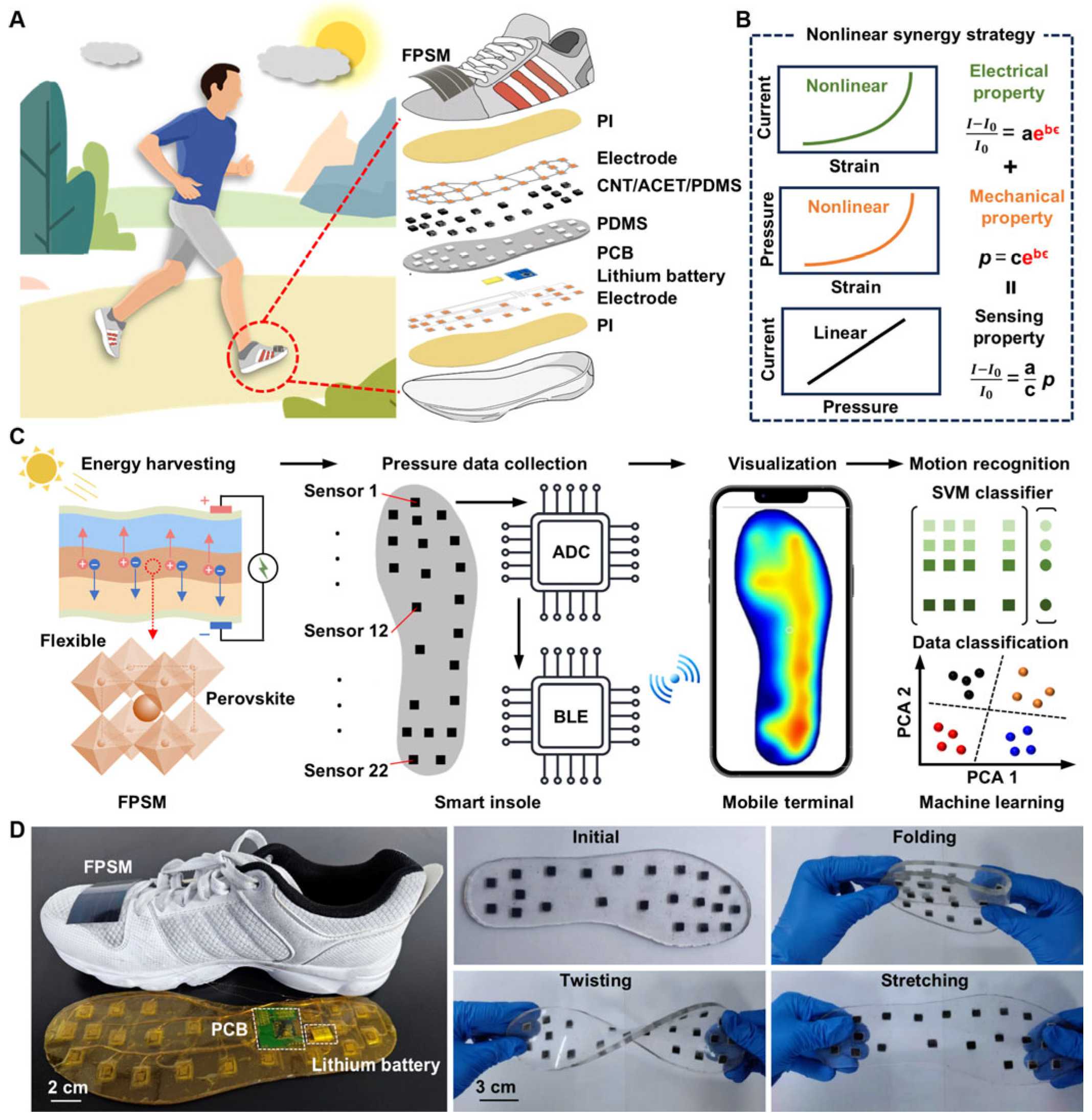

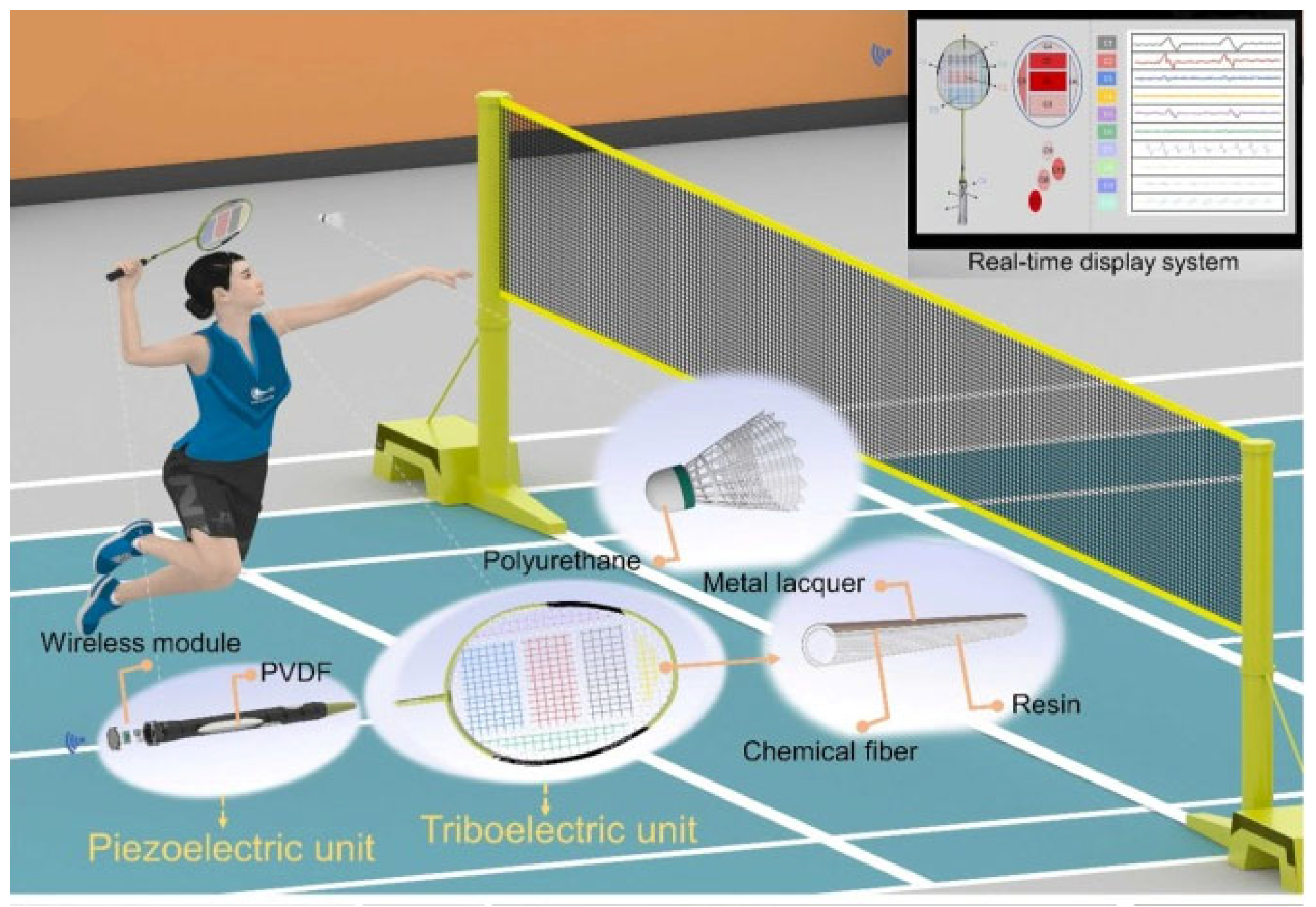

5. Multifunctional Material Integrated Devices Enhance Sports Data Collection and AI Analysis

6. AI and Sports Data

6.1. Application of AI in Sports Data Analysis

6.2. AI in Sports Data: Privacy, Data Integrity, and Anti-Tampering Risks

7. The Application of Dual Drivers of Materials and AI in Sports Events

8. Conclusions and Future Prospects

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Delaney, T.; Madigan, T. The Sociology of Sports: An Introduction; McFarland: Jefferson, NC, USA, 2021.
2. Savage, G. Formula 1 Composites Engineering. Eng. Fail. Anal. 2010, 17, 92–115.
3. Arderiu, A.; De Fondeville, R. Influence of advanced footwear technology on sub-2 hour marathon and other top running performances. J. Quant. Anal. Sports 2022, 18, 73–86.
4. Morales, A.T.; Tamayo Fajardo, J.A.; González-García, H. High-Speed Swimsuits and Their Historical Development in Competitive Swimming. Front. Psychol. 2019, 10, 2639.
5. Ma, W.; Liu, Y.; Yi, Q.; Liu, X.; Xing, W.; Zhao, R.; Liu, H.; Li, R. Table tennis coaching system based on a multimodal large language model with a table tennis knowledge base. PLoS ONE 2025, 20, e0317839.
6. Rowe, D. Sport, Culture and the Media: The Unruly Trinity, 1st ed.; Lü, P., Translator; Tsinghua University Press: Beijing, China, 2013.
7. Memmert, D. (Ed.) Sports Technology: Technologies, Fields of Application, Sports Equipment and Materials for Sport; Springer: Berlin/Heidelberg, Germany, 2024.
8. Amzallag, N. From Metallurgy to Bronze Age Civilizations: The Synthetic Theory. Am. J. Archaeol. 2009, 113, 497–519.
9. Kidd, B. Onward to the Olympics: Historical Perspectives on the Olympic Games. Univ. Tor. Q. 2009, 78, 342–344.
10. Bennett, J.M.; Hollister, C.W. Medieval Europe: A Short History; McGraw-Hill: New York, NY, USA, 2006.
11. Flory, P.J. Network Structure and the Elastic Properties of Vulcanized Rubber. Chem. Rev. 1944, 35, 51–75.
12. Wang, S.; Yu, S. Advances in Biomimetic Materials. Small Methods 2024, 8, 2301487.
13. An, S.; Yoon, S.S.; Lee, M.W. Self-Healing Structural Materials. Polymers 2021, 13, 2297.
14. Hong, J.-W.; Yoon, C.; Jo, K.; Won, J.H.; Park, S. Recent advances in recording and modulation technologies for next-generation neural interfaces. iScience 2021, 24, 103550.
15. Yang, K.; McErlain-Naylor, S.A.; Isaia, B.; Callaway, A.; Beeby, S. E-Textiles for Sports and Fitness Sensing: Current State, Challenges, and Future Opportunities. Sensors 2024, 24, 1058.
16. Drăgulinescu, A.; Drăgulinescu, A.-M.; Zincă, G.; Bucur, D.; Feieș, V.; Neagu, D.-M. Smart Socks and In-Shoe Systems: State-of-the-Art for Two Popular Technologies for Foot Motion Analysis, Sports, and Medical Applications. Sensors 2020, 20, 4316.
17. He, Q.; Zeng, Y.; Jiang, L.; Wang, Z.; Lu, G.; Kang, H.; Li, P.; Bethers, B.; Feng, S.; Sun, L.; et al. Growing recyclable and healable piezoelectric composites in 3D printed bioinspired structure for protective wearable sensor. Nat. Commun. 2023, 14, 6477.
18. Callister, W.D.; Rethwisch, D.G. Fundamentals of Materials Science and Engineering; Wiley: London, UK, 2000.
19. Bello, S.A. Carbon-Fiber Composites: Development, Structure, Properties, and Applications. In Handbook of Nanomaterials and Nanocomposites for Energy and Environmental Applications; Kharissova, O.V., Torres-Martínez, L.M., Kharisov, B.I., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 63–84.
20. Ogura, M.; Fukushima, T.; Zeller, R.; Dederichs, P.H. Structure of the high-entropy alloy AlxCrFeCoNi: Fcc versus bcc. J. Alloys Compd. 2017, 715, 454–459.
21. Neelam, R.; Kulkarni, S.A.; Bharath, H.S.; Powar, S.; Doddamani, M. Mechanical response of additively manufactured foam: A machine learning approach. Results Eng. 2022, 16, 100801.
22. Bhaduri, A.; Gupta, A.; Graham-Brady, L. Stress field prediction in fiber-reinforced composite materials using a deep learning approach. Compos. Part B Eng. 2022, 238, 109879.
23. LeSar, R. Introduction to Computational Materials Science: Fundamentals to Applications; Cambridge University Press: Cambridge, UK, 2013.
24. Louie, S.G.; Chan, Y.-H.; Da Jornada, F.H.; Li, Z.; Qiu, D.Y. Discovering and understanding materials through computation. Nat. Mater. 2021, 20, 728–735.
25. Ohno, K.; Esfarjani, K.; Kawazoe, Y. Computational Materials Science: From Ab Initio to Monte Carlo Methods, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2018.
26. Gao, L.; Lin, J.; Wang, L.; Du, L. Machine Learning-Assisted Design of Advanced Polymeric Materials. Acc. Mater. Res. 2024, 5, 571–584.
27. Schleder, G.R.; Padilha, A.C.M.; Acosta, C.M.; Costa, M.; Fazzio, A. From DFT to machine learning: Recent approaches to materials science—A review. J. Phys. Mater. 2019, 2, 032001.
28. Kohn, W.; Sham, L.J. Self-Consistent Equations Including Exchange and Correlation Effects. Phys. Rev. 1965, 140, A1133–A1138.
29. Rapaport, D.C. The Art of Molecular Dynamics Simulation; Cambridge University Press: Cambridge, UK, 2004.
30. De Pablo, J.J.; Jackson, N.E.; Webb, M.A.; Chen, L.-Q.; Moore, J.E.; Morgan, D.; Jacobs, R.; Pollock, T.; Schlom, D.G.; Toberer, E.S.; et al. New frontiers for the materials genome initiative. NPJ Comput. Mater. 2019, 5, 41.
31. Curtarolo, S.; Setyawan, W.; Hart, G.L.; Jahnatek, M.; Chepulskii, R.V.; Taylor, R.H.; Wang, S.; Xue, J.; Yang, K.; Levy, O.; et al. AFLOW: An automatic framework for high-throughput materials discovery. Comput. Mater. Sci. 2012, 58, 218–226.
32. Saal, J.E.; Kirklin, S.; Aykol, M.; Meredig, B.; Wolverton, C. Materials design and discovery with high-throughput density functional theory: The open quantum materials database (OQMD). JOM 2013, 65, 1501–1509.
33. Levine, I.N.; Busch, D.H.; Shull, H. Quantum Chemistry; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2009.
34. Winston, P.H. Artificial Intelligence; Addison-Wesley Longman Publishing Co., Inc.: Boston, MA, USA, 1984.
35. Hjorth Larsen, A.; Jørgen Mortensen, J.; Blomqvist, J.; Castelli, I.E.; Christensen, R.; Dułak, M.; Friis, J.; Groves, M.N.; Hammer, B.; Hargus, C.; et al. The atomic simulation environment—A Python library for working with atoms. J. Phys. Condens. Matter 2017, 29, 273002.
36. Ong, S.P.; Richards, W.D.; Jain, A.; Hautier, G.; Kocher, M.; Cholia, S.; Gunter, D.; Chevrier, V.L.; Persson, K.A.; Ceder, G. Python Materials Genomics (pymatgen): A robust, open-source python library for materials analysis. Comput. Mater. Sci. 2013, 68, 314–319.
37. Bento, A.P.; Hersey, A.; Félix, E.; Landrum, G.; Gaulton, A.; Atkinson, F.; Bellis, L.J.; De Veij, M.; Leach, A.R. An open source chemical structure curation pipeline using RDKit. J. Cheminform. 2020, 12, 51.
38. Linstrom, P.J.; Mallard, W.G. The NIST Chemistry WebBook: A Chemical Data Resource on the Internet. J. Chem. Eng. Data 2001, 46, 1059–1063.
39. Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A System for Large-Scale Machine Learning. In Proceedings of the 12th USENIX conference on Operating Systems Design and Implementation, Savannah, GA, USA, 2–4 November 2016.
40. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Proceedings of the Advances in Neural Information Processing Systems 32, Vancouver Convention Centre, Vancouver, BC, Canada, 8–14 December 2019.
41. Wu, Z.; Pan, S.; Chen, F.; Long, G.; Zhang, C.; Yu, P.S. A Comprehensive Survey on Graph Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 4–24.
42. Skolnick, J.; Gao, M.; Zhou, H.; Singh, S. AlphaFold 2: Why It Works and Its Implications for Understanding the Relationships of Protein Sequence, Structure, and Function. J. Chem. Inf. Model. 2021, 61, 4827–4831.
43. Butler, K.T.; Davies, D.W.; Cartwright, H.; Isayev, O.; Walsh, A. Machine learning for molecular and materials science. Nature 2018, 559, 547–555.
44. Sha, W.; Edwards, K.L. The use of artificial neural networks in materials science based research. Mater. Des. 2007, 28, 1747–1752.
45. Behler, J. Perspective: Machine learning potentials for atomistic simulations. J. Chem. Phys. 2016, 145, 170901.
46. Sinha, P.; Joshi, A.; Dey, R.; Misra, S. Machine-Learning-Assisted Materials Discovery from Electronic Band Structure. J. Chem. Inf. Model. 2024, 64, 8404–8413.
47. Pei, Z.; Rozman, K.A.; Doğan, Ö.N.; Wen, Y.; Gao, N.; Holm, E.A.; Hawk, J.A.; Alman, D.E.; Gao, M.C. Machine-Learning Microstructure for Inverse Material Design. Adv. Sci. 2021, 8, 2101207.
48. Chan, C.H.; Sun, M.; Huang, B. Application of machine learning for advanced material prediction and design. EcoMat 2022, 4, e12194.
49. Wang, A.Y.-T.; Mahmoud, M.S.; Czasny, M.; Gurlo, A. CrabNet for Explainable Deep Learning in Materials Science: Bridging the Gap Between Academia and Industry. Integr. Mater. Manuf. Innov. 2022, 11, 41–56.
50. Du, H.; Hui, J.; Zhang, L.; Wang, H. Rational Design of Deep Learning Networks Based on a Fusion Strategy for Improved Material Property Predictions. J. Chem. Theory Comput. 2024, 20, 6756–6771.
51. Kresse, G.; Hafner, J. Ab initio molecular dynamics for liquid metals. Phys. Rev. B 1993, 47, 558–561.
52. Kang, P.-L.; Shang, C.; Liu, Z.-P. Large-Scale Atomic Simulation via Machine Learning Potentials Constructed by Global Potential Energy Surface Exploration. Acc. Chem. Res. 2020, 53, 2119–2129.
53. Behler, J.; Parrinello, M. Generalized Neural-Network Representation of High-Dimensional Potential-Energy Surfaces. Phys. Rev. Lett. 2007, 98, 146401.
54. Bartók, A.P.; Payne, M.C.; Kondor, R.; Csányi, G. Gaussian Approximation Potentials: The Accuracy of Quantum Mechanics, without the Electrons. Phys. Rev. Lett. 2010, 104, 136403.
55. Thompson, A.P.; Swiler, L.P.; Trott, C.R.; Foiles, S.M.; Tucker, G.J. Spectral neighbor analysis method for automated generation of quantum-accurate interatomic potentials. J. Comput. Phys. 2015, 285, 316–330.
56. Wang, H.; Zhang, L.; Han, J.; E, W. DeePMD-kit: A deep learning package for many-body potential energy representation and molecular dynamics. Comput. Phys. Commun. 2018, 228, 178–184.
57. Kovács, D.P.; Oord, C.V.D.; Kucera, J.; Allen, A.E.A.; Cole, D.J.; Ortner, C.; Csányi, G. Linear Atomic Cluster Expansion Force Fields for Organic Molecules: Beyond RMSE. J. Chem. Theory Comput. 2021, 17, 7696–7711.
58. Hedman, D.; McLean, B.; Bichara, C.; Maruyama, S.; Larsson, J.A.; Ding, F. Dynamics of growing carbon nanotube interfaces probed by machine learning-enabled molecular simulations. Nat. Commun. 2024, 15, 4076.
59. McCandler, C.A.; Pihlajamäki, A.; Malola, S.; Häkkinen, H.; Persson, K.A. Gold–Thiolate Nanocluster Dynamics and Intercluster Reactions Enabled by a Machine Learned Interatomic Potential. ACS Nano 2024, 18, 19014–19023.
60. Chen, Z.; Li, D.; Liu, M.; Liu, J. Graph neural networks with molecular segmentation for property prediction and structure–property relationship discovery. Comput. Chem. Eng. 2023, 179, 108403.
61. Gilmer, J.; Schoenholz, S.S.; Riley, P.F.; Vinyals, O.; Dahl, G.E. Neural Message Passing for Quantum Chemistry. In Proceedings of the 34th International Conference on Machine Learning, Sydney, NSW, Australia, 6–11 August 2017.
62. Schütt, K.T.; Sauceda, H.E.; Kindermans, P.-J.; Tkatchenko, A.; Müller, K.-R. SchNet–A deep learning architecture for molecules and materials. J. Chem. Phys. 2018, 148, 241722.
63. Yun, S.; Jeong, M.; Kim, R.; Kang, J.; Kim, H.J. Graph transformer networks. In Advances in Neural Information Processing Systems, Proceedings of the 2019 Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Neural Information Processing Systems Foundation, Inc. (NeurIPS): San Diego, CA, USA, 2019.
64. Louis, S.-Y.; Zhao, Y.; Nasiri, A.; Wang, X.; Song, Y.; Liu, F.; Hu, J. Graph convolutional neural networks with global attention for improved materials property prediction. Phys. Chem. Chem. Phys. 2020, 22, 18141–18148.
65. Lai, X.; Yang, P.; Wang, K.; Yang, Q.; Yu, D. MGRNN: Structure Generation of Molecules Based on Graph Recurrent Neural Networks. Mol. Inform. 2021, 40, 2100091.
66. Korolev, V.; Mitrofanov, A. Coarse-Grained Crystal Graph Neural Networks for Reticular Materials Design. J. Chem. Inf. Model. 2024, 64, 1919–1931.
67. Dong, Z.; Feng, J.; Ji, Y.; Li, Y. SLI-GNN: A Self-Learning-Input Graph Neural Network for Predicting Crystal and Molecular Properties. J. Phys. Chem. A 2023, 127, 5921–5929.
68. Zhao, Z.; Li, H.-F. Investigating Material Interface Diffusion Phenomena through Graph Neural Networks in Applied Materials. ACS Appl. Mater. Interfaces 2024, 16, 53153–53162.
69. Jing, H.; Guan, C.; Yang, Y.; Zhu, H. Machine learning-assisted design of AlN-based high-performance piezoelectric materials. J. Mater. Chem. A 2023, 11, 14840–14849.
70. Singh, K.; Adhikari, J.; Roscow, J. Prediction of the electromechanical properties of a piezoelectric composite material through the artificial neural network. Mater. Today Commun. 2024, 38, 108288.
71. Zhao, G.; Xu, T.; Fu, X.; Zhao, W.; Wang, L.; Lin, J.; Hu, Y.; Du, L. Machine-learning-assisted multiscale modeling strategy for predicting mechanical properties of carbon fiber reinforced polymers. Compos. Sci. Technol. 2024, 248, 110455.
72. Yuan, Z. Predicting mechanical behaviors of rubber materials with artificial neural networks. Int. J. Mech. Sci. 2023, 249, 108265.
73. Qian, H.; Zheng, J.; Wang, Y.; Jiang, D. Fatigue Life Prediction Method of Ceramic Matrix Composites Based on Artificial Neural Network. Appl. Compos. Mater. 2023, 30, 1251–1268.
74. Rocha, H.R.O.; Roukos, R.; Abou Dargham, S.; Romanos, J.; Chaumont, D.; Silva, J.A.L.; Wörtche, H. Optimizing a machine learning design of dielectric properties in lead-free piezoelectric ceramics. Mater. Des. 2024, 243, 113053.
75. Morand, L.; Iraki, T.; Dornheim, J.; Sandfeld, S.; Link, N.; Helm, D. Machine learning for structure-guided materials and process design. Mater. Des. 2024, 248, 113453.
76. Hung, T.-H.; Xu, Z.-X.; Kang, D.-Y.; Lin, L.-C. Chemistry-Encoded Convolutional Neural Networks for Predicting Gaseous Adsorption in Porous Materials. J. Phys. Chem. C 2022, 126, 2813–2822.
77. Bishara, D.; Xie, Y.; Liu, W.K.; Li, S. A State-of-the-Art Review on Machine Learning-Based Multiscale Modeling, Simulation, Homogenization and Design of Materials. Arch. Comput. Methods Eng. 2023, 30, 191–222.
78. Wang, Y.; Xu, S.; Bwar, K.H.; Eisenbart, B.; Lu, G.; Belaadi, A.; Fox, B.; Chai, B.X. Application of machine learning for composite moulding process modelling. Compos. Commun. 2024, 48, 101960.
79. Wang, Q.; Guan, H.; Wang, C.; Lei, P.; Sheng, H.; Bi, H.; Hu, J.; Guo, C.; Mao, Y.; Yuan, J.; et al. A wireless, self-powered smart insole for gait monitoring and recognition via nonlinear synergistic pressure sensing. Sci. Adv. 2025, 11, eadu1598.
80. Yuan, J.; Xue, J.; Liu, M.; Wu, L.; Cheng, J.; Qu, X.; Yu, D.; Wang, E.; Fan, Z.; Liu, Z.; et al. Self-powered intelligent badminton racket for machine learning-enhanced real-time training monitoring. Nano Energy 2024, 132, 110377.
81. Van Dijk, M.P.; Kok, M.; Berger, M.A.M.; Hoozemans, M.J.M.; Veeger, D.H.E.J. Machine Learning to Improve Orientation Estimation in Sports Situations Challenging for Inertial Sensor Use. Front. Sports Act. Living 2021, 3, 670263.
82. Havlucu, H.; Akgun, B.; Eskenazi, T.; Coskun, A.; Ozcan, O. Toward Detecting the Zone of Elite Tennis Players Through Wearable Technology. Front. Sports Act. Living 2022, 4, 939641.
83. Yang, Z.; Ke, P.; Zhang, Y.; Du, F.; Hong, P. Quantitative analysis of the dominant external factors influencing elite speed skaters’ performance using BP neural network. Front. Sports Act. Living 2024, 6, 1227785.
84. Munoz-Macho, A.A.; Domínguez-Morales, M.J.; Sevillano-Ramos, J.L. Performance and healthcare analysis in elite sports teams using artificial intelligence: A scoping review. Front. Sports Act. Living 2024, 6, 1383723.
85. Musat, C.L.; Mereuta, C.; Nechita, A.; Tutunaru, D.; Voipan, A.E.; Voipan, D.; Mereuta, E.; Gurau, T.V.; Gurău, G.; Nechita, L.C. Diagnostic Applications of AI in Sports: A Comprehensive Review of Injury Risk Prediction Methods. Diagnostics 2024, 14, 2516.
86. Liu, P.; Li, X.; Zang, B.; Diao, G. Privacy-preserving sports data fusion and prediction with smart devices in distributed environment. J. Cloud Comput. 2024, 13, 106.
87. Hegi, H.; Heitz, J.; Kredel, R. Sensor-based augmented visual feedback for coordination training in healthy adults: A scoping review. Front. Sports Act. Living 2023, 5, 1145247.
88. Mascia, G.; De Lazzari, B.; Camomilla, V. Machine learning aided jump height estimate democratization through smartphone measures. Front. Sports Act. Living 2023, 5, 1112739.
89. Young, F.; Mason, R.; Wall, C.; Morris, R.; Stuart, S.; Godfrey, A. Examination of a foot mounted IMU-based methodology for a running gait assessment. Front. Sports Act. Living 2022, 4, 956889.
90. Goodin, P.; Gardner, A.J.; Dokani, N.; Nizette, B.; Ahmadizadeh, S.; Edwards, S.; Iverson, G.L. Development of a Machine-Learning-Based Classifier for the Identification of Head and Body Impacts in Elite Level Australian Rules Football Players. Front. Sports Act. Living 2021, 3, 725245.

| Material Type | Principle | Application |
|---|---|---|
| Piezoelectric Materials | Generate electric charge under mechanical force | Monitoring foot pressure distribution and dynamic motion state during running |
| Piezoresistive Materials | Resistance changes with mechanical strain | Monitoring joint angles and subtle changes in muscle activity |
| Magneto-resistive Materials | Electrical resistance changes in response to external magnetic field variations | Capturing displacement and direction during motion; commonly used in motion trajectory tracking |
| Deformation-sensitive Materials | Utilize strain effects and elastic recovery forces | Real-time monitoring of joint bending and dynamic deformation; widely used in joint activity monitoring, smart clothing, and high-precision detection of complex dynamic behaviors |
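
The piezoresistive row can be made concrete with a short worked example. The sketch below is illustrative only: it assumes the common linear strain-gauge model, in which the relative resistance change equals a gauge factor times the strain, and the function name, gauge factor, and resistance values are hypothetical rather than taken from the article.

```python
# Illustrative sketch: estimating strain from a piezoresistive sensor reading.
# Assumption (not from the article): the linear strain-gauge model
#   (R - R0) / R0 = GF * strain
# where GF is the gauge factor. All numbers below are hypothetical.

def strain_from_resistance(r_measured, r_rest, gauge_factor):
    """Return the strain implied by a resistance reading under the linear model."""
    if r_rest <= 0:
        raise ValueError("resting resistance must be positive")
    if gauge_factor == 0:
        raise ValueError("gauge factor must be non-zero")
    relative_change = (r_measured - r_rest) / r_rest
    return relative_change / gauge_factor


# Hypothetical sensor: 1000 ohm at rest, 1020 ohm when a joint bends, GF = 2.
strain = strain_from_resistance(r_measured=1020.0, r_rest=1000.0, gauge_factor=2.0)
print(f"estimated strain: {strain:.4f}")
```

Real piezoresistive textiles are often nonlinear and temperature dependent, so a calibration curve would normally replace the single gauge factor used here.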

| Type of Material | Advantages | Disadvantages |
|---|---|---|
| Carbon fiber | High strength and low weight, good corrosion resistance, good fatigue properties | Brittle and prone to fracture, high cost, difficult to process, waste is difficult to recycle |
| Polymer materials | Lightweight and highly malleable, easy to form, good chemical resistance, good elasticity and comfort | Poor thermal stability (prone to deformation or decomposition), low mechanical strength (cannot withstand large loads), susceptible to aging |
| Alloy materials | High strength and corrosion resistance, excellent processability, good high-temperature resistance | High density (unsuitable for lightweight designs), high cost, some alloys become brittle at low temperatures |
| Ceramic materials | High hardness and wear resistance, high-temperature stability, corrosion resistance and insulation | Brittle and prone to cracking, difficult to process, heavy |

| Sports Equipment | Primary Materials | Material Category | Key Applications |
|---|---|---|---|
| Basketball/Soccer/Volleyball | Rubber, PU/PVC Leather | Polymer Materials | Outer layer: PU synthetic leather; Inner bladder: butyl rubber |
| Badminton Racket | Carbon Fiber, Titanium Alloy | Composite + Metallic | Frame: carbon fiber composite; Shaft: titanium alloy or carbon fiber |
| Tennis Racket | Carbon Fiber, Kevlar | Composite Materials | Main body: carbon fiber-reinforced epoxy resin; Some include Kevlar for toughness |
| Golf Club | Titanium Alloy, Carbon Fiber | Metallic + Composite | Clubhead: titanium alloy; Shaft: carbon fiber composite |
| Bicycle Frame | Aluminum/Carbon Fiber/Titanium | Metallic + Composite | Entry-level: aluminum alloy; Racing: carbon fiber; High-end: titanium alloy |
| Treadmill Belt | Rubber + Nylon Fiber | Polymer + Composite | Surface: anti-slip rubber; Base layer: nylon fiber reinforcement |
| Swimming Goggles | Polycarbonate (PC), Silicone | Polymer Materials | Lens: PC; Seal: silicone |
| Skis | Wood + Fiberglass + Polyethylene | Composite Materials | Core: wood; Reinforcement: fiberglass; Base: ultra-high-molecular-weight polyethylene |
| Dumbbells/Barbells | Cast Iron, Steel | Metallic Materials | Main body: cast iron (chrome-plated); Bar: chromium-molybdenum steel |
| Climbing Rope | Nylon, Polyester | Polymer Materials | Core: braided nylon fibers; Outer sheath: polyester |
| Table Tennis Ball | Celluloid/ABS Plastic | Polymer Materials | Professional: celluloid; Training: ABS plastic |
| Ice Skates | Stainless Steel + Carbon Steel | Metallic Materials | Blade: high-carbon stainless steel; Holder: alloy steel |
| Sports Protective Gear (Knee Pads, etc.) | EVA Foam + Nylon Fabric | Polymer + Composite | Cushioning: EVA foam; Outer layer: nylon/PU-coated fabric |
| Baseball Bat | Aluminum Alloy/Maple Wood/Composite | Metallic + Natural + Composite | Professional: aluminum alloy; Traditional: maple wood; Advanced: carbon fiber + fiberglass composite |
| Climbing Carabiners | Aluminum Alloy | Metallic Materials | Aerospace-grade aluminum alloy |

| Toolkit | Description |
|---|---|
| ASE (Atomic Simulation Environment) [35] | Widely used for atomistic simulations, supporting various quantum chemistry and molecular dynamics engines |
| Pymatgen [36] | Powerful tool for materials science, mainly for crystal structure analysis, electronic structure processing, and data generation |
| RDKit [37] | Open-source toolkit for cheminformatics and molecular modeling, widely used for molecule manipulation, reaction simulation, and property prediction |

| Database | Description |
|---|---|
| OQMD | Open quantum materials database focused on DFT calculations |
| Materials Project | Provides computational and experimental materials data |
| CCDC | Database of small molecule crystal structures |
| PubChem | Largest chemical molecule database with properties and bioactivity data |
| NIST Chemistry WebBook [38] | Provides thermodynamic and spectral data from NIST |

| Model Form | Content | Features | Disadvantages |
|---|---|---|---|
| Data-driven Machine Learning Prediction Model [43,44] | Trains machine learning models on large-scale experimental and computational data to establish a mapping between material structure and properties. | Fast prediction of material properties. Efficient handling of complex, multi-dimensional data to discover latent patterns. | Strong dependency on high-quality data. The availability and balance of data directly affect model performance. |
| Machine Learning Potentials [45] | Constructs potential functions (Machine Learning Potentials, MLPs) through machine learning techniques to replace traditional quantum mechanical methods for simulating atomic interactions. | Suitable for large-scale molecular dynamics simulations involving electron transfer and chemical bond breaking; applicable to studying reaction mechanisms and dynamic evolution in material systems at the thousand- to ten-thousand-atom scale. | Requires a large amount of high-precision computational data (e.g., DFT data) for training. Model transferability is poor, and cross-system predictive capabilities need improvement. |
| Graph Neural Network-Based Material Modeling [41] | Graph Neural Networks (GNNs) directly model the material's molecular or crystal structure (usually represented as a graph) to predict the chemical properties and physical performance of materials. | Incorporates molecular information from material structures into the prediction model, helping to accurately describe complex chemical bonds and interactions. Compared to traditional neural networks, it offers better physical meaning and interpretability. | High computational resource requirements for building and optimizing graphs. |
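
To illustrate what "modeling the structure as a graph" means in the third row, the following is a minimal, dependency-free sketch of one message-passing round. It is not the article's model: the water molecule, the one-hot element features, the sum aggregation, and the sum readout are all assumptions chosen for brevity, and a real GNN would apply learned weight matrices and nonlinearities at each step.

```python
# Minimal sketch of one message-passing round on a molecular graph.
# Assumptions (not from the article): water as the example molecule,
# one-hot element features, sum aggregation, sum readout, no learned weights.

def message_passing_step(features, edges):
    """Return new node features: each node's own features plus its neighbors'."""
    updated = [list(f) for f in features]
    for i, j in edges:  # each undirected bond sends a message both ways
        for k in range(len(features[i])):
            updated[i][k] += features[j][k]
            updated[j][k] += features[i][k]
    return updated


def readout(features):
    """Sum all node feature vectors into one graph-level vector."""
    return [sum(column) for column in zip(*features)]


# Water: atom 0 is O, atoms 1 and 2 are H; features are one-hot [is_O, is_H].
node_features = [[1, 0], [0, 1], [0, 1]]
bonds = [(0, 1), (0, 2)]  # the two O-H bonds

hidden = message_passing_step(node_features, bonds)
graph_vector = readout(hidden)
print(hidden)        # [[1, 2], [1, 1], [1, 1]]
print(graph_vector)  # [3, 4]
```

After one round, the oxygen node's vector records that it is bonded to two hydrogens, which is the sense in which the representation carries bonding information into the property predictor.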

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, F.; Jiang, S.; Li, J. The AI-Driven Transformation in New Materials Manufacturing and the Development of Intelligent Sports. Appl. Sci. 2025, 15, 5667. https://doi.org/10.3390/app15105667