A Deep Learning Approach to Classify AI-Generated and Human-Written Texts

Abstract

1. Introduction

- The formulation of an LSTM-based detection framework tailored to the Turkish language;

- The development of a novel dataset comprising both human-written and ChatGPT-generated Turkish texts;

- A comprehensive experimental evaluation, varying key parameters such as dropout rate, epochs, embedding size, and patch size;

- The demonstration of high detection accuracy, offering practical implications for real-world applications in academia, media, and cybersecurity.

2. Background and Related Literature

2.1. Overview of LSTM Technique

2.2. Related Literature

3. Materials and Method

3.1. Dataset Creation

- Embedding layer: Used to create numerical representations of text, with the vocabulary size reduced to a maximum of 10,000 words. In this study, the tokenizer vocabulary was capped at 10,000 words to achieve a balance between computational efficiency and effective text representation. Based on Zipf’s Law, a small set of high-frequency words accounts for most of the text, making this limit sufficient for capturing essential semantics while avoiding the burden of rare words. Prior research [28] also supports that focusing on frequent terms improves model performance without significant semantic loss. Moreover, restricting the vocabulary size helps the model generalize better by reducing overfitting and unnecessary memorization.

- LSTM layer: composed of LSTM layers, with a kernel regularizer applied.

- Dropout layer: included to prevent overfitting.

- The test data ratio: the proportion of data used for testing training data. It was set to 30% for the first three tests.

- Batch normalization layer: added to normalize the distributions between layers.

- Dense layer: the output layer uses a sigmoid activation function to classify text as AI-generated or human-written.

- The Adam algorithm was used as the optimizer for training the model and the learning rate was set to 0.0005. The early stopping technique was applied and the binary_crossentropy loss function was utilized to construct the model.

3.2. Data Preprocessing

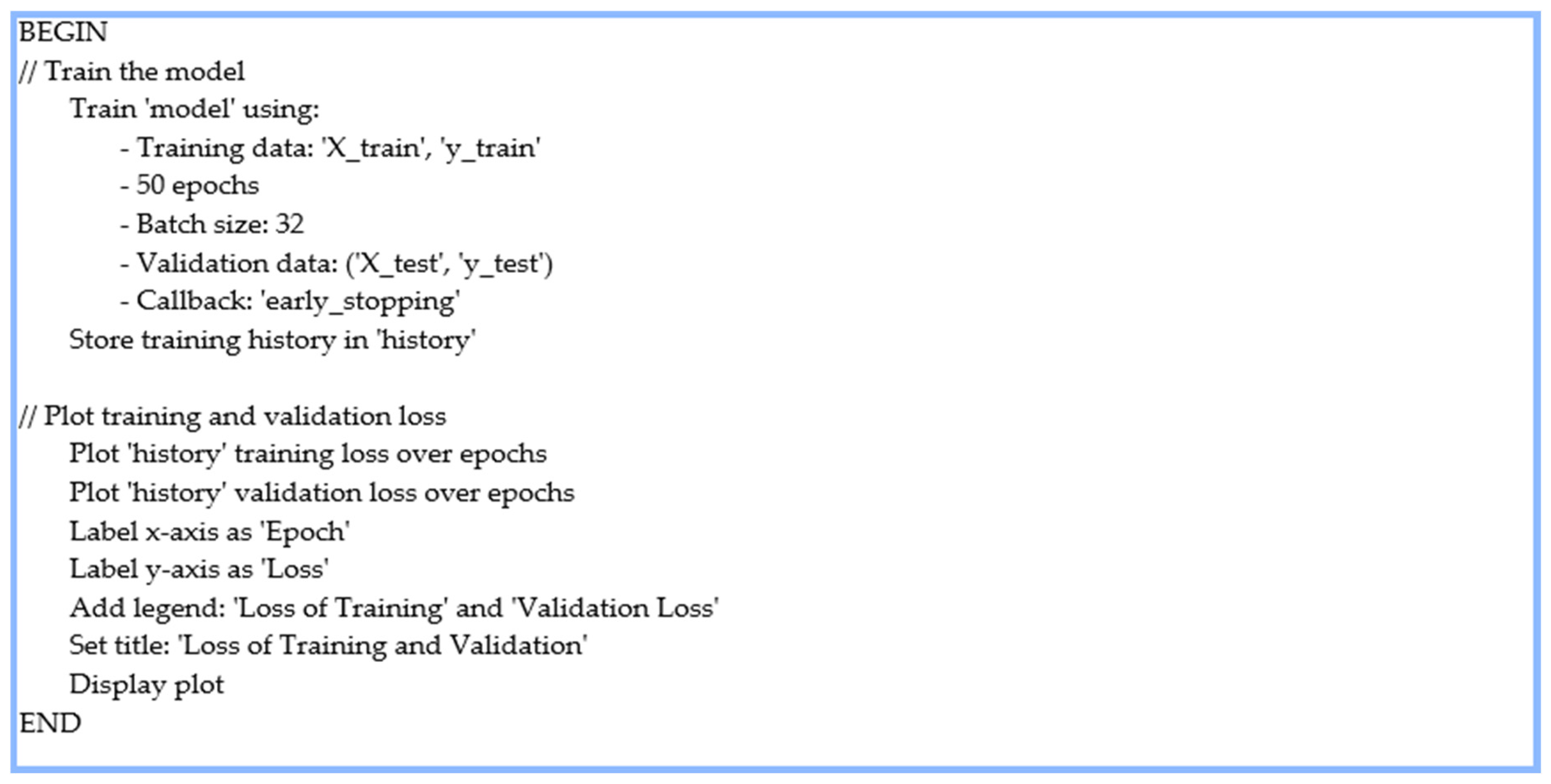

3.3. Model Training

3.4. Model Evaluation and Validation

4. Results and Discussion

4.1. Test 1

4.2. Test 2

4.3. Test 3

4.4. Test 4

5. Discussion

5.1. Results Comparison

- Epoch reduction (20 → 10): The reduction in epoch count from 20 to 10 had a comparatively minor impact. While it reduced training time, the overall structure and performance of the model remained largely intact. This suggests that, in this context, fewer epochs were sufficient for capturing the core patterns without significantly compromising accuracy, provided that other hyperparameters were well tuned.

- Dropout rate impact (20% → 40%): The most pronounced effect on model performance resulted from the increased dropout rate, which increased from 20% to 40%. This adjustment led to a substantial decline of approximately 10–11 points in the F1 score, indicating that the model experienced over-regularization. High dropout rates, while effective for reducing overfitting, can excessively disrupt the learning process, limiting the model’s ability to capture complex patterns in the data. This aligns with the broader understanding that excessive dropout can degrade model capacity and stability.

- Learning rate adjustment (0.0001 → 0.001): The shift from a 0.0001 to 0.001 learning rate had the second most significant impact. While this increase accelerated the training process, it also introduced the risk of unstable weight updates, potentially causing the model to oscillate around local minima or fail to converge effectively. This underscores the importance of selecting an optimal learning rate to balance training speed and model stability.

5.2. Practical and Research Implications

5.3. Limitations and Future Directions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Chaka, C. Reviewing the performance of AI detection tools in differentiating between AI-generated and human-written texts: A literature and integrative hybrid review. J. Appl. Learn. Teach. 2024, 7, 115–126. [Google Scholar]

- Chimata, S.; Bollimuntha, A.R.; Devagiri, D.; Puligadda, S. An Investigative Analysis on Generation of AI Text Using Deep Learning Models for Large Language Models. In Proceedings of the 2024 International Conference on Smart Systems for Electrical, Electronics, Communication and Computer Engineering (ICSSEECC), IEEE, Coimbatore, India, 28–29 June 2024; pp. 7–12. [Google Scholar]

- Alzoubi, Y.I.; Mishra, A.; Topcu, A.E.; Cibikdiken, A.O. Generative artificial intelligence technology for systems engineering research: Contribution and challenges. Int. J. Ind. Eng. Manag. 2024, 15, 169–179. [Google Scholar] [CrossRef]

- Topcu, A.E.; Alzoubi, Y.I.; Elbasi, E.; Camalan, E. Social media zero-day attack detection using TensorFlow. Electronics 2023, 12, 3554. [Google Scholar] [CrossRef]

- Menard, P.; Bott, G.J. Artificial intelligence misuse and concern for information privacy: New construct validation and future directions. Inf. Syst. J. 2025, 35, 322–367. [Google Scholar] [CrossRef]

- Zhou, J.; Müller, H.; Holzinger, A.; Chen, F. Ethical ChatGPT: Concerns, challenges, and commandments. Electronics 2024, 13, 3417. [Google Scholar] [CrossRef]

- Sanchez, T.W.; Brenman, M.; Ye, X. The ethical concerns of artificial intelligence in urban planning. J. Am. Plan. Assoc. 2025, 91, 294–307. [Google Scholar] [CrossRef]

- Alzoubi, Y.I.; Mishra, A.; Topcu, A.E. Research trends in deep learning and machine learning for cloud computing security. Artif. Intell. Rev. 2024, 57, 132. [Google Scholar] [CrossRef]

- Katib, I.; Assiri, F.Y.; Abdushkour, H.A.; Hamed, D.; Ragab, M. Differentiating chat generative pretrained transformer from humans: Detecting ChatGPT-generated text and human text using machine learning. Mathematics 2023, 11, 3400. [Google Scholar] [CrossRef]

- Boutadjine, A.; Harrag, F.; Shaalan, K. Human vs. Machine: A Comparative Study on the Detection of AI-Generated Content. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2024, 24, 1–26. [Google Scholar] [CrossRef]

- Elkhatat, A.M.; Elsaid, K.; Almeer, S. Evaluating the efficacy of AI content detection tools in differentiating between human and AI-generated text. Int. J. Educ. Integr. 2023, 19, 1–17. [Google Scholar] [CrossRef]

- Kayabaş, A.; Schmid, H.; Topçu, A.E.; Kiliç, Ö. TRMOR: A finite-state-based morphological analyzer for Turkish. Turk. J. Electr. Eng. Comput. Sci. 2019, 27, 3837–3851. [Google Scholar] [CrossRef]

- Kayabaş, A.; Topcu, A.E.; Kiliç, Ö. A novel hybrid algorithm for morphological analysis: Artificial Neural-Net-XMOR. Turk. J. Electr. Eng. Comput. Sci. 2022, 30, 1726–1740. [Google Scholar] [CrossRef]

- Wani, M.A.; Abd El-Latif, A.A.; ELAffendi, M.; Hussain, A. AI-based Framework for Discriminating Human-authored and AI-generated Text. IEEE Trans. Artif. Intell. 2024, 1–15. [Google Scholar] [CrossRef]

- Latif, G.; Mohammad, N.; Brahim, G.B.; Alghazo, J.; Fawagreh, K. Detection of AI-written and human-written text using deep recurrent neural networks. In Proceedings of the Fourth Symposium on Pattern Recognition and Applications (SPRA 2023), Napoli, Italy, 1–3 December 2023; pp. 11–20. [Google Scholar]

- Zulqarnain, M.; Alsaedi, A.K.Z.; Ghazali, R.; Ghouse, M.G.; Sharif, W.; Husaini, N.A. A comparative analysis on question classification task based on deep learning approaches. PeerJ Comput. Sci. 2021, 7, e570. [Google Scholar] [CrossRef] [PubMed]

- Sardinha, T.B. AI-generated vs human-authored texts: A multidimensional comparison. Appl. Corpus Linguist. 2024, 4, 100083. [Google Scholar] [CrossRef]

- Kalra, M.P.; Mathur, A.; Patvardhan, C. Detection of AI-generated Text: An Experimental Study. In Proceedings of the 3rd World Conference on Applied Intelligence and Computing (AIC), IEEE, Gwalior, India, 27–28 July 2024; pp. 552–557. [Google Scholar]

- Shah, A.; Ranka, P.; Dedhia, U.; Prasad, S.; Muni, S.; Bhowmick, K. Detecting and Unmasking AI-Generated Texts through Explainable Artificial Intelligence using Stylistic Features. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 1043–1053. [Google Scholar] [CrossRef]

- Mindner, L.; Schlippe, T.; Schaaff, K. Classification of human-and ai-generated texts: Investigating features for chatgpt. In Artificial Intelligence in Education Technologies: New Development and Innovative Practices—AIET 2023—Lecture Notes on Data Engineering and Communications Technologies; Schlippe, T., Cheng, E., Wang, T., Eds.; Springer: Singapore, 2023; Volume 190, pp. 152–170. [Google Scholar]

- Schaaff, K.; Schlippe, T.; Mindner, L. Classification of human-and AI-generated texts for different languages and domains. Int. J. Speech Technol. 2024, 27, 935–956. [Google Scholar] [CrossRef]

- Uzun, L. ChatGPT and academic integrity concerns: Detecting artificial intelligence generated content. Lang. Educ. Technol. 2023, 3, 45–54. [Google Scholar]

- Vashistha, M.; Dhiman, I.; Singh, P.; Kumar, A.; Malhotra, D. A Comparative Study of Classification of Human-Written Text Versus AI-Generated Text. In Proceedings of International Conference on Recent Innovations in Computing. ICRIC 2023. Lecture Notes in Electrical Engineering; Illés, Z., Verma, C., Gonçalves, P., Singh, P., Eds.; Springer: Singapore, 2023; Volume 1195, pp. 197–206. [Google Scholar]

- Alamleh, H.; AlQahtani, A.A.S.; ElSaid, A. Distinguishing human-written and ChatGPT-generated text using machine learning. In Proceedings of the 2023 Systems and Information Engineering Design Symposium (SIEDS), Charlottesville, VA, USA, 27–28 April 2023; pp. 154–158. [Google Scholar]

- OpenAI. Open AI—ChatGPT. Available online: https://chatgpt.com/ (accessed on 7 March 2025).

- Gemini. Google AI—Gemini. Available online: https://gemini.google.com/app (accessed on 8 March 2025).

- Copilot. Microsoft Copilot: Your AI companion. Available online: https://copilot.microsoft.com/ (accessed on 9 March 2025).

- Joulin, A.; Cissé, M.; Grangier, D.; Jégou, H. Efficient softmax approximation for GPUs. In Proceedings of the 34th International Conference on Machine Learning, PMLR, Sydney, Australia, 6–11 August 2017; pp. 1302–1310. [Google Scholar]

| Category | Study | Focus | Deep Learning Technique Used | Findings |

|---|---|---|---|---|

| Using deep learning models | [15] | Proposed a new model for text classification. | DRNN | Test accuracy of 88.52% using a dataset of 900 samples. |

| [14] | Combined existing datasets, real-time tweets, and synthetic data generated by AI models. | CNN, ANN, RNN, LSTM, GRU, BiLSTM, and BiRNN | BiLSTM achieved the highest F1 score of 98.77%. | |

| [2] | LSTM-based approach for classification. | LSTM | Achieved accuracy, precision, recall, and F1 scores above 98%. | |

| [9] | Introduced the TSA-LSTM-RNN. | TSA-LSTM-RNN | Achieved 93.17% (accuracy for human dataset) and 93.83% (ChatGPT 4o dataset). | |

| Alternative approaches | [18] | Classification using statistical methods. | SVM | Binary classification achieved 99.87% accuracy; accuracy for rephrased texts was 87.58% (classification) and 84.91% (statistical). |

| [10] | DeepFake text detection. | TCN and four modified TCN models | Modified TCN models outperformed the baseline, achieving F1 scores exceeding 98% and accuracies between 94% and 99%. | |

| This study | Differentiate and classify AI and human-generated text. | LSTM | F1 score for both AI and human generated text was best achieved for model 1 (20 epochs and 128 patch size), achieving above 98%. Other models achieved above 96%. | |

| Model Features | 20-Epoch Model | 50-Epoch Model |

|---|---|---|

| Patch size | 128 | 128 |

| Embedding size | 300 | 300 |

| Regularization (L1, L2) | 0.0001 | 0.001 |

| Dropout rate | 20% | 20% |

| Model’s test accuracy | 97.28% | 95.99% |

| AI precision | 97% | 91% |

| Human precision | 98% | 92% |

| AI recall | 98% | 92% |

| Human recall | 97% | 91% |

| AI F1 Score | 98% | 87% |

| Human F1 Score | 97% | 88% |

| Model Features | 20-Epoch Model | 10-Epoch Model |

|---|---|---|

| Patch size | 128 | 128 |

| Embedding size | 300 | 100 |

| Regularization (L1, L2) | 0.0001 | 0.001 |

| Dropout rate | 20% | 40% |

| Model’s test accuracy | 97.28% | 93.12% |

| AI precision | 97% | 99% |

| Human precision | 98% | 88% |

| AI recall | 98% | 87% |

| Human recall | 97% | 99% |

| AI F1 Score | 98% | 93.6% |

| Human F1 Score | 97% | 94% |

| Model Features | 20-Epoch Model | 10-Epoch Model |

|---|---|---|

| Patch size | 128 | 64 |

| Embedding size | 300 | 100 |

| Regularization (L1, L2) | 0.0001 | 0.001 |

| Dropout rate | 20% | 40% |

| Model’s test accuracy | 97.28% | 93.12% |

| AI precision | 97% | 99% |

| Human precision | 98% | 88% |

| AI recall | 98% | 87% |

| Human recall | 97% | 99% |

| AI F1 Score | 98% | 96.19% |

| Human F1 Score | 97% | 96% |

| Model Features | 20-Epoch Model | 20-Epoch Model |

|---|---|---|

| Patch size | 128 | 128 |

| Embedding size | 300 | 300 |

| Regularization (L1, L2) | 0.0001 | 0.0001 |

| Dropout rate | 20% | 20% |

| Test data ratio | 30% | 60% |

| Model’s test accuracy | 97.28% | 99.89% |

| AI F1 Score | 98% | 91% |

| Human F1 Score | 97% | 90% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kayabas, A.; Topcu, A.E.; Alzoubi, Y.I.; Yıldız, M. A Deep Learning Approach to Classify AI-Generated and Human-Written Texts. Appl. Sci. 2025, 15, 5541. https://doi.org/10.3390/app15105541

Kayabas A, Topcu AE, Alzoubi YI, Yıldız M. A Deep Learning Approach to Classify AI-Generated and Human-Written Texts. Applied Sciences. 2025; 15(10):5541. https://doi.org/10.3390/app15105541

Chicago/Turabian StyleKayabas, Ayla, Ahmet Ercan Topcu, Yehia Ibrahim Alzoubi, and Mehmet Yıldız. 2025. "A Deep Learning Approach to Classify AI-Generated and Human-Written Texts" Applied Sciences 15, no. 10: 5541. https://doi.org/10.3390/app15105541

APA StyleKayabas, A., Topcu, A. E., Alzoubi, Y. I., & Yıldız, M. (2025). A Deep Learning Approach to Classify AI-Generated and Human-Written Texts. Applied Sciences, 15(10), 5541. https://doi.org/10.3390/app15105541