An Overview of CNN-Based Image Analysis in Solar Cells, Photovoltaic Modules, and Power Plants

Abstract

1. Introduction

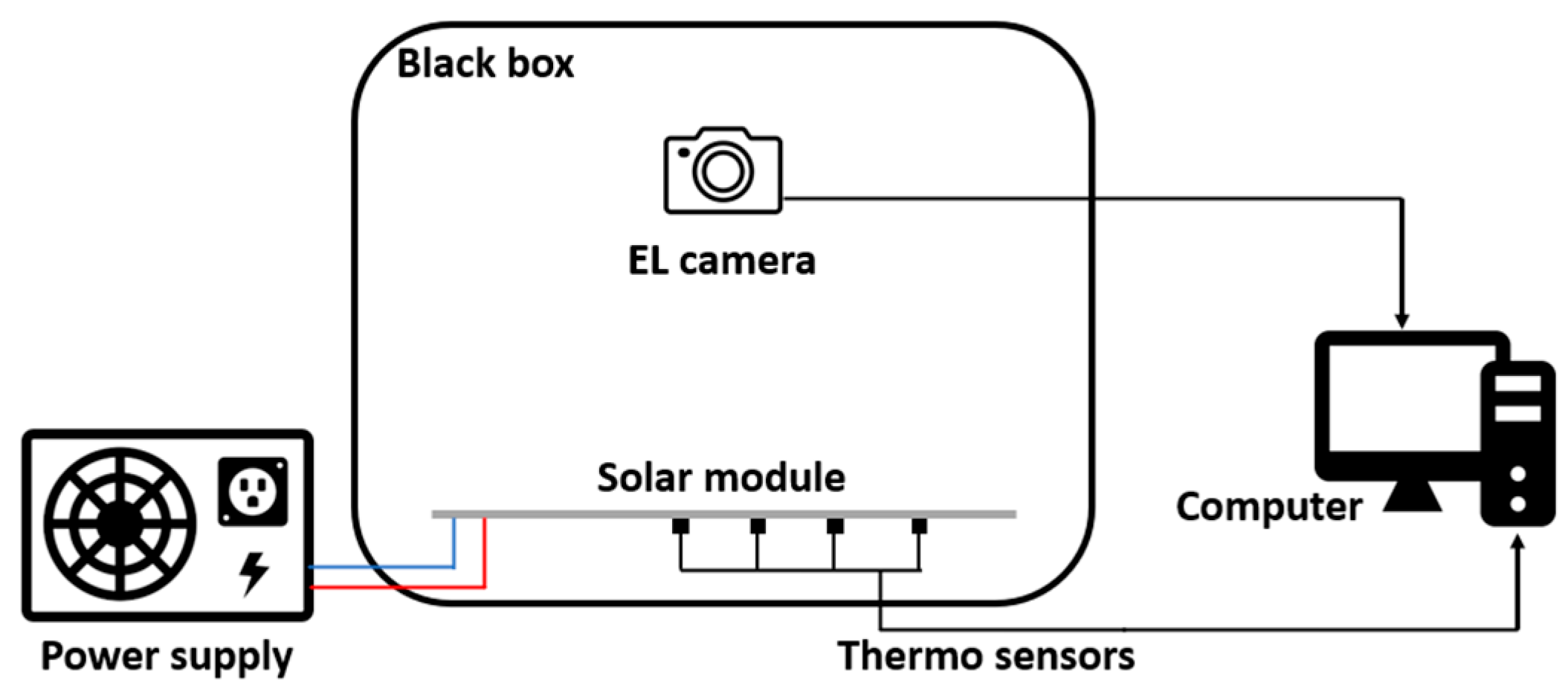

2. Imaging Methods and CNN Processing

2.1. Inspection Methods of Solar Cells and Modules

- (a) Crack;
- (b) Fracture;
- (c) Soldering failure;
- (d) Hotspot;
- (e) Finger interruption;
- (f) Tabbing disconnection;
- (g) Material defect;
- (h) Edge defect (contamination during the silicon ingot growth);
- (i) Corrosion;
- (j) PID phenomenon;
- (k) Black core;
- (l) Backsheet scratch.

2.2. CNNs

- Fault localization (segmentation): a more complex task that determines the precise, pixel-wise location of faults within a module. Example architectures include, but are not limited to, U-Net, SegNet, DeepLabV3/V3+, PSPNet, and HRNet [69].
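Pixel-wise localization is usually scored by comparing a predicted fault mask with a labeled one. The following is a minimal sketch of the Intersection over Union (IoU) measure for two binary masks, written in plain Python. The function name `mask_iou`, the toy 4x4 masks, and the convention of returning 1.0 when both masks are empty are illustrative assumptions, not taken from any of the reviewed papers.

```python
from typing import Sequence

Mask = Sequence[Sequence[int]]  # rows of 0/1 pixel labels (1 = fault)


def mask_iou(pred: Mask, target: Mask) -> float:
    """Intersection over Union of two binary masks of equal shape."""
    intersection = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Assumed convention: two empty masks count as perfect agreement.
    return intersection / union if union else 1.0


# Hypothetical example: a horizontal crack covering 4 pixels in row 1.
target = [
    [0, 0, 0, 0],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
# The prediction finds 3 of the 4 crack pixels and adds 1 false positive.
pred = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 0],
]

print(mask_iou(pred, target))  # 3 shared pixels / 5 pixels in the union
```

In this toy case, three pixels are shared and five pixels are covered by either mask, so the score is 3/5.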

3. Exhibition of Recent Literature

3.1. Literature Examining EL Images from 2025

3.2. Literature Examining Other Imaging Technologies from 2025

3.3. Literature Examining EL Images from 2024

3.4. Literature Examining Other Imaging Technologies from 2024

3.5. Model Performance Metrics
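The accuracy, precision, recall, and F1-score columns reported for the reviewed models all derive from the same four confusion-matrix counts. The sketch below shows the standard definitions for a binary (faulty/intact) classifier. The `BinaryCounts` container, the function name, and the example counts are hypothetical and serve only to illustrate the arithmetic.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class BinaryCounts:
    tp: int  # faulty cells correctly flagged as faulty
    fp: int  # intact cells wrongly flagged as faulty
    fn: int  # faulty cells missed by the model
    tn: int  # intact cells correctly passed


def classification_metrics(c: BinaryCounts) -> Dict[str, float]:
    """Standard accuracy, precision, recall, and F1 from confusion counts."""
    total = c.tp + c.fp + c.fn + c.tn
    accuracy = (c.tp + c.tn) / total if total else 0.0
    precision = c.tp / (c.tp + c.fp) if (c.tp + c.fp) else 0.0
    recall = c.tp / (c.tp + c.fn) if (c.tp + c.fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Made-up counts for 200 cell images, for illustration only.
counts = BinaryCounts(tp=90, fp=10, fn=5, tn=95)
metrics = classification_metrics(counts)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Accuracy alone can look favorable on imbalanced datasets where intact cells dominate, which is one reason to read it together with precision and recall when the reviewed studies report them.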

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AI | artificial intelligence |
| CNN | convolutional neural network |
| CPU | Central Processing Unit |
| CT | computerized tomography |
| DDPM | Denoising Diffusion Probabilistic Model |
| EL | electroluminescence |
| GAN | Generative Adversarial Network |
| GPU | Graphics Processing Unit |
| IoU | Intersection over Union |
| IR | infrared |
| LED | light-emitting diode |
| LMFF | Lightweight Multiscale Feature Fusion Network |
| mAP | Mean Average Precision |
| MRI | magnetic resonance imaging |
| NIR | near-infrared |
| PID | Potential Induced Degradation |
| PL | photoluminescence |
| PV | photovoltaic |
| ReLU | Rectified Linear Unit |
| RGB | Red Green Blue |
| SVM | support vector machine |
| UAV | unmanned aerial vehicle |
| VAE | variational autoencoder |
| VGG | Visual Geometry Group |
| YOLO | You Only Look Once |

References

- Soeder, D.J. Fossil Fuels and Climate Change. In Fracking and the Environment; Springer: Cham, Switzerland, 2021. [Google Scholar] [CrossRef]

- Holechek, J.L.; Geli, H.M.E.; Sawalhah, M.N.; Valdez, R. A Global Assessment: Can Renewable Energy Replace Fossil Fuels by 2050? Sustainability 2022, 14, 4792. [Google Scholar] [CrossRef]

- Wang, J.; Azam, W. Natural resource scarcity, fossil fuel energy consumption, and total greenhouse gas emissions in top emitting countries. Geosci. Front. 2024, 14, 101757. [Google Scholar] [CrossRef]

- Borowski, P.F. Mitigating Climate Change and the Development of Green Energy versus a Return to Fossil Fuels Due to the Energy Crisis in 2022. Energies 2022, 15, 9289. [Google Scholar] [CrossRef]

- Yolcan, O.O. World energy outlook and state of renewable energy: 10-Year evaluation. Innov. Green Dev. 2023, 2, 100070. [Google Scholar] [CrossRef]

- Government of Hungary. National Energy and Climate Plan of Hungary. Available online: https://cdn.kormany.hu/uploads/document/5/54/54b/54b7fc0579a1a285f81d183931bfaa7e4588b80e.pdf (accessed on 31 March 2025).

- Di Sabatino, M.; Hendawi, R.; Garcia, A.S. Silicon Solar Cells: Trends, Manufacturing Challenges, and AI Perspectives. Crystals 2024, 14, 167. [Google Scholar] [CrossRef]

- Chen, Y.; Zhou, J.; Ge, Y.; Dong, J. Uncovering the rapid expansion of photovoltaic power plants in China from 2010 to 2022 using satellite data and deep learning. Remote Sens. Environ. 2024, 305, 114100. [Google Scholar] [CrossRef]

- Zhang, X.; Chen, Y.; Wang, J.; Huang, Y.; Zhao, X. China’s renewable energy expansion and global implications. Renew. Sustain. Energy Rev. 2022, 161, 112386. [Google Scholar] [CrossRef]

- Liu, W.; Chen, W.; Zhao, X.; Xu, J.; Wang, C. Solar energy development in China—A review. Energy Rep. 2021, 7, 3816–3826. [Google Scholar] [CrossRef]

- Li, J.; Zhang, H.; Wang, Y.; Zhou, M. Forecasting solar power capacity in China using hybrid machine learning models. J. Clean. Prod. 2023, 398, 136394. [Google Scholar] [CrossRef]

- Tang, W.; Yang, Q.; Hu, X.; Yan, W. Edge Intelligence for Smart EL Images Defects Detection of PV Plants in the IoT-Based Inspection System. IEEE Internet Things J. 2023, 10, 3047–3056. [Google Scholar] [CrossRef]

- Iftikhar, H.; Sarquis, E.; Branco, P.J.C. Why Can Simple Operation and Maintenance (O&M) Practices in Large-Scale Grid-Connected PV Power Plants Play a Key Role in Improving Its Energy Output? Energies 2021, 14, 3798. [Google Scholar] [CrossRef]

- Høiaas, I.; Grujic, K.; Imenes, A.G.; Burud, I.; Olsen, E.; Belbachir, N. Inspection and condition monitoring of large-scale photovoltaic power plants: A review of imaging technologies. Renew. Sustain. Energy Rev. 2022, 161, 112353. [Google Scholar] [CrossRef]

- Buerhop, C.; Bommes, L.; Schlipf, J.; Pickel, T.; Fladung, A.; Peters, I.M. Infrared imaging of photovoltaic modules: A review of the state of the art and future challenges facing gigawatt photovoltaic power stations. Prog. Energy 2022, 4, 042010. [Google Scholar] [CrossRef]

- Garzón, A.M.; Laiton, N.; Sicachá, V.; Celeita, D.F.; Le, T.D. Smart equipment failure detection with machine learning applied to thermography inspection data in modern power systems. In Proceedings of the 2023 11th International Conference on Smart Grid (icSmartGrid), Paris, France, 4–7 June 2023; pp. 1–5. [Google Scholar] [CrossRef]

- Parenti, M.; Fossa, M.; Delucchi, L. A model for energy predictions and diagnostics of large-scale photovoltaic systems based on electric data and thermal imaging of the PV fields. Renew. Sustain. Energy Rev. 2024, 206, 114858. [Google Scholar] [CrossRef]

- Sinap, V.; Kumtepe, A. CNN-based automatic detection of photovoltaic solar module anomalies in infrared images: A comparative study. Neural Comput. Appl. 2024, 36, 17715–17736. [Google Scholar] [CrossRef]

- Starzyński, J.; Zawadzki, P.; Harańczyk, D. Machine Learning in Solar Plants Inspection Automation. Energies 2022, 15, 5966. [Google Scholar] [CrossRef]

- Rodriguez-Vazquez, J.; Prieto-Centeno, I.; Fernandez-Cortizas, M.; Perez-Saura, D.; Molina, M.; Campoy, P. Real-Time Object Detection for Autonomous Solar Farm Inspection via UAVs. Sensors 2024, 24, 777. [Google Scholar] [CrossRef]

- Meribout, M.; Tiwari, V.K.; Herrera, J.P.P.; Baobaid, A.N.M.A. Solar panel inspection techniques and prospects. Measurement 2023, 209, 112466. [Google Scholar] [CrossRef]

- Al-Zaabi, F.H.K.; Al Washahi, A.M.S.; Al-Maaini, R.K.M.; Boddu, M.K. Advancing Solar PV Component Inspection: Early Defect Detection with UAV Based Thermal Imaging and Machine Learning. In Proceedings of the 2023 Middle East and North Africa Solar Conference (MENA-SC), Dubai, United Arab Emirates, 15–18 November 2023; pp. 1–3. [Google Scholar] [CrossRef]

- Hwang, H.P.-C.; Ku, C.C.-Y.; Chan, J.C.-C. Detection of Malfunctioning Photovoltaic Modules Based on Machine Learning Algorithms. IEEE Access 2021, 9, 37210–37219. [Google Scholar] [CrossRef]

- Dhimish, M.; Tyrrell, A.M. Optical Filter Design for Daylight Outdoor Electroluminescence Imaging of PV Modules. Photonics 2024, 11, 63. [Google Scholar] [CrossRef]

- Ishikawa, Y. Outdoor evaluation of photovoltaic modules using electroluminescence method. JSAP Rev. 2022, 2022, 220412. [Google Scholar] [CrossRef]

- Redondo-Plaza, A.; Zorita-Lamadrid, Á.L.; Alonso-Gómez, V.; Hernández-Callejo, L. Inspection techniques in photovoltaic power plants: A review of electroluminescence and photoluminescence imaging. Renew. Energies 2024, 2, 27533735241282603. [Google Scholar] [CrossRef]

- Guada, M.; Moretón, Á.; Rodríguez-Conde, S.; Sánchez, L.A.; Martínez, M.; Miguel González, Á.; Jiménez, J.; Pérez, L.; Parra, V.; Martínez, O. Daylight luminescence system for silicon solar panels based on a bias switching method. Energy Sci. Eng. 2020, 8, 3839–3853. [Google Scholar] [CrossRef]

- Santamaría, R.D.P.; Dhimish, M.; Benatto, G.A.D.R.; Kari, T.; Poulsen, P.B.; Spataru, S.V. From Indoor Electroluminescence to Outdoor Daylight Electroluminescence Imaging: A Comprehensive Review of Techniques, Advances, and AI-Driven Perspectives. Preprints 2025, 16, 2025031168. [Google Scholar] [CrossRef]

- Khadka, N.; Bista, A.; Adhikari, B.; Shrestha, A.; Bista, D.; Adhikary, B. Current Practices of Solar Photovoltaic Panel Cleaning System and Future Prospects of Machine Learning Implementation. IEEE Access 2020, 8, 135948–135962. [Google Scholar] [CrossRef]

- Rahman, M.R.; Tabassum, S.; Haque, E.; Nishat, M.M.; Faisal, F.; Hossain, E. CNN-based Deep Learning Approach for Micro-crack Detection of Solar Panels. In Proceedings of the 2021 3rd International Conference on Sustainable Technologies for Industry 4.0 (STI), Dhaka, Bangladesh, 18–19 December 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Hassan, S.; Dhimish, M. A Survey of CNN-Based Approaches for Crack Detection in Solar PV Modules: Current Trends and Future Directions. Solar 2023, 3, 663–683. [Google Scholar] [CrossRef]

- Waqar Akram, M.; Li, G.; Jin, Y.; Chen, X. Failures of Photovoltaic modules and their Detection: A Review. Appl. Energy 2022, 313, 118822. [Google Scholar] [CrossRef]

- Lins, S.; Pandl, K.D.; Teigeler, H.; Thiebes, S.; Bayer, C.; Sunyaev, A. Artificial Intelligence as a Service. Bus. Inf. Syst. Eng. 2021, 63, 441–456. [Google Scholar] [CrossRef]

- Minh, D.; Wang, H.X.; Li, Y.F.; Nguyen, T.N. Explainable artificial intelligence: A comprehensive review. Artif. Intell. Rev. 2022, 55, 3503–3568. [Google Scholar] [CrossRef]

- Fu, G.; Le, W.; Zhang, Z.; Li, J.; Zhu, Q.; Niu, F.; Chen, H.; Sun, F.; Shen, Y. A Surface Defect Inspection Model via Rich Feature Extraction and Residual-Based Progressive Integration CNN. Machines 2023, 11, 124. [Google Scholar] [CrossRef]

- Krichen, M. Convolutional Neural Networks: A Survey. Computers 2023, 12, 151. [Google Scholar] [CrossRef]

- Taye, M.M. Theoretical Understanding of Convolutional Neural Network: Concepts, Architectures, Applications, Future Directions. Computation 2023, 11, 52. [Google Scholar] [CrossRef]

- Liu, Y.; Zhang, C.; Dong, X. A survey of real-time surface defect inspection methods based on deep learning. Artif. Intell. Rev. 2023, 56, 12131–12170. [Google Scholar] [CrossRef]

- Jha, S.B.; Babiceanu, R.F. Deep CNN-based visual defect detection: Survey of current literature. Comput. Ind. 2023, 148, 103911. [Google Scholar] [CrossRef]

- Tang, W.; Yang, Q.; Dai, Z.; Yan, W. Module defect detection and diagnosis for intelligent maintenance of solar photovoltaic plants: Techniques, systems and perspectives. Energy 2024, 297, 131222. [Google Scholar] [CrossRef]

- Zheng, X.; Zheng, S.; Kong, Y.; Chen, J. Recent advances in surface defect inspection of industrial products using deep learning techniques. Int. J. Adv. Manuf. Technol. 2021, 113, 35–58. [Google Scholar] [CrossRef]

- Diethelm, M.; Penninck, L.; Regnat, M.; Offermans, T. Finite Element Modeling for Analysis of Electroluminescence and Infrared Images of Thin-Film Solar Cells. Sol. Energy 2020, 197, 408–416. [Google Scholar] [CrossRef]

- Gao, M.; Xie, Y.; Song, P.; Qian, J.; Sun, X.; Liu, J. A Definition Rule for Defect Classification and Grading of Solar Cells Photoluminescence Feature Images and Estimation of CNN-Based Automatic Defect Detection Method. Crystals 2023, 13, 819. [Google Scholar] [CrossRef]

- Rachid, Z.; Nadir, F.A. Hybrid CNN-SVM Model for High-Accuracy Defect Detection in PV Modules Using Infrared Images. Alger. J. Renew. Energy Sustain. Dev. 2024, 6, 44–52. [Google Scholar]

- Al-Otum, H.M. Deep learning-based automated defect classification in Electroluminescence images of solar panels. Adv. Eng. Inform. 2023, 58, 102147. [Google Scholar] [CrossRef]

- Puranik, V.E.; Kumar, R.; Gupta, R. Progress in module level quantitative electroluminescence imaging of crystalline silicon PV module: A review. Sol. Energy 2024, 264, 111994. [Google Scholar] [CrossRef]

- Ory, D.; Paul, N.; Lombez, L. Extended Quantitative Characterization of Solar Cell from Calibrated Voltage-Dependent Electroluminescence Imaging. J. Appl. Phys. 2021, 129, 043106. [Google Scholar] [CrossRef]

- Jia, Y.; Wang, Y.; Hu, X.; Xu, J.; Chen, S. Diagnosing Breakdown Mechanisms in Monocrystalline Silicon Solar Cells via Electroluminescence Imaging. Sol. Energy 2021, 221, 300–309. [Google Scholar] [CrossRef]

- Chen, X.; Karin, T.; Jain, A. Automated Defect Identification in Electroluminescence Images of Solar Modules. Sol. Energy 2022, 239, 380–392. [Google Scholar] [CrossRef]

- Zebari, D.A.; Al-Waisy, A.S.; Ibrahim, D.A. Identifying Defective Solar Cells in Electroluminescence Images Using Deep Feature Representations. PeerJ Comput. Sci. 2022, 8, e992. [Google Scholar] [CrossRef]

- Zafirovska, I. Line Scan Photoluminescence and Electroluminescence Imaging of Silicon Solar Cells and Modules. Ph.D. Thesis, University of New South Wales, Sydney, NSW, Australia, 2019. Available online: https://www.researchgate.net/publication/339676573_Line_scan_photoluminescence_and_electroluminescence_imaging_of_silicon_solar_cells_and_modules (accessed on 11 March 2025).

- Planes, E.; Spalla, M.; Juillard, S.; Perrin, L. Absolute Quantification of Photo-/Electroluminescence Imaging for Solar Cells: Definition and Application to Organic and Perovskite Devices. ACS Appl. Electron. Mater. 2019, 1, 1372–1379. [Google Scholar] [CrossRef]

- Rajput, A.S.; Zhang, Y.; Ho, J.W.; Aberle, A.G. Comparative Study of the Electrical Parameters of Individual Solar Cells in a c-Si Module Extracted Using Indoor and Outdoor Electroluminescence Imaging. IEEE J. Photovolt. 2020, 10, 1125–1132. [Google Scholar] [CrossRef]

- Xi, X.; Sun, Q.; Shao, J.; Liu, G.; Yang, G.; Zhu, B. A real-time monitoring method of potential-induced degradation shunts for crystalline silicon solar cells. J. Renew. Sustain. Energy 2025, 17, 013502. [Google Scholar] [CrossRef]

- Mateo Romero, H.F. Employing Artificial Intelligence Techniques for the Estimation of Energy Production in Photovoltaic Solar Cells Based on Electroluminescence Images. Ph.D. Thesis, Universidad de Valladolid, Valladolid, Spain, 2024. Available online: http://uvadoc.uva.es/handle/10324/71657 (accessed on 11 March 2025).

- Colvin, D.J.; Schneller, E.J.; Davis, K.O. Cell Dark Current–Voltage from Non-Calibrated Module Electroluminescence Image Analysis. Sol. Energy 2022, 250, 1204–1214. [Google Scholar] [CrossRef]

- Daher, D.H.; Mathieu, A.; Abdallah, A.; Mouhoumed, D.; Logerais, P.-O.; Gaillard, L.; Ménézo, C. Photovoltaic failure diagnosis using imaging techniques and electrical characterization. EPJ Photovolt. 2024, 15, 25. [Google Scholar] [CrossRef]

- Barraz, Z.; Sebari, I.; Ait El Kadi, K.; Ait Abdelmoula, I. Towards a Holistic Approach for UAV-Based Large-Scale Photovoltaic Inspection: A Review on Deep Learning and Image Processing Techniques. Technologies 2025, 13, 117. [Google Scholar] [CrossRef]

- Ghahremani, A.; Adams, S.D.; Norton, M.; Khoo, S.Y.; Kouzani, A.Z. Advancements in AI-Driven detection and localisation of solar panel defects. Adv. Eng. Inform. 2025, 64, 103104. [Google Scholar] [CrossRef]

- Jalal, M.; Khalil, I.U.; Haq, A.U. Deep learning approaches for visual faults diagnosis of photovoltaic systems: State-of-the-Art review. Results Eng. 2024, 23, 102622. [Google Scholar] [CrossRef]

- Balachandran, G.B.; Devisridhivyadharshini, M.; Ramachandran, M.E.; Santhiya, R. Comparative investigation of imaging techniques, pre-processing and visual fault diagnosis using artificial intelligence models for solar photovoltaic system—A comprehensive review. Measurement 2024, 232, 114683. [Google Scholar] [CrossRef]

- Michail, A.; Livera, A.; Tziolis, G.; Carús Candás, J.L.; Fernandez, A.; Antuña Yudego, E.; Fernández Martínez, D.; Antonopoulos, A.; Tripolitsiotis, A.; Partsinevelos, P.; et al. A comprehensive review of unmanned aerial vehicle-based approaches to support photovoltaic plant diagnosis. Heliyon 2024, 10, e23983. [Google Scholar] [CrossRef]

- Zaman, E.E.; Khanam, R. PV-faultNet: Optimized CNN Architecture to detect defects resulting efficient PV production. arXiv 2024. [Google Scholar] [CrossRef]

- Lin, K.-M.; Lin, H.-H.; Lin, Y.-T. Development of a CNN-based hierarchical inspection system for detecting defects on electroluminescence images of single-crystal silicon photovoltaic modules. Mater. Today Commun. 2022, 31, 103796. [Google Scholar] [CrossRef]

- Karakan, A. Detection of Defective Solar Panel Cells in Electroluminescence Images with Deep Learning. Sustainability 2025, 17, 1141. [Google Scholar] [CrossRef]

- Qureshi, U.R.; Rashid, A.; Altini, N.; Bevilacqua, V.; La Scala, M. Explainable Intelligent Inspection of Solar Photovoltaic Systems with Deep Transfer Learning: Considering Warmer Weather Effects Using Aerial Radiometric Infrared Thermography. Electronics 2025, 14, 755. [Google Scholar] [CrossRef]

- Samrouth, K.; Nazir, S.; Bakir, N.; Khodor, N. Dual Cnn for Photovoltaic Electroluminescence Images Microcrack Detection. Available online: https://ssrn.com/abstract=5015797 (accessed on 20 March 2025).

- Noura, H.N.; Chahine, K.; Bassil, J.; Chaaya, J.A.; Salman, O. Efficient combination of deep learning models for solar panel damage and soiling detection. Measurement 2025, 251, 117185. [Google Scholar] [CrossRef]

- Li, W.; Wang, F.; Sun, Z. Semantic segmentation method of photovoltaic cell microcracks based on EL polarization imaging. Sol. Energy 2025, 291, 113364. [Google Scholar] [CrossRef]

- Binomairah, A.; Abdullah, A.; Khoo, B.E.; Mahdavipour, Z.; Teo, T.W.; Noor, N.S.M.; Abdullah, M.Z. Detection of microcracks and dark spots in monocrystalline PERC cells using photoluminescene imaging and YOLO-based CNN with spatial pyramid pooling. EPJ Photovolt. 2022, 13, 27. [Google Scholar] [CrossRef]

- Shihavuddin, A.S.M.; Rashid, M.R.A.; Maruf, H.; Hasan, M.A.; Ul Haq, M.A.; Ashique, R.H.; Al Mansur, A. Image based surface damage detection of renewable energy installations using a unified deep learning approach. Energy Rep. 2021, 7, 4566–4576. [Google Scholar] [CrossRef]

- Ding, S.; Jing, W.; Chen, H.; Chen, C. Yolo Based Defects Detection Algorithm for EL in PV Modules with Focal and Efficient IoU Loss. Appl. Sci. 2024, 14, 7493. [Google Scholar] [CrossRef]

- Wang, N.; Huang, S.; Liu, X.; Wang, Z.; Liu, Y.; Gao, Z. MRA-YOLOv8: A Network Enhancing Feature Extraction Ability for Photovoltaic Cell Defects. Sensors 2025, 25, 1542. [Google Scholar] [CrossRef]

- Lin, H.-H.; Dandage, H.K.; Lin, K.-M.; Lin, Y.-T.; Chen, Y.-J. Efficient Cell Segmentation from Electroluminescent Images of Single-Crystalline Silicon Photovoltaic Modules and Cell-Based Defect Identification Using Deep Learning with Pseudo-Colorization. Sensors 2021, 21, 4292. [Google Scholar] [CrossRef]

- Chen, S.; Lu, Y.; Qin, G.; Hou, X. Polycrystalline silicon photovoltaic cell defects detection based on global context information and multi-scale feature fusion in electroluminescence images. Mater. Today Commun. 2024, 41, 110627. [Google Scholar] [CrossRef]

- Lang, D.; Lv, Z. A PV cell defect detector combined with transformer and attention mechanism. Sci. Rep. 2024, 14, 20671. [Google Scholar] [CrossRef]

- Gao, Y.; Pang, C.; Zeng, X.; Jiang, P. A Single-Stage Photovoltaic Module Defect Detection Method Based on Optimized YOLOv8. IEEE Access 2025, 13, 27805–27817. [Google Scholar] [CrossRef]

- Demirci, M.Y.; Beşli, N.; Gümüşçü, A. An improved hybrid solar cell defect detection approach using Generative Adversarial Networks and weighted classification. Expert Syst. Appl. 2024, 252, 124230. [Google Scholar] [CrossRef]

- da Silveira Junior, C.R.; Sousa, C.E.R.; Fonseca Alves, R.H. Automatic Fault Classification in Photovoltaic Modules Using Denoising Diffusion Probabilistic Model, Generative Adversarial Networks, and Convolutional Neural Networks. Energies 2025, 18, 776. [Google Scholar] [CrossRef]

- Parikh, H.R.; Buratti, Y.; Spataru, S.; Villebro, F.; Reis Benatto, G.A.D.; Poulsen, P.B.; Wendlandt, S.; Kerekes, T.; Sera, D.; Hameiri, Z. Solar Cell Cracks and Finger Failure Detection Using Statistical Parameters of Electroluminescence Images and Machine Learning. Appl. Sci. 2020, 10, 8834. [Google Scholar] [CrossRef]

- Chen, H.; Pang, Y.; Hu, Q.; Liu, K. Solar cell surface defect inspection based on multispectral convolutional neural network. J. Intell. Manuf. 2020, 31, 453–468. [Google Scholar] [CrossRef]

- Al-Otum, H.M. Classification of anomalies in electroluminescence images of solar PV modules using CNN-based deep learning. Sol. Energy 2024, 278, 112803. [Google Scholar] [CrossRef]

- Lo, C.-M.; Lin, T.-Y. Automated optical inspection based on synthetic mechanisms combining deep learning and machine learning. J. Intell. Manuf. 2024. [Google Scholar] [CrossRef]

- Lian, J.; Wang, L.; Liu, T.; Ding, X.; Yu, Z. Automatic visual inspection for printed circuit board via novel Mask R-CNN in smart city applications. Sustain. Energy Technol. Assess. 2021, 44, 1010320. [Google Scholar] [CrossRef]

- Moon, I.Y.; Lee, H.W.; Kim, S.-J.; Oh, Y.-S.; Jung, J.; Kang, S.-H. Analysis of the Region of Interest According to CNN Structure in Hierarchical Pattern Surface Inspection Using CAM. Materials 2021, 14, 2095. [Google Scholar] [CrossRef]

- Balzategui, J.; Eciolaza, L.; Maestro-Watson, D. Anomaly Detection and Automatic Labeling for Solar Cell Quality Inspection Based on Generative Adversarial Network. Sensors 2021, 21, 4361. [Google Scholar] [CrossRef]

- Bharadiya, J.P. A Comparative Study of Business Intelligence and Artificial Intelligence with Big Data Analytics. Am. J. Artif. Intell. 2023, 7, 24–30. [Google Scholar] [CrossRef]

- Laot, E.; Puel, J.-B.; Guillemoles, J.-F.; Ory, D. Physics-Based Machine Learning Electroluminescence Models for Fast yet Accurate Solar Cell Characterization. Prog. Photovolt. Res. Appl. 2025. [Google Scholar] [CrossRef]

- Chen, X.; Zhang, L.; Chen, X.; Cen, Y.; Zhang, L.; Zhang, F. A lightweight multiscale feature fusion network for solar cell defect detection. Comput. Mater. Contin. 2025, 82, 521–542. [Google Scholar] [CrossRef]

- Demir, F. Enhancing Defect Classification in Solar Panels with Electroluminescence Imaging and Advanced Machine Learning Strategies. IEEE Access 2025, 13, 58481–58495. [Google Scholar] [CrossRef]

- Thakfan, A.; Bin Salamah, Y. Development and Performance Evaluation of a Hybrid AI-Based Method for Defects Detection in Photovoltaic Systems. Energies 2025, 18, 812. [Google Scholar] [CrossRef]

- Tang, C.; Sun, D.; Zou, J.; Xiong, Y.; Fang, G.; Zhang, W. Lay-up defects inspection for automated fiber placement with structural light scanning and deep learning. Polym. Compos. 2025, 1–11. [Google Scholar] [CrossRef]

- Yousif, H.; Al-Milaji, Z. Fault detection from PV images using hybrid deep learning model. Sol. Energy 2024, 267, 112207. [Google Scholar] [CrossRef]

- İmak, A. Automatic Classification of Defective Photovoltaic Module Cells Based on a Novel CNN-PCA-SVM Deep Hybrid Model in Electroluminescence Images. Turk. J. Sci. Technol. 2024, 19, 497–508. [Google Scholar] [CrossRef]

- Gopalakrishnan, S.; Wahab, N.I.A.; Veerasamy, V.; Hizam, H.; Farade, R.A. NASNet-LSTM based Deep learning Classifier for Anomaly Detection in Solar Photovoltaic Modules. J. Phys. Conf. Ser. 2024, 2777, 012006. [Google Scholar] [CrossRef]

- Sridharan, N.V.; Vaithiyanathan, S.; Aghaei, M. Voting based ensemble for detecting visual faults in photovoltaic modules using AlexNet features. Energy Rep. 2024, 11, 3889–3901. [Google Scholar] [CrossRef]

- Ledmaoui, Y.; El Maghraoui, A.; El Aroussi, M.; Saadane, R. Enhanced Fault Detection in Photovoltaic Panels Using CNN-Based Classification with PyQt5 Implementation. Sensors 2024, 24, 7407. [Google Scholar] [CrossRef]

- Hassan, S.; Dhimish, M. Enhancing solar photovoltaic modules quality assurance through convolutional neural network-aided automated defect detection. Renew. Energy 2023, 219, 119389. [Google Scholar] [CrossRef]

| Author | Reference | Date | Imaging Technology | Model | Model Application | Damage Type |
|---|---|---|---|---|---|---|
| Karakan | [65] | 2025 | EL | AlexNet, GoogleNet, MobileNet, VGG16, ResNet50, DenseNet121, SqueezeNet | classification (intact, cracked, broken) | crack, fracture |
| Wang et al. | [73] | 2025 | EL | MRA-YOLOv8 (MBCANet, ResBlock, AMPDIoU) | detection | crack, broken grid, spots |
| Laot et al. | [88] | 2025 | EL | MLP, modified U-NET (mU-NET) | regression (local Rs and J0 estimate) | dislocation, damaged tab (fingers) |
| Chen et al. | [89] | 2025 | EL | LMFF (Lightweight Multiscale Feature Fusion Network) | segmentation (precise localization of failures) | crack, black spots, broken grid |
| Demir | [90] | 2025 | EL | CNN + RSWS | classification (2 and 4 classes) | microcrack, fracture, tab interruption |
| Li et al. | [69] | 2025 | EL | improved U-Net | segmentation (microcrack) | microcrack |

| Author | Reference | Date | Imaging Technology | Model | Model Application | Damage Type |
|---|---|---|---|---|---|---|
| da Silveira Junior et al. | [79] | 2025 | IR | CNN + GAN, DDPM | classification (11 classes) | contamination, shading, diode |
| Qureshi et al. | [66] | 2025 | IR (radiometric heat map) | MobileNetV2, InceptionV3, VGG16, CNN-ensemble (DTL models) | multi-classification, diagnostics | hotspot, heated junction box, substring, multistring, patchwork |
| Thakfan | [91] | 2025 | IR, I-V curves | ML + transfer learning | failure detection and diagnostics | surface and performance defects |
| Tang et al. | [92] | 2025 | point cloud 3D | PointNet++ | segmentation (lay-up failures) | wrinkle, bridge, gap, overlap |
| Noura et al. | [68] | 2025 | RGB | EfficientNet, ViT, YOLOv5, VGG19, ResNet50, Swin Transformer, MobileNet, ConvNext, NASNet | classification (faulty/intact, contamination/damage types) | dust, bird droppings, snow, physical and electrical injuries |
| Gao et al. | [77] | 2025 | RGB | YOLOv8 (PSA-det) | detection | scratches, broken grid, discoloration |

| Author | Reference | Date | Imaging Technology | Model | Model Application | Damage Type |
|---|---|---|---|---|---|---|
| Zaman et al. | [63] | 2024 | EL | Custom CNN | classification | common failures |
| Samrouth et al. | [67] | 2024 | EL | Dual CNN (shallow + deep), VGG19, AlexNet | detection (binary) | microcrack |
| Ding et al. | [72] | 2024 | EL | YOLOv5 (m, l, x, s) + Cascade | detection, classification | 12 types: crack, dislocation, etc. |
| Chen et al. | [75] | 2024 | EL | YOLOv8 + Attention | classification, detection | corner, scratch, printing error, etc. |
| Lang | [76] | 2024 | EL | YOLOv8 + Transformer + PSA attention | failure detection | crack, fracture, shading, spots |
| Demirci et al. | [78] | 2024 | EL | GAN, VGG-16, CNN | classification | microcracks, broken cells, finger interruptions |
| Al-Otum | [82] | 2024 | EL | LwNet, SqueezeNet, GoogleNet | classification (4 and 8 classes) | crack, microcrack, break, finger interruption, disconnected cell, diode failure, soldering defect |
| Yousif | [93] | 2024 | EL | CNN + HoG (hybrid model) | classification (faulty/intact) | crack, PID, dark spots |
| İmak | [94] | 2024 | EL | CNN + PCA + SVM (MobileNetV2, DenseNet201, InceptionV3) | classification (faulty/intact) | crack, contamination, shadow, manufacturing defect |

| Author | Reference | Date | Imaging Technology | Model | Model Application | Damage Type |
|---|---|---|---|---|---|---|
| Sinap and Kumtepe | [18] | 2024 | IR | custom CNN | anomaly detection, classification (12 classes) | cracking, hotspot, shadowing, diode fault, soiling, vegetation, offline module, etc. |
| Gopalakrishnan et al. | [95] | 2024 | IR | NASNet + LSTM | anomaly detection, classification (12 classes) | hotspot, cracking, shadowing, soiling, offline, vegetation, etc. |
| Zaghdoudi | [44] | 2024 | IR | CNN + SVM (hybrid) VGG, ResNet, ViT | classification | hotspot, cracking, shadowing, soiling, diode fault, offline module, etc. (12 classes) |
| Sridharan et al. | [96] | 2024 | RGB (UAV) | AlexNet + ensemble (SVM, KNN, J48) | classification (visual failures) | snail trail, fractures, discoloration, burn marks |
| Rodriguez-Vazquez et al. | [20] | 2024 | RGB | CenterNet-based keypoint detection | real-time solar panel detection with UAV | panel level localization |
| Ledmaoui et al. | [97] | 2024 | RGB | CNN (VGG16 based) | anomaly classification, failure detection | dust, dirt, bird droppings, shading |

| Author | Reference | Date | Accuracy | Precision | Recall | F1-Score | IoU/mAP |
|---|---|---|---|---|---|---|---|
| Karakan | [65] | 2025 | 97.82% (mono), 96.29% (poly) | N/A | N/A | N/A | N/A |
| Wang et al. | [73] | 2025 | N/A | N/A | N/A | N/A | mAP50: 91.7% (PVEL-AD), 69.3% (SPDI) |
| Laot et al. | [88] | 2025 | ≈99.99% (MLP), ≈99.12% (mU-NET) | N/A | N/A | N/A | N/A |
| Chen et al. | [89] | 2025 | N/A | N/A | N/A | 81.3%, 67.5%, 96.2% | IoU: 68.5%, 51.0%, 92.7% |
| Demir | [90] | 2025 | 98.17% (2 classes), 97.02% (4 classes) | N/A | N/A | N/A | N/A |
| Li et al. | [69] | 2025 | N/A | N/A | N/A | N/A | better than other networks, without specific value |
| da Silveira Junior et al. | [79] | 2025 | 89.83% (DDPM), 86.98% (GAN) | N/A | N/A | N/A | N/A |
| Qureshi et al. | [66] | 2025 | CNN-ensemble: 100%, MobileNetV2: 99.8% | N/A | N/A | CNN-ensemble: 1.000 | N/A |
| Thakfan | [91] | 2025 | >98% | N/A | >98% | N/A | N/A |
| Tang et al. | [92] | 2025 | N/A | N/A | N/A | N/A | IoU: >72% |
| Noura et al. | [68] | 2025 | 96.3%, 91.8% | N/A | N/A | 87% (YOLOv5s) | IoU = 95% (UNet + ASPP) |
| Gao et al. | [77] | 2025 | N/A | N/A | N/A | N/A | mAP50: 87.2% |

| Author | Reference | Date | Accuracy | Precision | Recall | F1-Score | IoU/mAP |
|---|---|---|---|---|---|---|---|
| Zaman et al. | [63] | 2024 | 91.67% (val) | 0.91 | 0.89 | 0.9 | N/A |
| Samrouth et al. | [67] | 2024 | N/A | N/A | N/A | N/A | qualitative comparison only |
| Ding et al. | [72] | 2024 | N/A | N/A | N/A | N/A | mAP: 85.7% (YOLOv5m), 86.5% (YOLOv5s), 86.7% (YOLOv5x) |
| Chen et al. | [75] | 2024 | N/A | N/A | N/A | 0.697 | mAP50: 77.9%, mAP50:95: 49.6% |
| Lang | [76] | 2024 | N/A | N/A | N/A | N/A | mAP50: 77.9% |
| Demirci et al. | [78] | 2024 | 94.11% | 94.7% | 96.7% | 95.7% | N/A |
| Al-Otum | [82] | 2024 | LwNet: 96.2%, SqueezeNet: 93.95%, GoogleNet: 94.6% | LwNet: 95.2% | LwNet: 94.8% | LwNet: 95.0% | N/A |
| Yousif | [93] | 2024 | Better than 6 previous models | N/A | N/A | N/A | N/A |
| İmak | [94] | 2024 | 92.19% | 0.92 | 0.9 | 0.91 | N/A |
| Sinap and Kumtepe | [18] | 2024 | detection: 92%, fault classification: 82% | N/A | N/A | N/A | N/A |
| Gopalakrishnan et al. | [95] | 2024 | 84.75% | N/A | N/A | N/A | N/A |
| Zaghdoudi | [44] | 2024 | 92.37% | N/A | N/A | N/A | N/A |
| Sridharan et al. | [96] | 2024 | 98.30% (2 classes) | N/A | N/A | N/A | N/A |
| Rodriguez-Vazquez et al. | [20] | 2024 | N/A | N/A | N/A | N/A | IoU (keypoint detection): 85.3% |
| Ledmaoui et al. | [97] | 2024 | 91.46% | N/A | N/A | 91.67% | Specificity: 98.29% |
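For the detection models, the mAP50 entries follow the common convention that a predicted bounding box counts as a true positive when its IoU with a ground-truth box is at least 0.5, while mAP50:95 averages over thresholds from 0.5 to 0.95. The sketch below illustrates only that matching step, not the full average-precision computation. The box format `(x_min, y_min, x_max, y_max)`, the function names, and the example boxes are assumptions made for illustration.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def box_iou(a: Box, b: Box) -> float:
    """IoU of two axis-aligned boxes; 0.0 when they do not overlap."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def is_true_positive(pred: Box, truth: Box, threshold: float = 0.5) -> bool:
    """Matching rule assumed for mAP50: IoU at or above the threshold."""
    return box_iou(pred, truth) >= threshold


# Hypothetical labeled defect and two candidate detections.
truth = (0.0, 0.0, 10.0, 10.0)
loose = (5.0, 0.0, 15.0, 10.0)   # overlaps half of the labeled box
tight = (2.0, 0.0, 12.0, 10.0)   # overlaps most of the labeled box

print(box_iou(loose, truth), is_true_positive(loose, truth))  # IoU = 50/150
print(box_iou(tight, truth), is_true_positive(tight, truth))  # IoU = 80/120
```

Under this rule the loosely placed box is rejected and the tighter one is accepted, which is why mAP50 values are generally higher than mAP50:95 values for the same model.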

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Matusz-Kalász, D.; Bodnár, I.; Jobbágy, M. An Overview of CNN-Based Image Analysis in Solar Cells, Photovoltaic Modules, and Power Plants. Appl. Sci. 2025, 15, 5511. https://doi.org/10.3390/app15105511
