Abstract

Collaborative filtering is a widely used recommendation method, but it is susceptible to data poisoning attacks, in which malicious actors inject synthetic user interaction data to manipulate recommendation results and secure illicit benefits. Traditional poisoning attack methods require an in-depth understanding of the recommendation system, yet they fail to account for its dynamic nature and algorithmic complexity, which hinders effective breaches of the system’s defensive mechanisms. In this paper, we propose DRLAttack, a deep reinforcement learning-based framework for data poisoning attacks. DRLAttack can launch both white-box and black-box data poisoning attacks. In the white-box setting, DRLAttack dynamically tailors attack strategies to changes in the recommendation context, generating more potent and stealthy fake user interactions for precisely targeted data poisoning. Furthermore, we extend DRLAttack to black-box settings. By introducing spy users that simulate the behavior of active and inactive users in the training dataset, we indirectly obtain the promotion status of target items and adjust the attack strategy in response. Experimental results on real-world recommendation system datasets demonstrate that DRLAttack can effectively manipulate recommendation results.

1. Introduction

Recommendation systems are crucial in personalizing applications, combining user attributes and historical behavior data to predict and meet users’ personalized preferences. As one of the core algorithms, collaborative filtering technology mines similarities between users by analyzing their historical interactions, thereby providing accurate personalized recommendations and enhancing user experience.

Data poisoning attacks are one of the primary threats to recommendation systems [1,2]. These attacks aim to subtly alter training data and typically occur during the initial phase of the machine learning life cycle (the training phase). During data collection, attackers can inject carefully crafted malicious data into clean datasets, thereby misleading the subsequent model training process [3]. Once the poisoned model is deployed, its outputs will be skewed according to the attacker’s intentions, leading to recommendations that do not accurately reflect users’ preferences. The openness of recommendation systems, where models are often trained on public data, creates opportunities for malicious actors. Additionally, the significant influence of these systems on online users provides incentives for such attacks [4]. Attackers can inject fake data into the training set through controlled fake user accounts, causing the model to behave abnormally [5]. The goal is to manipulate the scores and rankings of certain items, making them appear more or less aligned with the interests of typical users. This manipulation can result in these items being recommended more frequently or suppressed in users’ recommendation lists, thereby influencing their visibility and promotion [6].

With ongoing updates and improvements to collaborative filtering recommendation algorithms by service providers, data poisoning attacks must continually adapt to face two significant challenges. First, the fake user interaction data generated by attackers often perform poorly and are easily detected. Previous studies have shown that attackers manually create fake data using heuristic methods [7,8], typically following simple attack strategies. For instance, a common approach involves frequently clicking on the target item and a few popular items, which may mislead the recommendation system into associating the target item with those popular items. However, such simplistic rules often fail to achieve high attack success rates against complex recommendation systems. Second, attackers generally lack prior knowledge of the recommendation algorithms and models in use. Most service providers now keep users’ recommendation lists private, visible only to the users themselves, which complicates the attackers’ ability to execute informed attacks. Furthermore, the robust performance and complex structures of modern recommendation systems make it challenging for attackers to access specific model parameters, limiting their ability to conduct targeted and effective attacks.

To address these challenges, we developed a deep reinforcement learning-based framework for poisoning attacks, named DRLAttack. This framework utilizes a Deep Q-Network (DQN) and its variants, employing similarity measurement techniques to identify items similar to the target while designing novel action spaces and reward functions. Additionally, we integrated the Dueling Network architecture to enhance the optimization of the agent’s attack strategies.

By leveraging the RecBole recommendation system library, we enable DRLAttack to autonomously generate high-quality fake user interaction data, allowing it to dynamically simulate real user behaviors for promotional attacks on target items. Furthermore, to address reward feedback in the black-box setting, we introduce spy users that mimic the behavior patterns of real users to indirectly gather information on the promotion status of target items. We refine the reward function to include a weighted sum of the target item’s hit rate and its ranking position in the recommendation list. This approach provides the agent with more informative feedback, enabling it to adapt its strategies more effectively. This foundation allows us to optimize the strategy network of DRLAttack to capture the dynamic changes in fake user interaction behavior. Our contributions are summarized as follows:

- We propose DRLAttack, a deep reinforcement learning-based data poisoning framework that can adapt to both white-box and black-box attack settings, effectively launching data poisoning attacks against various recommendation systems.

- We design a white-box data poisoning attack using DRLAttack, combining the Deep Q-Network (DQN) with the RecBole recommendation system library to autonomously generate high-quality and covert fake user interaction data.

- DRLAttack is adapted for black-box settings by introducing spy users and optimizing both the reward function and the strategy network, enabling targeted attacks with limited background knowledge.

- We conduct extensive experiments on multiple real-world datasets, which fully demonstrate the effectiveness of our attack framework.

The rest of this paper is organized as follows. Section 2 discusses related work. Section 3 provides the problem definition. Section 4 details our poisoning attack framework in a white-box setting, using deep reinforcement learning. Section 5 introduces the improved deep reinforcement learning-based poisoning attack framework in a black-box setting. Section 6 presents the experimental setup and results on real datasets. Finally, Section 7 summarizes the research findings and future work of this paper.

2. Related Work

Early work on data poisoning attacks against recommendation systems was mostly independent of the targeted recommendation algorithms and relied on heuristic methods. For instance, ref. [9] introduced random attacks, where fake users randomly select items and assign ratings drawn from a normal distribution. However, this method is often easily detected due to its lack of sophistication. Ref. [10] proposed average attacks, in which attackers randomly choose items and assign them average ratings to make fake users appear more similar to ordinary users.

Ref. [11] discussed popularity attacks, where attackers select a few items with the highest average ratings (popular items) along with other items to form the behavior data for fake users. To better understand and categorize these attacks, we classify them based on the type of collaborative filtering algorithms they target.

2.1. Attacks Against Neighborhood-Based Algorithms

In attacks against neighborhood-based collaborative filtering algorithms, attackers often leverage the characteristics of a user’s nearest neighbors to boost the ranking of target items. Ref. [12] describes manipulating recommendation outcomes by forging or tampering with user rating data, for example, by artificially raising or lowering ratings for certain items, leading to skewed recommendation results. Ref. [13] involves adding noise through random ratings, a method that requires access to the recommendation system’s rating data and significant resources.

2.2. Attacks Against Association Rule-Based Algorithms

For association rule-based collaborative filtering algorithms, attackers inject false co-visitation between items in each user’s profile rather than fake ratings and enhance attack performance in two ways. Ref. [14] discusses changing item properties or tags via forging or tampering. For instance, attackers might tag dissimilar items with the same label or similar items with different labels to confuse the algorithm. Ref. [15] discusses adding junk items that are unrelated to the target items or are highly similar to real items but of lower quality. This approach disrupts the mining results of association rules.

2.3. Attacks Against Graph-Based Algorithms

For attacks against graph-based random walk collaborative filtering algorithms, attackers generally employ the following four strategies:

- Adding junk nodes: As described in [16,17], attackers introduce nodes without practical significance, such as fake user or item nodes. This affects the recommendation system’s random walks by influencing the paths taken.

- Disrupting edge weights: Attackers modify the weights of certain edges to influence the recommendation system’s prioritization during random walks [9].

- Targeting crucial nodes: In [18], attackers focus on critical nodes within the recommendation system, rendering them dysfunctional to disrupt the system’s operations.

- Using projected gradient descent: According to [19], attackers apply a bi-level optimization attack framework solved via projected gradient descent to execute their strategies.

2.4. Attacks Against Latent Factor Model-Based Algorithms

For latent factor model-based collaborative filtering algorithms, as discussed in [20,21,22,23], attackers use projected gradient ascent to solve a bi-level optimization attack framework. Additionally, instead of directly attacking unknown recommendation systems, attackers typically take specific actions on social networking sites to collect a set of sample data related to the target recommendation system. These data are used to build a surrogate model that learns the recommendation patterns of the target system. This surrogate model can estimate the item proximity learned by the target recommendation system. By attacking the surrogate model, attackers can directly transfer the generated fake user interaction data to attack the target recommendation system. This approach allows for an effective attack by leveraging insights gained from the surrogate model to undermine the target system.

2.5. Defense Mechanisms

Although research on data poisoning attacks against various collaborative filtering algorithms is progressing rapidly, online service providers have also introduced methods to defend against and mitigate the threats of these attacks. Ref. [24] proposes robust optimization techniques to enhance the resilience of recommendation systems against data poisoning attacks. Meanwhile, ref. [25] introduces distillation techniques to mitigate the impact of such attacks.

Finally, Table 1 categorizes the types of collaborative filtering algorithms and summarizes the limitations of related works. It can be seen from the table that designing a method with high stealthiness that can probe the behavior of recommendation models and adaptively learn the target model is challenging.

Table 1.

Summary of attack methods and limitations for different collaborative filtering algorithms.

3. Problem Definition

For a normal user $u$, a recommendation system generates a top-$k$ recommendation list $L_u$. This list represents the user’s preference ranking for items, where the first element indicates the item that user $u$ is most likely to click on next. This paper focuses on designing attack methods for targeted attacks. Generally, by precisely manipulating the input training data, attackers can ensure that the target item appears in as many users’ recommendation lists as possible, potentially achieving a higher ranking. A targeted attack consists of the following three key steps:

- Create fake user accounts: These accounts are used to simulate the rating behaviors of real users by rating specific items and other items, thereby influencing the output of the recommendation system. Assume $N$ represents the number of fake user accounts created by the attacker. Each fake account performs a series of actions aimed at elevating the recommendation ranking of a specific item. The creation of fake user accounts can be denoted as $U_f = \{u_1, u_2, \ldots, u_N\}$, where $U_f$ represents the collection of all fake user accounts.

- Rating item selection: The primary goal is to manipulate the recommendation algorithm by having fake users rate a carefully selected set of items, thus ensuring the target item is recommended to more users. This process requires meticulous planning to ensure the attack activities are not easily detected by the system while maximizing the impact on the recommendation results. Our strategy in this step begins with mixing the target item with ordinary items to simulate the interaction behavior of real users. Assume $T$ is the maximum number of items each fake user interacts with. By mixing the target item set with ordinary items, attackers can more effectively conceal their actions. These items are numbered as a sequence of behaviors $(i_1, i_2, \ldots, i_T)$, and attackers adjust this sequence to ensure they can disrupt the true recommendation outcomes.

- Rating manipulation: To further simulate the rating behavior of real users, attackers use different rating patterns on selected items. This means not only giving high scores to the target items but also assigning medium or low scores to some ordinary items, as well as incorporating implicit feedback (such as clicking or not clicking) to increase the diversity and credibility of the rating behavior. For each fake user $u \in U_f$ and item $i$, rating manipulation can be represented through a function $f(u, i)$. Explicit rating manipulation can be represented as $f(u, i) = s$, where $s$ is the score assigned to item $i$. Generally, for target items, $s$ tends to be a higher score, while for ordinary items, appropriate scores are chosen according to the manipulation strategy to simulate real user behavior. Implicit rating manipulation mainly relies on user–item interaction behaviors. If user $u$ interacts with item $i$, then $f(u, i)$ is set to 1, indicating a positive reaction from the user to the item; if there is no interaction between user $u$ and item $i$, then $f(u, i)$ is set to 0, indicating no direct interest in the item, but not necessarily a dislike. Increasing the number of interactions with the target item (i.e., setting more 1 values) can enhance the exposure of the target item, reflecting its importance and visibility in the recommendation system, thereby increasing the likelihood of it being recommended.
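The three steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the `fake_` account prefix, the filler scores, and the random item selection are all hypothetical placeholders (in DRLAttack the item sequence is chosen by the learned agent, not at random), and the target item is assumed not to appear among the ordinary items.

```python
import random

def build_fake_profiles(num_fake_users, target_item, ordinary_items, max_items, seed=0):
    """Steps 1 and 2: create N fake accounts, each with T items (target mixed with ordinary items)."""
    rng = random.Random(seed)
    profiles = {}
    for n in range(num_fake_users):
        # Placeholder selection: random filler items plus the target item, then shuffled.
        sequence = rng.sample(ordinary_items, max_items - 1) + [target_item]
        rng.shuffle(sequence)
        profiles[f"fake_{n}"] = sequence
    return profiles

def explicit_ratings(sequence, target_item, target_score=5, filler_scores=(2, 3, 4), seed=0):
    """Step 3 (explicit): high score for the target item, medium or low scores elsewhere."""
    rng = random.Random(seed)
    return {item: (target_score if item == target_item else rng.choice(filler_scores))
            for item in sequence}

def implicit_feedback(sequence, all_items):
    """Step 3 (implicit): 1 for an interaction, 0 for no interaction."""
    chosen = set(sequence)
    return {item: (1 if item in chosen else 0) for item in all_items}
```

For example, `build_fake_profiles(3, 99, list(range(10)), 4)` yields three fake accounts that each interact with four items, one of which is the target item 99.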

After generating synthetic user accounts and their interaction data, the subsequent procedure involves integrating these data into the original training set to enhance the visibility of target items in user recommendation lists through training. A white-box setting is ideal, where attackers, having a basic understanding of the recommendation algorithm, can replicate it to obtain user recommendation lists. However, this ideal scenario is hard to achieve in reality; in a black-box setting, user recommendation lists are private and only visible to the user themselves, making it difficult for attackers to assess the promotion of target items. To overcome this challenge, we introduce the concept of “spy users” as an innovative solution. Spy users refer to a group of synthetic user accounts created and controlled by attackers, designed to mimic the normal interaction behaviors of real users within the recommendation system. By reading the user–item interaction records from the training dataset, these users are categorized into two types: active users and inactive users, to cover different user behavior patterns:

Active users: For explicit datasets with ratings on a scale such as 1 to 5, users who tend to rate items highly are considered active users. Specifically, a user is deemed active if their average rating for items is three or higher. For implicit datasets, which lack ratings, users whose number of item interactions exceeds the average are regarded as active users.

Inactive users: Conversely, any user not classified as an active user within the dataset is considered inactive. These users are often a focus of the recommendation system, because once they engage with an item from the recommendation list, the system can quickly analyze their preferences and subsequently recommend more related items to them. To effectively target the recommendation system, constructing spy users to simulate the behaviors of inactive users requires meticulous planning.
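The active/inactive split described above can be expressed as a short sketch. The function names and the input layout (tuples of user, item, and optionally rating) are assumptions made for illustration; the thresholds follow the text (average rating of three or higher for explicit data, above-average interaction count for implicit data).

```python
from collections import defaultdict

def split_users_explicit(interactions, threshold=3.0):
    """interactions: iterable of (user, item, rating). Active if mean rating >= threshold."""
    totals = defaultdict(lambda: [0.0, 0])  # user -> [rating sum, rating count]
    for user, _item, rating in interactions:
        totals[user][0] += rating
        totals[user][1] += 1
    active = {u for u, (total, count) in totals.items() if total / count >= threshold}
    return active, set(totals) - active

def split_users_implicit(interactions):
    """interactions: iterable of (user, item). Active if interaction count > mean count."""
    counts = defaultdict(int)
    for user, _item in interactions:
        counts[user] += 1
    if not counts:
        return set(), set()
    mean_count = sum(counts.values()) / len(counts)
    active = {u for u, c in counts.items() if c > mean_count}
    return active, set(counts) - active
```

Spy users of each type could then be constructed by imitating the interaction records of users sampled from the corresponding group.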

By introducing spy users, we provide a feasible source of feedback for attack strategies in black-box settings. By analyzing the presence of target items in the recommendation lists of spy users, attackers can indirectly assess the impact of their actions and adjust their strategies accordingly.

4. DRLAttack in the White-Box Setting

4.1. Deep Reinforcement Learning Architecture

When constructing DRLAttack, the core concept is to model the attack process as a framework compatible with reinforcement learning. In this framework, the attacker acts as an agent interacting with the environment. To achieve this, it is essential to define new types of state spaces, action spaces, and reward functions and ensure that the entire process complies with the requirements of the Markov Decision Process (MDP). This includes the state space $S$, action space $A$, conditional transition probabilities $P$, reward function $R$, and discount factor $\gamma$ (i.e., the tuple $(S, A, P, R, \gamma)$). Here is a detailed introduction to the model architecture:

Environmental modeling: In the DRLAttack framework, the environment comprises the structure and algorithmic parameters of the target recommendation system. This environment is capable of capturing user preferences and behavior patterns through data on interactions between users and items, and it uses this information to generate recommendation lists.

Agent design: DRLAttack employs a Deep Q-Network (DQN) deep reinforcement learning algorithm as its agent. This agent improves its strategy by observing the current state, selecting appropriate actions, and receiving rewards provided by the environment. The goal of the agent is to maximize the cumulative reward.

Design of action space A: The action space defines all the possible actions that an attacker can perform, typically including the set of all items in the recommendation system. However, designing it this way often has a negative impact on the learning of the agent’s attack strategies, as the number of items in a real recommendation system’s training dataset is usually very large. It is challenging for the agent to identify correlations between users and items within a vast action space using limited attack resources, leading to a low success rate for attacks. To improve the design of the action space, we first analyze the training dataset before preparing the attack. Using similarity measurement methods, we identify other items similar to the target item to form a candidate item set $C$. For implicit datasets, we use the Jaccard coefficient to find candidate items. The Jaccard similarity [26] between any two items $i$ and $j$ is calculated as follows:

$$J(i, j) = \frac{|U_i \cap U_j|}{|U_i \cup U_j|} \tag{1}$$

where $U_i$ denotes the set of users who interacted with item $i$, $|U_i \cap U_j|$ represents the number of times the two items are interacted with by the same user (i.e., the count of simultaneous interactions with both items by users), and $|U_i \cup U_j|$ represents the total number of interactions where at least one of the items is interacted with by users (i.e., the count of interactions with at least one of the two items). The process of finding $n$ similar items first involves constructing a matrix R from the user–item implicit interaction data, where rows represent users and columns represent items. An element value of 1 indicates an interaction between a user and an item, while 0 indicates no interaction. Then, the similarity is calculated: for target item $i^*$, iterate through all other items in the matrix to compute the Jaccard similarity between each item and target item $i^*$. Finally, rank all items based on the calculated Jaccard similarities and select the top $n$ items with the highest similarity as the candidate set for target item $i^*$.
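The candidate search described above can be sketched as follows. This is a minimal illustration rather than the authors’ implementation: the function names are hypothetical, the interaction matrix is a plain list of 0/1 rows (users by items), and ties are broken by item index as an arbitrary assumption.

```python
def jaccard(users_a, users_b):
    """Jaccard similarity between two sets of user indices."""
    union = users_a | users_b
    return len(users_a & users_b) / len(union) if union else 0.0

def top_n_candidates(matrix, target, n):
    """Return the n item columns most similar to the target column.

    matrix: list of rows (users), each a list of 0/1 entries (items).
    """
    num_items = len(matrix[0])
    # For every item, collect the set of users who interacted with it.
    users_of = [{u for u, row in enumerate(matrix) if row[i] == 1}
                for i in range(num_items)]
    scores = [(jaccard(users_of[target], users_of[i]), i)
              for i in range(num_items) if i != target]
    # Highest similarity first; ties broken by item index (an assumption).
    scores.sort(key=lambda pair: (-pair[0], pair[1]))
    return [i for _score, i in scores[:n]]
```

With four users and four items, where items 1 and 2 each share two users with item 0 and item 3 shares none, `top_n_candidates(matrix, 0, 2)` returns items 1 and 2.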

For explicit datasets (where users provide ratings for items), cosine similarity is used to identify other items most similar to the target item to form a candidate item set. For any two items $a$ and $b$, their cosine similarity [26] is calculated as follows:

$$\cos(a, b) = \frac{\sum_{i=1}^{n} r_{i,a}\, r_{i,b}}{\sqrt{\sum_{i=1}^{n} r_{i,a}^{2}}\, \sqrt{\sum_{i=1}^{n} r_{i,b}^{2}}} \tag{2}$$

where $r_{i,a}$ and $r_{i,b}$ represent the ratings given by user $i$ to items $a$ and $b$, respectively, and $n$ represents the total number of users who rated the items.
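As a small worked example of the cosine similarity above, the following sketch compares two rating vectors. It assumes both vectors are aligned by user (position $i$ holds user $i$’s rating for each item) and treats an all-zero vector as having similarity 0, which is a convention chosen here rather than something stated in the text.

```python
import math

def cosine_similarity(ratings_a, ratings_b):
    """Cosine similarity between two user-aligned rating vectors."""
    dot = sum(x * y for x, y in zip(ratings_a, ratings_b))
    norm_a = math.sqrt(sum(x * x for x in ratings_a))
    norm_b = math.sqrt(sum(y * y for y in ratings_b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # assumed convention for items without ratings
    return dot / (norm_a * norm_b)
```

Two items rated proportionally by the same users, such as `[1, 2, 3]` and `[2, 4, 6]`, have a similarity of approximately 1, while items rated by disjoint users have a similarity of 0.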

Additionally, if the action space only includes items similar to the target item, this may make the attack actions more conspicuous and easily recognizable by the recommendation system’s anomaly detection mechanisms. Moreover, an action space purely based on similar items might limit the agent’s exploration range, affecting learning efficiency and the ultimate effectiveness of the attack. Therefore, we opt to combine $n$ ordinary items and $n$ candidate items to form the action space. The redesign of action space A can make the behavior of fake users more akin to that of real users, reducing the risk of detection. The mix of candidate and ordinary items also enhances the diversity of the action space.
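A minimal sketch of this mixed action space is shown below. The function name and the uniform random choice of ordinary items are assumptions; the text does not specify how ordinary items are picked, nor whether the target item itself belongs to the action space (it is excluded from the ordinary pool here).

```python
import random

def build_action_space(candidates, all_items, target, n, seed=0):
    """Combine the top-n candidate items with n randomly drawn ordinary items."""
    rng = random.Random(seed)
    chosen = list(candidates[:n])
    excluded = set(chosen) | {target}
    # Ordinary items: everything that is neither a chosen candidate nor the target.
    pool = [item for item in all_items if item not in excluded]
    ordinary = rng.sample(pool, n)
    return chosen + ordinary
```

The resulting list has $2n$ distinct entries, which keeps the number of Q-values the agent must estimate far below the size of the full item catalog.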

Design of state space S: The core of the state space is the sequence of items that the agent has chosen in the steps leading up to the current step $t$. Existing data poisoning attack methods often fix the target item’s identifier at the beginning of the state space and then base subsequent actions on the agent’s learning. While this approach is straightforward, it generates a highly correlated sequence of fake user behaviors that can be easily detected and mitigated by the recommendation system’s defensive mechanisms. Practical scenarios also require the consideration of limited attack resources, as each fake user can interact with only a limited number of items. Therefore, we define state space $S$ as a sequence $S = (s_1, s_2, \ldots, s_T)$, where $s_t$ represents the sequence of items a fake user chooses to interact with at timestep $t$. The length of the sequence is determined by the maximum number of items $T$ that a fake user can manipulate.

In the DRLAttack framework, the goal is to generate $N$ independent fake user behavior sequences, each reaching the predetermined maximum length of $T$. Therefore, the process is set up to proceed in parallel, where each fake user independently selects actions from action space $A$ without interfering with others, until their respective sequences are complete. For each fake user and at each timestep $t$, the update rule for the behavior sequence is $s_{t+1} = s_t \cup \{a_t\}$ with $a_t \in A$, continuing until $t = T$. This design facilitates the parallel generation of multiple independent fake user behavior sequences under limited attack resources, enhancing the stealth and efficiency of the attack.
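The sequence-building loop described above can be illustrated with a short sketch. The policy here is a random stand-in for the learned agent, and the rule that a fake user does not pick the same item twice is an assumption; all names are hypothetical.

```python
import random

def make_random_policy(seed=0):
    """Placeholder policy: pick a not-yet-used item at random (stands in for the DQN agent)."""
    rng = random.Random(seed)
    def policy(state, action_space):
        unused = [a for a in action_space if a not in state]
        return rng.choice(unused or list(action_space))
    return policy

def generate_sequences(action_space, num_fake_users, max_len, choose_action):
    """Grow N behavior sequences step by step until each holds T items."""
    sequences = [[] for _ in range(num_fake_users)]
    for _t in range(max_len):
        for seq in sequences:
            # The state is the sequence so far; the chosen action is appended to it.
            seq.append(choose_action(tuple(seq), action_space))
    return sequences
```

Each sequence depends only on its own state, so the inner loop could equally be run in parallel across fake users.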

Design of conditional transition probability: The transition probability $P(s_{t+1} \mid s_t, a_t)$ defines the likelihood that, following an action $a_t$ taken by the attacker at timestep $t$, the state transitions from the current state $s_t$ to the next state $s_{t+1}$.

Design of reward function R: In reinforcement learning, the reward provided by the environment offers immediate feedback to the agent. This paper focuses on push attacks in a targeted attack scenario, where the attacker aims to recommend the target item to as many users as possible. DRLAttack employs a rank-based reward function, where a positive reward is calculated whenever the target item $i^*$ appears in the top-$k$ recommendation list of a user $u \in U^*$. Here, $U$ represents the set of all users, and $U^* \subseteq U$ is a subset of target users selected by the attacker for calculating the reward. Because recommendation systems generally do not recommend items a user has already interacted with, $U^*$ essentially represents the subset of users who have not interacted with the target item. The hit rate (HR@K) is used as the ranking metric in the reward function for a given state $s_t$ and action $a_t$. After all $N$ fake user behavior sequences are generated and injected into the target recommendation system to obtain the recommendation lists for the target users, the reward value is calculated as the sum of all HR@K values for the user set $U^*$ as follows:

$$R(s, a) = \sum_{u \in U^*} \mathrm{HR}(u, i^*, k) \tag{3}$$

where $s$ and $a$, respectively, represent the current state and the action taken in that state. The calculation of the reward is not directly related to the specific action but is based on the overall effect of the attack. $\mathrm{HR}(u, i^*, k)$ returns the hit rate of the target item $i^*$ in the top-$k$ recommendation list of the target user $u$. The reward value is the sum of the hit rates for all target users in $U^*$.

When an attacker aims to promote a group of target items to as many users as possible, the design of the reward function needs to consider the overall recommendation success of all target items. For a target item set $I^*$, it can be defined as the sum of successful recommendations for each target item across the set of target users $U^*$ as follows:

$$R(s, a) = \sum_{i \in I^*} \sum_{u \in U^*} \mathrm{HR}(u, i, k) \tag{4}$$

By calculating the hit rate for each target item in the recommendation list and summing these rates, the agent is encouraged to recommend the items from the target item set to as many users as possible. Such a reward mechanism makes the attack strategy more explicit and goal-oriented.
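The hit-rate reward can be sketched as a simple count over recommendation lists. The function names and data layout are assumptions: recommendation lists are given as ranked item lists per user, and users who already interacted with a target item are skipped, mirroring the restriction to target users described above.

```python
def hit(ranked_items, item, k):
    """Return 1 if the item appears in the top-k of the ranked list, else 0."""
    return 1 if item in ranked_items[:k] else 0

def reward(rec_lists, target_items, interacted, k):
    """Sum of hits over all target items and all eligible users.

    rec_lists: {user: ranked list of recommended items}
    interacted: {user: set of items the user already interacted with}
    """
    total = 0
    for item in target_items:
        for user, ranked in rec_lists.items():
            if item in interacted.get(user, set()):
                continue  # this user is not a target user for this item
            total += hit(ranked, item, k)
    return total
```

With a single target item the function corresponds to the single-item reward; passing several target items gives the group reward.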

4.2. Optimization Process of Attack Strategies

In the previous section, the Markov Decision Process (MDP) was used to simulate the interaction between attackers and the recommendation system. To address the challenges posed by high-dimensional state and action spaces, we utilize a Deep Q-Network (DQN) in the DRLAttack framework, enhanced with a Dueling Network architecture to further optimize network performance. A Dueling Network is a neural network design that decomposes the Q-function into two separate components [27]: the value function and the advantage function . The purpose of this design is to better learn the relative advantage of choosing each action in a given state, while maintaining an overall estimate of the state value. In the Dueling Network, the calculation of the Q-function is accomplished by combining the value function and the advantage function as follows:

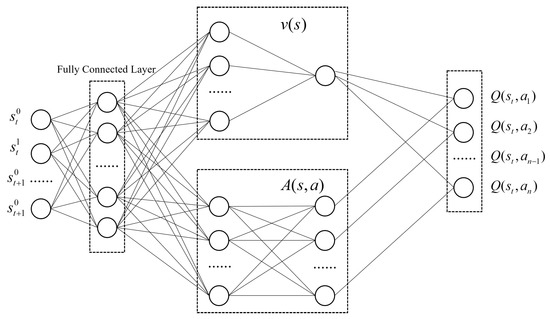

where represents the average of the advantage functions across all actions, which ensures that the contribution of the advantage function to the Q-value is zero when summed over all possible actions. This arrangement allows the value function to remain independent of the chosen action, while the advantage function represents the additional value relative to the average action. This separation facilitates a more focused learning of state values and the specific advantages of each action, improving the efficiency and effectiveness of policy optimization in complex environments. In the DRLAttack framework, the specific details of the network implementation include the input layer, hidden layers, and output layer, as shown in Figure 1. Input Layer: The input to the network is the representation of state s, which in the context of recommendation systems is the sequence of behaviors of fake users. Hidden Layers: These consist of multiple fully connected layers (DNN) designed to extract deep features from the state. Output Layer: The value function and advantage function are combined according to Equation (5) to generate Q-value estimates for each action.
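The aggregation step of the output layer can be illustrated without any neural network code. In this sketch the state value and the per-action advantages are plain numbers standing in for the outputs of the two network heads; the function name is hypothetical.

```python
def dueling_q_values(state_value, advantages):
    """Combine V(s) and A(s, a) into Q(s, a) by subtracting the mean advantage."""
    mean_advantage = sum(advantages) / len(advantages)
    return [state_value + (adv - mean_advantage) for adv in advantages]
```

For instance, `dueling_q_values(2.0, [1.0, 2.0, 3.0])` gives Q-values whose mean equals the state value 2.0, and adding the same constant to every advantage leaves the Q-values unchanged, which is the identifiability property the mean subtraction is meant to provide.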

Figure 1.

Network architecture of DRLAttack.

During each iteration of the training phase, there are two main stages: the replay memory generation stage and the parameter update stage. In the replay memory generation stage, the agent selects actions from a mixed item set based on the current Q-network and an $\epsilon$-greedy strategy. After executing action $a_t$, the environment returns a new state $s_{t+1}$ and reward $r_t$. In the parameter update stage, the agent randomly samples an experience tuple $(s_t, a_t, r_t, s_{t+1})$ from the experience buffer, and then updates the parameters $\theta$ by minimizing the loss defined as follows:

$$L(\theta) = \mathbb{E}\left[ \left( r_t + \gamma \max_{a'} Q'(s_{t+1}, a'; \theta^{-}) - Q(s_t, a_t; \theta) \right)^{2} \right] \tag{6}$$

where $Q'$ is the output of the target network, used only for calculating the target value, and $Q$ is the output of the current network, used to generate new actions. These two networks have the same architecture but different parameters ($\theta^{-}$ and $\theta$, respectively). Every fixed number of timesteps, the parameters of $Q$ and $Q'$ are synchronized.
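The target computation and periodic synchronization can be sketched with plain Python values in place of network outputs. The `done` flag (no bootstrapping once a sequence is complete) and the dictionary representation of parameters are assumptions added for illustration; all names are hypothetical.

```python
def td_target(reward, next_q_target, gamma, done=False):
    """Target value: r + gamma * max_a' Q'(s', a'), or just r at a terminal step."""
    if done:
        return reward
    return reward + gamma * max(next_q_target)

def td_loss(q_online, action, reward, next_q_target, gamma=0.99, done=False):
    """Squared TD error for one transition; returns (loss, td_error)."""
    error = td_target(reward, next_q_target, gamma, done) - q_online[action]
    return error ** 2, error

def sync_if_due(step, sync_every, online_params, target_params):
    """Copy the current network's parameters into the target network every sync_every steps."""
    if step % sync_every == 0:
        target_params.clear()
        target_params.update(online_params)
        return True
    return False
```

As a worked example, with current Q-values `[0.5, 1.0]`, action 1, reward 1.0, target-network Q-values `[2.0, 4.0]` for the next state, and a discount factor of 0.5, the target is 3.0, the TD error is 2.0, and the loss is 4.0.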

Additionally, we introduced the Prioritized Experience Replay (PER) [28] mechanism into the DRLAttack framework. In PER, each experience tuple $(s_t, a_t, r_t, s_{t+1})$ is assigned a priority that reflects the importance of learning from that experience. Given that the data poisoning attack on recommendation systems aims to maximize the recommendation of target items to as many users as possible, the priority $p$ is set based on the reward value $r$ obtained from each state sequence as follows:

$$p = |\delta| + \xi \tag{7}$$

where $\delta$ is the TD error calculated based on the reward value $r$, and $\xi$ is a small constant used to ensure that every experience has at least a minimal chance of being selected. When using PER, the sampled experiences are assigned importance sampling weights (ISWs) [29] to counteract biases from non-uniform sampling as follows:

$$w_i = \left( \frac{1}{N \cdot P(i)} \right)^{\beta} \tag{8}$$

where $N$ is the total number of experiences in the replay memory, ensuring proper normalization of weights; $P(i)$ is the probability of experience tuple $i$ being drawn; and $\beta$ is a hyper-parameter that gradually approaches 1 as training progresses. The updated loss function for the current network $Q$ and target network $Q'$, incorporating importance sampling weights $w_i$, is as follows:

$$L(\theta) = \mathbb{E}\left[ w_i \left( r_t + \gamma \max_{a'} Q'(s_{t+1}, a'; \theta^{-}) - Q(s_t, a_t; \theta) \right)^{2} \right] \tag{9}$$

In the aforementioned update rule, the loss is multiplied by the corresponding importance sampling weight to adjust the impact of each experience update step, offsetting the uneven distribution of updates caused by prioritized sampling. The early stages of training might require more exploration, while the later stages focus more on exploiting the knowledge that has already been learned. Thus, adjusting the importance sampling weights can help balance these two aspects.
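The priority, sampling probability, and importance sampling weight can be worked through numerically. This sketch makes two assumptions not stated in the text: sampling probabilities are directly proportional to priorities, and the weights are not further rescaled (some implementations additionally divide by the largest weight). Function names are hypothetical.

```python
def priorities(td_errors, small_constant=1e-3):
    """Priority = |TD error| + a small constant so no experience has zero chance."""
    return [abs(delta) + small_constant for delta in td_errors]

def sampling_probabilities(prios):
    """Probability of drawing each experience, proportional to its priority (assumed)."""
    total = sum(prios)
    return [p / total for p in prios]

def importance_weights(probs, beta):
    """Importance sampling weights: (1 / (N * P(i))) ** beta."""
    n = len(probs)
    return [(1.0 / (n * p)) ** beta for p in probs]

def weighted_loss(td_errors, weights):
    """Mean of the importance-weighted squared TD errors."""
    return sum(w * delta ** 2 for w, delta in zip(weights, td_errors)) / len(td_errors)
```

With TD errors of 1 and -3 and a zero constant, the priorities are 1 and 3, the sampling probabilities are 0.25 and 0.75, and for $\beta = 1$ the weights are 2 and 2/3, so the frequently sampled experience is down-weighted. With $\beta = 0$ all weights equal 1 and no correction is applied.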

4.3. Autonomous Process of Generating False Data

To facilitate autonomous experiments for data poisoning attacks, we designed an autonomous process within the DRLAttack framework that fully utilizes the datasets and recommendation algorithms provided by the RecBole library. This process does not require downloading or preprocessing training datasets, nor does it necessitate adjustments to the structure or parameters of the recommendation algorithms. The RecBole library uses a unified atomic file format [30], where each atomic file can be seen as a table with $m$ rows and $n$ columns (excluding the header), meaning that the file stores $n$ different types of features, with a total of $m$ interaction records. The first line of the file serves as the header, with each column in the header formatted as feat_name:feat_type, indicating the name and type of the feature. Features are categorized into one of four types (single discrete feature, discrete feature sequence, single continuous feature, continuous feature sequence), all of which can be conveniently converted into tensors organized by batch [31]. Taking the ml-100k dataset as an example, its .inter file contains four basic attributes: user ID, item ID, rating value, and timestamp. These attributes are very common in real-world recommendation scenarios and serve as the standardized data foundation for the attack experiments in this study.
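To make the atomic file layout concrete, the sketch below writes fake interaction records in a tab-separated form with a name:type header. The column names and types (`user_id:token`, `item_id:token`, `rating:float`, `timestamp:float`) are assumed to match the ml-100k interaction file distributed with RecBole and should be checked against the actual dataset; the helper names and the sample record are hypothetical.

```python
INTER_HEADER = ["user_id:token", "item_id:token", "rating:float", "timestamp:float"]

def to_inter_text(records):
    """Serialize (user_id, item_id, rating, timestamp) tuples as tab-separated atomic-file text."""
    lines = ["\t".join(INTER_HEADER)]
    for user_id, item_id, rating, timestamp in records:
        lines.append(f"{user_id}\t{item_id}\t{rating}\t{timestamp}")
    return "\n".join(lines) + "\n"

def parse_header(header_line):
    """Split a header line into (feature name, feature type) pairs."""
    return [tuple(column.split(":")) for column in header_line.split("\t")]
```

Rows produced this way (without the header) could be appended to a copy of the original interaction file so that the poisoned training set keeps the same format as the clean one.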

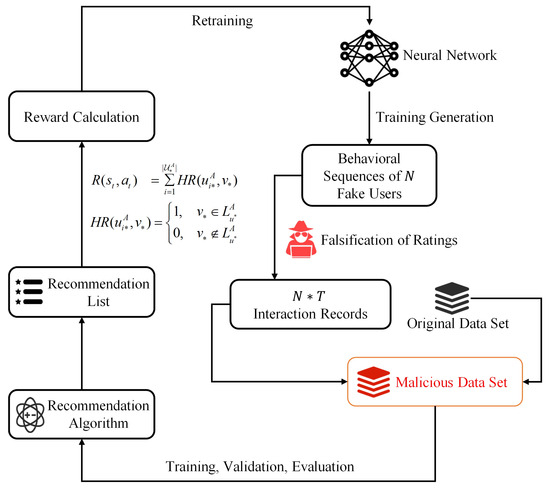

Based on the original training data and recommendation algorithms provided by the RecBole library, the design of the DRLAttack autonomous attack process is illustrated in Figure 2. The steps shown in the figure form a cycle unit and constitute an autonomous experimental workflow. The agent repeatedly carries out the generation of behavior sequences, integration of datasets, and verification of recommendation effects, until the strategy converges or the predetermined number of iterations is reached. The autonomous attack process makes use of the RecBole library’s unified data format, ensuring the reproducibility and scalability of the experiments.

Figure 2.

DRLAttack’s autonomous attack process.
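The cycle of generation, integration, and verification can be sketched as a simple loop. This is only a skeleton under stated assumptions: the three callables are hypothetical stand-ins for the agent, the dataset merge, and the retrain-and-evaluate step, and the stopping rule (reward change below a tolerance) is one possible reading of "until the strategy converges".

```python
def run_attack_cycle(generate_sequences, inject, evaluate, max_iterations, tol=1e-4):
    """Repeat generate -> integrate -> verify until the reward stops changing."""
    history = []
    for iteration in range(max_iterations):
        fake_interactions = generate_sequences(iteration)  # agent proposes behavior sequences
        poisoned = inject(fake_interactions)               # merge them into the training data
        reward = evaluate(poisoned)                        # retrain and measure the target item
        history.append(reward)
        # Assumed convergence test: two consecutive rewards are (almost) equal.
        if len(history) >= 2 and abs(history[-1] - history[-2]) < tol:
            break
    return history


# Toy stand-ins so the loop can be executed; the reward values are invented.
clean = [(1, 10), (2, 20)]
rewards = iter([0.10, 0.20, 0.20, 0.30])
history = run_attack_cycle(
    generate_sequences=lambda it: [(900 + it, 10)],
    inject=lambda fake: clean + fake,
    evaluate=lambda data: next(rewards),
    max_iterations=10,
)
print(history)
```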

5. DRLAttack in the Black-Box Setting

In the white-box setting, the DRLAttack algorithm can directly obtain feedback from the recommendation system regarding the injected ratings and the effectiveness of the attack. This capability facilitates the optimization of the DRLAttack algorithm. However, in real-world attack scenarios, recommendation systems typically do not provide any feedback to the attacker, making it difficult for the DRLAttack algorithm to assess the effectiveness of the attack. Therefore, we introduce the black-box setting.

In the black-box setting, the internal mechanisms of the recommendation system and the user recommendation lists are invisible to the attacker. To overcome this challenge, we propose the “spy user” mechanism. By creating and controlling a group of spy users that mimic the behavior of real users, the attacker can indirectly obtain state feedback information from the recommendation system without arousing suspicion, thereby evaluating the effectiveness of the attack.

Despite the transition from the white-box to black-box setting, the overall framework and process of the DRLAttack algorithm remain unchanged, as shown in Figure 2. The “spy user” mechanism not only enhances the stealthiness of the attack but also improves the adaptability and effectiveness of the DRLAttack algorithm.

5.1. Construction of Spy Users

In Section 3, we proposed that users can be divided into two categories by analyzing the training dataset: active users and inactive users. This classification is based on the frequency of interactions with the recommendation system and rating behaviors. By creating spy users, the attacking agent simulates the interaction behaviors of both types of real users. This allows the collection of feedback on the recommendation lists provided to these spy users, thereby guiding the agent to improve attack strategies and achieve the objective of promoting target items.

Active users are those who tend to frequently interact with the recommendation system and give positive ratings to recommended items, such as people who are particularly fond of watching movies, shopping, or listening to music in real life. Given that the rating system is a 5-point scale, we select the median rating (3 points) as the threshold to identify active users. Here, a rating of 1 indicates the lowest level of activity, while a rating of 5 indicates the highest level of activity. Active users are defined as those with an average rating value of 3 or higher:

$$\frac{1}{|I_u|} \sum_{i \in I_u} r_{u,i} \geq 3$$

where $I_u$ represents the set of items that user u has interacted with, and $r_{u,i}$ is the rating value that user u gives to an item i.
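As a minimal sketch of this rule, the snippet below groups ratings by user and applies the mean-rating threshold of 3. The function name and the toy ratings are hypothetical and serve only to illustrate the classification.

```python
from collections import defaultdict


def split_by_mean_rating(ratings, threshold=3.0):
    """ratings: iterable of (user, item, rating). Returns (active, inactive) user sets."""
    per_user = defaultdict(list)
    for user, _item, rating in ratings:
        per_user[user].append(rating)
    # A user is active when the mean of their ratings reaches the threshold.
    active = {u for u, rs in per_user.items() if sum(rs) / len(rs) >= threshold}
    inactive = set(per_user) - active
    return active, inactive


ratings = [
    ("u1", "i1", 5), ("u1", "i2", 4),  # mean 4.5 -> active
    ("u2", "i1", 2), ("u2", "i3", 3),  # mean 2.5 -> inactive
    ("u3", "i2", 3),                   # mean 3.0 -> active (threshold is inclusive)
]
active, inactive = split_by_mean_rating(ratings)
print(sorted(active), sorted(inactive))
```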

For implicit datasets, where the interaction between users and items cannot be measured by specific rating values, user activity is distinguished by counting the number of items each user has interacted with. To start, calculate the average number of items each user interacts with, represented as $\bar{n}$, as follows:

$$\bar{n} = \frac{1}{U} \sum_{i=1}^{U} n_i$$

where U represents the total number of users, and $n_i$ is the number of items that user i has interacted with. Based on $\bar{n}$, whether user i is considered an active user can be determined by the following logical expression:

$$\mathrm{active}(i) = \begin{cases} 1, & n_i \geq \bar{n} \\ 0, & \text{otherwise} \end{cases}$$

As this paper focuses on a data poisoning attack targeting specific items, users who have already interacted with the target item are not considered. Therefore, we first compile the set of users who have not interacted with the target item. From this set, we then identify the subsets of active users and inactive users. Through our design, each spy user’s interaction records not only simulate the behavior patterns of real users but also incorporate a small number of randomly selected items to enhance the diversity and randomness of their actions, thereby reducing the risk of detection and removal. This approach maintains the representativeness of spy users while enhancing their stealth, providing reliable reward feedback signals for subsequent attack strategies.
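The construction for implicit data can be sketched as follows. This is an illustrative sketch, not the exact procedure of DRLAttack: the function name, the choice of copying a real user's item set as a template, the inclusive comparison with the mean, and the n_noise parameter are all assumptions.

```python
import random
from collections import defaultdict


def build_spy_profiles(interactions, target_item, n_active, n_inactive, n_noise=2, seed=0):
    """interactions: iterable of (user, item). Returns (active, inactive, profiles)."""
    rng = random.Random(seed)
    items_of = defaultdict(set)
    for user, item in interactions:
        items_of[user].add(item)
    all_items = sorted({item for _u, item in interactions})
    # Average number of items per user, taken over all users.
    mean_count = sum(len(s) for s in items_of.values()) / len(items_of)

    # Only users who have NOT interacted with the target item are candidates.
    candidates = sorted(u for u, s in items_of.items() if target_item not in s)
    active = [u for u in candidates if len(items_of[u]) >= mean_count]
    inactive = [u for u in candidates if len(items_of[u]) < mean_count]

    profiles = []
    for pool, n in ((active, n_active), (inactive, n_inactive)):
        for template in rng.sample(pool, min(n, len(pool))):
            # Random extra items add diversity; the target item is never added.
            unseen = [i for i in all_items
                      if i not in items_of[template] and i != target_item]
            noise = rng.sample(unseen, min(n_noise, len(unseen)))
            profiles.append(sorted(items_of[template] | set(noise)))
    return active, inactive, profiles


interactions = [
    ("u1", "a"), ("u1", "b"), ("u1", "c"), ("u1", "d"),
    ("u2", "a"),
    ("u3", "b"), ("u3", "c"), ("u3", "t"),
    ("u4", "a"), ("u4", "b"), ("u4", "c"),
    ("u5", "d"),
]
active, inactive, profiles = build_spy_profiles(interactions, "t", n_active=1, n_inactive=1)
print(active, inactive, profiles)
```

In the toy data the mean is 2.4 items per user, so u1 and u4 are active candidates, u2 and u5 are inactive candidates, and u3 is excluded because it already interacted with the target item.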

5.2. Improvement of Reward Function

In the white-box setting, the design of the reward function primarily depends on the hit rate (HR@K) of the target item in the recommendation lists of target users, because attackers can directly access the output of the recommendation system and accurately assess the promotion effect of the target item. However, when transitioning to a black-box setting, attackers face the limitation of not being able to directly access all users’ recommendation lists, and can only inject a small number of spy users to obtain recommendation lists, which significantly reduces the applicability of the original reward function design. To address this, we propose an improved reward function design that combines the positional information of the target item in the recommendation list with the hit rate, expanding the range of reward values and thereby enhancing the learning efficiency of the agent and the optimization effect of the attack strategy. Considering the significant impact of the target item’s position in the recommendation lists of spy users on the effectiveness of the attack, the improved reward function is as follows:

Here, the hit rate is that of the target item in the recommendation lists of the spy users, k is the length of the recommendation list, and the position term is the rank of the target item in the recommendation list of the i-th spy user. If the target item is not in the list, its position is treated as lying outside the list. The weighting factor is the reciprocal of the position. The specific solution process can be seen in Algorithm 1.

Algorithm 1. Solving for Position Information
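One way to combine the hit rate with reciprocal-position weights is sketched below. The exact combination is an assumption made for illustration: here the reward is the hit rate plus the mean reciprocal position over spy users, and an absent target item is given position k + 1. The function names are hypothetical.

```python
def target_position(rec_list, target_item):
    """1-based position of the target item, or len(rec_list) + 1 if absent (assumed convention)."""
    for pos, item in enumerate(rec_list, start=1):
        if item == target_item:
            return pos
    return len(rec_list) + 1


def position_aware_reward(spy_rec_lists, target_item):
    """Assumed form: hit rate plus the mean reciprocal position over spy users."""
    n = len(spy_rec_lists)
    positions = [target_position(recs, target_item) for recs in spy_rec_lists]
    hits = sum(1 for pos, recs in zip(positions, spy_rec_lists) if pos <= len(recs))
    hit_rate = hits / n
    mean_weight = sum(1.0 / pos for pos in positions) / n
    return hit_rate + mean_weight


spy_lists = [["t", "a", "b"], ["a", "t", "b"], ["a", "b", "c"]]
positions = [target_position(recs, "t") for recs in spy_lists]
reward = position_aware_reward(spy_lists, "t")
print(positions, reward)
```

Compared with the hit rate alone, which only takes values in steps of 1/N for N spy users, the position term distinguishes between a target item ranked first and one ranked last, which widens the range of reward values available to the agent.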

5.3. Introduction of LSTM Network

After creating spy users and redesigning the reward function, we recognize that in the data poisoning attack scenario, attackers need to dynamically adjust their strategies based on feedback from the recommendation system, as both user behavior and system feedback are continuously changing. Traditional deep neural networks, while excellent at processing static data, have significant limitations in capturing long-term dependencies in time series data. Therefore, we incorporated Long Short-Term Memory (LSTM) networks into the DRLAttack framework under the black-box setting. LSTM [32] can handle sequences of user–item interactions and predict the next best item to interact with. Because LSTM takes into account past interaction sequences and can capture the dynamic changes in user behavior, it provides a more dynamic and flexible learning mechanism for the agent's data poisoning attack strategy in the black-box setting.

Specifically, at each timestep t, the state—namely, the sequence of items that the user has chosen to interact with up to that point—is fed into the LSTM network. The network then outputs the next optimal action based on the current sequence state—deciding which item ID to interact with next. The network structure and decision process primarily include the following steps:

State representation: At timestep t, the state $s_t$ is represented as a sequence of items that the user has interacted with. This sequence is transformed into a vector through an embedding layer to be fed into the LSTM layer.

Defining the LSTM network: The LSTM layer receives an embedding of the user and item sequences along with the previous cell state and outputs the current timestep’s hidden state and cell state as follows:

$$(h_t, c_t) = \mathrm{LSTM}\left(x_u, x_{i_1}, \ldots, x_{i_{t-1}}, c_{t-1}\right)$$

where $h_t$ is the LSTM hidden state at timestep t, which integrates the user’s features with the features of all items interacted with at past timesteps. $c_t$ is the LSTM cell state at timestep t, acting as an internal memory mechanism that stores and transfers critical information across timesteps. $x_u$ is the representation of the user’s features. $x_{i_1}, \ldots, x_{i_{t-1}}$ are the feature representations of the items interacted with prior to timestep t. The size of all hidden layers is set to match the size of the feature representations $x_i$. This network design allows the LSTM to retain historical information and use it to predict future actions.

Action selection: After establishing the LSTM network, the policy network samples the next action, which is the next item ID to interact with, based on the hidden state $h_t$. This follows a multinomial distribution over the action space A [33], meaning each possible action has a probability associated with it. These probabilities are calculated based on $h_t$ as follows:

$$\pi_\theta(a \mid s_t) = \frac{\exp\left(f_\theta(h_t) \cdot x_a\right)}{\sum_{a' \in A} \exp\left(f_\theta(h_t) \cdot x_{a'}\right)}$$

where $f_\theta$ is a two-layer deep neural network with ReLU activation functions, which helps capture complex nonlinear relationships and enhances the model’s representational power. The size of the DNN layers is set to match the dimension of the item feature representation $x_a$, ensuring that the network’s output can be directly compared and computed with the item features. $\theta$ represents the network parameters, and $\cdot$ denotes the dot product operation between two vectors. The entire process can be interpreted as follows: $f_\theta$ generates a score for each possible action, and the dot product operation adjusts these scores, outputting the value of each potential action to reflect the advantage of specific actions.

Policy decision: The agent employs an $\epsilon$-greedy strategy to select the next action from the action space $A$ at the current timestep, which is the next item ID to interact with.
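The action selection and policy decision steps above can be sketched without a deep learning framework. This is a simplified illustration under stated assumptions: policy_vector stands in for the output of the policy DNN applied to the LSTM hidden state, the probabilities are taken as a softmax over dot products with the item features, and the toy vectors are invented.

```python
import math
import random


def action_probabilities(policy_vector, item_features):
    """Softmax over dot products between the policy output and each item's features."""
    scores = [sum(p * x for p, x in zip(policy_vector, feats)) for feats in item_features]
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def epsilon_greedy(probabilities, epsilon, rng):
    """Explore uniformly with probability epsilon, otherwise take the most probable action."""
    if rng.random() < epsilon:
        return rng.randrange(len(probabilities))
    return max(range(len(probabilities)), key=probabilities.__getitem__)


policy_vector = [1.0, 0.0]                              # stand-in for the DNN output
item_features = [[0.2, 0.9], [1.5, 0.1], [0.7, 0.7]]    # one feature vector per item ID
probs = action_probabilities(policy_vector, item_features)
greedy_action = epsilon_greedy(probs, epsilon=0.0, rng=random.Random(0))
print(probs, greedy_action)
```

With epsilon set to 0 the agent always exploits, so the item with the highest dot-product score (index 1 in the toy data) is chosen; larger epsilon values trade this for uniform exploration.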

User behavior in recommendation systems exhibits strong temporal dependencies, and the LSTM network is specifically designed to handle such dependencies. It can capture and utilize this dependency to make better predictions. Moreover, since the LSTM retains historical information, it can consider the items a user has interacted with in the past when making sequential decisions, thus better understanding the user’s long-term preferences and behavior patterns. Additionally, user–item interactions in recommendation systems can be highly complex, and the use of LSTM allows the model to capture these intricate interaction patterns and consider them in the decision-making process.

6. Experiment

6.1. Experimental Setup

6.1.1. Dataset

To validate the effectiveness of the DRLAttack framework, we utilized three widely recognized real-world recommendation system datasets, including two movie datasets and one music dataset: MovieLens-100K, MovieLens-1M, and Last.fm. These datasets represent the two common types of data in recommendation systems—explicit and implicit feedback, as shown in Table 2. Here is an introduction to these datasets:

Table 2.

Data poisoning attack experimental datasets.

The MovieLens-100K dataset serves as a classic case study for explicit rating feedback in recommendation system research. It contains 100,000 rating records from 943 users on 1682 movies, with ratings ranging from 1 to 5. This dataset ensures that each user has provided at least 20 ratings, giving the data a certain level of completeness. The MovieLens-1M dataset offers a richer rating environment, containing over one million ratings from 6040 users on 3706 movies.

Unlike the movie rating datasets, the Last.fm dataset provides an implicit feedback scenario about music preferences. It includes 92,834 tag assignment records from 1892 users for 17,632 artists. These tags indicate the interactions between users and music artists, and although they cannot be directly quantified as ratings, they remain an important indicator of user preferences, particularly suitable for experiments on the impact of tagging behavior on recommendation outcomes. We use this dataset to study the effects of poisoning attacks with non-rating behavior data.

6.1.2. Baseline Attack Method

We compared DRLAttack with various representative heuristic-based attack strategies, where each attacker injects N fake users into the target recommendation system. Different attacks utilize different strategies to select items for the fake users to interact with:

Random attack [34]: The attacker creates a unique set of items for each fake user by mixing the target item with a series of randomly picked other items. During the attack, each fake user randomly selects an item from their assigned set for interaction, ensuring each selection is non-repetitive. The key to this method lies in its randomness, intended to simulate the unpredictability of ordinary user behavior.

Random–similarity attack: This strategy combines the precision of similarity measurements with the unpredictability of random selection. The attack execution comprises three main steps: Initially, a set of items highly similar to the target item is identified from the original dataset using the Jaccard coefficient for implicit datasets or the cosine similarity method for explicit datasets. Subsequently, these similar items are combined with randomly selected ordinary items to form the final attack item set. Lastly, items are randomly chosen from this set for each fake user to interact with, thereby effectively simulating real user behavior patterns.

Popular attack [35]: The attacker focuses on creating false associations between the target item and currently popular items. The popularity of an item in explicit datasets is generally represented by its average rating, and in implicit datasets by its interaction frequency. The n popular items are then combined with other ordinary items to form the attack item set, with items being randomly selected for interaction.
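As an illustration of how such item sets can be assembled, the sketch below builds the item set for the random–similarity attack on implicit data using the Jaccard coefficient. The function names, the inclusion of the target item in the set, and the toy data are assumptions made for this example.

```python
import random


def jaccard(a, b):
    """Jaccard coefficient between two sets of user IDs."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def random_similarity_item_set(users_of, target_item, n_similar, n_random, seed=0):
    """users_of maps item -> set of users who interacted with it."""
    rng = random.Random(seed)
    others = [i for i in users_of if i != target_item]
    # Rank the other items by similarity to the target (ties broken by item ID).
    ranked = sorted(others, key=lambda i: (-jaccard(users_of[i], users_of[target_item]), i))
    similar = ranked[:n_similar]
    remaining = [i for i in others if i not in similar]
    filler = rng.sample(remaining, min(n_random, len(remaining)))
    return [target_item] + similar + filler


users_of = {
    "t": {1, 2, 3},
    "a": {1, 2},        # Jaccard with t: 2/3
    "b": {3, 4},        # 1/4
    "c": {5},           # 0
    "d": {1, 2, 3, 4},  # 3/4
}
item_set = random_similarity_item_set(users_of, "t", n_similar=2, n_random=1)
print(item_set)
```

Replacing the similarity ranking with a popularity ranking (interaction frequency, or average rating for explicit data) gives the corresponding sketch for the popular attack, and dropping the ranking altogether gives the random attack.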

In the black-box setting, the reward function and the network structure of the DQN algorithm in DRLAttack are adjusted. Therefore, in addition to the three baseline attack methods, we added two ablation variants as comparison methods for the DRLAttack strategy in the black-box setting:

DRLAttack-White: The reward function and the network structure of the DQN algorithm remain as they were designed for the white-box setting in Section 4. The reward function does not integrate information about the position of the target item in the recommendation list and does not incorporate LSTM to handle the sequence of interactions by the fake users.

DRLAttack-DNN: Based on the DRLAttack method in the white-box setting, this version uses the improved reward function from Section 5.2, which combines the hit rate of the target item and its position information in the recommendation list. The DQN algorithm employs a deep neural network (DNN), rather than an LSTM, as its network structure.

6.1.3. Targeted Recommendation Algorithm

In our experimental research on data poisoning attacks, we selected three types of collaborative filtering recommendation algorithms from the RecBole library. The core idea of these algorithms is to utilize the interaction data between users and items to make recommendations. However, each employs different technologies and model structures to enhance the accuracy and efficiency of recommendations:

Bayesian Personalized Ranking (BPR) [36]: BPR optimizes pairwise ranking loss to improve recommendation accuracy by focusing on the relative order of user preferences rather than absolute ratings. Leveraging a Bayesian framework to model user–item interactions, it is particularly effective in handling implicit feedback data, emphasizing the relative preferences between items.

Deep Matrix Factorization (DMF) [37]: This approach applies deep learning techniques to traditional matrix factorization methods, using deep neural networks to capture the complex nonlinear relationships between users and items.

Neural Matrix Factorization (NeuMF) [38]: NeuMF is a neural network-based recommendation algorithm that combines matrix factorization with a multi-layer perceptron (MLP). It integrates linear matrix factorization and nonlinear MLP paths in parallel to learn complex user–item interaction patterns, enhancing recommendation accuracy.

6.1.4. Evaluation Metric

We primarily use the hit rate (HR@K) of the target item and the Normalized Discounted Cumulative Gain (NDCG@K) to assess the effectiveness of the poisoning attack in promoting the target item. For each target item, based on the original training dataset, we identify all users who have not interacted with the item and divide them into two subsets. The target user set is randomly selected from the users who have never rated the item; these users are used to simulate interactions under the attack strategy, allowing for the calculation of reward values and optimization of the attack strategy. The evaluation user set, consisting of the remaining users, is used as the basis for calculating evaluation metrics to measure the actual impact of the poisoning attack on the recommendation system.

HR@K: Assume that each user’s recommendation list contains k items. HR@K is calculated as the proportion of evaluation users whose top-k recommendation lists include the target item, derived using Equation (3).

NDCG@K: Considering the ranking positions of items in the recommendation lists, by calculating NDCG, we can assess the effectiveness of the poisoning attack strategy in improving the ranking quality of the target item.

HR@K and NDCG@K [39,40,41] are commonly used evaluation metrics in recommendation systems, which are used to measure the coverage and ranking quality of recommendation systems, respectively.
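For a single target item, both metrics reduce to short computations. The sketch below uses the standard definitions, which may differ in detail from the exact formulation of Equation (3); with one relevant item the ideal DCG equals 1, so NDCG is the reciprocal log-discount of the target's rank. Function names and toy lists are hypothetical.

```python
import math


def hit_rate_at_k(rec_lists, target_item, k):
    """Fraction of evaluation users whose top-k list contains the target item."""
    hits = sum(1 for recs in rec_lists if target_item in recs[:k])
    return hits / len(rec_lists)


def ndcg_at_k(rec_lists, target_item, k):
    """Mean of 1 / log2(rank + 1) over users; users without a hit contribute 0."""
    total = 0.0
    for recs in rec_lists:
        top = recs[:k]
        if target_item in top:
            rank = top.index(target_item) + 1
            total += 1.0 / math.log2(rank + 1)
    return total / len(rec_lists)


rec_lists = [
    ["t", "a", "b"],  # rank 1
    ["a", "b", "t"],  # rank 3
    ["a", "b", "c"],  # miss
    ["a", "t", "c"],  # rank 2
]
hr = hit_rate_at_k(rec_lists, "t", k=3)
ndcg = ndcg_at_k(rec_lists, "t", k=3)
print(hr, ndcg)
```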

6.1.5. Experimental Environment and Parameters

Here is a detailed description of the experimental setup used to conduct data poisoning attack experiments, which includes both hardware and software environments:

- Hardware environment
  - GPU: NVIDIA GeForce RTX 4090 (NVIDIA, Santa Clara, CA, USA), utilized for its robust processing capabilities in handling complex computations required for deep learning.
  - CPU: Intel(R) Xeon(R) Silver 4210R CPU @ 2.40GHz (Intel, Santa Clara, CA, USA), capable of efficiently managing multiple tasks.
  - Memory: 128 GB of RAM, to ensure smooth operation of large datasets and extensive computational processes without bottlenecks.

- Software environment
  - Operating System: Ubuntu 20.04 LTS, chosen for its stability and compatibility with various development tools and libraries.
  - Development Environment: Set up in a virtual environment on Anaconda, which helps manage package dependencies easily.
  - Deep Learning Framework: PyTorch (version 1.12.0), chosen for its efficiency and flexibility in building neural network models.
  - Recommendation System Library: RecBole (version 1.2.0), which provides comprehensive support for implementing and evaluating recommendation algorithms.

- Details of the data poisoning attack experiments
  - Algorithm: DQN with a Dueling Network architecture to enhance decision-making capabilities.
  - Learning Rate: 0.001, to control the speed of model weight updates.
  - Reward Decay Factor ($\gamma$): 0.95, which determines the current value of future rewards and affects the discounting in the learning process.
  - Exploration Rate: Begins at 1.0 and linearly reduces to 0.01, to balance exploration of new actions against exploiting known actions.
  - Experience Buffer Size: 10,000, used to store past interactions for replay, crucial for experience-based learning.
  - Batch Size: 128, determining the number of samples per training iteration.
  - Target Network Update Frequency: Updated every 100 episodes to regulate the update rate of the target Q-network and stabilize learning.
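The listed hyperparameters can be collected in a small configuration, together with a linear exploration schedule. The dictionary keys and the decay_steps argument are hypothetical: the number of steps over which the exploration rate decays is not stated here, so it is left as a parameter.

```python
# Values taken from the experimental settings listed above; key names are illustrative.
DQN_CONFIG = {
    "learning_rate": 0.001,
    "gamma": 0.95,               # reward decay (discount) factor
    "epsilon_start": 1.0,
    "epsilon_end": 0.01,
    "buffer_size": 10_000,
    "batch_size": 128,
    "target_update_every": 100,  # episodes between target-network updates
}


def linear_epsilon(step, decay_steps, start=1.0, end=0.01):
    """Linearly interpolate from start to end over decay_steps, then stay at end."""
    fraction = min(max(step / decay_steps, 0.0), 1.0)
    return start + fraction * (end - start)


# decay_steps=1000 is an assumed horizon chosen only for this demonstration.
schedule = [linear_epsilon(s, decay_steps=1000) for s in (0, 500, 1000, 5000)]
print(schedule)
```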

6.2. Results in the White-Box Setting

6.2.1. Effectiveness of Poisoning Attack

We utilized the Bayesian Personalized Ranking (BPR) recommendation algorithm to train on three different datasets. Due to the varying sizes of these datasets, the number of fake users inserted during the attack process also varied. By default, the experimental settings involve setting the fake user count to of all users, with . Table 3 shows the results of the poisoning attacks at different fake user ratios and the impact of the maximum interaction sequence length of fake users on the effectiveness of the attack. In Table 3, bold numbers mark the best performance among all methods (including our method), and underlined numbers mark the best performance among the baseline methods (excluding our method).

Table 3.

HR@10 for different attack methods at different attack sizes in White-Box Setting.

For the ML-100K dataset, item number 200 was targeted in an attack involving 943 users, of whom 737 had not rated the item. Out of these, 100 users were randomly selected as target users to receive feedback rewards, and the rest were used as evaluation users to calculate the HR@10 metric for the top 10 recommendation lists. In all cases, DRLAttack significantly outperformed the baseline attacks. In particular, when of fake users were inserted, DRLAttack’s hit rate was approximately three times higher than the best hit rate of other attack methods. Additionally, the experiment found that an overly large T value could actually decrease the hit rate of the poisoning attack. For instance, the random attack method saw a decrease in hit rate at compared to , while the decline in DRLAttack was relatively stable. The attack on the ML-1M dataset yielded similar effects.

For the Last.fm music dataset, the popular attack utilized the recommendation system’s tendency to recommend popular items, but due to the high user–item sparsity in Last.fm, the popular attack performed worst in this dataset. DRLAttack remained the most effective in all cases, with the random attack based on similarity measures achieving the best performance among baseline attacks. It managed to increase the hit rate to 0.010, but this was only of the hit rate achieved by DRLAttack.

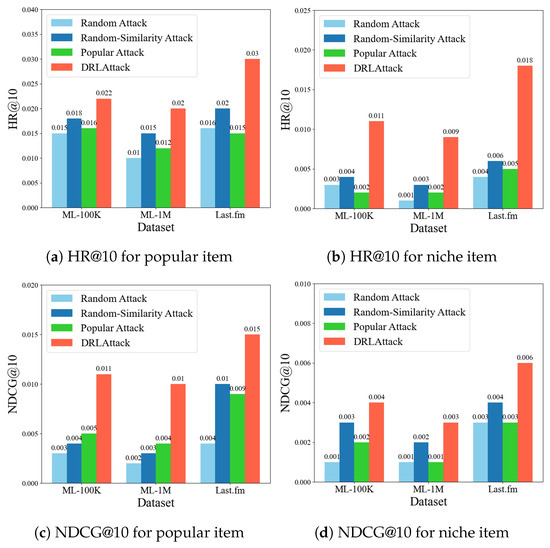

6.2.2. Popular vs. Niche Target Item

We explored the effectiveness of DRLAttack when targeting popular and niche (less popular) items. The default experimental settings were set with a fake user ratio of and , and the chosen recommendation algorithm for the attack was DMF. To differentiate between popular and niche items, we ranked items based on the total number of user interactions. The top of items with the most interactions were classified as popular items, while the bottom with the fewest interactions were classified as niche items. The specific item numbers targeted for the attack in the three datasets, along with statistics on users who had not interacted with these items, are detailed in Table 4.

Table 4.

Selection of target item for attack.
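The popularity-based split can be sketched as follows. The fraction of items assigned to each group is left as a parameter, and the value used in the demonstration is arbitrary; the function name and toy interactions are likewise hypothetical.

```python
from collections import Counter


def popular_and_niche(interactions, fraction):
    """Rank items by interaction count; return the top and bottom `fraction` of items."""
    counts = Counter(item for _user, item in interactions)
    ranked = sorted(counts, key=lambda i: (-counts[i], i))  # ties broken by item ID
    n = max(1, int(len(ranked) * fraction))
    return ranked[:n], ranked[-n:]


interactions = (
    [(u, "a") for u in range(4)]
    + [(u, "b") for u in range(3)]
    + [(u, "c") for u in range(2)]
    + [(0, "d"), (1, "e")]
)
popular, niche = popular_and_niche(interactions, fraction=0.2)
print(popular, niche)
```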

In experiments with popular items, DRLAttack demonstrated a higher average hit rate (HR@10) on the ML-100K, ML-1M, and Last.fm datasets, with rates of 0.022, 0.020, and 0.030, respectively, compared to the three baseline methods. This indicates that DRLAttack can more effectively manipulate the recommendation system, even for those items that already have high exposure rates.

For attacks on niche items, as shown in Figure 3, DRLAttack also outperformed all baseline methods across the three datasets, with HR@10 rates of 0.011, 0.009, and 0.018, respectively. This result further confirms the effectiveness of DRLAttack in enhancing the ranking of target items. Particularly in the Last.fm dataset, DRLAttack’s hit rate was almost three times that of the best baseline method.

Figure 3.

Attack effects for popular and niche target item.

NDCG, another important metric for measuring the quality of ranking in recommendation lists, showed significant advantages for DRLAttack in experiments with popular items, as displayed in Figure 3. Although the increase in NDCG value for niche items was not as pronounced as for popular items, it still far exceeded the attack effects of the baseline methods. These results still demonstrate that DRLAttack can effectively improve the ranking quality of niche items.

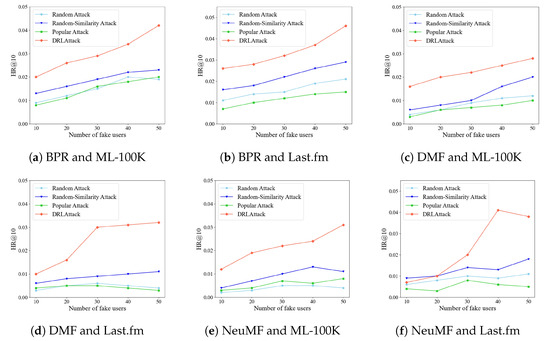

6.2.3. Attack on Multiple Target Items

In real-world recommendation systems, malicious attackers may aim to boost the exposure and recommendation ranking of multiple target items to gain more commercial benefits. Therefore, we conducted experiments on the ML-100K and Last.fm datasets, initially selecting three items each from collections of popular and niche items to form a target item list. The target item list for ML-100K was [100, 110, 150, 200, 500, 710], and for Last.fm, it was [100, 225, 356, 535, 1000, 1210]. The results are shown in Figure 4, with default parameter settings at .

Figure 4.

Attack effects for multiple target items.

For the BPR algorithm trained on the ML-100K and Last.fm datasets, the performance of DRLAttack significantly improved with the increase in the number of fake users, especially when the number of fake users reached 40 and 50, showing a marked improvement over the baseline methods. For the DMF algorithm, DRLAttack also demonstrated superior performance compared to baseline methods, particularly on the Last.fm dataset, where its performance enhancement was more pronounced with an increase in the number of fake users. In the case of the NeuMF algorithm, DRLAttack performed better than all baseline methods as well, but when the number of fake users increased from 40 to 50, the effectiveness of DRLAttack’s attacks actually decreased. This could be due to conflicts in the interaction sequences chosen by the fake users, as the NeuMF algorithm may have captured all the behavior sequence patterns, leading to biases in the recommendation results.

6.2.4. Effectiveness Under Defensive Mechanisms

Considering the realistic scenario where recommendation systems might deploy defense mechanisms to detect and mitigate fraudulent rating interactions by “water armies”, experiments were conducted using the NeuMF recommendation algorithm to study the effectiveness of poisoning attacks under a rating-based detector. RDMA (Rating Deviation from Mean Agreement) is a fake user detection mechanism based on user rating behaviors, reflecting the disparity between a user’s ratings and the average ratings of the respective items. For user u, RDMA measures the average deviation in that user’s ratings from the item’s average ratings. This feature is calculated through the following steps: first, for each item i rated by user u, calculate the absolute difference between the user’s rating and the average rating of that item, and then divide by the number of ratings for that item; next, sum all these values and divide by the total number of items that user has rated. The mathematical expression is as follows:

$$\mathrm{RDMA}_u = \frac{1}{|I_u|} \sum_{i \in I_u} \frac{\left| r_{u,i} - \bar{r}_i \right|}{NR_i}$$

where $I_u$ is the set of items rated by user u, $r_{u,i}$ is the rating that user u gives to item i, $\bar{r}_i$ is the average rating of item i, and $NR_i$ is the number of ratings for item i.
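The steps described for RDMA translate directly into code. The following is a minimal sketch with a hypothetical function name and toy ratings; a detector would then flag users whose score exceeds some threshold, which is not specified here.

```python
from collections import defaultdict


def rdma_scores(ratings):
    """ratings: iterable of (user, item, rating). Returns {user: RDMA score}."""
    ratings = list(ratings)
    item_sum = defaultdict(float)
    item_count = defaultdict(int)
    for _user, item, r in ratings:
        item_sum[item] += r
        item_count[item] += 1

    user_total = defaultdict(float)
    user_count = defaultdict(int)
    for user, item, r in ratings:
        mean_r = item_sum[item] / item_count[item]
        # Absolute deviation from the item mean, scaled by the item's rating count.
        user_total[user] += abs(r - mean_r) / item_count[item]
        user_count[user] += 1
    # Average the scaled deviations over the items each user has rated.
    return {u: user_total[u] / user_count[u] for u in user_total}


ratings = [("u1", "i1", 4), ("u2", "i1", 2), ("u1", "i2", 5)]
scores = rdma_scores(ratings)
print(scores)
```

In the toy data item i1 has mean 3 from two ratings and i2 has mean 5 from one rating, so u1 scores (0.5 + 0) / 2 = 0.25 and u2 scores 0.5 / 1 = 0.5.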

We conducted experiments on two explicit rating datasets, ML-100K and ML-1M, with the default number of fake users set at and . The results are displayed in Table 5. The experimental data show that, compared to other baseline methods, DRLAttack demonstrates higher effectiveness in attacks on both datasets, both before and after the deployment of the RDMA defense mechanism. On smaller datasets, attack methods struggle to counteract the RDMA defense, whereas on the larger ML-1M dataset, due to the vast number of users and items, the proportion of fake users and their interactions is relatively small, which complicates the detection of fake users.

Table 5.

Attack effects before and after deployment of RDMA defense methods (number of false users).

6.3. Results in the Black-Box Setting

6.3.1. Effectiveness of Poisoning Attack

For each dataset, we randomly selected 50 active and 50 inactive users, fabricating their interaction records to form an evaluation user set (totaling 100 users) for assessing the effectiveness of the attack. We then calculated evaluation metrics based on the recommendation lists generated for each user in this set. The BPR recommendation algorithm was used to train on three datasets, with the experimental parameters set as in Section 6.2. Additionally, 30 spy users were created (15 simulating active users and 15 simulating inactive users) to receive feedback from the recommendation system for reward calculation. Table 6 shows the results of the poisoning attacks with different attack scales, including the proportion of fake users and the maximum sequence length of user behaviors.

Table 6.

HR@10 for different attack methods at different attack sizes in Black-Box Setting.

As in the white-box setting, the three baseline methods underperformed in the black-box setting; even when the proportion of fake users reached , the best hit rate was only 0.07, significantly lower than the 0.16 hit rate of DRLAttack at fake users. Across the three datasets, the DRLAttack method outperformed DRLAttack-DNN, achieving a hit rate up to three times higher, primarily due to the introduction of LSTM. While DRLAttack-White and DRLAttack-DNN also utilized some improvements, their inability to process time series data restricted their performance.

6.3.2. Spy User Selection

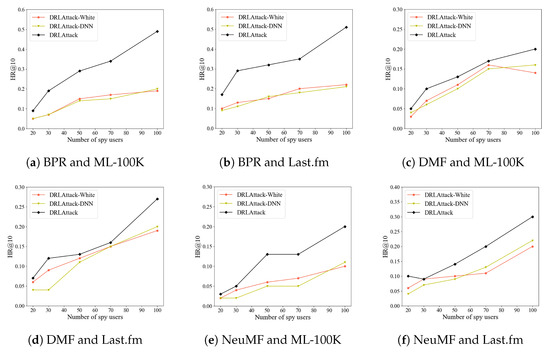

During experiments on poisoning attacks in a black-box setting, the number of spy users and their composition are two critical factors that can significantly influence the effectiveness of the attacks. We conducted experiments on the ML-100K and Last.fm datasets across three recommendation algorithms. Initially, we varied the number of spy users (maintaining an equal ratio of active to inactive users), and the results are illustrated in Figure 5.

Figure 5.

Impact of the number of spy users on the effectiveness of the attack.

DRLAttack consistently achieved higher hit rates across all spy user settings compared to DRLAttack-White and DRLAttack-DNN, and the hit rates for all three attack methods increased as the number of spy users grew. With fewer spy users (such as 20 to 30), the increase in hit rate was more moderate, while with a larger number (such as 50 to 100), the improvement was more pronounced.

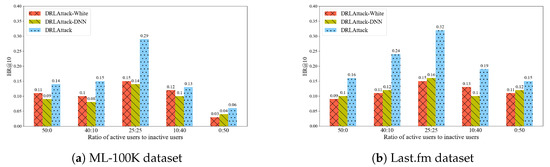

After verifying the impact of the number of spy users on the effectiveness of the attacks, we explored the influence of the type of ordinary user behaviors simulated by spy users on attack performance. With the number of spy users fixed at 50, the experimental results, as shown in Figure 6, indicate that on the ML-100K and Last.fm datasets, the hit rate of DRLAttack initially increased as the proportion of active users rose, albeit modestly. However, after reaching a peak (at an equal ratio of 25 active to 25 inactive users), the hit rate began to decline as the proportion of active users continued to increase. A similar trend was observed in the attack methods of DRLAttack-White and DRLAttack-DNN. These results suggest that considering the diversity and complexity of user behavior patterns is crucial when designing attack strategies against recommendation systems.

Figure 6.

Attack effects for different user ratios in two datasets.

6.3.3. Effectiveness Under Defensive Mechanisms

We also conducted experiments in a black-box setting to explore whether poisoning attack methods could evade detection by the RDMA mechanism. The experimental results are displayed in Table 7.

Table 7.

Attack effects before and after deployment of RDMA defense methods (number of spy users).

By fixing the number of generated fake users at 30 and varying the number of spy users, we observed that when the number of spy users was small, the decline in hit rates for the three attack methods was not significant. However, when the number of spy users reached 70, the hit rate sharply decreased. This suggests that as the number of spy users increases, the fake user data generated by the DRLAttack strategy becomes more generic and easily detectable by defense mechanisms, significantly impacting the effectiveness of the final attack.

7. Conclusions

In this paper, we propose a deep reinforcement learning-based poisoning framework, DRLAttack. In the white-box setting, we optimize the action space via similarity measurement methods and integrate Dueling Networks into the DQN algorithm to significantly enhance its performance. By leveraging the RecBole library, DRLAttack can autonomously generate effective and stealthy fake user interaction data, enabling targeted promotion attacks. For the black-box setting, we enhance DRLAttack by incorporating spy users that simulate normal user behavior and an improved reward function. This allows us to obtain feedback from the recommendation system and adapt to its unpredictability. Moreover, the LSTM network enhances the stealthiness and effectiveness of data poisoning attacks by capturing long-term dependencies in user behaviors and dynamically adjusting attack strategies. Our experiments on real datasets demonstrate that DRLAttack can effectively manipulate recommendation results in both white-box and black-box settings, successfully pushing specific target items to the top of the recommendation list.

Future research will continue to explore targeted promotion attacks on large datasets and improve attack strategies so that limited resources are used effectively. Because such attacks pose a significant threat to recommendation systems, studying the dynamic interaction between recommendation systems and attackers will also be a key focus. We will design defense mechanisms that can adapt in real time to changes in attack strategies, which has practical value for addressing more complex and diverse attack scenarios.

Author Contributions

Conceptualization, M.L. and Y.S.; methodology, M.L.; validation, J.F.; formal analysis, J.F.; writing—original draft preparation, J.F.; writing—review and editing, P.C.; visualization, P.C.; funding acquisition, M.L. and Y.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Nos. 62372126, 62372129, U2436208, U2468204, 62272119, 62072130), the Guangdong Basic and Applied Basic Research Foundation (No. 2023A1515030142), the Key Technologies R&D Program of Guangdong Province (No. 2024B0101010002), the Strategic Research and Consulting Project of the Chinese Academy of Engineering (No. 2023-JB-13), and the Project of Guangdong Key Laboratory of Industrial Control System Security (No. 2024B1212020010).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).