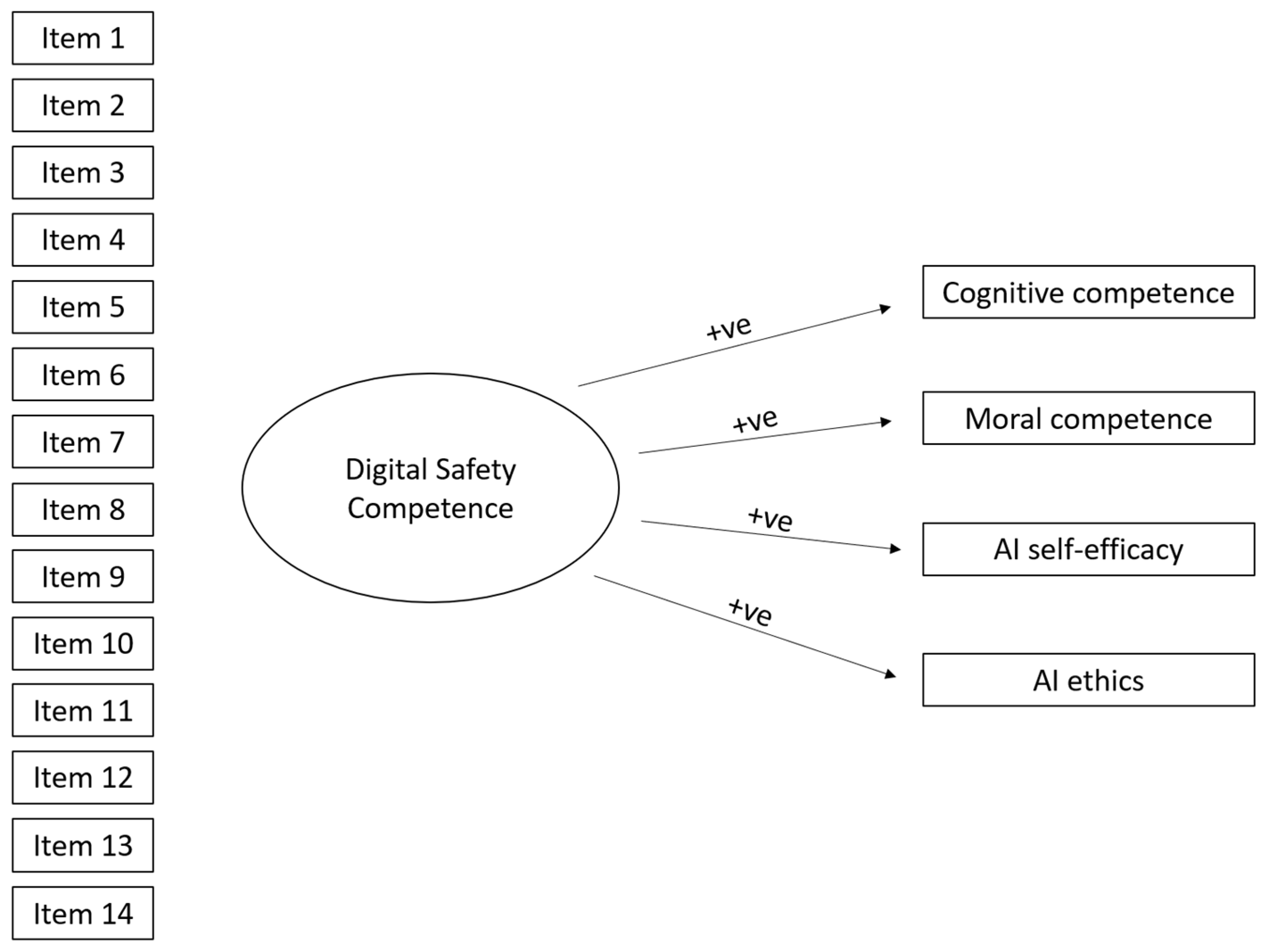

The Impact of Digital Safety Competence on Cognitive Competence, AI Self-Efficacy, and Character

Abstract

1. Introduction

1.1. Digital Competence

1.2. Digital Safety Competence (DSC)

1.3. University’s Role

1.4. Cognitive Competence

1.5. AI Self-Efficacy

1.6. AI Ethics and Moral Competence

2. Materials and Methods

2.1. Research Design and Sample

2.2. Measurement

2.3. Data Analysis

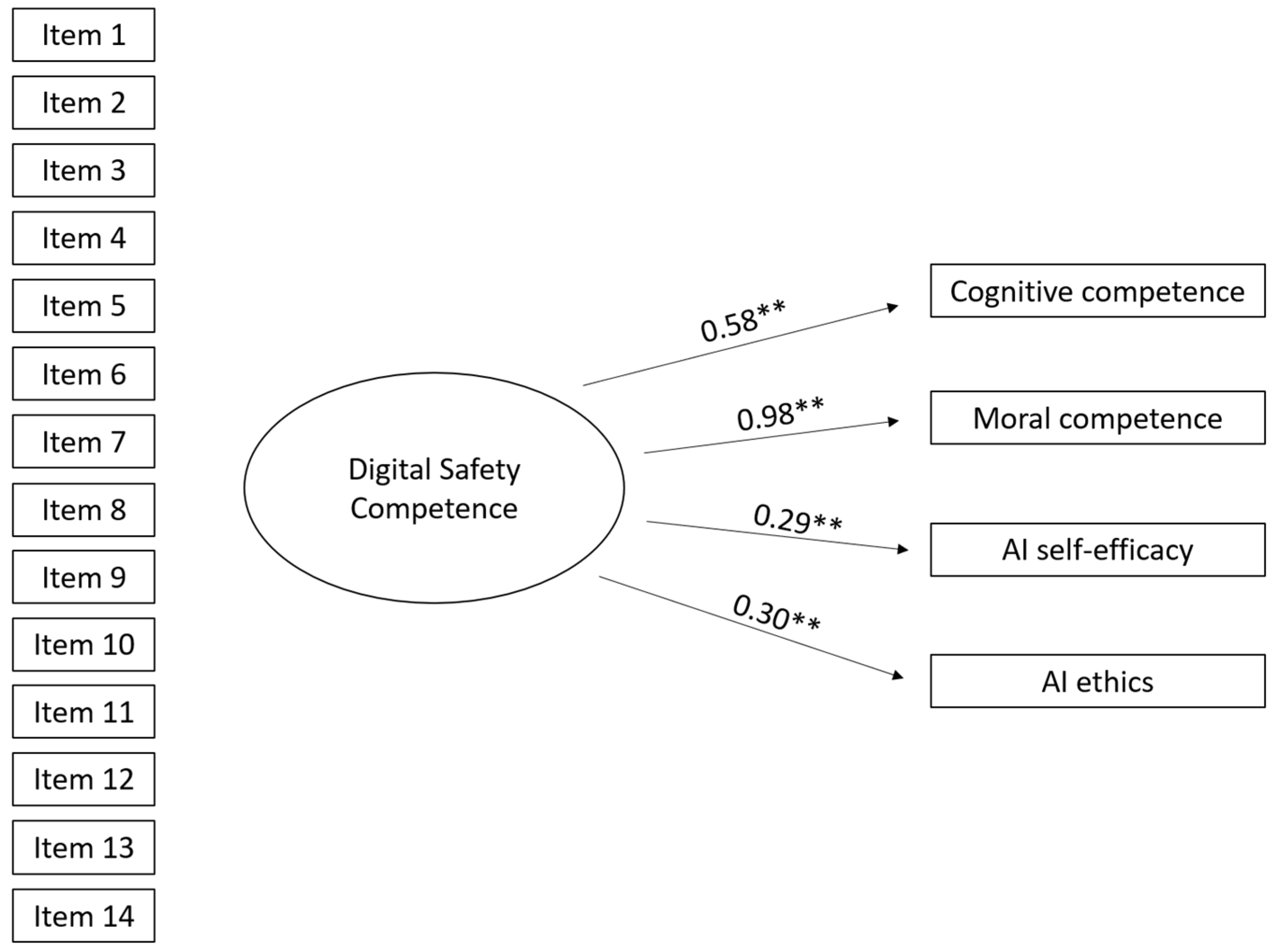

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ramos, A.; Casado, E.; Sevillano, U. Evaluating digital literacy levels among students in two private universities in Panama. South Fla. J. Dev. 2023, 4, 1869–1886.

- UNESCO. AI and Education: Guidance for Policy-Makers. UNESCO, 2021. Recommendation on the Ethics of Artificial Intelligence, 2020. Digital Library UNESDOC. Available online: http://en.unesco.org/ (accessed on 12 April 2025).

- Kong, S.-C.; Cheung, W.M.-Y.; Zhang, G. Evaluation of an artificial intelligence literacy course for university students with diverse study backgrounds. Comput. Educ. Artif. Intell. 2021, 2, 100026.

- Nguyen, L.A.T.; Habók, A. Tools for assessing teacher digital literacy: A review. J. Comput. Educ. 2023, 11, 305–346.

- Jin, K.-Y.; Reichert, F.; Cagasan, L.P.; de la Torre, J.; Law, N. Measuring digital literacy across three age cohorts: Exploring test dimensionality and performance differences. Comput. Educ. 2020, 157, 103968.

- Carretero, S.; Vuorikari, R.; Punie, Y. DigComp 2.1: The Digital Competence Framework for Citizens with Eight Proficiency Levels and Examples of Use; Publications Office of the European Union: Luxembourg, 2017.

- Janssen, J.; Stoyanov, S.; Ferrari, A.; Punie, Y.; Pannekeet, K.; Sloep, P. Experts’ views on digital competence: Commonalities and differences. Comput. Educ. 2013, 68, 473–481.

- Hernández-Martín, A.; Martín-del-Pozo, M.; Iglesias-Rodríguez, A. Pre-adolescents’ digital competences in the area of safety. Does frequency of social media use mean safer and more knowledgeable digital usage? Educ. Inf. Technol. 2021, 26, 1043–1067.

- Kindarji, V.; Wong, W.H. Digital Literacy Will Be Key in a World Transformed by AI; Schwartz Reisman Institute: Toronto, ON, USA, 2023; Available online: https://srinstitute.utoronto.ca/news/digital-literacy-will-be-key-in-a-world-transformed-by-ai (accessed on 12 April 2025).

- OECD. Beyond Academic Learning: First Results from the Survey of Social and Emotional Skills; OECD Publishing: Paris, France, 2021.

- Widowati, A.; Siswanto, I.; Wakid, M. Factors affecting students’ academic performance: Self-efficacy, digital literacy, and academic engagement effects. Int. J. Instr. 2023, 16, 885–898.

- Mejías-Acosta, A.; D’Armas Regnault, M.; Vargas-Cano, E.; Cárdenas-Cobo, J.; Vidal-Silva, C. Assessment of digital competencies in higher education students: Development and validation of a measurement scale. Front. Educ. 2024, 9, 1497376.

- Kryukova, N.I.; Chistyakov, A.A.; Shulga, T.I.; Omarova, L.B.; Tkachenko, T.V.; Malakhovsky, A.K.; Babieva, N.S. Adaptation of higher education students’ digital skills survey to Russian universities. Eurasia J. Math. Sci. Technol. Educ. 2022, 18, em2183.

- Wulan, R.; Sintowoko, D.A.W.; Resmadi, I.; Yenni, S. Digital Skills in Education: Perspective from Teaching Capabilities in Technology. In Proceedings of the 9th BCM International Conference, Bandung, Indonesia, 1 September 2022; pp. 432–436.

- Ferrari, A. Digital Competence in Practice: An Analysis of Frameworks; Publications Office of the EU: Luxembourg, 2012; Available online: https://ifap.ru/library/book522.pdf (accessed on 12 April 2025).

- Vuorikari, R.; Kluzer, S.; Punie, Y. DigComp 2.2: The Digital Competence Framework for Citizens—With New Examples of Knowledge, Skills and Attitudes; JRC Research Reports JRC128415; Joint Research Centre: Brussels, Belgium, 2022.

- Blanc, S.; Conchado, A.; Benlloch-Dualde, J.V.; Monteiro, A.; Grindei, L. Digital competence development in schools: A study on the association of problem-solving with autonomy and digital attitudes. Int. J. STEM Educ. 2025, 12, 13.

- Godaert, E.; Aesaert, K.; Voogt, J.; van Braak, J. Assessment of students’ digital competences in primary school: A systematic review. Educ. Inf. Technol. 2022, 27, 9953–10011.

- Kim, D.; Vandenberghe, C. Ethical Leadership and Team Ethical Voice and Citizenship Behavior in the Military: The Roles of Team Moral Efficacy and Ethical Climate. Group Organ. Manag. 2020, 45, 514–555.

- Shek, D.T.; Dou, D.; Zhu, X.; Chai, W. Positive youth development: Current perspectives. Adolesc. Health Med. Ther. 2019, 10, 131–141.

- Estrada, F.J.R.; George-Reyes, C.E.; Glasserman-Morales, L.D. Security as an emerging dimension of Digital Literacy for education: A systematic literature review. J. E Learn. Knowl. Soc. 2022, 18, 22–33.

- Chiu, T.K. A holistic approach to the design of artificial intelligence (AI) education for K-12 schools. TechTrends 2021, 65, 796–807.

- Carr, N. The Shallows: What the Internet Is Doing to Our Brains; WW Norton and Company: New York, NY, USA, 2020.

- Malik, A.; Khan, M.L.; Hussain, K.; Qadir, J.; Tarhini, A. AI in higher education: Unveiling academicians’ perspectives on teaching, research, and ethics in the age of ChatGPT. Interact. Learn. Environ. 2024, 33, 1–17.

- Rudolph, J.; Tan, S.; Tan, S. ChatGPT: Bullshit spewer or the end of traditional assessments in higher education? J. Appl. Learn. Teach. 2023, 6, 342–363.

- Cooper, G. Examining science education in ChatGPT: An exploratory study of generative artificial intelligence. J. Sci. Educ. Technol. 2023, 32, 444–452.

- Gruenhagen, J.H.; Sinclair, P.M.; Carroll, J.-A.; Baker, P.R.A.; Wilson, A.; Demant, D. The rapid rise of generative AI and its implications for academic integrity: Students’ perceptions and use of chatbots for assistance with assessments. Comput. Educ. Artif. Intell. 2024, 7, 100273.

- Mumtaz, S.; Parahoo, S.K.; Gupta, N.; Harvey, H.L. Tryst with the unknown: Navigating an unplanned transition to online examinations. Qual. Assur. Educ. 2023, 31, 4–17.

- Smerdon, D. AI in essay-based assessment: Student adoption, usage, and performance. Comput. Educ. Artif. Intell. 2024, 7, 100288.

- Intelligent. Nearly 1 in 3 College Students Have Used ChatGPT on Written Assignments; Intelligent: Seattle, WA, USA, 2023; Available online: https://intelligent.com (accessed on 12 April 2025).

- Yusuf, A.; Pervin, N.; Román-González, M. Generative AI and the future of higher education: A threat to academic integrity or reformation? Evidence from multicultural perspectives. Int. J. Educ. Technol. High. Educ. 2024, 21, 21–29.

- Playfoot, D.; Quigley, M.; Thomas, A.G. Hey ChatGPT, give me a title for a paper about degree apathy and student use of AI for assignment writing. Internet High. Educ. 2024, 62, 100950.

- Chan, C.K.Y. Students’ perceptions of ‘AI-giarism’: Investigating changes in understandings of academic misconduct. Educ. Inf. Technol. 2024, 30, 8087–8108.

- Chang, C.Y.; Kuo, H.C. The development and validation of the digital literacy questionnaire and the evaluation of students’ digital literacy. Educ. Inf. Technol. 2025; in press.

- Redecker, C.; Punie, Y. Digital Competence Framework for Educators (DigCompEdu); European Union: Brussels, Belgium, 2017; Available online: https://publications.jrc.ec.europa.eu/repository/handle/JRC107466 (accessed on 12 April 2025).

- Zhu, W.; Huang, L.; Zhou, X.; Li, X.; Shi, G.; Ying, J.; Wang, C. Could AI Ethical Anxiety, Perceived Ethical Risks and Ethical Awareness About AI Influence University Students’ Use of Generative AI Products? An Ethical Perspective. Int. J. Hum. Comput. Interact. 2024, 41, 742–764.

- Mekheimer, M.; Abdelhalim, W.M. The digital age students: Exploring leadership, freedom, and ethical online behavior: A quantitative study. Soc. Sci. Humanit. Open 2025, 11, 101325.

- Santoni de Sio, F. Human Freedom in the Age of AI, 1st ed.; Routledge: London, UK, 2024.

- Burns, T.; Gottschalk, F. Educating 21st Century Children: Emotional Well-Being in the Digital Age, Educational Research and Innovation; OECD Publishing: Paris, France, 2019.

- Hatlevik, O.E.; Tømte, K. Using multilevel analysis to examine the relationship between upper secondary students’ Internet safety awareness, social background and academic aspirations. Future Internet 2014, 6, 717–734.

- Ahmad, M.A.; Teredesai, A.; Eckert, C. Fairness, Accountability, and Transparency in AI At Scale: Lessons from National Programs. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, Barcelona, Spain, 27–30 January 2020; p. 690.

- Microsoft. Responsible and Trusted AI. Available online: https://learn.microsoft.com/en-us/azure/cloud-adoption-framework/innovate/best-practices/trusted-ai (accessed on 12 April 2025).

- Katende, E. Critical thinking and higher education: A historical, theoretical and conceptual perspective. J. Educ. Pract. 2023, 7, 19–39.

- Lu, K.; Zhu, J.; Pang, F.; Shadiev, R. Understanding the relationship between college students’ artificial intelligence literacy and higher-order thinking skills using the 3P model: The mediating roles of behavioral engagement and peer interaction. Educ. Technol. Res. Dev. 2024.

- Chan, C.K.Y.; Lee, K.K.W. The AI generation gap: Are Gen Z students more interested in adopting generative AI such as ChatGPT in teaching and learning than their Gen X and millennial generation teachers? Smart Learn. Environ. 2023, 10, 60.

- Delcker, J.; Heil, J.; Ifenthaler, D.; Seufert, S.; Spirgi, L. First-year students AI-competence as a predictor for intended and de facto use of AI tools for supporting learning processes in higher education. Int. J. Educ. Technol. High. Educ. 2024, 21, 18.

- Bakht Jamal, S.A.A.R. Analysis of the Digital Citizenship Practices among University Students in Pakistan. Pak. J. Distance Online Learn. 2023, 9, 50.

- Fry, P.S. Fostering Children’s Cognitive Competence Through Mediated Learning Experiences: Frontiers and Futures; Charles C. Thomas, Publisher: Springfield, IL, USA, 1992.

- Vygotsky, L.S.; Cole, M. Mind in Society: The Development of Higher Psychological Processes; Harvard University Press: London, UK, 1978.

- Demetriou, A.; Spanoudis, G.C.; Greiff, S.; Makris, N.; Panaoura, R.; Kazi, S. Changing priorities in the development of cognitive competence and school learning: A general theory. Front. Psychol. 2022, 13, 954971.

- Sun, R.C.; Hui, E.K. Cognitive competence as a positive youth development construct: A conceptual review. Sci. World J. 2012, 2012, 210953.

- Facione, P.A.; Gittens, C.A.; Facione, N.C. Think Critically, 3rd ed.; Pearson: New York, NY, USA, 2020.

- Fjeldheim, S.; Kleppe, L.C.; Stang, E.; Storen-Vaczy, B. Digital competence in social work education: Readiness for practice. Soc. Work Educ. 2024, 1–17.

- Zhu, H.; Andersen, S.T. Digital competence in social work practice and education: Experiences from Norway. Nord. Soc. Work Res. 2021, 12, 823–838.

- Zhu, C.; Sun, M.; Luo, J.; Li, T.; Wang, M. How to harness the potential of ChatGPT in education? Knowl. Manag. E Learn. 2023, 15, 133–152.

- Lee, H.P.H.; Sarkar, A.; Tankelevitch, L.; Drosos, I.; Rintel, S.; Banks, R.; Wilson, N. The Impact of Generative AI on Critical Thinking: Self-Reported Reductions in Cognitive Effort and Confidence Effects from a Survey of Knowledge Workers; Microsoft: Redmond, WA, USA, 2025.

- Bandura, A. Self-Efficacy: The Exercise of Control; W.H. Freeman: New York, NY, USA, 1997.

- Wang, Y.Y.; Chuang, Y.W. Artificial intelligence self-efficacy: Scale development and validation. Educ. Inf. Technol. 2024, 29, 4785–4808.

- Bewersdorff, A.; Hornberger, M.; Nerdel, C.; Schiff, D.S. AI advocates and cautious critics: How AI attitudes, AI interest, use of AI, and AI literacy build university students’ AI self-efficacy. Comput. Educ. Artif. Intell. 2025, 8, 100340.

- Wang, B.; Rau, P.L.P.; Yuan, T. Measuring user competence in using artificial intelligence: Validity and reliability of the artificial intelligence literacy scale. Behav. Inform. Technol. 2023, 42, 1324–1337.

- Lee, Y.F.; Hwang, G.J.; Chen, P.Y. Impacts of an AI-based chatbot on college students’ after-class review, academic performance, self-efficacy, learning attitude, and motivation. Educ. Technol. Res. Dev. 2022, 70, 1843–1865.

- Chen, D.; Liu, W.; Liu, X. What drives college students to use AI for L2 learning? Modeling the roles of self-efficacy, anxiety, and attitude based on an extended technology acceptance model. Acta Psychol. 2024, 249, 104442.

- Cabezas-González, M.; Casillas-Martín, S.; García-Valcárcel Muñoz Repiso, A. Influence of the use of video games, social media, and smartphones on the development of digital competence with regard to safety. Comput. Sch. 2024, 1–20.

- Holmes, W.; Porayska-Pomsta, K. The Ethics of Artificial Intelligence in Education; Routledge: London, UK, 2023.

- O’Dea, X.; Ng, D.T.K.; O’Dea, M.; Shkuratskyy, V. Factors affecting university students’ generative AI literacy: Evidence and evaluation in the UK and Hong Kong contexts. Policy Futures Educ. 2024, 14782103241287401.

- Kittredge, A.K.; Hopman, E.W.; Reuveni, B.; Dionne, D.; Freeman, C.; Jiang, X. Mobile language app learners’ self-efficacy increases after using generative AI. Front. Educ. 2025, 10, 1499497.

- Siau, K.; Wang, W. Artificial Intelligence (AI) Ethics: Ethics of AI and Ethical AI. J. Database Manag. 2020, 31, 74–87.

- López, C. Artificial Intelligence and Advanced Materials. Adv. Mater. 2023, 35, 2208683.

- Gammoh, L.A. ChatGPT risks in academia: Examining university educators’ challenges in Jordan. Educ. Inf. Technol. 2025, 30, 3645–3667.

- European Commission: Directorate-General for Education, Youth, Sport and Culture. Key Competences for Lifelong Learning; Publications Office of the European Union: Luxembourg, 2019; Available online: https://data.europa.eu/doi/10.2766/569540 (accessed on 3 May 2025).

- Skov, A. The Digital Competence Wheel. Center for Digital Dannelse. Available online: https://digital-competence.eu/ (accessed on 12 April 2025).

- Chan, C.K.Y.; Hu, W. Students’ voices on generative AI: Perceptions, benefits, and challenges in higher education. Int. J. Educ. Technol. High. Educ. 2023, 20, 43.

- Liu, Z. The digital competence of Chinese university students: A survey study. J. Educ. Educ. Res. 2023, 2, 35–38.

- Tso, W.W.Y.; Reichert, F.; Law, N.; Fu, K.W.; de la Torre, J.; Rao, N.; Ip, P. Digital competence as a protective factor against gaming addiction in children and adolescents: A cross-sectional study in Hong Kong. Lancet Reg. Health West. Pac. 2022, 20, 100382.

- Liu, C.-H.; Horng, J.-S.; Chou, S.-F.; Yu, T.-Y.; Lee, M.-T.; Lapuz, M.C.B. Digital capability, digital learning, and sustainable behaviour among university students in Taiwan: A comparison design of integrated mediation-moderation models. Int. J. Manag. Educ. 2023, 21, 100835.

- Shek, D.T.L.; Yu, L.; Wu, F.K.Y.; Ng, C.S.M. General education program in a new 4-year university curriculum in Hong Kong: Findings based on multiple evaluation strategies. Int. J. Disabil. Hum. Dev. 2015, 14, 377–384.

- Long, D.; Magerko, B. What is AI literacy? Competencies and design considerations. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–16.

- Ma, C.M.S.; Shek, D.T.L.; Fan, I.Y.H.; Zhu, X.X.; Hu, X.G.; Chan, K.; Yick, K.L.; Chu, R.W.C.; Lui, R.W.C. The Relationship Between the Use of Generative Artificial Intelligence (GenAI) and Student Engagement: Insights from AI Self-Efficacy; AISE: Brussels, Belgium, Under peer review.

- Jöreskog, K.G. Structural analysis of covariance and correlation matrices. Psychometrika 1978, 43, 443–477.

- Wolf, E.J.; Harrington, K.M.; Clark, S.L.; Miller, M.W. Sample size requirements for structural equation models: An evaluation of power, bias, and solution propriety. Educ. Psychol. Meas. 2013, 76, 913–934.

- Browne, M.W.; Cudeck, R. Alternative Ways of Assessing Model Fit. In Testing Structural Equation Models; Bollen, K.A., Long, J.S., Eds.; Sage: Washington, DC, USA, 1993; pp. 136–162.

- Hu, L.T.; Bentler, P.M. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Struct. Equ. Model. 1999, 6, 1–55.

- Curran, P.J.; West, S.G.; Finch, J.F. The robustness of test statistics to nonnormality and specification error in confirmatory factor analysis. Psychol. Methods 1996, 1, 16–29.

- Shi, Y.; Qu, S. The effect of cognitive ability on academic achievement: The mediating role of self-discipline and the moderating role of planning. Front. Psychol. 2022, 13, 1014655.

- Gök, B.; Erdoğan, T. The investigation of the creative thinking levels and the critical thinking disposition of pre-service elementary teachers. Ank. Univ. J. Fac. Educ. Sci. 2011, 44, 29–52.

- Karakuş, İ. University students’ cognitive flexibility and critical thinking dispositions. Front. Psychol. 2024, 15, 1420272.

- Getenet, S.; Cantle, R.; Redmond, P.; Albion, P. Students’ digital technology attitude, literacy and self-efficacy and their effect on online learning engagement. Int. J. Educ. Technol. High. Educ. 2024, 21, 3.

- Pagani, L.; Argentin, G.; Gui, M.; Stanca, L. The impact of digital skills on educational outcomes: Evidence from performance tests. Educ. Stud. 2016, 42, 137–162.

- Fagerlund, J.; Häkkinen, P.; Vesisenaho, M.; Viiri, J. Computational thinking in programming with Scratch in primary schools: A systematic review. Comput. Appl. Eng. Educ. 2021, 29, 12–28.

- Luo, Y.; Xie, M.; Lian, Z. Emotional engagement and student satisfaction: A study of Chinese college students based on a nationally representative sample. Asia Pac. Educ. Res. 2019, 28, 283–292.

- Dunn, T.; Kennedy, M. Technology Enhanced Learning in higher education; motivations, engagement and academic achievement. Comput. Educ. 2019, 137, 104–113.

- Yilmaz, R.; Karaoglan Yilmaz, F.G. The effect of generative artificial intelligence (AI)-based tool use on students’ computational thinking skills, programming self-efficacy and motivation. Comput. Educ. Artif. Intell. 2023, 4, 100147.

- Yang, Y. Influences of digital literacy and moral sensitivity on artificial intelligence ethics awareness among nursing students. Healthcare 2024, 12, 2172.

- Kwak, Y.; Ahn, J.W.; Seo, Y.H. Influence of AI ethics awareness, attitude, anxiety, and self-efficacy on nursing students’ behavioral intentions. BMC Nurs. 2022, 21, 267.

- Mogavi, H.; Deng, R.; Kim, C.J.; Zhou, J.; Kwon, P.; Hui, P. ChatGPT in education: A blessing or a curse? A qualitative study exploring early adopters’ utilization and perceptions. Comput. Hum. Behav. 2024, 2, 100027.

- Devaux, M.; Sassi, F. Social disparities in hazardous alcohol use: Self-report bias may lead to incorrect estimates. Eur. J. Public Health 2016, 26, 129–134.

- Porat, E.; Blau, I.; Barak, A. Measuring digital literacies: Junior high-school students’ perceived competencies versus actual performance. Comput. Educ. 2018, 126, 23–36.

- Shahzad, M.F.; Xu, S.; Zahid, H. Exploring the impact of generative AI-based technologies on learning performance through self-efficacy, fairness & ethics, creativity, and trust in higher education. Educ. Inf. Technol. 2025, 30, 3691–3716.

- Acosta-Enriquez, B.G.; Ballesteros, M.A.A.; Vargas, C.G.A.P.; Ulloa, M.N.O.; Ulloa, C.R.G.; Romero, J.M.P.; Jaramillo, N.D.G.; Orellana, H.U.C.; Anzoátegui, D.X.A.; Roca, C.L. Knowledge, attitudes, and perceived ethics regarding the use of ChatGPT among generation Z university students. Int. J. Educ. Integr. 2024, 20, 10.

| Variable | M | SD | α | Skewness | Kurtosis | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 4.12 | 0.71 | 0.81 | 0.71 | −0.01 | - | | | | |
| 2 | 3.85 | 0.91 | - | 0.91 | 0.58 | 0.44 ** | - | | | |
| 3 | 3.24 | 0.57 | 0.48 | 0.57 | 0.62 | 0.17 * | 0.03 | - | | |
| 4 | 3.36 | 0.81 | 0.87 | 0.81 | 1.63 | 0.29 ** | 0.24 ** | 0.03 | - | |
| 5 | 3.88 | 0.56 | 0.73 | 0.56 | −0.02 | 0.29 ** | 0.16 * | 0.29 ** | 0.13 | - |
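The descriptive-statistics table above reports internal consistency (α) next to skewness and kurtosis. As a hedged illustration rather than the authors' code, the sketch below computes Cronbach's alpha from a respondents-by-items score matrix and screens the reported skewness and kurtosis values against the cut-offs commonly attributed to Curran, West and Finch (|skewness| < 2, |kurtosis| < 7). The item scores in the usage lines are hypothetical, and the names `cronbach_alpha`, `roughly_normal` and `REPORTED` are illustrative only.

```python
from statistics import variance

# Skewness and kurtosis for variables 1-5, copied from the descriptive-statistics table.
REPORTED = {1: (0.71, -0.01), 2: (0.91, 0.58), 3: (0.57, 0.62),
            4: (0.81, 1.63), 5: (0.56, -0.02)}


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha; rows are respondents, columns are items."""
    k = len(items[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [variance(col) for col in zip(*items)]   # sample variance of each item
    total_var = variance([sum(row) for row in items])    # variance of the summed scale
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def roughly_normal(skewness: float, kurtosis: float,
                   skew_cut: float = 2.0, kurt_cut: float = 7.0) -> bool:
    """True when both moments lie inside the assumed rule-of-thumb bounds."""
    return abs(skewness) < skew_cut and abs(kurtosis) < kurt_cut


if __name__ == "__main__":
    # Hypothetical 4 respondents x 3 Likert items, for illustration only.
    demo = [[4, 5, 4], [3, 3, 2], [5, 5, 5], [2, 3, 2]]
    print(f"alpha = {cronbach_alpha(demo):.3f}")   # alpha = 0.972
    for var, (sk, ku) in REPORTED.items():
        print(var, "within bounds" if roughly_normal(sk, ku) else "check")
```

With these assumed cut-offs, all five reported variables fall well inside the bounds; the largest reported kurtosis is 1.63.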

| | Model 1 | Model 2 | Estimate (Linear Term) | Estimate (Quadratic Term) |
|---|---|---|---|---|
| Cognitive competence | | | | |
| AIC | 259.81 | 259.39 | −0.79 | 0.12 * |
| BIC | 262.86 | 262.44 | | |
| Moral competence | | | | |
| AIC | 279.66 | 276.62 | −1.22 | 0.17 # |
| BIC | 282.72 | 279.67 | | |
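The model-comparison table above contrasts a linear model (Model 1) with one that adds a quadratic term (Model 2); for both AIC and BIC, lower values indicate better fit after a penalty for the number of parameters. The sketch below is an illustration, not the authors' procedure: it (a) computes Model 1 minus Model 2 differences from the reported indices and (b) shows how such a comparison can be run with ordinary least squares on simulated data. Assumptions: a single predictor, Gaussian errors, and the textbook log-likelihood formulas for AIC and BIC. The data and the names `TABLE2`, `reported_deltas`, `ols_info_criteria` and `compare_linear_quadratic` are hypothetical, and statistical software may add a different constant to these indices, so only between-model differences are comparable.

```python
import numpy as np

# Fit indices from the model-comparison table, stored as (Model 1, Model 2) pairs.
TABLE2 = {
    "Cognitive competence": {"AIC": (259.81, 259.39), "BIC": (262.86, 262.44)},
    "Moral competence": {"AIC": (279.66, 276.62), "BIC": (282.72, 279.67)},
}


def reported_deltas(table: dict) -> dict:
    """Model 1 minus Model 2 per index; positive values favour Model 2."""
    return {outcome: {idx: round(m1 - m2, 2) for idx, (m1, m2) in fits.items()}
            for outcome, fits in table.items()}


def ols_info_criteria(X: np.ndarray, y: np.ndarray) -> tuple[float, float]:
    """(AIC, BIC) of an OLS fit, using the Gaussian maximum-likelihood formulas."""
    n = len(y)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    rss = float(np.sum((y - X @ beta) ** 2))
    k = X.shape[1] + 1                            # coefficients + error variance
    loglik = -0.5 * n * (np.log(2 * np.pi * rss / n) + 1)
    return 2 * k - 2 * loglik, k * np.log(n) - 2 * loglik


def compare_linear_quadratic(x: np.ndarray, y: np.ndarray) -> dict:
    """Model 1: y ~ x. Model 2: y ~ x + x**2. Returns Model 1 minus Model 2."""
    xc = x - x.mean()                             # centring eases x / x**2 collinearity
    X1 = np.column_stack([np.ones_like(xc), xc])
    X2 = np.column_stack([X1, xc ** 2])
    aic1, bic1 = ols_info_criteria(X1, y)
    aic2, bic2 = ols_info_criteria(X2, y)
    return {"dAIC": aic1 - aic2, "dBIC": bic1 - bic2}


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.uniform(-2, 2, size=200)                  # simulated predictor
    y = 0.5 * x ** 2 + rng.normal(0, 0.5, size=200)   # simulated U-shaped outcome
    print(compare_linear_quadratic(x, y))
    print(reported_deltas(TABLE2))
```

On the reported values, the differences are 0.42 (AIC and BIC) for cognitive competence and about 3.0 for moral competence. Under commonly cited rules of thumb, differences below about 2 are read as weak evidence for either model, so the quadratic term appears to add more for moral competence than for cognitive competence; this reading rests on those heuristics rather than on a formal test.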

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, C.M.S.; Shek, D.T.L.; Fan, I.Y.H.; Zhu, X.; Hu, X. The Impact of Digital Safety Competence on Cognitive Competence, AI Self-Efficacy, and Character. Appl. Sci. 2025, 15, 5440. https://doi.org/10.3390/app15105440

Ma CMS, Shek DTL, Fan IYH, Zhu X, Hu X. The Impact of Digital Safety Competence on Cognitive Competence, AI Self-Efficacy, and Character. Applied Sciences. 2025; 15(10):5440. https://doi.org/10.3390/app15105440

Chicago/Turabian Style: Ma, Cecilia M. S., Daniel T. L. Shek, Irene Y. H. Fan, Xixian Zhu, and Xiangen Hu. 2025. "The Impact of Digital Safety Competence on Cognitive Competence, AI Self-Efficacy, and Character" Applied Sciences 15, no. 10: 5440. https://doi.org/10.3390/app15105440

APA Style: Ma, C. M. S., Shek, D. T. L., Fan, I. Y. H., Zhu, X., & Hu, X. (2025). The Impact of Digital Safety Competence on Cognitive Competence, AI Self-Efficacy, and Character. Applied Sciences, 15(10), 5440. https://doi.org/10.3390/app15105440