Abstract

Birdsong classification plays a crucial role in monitoring species distribution, population structure, and environmental change. Existing methods typically use supervised learning to extract task-specific features for classification, which may limit the generalization ability of the model. Unsupervised feature extraction is an emerging alternative that offers greater adaptability, particularly for unlabeled and diverse birdsong data. However, it usually adds a separate pre-training stage, which increases the time cost of downstream tasks and may reduce overall efficiency. To address these challenges, we propose DBS-NET, a Dual-Branch Network for birdsong classification. DBS-NET consists of two branches: a supervised branch (Res-iDAFF) and an unsupervised branch based on contrastive learning. We introduce an iterative dual-attention feature fusion (iDAFF) module into the backbone to enhance contextual feature extraction, and employ a linear residual classifier to further improve classification accuracy. Additionally, to address class imbalance in the dataset, a weighted loss function adjusts the cross-entropy loss with optimized class weights. To improve training efficiency, the backbone networks of both branches share a portion of their weights, reducing computational overhead. In experiments on a self-built 30-class dataset and the Birdsdata dataset, the proposed method achieved accuracies of 97.54% and 97.09%, respectively, outperforming other supervised and unsupervised birdsong classification methods.

1. Introduction

Birds are among the most widely distributed animal groups, inhabiting almost all ecosystems, and their survival is an important indicator of global ecological health. However, the Global Red List of Birds indicates that 1445 bird species (12.95% of the total) are classified as Vulnerable (VU), Endangered (EN), or Critically Endangered (CR) [1], which makes bird conservation a priority. Birdsong is a primary means of communication among birds and is essential for interactions within and between populations. Different species exhibit distinct song patterns, and analyzing the subtle features of these patterns can yield information that cannot be obtained from images. In addition, birdsong recordings are not affected by field of view or lighting conditions [2]. Thus, song-based identification of bird species is one of the most important methods in ecological monitoring and a vital component of habitat monitoring and protection for endangered species [3]. However, due to the diversity of bird species and the complexity of birdsongs, annotating bird audio data is time-consuming. Making effective use of existing annotated data to achieve accurate birdsong recognition therefore remains a challenge.

With the development of deep learning in computer vision, new approaches have been introduced to address the challenges of birdsong recognition. Current birdsong classification techniques mainly convert signals into spectral representations and analyze the spectral features of birdsong in detail [2]. Common spectral representations, such as Short-Time Fourier Transform (STFT) spectrograms, Mel spectrograms, and Mel Frequency Cepstral Coefficients (MFCC), are used as inputs to convolutional neural network (CNN) models for birdsong classification. For example, Na et al. [4] combined Linear Prediction Cepstral Coefficients (LMs) and MFCC features with a 3DCCNN-LSTM model to classify four bird species, obtaining an average accuracy of 97.9%. Xie et al. [5] combined Mel spectrograms, harmonic component spectrograms, and percussive component spectrograms with a CNN model, achieving an F1-score of 95.95% for the classification of 43 bird species. Wang et al. [6] introduced an improved Transformer model for recognizing MFCC spectrograms of 15 bird species with an accuracy of 93.57%. Fu et al. [7] applied the wavelet transform to convert birdsongs into spectrograms, constructed a DR-ACGAN model, and incorporated a dynamic convolutional kernel into the classifier, achieving an accuracy of 97.60% with the VGG16 model. Kahl et al. [8] developed BirdNET, which used Mel spectrograms to identify 984 bird species in North America and Europe, with an average accuracy of 0.791 in high-noise environments. These studies highlight the effectiveness of neural networks for classifying birdsong spectrograms. However, most of them relied on supervised learning and required large amounts of labeled training data. In addition, supervised learning methods are prone to generalization errors, spurious correlations, and adversarial attacks [9].

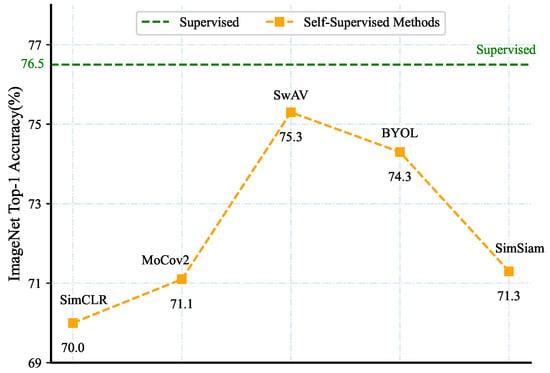

With the advent of unsupervised learning [10,11,12,13], supervisory signals can now be derived from unlabeled data. Self-supervised learning [14,15,16,17,18,19] is a subset of unsupervised learning, and contrastive learning [20,21,22,23,24,25] is a branch of self-supervised learning [9]. Contrastive learning is a discriminative method that pulls similar samples together in the representation space while pushing dissimilar samples apart. Through this process, the model learns more discriminative features that better distinguish different samples, providing a solid foundation for downstream tasks. Existing contrastive learning methods include A Simple Framework for Contrastive Learning of Visual Representations (SimCLR) [17], Momentum Contrast v2 (MoCoV2) [26], Swapping Assignments between Views (SwAV) [19], Bootstrap Your Own Latent (BYOL) [14], and Simple Siamese representation learning (SimSiam) [18]. Their fine-tuned classification accuracies on ImageNet are shown in Figure 1. As the figure shows, the accuracy of existing contrastive learning approaches is close to that of supervised learning, indicating that these unsupervised feature extraction techniques are effective.

Figure 1.

Accuracy of unsupervised models on ImageNet.

SimCLR is a classic contrastive learning algorithm with a simple structure, clever design, and high performance, and it has achieved remarkable success in computer vision. Li [27] used unlabeled data to train a feature extraction network with SimCLR and then added a linear classification layer for supervised training and fine-tuning with a small amount of labeled data, achieving remarkable results in intrusion detection for industrial control systems. Yang et al. [28] pre-trained SimCLR on unlabeled plant images and fine-tuned the pre-trained model on labeled plant disease samples, obtaining accuracy comparable to that of supervised learning. Shi et al. [29] combined SimCLR with the MetaFormer-2 model to classify snakes at a fine-grained level, with an accuracy of 83.8%. Sun et al. [30] pre-trained SimCLR on a dataset of chest digital radiography (DR) images and used it as the backbone of a fully convolutional one-stage (FCOS) object detection network to identify rib fractures in DR images. SimCLR learns general data representations from a large amount of unlabeled data through data augmentation and then updates the network using the InfoNCE loss, in SimCLR's formulation the normalized temperature-scaled cross-entropy (NT-Xent) loss [31,32]. This learned knowledge is then transferred to smaller models for specific downstream tasks. The fine-tuned downstream tasks in these studies achieved accuracy comparable to that of supervised learning, further demonstrating the strong feature representation capability of SimCLR. However, this two-stage training process increases complexity, is time-consuming, requires additional resources, and makes optimization and debugging more difficult.

Zhang [33] further demonstrated that, although self-supervised learning has been successful in several downstream tasks, its generality and applicability may not be fully realized if the model is fine-tuned using only labeled data from the downstream task. In addition, existing approaches based on self-supervised pre-training still require a significant amount of training time [28]. We therefore address the challenge of exploiting the representation capability of self-supervised learning while reducing training time and achieving better accuracy. In this study, we propose a Dual-Branch Supervised and Unsupervised Learning Network (DBS-NET) for birdsong classification, which employs a weight assignment strategy to balance the losses of the two branches and enhances the feature generalization ability of the backbone network. To verify the effectiveness of DBS-NET, experiments are carried out on the self-built 30-class dataset and the Birdsdata dataset. The main contributions of this paper are summarized as follows:

- A Dual-Branch Network (DBS-NET) combining supervised and self-supervised learning is proposed for birdsong classification. Using a weight allocation strategy, unsupervised and supervised feature extraction are integrated into one framework for joint training, which effectively balances the contributions of the two branches.

- An enhanced backbone based on an iterative dual-attention feature fusion module (iDAFF) is constructed, and an enhanced linear residual classifier is designed to further improve the classification capability of the model.

- A class-imbalance weighted loss function is designed that calculates the weight of each category according to its frequency in the dataset. These weights are then used in the cross-entropy loss to ensure balanced training across categories.

2. Materials

In this study, we compiled an independent dataset, BirdSong30, by gathering birdsong recordings of 30 representative species from diverse ecosystems across northern and southern China using two publicly accessible databases (Xeno-canto: https://www.xeno-canto.org/ (accessed on 8 April 2024); Birder: http://www.birder.cn/ (accessed on 18 May 2024)). All audio files were standardized to MP3 format (16 kHz sampling rate, 320 kbps bitrate). Recordings with a signal-to-noise ratio below 15 dB, overlapping species, or significant anthropogenic noise were discarded, and the remaining clips were segmented into non-overlapping 2 s excerpts using Adobe Audition. Each excerpt then underwent spectral-gating noise reduction combined with median filtering to suppress stationary background and transient spikes, followed by adaptive endpoint detection and silence removal based on short-time energy and zero-crossing rate, yielding high-SNR, information-rich birdsong samples.
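The segmentation and energy/zero-crossing-rate steps above can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions, not the authors' pipeline (which used Adobe Audition): the audio is assumed to be already decoded to a mono float array at 16 kHz, the 25 ms frame / 10 ms hop and the relative thresholds `hi`, `lo`, and `zcr_min` are hypothetical values, and the spectral-gating and median-filtering steps are omitted. Following the order in the text, excerpts are cut first and silence is then removed from each excerpt.

```python
import numpy as np

SR = 16_000          # sampling rate stated in the text (16 kHz)
SEG_SECONDS = 2.0    # non-overlapping 2 s excerpts

def segment(x, sr=SR, seconds=SEG_SECONDS):
    """Cut a mono signal into non-overlapping fixed-length excerpts, dropping the short tail."""
    seg = int(sr * seconds)
    n = len(x) // seg
    return x[: n * seg].reshape(n, seg)

def frame_signal(x, frame_len=400, hop=160):
    """Split a signal into overlapping frames (25 ms / 10 ms at 16 kHz), zero-padding the end."""
    n_frames = 1 + int(np.ceil(max(0, len(x) - frame_len) / hop))
    padded = np.pad(x, (0, (n_frames - 1) * hop + frame_len - len(x)))
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    return padded[idx]

def remove_silence(x, frame_len=400, hop=160, hi=0.05, lo=0.01, zcr_min=0.1):
    """Keep frames with high short-time energy, or moderate energy plus a high zero-crossing
    rate; thresholds are hypothetical and relative to the peak frame energy."""
    frames = frame_signal(x, frame_len, hop)
    energy = np.mean(frames ** 2, axis=1)                  # short-time energy per frame
    signs = np.signbit(frames)
    zcr = np.mean(signs[:, 1:] != signs[:, :-1], axis=1)   # zero-crossing rate per frame
    peak = energy.max()
    active = (energy > hi * peak) | ((energy > lo * peak) & (zcr > zcr_min))
    mask = np.zeros(len(x), dtype=bool)
    for i in np.flatnonzero(active):
        mask[i * hop : i * hop + frame_len] = True         # keep every sample of an active frame
    return x[mask]

t = np.arange(3 * SR) / SR
x = np.concatenate([np.zeros(SR), 0.5 * np.sin(2 * np.pi * 3000 * t)])  # 1 s silence + 3 s tone
excerpts = segment(x)                                      # two 2 s excerpts
cleaned = [remove_silence(e) for e in excerpts]
```

Frames are kept if they are clearly energetic, or if they are moderately energetic and cross zero often, a common way to retain weak, high-pitched onsets that an energy threshold alone would discard.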

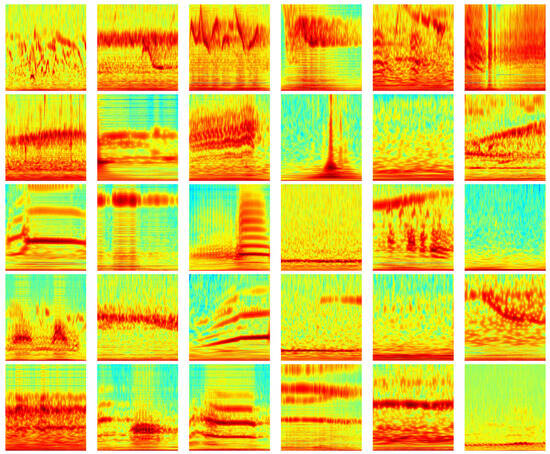

After preprocessing, the processed audio segments were transformed into wavelet spectrograms (Figure 2). The specific parameters and implementation details of the wavelet transform are provided in Section 3.1.

Figure 2.

The wavelet spectrogram’s x-axis and y-axis correspond to the time and frequency–scale domains. The color of the spectrogram denotes energy information, with hotter colors indicating higher energy levels.

Finally, 32,835 wavelet spectrograms were generated to constitute the spectrogram dataset, which was randomly divided into a training set and a test set at a ratio of 8:2. The species and corresponding data information are summarized in Table 1.

Table 1.

Birdsong dataset information.

3. Methods

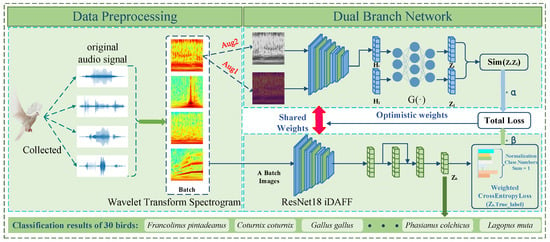

This study proposes a Dual-Branch Network that combines supervised classification learning (SCL) and self-supervised contrastive learning (SSCL). The method uses a multi-task learning framework to optimize the classification loss and the contrastive loss simultaneously. The strongly discriminative features of supervised classification are integrated with the generalizable and robust features of SimCLR, which enhances the model's ability to handle complex environments and improves classification accuracy. The overall structure of the method is illustrated in Figure 3, and the model inference process is shown in Algorithm 1.

Algorithm 1. DBS-Net inference algorithm.

Input: Labeled audio segments

Output: Predicted class labels

Figure 3.

The proposed framework.

3.1. Wavelet Transform

Existing studies usually convert birdsong signals into STFT spectrograms [34] or Mel spectrograms [35]. However, because the STFT uses a fixed window function, its time resolution and frequency resolution cannot be optimized at the same time. In addition, Mel spectrograms are significantly affected by amplitude variations, so different intensities of the same sound can produce different features [36].

The wavelet transform, on the other hand, offers adaptive time-frequency resolution and provides local information about the signal in both the time and frequency domains, which makes it better suited to non-stationary signals. Therefore, in this paper, the wavelet transform is used to convert birdsong signals into spectrograms. The continuous wavelet transform is defined as follows:

$$W(a, b) = \frac{1}{\sqrt{a}} \int_{-\infty}^{+\infty} x(t)\, \psi^{*}\!\left(\frac{t - b}{a}\right) \mathrm{d}t \qquad (1)$$

where $x(t)$ is the birdsong signal; $a$ is the scale parameter, which controls the scale of the wavelet function; $b$ is the time shift, which controls the translation of the wavelet function; and $\psi(t)$ is the wavelet basis function ($^{*}$ denotes complex conjugation). The choice of wavelet basis function directly affects the time-frequency resolution and spectral characteristics of the wavelet transform. Therefore, the Morlet wavelet [37], which has a stronger response to birdsong, is selected in this paper; its definition is shown in Formula (2).

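$$\psi(t) = \frac{1}{\sqrt{\pi f_b}}\, e^{-t^{2}/f_b}\, e^{\,j 2\pi f_c t} \qquad (2)$$

where $f_c$ is the center frequency and $f_b$ is the bandwidth.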
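A direct NumPy sketch of Formulas (1) and (2) is shown below; it evaluates the transform by correlating the signal with the scaled, conjugated complex Morlet wavelet at each analysis frequency. The parameter values (`fb=1.5`, `fc=1.0`, a 500 Hz to 8 kHz log-spaced frequency grid) and the mapping a = f_c / f between scale and analysis frequency are assumptions for illustration, not the authors' settings.

```python
import numpy as np

def complex_morlet(u, fb=1.5, fc=1.0):
    """Complex Morlet wavelet in the form of Formula (2): bandwidth fb, center frequency fc."""
    return np.exp(-u ** 2 / fb) * np.exp(2j * np.pi * fc * u) / np.sqrt(np.pi * fb)

def morlet_scalogram(x, sr, freqs, fb=1.5, fc=1.0):
    """|W(a, b)| of Formula (1) for every analysis frequency f (scale a = fc / f seconds)."""
    out = np.empty((len(freqs), len(x)))
    for row, f in enumerate(freqs):
        a = fc / f                                         # scale in seconds
        half = int(np.ceil(3 * np.sqrt(fb) * a * sr))      # envelope is ~exp(-9) at the edge
        t = np.arange(-half, half + 1) / sr                # offsets t - b in seconds
        g = np.conj(complex_morlet(t / a, fb, fc)) / (np.sqrt(a) * sr)  # psi*((t-b)/a)/sqrt(a) * dt
        full = np.convolve(x, g[::-1], mode="full")        # correlation of x with g
        out[row] = np.abs(full[half : half + len(x)])      # align output index with shift b
    return out

sr = 16_000
t = np.arange(sr // 2) / sr
tone = np.sin(2 * np.pi * 3000 * t)                        # 0.5 s test tone at 3 kHz
freqs = np.geomspace(500, 8000, 64)                        # log-spaced analysis frequencies
S = morlet_scalogram(tone, sr, freqs)                      # rows: frequency, columns: time
peak = freqs[S.mean(axis=1).argmax()]                      # should lie close to 3 kHz
```

Libraries such as PyWavelets provide an equivalent transform (`pywt.cwt` with a complex Morlet wavelet such as `"cmor1.5-1.0"`); the explicit loop here only makes the roles of the scale a and the shift b visible.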

3.2. Supervised Feature Representation

Supervised feature representation focuses on extracting discriminative features and learning class-specific representations by mining the labeled information in the data. Supervised classification learning (SCL) aims to fully use labeled data to achieve effective feature representation. As shown in Figure 3, the supervised classification branch employs a ResNet18 as a backbone network to encode input data into high-dimensional feature representations. ResNet18 has an 18-layer architecture that balances computational efficiency and representational power. Its residual connections improve gradient flow for stable training. Unlike deeper variants such as ResNet50 or ResNet101, it reduces both computational cost and overfitting risk. Its proven performance across diverse classification tasks further underscores its suitability as an encoder.

In the classification head, we designed a linear residual structure, as shown in Figure 3. Its formulation is given in (3): the supervised encoder output feature is mapped to the final output feature through n repetitions of the intermediate linear layer.

Through skip connections, the linear residual structure increases the number of linear layers in the classification head and deepens the nonlinear transformation, enabling the network to capture more complex feature patterns.
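Because Formula (3) is not reproduced in this text, the exact layer arrangement is unknown; the PyTorch module below is a hypothetical reading of the description, in which each of the n intermediate linear layers (followed by a ReLU) is wrapped in a skip connection before a final linear projection to the class logits. `in_dim=512` matches the pooled ResNet18 feature, `num_classes=30` matches BirdSong30, and `n=2` is an arbitrary choice.

```python
import torch
from torch import nn

class LinearResidualHead(nn.Module):
    """Hypothetical linear residual classifier: n residual linear blocks, then a class projection."""

    def __init__(self, in_dim=512, num_classes=30, n=2):
        super().__init__()
        self.blocks = nn.ModuleList(
            [nn.Sequential(nn.Linear(in_dim, in_dim), nn.ReLU()) for _ in range(n)]
        )
        self.fc = nn.Linear(in_dim, num_classes)

    def forward(self, h):
        # h: supervised encoder output feature (e.g. 512-d for ResNet18 after global pooling)
        for block in self.blocks:
            h = h + block(h)  # skip connection around each intermediate linear layer
        return self.fc(h)     # class logits

head = LinearResidualHead()
logits = head(torch.randn(4, 512))  # batch of 4 pooled features -> (4, 30) logits
```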

The spectrogram data in this study are class-imbalanced, which causes the trained classifier to favor majority classes and neglect minority-class samples. This can distort the predicted probabilities for minority classes and thus reduce the reliability of the classification results. To address this issue, a balanced cross-entropy loss function is proposed. First, the weight of each category is calculated from its proportion in the dataset; these weights are then used to initialize the cross-entropy loss function.

Let $n_i$ denote the number of samples of category $i$ in the dataset; the weight $w_i$ of category $i$ is then calculated by Formula (4).

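The weighted cross-entropy loss function can be defined as

$$L_{\mathrm{CE}} = -\sum_{i=1}^{C} w_i\, y_i \log \hat{y}_i \qquad (5)$$

where $C$ is the number of categories, $y_i$ is the one-hot ground-truth label for category $i$, and $\hat{y}_i$ is the predicted probability of category $i$.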
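The sketch below shows one way to compute the class weights and the weighted loss in NumPy. Formula (4) is not reproduced here, so the inverse-frequency rule w_i = N / (C n_i) is an assumption, as is normalizing the batch loss by the summed weights of the targets (the behavior of PyTorch's `nn.CrossEntropyLoss(weight=..., reduction="mean")`).

```python
import numpy as np

def class_weights(labels, num_classes):
    """Inverse-frequency weights w_i = N / (C * n_i); an assumed form of Formula (4)."""
    counts = np.bincount(labels, minlength=num_classes).astype(float)
    counts = np.maximum(counts, 1.0)  # guard against classes absent from the labels
    return len(labels) / (num_classes * counts)

def weighted_cross_entropy(logits, labels, weights):
    """Weighted cross-entropy (Formula (5)), normalized by the summed target weights."""
    z = logits - logits.max(axis=1, keepdims=True)               # numerical stability
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))  # log-softmax
    nll = -log_probs[np.arange(len(labels)), labels]              # -log y_hat of the true class
    w = weights[labels]
    return float((w * nll).sum() / w.sum())

labels = np.array([0, 0, 0, 1])                  # class 0 is the majority class
w = class_weights(labels, num_classes=2)         # minority class 1 gets the larger weight
loss = weighted_cross_entropy(np.zeros((4, 2)), labels, w)  # uniform logits
```

In a PyTorch training loop the same weights could be passed as `torch.nn.CrossEntropyLoss(weight=torch.tensor(w, dtype=torch.float32))`.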

3.3. Self-Supervised Feature Representation

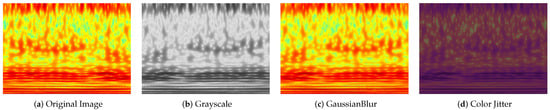

Self-supervised feature representation focuses on learning generalizable features from unlabeled data. The SimCLR contrastive learning framework is used to construct the self-supervised contrastive learning (SSCL) branch. The SSCL branch uses data augmentation to generate positive and negative sample pairs. As shown in Figure 4, positive pairs are obtained by applying different augmentation transformations to the same input sample, while negative pairs are formed from representations of different samples within the same batch. Each pair of augmented views ($\tilde{x}_i$, $\tilde{x}_j$) is processed by the encoder to generate the corresponding feature representations $h_i$ and $h_j$. A projection head, consisting of a fully connected layer and a nonlinear activation function, then maps these feature representations into the space in which the contrastive loss is computed, producing the projected representations $z_i$ and $z_j$. The goal is to maximize the similarity of positive pairs while minimizing the similarity of negative pairs, which is achieved with the InfoNCE loss in Formula (6):

$$\ell_{i,j} = -\log \frac{\exp\left(\mathrm{sim}(z_i, z_j)/\tau\right)}{\sum_{k=1}^{2N} \mathbb{1}_{[k \neq i]} \exp\left(\mathrm{sim}(z_i, z_k)/\tau\right)} \qquad (6)$$

where $\mathrm{sim}(\cdot,\cdot)$ represents the cosine similarity between two vectors, $N$ is the batch size (giving $2N$ augmented views), $\mathbb{1}_{[k \neq i]}$ is an indicator function equal to 1 when $k \neq i$, and $\tau$ is a temperature hyperparameter that adjusts the smoothness of the similarity distribution. This unsupervised framework uses a large amount of unlabeled data to learn general representations and applies them to smaller models for specific tasks.
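A NumPy sketch of the NT-Xent form of Formula (6), averaged over all 2N anchors, is given below. The temperature `tau=0.5` is an assumed value, and `z1`, `z2` stand for the projection-head outputs for the two views of each sample in the batch.

```python
import numpy as np

def nt_xent(z1, z2, tau=0.5):
    """NT-Xent / InfoNCE loss (Formula (6)) averaged over all 2N anchors.

    z1[k] and z2[k] are the projections of two augmented views of sample k.
    """
    z = np.concatenate([z1, z2], axis=0)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # unit vectors: dot product = cosine similarity
    sim = z @ z.T / tau
    np.fill_diagonal(sim, -np.inf)                     # drop k == i from the denominator
    n = len(z1)
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(n)])  # index of each anchor's positive
    m = sim.max(axis=1, keepdims=True)                 # log-sum-exp for numerical stability
    log_denom = m[:, 0] + np.log(np.exp(sim - m).sum(axis=1))
    return float(np.mean(log_denom - sim[np.arange(2 * n), pos]))

rng = np.random.default_rng(0)
z = rng.normal(size=(8, 16))
aligned = nt_xent(z, z)                           # both views identical
unrelated = nt_xent(z, rng.normal(size=(8, 16)))  # views share nothing
```

With identical views, each anchor's positive reaches the maximum similarity 1/τ, so the loss is lower than for unrelated views, which is the behavior the contrastive objective rewards.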

Figure 4.

Data augmentation. During image augmentation, each method is applied randomly with a probability of 0.5, so an image may be augmented by several methods at once. The augmentation methods include affine transformations, perspective transformations, contrast changes, Gaussian noise, hue/saturation changes, and blurring.
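The caption's rule that each method fires independently with probability 0.5 can be sketched as below. Only three of the listed methods (contrast change, Gaussian noise, and blurring) are implemented, in plain NumPy on a spectrogram image scaled to [0, 1]; their parameter ranges are hypothetical, and affine, perspective, and hue/saturation changes are omitted (torchvision's `RandomAffine`, `RandomPerspective`, and `ColorJitter` are one option for those).

```python
import numpy as np

def adjust_contrast(img, rng):
    factor = rng.uniform(0.7, 1.3)                       # hypothetical contrast range
    mean = img.mean()
    return np.clip(mean + factor * (img - mean), 0.0, 1.0)

def gaussian_noise(img, rng, sigma=0.02):
    return np.clip(img + rng.normal(0.0, sigma, img.shape), 0.0, 1.0)

def box_blur(img, rng):
    """3x3 mean filter over the two spatial axes; rng is unused but keeps one signature."""
    p = np.pad(img, [(1, 1), (1, 1)] + [(0, 0)] * (img.ndim - 2), mode="edge")
    h, w = img.shape[:2]
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0

AUGMENTATIONS = [adjust_contrast, gaussian_noise, box_blur]

def augment(img, rng, p=0.5):
    """Apply each augmentation independently with probability p (0.5 in Figure 4)."""
    for op in AUGMENTATIONS:
        if rng.random() < p:
            img = op(img, rng)
    return img

rng = np.random.default_rng(0)
spec = rng.random((64, 64))            # stand-in for a normalized spectrogram image
view1, view2 = augment(spec, rng), augment(spec, rng)
```

Calling `augment` twice on the same spectrogram yields the two views of a positive pair.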

3.4. Iteration Dual Attention Feature Fusion Module

In residual blocks, skip connections are usually used to fuse the original features X with the convolved output Y. These connections provide an uninterrupted alternative path for the gradient during backpropagation, which smooths gradient flow and mitigates the vanishing-gradient problem. However, traditional feature fusion methods, such as element-wise addition or concatenation, often introduce information redundancy and fail to capture contextual relationships between features effectively.

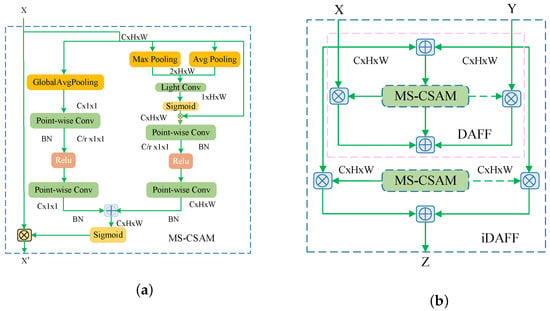

To address this issue, we build on the work of Dai et al. [38] and propose a Multi-Scale Channel and Spatial Attention Module (MS-CSAM), dual-attention feature fusion (DAFF), and iterative dual-attention feature fusion (iDAFF), as shown in Figure 5. MS-CSAM introduces a multi-scale feature extraction mechanism that combines maximum pooling, average pooling, and global average pooling to capture features at different scales. This is especially beneficial for tasks with large differences in time-frequency features, such as birdsong classification, and can improve the robustness and generalization ability of the model. In addition, MS-CSAM uses lightweight convolutions to reduce computational overhead while retaining the ability to capture fine-grained features. The fusion process is described as follows.

Figure 5.

Structures of MS-CSAM (a) and iDAFF (b). In MS-CSAM, r is the channel scaling ratio and ⊕ denotes broadcasting addition. In iDAFF, the structure within the red dashed box represents DAFF, and iDAFF consists of iterated DAFFs. ⊗ denotes element-wise multiplication.

We define two input features in a ResNet skip connection: X represents the original feature and Y denotes the residual feature learned by the ResNet block. Based on the multi-scale attention module M (MS-CSAM), dual-attention feature fusion (DAFF) can be formulated as in Formula (7).

$$Z = \mathbf{M}(X \uplus Y) \otimes X + \left(1 - \mathbf{M}(X \uplus Y)\right) \otimes Y \qquad (7)$$

In Formula (7), Z is the fused feature and ⊎ denotes the initial feature integration, computed as an element-wise sum. DAFF is shown in Figure 5b, where the dashed line represents $1 - \mathbf{M}(X \uplus Y)$. The fusion weight $\mathbf{M}(X \uplus Y)$ consists of real numbers between 0 and 1, as does $1 - \mathbf{M}(X \uplus Y)$, which allows the network to choose between X and Y or to take a weighted average of them.

The initial feature integration is replaced by a DAFF module to form iterative dual-attention feature fusion (iDAFF). Hence, X ⊎ Y can be re-expressed as in Formula (8).

$$X \uplus Y = \mathbf{M}(X + Y) \otimes X + \left(1 - \mathbf{M}(X + Y)\right) \otimes Y \qquad (8)$$

This iterative scheme refines the initial integration itself, so that the attention weights of the outer DAFF are computed from an attention-weighted fusion rather than a plain sum, which improves the quality of the fused features passed to subsequent layers.
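A PyTorch sketch of Formulas (7) and (8) follows. The DAFF and iDAFF wiring follows the formulas, but the internals of `MSCSAM` are a hypothetical simplification (a local 1x1-convolution bottleneck, a global-average-pooled bottleneck, and a spatial map from channel-wise max and average pooling, summed by broadcasting and passed through a sigmoid), and the reduction ratio `r=4` is assumed.

```python
import torch
from torch import nn
import torch.nn.functional as F

class MSCSAM(nn.Module):
    """Hypothetical simplified multi-scale attention M(.); output weights lie in (0, 1)."""

    def __init__(self, c, r=4):
        super().__init__()
        mid = max(c // r, 1)  # r: channel scaling ratio

        def bottleneck():  # lightweight 1x1 convolutions
            return nn.Sequential(nn.Conv2d(c, mid, 1), nn.BatchNorm2d(mid), nn.ReLU(),
                                 nn.Conv2d(mid, c, 1), nn.BatchNorm2d(c))

        self.local = bottleneck()                      # point-wise local context
        self.glob = bottleneck()                       # channel context after global pooling
        self.spatial = nn.Conv2d(2, 1, 3, padding=1)   # map from channel-wise max/avg pooling

    def forward(self, x):
        g = self.glob(F.adaptive_avg_pool2d(x, 1))                                # (B, C, 1, 1)
        pooled = torch.cat([x.amax(dim=1, keepdim=True), x.mean(dim=1, keepdim=True)], dim=1)
        s = self.spatial(pooled)                                                  # (B, 1, H, W)
        return torch.sigmoid(self.local(x) + g + s)                               # broadcasting addition

class DAFF(nn.Module):
    """Formula (7): Z = M(X ⊎ Y) ⊗ X + (1 - M(X ⊎ Y)) ⊗ Y; ⊎ defaults to an element-wise sum."""

    def __init__(self, c, r=4):
        super().__init__()
        self.att = MSCSAM(c, r)

    def forward(self, x, y, initial=None):
        w = self.att(x + y if initial is None else initial)
        return w * x + (1 - w) * y

class IDAFF(nn.Module):
    """Formula (8): the initial integration X ⊎ Y is itself produced by an inner DAFF."""

    def __init__(self, c, r=4):
        super().__init__()
        self.inner = DAFF(c, r)
        self.outer = DAFF(c, r)

    def forward(self, x, y):
        return self.outer(x, y, initial=self.inner(x, y))

fuse = IDAFF(64)
x, y = torch.randn(2, 64, 8, 8), torch.randn(2, 64, 8, 8)  # identity path and residual path
z = fuse(x, y)  # would replace "z = x + y" in a residual block
```

Because the two weights sum to one, fusing a feature with itself returns it unchanged, and the module can stand in for the `identity + residual` addition of a ResNet basic block.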

The traditional residual connections in the backbone network are replaced by the iDAFF mechanism, which combines local and global attention and fuses features through learned weighting. This approach enriches the feature representation while keeping the parameter count and computational complexity modest, and it improves the network's performance on complex classification tasks.

3.5. Dual-Branch Network

The proposed Dual-Branch Network combines the advantages of supervised and self-supervised learning for feature representation. The supervised branch focuses on learning discriminative and task-specific representations using labeled data, while the self-supervised branch leverages contrastive learning to extract robust and generalizable representations from unlabeled data. Both branches are jointly optimized under a unified framework to achieve complementary benefits, as shown in Figure 6.

Figure 6.

The architecture of the proposed DBS-NET for birdsong classification. It consists of an unsupervised branch based on SimCLR and a supervised branch built upon Res-iDAFF. The unsupervised branch uses SimCLR to extract features from unlabeled data, while the supervised branch enhances feature extraction from birdsong spectrograms by modifications to the residual feature fusion module and the integration of a more complex linear classifier. For branch integration, we employ a weight assignment strategy to balance the contributions of both branches.

To integrate the contributions of both branches, the total loss is defined as a weighted combination of the supervised classification loss and the self-supervised contrastive loss:

$$L_{\mathrm{total}} = \lambda_{\mathrm{sup}} L_{\mathrm{CE}} + \lambda_{\mathrm{ssl}} L_{\mathrm{InfoNCE}} \qquad (9)$$

where $\lambda_{\mathrm{sup}}$ and $\lambda_{\mathrm{ssl}}$ are weight coefficients that control the contributions of the supervised and self-supervised losses, respectively, with $\lambda_{\mathrm{sup}} + \lambda_{\mathrm{ssl}} = 1$. $L_{\mathrm{InfoNCE}}$ is the InfoNCE loss used in the self-supervised branch to maximize the similarity of positive pairs and minimize the similarity of negative pairs, and $L_{\mathrm{CE}}$ is the cross-entropy loss computed from the output of the supervised branch and the ground-truth labels.
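The joint objective can be sketched as one PyTorch training step. The tiny convolutional `backbone` is a hypothetical stand-in for the Res-iDAFF encoder and is fully shared between the branches (the paper shares only a portion of the weights), `lam_sup=0.9` is an illustrative value within the range explored in Section 4.5, routing inference through the supervised path only is an assumption, and the all-ones class weights and random tensors are placeholders.

```python
import torch
from torch import nn
import torch.nn.functional as F

def nt_xent(z1, z2, tau=0.5):
    """InfoNCE / NT-Xent written as a cross-entropy over the 2N x 2N similarity matrix."""
    z = F.normalize(torch.cat([z1, z2]), dim=1)
    n2 = z.shape[0]
    sim = (z @ z.T / tau).masked_fill(torch.eye(n2, dtype=torch.bool), float("-inf"))
    n = z1.shape[0]
    targets = torch.cat([torch.arange(n, 2 * n), torch.arange(n)])  # positive of each anchor
    return F.cross_entropy(sim, targets)

class DBSNetSketch(nn.Module):
    def __init__(self, num_classes=30, feat_dim=64, proj_dim=32):
        super().__init__()
        # Hypothetical tiny encoder standing in for the shared Res-iDAFF / ResNet18 backbone.
        self.backbone = nn.Sequential(nn.Conv2d(3, feat_dim, 3, stride=2, padding=1), nn.ReLU(),
                                      nn.AdaptiveAvgPool2d(1), nn.Flatten())
        self.classifier = nn.Linear(feat_dim, num_classes)             # supervised head
        self.projector = nn.Sequential(nn.Linear(feat_dim, feat_dim), nn.ReLU(),
                                       nn.Linear(feat_dim, proj_dim))  # SimCLR-style projection head

    def total_loss(self, x, y, view1, view2, class_weights, lam_sup=0.9):
        """Weighted sum of both branch losses with lam_ssl = 1 - lam_sup."""
        l_ce = F.cross_entropy(self.classifier(self.backbone(x)), y, weight=class_weights)
        z1 = self.projector(self.backbone(view1))
        z2 = self.projector(self.backbone(view2))
        return lam_sup * l_ce + (1.0 - lam_sup) * nt_xent(z1, z2)

    @torch.no_grad()
    def predict(self, x):
        """Inference through the supervised path only (an assumption)."""
        return self.classifier(self.backbone(x)).argmax(dim=1)

torch.manual_seed(0)
model = DBSNetSketch()
opt = torch.optim.Adam(model.parameters(), lr=3e-4)
x = torch.randn(4, 3, 32, 32)                    # labeled spectrogram batch (random stand-in)
y = torch.tensor([0, 1, 2, 3])
v1, v2 = x + 0.1 * torch.randn_like(x), x + 0.1 * torch.randn_like(x)  # two "augmented" views
loss = model.total_loss(x, y, v1, v2, class_weights=torch.ones(30))
opt.zero_grad()
loss.backward()
opt.step()
```

Sharing the encoder means a single backward pass updates it with gradients from both objectives, which is where the training-time saving over a separate pre-train-then-fine-tune schedule comes from.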

4. Experiments and Result Analysis

To verify the effectiveness and generalization of the model, we conduct experiments on two datasets. The main experiments use the self-built birdsong dataset BirdSong30, while the public Birdsdata dataset is used for generalization experiments. Each dataset is divided into a training set and a test set by stratified sampling at a ratio of 8:2.
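A stratified 8:2 split can be sketched in NumPy as below: each class is shuffled and split separately, so class proportions are preserved in both sets. The seed and the rounding rule are assumptions; `sklearn.model_selection.train_test_split(..., stratify=labels)` offers the same behavior.

```python
import numpy as np

def stratified_split(labels, test_ratio=0.2, seed=0):
    """Return (train_idx, test_idx) with about test_ratio of every class in the test set."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    train, test = [], []
    for c in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == c))  # shuffle within the class
        n_test = int(round(test_ratio * len(idx)))
        test.extend(idx[:n_test])
        train.extend(idx[n_test:])
    return np.sort(np.array(train, dtype=int)), np.sort(np.array(test, dtype=int))

labels = np.array([0] * 50 + [1] * 10)   # imbalanced toy labels
train_idx, test_idx = stratified_split(labels)
```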

4.1. Experimental Environment Settings

In this study, all experiments were implemented in Python 3.10. The proposed network was implemented in the PyTorch 2.3.1 framework, developed with Anaconda3 and PyCharm 2020.1, and run on a hardware platform with an AMD Ryzen 9 CPU and an NVIDIA RTX A6000 GPU. The detailed experimental settings are listed in Table 2.

Table 2.

The settings of experiments.

4.2. Model Evaluation Metrics

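The performance of the proposed birdsong classification model was evaluated using four metrics, namely accuracy, precision, recall, and F1-score, which are calculated as shown in Formulas (10)–(13).

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (10)$$

$$\mathrm{Precision} = \frac{TP}{TP + FP} \qquad (11)$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \qquad (12)$$

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \qquad (13)$$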

TP (True Positive) is the number of instances that the model correctly predicts as positive. TN (True Negative) is the number of instances that the model correctly predicts as negative. FP (False Positive) is the number of instances that the model incorrectly predicts as positive. FN (False Negative) is the number of instances that the model incorrectly predicts as negative.
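For multi-class results, the counts above are taken per class in a one-vs-rest fashion. The NumPy sketch below assumes that overall accuracy is the fraction of correct predictions and that precision, recall, and F1-score are macro-averaged (the unweighted mean over classes); this averaging scheme is an assumption.

```python
import numpy as np

def classification_metrics(y_true, y_pred, num_classes):
    """Accuracy plus macro-averaged precision, recall, and F1 from a confusion matrix."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)           # rows: true class, columns: predicted class
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp                      # predicted as class c, but wrong
    fn = cm.sum(axis=1) - tp                      # samples of class c that were missed
    with np.errstate(divide="ignore", invalid="ignore"):
        precision = np.where(tp + fp > 0, tp / (tp + fp), 0.0)
        recall = np.where(tp + fn > 0, tp / (tp + fn), 0.0)
        f1 = np.where(precision + recall > 0,
                      2 * precision * recall / (precision + recall), 0.0)
    return {"accuracy": tp.sum() / cm.sum(), "precision": precision.mean(),
            "recall": recall.mean(), "f1": f1.mean()}

m = classification_metrics([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], num_classes=3)
```

Averaging per-class F1 scores (rather than computing F1 from the averaged precision and recall) can yield an F1-score below both averaged precision and recall.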

4.3. Model Performance Experiments

To demonstrate the effectiveness of combining self-supervised representation learning with supervised classification learning, comparative experiments are conducted on the self-built dataset. The parameter settings for the different models are shown in Table 3, and the experimental results are listed in Table 4. DBS-NET performs well on all four metrics; for example, its accuracy is 1.73 percentage points higher than that of SCL and 1.78 percentage points higher than that of SSCL. DBS-NET completes training in an average of 13.86 h, whereas SSCL requires 9.958 h for pre-training and 7.597 h for fine-tuning, for a total of 17.555 h. Thus, compared with SSCL, DBS-NET not only improves classification accuracy but also shortens training time by about 3.7 h.

Table 3.

Hyperparameters of experiments.

Table 4.

The performance of birdsong classification with different methods.

Table 5 shows the per-category performance of the proposed model. The accuracy rates for two categories, Falco tinnunculus and Anas platyrhynchos, are relatively low, possibly because their small sample sizes make it difficult to learn effective representations. To better understand the classification results, t-SNE (t-distributed Stochastic Neighbor Embedding) [39] was used to visualize the output features of SCL, SSCL, and DBS-NET by projecting the high-dimensional features into two dimensions. The results are shown in Figure 7. Subfigure (a) presents the SCL visualization: the classification features are relatively scattered and many categories overlap noticeably, although the feature points of some categories still form distinct clusters. Subfigure (b) shows the SSCL visualization. Compared with SCL, the feature points are distributed more compactly, indicating that the model better separates features from different categories. However, some category clusters still contain samples from other categories, suggesting that the model still struggles to distinguish similar features. Subfigure (c) is the t-SNE visualization of DBS-NET. The output features of DBS-NET form tight clusters in the t-SNE space, with clear separation between categories. This indicates that DBS-NET captures the similarities and differences of birdsong features more effectively, so that similar features remain clustered together after dimensionality reduction. Some overlap remains between Class 23 and Class 24, and samples from these classes are mixed with other classes, which likely reflects residual misclassifications; nevertheless, the overall separation is clearly better than that of SCL and SSCL.

Table 5.

The performance of birdsong classification with different classification learning methods.

Figure 7.

t-SNE visualization of the output features of three different models. Each number in a cluster denotes the corresponding category.

4.4. Ablation Experiments

To evaluate the effectiveness of each module in the proposed model, ablation experiments were carried out. Table 6 shows the results of the DBS-NET model with different combinations of three modules: iDAFF (iterative dual-attention feature fusion), Linear Residual, and Weighted Loss. The results show that model performance generally improves as modules are added. Model 8 (with iDAFF, Linear Residual, and Weighted Loss) achieves the best performance on all metrics, with an accuracy of 97.54%, precision of 97.47%, recall of 97.23%, and F1-score of 97.22%; compared with the baseline model (Model 1), its accuracy is 0.76 percentage points higher. Furthermore, the combination of iDAFF and Linear Residual (Model 5) improved accuracy by 0.64 percentage points, while the model with Weighted Loss (Model 6) performed slightly worse. These results indicate that integrating all modules is important for the performance of DBS-NET. Additionally, removing the self-supervised branch decreases overall accuracy by 0.87 percentage points and the F1-score by 0.66 percentage points, indicating that the SimCLR-based branch contributes substantially to the final performance of DBS-NET.

Table 6.

Effectiveness of different modules in a Dual-Branch Network.

To further illustrate the impact of the weighted loss, we plotted radar charts of the results, as shown in Figure 8. With the weighted loss, the model achieved clear improvements in per-class precision, recall, and F1-score compared with the unweighted loss, especially for previously underperforming classes (e.g., Class 1 and Class 15). The smoother curves in the weighted-loss chart indicate more balanced performance across classes, mitigating the effects of class imbalance. In addition, the weighted loss increased average accuracy by 0.12%, precision by 0.26%, recall by 0.39%, and F1-score by 0.25%. These improvements further demonstrate the effectiveness of the weighted loss in optimizing model performance and improving recognition of imbalanced classes.

Figure 8.

Effect of weighted loss on model performance.

4.5. Detailed Experiments

In the proposed DBS-NET, the contribution of each branch to the total loss is controlled by the weight coefficients $\lambda_{\mathrm{sup}}$ (supervised branch) and $\lambda_{\mathrm{ssl}}$ (self-supervised branch). To accurately assess the impact of these coefficients, we used a unified ResNet18 backbone for both branches and implemented an auto-exploration strategy to optimize the weight distribution. In this strategy, a pre-trained ResNet18 backbone was trained with the Adam optimizer (learning rate = 0.0003). The data augmentation methods included RandomResizedCrop, GaussianBlur, ColorJitter (applied through RandomApply), and RandomGrayscale. The auto-exploration strategy traversed all values of $\lambda_{\mathrm{sup}}$ in the range [0.1, 0.9] with a step size of 0.1, and the corresponding $\lambda_{\mathrm{ssl}}$ was set to $1 - \lambda_{\mathrm{sup}}$. Each ($\lambda_{\mathrm{sup}}$, $\lambda_{\mathrm{ssl}}$) combination was tested to observe its impact on model performance. The results are listed in Table 7.
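The two sweeps (the coarse grid here and the finer grid near the supervised end described below) can be enumerated as follows; the training routine that would consume each pair is not shown, and rounding to two decimals is only used to avoid floating-point noise in the printed values.

```python
import numpy as np

# Coarse sweep: lambda_sup = 0.1, 0.2, ..., 0.9, with lambda_ssl = 1 - lambda_sup.
coarse = [(float(round(s, 2)), float(round(1 - s, 2))) for s in np.arange(1, 10) / 10]

# Finer sweep near the supervised end: lambda_sup = 0.92, 0.94, 0.96, 0.98.
fine = [(float(round(s, 2)), float(round(1 - s, 2))) for s in np.arange(92, 100, 2) / 100]

for lam_sup, lam_ssl in coarse + fine:
    print(f"lambda_sup={lam_sup:.2f}  lambda_ssl={lam_ssl:.2f}")
```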

Table 7.

DBS-NET Weight Experiment.

As shown in Table 7, the classification performance of the model improves as the supervised branch weight coefficient $\lambda_{\mathrm{sup}}$ increases, and the highest accuracy (97.66%) is achieved with the optimal weight combination in the table. This indicates that the supervised branch plays a pivotal role in the classification task: it is strongly discriminative and directly optimizes the classification objective. However, when only the supervised branch is used (i.e., training with supervised learning alone), the performance is slightly lower than that of the optimal combination. This suggests that, although it is not directly aligned with the classification objective, the unsupervised branch enhances overall performance by improving the robustness of the feature representations.

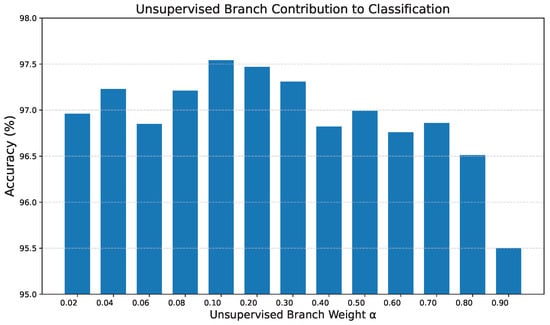

To further explore the balance between $\lambda_{\mathrm{sup}}$ and $\lambda_{\mathrm{ssl}}$, we tested more extreme values of $\lambda_{\mathrm{sup}}$. Specifically, $\lambda_{\mathrm{sup}}$ was varied from 0.92 to 0.98 with a step size of 0.02 (i.e., $\lambda_{\mathrm{ssl}}$ from 0.08 down to 0.02). As shown in Figure 9, the performance of the model improves as the supervised branch weight increases from 0.1 to 0.9, but when $\lambda_{\mathrm{sup}}$ exceeds 0.92, the classification accuracy begins to decline. This suggests that the unsupervised branch, as an auxiliary component, effectively complements the supervised branch by balancing discriminative and robust features to achieve higher accuracy.

Figure 9.

Exploring the optimal weight combination.

Figure 10 illustrates how the weight of the unsupervised branch, $\lambda_{\mathrm{ssl}}$, influences classification accuracy. As $\lambda_{\mathrm{ssl}}$ increases from 0.02 to 0.10, accuracy rises steadily, peaking at about 97.6% when $\lambda_{\mathrm{ssl}}$ is in the 0.10–0.20 range. Beyond $\lambda_{\mathrm{ssl}} \approx 0.30$, accuracy begins to drop as the model increasingly emphasizes generic instance discrimination at the expense of label-driven class separation. When $\lambda_{\mathrm{ssl}}$ is pushed to 0.90, accuracy falls to about 95.5%, confirming that excessive reliance on the unsupervised objective reduces class-specific performance. Overall, Figure 10 demonstrates that the unsupervised branch is beneficial only when balanced against the supervised loss: a $\lambda_{\mathrm{ssl}}$ between 0.10 and 0.20 delivers the best synergy for this task.

Figure 10.

Unsupervised branch contribution to classification.
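The "generic instance discrimination" effect discussed for Figure 10 follows from how a SimCLR-style contrastive loss is defined. The sketch below is a generic NumPy implementation of the NT-Xent loss from SimCLR [17], not the DBS-NET code; the function name and the temperature of 0.5 are assumptions. Each sample's only positive is its other augmented view, so every other embedding in the batch, including recordings of the same species, is pushed away as a negative.

```python
import numpy as np


def nt_xent(z1: np.ndarray, z2: np.ndarray, temperature: float = 0.5) -> float:
    """NT-Xent (normalized temperature-scaled cross-entropy) loss.

    z1, z2: (N, D) projections of two augmented views of the same N inputs.
    Row i of z1 and row i of z2 form the positive pair; the other 2N - 2
    embeddings are treated as negatives regardless of their class label.
    """
    z = np.concatenate([z1, z2], axis=0)                 # (2N, D)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)     # unit-normalize rows
    sim = z @ z.T / temperature                          # scaled cosine similarity
    n = z1.shape[0]
    np.fill_diagonal(sim, -np.inf)                       # exclude self-similarity
    # Positive partner of row i is row i + N (and vice versa).
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])
    # Numerically stable row-wise log-softmax.
    row_max = sim.max(axis=1, keepdims=True)
    log_prob = sim - row_max - np.log(np.exp(sim - row_max).sum(axis=1, keepdims=True))
    return float(-log_prob[np.arange(2 * n), pos].mean())


rng = np.random.default_rng(0)
z = rng.normal(size=(8, 16))
print(nt_xent(z, z + 0.01 * rng.normal(size=z.shape)))  # near-identical views: low loss
print(nt_xent(z, rng.normal(size=(8, 16))))             # unrelated views: higher loss
```

Because the loss never consults class labels, a large unsupervised weight rewards separating same-species clips as strongly as different-species clips, which is consistent with the accuracy drop observed above 0.30.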

4.6. Generalization Experiments

To evaluate the generalizability of the proposed model, we conducted experiments on three datasets: BirdSong30, Birdsdata, and BirdSong16. BirdSong30 is the primary dataset for this study. Birdsdata is a public dataset containing 20 bird species and 14,311 audio clips, each normalized and trimmed to 2 seconds. BirdSong16 contains 16 categories and 271 recordings. Details of the datasets are provided in Table 8. The comparison results in Table 9 show that the model achieves accuracy, precision, recall, and F1-score above 97% on BirdSong30 and Birdsdata, with slightly lower but still competitive results on BirdSong16. These findings suggest that the model is stable and generalizes well across datasets.

Table 8.

Description of datasets.

Table 9.

Generalization experiments of DBS-NET on different datasets.
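For reference, the four metrics reported in Table 9 can be computed from true and predicted labels as sketched below. This section does not state whether precision, recall, and F1 are macro- or weighted-averaged, so macro-averaging here is an assumption, and `classification_metrics` is a hypothetical helper.

```python
from collections import Counter


def classification_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Accuracy plus macro-averaged precision, recall and F1 (pure Python)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    classes = sorted(set(y_true) | set(y_pred))
    tp = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # true positives per class
    pred_count = Counter(y_pred)   # TP + FP per class
    true_count = Counter(y_true)   # TP + FN per class
    precisions, recalls, f1s = [], [], []
    for c in classes:
        prec = tp[c] / pred_count[c] if pred_count[c] else 0.0
        rec = tp[c] / true_count[c] if true_count[c] else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    k = len(classes)
    return {
        "accuracy": sum(tp.values()) / len(y_true),
        "precision": sum(precisions) / k,
        "recall": sum(recalls) / k,
        "f1": sum(f1s) / k,
    }


print(classification_metrics([0, 0, 1, 1, 2, 2], [0, 0, 1, 2, 2, 2]))
```

Macro-averaging weights every species equally, so on a smaller dataset such as BirdSong16 a few misclassified recordings in one class can lower precision, recall, and F1 more visibly than accuracy.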

To further validate the generalization ability of the proposed framework, experiments with different backbone networks were conducted. As shown in Table 10, the model achieves consistently high performance with various backbones and remains competitive on all metrics, demonstrating the applicability and flexibility of the framework. Notably, ResNet18 outperforms the other backbones while having a relatively low computational complexity (4.535 GFLOPs) and a moderate number of parameters (12.603M). ResNet34 and ResNet50 not only perform slightly worse but also require more parameters and computation (6.389 GFLOPs and 8.887 GFLOPs, respectively), making them less efficient in practice.

Table 10.

Backbone selection experiments.
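The efficiency argument for ResNet18 can be checked with simple arithmetic on the GFLOPs figures quoted above. Parameter counts for ResNet34 and ResNet50 are not given in this section, so the sketch compares compute only.

```python
# GFLOPs per forward pass, as reported for each backbone in the text above.
gflops = {"ResNet18": 4.535, "ResNet34": 6.389, "ResNet50": 8.887}

baseline = gflops["ResNet18"]
relative_cost = {name: value / baseline for name, value in gflops.items()}

for name, ratio in relative_cost.items():
    print(f"{name}: {gflops[name]:.3f} GFLOPs, {ratio:.2f}x the ResNet18 cost")
```

ResNet34 and ResNet50 therefore need roughly 1.41 and 1.96 times the compute of ResNet18, while Table 10 shows them performing slightly worse.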

The experimental results indicate that shallower networks perform better on the birdsong dataset. This can be attributed to the nature of spectrogram recognition, in which local texture and frequency-band information are crucial, so deeper architectures offer little additional benefit. A shallower network can effectively extract the basic discriminative features needed for classification. Moreover, ResNet18 retains high-resolution feature maps in its early stages, which is advantageous for tasks that require fine-grained information extraction. Zhang et al. [40] also pointed out that models with fewer layers perform better on sound-related tasks. Therefore, ResNet18 was chosen as the backbone network in this paper.

Table 11 compares the classification accuracy of ResNet18, ResNet34, and ResNet50 under SCL, SSCL, and the full DBS-NET. Despite having the fewest parameters, ResNet18 delivers the best performance in all three settings: 95.94% (SCL), 95.83% (SSCL), and 97.54% (DBS-NET). Accuracy declines slightly as the network deepens, indicating that ResNet18 offers the best trade-off between complexity and performance for this task. Consistent with findings in other studies, larger backbones do not yield accuracy gains commensurate with their parameter growth on birdsong classification [40].

Table 11.

Ablation on encoder backbones (SCL, SSCL and DBS-NET).

5. Discussion

5.1. Comparison with State of the Art

To verify the advantages of the proposed model, we compared it with existing state-of-the-art birdsong classification methods, first on two datasets: BirdSong30 and BirdSong16. The results in Table 12 indicate that the proposed model achieves higher accuracy than the existing methods on each dataset. Its strong performance on both datasets highlights its robustness and applicability in different scenarios.

Table 12.

Comparative analysis with other methods on the BirdSong30 and BirdSong16 datasets.

In addition, the proposed method was compared with other existing models on the Birdsdata dataset, and the results are presented in Table 13. The proposed model consistently achieves better classification performance than the other models on this dataset, further verifying its effectiveness and potential in birdsong classification tasks.

Table 13.

Comparative analysis with other methods on the Birdsdata dataset.

5.2. Weight Assignment Strategy

In a multi-branch network, optimizing different loss functions requires balancing the contributions of each branch to ensure optimal performance on the final task. To evaluate the effectiveness of the weight coefficients in the proposed DBS-NET, we compared them with other weight allocation strategies, including learnable softmax-normalized weight coefficients, exponential weight decay [33], and uncertainty-based loss weighting [41], as shown in Table 14.

Table 14.

Comparison with weight assignment strategy.

The results indicate that our strategy outperforms the other three methods in terms of accuracy, precision, recall, and F1-score. The main reason is that the fixed weight assignment strategy eliminates the need to adjust weights frequently during training, making the learning process more stable and controllable. With fixed weights, the contribution of each task to the total loss is clearly defined, allowing the model to focus more effectively on optimizing the most important tasks.

In contrast, the softmax-based and uncertainty-based strategies tend to introduce frequent weight fluctuations during training, which can interfere with the stability and convergence of the model. The exponential decay strategy assumes that the importance of a task gradually decreases over time, but it fails to capture tasks that require consistent attention throughout the training process.

Using fixed loss weights ensures that each branch maintains a consistent contribution during training, which facilitates smooth convergence. This consistent weighting leads to better overall performance, as shown in Table 14, where DBS-NET achieves the highest values on all evaluation metrics.
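The four weighting schemes compared in Table 14 can be contrasted schematically. This is a sketch under stated assumptions, not the implementations used in the experiments: the fixed weight of 0.9, the decay schedule and its rate, and all function names are hypothetical, and the uncertainty term uses the widely used simplified form exp(-s_i)·L_i + s_i with learnable s_i = log σ_i², after Kendall et al. [41].

```python
import math

Pair = tuple[float, float]  # (supervised, unsupervised)


def fixed_weights(w_sup: float = 0.9) -> Pair:
    """Fixed complementary weights, constant for the whole run (0.9 is illustrative)."""
    return w_sup, 1.0 - w_sup


def softmax_weights(theta: Pair) -> Pair:
    """Weights from learnable logits theta; they change whenever theta is updated."""
    m = max(theta)
    exps = [math.exp(t - m) for t in theta]
    total = sum(exps)
    return exps[0] / total, exps[1] / total


def exp_decay_weights(epoch: int, w0_unsup: float = 0.5, rate: float = 0.05) -> Pair:
    """Hypothetical schedule: the unsupervised weight decays as w0 * exp(-rate * epoch)."""
    w_unsup = w0_unsup * math.exp(-rate * epoch)
    return 1.0 - w_unsup, w_unsup


def weighted_loss(losses: Pair, weights: Pair) -> float:
    """Total loss for the fixed, softmax and decay strategies."""
    return sum(w * l for w, l in zip(weights, losses))


def uncertainty_loss(losses: Pair, log_vars: Pair) -> float:
    """Simplified uncertainty weighting: sum_i exp(-s_i) * L_i + s_i, s_i = log(sigma_i^2)."""
    return sum(math.exp(-s) * l + s for l, s in zip(losses, log_vars))


# The fixed unsupervised weight never moves; the decayed one shrinks every epoch.
for epoch in (0, 10, 50):
    print(f"epoch {epoch}: fixed={fixed_weights()[1]:.3f} decay={exp_decay_weights(epoch)[1]:.3f}")
```

Only `fixed_weights` returns the same pair at every step; the softmax and uncertainty variants depend on parameters updated by the optimizer, and the decay variant depends on the epoch, which is the source of the weight fluctuations discussed above.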

5.3. Effectiveness of Imbalance-Handling Strategies

Table 15 shows that none of the three imbalance-handling strategies (oversampling, class-specific augmentation, or their combination) delivers the expected gains in accuracy, micro-F1, or macro-F1; some metrics even decline slightly. According to the class counts reported in Table 1, the largest class contains about 2934 samples while the smallest has only 580, giving a max-to-min ratio of roughly 5.1:1, i.e., a moderately long-tailed distribution. Under this level of imbalance, simply applying oversampling or class-specific augmentation fails to raise macro-F1 for three main reasons. First, repeating minority-class samples roughly three times encourages the model to memorize the training spectrograms rather than learn features that generalize, thereby suppressing recall on the validation set. Second, the strong cropping and color jitter designed for minority classes do not fully respect the time–frequency texture of birdsong spectrograms; they may disrupt key harmonic structures, so the added noise offsets any gain in diversity. Third, long-tailed classes often coincide with differences in recording devices or environments, and simple duplication or generic augmentation cannot bridge this domain gap, leaving the decision boundary largely unchanged. In future work, we plan to explore few-shot learning techniques and advanced data synthesis methods to further improve classification accuracy under class imbalance.

Table 15.

Performance with different imbalance-handling strategies.
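The imbalance ratio quoted above, and the naive random oversampling it motivates, can be reproduced as follows. Only the largest and smallest class counts (2934 and 580) come from the text; the toy labels, the `oversample` helper, and its fill-to-target policy are hypothetical. The sketch also makes the first failure mode visible: oversampling only duplicates existing indices and adds no new acoustic variation.

```python
import random
from collections import Counter

largest, smallest = 2934, 580          # class counts as reported in Table 1
imbalance_ratio = largest / smallest   # about 5.06, i.e. roughly 5.1:1


def oversample(labels: list[int], target: int, seed: int = 0) -> list[int]:
    """Return sample indices in which every class is randomly duplicated
    (with replacement) up to `target` items; classes already at or above
    `target` are left unchanged."""
    rng = random.Random(seed)
    by_class: dict[int, list[int]] = {}
    for idx, y in enumerate(labels):
        by_class.setdefault(y, []).append(idx)
    out: list[int] = []
    for _, idxs in sorted(by_class.items()):
        out.extend(idxs)
        if len(idxs) < target:
            out.extend(rng.choices(idxs, k=target - len(idxs)))
    return out


# Toy example with hypothetical labels: class 1 is the minority.
labels = [0] * 9 + [1] * 3
idx = oversample(labels, target=9)
print(round(imbalance_ratio, 2), Counter(labels[i] for i in idx))
```

The class counts become balanced, yet the number of distinct samples is unchanged, so the model sees each minority spectrogram about three times per epoch, which is the memorization risk described above.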

6. Limitations and Future Scope

This study employs a dual-branch, SimCLR-based approach to birdsong classification that combines self-supervised representation learning with supervised classification learning. The dual-branch structure significantly improves the feature extraction and classification capability of the model, providing a new strategy for birdsong feature extraction and classification and technical support for ecological monitoring and biodiversity assessment. Several challenges and open questions remain for future research. The following are key areas for future work:

- Broader applicability: Extend this Dual-Branch approach to other animal vocalization datasets or even to image-based datasets to verify its generality.

- Network architecture: Explore replacing the ResNet18 backbone with other network architectures (e.g., Transformers or larger CNNs) to further improve performance.

- Data augmentation: Investigate more powerful or specialized data augmentation methods to enrich the training data and enhance model robustness.

7. Conclusions

In this paper, a dual-branch network for birdsong classification (DBS-NET) is proposed to achieve efficient feature extraction from a limited dataset. The network consists of a SimCLR branch and a Res-iDAFF branch. We explore the optimal data augmentation method for the SimCLR branch and the optimal network structure for the ResNet branch (Res-iDAFF), and then use a weight assignment strategy to combine the losses of the two branches into the final DBS-NET model. Experiments show that DBS-NET is more effective than either branch alone (SimCLR or Res-iDAFF). In addition, the iDAFF module, the linear residual layer, and the weighted loss strategy are each found to improve birdsong classification performance, thereby providing more reliable data support for ecological monitoring and environmental protection.

Author Contributions

Conceptualization, Z.W.; methodology, Z.W. and Y.C.; software, Z.W.; validation, Z.W. and H.S.; formal analysis, Z.W.; investigation, Z.W.; resources, Y.Z.; data curation, Y.Z.; writing—original draft preparation, Z.W.; writing—review and editing, Z.W. and Y.Z.; visualization, D.L.; project administration, Y.Z.; funding acquisition, Y.Z. and D.L. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the National Natural Science Foundation of China (Grant No. 32360388, Grant No. 31860332).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset used in this study is available at the following links: Kaggle (https://www.kaggle.com/datasets/chunjiangyu123/30-birdsong-wt-spectrum-dataset) or Google Drive (https://drive.google.com/file/d/1-LDrqoF-lysoj4_vSreECZQs6shyK0yU/view?usp=drive_link).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- IUCN Red List. The IUCN Red List of Threatened Species. 2004; Volume 12. Available online: https://www.iucnredlist.org/ (accessed on 20 May 2024).

- Hu, S.; Chu, Y.; Wen, Z.; Zhou, G.; Sun, Y.; Chen, A. Deep learning bird song recognition based on MFF-ScSEnet. Ecol. Indic. 2023, 154, 110844.

- Sastry, S.; Khanal, S.; Dhakal, A.; Huang, D.; Jacobs, N. BirdSAT: Cross-View Contrastive Masked Autoencoders for Bird Species Classification and Mapping. arXiv 2023, arXiv:2310.19168.

- Yan, N.; Chen, A.; Zhou, G.; Zhang, Z.; Liu, X.; Wang, J.; Liu, Z.; Chen, W. Birdsong classification based on multi-feature fusion. Multimed. Tools Appl. 2021, 80, 36529–36547.

- Xie, J.; Zhu, M. Handcrafted features and late fusion with deep learning for bird sound classification. Ecol. Inform. 2019, 52, 74–81.

- A hierarchical birdsong feature extraction architecture combining static and dynamic modeling. Ecol. Indic. 2023, 150, 110258.

- Fu, Y.; Yu, C.; Zhang, Y.; Lv, D.; Yin, Y.; Lu, J.; Lv, D. Classification of birdsong spectrograms based on DR-ACGAN and dynamic convolution. Ecol. Inform. 2023, 77, 102250.

- Kahl, S.; Wood, C.M.; Eibl, M.; Klinck, H. BirdNET: A deep learning solution for avian diversity monitoring. Ecol. Inform. 2021, 61, 101236.

- Liu, X.; Zhang, F.; Hou, Z.; Mian, L.; Wang, Z.; Zhang, J.; Tang, J. Self-supervised learning: Generative or contrastive. IEEE Trans. Knowl. Data Eng. 2021, 35, 857–876.

- Stowell, D.; Plumbley, M.D. Automatic large-scale classification of bird sounds is strongly improved by unsupervised feature learning. PeerJ 2014, 2, e488.

- Guerrero, M.J.; Bedoya, C.L.; López, J.D.; Daza, J.M.; Isaza, C. Acoustic animal identification using unsupervised learning. Methods Ecol. Evol. 2023, 14, 1500–1514.

- Michaud, F.; Sueur, J.; Le Cesne, M.; Haupert, S. Unsupervised classification to improve the quality of a bird song recording dataset. Ecol. Inform. 2023, 74, 101952.

- Wei, W.; Xu, S.; Zhang, L.; Zhang, J.; Zhang, Y. Boosting hyperspectral image classification with unsupervised feature learning. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5502315.

- Grill, J.B.; Strub, F.; Altché, F.; Tallec, C.; Richemond, P.; Buchatskaya, E.; Doersch, C.; Avila Pires, B.; Guo, Z.; Gheshlaghi Azar, M.; et al. Bootstrap your own latent - a new approach to self-supervised learning. Adv. Neural Inf. Process. Syst. 2020, 33, 21271–21284.

- Zbontar, J.; Jing, L.; Misra, I.; LeCun, Y.; Deny, S. Barlow twins: Self-supervised learning via redundancy reduction. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 12310–12320.

- He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9729–9738.

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 13–18 July 2020; pp. 1597–1607.

- Chen, X.; He, K. Exploring simple siamese representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15750–15758.

- Caron, M.; Misra, I.; Mairal, J.; Goyal, P.; Bojanowski, P.; Joulin, A. Unsupervised learning of visual features by contrasting cluster assignments. Adv. Neural Inf. Process. Syst. 2020, 33, 9912–9924.

- Wang, T.; Isola, P. Understanding contrastive representation learning through alignment and uniformity on the hypersphere. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 13–18 July 2020; pp. 9929–9939.

- Li, J.; Zhou, P.; Xiong, C.; Hoi, S.C. Prototypical contrastive learning of unsupervised representations. arXiv 2020, arXiv:2005.04966.

- Wu, Z.; Xiong, Y.; Yu, S.X.; Lin, D. Unsupervised feature learning via non-parametric instance discrimination. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3733–3742.

- Oord, A.V.D.; Li, Y.; Vinyals, O. Representation learning with contrastive predictive coding. arXiv 2018, arXiv:1807.03748.

- Becker, S.; Hinton, G.E. Self-organizing neural network that discovers surfaces in random-dot stereograms. Nature 1992, 355, 161–163.

- Hadsell, R.; Chopra, S.; LeCun, Y. Dimensionality reduction by learning an invariant mapping. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; Volume 2, pp. 1735–1742.

- Chen, X.; Fan, H.; Girshick, R.; He, K. Improved baselines with momentum contrastive learning. arXiv 2020, arXiv:2003.04297.

- Li, C.; Li, F.; Zhang, L.; Yang, A.; Hu, Z.; He, M. Intrusion Detection for Industrial Control Systems Based on Improved Contrastive Learning SimCLR. Appl. Sci. 2023, 13, 9227.

- Bunyang, S.; Thedwichienchai, N.; Pintong, K.; Lael, N.; Kunaborimas, W.; Boonrat, P.; Siriborvornratanakul, T. Self-supervised learning advanced plant disease image classification with SimCLR. Adv. Comput. Intell. 2023, 3, 18.

- Shi, Z.; Chen, H.; Liu, C.; Qiu, J. Metaformer Model with ArcFaceLoss and Contrastive Learning for SnakeCLEF2023 Fine-Grained Classification. CLEF (Working Notes). 2023; pp. 2137–2148. Available online: https://api.semanticscholar.org/CorpusID:264441643 (accessed on 20 May 2024).

- Sun, H.; Wang, X.; Li, Z.; Liu, A.; Xu, S.; Jiang, Q.; Li, Q.; Xue, Z.; Gong, J.; Chen, L.; et al. Automated Rib Fracture Detection on Chest X-Ray Using Contrastive Learning. J. Digit. Imaging 2023, 36, 2138–2147.

- Chen, T.; Kornblith, S.; Swersky, K.; Norouzi, M.; Hinton, G.E. Big self-supervised models are strong semi-supervised learners. Adv. Neural Inf. Process. Syst. 2020, 33, 22243–22255.

- Sohn, K.; Berthelot, D.; Carlini, N.; Zhang, Z.; Zhang, H.; Raffel, C.A.; Cubuk, E.D.; Kurakin, A.; Li, C.L. FixMatch: Simplifying semi-supervised learning with consistency and confidence. Adv. Neural Inf. Process. Syst. 2020, 33, 596–608.

- Zhang, C.; Li, Q.; Zhan, H.; Li, Y.; Gao, X. One-step progressive representation transfer learning for bird sound classification. Appl. Acoust. 2023, 212, 109614.

- Wu, S.H.; Chang, H.W.; Lin, R.S.; Tuanmu, M.N. SILIC: A cross database framework for automatically extracting robust biodiversity information from soundscape recordings based on object detection and a tiny training dataset. Ecol. Inform. 2022, 68, 101534.

- Höchst, J.; Bellafkir, H.; Lampe, P.; Vogelbacher, M.; Mühling, M.; Schneider, D.; Lindner, K.; Rösner, S.; Schabo, D.G.; Farwig, N.; et al. Bird@Edge: Bird Species Recognition at the Edge. In Proceedings of the Networked Systems: 10th International Conference, NETYS 2022, Virtual Event, 17–19 May 2022; Springer: Berlin, Germany, 2022; pp. 69–86.

- Xie, J.; Towsey, M.; Eichinski, P.; Zhang, J.; Roe, P. Acoustic feature extraction using perceptual wavelet packet decomposition for frog call classification. In Proceedings of the 2015 IEEE 11th International Conference on e-Science, Munich, Germany, 31 August–4 September 2015; pp. 237–242.

- Priyadarshani, N.; Marsland, S.; Castro, I.; Punchihewa, A. Birdsong denoising using wavelets. PLoS ONE 2016, 11, e0146790.

- Dai, Y.; Gieseke, F.; Oehmcke, S.; Wu, Y.; Barnard, K. Attentional feature fusion. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2021; pp. 3560–3569.

- Linderman, G.C.; Rachh, M.; Hoskins, J.G.; Steinerberger, S.; Kluger, Y. Efficient algorithms for t-distributed stochastic neighborhood embedding. arXiv 2017, arXiv:1712.09005.

- Zhang, Q.; Hu, S.; Tang, L.; Deng, R.; Yang, C.; Zhou, G.; Chen, A. SDFIE-NET–A self-learning dual-feature fusion information capture expression method for birdsong recognition. Appl. Acoust. 2024, 221, 110004.

- Kendall, A.; Gal, Y.; Cipolla, R. Multi-task learning using uncertainty to weigh losses for scene geometry and semantics. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7482–7491.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).