Criteria Analysis for the Selection of a Generative Artificial Intelligence Tool for Academic Research Based on an Improved Group DEMATEL Method

Abstract

1. Introduction

- (1) A review of evaluation criteria relevant to the selection of GenAI tools for academic research.

- (2) An IDEMATEL method that enhances the classical DEMATEL approach.

- (3) An application of the IDEMATEL method to analyze interdependencies among criteria in selecting a GenAI tool for academic research.

2. Research on Evaluation and Analysis of Criteria for GenAI Tools Based on Multi-Criteria Methods

2.1. Comparative Analysis of the Use of Multi-Criteria Decision-Making (MCDM) Methods in the Evaluation of GenAI Tools

2.2. Criteria for Selecting GenAI Tools

3. The Selection of DEMATEL Method for GenAI Tools Criteria Analysis

4. The IDEMATEL Method

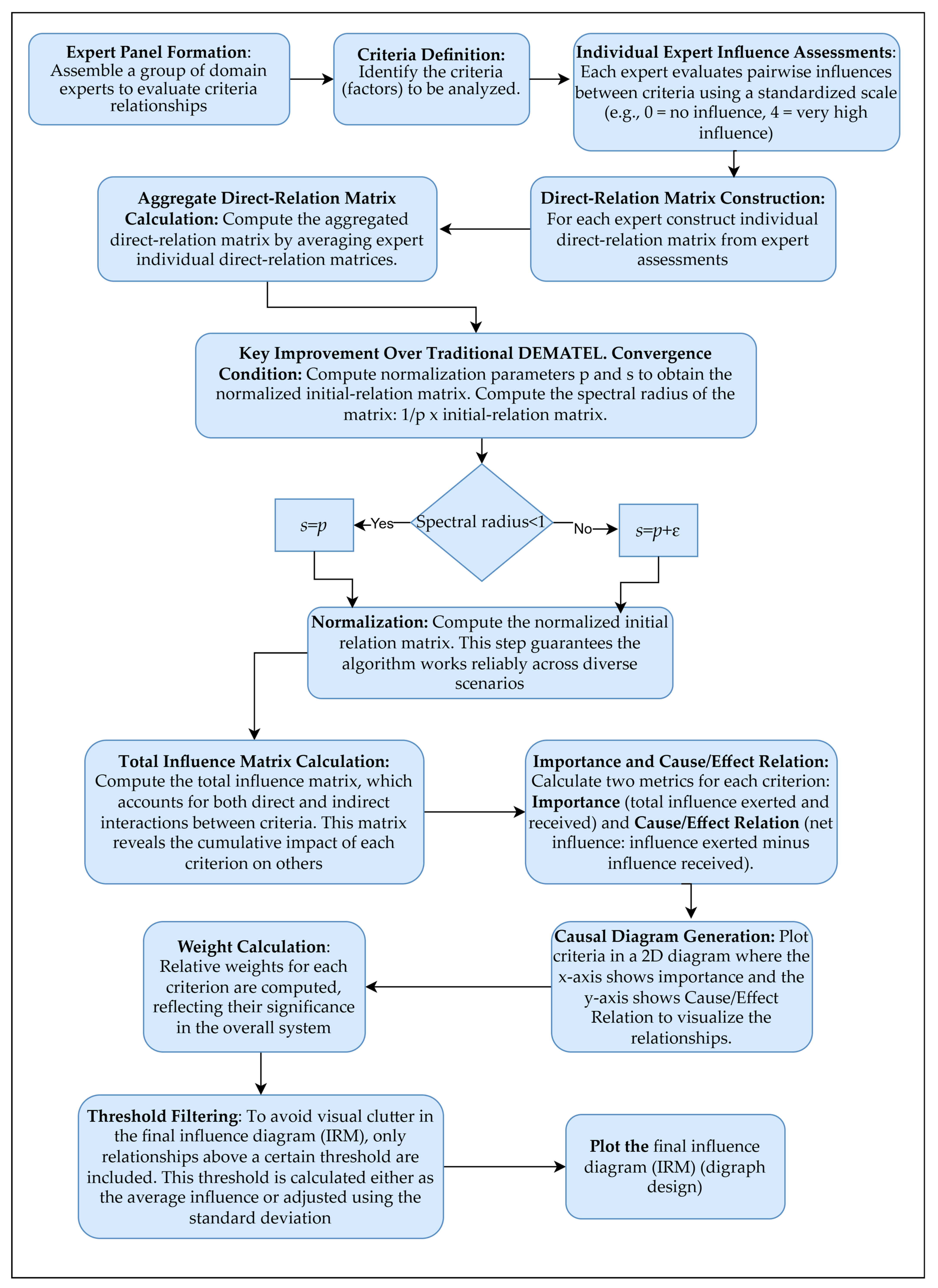

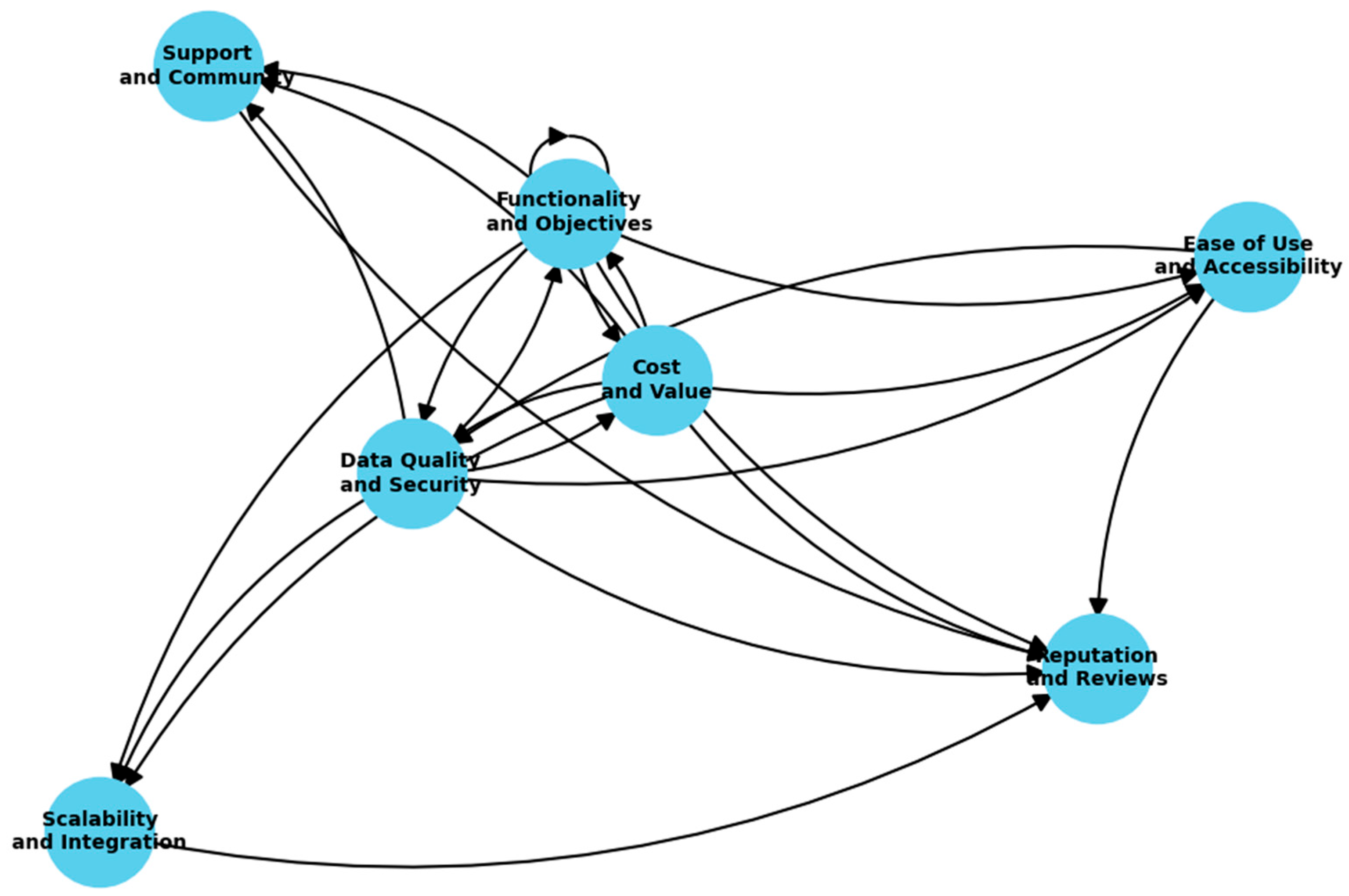

5. The IDEMATEL Algorithm

6. An Application of the IDEMATEL Method for Evaluation and Analysis of Criteria Used in Selection of GenAI Tools for Academic Research

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Some Conditions That Imply the Powers of a Square Matrix Tend to Zero

- the norm that is and

- the norm that is .

- (i) If for every then the powers of matrix tend to zero.

- (ii) If for some then the powers of matrix tend to zero.
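As a numerical illustration of this kind of result, the sketch below uses a familiar sufficient condition, assumed here rather than taken from the statements above: if every absolute row sum of a square matrix is below 1, its powers tend to zero. The 3×3 matrix `M` is hypothetical and chosen only so that its largest row sum is 0.9.

```python
def mat_mul(a, b):
    """Plain-Python product of two square matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def max_row_sum(a):
    """Maximum absolute row sum (the infinity norm of the matrix)."""
    return max(sum(abs(x) for x in row) for row in a)


# Hypothetical nonnegative matrix; row sums are 0.9, 0.6 and 0.8.
M = [
    [0.0, 0.5, 0.4],
    [0.3, 0.0, 0.3],
    [0.2, 0.6, 0.0],
]

power = M
norms = []  # norms[k] holds the infinity norm of M^(k+1)
for _ in range(200):
    norms.append(max_row_sum(power))
    power = mat_mul(power, M)

print(f"norm of M^1   = {norms[0]:.3f}")
print(f"norm of M^200 = {norms[-1]:.3e}")
```

Because the norm is submultiplicative, the norm of the k-th power is bounded by 0.9 to the power k, which is why the printed value for the 200th power is negligible.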

References

- Panda, S.; Kaur, D.N. Exploring the role of generative AI in academia: Opportunities and challenges. IP Indian J. Libr. Sci. Inf. Technol. 2024, 9, 12–23.

- Kalota, F. A primer on generative artificial intelligence. Educ. Sci. 2024, 14, 172.

- Akpan, I.J.; Kobara, Y.M.; Owolabi, J.; Akpan, A.A.; Offodile, O.F. Conversational and generative artificial intelligence and human–chatbot interaction in education and research. Int. Trans. Oper. Res. 2025, 32, 1251–1281.

- Andersen, J.P.; Degn, L.; Fishberg, R.; Graversen, E.K.; Horbach, S.P.; Schmidt, E.K.; Sørensen, M.P. Generative artificial intelligence (GenAI) in the research process: A survey of researchers’ practices and perceptions. Technol. Soc. 2025, 81, 102813.

- Gabus, A.; Fontela, E. World Problems: An Invitation to Further Thought Within the Framework of DEMATEL; Battelle Geneva Research Center: Geneva, Switzerland, 1972.

- Pinzolits, R. AI in academia: An overview of selected tools and their areas of application. MAP Educ. Humanit. 2024, 4, 37–50.

- Danler, M.; Hackl, W.O.; Neururer, S.B.; Pfeifer, B. Quality and effectiveness of AI tools for students and researchers for scientific literature review and analysis. In Proceedings of the dHealth 2024 Conference, Vienna, Austria, 7–8 May 2024; pp. 203–208.

- Šarlauskienė, L.; Dagytė, S. Criteria for selecting artificial intelligence tools. Innov. Publ. Print. Multimed. Technol. 2024, 100–106.

- Al-kfairy, M. Factors impacting the adoption and acceptance of ChatGPT in educational settings: A narrative review of empirical studies. Appl. Syst. Innov. 2024, 7, 110.

- Aydogmus, H.Y.; Aydogmus, U. Evaluation of artificial intelligence tools for universities with fuzzy multi-criteria decision-making methods. In AI Adoption and Diffusion in Education; Sart, G., Sezgin, F.H., Eds.; IGI Global Scientific Publishing: Hershey, PA, USA, 2025; pp. 153–178.

- Bukar, U.A.; Sayeed, M.S.; Razak, S.F.A.; Yogarayan, S.; Sneesl, R. Decision-making framework for the utilization of generative artificial intelligence in education: A case study of ChatGPT. IEEE Access 2024, 12, 95368–95384.

- Huang, Q.; Lv, C.; Lu, L.; Tu, S. Evaluating the quality of AI-generated digital educational resources for university teaching and learning. Systems 2025, 13, 174.

- Bukar, U.A.; Sayeed, M.S.; Razak, S.F.A.; Yogarayan, S.; Sneesl, R. Prioritizing ethical conundrums in the utilization of ChatGPT in education through an analytical hierarchical approach. Educ. Sci. 2024, 14, 959.

- Agarwal, A. Optimizing employee roles in the era of generative AI: A multi-criteria decision-making analysis of co-creation dynamics. Cogent Soc. Sci. 2025, 11, 2476737.

- Gupta, S.; Kaur, S.; Gupta, M.; Singh, T. AI empowered academia: A fuzzy prioritization framework for academic challenges. J. Int. Educ. Bus. 2024; ahead-of-print.

- Ilieva, G. Extension of interval-valued hesitant Fermatean fuzzy TOPSIS for evaluating and benchmarking of generative AI chatbots. Electronics 2025, 14, 555.

- Chakrabortty, R.K.; Abdel-Basset, M.; Ali, A.M. A multi-criteria decision analysis model for selecting an optimum customer service chatbot under uncertainty. Decis. Anal. J. 2023, 6, 100168.

- Yang, L.; Wang, J. Factors influencing initial public acceptance of integrating the ChatGPT-type model with government services. Kybernetes 2024, 53, 4948–4975.

- Fontoura, L.; de Mattos Nascimento, D.L.; Neto, J.V.; Caiado, R.G.G. Energy Gen-AI technology framework: A perspective of energy efficiency and business ethics in operation management. Technol. Soc. 2025, 81, 102847.

- Gupta, R.; Rathore, B. Exploring the generative AI adoption in service industry: A mixed-method analysis. J. Retail. Consum. Serv. 2024, 81, 103997.

- Dergaa, I.; Chamari, K.; Żmijewski, P.; Saad, B. From human writing to artificial intelligence generated text: Examining the prospects and potential threats of ChatGPT in academic writing. Biol. Sport 2023, 40, 615–622.

- Oyelude, A. Artificial intelligence (AI) tools for academic research. Libr. Hi Tech News 2024, 41, 18–20.

- Burger, B.; Kanbach, D.K.; Kraus, S.; Breier, M.; Corvello, V. On the use of AI-based tools like ChatGPT to support management research. Eur. J. Innov. Manag. 2023, 26, 233–241.

- Olu-Ajayi, R.; Alaka, H.; Sunmola, F.; Ajayi, S.; Mporas, I. Statistical and artificial intelligence-based tools for building energy prediction: A systematic literature review. IEEE Trans. Eng. Manag. 2024, 71, 14733–14753.

- Ekundayo, T.; Khan, Z.; Nuzhat, S. Evaluating the influence of artificial intelligence on scholarly research: A study focused on academics. Hum. Behav. Emerg. Technol. 2024, 2024, 8713718.

- Polonsky, M.; Rotman, J. Should artificial intelligent agents be your co-author? Arguments in favour, informed by ChatGPT. Australas. Mark. J. 2023, 31, 91–96.

- Iorliam, A.; Ingio, J.A. A comparative analysis of generative artificial intelligence tools for natural language processing. J. Comput. Theor. Appl. 2024, 1, 311–325.

- Zawacki-Richter, O.; Marín, V.; Bond, M.; Gouverneur, F. Systematic review of research on artificial intelligence applications in higher education—Where are the educators? Int. J. Educ. Technol. High. Educ. 2019, 16, 39.

- Bhattamisra, S.; Banerjee, P.; Gupta, P.; Mayuren, J.; Patra, S.; Candasamy, M. Artificial intelligence in pharmaceutical and healthcare research. Big Data Cogn. Comput. 2023, 7, 10.

- Michalak, R. Fostering undergraduate academic research: Rolling out a tech stack with AI-powered tools in a library. J. Libr. Admin. 2024, 64, 335–346.

- Saaty, T.L. A scaling method for priorities in hierarchical structures. J. Math. Psychol. 1977, 15, 234–281.

- Saaty, T.L. The Analytic Hierarchy Process; McGraw-Hill Press: New York, NY, USA, 1980.

- Saaty, T.L. Decision Making with Dependence and Feedback: The Analytic Network Process; RWS Publications: Pittsburgh, PA, USA, 2001.

- Rezaei, J. Best-worst multi-criteria decision-making method. Omega 2015, 53, 49–57.

- Rădulescu, C.Z.; Rădulescu, M. Group decision support approach for cloud quality of service criteria weighting. Stud. Inform. Control 2018, 27, 275–284.

- Wu, H.H.; Tsai, Y.N. An integrated approach of AHP and DEMATEL methods in evaluating the criteria of auto spare parts industry. Int. J. Syst. Sci. 2012, 43, 2114–2124.

- Tavana, M.; Soltanifar, M.; Santos-Arteaga, F.J.; Sharafi, H. Analytic hierarchy process and data envelopment analysis: A match made in heaven. Expert Syst. Appl. 2023, 223, 119902.

- Srđević, Z.; Srđević, B.; Ždero, S.; Ilić, M. How MCDM method and the number of comparisons influence the priority vector. Comput. Sci. Inf. Syst. 2022, 19, 251–275.

- Tan, Y.; Wang, X.; Liu, X.; Zhang, S.; Li, N.; Liang, J.; Hu, D.; Yang, Q. Comparison of AHP and BWM methods based on ArcGIS for ecological suitability assessment of Panax notoginseng in Yunnan Province, China. Ind. Crops Prod. 2023, 199, 116737.

- Muerza, V.; Milenkovic, M.; Larrodé, E.; Bojovic, N. Selection of an international distribution center location: A comparison between stand-alone ANP and DEMATEL-ANP applications. Res. Transp. Bus. Manag. 2024, 56, 101135.

- Bharti, S.S.; Prasad, K.; Sudha, S.; Kumari, V. Prioritisation of factors for artificial intelligence-based technology adoption by banking customers in India: Evidence using the DEMATEL approach. Appl. Finance Lett. 2023, 12, 2–22.

- Sharma, M.; Luthra, S.; Joshi, S.; Kumar, A. Implementing challenges of artificial intelligence: Evidence from public manufacturing sector of an emerging economy. Gov. Inf. Q. 2022, 39, 101624.

- Alinezhad, A.; Khalili, J. DEMATEL method. In New Methods and Applications in Multiple Attribute Decision Making (MADM); International Series in Operations Research & Management Science; Springer: Cham, Switzerland, 2019; Volume 277, pp. 215–221.

- Lee, H.-S.; Tzeng, G.-H.; Yeih, W.; Wang, Y.-J.; Yang, S.-C. Revised DEMATEL: Resolving the infeasibility of DEMATEL. Appl. Math. Model. 2013, 37, 6746–6757.

- Chen, C.Y.; Huang, J.J. A novel DEMATEL approach by considering normalization and invertibility. Symmetry 2022, 14, 1109.

| References | Problem | Multi-Criteria Method | Criteria | Domain |

|---|---|---|---|---|

| [10] | Evaluate AI tools that can be used in universities | Intuitionistic Fuzzy Multi-Criteria Decision-Making | Perceived ease of use, perceived usefulness, personalization, interaction, trust | Education |

| [11] | Whether to impose restrictions or legislate in the usage of GenAI | Analytic Hierarchy Process (AHP) | Copyright, legal, and compliance issues; privacy and confidentiality; academic integrity; incorrect reference and citation practices; safety and security concerns | Education |

| [12] | An evaluation index system for AI-generated digital educational resources | A combination of the Delphi method and AHP | Content characteristics, expression characteristics, user characteristics, technical characteristics | Education |

| [13] | Identifies and categorizes ethical concerns associated with ChatGPT | AHP | Risk, reward, resilience | Education |

| [14] | Selection of generative artificial intelligence GenAI chatbots | Technique for Order Preference by Similarity to Ideal Solution (TOPSIS) | Conversational ability, user experience, integration capability, price | Various applications |

| [15] | A decision-making framework for chatbot selection in the telecommunication industry | Combined Compromise Solution (CoCoSo) and AHP single-valued neutrosophic sets | Security, speed, responsiveness, satisfaction, reliability, assurance, tangibility, engagement, and empathy | Telecommunication industry |

| [16] | Identifying critical decision points and alternatives in integrating generative AI through a systematic literature review | AHP | Innovativeness, productivity enhancement from investment made, change in customer experience, data safety and ethics, organizational adaptability | Organizations |

| [17] | The barriers which are impeding the implementation of Generative AI Technologies, such as ChatGPT in the educational landscape | Fuzzy AHP | Ethical concerns, technological concerns, regulatory concerns, societal concerns, trust issues, human values concerns, psychological concerns | Education |

| [18] | Integrating the Chat Generative Pre-Trained Transformer-type (ChatGPT-type) model with government services | LDA Grey-DEMATEL method and TAISM | Data layer, technology layer, user layer, service layer, environment layer | Government services |

| [19] | GenAI a critical solution for improving energy efficiency amid increasing demand for sustainable energy at the operational and supply chain management levels | Fuzzy DEMATEL | Integrity, transparency and accountability, fairness and bias mitigation, privacy and data protection, compliance with laws and regulations, sustainability and environmental responsibility, employment and workforce | Energy and business |

| [20] | Adoption of GenAI tools | F-DEMATEL and FAHP | Ethical, technological, regulations and policies, cost, and human resources | Business, service organizations |

| Method | Core Mechanism | Features | Strengths | Weaknesses |
|---|---|---|---|---|
| AHP | Hierarchical pairwise comparisons using Saaty’s 1–9 scale to derive weights. | | | |
| ANP | Extends AHP to account for interdependencies among criteria using feedback loops. | | | |
| DEMATEL | Builds causal relationships between criteria using influence matrices. | | | |
| BWM | Compares all criteria against predefined “best” and “worst” anchors. | | | |

| Method | Number of Comparisons Required | Criteria Dependency Consideration | Scale Used |

|---|---|---|---|

| AHP | n(n − 1)/2 | None | Saaty’s 1–9 Scale |

| ANP | n(n − 1)/2 | Interdependencies | Saaty’s 1–9 Scale (or extensions) |

| DEMATEL | n(n − 1) | Causal Relationships | Integer Scale (e.g., 0–4 or 0–5) |

| BWM | 2n − 3 | None | 1–9 Scale |
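To make the comparison counts in the table above concrete, the following minimal sketch evaluates each formula for a given number of criteria. The function name `comparisons_required` is illustrative, and the choice of n = 7 simply mirrors the seven criteria analyzed in this paper.

```python
def comparisons_required(n: int) -> dict[str, int]:
    """Expert judgments needed for n criteria, using the formulas in the table."""
    return {
        "AHP": n * (n - 1) // 2,   # one judgment per unordered pair
        "ANP": n * (n - 1) // 2,   # same count as AHP, as listed in the table
        "DEMATEL": n * (n - 1),    # one judgment per ordered pair (i influences j)
        "BWM": 2 * n - 3,          # best-to-others plus others-to-worst
    }


counts = comparisons_required(7)
for method, k in counts.items():
    print(f"{method}: {k} comparisons")
```

For seven criteria, DEMATEL asks for twice as many judgments as AHP because influence is directional: the effect of one criterion on another is rated separately from the reverse effect.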

| Criterion | Symbol | Description |

|---|---|---|

| Functionality and Objectives | FO | Alignment of the tool’s features with specific research tasks (literature review, data analysis, writing, etc.). |

| Ease of Use and Accessibility | UA | User-friendliness for researchers with varying technical expertise; intuitive interfaces; compatibility across platforms. |

| Scalability and Integration | SI | The tool’s ability to handle increasing data/demands and integrate with existing research workflows and systems. |

| Data Quality and Security | QS | Ensuring data accuracy, reliability, and protection against breaches; maintaining privacy. |

| Cost and Value | CV | Balancing the cost (subscriptions, usage charges) with the value provided (efficiency, accuracy, improved outcomes). |

| Support and Community | SC | Availability of technical support, training resources, and a community of users for assistance and best practices. |

| Reputation and Reviews | RR | Overall reputation of the AI tool and vendor; feedback from other users. |

| Criteria | FO | UA | SI | QS | CV | SC | RR |

|---|---|---|---|---|---|---|---|

| FO | 0.000 | 3.000 | 3.000 | 4.000 | 4.000 | 2.000 | 3.000 |

| UA | 2.667 | 0.000 | 2.000 | 3.000 | 2.000 | 2.000 | 2.333 |

| SI | 2.667 | 2.000 | 0.000 | 2.000 | 2.000 | 2.000 | 4.000 |

| QS | 3.333 | 3.000 | 3.000 | 0.000 | 3.000 | 2.333 | 2.667 |

| CV | 3.667 | 2.000 | 2.000 | 3.000 | 0.000 | 4.000 | 3.333 |

| SC | 1.000 | 2.000 | 2.000 | 2.000 | 2.000 | 0.000 | 3.667 |

| RR | 2.000 | 2.000 | 2.000 | 2.000 | 2.000 | 2.000 | 0.000 |

| Criteria | FO | UA | SI | QS | CV | SC | RR |

|---|---|---|---|---|---|---|---|

| FO | 0.000000 | 0.157895 | 0.157895 | 0.210526 | 0.210526 | 0.105263 | 0.157895 |

| UA | 0.140351 | 0.000000 | 0.105263 | 0.157895 | 0.105263 | 0.105263 | 0.122807 |

| SI | 0.140351 | 0.105263 | 0.000000 | 0.105263 | 0.105263 | 0.105263 | 0.210526 |

| QS | 0.175439 | 0.157895 | 0.157895 | 0.000000 | 0.157895 | 0.122807 | 0.140351 |

| CV | 0.192982 | 0.105263 | 0.105263 | 0.157895 | 0.000000 | 0.210526 | 0.175439 |

| SC | 0.052632 | 0.105263 | 0.105263 | 0.105263 | 0.105263 | 0.000000 | 0.192982 |

| RR | 0.105263 | 0.105263 | 0.105263 | 0.105263 | 0.105263 | 0.105263 | 0.000000 |
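The second matrix above is consistent with dividing the group direct-influence matrix by 19, which is both its largest row sum (FO) and its largest column sum (RR). The sketch below reproduces that scaling under the assumption that the classical rule (divide by the larger of the maximum row sum and the maximum column sum) applies; the exact IDEMATEL normalization rule is not restated here, and the names `A`, `D`, and `normalize` are illustrative.

```python
CRITERIA = ["FO", "UA", "SI", "QS", "CV", "SC", "RR"]

# Group direct-influence matrix, copied from the first 7x7 table above.
A = [
    [0.000, 3.000, 3.000, 4.000, 4.000, 2.000, 3.000],
    [2.667, 0.000, 2.000, 3.000, 2.000, 2.000, 2.333],
    [2.667, 2.000, 0.000, 2.000, 2.000, 2.000, 4.000],
    [3.333, 3.000, 3.000, 0.000, 3.000, 2.333, 2.667],
    [3.667, 2.000, 2.000, 3.000, 0.000, 4.000, 3.333],
    [1.000, 2.000, 2.000, 2.000, 2.000, 0.000, 3.667],
    [2.000, 2.000, 2.000, 2.000, 2.000, 2.000, 0.000],
]


def normalize(matrix):
    """Scale by the larger of the max row sum and max column sum (assumed rule)."""
    row_sums = [sum(row) for row in matrix]
    col_sums = [sum(col) for col in zip(*matrix)]
    s = max(max(row_sums), max(col_sums))
    return [[x / s for x in row] for row in matrix], s


D, s = normalize(A)
print(f"scaling factor: {s:.3f}")
for name, row in zip(CRITERIA, D):
    print(name, " ".join(f"{x:.6f}" for x in row))
```

Entries such as 2.667 are rounded to three decimals in the source table, so the recomputed values can differ from the published normalized matrix in the fifth decimal place.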

| Criteria | FO | UA | SI | QS | CV | SC | RR |

|---|---|---|---|---|---|---|---|

| FO | 0.615483 | 0.700611 | 0.700611 | 0.809385 | 0.777539 | 0.672040 | 0.868314 |

| UA | 0.586781 | 0.429056 | 0.524294 | 0.617193 | 0.552236 | 0.529353 | 0.664188 |

| SI | 0.592153 | 0.529982 | 0.434743 | 0.582269 | 0.558179 | 0.536459 | 0.743810 |

| QS | 0.710256 | 0.653214 | 0.653214 | 0.580363 | 0.687035 | 0.634654 | 0.794699 |

| CV | 0.730663 | 0.624210 | 0.624210 | 0.728502 | 0.563766 | 0.715220 | 0.838054 |

| SC | 0.456101 | 0.467019 | 0.467019 | 0.509966 | 0.488738 | 0.380419 | 0.651403 |

| RR | 0.493836 | 0.463589 | 0.463589 | 0.508177 | 0.487105 | 0.470331 | 0.480049 |
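The third matrix above agrees with the classical DEMATEL total-relation matrix T = D(I − D)⁻¹ computed from the normalized matrix D. The sketch below checks this in plain Python by solving (I − D)T = D with Gauss-Jordan elimination. It assumes the classical formula; the function name `total_relation` is illustrative and this is not presented as the authors' implementation.

```python
# Normalized direct-influence matrix D, copied from the second 7x7 table above.
D = [
    [0.000000, 0.157895, 0.157895, 0.210526, 0.210526, 0.105263, 0.157895],
    [0.140351, 0.000000, 0.105263, 0.157895, 0.105263, 0.105263, 0.122807],
    [0.140351, 0.105263, 0.000000, 0.105263, 0.105263, 0.105263, 0.210526],
    [0.175439, 0.157895, 0.157895, 0.000000, 0.157895, 0.122807, 0.140351],
    [0.192982, 0.105263, 0.105263, 0.157895, 0.000000, 0.210526, 0.175439],
    [0.052632, 0.105263, 0.105263, 0.105263, 0.105263, 0.000000, 0.192982],
    [0.105263, 0.105263, 0.105263, 0.105263, 0.105263, 0.105263, 0.000000],
]


def total_relation(d):
    """Return T with (I - D) T = D, i.e. T = D + D^2 + D^3 + ... when that series converges."""
    n = len(d)
    # Augmented matrix [I - D | D]; reducing the left block to I leaves T on the right.
    aug = [
        [(1.0 if i == j else 0.0) - d[i][j] for j in range(n)] + list(d[i])
        for i in range(n)
    ]
    for col in range(n):
        # Partial pivoting for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("I - D is numerically singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


T = total_relation(D)
for row in T:
    print(" ".join(f"{x:.6f}" for x in row))
```

Solving the linear system avoids forming an explicit inverse and fails loudly if I − D is singular, which is the situation the conditions in Appendix A are meant to rule out.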

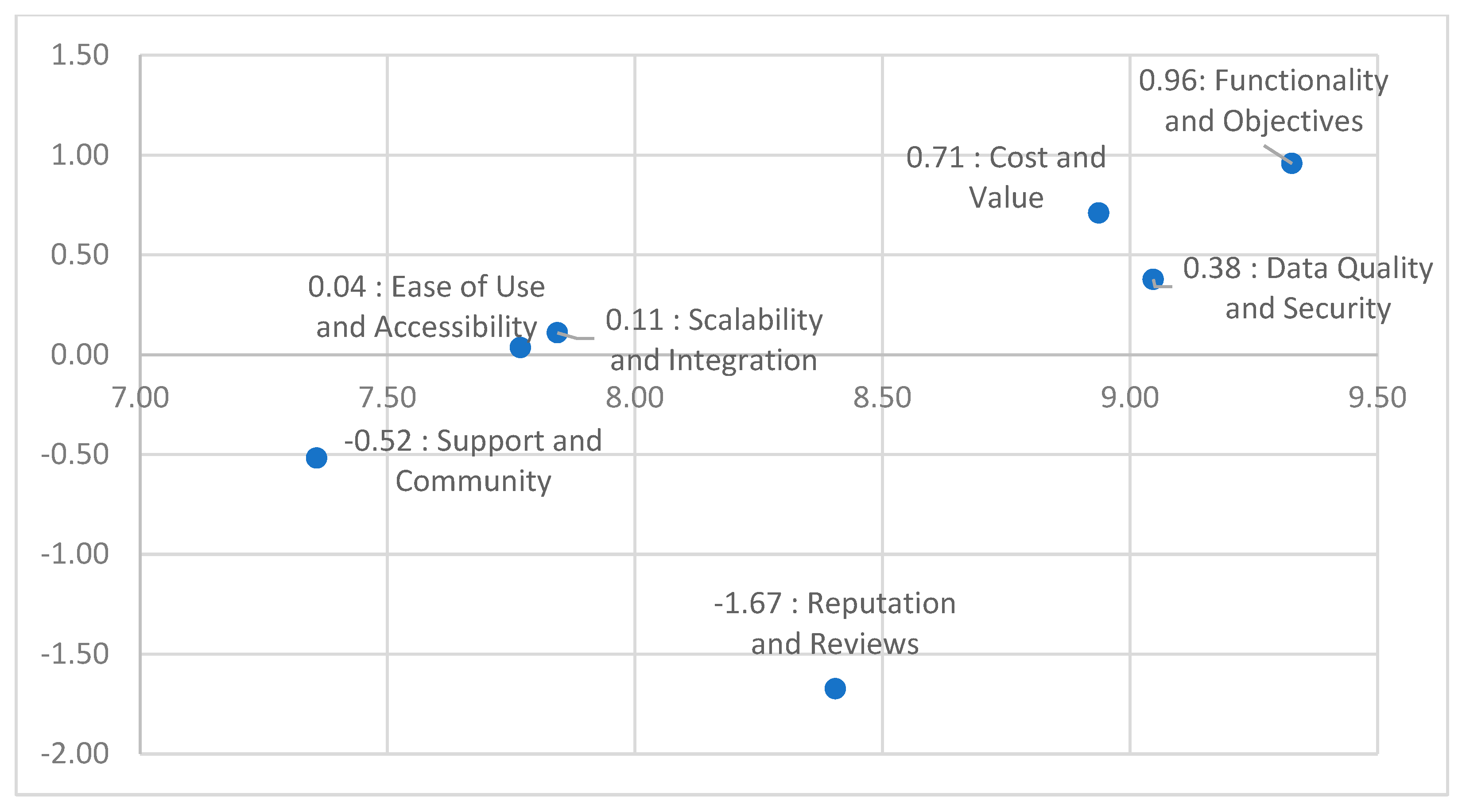

| Criteria Symbols | Criteria | Row sum | Column sum | r+ | r− | r+ Rank | w |
|---|---|---|---|---|---|---|---|
| FO | Functionality and Objectives | 5.14398 | 4.18527 | 9.32926 | 0.95871 | 1 | 0.15893 |
| UA | Ease of Use and Accessibility | 3.90310 | 3.86768 | 7.77078 | 0.03542 | 6 | 0.13238 |
| SI | Scalability and Integration | 3.97760 | 3.86768 | 7.84528 | 0.10991 | 5 | 0.13365 |
| QS | Data Quality and Security | 4.71343 | 4.33586 | 9.04929 | 0.37758 | 2 | 0.15416 |
| CV | Cost and Value | 4.82463 | 4.11460 | 8.93922 | 0.71003 | 3 | 0.15229 |
| SC | Support and Community | 3.42067 | 3.93847 | 7.35914 | −0.51781 | 7 | 0.12537 |
| RR | Reputation and Reviews | 3.36667 | 5.04052 | 8.40719 | −1.67384 | 4 | 0.14322 |
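The values in the table above can be recomputed from the 7×7 total-relation matrix: the first two numeric columns match its row and column sums, r+ is their sum, r− is their difference, and w equals r+ divided by the total of all r+ values. The sketch below is a check under those observations; the variable names are illustrative, and the cause/effect reading in the comments is the standard DEMATEL interpretation rather than a statement quoted from this paper.

```python
CRITERIA = ["FO", "UA", "SI", "QS", "CV", "SC", "RR"]

# Total-relation matrix, copied from the third 7x7 table above.
T = [
    [0.615483, 0.700611, 0.700611, 0.809385, 0.777539, 0.672040, 0.868314],
    [0.586781, 0.429056, 0.524294, 0.617193, 0.552236, 0.529353, 0.664188],
    [0.592153, 0.529982, 0.434743, 0.582269, 0.558179, 0.536459, 0.743810],
    [0.710256, 0.653214, 0.653214, 0.580363, 0.687035, 0.634654, 0.794699],
    [0.730663, 0.624210, 0.624210, 0.728502, 0.563766, 0.715220, 0.838054],
    [0.456101, 0.467019, 0.467019, 0.509966, 0.488738, 0.380419, 0.651403],
    [0.493836, 0.463589, 0.463589, 0.508177, 0.487105, 0.470331, 0.480049],
]

row_sums = [sum(row) for row in T]        # influence each criterion exerts
col_sums = [sum(col) for col in zip(*T)]  # influence each criterion receives

prominence = [r + c for r, c in zip(row_sums, col_sums)]  # r+ in the table
relation = [r - c for r, c in zip(row_sums, col_sums)]    # r- in the table

total = sum(prominence)
weights = [p / total for p in prominence]                 # w in the table

# Rank criteria by descending prominence (1 = most prominent).
order = sorted(range(len(CRITERIA)), key=lambda i: -prominence[i])
rank = {CRITERIA[i]: pos + 1 for pos, i in enumerate(order)}

for i, name in enumerate(CRITERIA):
    # Standard reading: positive relation = net cause, negative = net effect.
    group = "cause" if relation[i] > 0 else "effect"
    print(f"{name}: r+={prominence[i]:.5f} r-={relation[i]:.5f} "
          f"rank={rank[name]} w={weights[i]:.5f} ({group})")
```

Under this reading, SC and RR are the only criteria with a negative r−, so they are net receivers of influence, while FO has both the highest prominence and the largest positive r−.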

| Criteria Symbols | Criteria | IDEMATEL r+ | IDEMATEL r− | DEMATEL r+ | DEMATEL r− | Revised DEMATEL (Lee) r+ | Revised DEMATEL (Lee) r− |
|---|---|---|---|---|---|---|---|
| FO | Functionality and Objectives | 9.32926 | 0.95871 | 9.32926 | 0.95871 | 9.32671 | 0.95845 |
| UA | Ease of Use and Accessibility | 7.77078 | 0.03542 | 7.77078 | 0.03542 | 7.76866 | 0.03541 |
| SI | Scalability and Integration | 7.84528 | 0.10991 | 7.84528 | 0.10991 | 7.84313 | 0.10989 |
| QS | Data Quality and Security | 9.04929 | 0.37758 | 9.04929 | 0.37758 | 9.04683 | 0.37748 |
| CV | Cost and Value | 8.93922 | 0.71003 | 8.93922 | 0.71003 | 8.93679 | 0.70984 |
| SC | Support and Community | 7.35914 | −0.51781 | 7.35914 | −0.51781 | 7.35713 | −0.51767 |
| RR | Reputation and Reviews | 8.40719 | −1.67384 | 8.40719 | −1.67384 | 8.40490 | −1.67340 |

| Criterion | ChatGPT (OpenAI) | Perplexity | Scite.ai (Scite, New York, NY, USA) | Gemini (Google DeepMind) | Claude (Anthropic) |

|---|---|---|---|---|---|

| Functionality and Objectives | 4.5 (Conversational AI for text/code generation, no citations) | 4.8 (Real-time citations + file analysis) | 5 (Smart citation context analysis) | 4.7 (Multimodal AI for reasoning across text, images, audio) | 4.6 (Data analysis, and ethical automation) |

| Cost and Value | $20/month for Plus (GPT-4/4.5), free basic tier | $20/month (Pro plan), free limited use | $10–$50/month (Institutional rates, academic/enterprise access needed) | Free tier + enterprise pricing | Custom pricing |

| Data Quality and Security | 4 (Pre-2023 data, no source verification, security respected) | 4.9 (Links to real sources, source citations) | 5 (1.2B+ verified citations, very strong on safety) | 4.5 (Multimodal accuracy) | 4.7 (Content moderation) |

| Ease of Use and Accessibility | 4.5 (Web, app, and API interfaces, user-friendly design) | 4.8 (Browser extensions, simple interface) | 4.6 (intuitive dashboard; browser extensions) | 4.7 (Integrated with Google ecosystem) | 4.5 (Web, API, and enterprise solutions, Multilingual support) |

| Scalability and Integration | 4 (API for developers, plug-ins for various apps, API rate limits) | 4.3 (Collaboration features, Sonar API access) | 4.8 (Integrates with Zotero, EndNote, browser plug-ins) | 5 (Native integration with Google services) | 4.4 (API and enterprise workflow integration) |

| Reputation and Reviews | 4.6 (Widely adopted, 100M+ users) | 4.8 (Speed, accuracy, and citation transparency) | 4.9 (Highly regarded among researchers for citation analysis, context, and integration) | 4.7 (Recognized for multimodal prowess, trusted infrastructure, Google credibility) | 4.5 (High ethical standards, top-rated for safety) |

| Support and Community | 4 (OpenAI forums, support channels, Community forums) | 4.5 (Priority support for Pro, active community) | 4.7 (Institutional webinars, documentation, responsive support; growing reputation in academia) | 4.8 (Google Cloud support, large user base) | 4.6 (Dedicated enterprise support) |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Radulescu, C.Z.; Radulescu, M. Criteria Analysis for the Selection of a Generative Artificial Intelligence Tool for Academic Research Based on an Improved Group DEMATEL Method. Appl. Sci. 2025, 15, 5416. https://doi.org/10.3390/app15105416
