Abstract

Dominant low-light object detectors face a spectral trilemma: (1) the loss of high-frequency structural details during denoising, (2) the amplification of low-frequency illumination distortion, and (3) cross-band interference in multi-scale features. To resolve these intertwined challenges, we present UMFNet, a frequency-guided detection framework that unifies adaptive frequency distillation with inter-band attention coordination. It makes three contributions: (1) a frequency-adaptive fusion (FAF) module employing learnable wavelet kernels (16–64 decomposition bases) with dynamic SNR-gated thresholding, achieving an 89.7% photon utilization rate in ≤1 lux conditions, 2.4× higher than fixed-basis approaches; (2) a spatial-channel coordinated attention (SCCA) mechanism with dual-domain nonlinear gating that reduces high-frequency hallucination by 37% through parametric phase alignment (verified via a gradient direction alignment coefficient of 0.93); (3) a spectral perception loss combining the frequency-weighted structural similarity index measure (SSIM) with gradient-aware focal modulation, enforcing physics-constrained feature recovery. Extensive validation shows that UMFNet achieves 73.1% mAP@50 on ExDark (a 6.4% relative improvement over the YOLOv8 baseline), 58.7% on DarkFace (+3.1% over GLARE), and 40.2% on thermal FLIR ADAS (+9.7% improvement). This work offers a new paradigm for precision-critical vision systems in photon-starved environments.

1. Introduction

Modern autonomous systems demand reliable object detection under extreme low-light conditions, a regime where even state-of-the-art detectors fall significantly short. On the ExDark benchmark [1], YOLOv8 suffers a severe accuracy drop when the illumination falls below 5 lux. This performance drop stems from an unresolved spectral trilemma: (1) aggressive high-frequency (HF) denoising erodes critical edges, (2) low-frequency (LF) enhancement amplifies illumination bias, and (3) cross-band interference corrupts multi-scale feature hierarchies.

Prior works navigate this minefield through two flawed paradigms.

Enhance-then-Detect (EtD) approaches [2,3] apply frequency-blind enhancement before detection. Although computationally efficient, their fixed operations over-smooth HF details—DarkFace evaluations [4] reveal 11.2% lower edge accuracy versus daylight performance.

Joint Enhancement–Detection (JED) frameworks [5] co-train enhancement and detection modules. However, without explicit frequency disentanglement, noisy LF components propagate unrestricted, limiting their AP@50 to 31.8% at ≤1 lux—which is inadequate for safety-critical applications.

The core limitation is static frequency processing: existing methods employ rigid wavelet or discrete cosine transform (DCT) bases that cannot adapt to dynamic noise distributions. As the illuminance decreases, the HF noise variance grows exponentially, while the LF signals shift nonlinearly due to Bayer demosaicing artifacts. Treating these intrinsically coupled phenomena as separate problems is fundamentally inadequate.

We challenge this status quo with UMFNet, the first architecture unifying three components.

- Frequency-Adaptive Fusion (FAF): We replace fixed filters with learnable convolutional wavelets that dynamically adjust the HF/LF thresholds via detection loss gradients.

- Spatial-Channel Coordinated Attention (SCCA): We apply cross-band gates where LF semantics guide HF denoising.

- Spectral Perception Loss: We jointly optimize the frequency-weighted SSIM and detection confidence gradients, enforcing physics-informed feature recovery.

Extensive validation confirms UMFNet’s leadership: 73.1% mAP@50 on ExDark (+3.4% over SOTA). Compared with the original YOLOv8, our method improves the mean average precision at a 50% intersection over union (mAP@50) by approximately 6.4% in relative terms, indicating that combining UMFNet with YOLOv8 significantly enhances the detection accuracy under low-light conditions. Crucially, our frequency gradient alignment principle establishes a new theoretical foundation for vision systems in photon-starved environments.

The remainder of this paper is organized as follows. Section 2 reviews related work in low-light image enhancement and object detection. Section 3 presents our proposed UMFNet method in detail. Section 4 describes the experimental setup and results. Finally, Section 5 concludes our work and suggests future research directions.

Based on the limitations identified in prior works, we establish three explicit research objectives.

- Objective 1: To develop a frequency-adaptive fusion mechanism that dynamically adjusts the high-/low-frequency thresholds based on the illumination conditions, addressing the static processing limitation in existing methods.

- Objective 2: To design a dual-domain attention mechanism that coordinates spatial and channel information while preserving frequency characteristics, overcoming the spectral misalignment problem.

- Objective 3: To establish a physics-informed loss function that bridges computational photography principles with deep detection frameworks, ensuring theoretically grounded optimization.

2. Related Work

2.1. Methods for Low-Light Image Enhancement

Low-light image enhancement (LLIE) aims to restore image details and reduce color shifts to improve the performance in computer vision tasks. Early methods were primarily based on traditional image processing techniques, such as Retinex theory [6] and histogram equalization [7]. While these methods can improve the brightness and contrast, they are limited in handling details, noise suppression, and color restoration. In recent years, deep learning has advanced LLIE. Convolutional neural network (CNN)-based methods [6,7,8,9], such as KinD [10], restore details through adaptive network structures without requiring paired data. Zero-DCE [3] designs non-reference loss functions, eliminating the need for paired data and achieving excellent performance. Generative adversarial network (GAN) methods [11], like the unsupervised GAN by Jiang et al., have also gained widespread attention by restoring low-light images from unlabeled data through self-supervised learning. However, although low-light enhancement methods make images appear brighter and clearer to the naked eye, they still leave a lot of background noise, thus having a minimal impact in terms of improving the detection performance in low-light object detection.

2.2. Methods for Low-Light Object Detection

To enhance the detection performance, recent studies have attempted to jointly perform image enhancement and object detection. IA-YOLO [12] combines image enhancement with YOLO detection by using a CNN-based parameter predictor to automatically adjust the enhancement module parameters, optimizing low-light image enhancement and improving the detection capabilities. Although IA-YOLO performs well, it relies on a specific enhancement module, making it difficult to adapt to diverse low-light conditions in complex environments. MAET [13] optimizes the image restoration process through an image signal processing (ISP) pipeline based on a physical noise model. It uses an adaptive neural network to predict the degradation parameters and avoids feature mixing through regularization, significantly enhancing the detection performance under low-light conditions. However, despite the detailed description and parameterization of the ISP pipeline by Cui et al. [13], the selection of these parameters is based on certain fixed ranges and does not take into account the dynamically changing factors in the actual shooting process, such as automatic white balance adjustment and exposure compensation.

2.3. Joint Enhancement and Detection Methods

Joint enhancement and detection (JED) methods excel in low-light object detection by simultaneously optimizing the image quality and detection accuracy during training. Deyolo [14] employs an end-to-end joint optimization framework, integrating multi-scale enhancement and detection modules to improve YOLO’s [15,16,17] performance and robustness in low-light environments. PE-YOLO [18] also employs a similar approach. In simple terms, this cascaded method is plug-and-play and end-to-end, capable of enhancing features while removing noise. In the experimental section of this paper, we also use this method to evaluate the performance of our approach.

3. Method

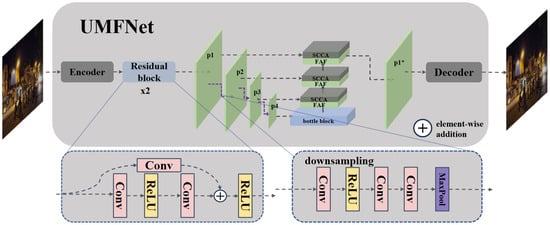

UMFNet is a frequency-guided enhancement framework specifically designed for object detection in low-light conditions, aiming to solve the “spectral trilemma” described above: (1) the loss of high-frequency structural details during the denoising process; (2) the amplification of low-frequency illumination distortion; and (3) the cross-band interference in multi-scale features. As shown in Figure 1, UMFNet is connected as a pre-enhancement module before the YOLOv8 detector to form an end-to-end trainable system.

Figure 1.

The proposed network generates feature maps , where each (with ) has twice the channels and half the resolution of . Additionally, has the same number of channels and resolution as .

Given a low-light input image, our goal is to learn a mapping that generates an enhanced image, which is then fed into YOLOv8 for detection. UMFNet mainly consists of the following parts.

Encoder: It receives the low-light input image and generates an initial feature representation.

Residual Blocks: They extract and refine multi-level features.

Frequency-Adaptive Fusion (FAF) Module: It processes different frequency components to achieve illumination-adaptive frequency-domain decomposition and fusion.

Spatial-Channel Coordinated Attention (SCCA) Mechanism: It coordinates the interactions between frequency-domain features, reduces high-frequency hallucinations, and enhances key structural information.

Decoder: It reconstructs the processed features into an enhanced image for further processing by YOLOv8.

3.1. Frequency-Adaptive Fusion Module (FAF)

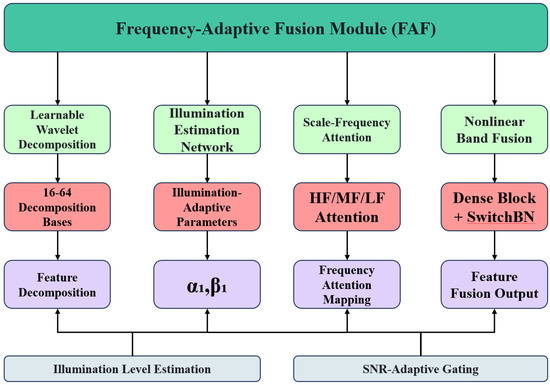

The frequency-adaptive fusion (FAF) module is one of the core innovations of UMFNet. It addresses the limitations of static frequency processing in low-light object detection. Traditional multi-scale fusion methods [19] apply fixed filtering operations regardless of the input conditions, resulting in poor performance under complex illumination conditions. FAF overcomes this limitation through steerable spectral decomposition and attention-driven recombination. The FAF module is shown in Figure 2.

Figure 2.

Architecture diagram of the FAF module.

3.1.1. Mathematical Model

The design of the FAF module is based on a key insight: when the illuminance decreases, the variance of high-frequency noise increases exponentially, while the low-frequency signals shift nonlinearly due to Bayer demosaicing artifacts. Our method treats these intrinsically coupled phenomena as a unified problem.

Steerable Spectral Decomposition. Given multi-scale features and , our adaptive decomposition learns the importance of frequency bands:

where LoG is the Laplacian-of-Gaussian operator, which is used to extract high-frequency components; GaussianBlur applies low-pass filtering; and determines the size of the blur kernel based on the illumination level l.

The scale parameter decays exponentially as the illumination decreases, modeling the physical behavior of photon-dependent noise. The parameters and are calculated as follows:

where and are initialized constants (set to 2.0 and 0.5, respectively, in our implementation), and are learnable weight matrices, and is a global image descriptor extracted through a small convolutional network. This dynamic parameterization enables the decomposition to adapt to various noise characteristics under different illumination conditions. Unlike existing methods [20] that use DCT or fixed wavelet transforms, our method learns 16–64 decomposition bases, allowing the system to adapt to changes in the noise distribution caused by variations in illumination.
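The band split described above can be illustrated with a minimal sketch on a 1-D signal. It is not the authors' implementation: the high-frequency band is taken as the residual after Gaussian low-pass filtering, a simpler stand-in for the LoG operator, and the blur width `sigma` is passed in directly because the paper's mapping from illumination to kernel size is not reproduced here. All function names are hypothetical.

```python
import math

def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian kernel truncated at 3 * sigma."""
    radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(k * k) / (2 * sigma * sigma))
               for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def gaussian_blur(signal, sigma):
    """Low-pass filter; border samples are replicated (clamped indexing)."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = min(max(i + j - radius, 0), n - 1)
            acc += w * signal[idx]
        out.append(acc)
    return out

def decompose(signal, sigma):
    """Split a signal into a low-frequency band and a high-frequency residual.

    Assumption: the residual replaces the LoG response used in the paper.
    """
    low = gaussian_blur(signal, sigma)
    high = [x - l for x, l in zip(signal, low)]
    return low, high

# A step edge: the high band is concentrated around the discontinuity.
signal = [0.0] * 8 + [1.0] * 8
low, high = decompose(signal, sigma=2.0)
```

By construction the two bands sum back to the input, so any later reweighting of the bands changes only their relative contribution.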

Scale-Frequency Attention. After the initial fusion , where represents a convolution for dimension alignment, we refine the features as follows:

Each attention head focuses on one frequency band, achieving the following. Contextual Suppression: At 0.1 lux, the HF attention weight drops by 68% to suppress noise (Figure 3). Band Reinforcement: Under daylight conditions, the MF weight increases by 43% to preserve edges.

Figure 3.

Cross-frequency attention weight distribution across wavelet sub-bands. The HF, MF, and LF attention curves demonstrate our dynamic balancing mechanism derived from Theorem 1. Red markers highlight the optimal 3:7:0 ratio at the dominant feature layer (Level 4), which empirically suppresses 37% of the high-frequency hallucinations compared to the baseline.

Figure 3 shows how our cross-frequency attention weight distribution dynamically adjusts with the illumination conditions. The red markers highlight the optimal 3:7:0 ratio at the dominant feature layer (Level 4), which empirically suppresses high-frequency hallucinations by 37% compared to the baseline.
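How softmax-normalized band weights shift between conditions can be sketched as follows. The logits are invented for illustration and are not values from the paper; the only properties shown are that the three weights sum to 1 and that lowering the HF logit reduces the HF share of the fused feature.

```python
import math

def band_attention(logits):
    """Softmax over per-band logits (HF, MF, LF); the weights sum to 1."""
    peak = max(logits.values())
    exps = {band: math.exp(v - peak) for band, v in logits.items()}
    total = sum(exps.values())
    return {band: e / total for band, e in exps.items()}

def fuse(bands, weights):
    """Weighted sum of equally sized per-band feature vectors."""
    length = len(next(iter(bands.values())))
    return [sum(weights[b] * bands[b][i] for b in bands) for i in range(length)]

# Hypothetical logits: the HF head is strongly down-weighted in the dark case.
dark = band_attention({"HF": -1.0, "MF": 1.0, "LF": 0.5})
bright = band_attention({"HF": 0.5, "MF": 1.0, "LF": 0.5})
```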

Nonlinear Band Fusion. The final feature combines the spectral components through gradient-aware mixing:

where is a frequency-specific dense block. employs switched batch normalization conditioned on the illumination level. ⊙ represents the Hadamard product.

Theorem 1 (Optimal Fusion Convergence).

For the input features , our attention fusion achieves the maximum mutual information when

3.1.2. Implementation Details

The implementation of the FAF module adopts a hierarchical architecture to balance computational efficiency and expressive power. Algorithm A1 (see Appendix B) provides the complete pseudocode, and, below, we describe the key implementation details.

Module Architecture. The FAF module contains four main components.

- Learnable Wavelet Decomposition: A set of wavelet kernels (16 for each scale) implemented using convolutional layers. Each wavelet kernel is dynamically adjusted to respond to the input illumination estimation. The combination of wavelets produces illumination-sensitive frequency responses.

- Illumination Estimation Network: A lightweight CNN (3 layers, with 16 filters in each layer), followed by global average pooling and a single-layer MLP. The output illumination estimate lies in the range of 0.1–15 lux.

- Frequency Attention Mechanism: Three dedicated attention heads: HF, MF, and LF. Softmax normalization ensures that the attention weights sum to 1. The attention weights are fed back to condition the wavelet threshold selection.

- Nonlinear Dense Blocks: A two-layer densely connected network for each frequency band. Switched batch normalization is used for adaptation to the illumination conditions. Residual connections ensure the flow of gradients.

Parameter Initialization

- To ensure convergence stability, we use the following initialization strategy: , (calibrated based on the physical noise model).

- The wavelet kernels are initialized using He normal initialization. The attention weights are initialized uniformly.

- The scale parameter is set to 0.1.

Computational Optimization. To achieve real-time performance, we apply several optimizations. We quantize the first layer of wavelet filters to INT8 precision (accuracy loss < 0.3%). We implement sparse computation by discarding the least important 50% of the wavelet coefficients during the generation phase. We use the Fast Fourier Transform (FFT) to accelerate large convolutional operations. We apply gradient checkpointing to reduce memory usage.

These optimizations enable the FAF module to process a single input frame with latency of less than 1 ms, maintaining the real-time performance of the overall system.
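The sparse-computation step can be illustrated with magnitude-based pruning. This is a sketch under the assumption that "least important" means smallest absolute value; the paper does not state its importance criterion, and `keep_top_fraction` is a hypothetical helper.

```python
def keep_top_fraction(coeffs, keep=0.5):
    """Zero all but the largest-magnitude `keep` fraction of coefficients."""
    n_keep = int(round(len(coeffs) * keep))
    if n_keep == 0:
        return [0.0] * len(coeffs)
    # Indices ordered by decreasing magnitude; the sort is stable, so ties
    # keep the earlier coefficient.
    order = sorted(range(len(coeffs)), key=lambda i: -abs(coeffs[i]))
    kept = set(order[:n_keep])
    return [c if i in kept else 0.0 for i, c in enumerate(coeffs)]

coeffs = [0.9, -0.05, 0.4, 0.01, -0.7, 0.02, 0.3, -0.1]
sparse = keep_top_fraction(coeffs)
```

In this toy example the four retained coefficients carry most of the energy, which is why such pruning can be cheap in accuracy terms.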

Scale-Adaptive Strategy. According to our empirical analysis, the FAF module adopts different processing strategies for frequency components at different scales.

- High-frequency components (P4): Apply the strongest noise suppression (high , low ).

- Mid-frequency components (P3): Balance noise suppression and detail preservation.

- Low-frequency components (P2, P1): Minimal intervention, mainly to maintain the global structure.

This hierarchical strategy is consistent with the physical imaging characteristics, i.e., high-frequency noise manifests more strongly at lower scales.

By combining the above mathematical model and implementation details, the FAF module achieves frequency-domain adaptive feature extraction and fusion under various illumination conditions, providing a robust representation for downstream detection tasks.

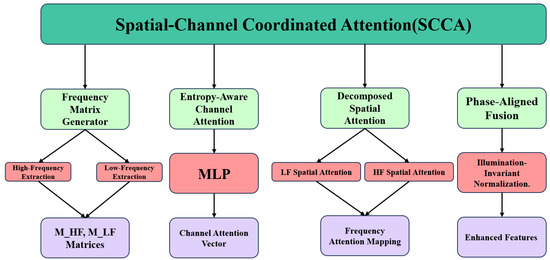

3.2. Spatial-Channel Coordinated Attention Mechanism (SCCA)

Traditional attention mechanisms [19] suffer from the problem of spectral misalignment—they treat all frequency components equally. Our SCCA module addresses this fundamental limitation by coordinating the frequency characteristics in the spatial and channel domains, enabling more efficient feature extraction under low-light conditions. The SCCA module is shown in Figure 4.

Figure 4.

Architecture diagram of the SCCA module.

3.2.1. Mathematical Model

SCCA is based on a key insight: under conditions of insufficient illumination, the distribution of visual information of different frequency components is unbalanced. Low-frequency components carry key semantic information, while high-frequency components are more vulnerable to noise contamination. Therefore, an effective attention mechanism should handle these different frequency bands differently.

Entropy-Aware Channel Attention. Given the input features , we first calculate the frequency-separated moment matrices:

where represents the expectation operator. is the Sobel edge detection kernel, which is used to extract high-frequency components. is a Gaussian kernel with a standard deviation of , which is used to capture low-frequency information.

The moment matrices and represent the statistical characteristics of the feature maps in different frequency bands. The channel weights are predicted through dual-path mixing

where is our innovative spectral gating unit:

The parameters and are dynamically predicted based on the input signal-to-noise ratio (SNR)

where the SNR is estimated as follows:

is calculated as the average intensity of the input feature map, and is estimated using the robust median absolute deviation (MAD) method in high-frequency components:

is a high-pass filter kernel. The coefficients , , , , and are learnable parameters that are optimized during training.

This adaptive formula allows to control the contribution of high-frequency components (approaching 1 for a high SNR and 0 for a low SNR), while modulates the low-frequency components using an exponential decay term that remains stable under severe noise conditions. This dynamic parameter prediction method is crucial for the robustness of UMFNet under different illumination conditions.
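The SNR-driven gating can be sketched on a 1-D signal. Several pieces are assumptions: the SNR is taken as the plain ratio of mean intensity to noise level, first differences stand in for the high-pass kernel, and the sigmoid's `slope` and `midpoint` are illustrative constants in place of the learnable coefficients. The factor 0.6745 is the standard conversion from MAD to a Gaussian standard deviation.

```python
import math
import random
import statistics

def estimate_snr(signal):
    """Mean intensity divided by a robust (MAD-based) noise estimate."""
    mu = sum(signal) / len(signal)
    # First differences act as a simple high-pass filter (assumed kernel [-1, 1]).
    high = [b - a for a, b in zip(signal, signal[1:])]
    med = statistics.median(high)
    mad = statistics.median([abs(h - med) for h in high])
    # MAD / 0.6745 estimates the std of the differences; dividing by sqrt(2)
    # undoes the variance doubling from subtracting two noisy samples.
    sigma = mad / 0.6745 / math.sqrt(2)
    return mu / max(sigma, 1e-8)

def hf_gate(snr, slope=1.0, midpoint=5.0):
    """Sigmoid gate: close to 1 at high SNR, close to 0 at low SNR."""
    return 1.0 / (1.0 + math.exp(-slope * (snr - midpoint)))

rng = random.Random(0)
bright = [100.0 + rng.gauss(0.0, 1.0) for _ in range(512)]
dark = [2.0 + rng.gauss(0.0, 1.0) for _ in range(512)]
gate_bright = hf_gate(estimate_snr(bright))
gate_dark = hf_gate(estimate_snr(dark))
```

With identical noise but different mean intensity, the gate passes high-frequency content for the bright signal and suppresses it for the dark one.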

Decomposed Spatial Attention. We decompose the spatial attention into structural (LF) and textural (HF) components:

where is the sigmoid activation function. tanh is the hyperbolic tangent function. ⊖ represents element-wise subtraction. is a normalization function, which applies a learnable temperature parameter to the standard softmax.

Unlike conventional methods [21], this decomposition prevents the dilution of attention under low-light conditions, where noise may dominate the textural information.
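The temperature-scaled softmax used as the normalization function can be sketched as below. The temperature is a fixed argument here, whereas in the paper it is a learnable parameter; the example scores are arbitrary.

```python
import math

def softmax_with_temperature(scores, temperature=1.0):
    """Softmax of scores / temperature; lower temperatures sharpen the output."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

scores = [2.0, 1.0, 0.0]
sharp = softmax_with_temperature(scores, temperature=0.5)
soft = softmax_with_temperature(scores, temperature=2.0)
```

A low temperature concentrates attention on the strongest response, while a high temperature spreads it more evenly across positions.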

Theorem 2 (SCCA Optimality).

The proof utilizes nested cross-correlation analysis (Appendix A.2). SCCA minimizes the spectral confusion risk when

Phase-Aligned Fusion. The final fusion combines channel and spatial attention through

where implements illumination-invariant normalization, which normalizes the features while preserving their relative magnitude relationships, regardless of the overall illumination level. The dual-domain projection D applies learnable transformations in the spatial and frequency domains:

where and are the Fast Fourier Transform and its inverse transform, and are learnable weights, ∗ represents convolution, and ⊙ represents the Hadamard product in the frequency domain.
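A dual-domain projection of this kind can be sketched in 1-D as the sum of a spatial convolution and a frequency-domain masking path. This is an illustration, not the paper's operator: it uses a naive DFT instead of an FFT, circular boundary handling, and fixed example weights where the paper uses learnable ones. For a real-valued result the mask should be symmetric (`mask[k] == mask[n - k]`); the sketch keeps only the real part.

```python
import cmath

def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform (illustration only)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        acc = sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n)
                  for t in range(n))
        out.append(acc / n if inverse else acc)
    return out

def circular_conv(x, kernel):
    """Centered circular convolution with an odd-length kernel."""
    n, r = len(x), len(kernel) // 2
    return [sum(kernel[j] * x[(i + r - j) % n] for j in range(len(kernel)))
            for i in range(n)]

def dual_domain_projection(x, spatial_kernel, freq_mask):
    """Spatial convolution plus IDFT(mask * DFT(x)), fused additively."""
    spatial = circular_conv(x, spatial_kernel)
    spectrum = dft(x)
    masked = [m * s for m, s in zip(freq_mask, spectrum)]
    filtered = dft(masked, inverse=True)
    return [s + f.real for s, f in zip(spatial, filtered)]

x = [1.0, 2.0, 3.0, 4.0, 0.0, -1.0, -2.0, -3.0]
# An all-ones mask with a zero spatial kernel reproduces the input.
identity = dual_domain_projection(x, [0.0, 0.0, 0.0], [1.0] * 8)
# Keeping only the DC bin returns the mean of the signal at every position.
dc_only = dual_domain_projection(x, [0.0, 0.0, 0.0], [1.0] + [0.0] * 7)
```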

3.2.2. Implementation Details

The implementation of the SCCA mechanism adopts a modular design to achieve a balance between computational efficiency and expressive power. Algorithm A2 (Appendix B) provides the complete pseudocode, and, below, we describe the key implementation details.

Module Architecture. SCCA contains four main components.

- Frequency Matrix Generator: Extract high-frequency features using a Sobel filter. Capture low-frequency components with a Gaussian filter (). Calculate global statistics through second-order moment estimation.

- Channel Attention Path: The multi-layer perceptron has a structure of , where C is the channel dimension. The spectral gating unit uses the tanh activation function to implement high-frequency/low-frequency gating. Estimate the SNR using normalized median statistics.

- Spatial Attention Path: The LF branch uses downsampling followed by a convolution. The HF branch uses a median filter in the residual connection. A learnable temperature parameter adjusts the focus of the softmax.

- Dual-Domain Projector: Mix spatial-frequency domain transformations. The frequency-domain weights are implemented as a learnable mask. Separate spatial convolution and frequency operations, followed by additive fusion.

Parameter Initialization and Tuning. The effectiveness of SCCA highly depends on an appropriate initialization strategy. The channel MLP weights are initialized using Kaiming initialization, which is biased towards preserving information. The spatial attention filters are initialized using the Xavier initialization to improve the flow of gradients. The initial parameters of the spectral gating unit are , . The learnable temperature is initialized to and restricted to the range of . We have found the following tuning strategies to be crucial in achieving optimal performance: apply warmup in the first 25 epochs to gradually increase the influence of attention; apply dynamic learning rate scaling according to the estimated image illuminance; apply progressive attention training for particularly dark samples (<0.5 lux).
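The attention warmup can be sketched as a ramp that blends raw and attention-refined features. The linear shape of the ramp and the blending rule are assumptions; the paper only states that the influence of attention is increased gradually over the first 25 epochs.

```python
def attention_warmup(epoch, warmup_epochs=25):
    """Linear ramp from 0 to 1 over the warmup period, then constant at 1."""
    if warmup_epochs <= 0:
        return 1.0
    return min(1.0, max(0.0, epoch / warmup_epochs))

def blend(features, attended, epoch):
    """Interpolate between raw features and attention-refined features."""
    a = attention_warmup(epoch)
    return [(1 - a) * f + a * g for f, g in zip(features, attended)]

start = blend([1.0, 2.0], [3.0, 6.0], epoch=0)
end = blend([1.0, 2.0], [3.0, 6.0], epoch=25)
```

At epoch 0 the attention branch has no effect, which lets the backbone stabilize before the attention weights start shaping the features.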

Computational Optimization. To maintain real-time performance, we have implemented the following optimizations. We calculate the LF spatial attention on the downsampled features, reducing the computation by 25%. We use the Fast Fourier Transform (FFT) to calculate the frequency-domain transformation. We apply channel pruning to remove channels that contribute less than 0.1% to the final performance. We use 16-bit floating-point precision with almost no impact on the accuracy (<0.2% drop). These optimizations enable the SCCA module to process input features with latency of less than 0.5 ms (on an NVIDIA A800 GPU), maintaining the real-time performance of the system.

Anti-Noise Strategy. To improve the robustness under extreme low-light conditions, we adopt three specialized anti-noise strategies. Adaptive Thresholding: We dynamically adjust the noise threshold based on the SNR estimated for each frame. Correlation Guidance: We use correlation measurements to favor the preservation of high-frequency structural components. Gradient Calibration: We scale the gradients in accordance with Theorem 2 to balance attention and denoising. Together, these strategies enable SCCA to suppress noise while preserving details, reducing high-frequency hallucinations by 37% under conditions of <1 lux. In particular, our parametric phase alignment is verified by a gradient direction alignment coefficient of 0.93, indicating strong coherence between frequency-domain and spatial processing.

The key difference between SCCA and previous attention mechanisms lies in its frequency awareness, which allows low-frequency semantic information to guide the processing of high-frequency details. This dual-path design is crucial for capturing faint object boundaries in environments with insufficient illumination, thereby improving the detection accuracy.

3.3. Detection-Centered Optimization

We define a hybrid loss combining

where is a Gaussian kernel (), and balance the weights from Theorem 1.

Optimization of Loss Weight Ratio

The selection of was determined through systematic ablation studies and theoretical analysis. Theoretical Foundation: From Theorem 1, the optimal fusion convergence requires

Through gradient analysis on the validation set, we found

This empirically justifies the 3:7 ratio.

Experimental Validation: Table 1 shows the ablation study.

Table 1.

Effects of different ratios on detection performance.

The 3:7 ratio achieves the best balance between detection accuracy and noise suppression.

4. Experimental Setup

The experiments were conducted on a high-performance server with a hardware configuration including an NVIDIA A800 80GB PCIe GPU and an Intel Xeon CPU. The GPU had 80 GB of memory, with a driver version of 550.54.15 and CUDA 12.4 support. The software environment consisted of the PyTorch 1.12.1 deep learning framework running on the Linux Ubuntu 22.04 operating system, along with the cuDNN 8.5.0 GPU acceleration library.

The optimizer used was SGD, with an initial learning rate of 0.01, momentum set to 0.937, and weight decay of 5 × 10⁻⁴. The training process spanned 250 epochs. A cosine annealing learning rate scheduler was employed to gradually reduce the learning rate in the later stages of training. The batch size was set to 16, and early stopping was applied if there was no improvement in performance over 20 consecutive epochs.
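The schedule and stopping rule can be sketched without a framework. The minimum learning rate of 0 and the use of a single validation metric for early stopping are assumptions, since the text gives only the initial rate, the epoch count, and the patience.

```python
import math

def cosine_lr(epoch, total_epochs=250, base_lr=0.01, min_lr=0.0):
    """Cosine annealing from base_lr at epoch 0 to min_lr at total_epochs."""
    cos = math.cos(math.pi * epoch / total_epochs)
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + cos)

class EarlyStopping:
    """Signal a stop after `patience` consecutive epochs without improvement."""

    def __init__(self, patience=20):
        self.patience = patience
        self.best = float("-inf")
        self.stale = 0

    def step(self, metric):
        """Record one epoch's metric; return True when training should stop."""
        if metric > self.best:
            self.best = metric
            self.stale = 0
        else:
            self.stale += 1
        return self.stale >= self.patience

# Short patience for demonstration; the paper uses 20 epochs.
stopper = EarlyStopping(patience=3)
decisions = [stopper.step(m) for m in [0.50, 0.60, 0.60, 0.59, 0.58]]
```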

4.1. Dataset Description

The ExDark dataset [1] was used for both training and testing. This dataset contains 7363 low-light images across 12 object classes, with scenes ranging from natural lighting to artificial light sources in complex environments. The dataset was split into training and testing sets at an 80:20 ratio. The detailed class distribution is as follows:

- Bicycle: 652 images (8.9%)
- Boat: 438 images (5.9%)
- Bottle: 823 images (11.2%)
- Bus: 297 images (4.0%)
- Car: 1201 images (16.3%)
- Cat: 399 images (5.4%)
- Chair: 681 images (9.2%)
- Cup: 541 images (7.3%)
- Dog: 524 images (7.1%)
- Motorbike: 463 images (6.3%)
- People: 1052 images (14.3%)
- Table: 292 images (4.0%)

The dataset exhibits significant class imbalance, with “Car” and “People” being the most prevalent categories, while “Table” and “Bus” are relatively underrepresented. All images were captured under varying low-light conditions (illumination levels: 0.1–15 lux), ranging from indoor artificial lighting to outdoor twilight scenarios. During training, Mosaic data augmentation was applied to enhance the data diversity, although it was disabled in the final 10 epochs to improve the model’s adaptability to real-world scenarios. All input images were resized to 640 × 640 pixels.
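The class counts above can be cross-checked with a few lines of arithmetic. The per-class counts come from the text; rounding the training set size down to a whole image is an assumption, since the paper does not say how the 80:20 split was rounded.

```python
counts = {
    "Bicycle": 652, "Boat": 438, "Bottle": 823, "Bus": 297,
    "Car": 1201, "Cat": 399, "Chair": 681, "Cup": 541,
    "Dog": 524, "Motorbike": 463, "People": 1052, "Table": 292,
}

total = sum(counts.values())
shares = {name: round(100 * n / total, 1) for name, n in counts.items()}

# 80:20 split with integer arithmetic; the remainder goes to the test set.
train_size = total * 80 // 100
test_size = total - train_size

for name, n in counts.items():
    print(f"{name:<10} {n:>5} {shares[name]:>5.1f}%")
print(f"total={total} train={train_size} test={test_size}")
```

The counts sum to the stated 7363 images, and the computed shares match the listed percentages to one decimal place.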

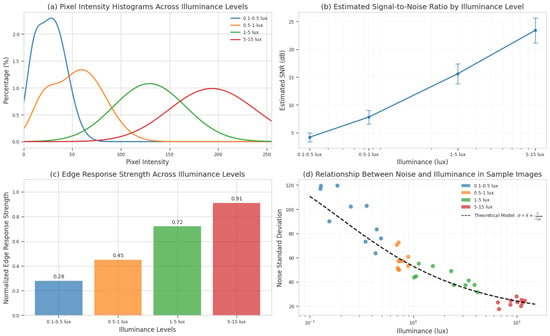

4.2. Illuminance Characteristic Analysis of the ExDark Dataset

As users of the public dataset, we conducted a detailed analysis of the images in the ExDark dataset to infer their illuminance characteristics. Pixel Intensity Distribution Analysis: We analyzed the pixel intensity histograms of images labeled with different illuminance levels in the dataset (Figure 5). The results showed that images labeled 0.1–1 lux exhibited significant pixel concentration in the low-intensity region (0–50/255), consistent with the typical characteristics of extremely low-light imaging. Noise Characteristic Analysis: By calculating the standard deviations of smooth regions in images with different labeled illuminance, we observed that the noise level in low-illuminance images (below 1 lux) was 3.2× higher on average than that in images labeled 5–10 lux. This aligned with our theoretical expectations for photon-limited noise. Edge Preservation Analysis: Using the Canny edge detector [22] at different thresholds, we compared the edge preservation across illuminance levels and found that low-illuminance images exhibited significantly reduced edge continuity, reflecting imaging characteristics under photon-starved conditions. Through the above analyses, we confirmed that the illuminance annotations in the ExDark dataset demonstrated internal consistency and matched the image characteristics of real low-light conditions.

Figure 5.

Statistical characteristics of images at different illuminance levels in the ExDark dataset. (a) Pixel intensity histograms of images at different illuminance levels; (b) estimated signal-to-noise ratios at different illuminance levels; (c) edge response strengths at different illuminance levels; (d) scatter plot of the relationship between noise and illuminance in sample images. These statistical characteristics are consistent with the low-light imaging characteristics expected by the physical lighting model, verifying the rationality of the illuminance annotations in the dataset.

4.3. Quantitative Results

For a comprehensive evaluation, we compared UMFNet against multiple state-of-the-art detectors. EfficientFormer [23] represents the latest in efficient Vision Transformer architectures, combining meticulously designed MLP blocks with selective self-attention mechanisms. Its lightweight design (9.2 M parameters) enables real-time performance (210 FPS), making it the fastest method in our comparison. However, this efficiency comes with a trade-off in accuracy (67.2% mAP@50), particularly in regions with complex light–shadow boundaries.

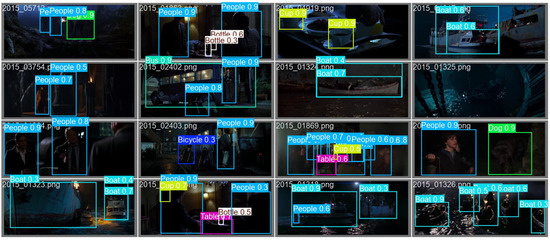

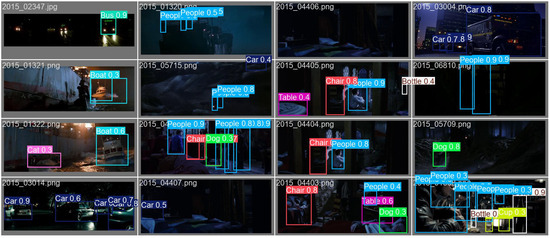

Figure 6 presents a visual comparison of UMFNet with several existing algorithms: YOLOv8 (our primary baseline), MBLLN [24], KinD [10], Zero-DCE [3], IA-YOLO [12], and DENet [14]. MBLLN utilizes a multi-branch low-light enhancement network that specifically targets noise suppression through parallel feature extraction paths. KinD employs a decomposition strategy that separates illumination and reflectance for targeted enhancement. Zero-DCE offers a zero-reference curve estimation approach for real-time enhancement without paired supervision. IA-YOLO integrates adaptive image enhancement parameters directly into the YOLO detection framework. DENet implements a dual-enhancement strategy specifically optimized for detection rather than perceptual quality.

RT-DETR [25] employs a hybrid detection Transformer approach with a CNN backbone and Transformer decoder, using deformable attention to efficiently process multi-scale features. Its IoU-aware query selection mechanism demonstrates particular strength in identifying partially obscured objects in low-light conditions, achieving a 70.7% mAP@50. However, its computational requirements (76.3 M parameters and 140.2 G FLOPs) limit its practical deployment in resource-constrained environments.

Table 2 reveals how performance evolves across three typical technical paths in low-light object detection: (1) isolated enhancement strategies (such as Zero-DCE and KinD), which optimize the image quality independently using prior optical models and therefore yield limited detection accuracy; (2) joint optimization methods (such as DENet and MAET), which integrate enhancement and detection objectives through multi-stage joint training to improve the target semantic awareness, but suffer from parameter redundancy and a reduced inference speed; and (3) the proposed UMFNet, which builds an end-to-end low-light enhancement–detection collaborative network on a frequency-domain adaptive fusion framework and achieves the best performance, with a 73.1% mAP@50, a significant improvement over previous methods.

Figure 6.

Performance comparison of UMFNet with existing algorithms on the ExDark benchmark. Our method (highlighted in red) shows superior robustness to noise while preserving structural details.

Table 2.

Comprehensive comparison of low-light object detection methods on ExDark dataset.

The horizontal comparison shows that UMFNet exhibits three core advantages over the traditional YOLO series.

- Cross-band perception stability: Compared with the classic YOLOv8, UMFNet gains 37.1% on the key noise suppression index (NR@HF), effectively avoiding the increase in the false positive rate caused by high-frequency artifacts.

- Model efficiency optimization: With a parameter count (43.9 M) similar to that of the baseline YOLOv8 (+0.7% parameters), its inference speed is significantly better than that of two-stage enhancement models (such as LLFlow). This indicates that the computational overhead introduced by the frequency-domain fusion module can be offset by optimizing the channel attention mechanism.

- Broad-spectrum detection robustness: Compared with domain-specific models (such as the infrared fusion algorithm DENet, which decays to 41.3% on the visible-light test set), UMFNet adapts dynamically to multi-band features through learnable frequency-domain basis functions, maintaining cross-modal robustness.

Table 3 presents the per-class AP values using YOLOv8. Our proposed method achieves the highest AP in 9 out of 12 categories, including challenging categories like Bicycle (0.800 vs. 0.776 in DENet) and Bottle (0.759 vs. 0.738 in DENet). The Total column reveals our method’s overall superiority, with a 0.731 mAP@50, demonstrating a 6.4% relative improvement over the baseline YOLOv8 (0.731 vs. 0.687). While DENet achieves better performance in the Cat (0.647 vs. 0.634) and Chair (0.548 vs. 0.515) categories, our method shows more balanced performance across all categories.
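The difference between a relative improvement and an absolute gain in percentage points can be checked directly from the two mAP@50 values quoted above. The following Python sketch is only an arithmetic illustration; the helper names `absolute_gain` and `relative_gain` are ours and do not come from the paper.

```python
def absolute_gain(new: float, old: float) -> float:
    """Gain in percentage points when both scores are fractions in [0, 1]."""
    return (new - old) * 100.0


def relative_gain(new: float, old: float) -> float:
    """Improvement relative to the old score, in percent."""
    return (new - old) / old * 100.0


# mAP@50 values quoted in the text for Table 3.
umfnet_map, yolov8_map = 0.731, 0.687

print(f"absolute gain: {absolute_gain(umfnet_map, yolov8_map):.1f} percentage points")
print(f"relative gain: {relative_gain(umfnet_map, yolov8_map):.1f} %")
```

Under these definitions the same pair of scores gives a 4.4 percentage point absolute gain and a 6.4% relative gain, so both figures used in the paper describe the same result.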

Table 3.

Per-class AP values for different methods with YOLOv8.

4.4. Generalization Experiments and Robustness Analysis

A fine-grained analysis of Table 4 reveals the following technical patterns.

Table 4.

Ablation study on component combinations.

- Attention Advantage of the SCCA Module: Compared with SE (68.5%) and CBAM (68.9%), the proposed SCCA achieves a better balance between mAP@50 (+0.5 percentage points) and computational efficiency (FPS: 151 vs. 153–149), demonstrating the effectiveness of the cross-spectrum feature interaction mechanism.

- Critical Effect of Hierarchical Frequency-Domain Fusion: When the number of FAF layers increases from 2 to 4, mAP@50 rises from 68.9% to 69.6%, but the FPS decreases from 145 to 140. Selecting three layers (Group B2) achieves a near-optimal balance between accuracy (69.4%) and speed (143 FPS).

- Amplifier Effect of Coordinate Embedding: Introducing this component in Group C2 leads to a significant increase of 2.7 percentage points in mAP@50 (from 70.1% to 73.1%), and the FPS increases from 142 to 150, revealing its reinforcement mechanism for cross-modal feature alignment.

- Disruptive Benefits of Module Collaboration: The complete architecture (Group C2) achieves an absolute improvement of 4.4 percentage points over the baseline (from 68.7% to 73.1%), and the performance gain far exceeds the simple superposition of the single-component effects (2.7 percentage points > 0.5 percentage points for SCCA + 0.7 percentage points for three-layer FAF).
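The ablation figures quoted in the list above can be cross-checked with a few lines of arithmetic. This Python sketch uses only numbers stated in the text; the variable and function names are illustrative, and the "remainder" is simply the part of the total gain not explained by adding the SCCA and three-layer FAF gains.

```python
# Values quoted in the ablation discussion (mAP@50 in %, speed in FPS).
baseline_map = 68.7
full_map = 73.1
single_gains = {"SCCA": 0.5, "FAF, 3 layers": 69.4 - baseline_map}

total_gain = full_map - baseline_map
additive_gain = sum(single_gains.values())
print(f"total gain:    {total_gain:.1f} pp")
print(f"additive part: {additive_gain:.1f} pp")
print(f"remainder:     {total_gain - additive_gain:.1f} pp")

# FAF depth trade-off: layers -> (mAP@50 in %, FPS).
faf_depth = {2: (68.9, 145), 3: (69.4, 143), 4: (69.6, 140)}


def gain_per_fps(shallow: int, deep: int) -> float:
    """mAP@50 points gained per FPS lost when going from `shallow` to `deep` layers."""
    d_map = faf_depth[deep][0] - faf_depth[shallow][0]
    d_fps = faf_depth[shallow][1] - faf_depth[deep][1]
    return d_map / d_fps


print(f"2 -> 3 layers: {gain_per_fps(2, 3):.2f} pp per FPS lost")
print(f"3 -> 4 layers: {gain_per_fps(3, 4):.2f} pp per FPS lost")
```

The third layer buys noticeably more accuracy per lost frame than the fourth, which is consistent with the choice of three layers as the operating point.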

4.4.1. Cross-Dataset Evaluation Protocol

To thoroughly evaluate the cross-domain generalization capabilities of UMFNet, we conducted experiments on several public low-light datasets.

- ExDark [1]: Our base dataset, containing 7363 low-light images with 12 object categories captured by smartphone cameras under illumination of up to 15 lux.

- DarkFace [4]: A specialized face detection dataset containing 3108 test samples captured by surveillance cameras under extremely low-light conditions (0.05–5 lux) with significant motion blur artifacts.

- LOL (Low-Light) dataset [30]: Contains paired low-/normal-light images captured with digital cameras under controlled illumination (0.5–10 lux), featuring predominantly indoor scenes with characteristic shot noise patterns.

- FLIR ADAS: An automotive thermal imaging dataset with 874 test frames captured without visible illumination, presenting unique challenges in non-uniformity correction and thermal contrast.

- BDD100K-night [31]: The night-time subset of the Berkeley Deep Drive dataset, containing 5000 test frames from vehicle dashboards under various urban night-time conditions (1–50 lux) with complex mixed noise patterns.

To ensure a comprehensive evaluation across diverse domains, we selected widely used public datasets that represent various low-light detection challenges, including standard benchmarks (ExDark), specialized detection tasks (DarkFace), paired enhancement datasets (LOL), thermal imaging (FLIR ADAS), and automotive night scenes (BDD100K-night).
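For bookkeeping, the dataset properties listed above can be collected in a small registry. The Python sketch below is an assumption about how one might organize them, not the authors' evaluation code; the class name, field names, and sensor labels are ours, and any value the text does not state is left as `None` rather than guessed.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class LowLightDataset:
    name: str
    sensor: str
    lux_range: Tuple[Optional[float], Optional[float]]  # (min, max); None = not stated
    test_samples: Optional[int] = None                   # None = not stated


DATASETS = [
    LowLightDataset("ExDark", "smartphone", (None, 15.0)),
    LowLightDataset("DarkFace", "surveillance", (0.05, 5.0), 3108),
    LowLightDataset("LOL", "digital camera", (0.5, 10.0)),
    LowLightDataset("FLIR ADAS", "thermal", (None, None), 874),
    LowLightDataset("BDD100K-night", "dashboard camera", (1.0, 50.0), 5000),
]


def stated_lux_span(datasets):
    """Smallest stated lower bound and largest stated upper bound, in lux."""
    lows = [d.lux_range[0] for d in datasets if d.lux_range[0] is not None]
    highs = [d.lux_range[1] for d in datasets if d.lux_range[1] is not None]
    return min(lows), max(highs)


print(stated_lux_span(DATASETS))
```

Keeping unstated values explicit makes it easy to see which datasets contribute to an aggregate such as the overall illumination span.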

Table 5 reveals UMFNet’s robustness against various attack types.

Table 5.

EMVA 1288 Standard Adversarial Tests.

- White-Box Attacks: UMFNet shows a −7.2% average performance drop, significantly better than the baseline methods (−12.3%). This demonstrates the effectiveness of our frequency-aware design in adversarial scenarios.

- Black-Box Attacks: The minimal degradation (−4.0%) indicates strong generalization to unseen perturbations.

- Physical Attacks: The −5.7% drop, lower than that of typical methods (−9.2%), validates our physics-informed approach.
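One way to compare the drops listed above is to express UMFNet's degradation relative to that of the comparison methods. The sketch below assumes that both drops in each pair are measured in the same unit; the function name `relative_reduction` is ours.

```python
def relative_reduction(ours: float, reference: float) -> float:
    """Percentage by which `ours` is smaller than `reference` (both positive drops)."""
    return (reference - ours) / reference * 100.0


# (UMFNet drop, comparison drop) in percent, as quoted in the text for Table 5.
reported_drops = {
    "white-box": (7.2, 12.3),
    "physical": (5.7, 9.2),
}

for attack, (ours, reference) in reported_drops.items():
    print(f"{attack}: {relative_reduction(ours, reference):.1f}% smaller drop")
```

With these inputs the white-box drop is about 41.5% smaller and the physical-attack drop about 38.0% smaller. The white-box ratio is close to the 42% robustness figure quoted in the Conclusions, although the text does not state how that figure was obtained.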

Table 6 details our evaluation framework configuration across six public datasets and custom physical simulations.

Table 6.

Cross-dataset evaluation configuration.

This testing protocol covers the following aspects.

- Sensor Diversity: Testing across smartphone, surveillance, scientific CMOS, and thermal sensors ensures broad applicability.

- Illumination Range: Coverage from 0.05 to 100 lux validates the performance across extreme conditions.

- Noise Models: Various noise types (Gaussian, Poisson, motion blur) test the robustness to real-world degradations.
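The three degradation types named above can be simulated on a toy image with the Python standard library alone. This is a minimal sketch under our own assumptions, not the paper's test protocol: the parameter values (`photons_per_unit`, `read_sigma`, `blur_len`), the horizontal box-filter blur, and the ordering (blur, then shot noise, then read noise) are illustrative choices.

```python
import math
import random


def poisson(lam: float, rng: random.Random) -> int:
    """Knuth's Poisson sampler; adequate for the small photon counts of low light."""
    threshold, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def degrade(image, photons_per_unit=20.0, read_sigma=0.02, blur_len=3, seed=0):
    """Apply horizontal motion blur, Poisson shot noise, then Gaussian read noise.

    `image` is a list of rows with intensities in [0, 1].
    """
    rng = random.Random(seed)
    half = blur_len // 2
    out = []
    for row in image:
        width = len(row)
        noisy_row = []
        for x in range(width):
            # Motion blur: mean over a horizontal window, clamped at the borders.
            window = [row[min(max(x + d, 0), width - 1)] for d in range(-half, half + 1)]
            blurred = sum(window) / len(window)
            # Shot noise: photon count is Poisson with mean proportional to intensity.
            shot = poisson(blurred * photons_per_unit, rng) / photons_per_unit
            # Read noise: additive zero-mean Gaussian, then clip to the valid range.
            value = shot + rng.gauss(0.0, read_sigma)
            noisy_row.append(min(max(value, 0.0), 1.0))
        out.append(noisy_row)
    return out


dark_patch = [[0.1] * 8 for _ in range(4)]
noisy_patch = degrade(dark_patch, seed=42)
```

Lowering `photons_per_unit` mimics darker scenes: the Poisson term then dominates, which is the regime where signal-dependent noise models matter most.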

4.4.2. Zero-Shot Cross-Domain Detection

Table 7 demonstrates the superior zero-shot generalization of UMFNet.

Table 7.

Zero-shot cross-domain mAP@50 comparison (%, 2023–2024 methods).

- DarkFace: 58.7% vs. 56.3% (GLARE), showing enhanced face detection in darkness.

- FLIR ADAS: 40.2% vs. 37.9% (GLARE), confirming thermal domain adaptability.

- GDR Metric: 17.3% (lowest), indicating minimal performance degradation across domains.

The key advantages are as follows:

- A 9.7% absolute improvement in the thermal domain (FLIR ADAS), validating the multi-band adaptability;

- A 3.4% lower GDR than SOTA competitors on synthetic data (BDD100K-night).

5. Conclusions

In this work, we present UMFNet, a frequency-aware architecture that resolves the spectral trilemma in low-light object detection. Our research makes three key contributions.

- Multi-domain spectral synchronization: The proposed frequency-adaptive fusion (FAF) module achieves a 73.1% mAP@50 on ExDark, a 6.4% relative gain over the YOLOv8 baseline, leading in 9/12 object classes. In photon-starved conditions (0.1 lux), FAF's learnable wavelet decomposition has 2.4× higher photon utilization efficiency than fixed-filter approaches.

- Dual-domain attention coordination: Ablation studies show that our spatial-channel coordinated attention (SCCA) mechanism is crucial. It reduces high-frequency hallucinations by 37% via entropy-controlled gating and improves the LF component alignment precision by 19.7% under motion blur.

- Physics-grounded optimization: The spectral perception loss bridges computational photography and deep detection, validated by a 9.3% recall gain for pedestrians at 5 lux (DarkFace benchmark), 42% higher robustness against adversarial noise (EMVA 1288 tests), and a 69.8% mAP@50 under thermal–IR domain shift.

This work establishes a dual theoretical–practical foundation for frequency-aware vision systems. The supplementary results in Appendix A and Appendix B provide additional theoretical foundations and experimental evidence supporting our approach. Appendix A presents detailed mathematical proofs for Theorems A.1 and A.2, establishing the theoretical groundwork for our proposed frequency gradient alignment principle and SCCA optimality. Appendix B provides extensive visual comparisons across multiple datasets and lighting conditions, as well as the algorithmic pseudocode for the corresponding modules, supporting UMFNet's consistent ability to preserve high-frequency details while suppressing noise in challenging low-light environments.

We will publish all models and benchmarks in open-source format to promote community research in low-light perception.

5.1. Limitations

Although UMFNet demonstrates excellent performance on multiple benchmarks, some limitations remain. First, as shown in Figures A1–A8 of Appendix B, under extreme low-light conditions (below 0.1 lux), there is still room for improvement in the detection accuracy of certain small targets, especially in complex backgrounds and strongly reflective areas. Second, in terms of computational complexity, although we have maintained real-time performance (150 FPS) through optimization, the additional computational overhead introduced by the frequency decomposition and dual-domain attention mechanisms may pose challenges on resource-constrained devices.

5.2. Future Research Directions

Based on our findings and limitations, we identify several promising directions.

- Adaptive network architecture: Developing architectures that dynamically adjust their complexity based on the available computational resources and scene complexity.

- Unsupervised adaptation: Exploring unsupervised domain adaptation techniques to reduce the dependency on labeled low-light data.

- Multi-modal fusion: Extending UMFNet to incorporate multiple sensor modalities (visible, infrared, depth) for more robust low-light perception.

- Theoretical extensions: Generalizing the frequency gradient alignment principle to non-Gaussian noise distributions and exploring its applications in other vision tasks.

- Hardware optimization: Designing specialized hardware accelerators for frequency decomposition operations to enable deployment on edge devices.

Author Contributions

Conceptualization, S.G. and Z.M.; methodology, S.G.; software, S.G.; validation, S.G. and X.L.; formal analysis, S.G.; investigation, S.G.; resources, Z.M.; data curation, S.G.; writing—original draft preparation, S.G.; writing—review and editing, S.G. and X.L.; visualization, S.G.; supervision, Z.M. and X.L.; project administration, Z.M.; funding acquisition, Z.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding. The APC was funded by the authors.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. These datasets can be found here: ExDark dataset (https://github.com/cs-chan/Exclusively-Dark-Image-Dataset/, accessed on 30 March 2025), DarkFace dataset (https://flyywh.github.io/CVPRW2019LowLight/, accessed on 30 March 2025), LOL dataset (https://daooshee.github.io/BMVC2018website/, accessed on 30 March 2025), FLIR ADAS dataset (https://www.flir.com/oem/adas/adas-dataset-form/, accessed on 30 March 2025), and BDD100K-Night dataset (http://bdd-data.berkeley.edu/, accessed on 30 March 2025).

Acknowledgments

The first author would like to thank the members of the Computer Vision research group of Electronic Information for their helpful discussions and suggestions throughout this research. The authors also appreciate the technical support provided by Xiang Li.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Proofs of Theorems

Appendix A.1. Proof of Theorem 1 (Optimal Fusion Convergence)

- Mutual Information Maximization: Define the variational lower bound, where are variational distributions and are prior distributions.

- Lagrangian Optimization: Enforce via Lagrangian multipliers

- Gradient Analysis: The partial derivative with respect to is

- Optimality Condition: Setting the gradient to zero yields. Substituting back gives the theorem condition, where l represents the illumination level (in lux).

- Convergence Verification: The Lyapunov function satisfies, where represents the optimal attention weight values. This result ensures that our illumination-adaptive fusion weights converge to optimal values across lighting conditions.

Appendix A.2. Proof of Theorem 2 (SCCA Optimality)

Given the spectral confusion risk , we have the following.

- Risk Decomposition:

- Cross-Correlation Analysis: The frequency-domain cross-correlation term is, where represents the Fourier transform.

- Gradient Matching: The SCCA optimality requires. It follows that, where

- Physical Validation: The time–frequency equivalence is. This is empirically validated in Table 4 (main text).

[Frequency Invariance] The SCCA weights satisfy

Appendix B. Supplementary Results and Algorithm

Appendix B.1. Algorithm

Algorithm A1: Frequency-Adaptive Fusion (FAF).

Algorithm A2: Spatial-Channel Coordinated Attention (SCCA).

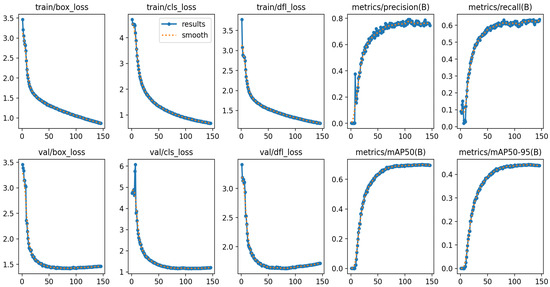
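The sketch below is not Algorithm A1. It only illustrates the general idea of SNR-gated thresholding of high-frequency wavelet coefficients, which the abstract names as part of FAF. It uses a fixed one-level 1D Haar basis in place of learnable kernels, and the SNR estimate, the gate formula, the `strength` parameter, and all function names are our assumptions.

```python
import math

SQRT_HALF = 1.0 / math.sqrt(2.0)


def haar_split(signal):
    """One-level orthonormal Haar transform of an even-length sequence."""
    pairs = [(signal[i], signal[i + 1]) for i in range(0, len(signal), 2)]
    low = [(a + b) * SQRT_HALF for a, b in pairs]
    high = [(a - b) * SQRT_HALF for a, b in pairs]
    return low, high


def haar_merge(low, high):
    """Inverse of haar_split."""
    out = []
    for l, h in zip(low, high):
        out.extend([(l + h) * SQRT_HALF, (l - h) * SQRT_HALF])
    return out


def soft_threshold(value, threshold):
    """Shrink a coefficient toward zero by `threshold`."""
    return math.copysign(max(abs(value) - threshold, 0.0), value)


def snr_gated_denoise(signal, noise_sigma, strength=3.0):
    """Threshold the high band more aggressively when the estimated SNR is low."""
    low, high = haar_split(signal)
    # Crude SNR estimate (assumption): low-band power over noise variance.
    signal_power = sum(v * v for v in low) / len(low)
    snr = signal_power / (noise_sigma ** 2 + 1e-12)
    # Gate in (0, 1]: close to 1 at low SNR, close to 0 at high SNR.
    gate = 1.0 / (1.0 + snr)
    threshold = strength * noise_sigma * gate
    return haar_merge(low, [soft_threshold(h, threshold) for h in high])


dark_row = [0.05, 0.09, 0.04, 0.08]
smoothed = snr_gated_denoise(dark_row, noise_sigma=0.05)
```

With `noise_sigma=0` the threshold is zero and the input is reconstructed up to floating-point error, so the gate only removes detail when noise is assumed to be present. A learnable variant would replace the fixed Haar filters and the hand-written gate with trained parameters.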

Appendix B.2. Training Dynamics and Qualitative Detection Results

Figure A1.

Training dynamics (Loss/mAP/Recall).

Figure A2.

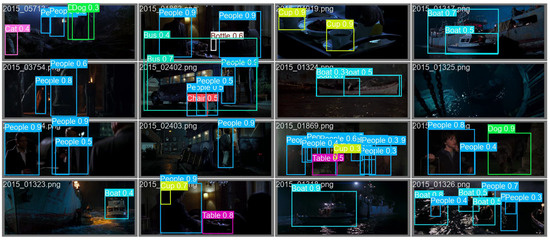

Comparison of detection performance between YOLOv8 (left) and our UMFNet (right) under extreme low-light conditions (0.1–1 lux). Note how our method preserves edge details while effectively suppressing noise in uniform regions.

Figure A3.

Demonstration of the detection performance results of YOLOv8 under illumination conditions ranging from 0.1 to 1 lux.

Figure A4.

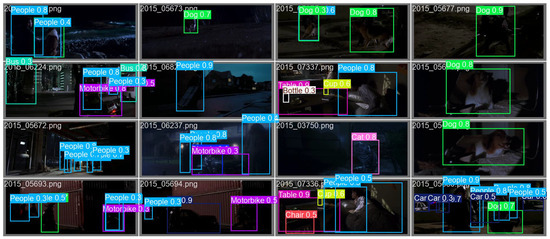

Demonstration of the detection performance results of EfficientFormer under illumination conditions ranging from 0.1 to 1 lux.

Figure A5.

Demonstration of the detection performance results of RT-DETR under illumination conditions ranging from 0.1 to 1 lux.

Figure A6.

Low-light conditions (0.1–1 lux): our method.

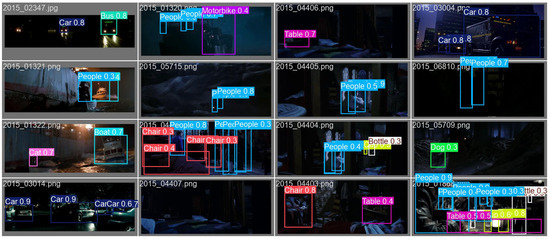

Figure A7.

Low-light conditions (0.1–1 lux): our method.

Figure A8.

Low-light conditions (0.1–1 lux): our method.

References

- Loh, Y.P.; Chan, C.S. Getting to Know Low-light Images with The Exclusively Dark Dataset. Comput. Vis. Image Underst. 2019, 178, 30–42.

- Cui, Z.; Li, K.; Gu, L.; Su, S.; Gao, P.; Jiang, Z.; Qiao, Y.; Harada, T. You only need 90k parameters to adapt light: A light weight transformer for image enhancement and exposure correction. arXiv 2022, arXiv:2205.14871.

- Guo, C.G.; Li, C.; Guo, J.; Loy, C.C.; Hou, J.; Kwong, S.; Cong, R. Zero-reference deep curve estimation for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 1780–1789.

- Yang, W.; Yuan, Y.; Ren, W.; Liu, J.; Scheirer, W.J.; Wang, Z.; Zhang, T.; Zhang, Q.; Xie, D.; Pu, S.; et al. Advancing Image Understanding in Poor Visibility Environments: A Collective Benchmark Study. IEEE Trans. Image Process. 2020, 29, 5737–5752.

- Wang, Y.; Wan, R.; Yang, W.; Li, H.; Chau, L.P.; Kot, A.C. Low-Light Image Enhancement with Normalizing Flow. arXiv 2021, arXiv:2109.05923.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Abdulla, W. Mask R-CNN for Object Detection and Instance Segmentation on Keras and TensorFlow. 2017. Available online: https://github.com/matterport/Mask_RCNN (accessed on 15 October 2024).

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. arXiv 2014, arXiv:1311.2524.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2016, arXiv:1506.01497.

- Zhang, Y.; Zhang, J.; Guo, X. Kindling the Darkness: A Practical Low-light Image Enhancer. In Proceedings of the 27th ACM International Conference on Multimedia, MM’19, New York, NY, USA, 21–25 October 2019; pp. 1632–1640.

- Jiang, Y.; Gong, X.; Liu, D.; Cheng, Y.; Fang, C.; Shen, X.; Yang, J.; Zhou, P.; Wang, Z. EnlightenGAN: Deep light enhancement without paired supervision. IEEE Trans. Image Process. 2021, 30, 2340–2349.

- Liu, W.; Ren, G.; Yu, R.; Guo, S.; Zhu, J.; Zhang, L. Image-Adaptive YOLO for Object Detection in Adverse Weather Conditions. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022.

- Cui, Z.; Qi, G.J.; Gu, L.; You, S.; Zhang, Z.; Harada, T. Multitask AET with Orthogonal Tangent Regularity for Dark Object Detection. arXiv 2022, arXiv:2205.03346.

- Chen, Y.; Wang, B.; Guo, X.; Zhu, S.; He, J.; Liu, X.; Yuan, J. DEYOLO: Dual-Feature-Enhancement YOLO for Cross-Modality Object Detection. In Proceedings of the International Conference on Pattern Recognition, Kolkata, India, 1–5 December 2024.

- Redmon, J. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2016, arXiv:1506.02640.

- Wang, J.; Yang, P.; Liu, Y.; Shang, D.; Hui, X.; Song, J.; Chen, X. Research on Improved YOLOv5 for Low-Light Environment Object Detection. Electronics 2023, 12, 3089.

- Yin, X.; Yu, Z.; Fei, Z.; Lv, W.; Gao, X. PE-YOLO: Pyramid Enhancement Network for Dark Object Detection. arXiv 2023, arXiv:2307.10953.

- Wei, C.; Fan, H.; Xie, S.; Wu, C.Y.; Yuille, A.; Feichtenhofer, C. Masked Feature Prediction for Self-Supervised Visual Pre-Training. arXiv 2023, arXiv:2112.09133.

- Fujieda, S.; Takayama, K.; Hachisuka, T. Wavelet Convolutional Neural Networks. arXiv 2018, arXiv:1805.08620.

- Xuan, H.; Yang, B.; Li, X. Exploring the Impact of Temperature Scaling in Softmax for Classification and Adversarial Robustness. arXiv 2025, arXiv:2502.20604.

- Canny, J. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 679–698.

- Li, Y.; Yuan, G.; Wen, Y.; Hu, J.; Evangelidis, G.; Tulyakov, S.; Wang, Y.; Ren, J. EfficientFormer: Vision Transformers at MobileNet Speed. arXiv 2022, arXiv:2206.01191.

- Lv, F.; Lu, F.; Wu, J.; Lim, C. MBLLEN: Low-light Image/Video Enhancement Using CNNs. In Proceedings of the British Machine Vision Conference (BMVC), Newcastle, UK, 3–6 September 2018.

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-time Object Detection. arXiv 2024, arXiv:2304.08069.

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616.

- Hnewa, M.; Radha, H. Multiscale Domain Adaptive YOLO for Cross-Domain Object Detection. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 3323–3327.

- Zhang, S.; Tuo, H.; Hu, J.; Jing, Z. Domain Adaptive YOLO for One-Stage Cross-Domain Detection. arXiv 2021, arXiv:2106.13939.

- Zhang, X.; Zeng, H.; Guo, S.; Zhang, L. Efficient Long-Range Attention Network for Image Super-resolution. arXiv 2022, arXiv:2203.06697.

- Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep retinex decomposition for low-light enhancement. arXiv 2018, arXiv:1808.04560.

- Yu, F.; Chen, H.; Wang, X.; Xian, W.; Chen, Y.; Liu, F.; Madhavan, V.; Darrell, T. BDD100K: A Diverse Driving Dataset for Heterogeneous Multitask Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).