3D Point Cloud Dataset of Heavy Construction Equipment

Abstract

1. Introduction

1.1. Research Background and Objectives

1.2. Research Scope and Methods

2. Related Works

2.1. Review of Classification and Segmentation Dataset in the Construction Field

2.2. Review of Large-Scale 3D Point Clouds Datasets for Classification and Segmentation

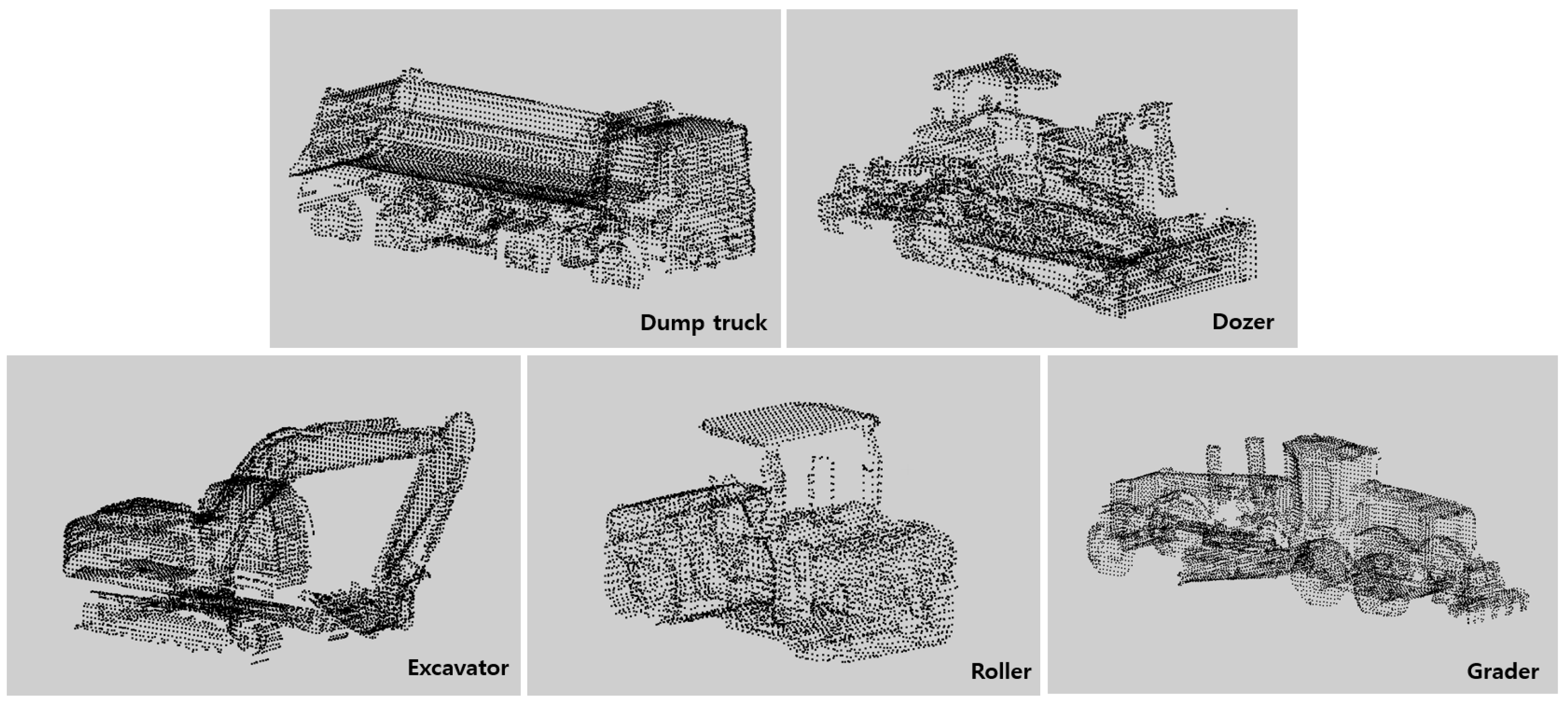

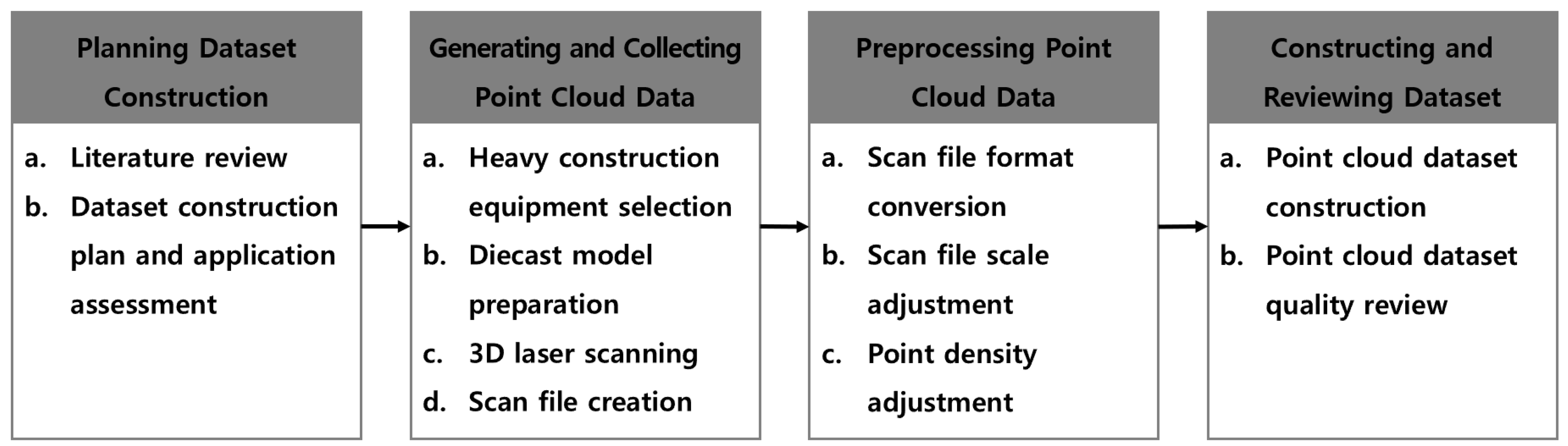

3. Development of 3D-ConHE Dataset

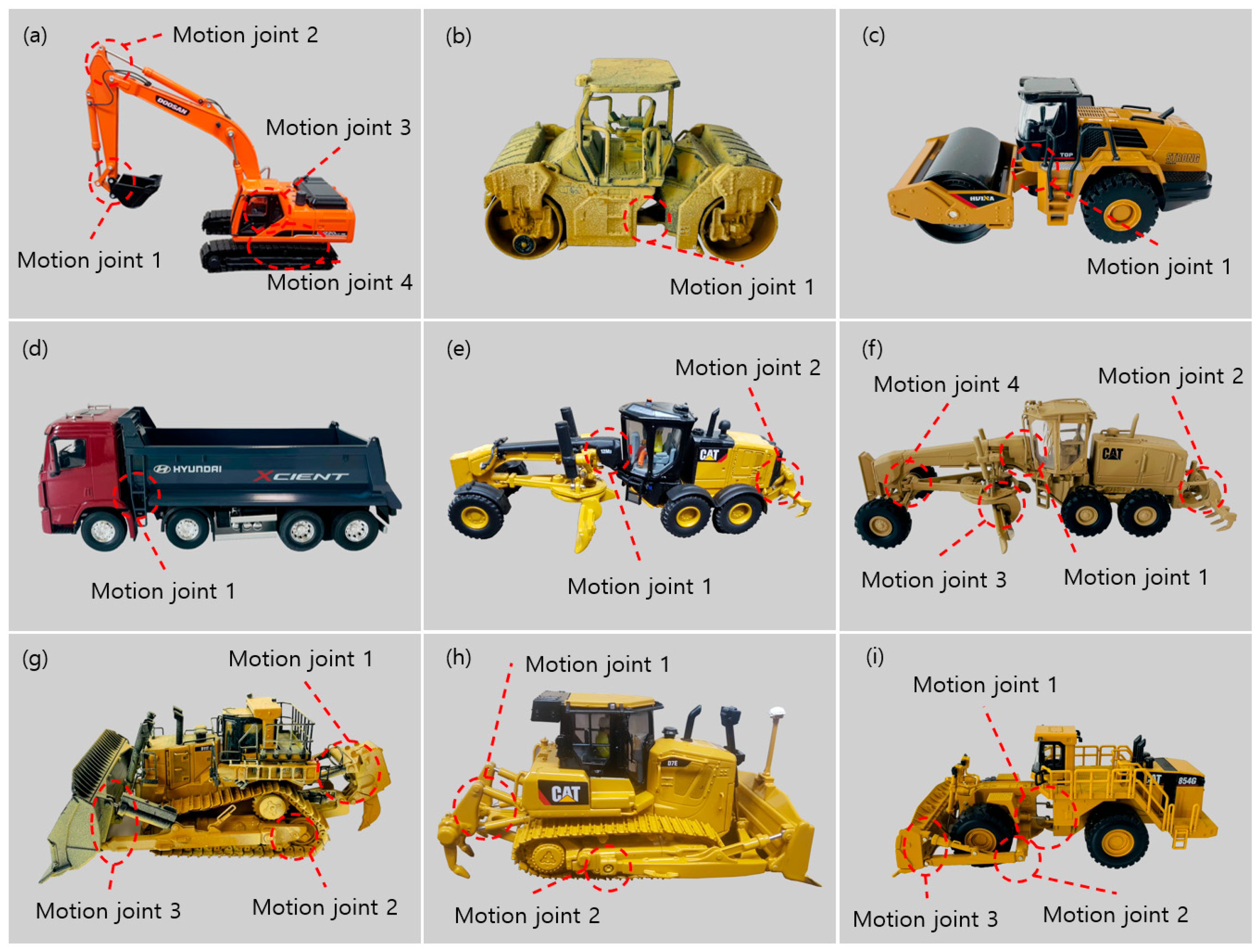

3.1. 3D Point Cloud Data Generation and Collection

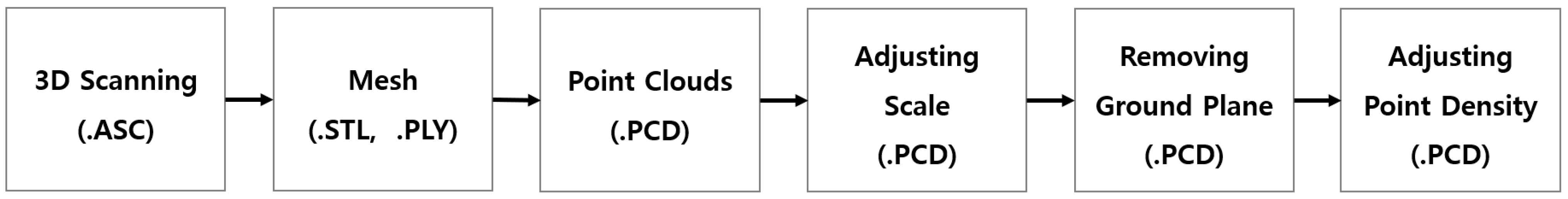

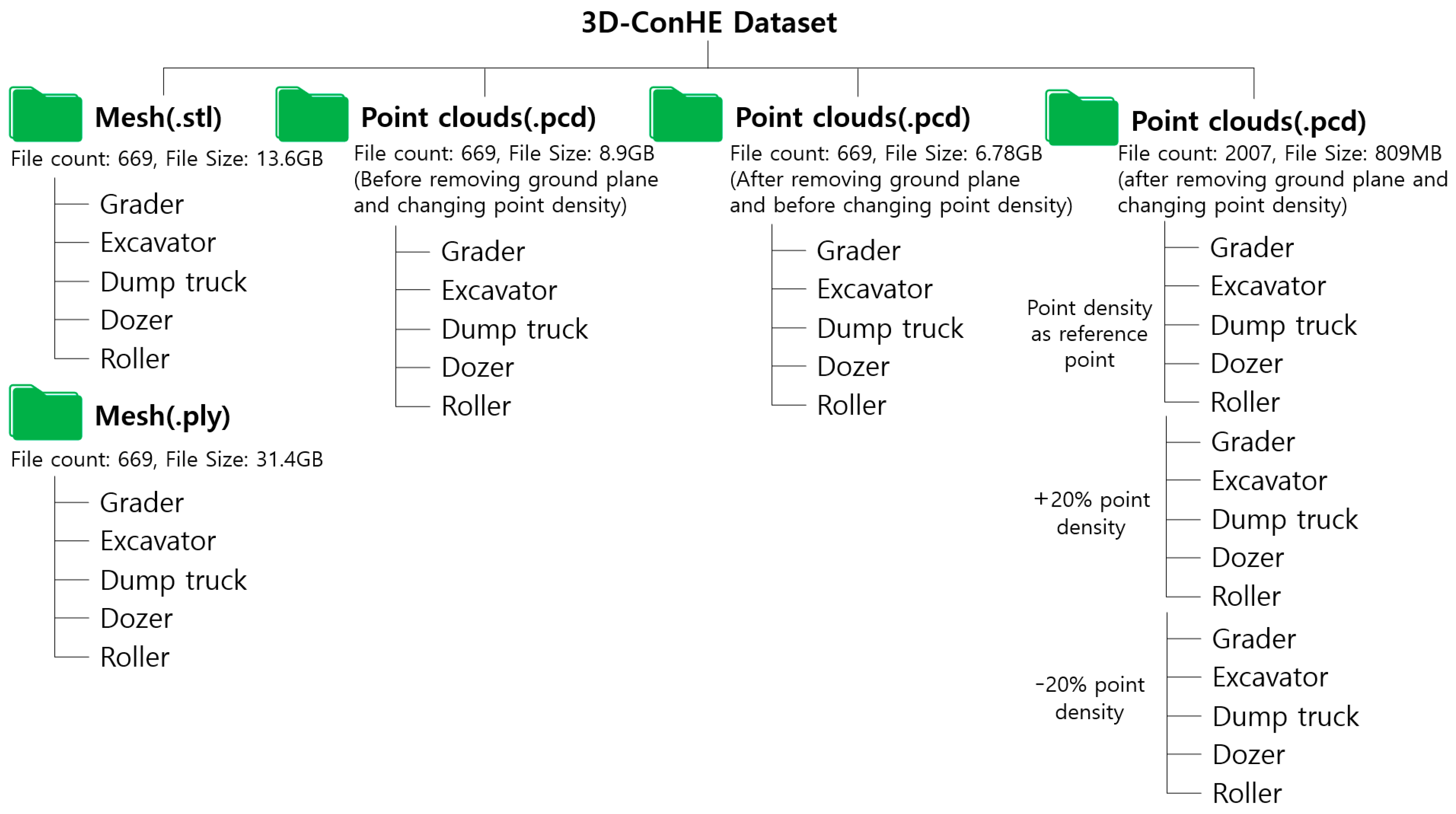

3.2. Preprocessing of 3D Point Cloud Data

- Converting Files: The scanning process produced ASC files. To generate PCD files from them, the ASC files for each piece of equipment were merged into a mesh file in STL format, which was then exported as a PLY file. The 3D scanner’s software (EinScan SE/SP Software Version 3.1.3.0) handled the ASC, STL, and PLY conversions, and Open3D was then used to convert the PLY files into PCD files. This process yielded 669 mesh-converted PLY files and 669 PCD files, all at the scale of the diecast models of heavy construction equipment.

- Adjusting Scale: The diecast models of heavy construction equipment used for 3D scanning were scaled down to between 1:32 and 1:87 relative to their real-size counterparts. To make these differently scaled models usable together in this research, the scale of each scan was adjusted using the CloudCompare software, so that the scanned data matched the actual size of heavy construction equipment used on-site.

- Removing Ground Plane: After the scale adjustment, the ground planes in the PCD files of the diecast models were removed using the CloudCompare software. This step was included because the point cloud data of a ground plane is not captured in actual data collected using UAV and UGV equipment. The aim was to make the data more closely resemble on-site scan data and thereby improve the accuracy of ML and DL object detection models. Removing the ground planes also reduced the size of the 3D-ConHE dataset.

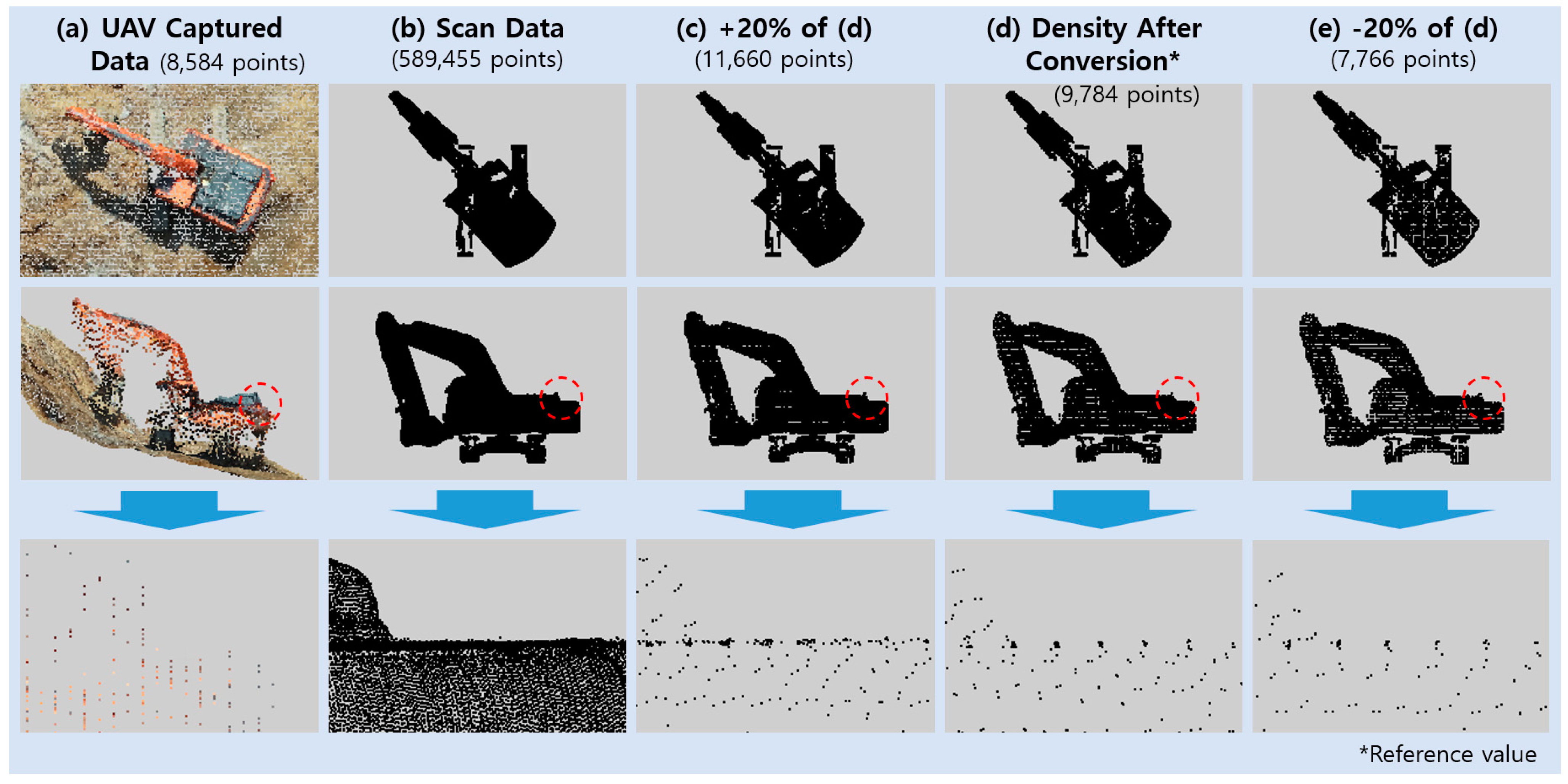

- Adjusting Point Density: To adjust the point density of the scanned data, this research examined point cloud data of heavy construction equipment collected by UAV. As shown in Figure 6, point cloud data of heavy construction equipment captured by UAV typically has a significantly lower point density than data generated by scanning diecast models, a discrepancy that arises from the characteristics of the equipment and the data capture method. The UAV data in Figure 6 were acquired using a DJI PHANTOM 4 RTK (Shenzhen, China) equipped with a 20-megapixel camera and flying at an altitude of 100 m. Figure 6a shows the point cloud data of heavy construction equipment extracted from a construction site captured by the UAV, while Figure 6b shows data generated by scanning diecast models indoors. The lower point density in Figure 6a compared with Figure 6b is likely attributable to the drone capturing data from 100 m above the ground. Furthermore, a drone flying at 100 m can be affected by wind, causing its altitude to fluctuate, sometimes between 80 and 120 m above the ground. Because of this variability in on-site data collection, point cloud data captured by UAVs have lower point densities than data generated by static indoor scanning of diecast models. Consequently, this research used the point density at a UAV capture height of 100 m as the reference value for the diecast models (Figure 6d). Data captured at altitudes of 80 and 120 m were also taken into account, and the point density of the diecast models was adjusted accordingly, as depicted in Figure 6d,e, introducing a variance of +20% and −20%.
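
The scale, ground-plane, and point-density steps described in this section can be illustrated with a small pure-Python sketch. It is not the authors' pipeline: the paper performs these operations in CloudCompare and Open3D, and it does not report the numerical density values. The function names, the height-threshold ground removal (a simple stand-in for the CloudCompare operation), the toy point cloud, and the reference count of 200 points are all assumptions made for illustration.

```python
import random

def rescale_to_real_size(points, scale_denominator):
    """Scale model coordinates up by the diecast scale (e.g. 50 for a 1:50 model)."""
    return [(x * scale_denominator, y * scale_denominator, z * scale_denominator)
            for x, y, z in points]

def drop_ground(points, ground_z, tolerance):
    """Keep only points more than `tolerance` above an assumed flat ground height."""
    return [p for p in points if p[2] > ground_z + tolerance]

def subsample(points, target_count, seed=0):
    """Randomly keep `target_count` points (or all of them if fewer exist)."""
    if target_count >= len(points):
        return list(points)
    return random.Random(seed).sample(points, target_count)

def density_variants(points, reference_count, spread=0.20, seed=0):
    """Subsample to the reference count and to -spread / +spread around it."""
    factors = (("minus", 1 - spread), ("reference", 1.0), ("plus", 1 + spread))
    return {label: subsample(points, round(reference_count * factor), seed)
            for label, factor in factors}

# Toy cloud in model units: a flat 10 x 10 ground grid at z = 0
# plus a 10 x 10 x 5 block of "equipment" points above it.
ground = [(x * 0.01, y * 0.01, 0.0) for x in range(10) for y in range(10)]
body = [(x * 0.01, y * 0.01, 0.01 + z * 0.01)
        for x in range(10) for y in range(10) for z in range(5)]
model_cloud = ground + body

real_cloud = rescale_to_real_size(model_cloud, 50)   # hypothetical 1:50 model
no_ground = drop_ground(real_cloud, ground_z=0.0, tolerance=0.1)
variants = density_variants(no_ground, reference_count=200)  # 200 is a made-up reference
counts = {label: len(cloud) for label, cloud in variants.items()}
print(counts)
```

In this sketch the density is controlled by the number of retained points, since the object's extent is fixed after rescaling. A real implementation would derive the reference count from the measured UAV point density rather than from a hand-picked number.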

3.3. Dataset Construction

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Name | Year | Environment | Primary Fields | File Format | Objects/Points | Number of Classes | Application Technology | Data Acquisition Type |
|---|---|---|---|---|---|---|---|---|
| ShapeNet | 2015 | Indoor | X, Y, Z, R, G, B | .obj | 51,300 objects (ShapeNetCore), 12,000 objects (ShapeNetSem) | 55 (ShapeNetCore), 270 (ShapeNetSem) | Classification | Conversion of CAD models |
| ModelNet | 2015 | Indoor | X, Y, Z, number of vertices, edges, faces | .off | 12,311 objects (ModelNet40), 4899 objects (ModelNet10) | 10 (ModelNet10), 40 (ModelNet40) | Classification | Conversion of CAD models |
| S3DIS | 2016 | Indoor | X, Y, Z, R, G, B | .h5 | 695 million points | 13 | Segmentation | Converting CAD files into mesh files |
| Semantic3D | 2017 | Urban | X, Y, Z, intensity, R, G, B, class | .txt | 4 billion points | 8 | Segmentation | TLS |
| Paris-Lille-3D | 2018 | Urban | X, Y, Z, intensity, class | .ply | 143 million points | 50 | Segmentation | MLS |
| Toronto-3D | 2020 | Urban | X, Y, Z, R, G, B, intensity, GPS time, scan angle rank, label | .ply | 78 million points | 8 | Segmentation | MLS |
| Semantic KITTI | 2019 | Urban | X, Y, Z, intensity, label | .bin | 23,201 objects | 28 | Segmentation | MLS |
| 3D-ConHE (ours) | 2023 | Indoor | X, Y, Z | .stl .ply .pcd | 4683 objects | - | Classification, segmentation | Portable scanner |

| Types of Equipment | Classification in Figure 2 | Diecast Model Product Number | Scale | Number of Motion Joints | Number of Scan Files |
|---|---|---|---|---|---|
| Excavator | (a) | Doosan DX225LCA | 1:40 | 4 | 108 |
| Excavator | (a) | Doosan DX380LC-9C | 1:50 | 4 | 108 |
| Excavator | (a) | Doosan DH220 | 1:50 | 4 | 108 |
| Excavator | (a) | Komatsu PC210LC-10 | 1:50 | 4 | 108 |
| Excavator | (a) | Hyundai R215-9 | 1:40 | 4 | 108 |
| Roller | (b) | Caterpillar Cat 85630 | 1:64 | 1 | 5 |
| Roller | (c) | Huina 1715 | 1:50 | 1 | 3 |
| Dump Truck | (d) | Huina 1718 | 1:50 | 1 | 3 |
| Dump Truck | (d) | Hyundai Xcient | 1:32 | 1 | 3 |
| Dump Truck | (d) | Hyundai HD370 | 1:32 | 1 | 3 |
| Grader | (e) | Caterpillar Cat 12M3 | 1:87 | 2 | 18 |
| Grader | (f) | Caterpillar Cat 120M | 1:50 | 4 | 54 |
| Dozer | (g) | Caterpillar Cat D8R | 1:50 | 3 | 8 |
| Dozer | (h) | Caterpillar Cat D7E | 1:50 | 2 | 4 |
| Dozer | (i) | Caterpillar Cat 924H | 1:50 | 3 | 8 |
| Dozer | (i) | Caterpillar Cat D11T | 1:50 | 3 | 8 |
| Dozer | (j) | Caterpillar Cat 854G | 1:50 | 3 | 12 |
| Total | | | | | 669 |

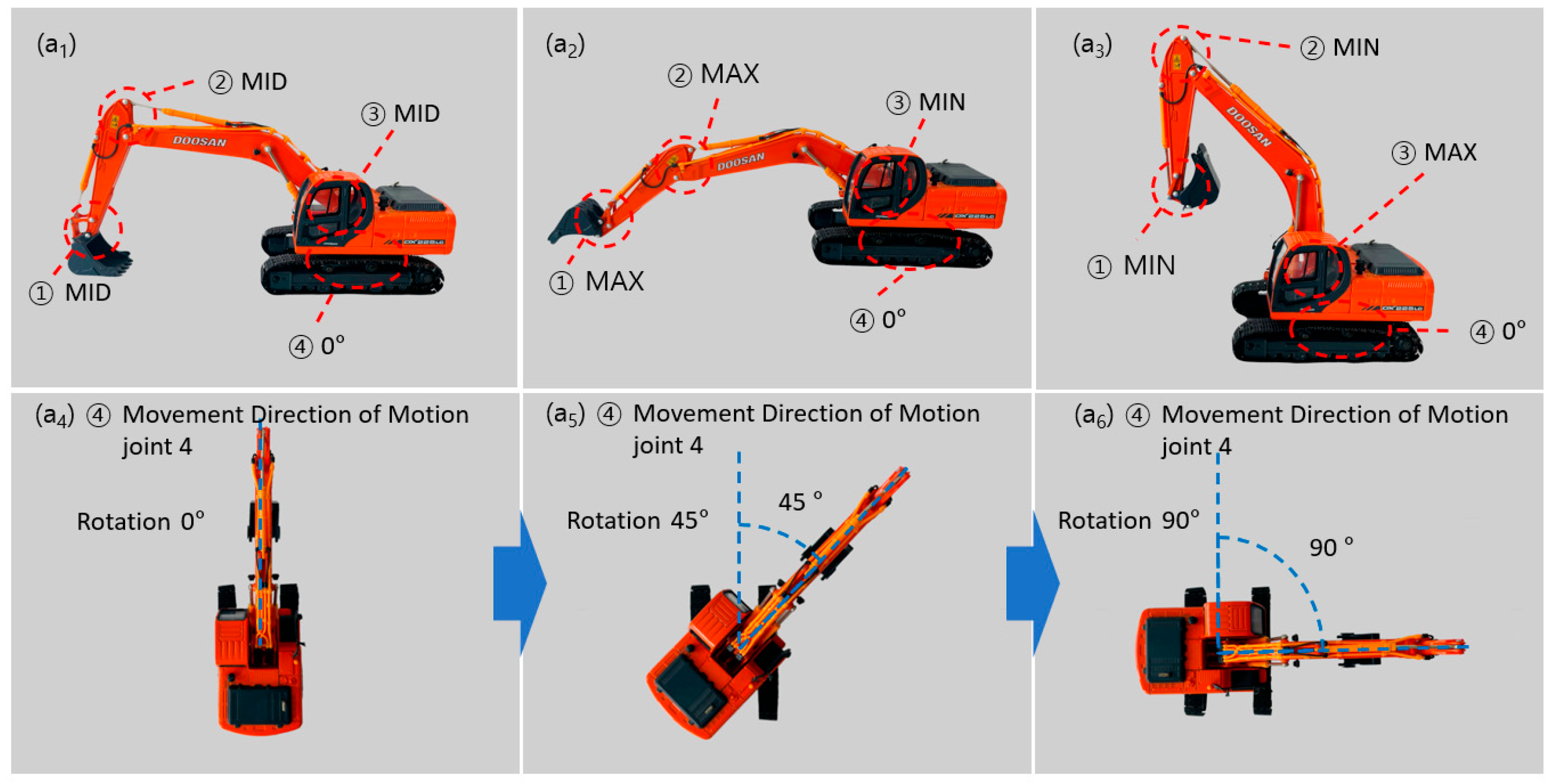

| Type | Number of Motion Joints | ① Movement Direction of Motion Joint 1 | ② Movement Direction of Motion Joint 2 | ③ Movement Direction of Motion Joint 3 | ④ Movement Direction of Motion Joint 4 | Number of Scans ¹ |
|---|---|---|---|---|---|---|
| Excavator | 4 | Vertical: MAX, MID, MIN | Vertical: MAX, MID, MIN | Vertical: MAX, MID, MIN | Horizontal Rotation: 0°, 45°, 90°, 135° | 540 |
| Roller | 1 | Horizontal: LEFT, LEFT MID, MID, RIGHT MID, RIGHT | - | - | - | 5 |
| Roller | 1 | Horizontal: LEFT, MID, RIGHT | - | - | - | 3 |
| Dump Truck | 1 | Vertical: MAX, MID, MIN | - | - | - | 9 |
| Grader | 2 | Horizontal: LEFT, MID, RIGHT | Vertical: MAX, MIN | - | - | 18 |
| Grader | 4 | Horizontal: LEFT, MID, RIGHT | Vertical: MAX, MIN | Horizontal: LEFT, MID, RIGHT | Horizontal: LEFT, MID, RIGHT | 54 |
| Dozer | 3 | Vertical: MAX, MIN | Vertical: MAX, MIN | Vertical: MAX, MIN | - | 24 |
| Dozer | 2 | Vertical: MAX, MIN | Vertical: MAX, MIN | - | - | 4 |
| Dozer | 3 | Horizontal: LEFT, MID, RIGHT | Vertical: MAX, MIN | Vertical: MAX, MIN | - | 12 |
| Total | | | | | | 669 |
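
As a worked check of the scan counts, the excavator row of the table above can be enumerated as the Cartesian product of its joint positions, and the "Number of Scans" column can be summed to confirm the stated total. This is only an illustrative sketch: the variable names are hypothetical, the joint positions and counts are copied from the table, and the figure of five excavator models is taken from the equipment list.

```python
from itertools import product

# Joint positions for the excavator row of the motion-joint table.
excavator_joints = [
    ("MAX", "MID", "MIN"),   # joint 1, vertical
    ("MAX", "MID", "MIN"),   # joint 2, vertical
    ("MAX", "MID", "MIN"),   # joint 3, vertical
    (0, 45, 90, 135),        # joint 4, horizontal rotation in degrees
]

# Every combination of joint positions is one scanned pose.
poses = list(product(*excavator_joints))
poses_per_model = len(poses)                  # 3 * 3 * 3 * 4

excavator_models = 5                          # models listed in the equipment table
excavator_scans = poses_per_model * excavator_models

# "Number of Scans" column, one entry per table row, in table order.
scans_per_row = [540, 5, 3, 9, 18, 54, 24, 4, 12]
total_scans = sum(scans_per_row)

print(poses_per_model, excavator_scans, total_scans)
```

The per-model pose count of 108 matches the number of scan files listed for each excavator model, and the column sum matches the reported total of 669 files.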


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Park, S.; Kim, S. 3D Point Cloud Dataset of Heavy Construction Equipment. Appl. Sci. 2024, 14, 3599. https://doi.org/10.3390/app14093599
