Seatbelt Detection Algorithm Improved with Lightweight Approach and Attention Mechanism

Abstract

1. Introduction

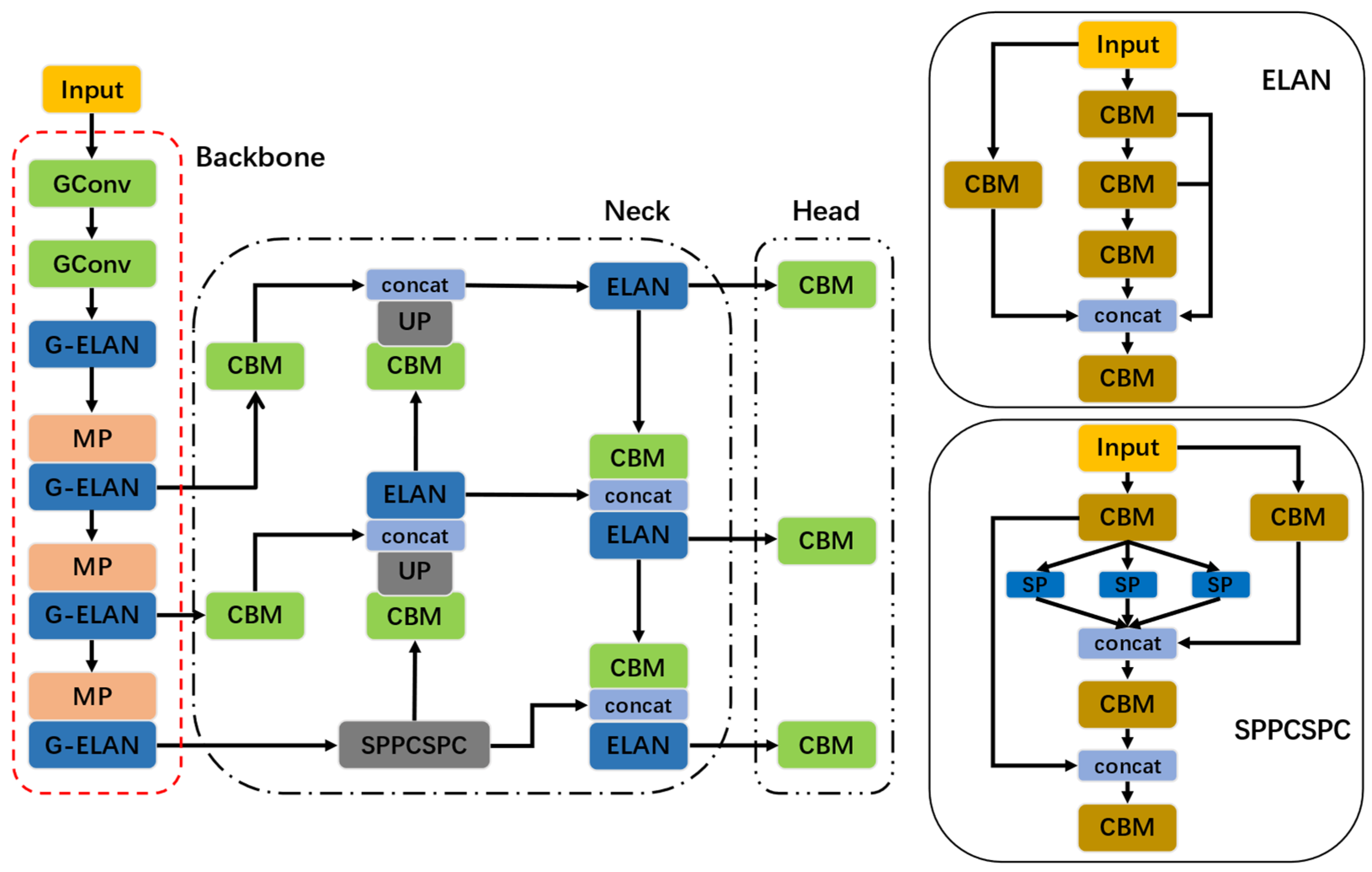

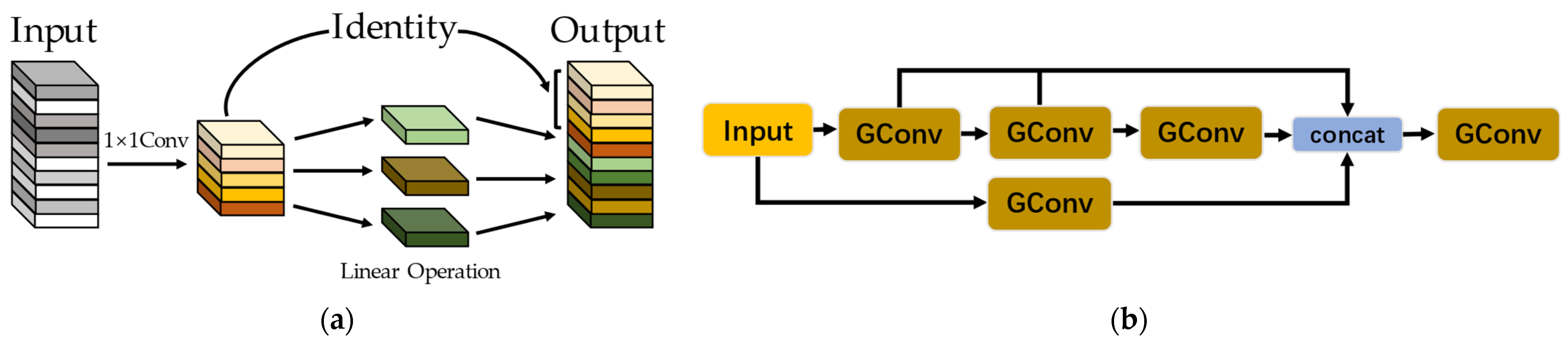

- A lightweight Ghost module [13] is introduced into YOLOv7-tiny [14] for efficient feature extraction, improving the Efficient Layer Aggregation Network (ELAN) in the backbone to reduce the number of model parameters, the computational cost, and the overall model size. Moreover, the Mish [15] activation function replaces Leaky ReLU in the neck for feature aggregation, strengthening the network’s non-linear capability and detection performance.

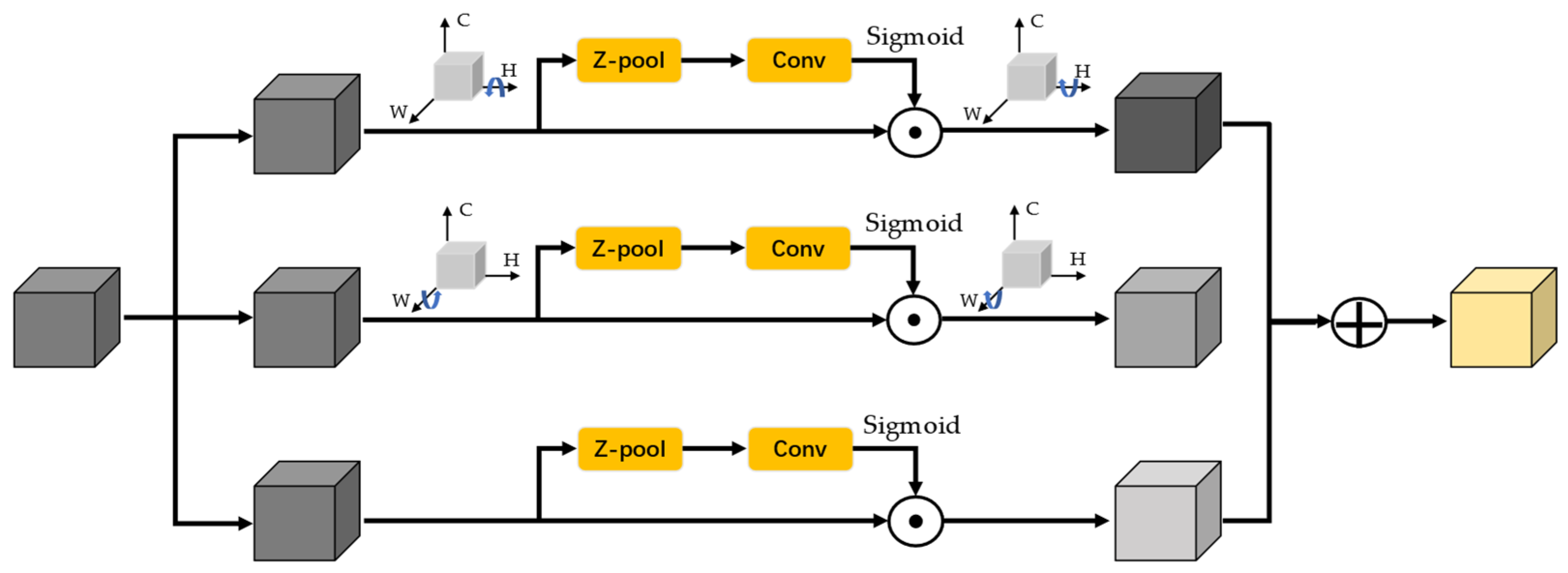

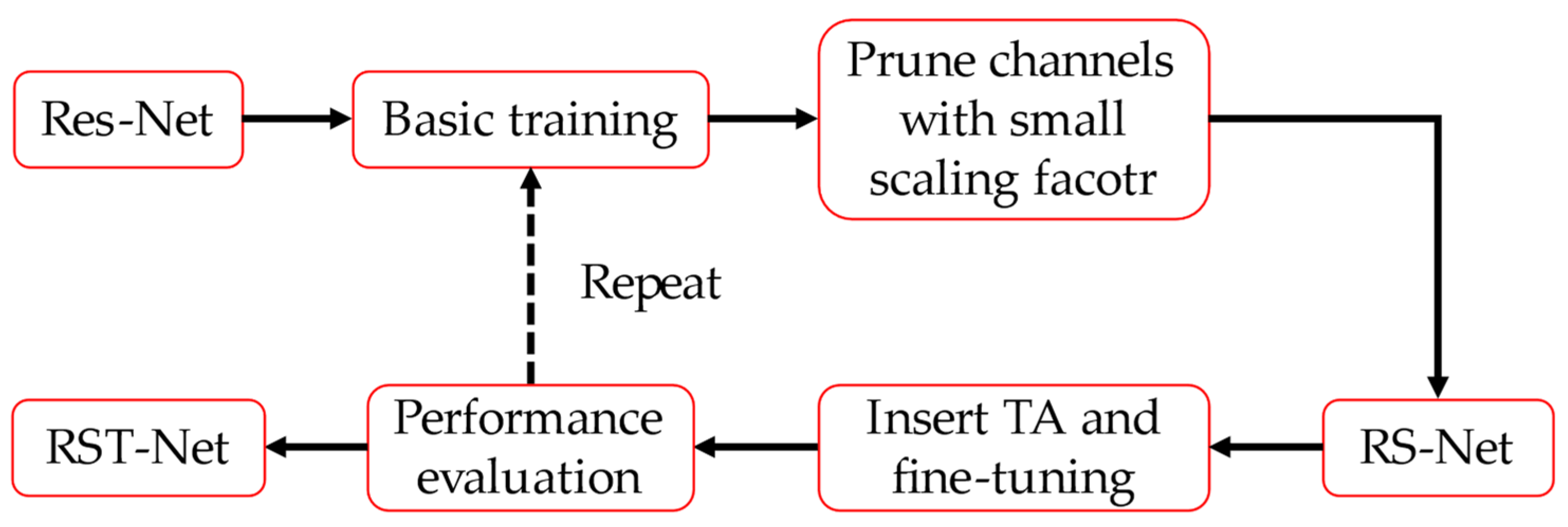

- A lightweight deep learning algorithm is proposed to detect seatbelts by applying channel pruning to ResNet, which effectively reduces the network’s size and minimizes the run-time memory footprint. Moreover, a novel attention mechanism, Triplet Attention (TA) [16], is employed to emphasize seatbelt features and suppress irrelevant information. The TA module attends to feature information in finer detail through cross-dimension interaction, improving network robustness.

- Finally, extensive experiments verify the validity of the proposed algorithm. RST-Net achieves better detection performance than well-known approaches. In addition, the parameter counts (Params) and giga floating-point operations (GFLOPs) of GM-YOLOv7 and RST-Net are substantially lower than those of the baselines and other improved networks.
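To make the activation swap in the first contribution concrete, the following minimal sketch compares Mish, defined in [15] as x · tanh(softplus(x)), with Leaky ReLU on a few sample inputs. The negative slope of 0.1 and the sample points are assumptions chosen for illustration; they are not taken from the source.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable ln(1 + e^x)."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x: float) -> float:
    """Mish activation: x * tanh(softplus(x))."""
    return x * math.tanh(softplus(x))


def leaky_relu(x: float, negative_slope: float = 0.1) -> float:
    """Leaky ReLU. The slope 0.1 is an assumed value, not stated in the source."""
    return x if x >= 0 else negative_slope * x


if __name__ == "__main__":
    # Illustrative sample points only.
    for x in (-5.0, -1.0, 0.0, 1.0, 5.0):
        print(f"x={x:+.1f}  leaky_relu={leaky_relu(x):+.4f}  mish={mish(x):+.4f}")
```

Unlike Leaky ReLU, Mish is smooth and non-monotonic for negative inputs: it dips below zero near x = -1 and returns towards zero as x becomes more negative.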

2. Related Studies

2.1. Object Detection Algorithm

2.2. Lightweight Technology

2.3. Attention Mechanism

3. Methodologies

3.1. Method Overview

3.2. GM-YOLOv7 Algorithm Model

3.2.1. Slim Backbone Network Based on the Ghost Module
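As a rough illustration of why a Ghost module [13] slims the backbone, the sketch below counts weights for an ordinary convolution and for a Ghost module that produces the same number of output channels. It follows the general formulation in [13]: a primary convolution generates c_out / s intrinsic feature maps, and cheap d × d depthwise operations generate the remaining ones. The layer sizes (64 input channels, 128 output channels, 3 × 3 kernels) and the settings s = 2, d = 3 are hypothetical and are not taken from the GM-YOLOv7 configuration.

```python
def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of an ordinary k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def ghost_params(c_in: int, c_out: int, k: int, s: int = 2, d: int = 3) -> int:
    """Weights of a Ghost module with ratio s and d x d cheap operations.

    A primary convolution yields c_out / s intrinsic maps; each of them is
    expanded by (s - 1) depthwise d x d filters into "ghost" maps.
    """
    assert c_out % s == 0, "c_out must be divisible by the ratio s"
    intrinsic = c_out // s
    primary = c_in * intrinsic * k * k
    cheap = (s - 1) * intrinsic * d * d
    return primary + cheap


if __name__ == "__main__":
    # Hypothetical layer sizes, for illustration only.
    plain = conv_params(64, 128, 3)
    ghost = ghost_params(64, 128, 3, s=2, d=3)
    print(plain, ghost, round(plain / ghost, 2))
```

With these assumed sizes the Ghost module needs roughly half the weights of the plain convolution, which is consistent with the compression ratio of about s derived in [13].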

3.2.2. Improved Activation Function

3.3. Seatbelt Classification Based on RST-Net

3.3.1. Lightweight Residual Network
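The channel pruning named in the contributions can be pictured as ranking the output channels of a layer and keeping only the most important ones. The sketch below uses the L1 norm of each filter as the importance score. This criterion is an assumption made for illustration and may differ from the one the authors use; the function name `select_channels` and the toy weights are hypothetical.

```python
from typing import List


def select_channels(filters: List[List[float]], prune_ratio: float) -> List[int]:
    """Return the indices of the output channels to keep.

    filters[i] holds the flattened weights of output channel i.
    Channels are ranked by L1 norm (an assumed importance score) and the
    lowest-scoring fraction `prune_ratio` is dropped.
    """
    n_keep = max(1, round(len(filters) * (1 - prune_ratio)))
    scores = [sum(abs(w) for w in f) for f in filters]
    ranked = sorted(range(len(filters)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:n_keep])


if __name__ == "__main__":
    # Toy weights for four output channels, for illustration only.
    toy = [[0.1, -0.1], [1.0, 2.0], [0.0, 0.05], [-3.0, 0.5]]
    print(select_channels(toy, 0.25))  # drops the weakest channel
    print(select_channels(toy, 0.50))  # drops the two weakest channels
```

After selection, the kept indices would be used to slice both the pruned layer's filters and the matching input channels of the next layer, followed by fine-tuning to recover accuracy.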

3.3.2. Triplet Attention
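Triplet Attention [16] builds its three branches on a pooling step called Z-pool, which stacks the max-pooled and average-pooled maps taken across one dimension of the feature tensor. The sketch below implements only that pooling step on a nested-list tensor of shape C × H × W; the convolution, sigmoid gating, rotation, and branch averaging of the full module are omitted. The function name `z_pool` and the toy tensor are hypothetical.

```python
from typing import List, Tuple

Tensor3 = List[List[List[float]]]
Map2 = List[List[float]]


def z_pool(x: Tensor3, axis: int) -> Tuple[Map2, Map2]:
    """Pool a C x H x W tensor over `axis` (0 = C, 1 = H, 2 = W).

    Returns (max_map, mean_map), the two maps that Z-pool concatenates.
    """
    dims = (len(x), len(x[0]), len(x[0][0]))
    keep = [d for d in range(3) if d != axis]
    max_map, mean_map = [], []
    for i in range(dims[keep[0]]):
        max_row, mean_row = [], []
        for j in range(dims[keep[1]]):
            vals = []
            for k in range(dims[axis]):
                idx = [0, 0, 0]
                idx[keep[0]], idx[keep[1]], idx[axis] = i, j, k
                vals.append(x[idx[0]][idx[1]][idx[2]])
            max_row.append(max(vals))
            mean_row.append(sum(vals) / len(vals))
        max_map.append(max_row)
        mean_map.append(mean_row)
    return max_map, mean_map


if __name__ == "__main__":
    # Toy tensor with C=2, H=2, W=3, for illustration only.
    x = [[[1, 2, 3], [4, 5, 6]], [[6, 5, 4], [3, 2, 1]]]
    for axis in range(3):
        print(axis, z_pool(x, axis))
```

Pooling over axis 0 gives the spatial (H, W) branch, while pooling over axes 1 and 2 gives the two branches that capture interaction between the channel dimension and one spatial dimension.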

4. Experimental Results

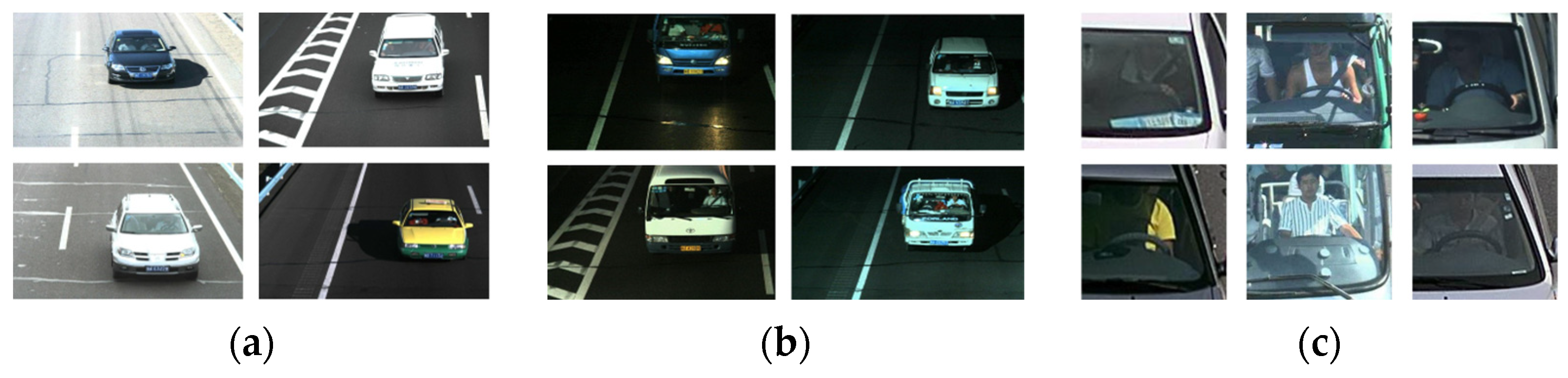

4.1. Dataset and Evaluation Metrics

4.2. Experimental Configurations

4.3. Ablation Studies and Analysis

4.4. Model Comparisons

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wang, Z.; Ma, Y. Detection and Recognition of Stationary Vehicles and Seat Belts in Intelligent Internet of Things Traffic Management System. Neural Comput. Appl. 2022, 34, 3513–3522.

2. Wang, D. Intelligent Detection of Vehicle Driving Safety Based on Deep Learning. Wirel. Commun. Mob. Comput. 2022, 2022, 1095524.

3. Zhang, D. Analysis and Research on the Images of Drivers and Passengers Wearing Seat Belt in Traffic Inspection. Clust. Comput. 2019, 22, 9089–9095.

4. Chen, Y.; Tao, G.; Ren, H.; Lin, X.; Zhang, L. Accurate Seat Belt Detection in Road Surveillance Images Based on CNN and SVM. Neurocomputing 2018, 274, 80–87.

5. Hosameldeen, O. Deep Learning-Based Car Seatbelt Classifier Resilient to Weather Conditions. Int. J. Eng. Technol. 2020, 9, 229–237.

6. Luo, J.; Lu, J.; Yue, G. Seatbelt Detection in Road Surveillance Images Based on Improved Dense Residual Network with Two-Level Attention Mechanism. J. Electron. Imag. 2021, 30, 033036.

7. Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

8. Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

9. Hosseini, S.; Fathi, A. Automatic Detection of Vehicle Occupancy and Driver’s Seat Belt Status Using Deep Learning. SIViP 2022, 17, 491–499.

10. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

11. Yang, K.; Zhang, D.; Li, Y. Seat Belt Detecting of Car Drivers with Deep Learning. J. China Univ. Metrol. 2017, 28, 326–333.

12. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

13. Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More Features from Cheap Operations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 1577–1586.

14. Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. arXiv 2022, arXiv:2207.02696.

15. Misra, D. Mish: A Self Regularized Non-Monotonic Activation Function. arXiv 2020, arXiv:1908.08681.

16. Misra, D.; Nalamada, T.; Arasanipalai, A.U.; Hou, Q. Rotate to Attend: Convolutional Triplet Attention Module. arXiv 2020, arXiv:2010.03045.

17. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. arXiv 2014, arXiv:1311.2524.

18. Girshick, R. Fast R-CNN. arXiv 2015, arXiv:1504.08083.

19. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. arXiv 2018, arXiv:1703.06870.

20. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. arXiv 2018, arXiv:1708.02002.

21. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2016, arXiv:1506.02640.

22. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision (ECCV 2016), Amsterdam, The Netherlands, 11–14 October 2016; Volume 9905, pp. 21–37.

23. He, Y.; Zhang, X.; Sun, J. Channel Pruning for Accelerating Very Deep Neural Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1398–1406.

24. Deng, B.L.; Li, G.; Han, S.; Shi, L.; Xie, Y. Model Compression and Hardware Acceleration for Neural Networks: A Comprehensive Survey. Proc. IEEE 2020, 108, 485–532.

25. Jacob, B.; Kligys, S.; Chen, B.; Zhu, M.; Tang, M.; Howard, A.; Adam, H.; Kalenichenko, D. Quantization and Training of Neural Networks for Efficient Integer-Arithmetic-Only Inference. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2704–2713.

26. Zhang, P.; Zhong, Y.; Li, X. SlimYOLOv3: Narrower, Faster and Better for Real-Time UAV Applications. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Republic of Korea, 27–28 October 2019; pp. 37–45.

27. Wen, W.; Wu, C.; Wang, Y.; Chen, Y.; Li, H. Learning Structured Sparsity in Deep Neural Networks. arXiv 2016, arXiv:1608.03665.

28. LeCun, Y.; Denker, J.S.; Solla, S.A. Optimal Brain Damage. In Proceedings of the IEEE International Conference on Neural Networks, San Francisco, CA, USA, 28 March–1 April 1993; pp. 293–299.

29. Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. arXiv 2017, arXiv:1610.02357.

30. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

31. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. arXiv 2017, arXiv:1707.01083.

32. Courbariaux, M.; Hubara, I.; Soudry, D.; El-Yaniv, R.; Bengio, Y. Binarized Neural Networks: Training Deep Neural Networks with Weights and Activations Constrained to +1 or −1. arXiv 2016, arXiv:1602.02830.

33. Rastegari, M.; Ordonez, V.; Redmon, J.; Farhadi, A. XNOR-Net: ImageNet Classification Using Binary Convolutional Neural Networks. arXiv 2016, arXiv:1603.05279.

34. Kolda, T.G.; Bader, B.W. Tensor Decompositions and Applications. SIAM Rev. 2009, 51, 455–500.

35. Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Vedaldi, A. Gather-Excite: Exploiting Feature Context in Convolutional Neural Networks. arXiv 2019, arXiv:1810.12348.

36. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. arXiv 2018, arXiv:1807.06521.

37. Yang, L.; Zhang, R.-Y.; Li, L.; Xie, X. SimAM: A Simple, Parameter-Free Attention Module for Convolutional Neural Networks. In Proceedings of the International Conference on Machine Learning, Online, 18–24 July 2021; pp. 11863–11874.

38. Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. arXiv 2021, arXiv:2103.02907.

39. Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. arXiv 2015, arXiv:1502.03167.

40. Mao, A.; Mohri, M.; Zhong, Y. Cross-Entropy Loss Functions: Theoretical Analysis and Applications. arXiv 2023, arXiv:2304.07288.

41. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. arXiv 2020, arXiv:1910.03151.

42. Zhang, Q.-L.; Yang, Y.-B. SA-Net: Shuffle Attention for Deep Convolutional Neural Networks. arXiv 2021, arXiv:2102.00240.

43. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

44. Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430.

45. Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv 2020, arXiv:1905.11946.

46. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. arXiv 2017, arXiv:1611.05431.

47. Zagoruyko, S.; Komodakis, N. Wide Residual Networks. arXiv 2017, arXiv:1605.07146.

48. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2020, 128, 336–359.

| Different Scenarios | Training Set | Validation Set | Validation Set |
|---|---|---|---|
| Windshield | 4000 | 363 | 366 |
| Seatbelt | 3333 | 400 | 250 |

| Item | Specification |
|---|---|
| CPU | Intel i5-12500H |
| GPU | NVIDIA GeForce RTX 3060 |
| OS | Windows 11 |
| CUDA | 11.7.1 |
| PyTorch | 1.13.1 |
| Python | 3.8 |

| Model | mAP@0.5/% | mAP@0.5:0.95/% | Params/M | GFLOPs | Size/MB |
|---|---|---|---|---|---|
| YOLOv7-tiny | 99.5 | 81.1 | 6.0 | 13.0 | 12.3 |
| G-YOLOv7 | 98.8 | 79.7 | 4.8 | 9.8 | 9.9 |
| M-YOLOv7 | 99.5 | 81.5 | 6.0 | 13.0 | 12.3 |
| GM-YOLOv7 | 99.3 | 84.2 | 4.8 | 9.8 | 9.9 |
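The savings in the ablation table can be expressed as relative reductions. The short calculation below uses the baseline and GM-YOLOv7 rows of that table; only the helper name `reduction_pct` is introduced here for illustration.

```python
def reduction_pct(baseline: float, improved: float) -> float:
    """Relative reduction of `improved` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - improved) / baseline


# Values copied from the baseline and GM-YOLOv7 rows of the ablation table.
baseline = {"Params/M": 6.0, "GFLOPs": 13.0, "Size/MB": 12.3}
gm_yolov7 = {"Params/M": 4.8, "GFLOPs": 9.8, "Size/MB": 9.9}

reductions = {k: reduction_pct(baseline[k], gm_yolov7[k]) for k in baseline}

if __name__ == "__main__":
    for name, pct in reductions.items():
        print(f"{name}: {pct:.1f}% lower than the baseline")
```

This works out to about 20% fewer parameters, about 25% fewer GFLOPs, and a model file roughly 20% smaller, while mAP@0.5 drops by 0.2 points and mAP@0.5:0.95 rises by 3.1 points.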

| Model | TA | Pruning Ratio 25% | Pruning Ratio 50% | Accuracy/% | F1 Score/% | Params/M | GFLOPs |
|---|---|---|---|---|---|---|---|
| ResNet | | | | 94 | 94.07 | 25.5 | 4.1 |
| ResNet | ✓ | | | 96.75 | 97.76 | 25.6 | 5.4 |
| ResNet | ✓ | ✓ | | 98.25 | 98.25 | 13.2 | 3.1 |
| ResNet | ✓ | | ✓ | 94.25 | 94.18 | 9.9 | 2.3 |

| Model | Attention Module | Accuracy/% | Precision/% | Recall/% |
|---|---|---|---|---|
| ResNet | SE | 97 | 97.47 | 95.5 |
| ResNet | SA | 95.75 | 96.45 | 95 |
| ResNet | ECA | 96 | 95.54 | 96.5 |
| ResNet | CBAM | 96.75 | 96.98 | 96.5 |
| ResNet | CA | 97.25 | 98.96 | 95.5 |
| ResNet | TA | 98.25 | 98.49 | 98 |

| Network | mAP@0.5/% | mAP@0.5:0.95/% | Params/M | GFLOPs | Size/MB |
|---|---|---|---|---|---|
| YOLOv3-tiny | 98.4 | 61.8 | 8.7 | 13.0 | 16.6 |
| YOLOX-s | 99.0 | 79.6 | 8.8 | 26.5 | 68.0 |
| YOLOv5-s | 99.2 | 80.0 | 7.0 | 15.8 | 14.4 |
| YOLOv7-tiny | 99.5 | 81.1 | 6.0 | 13.0 | 12.3 |
| GM-YOLOv7 | 99.3 | 84.2 | 4.8 | 9.8 | 9.9 |

| Model | Accuracy/% | Precision/% | Recall/% | F1 Score/% | Params/M | GFLOPs |
|---|---|---|---|---|---|---|
| AlexNet | 86.74 | 82.67 | 93 | 87.53 | 61.1 | 0.93 |
| DenseNet | 97.75 | 97.99 | 97.50 | 97.74 | 20.0 | 5.7 |
| EfficientNet | 97.50 | 97.98 | 97 | 97.49 | 12.2 | 1.3 |
| ResNeXt | 96.75 | 96.06 | 97.50 | 96.77 | 25.0 | 5.6 |
| Wide ResNet | 97.25 | 95.22 | 99.5 | 97.21 | 68.9 | 15.0 |
| RST-Net | 98.25 | 98.49 | 98 | 98.25 | 13.2 | 3.1 |
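The classification metrics in this table follow the standard definitions based on confusion-matrix counts. The sketch below computes them for one hypothetical confusion matrix: 400 test images split evenly between the two classes, with TP = 196, FP = 3, FN = 4, TN = 197. These counts are not reported in the source; they are an assumption chosen because they are consistent with the RST-Net row.

```python
from typing import Dict


def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 score in percent."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": 100.0 * (tp + tn) / (tp + fp + fn + tn),
        "precision": 100.0 * precision,
        "recall": 100.0 * recall,
        "f1": 100.0 * 2 * precision * recall / (precision + recall),
    }


# Hypothetical counts, assumed for illustration (not reported in the source).
m = classification_metrics(tp=196, fp=3, fn=4, tn=197)

if __name__ == "__main__":
    for name, value in m.items():
        print(f"{name}: {value:.2f}%")
```

Rounded to two decimals, these counts give 98.25% accuracy, 98.49% precision, 98% recall, and a 98.25% F1 score, matching the reported RST-Net figures.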


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qiu, L.; Rao, J.; Zhao, X. Seatbelt Detection Algorithm Improved with Lightweight Approach and Attention Mechanism. Appl. Sci. 2024, 14, 3346. https://doi.org/10.3390/app14083346
