Advancements in EEG Emotion Recognition: Leveraging Multi-Modal Database Integration

Abstract

1. Introduction

1.1. Emotion Recognition through Facial Expressions

1.2. Emotion Recognition through EEG Signals

1.3. Privacy and Ethical Considerations

2. Database and Data Collection

2.1. Headset Selection

2.2. Consideration of Participant Situational Context

2.3. Stimulus Videos

2.4. Experimental Setup

- 1 indicates no signal, suggesting that the electrode is most likely dead or disconnected;

- 2 indicates a very weak signal that is not usable;

- 3 indicates an acceptable signal reception;

- 4 indicates a good signal.
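One way these levels could be used is to decide which channels are usable before a recording starts. The minimal Python sketch below keeps only channels rated acceptable (3) or good (4). The threshold, the function name `usable_channels`, and the sample readings are hypothetical; how the headset software actually exposes contact quality is not described here.

```python
# Contact-quality levels as listed above (1 = no signal ... 4 = good).
QUALITY_LABELS = {
    1: "no signal (electrode likely dead or disconnected)",
    2: "very weak signal, not usable",
    3: "acceptable signal",
    4: "good signal",
}

# ASSUMPTION: levels 3 and 4 count as usable; levels 1 and 2 are rejected.
MIN_USABLE_QUALITY = 3


def usable_channels(quality_by_channel: dict[str, int]) -> list[str]:
    """Return the channels whose contact quality is acceptable or better."""
    for name, level in quality_by_channel.items():
        if level not in QUALITY_LABELS:
            raise ValueError(f"unknown quality level {level} for channel {name}")
    return [name for name, level in quality_by_channel.items()
            if level >= MIN_USABLE_QUALITY]


if __name__ == "__main__":
    # Hypothetical readings for four electrodes.
    readings = {"AF3": 4, "F7": 3, "T7": 2, "O1": 1}
    print(usable_channels(readings))  # ['AF3', 'F7']
```

A session would then proceed only once every required channel appears in the returned list.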

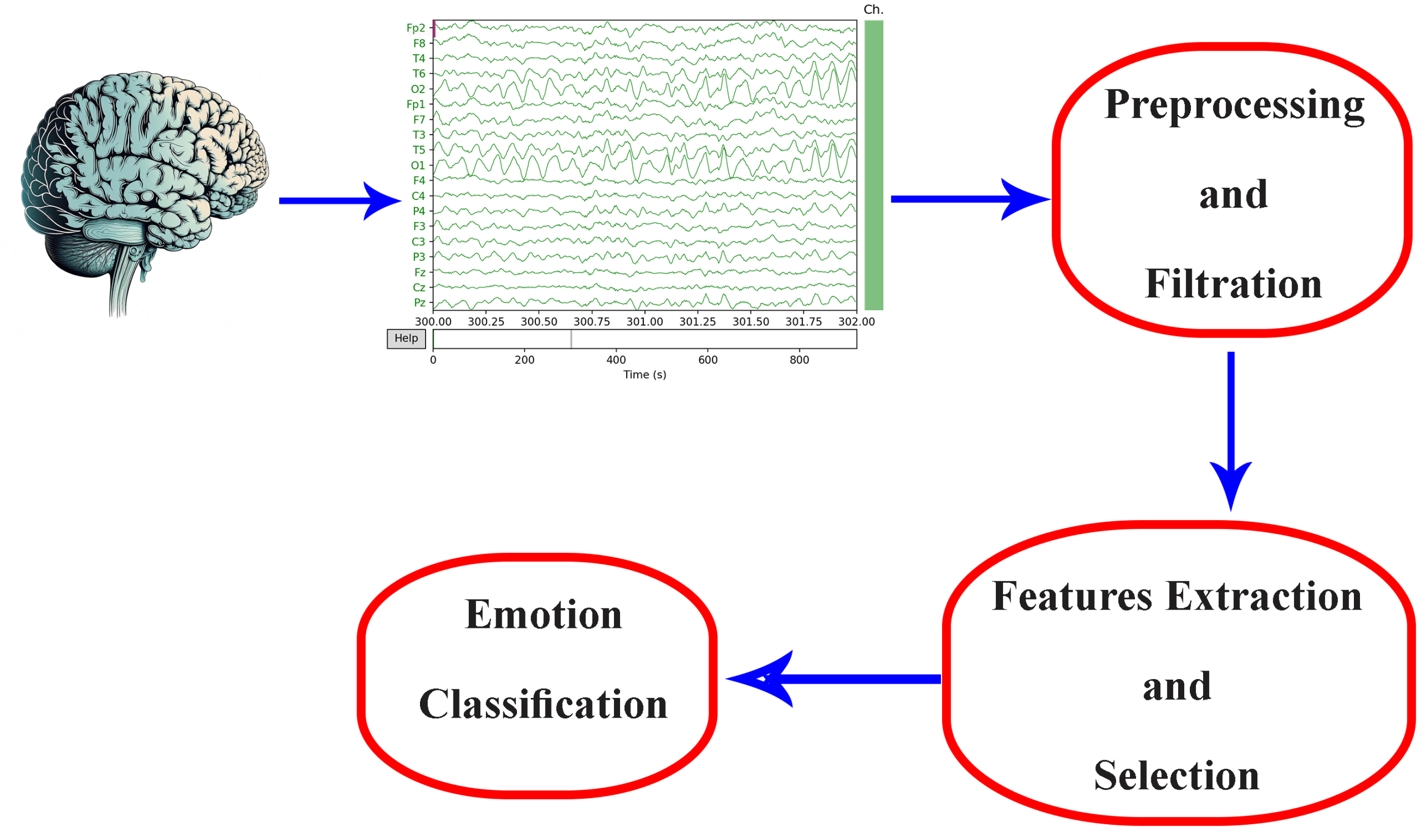

3. Multi-Modal System EEG with Facial Expressions—Proposed Model

3.1. Facial Expression-Based Emotion Recognition

3.2. EEG Topographies and Brain Heat Maps

3.3. EEG Electrode and Frequency Band Selection

3.4. EEG-Based Emotion Recognition

3.5. Fusion of Networks’ Results

- Feature-Level Fusion: Combines the features extracted from the EEG and facial expression data before classification.

- Decision-Level Fusion: Combines the independent decisions of each modality to reach a final classification.

- Late Fusion: Integrates the models themselves, for example through ensemble methods or hybrid architectures.

- Early Fusion: Combines the input data of the DeepFace and EEG-based CNNs and feeds it to a single CNN for collaborative feature learning.
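To make the difference between the first two strategies concrete, here is a minimal sketch, assuming each modality already yields either a feature vector or a vector of per-class probabilities. Feature-level fusion concatenates the vectors so that a single classifier sees both; decision-level fusion combines the outputs of two separate classifiers, here with a weighted average. The function names, the equal default weight, and the two-class happy/sad setting are illustrative assumptions, not the method used in this work.

```python
from typing import Sequence


def feature_level_fusion(eeg_features: Sequence[float],
                         face_features: Sequence[float]) -> list[float]:
    """Concatenate the two modality feature vectors so one classifier sees both."""
    return list(eeg_features) + list(face_features)


def decision_level_fusion(eeg_probs: Sequence[float],
                          face_probs: Sequence[float],
                          eeg_weight: float = 0.5) -> list[float]:
    """Weighted average of per-class probabilities from two independent classifiers."""
    if len(eeg_probs) != len(face_probs):
        raise ValueError("both classifiers must score the same set of classes")
    if not 0.0 <= eeg_weight <= 1.0:
        raise ValueError("eeg_weight must lie in [0, 1]")
    return [eeg_weight * p_eeg + (1.0 - eeg_weight) * p_face
            for p_eeg, p_face in zip(eeg_probs, face_probs)]


def predict(probs: Sequence[float], classes: Sequence[str]) -> str:
    """Return the class with the highest fused probability."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return classes[best]


if __name__ == "__main__":
    classes = ["happy", "sad"]
    eeg_probs = [0.8, 0.2]   # hypothetical EEG classifier output
    face_probs = [0.4, 0.6]  # hypothetical facial-expression classifier output
    fused = decision_level_fusion(eeg_probs, face_probs)
    print(predict(fused, classes))  # happy
```

Raising `eeg_weight` above 0.5 is one simple way to favour the EEG modality when it is considered more reliable.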

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Almasoudi, A.; Baowidan, S.; Sarhan, S. Facial Expressions Decoded: A Survey of Facial Emotion Recognition. Int. J. Comput. Appl. 2023, 185, 1–11.

2. Leong, S.C.; Tang, Y.M.; Lai, C.H.; Lee, C.K.M. Facial expression and body gesture emotion recognition: A systematic review on the use of visual data in affective computing. Comput. Sci. Rev. 2023, 48, 100545.

3. Ancilin, J.; Milton, A. Improved speech emotion recognition with Mel frequency magnitude coefficient. Appl. Acoust. 2021, 179, 108046.

4. Miranda-Correa, J.A.; Abadi, M.K.; Sebe, N.; Patras, I. AMIGOS: A Dataset for Affect, Personality and Mood Research on Individuals and Groups. IEEE Trans. Affect. Comput. 2021, 12, 479–493.

5. Poria, S.; Cambria, E.; Bajpai, R.; Hussain, A. A review of affective computing: From unimodal analysis to multimodal fusion. Inf. Fusion 2017, 37, 98–125.

6. Cannon, W.B. The James-Lange theory of emotions: A critical examination and an alternative theory. Am. J. Psychol. 1927, 39, 106–124.

7. Dror, O.E. The Cannon–Bard thalamic theory of emotions: A brief genealogy and reappraisal. Emot. Rev. 2014, 6, 13–20.

8. Lin, W.; Li, C. Review of Studies on Emotion Recognition and Judgment Based on Physiological Signals. Appl. Sci. 2023, 13, 2573.

9. Vasanth, P.C.; Nataraj, K.R. Facial Expression Recognition Using SVM Classifier. Indones. J. Electr. Eng. Inform. IJEEI 2015, 3.

10. Yl, M.; Kuilenburg, H. The FaceReader: Online Facial Expression Recognition. In Proceedings of the Measuring Behavior, Wageningen, The Netherlands, 30 August–2 September 2005.

11. Zhu, D.; Fu, Y.; Zhao, X.; Wang, X.; Yi, H. Facial Emotion Recognition Using a Novel Fusion of Convolutional Neural Network and Local Binary Pattern in Crime Investigation. Comput. Intell. Neurosci. 2022, 2022, 2249417.

12. Ji, L.; Wu, S.; Gu, X. A facial expression recognition algorithm incorporating SVM and explainable residual neural network. Signal Image Video Process. 2023, 17, 4245–4254.

13. Donuk, K.; Ari, A.; Ozdemir, M.; Hanbay, D. Deep Feature Selection for Facial Emotion Recognition Based on BPSO and SVM. J. Polytech. 2021, 26, 131–142.

14. Singh, R.; Saurav, S.; Kumar, T.; Saini, R.; Vohra, A.; Singh, S. Facial expression recognition in videos using hybrid CNN & ConvLSTM. Int. J. Inf. Technol. 2023, 15, 1819–1830.

15. Du, G.; Long, S.; Yuan, H. Non-Contact Emotion Recognition Combining Heart Rate and Facial Expression for Interactive Gaming Environments. IEEE Access 2020, 8, 11896–11906.

16. Mittal, T.; Bhattacharya, U.; Chandra, R.; Bera, A.; Manocha, D. M3er: Multiplicative multimodal emotion recognition using facial, textual, and speech cues. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 1359–1367.

17. Tan, Y.; Sun, Z.; Duan, F.; Solé-Casals, J.; Caiafa, C.F. A multimodal emotion recognition method based on facial expressions and electroencephalography. Biomed. Signal Process. Control 2021, 70, 103029.

18. Harmony, T. The functional significance of delta oscillations in cognitive processing. Front. Integr. Neurosci. 2013, 7, 83.

19. Theódórsdóttir, D.; Höller, Y. EEG-correlates of emotional memory and seasonal symptoms. Appl. Sci. 2023, 13, 9361.

20. Miskovic, V.; Schmidt, L.A. Frontal brain electrical asymmetry and cardiac vagal tone predict biased attention to social threat. Biol. Psychol. 2010, 84, 344–348.

21. Roshdy, A.; Al Kork, S.; Karar, A.; Al Sabi, A.; Al Barakeh, Z.; ElSayed, F.; Beyrouthy, T.; Nait-Ali, A. Machine Empathy: Digitizing Human Emotions. In Proceedings of the 2021 International Symposium on Electrical, Electronics and Information Engineering, Seoul, Republic of Korea, 19–21 February 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 307–311.

22. Piho, L.; Tjahjadi, T. A Mutual Information Based Adaptive Windowing of Informative EEG for Emotion Recognition. IEEE Trans. Affect. Comput. 2020, 11, 722–735.

23. Chen, X.; Zeng, W.; Shi, Y.; Deng, J.; Ma, Y. Intrinsic prior knowledge driven CICA fMRI data analysis for emotion recognition classification. IEEE Access 2019, 7, 59944–59950.

24. Li, X.; Song, D.; Zhang, P.; Yu, G.; Hou, Y.; Hu, B. Emotion recognition from multi-channel EEG data through Convolutional Recurrent Neural Network. In Proceedings of the 2016 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Shenzhen, China, 15–18 December 2016; pp. 352–359.

25. Candra, H.; Yuwono, M.; Chai, R.; Handojoseno, A.; Elamvazuthi, I.; Nguyen, H.T.; Su, S. Investigation of window size in classification of EEG-emotion signal with wavelet entropy and support vector machine. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 7250–7253.

26. Rudakov, E.; Laurent, L.; Cousin, V.; Roshdi, A.; Fournier, R.; Nait-ali, A.; Beyrouthy, T.; Kork, S.A. Multi-Task CNN model for emotion recognition from EEG Brain maps. In Proceedings of the 2021 4th International Conference on Bio-Engineering for Smart Technologies (BioSMART), Paris, France, 8–10 December 2021.

27. Vijayan, A.E.; Sen, D.; Sudheer, A. EEG-Based Emotion Recognition Using Statistical Measures and Auto-Regressive Modeling. In Proceedings of the 2015 IEEE International Conference on Computational Intelligence & Communication Technology, Ghaziabad, India, 13–14 February 2015; pp. 587–591.

28. Subasi, A.; Tuncer, T.; Dogan, S.; Tanko, D.; Sakoglu, U. EEG-based emotion recognition using tunable Q wavelet transform and rotation forest ensemble classifier. Biomed. Signal Process. Control 2021, 68, 102648.

29. Vo, H.T.T.; Dang, L.N.T.; Nguyen, V.T.N.; Huynh, V.T. A Survey of Machine Learning algorithms in EEG. In Proceedings of the 2019 6th NAFOSTED Conference on Information and Computer Science (NICS), Hanoi, Vietnam, 12–13 December 2019; pp. 500–505.

30. Abdulrahman, A.; Baykara, M.; Alakus, T.B. A Novel Approach for Emotion Recognition Based on EEG Signal Using Deep Learning. Appl. Sci. 2022, 12, 10028.

31. Cheng, J.; Chen, M.; Li, C.; Liu, Y.; Song, R.; Liu, A.; Chen, X. Emotion Recognition From Multi-Channel EEG via Deep Forest. IEEE J. Biomed. Health Inform. 2021, 25, 453–464.

32. Veeramallu, G.K.P.; Anupalli, Y.; Jilumudi, S.K.; Bhattacharyya, A. EEG based automatic emotion recognition using EMD and Random forest classifier. In Proceedings of the 2019 10th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kanpur, India, 6–8 July 2019; pp. 1–6.

33. Zheng, W.L.; Zhu, J.Y.; Lu, B.L. Identifying Stable Patterns over Time for Emotion Recognition from EEG. IEEE Trans. Affect. Comput. 2019, 10, 417–429.

34. Yin, Y.; Zheng, X.; Hu, B.; Zhang, Y.; Cui, X. EEG emotion recognition using fusion model of graph convolutional neural networks and LSTM. Appl. Soft Comput. 2021, 100, 106954.

35. Johnston, S.J.; Boehm, S.G.; Healy, D.; Goebel, R.; Linden, D.E.J. Neurofeedback: A promising tool for the self-regulation of emotion networks. NeuroImage 2010, 49, 1066–1072.

36. Soleymani, M.; Asghari-Esfeden, S.; Fu, Y.; Pantic, M. Analysis of EEG Signals and Facial Expressions for Continuous Emotion Detection. IEEE Trans. Affect. Comput. 2016, 7, 17–28.

37. Topic, A.; Russo, M. Emotion recognition based on EEG feature maps through deep learning network. Eng. Sci. Technol. Int. J. 2021, 24, 1442–1454.

38. Roshdy, A.; Al Kork, S.; Beyrouthy, T.; Nait-ali, A. Simplicial Homology Global Optimization of EEG Signal Extraction for Emotion Recognition. Robotics 2023, 12, 99.

39. Roshdy, A.; Karar, A.S.; Al-Sabi, A.; Barakeh, Z.A.; El-Sayed, F.; Alkork, S.; Beyrouthy, T.; Nait-ali, A. Towards Human Brain Image Mapping for Emotion Digitization in Robotics. In Proceedings of the 2019 3rd International Conference on Bio-Engineering for Smart Technologies (BioSMART), Paris, France, 24–26 April 2019; pp. 1–5.

40. Rayatdoost, S.; Rudrauf, D.; Soleymani, M. Expression-guided EEG representation learning for emotion recognition. In Proceedings of the 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2020), Barcelona, Spain, 4–8 May 2020.

41. Bashivan, P.; Rish, I.; Yeasin, M.; Codella, N. Learning representations from EEG with deep recurrent-convolutional neural networks. arXiv 2015, arXiv:1511.06448.

42. Hu, X.; Chen, J.; Wang, F.; Zhang, D. Ten challenges for EEG-based affective computing. Brain Sci. Adv. 2019, 5, 1–20.

43. Emotiv Systems Inc. Emotiv: Brain Computer Interface Technology; Emotiv Systems Inc.: San Francisco, CA, USA, 2013.

44. Pehkonen, S.; Rauniomaa, M.; Siitonen, P. Participating researcher or researching participant? On possible positions of the researcher in the collection (and analysis) of mobile video data. Soc. Interact.-Video-Based Stud. Hum. Soc. 2021, 4.

45. Homan, R.W. The 10-20 electrode system and cerebral location. Am. J. EEG Technol. 1988, 28, 269–279.

46. Raghu, M.; Poole, B.; Kleinberg, J.; Ganguli, S.; Sohl-Dickstein, J. On the expressive power of deep neural networks. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 2847–2854.

47. Dong, Y.; Liu, Q.; Du, B.; Zhang, L. Weighted feature fusion of convolutional neural network and graph attention network for hyperspectral image classification. IEEE Trans. Image Process. 2022, 31, 1559–1572.

48. Serengil, S.I.; Ozpinar, A. HyperExtended LightFace: A Facial Attribute Analysis Framework. In Proceedings of the 2021 International Conference on Engineering and Emerging Technologies (ICEET), Istanbul, Turkey, 27–28 October 2021; pp. 1–4.

49. Lee, D.H.; Yoo, J.H. CNN Learning Strategy for Recognizing Facial Expressions. IEEE Access 2023, 11, 70865–70872.

50. Rullmann, M.; Preusser, S.; Pleger, B. Prefrontal and posterior parietal contributions to the perceptual awareness of touch. Sci. Rep. 2019, 9, 16981.

51. Aileni, R.M.; Pasca, S.; Florescu, A. EEG-Brain Activity Monitoring and Predictive Analysis of Signals Using Artificial Neural Networks. Sensors 2020, 20, 3346.

52. Fingelkurts, A.A.; Fingelkurts, A.A. Morphology and dynamic repertoire of EEG short-term spectral patterns in rest: Explorative study. Neurosci. Res. 2010, 66, 299–312.

53. Zhao, W.; Van Someren, E.J.; Li, C.; Chen, X.; Gui, W.; Tian, Y.; Liu, Y.; Lei, X. EEG spectral analysis in insomnia disorder: A systematic review and meta-analysis. Sleep Med. Rev. 2021, 59, 101457.

54. Zheng, W.L.; Lu, B.L. Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans. Auton. Ment. Dev. 2015, 7, 162–175.

55. Roshdy, A.; Alkork, S.; Karar, A.S.; Mhalla, H.; Beyrouthy, T.; Al Barakeh, Z.; Nait-ali, A. Statistical Analysis of Multi-channel EEG Signals for Digitizing Human Emotions. In Proceedings of the 2021 4th International Conference on Bio-Engineering for Smart Technologies (BioSMART), Paris, France, 8–10 December 2021; pp. 1–4.

56. Apicella, A.; Arpaia, P.; Isgro, F.; Mastrati, G.; Moccaldi, N. A Survey on EEG-Based Solutions for Emotion Recognition with a Low Number of Channels. IEEE Access 2022, 10, 117411–117428.

57. Liu, Y.; Chen, X.; Cheng, J.; Peng, H. A medical image fusion method based on convolutional neural networks. In Proceedings of the 2017 20th International Conference on Information Fusion (Fusion 2017), Xi’an, China, 10–13 July 2017; pp. 1–7.

58. Jing, L.; Wang, T.; Zhao, M.; Wang, P. An adaptive multi-sensor data fusion method based on deep convolutional neural networks for fault diagnosis of planetary gearbox. Sensors 2017, 17, 414.

59. Jiang, S.F.; Zhang, C.M.; Zhang, S. Two-stage structural damage detection using fuzzy neural networks and data fusion techniques. Expert Syst. Appl. 2011, 38, 511–519.

60. Zheng, S.; Qi, P.; Chen, S.; Yang, X. Fusion Methods for CNN-Based Automatic Modulation Classification. IEEE Access 2019, 7, 66496–66504.

| Frequency Band | Frequency Range (Hz) | Emotion Recognition Relevance | References |
|---|---|---|---|
| Delta | 0.5–4 | Limited direct relevance in emotion recognition; more related to deep sleep and unconsciousness | [18] |
| Theta | 4–8 | Associated with emotional processing and memory; some studies link theta activity to emotional arousal and valence | [19] |
| Alpha | 8–13 | The lower alpha band (8–10 Hz) has been found to reflect emotionally induced changes in cognitive and affective processing | [19] |
| Beta | 13–30 | Beta band activity has been associated with emotional regulation and processing, especially in frontal and prefrontal brain regions | [20] |
| Gamma | 30–100 | Some studies suggest a role in emotion perception, attention, and high-level cognitive processing related to emotions | [19] |
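Band power within ranges like these is a common EEG feature. The sketch below sums periodogram power over the DFT bins falling inside each band of the table. The 256 Hz sampling rate and the synthetic test signal are assumptions for illustration (the 100 Hz gamma edge is only reachable when the sampling rate exceeds 200 Hz), and the naive DFT is used only to keep the example dependency-free; this is not necessarily how features were extracted in this work.

```python
import cmath
import math
from typing import Sequence

# Band edges in Hz, taken from the table above.
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 100.0),
}


def band_powers(signal: Sequence[float], fs: float) -> dict[str, float]:
    """Sum periodogram power |X[k]|^2 / N over the DFT bins inside each band.

    Bins are assigned to half-open intervals [low, high), so a shared edge
    (e.g. 8 Hz) is counted in exactly one band. The naive O(N^2) DFT keeps
    the sketch free of dependencies; use an FFT for real recordings.
    """
    n = len(signal)
    powers = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2 + 1):  # skip the DC bin, stop at Nyquist
        freq = k * fs / n
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(signal))
        power = abs(coeff) ** 2 / n
        for name, (low, high) in BANDS.items():
            if low <= freq < high:
                powers[name] += power
    return powers


if __name__ == "__main__":
    fs = 256.0  # ASSUMED sampling rate (Hz)
    n = 512     # 2 s window -> 0.5 Hz bin spacing
    # Synthetic signal: strong 10 Hz (alpha) plus weaker 20 Hz (beta) component.
    sig = [math.sin(2 * math.pi * 10 * t / fs)
           + 0.3 * math.sin(2 * math.pi * 20 * t / fs) for t in range(n)]
    powers = band_powers(sig, fs)
    print(max(powers, key=powers.get))  # alpha
```

In practice the powers are usually computed per electrode and per time window, and often log-scaled or normalized before being passed to a classifier.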

| | Clip 1 | Clip 2 | Clip 3 | Clip 4 | Clip 5 | Clip 6 |
|---|---|---|---|---|---|---|
| Happy | 47 | 50 | 49 | 61 | 54 | 56 |
| Angry | 52 | 48 | 62 | 50 | 50 | 49 |
| Sad | 83 | 55 | 42 | 47 | 58 | 58 |
| Hungry | 50 | 66 | 63 | 55 | 33 | 45 |

| Layer (Type) | Output Shape | Number of Parameters |
|---|---|---|
| 2D Convolutional | (None, 63, 63, 10) | 130 |
| Activation | (None, 63, 63, 10) | 0 |
| 2D Max Pooling | (None, 12, 12, 10) | 0 |
| 2D Convolutional | (None, 11, 11, 10) | 410 |
| 2D Max Pooling | (None, 2, 2, 10) | 0 |
| Activation | (None, 2, 2, 10) | 0 |
| Flatten | (None, 40) | 0 |
| Dense | (None, 10) | 410 |
| Dense | (None, 2) | 22 |
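The shapes and parameter counts in this table are mutually consistent with one set of hyperparameters that the table does not state: a 64 × 64 × 3 input, 2 × 2 convolution kernels with stride 1 and no padding, and 5 × 5 max pooling with stride 5. The Python sketch below checks that inference arithmetically; other pooling configurations could produce the same shapes, so treat these values as assumptions. It also makes the model's size explicit: 130 + 410 + 410 + 22 = 972 trainable parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Shape:
    height: int
    width: int
    channels: int

    def as_tuple(self) -> tuple[int, int, int]:
        return (self.height, self.width, self.channels)


def conv2d(shape: Shape, filters: int, kernel: int) -> tuple[Shape, int]:
    """Stride-1 convolution without padding: output shape and parameter count."""
    out = Shape(shape.height - kernel + 1, shape.width - kernel + 1, filters)
    params = filters * (kernel * kernel * shape.channels + 1)  # weights + one bias per filter
    return out, params


def max_pool2d(shape: Shape, pool: int) -> Shape:
    """Max pooling with stride equal to the pool size, no padding (no parameters)."""
    return Shape((shape.height - pool) // pool + 1,
                 (shape.width - pool) // pool + 1,
                 shape.channels)


def dense_params(inputs: int, units: int) -> int:
    """Fully connected layer: weight matrix plus one bias per unit."""
    return units * (inputs + 1)


def summarize() -> list[tuple[str, tuple[int, ...], int]]:
    """Rebuild the table row by row as (layer, output shape without batch dim, params)."""
    rows: list[tuple[str, tuple[int, ...], int]] = []
    shape = Shape(64, 64, 3)                             # ASSUMED input size

    shape, params = conv2d(shape, filters=10, kernel=2)  # ASSUMED 2 x 2 kernel
    rows.append(("2D Convolutional", shape.as_tuple(), params))
    rows.append(("Activation", shape.as_tuple(), 0))
    shape = max_pool2d(shape, pool=5)                    # ASSUMED 5 x 5 pool, stride 5
    rows.append(("2D Max Pooling", shape.as_tuple(), 0))
    shape, params = conv2d(shape, filters=10, kernel=2)
    rows.append(("2D Convolutional", shape.as_tuple(), params))
    shape = max_pool2d(shape, pool=5)
    rows.append(("2D Max Pooling", shape.as_tuple(), 0))
    rows.append(("Activation", shape.as_tuple(), 0))
    flat = shape.height * shape.width * shape.channels
    rows.append(("Flatten", (flat,), 0))
    rows.append(("Dense", (10,), dense_params(flat, 10)))
    rows.append(("Dense", (2,), dense_params(10, 2)))
    return rows


if __name__ == "__main__":
    for layer, out_shape, params in summarize():
        print(f"{layer:<18} {str(out_shape):<14} {params}")
    print("total:", sum(params for _, _, params in summarize()))  # total: 972
```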

| EEG-Based CNN Result | FE-Based CNN Result | Valence Network CS | Output |
|---|---|---|---|
| Happy/Sad | Not Detected | Any | EEG result |
| Happy/Sad | Happy/Sad | Any | EEG result |
| Happy/Sad | Sad/Happy | >0.7 | EEG result |
| Happy/Sad | Any | ≤0.7 | Result with the highest CS |
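This table can be read as an ordered rule set in which the first matching row decides; the Python sketch below encodes that reading. Several points are assumptions rather than statements from the table: CS is taken to be a confidence score in [0, 1], the facial-expression network is assumed to supply its own confidence (`fe_cs`) for the last row, where the two results are compared, and ties go to the EEG result. Because the first two rows match any CS, the ≤0.7 row then only takes effect when the two networks disagree.

```python
from typing import Optional

CS_THRESHOLD = 0.7  # threshold from the table


def fuse(eeg_label: str, eeg_cs: float,
         fe_label: Optional[str], fe_cs: Optional[float] = None) -> str:
    """Apply the table rows in order; the first matching row decides.

    eeg_label: 'happy' or 'sad' from the EEG-based CNN.
    eeg_cs:    confidence score (CS) of the EEG valence network, ASSUMED in [0, 1].
    fe_label:  label from the facial-expression CNN, or None if no face was detected.
    fe_cs:     ASSUMED confidence of the facial-expression CNN (last row only).
    """
    if fe_label is None:         # row 1: face not detected -> EEG result
        return eeg_label
    if fe_label == eeg_label:    # row 2: modalities agree -> EEG result
        return eeg_label
    if eeg_cs > CS_THRESHOLD:    # row 3: disagreement, confident EEG -> EEG result
        return eeg_label
    # Row 4: disagreement with eeg_cs <= 0.7 -> result with the highest CS.
    if fe_cs is None:
        raise ValueError("fe_cs is required when the results disagree and eeg_cs <= 0.7")
    return eeg_label if eeg_cs >= fe_cs else fe_label


if __name__ == "__main__":
    print(fuse("happy", 0.6, "sad", 0.9))  # sad
```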

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Roshdy, A.; Karar, A.; Kork, S.A.; Beyrouthy, T.; Nait-ali, A. Advancements in EEG Emotion Recognition: Leveraging Multi-Modal Database Integration. Appl. Sci. 2024, 14, 2487. https://doi.org/10.3390/app14062487
