Abstract

Variational mode decomposition (VMD) is widely used for signal decomposition and feature extraction; however, its results depend on the number of intrinsic mode functions (IMFs) and the value of the penalty factor. To address this issue, we propose HLSSA-VMD, in which a sparrow search algorithm improved with the Halton sequence and the Laplace crossover operator (HLSSA) tunes the parameters of VMD. First, initializing the population with the Halton sequence yields higher-quality initial solutions, which mitigates the slow convergence caused by overlapping initial solutions that lack diversity. Second, the Laplace crossover operator (LX) perturbs the position of the best individual in each iteration, which helps to prevent the algorithm from becoming trapped in a local optimum and improves its convergence speed. Finally, in simulations on 17 benchmark test functions, the HLSSA exhibited higher convergence accuracy, faster convergence, and better robustness than the particle swarm optimization (PSO) algorithm, the whale optimization algorithm (WOA), the multiverse optimization (MVO) algorithm, and the traditional sparrow search algorithm (SSA). In addition, we verified the effectiveness of HLSSA-VMD on two simulated signals and compared it with PSO-VMD, WOA-VMD, MVO-VMD, and SSA-VMD. The experimental results indicate that HLSSA-VMD obtains better parameters, confirming the superiority of the algorithm.

1. Introduction

As science and technology advance swiftly, and with the continuous evolution of social needs, optimization algorithms are becoming increasingly crucial in solving complex problems in various fields. From mechanical design and manufacturing to artificial intelligence, the rapid progress in these fields not only promotes the development of industry but also triggers the demand for optimization algorithms to be more efficient and innovative [1].

Within signal decomposition, numerous techniques have been developed for extracting fault features. Common algorithms include wavelet transform (WT) [2], empirical mode decomposition (EMD) [3], empirical wavelet transform (EWT) [4], and the local mean decomposition (LMD) [5], among others. Although these algorithms are widely applied and play a positive role in many fields, they also exhibit certain issues that impact the effectiveness of signal decomposition. When using WT for signal processing, the fixed choice of mother wavelet leads to a lack of flexibility and less applicability when dealing with different types of signals. EMD suffers from severe mode mixing and endpoint effects when decomposing signals. Although the LMD method efficiently mitigates the emergence of over- and under-envelopes in EMD decomposition, it continues to demonstrate endpoint effects. The limited adaptability and robustness of EWT restrict its capability for signal decomposition.

In 2014, Dragomiretskiy and Zosso proposed VMD [6], a method with both a strong theoretical basis and notable robustness in extracting fault features in complex environments, such as under strong noise. This algorithm has been applied across diverse fields and has shown excellent performance [7,8,9]. However, VMD has limitations of its own: the quality of its decomposition depends on both the number of intrinsic mode functions (IMFs), denoted as K, and the penalty factor α. With inappropriate parameter settings, issues such as mode mixing may arise when VMD is used in signal processing. To address this shortcoming, many researchers employ swarm intelligence optimization algorithms for parameter tuning. Li et al. [10] utilized a genetic algorithm to adaptively search for the optimal VMD parameters, while Liu et al. [11] utilized the grey wolf optimization algorithm for the same purpose. Other swarm intelligence algorithms used for optimizing VMD parameters include the PSO [12], the WOA [13], the multiverse optimization (MVO) algorithm [14], the sailfish optimization (SFO) algorithm [15], and the Archimedes optimization algorithm (AOA) [16].

In 2020, Xue et al. [17] introduced the SSA, known for its computational efficiency, simplicity of implementation, and ease of scalability. According to Xue et al.’s analysis, the SSA outperforms several established algorithms, such as the PSO, grey wolf optimization (GWO), and gravitational search algorithm (GSA), in convergence accuracy, convergence speed, and robustness. Nevertheless, like other swarm intelligence algorithms, the SSA may suffer from low initial solution quality, and when solving complex optimization problems, it may lose population diversity and become trapped in local optima in the later stages of the search. Researchers have addressed these issues in various studies and achieved positive results. Gao et al. [18] proposed a multistrategy enhanced evolutionary sparrow search algorithm (ESSA), integrating tent map chaos during the initialization phase to speed up convergence and improve convergence accuracy. Geng et al. [19] introduced chaotic back-propagation learning and dynamic weights to prevent the SSA from being trapped in local optima. Wu et al. [20] combined Lévy flights and a nonlinear inertia weight to present an improved SSA. Xiong et al. [21] introduced a fractional-order chaotic improved SSA, demonstrating higher convergence accuracy than the SSA. Sun et al. [22] implemented CAT chaotic mapping to initialize the population and explored the initial population’s randomness and searchability. Han et al. [23] used sin chaotic mapping to initialize the sparrow population, aiming to improve the quality of initial solutions. Liu et al. [24] introduced circular chaotic mapping into the SSA, enhancing the algorithm’s capacity for global exploration during population initialization. They additionally introduced the t-distribution into the formula for updating the positions of sparrows across iteration cycles, helping the algorithm to avoid local optima.

Existing research places significant emphasis on population initialization in the SSA because the quality and distribution of the initial population strongly influence the search for the global optimum. In the early stages of the algorithm, a well-designed initialization can effectively improve the algorithm’s capability for global exploration. As mentioned above, chaotic mapping is a frequently used initialization approach in the existing literature. However, chaotic systems are highly sensitive to starting conditions and display unpredictable randomness. Additionally, chaotic mapping algorithms involve complex mathematical operations, leading to increased computational costs.

In this paper, we propose using the Halton sequence for population initialization. In comparison to the aforementioned methods, the Halton sequence distributes the initial population more uniformly across the entire search space and, being deterministic, contains no random components, which makes it more robust. Moreover, its lower sensitivity to initial conditions and parameters enhances its reliability in algorithm applications. The crossover operator plays a crucial role in optimization algorithms by promoting population diversity and exploring the search space. The Laplace crossover operator (LX) is a distinctive crossover operator that has been applied in various fields [25,26]. Deep et al. [27] first introduced the LX in 2007 and applied it to genetic algorithms (GAs). Experimental results demonstrated that the LX-GA outperformed other types of GAs. Consequently, many GA-related studies use the LX for crossover operations [28,29]. The LX is suitable for optimization problems with continuous parameters; it generates random numbers from the Laplace distribution, which gives it good randomness and perturbation properties, and it requires minimal parameter tuning. Considering these advantages, in this study, we used the LX to perturb the position of the best individual at each iteration, with the goal of improving the algorithm’s local exploration ability and preventing the population from becoming trapped in local optima. The primary contributions of this paper are outlined as follows:

- (1) In the initialization stage, the Halton sequence is used to generate the initial sparrow population, which improves the quality of the initial solutions and thereby the algorithm’s robustness. This strategy distributes the initial solutions more evenly across the solution space and reduces the algorithm’s sensitivity to initial conditions.

- (2) The Laplace crossover operator perturbs the position of the best individual in each iteration, which reduces the likelihood of the algorithm becoming trapped in local optima and improves the convergence speed. Incorporating this perturbation into the optimization process expands the algorithm’s exploration range in the solution space.

- (3) We validated the effectiveness of the algorithm on 17 benchmark functions. Moreover, the enhanced algorithm was applied to optimizing the parameters of the VMD algorithm and achieved satisfactory results, highlighting its practical value.

The remainder of the paper is organized as follows: Section 2 outlines the pertinent theoretical approaches contributing to our understanding. Section 3 provides a comprehensive description of the method proposed in this paper. Section 4 validates the proposed methodology. Finally, Section 5 presents the conclusions of this study, along with suggestions for future work.

2. The Principles of SSA

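In the SSA, a population consisting of n sparrows can be represented in the following form:

$$X = \begin{bmatrix} x_{1,1} & x_{1,2} & \cdots & x_{1,d} \\ x_{2,1} & x_{2,2} & \cdots & x_{2,d} \\ \vdots & \vdots & \ddots & \vdots \\ x_{n,1} & x_{n,2} & \cdots & x_{n,d} \end{bmatrix} \tag{1}$$

where d represents the dimensionality of the problem to be solved. The fitness values of all sparrows can be represented in the following form:

$$F_X = \begin{bmatrix} f([x_{1,1}\ \ x_{1,2}\ \ \cdots\ \ x_{1,d}]) \\ f([x_{2,1}\ \ x_{2,2}\ \ \cdots\ \ x_{2,d}]) \\ \vdots \\ f([x_{n,1}\ \ x_{n,2}\ \ \cdots\ \ x_{n,d}]) \end{bmatrix} \tag{2}$$

where f represents an individual’s fitness value, and $F_X$ represents the fitness values of all sparrows.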

The primary task of the founder in the sparrow population is to search for food in the environment, providing the entire population with the location and direction of the discovered food. Since founders are more likely to find food, their fitness values are superior, and their positions in the solution space are close to the location of the optimal solution. During each iteration of the search process, the founder updates its position while searching for food, and the specific calculation is expressed with the following equation:

$$X_{i,j}^{t+1} = \begin{cases} X_{i,j}^{t} \cdot \exp\left(\dfrac{-i}{\alpha \cdot iter_{\max}}\right), & R_2 < ST \\ X_{i,j}^{t} + Q \cdot L, & R_2 \ge ST \end{cases} \tag{3}$$

where t denotes the current iteration number; j = 1, 2, 3, ..., d; $iter_{\max}$ represents the maximum iteration count; $X_{i,j}^{t}$ represents the position of the i-th sparrow along the j-th dimension at iteration t; and $\alpha \in (0, 1]$ is a uniform random number. $R_2$ ($R_2 \in [0, 1]$) and ST ($ST \in [0.5, 1]$) denote the warning value and safety value, respectively. Q is a randomly generated number following a normal distribution, and L represents a $1 \times d$ matrix in which every element is equal to 1.

$R_2 < ST$ indicates that there are no predators in the foraging environment, allowing the founder to explore freely and extensively. In contrast, $R_2 \ge ST$ indicates that certain sparrows within the population have detected predators and signaled warnings to other members. Consequently, all sparrows must promptly relocate to alternative safe locations for foraging.
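As a rough illustration, the founder update described above can be sketched in Python. The sketch follows the producer rule of the original SSA [17]: an exponential contraction when the alarm value is below the safety threshold, and a normally distributed jump otherwise. The function name `update_founder`, its arguments, the default threshold of 0.8, and the choice of one random α per sparrow are assumptions made for illustration; they are not taken from the authors’ MATLAB implementation.

```python
import math
import random


def update_founder(position, rank, iter_max, st=0.8, rng=random):
    """Return the new position of one founder (illustrative sketch).

    position : list of floats, current position of the sparrow
    rank     : 1-based index i of the sparrow in the sorted population
    iter_max : maximum number of iterations
    st       : safety threshold ST, assumed to lie in [0.5, 1]
    """
    r2 = rng.random()  # alarm value R2, uniform in [0, 1)
    if r2 < st:
        # No predator detected: contract the position toward the origin.
        alpha = 1.0 - rng.random()  # uniform in (0, 1], so no division by zero
        shrink = math.exp(-rank / (alpha * iter_max))
        return [x * shrink for x in position]
    # Predator detected: shift every coordinate by the same normal number Q.
    q = rng.gauss(0.0, 1.0)
    return [x + q for x in position]
```

Because the contraction factor lies in [0, 1], the first branch never moves a coordinate farther from the origin; this partly explains why SSA variants tend to do well on benchmarks whose optimum is at the origin.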

While foraging, certain scroungers consistently observe the founder. Upon realizing that the founder has discovered superior food, they promptly abandon their current position to vie for the food. The position update process for scroungers is outlined as follows:

$$X_{i,j}^{t+1} = \begin{cases} Q \cdot \exp\left(\dfrac{X_{worst}^{t} - X_{i,j}^{t}}{i^{2}}\right), & i > n/2 \\ X_{P}^{t+1} + \left| X_{i,j}^{t} - X_{P}^{t+1} \right| \cdot A^{+} \cdot L, & \text{otherwise} \end{cases} \tag{4}$$

where $X_{P}$ represents the current optimal position held by the founder; $X_{worst}$ represents the current globally worst position; A is a $1 \times d$ matrix whose elements are randomly assigned values of either 1 or −1; and $A^{+} = A^{T}(AA^{T})^{-1}$. When $i > n/2$, the i-th scrounger, which has a poorer fitness value, has not yet secured food and is in a highly hungry state. Consequently, it must relocate to another area for foraging to replenish its energy.

In the simulation experiments, the proportion of sparrows exhibiting danger perception was set to between 10% and 20% of the total population. Their initial positions are randomly distributed throughout the entire population, and their mathematical representation is as follows:

$$X_{i,j}^{t+1} = \begin{cases} X_{best}^{t} + \beta \cdot \left| X_{i,j}^{t} - X_{best}^{t} \right|, & f_i > f_g \\ X_{i,j}^{t} + K \cdot \left( \dfrac{\left| X_{i,j}^{t} - X_{worst}^{t} \right|}{(f_i - f_w) + \varepsilon} \right), & f_i = f_g \end{cases} \tag{5}$$

where $X_{best}$ represents the present global optimal position; $\beta$ represents the step size control parameter, a randomly generated number following a normal distribution with a mean of 0 and a variance of 1; $K \in [-1, 1]$ is a uniform random number; $f_i$ is the fitness value of the present sparrow individual; $f_g$ and $f_w$ represent the current global best and worst fitness values, respectively; and $\varepsilon$ is a numerically small constant to prevent division by zero. When $f_i > f_g$, the sparrow is at the population boundary and susceptible to predator attacks, whereas $X_{best}$ marks the center of the population, around which it is safest. When $f_i = f_g$, sparrows in the middle of the population have detected danger, so they need to move closer to other sparrows to minimize the threat of predation.
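The two cases of the danger-perception update can be sketched as follows. This is a minimal sketch based on the standard SSA formulation [17] and assuming a minimization problem; the function name `update_aware_sparrow`, its argument names, and the default value of the small constant are hypothetical.

```python
import random


def update_aware_sparrow(position, fitness, best_position, worst_position,
                         best_fitness, worst_fitness, eps=1e-8, rng=random):
    """Return the new position of one danger-aware sparrow (illustrative sketch)."""
    if fitness > best_fitness:
        # Sparrow at the edge of the group: jump to a point around the best position.
        beta = rng.gauss(0.0, 1.0)  # step-size control, standard normal
        return [b + beta * abs(x - b) for x, b in zip(position, best_position)]
    # Sparrow already holding the best fitness: step relative to the worst position.
    k = rng.uniform(-1.0, 1.0)  # random direction and step coefficient
    denom = (fitness - worst_fitness) + eps  # eps guards against division by zero
    return [x + k * abs(x - w) / denom for x, w in zip(position, worst_position)]
```

In the first branch the sparrow’s own position enters only through its distance to the best position, so the update relocates it around the best position rather than stepping from where it currently is.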

3. Improved SSA Based on Halton Sequence and LX

3.1. Population Initialization Based on the Halton Sequence

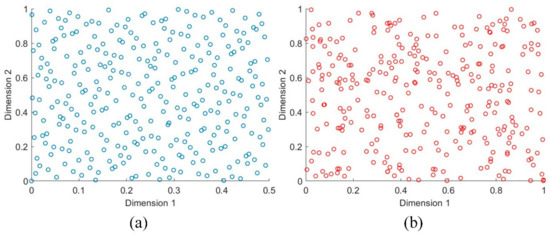

The Halton sequence [30,31,32] is a common multidimensional low-discrepancy sequence whose discrepancy is of order $O\left(N^{-1}(\log N)^{d}\right)$, where N is the number of points and d is the dimension. As shown in Figure 1, the solutions generated with the Halton sequence are more uniformly distributed in space than those generated using random initialization.

Figure 1. (a) Halton sequence initialization; (b) random sequence initialization.

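The definition of the Halton sequence is based on the radical inverse function. An integer n is first expanded in base p, and its digits are then mirrored about the radix point:

$$n = \sum_{k=0}^{m} b_k\, p^{k} \tag{6}$$

$$\varphi_p(n) = \sum_{k=0}^{m} b_k\, p^{-k-1} \tag{7}$$

where p is a prime number, and $b_k$ is the k-th digit of n in the base-p expansion ($b_k \in \{0, 1, \ldots, p-1\}$).

The Halton sequence in d dimensions can be represented with the following formula:

$$H(n) = \left( \varphi_{p_1}(n), \varphi_{p_2}(n), \ldots, \varphi_{p_d}(n) \right) \tag{8}$$

where $p_1, p_2, \ldots, p_d$ are pairwise coprime bases, typically the first d primes.

The initial positions for the population can be generated using the following formula:

$$X_{i,j} = lb_j + \varphi_{p_j}(i) \cdot \left( ub_j - lb_j \right) \tag{9}$$

where $lb_j$ and $ub_j$ denote the lower and upper limits of the positions of the sparrow population in the j-th dimension ($j = 1, 2, \ldots, d$).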
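The initialization can be sketched in a few lines of Python. The sketch computes the radical inverse digit by digit, uses the first d primes as bases, and scales each Halton point into the search box. The function names are hypothetical, and starting the index at 1 (to skip the all-zero point) is an assumption; the paper does not state which index the authors start from.

```python
def radical_inverse(n, base):
    """Mirror the base-`base` digits of the integer n about the radix point."""
    value, scale = 0.0, 1.0 / base
    while n > 0:
        n, digit = divmod(n, base)
        value += digit * scale
        scale /= base
    return value


def first_primes(count):
    """Return the first `count` primes by trial division (enough for small d)."""
    primes, candidate = [], 2
    while len(primes) < count:
        if all(candidate % p for p in primes):
            primes.append(candidate)
        candidate += 1
    return primes


def halton_population(n_sparrows, lower, upper):
    """Map Halton points in [0, 1)^d onto the box [lower, upper]."""
    bases = first_primes(len(lower))
    population = []
    for i in range(1, n_sparrows + 1):  # start at 1 to skip the all-zero point
        point = [radical_inverse(i, b) for b in bases]
        population.append(
            [lo + h * (hi - lo) for h, lo, hi in zip(point, lower, upper)]
        )
    return population
```

For instance, `halton_population(30, [-100.0] * 2, [100.0] * 2)` would produce 30 two-dimensional starting positions. The result is fully deterministic: repeated calls return the same population, which is the property the text relies on when it describes the sequence as lacking random components.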

3.2. Optimal Position Perturbation Based on LX

The LX generates a pair of offspring, $y^{(1)}$ and $y^{(2)}$, from a pair of parents, namely $x^{(1)}$ and $x^{(2)}$. The descendants produced by the LX are symmetrically positioned relative to the parents. The LX random number $\beta$ is determined according to the following rule [27]:

$$\beta = \begin{cases} p - q \ln(u), & r \le 1/2 \\ p + q \ln(u), & r > 1/2 \end{cases} \tag{10}$$

where u and r are two random numbers uniformly distributed within the range [0, 1]; p is the location parameter, which can be used to control the distribution of the offspring positions in the search space; and q is the scale parameter. A smaller q value results in offspring positions being closer to the parents, while a larger q value leads to offspring positions being farther from the parents. In this study, the value of q was 1, and the value of p was 0. The rules for offspring generation are as follows:

$$y_i^{(1)} = x_i^{(1)} + \beta \left| x_i^{(1)} - x_i^{(2)} \right|, \qquad y_i^{(2)} = x_i^{(2)} + \beta \left| x_i^{(1)} - x_i^{(2)} \right| \tag{11}$$

If an offspring position falls outside the search space, i.e., $y_i < lb_i$ or $y_i > ub_i$, then $y_i$ is set to a random number from the interval $[lb_i, ub_i]$.
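A minimal Python sketch of the LX perturbation, followed by the greedy comparison used for the best individual, is given here. It follows the Laplace crossover of Deep et al. [27] with location p = 0 and scale q = 1, and it assumes minimization. The text does not state which second parent is paired with the best individual, so the `partner` argument is an assumption, as are all function names.

```python
import math
import random


def laplace_beta(p=0.0, q=1.0, rng=random):
    """Draw the LX coefficient: Laplace-distributed with location p and scale q."""
    u = 1.0 - rng.random()  # uniform in (0, 1], keeps log(u) finite
    r = rng.random()
    return p - q * math.log(u) if r <= 0.5 else p + q * math.log(u)


def lx_offspring(parent1, parent2, lower, upper, p=0.0, q=1.0, rng=random):
    """Create two LX offspring; resample any coordinate that leaves the box."""
    child1, child2 = [], []
    for x1, x2, lo, hi in zip(parent1, parent2, lower, upper):
        beta = laplace_beta(p, q, rng)  # one coefficient per dimension
        gap = abs(x1 - x2)
        for child, x in ((child1, x1), (child2, x2)):
            y = x + beta * gap
            if y < lo or y > hi:
                y = rng.uniform(lo, hi)  # boundary rule from the text
            child.append(y)
    return child1, child2


def perturb_best(best, partner, fitness, lower, upper, rng=random):
    """Greedy step: keep the perturbed best position only if it is fitter."""
    candidate, _ = lx_offspring(best, partner, lower, upper, rng=rng)
    return candidate if fitness(candidate) < fitness(best) else best
```

The greedy comparison means the perturbation can never worsen the recorded best fitness; at worst it costs one extra fitness evaluation per iteration.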

The pseudocode of HLSSA is shown in Algorithm 1.

| Algorithm 1 Pseudocode of HLSSA |
| --- |
| 1. Set t = 0. |
| 2. Initialize the population using Equation (9). |
| 3. Compute the fitness value of each sparrow using the fitness function, sort the population by fitness, and record the best position $X_{best}$ and the best fitness value $f_g$. |
| 4. While (t < $iter_{\max}$) |
| Determine the number of founders PD1, the number of scroungers PD2, and the number of sparrows with danger perception PD3. |
| For i = 1: PD1 |
| Update the founder’s position based on Formula (3). |
| End for |
| For i = 1: PD2 |
| Update the scrounger’s position based on Formula (4). |
| End for |
| For i = 1: PD3 |
| Update the position of the sparrow with danger perception based on Formula (5). |
| End for |
| Calculate the fitness value of each sparrow and select the best one. |
| Perturb the best position according to Formula (11) and compute its fitness value. Compare the fitness values before and after the perturbation and keep the better one. |
| t = t + 1 |
| End while |
| 5. Return $X_{best}$, $f_g$. |

4. Method Validation

To assess the effectiveness of the enhanced algorithm, two sets of comparative experiments were devised in this study. In the first set, we selected seven unimodal test functions, which have only one extremum, to verify the algorithm’s convergence speed, optimization accuracy, and local search capability. In the second set, we selected five multimodal test functions, which have multiple local extrema, to examine the algorithm’s ability to escape local optima and explore globally. To reduce the bias of single-run results, we performed 30 independent runs for each function and recorded the best values, mean values, and standard deviations. We compared the improved algorithm with the PSO, MVO, WOA, and SSA. Table 1 provides the detailed parameter settings of each algorithm. All experiments were executed in the MATLAB 2023a environment on a computer featuring an Intel i7 processor operating at 2.30 GHz and 16 GB of RAM. This design is intended to allow a reliable and objective comparison of algorithm performance.
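The reporting protocol (repeated independent runs summarized by best, mean, and standard deviation) can be sketched as follows. The helper name `summarize_runs` and the convention that an optimizer is a callable taking a random generator and returning its final best fitness are assumptions for illustration. The sample standard deviation is used here, which matches the default of MATLAB’s `std`; the paper does not state which variant was reported.

```python
import random
import statistics


def summarize_runs(optimizer, n_runs=30, seed=0):
    """Run `optimizer(rng)` n_runs times and summarize the final fitness values."""
    # Each run gets its own seeded generator so that runs are independent
    # and the whole experiment is reproducible.
    results = [optimizer(random.Random(seed + k)) for k in range(n_runs)]
    return {
        "best": min(results),  # minimization assumed
        "mean": statistics.fmean(results),
        "std": statistics.stdev(results),  # sample standard deviation (n - 1)
    }
```

A standard deviation of exactly 0 in such a summary means that every run ended at the same fitness value, which is how the zero STD entries discussed later should be read.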

Table 1. Details of algorithm parameter settings.

4.1. Unimodal Test Function Experiments

Evaluating algorithm performance using test functions with known global optimal values is a common approach in this field. Unimodal test functions are particularly useful for validating local search performance, as these functions contain only one global optimum and no other local optima. Table 2 provides the formulas, dimensions, search spaces, and global optimal values for the unimodal test functions. The optimal value represents the global minimum value, and the search space refers to the range of each variable $x_i$.

Table 2. Details of unimodal benchmark functions.

4.1.1. Experimental Results

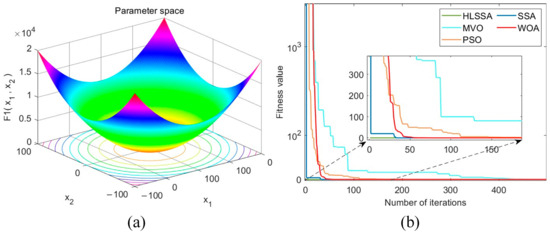

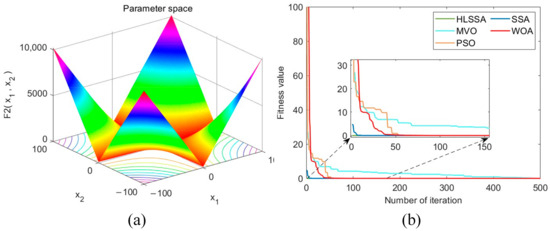

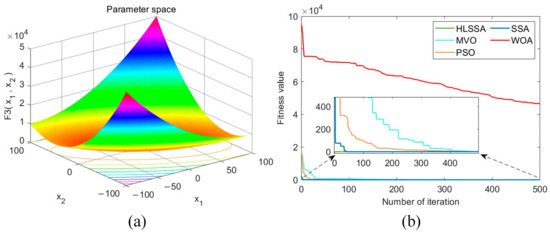

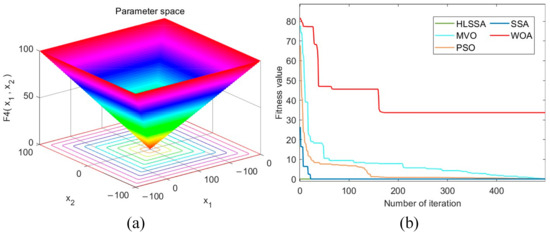

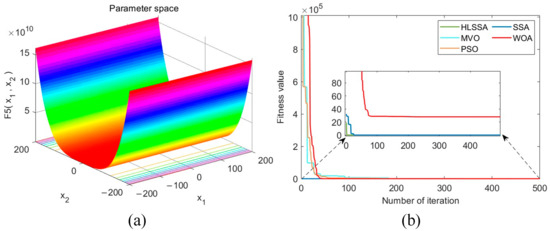

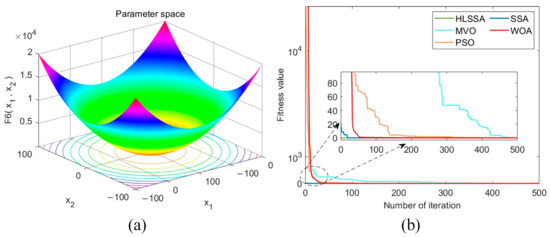

Table 3 presents the optimization outcomes for the five algorithms; the “Best” column lists the best fitness values. Figures 2–8 present three-dimensional plots and convergence curves for the seven benchmark functions. In panel (a) of each figure, n = 2 so that the function surface can be displayed; in panel (b), the value of n is 30.

Table 3. Results of optimizing seven unimodal benchmark functions using the five algorithms.

Figure 2. (a) Three-dimensional plot of F1; (b) convergence curve.

Figure 3. (a) Three-dimensional plot of F2; (b) convergence curve.

Figure 4. (a) Three-dimensional plot of F3; (b) convergence curve.

Figure 5. (a) Three-dimensional plot of F4; (b) convergence curve.

Figure 6. (a) Three-dimensional plot of F5; (b) convergence curve.

Figure 7. (a) Three-dimensional plot of F6; (b) convergence curve.

Figure 8. (a) Three-dimensional plot of F7; (b) convergence curve.

4.1.2. Analysis of the Results

Convergence accuracy analysis: As shown in Table 3, the HLSSA found the optimal values for test functions F1 to F4. Although it did not reach the optimal values for F5 to F7, its best values, averages, and standard deviations were significantly better than those of the PSO, MVO, WOA, and SSA. This indicates that the HLSSA has stronger optimization capabilities when solving unimodal test functions.

Stability analysis: According to the standard deviation (STD) test data in Table 3, the HLSSA had STD values of 0 for F1 to F4 and the lowest STD values for F5 to F7. This suggests that the HLSSA has better stability on unimodal test functions than other algorithms.

Convergence speed analysis: As shown in Figures 2–8, the HLSSA converged fastest on all test functions F1 to F7. On test functions F1 to F4 and F6, the HLSSA reached the optimal values at the beginning of the iterations, indicating that initialization based on the Halton sequence helps the algorithm obtain better initial solutions.

In summary, the proposed HLSSA exhibited stronger optimization capabilities, faster convergence speed, and greater stability when dealing with unimodal functions. This study proposes an efficient and reliable algorithm for unimodal test function optimization problems.

4.2. Multimodal Test Function Experiments

Multimodal test functions typically contain many local optima, which makes finding the global optimum more difficult. Therefore, testing on such functions allows for a more comprehensive evaluation of the algorithm’s exploration capabilities and potential to escape local optima. Table 4 provides the formulas, dimensions, search spaces, and global optimal values for the multimodal test functions.

Table 4. Details of multimodal benchmark functions.

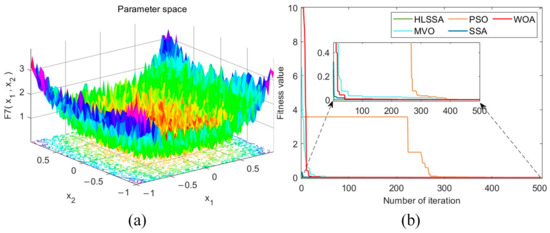

4.2.1. Experimental Results

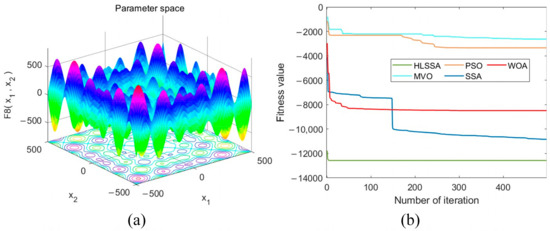

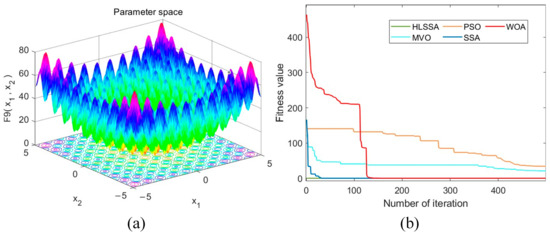

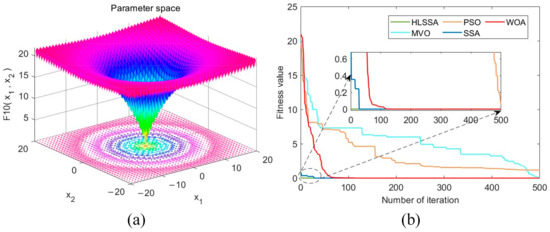

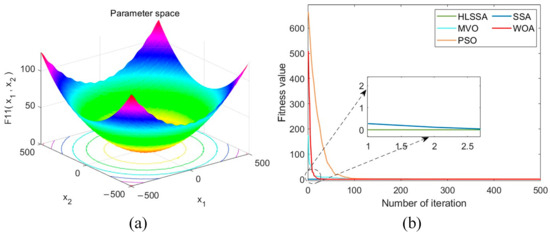

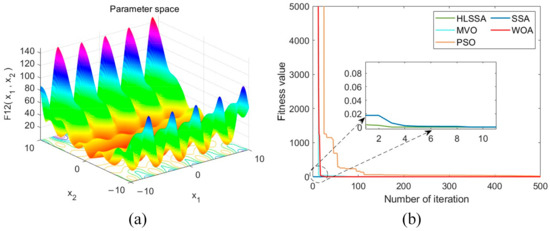

Table 5 presents the optimization results for the five algorithms, and Figures 9–13 depict the three-dimensional plots and convergence curves for the five test functions. In panel (a) of each figure, n = 2 so that the function surface can be displayed; in panel (b), the value of n is 30.

Table 5. Optimization results of the five algorithms on five multimodal benchmark functions.

Figure 9. (a) Three-dimensional plot of F8; (b) convergence curve.

Figure 10. (a) Three-dimensional plot of F9; (b) convergence curve.

Figure 11. (a) Three-dimensional plot of F10; (b) convergence curve.

Figure 12. (a) Three-dimensional plot of F11; (b) convergence curve.

Figure 13. (a) Three-dimensional plot of F12; (b) convergence curve.

4.2.2. Analysis of the Results

Convergence accuracy analysis: As shown in Table 5, the HLSSA successfully yielded the optimal values for test functions F8, F9, and F11, and its optimization performance was significantly better than the PSO, MVO, and WOA. Although the HLSSA did not yield the optimal values for F10 and F12, its performance in terms of the obtained optimal values, averages, and standard deviations was still better than other algorithms. For F9, F10, and F11, the SSA performed similarly to the HLSSA, but regarding the other two functions, the SSA was more prone to becoming entrapped in local optima. Overall, the HLSSA exhibited stronger global search capabilities when solving multimodal test functions, confirming the effectiveness of LX perturbation in helping the SSA escape local optima.

Stability analysis: According to the standard deviation (STD) data in Table 5, the HLSSA exhibited comparable performance to the SSA considering F9, F10, and F11. However, in terms of the remaining multimodal test functions, the HLSSA revealed consistently lower STD values, indicating better stability on multimodal test functions.

Convergence speed analysis: As shown in Figures 9–13, the HLSSA converged fastest on all five multimodal test functions, and the initial solutions obtained were very close to the optimal values. This suggests that the HLSSA converges quickly when addressing high-dimensional complex problems.

In summary, the HLSSA exhibited enhanced convergence accuracy, accelerated convergence speed, and improved stability when dealing with high-dimensional multimodal problems. Compared to the basic SSA, the HLSSA achieved significant improvements in performance.

4.3. HLSSA-VMD Algorithm Validation

Variational mode decomposition (VMD) is one of the most commonly used methods in the field of signal decomposition, and its decomposition performance is often heavily influenced by the number of modes (K) and the quadratic penalty term (α). Improper parameter settings can lead to information loss and frequency aliasing. Researchers typically employ optimization algorithms for parameter tuning, yet different optimization algorithms yield varying degrees of effectiveness in optimization. This section presents two sets of simulated signals for validating the effectiveness of the HLSSA-VMD algorithm. A comparative analysis was conducted with the PSO-VMD, WOA-VMD, MVO-VMD, and SSA-VMD to further highlight the superior performance of the HLSSA-VMD algorithm.

The first set of simulated signals we constructed is the simulated signal representing an outer race fault in a bearing, denoted as x(t). The construction formula is as follows [33]:

where is the natural frequency; is the damping coefficient; A is the amplitude of the pulse fault; and n(t) is Gaussian white noise with an SNR of −10 dB.

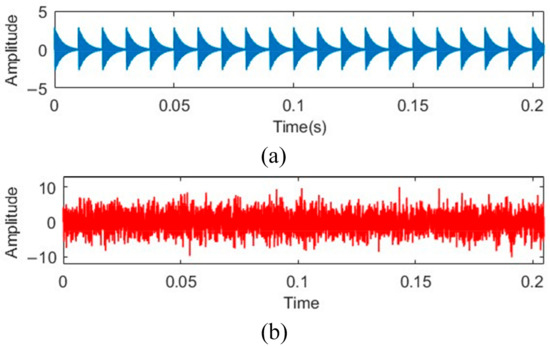

The detailed parameters of the simulated signal are shown in Table 6; the sampling frequency was 20 kHz, and the signal contained 4096 samples. Figure 14a,b display the simulated bearing fault signal before and after adding Gaussian white noise, respectively.

Table 6. The detailed parameters of the simulated signal.

Figure 14. Two states of the bearing outer ring fault signal: (a) original state; (b) state after adding noise.

We employed envelope entropy minimization as the fitness function for optimizing VMD parameters through the HLSSA. Envelope entropy reflects the sparsity traits of the original signal. When the obtained intrinsic mode functions (IMFs) after decomposition have more noise and less feature information, the envelope entropy value is larger. Conversely, when there is less noise and more feature information in the decomposed IMFs, the envelope entropy value is smaller. Currently, many studies use envelope entropy as the objective function for optimizing VMD parameters.
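To make the fitness function concrete, the following sketch computes the envelope entropy of a signal in the way it is commonly defined in the VMD optimization literature: the envelope is obtained from the analytic signal (Hilbert demodulation), normalized to sum to 1, and passed to the Shannon entropy formula. The paper does not spell out its exact formula, so this definition, the function names, and the use of the minimum over all IMFs as the fitness are assumptions. A naive O(N²) DFT is used only to keep the sketch free of external libraries; it is suitable for short signals.

```python
import cmath
import math


def analytic_signal(x):
    """Analytic signal via a naive O(N^2) DFT; fine for short illustrative signals."""
    n = len(x)
    spectrum = [
        sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]
    # Keep DC (and Nyquist for even n), double positive frequencies, zero the rest.
    for k in range(n):
        if k == 0 or (n % 2 == 0 and k == n // 2):
            continue
        spectrum[k] *= 2.0 if k < (n + 1) // 2 else 0.0
    return [
        sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def envelope_entropy(x):
    """Shannon entropy of the normalized Hilbert envelope of a non-zero signal."""
    envelope = [abs(z) for z in analytic_signal(x)]
    total = sum(envelope)
    probs = [a / total for a in envelope]
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def min_envelope_entropy(imfs):
    """Fitness of a decomposition: the smallest envelope entropy among its IMFs."""
    return min(envelope_entropy(imf) for imf in imfs)
```

A steady tone has a flat envelope and therefore the maximum entropy ln N, whereas an impulsive component concentrates the envelope in a few samples and yields a lower value. This is the sparsity behavior that the minimization exploits when searching for fault-related impulses.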

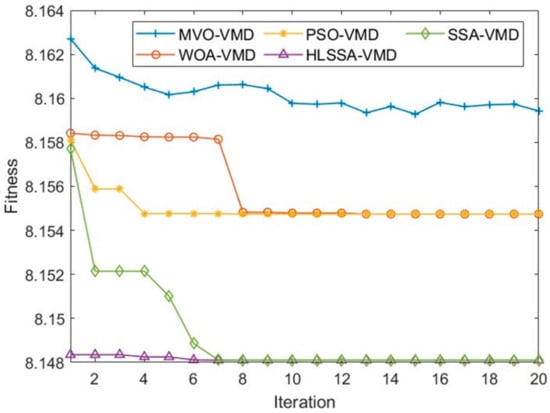

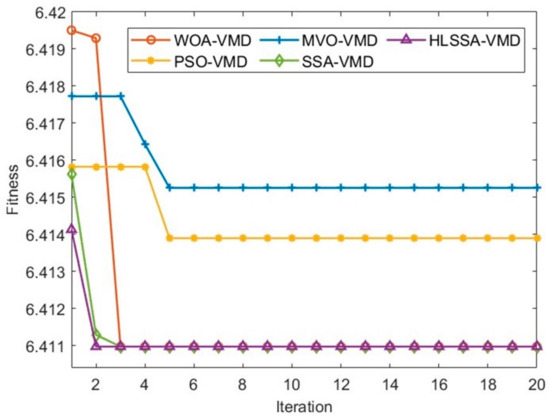

The search range for K was [1, 10], and the search range for α was [100, 3000]. Figure 15 illustrates the iterative optimization process for the five algorithms, and Table 7 presents the optimal solutions obtained with these algorithms.
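Because the optimizer works with continuous positions while K must be an integer, a position has to be mapped to a valid parameter pair before VMD is called. The paper does not describe how this is done; the sketch below shows one plausible choice (round K, then clamp both values to their search ranges). The function name and the rounding rule are assumptions.

```python
def decode_vmd_parameters(position, k_range=(1, 10), alpha_range=(100.0, 3000.0)):
    """Map a 2-D search position [k, alpha] to a valid (K, alpha) pair."""
    # K is the number of modes, so it is rounded to the nearest integer.
    k = int(round(position[0]))
    k = max(k_range[0], min(k_range[1], k))
    # The penalty factor stays continuous and is only clamped to its range.
    alpha = max(alpha_range[0], min(alpha_range[1], float(position[1])))
    return k, alpha
```

With this mapping, neighboring positions that round to the same K differ only in α, so the fitness landscape is piecewise continuous along the K axis.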

Figure 15. The iterative optimization process of the five algorithms.

Table 7. The optimization results obtained with the five algorithms.

From Table 7, it is evident that both the SSA and HLSSA demonstrated superior performance in terms of convergence accuracy. However, as shown in Figure 15, the HLSSA outperformed the SSA regarding initialization and convergence speed. This indicates that the proposed improvement to the SSA is effective, and the HLSSA demonstrates higher-quality initialization, faster convergence speed, and superior convergence accuracy.

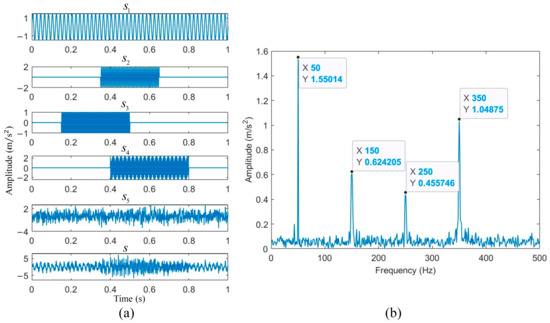

The second simulated signal consisted of four components with different frequencies that were superimposed over different time intervals. To simulate real-world signals more accurately, Gaussian white noise was added to the signal s. The construction process of signal s is as follows:

where is a Gaussian white noise signal with a mean of 0 and a standard deviation of 1.

Figure 16a is a visual representation of each simulated signal. Signal $s_1$ had an amplitude of 1.5, a frequency of 50 Hz, and a duration of 0–1 s; signal $s_2$ had an amplitude of 2, a frequency of 150 Hz, and a duration of 0.35–0.65 s; signal $s_3$ had an amplitude of 1.5, a frequency of 250 Hz, and a duration of 0.15–0.5 s; and signal $s_4$ had an amplitude of 2.5, a frequency of 350 Hz, and a duration of 0.4–0.8 s. Figure 16b shows the amplitude–frequency plot obtained by performing a Fourier transform on the synthesized signal s. Figure 16b demonstrates that the synthesized signal s contained the frequencies corresponding to the simulated signals $s_1$–$s_4$.

Figure 16. (a) Simulated signal; (b) amplitude–frequency plot of the synthesized signal s.
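A signal with the amplitudes, frequencies, and active intervals listed above can be synthesized as in the following sketch. The sampling frequency of 1000 Hz, the use of zero-phase cosines, and the function name `synthesize` are assumptions, since the text does not give the sampling rate or the waveform phase; the noise has a mean of 0 and a standard deviation of 1, as stated.

```python
import math
import random

# (amplitude, frequency in Hz, start time in s, end time in s), as listed in the text
COMPONENTS = [
    (1.5, 50.0, 0.0, 1.0),
    (2.0, 150.0, 0.35, 0.65),
    (1.5, 250.0, 0.15, 0.5),
    (2.5, 350.0, 0.4, 0.8),
]


def synthesize(fs=1000, duration=1.0, noise_std=1.0, seed=0):
    """Sum the time-limited components and add Gaussian white noise."""
    rng = random.Random(seed)
    signal = []
    for i in range(int(fs * duration)):
        t = i / fs
        # Only components whose interval contains t contribute at this sample.
        clean = sum(
            a * math.cos(2 * math.pi * f * t)
            for a, f, start, end in COMPONENTS
            if start <= t <= end
        )
        signal.append(clean + rng.gauss(0.0, noise_std))
    return signal
```

With the assumed 1000 Hz sampling rate, the highest component (350 Hz) stays below the Nyquist frequency of 500 Hz, so all four spectral peaks remain distinguishable in an amplitude spectrum.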

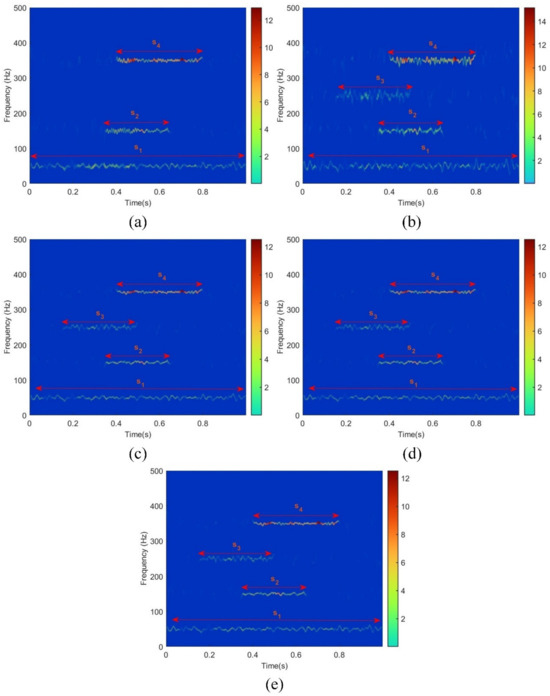

The optimal parameters K and α for the VMD algorithm in decomposing signal s were obtained using the HLSSA. The search ranges for K and α were set to [1, 10] and [100, 6000], respectively. Figure 17 illustrates the iterative optimization process for the five algorithms, and Table 8 presents the optimal solutions obtained with each algorithm.

Figure 17. The iterative optimization process of the five algorithms.

Table 8. The optimization results obtained with the five algorithms.

As shown in Figure 17 and Table 8, the MVO and PSO exhibited relatively poorer convergence accuracy, while the WOA, SSA, and HLSSA yielded the same optimization results. However, the HLSSA demonstrated a faster convergence speed, and it had the lowest initial fitness value, indicating that the HLSSA provides a higher quality initial solution.

In Figure 18a, the decomposition results of MVO-VMD are displayed, revealing that the algorithm only decomposed three signals of different frequencies, while the 250 Hz signal was absent. Figure 18b shows the decomposition results of PSO-VMD, and although it successfully decomposed the signals of four different frequencies, each frequency component exhibited significant jitter, resulting in less smooth decomposition. Figure 18c–e demonstrate the decomposition results of WOA-VMD, SSA-VMD, and HLSSA-VMD, respectively. In contrast to PSO-VMD, these three algorithms not only successfully decomposed all frequencies but also provided smoother decomposition results, indicating superior performance.

Figure 18. Hilbert spectra corresponding to different algorithms: (a) MVO-VMD; (b) PSO-VMD; (c) WOA-VMD; (d) SSA-VMD; (e) HLSSA-VMD.

This comparative analysis further validates the outstanding performance of the HLSSA in optimizing VMD parameters, showcasing its faster convergence speed and higher-quality decomposition results.

5. Conclusions

In this study, we proposed the HLSSA-VMD algorithm for optimizing VMD parameters. Firstly, we validated the performance of the HLSSA across 17 benchmark functions, demonstrating its superiority in convergence accuracy, convergence speed, and robustness compared to the PSO, WOA, MVO, and SSA. Secondly, we evaluated the performance of the HLSSA-VMD algorithm on two simulated signals, revealing that, in comparison with other algorithms, HLSSA-VMD effectively enhances VMD’s parameter optimization capabilities and improves signal decomposition quality.

Author Contributions

Conceptualization, H.D.; methodology, H.D.; software, H.D.; validation, H.D.; formal analysis, H.D.; investigation, H.D.; resources, H.D.; data curation, H.D.; writing—original draft preparation, H.D.; writing—review and editing, W.Q. and X.Z.; visualization, W.Q. and X.Z.; supervision, J.W.; project administration, J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Deng, L.; Liu, S. A Novel Hybrid Grasshopper Optimization Algorithm for Numerical and Engineering Optimization Problems. Neural Process. Lett. 2023, 55, 9851–9905.

- Wang, X.; Zhou, F.; He, Y.; Wu, Y. Weak Fault Diagnosis of Rolling Bearing under Variable Speed Condition Using IEWT-Based Enhanced Envelope Order Spectrum. Meas. Sci. Technol. 2019, 30, 035003.

- Xu, Y.; Cai, Z.; Ding, K. An Enhanced Bearing Fault Diagnosis Method Based on TVF-EMD and a High-Order Energy Operator. Meas. Sci. Technol. 2018, 29, 095108.

- Chegini, S.N.; Bagheri, A.; Najafi, F. Application of a New EWT-Based Denoising Technique in Bearing Fault Diagnosis. Measurement 2019, 144, 275–297.

- Keyhani, A.; Mohammadi, S. Structural Modal Parameter Identification Using Local Mean Decomposition. Meas. Sci. Technol. 2018, 29, 025003.

- Dragomiretskiy, K.; Zosso, D. Variational Mode Decomposition. IEEE Trans. Signal Process. 2014, 62, 531–544.

- Wang, W.; Guo, S.; Zhao, S.; Lu, Z.; Xing, Z.; Jing, Z.; Wei, Z.; Wang, Y. Intelligent Fault Diagnosis Method Based on VMD-Hilbert Spectrum and ShuffleNet-V2: Application to the Gears in a Mine Scraper Conveyor Gearbox. Sensors 2023, 23, 4951.

- Luo, J.; Wen, G.; Lei, Z.; Su, Y.; Chen, X. Weak Signal Enhancement for Rolling Bearing Fault Diagnosis Based on Adaptive Optimized VMD and SR under Strong Noise Background. Meas. Sci. Technol. 2023, 34, 064001.

- Zhang, M.; Cao, Y.; Sun, Y.; Su, S. Vibration Signal-Based Defect Detection Method for Railway Signal Relay Using Parameter-Optimized VMD and Ensemble Feature Selection. Control Eng. Pract. 2023, 139, 105630.

- Li, T.; Zhang, F.; Lin, J.; Bai, X.; Liu, H. Fading Noise Suppression Method of Φ-OTDR System Based on Non-Local Means Filtering. Opt. Fiber Technol. 2023, 81, 103572.

- Liu, B.; Liu, C.; Zhou, Y.; Wang, D. A Chatter Detection Method in Milling Based on Gray Wolf Optimization VMD and Multi-Entropy Features. Int. J. Adv. Manuf. Technol. 2023, 125, 831–854.

- Mao, M.; Chang, J.; Sun, J.; Lin, S.; Wang, Z. Research on VMD-Based Adaptive TDLAS Signal Denoising Method. Photonics 2023, 10, 674.

- Lu, D.; Shen, S.; Li, Y.; Zhao, B.; Liu, X.; Fang, G. An Ice-Penetrating Signal Denoising Method Based on WOA-VMD-BD. Electronics 2023, 12, 1658.

- Jin, Z.; He, D.; Ma, R.; Zou, X.; Chen, Y.; Shan, S. Fault Diagnosis of Train Rotating Parts Based on Multi-Objective VMD Optimization and Ensemble Learning. Digit. Signal Process. 2022, 121, 103312.

- Jing, L.; Bian, J.; He, X.; Liu, Y. Study on the Optimization of the Classification Method of Rolling Bearing Fault Type and Damage Degree Based on SFO–VMD. Meas. Sci. Technol. 2023, 34, 125047.

- Wang, J.; Zhan, C.; Li, S.; Zhao, Q.; Liu, J.; Xie, Z. Adaptive Variational Mode Decomposition Based on Archimedes Optimization Algorithm and Its Application to Bearing Fault Diagnosis. Measurement 2022, 191, 110798.

- Xue, J.; Shen, B. A Novel Swarm Intelligence Optimization Approach: Sparrow Search Algorithm. Syst. Sci. Control Eng. 2020, 8, 22–34.

- Gao, B.; Shen, W.; Guan, H.; Zheng, L.; Zhang, W. Research on Multistrategy Improved Evolutionary Sparrow Search Algorithm and Its Application. IEEE Access 2022, 10, 62520–62534.

- Geng, J.; Sun, X.; Wang, H.; Bu, X.; Liu, D.; Li, F.; Zhao, Z. A Modified Adaptive Sparrow Search Algorithm Based on Chaotic Reverse Learning and Spiral Search for Global Optimization. Neural Comput. Appl. 2023, 35, 24603–24620.

- Du, Y.; Yuan, H.; Jia, K.; Li, F. Research on Threshold Segmentation Method of Two-Dimensional Otsu Image Based on Improved Sparrow Search Algorithm. IEEE Access 2023, 11, 70459–70469.

- Xiong, Q.; Zhang, X.; He, S.; Shen, J. A Fractional-Order Chaotic Sparrow Search Algorithm for Enhancement of Long Distance Iris Image. Mathematics 2021, 9, 2790.

- Sun, H.; Wang, J.; Chen, C.; Li, Z.; Li, J. ISSA-ELM: A Network Security Situation Prediction Model. Electronics 2022, 12, 25.

- Han, M.; Zhong, J.; Sang, P.; Liao, H.; Tan, A. A Combined Model Incorporating Improved SSA and LSTM Algorithms for Short-Term Load Forecasting. Electronics 2022, 11, 1835.

- Jianhua, L.; Zhiheng, W. A Hybrid Sparrow Search Algorithm Based on Constructing Similarity. IEEE Access 2021, 9, 117581–117595.

- Hanh, N.T.; Binh, H.T.T.; Hoai, N.X.; Palaniswami, M.S. An Efficient Genetic Algorithm for Maximizing Area Coverage in Wireless Sensor Networks. Inf. Sci. 2019, 488, 58–75.

- Ul Haq, E.; Ahmad, I.; Almanjahie, I.M. A Novel Parent Centric Crossover with the Log-Logistic Probabilistic Approach Using Multimodal Test Problems for Real-Coded Genetic Algorithms. Math. Probl. Eng. 2020, 2020, 1–17.

- Deep, K.; Thakur, M. A New Crossover Operator for Real Coded Genetic Algorithms. Appl. Math. Comput. 2007, 188, 895–911.

- Thakur, M.; Meghwani, S.S.; Jalota, H. A Modified Real Coded Genetic Algorithm for Constrained Optimization. Appl. Math. Comput. 2014, 235, 292–317.

- Emami, M.; Mozaffari, A.; Azad, N.L.; Rezaie, B. An Empirical Investigation into the Effects of Chaos on Different Types of Evolutionary Crossover Operators for Efficient Global Search in Complicated Landscapes. Int. J. Comput. Math. 2016, 93, 3–26.

- Halton, J.H. Algorithm 247: Radical-Inverse Quasi-Random Point Sequence. Commun. ACM 1964, 7, 701–702.

- Halton, J.H. On the Efficiency of Certain Quasi-Random Sequences of Points in Evaluating Multi-Dimensional Integrals. Numer. Math. 1960, 2, 84–90.

- Kocis, L.; Whiten, W.J. Computational Investigations of Low-Discrepancy Sequences. ACM Trans. Math. Softw. 1997, 23, 266–294.

- Tian, S.; Zhen, D.; Liang, X.; Feng, G.; Cui, L.; Gu, F. Early Fault Feature Extraction for Rolling Bearings Using Adaptive Variational Mode Decomposition with Noise Suppression and Fast Spectral Correlation. Meas. Sci. Technol. 2023, 34, 065112.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).