Abstract

An increasing number of methods are being used to extract rock discontinuities from 3D point clouds of rock surfaces. This paper proposes a new method for the automatic extraction of rock discontinuities based on an improved Naive Bayes classifier. The method first estimates the normal vector of each point by principal component analysis (PCA) and then generates a set of random normal vectors around the normal of each selected training point to train the classifier. The trained, improved Naive Bayes classifier uses the point normal vectors as features and, combined with the knee point algorithm, automatically removes noise points arising from various sources, achieving high-precision extraction of the discontinuity sets. Individual discontinuities are then segmented using the Hierarchical Density-Based Spatial Clustering of Applications with Noise (HDBSCAN) algorithm. Finally, the orientation of each individual discontinuity is calculated by fitting a plane with PCA. The method was applied to two cases, Kingston and Colorado. Comparison with the results of previous studies verified its reliability and advantages, and the discussion determined the optimal values of the relevant parameters of the algorithm.

1. Introduction

Rock mass discontinuities, such as bedding planes, joints, and faults, play a crucial role in rock mechanics [1]: they alter the mechanical properties of rock masses and consequently influence the stability of rock slopes [2,3]. The International Society for Rock Mechanics [4] proposed a set of quantitative parameters for characterizing rock discontinuities, including orientation, spacing, persistence, roughness, aperture, filling, wall strength, seepage, number of sets, and block size. Among these parameters, orientation is a critical factor influencing the deformation of rock masses and is therefore particularly important in rock mass stability analysis [5,6,7,8,9]. The traditional approach requires manual measurement of discontinuity orientation with a compass and inclinometer in accessible areas [10], which is labor-intensive and time-consuming. Moreover, in areas with substantial topographic relief, this approach not only endangers surveyors but also cannot guarantee the accuracy of the data [11].

With the advancement of remote sensing technology, non-contact measurement methods such as photogrammetry [12] and LiDAR [13] have been introduced, enabling rapid scanning of the rock surfaces within a study area. These methods generate high-precision point clouds consisting of large numbers of 3D coordinates, overcoming the limitations of traditional methods [14,15]. A growing number of researchers are now extracting discontinuities from point clouds to obtain comprehensive parameter information. Extraction essentially involves segmenting the points that belong to distinct discontinuities within the point cloud. The predominant extraction methods fall into two categories. Because rock mass discontinuities are often approximately planar, the first category extracts planes by linear or nonlinear fitting based on geometric analysis and mathematical models. Some researchers have employed plane detection algorithms, such as Random Sample Consensus (RANSAC) [16,17,18,19], the 3D Hough Transform [20,21], and Principal Component Analysis (PCA) [22,23], to extract the points belonging to the same plane within a point cloud and treat them as individual discontinuities. The Region Growing algorithm [24,25,26] uses normal vectors and curvature to identify nearest neighbors that lie on the same plane as a seed point, adds them as growth points, and expands the plane to extract discontinuities; however, it is very time-consuming. In the modified Region Growing algorithm proposed by Ge et al. [25], the homogeneity criterion is modified to increase the probability that potential growth points become growth points, which improves computational efficiency.
By applying the Region Growing algorithm to voxelized point clouds, some researchers [27,28] have greatly improved the efficiency of discontinuity extraction. To address the problems caused by threshold selection in the traditional Region Growing algorithm, Yi et al. [26] proposed a more accurate multi-rule Region Growing algorithm. Singh et al. [29] found that, within a given area, the vertical and horizontal angle variations of points belonging to the same discontinuity set appear as a sinusoidal signal, and proposed a method that extracts discontinuity sets from the amplitude and phase of this signal. While such methods are typically effective in simple scenarios, they become time-consuming and vulnerable to interference from noise and similar factors when applied to large, complex point clouds.

Because points on the same discontinuity usually share similar characteristics, mainly 3D coordinates, normal vectors, curvature, and color, the second category uses machine learning (ML) techniques to classify points based on extracted features. Of these features, 3D coordinates and colors are obtained directly from laser scanning and photogrammetry [30]. Normal vectors and curvature have been extracted using triangulation [15,18,31], cube search [8], and other methods; however, compared with computing them in 3D from the set formed by each point and its neighbors [32,33], these methods may lose some point cloud information. Some researchers have used normal vectors as features and applied the K-means algorithm [18,34] to cluster the point cloud, then extracted discontinuities from the clustering result. The Fuzzy C-means (FCM) algorithm, an extension of K-means, computes the degree to which each sample point belongs to each cluster center and assigns the point accordingly, enabling automatic identification of discontinuities; it is less sensitive to noise and yields better classification results [35,36]. Nevertheless, both methods require the number of clusters to be specified in advance and are sensitive to the choice of initial cluster centers, and different initial centers often lead to different classification results. To help determine the number of clusters, some studies have introduced criteria such as the Minimum Description Length (MDL) [37] and clustering validity indices [18,35].
Optimization algorithms, including Particle Swarm Optimization (PSO) [38], Differential Evolution (DE) [39], and the Firefly Algorithm (FA) [40], have been introduced to optimize the updating of cluster centers. Xu et al. [41] proposed a fast fuzzy clustering (FFC) method that combines the FCM algorithm with clustering by fast search and find of density peaks (CFSFDP), overcoming the respective shortcomings of the two algorithms. Riquelme et al. [32] combined Density-Based Spatial Clustering of Applications with Noise (DBSCAN), an unsupervised density-based clustering algorithm that handles clusters of irregular shape well, with Kernel Density Estimation (KDE) to extract discontinuities from point clouds. Singh et al. [29] filtered noise points from the point cloud using several methods, proposed five features to describe the remaining points, and combined K-Medoids and DBSCAN to extract discontinuities, an approach that adapts well to complex scenes. Chen et al. [42] proposed an adaptive DBSCAN (ADBSCAN) algorithm to address the difficulty of choosing DBSCAN parameters, further improving the automation of discontinuity extraction. In contrast to these unsupervised algorithms, Ge et al. [43] classified point clouds with an artificial neural network trained on a large, manually selected training dataset, greatly improving the efficiency of discontinuity extraction. However, obtaining a well-trained network requires repeated manual sample selection until the accuracy is satisfactory, which significantly limits the level of automation and the computational efficiency. To address these issues, this paper proposes a semi-automated approach that generates a training sample set simply and efficiently.

The Naive Bayes classifier [44], a supervised machine learning algorithm, is widely used in document classification [45], medical diagnosis [46], slope stability prediction [47], and other fields. However, it has rarely been applied to the identification of rock mass discontinuities. This paper proposes a new discontinuity recognition method based on an improved Naive Bayes classifier designed specifically for point cloud classification. The rest of the article is organized as follows: Section 2 introduces the steps and principles of the proposed method using a case study in Colorado (case study A); Section 3 validates the method on case study B in Kingston and discusses the selection of the relevant parameters; Section 4 compares the proposed method with previous approaches; and Section 5 presents the conclusions.

2. Methodology

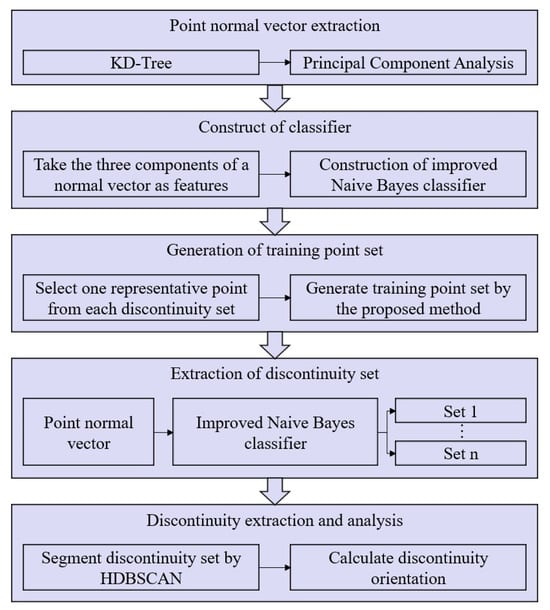

The methodology proposed in this study encompasses the following key steps, as delineated in Figure 1.

Figure 1.

Flowchart of the proposed method.

Step 1: Computation of point normal vectors. The nearest neighbors of each point are found quickly using a KD-Tree, and the normal of the plane fitted to each point and its neighbors by Principal Component Analysis (PCA) is taken as that point's normal vector.

Step 2: Classifier construction. Three components of the point normal vector are used as features to construct an improved Naive Bayes classifier suitable for point cloud classification.

Step 3: Generation of training sets. One point is selected from each discontinuity set, and the method proposed in this study generates a training set around its normal vector.

Step 4: Extraction of discontinuity sets. The trained, improved Naive Bayes classifier classifies the entire point cloud based on the point normal vectors, thereby extracting the discontinuity sets.

Step 5: Extraction and analysis of individual discontinuities. The Hierarchical Density-Based Spatial Clustering of Applications with Noise (HDBSCAN) algorithm is utilized to extract individual discontinuities from the discontinuity sets. Principal component analysis for plane fitting is employed to calculate the dip direction and dip of individual discontinuities.

2.1. Case Study A for Validation

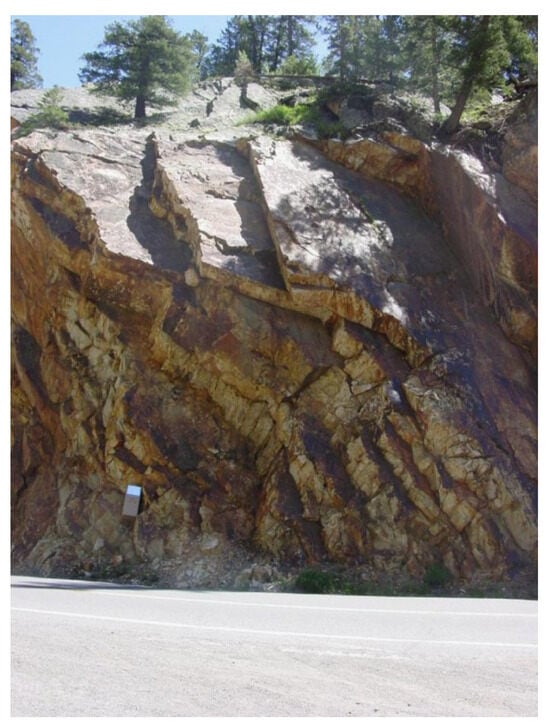

The proposed method is validated using data from a rock slope in Ouray, CO, USA (Figure 2). The dataset, acquired by Dr. John Kemeny in 2004 with an Optech ILRIS-3D laser scanner, consists of 1,024,521 points with a spacing of less than 2 cm and is publicly available in the Rockbench database [48]. This dataset has been widely used in studies of discontinuity identification from point clouds [49], providing a convenient basis for comparison when validating the proposed method.

Figure 2.

Actual scene of case study A in Ouray, CO, USA.

2.2. Extraction of Point Normal Vector

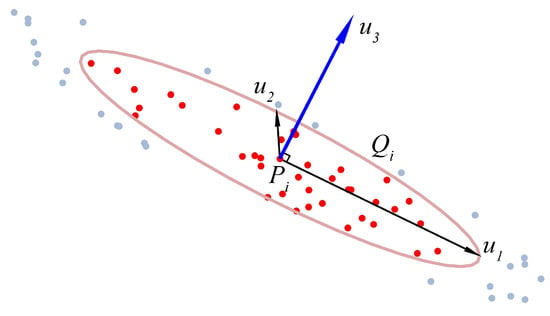

In this section, a KD-Tree is constructed to rapidly retrieve the k nearest neighbors of each point Pi in the 3D point cloud; these neighbors form the point set Qi. The normal vector of Pi is taken as the normal of the plane fitted to Qi by PCA [50]. To perform PCA on Qi, the covariance matrix of Qi is first computed, and eigenvalue decomposition then yields its eigenvalues and eigenvectors. The eigenvector corresponding to the smallest eigenvalue indicates the direction of minimum variance and is selected as the normal vector of Pi (Figure 3).

Figure 3.

Schematic of point normal vector computation. u1, u2, and u3 represent the three eigenvectors of the covariance matrix of Qi, respectively. u3 is the eigenvector corresponding to the minimum eigenvalue. The red dots represent nearest neighbors, and the gray dots represent non-nearest neighbors.
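The normal estimation described in this section can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: it assumes NumPy and SciPy are available, uses `scipy.spatial.cKDTree` for the k-nearest-neighbor search, and the function name `pca_normals` is hypothetical. The default k = 40 matches the value used for case B in Section 3.1.

```python
import numpy as np
from scipy.spatial import cKDTree


def pca_normals(points: np.ndarray, k: int = 40) -> np.ndarray:
    """Estimate a unit normal for every point from its k nearest neighbours.

    points : (n, 3) array of XYZ coordinates.
    k      : neighbourhood size (the query point itself is included).
    """
    tree = cKDTree(points)
    # idx has shape (n, k): indices of the k nearest neighbours of each point
    _, idx = tree.query(points, k=k)
    neighbourhoods = points[idx]                                   # (n, k, 3)
    centred = neighbourhoods - neighbourhoods.mean(axis=1, keepdims=True)
    # One 3x3 covariance matrix per point, shape (n, 3, 3)
    cov = np.einsum("nki,nkj->nij", centred, centred) / k
    # eigh sorts eigenvalues in ascending order, so column 0 of each
    # eigenvector matrix belongs to the smallest eigenvalue (u3 in Figure 3)
    _, eigvecs = np.linalg.eigh(cov)
    return eigvecs[:, :, 0]
```

Because `numpy.linalg.eigh` returns eigenvalues in ascending order, the first eigenvector column corresponds to u3 in Figure 3. The sign of each returned normal is arbitrary, which is one reason a direction adjustment such as Equation (1) is needed.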

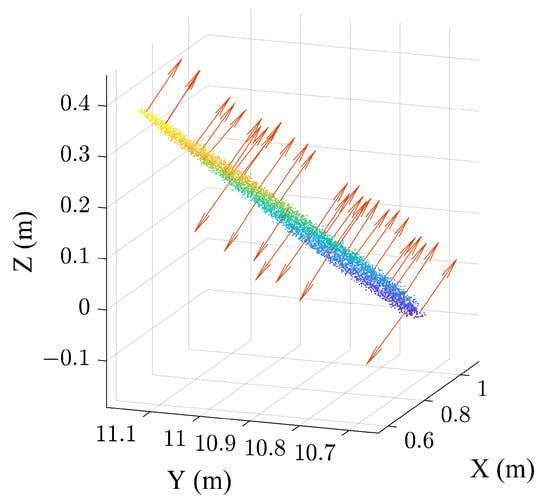

Figure 4 shows that the rough and uneven rock surface (caused by vegetation, among other factors) may cause the computed normal vectors of points on the same discontinuity to point in opposite directions (the sign of a PCA eigenvector is also inherently arbitrary), which can reduce the accuracy of point cloud classification. To address this issue, Equation (1) is used to adjust the direction of each point's normal vector:

$$
\mathbf{n}_i' =
\begin{cases}
\mathbf{n}_i, & p_i = \mathbf{n}_i \cdot \mathbf{v} \geq 0 \\
-\mathbf{n}_i, & p_i = \mathbf{n}_i \cdot \mathbf{v} < 0
\end{cases}
\tag{1}
$$

where $\mathbf{v}$ is the selected reference direction (a unit vector) and $p_i$ is the projection of the normal vector $\mathbf{n}_i$ of point $P_i$ onto $\mathbf{v}$. The direction of the normal vector remains unchanged when $p_i \geq 0$ and is reversed when $p_i < 0$.

Figure 4.

Varying normal vectors for points on the same discontinuity.
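A minimal NumPy sketch of the direction adjustment in Equation (1) is shown below. The reference direction is an assumption: the text does not state which direction was selected, so the example uses the +z axis; a direction pointing toward the scanner would be another plausible choice. The function name `orient_normals` is hypothetical.

```python
import numpy as np


def orient_normals(normals: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Flip each normal so that it lies on the same side as `reference` (Eq. 1)."""
    ref = reference / np.linalg.norm(reference)
    proj = normals @ ref                        # projection p_i = n_i . v
    sign = np.where(proj >= 0, 1.0, -1.0)       # keep if p_i >= 0, reverse otherwise
    return normals * sign[:, None]
```

For example, with the reference direction (0, 0, 1), the normals (0, 0, −1) and (0.6, 0, −0.8) become (0, 0, 1) and (−0.6, 0, 0.8), while (0, 0, 1) is left unchanged.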

Figure 5 shows the point cloud colored by mapping each point's normal vector to the RGB color system. Points on the same discontinuity set have similar colors.

Figure 5.

Point cloud data of case A: assigning RGB colors based on normal vectors. One color per discontinuity set.

2.3. Extraction of Discontinuity Sets

2.3.1. Construction of the Improved Naive Bayes Classifier

The Naive Bayes classifier, a widely used supervised learning algorithm, is based on Bayes' theorem, expressed as Equation (2) [51]:

$$
P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}
\tag{2}
$$

where P(A) and P(B) are the marginal probabilities of events A and B, respectively, with P(A) > 0 and P(B) > 0; P(B|A) is the probability of event B given that event A has occurred; and P(A|B) is the probability of event A given that event B has occurred.

Consider a sample with d features X = {x1, x2, …, xd} and the discontinuity set categories y1, y2, …, ym. The Naive Bayes classifier assumes that the d features are conditionally independent given the category. First, the probability P(yj|X) (j = 1, …, m) that the point to be classified belongs to category yj is calculated by Bayes' theorem:

$$
P(y_j \mid X) = \frac{P(X \mid y_j)\,P(y_j)}{P(X)}
\tag{3}
$$

where P(yj) is the proportion of training samples that belong to category yj. P(X), which does not depend on the category, is the evidence factor used for normalization and can be obtained from Equation (4):

$$
P(X) = \sum_{j=1}^{m} P(X \mid y_j)\,P(y_j)
\tag{4}
$$

Because the features of the sample X are assumed to be independent of each other given the category, P(X|yj) can be expressed as Equation (5):

$$
P(X \mid y_j) = \prod_{i=1}^{d} P(x_i \mid y_j)
\tag{5}
$$

Substituting Equation (5) into Equation (3) gives:

$$
P(y_j \mid X) = \frac{P(y_j) \prod_{i=1}^{d} P(x_i \mid y_j)}{P(X)}
\tag{6}
$$

Because P(X) is the same for all categories and does not affect the classification result, this paper introduces Pj to denote the (unnormalized) probability that the sample X belongs to category yj:

$$
P_j = P(y_j) \prod_{i=1}^{d} P(x_i \mid y_j)
\tag{7}
$$

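After the probability that the sample X belongs to each category is calculated using Equation (7), the category with the highest probability is selected as the classification result:

$$
h_{nb}(X) = \underset{y_j}{\arg\max}\; P_j = \underset{y_j}{\arg\max}\; P(y_j) \prod_{i=1}^{d} P(x_i \mid y_j)
\tag{8}
$$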

How P(xi|yj) in Equation (8) is calculated depends on the distribution of the sample features. In this paper, the components of each point's normal vector along the x, y, and z axes are the three features used for classification. Because these features are continuous and approximately normally distributed, P(xi|yj) in Equation (8) is estimated with the Gaussian density:

$$
P(x_i \mid y_j) = \frac{1}{\sqrt{2\pi}\,\sigma_{y_j,x_i}} \exp\!\left(-\frac{\left(x_i - \mu_{y_j,x_i}\right)^2}{2\sigma_{y_j,x_i}^2}\right)
\tag{9}
$$

where $\mu_{y_j,x_i}$, $\sigma_{y_j,x_i}$, and $\sigma^2_{y_j,x_i}$ are the mean, standard deviation, and variance, respectively, of feature xi over the training samples belonging to category yj.
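The classifier defined by Equations (7) to (9) can be sketched as a small Gaussian Naive Bayes class working in log space. This is an illustrative NumPy implementation under stated assumptions, not the authors' code: the class and method names are hypothetical, the standard deviations are population estimates, and a tiny constant is added to them to avoid division by zero. `log_scores` returns ln(Pj), so the product in Equation (7) becomes a sum.

```python
import numpy as np


class GaussianNaiveBayes:
    """Minimal Gaussian Naive Bayes in log space (Eqs. 7-9); hypothetical helper."""

    def fit(self, X: np.ndarray, y: np.ndarray) -> "GaussianNaiveBayes":
        self.classes_ = np.unique(y)
        # ln P(y_j): log of the share of training samples in each class
        self.log_prior_ = np.log(np.array([np.mean(y == c) for c in self.classes_]))
        # Per-class, per-feature mean and standard deviation used in Eq. (9)
        self.mu_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        # Small constant guards against a zero standard deviation
        self.sigma_ = np.array([X[y == c].std(axis=0) for c in self.classes_]) + 1e-9
        return self

    def log_scores(self, X: np.ndarray) -> np.ndarray:
        """Return ln(P_j) for every sample and class, shape (n_samples, m)."""
        z = (X[:, None, :] - self.mu_[None, :, :]) / self.sigma_[None, :, :]
        # Log of the Gaussian density in Eq. (9), shape (n_samples, m, d)
        log_pdf = -0.5 * z**2 - np.log(np.sqrt(2 * np.pi) * self.sigma_)[None, :, :]
        # Summing over the d features is the log of the product in Eq. (7)
        return self.log_prior_[None, :] + log_pdf.sum(axis=2)

    def predict(self, X: np.ndarray) -> np.ndarray:
        """Eq. (8): choose the class with the largest score."""
        return self.classes_[np.argmax(self.log_scores(X), axis=1)]
```

Working with logarithms in this way keeps the product of many small densities from underflowing, which is the same motivation given for the logarithmic probability curve below.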

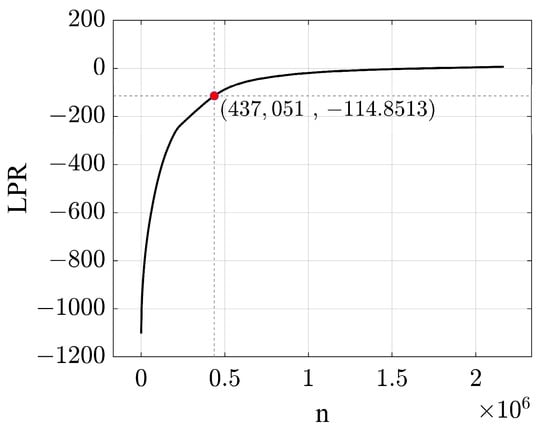

The Naive Bayes classifier described above assigns each point to the category with the highest probability. However, point clouds usually contain noise points that have a very small probability of belonging to any category and should be removed. This paper therefore improves the Naive Bayes classifier using the distribution curve of the logarithmic probability (LPR), ln(Pj), of each point for its most probable category, sorted in ascending order. The logarithmic transformation prevents the product of many factors smaller than 1 from tending toward zero, makes the trend of the distribution curve more evident, and removes the restriction to the range 0 to 1, so that the logarithmic probability can be any positive or negative number. A logarithmic probability threshold is then selected according to the trend of this curve, and points whose logarithmic probability is below the threshold are removed as noise, which improves the performance of the Naive Bayes classifier in point cloud classification.

2.3.2. Generation of Training Set

Training the Naive Bayes classifier requires a substantial number of training points. Manual selection is time-consuming and labor-intensive and may also introduce noise that reduces the accuracy of point cloud classification. This paper therefore proposes a simple and efficient method for generating training sets, as follows:

First, the number of discontinuity sets is determined from the colors of the point cloud (Figure 5). Next, one point is selected from each discontinuity set, and N normal vectors are randomly generated around its normal vector, each making an angle smaller than the maximum angle maxAGL with it; these vectors form the training set for that discontinuity set. Figure 6b shows the training sets generated around the normal vectors of the points selected in Figure 6a with maxAGL = 5° and N = 200.

Figure 6.

(a) Training points selection; (b) training sets generation.
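The training set generation can be sketched as follows. The text only requires the generated vectors to lie within maxAGL of the selected normal (and, in Section 3.2, to be uniformly distributed), so this sketch assumes sampling uniformly over the corresponding spherical cap: cos θ is drawn uniformly from [cos maxAGL, 1] and the azimuth uniformly from [0, 2π). The function names are hypothetical.

```python
import numpy as np


def random_vectors_in_cone(center, max_agl_deg, n, rng=None):
    """Draw n unit vectors uniformly from the spherical cap of half-angle
    max_agl_deg around `center` (uniform-on-cap is an assumed choice)."""
    rng = np.random.default_rng(rng)
    c = np.asarray(center, dtype=float)
    c = c / np.linalg.norm(c)
    # Equal-area sampling on a cap: cos(theta) uniform in [cos(maxAGL), 1]
    cos_t = rng.uniform(np.cos(np.radians(max_agl_deg)), 1.0, n)
    sin_t = np.sqrt(1.0 - cos_t**2)
    phi = rng.uniform(0.0, 2.0 * np.pi, n)
    # Orthonormal basis (e1, e2, c) built around the centre vector
    helper = np.array([1.0, 0.0, 0.0]) if abs(c[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    e1 = np.cross(c, helper)
    e1 = e1 / np.linalg.norm(e1)
    e2 = np.cross(c, e1)
    return (np.outer(sin_t * np.cos(phi), e1)
            + np.outer(sin_t * np.sin(phi), e2)
            + np.outer(cos_t, c))


def build_training_set(centers, max_agl_deg=5.0, n=200, seed=0):
    """Stack one generated set per selected normal; the label is the set index."""
    rng = np.random.default_rng(seed)
    X = np.vstack([random_vectors_in_cone(c, max_agl_deg, n, rng) for c in centers])
    y = np.repeat(np.arange(len(centers)), n)
    return X, y
```

With three selected normals, `build_training_set(centers, 5.0, 200)` returns 600 unit vectors and their set labels, matching the configuration used in Figure 6b.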

2.3.3. Extraction of Discontinuity Sets Based on the Improved Naive Bayes Classifier

The procedure for extracting discontinuity sets using the improved Naive Bayes classifier is outlined as follows:

Step 1: The training sets are generated using the method proposed in Section 2.3.2, and the proportion P(yj) of training points belonging to each category is calculated.

Step 2: From the training sets, the mean and standard deviation of each feature are estimated for each discontinuity set, and Equation (9) is used to calculate the conditional probability P(xi|yj) that the ith feature of point X takes the value xi given that X belongs to discontinuity set yj.

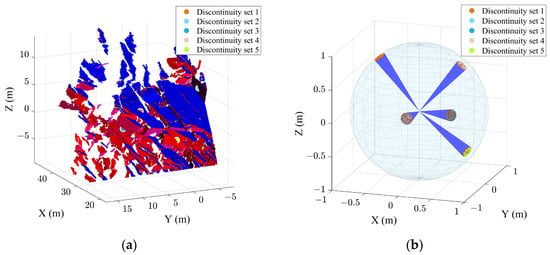

Step 3: The probability Pj that the sample X belongs to each category is calculated by substituting P(yj) and P(xi|yj) from Steps 1 and 2 into Equation (7), and the distribution curve of the logarithmic probability is plotted (Figure 7). Beyond the inflection point of the curve, the logarithmic probabilities of the points are higher and level off. The logarithmic probability at the inflection point, located with the knee point algorithm described in Section 3.3, is therefore taken as the minimum logarithmic probability (minLPR), and points with a logarithmic probability below minLPR are removed as noise. Substituting Pj of the remaining points into Equation (8) yields hnb(X) and completes the classification of the entire point cloud; points assigned to the same category belong to the same discontinuity set. This completes the high-accuracy extraction of the discontinuity sets with the improved Naive Bayes classifier.

Figure 7.

Logarithmic probability distribution curve of point classification. LPR: Logarithmic probability of point belonging to a category; n: point index.
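Step 3 can be sketched as below, given a matrix of log-scores ln(Pj) with one row per point and one column per discontinuity set (for example, produced by a Gaussian Naive Bayes model). The sketch assumes that the curve in Figure 7 is built from each point's largest log-score, i.e., its score for the category it would be assigned to; the label −1 marks noise. The function names are hypothetical.

```python
import numpy as np


def classify_with_rejection(log_scores: np.ndarray, min_lpr: float) -> np.ndarray:
    """Eq. (8) plus the minLPR rule: label = argmax_j ln(P_j), or -1 (noise)
    when the best log-score of a point is below min_lpr."""
    best = log_scores.argmax(axis=1)
    best_lpr = log_scores.max(axis=1)
    labels = best.copy()
    labels[best_lpr < min_lpr] = -1          # reject low-probability points as noise
    return labels


def sorted_lpr_curve(log_scores: np.ndarray) -> np.ndarray:
    """Best log-score of every point, sorted ascending (the curve in Figure 7)."""
    return np.sort(log_scores.max(axis=1))
```

For instance, with log-scores (−1, −5), (−9, −2), and (−200, −150) and min_lpr = −100, the three points receive the labels 0, 1, and −1.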

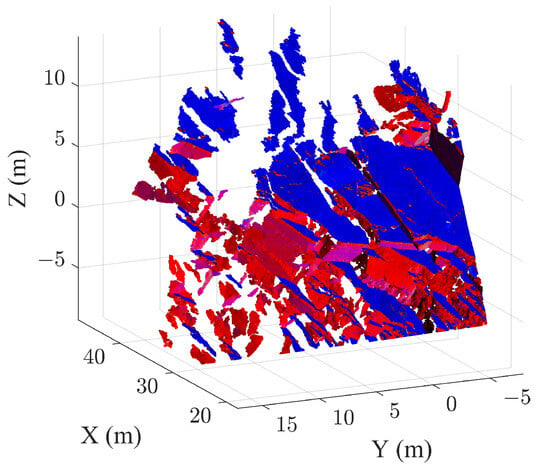

The extraction results of discontinuity sets are shown in Figure 8.

Figure 8.

Results of discontinuity sets extraction. (a) Assigned colors to each discontinuity set; (b–d) selected discontinuities for parameter calculations.

2.4. Extraction of Individual Discontinuity Using the HDBSCAN Algorithm

In this section, individual discontinuities are extracted from each discontinuity set by clustering. Because the number of individual discontinuities in each set cannot be known in advance, algorithms that require a predetermined number of clusters, such as K-means and FCM, are not applicable. DBSCAN, a prominent density-based clustering algorithm, is currently widely used to extract individual discontinuities from discontinuity sets. It requires two input parameters, the search radius (ε) and the minimum number of points (mpts), which define the density threshold used to distinguish noise points, and its stability is affected by variations in point density across the dataset. Campello et al. [52] proposed the HDBSCAN algorithm, which combines hierarchical clustering with DBSCAN and provides greater stability and better clustering results for data with uneven density. This algorithm relies on a single key parameter, the minimum cluster size (minCluster), and its performance is not sensitive to the specific value chosen. The algorithm defines the mutual reachability distance between two points as follows:

$$
d_{\mathrm{mreach}\text{-}k}(x_p, x_q) = \max\left\{\mathrm{core}_k(x_p),\ \mathrm{core}_k(x_q),\ d(x_p, x_q)\right\}
\tag{10}
$$

where core_k(xp) and core_k(xq) denote the distances from points xp and xq to their kth nearest neighbors, and d(xp, xq) is the distance between xp and xq. A minimum spanning tree is built from the mutual reachability distances, and a cluster hierarchy is constructed from it. The hierarchy is then split recursively, and clusters smaller than minCluster are discarded as noise, which condenses the hierarchy and extracts the final clusters.
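Equation (10) can be illustrated with a dense NumPy/SciPy computation. This is only a worked illustration for small inputs (it builds an n × n matrix); HDBSCAN implementations compute these distances internally. Conventions differ on whether a point counts as its own neighbor; this sketch excludes it, so core_k is the distance to the kth other point. The function name is hypothetical.

```python
import numpy as np
from scipy.spatial.distance import cdist


def mutual_reachability(X: np.ndarray, k: int) -> np.ndarray:
    """Dense matrix of max(core_k(x_p), core_k(x_q), d(x_p, x_q))."""
    d = cdist(X, X)                       # pairwise Euclidean distances
    # After sorting each row, column 0 is the point itself (distance 0),
    # so column k is the distance to the k-th nearest other point
    core = np.sort(d, axis=1)[:, k]
    return np.maximum(np.maximum(core[:, None], core[None, :]), d)
```

For the one-dimensional points 0, 1, 3, and 10 with k = 1, the core distances are 1, 1, 2, and 7, so the mutual reachability distance between 0 and 3 is max(1, 2, 3) = 3, while that between 3 and 10 is max(2, 7, 7) = 7.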

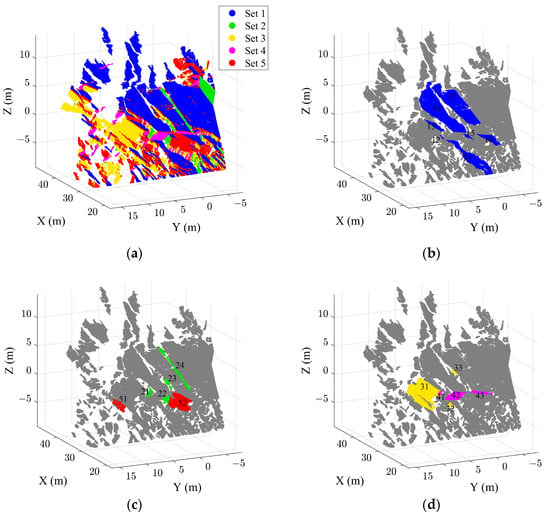

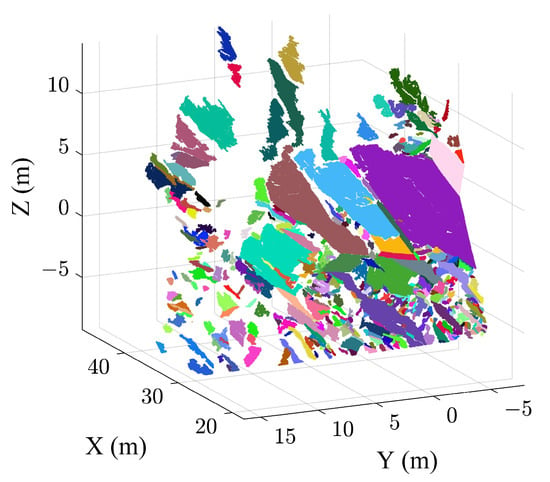

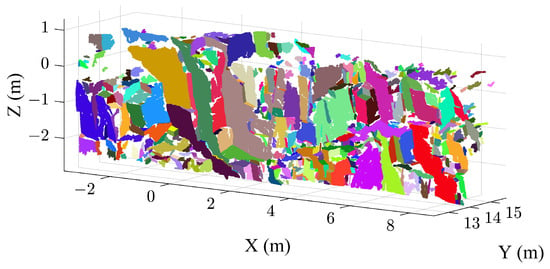

In addition, the parameter minDis is used to remove extremely small clusters from the extraction results as noise, further improving the accuracy of discontinuity extraction. For case A, testing showed that minCluster = 5 and minDis = 200 successfully extract the individual discontinuities (Figure 9).

Figure 9.

Extraction results of individual discontinuity. One color per individual discontinuity.

2.5. Calculation of Rock Discontinuity Parameters

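The PCA algorithm is used to fit a plane to each extracted discontinuity and obtain its unit normal vector u = (ux, uy, uz). With the x, y, and z axes pointing east, north, and up, respectively, and u oriented upward (uz ≥ 0), the dip direction (α) and dip (β) of the discontinuity are calculated with Equations (11) and (12):

$$
\alpha = \operatorname{atan2}(u_x,\ u_y) \bmod 360^\circ
\tag{11}
$$

$$
\beta = \arccos(u_z)
\tag{12}
$$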
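The plane fit and the orientation calculation can be sketched as follows, assuming x = east, y = north, and z = up. Singular value decomposition of the centered coordinates is used here; its last right singular vector equals the covariance eigenvector with the smallest eigenvalue, i.e., the PCA plane normal. The function name is hypothetical.

```python
import numpy as np


def plane_orientation(points: np.ndarray):
    """Fit a plane by PCA and return (dip direction, dip) in degrees.

    Assumes x = east, y = north, z = up.
    """
    centred = points - points.mean(axis=0)
    # Right singular vector with the smallest singular value = plane normal
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    u = vt[-1]
    if u[2] < 0:                      # use the upward-pointing normal
        u = -u
    # Azimuth of the normal's horizontal component, clockwise from north
    dip_direction = np.degrees(np.arctan2(u[0], u[1])) % 360.0
    dip = np.degrees(np.arccos(np.clip(u[2], -1.0, 1.0)))
    return float(dip_direction), float(dip)
```

As a check, points sampled on a plane whose upward normal is (sin 30° sin 120°, sin 30° cos 120°, cos 30°) return a dip direction of 120° and a dip of 30°.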

In total, 19 discontinuities previously studied by Riquelme et al. [32] and Chen et al. [18], shown in Figure 8b–d, were selected for orientation calculation to validate the effectiveness of the proposed approach. Table 1 compares the dip directions and dips of these 19 discontinuities obtained with the proposed approach and with the methods of Riquelme et al. [32] and Chen et al. [18]. For 94.7% of the discontinuities (18 of 19), the orientation differences are within 7°, and the average difference is within 2°. The largest deviation in dip direction occurs for Discontinuity 12 and is attributed to surface undulations.

Table 1.

Comparison of orientation results from different methods.

3. Case Study B

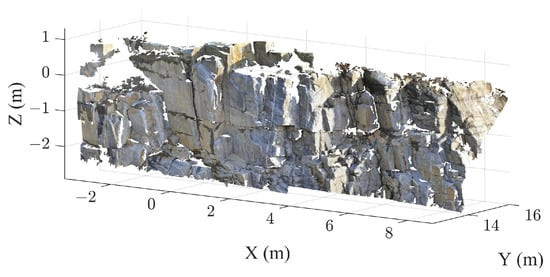

In this section, the practicality of the proposed method is validated using a point cloud case publicly available in the Rockbench repository [48] (Figure 10). The case is located along Highway 15 near Kingston, ON, Canada, and was acquired using a Leica HDS6000 laser scanner. It contains 2,167,515 data points along with their color information.

Figure 10.

The point cloud data of case B.

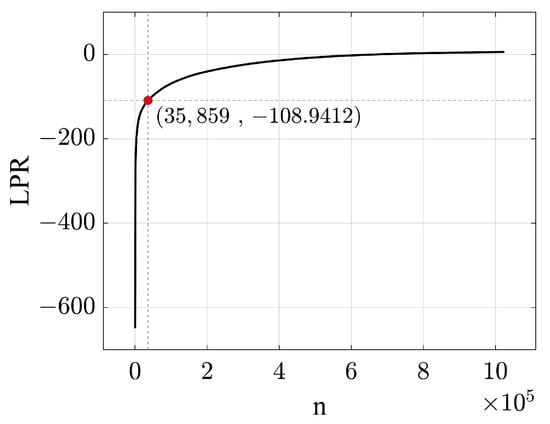

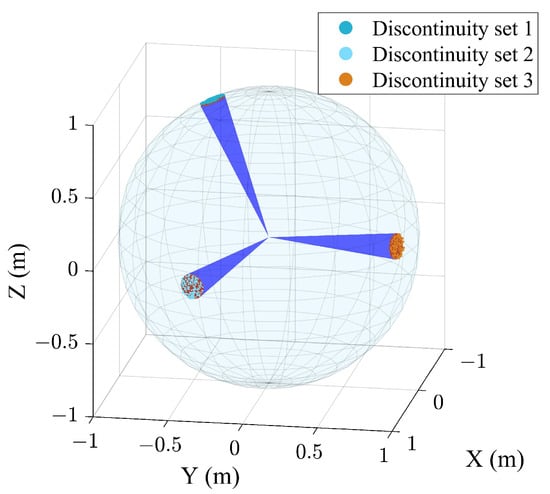

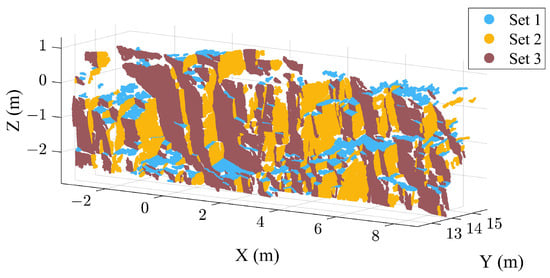

3.1. Extraction Results of the Case B

Following Ge et al. [43], the number of nearest neighbors is set to K = 40 when calculating the point normal vectors with the PCA algorithm. The colors assigned from the normal vectors in Figure 11 reveal three main discontinuity sets in the rock mass, in addition to the artificial plane in the upper-right corner. One training point is selected for each of the three discontinuity sets (Figure 11), and 200 random normal vectors within maxAGL = 5° of each training point's normal vector are generated to form the training sets (Figure 12) used to train the improved Naive Bayes classifier.

Figure 11.

Training points selection of case B. One color per discontinuity set.

Figure 12.

Training sets generation of case B.

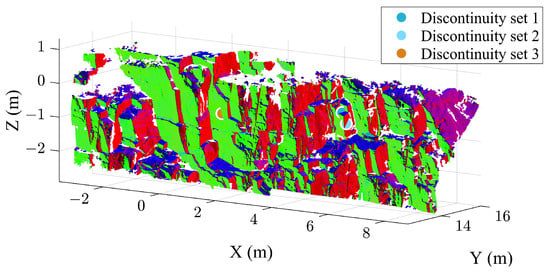

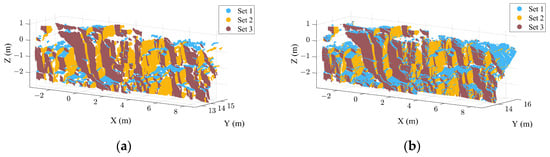

Figure 13 shows the discontinuity sets extracted by applying the trained, improved Naive Bayes classifier to the entire point cloud; each color denotes a distinct discontinuity set. Three discontinuity sets have been successfully extracted, and most of the artificial plane in the top-right corner of the point cloud, which does not belong to any discontinuity set, has been excluded by the minimum logarithmic probability threshold minLPR = −114.8513. Figure 14 shows the individual discontinuities extracted by the HDBSCAN algorithm with minCluster = 10 and minDis = 200: the individual discontinuities are extracted effectively, and some excessively small discontinuities and noise points are removed.

Figure 13.

Extraction results of the discontinuity set for case B.

Figure 14.

Results of extraction of individual discontinuity for case B.

After the normal vector of each discontinuity is obtained with the PCA algorithm and substituted into Equations (11) and (12), the mean dip direction and dip of each discontinuity set are determined. Compared with the results of Lato et al. [13] (Table 2), Set 1 shows close agreement, with differences of 0° in dip direction and 3° in dip. Sets 2 and 3 agree slightly less well, with dip direction differences of 6° and 7°, respectively, and dip differences of 4°. All differences nevertheless fall within an acceptable range, supporting the reliability of the proposed method.

Table 2.

Comparison of orientation results for case B.

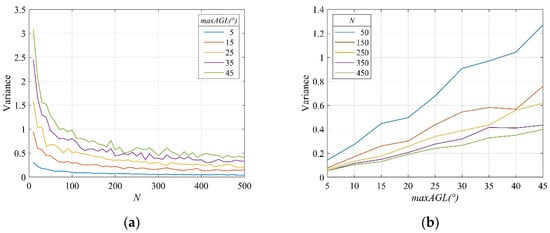

3.2. Maximum Angle maxAGL and Quantity N for Training Sets Generation

First, when generating the training sets, maxAGL should be less than half the minimum angle between the selected point normal vectors, so that the training sets are independent and do not overlap. In case B, the minimum angle between the selected point normal vectors is 88.5°, so the training sets should be generated within angles of less than 44.25°.
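The bound on maxAGL can be computed directly from the selected normal vectors, as in the short sketch below (the function name is hypothetical). The normals are assumed to have already been oriented consistently, so the angle between two of them ranges from 0° to 180°.

```python
import numpy as np
from itertools import combinations


def max_allowed_agl(normals) -> float:
    """Upper bound for maxAGL: half the smallest angle between any two selected normals."""
    n = np.asarray(normals, dtype=float)
    n = n / np.linalg.norm(n, axis=1, keepdims=True)
    angles = [np.degrees(np.arccos(np.clip(n[i] @ n[j], -1.0, 1.0)))
              for i, j in combinations(range(len(n)), 2)]
    return 0.5 * min(angles)
```

For example, if the closest pair of selected normals is 88.5° apart, as in case B, the function returns 44.25°.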

The generated training sets must be uniformly distributed within the chosen angular range to be sufficiently stable and representative. To determine the optimal values of maxAGL and N, 100 training sets were generated around the selected point normal vectors for each combination of maxAGL and N. The variance, over these 100 repetitions, of the angle between the central vector of each generated training set and the selected point normal vector served as the criterion for the stability of the generated training sets, with a smaller variance indicating greater stability. Figure 15 shows how this stability varies with maxAGL and N.

Figure 15.

Variation curves of stability for generated point sets: (a) different maxAGL; (b) different N.
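The stability experiment can be reproduced in outline with the sketch below. It assumes uniform sampling over a spherical cap for generating the training vectors (the exact generator is not specified in the text) and interprets the central vector of a training set as its normalized mean; the function names are hypothetical. It returns the variance, in squared degrees, of the angle between that mean and the selected normal over repeated generations.

```python
import numpy as np


def cone_sample(center, max_agl_deg, n, rng):
    """n unit vectors drawn uniformly from the spherical cap of half-angle
    max_agl_deg around the unit vector `center` (assumed generator)."""
    cos_t = rng.uniform(np.cos(np.radians(max_agl_deg)), 1.0, n)
    sin_t = np.sqrt(1.0 - cos_t**2)
    phi = rng.uniform(0.0, 2.0 * np.pi, n)
    helper = np.array([1.0, 0.0, 0.0]) if abs(center[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    e1 = np.cross(center, helper)
    e1 = e1 / np.linalg.norm(e1)
    e2 = np.cross(center, e1)
    return (np.outer(sin_t * np.cos(phi), e1)
            + np.outer(sin_t * np.sin(phi), e2)
            + np.outer(cos_t, center))


def stability(center, max_agl_deg, n, repeats=100, seed=0):
    """Variance (deg^2) over `repeats` runs of the angle between the mean
    vector of a generated set and `center`; smaller means more stable."""
    rng = np.random.default_rng(seed)
    c = np.asarray(center, dtype=float)
    c = c / np.linalg.norm(c)
    angles = []
    for _ in range(repeats):
        m = cone_sample(c, max_agl_deg, n, rng).mean(axis=0)
        m = m / np.linalg.norm(m)
        angles.append(np.degrees(np.arccos(np.clip(m @ c, -1.0, 1.0))))
    return float(np.var(angles))
```

Under this assumed generator, the variance shrinks roughly in proportion to 1/N and grows with maxAGL, which is the qualitative behavior described for Figure 15.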

In Figure 15a, the stability of the generated training sets increases with N and becomes relatively steady once N exceeds 200, although differences in stability remain among the maxAGL values. In Figure 15b, for every N, stability decreases as maxAGL increases, and the larger N is, the less sensitive stability is to maxAGL. Beyond N = 150, however, further increases in N have little effect on stability, consistent with the trend in Figure 15a.

In summary, this study generates the training sets with maxAGL = 5° and N = 200, values that the analysis above indicates yield stable training sets.

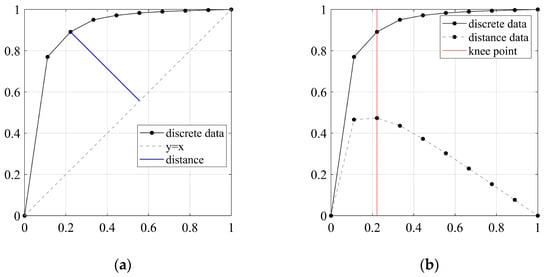

3.3. Minimum Logarithmic Probability minLPR

Determining minLPR for noise point removal is crucial when the proposed method is used for point cloud classification. Figure 16 shows the logarithmic probability distribution curve of the point cloud classification results for case B, with minLPR = −114.8513 determined by the knee point algorithm at the curve's inflection point. Strictly speaking, the point sought is the knee of the curve, usually defined as the point of maximum curvature, rather than an inflection point in the mathematical sense. However, the curve in Figure 16 consists of many discrete points, so this point cannot be located accurately simply by taking second-order derivatives. This paper therefore uses the knee point detection algorithm proposed by Satopaa et al. [53], which is suitable for discrete data. Its basic principle is shown in Figure 17: the discrete data are first normalized so that both the x and y coordinates lie in the interval 0 to 1 (which preserves the shape of the original data), and the perpendicular distance from each data point to the line y = x is then computed (Figure 17a). The point with the largest perpendicular distance is taken as the knee point (Figure 17b). Further details of the algorithm are given in [53].

Figure 16.

Logarithmic probability distribution for case B point cloud classification.

Figure 17.

Principle of knee point algorithm: (a) perpendicular distance of the discrete point from the line y = x; (b) selection of knee points.
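The simplified procedure illustrated in Figure 17 can be sketched as follows. It is not the full Kneedle algorithm of Satopaa et al. [53], which additionally smooths the data and applies a sensitivity threshold (a fuller implementation is available, for example, as `KneeLocator` in the `kneed` Python package). The function name is hypothetical, and the input is assumed to be the logarithmic probabilities sorted in ascending order.

```python
import numpy as np


def knee_index(values) -> int:
    """Simplified knee detection: normalise index and value to [0, 1] and
    return the index of the point farthest from the line y = x.
    Assumes `values` is sorted ascending and not constant."""
    y = np.asarray(values, dtype=float)
    x = np.linspace(0.0, 1.0, len(y))
    y = (y - y.min()) / (y.max() - y.min())
    # Perpendicular distance from (x, y) to the line y = x is |y - x| / sqrt(2)
    dist = np.abs(y - x) / np.sqrt(2.0)
    return int(np.argmax(dist))
```

minLPR is then the value at the returned index, e.g., `min_lpr = lpr_sorted[knee_index(lpr_sorted)]`. For the sorted values −100, −50, −10, −5, −4, −3.5, −3, −2.8, −2.6, and −2.5, the knee is at index 2, giving a threshold of −10.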

4. Discussion

Point cloud datasets are large, and the points belonging to discontinuities carry no distinctive labels, so features usually have to be extracted for point cloud classification. Ge et al. [43] used four features (point coordinates, normal vectors, curvature, and point density) as inputs to an Artificial Neural Network (ANN) model and achieved efficient point cloud classification. However, their method requires a sufficient number of training points to be selected manually, a process prone to errors that can degrade the subsequent classification. Minimizing such effects generally requires selecting training sets repeatedly until satisfactory classification results are obtained, which is time-consuming and labor-intensive. The proposed method classifies point clouds using only the point normal vectors, which simplifies feature extraction while still performing well in discontinuity set extraction. Generating training sets around a few selected training points reduces the need for manual point selection and the likelihood of choosing erroneous points, improving both the efficiency and the accuracy of training set acquisition.

Three-dimensional point clouds acquired by scanning rock surfaces contain noise points unrelated to discontinuities, caused by factors such as vegetation cover, rock weathering, and human activity, and these points adversely affect the subsequent calculation of discontinuity parameters. Mitigating the impact of such noise points remains a major challenge for existing point cloud classification methods. This study addresses the challenge by improving the Naive Bayes classifier with a logarithmic probability threshold selected by the knee point algorithm, which allows noise points to be filtered out more effectively. To evaluate the denoising performance of the improved Naive Bayes classifier, it was compared with the Fuzzy C-Means (FCM) clustering algorithm for extracting discontinuity sets from the point cloud of case B. As shown in Figure 18, the two methods extract essentially the same discontinuity sets, but with some notable differences. Because FCM assigns every point to a set, it cannot identify and remove noise points; in the upper-right part of the point cloud, for example, artificial rock planes are incorrectly assigned to discontinuity set 1. In contrast, the proposed method identifies noise points by calculating the logarithmic probability of each point with respect to each discontinuity set, and in Figure 18a the artificial rock plane has been almost completely removed. Furthermore, a comparison with Figure 18b shows that Figure 18a contains several conspicuous blank “voids”, which result from the removal of noise points in irregular locations such as the edges of the rock mass and weathered or fragmented areas.

Figure 18.

Extraction results of two methods: (a) proposed method; (b) FCM.
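To make the thresholding idea concrete, here is a minimal sketch of a Naive Bayes classifier on point normal vectors that rejects low-probability points. It assumes Gaussian class-conditional densities for each normal-vector component and consistently oriented normals; the class name `ThresholdedGaussianNB`, the variance floor, and the parameter `min_lpr` are hypothetical. Each point is assigned to the set with the largest joint log probability (log prior plus the summed per-component log densities, following the naive independence assumption), and it is labeled as noise (−1) when that largest value falls below minLPR, which in this paper is chosen with the knee point algorithm.

```python
import numpy as np


class ThresholdedGaussianNB:
    """Sketch: Gaussian Naive Bayes over normal vectors with a noise threshold."""

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y)
        self.classes_ = np.unique(y)
        self.mu_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        # Small floor keeps variances positive when a component barely varies.
        self.var_ = np.array([X[y == c].var(axis=0) for c in self.classes_]) + 1e-6
        self.log_prior_ = np.log([np.mean(y == c) for c in self.classes_])
        return self

    def joint_log_likelihood(self, X):
        X = np.asarray(X, dtype=float)[:, None, :]  # shape (n, 1, d)
        # Naive assumption: sum independent per-component Gaussian log densities.
        log_dens = -0.5 * (np.log(2 * np.pi * self.var_)
                           + (X - self.mu_) ** 2 / self.var_)
        return log_dens.sum(axis=2) + self.log_prior_  # shape (n, k)

    def predict(self, X, min_lpr):
        jll = self.joint_log_likelihood(X)
        labels = self.classes_[np.argmax(jll, axis=1)]
        best = jll.max(axis=1)
        # Points whose best log probability is below the threshold become noise (-1).
        return np.where(best >= min_lpr, labels, -1), best


X_train = [[0.01, 0, 1], [-0.01, 0, 1], [0, 0.01, 1], [0, -0.01, 1],
           [1, 0.01, 0], [1, -0.01, 0], [1, 0, 0.01], [1, 0, -0.01]]
y_train = [0, 0, 0, 0, 1, 1, 1, 1]
model = ThresholdedGaussianNB().fit(X_train, y_train)
labels, best = model.predict([[0, 0, 1], [1, 0, 0], [0.7, 0.7, 0]], min_lpr=-50.0)
```

Because the joint log probability is not normalized across sets, it becomes very negative for points far from every set (here the oblique normal `[0.7, 0.7, 0]`), which is what makes a single threshold usable for rejecting noise. A plain classifier such as FCM has no equivalent rejection step.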

5. Conclusions

This paper presents a new approach for identifying and extracting rock discontinuities based on an improved Naive Bayes classifier. The key conclusions are as follows:

Manually selecting training point sets is laborious and time-consuming, and the randomness of manual selection can compromise classification accuracy. To address this, this paper generates training sets around the selected training points, which streamlines the selection process and improves the precision of the training sets. To meet the demands of point cloud classification, the Naive Bayes classifier is improved by incorporating a logarithmic probability threshold, so that noise points are filtered out during classification and the accuracy of discontinuity set extraction is improved. The HDBSCAN algorithm is then used to extract individual discontinuities quickly, and the PCA algorithm is used to compute their normal vectors, from which their orientations are accurately determined.
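The PCA plane-fitting step can be sketched as follows: the normal of a segmented discontinuity is taken as the eigenvector of the point covariance matrix with the smallest eigenvalue, and it is then converted to dip and dip direction. The coordinate convention (x = East, y = North, z = Up), the upward orientation of the normal, and the function names `fit_plane_pca` and `dip_and_dip_direction` are assumptions for this illustration rather than details given in the paper.

```python
import numpy as np


def fit_plane_pca(points):
    """Fit a plane by PCA; return (centroid, upward unit normal)."""
    P = np.asarray(points, dtype=float)
    c = P.mean(axis=0)
    D = P - c
    cov = D.T @ D / len(P)
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
    n = eigvecs[:, 0]                       # least-variance direction = normal
    if n[2] < 0:
        n = -n                              # orient the normal upward
    return c, n / np.linalg.norm(n)


def dip_and_dip_direction(normal):
    """Convert an upward unit normal to (dip, dip direction) in degrees.

    Assumes x = East, y = North, z = Up. For a horizontal plane the dip
    direction is undefined and this returns 0.
    """
    nx, ny, nz = normal
    dip = np.degrees(np.arccos(np.clip(nz, -1.0, 1.0)))
    # The horizontal part of an upward normal points down-dip.
    dip_dir = np.degrees(np.arctan2(nx, ny)) % 360.0
    return dip, dip_dir


# Points on the plane z = -x, which dips 45 degrees toward the east.
xs, ys = np.meshgrid([0.0, 1.0, 2.0], [0.0, 1.0, 2.0])
pts = np.column_stack([xs.ravel(), ys.ravel(), -xs.ravel()])
centroid, normal = fit_plane_pca(pts)
dip, dip_dir = dip_and_dip_direction(normal)
```

For this synthetic plane the sketch returns a dip of about 45° and a dip direction of about 90°. In practice the input would be the points of one discontinuity segmented by HDBSCAN.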

The proposed method was applied to two cases, located near Colorado and Kingston, respectively. Case A was used to explain the principles and procedures of the new method and to validate its reliability through comparison with previous research results. Case B was used to discuss the selection of optimal parameters, to confirm the reliability of the results through comparison with prior research, and to highlight the advantages of the method in noise removal.

The proposed method for generating training point sets not only minimizes the manual selection of samples but also reduces the likelihood of errors in the training point set. Compared with existing methods, using the improved Naive Bayes classifier to extract discontinuity sets removes noise points while maintaining efficiency and reliability, which makes the results more trustworthy.

Finally, the proposed method assumes, as Naive Bayes classifiers do, that the features used for classification are independent of each other. In practice, many features are not fully independent (the three components of a unit normal vector, for example, are linked by nx² + ny² + nz² = 1), so future research could further improve the Naive Bayes classifier in this respect to enhance its performance in discontinuity extraction.

Author Contributions

Conceptualization, G.L.; methodology, X.Z.; software, X.Z.; validation, Y.L. and Z.Y.; formal analysis, G.L.; investigation, X.Z. and B.C.; resources, G.L.; data curation, X.Z. and B.C.; writing—original draft preparation, X.Z.; writing—review and editing, X.Z. and B.C.; visualization, X.Z.; supervision, C.T.; project administration, X.Z.; funding acquisition, G.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Science and Technology Research and Development Project of China Railway Co., Ltd. (Grant No. 2022-Special-07-02), the National Natural Science Foundation of China (Grant No. 41974148), the Natural Resources Science and Technology Project of Hunan Province (Grant No. 2022-01), and the Research Foundation of the Department of Natural Resources of Hunan Province (Grant No. 20230101DZ).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data and materials that support the findings of this study are available from the author, Xudong Zhu, upon reasonable request.

Acknowledgments

The authors thank John Kemeny for sharing the point clouds of case A. The raw data of case B were obtained from the Rockbench repository. The authors gratefully acknowledge M. Lato, J. Kemeny, R. M. Harrap, and G. Bevan for establishing the Rockbench repository, and extend special thanks to the editors and anonymous reviewers for their valuable comments.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Slob, S. Automated Rock Mass Characterisation Using 3-D Terrestrial Laser Scanning. Ph.D. Thesis, Delft University of Technology, Delft, The Netherlands, 2010.

2. Ghosh, A.; Daemen, J.J. Fractal Characteristics of Rock Discontinuities. Eng. Geol. 1993, 34, 1–9.

3. Zhang, F.; Damjanac, B.; Maxwell, S. Investigating Hydraulic Fracturing Complexity in Naturally Fractured Rock Masses Using Fully Coupled Multiscale Numerical Modeling. Rock Mech. Rock Eng. 2019, 52, 5137–5160.

4. Barton, N.R. Suggested Methods for the Quantitative Description of Discontinuities in Rock Masses. Int. J. Rock Mech. Min. Sci. Geomech. Abstr. 1978, 15, 319–368.

5. Bieniawski, Z.T. Engineering Rock Mass Classifications: A Complete Manual for Engineers and Geologists in Mining, Civil, and Petroleum Engineering; John Wiley & Sons: Hoboken, NJ, USA, 1989; ISBN 0-471-60172-1.

6. Sturzenegger, M.; Stead, D. Close-Range Terrestrial Digital Photogrammetry and Terrestrial Laser Scanning for Discontinuity Characterization on Rock Cuts. Eng. Geol. 2009, 106, 163–182.

7. Mah, J.; Samson, C.; McKinnon, S.D. 3D Laser Imaging for Joint Orientation Analysis. Int. J. Rock Mech. Min. Sci. 2011, 48, 932–941.

8. Gigli, G.; Casagli, N. Semi-Automatic Extraction of Rock Mass Structural Data from High Resolution LIDAR Point Clouds. Int. J. Rock Mech. Min. Sci. 2011, 48, 187–198.

9. Kong, D.; Wu, F.; Saroglou, C. Automatic Identification and Characterization of Discontinuities in Rock Masses from 3D Point Clouds. Eng. Geol. 2020, 265, 105442.

10. Priest, S.D.; Hudson, J. Discontinuity Spacings in Rock. Int. J. Rock Mech. Min. Sci. Geomech. Abstr. 1976, 13, 135–148.

11. Gischig, V.; Amann, F.; Moore, J.; Loew, S.; Eisenbeiss, H.; Stempfhuber, W. Composite Rock Slope Kinematics at the Current Randa Instability, Switzerland, Based on Remote Sensing and Numerical Modeling. Eng. Geol. 2011, 118, 37–53.

12. Zhang, P.; Zhao, Q.; Tannant, D.D.; Ji, T.; Zhu, H. 3D Mapping of Discontinuity Traces Using Fusion of Point Cloud and Image Data. Bull. Eng. Geol. Environ. 2019, 78, 2789–2801.

13. Lato, M.; Diederichs, M.S.; Hutchinson, D.J.; Harrap, R. Optimization of LiDAR Scanning and Processing for Automated Structural Evaluation of Discontinuities in Rockmasses. Int. J. Rock Mech. Min. Sci. 2009, 46, 194–199.

14. Umili, G.; Ferrero, A.; Einstein, H. A New Method for Automatic Discontinuity Traces Sampling on Rock Mass 3D Model. Comput. Geosci. 2013, 51, 182–192.

15. Li, X.; Chen, Z.; Chen, J.; Zhu, H. Automatic Characterization of Rock Mass Discontinuities Using 3D Point Clouds. Eng. Geol. 2019, 259, 105131.

16. Roncella, R.; Forlani, G. Extraction of Planar Patches from Point Clouds to Retrieve Dip and Dip Direction of Rock Discontinuities. In Proceedings of the ISPRS Workshop Laser Scanning, Enschede, The Netherlands, 12–15 September 2005; pp. 162–167.

17. Ferrero, A.M.; Forlani, G.; Roncella, R.; Voyat, H. Advanced Geostructural Survey Methods Applied to Rock Mass Characterization. Rock Mech. Rock Eng. 2009, 42, 631–665.

18. Chen, J.; Zhu, H.; Li, X. Automatic Extraction of Discontinuity Orientation from Rock Mass Surface 3D Point Cloud. Comput. Geosci. 2016, 95, 18–31.

19. Chen, N.; Kemeny, J.; Jiang, Q.; Pan, Z. Automatic Extraction of Blocks from 3D Point Clouds of Fractured Rock. Comput. Geosci. 2017, 109, 149–161.

20. Leng, X.; Xiao, J.; Wang, Y. A Multi-Scale Plane-Detection Method Based on the Hough Transform and Region Growing. Photogramm. Rec. 2016, 31, 166–192.

21. Yang, S.; Liu, S.; Zhang, N.; Li, G.; Zhang, J. A Fully Automatic-Image-Based Approach to Quantifying the Geological Strength Index of Underground Rock Mass. Int. J. Rock Mech. Min. Sci. 2021, 140, 104585.

22. Jaboyedoff, M.; Oppikofer, T.; Abellán, A.; Derron, M.-H.; Loye, A.; Metzger, R.; Pedrazzini, A. Use of LIDAR in Landslide Investigations: A Review. Nat. Hazards 2012, 61, 5–28.

23. Gomes, R.K.; de Oliveira, L.P.; Gonzaga Jr, L.; Tognoli, F.M.; Veronez, M.R.; de Souza, M.K. An Algorithm for Automatic Detection and Orientation Estimation of Planar Structures in LiDAR-Scanned Outcrops. Comput. Geosci. 2016, 90, 170–178.

24. Wang, X.; Zou, L.; Shen, X.; Ren, Y.; Qin, Y. A Region-Growing Approach for Automatic Outcrop Fracture Extraction from a Three-Dimensional Point Cloud. Comput. Geosci. 2017, 99, 100–106.

25. Ge, Y.; Tang, H.; Xia, D.; Wang, L.; Zhao, B.; Teaway, J.W.; Chen, H.; Zhou, T. Automated Measurements of Discontinuity Geometric Properties from a 3D-Point Cloud Based on a Modified Region Growing Algorithm. Eng. Geol. 2018, 242, 44–54.

26. Yi, X.; Feng, W.; Wang, D.; Yang, R.; Hu, Y.; Zhou, Y. An Efficient Method for Extracting and Clustering Rock Mass Discontinuities from 3D Point Clouds. Acta Geotech. 2023, 18, 3485–3503.

27. Hu, L.; Xiao, J.; Wang, Y. Efficient and Automatic Plane Detection Approach for 3-D Rock Mass Point Clouds. Multimed. Tools Appl. 2020, 79, 839–864.

28. Yu, D.; Xiao, J.; Wang, Y. High-Precision Plane Detection Method for Rock-Mass Point Clouds Based on Supervoxel. Sensors 2020, 20, 4209.

29. Singh, S.K.; Banerjee, B.P.; Lato, M.J.; Sammut, C.; Raval, S. Automated Rock Mass Discontinuity Set Characterisation Using Amplitude and Phase Decomposition of Point Cloud Data. Int. J. Rock Mech. Min. Sci. 2022, 152, 105072.

30. Park, J.; Cho, Y.K. Point Cloud Information Modeling: Deep Learning–Based Automated Information Modeling Framework for Point Cloud Data. J. Constr. Eng. Manag. 2022, 148, 04021191.

31. Slob, S.; Hack, R.; Turner, A.K. An Approach to Automate Discontinuity Measurements of Rock Faces Using Laser Scanning Techniques. In Proceedings of the ISRM EUROCK, Madeira, Portugal, 25–28 November 2002; ISRM: London, UK, 2002; p. ISRM-EUROCK-2002-006.

32. Riquelme, A.J.; Abellán, A.; Tomás, R.; Jaboyedoff, M. A New Approach for Semi-Automatic Rock Mass Joints Recognition from 3D Point Clouds. Comput. Geosci. 2014, 68, 38–52.

33. Menegoni, N.; Giordan, D.; Perotti, C.; Tannant, D.D. Detection and Geometric Characterization of Rock Mass Discontinuities Using a 3D High-Resolution Digital Outcrop Model Generated from RPAS Imagery–Ormea Rock Slope, Italy. Eng. Geol. 2019, 252, 145–163.

34. Wu, X.; Wang, F.; Wang, M.; Zhang, X.; Wang, Q.; Zhang, S. A New Method for Automatic Extraction and Analysis of Discontinuities Based on TIN on Rock Mass Surfaces. Remote Sens. 2021, 13, 2894.

35. Van Knapen, B.; Slob, S. Identification and Characterisation of Rock Mass Discontinuity Sets Using 3D Laser Scanning. Procedia Eng. 2006, 191, 838–845.

36. Vöge, M.; Lato, M.J.; Diederichs, M.S. Automated Rockmass Discontinuity Mapping from 3-Dimensional Surface Data. Eng. Geol. 2013, 164, 155–162.

37. Olariu, M.I.; Ferguson, J.F.; Aiken, C.L.; Xu, X. Outcrop Fracture Characterization Using Terrestrial Laser Scanners: Deep-Water Jackfork Sandstone at Big Rock Quarry, Arkansas. Geosphere 2008, 4, 247–259.

38. Li, Y.; Wang, Q.; Chen, J.; Xu, L.; Song, S. K-Means Algorithm Based on Particle Swarm Optimization for the Identification of Rock Discontinuity Sets. Rock Mech. Rock Eng. 2015, 48, 375–385.

39. Cui, X.; Yan, E. A Clustering Algorithm Based on Differential Evolution for the Identification of Rock Discontinuity Sets. Int. J. Rock Mech. Min. Sci. 2020, 126, 104181.

40. Guo, J.; Liu, S.; Zhang, P.; Wu, L.; Zhou, W.; Yu, Y. Towards Semi-Automatic Rock Mass Discontinuity Orientation and Set Analysis from 3D Point Clouds. Comput. Geosci. 2017, 103, 164–172.

41. Xu, W.; Zhang, Y.; Li, X.; Wang, X.; Ma, F.; Zhao, J.; Zhang, Y. Extraction and Statistics of Discontinuity Orientation and Trace Length from Typical Fractured Rock Mass: A Case Study of the Xinchang Underground Research Laboratory Site, China. Eng. Geol. 2020, 269, 105553.

42. Chen, J.; Huang, H.; Zhou, M.; Chaiyasarn, K. Towards Semi-Automatic Discontinuity Characterization in Rock Tunnel Faces Using 3D Point Clouds. Eng. Geol. 2021, 291, 106232.

43. Ge, Y.; Cao, B.; Tang, H. Rock Discontinuities Identification from 3D Point Clouds Using Artificial Neural Network. Rock Mech. Rock Eng. 2022, 55, 1705–1720.

44. Bayes, T. An Essay towards Solving a Problem in the Doctrine of Chances. Biometrika 1958, 45, 296–315.

45. McCallum, A.; Nigam, K. A Comparison of Event Models for Naive Bayes Text Classification. In Proceedings of the AAAI-98 Workshop on Learning for Text Categorization, Madison, WI, USA, 26–27 June 1998; Volume 752, pp. 41–48.

46. Berchialla, P.; Foltran, F.; Gregori, D. Naïve Bayes Classifiers with Feature Selection to Predict Hospitalization and Complications Due to Objects Swallowing and Ingestion among European Children. Saf. Sci. 2013, 51, 1–5.

47. Feng, X.; Li, S.; Yuan, C.; Zeng, P.; Sun, Y. Prediction of Slope Stability Using Naive Bayes Classifier. KSCE J. Civ. Eng. 2018, 22, 941–950.

48. Lato, M.; Kemeny, J.; Harrap, R.; Bevan, G. Rock Bench: Establishing a Common Repository and Standards for Assessing Rockmass Characteristics Using LiDAR and Photogrammetry. Comput. Geosci. 2013, 50, 106–114.

49. Kemeny, J.; Turner, K.; Norton, B. LIDAR for Rock Mass Characterization: Hardware, Software, Accuracy and Best-Practices. In Laser and Photogrammetric Methods for Rock Face Characterization; ARMA: Golden, CO, USA, 2006; pp. 49–62.

50. Huang, C.M.; Tseng, Y.-H. Plane Fitting Methods of LIDAR Point Cloud. In Proceedings of the 29th Asian Conference on Remote Sensing 2008, ACRS 2008, Colombo, Sri Lanka, 10–14 November 2008; pp. 1925–1930.

51. Tsangaratos, P.; Ilia, I. Landslide Susceptibility Mapping Using a Modified Decision Tree Classifier in the Xanthi Perfection, Greece. Landslides 2016, 13, 305–320.

52. Campello, R.J.; Moulavi, D.; Sander, J. Density-Based Clustering Based on Hierarchical Density Estimates. In Proceedings of the Pacific-Asia Conference on Knowledge Discovery and Data Mining, Gold Coast, Australia, 14–17 April 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 160–172.

53. Satopaa, V.; Albrecht, J.; Irwin, D.; Raghavan, B. Finding a “Kneedle” in a Haystack: Detecting Knee Points in System Behavior. In Proceedings of the 2011 31st International Conference on Distributed Computing Systems Workshops, Minneapolis, MN, USA, 20–24 June 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 166–171.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).