An Adaptive Weighted Method for Remote Sensing Image Retrieval with Noisy Labels

Abstract

1. Introduction

2. Related Works

2.1. CBRSIR Based on Deep Learning

2.2. Noise Robust Loss Functions

2.3. Multilayer Perceptron for Remote Sensing

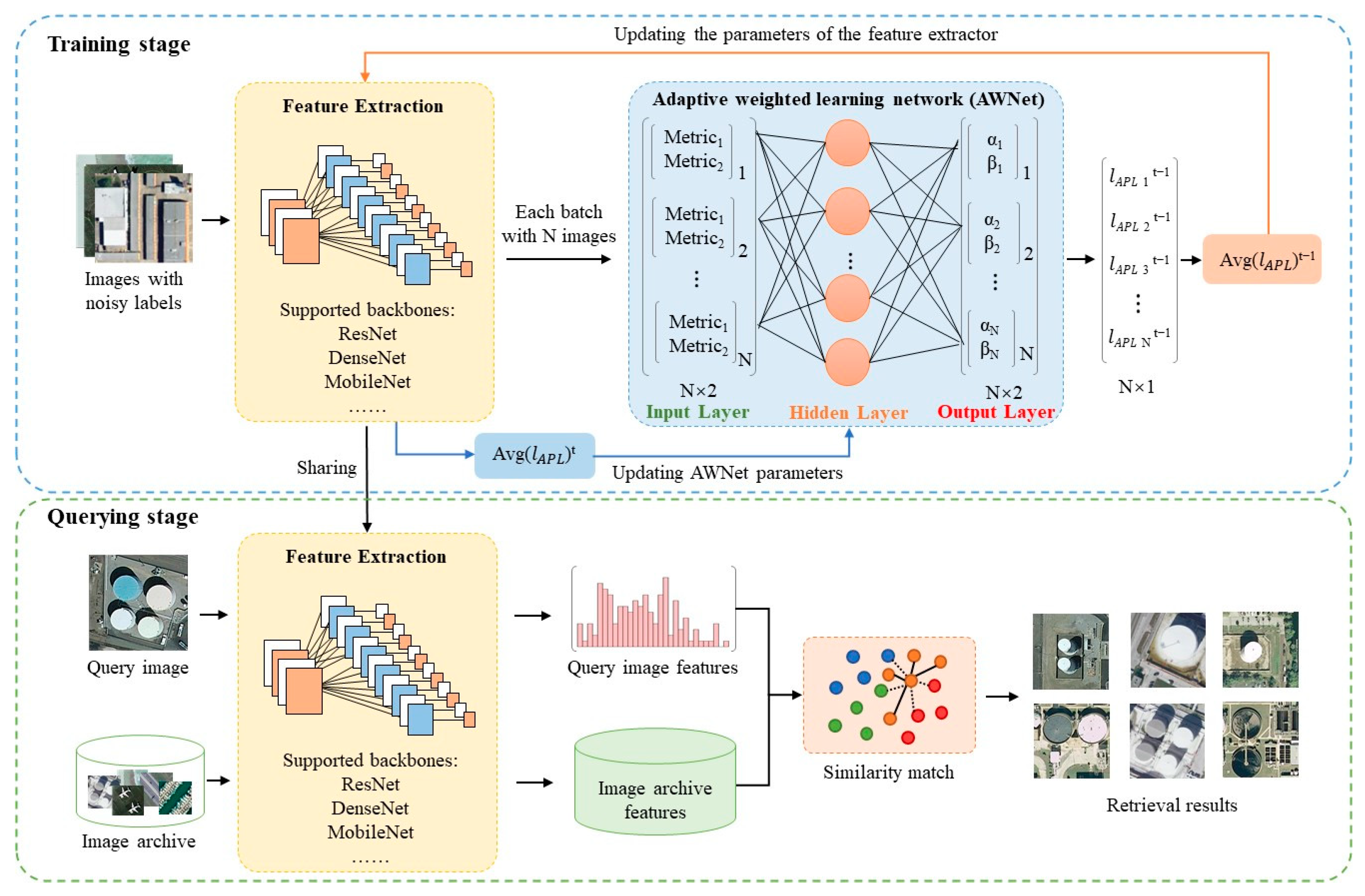

3. Methodology

3.1. The Active Passive Loss (APL)

3.2. Framework of Our Method

3.3. Two Metrics

3.4. Adaptive Weighted Learning Network

| Algorithm 1: Training process of AWNet and the classifier |
|---|
| Input: Data: training dataset with noisy labels Dn; Component: classifier f(·) and AWNet w(·); Parameter: α, β, pretraining epochs tp, max epochs tmax, and iterations per epoch e. |
| Output: Well-trained f(·) and w(·). |
| 1. i = 1; |
| 2. while i < tmax + 1 do: |
| 3. if i < tp + 1, then: |
| 4. j = 1, α = 1, β = 1; |
| 5. while j < e + 1 do: |
| 6. Train the classifier f(·) by Dn; |
| 7. Update f(·) according to Equation (1); |
| 8. j = j + 1; |
| 9. end while |
| 10. else: |
| 11. k = 1; |
| 12. while k < e + 1 do: |
| 13. Train the classifier f(·) by Dn; |
| 14. Calculate RES by Equation (4) and δ by Equation (5); |
| 15. Get α and β by Equation (6); |
| 16. Update f(·) according to Equation (1); |
| 17. Train the classifier f(·) by Dn; |
| 18. Update w(·) according to Equation (1); |
| 19. k = k + 1; |
| 20. end while |
| 21. i = i + 1; |
| 22. end while |
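
The control flow of Algorithm 1 can be sketched in Python as follows. This is only a minimal sketch under stated assumptions: the three callbacks (`update_classifier`, `predict_weights`, `update_awnet`) are hypothetical placeholders for the updates governed by Equations (1) and (4)–(6), which are not reproduced here, and the name `train_schedule` is illustrative rather than the authors' code.

```python
from typing import Callable, List, Tuple

Weights = Tuple[float, float]


def train_schedule(
    t_max: int,
    t_p: int,
    iters_per_epoch: int,
    update_classifier: Callable[[float, float], None],  # hypothetical: Equation (1) step for f
    predict_weights: Callable[[], Weights],              # hypothetical: Equations (4)-(6)
    update_awnet: Callable[[], None],                     # hypothetical: update step for w
) -> List[Weights]:
    """Run the two-phase schedule and return the (alpha, beta) used at each classifier update."""
    used: List[Weights] = []
    for epoch in range(1, t_max + 1):              # steps 1-2 and 21-22
        if epoch <= t_p:                            # step 3: pretraining epochs
            alpha, beta = 1.0, 1.0                  # step 4: fixed weights
            for _ in range(iters_per_epoch):        # steps 5-9
                update_classifier(alpha, beta)      # steps 6-7
                used.append((alpha, beta))
        else:                                       # step 10: adaptive epochs
            for _ in range(iters_per_epoch):        # steps 12-20
                alpha, beta = predict_weights()     # steps 14-15
                update_classifier(alpha, beta)      # step 16
                used.append((alpha, beta))
                update_awnet()                      # steps 17-18
    return used


if __name__ == "__main__":
    counts = {"classifier": 0, "awnet": 0}

    def fake_classifier_update(alpha: float, beta: float) -> None:
        counts["classifier"] += 1

    def fake_awnet_update() -> None:
        counts["awnet"] += 1

    history = train_schedule(
        t_max=4, t_p=2, iters_per_epoch=3,
        update_classifier=fake_classifier_update,
        predict_weights=lambda: (0.5, 2.0),  # stand-in for AWNet's output
        update_awnet=fake_awnet_update,
    )
    print(history)
    print(counts)
```

The stubs only count calls; in a real setting they would wrap optimizer steps for f(·) and w(·).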

4. Experiments and Analysis

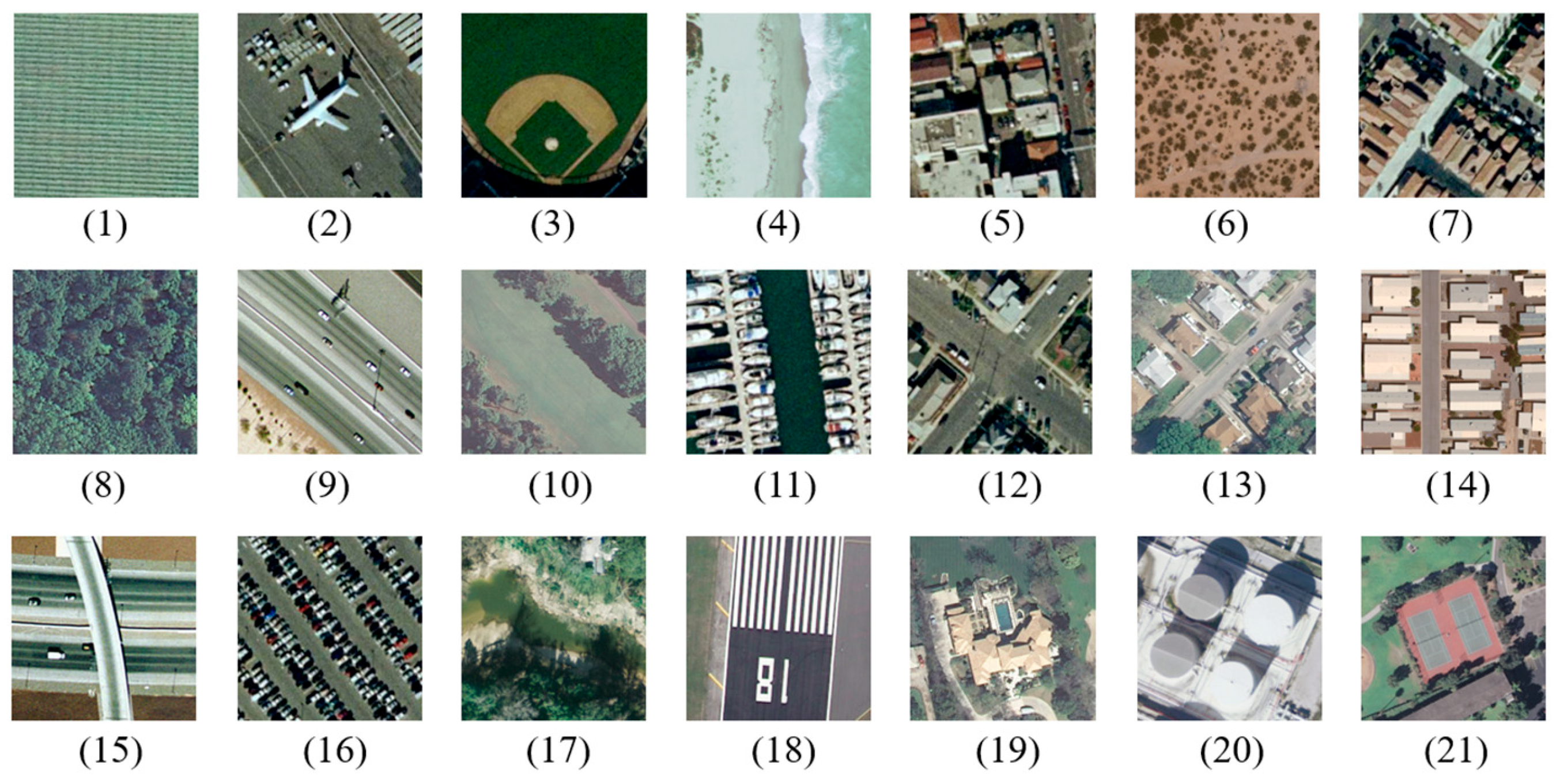

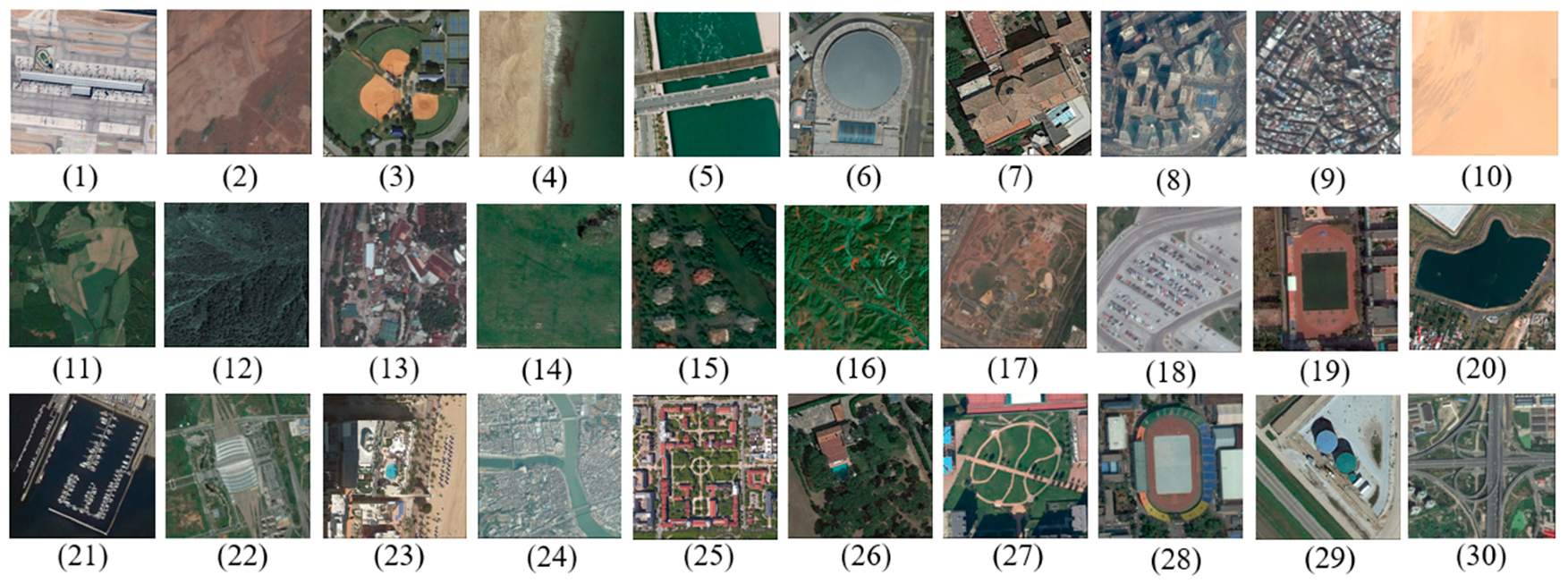

4.1. Datasets and Experimental Setup

4.2. Experiments on Adaptive Weights versus Manual Weights

4.3. Comparison with Various SOTA Losses

- (1) MAE [13]

- (2) GCE [14]

- (3) RCE [15]: It can be seen as the reverse of CE, because it swaps the positions of the predicted probability and the one-hot encoding in the cross-entropy formula. However, it also converges slowly.

- (4) SCE [15]: It combines the CE loss with RCE. RCE provides its robustness and CE provides its convergence stability. However, it requires tuning two hyperparameters.

- (5) ACE [16]: It uses the predicted probabilities pt of the true labels of the samples to adaptively determine the two weights in SCE. As the pt of a sample tends toward zero, it gradually transforms into RCE.

- (6) AUL [31]

- (7) AEL [31]: It is an asymmetric noise-robust loss function, which assumes that the noise distribution in the data satisfies the clean-label-domination assumption.
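
The relationship between CE, RCE, and SCE described in the list can be illustrated with a short Python sketch. It assumes the usual convention of replacing log 0 on the one-hot target with a negative constant (`LOG_ZERO = -4.0` here is an assumed value, not one taken from this paper), and the parameter names `ce_weight` and `rce_weight` are illustrative names for SCE's two hyperparameters.

```python
import math
from typing import Sequence

LOG_ZERO = -4.0  # assumed stand-in for log(0) on the one-hot target


def cross_entropy(probs: Sequence[float], label: int, eps: float = 1e-12) -> float:
    """CE = -sum_k q_k * log(p_k), which reduces to -log(p_label) for a one-hot q."""
    return -math.log(max(probs[label], eps))


def reverse_cross_entropy(probs: Sequence[float], label: int,
                          log_zero: float = LOG_ZERO) -> float:
    """RCE = -sum_k p_k * log(q_k): prediction p and one-hot target q swap roles."""
    log_q = [0.0 if k == label else log_zero for k in range(len(probs))]
    return -sum(p * lq for p, lq in zip(probs, log_q))


def symmetric_cross_entropy(probs: Sequence[float], label: int,
                            ce_weight: float = 1.0, rce_weight: float = 1.0) -> float:
    """SCE = ce_weight * CE + rce_weight * RCE (two hyperparameters to tune)."""
    return (ce_weight * cross_entropy(probs, label)
            + rce_weight * reverse_cross_entropy(probs, label))


if __name__ == "__main__":
    for probs in ([0.9, 0.1], [0.5, 0.5], [0.01, 0.99]):
        print(probs,
              round(cross_entropy(probs, 0), 3),
              round(reverse_cross_entropy(probs, 0), 3),
              round(symmetric_cross_entropy(probs, 0), 3))
```

Under this convention RCE never exceeds `-LOG_ZERO`, whereas CE grows without bound as the predicted probability of the labeled class approaches zero, which is one way to see why a mislabeled sample influences RCE less than CE.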

4.4. Efficiency and Backbone Analysis

4.5. Ablation Experiment of Two Metrics

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Li, D.-R.; Shao, Z.-F.; Zhang, R.-Q. Advances of geo-spatial intelligence at LIESMARS. Geo-Spat. Inf. Sci. 2020, 23, 40–51.
2. Ye, F.-M.; Xiao, H.; Zhao, X.-Q.; Dong, M.; Luo, W.; Min, W.-D. Remote sensing image retrieval using convolutional neural network features and weighted distance. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1535–1539.
3. Dubey, S.R. A Decade Survey of Content Based Image Retrieval Using Deep Learning. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 2687–2704.
4. Hou, D.; Wang, S.; Tian, X.; Xing, H. PCLUDA: A Pseudo-Label Consistency Learning-Based Unsupervised Domain Adaptation Method for Cross-Domain Optical Remote Sensing Image Retrieval. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5600314.
5. Liu, E.; Zhang, X.; Xu, X.; Fan, S. Slice-feature based deep hashing algorithm for remote sensing image retrieval. Infrared Phys. Technol. 2020, 107, 103299.
6. Hou, D.-Y.; Miao, Z.-L.; Xing, H.-Q.; Wu, H. V-RSIR: An Open Access Web-Based Image Annotation Tool for Remote Sensing Image Retrieval. IEEE Access 2019, 7, 83852–83862.
7. Hou, D.-Y.; Miao, Z.-L.; Xing, H.-Q.; Wu, H. Two novel benchmark datasets from ArcGIS and Bing World Imagery for remote sensing image retrieval. Int. J. Remote Sens. 2021, 42, 220–238.
8. Jin, P.; Xia, G.-S.; Hu, F.; Lu, Q.-K.; Zhang, L.-P. AID++: An updated version of AID on scene classification. In Proceedings of the 2018 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Valencia, Spain, 22–27 July 2018; pp. 4721–4724.
9. Song, H.; Kim, M.; Park, D.; Shin, Y.; Lee, J.-G. Learning from Noisy Labels with Deep Neural Networks: A Survey. IEEE Trans. Neural Netw. Learn. Syst. 2022, 34, 8135–8153.
10. Miao, Q.; Wu, X.; Xu, C.; Zuo, W.; Meng, Z. On better detecting and leveraging noisy samples for learning with severe label noise. Pattern Recognit. 2023, 136, 109210.
11. Li, J.; Wong, Y.; Zhao, Q.; Kankanhalli, M.S. Learning to Learn from Noisy Labeled Data. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 5046–5054.
12. Kang, J.; Fernandez-Beltran, R.; Kang, X.; Ni, J.; Plaza, A. Noise-Tolerant Deep Neighborhood Embedding for Remotely Sensed Images with Label Noise. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2551–2562.
13. Ghosh, A.; Kumar, H.; Sastry, P.S. Robust loss functions under label noise for deep neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.
14. Zhang, Z.; Sabuncu, M. Generalized cross entropy loss for training deep neural networks with noisy labels. In Proceedings of the NeurIPS, Montréal, QC, Canada, 4–5 December 2018.
15. Wang, Y.; Ma, X.; Chen, Z.; Luo, Y.; Yi, J.; Bailey, J. Symmetric Cross Entropy for Robust Learning with Noisy Labels. In Proceedings of the 2019 IEEE ICCV, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 322–330.
16. Chen, H.D.; Tan, W.M.; Li, J.C.; Guan, P.F.; Wu, L.J.; Yan, B.; Li, J.; Wang, Y.F. Adaptive Cross Entropy for ultrasmall object detection in Computed Tomography with noisy labels. Comput. Biol. Med. 2022, 147, 105763.
17. Ma, X.-J.; Huang, H.-X.; Wang, Y.-S.; Romano, S.; Erfani, S.; Bailey, J. Normalized Loss Functions for Deep Learning with Noisy Labels. In Proceedings of the ICML, PMLR, Vienna, Austria, 12–18 July 2020; pp. 6543–6553.
18. Zhou, W.X.; Newsam, S.; Li, C.M.; Shao, Z.F. PatternNet: A benchmark dataset for performance evaluation of remote sensing image retrieval. ISPRS J. Photogramm. Remote Sens. 2018, 145, 197–209.
19. Wang, S.; Hou, D.; Xing, H. A Self-Supervised-Driven Open-Set Unsupervised Domain Adaptation Method for Optical Remote Sensing Image Scene Classification and Retrieval. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5605515.
20. Hou, D.; Wang, S.; Tian, X.; Xing, H. An Attention-Enhanced End-to-End Discriminative Network with Multiscale Feature Learning for Remote Sensing Image Retrieval. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 8245–8255.
21. Wang, S.Y.; Hou, D.Y.; Xing, H.Q. A novel multi-attention fusion network with dilated convolution and label smoothing for remote sensing image retrieval. Int. J. Remote Sens. 2022, 43, 1306–1322.
22. Li, Y.; Zhang, Y.; Zhu, Z. Error-tolerant deep learning for remote sensing image scene classification. IEEE Trans. Cybern. 2020, 51, 1756–1768.
23. Damodaran, B.B.; Flamary, R.; Seguy, V.; Courty, N. An entropic optimal transport loss for learning deep neural networks under label noise in remote sensing images. Comput. Vis. Image Underst. 2020, 191, 102863.
24. Manwani, N.; Sastry, P.S. Noise Tolerance Under Risk Minimization. IEEE Trans. Cybern. 2013, 43, 1146–1151.
25. Jiang, J.; Ma, J.; Wang, Z.; Chen, C.; Liu, X. Hyperspectral Image Classification in the Presence of Noisy Labels. IEEE Trans. Geosci. Remote Sens. 2019, 57, 851–865.
26. Lee, K.H.; He, X.; Zhang, L.; Yang, L. CleanNet: Transfer Learning for Scalable Image Classifier Training with Label Noise. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5447–5456.
27. Van Rooyen, B.; Menon, A.; Williamson, R.C. Learning with symmetric label noise: The importance of being unhinged. In Proceedings of the Advances in Neural Information Processing Systems 28 (NIPS 2015), Montreal, QC, Canada, 7–12 December 2015; Volume 28.
28. Charoenphakdee, N.; Lee, J.; Sugiyama, M. On symmetric losses for learning from corrupted labels. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 961–970.
29. Zhou, X.; Liu, X.; Wang, C.; Zhai, D.; Jiang, J.; Ji, X. Learning with noisy labels via sparse regularization. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 72–81.
30. Kim, Y.; Yim, J.; Yun, J.; Kim, J. NLNL: Negative Learning for Noisy Labels. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 101–110.
31. Zhou, X.; Liu, X.; Zhai, D.; Jiang, J.; Ji, X. Asymmetric Loss Functions for Noise-Tolerant Learning: Theory and Applications. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 8094–8109.
32. Mittal, P.; Sharma, A.; Singh, R. Deformable patch-based-multi-layer perceptron Mixer model for forest fire aerial image classification. J. Appl. Remote Sens. 2023, 17, 022203.
33. Akbari, D.; Ashrafi, A.; Attarzadeh, R. A New Method for Object-Based Hyperspectral Image Classification. J. Indian Soc. Remote Sens. 2022, 50, 1761–1771.
34. Gong, N.; Zhang, C.; Zhou, H.; Zhang, K.; Wu, Z.; Zhang, X. Classification of hyperspectral images via improved cycle-MLP. IET Comput. Vis. 2022, 16, 468–478.
35. Huang, K.; Tian, C.; Li, G. Bidirectional mutual guidance transformer for salient object detection in optical remote sensing images. Int. J. Remote Sens. 2023, 44, 4016–4033.
36. Wang, L.; Li, H. HMCNet: Hybrid Efficient Remote Sensing Images Change Detection Network Based on Cross-Axis Attention MLP and CNN. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5236514.
37. Shi, G.; Mei, Y.; Wang, X.; Yang, Q. DAHT-Net: Deformable Attention-Guided Hierarchical Transformer Network Based on Remote Sensing Image Change Detection. IEEE Access 2023, 11, 103033–103043.
38. Tolstikhin, I.O.; Houlsby, N.; Kolesnikov, A.; Beyer, L.; Zhai, X.; Unterthiner, T.; Yung, J.; Steiner, A.; Keysers, D.; Uszkoreit, J. MLP-Mixer: An all-MLP architecture for vision. Adv. Neural Inf. Process. Syst. 2021, 34, 24261–24272.
39. Ding, X.; Xia, C.; Zhang, X.; Chu, X.; Han, J.; Ding, G. RepMLP: Re-parameterizing convolutions into fully-connected layers for image recognition. arXiv 2021, arXiv:2105.01883.
40. Zhang, C.; Pan, X.; Li, H.; Gardiner, A.; Sargent, I.; Hare, J.; Atkinson, P.M. A hybrid MLP-CNN classifier for very fine resolution remotely sensed image classification. ISPRS J. Photogramm. Remote Sens. 2018, 140, 133–144.
41. Hospedales, T.; Antoniou, A.; Micaelli, P.; Storkey, A. Meta-Learning in Neural Networks: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 5149–5169.
42. Zhao, Q.-H.; Hu, W.; Huang, Y.-Y.; Zhang, F. P-DIFF plus: Improving learning classifier with noisy labels by Noisy Negative Learning loss. Neural Netw. 2021, 144, 1–10.
43. Yang, Y.; Newsam, S. Bag-of-visual-words and spatial extensions for land-use classification. In Proceedings of the 18th SIGSPATIAL International Conference on Advances in Geographic Information Systems, San Jose, CA, USA, 2–5 November 2010; pp. 270–279.
44. Xia, G.-S.; Hu, J.-W.; Hu, F.; Shi, B.-G.; Bai, X.; Zhong, Y.-F.; Zhang, L.-P.; Lu, X.-Q. AID: A Benchmark Data Set for Performance Evaluation of Aerial Scene Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3965–3981.
45. Cheng, G.; Han, J.-W.; Lu, X.-Q. Remote Sensing Image Scene Classification: Benchmark and State of the Art. Proc. IEEE 2017, 105, 1865–1883.
46. He, K.-M.; Zhang, X.-Y.; Ren, S.-Q.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
47. Huang, G.; Liu, Z.; Maaten, L.V.D.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.
48. Howard, A.; Sandler, M.; Chen, B.; Wang, W.; Chen, L.C.; Tan, M.; Chu, G.; Vasudevan, V.; Zhu, Y.; Pang, R.; et al. Searching for MobileNetV3. In Proceedings of the 2019 IEEE ICCV, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

| Loss / Noise rate of UCMD | Clean (0%) | 5% | 10% | 20% | 30% |
|---|---|---|---|---|---|
| CE | 97.09 ± 0.37 | 94.37 ± 0.02 | 89.81 ± 0.40 | 78.89 ± 0.87 | 74.58 ± 2.99 |
| 0.1 NCE + 0.1 RCE | 95.82 ± 0.24 | 95.49 ± 0.85 | 94.16 ± 0.64 | 90.81 ± 0.66 | 84.91 ± 1.45 |
| 0.1 NCE + 1 RCE | 96.63 ± 0.24 | 95.12 ± 1.02 | 93.59 ± 1.00 | 91.01 ± 0.66 | 85.79 ± 0.91 |
| 0.1 NCE + 10 RCE | 96.64 ± 0.48 | 95.01 ± 0.74 | 93.54 ± 0.15 | 92.33 ± 0.67 | 87.48 ± 0.52 |
| 0.1 NCE + 100 RCE | 96.66 ± 0.39 | 94.01 ± 0.73 ² | 94.13 ± 0.96 | 89.91 ± 0.67 ² | 84.14 ± 1.04 ² |
| 1 NCE + 0.1 RCE | 95.44 ± 0.50 ² | 95.46 ± 0.66 | 94.61 ± 0.60 | 92.56 ± 0.31 ¹ | 88.25 ± 0.74 |
| 1 NCE + 1 RCE | 95.95 ± 0.37 | 95.63 ± 0.17 | 94.18 ± 0.39 | 90.12 ± 0.50 | 86.17 ± 0.90 |
| 1 NCE + 10 RCE | 96.64 ± 0.49 | 95.58 ± 0.39 | 94.50 ± 0.84 | 91.67 ± 0.93 | 86.39 ± 0.59 |
| 1 NCE + 100 RCE | 96.80 ± 0.45 | 95.33 ± 0.98 | 94.56 ± 0.45 | 91.74 ± 0.29 | 87.90 ± 0.68 |
| 10 NCE + 0.1 RCE | 96.77 ± 0.70 | 95.74 ± 0.29 ¹ | 93.28 ± 0.71 ² | 91.10 ± 1.67 | 89.19 ± 0.67 ¹ |
| 10 NCE + 1 RCE | 96.82 ± 0.59 ¹ | 95.65 ± 0.42 | 94.79 ± 0.71 ¹ | 92.43 ± 1.26 | 89.15 ± 0.68 |
| 10 NCE + 10 RCE | 96.54 ± 0.57 | 95.73 ± 0.93 | 94.63 ± 0.79 | 91.23 ± 1.19 | 87.42 ± 1.69 |
| 10 NCE + 100 RCE | 96.47 ± 0.57 | 94.78 ± 0.61 | 94.33 ± 0.72 | 91.28 ± 1.45 | 86.44 ± 0.99 |
| A-NCE + RCE (ours) | 97.00 ± 0.87 * | 96.51 ± 0.40 * | 95.02 ± 0.51 * | 93.00 ± 0.32 * | 90.01 ± 0.88 * |

| Loss / Noise rate of AID | Clean (0%) | 5% | 10% | 20% | 30% |
|---|---|---|---|---|---|
| CE | 93.17 ± 0.94 | 90.07 ± 0.39 | 84.15 ± 0.46 | 71.95 ± 0.15 | 69.45 ± 3.41 |
| 0.1 NCE + 0.1 RCE | 92.58 ± 0.62 | 92.06 ± 0.40 | 90.90 ± 0.48 | 88.38 ± 0.18 | 85.99 ± 0.61 |
| 0.1 NCE + 1 RCE | 92.89 ± 0.48 | 92.13 ± 0.27 | 91.30 ± 0.22 ¹ | 88.06 ± 0.47 | 85.71 ± 0.32 |
| 0.1 NCE + 10 RCE | 92.34 ± 0.44 | 91.69 ± 0.22 | 90.82 ± 0.28 | 88.63 ± 0.30 | 84.79 ± 0.82 |
| 0.1 NCE + 100 RCE | 92.93 ± 0.42 | 91.91 ± 0.21 | 89.80 ± 0.46 ² | 88.34 ± 0.63 | 84.28 ± 0.20 ² |
| 1 NCE + 0.1 RCE | 92.55 ± 0.27 | 92.43 ± 0.11 ¹ | 91.01 ± 0.44 | 89.74 ± 0.43 | 86.94 ± 0.37 ¹ |
| 1 NCE + 1 RCE | 92.39 ± 0.32 | 91.98 ± 0.48 | 91.05 ± 0.53 | 89.37 ± 0.36 | 86.53 ± 0.11 |
| 1 NCE + 10 RCE | 91.95 ± 0.30 ² | 92.36 ± 0.32 | 91.25 ± 0.33 | 89.06 ± 0.75 | 85.50 ± 0.32 |
| 1 NCE + 100 RCE | 92.60 ± 0.26 | 92.38 ± 0.57 | 90.93 ± 0.54 | 87.85 ± 0.88 ² | 85.51 ± 0.63 |
| 10 NCE + 0.1 RCE | 93.25 ± 0.17 ¹ | 92.41 ± 0.75 | 90.26 ± 0.30 | 89.30 ± 0.99 | 86.78 ± 1.57 |
| 10 NCE + 1 RCE | 92.76 ± 0.31 | 92.27 ± 0.12 | 90.89 ± 0.13 | 89.79 ± 0.68 ¹ | 86.53 ± 0.35 |
| 10 NCE + 10 RCE | 92.94 ± 0.32 | 92.40 ± 0.11 | 91.08 ± 0.12 | 89.66 ± 0.30 | 86.63 ± 0.30 |
| 10 NCE + 100 RCE | 92.89 ± 0.33 | 91.58 ± 0.13 ² | 91.15 ± 0.35 | 88.48 ± 0.40 | 85.14 ± 0.98 |
| A-NCE + RCE (ours) | 93.68 ± 0.38 * | 92.74 ± 0.05 * | 92.37 ± 0.22 * | 90.22 ± 0.70 * | 87.17 ± 0.54 * |

| Loss / Noise rate of NWPU | Clean (0%) | 5% | 10% | 20% | 30% |
|---|---|---|---|---|---|
| CE | 90.99 ± 0.75 | 85.96 ± 0.82 | 81.57 ± 1.84 | 80.41 ± 0.52 | 77.07 ± 0.03 |
| 0.1 NCE + 0.1 RCE | 89.55 ± 0.42 | 89.47 ± 0.53 | 87.92 ± 0.63 | 86.41 ± 0.26 | 84.15 ± 0.26 |
| 0.1 NCE + 1 RCE | 89.33 ± 0.28 | 89.47 ± 0.12 | 88.04 ± 0.54 | 86.99 ± 0.42 | 84.16 ± 0.59 |
| 0.1 NCE + 10 RCE | 89.58 ± 0.36 | 88.68 ± 0.38 | 87.86 ± 0.29 ² | 86.73 ± 0.57 | 84.33 ± 0.31 |
| 0.1 NCE + 100 RCE | 88.92 ± 0.61 | 88.67 ± 0.73 | 88.34 ± 0.28 | 85.80 ± 0.16 ² | 84.90 ± 0.62 |
| 1 NCE + 0.1 RCE | 90.13 ± 0.27 | 88.96 ± 0.75 | 88.91 ± 0.41 | 87.32 ± 0.42 | 85.27 ± 0.36 |
| 1 NCE + 1 RCE | 89.89 ± 0.14 | 89.04 ± 0.47 | 88.32 ± 0.26 | 87.11 ± 0.11 | 83.82 ± 0.45 ² |
| 1 NCE + 10 RCE | 89.46 ± 0.61 | 88.84 ± 0.40 | 88.53 ± 0.15 | 85.93 ± 0.09 | 84.18 ± 0.52 |
| 1 NCE + 100 RCE | 88.89 ± 0.12 ² | 88.74 ± 0.39 | 88.02 ± 0.55 | 86.38 ± 0.33 | 85.02 ± 0.40 |
| 10 NCE + 0.1 RCE | 90.17 ± 0.83 ¹ | 89.68 ± 0.19 ¹ | 89.62 ± 0.35 ¹ | 87.70 ± 0.11 ¹ | 85.51 ± 0.78 ¹ |
| 10 NCE + 1 RCE | 89.62 ± 0.31 | 88.69 ± 0.19 | 88.60 ± 0.64 | 87.01 ± 0.50 | 84.69 ± 0.58 |
| 10 NCE + 10 RCE | 89.17 ± 0.25 | 88.60 ± 0.44 ² | 88.11 ± 0.10 | 86.38 ± 0.35 | 84.74 ± 0.36 |
| 10 NCE + 100 RCE | 89.76 ± 0.10 | 88.96 ± 0.24 | 88.19 ± 0.87 | 86.79 ± 0.34 | 84.26 ± 0.40 |
| A-NCE + RCE (ours) | 90.60 ± 0.52 * | 90.12 ± 0.53 * | 90.04 ± 0.55 * | 88.37 ± 0.29 * | 86.88 ± 0.45 * |

| Loss / Noise rate | Clean (0%) | 5% | 10% | 20% | 30% |
|---|---|---|---|---|---|
| NCE + RCE | 98.05 ± 0.16 | 97.58 ± 0.44 | 96.58 ± 0.24 | 95.92 ± 0.52 | 95.27 ± 0.31 |
| A-NCE + RCE (ours) | 98.57 ± 0.40 | 98.70 ± 0.49 * | 97.72 ± 0.09 * | 96.97 ± 0.68 * | 96.68 ± 0.42 * |

| Weight of APL: α | Weight of APL: β | NCE + RCE | NCE + MAE | NFL + RCE | NFL + MAE |
|---|---|---|---|---|---|
| 0.1 | 0.1 | 90.81 ± 0.66 | 90.75 ± 1.36 | 91.10 ± 0.62 | 90.05 ± 0.18 ² |
| 0.1 | 1 | 91.01 ± 0.66 | 91.67 ± 0.79 | 91.86 ± 1.07 | 92.07 ± 0.54 ¹ |
| 0.1 | 10 | 92.33 ± 0.67 | 90.70 ± 0.42 | 91.52 ± 1.10 | 90.87 ± 0.59 |
| 0.1 | 100 | 89.91 ± 0.67 ² | 91.59 ± 1.48 | 91.24 ± 0.13 | 90.77 ± 0.39 |
| 1 | 0.1 | 92.56 ± 0.31 ¹ | 91.34 ± 2.49 | 91.39 ± 0.13 | 91.15 ± 1.94 |
| 1 | 1 | 90.12 ± 0.50 | 91.29 ± 0.23 | 90.57 ± 0.51 | 91.61 ± 0.68 |
| 1 | 10 | 91.67 ± 0.93 | 91.76 ± 1.31 ¹ | 90.00 ± 0.71 ² | 91.33 ± 1.08 |
| 1 | 100 | 91.74 ± 0.29 | 90.48 ± 0.95 | 91.43 ± 0.98 | 90.76 ± 0.64 |
| 10 | 0.1 | 91.10 ± 1.67 | 88.73 ± 1.84 ² | 90.88 ± 2.83 | 90.23 ± 0.26 |
| 10 | 1 | 92.43 ± 1.26 | 91.68 ± 0.51 | 91.88 ± 0.41 ¹ | 91.95 ± 0.62 |
| 10 | 10 | 91.23 ± 1.19 | 91.64 ± 1.04 | 91.82 ± 0.74 | 90.42 ± 1.41 |
| 10 | 100 | 91.28 ± 1.45 | 90.51 ± 1.54 | 90.94 ± 1.37 | 91.12 ± 0.85 |
| A-APL (ours) | | 93.00 ± 0.32 * | 92.28 ± 0.88 * | 92.03 ± 0.79 * | 92.54 ± 0.29 * |

| Methods | mAP | Methods | mAP |
|---|---|---|---|
| CE | 80.41 ± 0.52 | NCE + RCE [17] | 87.70 ± 0.11 |
| MAE [13] | 86.53 ± 0.13 | NCE + MAE [17] | 85.82 ± 0.53 |
| GCE [14] | 87.97 ± 0.48 | NFL + RCE [17] | 88.04 ± 0.32 |
| RCE [15] | 86.39 ± 0.37 | NFL + MAE [17] | 84.07 ± 1.20 |
| SCE [15] | 86.51 ± 0.19 | A-NCE + RCE (ours) | 88.37 ± 0.29 * |
| ACE [16] | 86.52 ± 0.33 | A-NCE + MAE (ours) | 88.95 ± 0.45 * |
| AUL [31] | 86.74 ± 0.82 | A-NFL + RCE (ours) | 88.76 ± 0.25 * |
| AEL [31] | 85.79 ± 0.08 | A-NFL + MAE (ours) | 88.49 ± 0.26 * |

| Backbone | Loss | mAP | Training Time (min) | FLOPs (G) |
|---|---|---|---|---|
| ResNet18 | CE | 69.48 ± 1.54 | 1.05 | 2.375 |
| ResNet18 | NCE + RCE | 87.21 ± 1.15 | 1.07 × 12 | 2.375 |
| ResNet18 | A-NCE + RCE | 87.78 ± 0.78 * | 1.40 | 2.375 |
| ResNet50 | CE | 78.89 ± 0.87 | 1.83 | 5.368 |
| ResNet50 | NCE + RCE | 92.56 ± 0.31 | 1.88 × 12 | 5.368 |
| ResNet50 | A-NCE + RCE | 93.00 ± 0.32 * | 2.98 | 5.368 |
| ResNet101 | CE | 79.55 ± 0.79 | 2.73 | 10.230 |
| ResNet101 | NCE + RCE | 89.72 ± 1.34 | 3.33 × 12 | 10.230 |
| ResNet101 | A-NCE + RCE | 91.94 ± 0.67 * | 4.75 | 10.230 |
| DenseNet169 | CE | 87.46 ± 0.17 | 2.40 | 4.436 |
| DenseNet169 | NCE + RCE | 94.93 ± 0.55 | 2.40 × 12 | 4.436 |
| DenseNet169 | A-NCE + RCE | 95.45 ± 0.50 * | 4.10 | 4.436 |
| MobileNetV3_large | CE | 81.18 ± 0.86 | 0.95 | 0.292 |
| MobileNetV3_large | NCE + RCE | 89.63 ± 0.86 | 0.97 × 12 | 0.292 |
| MobileNetV3_large | A-NCE + RCE | 90.99 ± 0.33 * | 1.37 | 0.292 |
| MobileNetV3_small | CE | 75.43 ± 1.13 | 0.68 | 0.076 |
| MobileNetV3_small | NCE + RCE | 84.59 ± 0.27 | 0.68 × 12 | 0.076 |
| MobileNetV3_small | A-NCE + RCE | 87.80 ± 0.15 * | 0.88 | 0.076 |
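
The "× 12" entries in the training-time column can be turned into totals with a little arithmetic. The sketch below assumes that the factor 12 stands for one training run per manually chosen (α, β) pair (3 values of α times 4 values of β, as in the manual-weight tables); that reading is an interpretation, not something stated in the table itself. The per-run times are copied from the table, and the names `TIMES_MIN`, `N_MANUAL_RUNS`, and `total_times` are illustrative.

```python
from typing import Dict, Tuple

# (per-run time of NCE + RCE, time of A-NCE + RCE), in minutes, from the table above.
TIMES_MIN: Dict[str, Tuple[float, float]] = {
    "ResNet18": (1.07, 1.40),
    "ResNet50": (1.88, 2.98),
    "ResNet101": (3.33, 4.75),
    "DenseNet169": (2.40, 4.10),
    "MobileNetV3_large": (0.97, 1.37),
    "MobileNetV3_small": (0.68, 0.88),
}

N_MANUAL_RUNS = 12  # assumed: 3 alpha values x 4 beta values


def total_times(manual_per_run: float, adaptive: float,
                n_runs: int = N_MANUAL_RUNS) -> Tuple[float, float]:
    """Return (total manual-search time, ratio of manual total to adaptive time)."""
    manual_total = manual_per_run * n_runs
    return manual_total, manual_total / adaptive


if __name__ == "__main__":
    for backbone, (manual, adaptive) in TIMES_MIN.items():
        total, ratio = total_times(manual, adaptive)
        print(f"{backbone}: manual search {total:.2f} min, "
              f"adaptive {adaptive:.2f} min, ratio {ratio:.1f}x")
```

Under this reading, a single adaptive run costs more than a single manual run but considerably less than the full manual search.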

| Metrics | 20% | 30% |
|---|---|---|
| Prediction probability | 91.17 ± 0.58 | 88.52 ± 0.05 |
| Entropy | 91.83 ± 0.37 | 86.82 ± 1.95 |
| S | 91.39 ± 0.74 | 87.38 ± 1.50 |
| | 91.14 ± 0.59 | 87.94 ± 0.78 |
| RES | 92.44 ± 0.36 | 86.47 ± 1.42 |
| | 93.00 ± 0.32 * | 90.01 ± 0.88 * |

| tp = 1 | tp = 2 | tp = 3 | tp = 4 | tp = 5 |
|---|---|---|---|---|
| 91.48 ± 1.05 | 92.25 ± 0.77 | 93.00 ± 0.32 * | 92.88 ± 0.47 | 92.55 ± 0.68 |

| The Number of Neurons in Each Hidden Layer | 1 Hidden Layer | 2 Hidden Layers | 3 Hidden Layers |
|---|---|---|---|
| 50 | 90.65 ± 0.72 | 91.99 ± 0.75 | 91.37 ± 0.97 |
| 100 | 93.00 ± 0.32 * | 91.41 ± 0.27 | 92.06 ± 0.18 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tian, X.; Hou, D.; Wang, S.; Liu, X.; Xing, H. An Adaptive Weighted Method for Remote Sensing Image Retrieval with Noisy Labels. Appl. Sci. 2024, 14, 1756. https://doi.org/10.3390/app14051756
