Abstract

The presence of unknown heavy-tailed noise can lead to inaccuracies in measurements and processes, resulting in instability in nonlinear systems. Various estimation methods for heavy-tailed noise exist. However, these methods often trade estimation accuracy for algorithm complexity and parameter sensitivity. To tackle this challenge, we introduce an improved variational Bayesian (VB)-based adaptive iterative extended Kalman filter (VBAIEKF). In this VB framework, the inverse Wishart distribution is used as the prior for the state prediction covariance matrix. The system state and noise parameter posterior distributions are then iteratively updated for adaptive estimation. Furthermore, we adaptively adjust the iterative extended Kalman filter (IEKF) parameters to enhance sensitivity and filtering accuracy, thus ensuring robust prediction estimation. The algorithm was validated in a two-dimensional target tracking simulation and a nonlinear numerical univariate nonstationary growth model (UNGM) simulation. Compared to the existing algorithms RKF-ML and GA-VB, our method improved position and velocity estimation accuracy by 21.81% and 22.11%, respectively, and converged 49.04% faster. These results highlight the method’s reliability and adaptability.

1. Introduction

Given the extensive use of nonlinear systems [1,2] in various domains, including radar [3,4], communication [5,6], navigation [7,8], control [9,10], finance [11], and statistics [12,13], the nonlinear dynamics of these systems require sophisticated filtering techniques for robust and precise estimation [14,15]. However, the effectiveness of these filtering techniques significantly diminishes in the presence of heavy-tailed noise and errors. According to previous research [16,17], this leads to a marked decline in estimation accuracy.

Numerous filters have been proposed to address the issue of heavy-tailed noise impacting system tracking. Notable among these are the robust variational Bayesian filter [18,19,20], robust Student’s t-based filters (RSTFs) [21,22], Huber’s M-estimation-based filters [23,24], and maximum correntropy-based filters [25]. While these filters demonstrate enhanced tracking performance and effectively mitigate the negative impact of heavy-tailed noise, they face challenges in precise parameter selection and computational complexity. Accurate parameter calibration can be time-consuming and, combined with the computational demands of these methods, may limit their practical applicability in real-time scenarios. Consequently, the parameter selection process needs to be streamlined and the computational complexity reduced.

As a key technique in nonlinear recursive filtering, the extended Kalman filter (EKF) is widely recognized and utilized [16,26,27]. Its popularity stems from several advantages: it does not require calculating nominal trajectories, its methodology is straightforward, and it is easy to implement. However, enhancing the functionality and effectiveness of state estimation filters in real-world applications remains crucial. The Huber-based Kalman filter [28,29], for example, shows resilience to outliers and superior performance in non-Gaussian settings. Yet, it faces increased computational complexity, requires fine-tuning of parameters, and may be less efficient in Gaussian environments. The interacting multiple model (IMM)-based Kalman filter [30,31] offers improved estimation accuracy and robustness amidst model uncertainties, but this benefit comes with increased computational and implementation complexity, as well as reliance on precise model selection and meticulous parameter tuning. A robust Gaussian approximate filter with unknown scale matrices and degrees of freedom parameters (GA-VB) was introduced in [32]. In that algorithm, the variational Bayesian (VB) method updates the posterior probability density functions (PDFs) of the state and of each parameter individually, which reduces the overall complexity but increases sensitivity to parameters. In [33], a robust state estimation algorithm (RKF-ML) models heavy-tailed noise with the multivariate Laplace (ML) distribution. This method avoids manually selecting the degrees of freedom (DOF) parameter and is therefore less sensitive to free parameters. However, it requires a VB approach to adaptively estimate the ML distribution parameters in a closed recursive form, adding to the overall complexity. These algorithms underscore the trade-off between complexity and sensitivity in algorithm design, highlighting the need for an approach that carefully balances these factors to achieve good state estimation performance under challenging conditions.

Addressing parameter sensitivity and algorithmic complexity, this paper introduces a new approach: an improved variational Bayesian (VB)-based adaptive iterative extended Kalman filter (VBAIEKF). This method aims to enhance the accuracy of filters handling imprecise heavy-tailed noise while simultaneously addressing parameter sensitivity and reducing algorithmic complexity. Initially, the inverse Wishart (IW) distribution is utilized to model inaccurate measurement noise, treating the system state and measurement noise covariance as parameters to be estimated. To estimate these parameters, the variational Bayesian (VB) method computes the joint posterior probability density functions (PDFs) for these parameters, thereby accomplishing the state estimation of the system. This approach effectively addresses variability in both noise and prediction error covariances. The algorithm exhibits the following highlights:

(1) The approach is characterized by a notable insensitivity to its parameters, possessing the ability to withstand variations in parameter values.

(2) The approach exhibits lower computational complexity, rendering it suitable for real-time applications.

(3) This approach exhibits significant adaptability and reliability when confronted with an uncertain environment.

The algorithm proposed in this article addresses the challenge of concurrently handling noise fluctuations and prediction error covariance in the state estimation process. Compared with two optimized algorithms, GA-VB [32] and RKF-ML [33], the VBAIEKF shows low sensitivity to parameters, low complexity, fast convergence, and good adaptability, which makes it a promising solution for precise state estimation in scenarios with heavy-tailed noise.

The remainder of this paper is organized as follows. Section 2 describes the procedural workflow of the VBAIEKF algorithm, where we derive the filter and emphasize its characteristics. In Section 3, we compare the proposed VBAIEKF with the GA-VB [32] and RKF-ML [33] algorithms on two-dimensional target tracking with uncertain heavy-tailed noise and on the nonlinear numerical UNGM. The comparison demonstrates the superior performance of the VBAIEKF. Section 4 provides concluding remarks that summarize the findings and implications of the study.

2. Computational Procedure

2.1. System Model

Consider the following noisy nonlinear system shown by the state space model:

where is an observable yet unmeasurable state vector, is a vector of measurements that may be observed, and are recognized nonlinear functions. To simplify computations and ensure the convergence of the Taylor series expansion, assume that and are, without loss of generality, analytic functions that are sufficiently smooth and differentiable. The covariance matrices of the random variables and are denoted as and , respectively. The process noise is a Gaussian process with a mean of zero and an unknown covariance matrix . The measurement noise, denoted as , is a type of Gaussian white noise with a mean of zero. The covariance matrix associated with this noise is unknown. First, a Taylor Series expansion (TSE) [34], which can be stated as the following, is used to linearize locally the nonlinear functions and :

The requirement for bounded higher-order terms (H.O.T) is crucial to guarantee the convergence and accuracy of the Taylor series expansion. Following the application of the Taylor series expansion, the nonlinear functions and are approximated by linear functions with error terms and , respectively.
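The local linearization described above can be illustrated numerically. The following is a minimal sketch, not part of the original derivation: it approximates the Jacobian by central differences and evaluates the first-order Taylor approximation. The helper names `numerical_jacobian` and `first_order_approx`, as well as the test function `example_func`, are hypothetical and chosen only for illustration.

```python
import math


def numerical_jacobian(func, x0, eps=1e-6):
    """Central-difference Jacobian of func: R^n -> R^m at x0 (lists of floats)."""
    n = len(x0)
    m = len(func(x0))
    jac = [[0.0] * n for _ in range(m)]
    for j in range(n):
        x_plus = list(x0)
        x_minus = list(x0)
        x_plus[j] += eps
        x_minus[j] -= eps
        f_plus = func(x_plus)
        f_minus = func(x_minus)
        for i in range(m):
            jac[i][j] = (f_plus[i] - f_minus[i]) / (2.0 * eps)
    return jac


def first_order_approx(func, x0, x):
    """First-order Taylor approximation func(x0) + J(x0) (x - x0)."""
    jac = numerical_jacobian(func, x0)
    f0 = func(x0)
    return [
        f0[i] + sum(jac[i][j] * (x[j] - x0[j]) for j in range(len(x0)))
        for i in range(len(f0))
    ]


def example_func(x):
    # Hypothetical smooth nonlinear function used only for illustration.
    return [x[0] ** 2 + x[1], math.sin(x[1])]


J = numerical_jacobian(example_func, [1.0, 0.0])  # close to [[2, 1], [0, 1]]
approx = first_order_approx(example_func, [1.0, 0.0], [1.1, 0.05])
exact = example_func([1.1, 0.05])
```

The gap between `approx` and `exact` is the neglected higher-order remainder, which is small only when the evaluation point stays close to the expansion point.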

When there is heavy-tailed noise, the covariance matrix of the process and measurement noise is usually imprecise. To resolve this problem, one can utilize a variational Bayesian (VB) method [19,35,36], and subsequently apply an iterative extended Kalman filter to estimate the state vector . This work presents an improved variational Bayesian iterative extended Kalman filtering (VBAIEKF) technique designed for tracking estimation.

2.2. Prior Distributions

This subsection describes the updating process for the prior distributions.

In the Kalman filtering paradigm, the likelihood probability density function (PDF) and the one-step predicted PDF are Gaussian distributions:

where the Gaussian distribution has the following mean and variance: , , and its PDF is:

The predicted state vector and the related one-step predicted covariance matrix can be represented as follows:

where and denote the state estimate and estimation error covariance matrix at time , respectively. The coefficient in Equation (9) is imprecise, and the actual process noise covariance matrix is not known.

In Bayesian statistics, the inverse Wishart (IW) distribution is the conjugate prior for the covariance matrix of a Gaussian distribution with known mean. Variational Bayesian (VB) approaches offer a closed-form analytical solution to this intricate problem. Let us consider the Wishart distribution , where is the inverse matrix of a positive definite matrix . The matrix is distributed according to the inverse Wishart (IW) distribution [37].

where represents the degrees of freedom, represents a positive definite scale matrix, represents the dimension of , represents the multivariate gamma function, and trace represents the trace function. The expected value of the matrix , if it follows the IW distribution, is . Because and are covariance matrices of Gaussian PDFs, and can be modeled with IW distributions:

where and denote the degrees of freedom and scale matrices of , respectively, and denote the degrees of freedom and scale matrices of . Then, it is necessary to determine the values of and .

The nominal error covariance matrix can be set equal to the mean of matrix .

Using , we arrive at the following:

where n stands for the dimension of the state , and stands for the nominal process noise covariance. Let:

where is the correction factor. The outcome of entering Equation (14) into (13) is:

The prior probability density function (PDF) of the measurement noise covariance matrix is modeled as an inverse Wishart (IW) distribution. The posterior PDF of this matrix should likewise follow an IW distribution, as stated in Equation (12):

In real-world applications, the dynamic model of the noise covariance in Equation (17) is unknown: . To preserve and propagate the approximate posterior from the previous time step, we select the forgetting factor and formulate the prior parameters as follows:

The forgetting factor reflects the degree of temporal variation, which ranges from 0 to 1, inclusive. A value of indicates the variance at a steady state, with lower values of corresponding to higher frequencies of variations throughout time.
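The two prior constructions in this subsection can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's exact equations: it uses the parameterisation common in VB adaptive Kalman filters, where the IW prior of the predicted covariance has degrees of freedom `n + tau + 1` and scale `tau * p_nominal` (so that its mean equals the nominal covariance), and where the forgetting factor `rho` rescales the previous posterior of the measurement noise covariance without changing its mean. The names `tau`, `rho`, and all function names are hypothetical.

```python
def iw_mean(dof, scale, dim):
    """Mean of an inverse Wishart distribution, valid for dof > dim + 1."""
    if dof <= dim + 1:
        raise ValueError("mean exists only for dof > dim + 1")
    return [[s / (dof - dim - 1) for s in row] for row in scale]


def prior_for_predicted_cov(p_nominal, tau):
    """IW prior whose mean equals the nominal predicted covariance.

    Assumed parameterisation: dof = n + tau + 1, scale = tau * p_nominal,
    with tau > 0 so that the mean exists.
    """
    n = len(p_nominal)
    dof = n + tau + 1
    scale = [[tau * p for p in row] for row in p_nominal]
    return dof, scale


def propagate_noise_prior(dof_prev, scale_prev, rho, dim):
    """Spread the previous IW posterior with a forgetting factor rho in (0, 1]."""
    dof = rho * (dof_prev - dim - 1) + dim + 1
    scale = [[rho * s for s in row] for row in scale_prev]
    return dof, scale


p_nominal = [[2.0, 0.5], [0.5, 1.0]]
t_prior, T_prior = prior_for_predicted_cov(p_nominal, tau=3.0)
p_mean = iw_mean(t_prior, T_prior, dim=2)  # reproduces p_nominal

u_prior, U_prior = propagate_noise_prior(6.0, [[4.0, 0.0], [0.0, 4.0]], rho=0.9, dim=2)
r_mean = iw_mean(u_prior, U_prior, dim=2)  # same mean as before propagation
```

With `rho = 1` the previous posterior is carried over unchanged; smaller values keep the mean but lower the degrees of freedom, so the prior becomes less informative and the filter can follow faster changes in the noise statistics.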

2.3. Posterior PDFs

Here, we describe the process of updating the posterior PDFs.

In order to calculate , , and , the joint posterior probability density function needs to be determined. Since there is no analytical solution available for this joint posterior PDF, the variational Bayesian (VB) approach is employed to derive a free-form factorized approximate PDF for:

where corresponds to the approximate posterior PDF of . The VB approximation is obtained by minimizing the Kullback–Leibler divergence (KLD) [38] between the true joint posterior PDF and the approximate posterior PDF :

Therefore, it can also be written as:

Additionally, Equations (22) and (27) can be obtained. represents the approximate PDF of at the i-th iteration, and is defined as follows:

where represents the expected value of the variable at the i-th iteration.
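For reference, the standard mean-field VB result that this step relies on can be written as below. The notation is assumed here for illustration only: $\Xi$ collects the unknown quantities (the state, the predicted error covariance, and the measurement noise covariance), $\theta$ is any one element of $\Xi$, $\Xi^{(-\theta)}$ denotes the remaining elements, $z_{1:k}$ are the measurements up to time $k$, and $c_{\theta}$ is a constant that does not depend on $\theta$.

```latex
\begin{aligned}
\mathrm{KLD}\big(q(\Xi)\,\|\,p(\Xi \mid z_{1:k})\big)
  &= \int q(\Xi)\,\log\frac{q(\Xi)}{p(\Xi \mid z_{1:k})}\,\mathrm{d}\Xi,\\
\log q^{(i+1)}(\theta)
  &= \mathrm{E}^{(i)}_{\Xi^{(-\theta)}}\big[\log p(\Xi, z_{1:k})\big] + c_{\theta}.
\end{aligned}
```

Because each factor depends on expectations taken under the other factors, the factors cannot be solved for jointly and are instead updated in turn by fixed-point iteration.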

The IW distribution with the parameter of freedom and scale matrix can be regarded as :

where is the parameter of freedom and is the scale matrix:

Its comprehensive form is:

where has the following definition:

Equation (26) shows that follows an IW distribution, where is the degrees-of-freedom parameter and is the scale matrix.

The scale matrix and the degrees-of-freedom parameter are given by:

where can be represented as:

After the th iteration update, the one-step predicted PDF and likelihood PDF may be described by the following equations:

The corrected prediction error covariance matrix and the corrected measurement noise covariance matrix are formulated as follows:

Equations (33)–(36) can be transformed into a Gaussian distribution with a mean of and a variance of by considering the following:

The predicted value and prediction error covariance are then calculated using IEKF at the th iteration as follows:

is reached. The following expressions provide variational approximations of posterior PDFs after N iterations:

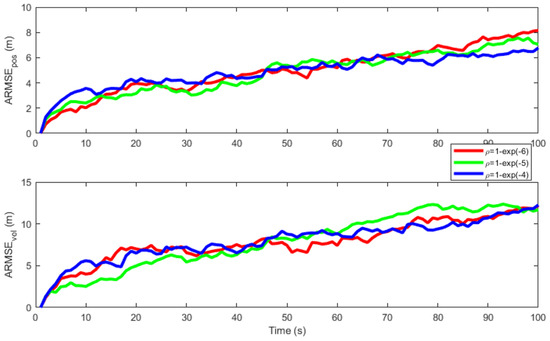

2.4. Algorithm 1

The recursive operation of the improved adaptive iterative extended Kalman filter based on variational Bayesian (VBAIEKF) is depicted in the pseudocode in Algorithm 1.

Algorithm 1. One time step of the proposed VBAIEKF

Input:

Output:
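The recursion can be sketched for a scalar state as follows. This is an illustrative sketch under stated assumptions, not the authors' Algorithm 1: it combines the update structure commonly used in VB adaptive Kalman filters with IW priors (inverse gamma in the scalar case) and an iterated EKF measurement update that is relinearised at the current iterate. The function name `vb_iekf_step`, the argument names, and the default values of `tau`, `rho`, and `n_iter` are hypothetical.

```python
def vb_iekf_step(x_prev, p_prev, u_prev, U_prev, z, f, f_jac, h, h_jac,
                 q_nominal, tau=3.0, rho=1.0, n_iter=10):
    """One time step of a scalar VB adaptive iterated EKF (illustrative sketch).

    The predicted error variance P and the measurement noise variance R get
    inverse Wishart priors (n = m = 1 here). Requires n_iter >= 1.
    """
    n = m = 1
    # Time update with the nominal process noise variance.
    x_pred = f(x_prev)
    F = f_jac(x_prev)
    p_nominal = F * p_prev * F + q_nominal
    # Prior parameters: IW prior for P centred on p_nominal, forgetting for R.
    t_prior = n + tau + 1.0
    T_prior = tau * p_nominal
    u_prior = rho * (u_prev - m - 1.0) + m + 1.0
    U_prior = rho * U_prev
    # Variational fixed-point iterations.
    x, p = x_pred, p_nominal
    for _ in range(n_iter):
        H = h_jac(x)
        # Update q(P) with the expected squared prediction error.
        A = p + (x - x_pred) ** 2
        t_post, T_post = t_prior + 1.0, T_prior + A
        # Update q(R) with the expected squared measurement residual.
        B = (z - h(x)) ** 2 + H * p * H
        u_post, U_post = u_prior + 1.0, U_prior + B
        # Plug-in estimates 1 / E[1/P] and 1 / E[1/R].
        p_hat = T_post / (t_post - n - 1.0)
        r_hat = U_post / (u_post - m - 1.0)
        # Iterated EKF measurement update, relinearised at the current x.
        K = p_hat * H / (H * p_hat * H + r_hat)
        x_new = x_pred + K * (z - h(x) - H * (x_pred - x))
        p = (1.0 - K * H) * p_hat
        x = x_new
    return x, p, u_post, U_post


# Usage on a hypothetical linear toy problem (identity dynamics and measurement).
x_est, p_est, u_est, U_est = vb_iekf_step(
    x_prev=0.0, p_prev=1.0, u_prev=4.0, U_prev=2.0, z=1.0,
    f=lambda s: s, f_jac=lambda s: 1.0,
    h=lambda s: s, h_jac=lambda s: 1.0,
    q_nominal=0.1,
)
```

In the toy call, the estimate moves from the prediction toward the measurement, and the returned `u_est` and `U_est` serve as the noise prior inputs of the next time step.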

3. Illustrative Example

3.1. Two-Dimensional Target Tracking

In order to showcase the effectiveness of the suggested algorithm, we will employ the conventional scenario of two-dimensional target tracking and conduct a comparative analysis with various relevant approaches [38]. The state equation is represented as Equation (44). The observation quantity includes the target’s distance and bearing, and the observation equation is given as Equation (45):

where is the state of the target at moment is the position state, is the velocity state in x and y directions. is the sampling time. is the zero-mean Gaussian process noise with the covariance .
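The tracking model can be sketched as follows. This is a minimal illustration that assumes a constant-velocity motion model with state ordering `[x, y, vx, vy]` and a sensor located at the origin that measures distance and bearing; the ordering, the sensor location, and all function names are assumptions made for the example.

```python
import math


def cv_transition_matrix(dt):
    """Constant-velocity transition matrix for the assumed state [x, y, vx, vy]."""
    return [
        [1.0, 0.0, dt, 0.0],
        [0.0, 1.0, 0.0, dt],
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0, 1.0],
    ]


def propagate(state, dt):
    """Noise-free state propagation x_k = F x_{k-1}."""
    F = cv_transition_matrix(dt)
    return [sum(F[i][j] * state[j] for j in range(4)) for i in range(4)]


def range_bearing(state):
    """Measurement function: distance and bearing of the target from the origin."""
    x, y = state[0], state[1]
    return [math.hypot(x, y), math.atan2(y, x)]


def range_bearing_jacobian(state):
    """Jacobian of range_bearing with respect to [x, y, vx, vy].

    Undefined when the target is exactly at the sensor position.
    """
    x, y = state[0], state[1]
    r2 = x * x + y * y
    r = math.sqrt(r2)
    return [
        [x / r, y / r, 0.0, 0.0],
        [-y / r2, x / r2, 0.0, 0.0],
    ]


next_state = propagate([0.0, 0.0, 1.0, 2.0], dt=1.0)
measurement = range_bearing([3.0, 4.0, 0.0, 0.0])
H = range_bearing_jacobian([3.0, 4.0, 0.0, 0.0])
```

Only the measurement function is nonlinear here, so the Jacobian `H` is the quantity that the iterated EKF re-evaluates at each iteration.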

The initial position and velocity of the state are as , , with covariance . The typical heavy-tailed noise is selected as the measurement noise, and the Gaussian mixture distribution is used:

where is the measurement noise covariance, and set . In the simulation experiment, the improved robust GA-VB [32], RKF-ML [33], and the proposed VBAIEKF were used to test the filtering effect. In the proposed VBAIEKF and the improved robust GA-VB and RKF-ML, the individual parameters were set as follows: parameter , parameter , parameter , parameter , forgetting factor , adjustment factor , and the number of iterations . All algorithms were coded with MATLAB R2020b on a computer.
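The Gaussian-mixture measurement noise used above can be sampled as in the following sketch. The mixture weights and the variance inflation are assumptions chosen for illustration (an outlier probability of 0.1 and a variance inflated by a factor of 100), not values taken from this experiment, and the scalar form is a simplification.

```python
import random


def sample_heavy_tailed(rng, sigma, outlier_prob=0.1, inflation=100.0):
    """Draw one scalar sample from a two-component zero-mean Gaussian mixture.

    With probability outlier_prob the variance is inflated by `inflation`.
    """
    if rng.random() < outlier_prob:
        return rng.gauss(0.0, sigma * inflation ** 0.5)
    return rng.gauss(0.0, sigma)


rng = random.Random(0)
samples = [sample_heavy_tailed(rng, sigma=1.0) for _ in range(50000)]
variance = sum(s * s for s in samples) / len(samples)
fourth_moment = sum(s ** 4 for s in samples) / len(samples)
kurtosis = fourth_moment / variance ** 2  # equals 3 for a pure Gaussian
```

Under these assumed settings the theoretical variance is 0.9 + 0.1 × 100 = 10.9 times the nominal variance, and the kurtosis is well above the Gaussian value of 3, which is what makes a filter tuned to the nominal covariance unreliable.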

To compare the state estimation accuracy of the aforementioned nonlinear filtering algorithms, the root mean square error (RMSE) [39] and the average root mean square error (ARMSE) of the state vector are chosen to represent the filtering accuracy, and are defined as follows:

where is the true position, is the estimated position, and N denotes the number of times the algorithm is run.
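These metrics can be computed as in the sketch below. It assumes one common definition, in which the RMSE at each time step averages the squared position error over the Monte Carlo runs, and the ARMSE additionally averages over the time steps before taking the square root; the function names and the data layout are hypothetical.

```python
import math


def rmse_per_step(truth, estimates):
    """RMSE at each time step over Monte Carlo runs.

    truth[s][k] and estimates[s][k] are (x, y) pairs for run s and time step k.
    """
    runs = len(truth)
    steps = len(truth[0])
    out = []
    for k in range(steps):
        sq = 0.0
        for s in range(runs):
            dx = truth[s][k][0] - estimates[s][k][0]
            dy = truth[s][k][1] - estimates[s][k][1]
            sq += dx * dx + dy * dy
        out.append(math.sqrt(sq / runs))
    return out


def armse(truth, estimates):
    """Root of the squared error averaged over both runs and time steps."""
    per_step = rmse_per_step(truth, estimates)
    return math.sqrt(sum(r * r for r in per_step) / len(per_step))


# Two runs, two time steps, hypothetical numbers.
truth = [[(0.0, 0.0), (1.0, 1.0)], [(0.0, 0.0), (1.0, 1.0)]]
estimates = [[(3.0, 4.0), (1.0, 1.0)], [(0.0, 0.0), (1.0, 2.0)]]
rmse_curve = rmse_per_step(truth, estimates)
overall = armse(truth, estimates)
```

The same functions apply to the velocity components by passing velocity pairs instead of position pairs.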

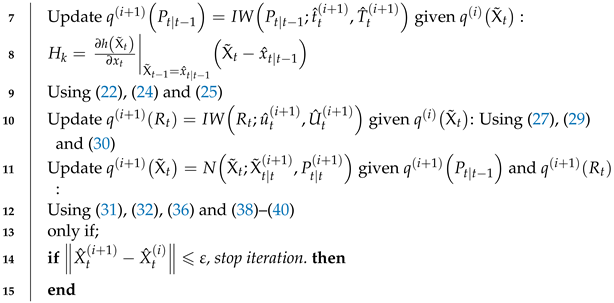

In Figure 1, the impact of iteration numbers on the VBAIEKF algorithm is examined. The iteration count influences the proposed VBAIEKF in a distinct manner, with values set at . The observed trend indicates that as the number of iterations increases, the convergence speed gradually decreases, while the computational load correspondingly increases. Analysis of the ARMSE in position and velocity reveals a clear pattern; the ARMSE values demonstrate that accuracy improves with an increase in iterations, ultimately converging. When the iteration count reaches , the ARMSE values tend to stabilize. This iterative improvement is significant, despite the anticipated increase in computational demands.

Figure 1.

and for different iterations N.

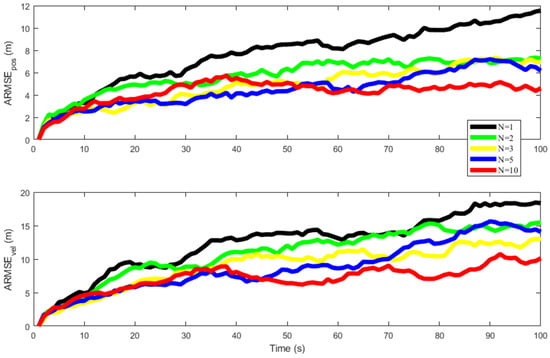

In Figure 2, a detailed examination is conducted to measure the impact of the adjustment factor on the performance of the proposed VBAIEKF algorithm. The adjustment factor systematically varies within the range of . The results demonstrate that, throughout the entire random process, the influence of the adjustment factor remains within a stable range and affects the operation of the algorithm only slightly. The VBAIEKF thus exhibits low sensitivity to this parameter. At , the algorithm achieves an optimal balance between error minimization and precision enhancement. Therefore, was selected for the algorithm comparison experiments.

Figure 2.

and for different adjustment factor .

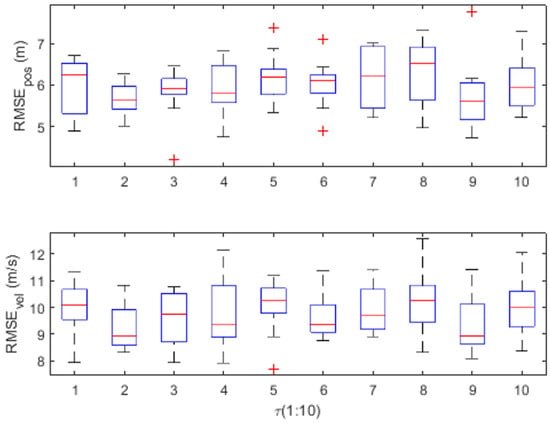

In Figure 3, we evaluate the impact of the forgetting factor on the performance of the proposed VBAIEKF algorithm by systematically varying it within a certain range. When , the impact of the forgetting factor significantly diminishes, thereby accelerating the convergence speed, reducing the RMSE, and improving the estimated trajectory. This configuration appears to achieve the best balance between convergence speed, accuracy, and overall stability. Therefore, we chose for the subsequent comparative analysis.

Figure 3.

and for different forgetting factor .

Table 1 summarizes the relative performance of the proposed VBAIEKF, GA-VB [32], and RKF-ML [33] at various levels of noise. It lists the average root mean square error (ARMSE) for position and velocity and the average execution time. Compared to GA-VB and RKF-ML, the VBAIEKF shows a decrease in both the average position ARMSE and the average velocity ARMSE, indicating improved accuracy in position and velocity estimation. Furthermore, the algorithm reduces the average execution time, highlighting its computational efficiency. Under various noise levels, using example noise as a reference, the convergence ranges under noises and are computed. From Table 1, it is evident that the VBAIEKF algorithm under different levels of noise exhibits a position convergence range of +0.058%∼+0.58% and a velocity convergence range of −0.17%∼+0.21%, significantly smaller than those of RKF-ML and GA-VB. This indicates that the algorithm possesses excellent convergence and adaptability, and that it is a reliable and effective solution that surpasses both GA-VB and RKF-ML across different noise levels.

Table 1.

Position ARMSE, velocity ARMSE, and the average execution time for the RKF-ML, GA-VB, and VBAIEKF under different noise levels.

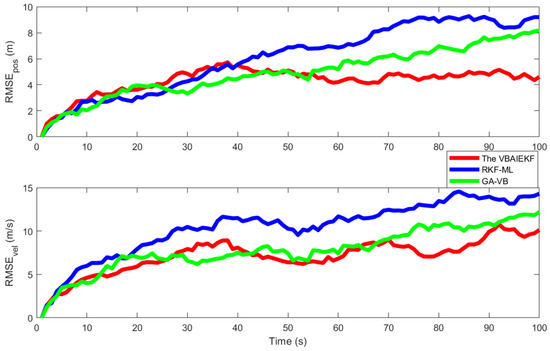

Figure 4 presents a comparative analysis of the proposed VBAIEKF algorithm against the existing optimization algorithms GA-VB and RKF-ML under the noise and a specific number of iterations (). The comparison is based on the average root mean square error (ARMSE) of the velocity and position estimates. Compared to RKF-ML and GA-VB, the VBAIEKF improved the position and velocity estimation accuracy by 21.81% and 22.11%, respectively. Table 1 shows that under noise , the convergence speed was enhanced by 49.04%, demonstrating accelerated convergence and significantly enhanced estimation precision. This highlights the algorithm’s capacity to provide reliable and accurate estimates.

Figure 4.

and for different algorithms.

3.2. Nonlinear Numerical UNGM

This paper employs the nonlinear numerical univariate nonstationary growth model (UNGM) [40] to demonstrate the effectiveness of the VBAIEKF. The state-space equations of the UNGM are as follows:

where and represent measurement noise with a mean of zero and covariance, and represents non-Gaussian process noise .
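For orientation, the UNGM benchmark in its widely used form can be sketched as below. The coefficients (0.5, 25, 8, 1.2, and 1/20) and the time-index convention are the standard benchmark values and are assumed here; they are not confirmed by the text above. Noise terms are omitted, and the function names are hypothetical.

```python
import math


def ungm_state(x_prev, k):
    """Noise-free UNGM state transition (standard benchmark coefficients)."""
    return (0.5 * x_prev
            + 25.0 * x_prev / (1.0 + x_prev ** 2)
            + 8.0 * math.cos(1.2 * (k - 1)))


def ungm_state_jacobian(x_prev):
    """Derivative of the state transition with respect to x_prev."""
    return 0.5 + 25.0 * (1.0 - x_prev ** 2) / (1.0 + x_prev ** 2) ** 2


def ungm_measurement(x):
    """Noise-free UNGM measurement function."""
    return x ** 2 / 20.0


def ungm_measurement_jacobian(x):
    """Derivative of the measurement function with respect to x."""
    return x / 10.0


# Short noise-free trajectory from a hypothetical initial state.
trajectory = [0.1]
for k in range(1, 6):
    trajectory.append(ungm_state(trajectory[-1], k))
measurements = [ungm_measurement(x) for x in trajectory]
```

The quadratic measurement makes the sign of the state ambiguous, and the measurement Jacobian vanishes at zero, which is why this model is a demanding test for linearization-based filters.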

The measurement noise is selected to be the typical heavy-tailed noise [25].

The symbol represents the measurement noise covariance matrix, which is defined as . The parameters are adjusted to optimize the algorithm’s performance. Setting the forgetting factor significantly reduces its impact, and selecting greatly enhances the algorithm’s convergence speed. Setting the tuning factor yields an optimal balance between error minimization and precision enhancement in the algorithm comparison experiments. Performing 10 iterations decreases the average root mean square error (ARMSE), resulting in a more precise estimated trajectory. During the simulation experiment, GA-VB, RKF-ML, and VBAIEKF are employed to evaluate the filtering efficacy.

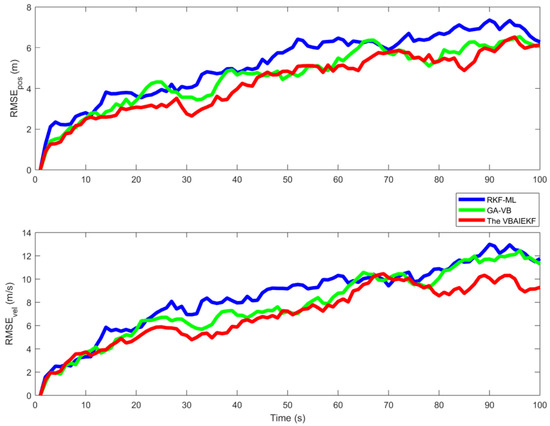

Figure 5 compares and for the GA-VB, RKF-ML, and VBAIEKF algorithms. The results indicate that the state estimate of the VBAIEKF is more precise, with RMSE values lower than those of GA-VB and RKF-ML. It can more accurately estimate the expected state covariance and the actual state covariance. The improvement in estimation accuracy for both position and velocity signifies its reliability.

Figure 5.

and for different algorithms.

4. Conclusions

We have successfully developed an improved adaptive iterative extended Kalman filter based on variational Bayesian for nonlinear situations (VBAIEKF). This innovative approach not only enhances the accuracy of state estimation and broadens the applicability of the algorithm in dynamic and complex environments but also addresses the issue of algorithm parameter sensitivity. The foundation of this algorithm is the principle of variational Bayesian, which significantly boosts its capability to handle uncertainties and effectively resolve nonlinear problems. Through extensive simulation experiments, the convergence of the algorithm under various conditions, including different noise levels and outlier scenarios, has been confirmed. Our research results emphasize the algorithm’s resilience, demonstrating its ability to maintain stability and accurate estimation in challenging and unpredictable situations. Compared with existing algorithms, the VBAIEKF shows advantages in multiple aspects:

(1) The VBAIEKF achieves enhanced estimation accuracy, with a 21.81% improvement in position estimation precision and a 22.11% improvement in velocity estimation.

(2) The VBAIEKF exhibits superior convergence characteristics and adaptability to different noise levels, with a position convergence range of +0.058%∼+0.58% and a velocity convergence range of −0.17%∼+0.21%.

(3) The VBAIEKF demonstrates low sensitivity to various parameters, addressing the impact of parameters on the outcomes.

(4) The VBAIEKF attains heightened operational efficiency with lower complexity, resulting in a 49.04% increase in convergence speed.

In future research, validation of robustness and stability will be pursued on two fronts: one involving theoretical substantiation, and the other focusing on the real variability of the experimental process and the treatment of noise anomalies.

Author Contributions

Conceptualization, L.W. and Q.F.; methodology, L.W. and Q.X.; validation, Q.F. and Q.X.; data analysis, L.W. and Q.F.; writing—original draft preparation, L.W.; writing—review and editing, Q.F., L.W., Q.X. and Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (No. 62373067) and the Postgraduate Scientific Research Innovation Project of Hunan Province (No. CX20220921).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available in the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Shen, Q.; Jiang, B.; Shi, P.; Zhao, J. Cooperative Adaptive Fuzzy Tracking Control for Networked Unknown Nonlinear Multiagent Systems with Time-Varying Actuator Faults. IEEE Trans. Fuzzy Syst. 2014, 22, 494–504.

2. Wang, J.; Liu, Z.; Chen, H.; Zhang, Y.; Zhang, D.; Peng, C. Trajectory Tracking Control of a Skid-Steer Mobile Robot Based on Nonlinear Model Predictive Control with a Hydraulic Motor Velocity Mapping. Appl. Sci. 2024, 14, 122.

3. Petrov, E.P.; Kharina, N.L. Digital Radar Imaging by Nonlinear Filtering Methods of Discrete and Continuous Parameters (Amplitude and Delay) of Reflected PM Signals. In Proceedings of the 2020 Dynamics of Systems, Mechanisms and Machines (Dynamics), Omsk, Russia, 10–12 November 2020; pp. 1–6.

4. Senel, N.; Kefferpütz, K.; Doycheva, K.; Elger, G. Multi-Sensor Data Fusion for Real-Time Multi-Object Tracking. Processes 2023, 11, 501.

5. Rahimi, F.; Rezaei, H. A Distributed Fault Estimation Approach for a Class of Continuous-Time Nonlinear Networked Systems Subject to Communication Delays. IEEE Control. Syst. Lett. 2022, 6, 295–300.

6. Li, Z.; Zhong, L.; Yang, H.; Zhou, L. Distributed Cooperative Tracking Control Strategy for Virtual Coupling Trains: An Event-Triggered Model Predictive Control Approach. Processes 2023, 11, 3293.

7. Li, B.; Xiao, M. Nonlinear algorithm based on new Kalman filtering method for integrated SINS/GPS Navigation System. In Proceedings of the 2009 IEEE International Conference on Intelligent Computing and Intelligent Systems, Shanghai, China, 20–22 November 2009; pp. 557–561.

8. Sun, X.; Cai, M.; Ding, J. A GPU-Accelerated Method for 3D Nonlinear Kelvin Ship Wake Patterns Simulation. Appl. Sci. 2023, 13, 12148.

9. Eremin, E.L.; Nikiforova, L.V.; Shelenok, E.A. Combined Nonlinear Control System for Non-Affine Multi-Loop Plant with Control and State Delays. In Proceedings of the 2022 4th International Conference on Control Systems, Mathematical Modeling, Automation and Energy Efficiency (SUMMA), Lipetsk, Russia, 9–11 November 2022; pp. 23–29.

10. Sha’aban, Y.A. Distributed Control of an Ill-Conditioned Non-Linear Process Using Control Relevant Excitation Signals. Processes 2023, 11, 3320.

11. Kai, G.; Zhang, W. Numerical study of a class of nonlinear financial system. J. Dyn. Control 2016, 14, 407–411.

12. Zhang, Y.; Wang, Z. Statistics Character and Complexity in Nonlinear Systems. In Machine Learning; InTechOpen: London, UK, 2010.

13. Zhang, Y.; Wu, W.; He, W.; Zhao, N. Algorithm Design and Convergence Analysis for Coexistence of Cognitive Radio Networks in Unlicensed Spectrum. Sensors 2023, 23, 9705.

14. Huang, Y.; Zhang, Y.; Li, N.; Chambers, J. A Robust Gaussian Approximate Fixed-Interval Smoother for Nonlinear Systems with Heavy-Tailed Process and Measurement Noises. IEEE Signal Process. Lett. 2016, 23, 468–472.

15. Xu, D.; Wang, B.; Zhang, L.; Chen, Z. A New Adaptive High-Degree Unscented Kalman Filter with Unknown Process Noise. Electronics 2022, 11, 1863.

16. Zhao, J. Dynamic State Estimation with Model Uncertainties Using H∞ Extended Kalman Filter. IEEE Trans. Power Syst. 2018, 33, 1099–1100.

17. Luo, X.; Zhao, J.; Xiong, Y.; Xu, H.; Chen, H.; Zhang, S. Parameter Identification of Five-Phase Squirrel Cage Induction Motor Based on Extended Kalman Filter. Processes 2022, 10, 1440.

18. Huang, W.; Fu, H.; Zhang, W. A Novel Robust Variational Bayesian Filter for Unknown Time-Varying Input and Inaccurate Noise Statistics. IEEE Sensors Lett. 2023, 7, 7001104.

19. Lu, X.; Jing, D.; Jiang, D.; Gao, Y.; Yang, J.; Li, Y.; Li, W.; Tao, J.; Liu, M. Trajectory PHD Filter for Adaptive Measurement Noise Covariance Based on Variational Bayesian Approximation. Appl. Sci. 2022, 12, 6388.

20. Huang, Y.; Zhang, Y.; Xu, B.; Wu, Z.; Chambers, J.A. A New Adaptive Extended Kalman Filter for Cooperative Localization. IEEE Trans. Aerosp. Electron. Syst. 2018, 54, 353–368.

21. Liu, Z.; Chen, S.; Wu, H.; Chen, K. Robust Student’s t Mixture Probability Hypothesis Density Filter for Multi-Target Tracking with Heavy-Tailed Noises. IEEE Access 2018, 6, 39208–39219.

22. Huang, H.; Zhang, H. Student’s t-Kernel-Based Maximum Correntropy Kalman Filter. Sensors 2022, 22, 1683.

23. Chang, L.; Li, K.; Hu, B. Huber’s M-Estimation-Based Process Uncertainty Robust Filter for Integrated INS/GPS. IEEE Sensors J. 2015, 15, 3367–3374.

24. Gao, W.; Liu, Y.; Xu, B. Robust Huber-Based Iterated Divided Difference Filtering with Application to Cooperative Localization of Autonomous Underwater Vehicles. Sensors 2014, 14, 24523–24542.

25. Wang, G.; Zhang, Y.; Wang, X. Iterated maximum correntropy unscented Kalman filters for non-Gaussian systems. Signal Process. 2019, 163, 87–94.

26. Wang, J.; Zhang, H.; Hao, P.; Deng, H. Observer-Based Approximate Affine Nonlinear Model Predictive Controller for Hydraulic Robotic Excavators with Constraints. Processes 2023, 11, 1918.

27. Zhang, H.; Yang, Z.; Xiong, H.; Zhu, T.; Long, Z.; Wu, W. Transformer Aided Adaptive Extended Kalman Filter for Autonomous Vehicle Mass Estimation. Processes 2023, 11, 887.

28. Wang, H.; Li, H.; Zhang, W.; Zuo, J.; Wang, H. Derivative-Free Huber-Kalman Smoothing Based on Alternating Minimization. Signal Process. 2019, 163, 115–122.

29. Wang, X.; Cui, N.; Guo, J. Huber-based Unscented Filtering and its Application to Vision-based Relative Navigation. IET Radar Sonar Navig. 2010, 4, 134–141.

30. Wei, X.; Hua, B.; Wu, Y.; Chen, Z. Robust Interacting Multiple Model Cubature Kalman Filter for Nonlinear Filtering with Unknown Non-Gaussian Noise. Digit. Signal Process. 2023, 136, 103982.

31. Kheirish, M.; Yazdi, E.A.; Mohammadi, H.; Mohammadi, M. A Fault-tolerant Sensor Fusion in Mobile Robots Using Multiple Model Kalman Filters. Robot. Auton. Syst. 2023, 161, 104343.

32. Huang, Y.; Zhang, Y.; Li, N.; Chambers, J. A Robust Gaussian Approximate Filter for Nonlinear Systems with Heavy Tailed Measurement Noises. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 4209–4213.

33. Wang, G.; Yang, C.; Ma, X. A Novel Robust Nonlinear Kalman Filter Based on Multivariate Laplace Distribution. IEEE Trans. Circuits Syst. II Express Briefs 2021, 68, 2705–2709.

34. Jacquemin, T.; Tomar, S.; Agathos, K.; Mohseni-Mofidi, S.; Bordas, S.P.A. Taylor-series Expansion based Numerical Methods: A Primer, Performance Benchmarking and New Approaches for Problems with Non-smooth Solutions. Arch. Comput. Methods Eng. 2020, 27, 1465–1513.

35. Blei, D.M.; Kucukelbir, A.; Mcauliffe, J.D. Variational Inference: A Review for Statisticians. J. Amer. Statist. Assoc. 2017, 112, 859–877.

36. Dong, P.; Jing, Z.; Leung, H.; Shen, K. Variational Bayesian adaptive cubature information filter based on Wishart distribution. IEEE Trans. Autom. Control 2017, 2, 6051–6057.

37. Huang, Y.; Zhang, Y.; Shi, P.; Chambers, J. Variational Adaptive Kalman Filter with Gaussian-Inverse-Wishart Mixture Distribution. IEEE Trans. Autom. Control 2021, 66, 1786–1793.

38. Wang, G.; Zhang, Y.; Wang, X. Maximum Correntropy Rauch-Tung-Striebel Smoother for Nonlinear and Non-Gaussian Systems. IEEE Trans. Autom. Control 2021, 66, 1270–1277.

39. Chai, T.; Draxler, R.R. Root Mean Square Error (RMSE) or Mean Absolute Error (MAE)?—Arguments Against Avoiding RMSE in the Literature. Geosci. Model Dev. 2014, 7, 1247–1250.

40. Liu, X.; Liu, X.; Yang, Y.; Gao, Y.; Zhang, W. Variational Bayesian-Based Robust Cubature Kalman Filter with Application on SINS/GPS Integrated Navigation System. IEEE Sensors J. 2022, 22, 489–500.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).