Adaptive Kalman Filter for Real-Time Visual Object Tracking Based on Autocovariance Least Square Estimation

Abstract

1. Introduction

1.1. Object Detection

- Simple BS techniques, including frame differencing [10], average filtering [11,12], median filtering [13,14], and histogram over time [15], model the background in a simple way, often using only the previous frame or the average/median of recent frames. They are fast and easy to implement but adapt poorly to changes in the background.

- Statistical BS techniques, including the mixture of Gaussians (MoG) [16,17], kernel density estimation [18,19,20], support vector models [21,22], and principal component analysis [23,24], build a statistical model of the background and classify each pixel against that model. Because they use the history of each pixel, they model the background more robustly, but they are sensitive to parameter settings and model choices.

- NN-based BS techniques, including radial basis function NNs [25], self-organizing NNs [26,27], convolutional NNs [28,29], and generative adversarial networks (GANs) [30,31], use specialized NN architectures that learn to adapt the background model over time and detect the foreground. They can learn complex representations of background appearance and maintain robust models of multi-modal backgrounds, but they require large training datasets and are computationally expensive to train and run, which may be prohibitive in real-time applications.
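The simple and statistical families above can be illustrated in a few lines of NumPy. This is a minimal sketch, not the detection method used in this paper: the names `frame_difference`, `median_background`, and `RunningGaussianBackground` are hypothetical, the thresholds are arbitrary, and the single per-pixel Gaussian is a unimodal simplification standing in for the MoG models of [16,17].

```python
import numpy as np

def frame_difference(prev, curr, thresh=25):
    """Frame differencing: foreground where intensity changed by more than `thresh`."""
    # Cast to a signed type so that uint8 subtraction cannot wrap around
    return np.abs(curr.astype(np.int16) - prev.astype(np.int16)) > thresh

def median_background(recent_frames):
    """Median filtering: the background is the per-pixel median of recent frames."""
    return np.median(np.stack(recent_frames), axis=0)

class RunningGaussianBackground:
    """Statistical BS with one Gaussian per pixel (hypothetical unimodal stand-in for MoG)."""

    def __init__(self, first_frame, alpha=0.05, k=2.5, init_var=225.0, min_var=4.0):
        self.mu = first_frame.astype(np.float64)
        self.var = np.full(first_frame.shape, init_var)
        self.alpha, self.k, self.min_var = alpha, k, min_var

    def apply(self, frame):
        d = frame.astype(np.float64) - self.mu
        fg = d**2 > self.k**2 * self.var       # farther than k standard deviations from the mean
        bg = ~fg                                # adapt the model only at background pixels
        self.mu[bg] += self.alpha * d[bg]
        # Exponentially weighted variance, floored so it cannot collapse to zero
        self.var[bg] = np.maximum((1 - self.alpha) * self.var[bg] + self.alpha * d[bg]**2,
                                  self.min_var)
        return fg

# Usage: a flat background in which a bright 3x3 object appears
background = np.full((20, 20), 100, dtype=np.uint8)
frame = background.copy()
frame[5:8, 5:8] = 200
diff_mask = frame_difference(background, frame)
median_bg = median_background([background] * 5 + [frame])
gauss_mask = RunningGaussianBackground(background).apply(frame)
```

All three detectors flag the 3x3 object, and the median background ignores it because the object is present in only one of the six frames. The Gaussian model keeps a per-pixel variance, which is what lets statistical methods tolerate noisy or flickering pixels better than a fixed global threshold.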

1.2. Object Tracking

- The proposed AKF-ALS algorithm provides a robust and efficient solution for noise covariance estimation in the visual object tracking problem.

- A novel adaptive thresholding method based on the estimated process noise covariance is proposed to improve the robustness of the BS method; it can predict sudden variations without heavy computation.

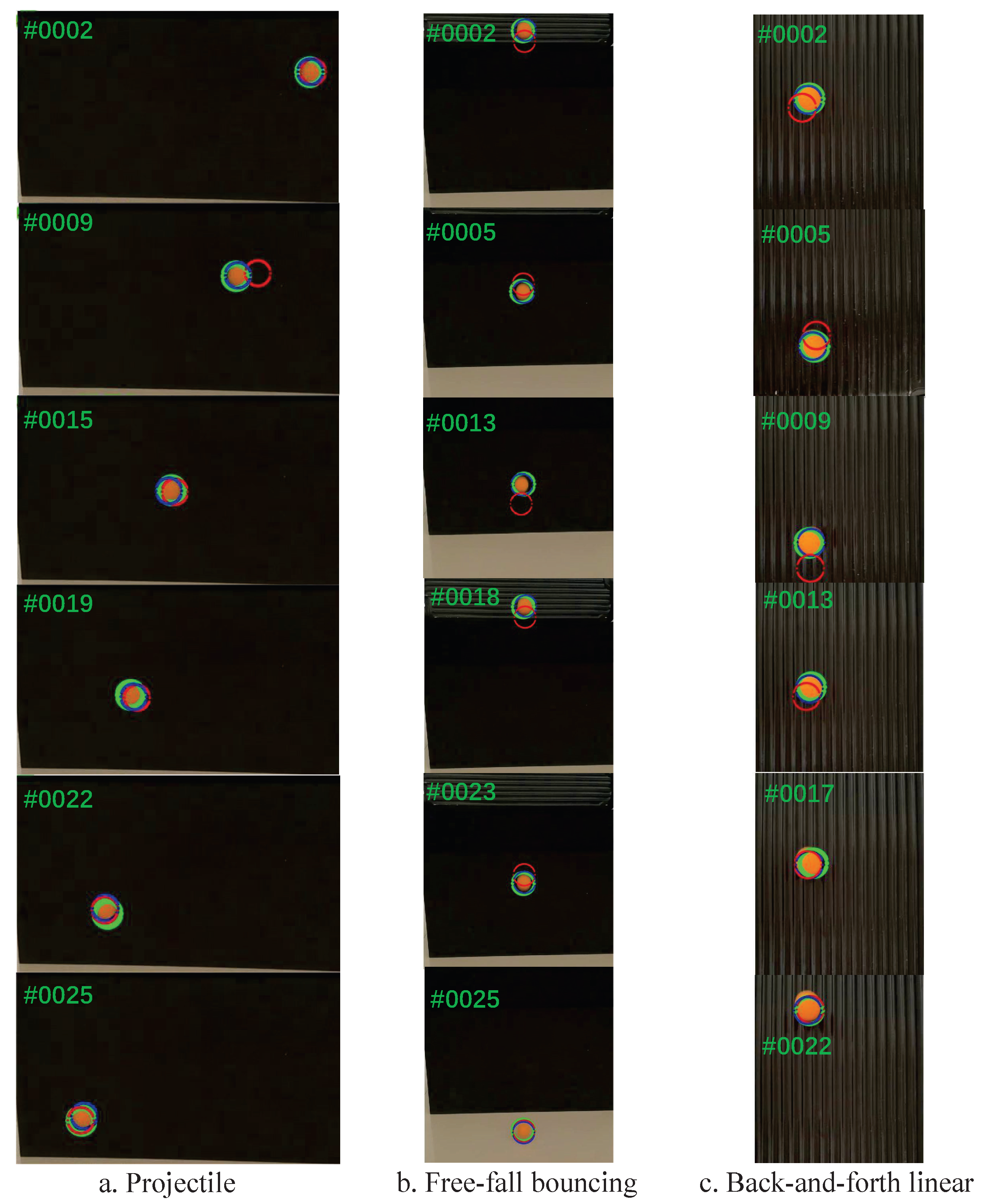

- Experiments on tracking the centroid of a moving ball undergoing projectile motion, free-fall bouncing motion, and back-and-forth linear motion are conducted to demonstrate the efficiency and superiority of the proposed AKF-ALS algorithm.

2. Problem Formulation and Preliminaries

2.1. Background Subtraction

2.2. Kalman Filter

2.3. Auto-Covariance Least-Squares (ALS) Method

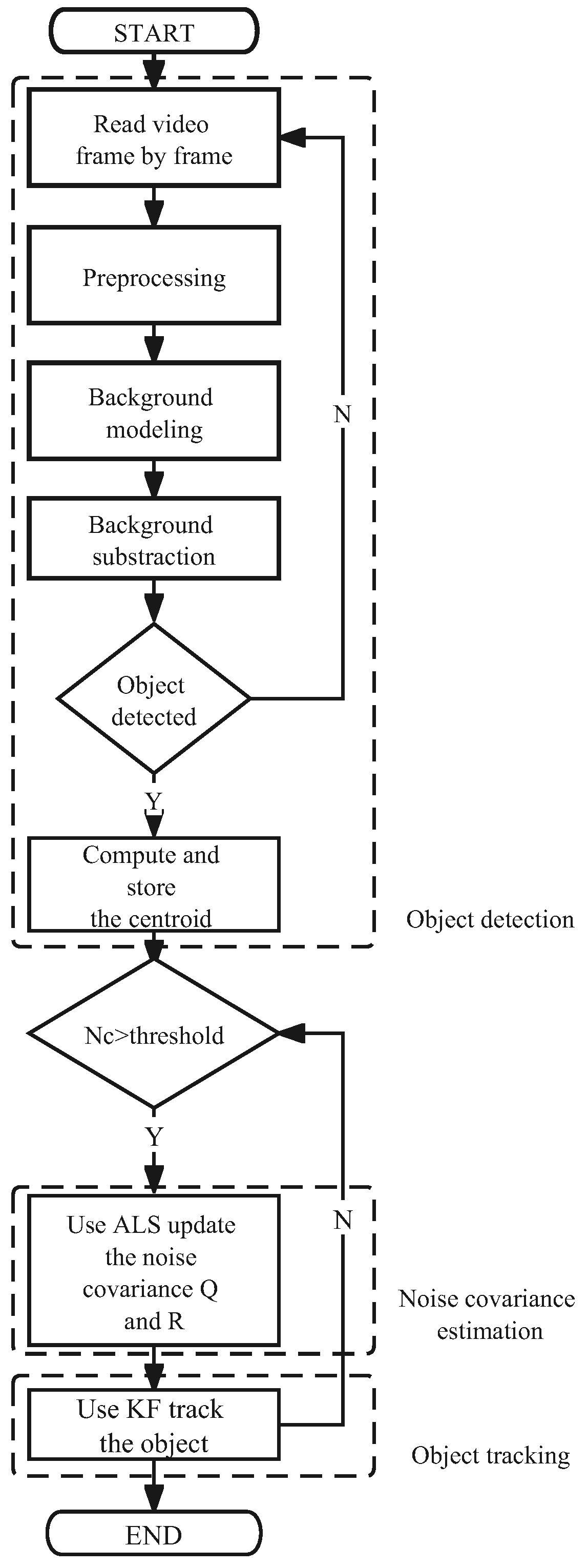

3. ALS-KF Based Visual Object Tracking Algorithm

3.1. Object Detection Using Background Subtraction

3.2. Object Tracking Using Kalman Filter

3.3. Noise Covariance Estimation Using ALS

Algorithm 1: AKF-ALS

- Initialize the state estimate, the state covariance matrix, the system matrices F and H, the Kalman gain matrix K, the window sizes (including N), and the noise covariance matrices Q and R.

- The main loop iterates until the ALS method has converged. At each iteration, the state estimate and state covariance matrix are updated recursively using the current measurement, the measurement model defined by H, and the noise covariance matrix R.

- After a sufficient number of iterations (once k exceeds a preset threshold), the ALS method is applied to estimate the noise covariance matrices: the autocovariances of the innovations are computed and stacked into a vector, the corresponding coefficient matrix is formed from the ALS equations, and a least-squares problem is solved for an estimate that is then unstacked into the individual estimates of Q and R.
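The filtering and ALS steps above can be sketched for a single image coordinate. This is a minimal sketch under stated assumptions, not the authors' implementation: it assumes a constant-velocity model for F and H, process noise entering as white acceleration through a hypothetical input matrix G with scalar variance q, a scalar measurement variance r, and a fixed steady-state filter-form gain K, because the ALS equations of Odelson et al. [58] are derived for a fixed gain. It performs a single batch ALS pass rather than iterating to convergence; in the loop of Algorithm 1 one would recompute K from the new estimates and repeat. All function names are hypothetical.

```python
import numpy as np

def steady_state_gain(F, H, GQG, R, iters=1000):
    """Iterate the Riccati recursion and return the steady-state filter gain K."""
    P = np.eye(F.shape[0])
    for _ in range(iters):
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)
        P = F @ (P - K @ H @ P) @ F.T + GQG
    return K

def run_filter(F, H, K, ys):
    """Fixed-gain Kalman filter; returns the innovations y_k - H x_{k|k-1}."""
    xh = np.zeros(F.shape[0])
    innov = np.empty(len(ys))
    for k, y in enumerate(ys):
        innov[k] = y - H[0] @ xh
        xh = F @ (xh + K[:, 0] * innov[k])      # measurement update, then time update
    return innov

def als_design(F, H, G, K, N):
    """Matrix mapping (q, r) to the stacked innovation autocovariances at lags 0..N-1."""
    n = F.shape[0]
    FK = F @ K
    Fbar = F - FK @ H                            # closed-loop estimation-error dynamics
    O = np.vstack([H @ np.linalg.matrix_power(Fbar, j) for j in range(N)])
    Gamma = np.vstack([np.eye(1)] + [-H @ np.linalg.matrix_power(Fbar, j - 1) @ FK
                                     for j in range(1, N)])
    # vec(P) = M vec(q G G' + r FK FK'), from the Lyapunov equation of the error covariance
    M = np.linalg.inv(np.eye(n * n) - np.kron(Fbar, Fbar))
    HO = np.kron(H, O)                           # vec(O P H') = (H kron O) vec(P)
    col_q = HO @ M @ np.kron(G, G)
    col_r = HO @ M @ np.kron(FK, FK) + Gamma
    return np.hstack([col_q, col_r])

def als_estimate(F, H, G, K, innov, N=15):
    """Least-squares fit of (q, r) to the sample autocovariances of the innovations."""
    Nd = len(innov)
    c_hat = np.array([innov[j:] @ innov[:Nd - j] / (Nd - j) for j in range(N)])
    theta, *_ = np.linalg.lstsq(als_design(F, H, G, K, N), c_hat, rcond=None)
    return theta                                 # unstacked: Q = q (via G), R = r

# Usage: constant-velocity model for one coordinate, position-only measurements
rng = np.random.default_rng(0)
dt = 1.0
F = np.array([[1.0, dt], [0.0, 1.0]])
H = np.array([[1.0, 0.0]])
G = np.array([[0.5 * dt**2], [dt]])             # hypothetical white-acceleration input
q_true, r_true = 1.0, 1.0
x, ys = np.zeros(2), np.empty(60000)
for k in range(len(ys)):
    ys[k] = H[0] @ x + rng.normal(scale=np.sqrt(r_true))
    x = F @ x + G[:, 0] * rng.normal(scale=np.sqrt(q_true))

K0 = steady_state_gain(F, H, 0.2 * G @ G.T, np.array([[5.0]]))   # deliberately wrong Q, R
innov = run_filter(F, H, K0, ys)[200:]                           # drop the start-up transient
q_hat, r_hat = als_estimate(F, H, G, K0, innov)
```

The design matrix follows from writing each innovation autocovariance as a linear function of q and r: lag 0 equals H P H' + r and lag j ≥ 1 equals H Fbar^j P H' - r H Fbar^(j-1) F K, where P solves the Lyapunov equation of the estimation error. Because the model is linear in (q, r), a single least-squares solve recovers both, even though the filter that produced the innovations was tuned with the wrong values.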

4. Experimental Study

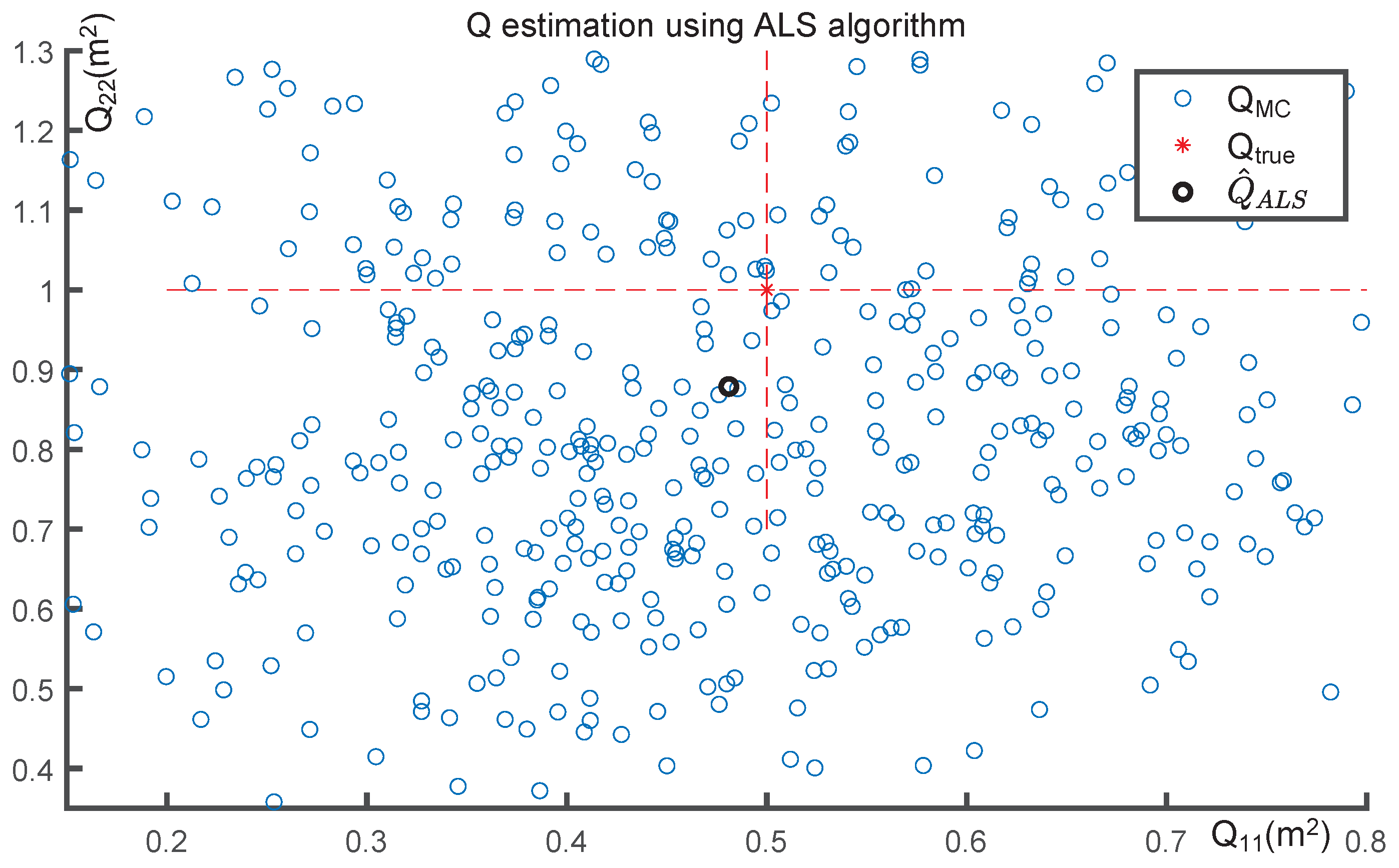

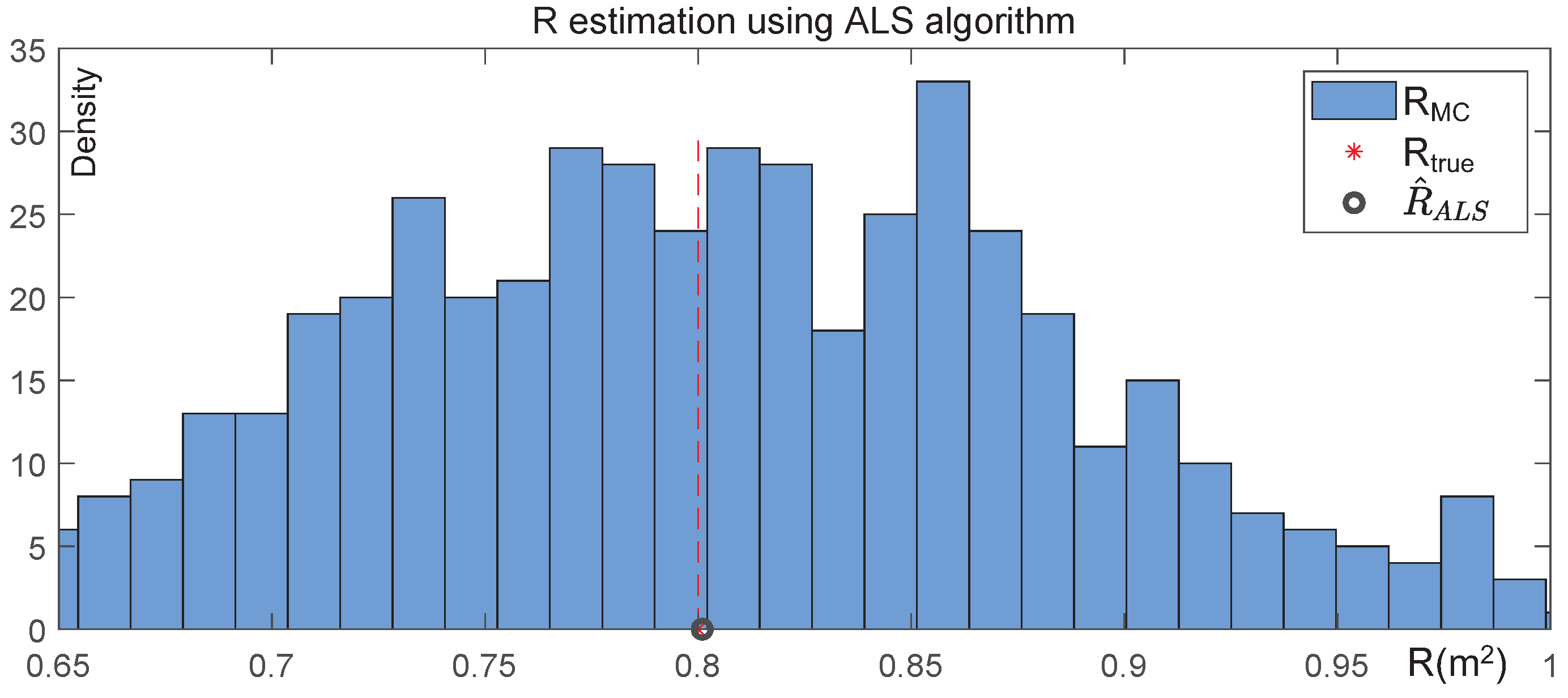

4.1. Numerical Analysis of Noise Covariance Estimation

4.2. Centroid-Based Moving Ball Tracking Subjected to Three Motions

- Projectile motion following a parabolic path refers to the motion of an object thrown into the air and subject to downward acceleration due to gravity. The challenge in tracking such motion is the constantly changing speed and direction of the object.

- Free-fall bouncing motion is the motion of an object that falls under gravity and then bounces back upward. The challenge is that the object's velocity changes rapidly at the point of impact, which is difficult for a tracking algorithm to handle.

- Back-and-forth linear motion refers to an object moving to and fro along a straight line. The challenge is the abrupt change in velocity when the object changes direction.
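The three difficulties can be made concrete with synthetic trajectories. The generators below are an illustrative sketch only: the function names, frame rate, heights, speeds, and restitution coefficient are hypothetical and are not the paper's experimental setup. The quantity of interest is how much the frame-to-frame velocity changes, since that is what a constant-velocity Kalman filter must absorb through its process noise.

```python
import numpy as np

def projectile(n, dt=1/30, vx=2.0, vy=6.0, g=9.81):
    """Parabolic path: constant horizontal velocity, constant downward acceleration."""
    t = np.arange(n) * dt
    return np.column_stack([vx * t, vy * t - 0.5 * g * t**2])

def bouncing(n, dt=1/30, h0=2.0, e=0.8, g=9.81):
    """Free fall from height h0 with inelastic bounces (restitution e) at the ground y = 0."""
    y, v, ys = h0, 0.0, np.empty(n)
    for k in range(n):
        ys[k] = y
        v -= g * dt
        y += v * dt
        if y < 0.0:                  # impact: the velocity reverses sign within one frame
            y, v = 0.0, -e * v
    return ys

def back_and_forth(n, dt=1/30, amp=3.0, period=1.0):
    """Triangle-wave position: constant speed amp/period, direction flips every `period` s."""
    s = (np.arange(n) * dt / period) % 2.0
    return amp * (1.0 - np.abs(s - 1.0))

# Largest frame-to-frame change in finite-difference velocity for each motion
dt = 1 / 30
for name, y in [("projectile", projectile(90)[:, 1]),
                ("bouncing", bouncing(90)),
                ("back-and-forth", back_and_forth(90))]:
    v = np.diff(y) / dt
    print(f"{name}: max |dv| per frame = {np.abs(np.diff(v)).max():.2f}")
```

For the projectile the velocity changes smoothly by g·dt per frame, whereas the bounce and the turnaround produce jumps many times larger in a single frame, which is why a fixed, small process noise covariance tends to lag behind these events.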

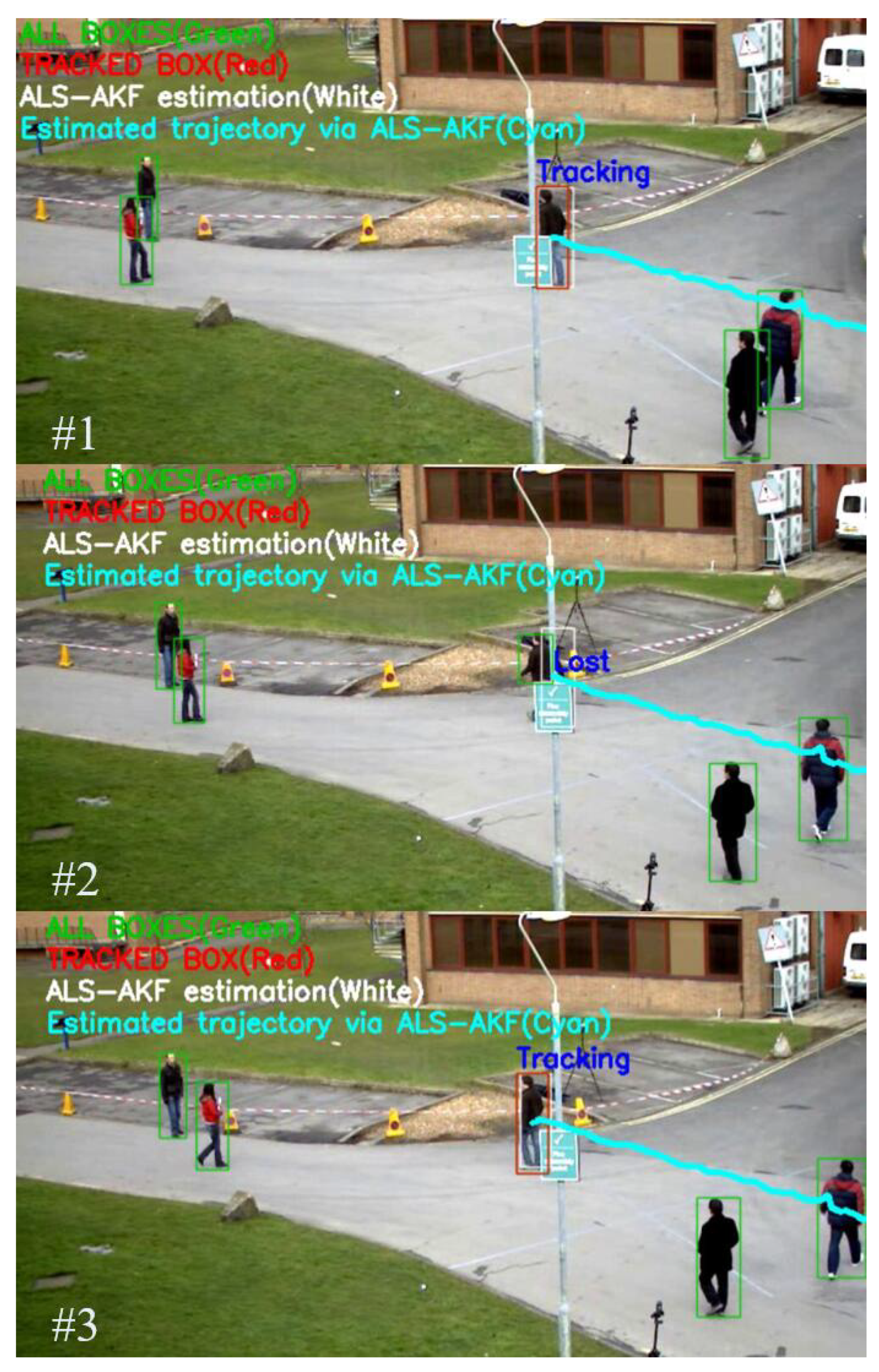

4.3. Bounding Box-Based Pedestrian Tracking with Occlusions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Yadav, S.P. Vision-based detection, tracking, and classification of vehicles. IEIE Trans. Smart Process. Comput. 2020, 9, 427–434.

2. Abdulrahim, K.; Salam, R.A. Traffic surveillance: A review of vision based vehicle detection, recognition and tracking. Int. J. Appl. Eng. Res. 2016, 11, 713–726.

3. Gad, A.; Basmaji, T.; Yaghi, M.; Alheeh, H.; Alkhedher, M.; Ghazal, M. Multiple Object Tracking in Robotic Applications: Trends and Challenges. Appl. Sci. 2022, 12, 9408.

4. Gammulle, H.; Ahmedt-Aristizabal, D.; Denman, S.; Tychsen-Smith, L.; Petersson, L.; Fookes, C. Continuous human action recognition for human-machine interaction: A review. ACM Comput. Surv. 2023, 55, 1–38.

5. Dong, X.; Shen, J.; Yu, D.; Wang, W.; Liu, J.; Huang, H. Occlusion-aware real-time object tracking. IEEE Trans. Multimed. 2016, 19, 763–771.

6. Setitra, I.; Larabi, S. Background subtraction algorithms with post-processing: A review. In Proceedings of the 2014 22nd International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; pp. 2436–2441.

7. Shaikh, S.H.; Saeed, K.; Chaki, N. Moving Object Detection Using Background Subtraction. In Moving Object Detection Using Background Subtraction; SpringerBriefs in Computer Science; Springer: Cham, Switzerland, 2014.

8. Bouwmans, T.; Javed, S.; Sultana, M.; Jung, S.K. Deep neural network concepts for background subtraction: A systematic review and comparative evaluation. Neural Netw. 2019, 117, 8–66.

9. Kalsotra, R.; Arora, S. Background subtraction for moving object detection: Explorations of recent developments and challenges. Vis. Comput. 2022, 38, 4151–4178.

10. Zhang, H.; Wu, K. A vehicle detection algorithm based on three-frame differencing and background subtraction. In Proceedings of the 2012 Fifth International Symposium on Computational Intelligence and Design, Hangzhou, China, 28–29 October 2012; Volume 1, pp. 148–151.

11. Li, J.; Pan, Z.M.; Zhang, Z.H.; Zhang, H. Dynamic ARMA-based background subtraction for moving objects detection. IEEE Access 2019, 7, 128659–128668.

12. Zhang, R.; Gong, W.; Grzeda, V.; Yaworski, A.; Greenspan, M. An adaptive learning rate method for improving adaptability of background models. IEEE Signal Process. Lett. 2013, 20, 1266–1269.

13. Shi, P.; Jones, E.G.; Zhu, Q. Median model for background subtraction in intelligent transportation system. In Image Processing: Algorithms and Systems III; SPIE: Bellingham, WA, USA, 2004; Volume 5298, pp. 168–176.

14. Li, X.; Ng, M.K.; Yuan, X. Median filtering-based methods for static background extraction from surveillance video. Numer. Linear Algebra Appl. 2015, 22, 845–865.

15. Roy, S.M.; Ghosh, A. Real-time adaptive histogram min-max bucket (HMMB) model for background subtraction. IEEE Trans. Circuits Syst. Video Technol. 2017, 28, 1513–1525.

16. Stauffer, C.; Grimson, W.E.L. Adaptive background mixture models for real-time tracking. In Proceedings of the 1999 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (Cat. No PR00149), Fort Collins, CO, USA, 23–25 June 1999; Volume 2, pp. 246–252.

17. Zivkovic, Z.; Van Der Heijden, F. Efficient adaptive density estimation per image pixel for the task of background subtraction. Pattern Recognit. Lett. 2006, 27, 773–780.

18. Sheikh, Y.; Shah, M. Bayesian modeling of dynamic scenes for object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1778–1792.

19. Han, B.; Comaniciu, D.; Zhu, Y.; Davis, L.S. Sequential kernel density approximation and its application to real-time visual tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 1186–1197.

20. Zhu, Q.; Shao, L.; Li, Q.; Xie, Y. Recursive kernel density estimation for modeling the background and segmenting moving objects. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 1769–1772.

21. Lin, H.H.; Liu, T.L.; Chuang, J.H. A probabilistic SVM approach for background scene initialization. In Proceedings of the International Conference on Image Processing, Rochester, NY, USA, 22–25 September 2002; Volume 3, pp. 893–896.

22. Cheng, L.; Gong, M.; Schuurmans, D.; Caelli, T. Real-time discriminative background subtraction. IEEE Trans. Image Process. 2010, 20, 1401–1414.

23. Bouwmans, T. Subspace learning for background modeling: A survey. Recent Patents Comput. Sci. 2009, 2, 223–234.

24. Djerida, A.; Zhao, Z.; Zhao, J. Background subtraction in dynamic scenes using the dynamic principal component analysis. IET Image Process. 2020, 14, 245–255.

25. Buccolieri, F.; Distante, C.; Leone, A. Human posture recognition using active contours and radial basis function neural network. In Proceedings of the IEEE Conference on Advanced Video and Signal Based Surveillance, Como, Italy, 15–16 September 2005; pp. 213–218.

26. Maddalena, L.; Petrosino, A. A self-organizing approach to background subtraction for visual surveillance applications. IEEE Trans. Image Process. 2008, 17, 1168–1177.

27. Gemignani, G.; Rozza, A. A robust approach for the background subtraction based on multi-layered self-organizing maps. IEEE Trans. Image Process. 2016, 25, 5239–5251.

28. Braham, M.; Van Droogenbroeck, M. Deep background subtraction with scene-specific convolutional neural networks. In Proceedings of the 2016 International Conference on Systems, Signals and Image Processing (IWSSIP), Bratislava, Slovakia, 23–25 May 2016; pp. 1–4.

29. Babaee, M.; Dinh, D.T.; Rigoll, G. A deep convolutional neural network for video sequence background subtraction. Pattern Recognit. 2018, 76, 635–649.

30. Bakkay, M.C.; Rashwan, H.A.; Salmane, H.; Khoudour, L.; Puig, D.; Ruichek, Y. BSCGAN: Deep background subtraction with conditional generative adversarial networks. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 4018–4022.

31. Sultana, M.; Mahmood, A.; Bouwmans, T.; Jung, S.K. Dynamic background subtraction using least square adversarial learning. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020; pp. 3204–3208.

32. McHugh, J.M.; Konrad, J.; Saligrama, V.; Jodoin, P.M. Foreground-adaptive background subtraction. IEEE Signal Process. Lett. 2009, 16, 390–393.

33. Li, X.; Hu, W.; Shen, C.; Zhang, Z.; Dick, A.; Hengel, A.V.D. A survey of appearance models in visual object tracking. ACM Trans. Intell. Syst. Technol. (TIST) 2013, 4, 1–48.

34. Fiaz, M.; Mahmood, A.; Javed, S.; Jung, S.K. Handcrafted and deep trackers: Recent visual object tracking approaches and trends. ACM Comput. Surv. (CSUR) 2019, 52, 1–44.

35. Javed, S.; Danelljan, M.; Khan, F.S.; Khan, M.H.; Felsberg, M.; Matas, J. Visual object tracking with discriminative filters and siamese networks: A survey and outlook. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 6552–6574.

36. Ondrašovič, M.; Tarábek, P. Siamese visual object tracking: A survey. IEEE Access 2021, 9, 110149–110172.

37. Chen, F.; Wang, X.; Zhao, Y.; Lv, S.; Niu, X. Visual object tracking: A survey. Comput. Vis. Image Underst. 2022, 222, 103508.

38. Zhou, H.; Yuan, Y.; Shi, C. Object tracking using SIFT features and mean shift. Comput. Vis. Image Underst. 2009, 113, 345–352.

39. Khan, Z.H.; Gu, I.Y.H.; Backhouse, A.G. Robust visual object tracking using multi-mode anisotropic mean shift and particle filters. IEEE Trans. Circuits Syst. Video Technol. 2011, 21, 74–87.

40. Cai, Z.; Gu, Z.; Yu, Z.L.; Liu, H.; Zhang, K. A real-time visual object tracking system based on Kalman filter and MB-LBP feature matching. Multimed. Tools Appl. 2016, 75, 2393–2409.

41. Farahi, F.; Yazdi, H.S. Probabilistic Kalman filter for moving object tracking. Signal Process. Image Commun. 2020, 82, 115751.

42. Kim, T.; Park, T.H. Extended Kalman filter (EKF) design for vehicle position tracking using reliability function of radar and lidar. Sensors 2020, 20, 4126.

43. Zhou, Z.; Wu, D.; Zhu, Z. Object tracking based on Kalman particle filter with LSSVR. Optik 2016, 127, 613–619.

44. Iswanto, I.A.; Li, B. Visual object tracking based on mean-shift and particle-Kalman filter. Procedia Comput. Sci. 2017, 116, 587–595.

45. Rao, G.M.; Satyanarayana, C. Visual object target tracking using particle filter: A survey. Int. J. Image Graph. Signal Process. 2013, 5, 1250.

46. Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems. J. Basic Eng. 1960, 82, 35–45.

47. Chen, J.; Li, J.; Yang, S.; Deng, F. Weighted optimization-based distributed Kalman filter for nonlinear target tracking in collaborative sensor networks. IEEE Trans. Cybern. 2016, 47, 3892–3905.

48. Zheng, B.; Fu, P.; Li, B.; Yuan, X. A robust adaptive unscented Kalman filter for nonlinear estimation with uncertain noise covariance. Sensors 2018, 18, 808.

49. Jeong, J.; Yoon, T.S.; Park, J.B. Mean shift tracker combined with online learning-based detector and Kalman filtering for real-time tracking. Expert Syst. Appl. 2017, 79, 194–206.

50. Sun, J.; Xu, X.; Liu, Y.; Zhang, T.; Li, Y. FOG random drift signal denoising based on the improved AR model and modified Sage-Husa adaptive Kalman filter. Sensors 2016, 16, 1073.

51. Särkkä, S.; Nummenmaa, A. Recursive Noise Adaptive Kalman Filtering by Variational Bayesian Approximations. IEEE Trans. Autom. Control 2009, 54, 596–600.

52. Kashyap, R. Maximum likelihood identification of stochastic linear systems. IEEE Trans. Autom. Control 1970, 15, 25–34.

53. Zagrobelny, M.A.; Rawlings, J.B. Identifying the uncertainty structure using maximum likelihood estimation. In Proceedings of the 2015 American Control Conference (ACC), Chicago, IL, USA, 1–3 July 2015; pp. 422–427.

54. Myers, K.; Tapley, B. Adaptive sequential estimation with unknown noise statistics. IEEE Trans. Autom. Control 1976, 21, 520–523.

55. Solonen, A.; Hakkarainen, J.; Ilin, A.; Abbas, M.; Bibov, A. Estimating model error covariance matrix parameters in extended Kalman filtering. Nonlinear Process. Geophys. 2014, 21, 919–927.

56. Yu, C.; Verhaegen, M. Subspace identification of distributed clusters of homogeneous systems. IEEE Trans. Autom. Control 2016, 62, 463–468.

57. Mehra, R.K. On the identification of variances and adaptive Kalman filtering. IEEE Trans. Autom. Control 1970, 15, 175–184.

58. Odelson, B.J.; Rajamani, M.R.; Rawlings, J.B. A new autocovariance least-squares method for estimating noise covariances. Automatica 2006, 42, 303–308.

59. Duník, J.; Straka, O.; Simandl, M. On Autocovariance Least-Squares Method for Noise Covariance Matrices Estimation. IEEE Trans. Autom. Control 2017, 62, 967–972.

60. Duník, J.; Straka, O.; Kost, O.; Havlík, J. Noise covariance matrices in state-space models: A survey and comparison of estimation methods—Part I. Int. J. Adapt. Control Signal Process. 2017, 31, 1505–1543.

61. Li, J.; Ma, N.; Deng, F. Distributed noise covariance matrices estimation in sensor networks. In Proceedings of the 2020 59th IEEE Conference on Decision and Control (CDC), Jeju, Republic of Korea, 14–18 December 2020; pp. 1158–1163.

62. Arnold, T.J.; Rawlings, J.B. Tractable Calculation and Estimation of the Optimal Weighting Matrix for ALS Problems. IEEE Trans. Autom. Control 2021, 67, 6045–6052.

63. Meng, Y.; Gao, S.; Zhong, Y.; Hu, G.; Subic, A. Covariance matching based adaptive unscented Kalman filter for direct filtering in INS/GNSS integration. Acta Astronaut. 2016, 120, 171–181.

64. Fu, Z.; Han, Y. Centroid weighted Kalman filter for visual object tracking. Measurement 2012, 45, 650–655.

65. Ferryman, J.; Shahrokni, A. PETS2009: Dataset and challenge. In Proceedings of the 2009 Twelfth IEEE International Workshop on Performance Evaluation of Tracking and Surveillance, Snowbird, UT, USA, 7–9 December 2009; pp. 1–6.

66. Tripathi, R.P.; Singh, A.K. Moving Object Tracking Using Optimal Adaptive Kalman Filter Based on Otsu’s Method. In Innovations in Electronics and Communication Engineering, Proceedings of the 8th ICIECE 2019, Hyderabad, India, 2–3 August 2019; Springer: Singapore, 2020; pp. 429–435.

RMSE (in pixels) for the three ball motions:

| Method | Projectile | Free-Fall Bouncing | Back-and-Forth Linear |
|---|---|---|---|
| AKF-ALS | 1.05 | 2.76 | 0.89 |
| KF | 4.67 | 8.35 | 2.31 |
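The table reports RMSE in pixels but does not restate its formula; the sketch below assumes the common per-frame Euclidean definition for centroid tracking (the name `rmse` and the (T, 2) input layout are hypothetical) and then computes the relative RMSE reduction of AKF-ALS over the KF directly from the tabulated values.

```python
import numpy as np

def rmse(estimated, ground_truth):
    """RMSE of the Euclidean distance between estimated and true centroids, shape (T, 2)."""
    err = np.asarray(estimated, dtype=float) - np.asarray(ground_truth, dtype=float)
    return float(np.sqrt(np.mean(np.sum(err**2, axis=1))))

# Relative RMSE reduction of AKF-ALS over the KF, using the values in the table above
table = {"Projectile": (1.05, 4.67),
         "Free-Fall Bouncing": (2.76, 8.35),
         "Back-and-Forth Linear": (0.89, 2.31)}
reduction = {motion: 100.0 * (kf - akf_als) / kf for motion, (akf_als, kf) in table.items()}
for motion, pct in reduction.items():
    print(f"{motion}: {pct:.1f}% lower RMSE with AKF-ALS")
```

This gives reductions of about 77.5%, 66.9%, and 61.5% for the projectile, free-fall bouncing, and back-and-forth linear motions, respectively.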

| Metric | AKF-ALS VOT | Classic AKF VOT [66] |
|---|---|---|
| RMSE (pixels) | 5.5 | 10.7 |
| Identity switches | 1 | 8 |
| Track fragmentation | 2 | 8 |
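Identity switches and track fragmentation are standard multi-object tracking counts. The function below is a simplified sketch of one common way to count them for a single ground-truth object; the name `count_switches_and_fragments` and the input format are hypothetical, and the evaluation protocol behind the table may differ in details such as the matching threshold. A switch is counted when the object is matched to a different tracker ID than at its last matched frame, and a fragmentation when tracking resumes after a gap.

```python
def count_switches_and_fragments(matched_ids):
    """matched_ids: per-frame tracker ID matched to one ground-truth object, or None if unmatched."""
    switches = fragments = 0
    last_id = None           # tracker ID at the most recent matched frame
    interrupted = False      # object was tracked before but is currently unmatched
    for tid in matched_ids:
        if tid is None:
            interrupted = last_id is not None
            continue
        if last_id is not None and tid != last_id:
            switches += 1    # a different tracker ID took over this object
        if interrupted:
            fragments += 1   # tracking resumed after a gap
            interrupted = False
        last_id = tid
    return switches, fragments

# ID 1 is lost for one frame and recovered, then ID 2 takes over,
# and after a two-frame gap ID 3 takes over
print(count_switches_and_fragments([1, 1, None, 1, 2, 2, None, None, 3]))  # prints (2, 2)
```

Summing the per-object counts over all ground-truth objects gives sequence-level totals comparable in kind to those in the table; the RMSE row corresponds to a reduction of about 48.6% ((10.7 - 5.5) / 10.7).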

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, J.; Xu, X.; Jiang, Z.; Jiang, B. Adaptive Kalman Filter for Real-Time Visual Object Tracking Based on Autocovariance Least Square Estimation. Appl. Sci. 2024, 14, 1045. https://doi.org/10.3390/app14031045


Chicago/Turabian style: Li, Jiahong, Xinkai Xu, Zhuoying Jiang, and Beiyan Jiang. 2024. "Adaptive Kalman Filter for Real-Time Visual Object Tracking Based on Autocovariance Least Square Estimation" Applied Sciences 14, no. 3: 1045. https://doi.org/10.3390/app14031045
