1. Introduction

Recent advancements in machine learning have facilitated interaction between robots and humans, enabling robots to offer adept services in diverse applications, such as autonomous driving systems [1] and collaborative assembly in smart factories [2]. Given these developments, it is crucial that robotic systems dynamically adapt to the preferences of the users involved in these interactions. Recent approaches in robotics have employed preference-based learning (PBL) to learn user preferences [3,4,5,6,7,8,9]. In PBL, human preferences are modeled as a reward function, which is learned by presenting users with diverse robot behaviors and having them select the preferred ones. The robot's behavior can then be tailored to the user's preference by maximizing the learned reward model.

While most existing methods [3,4,5,6,7,8,9] have successfully captured user preferences in an online manner, they share a clear limitation: they presume a stationary reward function for modeling user preference. However, user preferences may evolve while users interact with robotic systems [10,11]. Hence, robots must adjust to evolving preferences to maintain appropriate behavior. For instance, during initial encounters with service robots, users may favor cautious behavior due to unfamiliarity, but as they grow accustomed to the robot's presence, their preferences often shift towards more task-specific behaviors, as depicted in Figure 1. Beyond this scenario, user preferences can evolve due to diverse factors, including trends, emotions, and age. To address such dynamic preferences, we present a method capable of adapting to evolving user preferences.

The challenge of adapting to dynamic user preferences can be addressed with a non-stationary bandit framework [12]. The bandit framework [13] is widely used to optimize decision-making under uncertain and unknown rewards: an agent repeatedly selects from a set of options, each of which yields a stochastic reward. The primary goal is to maximize the cumulative reward over time, thereby identifying an optimal option without explicit knowledge of the rewards. Applied to PBL, this framework balances the selection of high-potential queries against queries already known to align with user preferences, enabling efficient and adaptive query selection for learning time-varying reward functions.

In this paper, we propose a novel preference-based learning method, called discounted preference bandits (DPBs), to address time-varying preferences. First, our algorithm is inherently adaptive to time-varying environments because it updates its parameters based on a discounted, penalized likelihood. Second, we theoretically establish a no-regret guarantee for the proposed method under a condition on how often preferences change. In simulations, the proposed method outperforms existing methods [3,5,6,7] in terms of cosine similarity, simple regret, and cumulative regret in time-varying scenarios. Finally, simulation and real-world user studies confirm that the proposed method successfully adapts to time-varying scenarios, especially with respect to robot behavior adaptation and environmental changes.

4. Methods

We present a novel discounted preference bandit (DPB) to estimate time-varying preferences with as few queries as possible. First, we define a context vector as the difference between the feature vectors of two trajectories, i.e., $x = \phi(\xi_A) - \phi(\xi_B)$, which represents the information of a comparison. Let $\mathcal{X}$ be the set of possible context vectors obtained from all trajectory pairs. The query selection problem then becomes choosing a proper query vector in $\mathcal{X}$. Furthermore, based on $x$, the probabilistic model in (2) can be converted into the following logistic distribution:

$$P(y_t = 1 \mid x_t, \theta) = \mu(\theta^\top x_t) = \frac{1}{1 + \exp(-\theta^\top x_t)}, \qquad (3)$$

where $x_t$ indicates the context vector of the trajectories $\xi_A^t$ and $\xi_B^t$ that are compared at round $t$, and $y_t = 1$ indicates that the user prefers $\xi_A^t$. By introducing the context vector $x$, PBL can be reduced to an online learning problem. Algorithm 1 shows the online learning process of DPB for acquiring time-varying reward functions. In each round $t$, DPB selects a batch of queries (line 4). The human then observes these queries and provides a preference label for each query (line 5). Finally, the parameter is updated with the collected preference data (line 6).
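The reduction above can be illustrated with a minimal NumPy sketch, assuming that each trajectory is summarized by a feature vector $\phi(\xi)$ and that a label $y = 1$ means the first trajectory is preferred. The helper names `context_vector` and `preference_prob`, the feature values, and the parameter are hypothetical; they only show how a pair of trajectories becomes a single context vector scored by the logistic model.

```python
import numpy as np


def sigmoid(z):
    """Logistic function mu(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + np.exp(-z))


def context_vector(phi_a, phi_b):
    """Context vector of a query: feature difference phi(xi_A) - phi(xi_B)."""
    return np.asarray(phi_a, dtype=float) - np.asarray(phi_b, dtype=float)


def preference_prob(theta, x):
    """Probability that the user prefers trajectory A, i.e., mu(theta^T x)."""
    return float(sigmoid(np.dot(theta, x)))


# Hypothetical 4-D features and preference parameter (illustration only).
theta = np.array([0.5, -1.0, 0.2, 0.0])
phi_a = np.array([1.0, 0.0, 0.5, 0.3])
phi_b = np.array([0.0, 0.5, 0.5, 0.3])

x = context_vector(phi_a, phi_b)        # [1.0, -0.5, 0.0, 0.0]
p_ab = preference_prob(theta, x)        # mu(1.0)
p_ba = preference_prob(theta, -x)       # swapping the pair gives 1 - p_ab
print(round(p_ab, 3), round(p_ba, 3))
```

Swapping the two trajectories negates the context vector and turns $\mu(\theta^\top x)$ into $1 - \mu(\theta^\top x)$, so the pair $(x, -x)$ encodes the same comparison.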

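| Algorithm 1 Discounted Preference Bandits (DPBs) |
| --- |
| Require: discount factor $\gamma \in (0, 1)$, regularization coefficient $\lambda > 0$, batch size $b$, number of rounds $T$ |
| Ensure: estimated preference parameter $\hat\theta$ |
| 1: Initialize $t \leftarrow 1$, $\hat\theta_0 \leftarrow 0$, and $W_0 \leftarrow \lambda I$ |
| 2: while $t \le T$ do |
| 3: Set $\beta_t$ as in Theorem 1 |
| 4: Select the top-$b$ queries from (4) |
| 5: Demonstrate the queries and collect the preference labels |
| 6: Estimate $\hat\theta_t$ by solving (5) and set $t \leftarrow t + 1$ |
| 7: end while |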
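To trace Algorithm 1 end to end, the following is a minimal NumPy sketch built on several assumptions of our own: the candidate queries are a fixed random pool of context vectors, a simulated user answers according to the logistic model with a hidden parameter, every query in a batch is time-stamped with its round, the scale parameter is a constant `beta` instead of the value from Theorem 1 (so line 3 is skipped), and problem (5) is solved with damped Newton steps. All function names are hypothetical, and this is not the authors' implementation.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def penalized_nll(theta, X, y, w, lam):
    """Discounted logistic negative log-likelihood plus (lam / 2) * ||theta||^2."""
    z = X @ theta
    return np.sum(w * (np.logaddexp(0.0, z) - y * z)) + 0.5 * lam * theta @ theta


def fit_discounted_mle(X, y, w, lam, iters=30):
    """Damped Newton's method for the discounted, L2-penalized logistic problem."""
    theta = np.zeros(X.shape[1])
    for _ in range(iters):
        p = sigmoid(X @ theta)
        grad = X.T @ (w * (p - y)) + lam * theta
        hess = (X * (w * p * (1.0 - p))[:, None]).T @ X + lam * np.eye(X.shape[1])
        step = np.linalg.solve(hess, grad)
        f0, eta = penalized_nll(theta, X, y, w, lam), 1.0
        tol = 1e-10 * (1.0 + abs(f0))  # slack for floating-point round-off
        while penalized_nll(theta - eta * step, X, y, w, lam) > f0 + tol and eta > 1e-8:
            eta *= 0.5
        theta = theta - eta * step
    return theta


def dpb_sketch(pool, theta_star_at, T, b=5, gamma=0.95, lam=1.0, beta=1.0, seed=0):
    """Hypothetical rendering of Algorithm 1 with a simulated user answering queries."""
    rng = np.random.default_rng(seed)
    d = pool.shape[1]
    theta_hat = np.zeros(d)                    # line 1: initial estimate
    X_hist, y_hist, t_hist = [], [], []
    for t in range(1, T + 1):                  # line 2
        # Discounted design matrix W_{t-1} from the queries of rounds 1..t-1.
        W = lam * np.eye(d)
        if X_hist:
            H = np.array(X_hist)
            g = gamma ** (t - 1 - np.array(t_hist))
            W += (H * g[:, None]).T @ H
        width = np.sqrt(np.einsum("ij,jk,ik->i", pool, np.linalg.inv(W), pool))
        # Line 4: top-b queries by |theta_hat^T x| + beta * ||x||_{W^{-1}}.
        scores = np.abs(pool @ theta_hat) + beta * width
        batch = np.argsort(-scores)[:b]
        # Line 5: the simulated user labels each query using the current true parameter.
        theta_star = theta_star_at(t)
        for i in batch:
            X_hist.append(pool[i])
            y_hist.append(float(rng.random() < sigmoid(pool[i] @ theta_star)))
            t_hist.append(t)
        # Line 6: discounted estimate with weights gamma^(t - s).
        w = gamma ** (t - np.array(t_hist, dtype=float))
        theta_hat = fit_discounted_mle(np.array(X_hist), np.array(y_hist), w, lam)
    return theta_hat


rng = np.random.default_rng(1)
pool = rng.normal(size=(300, 3))               # hypothetical candidate context vectors
theta_1 = np.array([2.0, -1.0, 1.0])
theta_2 = -theta_1                             # abrupt reversal of the preference at round 61
theta_hat = dpb_sketch(pool, lambda t: theta_1 if t <= 60 else theta_2, T=120)
cos_new = float(theta_hat @ theta_2 / (np.linalg.norm(theta_hat) * np.linalg.norm(theta_2)))
print(round(cos_new, 3))
```

Because the weights $\gamma^{t-s}$ shrink old labels geometrically, the labels collected before the reversal contribute little by the end of the run, so the estimate ends up aligned with the new preference rather than the old one.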

4.1. Absolute Upper Confidence Bound

Suppose that the parameter $\hat\theta_{t-1}$ has been estimated from the data collected before round $t$; the estimation procedure is explained in Section 4.2. At round $t$, DPB chooses a query based on the following selection rule:

$$x_t = \arg\max_{x \in \mathcal{X}} \; |\hat\theta_{t-1}^\top x| + \beta_t \|x\|_{W_{t-1}^{-1}}, \qquad (4)$$

where $\|x\|_{A} = \sqrt{x^\top A x}$, $W_{t-1} = \sum_{s=1}^{t-1} \gamma^{t-1-s} x_s x_s^\top + \lambda I$, $x_s$ is the past query vector at round $s$ for $s = 1, \dots, t-1$, $\lambda$ is a regularization coefficient, $I$ is an identity matrix, and $\beta_t$ is a scale parameter that controls the relative importance of the first and second terms.

The first term is the absolute difference between the estimated rewards of the two trajectories. Since a context vector is constructed from an ordered pair of trajectories, $x$ and $-x$ contain the same information, i.e., $x = \phi(\xi_A) - \phi(\xi_B)$ and $-x = \phi(\xi_B) - \phi(\xi_A)$. Hence, computing the reward via the absolute value makes selecting $x$ equivalent to selecting $-x$. Based on this trick, the query selection rule (4) employs the upper confidence bound (UCB) [20]. The second term in (4) is a confidence bound that quantifies the uncertainty of the first term, $|\hat\theta_{t-1}^\top x|$. In particular, $W_{t-1}$, called the design matrix, represents the discounted empirical covariance of the past context vectors, and $\gamma \in (0, 1)$ in $W_{t-1}$ is a discount factor that down-weights past data. Intuitively, as $t$ grows, additional query vectors are added to $W_{t-1}$, which increases its minimum eigenvalue and thus decreases $\|x\|_{W_{t-1}^{-1}}$. Consequently, the confidence bound eventually decreases.

By leveraging UCB, our query selection method simultaneously considers two key factors. The first factor evaluates how much the chosen query contributes to learning the relevant parameters. The second factor considers how much the user will like the query when it is presented as a demonstration. While conventional approaches focus on the first factor [4,5,6,7], the proposed approach also incorporates the second factor through the UCB method. Considering the quality of the queries experienced by the user [5] is vital because it may help build user familiarity with and trust in the robot; if users consistently encounter undesirable queries, they may come to mistrust the robot's behavior. Therefore, our approach balances these two factors, and the trade-off between them is adjusted via the scale parameter $\beta_t$, which is examined further in Section 5.
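To make rule (4) and the trade-off controlled by the scale parameter concrete, here is a small NumPy sketch. It assumes the discounted design matrix $W_{t-1} = \sum_{s=1}^{t-1}\gamma^{t-1-s} x_s x_s^\top + \lambda I$ with past queries ordered from oldest to newest, and it uses a fixed constant `beta` in place of the value from Theorem 1. The function names, candidate vectors, and query history are hypothetical.

```python
import numpy as np


def design_matrix(past_queries, gamma, lam):
    """W_{t-1} = sum_s gamma^(t-1-s) x_s x_s^T + lam * I, with past_queries ordered oldest first."""
    past = np.asarray(past_queries, dtype=float)
    n, d = past.shape
    weights = gamma ** np.arange(n - 1, -1, -1)   # the most recent query gets gamma^0 = 1
    return (past * weights[:, None]).T @ past + lam * np.eye(d)


def ucb_scores(candidates, theta_hat, W, beta):
    """Return |theta_hat^T x| + beta * ||x||_{W^{-1}} and the width ||x||_{W^{-1}} per candidate."""
    C = np.asarray(candidates, dtype=float)
    width = np.sqrt(np.einsum("ij,jk,ik->i", C, np.linalg.inv(W), C))
    return np.abs(C @ theta_hat) + beta * width, width


theta_hat = np.array([1.0, 0.0])                          # hypothetical current estimate
candidates = np.array([[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0]])
history = [[1.0, 0.0]] * 10                              # direction e_1 queried ten times
W = design_matrix(history, gamma=0.9, lam=1.0)

scores, width = ucb_scores(candidates, theta_hat, W, beta=1.0)
scores_big_beta, _ = ucb_scores(candidates, theta_hat, W, beta=3.0)
print(scores.round(3), scores_big_beta.round(3))
```

In this toy setting, $x$ and $-x$ receive identical scores because of the absolute value, and the repeatedly queried direction has a smaller width than the unexplored one. With `beta=1.0` the estimated-reward term dominates and the well-explored direction wins, whereas with `beta=3.0` the unexplored direction $[0, 1]$ is selected, which mirrors how a larger scale parameter shifts the selection toward uncertain queries.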

While the selection rule (4) chooses a single query, selecting a batch of queries is more efficient in practice: in PBL, long durations for query generation and parameter updates can burden users, particularly less patient ones. Thus, we adopt a simple batched version that selects the top-$b$ queries according to the UCB score in (4), where $b$ is the number of queries in a single batch. To approximate the solution of (4), we prepare a finite set of trajectory pairs by randomly generating trajectories and randomly pairing two of them. The feature vectors derived from this set are used to compute (4) and to select the top-$b$ queries from the finite set.
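The batched approximation can be sketched as follows: a hypothetical pool is formed by pairing randomly drawn trajectories, and the $b$ candidates with the highest UCB scores are kept. The trajectory features here are random placeholders (in our experiments they come from the environments described in Section 6), and the pool size `n_pairs`, the identity design matrix, and the function names are assumptions made for illustration.

```python
import numpy as np


def build_query_pool(features, n_pairs, rng):
    """Sample random trajectory pairs and return their context vectors phi_A - phi_B."""
    K = features.shape[0]
    idx_a = rng.integers(0, K, size=n_pairs)
    # Shift by 1..K-1 (mod K) so that the two trajectories of a pair always differ.
    idx_b = (idx_a + rng.integers(1, K, size=n_pairs)) % K
    return features[idx_a] - features[idx_b], idx_a, idx_b


def select_top_b(pool, theta_hat, W, beta, b):
    """Indices of the b pool entries with the largest UCB score, best first."""
    width = np.sqrt(np.einsum("ij,jk,ik->i", pool, np.linalg.inv(W), pool))
    scores = np.abs(pool @ theta_hat) + beta * width
    top = np.argpartition(-scores, b - 1)[:b]       # unordered top-b without a full sort
    return top[np.argsort(-scores[top])], scores


rng = np.random.default_rng(0)
features = rng.uniform(-1.0, 1.0, size=(200, 4))    # hypothetical features of K = 200 trajectories
pool, idx_a, idx_b = build_query_pool(features, n_pairs=1000, rng=rng)
theta_hat = np.array([0.3, -0.2, 0.5, 0.1])         # hypothetical current estimate
batch, scores = select_top_b(pool, theta_hat, np.eye(4), beta=1.0, b=10)
print(batch)
```

Using `argpartition` before sorting keeps the selection linear in the pool size apart from sorting the $b$ winners, which matters when the pool is built from many thousands of trajectories.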

4.2. Discounted Parameter Estimation

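After selecting queries and receiving their labels, DPB estimates the parameter of the user preference, taking changes over time into account. Let $\mu_s(\theta)$ denote $\mu(x_s^\top \theta)$. Suppose $t$ data points are given, i.e., $\{(x_s, y_s)\}_{s=1}^{t}$. We estimate $\hat\theta_t$ using the discounted maximum log-likelihood scheme [12] as follows:

$$\hat\theta_t = \arg\min_{\theta} \; -\sum_{s=1}^{t} \gamma^{t-s} \Big[ y_s \log \mu_s(\theta) + (1 - y_s) \log\big(1 - \mu_s(\theta)\big) \Big] + \frac{\lambda}{2}\|\theta\|_2^2, \qquad (5)$$

where $\gamma \in (0, 1)$ is a discount factor. Because the weight $\gamma^{t-s}$ decays geometrically with the age of each data point, the discounted negative log-likelihood (5) emphasizes recent feedback, so that $\hat\theta_t$ tracks the most recent optimal parameter $\theta_t^*$, which changes over time. Note that the minimizer of (5) satisfies

$$\sum_{s=1}^{t} \gamma^{t-s} \big(\mu_s(\hat\theta_t) - y_s\big) x_s + \lambda \hat\theta_t = 0,$$

which makes the gradient of (5) equal to zero.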
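A minimal sketch of solving (5) with damped Newton steps follows, assuming binary labels $y_s \in \{0, 1\}$, the penalty $\frac{\lambda}{2}\|\theta\|_2^2$, and data rows ordered from oldest to newest so that row $s$ receives weight $\gamma^{t-s}$. The function names, the synthetic data with a preference switch halfway through, and the choice of Newton's method as the solver are our own assumptions; any convex solver could be used. The last line evaluates the gradient of (5) at the returned estimate, which should be numerically zero at the minimizer.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def discounted_nll(theta, X, y, w, lam):
    """sum_s w_s [log(1 + exp(x_s^T theta)) - y_s x_s^T theta] + (lam / 2) ||theta||^2."""
    z = X @ theta
    return np.sum(w * (np.logaddexp(0.0, z) - y * z)) + 0.5 * lam * theta @ theta


def discounted_gradient(theta, X, y, w, lam):
    """sum_s w_s (mu(x_s^T theta) - y_s) x_s + lam * theta."""
    return X.T @ (w * (sigmoid(X @ theta) - y)) + lam * theta


def fit_discounted_mle(X, y, gamma, lam=1.0, iters=50):
    """Damped Newton's method; rows of X are ordered oldest first."""
    n, d = X.shape
    w = gamma ** np.arange(n - 1, -1, -1, dtype=float)   # newest sample has weight 1
    theta = np.zeros(d)
    for _ in range(iters):
        p = sigmoid(X @ theta)
        grad = discounted_gradient(theta, X, y, w, lam)
        hess = (X * (w * p * (1.0 - p))[:, None]).T @ X + lam * np.eye(d)
        step = np.linalg.solve(hess, grad)
        f0, eta = discounted_nll(theta, X, y, w, lam), 1.0
        tol = 1e-10 * (1.0 + abs(f0))   # slack for floating-point round-off
        while discounted_nll(theta - eta * step, X, y, w, lam) > f0 + tol and eta > 1e-8:
            eta *= 0.5
        theta = theta - eta * step
    return theta, w


# Hypothetical data: the user's preference switches halfway through 2000 labels.
rng = np.random.default_rng(0)
X = rng.normal(size=(2000, 3))
theta_old, theta_new = np.array([1.0, 1.0, 0.0]), np.array([-1.0, 0.0, 1.0])
truth = np.where(np.arange(2000)[:, None] < 1000, theta_old, theta_new)
y = (rng.random(2000) < sigmoid(np.sum(X * truth, axis=1))).astype(float)
theta_hat, w = fit_discounted_mle(X, y, gamma=0.995)
grad_norm = np.linalg.norm(discounted_gradient(theta_hat, X, y, w, 1.0))
```

With $\gamma = 0.995$ the total weight of the first 1000 labels is tiny compared with that of the last 1000, so the estimate should align more closely with `theta_new` than with `theta_old`.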

5. Theoretical Analysis

In this section, we analyze the cumulative regret of the proposed method. The cumulative regret is defined as

$$R_T = \sum_{t=1}^{T} \Big[ \mu\big(\theta_t^{*\top} x_t^*\big) - \mu\big(\theta_t^{*\top} x_t\big) \Big],$$

where $\mu$ is the logistic function, i.e., $\mu(z) = 1/(1 + e^{-z})$, $\theta_t^*$ is the true user parameter at round $t$, $x_t$ is the query selected at round $t$, and $T$ is the number of iterations. $x_t^*$ indicates the optimal query that contains the optimal trajectory, such that $x_t^* = \arg\max_{x \in \mathcal{X}} \theta_t^{*\top} x$. The cumulative regret is widely employed in bandit settings to assess the efficiency of exploration methods [13]. We then prove that our method achieves sub-linear regret under a mild assumption on how often $\theta_t^*$ changes. In other words, our theoretical results show that the proposed method efficiently adapts to time-varying parameters. First, we introduce the assumptions.

Assumption 1. For all trajectories $\xi$, rounds $t \in \{1, \dots, T\}$, and the corresponding true parameters $\theta_t^*$, there exist constants $D$ and $S$ such that $\|\phi(\xi)\|_2 \le D$ and $\|\theta_t^*\|_2 \le S$ hold.

Assumption 2. Let $\Gamma_T$ be the number of changing points. Assume that $\theta_t^*$ changes at most $\Gamma_T$ times during $T$ rounds.

Assumption 1 states that both the feature vectors and the parameters are bounded. Assumption 2 states that the user parameter changes discretely, at most $\Gamma_T$ times. Note that a change at every round corresponds to the most volatile user, whereas $\Gamma_T = 0$ corresponds to a stationary user. Furthermore, we define a lower bound on the derivative of the logistic function.

Definition 1. For the logistic function $\mu$, there exists a positive constant $c_\mu$ such that $\dot\mu(\theta^\top x) \ge c_\mu$ for all admissible $\theta$ and $x$. Note that $c_\mu$ always exists for bounded $\theta$ and $x$.
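Definition 1 can be made concrete with a short sketch. Since $\dot\mu(z) = \mu(z)(1 - \mu(z))$ is even and decreasing in $|z|$, any bound $|\theta^\top x| \le z_{\max}$ gives $c_\mu = \dot\mu(z_{\max})$; for example, if $\|\theta\|_2 \le S$ and $\|x\|_2 \le B_x$, the Cauchy–Schwarz inequality yields $z_{\max} = S B_x$. The bound $B_x$ (`x_bound`) and all numerical values below are hypothetical.

```python
import numpy as np


def mu(z):
    return 1.0 / (1.0 + np.exp(-z))


def mu_dot(z):
    """Derivative of the logistic function, mu(z) * (1 - mu(z))."""
    return mu(z) * (1.0 - mu(z))


S, x_bound = 2.0, 1.5          # hypothetical bounds: ||theta||_2 <= S, ||x||_2 <= x_bound
z_max = S * x_bound            # Cauchy-Schwarz: |theta^T x| <= ||theta||_2 * ||x||_2
c_mu = mu_dot(z_max)           # mu_dot is even and decreasing in |z|, so this is its minimum

grid = np.linspace(-z_max, z_max, 10001)
print(float(c_mu), float(mu_dot(grid).min()))
```

The sketch also shows why $c_\mu$ can become small: larger parameter or feature bounds push $z_{\max}$ into the flat tails of the logistic function.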

Now, the set of time indices used for analysis is defined.

Definition 2. For fixed γ, let us define and define an index set as .

For $t$ in this index set, we can find an interval in which the true parameter does not change; in other words, the parameter stays fixed for a window of rounds determined by $\gamma$. We then prove that the proposed method can adapt within such a window and, hence, that the proposed method is no-regret. We first derive the confidence bound of the estimated parameter as follows.

Theorem 1. Suppose that Assumptions 1–2 hold, and consider the gap between the estimate $\hat\theta_t$ and the true parameter $\theta_t^*$. For all $t$ in the index set of Definition 2, this gap is bounded by a confidence radius with probability at least $1 - \delta$. This radius is used to compute the confidence bound of the estimated parameter, i.e., the scale parameter $\beta_t$ in (4). By using Theorem 1, we can derive the regret bound of the proposed DPB. The detailed proof of Theorem 1 can be found in Appendix A.

Theorem 2. Suppose that Assumptions 1–2 hold; then, for fixed $\gamma$, with probability at least $1 - \delta$, the cumulative regret of DPB admits an upper bound that depends on $T$, $\gamma$, and the number of changing points.

If the cumulative regret is sub-linear with respect to $T$, i.e., its ratio to $T$ vanishes as $T \to \infty$, the proposed DPB is called no-regret. However, the sub-linearity of DPB depends on the number of changing points. In particular, if the number of changing points grows sufficiently slowly with $T$, DPB eventually converges to the time-varying user preferences. This result also reveals a theoretical limitation of the proposed method: it cannot keep up with the time-varying tendency of the user parameter if the number of changing points grows too fast. This regret bound is the first result in preference-based learning for time-varying settings.

6. Experimental Settings

Simulation Setup. We validate our work in three simulation environments: Driver [1], Tosser [21], and Avoiding. The Driver environment aims to drive while being aware of the other vehicle, and the Tosser environment learns to put a ball into a certain basket with diverse trajectories. The features are identical to those in [5]: distance to the closest lane, speed, heading angle, and distance to the other vehicles for Driver; and maximum horizontal range, maximum altitude, the sum of angular displacements at each timestep, and final distance to the closest basket for Tosser. We newly created the Avoiding environment, in which the robot moves an object over a laptop to a final target pose, similarly to [22]. Four-dimensional hand-coded features are used in Avoiding: the height of the end-effector from the table, the distance between the end-effector and the laptop, the moving distance, and the distance between the end-effector and the user. Optimal parameters were randomly generated for each seed.

Dataset. To discretize the trajectory space, a query set is predefined in Driver and Tosser by sampling K trajectories with uniformly random controls. In Avoiding, RRT* is used to create trajectories after randomly sampling a midpoint through which the trajectory passes between the fixed start and target points. We set K to 20,000 for Driver and Tosser, and to 5000 for Avoiding.

Evaluation Metrics. In our experiments, we use three metrics: the cosine similarity $m$, the simple regret $r$, and the cumulative regret $R_T$. First, the cosine similarity $m = \hat\theta^\top \theta^* / (\|\hat\theta\|_2 \|\theta^*\|_2)$ is the alignment metric used in most existing research [4,5,6]. The simple regret is defined as $r = \theta^{*\top}\phi(\xi^*) - \theta^{*\top}\phi(\hat\xi)$, where $\xi^*$ is the optimal trajectory under the true parameter and $\hat\xi$ is the optimal trajectory under the learned parameter, i.e., $\hat\xi = \arg\max_{\xi} \hat\theta^\top \phi(\xi)$. The simple regret measures the quality of the optimized trajectory, with smaller values indicating better performance. The cumulative regret $R_T$, defined in Section 5, quantifies how much reward is lost through exploration. Minimizing the cumulative regret is often the objective of the bandit framework.
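The three metrics can be sketched as follows. We assume finite sets throughout: `traj_features` holds the feature vectors of the candidate trajectories, `pool` holds the candidate context vectors, the simple regret is the gap in true reward $\theta^{*\top}\phi(\xi)$ between the trajectory that is optimal under $\theta^*$ and the one that is optimal under $\hat\theta$, and the cumulative regret uses the logistic per-round regret of Section 5. These readings, the function names, and the toy numbers are illustrative assumptions.

```python
import numpy as np


def mu(z):
    return 1.0 / (1.0 + np.exp(-z))


def cosine_similarity(theta_hat, theta_star):
    """Alignment between the estimated and the true parameter."""
    return float(theta_hat @ theta_star / (np.linalg.norm(theta_hat) * np.linalg.norm(theta_star)))


def simple_regret(theta_hat, theta_star, traj_features):
    """True reward of the truly optimal trajectory minus that of the trajectory chosen by theta_hat."""
    true_rewards = traj_features @ theta_star
    chosen = int(np.argmax(traj_features @ theta_hat))
    return float(true_rewards.max() - true_rewards[chosen])


def cumulative_regret(theta_stars, chosen_x, pool):
    """Sum over rounds of mu(theta_t*^T x_t*) - mu(theta_t*^T x_t), x_t* being the best pool entry."""
    total = 0.0
    for theta_t, x_t in zip(theta_stars, chosen_x):
        total += mu(pool @ theta_t).max() - mu(x_t @ theta_t)
    return float(total)


theta_star = np.array([1.0, 0.0])
theta_hat = np.array([1.0, 1.0])
traj_features = np.array([[1.0, 0.0], [0.6, 0.6], [0.0, 1.0]])
pool = np.array([[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0]])
chosen_x = np.array([[1.0, 0.0], [0.0, 1.0]])     # queries picked in two rounds

m = cosine_similarity(theta_hat, theta_star)                      # 1 / sqrt(2)
r = simple_regret(theta_hat, theta_star, traj_features)          # 1.0 - 0.6
R = cumulative_regret([theta_star, theta_star], chosen_x, pool)  # 0 + (mu(1) - mu(0))
```

In this toy case the learned parameter prefers the middle trajectory, which costs 0.4 in true reward, and only the second round's query incurs regret.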

Baselines. We compare DPB with methods that use different query selection criteria: batch active learning [5,7], information gain [6], and maximum regret [3]. For a fair comparison, all baseline algorithms were adapted into batch versions that select the top-$b$ queries.

7. Simulation Results

We evaluated DPB in three preference-change scenarios: smooth preference changes in Section 7.1, abrupt preference changes in Section 7.2, and static preferences in Section 7.3. The experiments in this section were conducted using synthetic data; results from real-world scenarios involving users and physical robots are presented in Section 8.

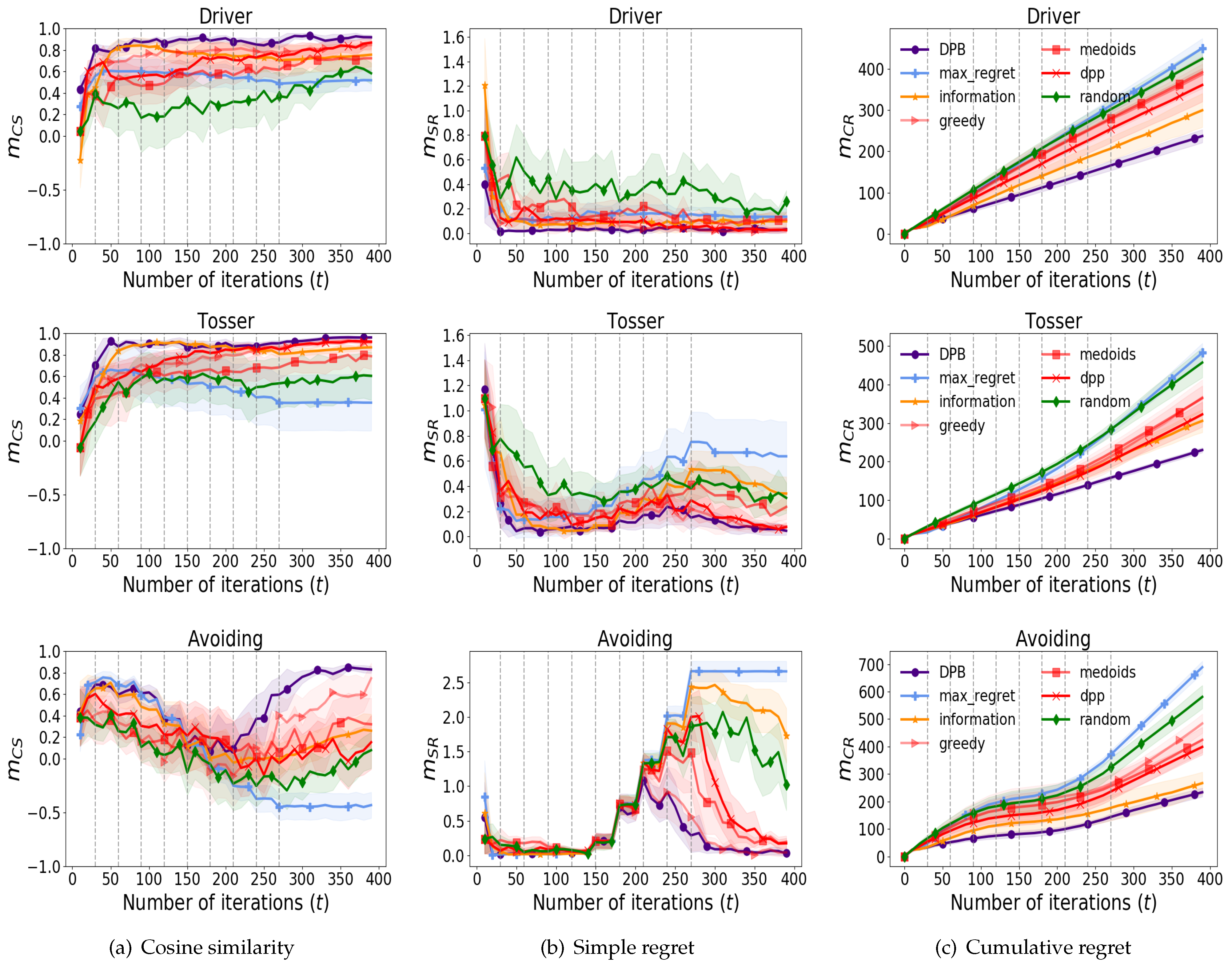

7.1. Performance on Smooth Preference Changes

To simulate smooth preference changes, we randomly select two parameters, a start parameter and an end parameter, within a proper range and linearly interpolate between them at 10 evenly spaced points. The true parameter is changed every 30 rounds, resulting in a total of nine changes. After the end parameter is reached, 120 additional rounds are executed; hence, 390 rounds are conducted in total.
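The smooth-change protocol can be reproduced with the following sketch, which draws the two parameters uniformly from $[-1, 1]^4$ as a stand-in for the unspecified proper range; the function name and the seed are hypothetical. It builds the per-round true parameter, confirms the 390-round horizon with nine changes, and shows that linear interpolation makes every change equally large.

```python
import numpy as np


def smooth_schedule(theta_start, theta_end, n_points=10, hold=30, tail=120):
    """Per-round true parameters: n_points linear interpolants, each held for `hold`
    rounds, except the final one (theta_end), which is held for `tail` rounds."""
    alphas = np.linspace(0.0, 1.0, n_points)
    points = [(1.0 - a) * theta_start + a * theta_end for a in alphas]
    rounds = []
    for k, p in enumerate(points):
        rounds.extend([p] * (tail if k == n_points - 1 else hold))
    return np.array(rounds)


rng = np.random.default_rng(0)
theta_start, theta_end = rng.uniform(-1.0, 1.0, size=(2, 4))
schedule = smooth_schedule(theta_start, theta_end)

# Rounds (0-indexed) at which the true parameter differs from the previous round.
change_rows = np.flatnonzero(np.any(schedule[1:] != schedule[:-1], axis=1)) + 1
deviations = np.linalg.norm(schedule[change_rows] - schedule[change_rows - 1], axis=1)
print(schedule.shape, len(change_rows), deviations.round(3))
```

Every entry of `deviations` equals one ninth of the distance between the start and end parameters, so how abrupt the changes are in this protocol is governed entirely by how far apart the two sampled parameters are.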

Each row in Figure 2 shows the performance of each algorithm in Driver, Tosser, and Avoiding, respectively. As shown in Figure 2c, DPB clearly outperforms the baselines in terms of the cumulative regret, since the baselines do not consider the quality of the presented queries when selecting them. Lower cumulative regret indicates that the proposed query selection rule effectively balances the trade-off between the user's preference for the presented trajectories and their associated uncertainties, as discussed in Section 4.1. This balance enables the generation of high-quality queries, which are well suited for eliciting meaningful user feedback in real-world scenarios with smooth preference changes. Regarding the cosine similarity in Figure 2a, DPB adapts to smooth parameter changes faster than the other algorithms in terms of parameter estimation. We presume that this superior performance derives from discounting past data. Finally, DPB also outperforms the baselines with respect to the simple regret, as shown in Figure 2b. Thus, in scenarios with smoothly varying preferences, DPB generates well-suited queries, which leads to better estimation of the reward parameters and the optimal trajectories than the baseline approaches.

Interestingly, the baseline algorithms adapt poorly in Avoiding. We believe that this effect is related to how abruptly the parameters change. Because the optimal parameters are linearly interpolated, the deviation between consecutive parameters is the same at every change. We computed the average deviation over the seeds for Driver, Tosser, and Avoiding; in Avoiding, the optimal parameter changes comparatively drastically, resulting in poor performance for the baseline algorithms. Furthermore, the limited adaptability of the maximum regret algorithm in time-varying scenarios might stem from its query selection rule over the solution space. This algorithm tends to converge towards local optima because its greedy selection restricts the trajectories to be compared: it selects the two trajectories with the highest estimated regret based on parameters obtained from Markov chain Monte Carlo. Nevertheless, the results of all simulations support that DPB adapts to smooth preference changes faster than the baselines.

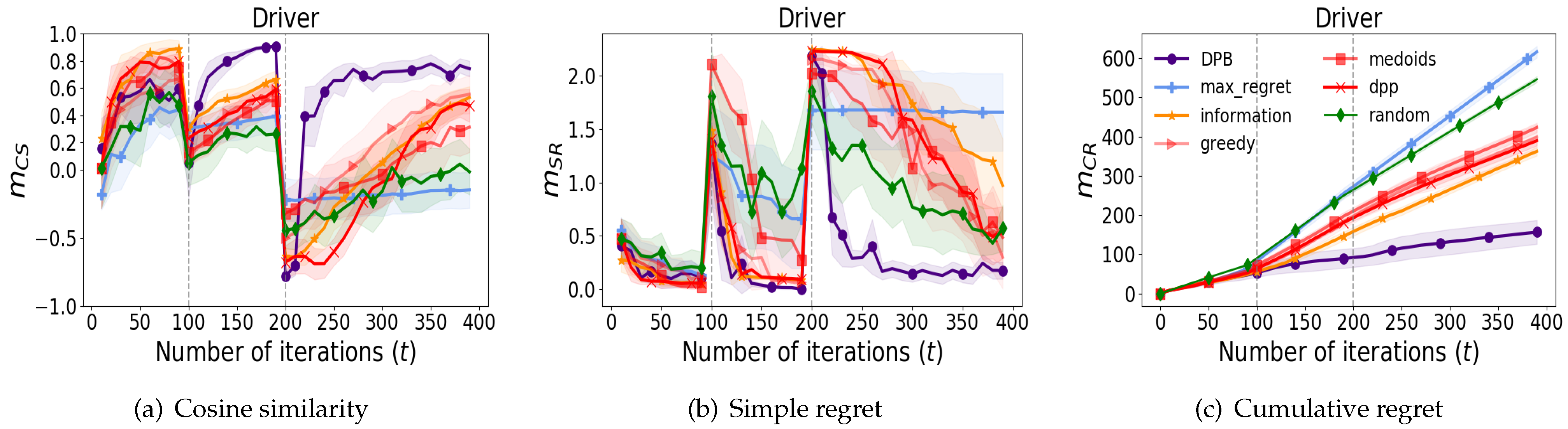

7.2. Performance on Abrupt Preference Changes

To analyze more realistic changes in human preferences, which are unlikely to be smooth, we designed simulations with abrupt preference changes. For this setting, we conducted experiments in Driver with two significant changes of the true parameter, at rounds 100 and 200.
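An abrupt-change schedule of this kind can be sketched as a piecewise-constant sequence of true parameters. The total horizon of 300 rounds, the parameter range $[-1, 1]^4$, and the convention that a change at round 100 takes effect from round 101 are assumptions for illustration, as is the function name.

```python
import numpy as np


def abrupt_schedule(params, change_rounds, T):
    """Piecewise-constant true parameters for rounds 1..T.

    params[0] is active up to the first change round; params[k] is active after the k-th one."""
    t = np.arange(1, T + 1)
    # The number of change rounds strictly before t selects the active segment.
    segment = np.searchsorted(np.asarray(change_rounds), t, side="left")
    return np.asarray(params)[segment]


rng = np.random.default_rng(0)
params = rng.uniform(-1.0, 1.0, size=(3, 4))   # hypothetical parameters before, between, after changes
schedule = abrupt_schedule(params, change_rounds=[100, 200], T=300)  # T = 300 is an assumption
n_changes = int(np.sum(np.any(schedule[1:] != schedule[:-1], axis=1)))
print(schedule.shape, n_changes)
```

Unlike the smooth protocol, the whole parameter jumps at once, so an estimator must discard most of its past evidence within a short window after each change.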

Figure 3c again shows that DPB achieves sub-linear growth of the cumulative regret. While the other algorithms do not adapt well to sudden changes in preference, Figure 3a,b show that DPB adapts to abrupt changes. A comparison of Figure 2 and Figure 3 reveals that DPB consistently outperforms the baseline approaches, particularly in scenarios involving abrupt and realistic changes in human preferences. These findings underscore the effectiveness of DPB in dynamic preference scenarios.

In the case of the max regret algorithm, the cosine similarity and the simple regret fail to adapt to the changing parameters; they remain nearly unchanged even when user preferences shift. This issue aligns with the findings from the Avoiding task illustrated in Figure 2. We attribute this limitation to the inherent characteristics of the max regret algorithm, which has difficulty adjusting to abrupt changes in preferences and tends to converge to local optima.

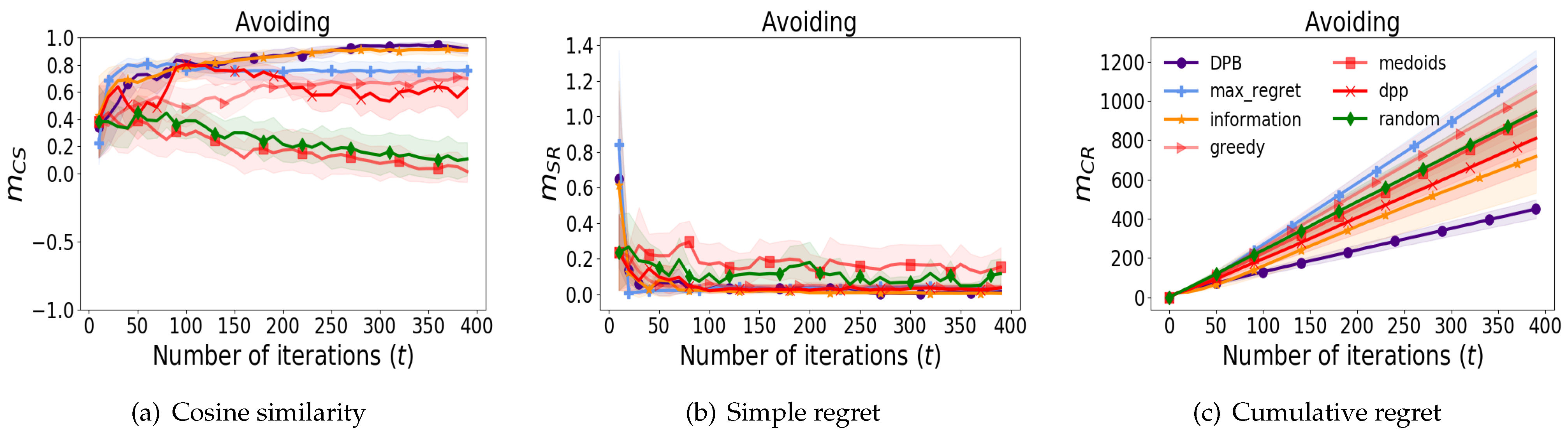

7.3. Sanity Check on Static Preferences

To verify that the baseline algorithms perform as expected in their original setting, Figure 4 presents the results of Avoiding in the conventional scenario with static human preferences, i.e., the true parameter does not change over time. We chose the Avoiding environment because the baselines performed relatively poorly there in Section 7.1 and Section 7.2. The optimal parameter is the same as in the simulation experiments in Section 7.1. In Figure 4a,b, DPB converges faster than the other baselines, except for the information gain method, which shows a similar convergence speed. These results indicate that DPB performs strongly even with conventional static preferences: it effectively estimates the preference parameters while identifying optimal trajectories that align with user preferences. Its adaptability to both time-varying and static preferences highlights the practical capability of DPB to accurately estimate preference parameters and generate user-preferred trajectories, supporting its applicability in diverse real-world scenarios. Moreover, Figure 4c shows that DPB also effectively minimizes the cumulative regret, i.e., the generated queries are of high quality in the static setting as well. Notably, the maximum regret algorithm shows reasonable performance in the static setting of Figure 4, unlike in the time-varying settings of Figure 2 and Figure 3.

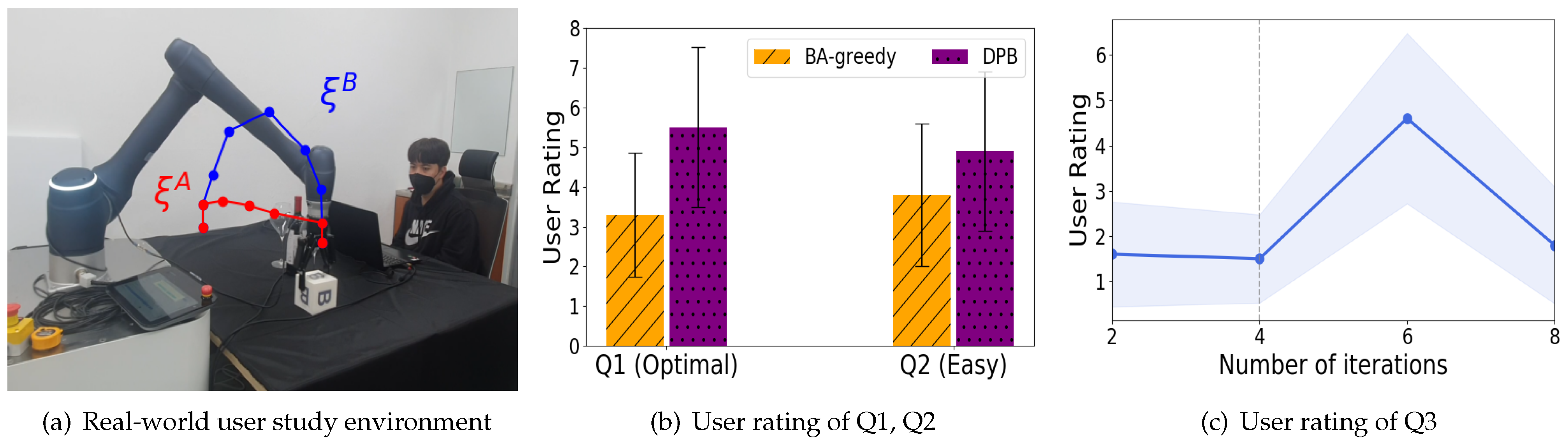

9. Conclusions

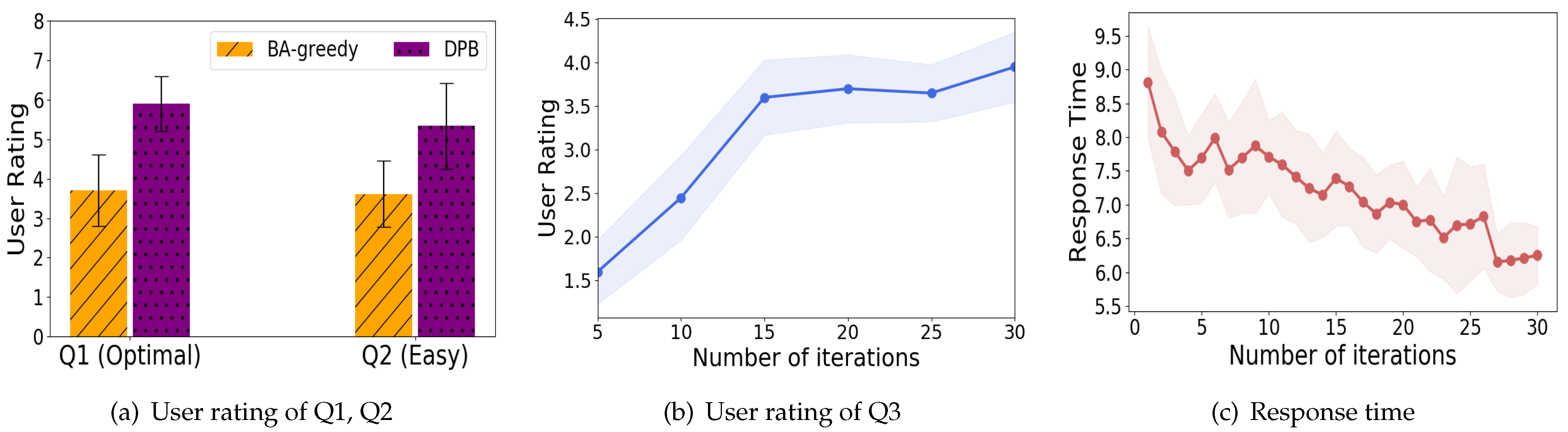

Our proposed algorithm introduces a novel approach to address time-varying preferences using a discounted likelihood. Our theoretical analysis establishes that DPB achieves sub-linear cumulative regret when the number of preference changes grows sufficiently slowly. Experimental results highlight the adaptiveness of our framework compared to previous methods in handling time-varying user preferences. In particular, DPB effectively minimizes the cumulative regret, whereas the other approaches struggle in this regard. User studies further validate the competitiveness of DPB in time-varying environments with environmental changes and robot behavior adaptation over repeated interactions.

In robotics, addressing the time-varying preferences of users is crucial, as their expectations can shift depending on the context or environment. Robotic systems are required to perform a wide range of actions across diverse scenarios, adapting their behavior to evolving human needs. For instance, in autonomous driving systems, the DPB method could enable vehicles to adjust their driving styles, such as acceleration, braking, or lane-changing behavior. The vehicle could adopt a smoother, more conservative style for passengers who prefer it, while passengers who prioritize efficiency could benefit from optimized travel times. Such continuous learning and adaptation enhance the personalization and comfort of autonomous systems. It would be interesting to consider additional factors, such as emotions or social contexts, that influence preference changes. Exploring these aspects and developing a unified model capable of perceiving user states and learning preferences across diverse users are promising directions for future research.