Research Status and Prospect of the Key Technologies for Environment Perception of Intelligent Excavators

Abstract

1. Introduction

- Low construction accuracy, low work efficiency, and high energy consumption. Conventional excavators depend on the operator, so operating accuracy cannot be guaranteed. A low bucket fill rate reduces excavation efficiency and energy utilization, which raises energy consumption.

- Severe challenges brought by special working environments. In underwater operation, space development, emergency rescue, and similar tasks, conventional excavators are constrained by the uncertainty and hazards of the working environment, which makes normal operation difficult.

- Frequent failures and poor safety. Some operators habitually dig deep with brute force, which subjects the bucket to strong impact loads. The whole machine then vibrates violently, and accidents such as broken shafts and pins or overturning of the lifting arm become likely. During loading, collisions occur easily because of human error or limited visibility.

1.1. Literature Review Methodology

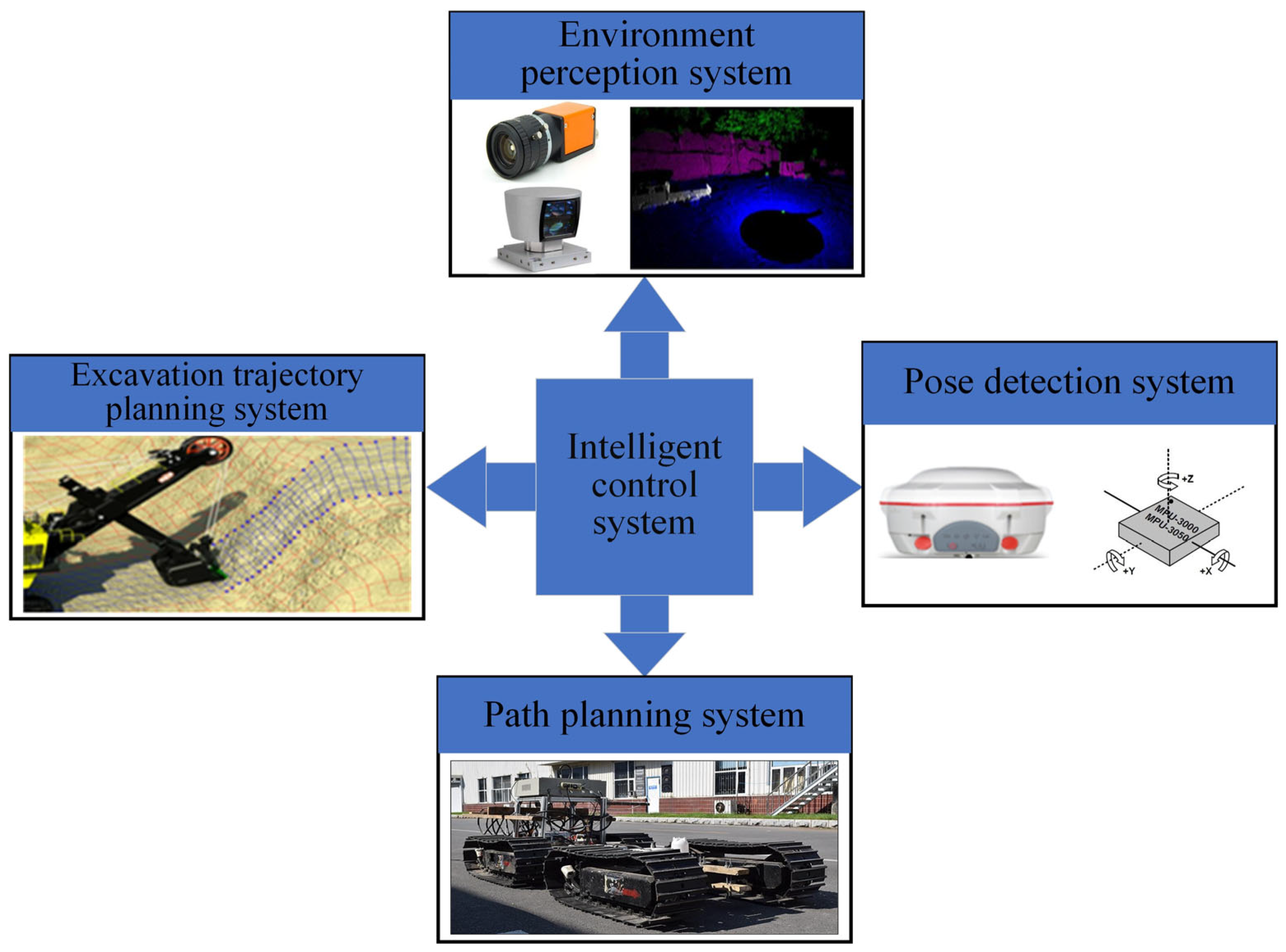

1.2. Research Status of Intelligent Excavators

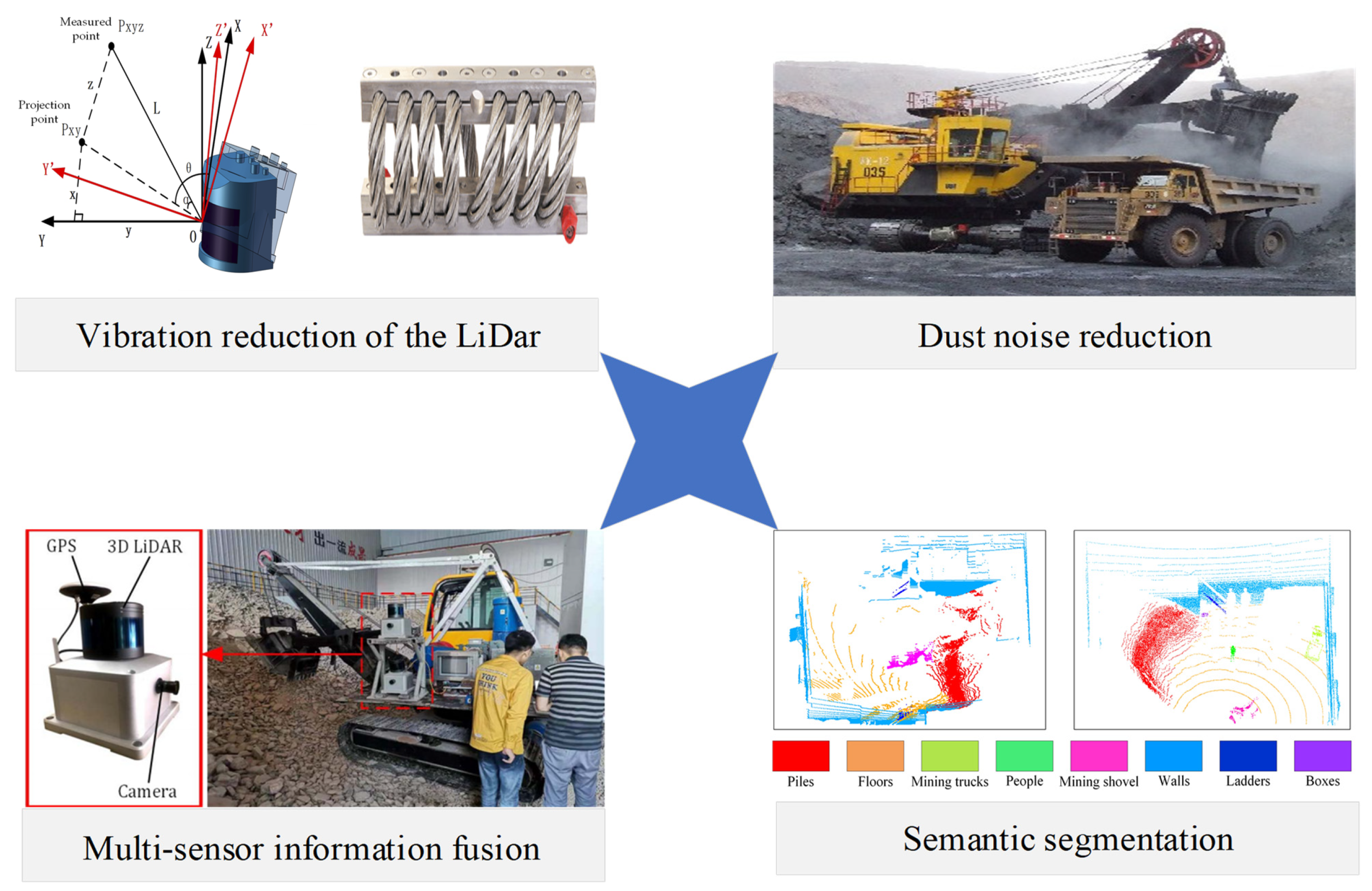

- The operation of intelligent excavators is often accompanied by strong vibration, which causes linear displacement and attitude-angle changes of the light detection and ranging (LiDAR) scanner and thus degrades its detection accuracy. Strong vibration also causes fatigue and wear of the precision components inside the scanner, shortening its service life and potentially damaging the equipment. It is therefore necessary to study the vibration characteristics of the excavator, design a suitable vibration-damping platform, measure the vibration-induced displacement and attitude of the LiDAR scanner, and correct the data through registration, filtering, and other algorithms to eliminate, as far as possible, the effect of vibration on measurement accuracy.

- Intelligent excavators produce a large amount of dust during excavation and loading. When the airborne dust concentration reaches a certain level, it seriously degrades the accuracy with which the LiDAR scanner measures the working environment and the working target, and in severe cases the scanner is blocked entirely. Studying the acquisition and noise reduction of three-dimensional point cloud data of the excavator operating environment under dusty conditions, so as to reduce the influence of dust on LiDAR measurement data, is therefore of great significance for improving the environmental perception ability of intelligent excavators.

- The excavation targets and loading vehicles that intelligent excavators encounter during construction are often structurally complex, with diverse shapes, colors, and textures, and outdoor operation is susceptible to lighting and weather. These factors seriously degrade the environmental perception of intelligent excavators. In environmental perception tasks, every single sensor has shortcomings. LiDAR obtains accurate position information, but the collected point cloud data lack color and texture and are relatively sparse, which limits the ability to identify fine target features in complex operating environments. A camera acquires rich color and texture information at relatively high resolution, but as a passive sensor it is less robust, is strongly affected by the environment, and cannot directly obtain depth information. Compared with a single sensor, multi-sensor information fusion obtains more comprehensive, accurate, and robust information about the operating environment, and it therefore plays a crucial role in improving the environmental perception ability of intelligent excavators under complex working conditions.

- The segmentation and recognition of the three-dimensional (3D) operation scene of the intelligent excavator (as shown in Figure 4) is the basis for excavator intelligence. Because a 3D point cloud accurately records the position and geometry of the measured target, it has advantages that two-dimensional (2D) images cannot match. This information underpins tasks such as path planning and trajectory planning, so perception of the operating scene relies mainly on 3D point clouds. Compared with 2D images, however, the data structure of a 3D point cloud is more complex and unordered, and the operation scene of an intelligent excavator is complex and dynamic, which makes segmentation and recognition very challenging. Research on 3D operation scene segmentation and recognition is therefore of great significance to realizing excavator intelligence.
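
As a hedged illustration of the LiDAR and camera fusion discussed in the list above, the following minimal sketch projects LiDAR points into a camera image with an ideal pinhole model and attaches the pixel color to each point. The function names, the extrinsic parameters `rotation` and `translation` (LiDAR frame to camera frame), and the intrinsics `fx`, `fy`, `cx`, `cy` are assumptions introduced for illustration and are not taken from the reviewed works. Lens distortion and time synchronization are ignored.

```python
def project_point(point_lidar, rotation, translation, fx, fy, cx, cy):
    """Project one LiDAR point into pixel coordinates (pinhole model).

    rotation is a 3x3 nested list and translation a 3-tuple that map the
    LiDAR frame into the camera frame (assumed to come from calibration).
    Returns (u, v, depth), or None if the point lies behind the camera.
    """
    x, y, z = (
        sum(rotation[i][j] * point_lidar[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if z <= 0.0:
        return None
    return fx * x / z + cx, fy * y / z + cy, z


def colorize(points, image, rotation, translation, fx, fy, cx, cy):
    """Pair each LiDAR point that lands inside the image with its pixel color.

    image is a row-major nested list: image[v][u] is the color at row v,
    column u. Points outside the field of view are dropped.
    """
    height, width = len(image), len(image[0])
    fused = []
    for p in points:
        projected = project_point(p, rotation, translation, fx, fy, cx, cy)
        if projected is None:
            continue
        u, v = int(round(projected[0])), int(round(projected[1]))
        if 0 <= u < width and 0 <= v < height:
            fused.append((p, image[v][u]))
    return fused
```

The result keeps the accurate LiDAR position of each point and adds the color that the point cloud alone lacks. A practical system would additionally need the sensor calibration and data synchronization steps before this projection is meaningful.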

2. Research Status of Key Technology for Environmental Perception of Intelligent Excavators

2.1. Research Status of LiDAR Vibration Reduction Technology

- On the software side, the LiDAR detection process under vibration is studied through theoretical vibration analysis or simulation. A response model between the LiDAR measurement data and the vibration is then established, which allows measurement data affected by vibration to be compensated and corrected.

- On the hardware side, the main vibration parameters, such as amplitude and frequency, are measured experimentally, and the vibration patterns are analyzed. A vibration-damping platform is then designed and an optimal installation position is selected to reduce the impact of vibration on the LiDAR.
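
The software-side compensation described above can be sketched as follows, under the assumption that the vibration-induced attitude (roll, pitch, yaw) and displacement of the scanner relative to its nominal mounting pose are known for each scan, for example from an inertial sensor. The function names and the Z-Y-X rotation convention are illustrative assumptions. This is a simple rigid-body correction, not the response model of any specific reviewed work.

```python
import math


def rotation_matrix(roll, pitch, yaw):
    """Return R = Rz(yaw) * Ry(pitch) * Rx(roll) as a 3x3 nested list (radians)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def compensate_point(point, attitude, displacement):
    """Map a point from the vibrating sensor frame to the nominal frame.

    attitude is (roll, pitch, yaw) and displacement is (dx, dy, dz), both
    describing the perturbed sensor pose relative to the nominal pose:
        p_nominal = R(attitude) * p_measured + displacement
    """
    r = rotation_matrix(*attitude)
    return tuple(
        sum(r[i][j] * point[j] for j in range(3)) + displacement[i]
        for i in range(3)
    )


def compensate_scan(points, attitude, displacement):
    """Apply the same pose correction to every point of one scan."""
    return [compensate_point(p, attitude, displacement) for p in points]
```

In this sketch a single pose is applied to a whole scan. If the vibration period is short compared with the scan time, the pose would have to be interpolated per point, which is outside the scope of this example.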

2.2. Research Status of LiDAR Dust Noise Reduction Technology

2.3. Research Status of Multi-Sensor Information Fusion Technology

2.4. Research Status of Segmentation and Recognition Technology of 3D Operation Scenes

3. Research Prospect of Key Technologies of Intelligent Excavator Environmental Perception

3.1. Research Prospect of LiDAR Vibration Reduction Technology

3.1.1. Experimental Measurement of Vibration

3.1.2. Establishment of the Vibration Analysis Model

3.1.3. Vibration Mechanism Analysis

3.1.4. Hardware Design of Vibration Reduction Platform

3.1.5. Point Cloud Vibration Error Correction

3.2. Research Prospects of LiDAR Dust Noise Reduction Technology

3.2.1. Final Echo Technique

3.2.2. Comparison and Selection of the Best-Quality Point Cloud Data

3.2.3. Filtering and Noise Reduction Based on Multi-Modal Fusion

3.2.4. Sparse Repair

3.3. Research Prospect of Multi-Sensor Information Fusion Technology

3.3.1. Sensor Calibration

3.3.2. Data Synchronization

3.3.3. Multi-View Registration

3.4. Research Prospect of 3D Operation Scene Segmentation and Recognition Technology

3.4.1. Point Cloud Data Feature Enhancement

3.4.2. Network Structure Design

3.4.3. Online Learning Style Changes

4. Conclusions

Funding

Conflicts of Interest

References

- Shi, Y.; Xia, Y.; Zhang, Y.; Yao, Z. Intelligent Identification for Working-Cycle Stages of Excavator Based on Main Pump Pressure. Autom. Constr. 2020, 109, 102991.

- He, X.; Jiang, Y. Review of Hybrid Electric Systems for Construction Machinery. Autom. Constr. 2018, 92, 286–296.

- Yusof, A.A.; Saadun, M.N.A.; Sulaiman, H.; Sabaruddin, S.A. The Development of Tele-Operated Electro-Hydraulic Actuator (T-EHA) for Mini Excavator Tele-Operation. In Proceedings of the 2016 2nd IEEE International Symposium on Robotics and Manufacturing Automation (ROMA), Ipoh, Malaysia, 25–27 September 2016; pp. 1–6.

- Papadopoulos, E.; Mu, B.; Frenette, R. On Modeling, Identification, and Control of a Heavy-Duty Electrohydraulic Harvester Manipulator. IEEE/ASME Trans. Mechatron. 2003, 8, 178–187.

- Lee, S.U.; Chang, P.H. Control of a Heavy-Duty Robotic Excavator Using Time Delay Control with Integral Sliding Surface. Control Eng. Pract. 2002, 10, 697–711.

- Bradley, D.A.; Seward, D.W. Developing Real-Time Autonomous Excavation-the LUCIE Story. In Proceedings of the 1995 34th IEEE Conference on Decision and Control, New Orleans, LA, USA, 13–15 December 1995; Volume 3, pp. 3028–3033.

- Seward, D.; Margrave, F.; Sommerville, I.; Morrey, R. LUCIE the Robot Excavator-Design for System Safety. In Proceedings of the IEEE International Conference on Robotics and Automation, Minneapolis, MN, USA, 22–28 April 1996; Volume 1, pp. 963–968.

- Seward, D.; Pace, C.; Morrey, R.; Sommerville, I. Safety Analysis of Autonomous Excavator Functionality. Reliab. Eng. Syst. Saf. 2000, 70, 29–39.

- Stentz, A.; Bares, J.; Singh, S.; Rowe, P. A Robotic Excavator for Autonomous Truck Loading. Auton. Robot. 1999, 7, 175–186.

- Rowe, P. Adaptive Motion Planning for Autonomous Mass Excavation. Ph.D. Thesis, Carnegie Mellon University, Pittsburgh, PA, USA, 1999.

- Ha, Q.P.; Nguyen, Q.H.; Rye, D.C.; Durrant-Whyte, H.F. Impedance Control of a Hydraulically Actuated Robotic Excavator. Autom. Constr. 2000, 9, 421–435.

- Ha, Q.P.; Rye, D.C. A Control Architecture for Robotic Excavation in Construction. Comput. Aided Civ. Eng. 2004, 19, 28–41.

- Dunbabin, M.; Corke, P. Autonomous Excavation Using a Rope Shovel. J. Field Robot. 2006, 23, 379–394.

- Gu, Y.M. Research on Image Processing Technology in Vision Systems of Intelligent Excavators. Ph.D. Thesis, Northeastern University, Shenyang, China, 2009.

- Yamamoto, H.; Moteki, M.; Ootuki, T.; Yanagisawa, Y.; Nozue, A.; Yamaguchi, T.; Yuta, S. Development of the Autonomous Hydraulic Excavator Prototype Using 3-D Information for Motion Planning and Control. Trans. Soc. Instrum. Control Eng. 2012, 48, 488–497.

- He, J.L.; Zhao, X.; Zhang, D.Q.; Song, J. Research on Automatic Trajectory Control of a New Intelligent Excavator. J. Guangxi Univ. 2012, 37, 259–265.

- Kwon, S.; Lee, M.; Lee, M.; Lee, S.; Lee, J. Development of Optimized Point Cloud Merging Algorithms for Accurate Processing to Create Earthwork Site Models. Autom. Constr. 2013, 35, 618–624.

- Wang, X.; Sun, W.; Li, E.; Song, X. Energy-Minimum Optimization of the Intelligent Excavating Process for Large Cable Shovel through Trajectory Planning. Struct. Multidisc. Optim. 2018, 58, 2219–2237.

- Zhang, L.Z. Trajectory Planning and Task Decision Making of Intelligent Excavator Robots. Master’s Thesis, Zhejiang University, Hangzhou, China, 2019.

- Sun, H.Y. Identification of Typical Operational Stages and Trajectory Control of Work Devices for Intelligent Excavators. Master’s Thesis, Harbin Institute of Technology, Harbin, China, 2021.

- Liu, W.S. 3D Point Cloud-Based Perception of the Working Environment for Intelligent Mining Excavator. Master’s Thesis, Dalian University of Technology, Dalian, China, 2022.

- Zhang, T.; Fu, T.; Ni, T.; Yue, H.; Wang, Y.; Song, X. Data-Driven Excavation Trajectory Planning for Unmanned Mining Excavator. Autom. Constr. 2024, 162, 105395.

- Chen, F.Y.; Li, L. Development of a Vibration Monitoring Recorder for Mining Excavators. J. Inn. Mong. Univ. Nat. 2019, 34, 242–244.

- Huang, Z.X.; He, Q.H. Denoising of Vibration Signals in the Hydraulic Excavator Backhoe Digging Process. J. Cent. South Univ. 2013, 44, 2267–2273.

- Zhou, J.; Chen, J. Analysis and Optimization Design of Noise and Vibration Characteristics in Excavator Cabins. Noise Vib. Control 2013, 33, 87–90.

- Zhang, Y.M. Vibration Study of Lifting Mechanism in Large Mining Excavators. J. Mech. Eng. Autom. 2017, 2, 4–5.

- Ma, H.; Wu, J. Analysis of Positioning Errors Caused by Platform Vibration of Airborne LiDAR System. In Proceedings of the 2012 8th IEEE International Symposium on Instrumentation and Control Technology, London, UK, 11–13 July 2012; pp. 257–261.

- Ma, M.; Li, D.; Du, J. Image Processing of Airborne Synthetic Aperture Laser Radar Under Vibration Conditions. J. Radars 2014, 3, 591–602.

- Hu, X.; Li, D.; Du, J.; Ma, M.; Zhou, J. Vibration Estimation of Synthetic Aperture Lidar Based on Division of Inner View Field by Two Detectors along Track. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium, Beijing, China, 10–15 July 2016; pp. 4561–4564.

- Cui, S.; Qi, X.; Wang, X. Research on Impact Vibration Noise Reduction Method for Vehicle-Borne Laser Radar Based on Wavelet Analysis. Automob. Technol. 2017, 10, 24–28.

- Su, H.; Yan, H.; Zhang, X.; Yang, C. Study on Laser Radar Imaging Blur Induced by Residual Vibration. Infrared Laser Eng. 2011, 40, 2174–2179.

- Hong, G.; Guo, L. Analysis of the Effect of Linear Vibration on Synthetic Aperture Laser Radar Imaging. Acta Opt. Sin. 2012, 32, 0428001.

- Hong, G.; Guo, L. The Influence of Angular Vibration on Synthetic Aperture Laser Radar Imaging. J. Infrared Millim. Waves 2011, 30, 571–576.

- Song, X.; Chen, C.; Liu, B.; Xia, J.; Stanic, S. Design and Implementation of Vibration Isolation System for Mobile Doppler Wind LIDAR. J. Opt. Soc. Korea JOSK 2013, 17, 103–108.

- Veprik, A.M.; Babitsky, V.I.; Pundak, N.; Riabzev, S.V. Vibration Protection of Sensitive Components of Infrared Equipment in Harsh Environments. Shock Vib. 2001, 8, 55–69.

- Veprik, A.M. Vibration Protection of Critical Components of Electronic Equipment in Harsh Environmental Conditions. J. Sound Vib. 2003, 259, 161–175.

- Wang, P.; Wang, W.; Ding, J. Design of Composite Vibration Isolation for Airborne Electro-Optical Surveillance Platform. Opt. Precis. Eng. 2011, 19, 83–89.

- Chen, C.; Song, X.; Xia, J. Vibration Isolation Design of Vehicle-Borne Doppler Wind Lidar System. J. Atmos. Environ. Opt. 2011, 19, 83–89.

- Li, Y. Studies on Environmental Adaptability of Airborne Electro-Optical Reconnaissance Platform. Ph.D. Thesis, University of Chinese Academy of Sciences, Beijing, China, 2014.

- Try, P.; Gebhard, M. A Vibration Sensing Device Using a Six-Axis IMU and an Optimized Beam Structure for Activity Monitoring. Sensors 2023, 23, 8045.

- Sun, Z.; Wu, Z.; Ren, X.; Zhao, Y. IMU Sensor-Based Vibration Measuring of Ship Sailing. In Proceedings of the 2021 IEEE 11th Annual International Conference on CYBER Technology in Automation, Control, and Intelligent Systems, Jiaxing, China, 27–31 July 2021; pp. 845–849.

- Kaswekar, P.; Wagner, J.F. Sensor Fusion Based Vibration Estimation Using Inertial Sensors for a Complex Lightweight Structure. In Proceedings of the 2015 DGON Inertial Sensors and Systems Symposium, Karlsruhe, Germany, 22–23 September 2015; pp. 1–20.

- Jin, L. Studies on Passive Vibration Isolation Method Using Second-Order Spring-Damping System for Lidar SLAM Mapping in Orchards. Master’s Thesis, Hangzhou University of Electronic Science and Technology, Hangzhou, China, 2024.

- Wang, H.N. Design, Modeling, and Experimental Validation of Rubber Vibration Isolator for UAV LiDAR. Master’s Thesis, Northeastern University, Nanjing, China, 2020.

- Li, D.; Liu, Z. Studies on Multi-Modal Vibration Suppression Algorithm for Hydraulic Excavator Track Chassis. Hydraul. Pneumat. Seals 2024, 44, 24–30.

- Lu, C.; Chai, P.; Liu, Z.; Liu, Y. Study on Noise and Vibration Performance of Excavator Based on Substructure Power Flow. J. Huazhong Univ. Sci. Technol. (Nat. Sci.) 2021, 49, 37–42.

- Phillips, T.G.; Guenther, N.; McAree, P.R. When the Dust Settles: The Four Behaviors of LiDAR in the Presence of Fine Airborne Particulates. J. Field Robot. 2017, 34, 985–1009.

- Laux, T.E.; Chen, C. 3D Flash LIDAR Vision Systems for Imaging in Degraded Visual Environments. In Proceedings of the Degraded Visual Environments: Enhanced, Synthetic, and External Vision Solutions, Baltimore, MD, USA, 7–8 May 2014.

- Cao, N.; Zhu, C.; Kai, Y.; Yan, P. A Method of Background Noise Reduction in Lidar Data. Appl. Phys. B 2013, 113, 115–123.

- Cheng, Y.; Cao, J.; Hao, Q.; Xiao, Y.; Zhang, F.; Xia, W.; Zhang, K.; Yu, H. A Novel De-Noising Method for Improving the Performance of Full-Waveform LiDAR Using Differential Optical Path. Remote Sens. 2017, 9, 1109.

- Goodin, C.; Durst, P.J.; Prevost, Z.T.; Compton, P.J. A Probabilistic Model for Simulating the Effect of Airborne Dust on Ground-Based LIDAR. In Proceedings of the Active and Passive Signatures IV, Baltimore, MD, USA, 1–2 May 2013; Volume 8734, pp. 83–90.

- Ryde, J.; Hillier, N. Performance of Laser and Radar Ranging Devices in Adverse Environmental Conditions. J. Field Robot. 2009, 26, 712–727.

- Du, X.; Jiang, X.; Hao, C.; Wang, Y. Bilateral Filtering Denoising Algorithm for Point Cloud Models. Comput. Appl. Softw. 2010, 27, 245–264.

- Cao, S.; Yue, J.; Ma, W. Bilateral Filtering Point Cloud Denoising Algorithm Based on Feature Selection. J. Southeast Univ. 2013, 43, 351–354.

- Wu, L.; Shi, H.; Chen, H. Feature-Based Classification for 3D Point Data Denoising. Opt. Precis. Eng. 2016, 24, 1465–1473.

- Li, S.; Mao, J.; Li, Z. An EEMD-SVD Method Based on Gray Wolf Optimization Algorithm for Lidar Signal Noise Reduction. Int. J. Remote Sens. 2023, 44, 5448–5472.

- Zhang, L.; Chang, J.; Li, H.; Liu, Z.X.; Zhang, S.; Mao, R. Noise Reduction of LiDAR Signal via Local Mean Decomposition Combined with Improved Thresholding Method. IEEE Access 2020, 8, 113943–113952.

- Dai, H.; Gao, C.; Lin, Z.; Wang, K.; Zhang, X. Wind Lidar Signal Denoising Method Based on Singular Value Decomposition and Variational Mode Decomposition. Appl. Opt. 2021, 60, 10721–10726.

- Li, C.; Pan, Z.; Mao, F.; Gong, W.; Chen, S.; Min, Q. De-Noising and Retrieving Algorithm of Mie Lidar Data Based on the Particle Filter and the Fernald Method. Opt. Express 2015, 23, 26509.

- Yoo, H.S.; Kim, Y.S. Development of a 3D Local Terrain Modeling System of Intelligent Excavation Robot. KSCE J. Civ. Eng. 2017, 21, 565–578.

- Graeter, J.; Wilczynski, A.; Lauer, M. LIMO: Lidar-Monocular Visual Odometry. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems, Madrid, Spain, 1–5 October 2018; pp. 7872–7879.

- Shin, Y.S.; Park, Y.S.; Kim, A. Direct Visual SLAM Using Sparse Depth for Camera-LiDAR System. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation, Brisbane, QLD, Australia, 21–25 May 2018; pp. 5144–5151.

- De Silva, V.; Roche, J.; Kondoz, A. Fusion of LiDAR and Camera Sensor Data for Environment Sensing in Driverless Vehicles. 2018; Preprint.

- Akhtar, M.R.; Qin, H.; Chen, G. Velodyne LiDAR and Monocular Camera Data Fusion for Depth Map and 3D Reconstruction. In Proceedings of the Eleventh International Conference on Digital Image Processing, Guangzhou, China, 10–13 May 2019; Volume 11179, pp. 87–97.

- Lee, H.; Song, S.; Jo, S. 3D Reconstruction Using a Sparse Laser Scanner and a Single Camera for Outdoor Autonomous Vehicle. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems, Rio de Janeiro, Brazil, 1–4 November 2016; pp. 629–634.

- An, Y.; Li, B.; Hu, H.; Zhou, X. Building an Omnidirectional 3-D Color Laser Ranging System Through a Novel Calibration Method. IEEE Trans. Ind. Electron. 2019, 66, 8821–8831.

- Yang, L.; Sheng, Y.; Wang, B. 3D Reconstruction of Building Facade with Fused Data of Terrestrial LiDAR Data and Optical Image. Optik 2016, 127, 2165–2168.

- Li, Y.; Wu, H.; An, R.; Xu, H.; He, Q.; Xu, J. An Improved Building Boundary Extraction Algorithm Based on Fusion of Optical Imagery and LIDAR Data. Optik 2013, 124, 5357–5362.

- Yu, Y.; Zhao, H. Localization in Unstructured Environments Based on Camera and Swing LiDAR Fusion. Acta Autom. Sin. 2019, 45, 1791–1798.

- Wang, X.; He, L.; Zhao, T. SLAM for Mobile Robots Based on LiDAR and Stereo Vision. J. Sens. Technol. 2019, 45, 1791–1798.

- Qu, Z.; Wei, F.; Wei, W.; Li, Z.; Hu, H. Pedestrian Detection Method Based on Fusion of Radar and Vision Information. J. Jilin Univ. 2019, 45, 1791–1798.

- Wu, G.; Zhao, C.; Liu, J.; Hu, B. Road Surface Vehicle Detection Based on Multi-Sensor Fusion. J. Huazhong Univ. Sci. Technol. 2015, 43, 250–262.

- Shao, P. Research on Multi-UAV SLAM Technology Based on Multi-Sensor Information Fusion. Master’s Thesis, University of Electronic Science and Technology, Chengdu, China, 2024.

- Zhao, Q.; Chen, H. Robot Motion Trajectory Tracking Based on Sensor Information Fusion. Inf. Technol. 2024, 182–186.

- Quan, M. Research on Monocular Vision SLAM Algorithm Based on Multi-Sensor Information Fusion. Ph.D. Thesis, Harbin Institute of Technology, Harbin, China, 2021.

- Zhao, S. Research on Multi-Sensor Information Fusion Method for Intelligent Vehicles. Master’s Thesis, Jilin University, Changchun, China, 2020.

- Yang, L. Research on Target Recognition and Localization Technology for Autonomous Operation of Intelligent Excavators. Master’s Thesis, Jilin University, Changchun, China, 2004.

- Zhu, J.; Shen, D.; Wu, K. Target Recognition of Intelligent Excavators Based on LiDAR Point Clouds. Comput. Eng. 2017, 43, 297–302.

- Phillips, T.G.; McAree, P.R. An Evidence-Based Approach to Object Pose Estimation from LiDAR Measurements in Challenging Environments. J. Field Robot. 2018, 35, 921–936.

- Phillips, T.G.; Green, M.E.; McAree, P.R. Is It What I Think It Is? Is It Where I Think It Is? Using Point-Clouds for Diagnostic Testing of a Digging Assembly’s Form and Pose for an Autonomous Mining Shovel. J. Field Robot. 2016, 33, 1013–1033.

- Agrawal, A.; Nakazawa, A.; Takemura, H. MMM-Classification of 3D Range Data. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 2003–2008.

- Zhu, J.; Pierskalla, W.P. Applying a Weighted Random Forests Method to Extract Karst Sinkholes from LiDAR Data. J. Hydrol. 2016, 533, 343–352.

- Lai, X.; Yuan, Y.; Li, Y.; Wang, M. Full-Waveform LiDAR Point Clouds Classification Based on Wavelet Support Vector Machine and Ensemble Learning. Sensors 2019, 19, 3191.

- Karsli, F.; Dihkan, M.; Acar, H.; Ozturk, A. Automatic Building Extraction from Very High-Resolution Image and LiDAR Data with SVM Algorithm. Arab. J. Geosci. 2016, 9, 635.

- Niemeyer, J.; Rottensteiner, F.; Soergel, U. Contextual Classification of Lidar Data and Building Object Detection in Urban Areas. ISPRS J. Photogramm. Remote Sens. 2014, 87, 152–165.

- Golovinskiy, A.; Kim, V.G.; Funkhouser, T. Shape-Based Recognition of 3D Point Clouds in Urban Environments. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 2154–2161.

- Yao, W.; Wei, Y. Detection of 3-D Individual Trees in Urban Areas by Combining Airborne LiDAR Data and Imagery. IEEE Geosci. Remote Sens. Lett. 2013, 10, 1355–1359.

- Zhao, H.; Liu, Y.; Zhu, X.; Zhao, Y.; Zha, H. Scene Understanding in a Large Dynamic Environment through a Laser-Based Sensing. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 127–133.

- Wang, H.; Wang, C.; Luo, H.; Li, P.; Cheng, M.; Wen, C.; Li, J. Object Detection in Terrestrial Laser Scanning Point Clouds Based on Hough Forest. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1807–1811.

- Wang, Z.; Zhang, L.; Fang, T.; Mathiopoulos, P.T.; Tong, X.; Qu, H.; Xiao, Z.; Li, F.; Chen, D. A Multiscale and Hierarchical Feature Extraction Method for Terrestrial Laser Scanning Point Cloud Classification. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2409–2425.

- Zeng, A.; Yu, K.-T.; Song, S.; Suo, D.; Walker, E.; Rodriguez, A.; Xiao, J. Multi-View Self-Supervised Deep Learning for 6D Pose Estimation in the Amazon Picking Challenge. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation, Singapore, 29 May–3 June 2017; p. 1386.

- Li, Z.; Gan, Y.; Liang, X.; Yu, Y.; Cheng, H.; Lin, L. LSTM-CF: Unifying Context Modeling and Fusion with LSTMs for RGB-D Scene Labeling. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 541–557.

- Lawin, F.J.; Danelljan, M.; Tosteberg, P.; Bhat, G.; Khan, F.S.; Felsberg, M. Deep Projective 3D Semantic Segmentation. In Proceedings of the Computer Analysis of Images and Patterns, Ystad, Sweden, 22–24 August 2017; pp. 95–107.

- Zhang, Z.; Cui, Z.; Xu, C.; Jie, Z.; Li, X.; Yang, J. Joint Task-Recursive Learning for Semantic Segmentation and Depth Estimation. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 235–251.

- Chen, Y.; Yang, M.; Wang, C.; Wang, B. 3D Semantic Modelling with Label Correction for Extensive Outdoor Scene. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium, Paris, France, 9–12 June 2019; pp. 1262–1267.

- Maturana, D.; Scherer, S. VoxNet: A 3D Convolutional Neural Network for Real-Time Object Recognition. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems, Hamburg, Germany, 28 September–2 October 2015; pp. 922–928.

- Chang, A.X.; Funkhouser, T.; Guibas, L. ShapeNet: An Information-Rich 3D Model Repository. arXiv 2015, arXiv:1512.03012.

- Wang, L.; Huang, Y.; Shan, J.; He, L. MSNet: Multi-Scale Convolutional Network for Point Cloud Classification. Remote Sens. 2018, 10, 612.

- Roynard, X.; Deschaud, J.-E.; Goulette, F. Classification of Point Cloud Scenes with Multiscale Voxel Deep Network. arXiv 2018, arXiv:1804.03583.

- Qi, C.R. Deep Learning on Point Clouds for 3D Scene Understanding. Ph.D. Thesis, Stanford University, Stanford, CA, USA, 2018.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

- Jiang, M.; Wu, Y.; Zhao, T.; Zhao, Z.; Lu, C. PointSIFT: A SIFT-like Network Module for 3D Point Cloud Semantic Segmentation. arXiv 2018, arXiv:1807.00652.

- Zhao, H.; Jiang, L.; Fu, C.-W.; Jia, J. PointWeb: Enhancing Local Neighborhood Features for Point Cloud Processing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5565–5573.

- Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic Graph CNN for Learning on Point Clouds. ACM Trans. Graph. 2019, 38, 1–12.

- Hu, Q.; Yang, B.; Xie, L.; Rosa, S.; Guo, Y.; Wang, Z.; Trigoni, N.; Markham, A. RandLA-Net: Efficient Semantic Segmentation of Large-Scale Point Clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11108–11117.

- Ren, D.; Li, J.; Wu, Z.; Guo, J.; Wei, M.; Guo, Y. MFFNet: Multimodal Feature Fusion Network for Point Cloud Semantic Segmentation. Vis. Comput. 2024, 40, 5155–5167.

- Poliyapram, V.; Wang, W.; Nakamura, R. A Point-Wise LiDAR and Image Multimodal Fusion Network (PMNet) for Aerial Point Cloud 3D Semantic Segmentation. Remote Sens. 2019, 11, 2961.

- Peng, J.; Cui, Y.; Zhong, Z.; An, Y. Ore Rock Fragmentation Calculation Based on Multi-Modal Fusion of Point Clouds and Images. Appl. Sci. 2023, 13, 12558.

- An, Y.; Liu, W.; Cui, Y.; Wang, J.; Li, X.; Hu, H. Multilevel Ground Segmentation for 3-D Point Clouds of Outdoor Scenes Based on Shape Analysis. IEEE Trans. Instrum. Meas. 2022, 71, 1–13.

- Hu, X.; An, Y.; Shao, C.; Hu, H. Distortion Convolution Module for Semantic Segmentation of Panoramic Images Based on the Image-Forming Principle. IEEE Trans. Instrum. Meas. 2022, 71, 1–12.

- Cui, Y.; Zhang, Z.; An, Y.; Zhong, Z.; Yang, F.; Wang, J.; He, K. Memory-Augmented 3D Point Cloud Semantic Segmentation Network for Intelligent Mining Shovels. Sensors 2024, 24, 4364.

- Si, L.; Wang, Z.; Liu, P.; Tan, C.; Chen, H.; Wei, D. A Novel Coal–Rock Recognition Method for Coal Mining Working Face Based on Laser Point Cloud Data. IEEE Trans. Instrum. Meas. 2021, 70, 1–18.

- Guo, Q.; Wang, Y.; Yang, S.; Xiang, Z. A Method of Blasted Rock Image Segmentation Based on Improved Watershed Algorithm. Sci. Rep. 2022, 12, 7143.

- Xiao, D.; Liu, X.; Le, B.T.; Ji, Z.; Sun, X. An Ore Image Segmentation Method Based on RDU-Net Model. Sensors 2020, 20, 4979.

- Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual Attention Network for Image Classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3156–3164.

- Chen, L.; Zhang, H.; Xiao, J.; Nie, L.; Shao, J.; Liu, W.; Chua, T.-S. SCA-CNN: Spatial and Channel-Wise Attention in Convolutional Networks for Image Captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5659–5667.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 3–19.

- Zhu, X.; Zhang, J.; Chen, G. ASAN: Self-Attending and Semantic Activating Network towards Better Object Detection. IEICE Trans. Inf. Syst. 2020, 103, 648–659.

- Zeng, Y.; Ritz, C.; Zhao, J.; Lan, J. Attention-Based Residual Network with Scattering Transform Features for Hyperspectral Unmixing with Limited Training Samples. Remote Sens. 2020, 12, 400.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-Level Accuracy with 50x Fewer Parameters and <0.5MB Model Size. arXiv 2016, arXiv:1602.07360.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

- Ma, N.; Zhang, X.; Zheng, H.-T.; Sun, J. ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 116–131.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

| Time | Research Institution | Major Contribution | Literature |

|---|---|---|---|

| 1995 | Lancaster University, UK | Using a potentiometer, an axis tilt sensor, an optical laser ranging sensor, a global positioning system, and other equipment, the excavator can efficiently and autonomously excavate long straight trenches. | [6,7,8] |

| 1999 | Carnegie Mellon University | Two laser rangefinders are used to scan the working space, identify the excavation surface, trucks, and obstacles, and combine the on-board computer and positioning system to carry out the excavation motion planning and dumping motion planning, which can realize the autonomous operation of slope excavation. | [9,10] |

| 2000 | University of Sydney | By using a laser rangefinder to scan the working environment, the functions of task decomposition, state monitoring, and path planning are realized, and the automatic mining operation in simple working conditions is realized. | [11,12] |

| 2006 | Commonwealth Scientific and Industrial Research Organisation (CSIRO), Australia | SICK LMS LiDAR is used to scan the working environment and construct a 3D topographic map describing the working environment and excavation surface. The operation time is basically the same as that of manual operation, and the energy consumption is lower. | [13] |

| 2009 | Northeastern University | The binocular CCD sensor is used to collect environmental information such as road, bucket and target, which lays a foundation for the autonomous walking and operation of the excavator. | [14] |

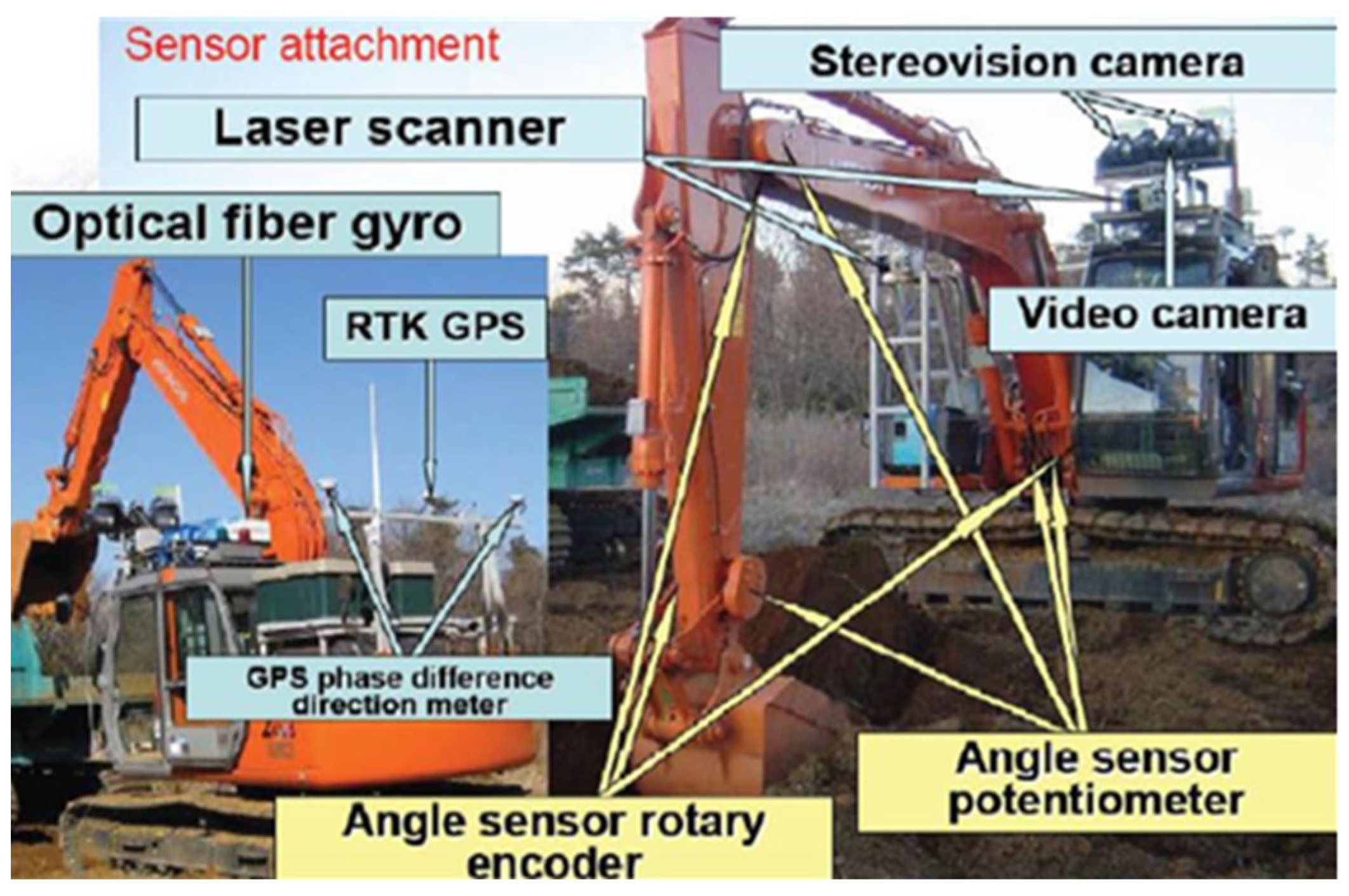

| 2012 | University of Tsukuba and Ministry of Land and Resources, Japan | Pressure flow sensors, tilt sensors, gyroscopes, GPS, binocular cameras, LiDAR, and other sensors are used to sense the working environment and independently plan obstacle avoidance, initially realizing the autonomous operation of the excavator. | [15] |

| 2012 | Central South University, Sanhe Intelligent | The kinematics and dynamics models of the excavator are established, and the cerebellar model neural network control method is used to realize real-time and accurate control of the intelligent excavator. | [16] |

| 2013 | Sungkyunkwan University, South Korea | An intelligent mining system for cluster control of construction machinery is developed, which is mainly composed of a task planning system, task execution system and man–machine interface system. The system has a high degree of intelligence and realizes the preliminary independent mining process. | [17] |

| 2018 | Dalian University of Technology | The intelligent excavator prototype is developed, and the dynamic excavation resistance prediction model is constructed to provide theoretical support for the intelligent excavator to minimize energy consumption. | [18] |

| 2019 | Zhejiang University | The experimental mining robot is developed, which can realize the functions of trajectory planning, trajectory tracking and remote control, and has a certain degree of automation. | [19] |

| 2021 | Harbin Institute of Technology | Computer vision was introduced into the identification of the working cycle stage of the excavator, and a deep vision target detector was established using the YOLOv2 algorithm to locate and classify three feature parts (bucket, arm joint, and body). | [20] |

| 2022 | Dalian University of Technology | The environmental perception algorithm is designed to process the point cloud data, carry out multi-level ground extraction for the point cloud and identify the mining loading target based on feature computing, and help the excavator to determine the location of the material pile and truck in the working environment, so as to realize the perception of the working environment of the excavator. | [21] |

| 2024 | Yanshan University | A temporal convolutional recurrent neural network (TCRNN) combining stacked dilated convolutions with an attention-based sequence-to-sequence (Seq2Seq) module is proposed to accurately predict excavation forces, and a data-driven excavation trajectory planning framework based on TCRNN is established to improve operational performance in autonomous excavation scenarios. | [22] |

| Literature | Keywords | Major Contribution | Time |

|---|---|---|---|

| [23] | Vibration monitoring recorder | To collect real-time excavator vibration data, an excavator vibration monitoring recorder is designed. | 2019 |

| [24] | Vibration signal noise reduction | Wavelet packet frequency band energy decomposition and Hilbert–Huang transform are used to study the vibration signal denoising method in the process of excavator shoveling. | 2013 |

| [25] | Cab vibration characteristics | The modal analysis of the noise and vibration characteristics of the excavator cab is carried out, and the cab structure is optimized to reduce the vibration and noise effectively. | 2013 |

| [26] | Vibration characteristics of the lifting mechanism | The vibration characteristics of the lifting mechanism of the excavator are studied, and the reasonable vibration range of the lifting mechanism is obtained through experimental analysis. | 2017 |

| [27] | Simulation and evaluation | The simulation method is used to evaluate the influence of vibration error on the scanning accuracy of LiDAR. | 2012 |

| [28] | Estimation and compensation | The vibration-induced phase error of the LiDAR is estimated and compensated using two-detector interferometry along the trajectory. | 2014 |

| [29] | Vibration estimation | The problem of large vibration estimation error under a wide azimuth beam and low SNR is addressed. | 2016 |

| [30] | Mathematical model, noise cancellation | The mathematical model of vibration and noise is established, and the method of vibration and noise elimination based on wavelet analysis is proposed. | 2017 |

| [31] | Vibration blur, exposure time | A theoretical model of random vibration blur is established to obtain the optimal exposure time that satisfies the resolution requirement. | 2011 |

| [32,33] | Angular vibration, linear vibration | The vibration is divided into angular vibration and linear vibration, and mathematical models of both are established. | 2012 |

| [34] | Damping table | An aluminum alloy frame, an optical platform, and a steel wire rope damper are used to design the radar damping table. | 2013 |

| [35] | Wire rope shock absorber | The model of the anti-vibration system is established, and the structure layout and design of the steel wire rope shock absorber are selected. | 2001 |

| [36] | Damping table | The elastic damping damper and installation position of the damping table are selected according to the vibration response characteristics. | 2003 |

| [37] | Shock absorber | The shock absorber with nonlinear hysteresis characteristics is selected, and the layout of the shock absorber is designed. | 2011 |

| [38] | Wire rope shock absorber | A metal wire rope shock absorber is selected, a reasonable layout is designed, and a vibration reduction scheme for the optical platform is also designed. | 2011 |

| [39] | Damping table | The installation position of the platform is determined, the vibration model is established, and the damping table is designed. | 2014 |

| [43] | Passive vibration isolator | Through mathematical modeling, dynamic simulation, and response surface analysis, the structure parameters of optimal rod length, assembly angle, spring stiffness, and damping coefficient are selected, and a passive isolator mechanism based on a second-order spring damping vibration system is constructed. | 2024 |

| [44] | Rubber shock absorber | A rubber shock absorber was designed according to the vibration-damping requirements, and the finite element simulation method for rubber structural parts was summarized. The material parameters of rubber samples were tested as required by the simulation, yielding the hyperelastic model parameters and the viscoelastic behavior of the rubber material. | 2020 |

| [45] | Multi-mode vibration suppression | Based on the design characteristics and equivalence principle of the crawler chassis of hydraulic excavators, a multi-mode vibration suppression algorithm is adopted to suppress the vibration of the crawler chassis. | 2024 |

| [46] | Finite element; substructure | The substructure power flow method is comprehensively used to study the influence of vibration characteristics of the rotating platform on cab noise and improve the dynamic solving efficiency to accelerate the analysis and optimization process of excavator noise and vibration performance. | 2021 |
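
Several entries above, for example the passive isolator of [43], treat the damping mount as a second-order spring-damper system. As a rough illustration of why such a mount reduces the vibration reaching a LiDAR scanner, the sketch below evaluates the textbook displacement transmissibility of a base-excited single-degree-of-freedom isolator. The function names and all numerical values (sensor mass, spring stiffness, damping ratio, excitation frequency) are assumptions chosen for illustration and are not taken from the cited works.

```python
import math


def natural_frequency_hz(stiffness: float, mass: float) -> float:
    """Undamped natural frequency (Hz) of a mass on a linear spring."""
    return math.sqrt(stiffness / mass) / (2.0 * math.pi)


def transmissibility(freq_ratio: float, damping_ratio: float) -> float:
    """Displacement transmissibility of a base-excited spring-damper mount.

    freq_ratio    r    = excitation frequency / natural frequency
    damping_ratio zeta = damping / critical damping
    T = sqrt((1 + (2*zeta*r)^2) / ((1 - r^2)^2 + (2*zeta*r)^2))
    """
    r, zeta = freq_ratio, damping_ratio
    numerator = 1.0 + (2.0 * zeta * r) ** 2
    denominator = (1.0 - r * r) ** 2 + (2.0 * zeta * r) ** 2
    if denominator == 0.0:
        # Undamped resonance (zeta = 0, r = 1): the response is unbounded.
        raise ValueError("undamped resonance: transmissibility is unbounded")
    return math.sqrt(numerator / denominator)


if __name__ == "__main__":
    # Hypothetical values: 2 kg scanner, 2000 N/m mount, 20 Hz excitation.
    f_n = natural_frequency_hz(stiffness=2000.0, mass=2.0)
    ratio = 20.0 / f_n
    print(f"natural frequency: {f_n:.2f} Hz, frequency ratio: {ratio:.2f}")
    print(f"transmissibility:  {transmissibility(ratio, 0.2):.3f}")
```

Under this model the mount only attenuates vibration when the frequency ratio exceeds the square root of 2, which is why a soft mount (low natural frequency) is usually preferred; below that ratio the vibration is amplified rather than reduced.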

| Literature | Keywords | Major Contribution | Time |

|---|---|---|---|

| [9] | Final echo technique | By extracting the edge of the final pulse, the target and dust signal are distinguished, which effectively improves the loading target recognition effect under dust conditions. | 1999 |

| [47] | Dust influence rule | The dust influence rule of common LiDAR under different measuring distances, different dust concentrations, and different reflectance is summarized. | 2017 |

| [48] | Registration fusion | The influence of dust on environmental perception accuracy is reduced through the fusion of two-dimensional color images and three-dimensional point cloud registration. | 2014 |

| [49] | Background noise equation | The noise level equation is derived from the LiDAR equation, and the background noise equation is verified by using the observed LiDAR data and the simulated signal. | 2013 |

| [50] | Differential optical path | A LiDAR denoising method based on differential optical path is proposed, the mathematical model is derived and verified, and the signal-to-noise ratio is simulated based on this method. | 2017 |

| [51] | Probabilistic model | A probabilistic model of the interaction between LiDAR and dust is established, and the model is verified by simulation and experiment. | 2013 |

| [52] | Measuring suspended dust levels | A reliable method for measuring suspended dust levels by determining the transmission coefficient is developed and used for quantitative evaluation of the environmental sensing performance of LiDAR. | 2009 |

| [53] | Bilateral filtering | Based on the bilateral filtering algorithm, the point cloud is filtered and denoised by adjusting the positions of the sampling points along their normal directions. | 2010 |

| [54] | Bilateral filtering | A bilateral filter point cloud denoising method based on feature selection is proposed, which can effectively preserve the features of scanned objects while removing noise. | 2013 |

| [55] | Feature information classification | A 3D point cloud denoising algorithm based on feature information classification is proposed. | 2016 |

| [56] | Linear grey wolf optimization algorithm; Singular value decomposition; Empirical mode decomposition | A new joint denoising method, EEMD-GWO-SVD, which combines ensemble empirical mode decomposition (EEMD), grey wolf optimization (GWO), and singular value decomposition (SVD), is proposed to improve the signal-to-noise ratio and extract useful signals. | 2023 |

| [57] | Local mean decomposition; Threshold method | Local mean decomposition and an improved threshold method (LMD-ITM) are combined to process noisy LiDAR signals, thus avoiding the loss of useful information. | 2020 |

| [58] | Singular value decomposition; Variational mode decomposition | A denoising method for wind LiDAR based on singular value decomposition (SVD) and variational mode decomposition (VMD) is proposed. | 2021 |

| [59] | Particle filter | A scheme is proposed that replaces the ensemble Kalman filter (EnKF) in the denoising algorithm with a particle filter (PF), avoiding the near-range bias caused by the excessive smoothing of the EnKF. | 2015 |
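
The bilateral filtering entries above ([53], [54]) rely on weighting each neighbor both by spatial closeness and by similarity of value, so that noise is smoothed while sharp features survive. The sketch below applies that idea to a one-dimensional range profile (one LiDAR scan line) rather than to a full 3D point cloud. It is a simplified analogue for illustration only, not the method of the cited works, and the function name and parameter values are assumptions.

```python
import math
from typing import List, Sequence


def bilateral_filter_1d(
    ranges: Sequence[float],
    radius: int = 2,
    sigma_s: float = 1.0,
    sigma_r: float = 0.1,
) -> List[float]:
    """Edge-preserving smoothing of a 1D range profile.

    Each output sample is a weighted mean of its neighbors. The weight is
    the product of a spatial Gaussian (index distance, sigma_s) and a range
    Gaussian (difference in measured value, sigma_r).
    """
    n = len(ranges)
    filtered = []
    for i in range(n):
        weight_sum = 0.0
        value_sum = 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            w_spatial = math.exp(-((i - j) ** 2) / (2.0 * sigma_s ** 2))
            w_range = math.exp(
                -((ranges[i] - ranges[j]) ** 2) / (2.0 * sigma_r ** 2)
            )
            weight = w_spatial * w_range
            weight_sum += weight
            value_sum += weight * ranges[j]
        # weight_sum >= 1 because the sample itself always has weight 1.
        filtered.append(value_sum / weight_sum)
    return filtered


if __name__ == "__main__":
    # Hypothetical scan line: small noise around 1 m, then a step to 5 m.
    scan = [1.00, 1.02, 0.98, 1.01, 5.00, 5.03, 4.97, 5.00]
    print([round(r, 3) for r in bilateral_filter_1d(scan)])
```

With a small `sigma_r`, samples on the far side of a large range jump receive almost zero weight, so the edge between the two surfaces is kept while the small fluctuations on each side are averaged out.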

| Literature | Keywords | Major Contribution | Time |

|---|---|---|---|

| [15] | Monocular camera, binocular stereo camera, and LiDAR | Using the monocular camera, binocular stereo camera, and LiDAR as environmental sensing equipment, an autonomous intelligent excavator based on a comprehensive sensing system was developed. Through multi-sensor information fusion, obstacles and targets in the mining scene were detected, and real-time three-dimensional modeling of mining results was achieved. | 2012 |

| [17] | Cameras and LiDAR | Cameras and LiDAR are used for environmental perception, and the ICP algorithm is used to fuse images and point cloud information. | 2013 |

| [60] | LiDAR and binocular camera | The local terrain reconstruction system of intelligent excavators is developed by using LiDAR and binocular cameras, which effectively improves the environmental perception ability of intelligent excavators. | 2017 |

| [61,62] | Projection | The point cloud data is projected onto the image for data fusion. | 2018 |

| [63] | Gaussian interpolation | After projection fusion, the geometric transformation relationship between different sensors is calculated, and the image pixels lacking depth information are compensated by the Gaussian interpolation algorithm. | 2018 |

| [64] | Gaussian process regression interpolation | The color information of the image is assigned to the point cloud by projection, and the resolution of the LiDAR data is matched by Gaussian process regression interpolation. | 2019 |

| [65] | Local distance modeling, 3D depth map reconstruction | In the local scale modeling stage, the three-dimensional point cloud is interpolated using Gaussian process regression, and in the reconstruction stage, the image and interpolation points are fused to build a three-dimensional depth map, and the merged data is optimized based on Markov random fields. | 2016 |

| [66] | Omnidirectional color laser ranging system | An omnidirectional 3D color laser ranging system is developed. Based on the improved rectangular hole checkerboard calibration method, the 2D image and point cloud are fused into a 3D color point cloud. | 2019 |

| [67] | Registration, plane fitting | Initial features are extracted from the image according to the gradient direction, the conversion from 2D image to 3D point cloud is realized on the basis of registration, and the point cloud data around the feature lines is then fitted to planes. | 2016 |

| [68] | Complementary advantages | Exploiting the complementary strengths of LiDAR data and optical imagery, different building features are extracted from the two data sources and fused to form the final complete building boundary. | 2013 |

| [69] | Feature point matching | Combining the depth information of LiDAR and the color texture information of an image, the matching relationship of feature points is constructed to solve the location problem of outdoor robots in complex environments. | 2019 |

| [70] | Real-time localization and accurate mapping | Visual, LiDAR, and odometer information are integrated to solve the problem of real-time localization and accurate mapping of mobile robots in an unknown environment. | 2019 |

| [71] | Fusion, clustering | The LiDAR depth information is mapped onto two-dimensional camera images for fusion, and real-time pedestrian detection is realized by cluster analysis and support vector machine classification. | 2019 |

| [72] | Vehicle real-time detection | The Hough transform and Chebyshev’s theorem are used to remove non-target points in the radar data channel, a saliency map of the vehicle is generated in the image data channel, and the vehicle position is then located in real time by a segmentation method. | 2015 |

| [73] | Loop, high-precision | A multi-sensor data fusion scheme of a tightly coupled LiDAR-camera-IMU system is proposed, and the loop optimization of single-vehicle and multi-vehicle is realized. | 2024 |

| [74] | Information fusion; Tracking method; Particle filter algorithm | Multiple sensors are used to collect the trajectory information of the robot at the same time, and the information is fused accordingly. Then, the particle filter algorithm is introduced to realize the trajectory tracking of the robot. Finally, the comparison experiment of the trajectory tracking of the robot is carried out with other methods. | 2024 |

| [75] | Monocular Visual SLAM | A monocular VISLAM algorithm based on a complementary framework of EKF and graph optimization is proposed to improve the ratio of positioning accuracy to computational cost for robots moving freely in three-dimensional space. | 2021 |

| [76] | Data association | An object association fusion algorithm among distributed sensors based on the covariance of object state estimation error is proposed. Independent sequential object association algorithm and weighted fusion algorithm are used to associate and fuse each sensor object after a coordinate transformation, which effectively improves the reliability and accuracy of the fusion system. | 2020 |
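
Many of the fusion methods listed above (for example [61,62], [63], and [71]) start by projecting LiDAR points onto the camera image. A minimal sketch of that step is given below, assuming an ideal pinhole camera without lens distortion and a known LiDAR-to-camera extrinsic calibration. The function name, the calibration values, and the sample points are hypothetical and only illustrate the geometry; they do not reproduce any cited implementation.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]  # (u, v, depth in the camera frame)


def project_lidar_to_image(
    points: Sequence[Sequence[float]],
    rotation: Sequence[Sequence[float]],
    translation: Sequence[float],
    fx: float, fy: float, cx: float, cy: float,
    width: int, height: int,
) -> List[Pixel]:
    """Project 3D LiDAR points into pixel coordinates.

    rotation (3x3) and translation (3) map LiDAR coordinates into the
    camera frame: X_cam = R * X_lidar + t. fx, fy, cx, cy are the pinhole
    intrinsics. Points behind the camera or outside the image are dropped.
    """
    projected = []
    for p in points:
        x, y, z = (
            sum(rotation[row][k] * p[k] for k in range(3)) + translation[row]
            for row in range(3)
        )
        if z <= 0.0:
            continue  # behind the image plane
        u = fx * x / z + cx
        v = fy * y / z + cy
        if 0.0 <= u < width and 0.0 <= v < height:
            projected.append((u, v, z))
    return projected


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    cloud = [(0.0, 0.0, 5.0), (1.0, 0.0, 2.0), (0.0, 0.0, -1.0)]
    for u, v, depth in project_lidar_to_image(
        cloud, identity, [0.0, 0.0, 0.0],
        fx=500.0, fy=500.0, cx=320.0, cy=240.0, width=640, height=480,
    ):
        print(f"pixel ({u:.1f}, {v:.1f}) depth {depth:.1f} m")
```

Because the LiDAR is much sparser than the image, most pixels receive no depth from this projection, which is the gap that the interpolation methods in [63] and [64] are meant to fill.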

| Literature | Keywords | Major Contribution | Time |

|---|---|---|---|

| [77] | Multi-scale edge detection | A multi-scale edge detection algorithm is proposed by constructing a quadratic B-spline wavelet, and based on this algorithm, the edge features of the loading vehicles of intelligent excavators are detected and recognized. | 2004 |

| [78] | Point cloud clustering feature histogram | A method based on the point cloud clustering feature histogram is proposed, which can identify multiple targets, such as the loading vehicle and material pile of an intelligent excavator, at the same time and has good stability. | 2017 |

| [79] | Maximum evidence | Aiming at the intelligent mining excavator under the influence of dust, a method of target segmentation recognition based on maximum evidence is proposed, which realizes the segmentation and recognition of operation targets such as buckets and piles. | 2018 |

| [80] | Buckets and mining trucks | To prevent collisions between the mining excavator bucket and the mining truck, the bucket and the mining truck are segmented and identified. | 2016 |

| [85] | Random forest classifier, conditional random field | Using a random forest classifier and a conditional random field, three-dimensional point cloud segmentation is realized based on reflection intensity and curvature. | 2014 |

| [86] | Graph cutting method, support vector machine | The point cloud scene is segmented by graph cutting method, then the features of target location and distance are extracted, and the target is classified and recognized by support vector machine. | 2009 |

| [87] | AdaBoost classifier | The spectral and spatial features of point cloud are extracted, and the target is identified by AdaBoost classifier. | 2013 |

| [88] | Scan line algorithm | The three-dimensional point cloud is segmented by scanning line algorithm, and the features such as height and normal vector variance are extracted, and then the target is classified and recognized by support vector machine. | 2010 |

| [89] | Rasterization, Hough Forest | The point cloud data remaining after the removal of ground points is segmented by raster processing, each grid cell and its neighborhood are taken as a local point cloud, the reflection features are then extracted, and the Hough Forest is used to achieve multi-target recognition. | 2014 |

| [90] | Multi-scale sampling, Bayes | Multi-scale sampling of point clouds is carried out, and the sampling points are classified into different levels of point cloud clusters, and then the target is identified based on Bayesian probability model and AdaBoost classifier. | 2015 |

| [101] | Direct processing of the original point cloud | The original point cloud data is input directly for segmentation and recognition, addressing problems such as point cloud disorder and invariance to geometric rotation. | 2017 |

| [102] | Hierarchical neural network structure | A hierarchical neural network structure is constructed to improve the local feature extraction ability of point cloud, and effectively improve the accuracy of point cloud classification and semantic segmentation. | 2017 |

| [103] | Scale invariant feature | A 3D point cloud segmentation model based on scale-invariant feature transformation is proposed. Multiple main direction information is encoded by directional coding units, and multiple coding units are stacked to obtain multi-scale features. | 2018 |

| [104] | k-means, k-NN | The k-means clustering algorithm and k-NN algorithm are used to select the neighborhood in the global space and the feature space, respectively, and pairwise distance loss and centroid loss are introduced into the loss function. | 2019 |

| [105] | Dynamic graph convolution | The graph convolutional neural network is applied to 3D point cloud processing, and a dynamic graph convolution point cloud processing algorithm is proposed, which effectively improves the local spatial feature perception ability. | 2019 |

| [106] | Random sampling | Random sampling is adopted as the most efficient sampling strategy, and a local feature sampler is proposed to reduce the information lost in random sampling, which effectively improves the efficiency of target segmentation recognition. | 2020 |

| [112] | Three-dimensional point cloud semantic segmentation | A three-dimensional point cloud semantic segmentation network based on memory enhancement and lightweight attention mechanism is proposed to solve the problems of semantic segmentation accuracy and deployment capability. | 2024 |

| [109] | Multi-modal fusion, segmentation map | A new ore-rock fragmentation calculation method (ORFCM) based on multi-modal fusion of point cloud and image is proposed. It addresses the problem that ore-rock fragmentation cannot be accurately calculated because the point cloud model has low resolution and the image model lacks spatial position information. | 2023 |

| [113] | Point cloud data segmentation | A simplified method of coal-rock point cloud data is designed on the basis of retaining the characteristic points, and an improved ant colony optimization (IACO) algorithm is proposed to realize the segmentation and recognition of coal-rock point cloud data. | 2021 |

| [114] | Adaptive watershed segmentation | By introducing the Phansalkar binarization method, a watershed seed point marking method based on block contour solidity is proposed, and an adaptive watershed segmentation algorithm based on block shape is formed to better handle the segmentation errors caused by adhesion, stacking, and edge blurring in blasted rock images. | 2022 |

| [115] | DUNet; Residual connection | By combining the residual structure of the convolutional neural network with DUNet model, the accuracy of image segmentation is greatly improved. | 2020 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cui, Y.; Du, Y.; Han, J.; An, Y. Research Status and Prospect of the Key Technologies for Environment Perception of Intelligent Excavators. Appl. Sci. 2024, 14, 10919. https://doi.org/10.3390/app142310919
