ReZNS: Energy and Performance-Optimal Mapping Mechanism for ZNS SSD

Abstract

1. Introduction

- We analyze the internal behavior of ZNS SSDs in depth, including the mapping mechanism and the zone-reset command.

- We design and implement ReZNS, a novel mapping mechanism for ZNS SSDs that includes a management policy for zones that are no longer in use and a mapping policy for sharing their unused capacity.

- We evaluate ReZNS and quantify its benefits using both synthetic and real-world workloads. ReZNS reduces the number of zone-reset commands by up to 61%.

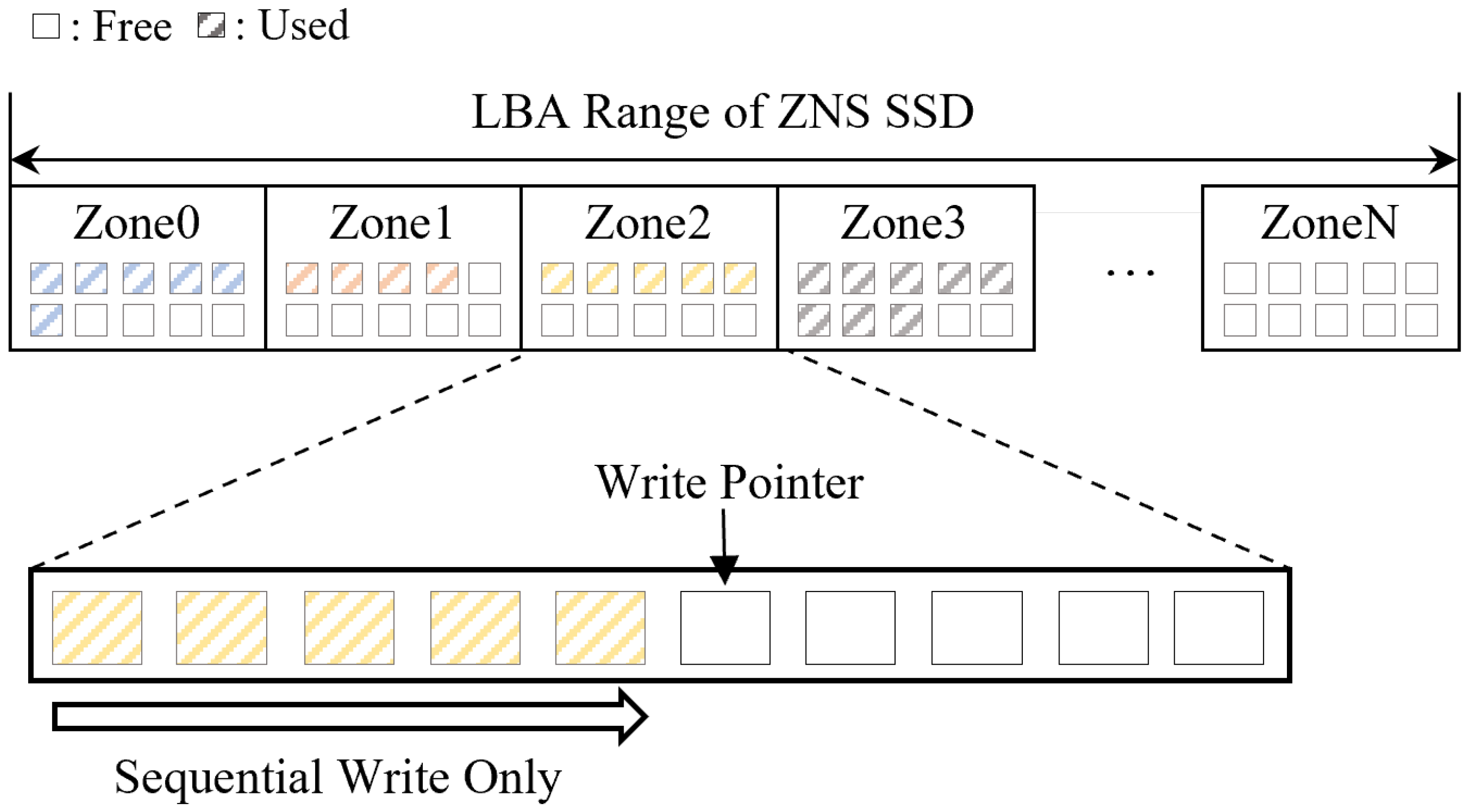

2. Background

3. Design and Implementation

Algorithm 1. Sample pseudocode of ReZNS.

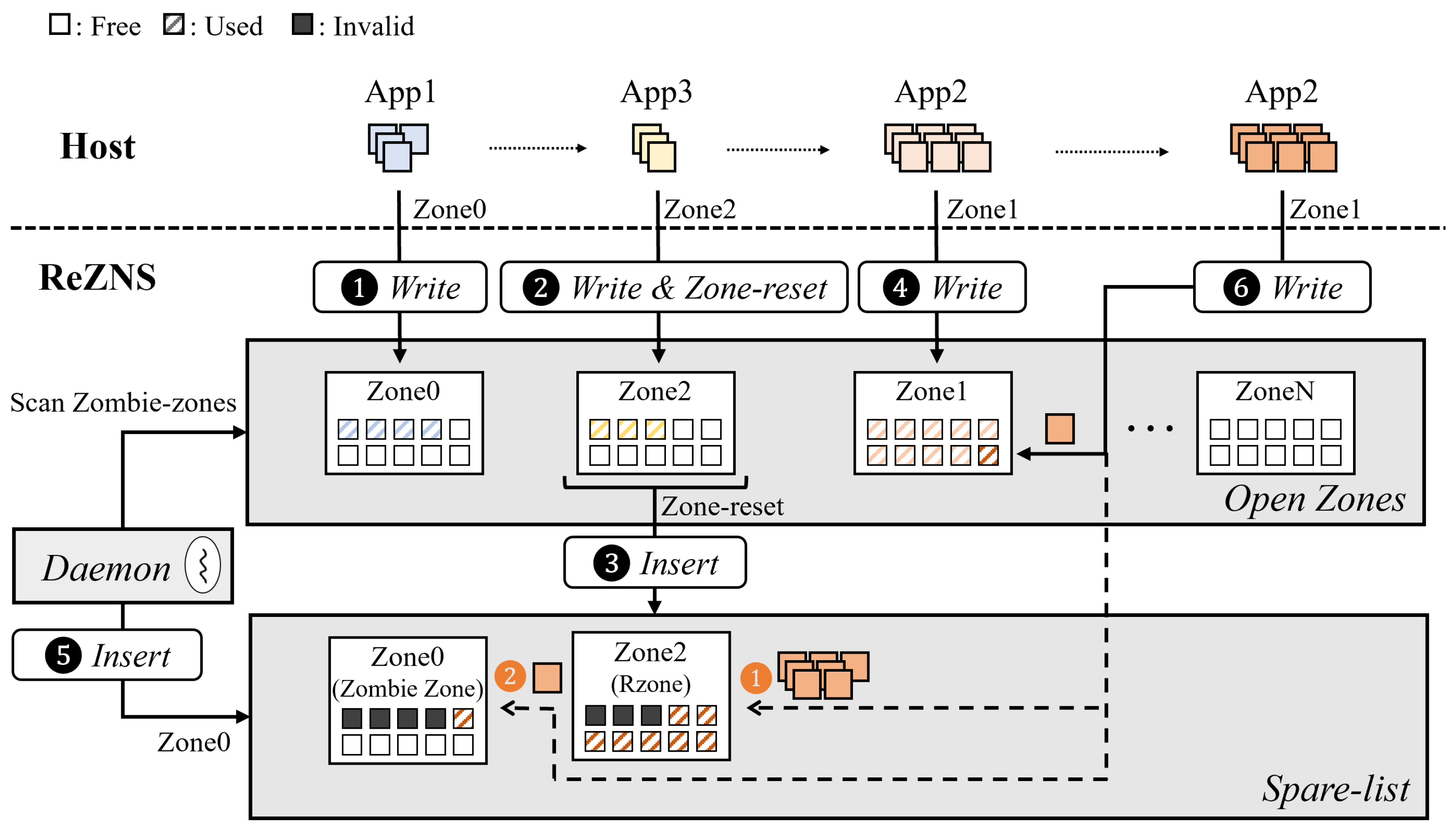

3.1. Overall Architecture of ReZNS

3.2. Example

Then, App3 issues the zone-reset command for Zone2 after writing to just three flash blocks. In this case, ReZNS inserts Zone2 into the spare-list for future reuse because its remaining capacity is 70%. After 30 min, App2 starts to run and consumes nine flash blocks to store its data. Next, the ReZNS daemon identifies Zone0, which has not been used for one hour, as a zombie zone and inserts it into the spare-list.

In this case, App2 first uses the last flash block belonging to Zone1 because it is available. It then tries to create a new zone because it needs more flash blocks. At this point, since the spare-list contains two Rzones, ReZNS assigns Zone2 to App2 to recycle its remaining capacity instead of creating a new zone. Note that Zone2 has the higher priority in the list because the remaining capacities of Zone2 and Zone0 are 70% and 60%, respectively. App2 then resumes a series of write operations from the second block to the end block. Meanwhile, App2 is never aware that it is using only the remaining space of Zone2 (i.e., 70%); thus, ReZNS must add three flash blocks to consistently provide ten flash blocks per zone. To do so, ReZNS also assigns Zone0 to App2 when the free space in Zone2 runs out and then records the data belonging to the following 9 blocks in Zone0.

… and improve space efficiency by reusing eight flash blocks across Zone2 and Zone0. We believe this is very meaningful in that ReZNS reduces the overall energy consumption in storage devices.
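The spare-list behavior in this example can be sketched in a few lines of Python. This is only an illustrative model under stated assumptions, not the authors' implementation: the names `Zone`, `SpareList`, `take`, and `find_zombies` are hypothetical, the ten-block zone and the one-hour idle threshold are taken from the example, and the real ReZNS logic lives inside the device's mapping layer.

```python
from dataclasses import dataclass, field

BLOCKS_PER_ZONE = 10        # the example uses ten flash blocks per zone
ZOMBIE_IDLE_SECONDS = 3600  # the example treats a zone idle for one hour as a zombie


@dataclass
class Zone:
    zone_id: int
    used_blocks: int = 0
    last_access: float = 0.0  # timestamp of the last I/O, in seconds

    @property
    def remaining(self) -> int:
        return BLOCKS_PER_ZONE - self.used_blocks


@dataclass
class SpareList:
    """Hypothetical spare-list of Rzones, ordered by remaining capacity."""
    zones: list = field(default_factory=list)

    def insert(self, zone: Zone) -> None:
        self.zones.append(zone)
        # Assumption: the zone with the most remaining blocks has priority.
        self.zones.sort(key=lambda z: z.remaining, reverse=True)

    def take(self, needed_blocks: int) -> list:
        """Cover `needed_blocks` from spare zones; returns [(zone_id, blocks)]."""
        plan = []
        while needed_blocks > 0 and self.zones:
            zone = self.zones[0]
            grant = min(zone.remaining, needed_blocks)
            zone.used_blocks += grant
            needed_blocks -= grant
            plan.append((zone.zone_id, grant))
            if zone.remaining == 0:
                self.zones.pop(0)  # fully consumed, no longer spare
        self.zones.sort(key=lambda z: z.remaining, reverse=True)
        return plan


def find_zombies(zones, now: float) -> list:
    """Zones with free blocks that have been idle for the threshold or longer."""
    return [z for z in zones
            if z.remaining > 0 and now - z.last_access >= ZOMBIE_IDLE_SECONDS]


# Usage mirroring the example: Zone2 has 70% left, Zone0 has 60% left.
spare = SpareList()
spare.insert(Zone(zone_id=0, used_blocks=4))
spare.insert(Zone(zone_id=2, used_blocks=3))
print(spare.take(BLOCKS_PER_ZONE))  # -> [(2, 7), (0, 3)]
```

Serving one logical ten-block zone draws seven blocks from Zone2 and three from Zone0, which matches the three extra flash blocks described in the example.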

4. Evaluation

4.1. Experimental Setup

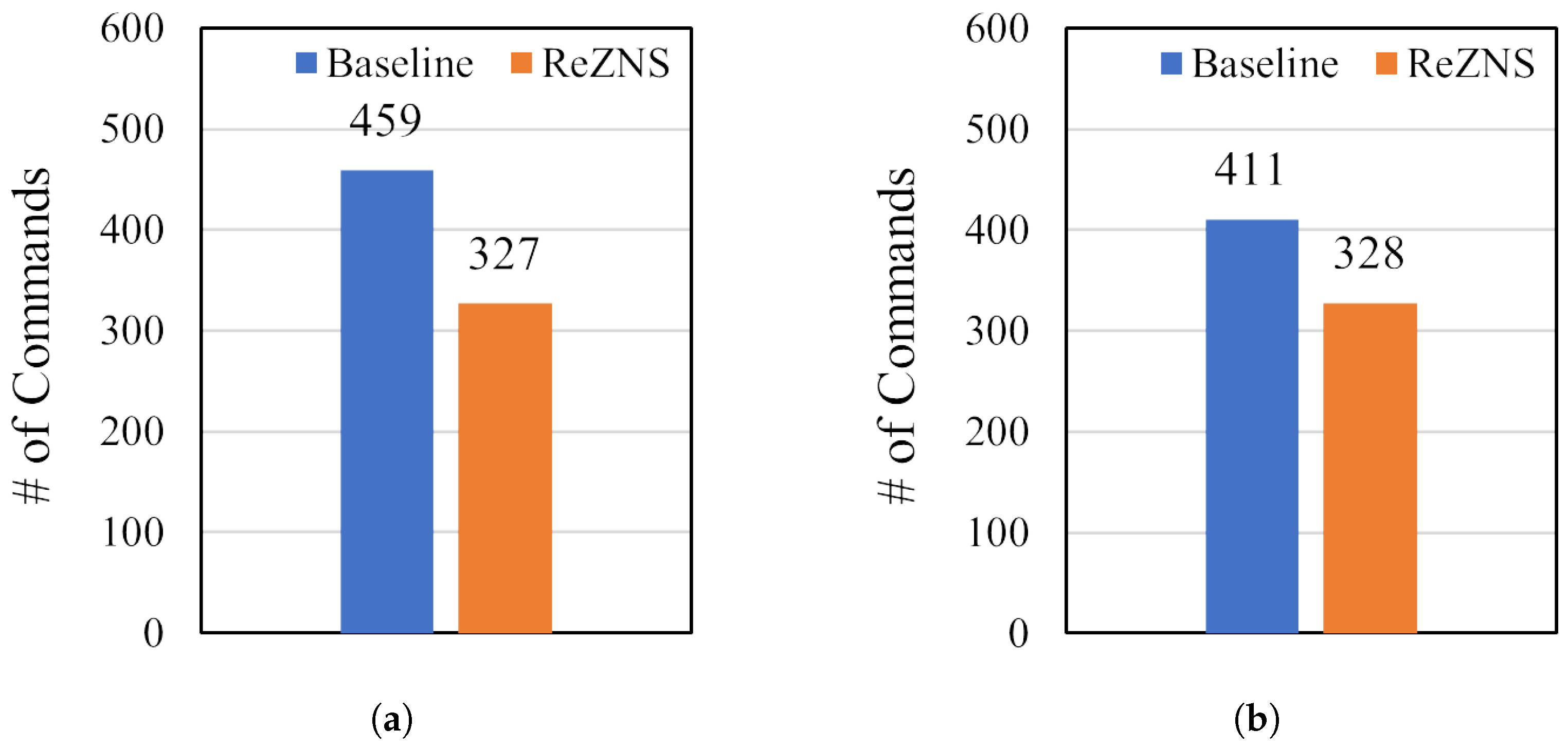

4.2. FIO Benchmark

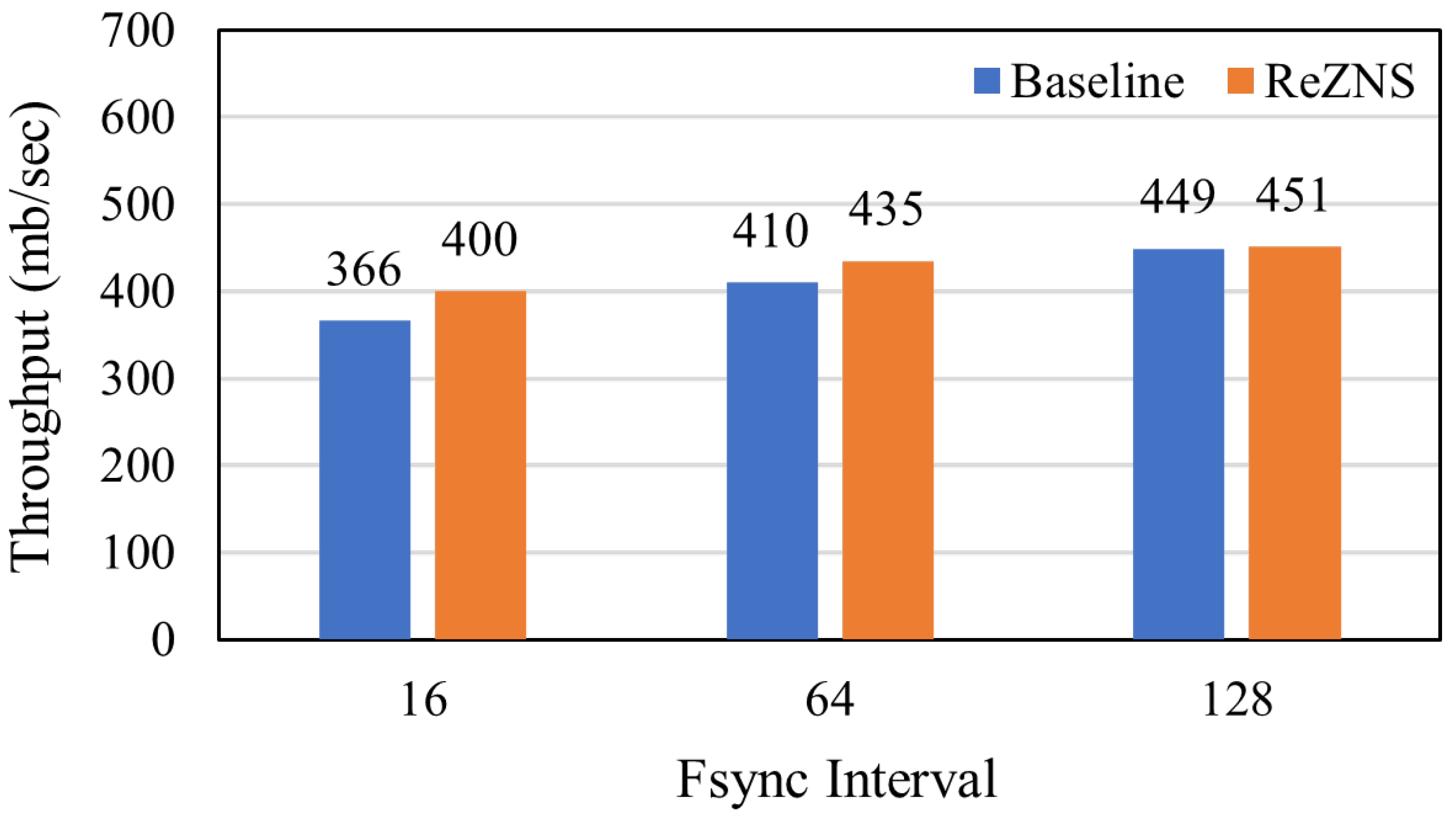

4.3. Filebench Benchmark

4.4. YCSB Benchmark

4.5. Energy Efficiency

5. Related Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SSD | Solid State Disk |
| HDD | Hard Disk Drive |
| ZNS | Zoned Namespace |
| ReZNS | Renewable-Zoned Namespace |
| DL | Deep Learning |
| MLC | Multi-Level Cell |
| TLC | Triple-Level Cell |
| QLC | Quadruple-Level Cell |
| FTL | Flash Translation Layer |
| GC | Garbage Collection |
| LFS | Log-structured Filesystem |
| Nzone | Normal Zone |
| Rzone | Renewable Zone |
| ReGC | Renewable-zone Garbage Collection |


| Item | Specification | Granularity |
|---|---|---|
| Capacity | 40 GiB | - |
| Page Size | 32 KiB | - |
| Block Size | 2 MiB | - |
| Zone Size | 64 MiB | - |
| Number of Zones | 640 | - |
| Flash Blocks per Zone | 32 | - |
| Read Latency | 47.2 µs | Page |
| Write Latency | 533 µs | Page |
| Erase Latency | 96 ms | Zone |
| Energy Consumption for Read | 679 nJ | Page |
| Energy Consumption for Write | 7.66 µJ | Page |
| Energy Consumption for Zone-reset | 1.38 mJ | Zone |
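As a quick consistency check on the specifications above, the derived geometry values can be recomputed from the capacity and the page, block, and zone sizes. The sketch below also estimates the cost of a given number of zone-resets from the per-zone erase latency and zone-reset energy in the table. The function name `reset_cost` is hypothetical, and the estimate assumes resets are independent and cost the same each time, which the source does not state.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB

# Values copied from the specification table.
capacity = 40 * GIB
page_size = 32 * KIB
block_size = 2 * MIB
zone_size = 64 * MIB

pages_per_block = block_size // page_size  # 64
blocks_per_zone = zone_size // block_size  # 32, matches "Flash Blocks per Zone"
num_zones = capacity // zone_size          # 640, matches "Number of Zones"

ZONE_RESET_ENERGY_MJ = 1.38  # energy per zone-reset, in millijoules
ERASE_LATENCY_MS = 96        # erase latency per zone, in milliseconds


def reset_cost(num_resets: int) -> tuple:
    """Return (energy in mJ, time in ms) for `num_resets` zone-resets.

    Assumption: each reset costs the same and resets do not overlap.
    """
    return num_resets * ZONE_RESET_ENERGY_MJ, num_resets * ERASE_LATENCY_MS


energy_mj, time_ms = reset_cost(100)
print(pages_per_block, blocks_per_zone, num_zones)
print(f"100 resets: about {energy_mj:.1f} mJ and {time_ms} ms")
```

Under this simple model, every avoided zone-reset saves 1.38 mJ and 96 ms, so a reduction in reset count translates linearly into energy and time savings.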


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lee, C.; Lee, S.; Moon, G.; Kim, H.; An, D.; Kang, D. ReZNS: Energy and Performance-Optimal Mapping Mechanism for ZNS SSD. Appl. Sci. 2024, 14, 9717. https://doi.org/10.3390/app14219717
