Real-Time Motion Adaptation with Spatial Perception for an Augmented Reality Character

Abstract

1. Introduction

2. Related Work

2.1. Interactive Augmented Reality Technologies

2.2. Augmented Reality (AR) Character

2.3. Motion Adaptation in AR Environments

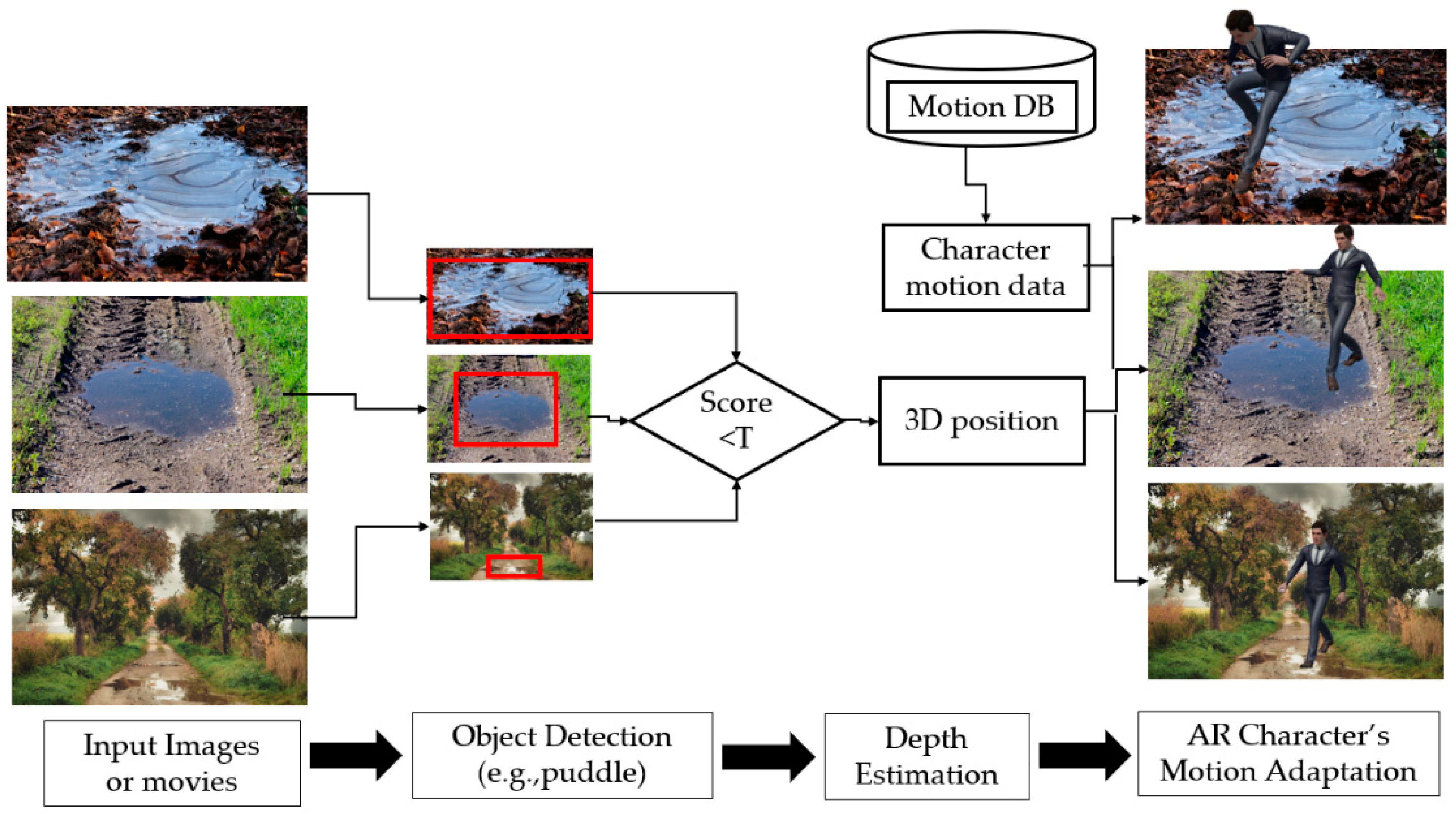

3. System Overview and Approach

4. Implementation

| Algorithm 1. Motion adaptation algorithm of the AR character, determined by the spatial properties of the real-world configuration |
| --- |
| Input: Original motions and the configuration of the AR character’s surrounding environment |
| Output: Adapted motions |
| Function Adapted_motion(detected_object, original_motion) |
| // Estimate the updated motion to account for detected objects |
| if motion_transition_value > detection_threshold |
| // Calculate the 3D position/rotation/scale factors to be superimposed |
| 3D_transformation ← depth maps from multi-view 2D images |
| for each motion m in all motions M |
| // Match the character behavior using fuzzy inference |
| updated_character_motion ← original_motion + detected_object |
| end for |
| end if |
| return adapted motion for the animation controller, 3D transformation data |
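The control flow of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the `DetectedObject` and `Transform3D` types, the `MOTION_RULES` table, the triangular membership function, the use of detector confidence as `motion_transition_value`, and all numeric values are hypothetical stand-ins. The depth-map step is reduced to reading a precomputed distance.

```python
from dataclasses import dataclass


@dataclass
class DetectedObject:
    label: str         # hypothetical class label, e.g. "chair"
    confidence: float  # detector score in [0, 1]
    distance_m: float  # distance to the character, assumed to come from a depth estimate
    height_m: float    # estimated object height in metres


@dataclass
class Transform3D:
    position: tuple
    rotation_deg: tuple
    scale: float


def triangular(x, left, peak, right):
    """Triangular fuzzy membership: 0 outside (left, right), 1 at peak."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


# Hypothetical rule base: each candidate motion prefers a range of object heights.
MOTION_RULES = {
    "step_over": (0.0, 0.15, 0.35),
    "sit": (0.25, 0.45, 0.70),
    "lean": (0.60, 0.90, 1.30),
}


def adapted_motion(obj, original_motion, detection_threshold=0.5):
    """Return (motion_name, transform); transform is None when nothing is adapted."""
    # Assumption: the detector confidence stands in for motion_transition_value.
    motion_transition_value = obj.confidence
    if motion_transition_value <= detection_threshold:
        return original_motion, None

    # Placeholder for the depth-derived transform: put the target at the object's distance.
    transform = Transform3D(
        position=(0.0, 0.0, obj.distance_m),
        rotation_deg=(0.0, 0.0, 0.0),
        scale=1.0,
    )

    # Score every candidate motion and keep the one with the highest membership.
    best_motion, best_score = original_motion, 0.0
    for motion, (left, peak, right) in MOTION_RULES.items():
        score = triangular(obj.height_m, left, peak, right)
        if score > best_score:
            best_motion, best_score = motion, score
    return best_motion, transform


chair = DetectedObject("chair", confidence=0.9, distance_m=1.2, height_m=0.45)
motion, transform = adapted_motion(chair, original_motion="walk")
```

In this sketch, a confidently detected object of chair-like height selects the hypothetical `sit` motion, while a detection below the threshold leaves the original motion unchanged and returns no transform. A fuller rule base would likely combine several inputs (distance, object class, surface orientation) rather than height alone.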

5. Experiments and Results

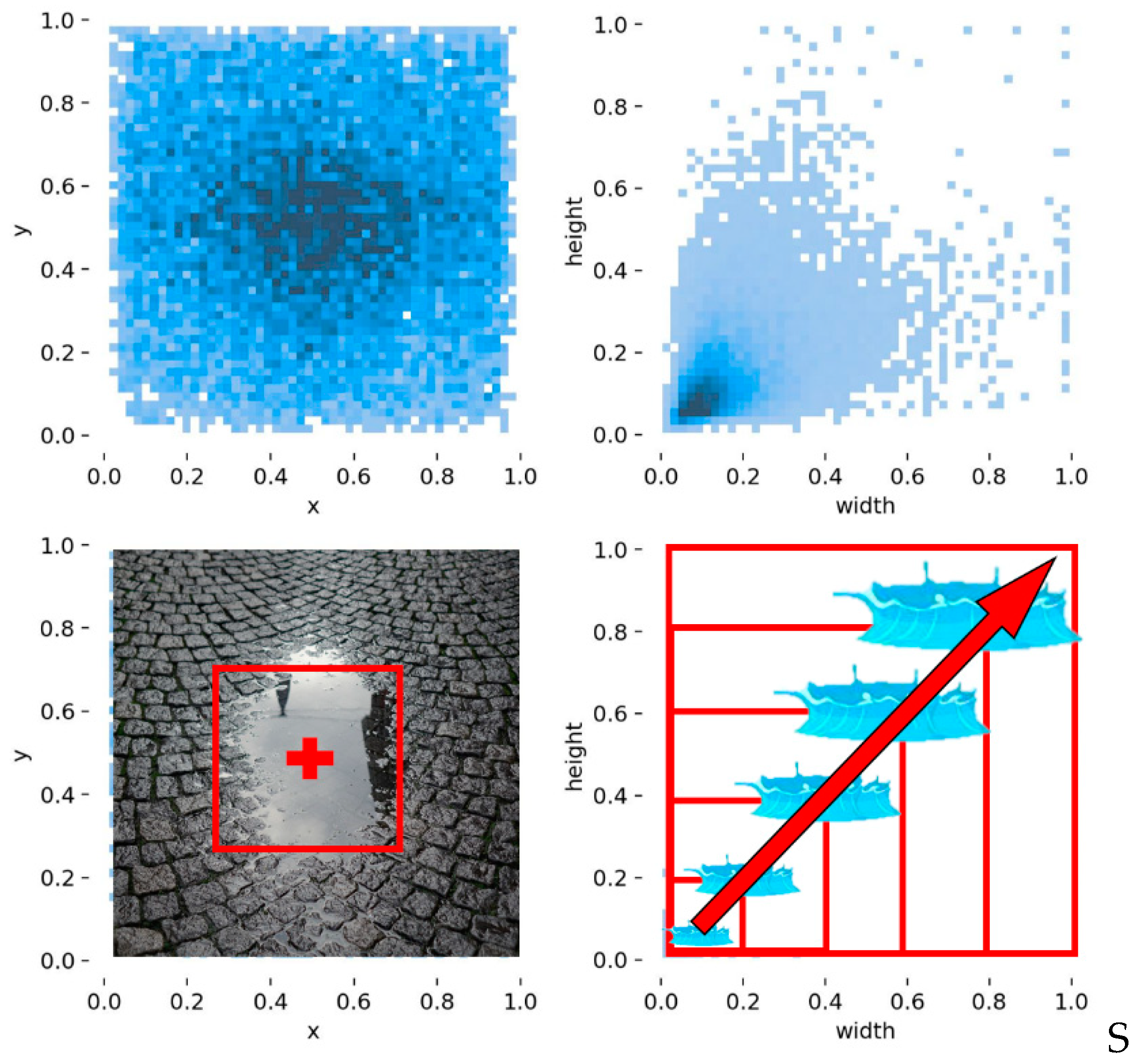

5.1. Experiment 1: Object Detection Accuracy for an AR Environment

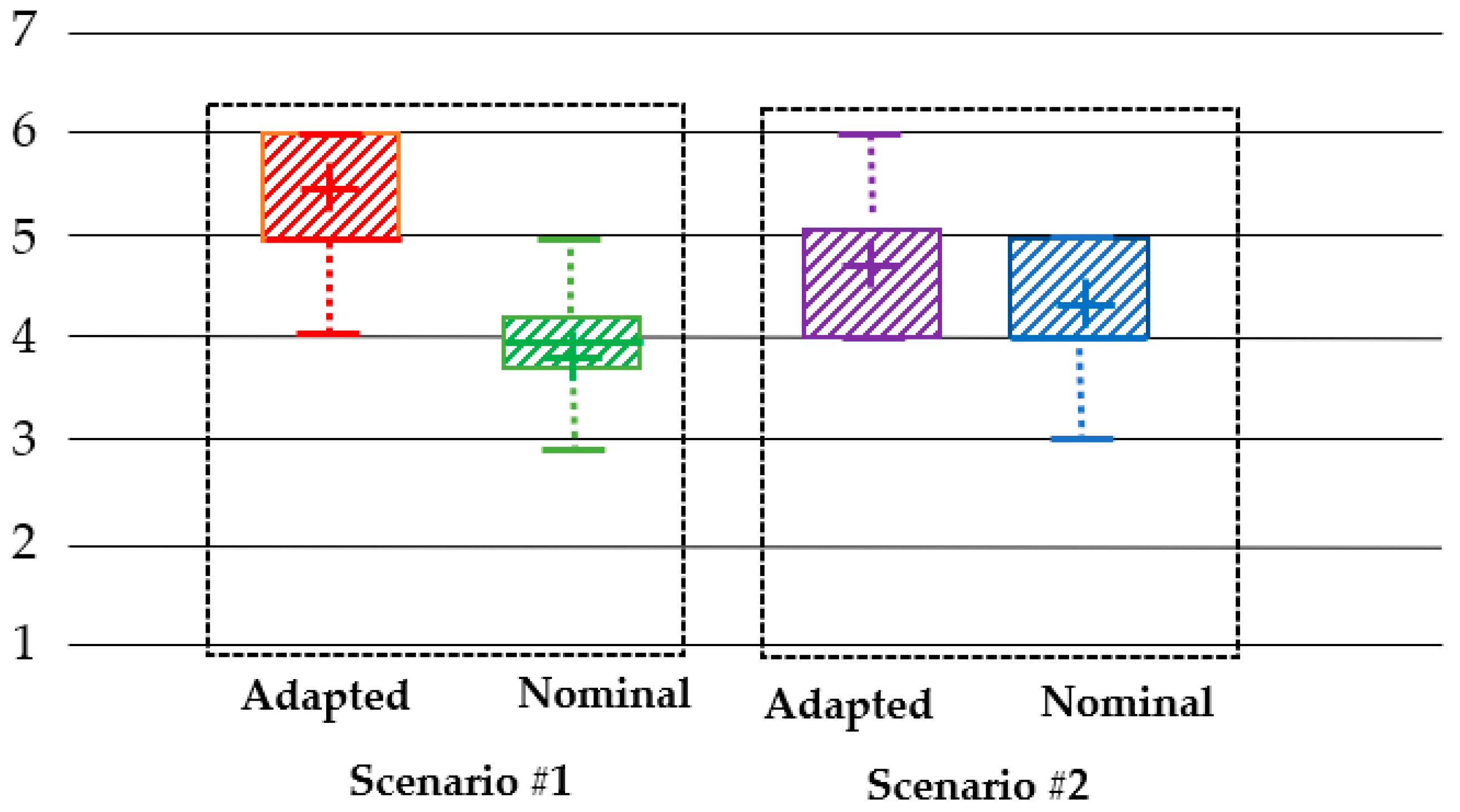

5.2. Experiment 2: Qualitative Comparison with Co-Presence

5.3. Discussion and Limitations

6. Conclusions and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, D.; Chae, H.; Kim, Y.; Choi, J.; Kim, K.-H.; Jo, D. Real-Time Motion Adaptation with Spatial Perception for an Augmented Reality Character. Appl. Sci. 2024, 14, 650. https://doi.org/10.3390/app14020650
