An Algorithm for Predicting Vehicle Behavior in High-Speed Scenes Using Visual and Dynamic Graphical Neural Network Inference

Abstract

1. Introduction

- (1) Visual and graph neural combination: integrating visual perception with a dynamic multi-relational graph convolutional network (DMR-GCN) to enhance behavior prediction in high-speed scenes;

- (2) Multilayer graph modeling: building a graph model that captures vehicle interactions at different spatio-temporal levels. Nodes represent vehicles or road objects, edges reflect real-time interactions, and DMR-GCN adjusts the edge weights dynamically;

- (3) Adaptive network tuning: introducing an adaptive mechanism in DMR-GCN that captures interactions and processes features in real time to improve prediction accuracy;

- (4) Dynamic scene learning: implementing real-time learning strategies based on vehicle position, speed, and road conditions to improve prediction accuracy and driving safety.

2. Related Work

2.1. Vehicle and Traffic Element Identification

2.2. Vehicle Behavior Understanding

2.3. Graph Neural Networks

2.4. Current Challenges and Future Directions of Work

- (1) Developing more robust graph neural network architectures that adapt to varying traffic complexity;

- (2) Integrating diverse data streams from multiple sources, such as radar, LiDAR, and telematics, to make models more comprehensive and accurate;

- (3) Investigating online learning and self-adaptive mechanisms that adjust dynamically to shifting traffic environments;

- (4) Optimizing computational resource usage to meet real-time application requirements.

3. Materials and Methods

- (1) The dynamic multilevel relational graph (DMRG) is designed to capture the complex dynamics of vehicle lane changes: multiple layers represent different spatio-temporal granularities, and edge weights are updated in real time;

- (2) The temporal interaction graph generation method captures temporal changes and complex interaction patterns, such as acceleration, hard braking, and behavior under challenging conditions (e.g., rain and nighttime). This improves the model's understanding of dynamic vehicle interactions and, in turn, its prediction accuracy and adaptability across diverse traffic scenarios;

- (3) The proposed architecture comprises a dynamic multi-relational graph convolutional network (DMR-GCN), a dynamic scene perception module, and an interactive learning mechanism.
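To make the idea of a temporal interaction graph concrete, the following minimal sketch builds one from per-frame vehicle positions. It is not the authors' construction: the function name `build_temporal_interaction_graph`, the `radius` threshold, the inverse-distance spatial weights, and the use of bird's-eye-view coordinates in metres are all illustrative assumptions. Spatial edges connect nearby vehicles within a frame, and temporal edges connect each vehicle to its own node in the previous frame.

```python
import math
from typing import Dict, List, Tuple

Node = Tuple[int, int]                    # (frame index, vehicle ID)
Edge = Tuple[Node, Node, str, float]      # (source, target, relation, weight)
Frame = Dict[int, Tuple[float, float]]    # vehicle ID -> BEV position in metres (assumed)


def build_temporal_interaction_graph(frames: List[Frame], radius: float = 30.0) -> List[Edge]:
    """Hypothetical builder: spatial edges within each frame, temporal edges between frames."""
    edges: List[Edge] = []
    for t, vehicles in enumerate(frames):
        ids = sorted(vehicles)
        # Spatial relation: vehicles closer than `radius`, weighted by inverse distance
        # so that nearer neighbours exert a stronger influence.
        for i, a in enumerate(ids):
            for b in ids[i + 1:]:
                d = math.dist(vehicles[a], vehicles[b])
                if d < radius:
                    w = 1.0 / (1.0 + d)
                    edges.append(((t, a), (t, b), "spatial", w))
                    edges.append(((t, b), (t, a), "spatial", w))
        # Temporal relation: link each vehicle to its own node in the previous frame.
        if t > 0:
            for v in ids:
                if v in frames[t - 1]:
                    edges.append(((t - 1, v), (t, v), "temporal", 1.0))
    return edges


# Two frames: vehicle 3 appears in frame 1, far ahead of the others.
frames = [
    {1: (0.0, 0.0), 2: (10.0, 3.5)},
    {1: (2.5, 0.0), 2: (12.0, 3.5), 3: (80.0, 0.0)},
]
edges = build_temporal_interaction_graph(frames)
```

Rebuilding the edge list every frame is the simplest way to obtain edge weights that track the scene in real time. Per-node features such as acceleration or braking state could be attached alongside the positions, but they are omitted here for brevity.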

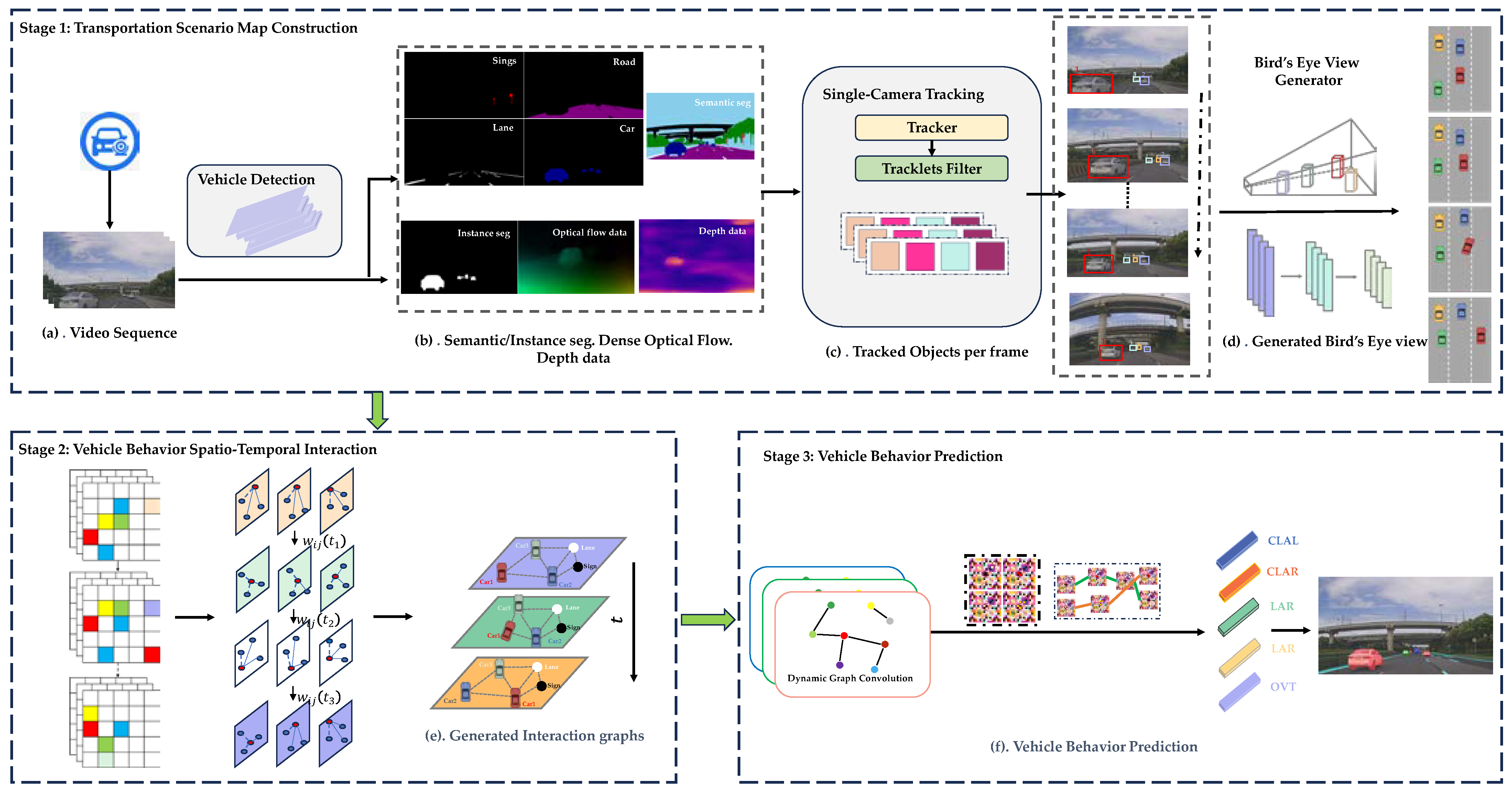

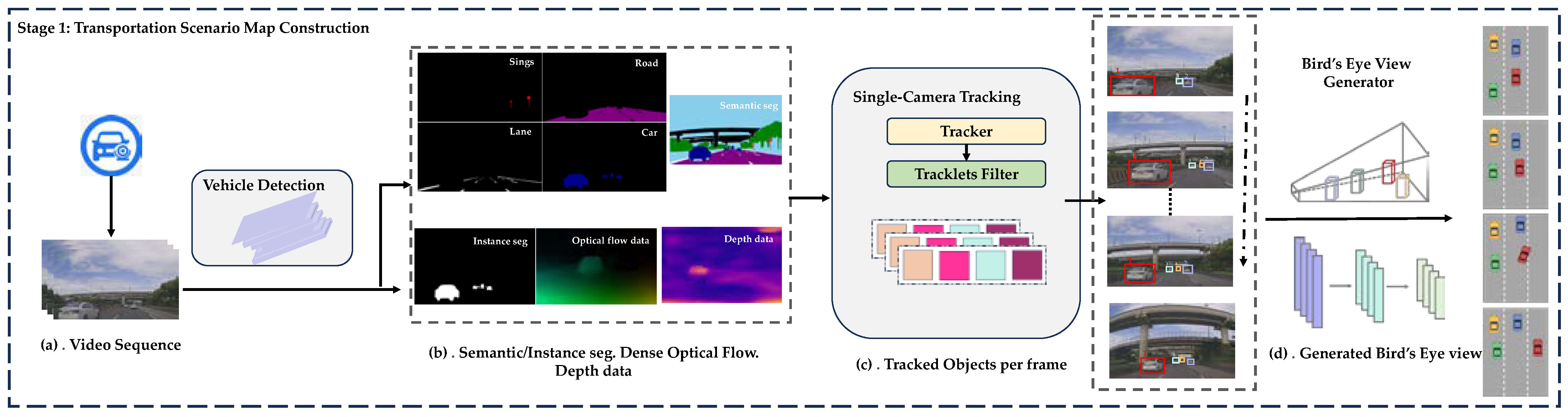

3.1. Traffic Scenario Map Construction

3.1.1. Object Tracking

3.1.2. Monocular to Bird’s-Eye View
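One common way to lift monocular detections onto the ground plane is inverse perspective mapping with a planar homography. The sketch below illustrates that general technique, not the paper's specific transform. It assumes a flat road and a pre-calibrated 3×3 homography `H`, which is a hypothetical input. Each detection box is reduced to its bottom-centre point, where the vehicle meets the road, and that point is projected into bird's-eye-view coordinates.

```python
from typing import List, Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]
Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def image_to_bev(H: Matrix3, u: float, v: float) -> Tuple[float, float]:
    """Map pixel (u, v) to ground-plane (x, y) using a 3x3 homography H.

    Valid only under a flat-road assumption: points off the road plane
    (e.g. a vehicle roof) are mapped to the wrong location.
    """
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("pixel maps to infinity (on or above the horizon)")
    return x / w, y / w  # divide out the homogeneous coordinate


def box_ground_contact(box: Box) -> Tuple[float, float]:
    """Bottom-centre of a detection box, i.e. roughly where the vehicle meets the road."""
    x1, y1, x2, y2 = box
    return (x1 + x2) / 2.0, y2


def detections_to_bev(H: Matrix3, boxes: Sequence[Box]) -> List[Tuple[float, float]]:
    """Project every detection's ground-contact point into bird's-eye-view coordinates."""
    return [image_to_bev(H, *box_ground_contact(b)) for b in boxes]
```

Using the bottom-centre point, rather than the box centre, keeps the projected point on the road plane. The homography is only exact for points on that plane.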

3.1.3. Spatial Scene Maps

3.2. Vehicle Behavior Spatio-Temporal Interaction

3.2.1. Dynamic Multi-Level Relationship Graph Modeling

3.2.2. Enhanced Temporal Interaction Graph Generation

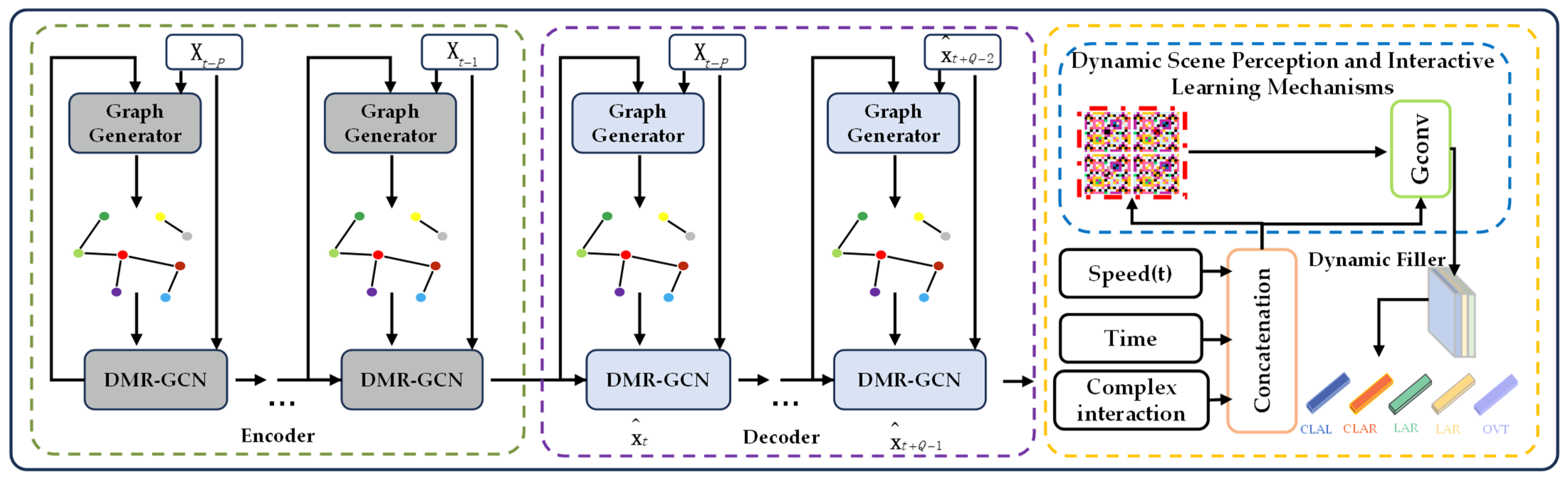

3.3. Vehicle Behavior Prediction

3.3.1. DMR-GCN

- (1) Adaptive Neighborhood Matrix Update Mechanism:
    - (1) Node feature conversion
    - (2) Similarity calculation
    - (3) Weight normalization
    - (4) Dynamic neighborhood matrix update
- (2) Multi-Relational Feature Fusion Mechanism:
    - (1) Multi-relational graph convolution
- (3) Feature Fusion
- (4) Representation of Interlayer Stacking and Multi-Hop Relationships
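The four steps of the adaptive neighborhood matrix update, followed by multi-relational convolution, fusion, and layer stacking, can be sketched in NumPy. Every concrete choice here is an assumption rather than the paper's formulation:

- a linear projection for feature conversion;
- scaled dot-product similarity;
- row-wise softmax for weight normalization;
- a momentum blend with coefficient `beta` for the dynamic update;
- an R-GCN-style per-relation convolution whose messages are fused by summation;
- a hypothetical fixed lane-adjacency relation alongside the learned one.

Weights are random placeholders, not trained parameters.

```python
import numpy as np


def softmax_rows(x: np.ndarray) -> np.ndarray:
    """Row-wise softmax, shifted by each row's maximum for numerical stability."""
    e = np.exp(x - x.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)


def update_adjacency(h: np.ndarray, w_proj: np.ndarray, a_prev: np.ndarray,
                     beta: float = 0.5) -> np.ndarray:
    """Adaptive neighbourhood update (all design choices are assumptions)."""
    z = h @ w_proj                               # (1) node feature conversion
    s = (z @ z.T) / np.sqrt(z.shape[1])          # (2) scaled dot-product similarity
    a_new = softmax_rows(s)                      # (3) weight normalisation: rows sum to 1
    return (1.0 - beta) * a_prev + beta * a_new  # (4) blend with the previous matrix


def multi_relational_conv(h: np.ndarray, adjs: list, w_rel: list,
                          w_self: np.ndarray) -> np.ndarray:
    """R-GCN-style layer: one weight matrix per relation, messages fused by summation."""
    out = h @ w_self                             # self-connection
    for a, w in zip(adjs, w_rel):
        deg = a.sum(axis=1, keepdims=True)
        a_norm = a / np.maximum(deg, 1e-12)      # row-normalise each relation
        out = out + a_norm @ h @ w
    return np.maximum(out, 0.0)                  # ReLU


rng = np.random.default_rng(0)
n, d = 4, 8                                      # 4 vehicles, 8 features each
h = rng.normal(size=(n, d))
a_prev = np.full((n, n), 1.0 / n)                # uniform previous adjacency
a_dyn = update_adjacency(h, rng.normal(size=(d, d)), a_prev)

# Hypothetical second relation: fixed lane adjacency along a chain of vehicles.
a_lane = np.array([[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]], dtype=float)

# Two stacked layers, so each vehicle sees neighbours up to two hops away.
x = h
for layer in range(2):
    w_rel = [0.1 * rng.normal(size=(d, d)) for _ in range(2)]
    x = multi_relational_conv(x, [a_dyn, a_lane], w_rel, 0.1 * rng.normal(size=(d, d)))
```

Because both the previous matrix and the softmax output are row-stochastic, their convex blend stays row-stochastic. This keeps the dynamically updated edge weights on a consistent scale from frame to frame. Stacking two layers is one way to read the "multi-hop relationships" item: on the lane chain, vehicle 0 receives information from vehicle 2 only after the second layer.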

3.3.2. Dynamic Scene Perception and Interactive Learning Mechanism

4. Experimentation and Analysis

4.1. Datasets

4.2. Qualitative Results

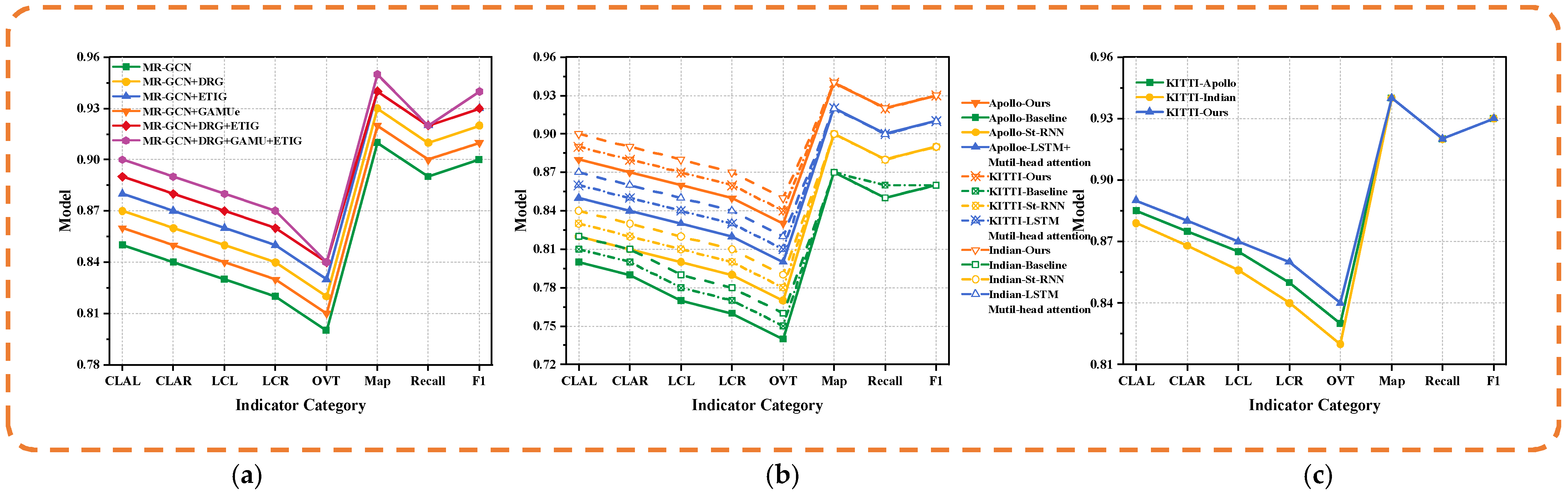

4.2.1. Ablation Experiments

4.2.2. Comparison Experiments

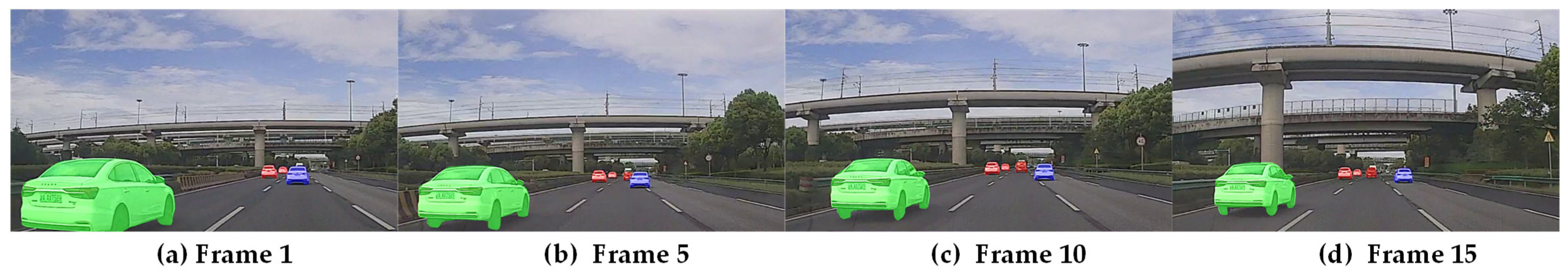

4.2.3. Visualization of Results

4.3. Transfer Learning

4.4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Architecture | CLAL | CLAR | LCL | LCR | OVT | mAP | Recall | F1 |
|---|---|---|---|---|---|---|---|---|
| MR-GCN | 0.85 | 0.84 | 0.83 | 0.82 | 0.80 | 0.91 | 0.89 | 0.90 |
| MR-GCN + DRG | 0.87 | 0.86 | 0.85 | 0.84 | 0.82 | 0.93 | 0.91 | 0.92 |
| MR-GCN + ETIG | 0.88 | 0.87 | 0.86 | 0.85 | 0.83 | 0.94 | 0.92 | 0.93 |
| MR-GCN + GAMU | 0.86 | 0.85 | 0.84 | 0.83 | 0.81 | 0.92 | 0.90 | 0.91 |
| MR-GCN + DRG + ETIG | 0.89 | 0.88 | 0.87 | 0.86 | 0.84 | 0.94 | 0.92 | 0.93 |
| MR-GCN + DRG + GAMU + ETIG | 0.89 | 0.88 | 0.87 | 0.86 | 0.84 | 0.94 | 0.92 | 0.93 |

| Train and test on | Apollo | | | | KITTI | | | | Indian | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Method | Baseline | St-RNN | LSTM + Multi-Head Attention | Ours | Baseline | St-RNN | LSTM + Multi-Head Attention | Ours | Baseline | St-RNN | LSTM + Multi-Head Attention | Ours |
| Action | | | | | | | | | | | | |
| CLAL | 0.80 | 0.82 | 0.85 | 0.88 | 0.81 | 0.83 | 0.86 | 0.89 | 0.82 | 0.84 | 0.87 | 0.90 |
| CLAR | 0.79 | 0.81 | 0.84 | 0.87 | 0.80 | 0.82 | 0.85 | 0.88 | 0.81 | 0.83 | 0.86 | 0.89 |
| LCL | 0.77 | 0.80 | 0.83 | 0.86 | 0.78 | 0.81 | 0.84 | 0.87 | 0.79 | 0.82 | 0.85 | 0.88 |
| LCR | 0.76 | 0.79 | 0.82 | 0.85 | 0.77 | 0.80 | 0.83 | 0.86 | 0.78 | 0.81 | 0.84 | 0.87 |
| OVT | 0.74 | 0.77 | 0.80 | 0.83 | 0.75 | 0.78 | 0.81 | 0.84 | 0.76 | 0.79 | 0.82 | 0.85 |
| mAP | 0.87 | 0.90 | 0.92 | 0.94 | 0.87 | 0.90 | 0.92 | 0.94 | 0.87 | 0.90 | 0.92 | 0.94 |
| Recall | 0.85 | 0.88 | 0.90 | 0.92 | 0.86 | 0.88 | 0.90 | 0.92 | 0.85 | 0.88 | 0.90 | 0.92 |
| F1 | 0.86 | 0.89 | 0.91 | 0.93 | 0.86 | 0.89 | 0.91 | 0.93 | 0.86 | 0.89 | 0.91 | 0.93 |
| IT (ms) | 50 | 45 | 40 | 35 | 52 | 46 | 41 | 36 | 51 | 44 | 39 | 34 |
| FPS | 20 | 22.2 | 25 | 28.6 | 19.2 | 21.7 | 24.4 | 27.8 | 19.6 | 22.7 | 25.6 | 29.4 |
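The IT and FPS rows of the comparison table are mutually consistent if IT is a per-frame inference time in milliseconds and FPS = 1000 / IT. The table does not state the unit, so the millisecond reading is an inference from this relationship. The short check below recomputes FPS from every IT value in the table, rounds to one decimal place, and collects any entries that disagree.

```python
# IT and FPS values copied from the comparison table, in column order
# (Apollo, KITTI, Indian; each: Baseline, St-RNN, LSTM + Multi-Head Attention, Ours).
IT_MS = [50, 45, 40, 35, 52, 46, 41, 36, 51, 44, 39, 34]
FPS_TABLE = [20, 22.2, 25, 28.6, 19.2, 21.7, 24.4, 27.8, 19.6, 22.7, 25.6, 29.4]


def fps_from_inference_time(it_ms: float) -> float:
    """Frames per second for a per-frame inference time given in milliseconds."""
    return 1000.0 / it_ms


# Entries where the recomputed, rounded FPS differs from the reported FPS.
mismatches = [(it, fps) for it, fps in zip(IT_MS, FPS_TABLE)
              if round(fps_from_inference_time(it), 1) != fps]
print(mismatches)  # → []
```

An empty list means every FPS entry is simply the reciprocal of the corresponding inference time. FPS therefore adds no information beyond IT.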

| Train on | KITTI | | |
|---|---|---|---|
| Test on | Apollo | Indian | Ours |
| CLAL | 0.88 | 0.87 | 0.89 |
| CLAR | 0.87 | 0.86 | 0.88 |
| LCL | 0.86 | 0.85 | 0.87 |
| LCR | 0.85 | 0.84 | 0.86 |
| OVT | 0.83 | 0.82 | 0.84 |
| mAP | 0.94 | 0.94 | 0.94 |
| Recall | 0.92 | 0.92 | 0.92 |
| F1 | 0.93 | 0.93 | 0.93 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, M.; Liu, M.; Zhang, W.; Guo, W.; Chen, E.; Hu, C.; Zhang, M. An Algorithm for Predicting Vehicle Behavior in High-Speed Scenes Using Visual and Dynamic Graphical Neural Network Inference. Appl. Sci. 2024, 14, 8873. https://doi.org/10.3390/app14198873

Li M, Liu M, Zhang W, Guo W, Chen E, Hu C, Zhang M. An Algorithm for Predicting Vehicle Behavior in High-Speed Scenes Using Visual and Dynamic Graphical Neural Network Inference. Applied Sciences. 2024; 14(19):8873. https://doi.org/10.3390/app14198873

Chicago/Turabian Style: Li, Menghao, Miao Liu, Weiwei Zhang, Wenfeng Guo, Enqing Chen, Chunguang Hu, and Maomao Zhang. 2024. "An Algorithm for Predicting Vehicle Behavior in High-Speed Scenes Using Visual and Dynamic Graphical Neural Network Inference" Applied Sciences 14, no. 19: 8873. https://doi.org/10.3390/app14198873

APA Style: Li, M., Liu, M., Zhang, W., Guo, W., Chen, E., Hu, C., & Zhang, M. (2024). An Algorithm for Predicting Vehicle Behavior in High-Speed Scenes Using Visual and Dynamic Graphical Neural Network Inference. Applied Sciences, 14(19), 8873. https://doi.org/10.3390/app14198873