Emotional Temperature for the Evaluation of Speech in Patients with Alzheimer’s Disease through an Automatic Interviewer

Abstract

1. Introduction

Related Works

2. Materials and Methods

2.1. Method

2.1.1. Calculation of Emotional Temperature

2.1.2. Descriptive Statistics

- Discrete emotional temperature ($ET_D$): the discrete emotional temperature of the recording (see Section 2.1.1);

- Average of the continuous emotional temperature ($\mu_{ET}$): computed from the continuous emotional temperature vector, it is the mean of the ET values of the sound fragments of a recording. It is estimated with the following estimator of the arithmetic mean [42]:

$$\mu_{ET} = \frac{1}{N}\sum_{i=1}^{N} ET_i,$$

where $ET_i$ is the ET value of the $i$-th of the $N$ fragments into which each voice recording is divided;

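- Variance in the continuous emotional temperature ($\sigma^2_{ET}$): computed from the continuous emotional temperature vector, it describes how much the ET values vary across the fragments of a recording. It is estimated with the following estimator of the variance [42]:

$$\sigma^2_{ET} = \frac{1}{N-1}\sum_{i=1}^{N}\left(ET_i - \mu_{ET}\right)^2,$$

where $ET_i$ is the ET value of fragment $i$, $\mu_{ET}$ is the average continuous ET and $N$ is the number of fragments;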

- Skewness of the continuous emotional temperature ($\gamma_{ET}$): computed from the continuous emotional temperature vector, this measure characterises the shape of the probability distribution of the ET values of the fragments. It quantifies [43] the lack of symmetry of that distribution around the average ET. Positive values of $\gamma_{ET}$ indicate a distribution skewed to the right (a longer right tail), and negative values a distribution skewed to the left. The skewness of the ET of speech is calculated using the following estimator:

$$\gamma_{ET} = \frac{\frac{1}{N}\sum_{i=1}^{N}\left(ET_i - \mu_{ET}\right)^3}{\left(\sigma^2_{ET}\right)^{3/2}},$$

where $ET_i$ is the ET value of each sound fragment, $\mu_{ET}$ is the average of the ET values, $\sigma^2_{ET}$ is the variance in the ET values, and $N$ is the number of sound fragments in the sample of speech;

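- Kurtosis of the continuous emotional temperature ($\kappa_{ET}$): computed from the continuous emotional temperature vector, this measure characterises another aspect of the shape of the probability distribution of the ET values of the fragments, namely how strongly the ET values of a recording concentrate around the average ET ($\mu_{ET}$) relative to the tails of the distribution. The larger the value of $\kappa_{ET}$, the steeper the distribution curve; a normal distribution has a kurtosis of 3. $\kappa_{ET}$ is calculated using the following estimator [43]:

$$\kappa_{ET} = \frac{\frac{1}{N}\sum_{i=1}^{N}\left(ET_i - \mu_{ET}\right)^4}{\left(\sigma^2_{ET}\right)^{2}},$$

where $ET_i$, $\sigma^2_{ET}$ and $N$ denote the fragment ET values, their variance and the number of fragments, as for the skewness.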
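
The four descriptors of the continuous ET vector can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: it assumes the per-fragment ET values are already available as a list (the ET computation of Section 2.1.1 is not reproduced), uses the 1/(N−1) variance estimator, and scales the 1/N third and fourth central moments by that variance. The function name `et_descriptors` is hypothetical.

```python
import math
from typing import Dict, Sequence


def et_descriptors(et: Sequence[float]) -> Dict[str, float]:
    """Mean, variance, skewness and kurtosis of a continuous ET vector.

    `et` holds one emotional temperature value per sound fragment
    (hypothetical input format; computing ET itself is out of scope).
    """
    n = len(et)
    if n < 2:
        raise ValueError("at least two fragments are needed")
    mean = sum(et) / n
    # Assumed unbiased variance estimator, 1/(N-1).
    variance = sum((x - mean) ** 2 for x in et) / (n - 1)
    if variance == 0:
        raise ValueError("skewness and kurtosis are undefined for a constant ET vector")
    # Third and fourth central moments (1/N), scaled by the variance.
    m3 = sum((x - mean) ** 3 for x in et) / n
    m4 = sum((x - mean) ** 4 for x in et) / n
    return {
        "mean": mean,
        "variance": variance,
        "skewness": m3 / math.pow(variance, 1.5),
        "kurtosis": m4 / variance ** 2,
    }


# Toy vector (not study data): three cold fragments and one hot one,
# which gives a long right tail and therefore a positive skewness.
print(et_descriptors([0.0, 0.0, 0.0, 10.0]))
```

Because skewness and kurtosis are normalised by the variance, they describe only the shape of the fragment-level ET distribution and are unaffected by its overall level or spread.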

2.1.3. Univariate Analysis

2.1.4. Multivariate Analysis

2.1.5. Feature Selection

2.2. Materials

Database

3. Results

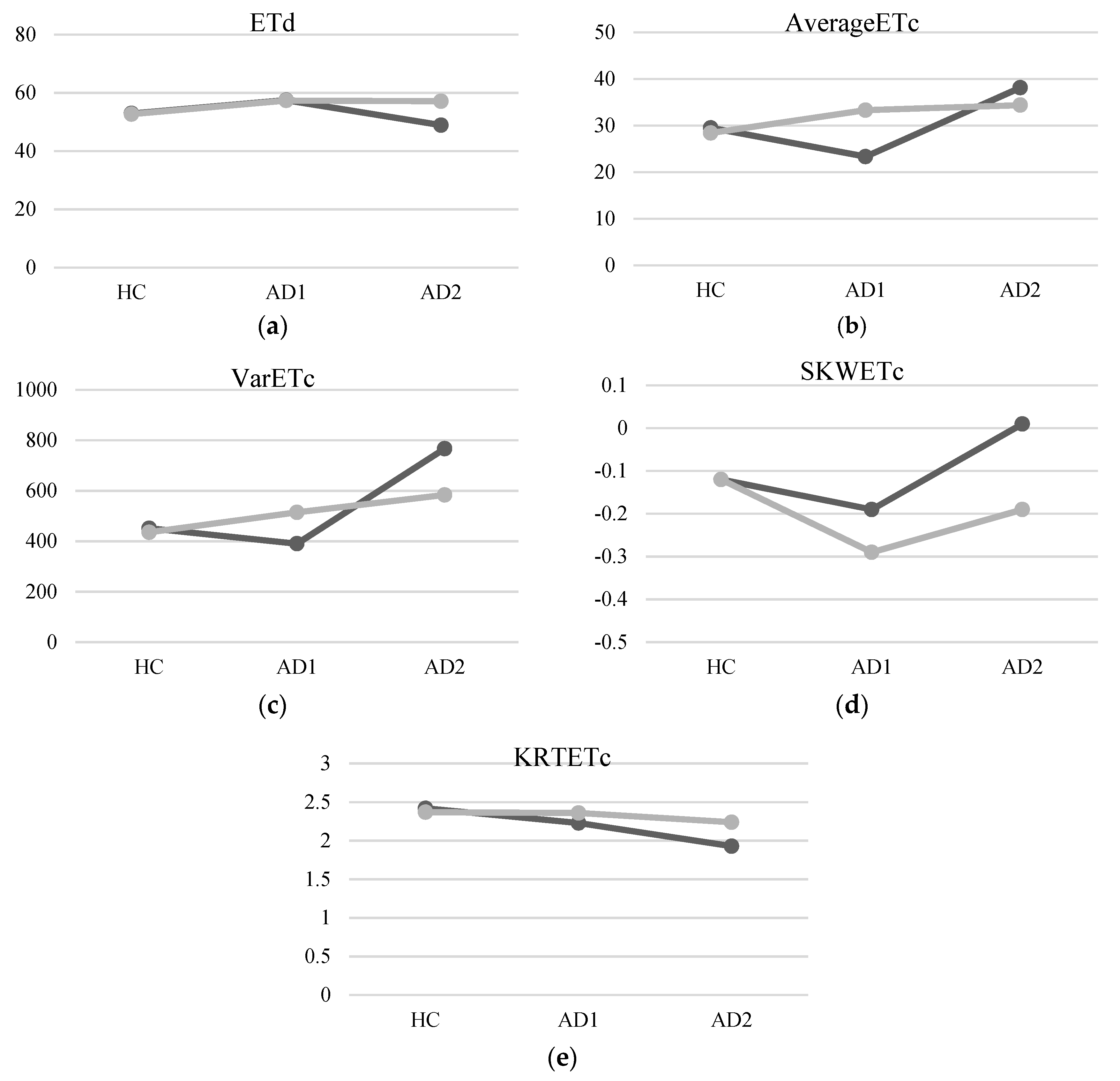

3.1. Univariate Analysis

3.1.1. Descriptive Statistical Analysis

3.1.2. Parametric Analysis

3.1.3. Nonparametric Analysis

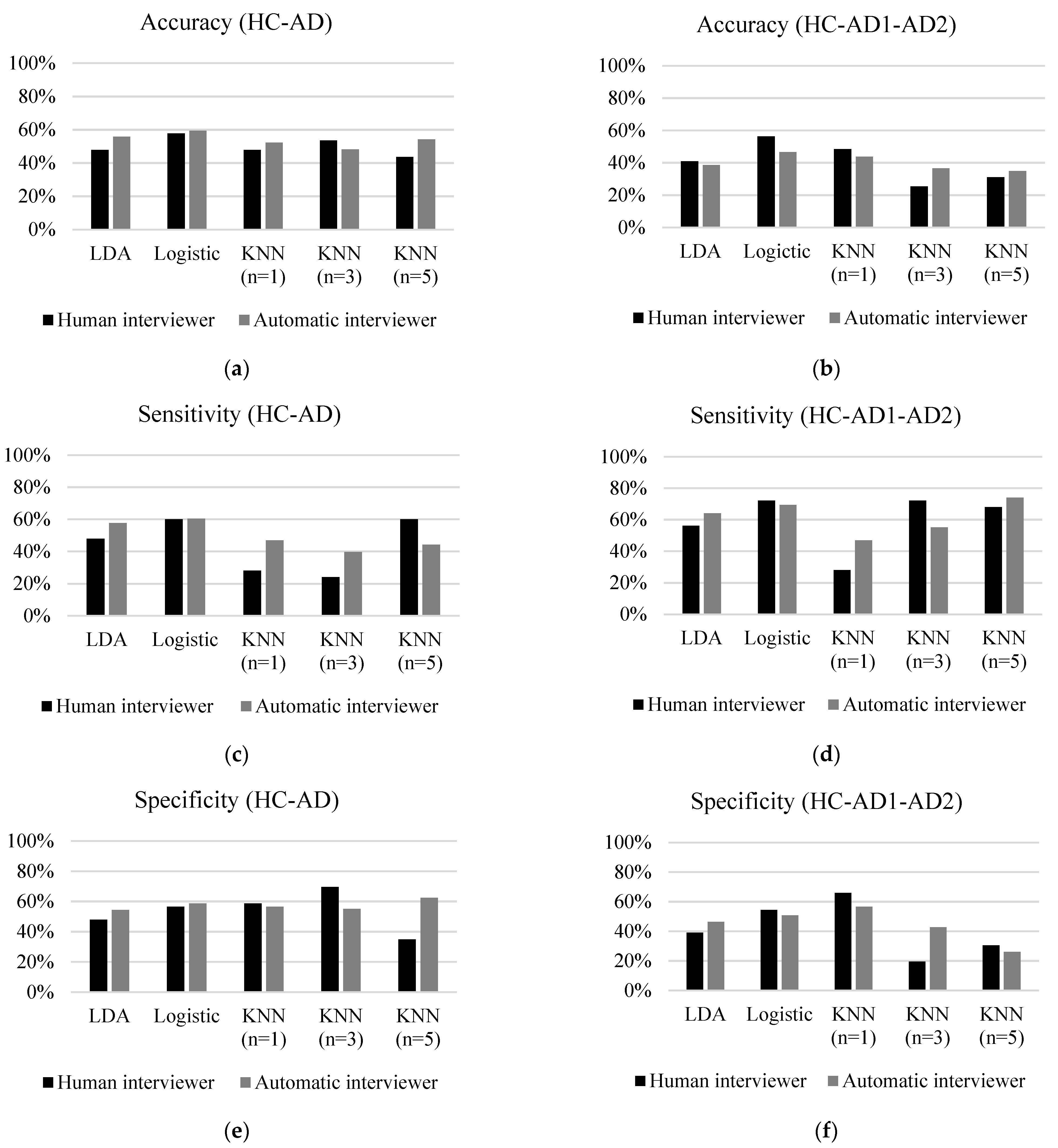

3.2. Multivariate Analysis

3.2.1. Multivariate Classification Based on the Presence or Absence of Disease

3.2.2. Multivariate Classification Based on Different Grades of the Disease

3.2.3. Multivariate Classification MANOVA

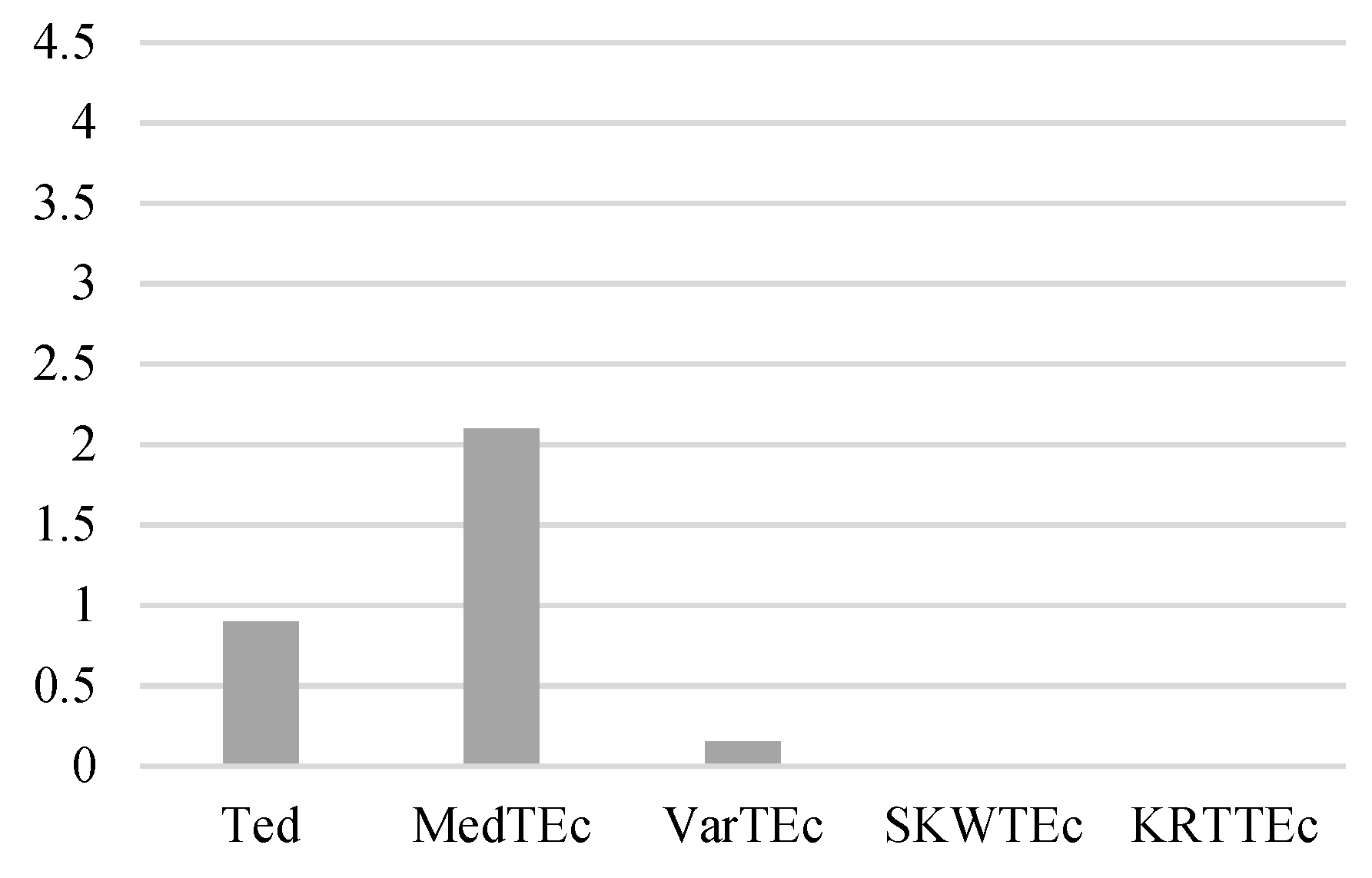

3.3. Feature Selection

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Molinuevo, L. Role of biomarkers in the early diagnosis of Alzheimer’s disease. Rev. Esp. Geriatr. Gerontol. 2011, 46 (Suppl. 1), 39–41.

- Guix, J.L.M. Papel de los biomarcadores en el diagnóstico precoz de la enfermedad de Alzheimer [Role of biomarkers in the early diagnosis of Alzheimer’s disease]. Rev. Esp. Geriatr. Gerontol. 2011, 46, 39–41.

- Andersen, C.K.; Wittrup-Jensen, K.U.; Lolk, A.; Andersen, K.; Kragh-Sørensen, P. Ability to perform activities of daily living is the main factor affecting quality of life in patients with dementia. Health Qual. Life Outcomes 2004, 2, 52.

- Alzheimer’s Association. 2017 Alzheimer’s disease facts and figures. Alzheimer’s Dement. 2017, 13, 325–373.

- Laske, C.; Sohrabi, H.R.; Frost, S.M.; López-de-Ipiña, K.; Garrard, P.; Buscema, M. Innovative diagnostic tools for early detection of Alzheimer’s disease. Alzheimer’s Dement. 2015, 11, 561–578.

- Bäckman, L.; Jones, S.; Berger, A.-K.; Laukka, J.E.; Small, B.J. Cognitive impairment in preclinical Alzheimer’s disease: A meta-analysis. Neuropsychology 2005, 19, 520–531.

- Deramecourt, V.; Lebert, F.; Debachy, B.; Mackowiak-Cordoliani, M.A.; Bombois, S.; Kerdraon, O.; Buée, L.; Maurage, C.-A.; Pasquier, F. Prediction of pathology in primary progressive language and speech disorders. Neurology 2010, 74, 42–49.

- McKhann, G.M.; Knopman, D.S.; Chertkow, H.; Hyman, B.T.; Jack, C.R., Jr.; Kawas, C.H.; Klunk, W.E.; Koroshetz, W.J.; Manly, J.J.; Mayeux, R.; et al. The diagnosis of dementia due to Alzheimer’s disease: Recommendations from the National Institute on Aging-Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimer’s Dement. 2011, 7, 263–269.

- Barragán-Pulido, M.L.; Alonso-Hernández, J.B.; Ferrer-Ballester, M.A.; Travieso-González, C.M.; Mekyska, J.; Smékal, Z. Alzheimer’s disease and automatic speech analysis: A review. Expert Syst. Appl. 2020, 150, 113213.

- Kim, Y.; Lee, H.; Provost, E.M. Deep learning for robust feature generation in audiovisual emotion recognition. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013; pp. 3687–3691.

- Khodabakhsh, A.; Yesil, F.; Guner, E.; Demiroglu, C. Evaluation of linguistic and prosodic features for detection of Alzheimer’s disease in Turkish conversational speech. EURASIP J. Audio Speech Music Process. 2015, 2015, 9.

- Tanaka, H.; Adachi, H.; Ukita, N.; Kudo, T.; Nakamura, S. Automatic detection of very early stage of dementia through multimodal interaction with computer avatars. In Proceedings of the 18th ACM International Conference on Multimodal Interaction (ICMI 2016), Tokyo, Japan, 12–16 November 2016; pp. 261–265.

- Rentoumi, V.; Paliouras, G.; Danasi, E.; Arfani, D.; Fragkopoulou, K.; Varlokosta, S.; Papadatos, S. Automatic detection of linguistic indicators as a means of early detection of Alzheimer’s disease and of related dementias: A computational linguistics analysis. In Proceedings of the 8th IEEE International Conference on Cognitive Infocommunications (CogInfoCom), Debrecen, Hungary, 11–14 September 2017; pp. 33–38.

- Winblad, B.; Amouyel, P.; Andrieu, S.; Ballard, C. Defeating Alzheimer’s disease and other dementias: A priority for European science and society. Lancet Neurol. 2016, 15, 455–532.

- Farrús, M.; Codina-Filbà, J. Combining Prosodic, Voice Quality and Lexical Features to Automatically Detect Alzheimer’s Disease. arXiv 2020, arXiv:2011.09272v1.

- Park, C.-Y.; Kim, M.; Shim, Y.; Ryoo, N.; Choi, H.; Jeong, H.T.; Yun, G.; Lee, H.; Kim, H.; Kim, S.; et al. Harnessing the Power of Voice: A Deep Neural Network Model for Alzheimer’s Disease Detection. Dement. Neurocogn. Disord. 2024, 23, 1.

- Hajjar, I.; Okafor, M.; Choi, J.D.; Moore, E.; Abrol, A.; Calhoun, V.D.; Goldstein, F.C. Development of digital voice biomarkers and associations with cognition, cerebrospinal biomarkers, and neural representation in early Alzheimer’s disease. Alzheimer’s Dement. Diagn. Assess. Dis. Monit. 2023, 15, e12393.

- Liu, J.; Fu, F.; Li, L.; Yu, J.; Zhong, D.; Zhu, S.; Zhou, Y.; Liu, B.; Li, J. Efficient Pause Extraction and Encode Strategy for Alzheimer’s Disease Detection Using Only Acoustic Features from Spontaneous Speech. Brain Sci. 2023, 13, 477.

- Campbell, E.L.; Mesía, R.Y.; Docío-Fernández, L.; García-Mateo, C. Paralinguistic and linguistic fluency features for Alzheimer’s disease detection. Comput. Speech Lang. 2021, 68, 101198.

- Cowie, R.; Cornelius, R.R. Describing the emotional states that are expressed in speech. Speech Commun. 2003, 40, 5–32.

- Chavhan, Y.D.; Yelure, B.S.; Tayade, K.N. Speech emotion recognition using RBF kernel of LIBSVM. In Proceedings of the 2015 2nd International Conference on Electronics and Communication Systems (ICECS), Coimbatore, India, 26–27 February 2015; pp. 1132–1135.

- Balti, H.; Elmaghraby, A.S. Emotion analysis from speech using temporal contextual trajectories. In Proceedings of the 2014 IEEE Symposium on Computers and Communications (ISCC), Madeira, Portugal, 23–26 June 2014; pp. 1–7.

- Laukka, P. Vocal Expression of Emotion: Discrete-Emotions and Dimensional Accounts. Ph.D. Thesis, Uppsala Universitet, Uppsala, Sweden, 2004.

- Alonso, J.B.; Cabrera, J.; Medina, M.; Travieso, C.M. New approach in quantification of emotional intensity from the speech signal: Emotional temperature. Expert Syst. Appl. 2015, 42, 9554–9564.

- Goudbeek, M.; Scherer, K. Beyond arousal: Valence and potency/control cues in the vocal expression of emotion. J. Acoust. Soc. Am. 2010, 128, 1322.

- Kwon, O.W.; Chan, K.; Hao, J.; Lee, T.W. Emotion recognition by speech signals. In Proceedings of the Eighth European Conference on Speech Communication and Technology, Geneva, Switzerland, 1–4 September 2003.

- Lee, C.; Narayanan, S. Emotion recognition using a data-driven fuzzy inference system. In Proceedings of the Eighth European Conference on Speech Communication and Technology, Geneva, Switzerland, 1–4 September 2003.

- Harimi, A.; Shahzadi, A.; Ahmadyfard, A. Recognition of emotion using non-linear dynamics of speech. In Proceedings of the 7th International Symposium on Telecommunications (IST’2014), Tehran, Iran, 9–11 September 2014; pp. 446–451.

- Altun, H.; Polat, G. Boosting selection of speech related features to improve performance of multi-class SVMs in emotion detection. Expert Syst. Appl. 2009, 36, 8197–8203.

- Amlerova, J.; Laczó, J.; Nedelska, Z.; Laczó, M.; Vyhnálek, M.; Zhang, B.; Sheardova, K.; Angelucci, F.; Andel, R. Emotional prosody recognition is impaired in Alzheimer’s disease. Alzheimer’s Res. Ther. 2022, 14, 50.

- Bhaduri, S.; Bhaduri, A.; Sarkar, R.; Analytics, M. Language Independent Speech Emotion and Non-invasive Early Detection of Neurocognitive Disorder. arXiv 2021, arXiv:2106.01684v1.

- Gong, Y.; Yang, L.; Zhang, J.; Chen, Z.; He, S.; Zhang, X.; Zhang, W. Using Speech Emotion Recognition as a Longitudinal Biomarker for Alzheimer’s Disease. Int. J. Biomed. Biol. Eng. 2023, 17, 267–272. Available online: https://publications.waset.org/10013336/using-speech-emotion-recognition-as-a-longitudinal-biomarker-for-alzheimers-disease (accessed on 9 June 2024).

- Bernieri, G.; Duarte, J.C. Identificação da Doença de Alzheimer Através da Fala Utilizando Reconhecimento de Emoções [Identification of Alzheimer’s disease through speech using emotion recognition]. J. Health Informatics 2023, 15, 1–14.

- López-De-Ipiña, K.; Alonso, J.B.; Solé-Casals, J.; Barroso, N.; Henriquez, P.; Faundez-Zanuy, M.; Travieso, C.M.; Ecay-Torres, M.; Martínez-Lage, P.; Eguiraun, H. On Automatic Diagnosis of Alzheimer’s Disease Based on Spontaneous Speech Analysis and Emotional Temperature. Cognit. Comput. 2015, 7, 44–55.

- Lopez-de-Ipiña, K.; Alonso, J.B.; Barroso, N.; Faundez-Zanuy, M.; Ecay, M.; Solé-Casals, J.; Travieso, C.M.; Estanga, A. New Approaches for Alzheimer’s Disease Diagnosis Based on Automatic Spontaneous Speech Analysis and Emotional Temperature. In Ambient Assisted Living and Home Care. IWAAL 2012. Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2012; Volume 7657, pp. 407–414.

- López de Ipiña, K.; Alonso, J.B.; Solé-Casals, J.; Barroso, N.; Faundez, M.; Ecay, M.; Travieso, C.; Ezeiza, A.; Estanga, A. Alzheimer disease diagnosis based on automatic spontaneous speech analysis. In Proceedings of the IJCCI 2012: 4th International Joint Conference on Computational Intelligence, Barcelona, Spain, 5–7 October 2012; pp. 698–705.

- López-De-Ipiña, K.; Alonso-Hernández, J.; Solé-Casals, J.; Travieso-González, C.; Ezeiza, A.; Faúndez-Zanuy, M.; Calvo, P.; Beitia, B. Feature selection for automatic analysis of emotional response based on nonlinear speech modeling suitable for diagnosis of Alzheimer’s disease. Neurocomputing 2015, 150, 392–401.

- Barragán Pulido, M.L. Avances en el Análisis del Habla Mediante Sistemas Conversacionales Automáticos Aplicados a la Enfermedad de Alzheimer [Advances in speech analysis using automatic conversational systems applied to Alzheimer’s disease]. Ph.D. Thesis, University of Murcia, Murcia, Spain, 2022.

- Alonso-Hernández, J.B.; Barragán-Pulido, M.L.; Gil-Bordón, J.M.; Ferrer-Ballester, M.Á.; Travieso-González, C.M. Using a Human Interviewer or an Automatic Interviewer in the Evaluation of Patients with AD from Speech. Appl. Sci. 2021, 11, 3228.

- De Cheveigné, A.; Kawahara, H. YIN, a fundamental frequency estimator for speech and music. J. Acoust. Soc. Am. 2002, 111, 1917–1930.

- Lazar, N.A. Basic Statistical Analysis. In The Statistical Analysis of Functional MRI Data; Springer: Berlin/Heidelberg, Germany, 2008; pp. 1–36.

- Sarhan, A.E. Estimation of the mean and standard deviation by order statistics. Ann. Math. Stat. 1954, 25, 317–328.

- Groeneveld, R.A.; Meeden, G. Measuring Skewness and Kurtosis. J. R. Stat. Soc. Ser. D Stat. 1984, 33, 391–399.

- Stata: Software for Statistics and Data Science. Available online: https://www.stata.com/ (accessed on 20 November 2019).

- GoodData. Normality Testing-Skewness and Kurtosis. 2020. Available online: https://www.gooddata.com/ (accessed on 18 January 2022).

- Wilcoxon, F. Some rapid approximate statistical procedures. Ann. N. Y. Acad. Sci. 1950, 52, 808–814.

- Kruskal, W.H.; Wallis, W.A. Use of Ranks in One-Criterion Variance Analysis. J. Am. Stat. Assoc. 1952, 47, 583–621.

- Hernández, J.; Pulido, M.; Bordón, J.; Ballester, M.; González, C. Speech Evaluation of patients with Alzheimer’s Disease using an automatic interviewer. Expert Syst. Appl. 2022, 192, 116386.

| Variable | HC *, Human | HC *, Automatic | AD1 *, Human | AD1 *, Automatic | AD2 *, Human | AD2 *, Automatic | AD (AD1 + AD2), Human | AD (AD1 + AD2), Automatic |
|---|---|---|---|---|---|---|---|---|
| Discrete ET | 52.92 (13.79) | 52.68 (14.05) | 57.49 (9.01) | 57.37 (11.94) | 48.91 (8.82) | 57.13 (14.48) | 56.12 (9.37) | 57.29 (12.75) |
| Mean of continuous ET | 29.53 (29.4) | 28.42 (27.76) | 23.36 (29.84) | 33.32 (29.84) | 38.16 (26.41) | 34.40 (29.89) | 25.73 (28.47) | 33.67 (29.72) |
| Variance of continuous ET | 451.50 (445.2) | 435.72 (431.75) | 390.53 (471.28) | 514.74 (476.92) | 767.26 (514.69) | 583.80 (504.09) | 487.93 (487.93) | 537.14 (484.69) |
| Skewness of continuous ET | −0.12 (0.51) | −0.12 (0.5) | −0.19 (0.38) | −0.29 (0.50) | 0.01 (0.28) | −0.19 (0.53) | −0.16 (0.36) | −0.26 (0.50) |
| Kurtosis of continuous ET | 2.42 (0.6) | 2.37 (0.73) | 2.23 (0.46) | 2.36 (0.64) | 1.93 (0.19) | 2.24 (0.76) | 2.18 (0.44) | 2.32 (0.68) |

| Comparison / Variable | Wilcoxon, Prob > \|z\| (Human) | Wilcoxon, Prob > \|z\| (Automatic) | Kruskal–Wallis, p (χ2) (Human) | Kruskal–Wallis, p (χ2) (Automatic) | Median test, p (Pearson χ2) (Human) | Median test, p (Pearson χ2) (Automatic) |
|---|---|---|---|---|---|---|
| HC * vs. AD | | | | | | |
| Discrete ET | 0.34 | 0.05 | 0.342 | 0.05 | 0.56 | 0.12 |
| Mean of continuous ET | 0.61 | 0.19 | 0.634 | 0.21 | 0.68 | 0.18 |
| Variance of continuous ET | 0.81 | 0.08 | 0.824 | 0.09 | 0.93 | 0.28 |
| Skewness of continuous ET | 0.79 | 0.06 | 0.791 | 0.06 | 0.93 | 0.05 |
| Kurtosis of continuous ET | 0.13 | 0.55 | 0.129 | 0.55 | 0.37 | 0.96 |
| HC vs. AD1 * | | | | | | |
| Discrete ET | 0.17 | 0.05 | 0.17 | 0.05 | 0.26 | 0.13 |
| Mean of continuous ET | 0.47 | 0.28 | 0.50 | 0.30 | 0.66 | 0.23 |
| Variance of continuous ET | 0.74 | 0.25 | 0.76 | 0.28 | 0.66 | 0.36 |
| Skewness of continuous ET | 0.60 | 0.02 | 0.60 | 0.02 | 0.93 | 0.03 |
| Kurtosis of continuous ET | 0.34 | 0.93 | 0.34 | 0.93 | 0.66 | 0.36 |
| HC vs. AD2 * | | | | | | |
| Discrete ET | 0.41 | 0.27 | 0.41 | 0.27 | 0.60 | 0.46 |
| Mean of continuous ET | 0.69 | 0.30 | 0.71 | 0.32 | 0.60 | 0.57 |
| Variance of continuous ET | 0.12 | 0.06 | 0.14 | 0.07 | 0.60 | 0.35 |
| Skewness of continuous ET | 0.54 | 0.85 | 0.54 | 0.8483 | 0.60 | 0.85 |
| Kurtosis of continuous ET | 0.05 | 0.16 | 0.05 | 0.16 | 0.12 | 0.35 |
| AD1 vs. AD2 | | | | | | |
| Discrete ET | 0.08 | 0.75 | 0.08 | 0.75 | 0.12 | 0.59 |
| Mean of continuous ET | 0.52 | 0.87 | 0.55 | 0.87 | 0.53 | 0.89 |
| Variance of continuous ET | 0.11 | 0.32 | 0.14 | 0.34 | 0.53 | 0.79 |
| Skewness of continuous ET | 0.18 | 0.20 | 0.18 | 0.20 | 0.65 | 0.28 |
| Kurtosis of continuous ET | 0.24 | 0.14 | 0.24 | 0.14 | 0.12 | 0.08 |
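
The Prob > |z| column reports the two-sided p-value of the Wilcoxon rank-sum test [46]. As an illustration only, a minimal Python version under the usual large-sample normal approximation with a tie-corrected variance is sketched below. This is an assumption: the exact options used in the statistical package [44] that produced the table are not stated here, and `rank_sum_test` and `average_ranks` are hypothetical helper names.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of tied values starting at sorted position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def rank_sum_test(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (z, two-sided p) for the Wilcoxon rank-sum test of x vs. y."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    w = sum(average_ranks(pooled)[:n1])  # rank sum of the first sample
    expected = n1 * (n + 1) / 2
    # Tie correction: subtract sum(t^3 - t) over groups of tied values.
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    variance = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    z = (w - expected) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p


# Toy data (not from the study): two small groups of ET values.
z, p = rank_sum_test([1.0, 2.0, 3.0], [4.0, 5.0, 6.0])
print(f"z = {z:.3f}, Prob > |z| = {p:.3f}")
```

Only ranks enter the statistic, which is why this family of tests is robust to the skewed, non-normal ET distributions summarised in the descriptive table.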

| Classifier | True disease (0 = HC, 1 = AD) | Automatic interviewer, predicted 0 | Automatic interviewer, predicted 1 | Automatic interviewer, total | Human interviewer, predicted 0 | Human interviewer, predicted 1 | Human interviewer, total |
|---|---|---|---|---|---|---|---|
| LDA | 0 | 75 (54.35%) | 63 (45.65%) | 138 (100%) | 22 (47.83%) | 24 (52.17%) | 46 (100%) |
| | 1 | 47 (42.34%) | 64 (57.66%) | 111 (100%) | 13 (52.00%) | 12 (48.00%) | 25 (100%) |
| | Total | 122 (49.00%) | 127 (51.00%) | 249 (100%) | 35 (49.30%) | 36 (50.70%) | 71 (100%) |
| Logistic | 0 | 81 (58.70%) | 57 (41.30%) | 138 (100%) | 26 (56.52%) | 20 (43.48%) | 46 (100%) |
| | 1 | 44 (39.64%) | 67 (60.36%) | 111 (100%) | 10 (40.00%) | 15 (60.00%) | 25 (100%) |
| | Total | 125 (50.20%) | 124 (49.80%) | 249 (100%) | 36 (50.70%) | 35 (49.30%) | 71 (100%) |
| KNN (n = 1) | 0 | 78 (56.52%) | 60 (43.48%) | 138 (100%) | 27 (58.70%) | 19 (41.30%) | 46 (100%) |
| | 1 | 59 (53.15%) | 52 (46.85%) | 111 (100%) | 18 (72.00%) | 7 (28.00%) | 25 (100%) |
| | Total | 137 (55.02%) | 112 (44.98%) | 249 (100%) | 45 (63.38%) | 26 (36.62%) | 71 (100%) |
| KNN (n = 3) | 0 | 76 (55.07%) | 62 (44.93%) | 138 (100%) | 32 (69.57%) | 14 (30.43%) | 46 (100%) |
| | 1 | 67 (60.36%) | 44 (39.64%) | 111 (100%) | 19 (76.00%) | 6 (24.00%) | 25 (100%) |
| | Total | 143 (57.43%) | 106 (42.57%) | 249 (100%) | 51 (71.83%) | 20 (28.17%) | 71 (100%) |
| KNN (n = 5) | 0 | 86 (62.32%) | 52 (37.68%) | 138 (100%) | 16 (34.78%) | 30 (65.22%) | 46 (100%) |
| | 1 | 62 (55.86%) | 49 (44.14%) | 111 (100%) | 10 (40.00%) | 15 (60.00%) | 25 (100%) |
| | Total | 148 (59.44%) | 101 (40.56%) | 249 (100%) | 26 (36.62%) | 45 (63.38%) | 71 (100%) |

| Interviewer | Classifier | Accuracy [%] | Sensitivity [%] | Specificity [%] |
|---|---|---|---|---|
| Automatic interviewer | LDA | 55.82 | 57.66 | 54.35 |
| | Logistic | 59.44 | 60.36 | 58.70 |
| | KNN (n = 1) | 52.21 | 46.85 | 56.52 |
| | KNN (n = 3) | 48.19 | 39.64 | 55.07 |
| | KNN (n = 5) | 54.22 | 44.14 | 62.32 |
| Human interviewer | LDA | 47.89 | 48.00 | 47.83 |
| | Logistic | 57.75 | 60.00 | 56.52 |
| | KNN (n = 1) | 47.89 | 28.00 | 58.70 |
| | KNN (n = 3) | 53.52 | 24.00 | 69.57 |
| | KNN (n = 5) | 43.66 | 60.00 | 34.78 |
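
Accuracy, sensitivity and specificity in the table above follow directly from the binary confusion matrices, taking AD (class 1) as the positive class. The short Python sketch below (the function name `binary_metrics` is hypothetical) reproduces, for example, the LDA row of the automatic interviewer from its confusion-matrix counts.

```python
from typing import Dict


def binary_metrics(tn: int, fp: int, fn: int, tp: int) -> Dict[str, float]:
    """Accuracy, sensitivity and specificity in percent; class 1 (AD) is positive."""
    total = tn + fp + fn + tp
    return {
        "accuracy": 100 * (tp + tn) / total,
        "sensitivity": 100 * tp / (tp + fn),  # AD recordings classified as AD
        "specificity": 100 * tn / (tn + fp),  # HC recordings classified as HC
    }


# LDA, automatic interviewer: true HC row (75, 63), true AD row (47, 64).
lda_auto = binary_metrics(tn=75, fp=63, fn=47, tp=64)
print({name: round(value, 2) for name, value in lda_auto.items()})
# {'accuracy': 55.82, 'sensitivity': 57.66, 'specificity': 54.35}
```

With 138 HC and 111 AD recordings in the automatic-interviewer set, accuracy is a class-weighted average of specificity and sensitivity, so it stays close to both.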

| Classifier | True grade (0 = HC, 1 = AD1, 2 = AD2) | Automatic, predicted 0 | Automatic, predicted 1 | Automatic, predicted 2 | Automatic, total | Human, predicted 0 | Human, predicted 1 | Human, predicted 2 | Human, total |
|---|---|---|---|---|---|---|---|---|---|
| LDA | 0 | 64 (46.38%) | 34 (24.64%) | 40 (28.99%) | 138 (100%) | 18 (39.13%) | 14 (30.43%) | 14 (30.43%) | 46 (100%) |
| | 1 | 28 (37.33%) | 18 (24.00%) | 29 (38.67%) | 75 (100%) | 10 (47.62%) | 8 (38.10%) | 3 (14.29%) | 21 (100%) |
| | 2 | 12 (33.33%) | 10 (27.78%) | 14 (38.89%) | 36 (100%) | 1 (25.00%) | 0 (0%) | 3 (75.00%) | 4 (100%) |
| | Total | 104 (41.77%) | 62 (24.90%) | 83 (33.33%) | 249 (100%) | 29 (40.85%) | 22 (30.99%) | 20 (28.17%) | 71 (100%) |
| Logistic | 0 | 70 (50.72%) | 30 (21.74%) | 38 (27.54%) | 138 (100%) | 25 (54.35%) | 15 (32.61%) | 6 (13.04%) | 46 (100%) |
| | 1 | 22 (29.33%) | 31 (41.33%) | 22 (29.33%) | 75 (100%) | 7 (33.33%) | 11 (52.38%) | 3 (14.29%) | 21 (100%) |
| | 2 | 12 (33.33%) | 9 (25.00%) | 15 (41.67%) | 36 (100%) | 0 (0%) | 0 (0%) | 4 (100%) | 4 (100%) |
| | Total | 104 (41.77%) | 70 (28.11%) | 75 (30.12%) | 249 (100%) | 32 (45.07%) | 26 (36.62%) | 13 (18.31%) | 71 (100%) |
| KNN (n = 1) | 0 | 78 (56.52%) | 38 (27.54%) | 22 (15.94%) | 138 (100%) | 27 (58.70%) | 18 (39.13%) | 1 (2.17%) | 46 (100%) |
| | 1 | 42 (56.00%) | 21 (28.00%) | 12 (16.00%) | 75 (100%) | 15 (71.43%) | 5 (23.81%) | 1 (4.76%) | 21 (100%) |
| | 2 | 17 (47.22%) | 9 (25.00%) | 10 (27.78%) | 36 (100%) | 3 (75.00%) | 1 (25.00%) | 0 (0%) | 4 (100%) |
| | Total | 137 (55.02%) | 68 (27.31%) | 44 (17.67%) | 249 (100%) | 45 (63.38%) | 24 (33.80%) | 2 (2.82%) | 71 (100%) |
| KNN (n = 3) | 0 | 59 (42.75%) | 28 (20.29%) | 51 (36.96%) | 138 (100%) | 9 (19.57%) | 27 (58.70%) | 10 (21.74%) | 46 (100%) |
| | 1 | 34 (45.33%) | 17 (22.67%) | 24 (32.00%) | 75 (100%) | 7 (33.33%) | 9 (42.86%) | 5 (23.81%) | 21 (100%) |
| | 2 | 16 (44.44%) | 5 (13.89%) | 15 (41.67%) | 36 (100%) | 0 (0%) | 4 (100%) | 0 (0%) | 4 (100%) |
| | Total | 109 (43.78%) | 50 (20.08%) | 90 (36.14%) | 249 (100%) | 16 (22.54%) | 40 (56.34%) | 15 (21.13%) | 71 (100%) |
| KNN (n = 5) | 0 | 36 (26.09%) | 54 (39.13%) | 48 (34.78%) | 138 (100%) | 14 (30.43%) | 17 (36.96%) | 15 (32.61%) | 46 (100%) |
| | 1 | 21 (28.00%) | 29 (38.67%) | 25 (33.33%) | 75 (100%) | 7 (33.33%) | 7 (33.33%) | 7 (33.33%) | 21 (100%) |
| | 2 | 8 (22.22%) | 6 (16.67%) | 22 (61.11%) | 36 (100%) | 1 (25.00%) | 2 (50.00%) | 1 (25.00%) | 4 (100%) |
| | Total | 65 (26.10%) | 89 (35.74%) | 95 (38.15%) | 249 (100%) | 22 (30.99%) | 26 (36.62%) | 23 (32.39%) | 71 (100%) |

| Interviewer | Classifier | Accuracy [%] | Sensitivity [%] | Specificity [%] |
|---|---|---|---|---|
| Automatic interviewer | LDA | 38.55 | 63.96 | 46.38 |
| | Logistic | 46.59 | 69.37 | 50.72 |
| | KNN (n = 1) | 43.78 | 46.85 | 56.52 |
| | KNN (n = 3) | 36.55 | 54.95 | 42.75 |
| | KNN (n = 5) | 34.94 | 73.87 | 26.09 |
| Human interviewer | LDA | 40.85 | 56.00 | 39.13 |
| | Logistic | 56.34 | 72.00 | 54.35 |
| | KNN (n = 1) | 48.48 | 28.00 | 65.85 |
| | KNN (n = 3) | 25.35 | 72.00 | 19.57 |
| | KNN (n = 5) | 30.99 | 68.00 | 30.43 |
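
For the three-class problem, the automatic-interviewer figures in the table above are consistent with the following reading, which is inferred from the confusion matrices rather than stated explicitly in this section: accuracy is the share of recordings whose grade is predicted exactly, sensitivity is the share of AD recordings (AD1 or AD2) assigned to either AD grade, and specificity is the share of HC recordings predicted as HC. A minimal Python sketch under that assumption, with a hypothetical `grade_metrics` helper, reproduces the LDA row of the automatic interviewer.

```python
from typing import Dict, List


def grade_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """Metrics in percent from a square confusion matrix.

    cm[i][j] counts recordings of true grade i predicted as grade j,
    with grade 0 = HC and grades >= 1 = AD (AD1, AD2).
    """
    total = sum(sum(row) for row in cm)
    exact = sum(cm[i][i] for i in range(len(cm)))
    ad_rows = cm[1:]
    ad_total = sum(sum(row) for row in ad_rows)
    # An AD recording counts as detected if it is assigned to any AD grade.
    ad_detected = sum(sum(row[1:]) for row in ad_rows)
    return {
        "accuracy": 100 * exact / total,
        "sensitivity": 100 * ad_detected / ad_total,
        "specificity": 100 * cm[0][0] / sum(cm[0]),
    }


lda_auto = [[64, 34, 40], [28, 18, 29], [12, 10, 14]]
print({name: round(value, 2) for name, value in grade_metrics(lda_auto).items()})
# {'accuracy': 38.55, 'sensitivity': 63.96, 'specificity': 46.38}
```

Under this reading, confusing AD1 with AD2 lowers accuracy but not sensitivity, which explains why sensitivity can exceed accuracy by a wide margin in the three-class results.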

| MANOVA statistic (p-values; H = human interviewer, A = automatic interviewer) | Disease (HC *–AD), H | Disease (HC *–AD), A | Grade (HC–AD1 *), H | Grade (HC–AD1 *), A | Grade (HC–AD2 *), H | Grade (HC–AD2 *), A | Grade (AD1–AD2), H | Grade (AD1–AD2), A |
|---|---|---|---|---|---|---|---|---|
| W * (Wilks’ lambda) | 0.14 | 0.01 | 0.12 | 0.05 | 0.40 | 0.06 | 0.35 | 0.74 |
| P * (Pillai’s trace) | 0.14 | 0.01 | 0.12 | 0.05 | 0.40 | 0.06 | 0.35 | 0.74 |
| L * (Lawley–Hotelling trace) | 0.14 | 0.01 | 0.12 | 0.05 | 0.40 | 0.06 | 0.35 | 0.74 |
| R * (Roy’s largest root) | 0.14 | 0.01 | 0.12 | 0.05 | 0.40 | 0.06 | 0.35 | 0.74 |

| Classification | Interviewer | | | | | |
|---|---|---|---|---|---|---|
| Based on absence or presence of disease | Automatic | 0.9 | 2.1 | 0.15 | 0 | 0 |
| | Human | 0.55 | 1 | 0.35 | 0 | 0 |
| Based on different grades of disease | Automatic | 0.4 | 1.7 | 0.1 | 0 | 0 |
| | Human | 0.45 | 0.95 | 0.3 | 0 | 0 |

| Emotional Feature | Relevance |
|---|---|
| | 0.9 |
| | 2.1 |
| | 0.15 |
| | 0 |
| | 0 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alonso-Hernández, J.B.; Barragán-Pulido, M.L.; Santana-Luis, A.; Ferrer-Ballester, M.Á. Emotional Temperature for the Evaluation of Speech in Patients with Alzheimer’s Disease through an Automatic Interviewer. Appl. Sci. 2024, 14, 5588. https://doi.org/10.3390/app14135588
