Enhanced Tuna Detection and Automated Counting Method Utilizing Improved YOLOv7 and ByteTrack

Abstract

1. Introduction

2. Related Work

3. Research Methods

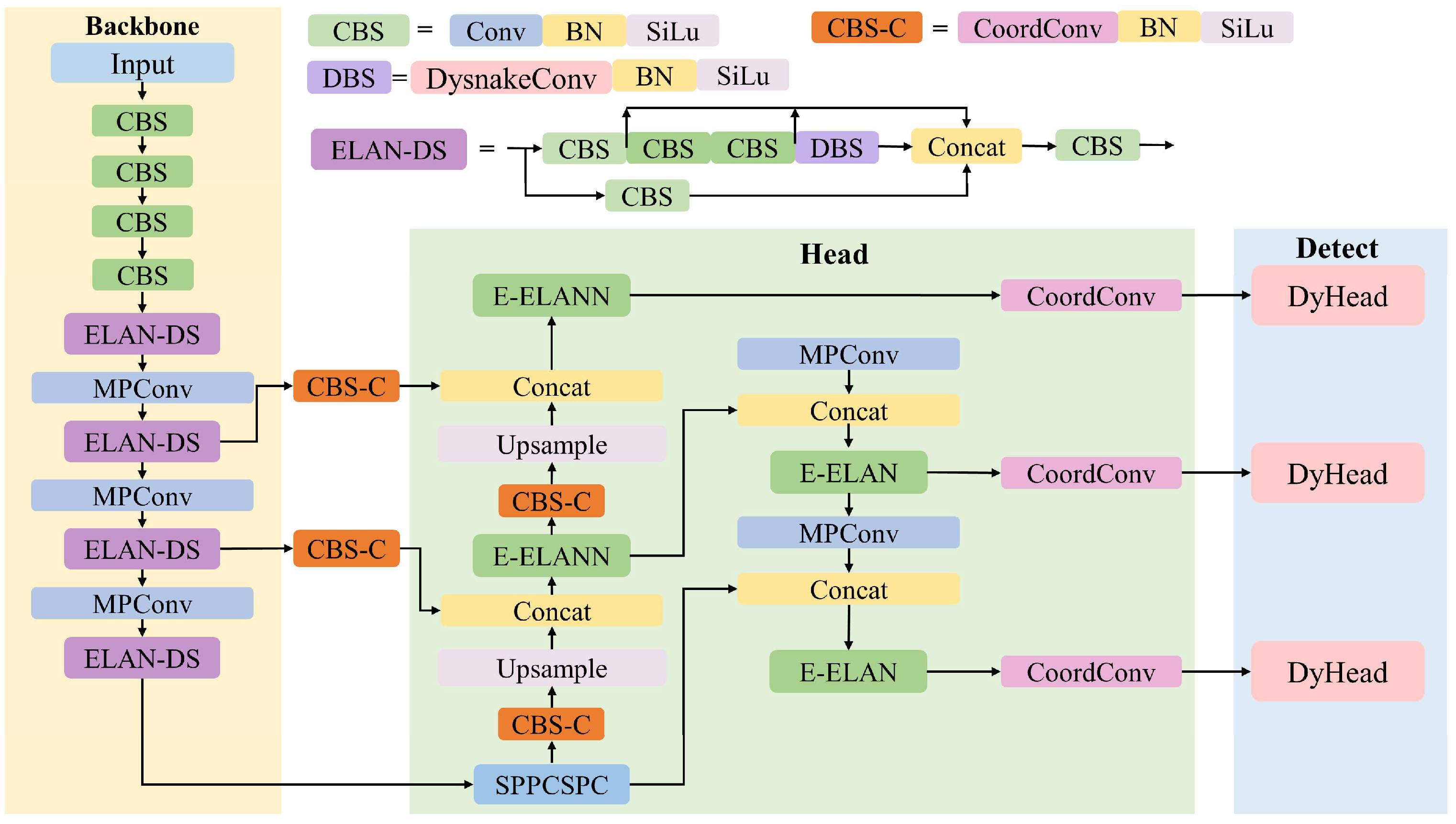

3.1. The Network Structure of YOLOv7-Tuna

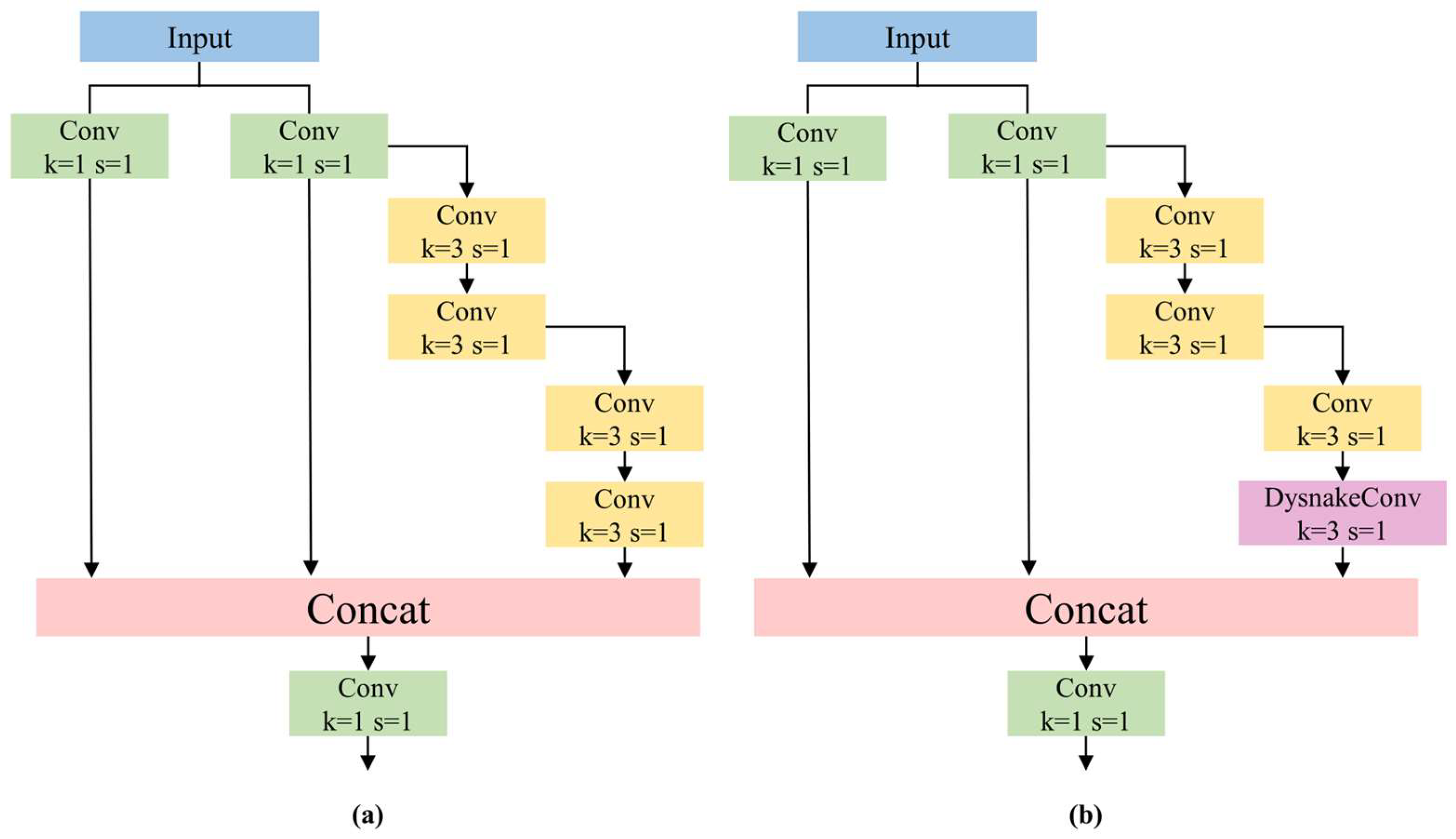

3.1.1. ELAN-DS Module

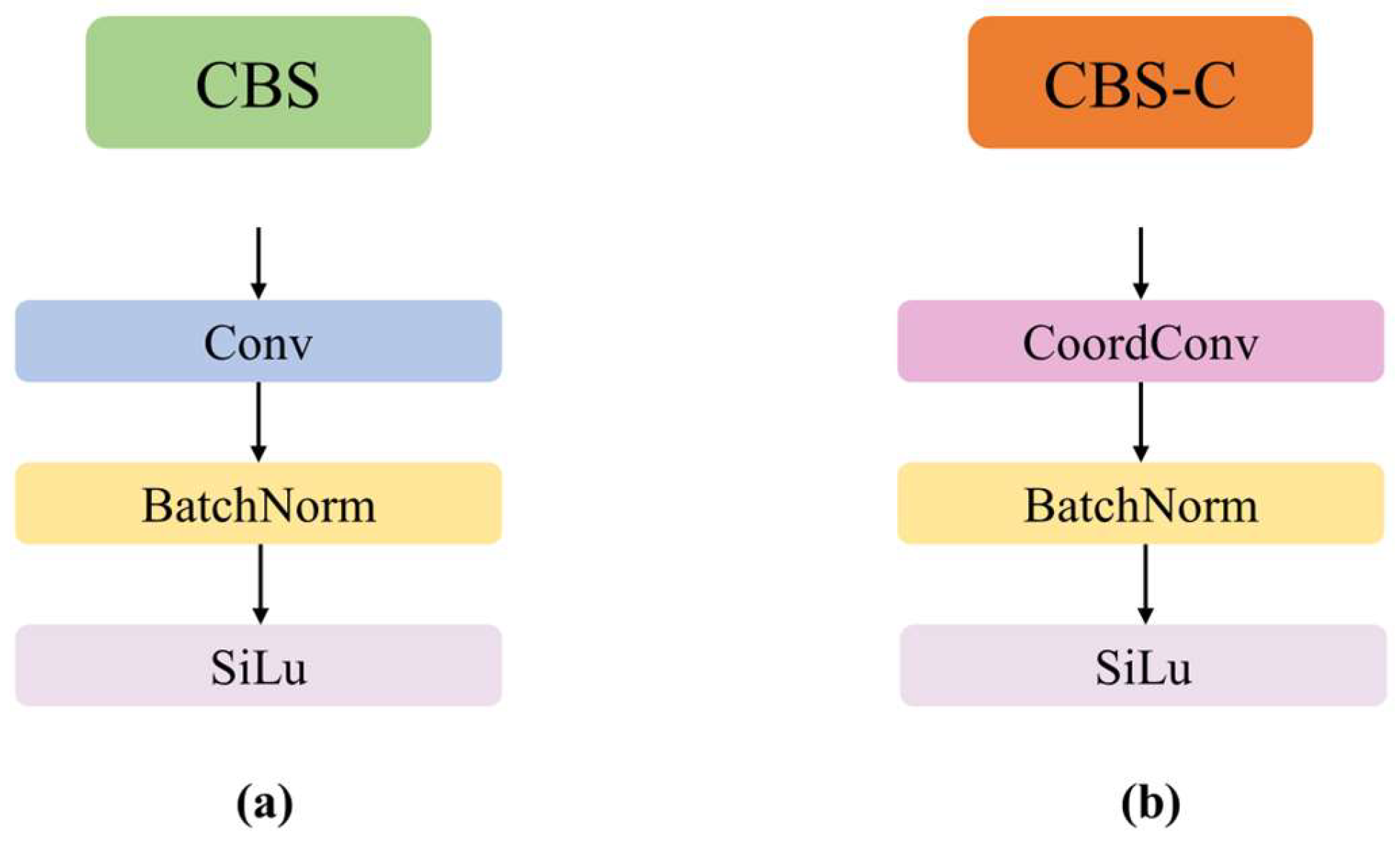

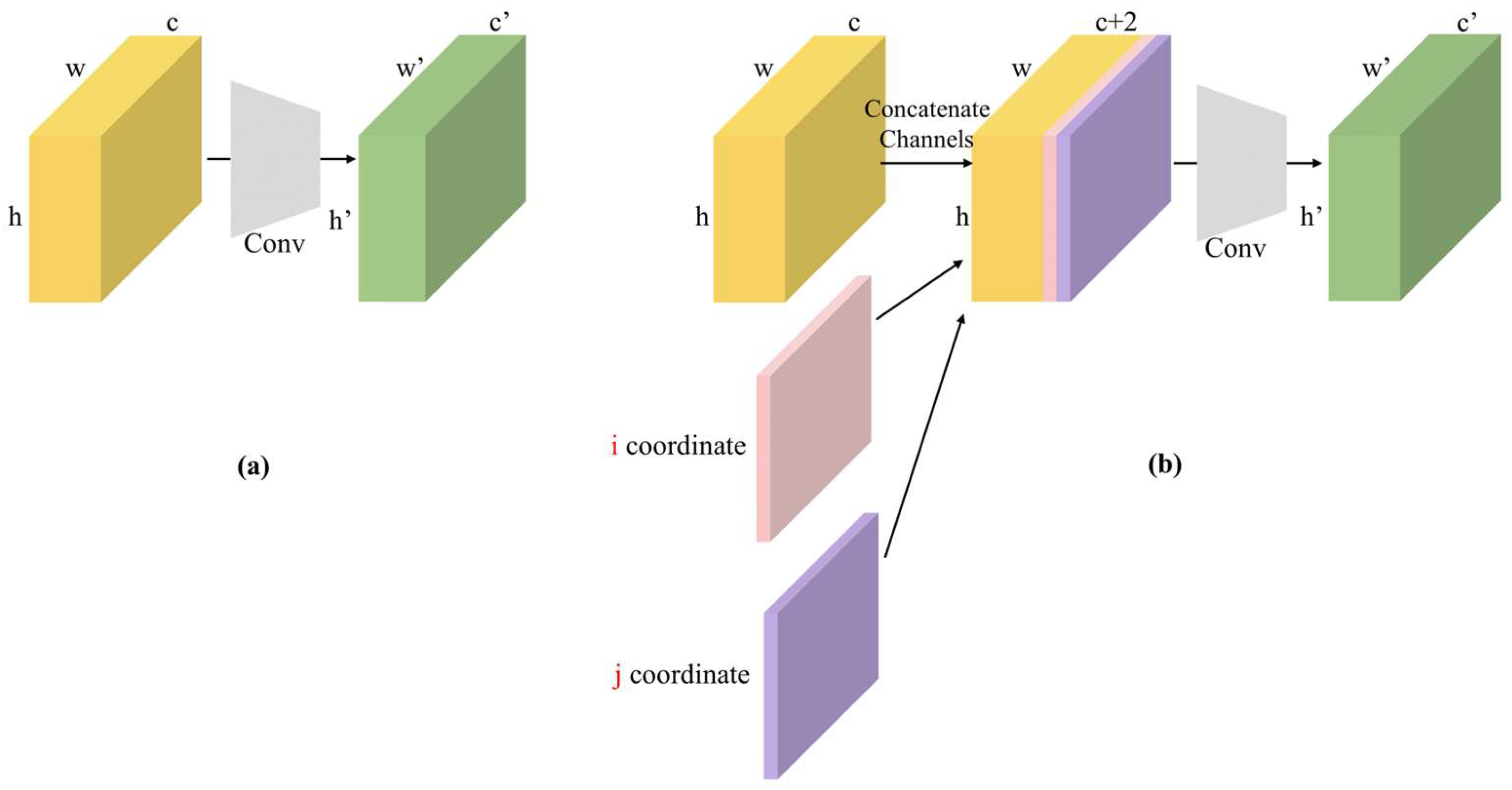

3.1.2. CBS-C Module

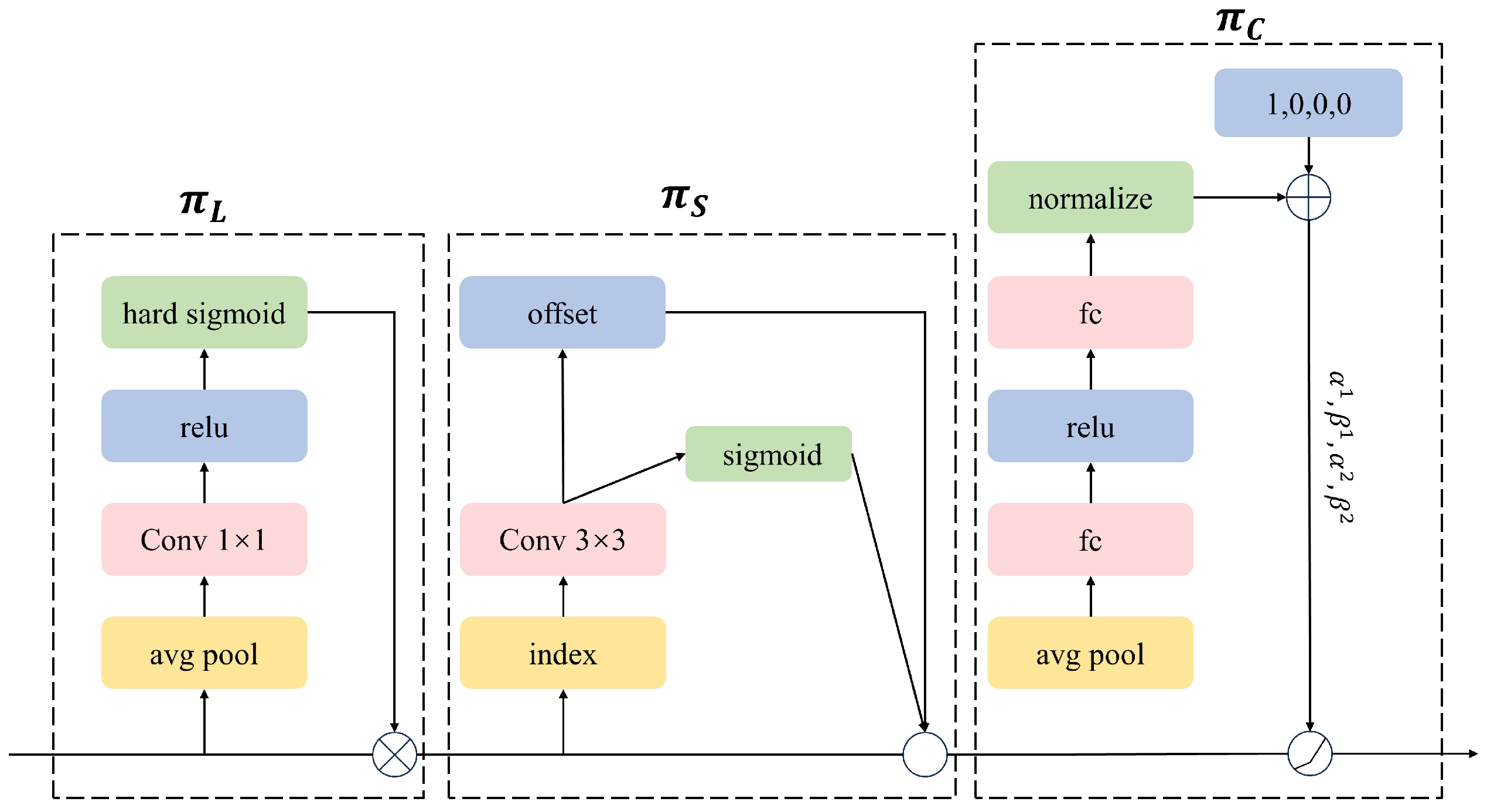

3.1.3. Detection Head

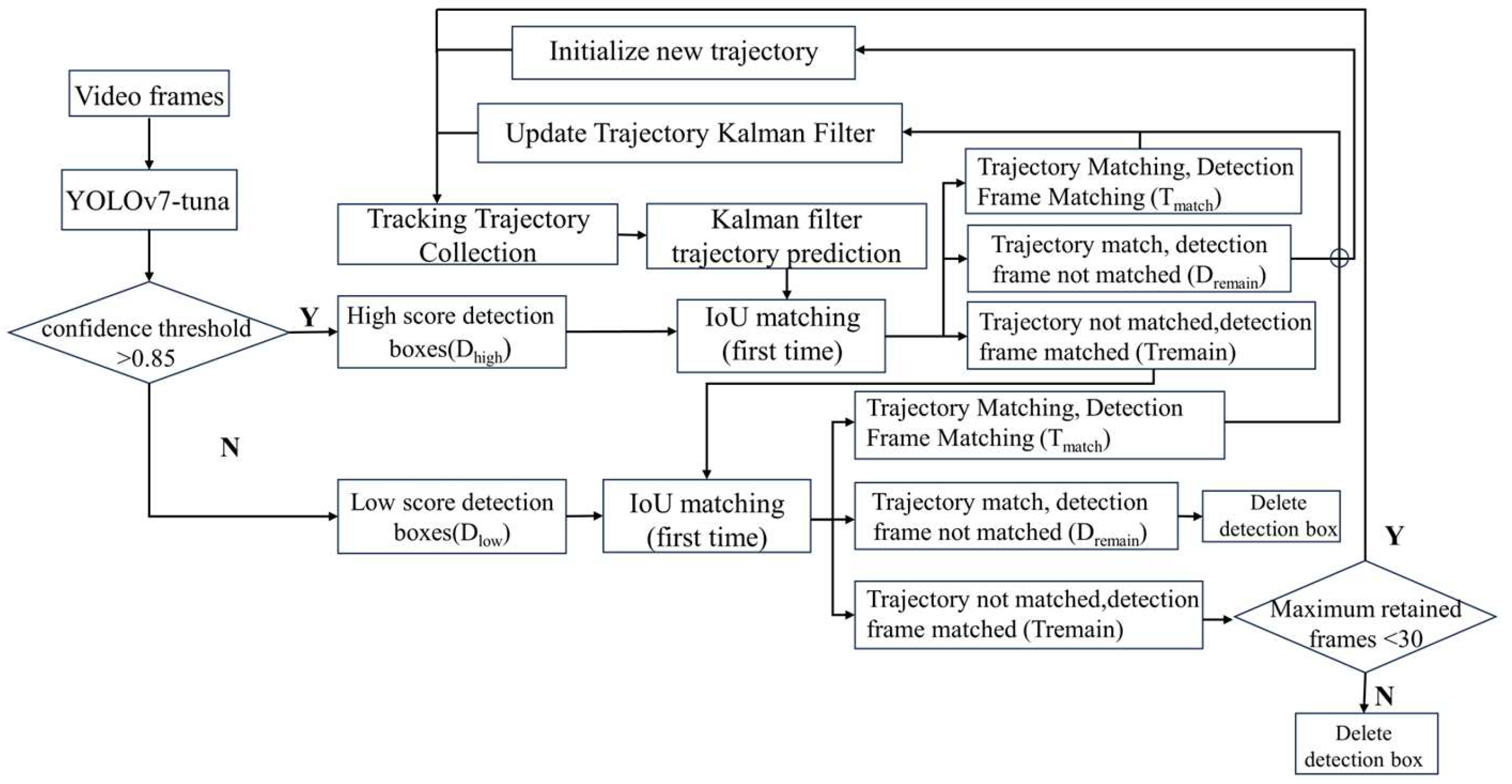

3.2. Tuna Target Tracking Counting Based on ByteTrack Algorithm

3.2.1. ByteTrack Algorithm

3.2.2. Area-Specific Tracking Counting Method

4. Experiment and Results

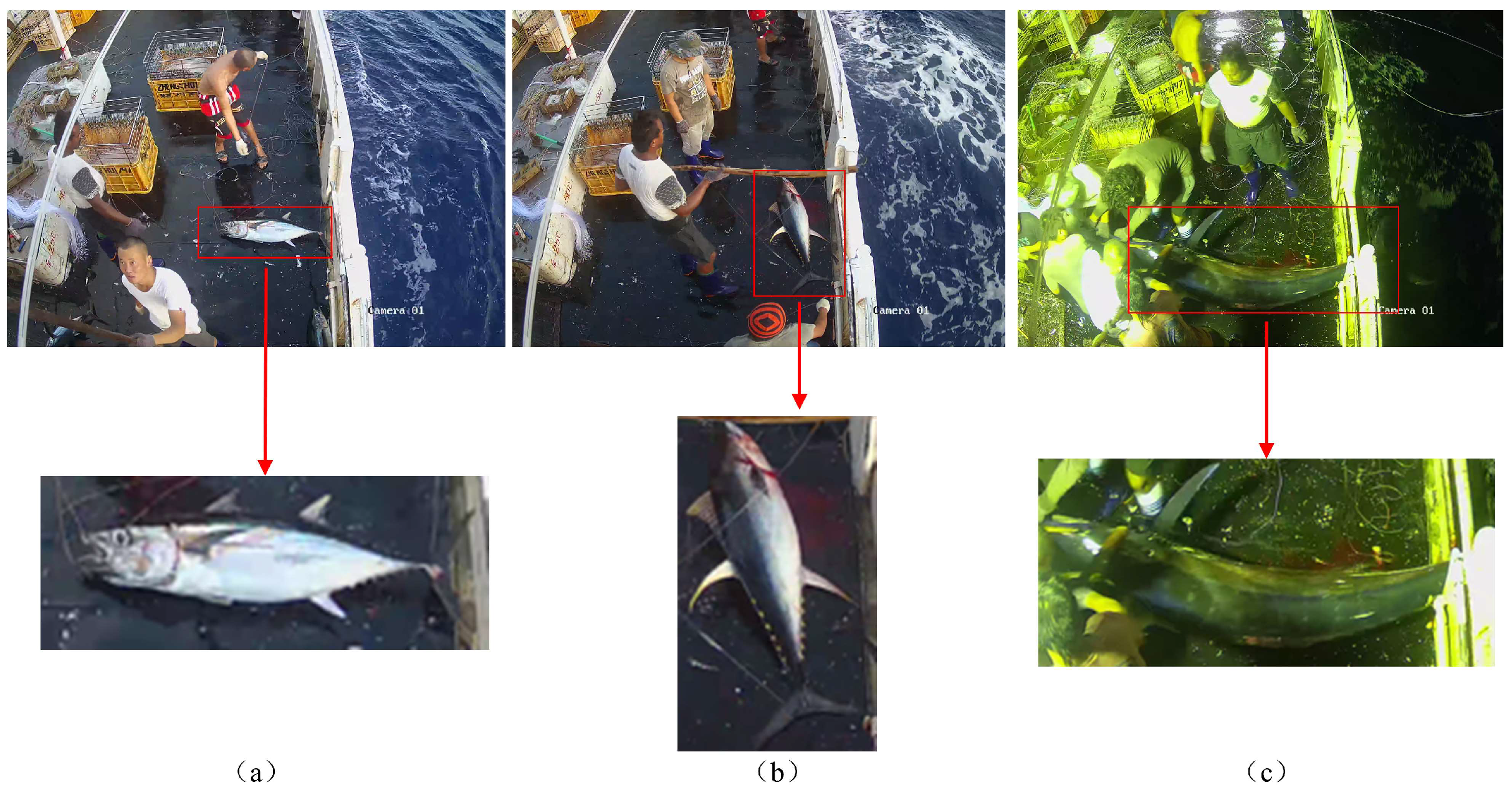

4.1. Dataset and Evaluation Metrics Introduction

4.1.1. Dataset Introduction

4.1.2. Experimental Setup

4.1.3. Evaluation Metrics

4.2. Analysis of Experimental Results

4.2.1. Efficiency on the Depth of DyHead

4.2.2. Ablation Experiment

- Adding DySnakeConv, CoordConv, and DyHead to the YOLOv7 model yields YOLOv7-Tuna. Compared with the original YOLOv7, precision increased from 91.1% to 96.3% (+5.2 percentage points), recall increased from 95.8% to 98.9% (+3.1 percentage points), mAP@0.5 increased from 98% to 98.5% (+0.5 percentage points), and mAP@0.5:0.95 increased from 58.5% to 68.5% (+10 percentage points). YOLOv7-Tuna therefore improves on the baseline in every metric, which validates the effectiveness of the algorithm proposed in this study.

- The models with DySnakeConv, CoordConv, and DyHead added individually also improve on the original YOLOv7 in precision, recall, mAP@0.5, and mAP@0.5:0.95. Comparing the three single-module models, the model with DySnakeConv shows the largest gain in recall, from 95.8% to 99% (+3.2 percentage points); the model with CoordConv shows the largest gain in precision, from 91.1% to 95.9% (+4.8 percentage points); and the model with DyHead achieves the best mAP@0.5, rising from 98% to 98.7% (+0.7 percentage points). Each of the three modules therefore enhances the performance of YOLOv7, and each leads on a different metric, which confirms the effectiveness of the modules added in this study.
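The gains quoted in the two points above are differences in percentage points against the YOLOv7 baseline. The following is a minimal sketch of that arithmetic, using only the values reported in the ablation table. The names `ABLATION`, `gains`, and `best_single_module` are illustrative and do not come from the paper.

```python
# Ablation results in percent, copied from the ablation table:
# (precision, recall, mAP@0.5, mAP@0.5:0.95)
ABLATION = {
    "YOLOv7": (91.1, 95.8, 98.0, 58.5),
    "+DySnakeConv": (92.9, 99.0, 98.5, 63.5),
    "+CoordConv": (95.9, 97.4, 98.3, 66.8),
    "+DyHead": (94.6, 98.5, 98.7, 65.6),
    "YOLOv7-Tuna": (96.3, 98.9, 98.5, 68.5),
}
METRICS = ("precision", "recall", "mAP@0.5", "mAP@0.5:0.95")
SINGLE_MODULE_MODELS = ("+DySnakeConv", "+CoordConv", "+DyHead")


def gains(variant, baseline="YOLOv7"):
    """Return the per-metric gain over the baseline, in percentage points."""
    base = ABLATION[baseline]
    return {
        metric: round(value - ref, 1)
        for metric, value, ref in zip(METRICS, ABLATION[variant], base)
    }


def best_single_module(metric):
    """Return the single-module model with the highest value for a metric."""
    idx = METRICS.index(metric)
    return max(SINGLE_MODULE_MODELS, key=lambda name: ABLATION[name][idx])


if __name__ == "__main__":
    print(gains("YOLOv7-Tuna"))
    for metric in METRICS:
        print(metric, "->", best_single_module(metric))
```

Running the script prints the gains of the full model and, for each metric, which single-module model scores highest.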

4.2.3. Contrast Experiment

4.2.4. Counting Method Comparison Experiment

4.2.5. Target Tracking Algorithm Comparison Experiment

4.2.6. Model Validation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| DyHead blocks | FLOPs (G) | Precision | Recall | mAP@0.5 | mAP@0.5:0.95 |
|---|---|---|---|---|---|
| 1 | 101.1 | 94.6% | 98.5% | 98.7% | 65.6% |
| 2 | 102.4 | 93% | 98.9% | 98.5% | 64.1% |
| 3 | 103.6 | 92.5% | 98.8% | 98.6% | 67.7% |
| 4 | 104.9 | 92.2% | 96.7% | 98.5% | 62.6% |

| Test | DySnakeConv | CoordConv | DyHead | Precision | Recall | mAP@0.5 | mAP@0.5:0.95 |
|---|---|---|---|---|---|---|---|
| 1 | ✕ | ✕ | ✕ | 91.1% | 95.8% | 98% | 58.5% |
| 2 | √ | ✕ | ✕ | 92.9% | 99% | 98.5% | 63.5% |
| 3 | ✕ | √ | ✕ | 95.9% | 97.4% | 98.3% | 66.8% |
| 4 | ✕ | ✕ | √ | 94.6% | 98.5% | 98.7% | 65.6% |
| 5 | √ | √ | √ | 96.3% | 98.9% | 98.5% | 68.5% |

| Algorithm | Precision | Recall | mAP@0.5 |
|---|---|---|---|
| Faster R-CNN | 81.6% | 83.4% | 86.7% |
| YOLOv5 | 90.7% | 95.2% | 98.1% |
| YOLOv7 | 91.1% | 95.8% | 98% |
| YOLOv7-Tuna | 96.3% | 98.9% | 98.5% |

| Counting Method | Repeated Count | Missed Count | Total Miscount | Total Count | Counting Error |
|---|---|---|---|---|---|
| ① YOLOv5 + ByteTrack | +9 | −4 | 13 | 37 | 15.6% |
| ② YOLOv5 + ByteTrack + ours | +5 | −3 | 8 | 34 | 6.3% |
| ③ YOLOv7 + ByteTrack | +6 | −3 | 9 | 35 | 9.4% |
| ④ YOLOv7 + ByteTrack + ours | +3 | −1 | 4 | 34 | 6.3% |
| ⑤ YOLOv7-Tuna + ByteTrack | +4 | −1 | 5 | 35 | 9.4% |
| ⑥ YOLOv7-Tuna + ByteTrack + ours | +1 | 0 | 1 | 33 | 3.1% |
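The columns of this table appear to be related by simple arithmetic. The sketch below reproduces them under two assumptions that the table does not state: the ground-truth number of fish in the test video is 32, and the counting error is the absolute difference between the total count and that ground truth, divided by the ground truth. Both assumptions are inferred from the rows, which they match, and are not taken from the authors. The function name `counting_summary` is illustrative.

```python
from decimal import Decimal, ROUND_HALF_UP

# ASSUMPTION: ground-truth fish count, inferred from the table rows
# (for example 37 = 32 + 9 - 4); the table itself does not state it.
ASSUMED_ACTUAL = 32


def counting_summary(repeated, missed, actual=ASSUMED_ACTUAL):
    """Return (total miscount, total count, counting error in percent).

    repeated: number of fish counted more than once (the "+" column)
    missed:   number of fish not counted at all (magnitude of the "-" column)
    """
    total_miscount = repeated + missed
    total_count = actual + repeated - missed
    error = Decimal(abs(total_count - actual)) / Decimal(actual) * 100
    # Round half up so that 6.25 becomes 6.3, as in the table;
    # Python's built-in round() would give 6.2 here.
    error_pct = error.quantize(Decimal("0.1"), rounding=ROUND_HALF_UP)
    return total_miscount, total_count, float(error_pct)


if __name__ == "__main__":
    rows = {
        "YOLOv5 + ByteTrack": (9, 4),
        "YOLOv7 + ByteTrack + ours": (3, 1),
        "YOLOv7-Tuna + ByteTrack + ours": (1, 0),
    }
    for name, (repeated, missed) in rows.items():
        print(name, counting_summary(repeated, missed))
```

Under this reading, repeated and missed counts partly cancel in the counting error, which is why the total miscount column is reported separately.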

| Counting Method | Repeated Count | Missed Count | Total Miscount | Total Count | Counting Error |
|---|---|---|---|---|---|
| ① YOLOv7 + DeepSort | +11 | −5 | 16 | 38 | 18.8% |
| ② YOLOv7 + DeepSort + ours | +4 | −2 | 6 | 34 | 6.3% |
| ③ YOLOv7-Tuna + DeepSort | +5 | −1 | 6 | 36 | 12.5% |
| ④ YOLOv7-Tuna + DeepSort + ours | +1 | 0 | 1 | 33 | 3.1% |
| ⑤ YOLOv7-Tuna + ByteTrack + ours | +1 | 0 | 1 | 33 | 3.1% |

| Fish Species | Counted Quantity | Actual Quantity | Miscounted Quantity |
|---|---|---|---|
| Albacore-day | 12 | 11 | +1 |
| Albacore-night | 8 | 7 | +1 |
| Yellowfin-day | 4 | 4 | 0 |
| Yellowfin-night | 2 | 3 | −1 |
| Blackfin-day | 1 | 1 | 0 |
| Blackfin-night | 2 | 2 | 0 |
| Sum | 29 | 28 | +1 |
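The Sum row reports a net miscount, so over-counts and under-counts in different rows offset each other. The sketch below recomputes the totals from the per-row values in the table and also reports the sum of absolute per-row miscounts. That second figure is not a metric from the paper and is shown only for illustration, as are the names `VALIDATION` and `summarize`.

```python
# Per-class validation results from the table: (counted, actual)
VALIDATION = {
    "Albacore-day": (12, 11),
    "Albacore-night": (8, 7),
    "Yellowfin-day": (4, 4),
    "Yellowfin-night": (2, 3),
    "Blackfin-day": (1, 1),
    "Blackfin-night": (2, 2),
}


def summarize(rows):
    """Return (counted total, actual total, net miscount, absolute miscount)."""
    counted = sum(c for c, _ in rows.values())
    actual = sum(a for _, a in rows.values())
    net = counted - actual  # signed: errors of opposite sign cancel
    absolute = sum(abs(c - a) for c, a in rows.values())  # no cancellation
    return counted, actual, net, absolute


if __name__ == "__main__":
    counted, actual, net, absolute = summarize(VALIDATION)
    print(f"counted={counted} actual={actual} net={net:+d} absolute={absolute}")
```

The counted and actual totals and the net miscount agree with the Sum row of the table.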

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, Y.; Song, L.; Li, J.; Cheng, Y. Enhanced Tuna Detection and Automated Counting Method Utilizing Improved YOLOv7 and ByteTrack. Appl. Sci. 2024, 14, 5321. https://doi.org/10.3390/app14125321
