Abstract

Traffic flow prediction is crucial in intelligent transportation systems. Considering the severe disruptions caused by traffic accidents or congestion, a time series model is developed for traffic flow prediction based on multiple random walks on graphs (MRWG) and the bidirectional spatiotemporal attention mechanism (BSAM), which can adapt to both normal and exceptional situations. The MRWG mechanism is applied to capture spatial features of urban areas during traffic accidents and congestion, especially the spatial dependencies among neighboring regions. Further, a local position attention module is applied to acquire the spatial correlations between different regions to investigate their impact on the global area, while a local temporal attention module is adopted to extract short-term periodic time correlations from traffic flow data. Finally, a spatiotemporal bidirectional attention module is applied to simultaneously extract both the temporal and spatial correlations of the historical traffic flow data in order to generate the output prediction. Experiments have been conducted on the TaxiNYC and BikeNYC datasets with abnormal events, and the results indicate that the developed model can efficiently predict traffic flow in abnormal events, especially short-term traffic disruptions, outperforming the baseline methods under both abnormal and normal conditions.

1. Introduction

With the rapid development of new technologies and urbanization, the number of vehicles in the global transportation sector has increased sharply, which places urban transportation networks under greater pressure in terms of infrastructure capacity and environmental sustainability. Therefore, accurate traffic prediction in urban areas plays an important role in the management of intelligent transportation systems (ITS) [1,2], which can guide individuals to avoid congested routes, saving travel time and reducing greenhouse gas emissions [3,4]. Extensive research on traffic flow prediction suggests that a transportation system can be categorized into two scenarios when modelling traffic flow, namely general conditions and abnormal conditions, while the prediction models in current transport modeling studies can be classified into statistical models [5], shallow machine learning models [6], and deep learning algorithms [7].

A thorny issue with statistical theory-based methods [8,9,10,11] and machine learning-based approaches [12,13,14] is that these models struggle to capture the high-dimensional spatiotemporal features in the historical data. Because deep learning methods have demonstrated advantages in capturing the hidden nonlinear relationships embedded in existing data, they can be applied to predict traffic flow with satisfactory performance. As the LSTM (Long Short-Term Memory) network is well suited to time series data, it has gradually been adopted for traffic flow prediction. An LSTM Neural Network (LSTM-NN) is developed to extract the nonlinear dynamics in historical traffic data [15], while a deep stacked bidirectional and unidirectional LSTM-NN architecture considers the forward and backward dependencies of the traffic flow data to predict the network flow speed [16]. However, these LSTM-based methods only model the temporal relationships of traffic flow data, without considering the inherent spatial relationships in those data. Therefore, convolutional neural networks [17] or deep residual convolutional structures [18] are employed to capture the spatial correlations in the data. However, spatial and temporal correlations have mostly been considered individually in these studies, leading to suboptimal prediction performance on complex traffic flow data.

Convolutional Neural Networks (CNN) and LSTM can be combined for traffic flow prediction, considering both temporal and spatial correlations simultaneously [19]. In [20], the city is divided into regions and a CNN-based end-to-end spatiotemporal residual network is developed to extract the spatiotemporal correlation among different regions. A multi-view spatiotemporal network is developed where a CNN is employed to learn the local spatial correlations under shared similar temporal patterns [21]. However, the spatial information is easily removed or neglected by the global average pooling layer in the CNN, and the short-term temporal correlation is also distorted and cannot be considered continuously via the long-term dependency of the recurrent network. Graph-based deep learning methods have been applied to capture the dependencies in non-Euclidean spaces. Examples include Graph Convolutional Networks (GCN) [22] and Graph Attention Networks (GAT) [23], which can encode the topological network of road traffic by integrating the graph-based LSTM and CNN structures, thereby enhancing the spatiotemporal correlations. Cui et al. have proposed a traffic graph convolutional LSTM-NN to model the traffic network as a graph and embed the traffic flow network into the graph so as to enhance the prediction accuracy [24]. A deep learning framework with a dynamic spatial-temporal graph convolutional network has been proposed to capture the dynamic spatial and temporal dependencies simultaneously, and an attention mechanism is incorporated to dynamically learn weights between traffic nodes based on graph features together with dilated causal convolution to capture the long-term tendencies of traffic data [25].

However, the impact of exceptional events, i.e., traffic accidents or congestion, on traffic flow prediction has seldom been considered, and the dissipation of spatiotemporal correlation features within the depth of the network is also not taken into account in these modeling frameworks.

The occurrence of abnormal events on a road can lead to significant deviations in traffic patterns, as the traffic conditions during such periods are more dependent on instantaneous variations. Such events can be classified into two categories: (1) long-term and planned events, such as weekday rush hours and holidays; (2) short-term unexpected events, including traffic accidents, adverse weather conditions, temporary traffic controls, etc. Hence, real-world traffic data can be used, with similarity features among regions serving as the spatial information [26], and contextual data such as locations, weather conditions, and traffic accidents are useful for improving traffic flow prediction performance [27].

Shallow machine learning methods (in contrast to deep learning) can be adopted for traffic prediction under accidental conditions. For instance, an online regression support vector machine model (RSVR) has been proposed, which achieves higher performance for traffic flow prediction in holiday and traffic accident scenarios [28]. Guo et al. [29] have developed a traffic predictor based on KNN (k-nearest neighbors) combined with singular spectrum analysis technology to predict short-term traffic flow via an integrated gradient boosting regression tree. Further, Chen et al. [30] have conducted traffic flow prediction in the short term via integrated gradient boosting regression trees. However, these methods only directly use traffic data under abnormal conditions or varied conditions without explicitly modeling the spatiotemporal impact of the local abnormal events on traffic flow.

Yu et al. [31] extracted the temporal correlation of multi-scale traffic flow by stacking LSTM networks and used the stacked autoencoders to extract the potential feature representations under peak periods and traffic accidents. Jiang et al. [32] designed an encoder and decoder architecture via ConvLSTM to predict citywide crowd dynamics during major events (e.g., earthquakes, national holidays). Liu et al. [33] designed a spatiotemporal convolutional sequence learning architecture based on accident encoding, which can effectively capture the short-term spatiotemporal correlations via unidirectional convolution, and it employs a self-attention mechanism to obtain long-term spatiotemporal periodic correlations.

To extract the complicated spatiotemporal correlations efficiently, Huang et al. [34] have proposed an encoder–decoder model for multi-step output prediction based on the bidirectional attention mechanism and multi-head spatial attention mechanism. The model uses bidirectional attention flows to investigate the intricate spatiotemporal correlations between the prediction data and historical data, predicting real-time ride-hailing demand during the COVID-19 pandemic. Luo et al. [35] have developed a Multi-Task Multi-Range Multi-Subgraph Attention Network (M3AN) which can be adaptable for both normal and abnormal traffic situations. Different subgraphs are used to model node attributes while attention mechanisms are employed to measure the dynamic spatial correlations. However, most current condition-aware traffic-prediction methods fall short of meeting expectations.

It is noted that various abnormal events (i.e., type, duration, and intensity) also impact traffic conditions across areas. For example, traffic congestion in one region could cause congestion in adjacent regions with comparable traffic change patterns. However, these changes in similar patterns could vary over time, indicating diverse spatial correlations. Spatiotemporal correlations between metropolitan regions are complicated within a given time frame, and precise traffic flow prediction necessitates extracting the spatiotemporal correlations of different locations at various time intervals.

To tackle these difficulties, we propose a seq2seq model called multiple random walks on graphs BiAtten (MRWG BiAtten), which combines multiple random walks on graphs with a bidirectional spatiotemporal attention mechanism for traffic flow prediction under abnormal events. The contributions of the manuscript are summarized as follows:

- (1) A Transformer-based traffic flow-prediction model with an encoder–decoder structure is proposed to integrate the graph random walk and spatiotemporal bidirectional attention mechanisms, in light of the impact of traffic accidents and congestion on urban traffic conditions.

- (2) A positional attention module is introduced, allowing simultaneous concentration on both global and local spatial links by aggregating the global spatial correlations on local spatial features.

- (3) To enable the extraction of intricate and dynamic temporal dependencies, a time attention module is proposed to model the interdependencies between the long-term and short-term temporal features.

- (4) Two benchmark datasets, TaxiNYC and BikeNYC, are used in the experiments for performance comparison to verify the superiority of the proposed prediction model.

The remainder of the paper is organized as follows. Section 2 introduces the problem formulation, and Section 3 describes the proposed traffic flow-prediction model in detail. In Section 4, in-depth experiments with public traffic datasets are carried out to validate the performance of the proposed model. Conclusions are provided in Section 5.

2. Problem Formulation

Certain symbols are first defined for the traffic flow-prediction problem formulation, where the traffic flow in the temporal dimension is frequently a continuation of the historical traffic flow states with periodicity.

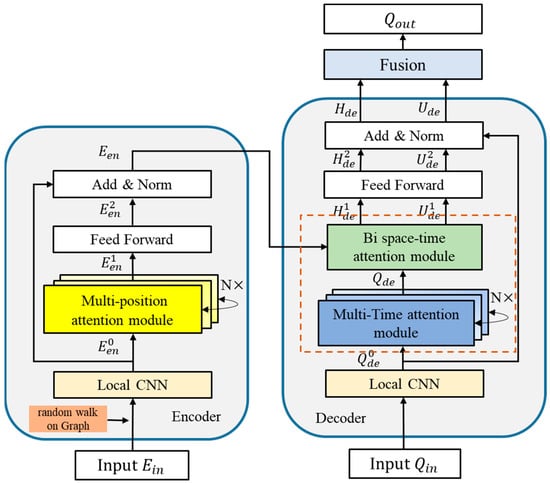

The entire city can be represented as a grid, with N regions (); denotes each region, and represents the entire time span of the historical observed data. The MRWG BiAtten model is shown in Figure 1; it includes an encoder and a decoder and can be used to predict the traffic flow volume in urban areas l time stamps into the future based on historical traffic flow dataset . Here, records the traffic flow volume in each urban area during the historical time period , and represents the predicted traffic flow volume for each urban area at future time stamps .

Figure 1.

The structure diagram of the proposed MRWG BiAtten model.

When processing the spatial and temporal correlations in the historical traffic flow data, sudden occurrences of traffic accidents or congestion from one area to another would cause a rapid spread of local traffic states to the surrounding areas after they have been maintained for some time [36]. Furthermore, different types of abnormal accidents have varied effects on the traffic conditions in the surrounding regions.

Given the historical traffic flow data of the target region and the abnormal events Y, the surrounding region subgraphs are formed based on the total abnormal event types. The historical traffic flow data are divided into B groups (the same as regions), denoted as . Considering the historical traffic flow and transient data and , as well as the accident data , the traffic flow prediction can be formalized as a learning function that maps the input to the output prediction of the traffic flow at the next timestep:

where and represent the predicted traffic flow and learnable parameters, respectively.

3. The Proposed Traffic Flow-Prediction Model

The developed encoder–decoder model structure is depicted in Figure 1. The input sequence () is the historical traffic flow data which enter the encoder to capture the spatiotemporal features in the representation tensor (), then they enter into the decoder along with supplementary information to produce an output sequence () of the future traffic flow status. Here, Qtj represents the predicted results for each grid during time period tj. Next, the encoder and decoder are formally described in detail.

- (1) Encoder: Four layers are included in the encoder: a Local CNN layer, an MH-SSA layer (multi-position attention mechanism layer), an FC-FF layer (fully connected feed-forward network layer), and a residual connection [37] with a Norm layer [38]. Here, Ein is the input of the encoder and Een is the encoded output.

- (2) Decoder: The structure of the decoder is similar to that of the encoder, consisting of a Local CNN layer, double-attention mechanism layers (dotted brown rectangle), an FC-FF layer and a Norm layer. The first layer of the attention mechanism (blue-filled rectangle in Figure 1) is the MT-SSA layer, which takes the output of the Local CNN layer as input to obtain the spatiotemporal feature Qde. The second layer is the BiAtten layer (green-filled rectangle in Figure 1), which takes Een and Qde as the inputs to calculate the bidirectional attention matrix and . After the FC-FF layer and Norm layer, the decoded states can be obtained as Hde and Ude.

3.1. The Random Walk Mechanism Based on Multi-Graph Attention

To thoroughly investigate the impact of traffic accidents and congestion, the regions of a city are encoded where abnormal traffic events occurred at the same time intervals so as to extract the positional features and spatial details of the abnormal traffic events. Next, the encoded regional graph is subjected to a multi-graph attention mechanism to dynamically model the spatial correlations of various kinds of abnormal events, as local subgraphs. When the captured abnormal traffic events occur between regions, the spatial features can be captured via the introduced attention mechanism to especially focus on the common variations in the traffic data across regions after the occurrence of the same type of abnormal event. Therefore, spatiotemporal features can be extracted via the multi-graph attention mechanism with the random walk mechanism. When traffic anomalies occur in the future, the random walk mechanism can account for the local and global traffic flow changes in the urban regional network.

- (1) Location coding

Map coding is applied [29] to extract the future location of the abnormal traffic events, representing potential traffic congestion. The coded location is used as the accident location information, and the event location feature is represented as the one-hot vector , where v is the number of time intervals. Let represent the number of urban areas. Thus, the accident location coding can be embedded in ,

where is an activation function and are the learnable parameters in different layers of the network.
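The location coding step can be illustrated with a minimal Python sketch. It assumes a two-layer fully connected embedding with a ReLU activation; the layer count, the choice of activation, and the names `one_hot`, `dense`, and `embed_location` are illustrative assumptions rather than the configuration used in the experiments.

```python
def one_hot(index, size):
    """Return a one-hot list of length `size` with 1.0 at position `index`."""
    if not 0 <= index < size:
        raise ValueError("index out of range")
    return [1.0 if i == index else 0.0 for i in range(size)]


def dense(x, weights, bias):
    """Fully connected layer; `weights` holds one row per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def relu(x):
    """Element-wise ReLU, standing in for the unspecified activation function."""
    return [max(0.0, v) for v in x]


def embed_location(region_index, num_regions, w1, b1, w2, b2):
    """Embed a one-hot accident location with two dense layers (assumed depth)."""
    hidden = relu(dense(one_hot(region_index, num_regions), w1, b1))
    return relu(dense(hidden, w2, b2))
```

In practice the weights `w1`, `b1`, `w2`, and `b2` would be learned jointly with the rest of the network; here they are plain lists so that the data flow stays visible.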

- (2) Multiple subgraph attention modules

According to the location feature AEi, the subgraph B is formed via the historical traffic data . Then, the historical traffic data from various regions with the same abnormal event type at different time steps are aggregated as a virtual node v, and the node subset (Vb) is constructed with K neighboring regions. Then, the traffic flow data are integrated into subgraph B via the Graph Attention (GAT) block as [39],

where vi is the hidden state of the node, denoted as , representing the bth GAT block (); represents the correlation coefficient between node v and the adjacent node vi; Wb is the hyper-parameter of the bth graph. denotes the concatenation operation; represents the inner product; is a single-layer feed-forward neural network which maps the concatenated high-dimensional features to a real number. For the bth GAT block, the output of the multi-subgraph attention module is represented as .
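The aggregation performed by one GAT block can be sketched as follows. The sketch follows the standard graph attention formulation (shared linear map, concatenation, single-layer scoring network with LeakyReLU, softmax over neighbors) and treats `w` as a learnable weight matrix; these choices, the omission of the output nonlinearity, and all function names are assumptions made for illustration.

```python
import math


def matvec(w, x):
    """Multiply matrix `w` (list of rows) by vector `x`."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def leaky_relu(v, slope=0.2):
    return v if v > 0 else slope * v


def softmax(scores):
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def gat_aggregate(h_v, neighbors, w, a):
    """Aggregate K neighbor states onto the virtual node v.

    h_v: hidden state of node v; neighbors: list of K neighbor states;
    w: shared weight matrix; a: scoring vector over the concatenated features.
    Returns the attention coefficients and the aggregated feature.
    """
    wh_v = matvec(w, h_v)
    wh_n = [matvec(w, h) for h in neighbors]
    # Score each neighbor from the concatenation [W h_v || W h_i].
    scores = [leaky_relu(sum(ai * zi for ai, zi in zip(a, wh_v + wh_i)))
              for wh_i in wh_n]
    alpha = softmax(scores)
    out = [sum(al * wh_i[k] for al, wh_i in zip(alpha, wh_n))
           for k in range(len(wh_v))]
    return alpha, out
```

Running one such block per abnormal event type would give one aggregated feature per subgraph, which is the quantity the multi-subgraph attention module passes on.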

Considering the highly dynamic characteristics of the traffic flow conditions in accident-prone regions, the impact on the traffic flow of the surrounding areas varies with time and with the type of abnormal event. Here, the output of the multi-subgraph attention module is used with a random walk mechanism (RWA) to adaptively capture the correlations among various neighboring target nodes at low computational cost, improving the system's ability to adapt to local variations at different abnormal event locations.
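The exact update rule of the random walk mechanism is not spelled out in the text, so the following sketch substitutes one common formulation, a random walk with restart, purely as an assumption. Non-negative edge weights (for instance, attention coefficients between regions) are row-normalized into transition probabilities, and the walk repeatedly spreads mass from the region where the abnormal event occurred. The restart probability, the step count, and the function names are hypothetical.

```python
def row_normalize(weights):
    """Turn non-negative edge weights into random-walk transition probabilities."""
    normalized = []
    for row in weights:
        total = sum(row)
        normalized.append([w / total if total > 0 else 0.0 for w in row])
    return normalized


def random_walk_with_restart(weights, start, restart=0.15, steps=50):
    """Approximate visiting probabilities of a walk restarting at `start`.

    weights: square matrix of edge weights between regions.
    Returns one score per region; higher means more strongly affected.
    """
    p = row_normalize(weights)
    n = len(p)
    seed = [1.0 if i == start else 0.0 for i in range(n)]
    visit = seed[:]
    for _ in range(steps):
        # One propagation step: mass at region i moves to j with probability p[i][j].
        spread = [sum(visit[i] * p[i][j] for i in range(n)) for j in range(n)]
        visit = [restart * seed[j] + (1.0 - restart) * spread[j] for j in range(n)]
    return visit
```

Each step costs one pass over the edge weights, which is consistent with the low computational cost mentioned above; regions closer to the event location receive larger scores than distant ones.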

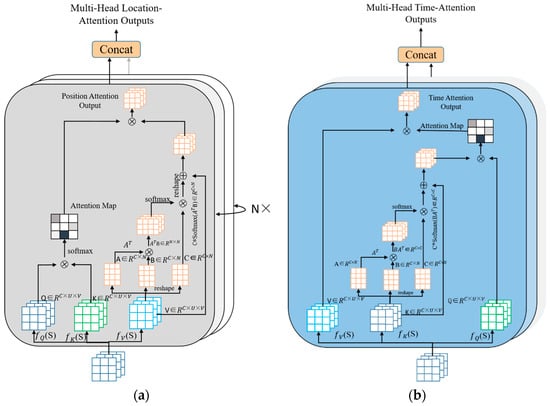

3.2. The Attention Mechanism Layer

The attention mechanism layer consists of the MH-SSA layer (multi-position attention layer), the MT-SSA layer (multi-time attention layer), and the BiAtten layer (the spatiotemporal bidirectional attention layer). Figure 2 depicts the configuration of the MH-SSA layer and the MT-SSA layer.

Figure 2.

The structure of the location and temporal attention modules. (a) The location attention module. (b) The temporal attention module.

- (1) The multi-position attention module

A location attention module is introduced in the encoder to extract the spatial correlation between potential local and global regions in the traffic flow history data. The location attention module weights all the location features based on similarities between regions in order to focus on both the local spatial correlation and global spatial correlations at the same time.

As shown in Figure 2a, given a historical dataset , it is passed through a convolutional layer so as to generate two new feature maps B and C, i.e., . Further, they are reshaped into , where is the number of city regions. Then, matrix C is multiplied by the transpose of B, and the spatial positional attention map is calculated via the softmax function,

where Sij is the impact of position i on position j. The greater the similarity of the feature representations, the greater the correlation between the two locations.

In the meantime, feature A is input into the convolution layer, and a new feature map D is generated and reshaped into R. The transpose of D is multiplied by S, and the result is reshaped into R as well. Afterwards, the reshaped result is multiplied by the scale parameter and summed element-wise with the feature A to obtain the final output,

where is the learnable parameter with initial value 0 [28]. Equation (7) shows that feature E is the weighted sum of the global spatial correlation features and the local spatial correlation features, so that similar regional spatial correlations are mutually enhanced. Then, E is used as a value matrix to participate in the calculation of the attention matrix.
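The computation of the location attention module can be sketched in a few lines. The sketch assumes that the feature maps B, C, and D have already been produced by the convolutional layers and reshaped into one feature vector per region, and it uses a dot product as the similarity; the function names and the argument layout are illustrative assumptions.

```python
import math


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def softmax(scores):
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def position_attention(a, b, c, d, alpha):
    """Location attention over N regions.

    a, b, c, d: lists of N per-region feature vectors (d matches a in width).
    alpha: learnable scale, initialised to 0 in the text.
    Returns E, where E[j] = alpha * sum_i S[i][j] * D[i] + A[j].
    """
    n = len(a)
    channels = len(a[0])
    out = []
    for j in range(n):
        # s_j[i]: impact of region i on region j, normalised over i.
        s_j = softmax([dot(b[i], c[j]) for i in range(n)])
        attended = [sum(s_j[i] * d[i][k] for i in range(n))
                    for k in range(channels)]
        out.append([alpha * attended[k] + a[j][k] for k in range(channels)])
    return out
```

With `alpha = 0.0` the function returns the input feature A unchanged, which matches the stated initial value of the scale parameter: the module starts as an identity mapping and learns how much global context to mix into each region.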

- (2) The multi-temporal attention module

A temporal attention module is designed to capture the long-term and short-term temporal periodical correlation from historical traffic flow data. The module emphasizes the interrelated long-term time features to improve the short-term time-dependent feature representation, shown in Figure 2b. Unlike the location-attention module, the temporal attention graph is calculated from the history traffic flow data . is reshaped to , , then the time attention map is obtained via the softmax layer:

where represents the impact of the ith timestamp on the jth timestamp. Subsequently, matrix multiplication is performed between X and the transpose of A to capture the periodicity in the long term, reshaping the result into . Then the result is multiplied by the scale parameter and summed element-wise with the original data A to obtain the final output:

where is the learnable weight with initial value 0. Equation (9) indicates that the final feature of each timestamp is a weighted summation of the periodic features of all timestamps with the correlation features of short-term timestamps. Then K is used as a key matrix to participate in the calculation of the attention matrix.

- (3) The bidirectional spatiotemporal attention module

Inspired by the design of the BiDAF network [40], the decoder includes a bidirectional spatiotemporal attention module designed to handle both the historical data (Een) and predicted data (Qde). To combine the spatial correlation features from the location attention module (LAM) with temporal correlation features from the temporal attention module (TAM), it computes a set of attention feature vectors. Following that, the spatiotemporal bidirectional attention features are computed at each timestamp, based not only on the features from the previous time step but also the interactions of all traffic flow situations.

In this module, the input consists of the spatial correlation features and . We compute the attention scores from both directions, to and to , for the calculations, i.e., the historical spatiotemporal awareness vector and the predicted spatiotemporal awareness vector .
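A minimal sketch of attention computed in both directions is given below. It follows the BiDAF pattern with a dot-product similarity: each historical step attends over the predicted steps, and the historical steps are then weighted by their best match against any predicted step. The similarity function, the max-pooling step, and every name in the code are assumptions borrowed from BiDAF, not a specification of the BiAtten layer.

```python
import math


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def softmax(scores):
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_sum(weights, vectors):
    width = len(vectors[0])
    return [sum(w * v[k] for w, v in zip(weights, vectors)) for k in range(width)]


def bidirectional_attention(e_en, q_de):
    """Attend between encoder states and decoder states in both directions.

    e_en: list of encoder (historical) feature vectors.
    q_de: list of decoder (predicted) feature vectors of the same width.
    Returns (hist_to_pred, pred_to_hist): one attended vector per historical
    step, and a single summary of the history weighted by relevance.
    """
    sim = [[dot(h, q) for q in q_de] for h in e_en]
    # Direction 1: every historical step attends over the predicted steps.
    hist_to_pred = [weighted_sum(softmax(row), q_de) for row in sim]
    # Direction 2: weight historical steps by their strongest similarity.
    pred_to_hist = weighted_sum(softmax([max(row) for row in sim]), e_en)
    return hist_to_pred, pred_to_hist
```

The two outputs play the role of the awareness vectors described above; how they map onto the historical and predicted awareness vectors of the model is left open here.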

- (4) The multi-output strategy

The MRWG BiAtten model can be used to predict the future traffic demand for all regions, thus maintaining the spatiotemporal correlations between regions and prediction time intervals. Inspired by the design of the BiDAF network [40], the integrated attention vectors , , the processed decoder states , , and the encoder states are all combined together,

where “◦” denotes the element-wise multiplication and [;] represents the concatenation of the cross columns. is then transformed to obtain the final result via a one-dimensional CNN, where each column of can be assumed as the predicted representation of each input of the historical regional traffic data.

4. Experiment and Result Analysis

4.1. Data Acquisition and Processing

The performance of the proposed MRWG BiAtten model is examined on two datasets, i.e., the BikeNYC dataset and the TaxiNYC dataset. Both datasets are associated with traffic flow demand, including time, region, demand request times, etc. In addition, motor vehicle accident data of New York City (NYC) are added to analyze the impact of abnormal events. The NYC dataset consists of traffic records covering a total of 60 days in 2016, including 8000 traffic accidents per month, where each record contains the location of the vehicles and the start and end times of the journey. Table 1 is a summary of the two datasets.

Table 1.

Dataset details.

The first 45 days of the dataset are used as the training data, and the remaining 15 days as the test set. Before training, min-max scaling is used to normalize the data to the range [0, 1], and the prediction output is de-normalized for evaluation. The Local CNN layers use a spatial pooling size of m = 7 and a 7 × 7 convolution kernel. In the proposed model, the dropout rate is set to dprate = 0.1, and the number of stacked model layers and the number of attention heads are set to 4 and 8, respectively. The hardware environment consists of one NVIDIA GeForce RTX 3080 (10 GB) GPU and two Intel Xeon Silver 4214R CPUs. The learning rate is set to 0.001 and the batch size is fixed at 64. The number of training epochs is set to 500 to ensure convergence, and the Adam optimizer is used to train the model.
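The preprocessing described above can be sketched as follows. The sketch assumes a flat, time-ordered series with a fixed number of samples per day and fits the scaling bounds on whatever values are passed in; the sampling interval, the decision of which subset to fit on, and the function names are assumptions, since the text does not state them.

```python
def fit_min_max(values):
    """Return the (minimum, maximum) used for min-max scaling."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("constant series cannot be min-max scaled")
    return lo, hi


def scale(values, lo, hi):
    """Map values into [0, 1] given the fitted bounds."""
    return [(v - lo) / (hi - lo) for v in values]


def unscale(values, lo, hi):
    """Invert `scale`, restoring the original flow units for evaluation."""
    return [v * (hi - lo) + lo for v in values]


def split_by_day(series, samples_per_day, train_days=45):
    """Split a time-ordered series into the first `train_days` days and the rest."""
    cut = train_days * samples_per_day
    return series[:cut], series[cut:]
```

A typical use would split first, fit the bounds on the training part, scale both parts with those bounds, and call `unscale` on the model output before computing the error metrics.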

4.2. Data Analysis and Evaluation Metrics

In order to verify the prediction performance of the proposed method, the root mean square error (RMSE) and mean absolute error (MAE) are used as the evaluation indexes:

where and represent the actual and predicted traffic flow, respectively; n is the number of traffic zones.
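Both metrics follow their standard definitions: RMSE is the square root of the mean squared difference between actual and predicted flow, and MAE is the mean absolute difference. A minimal sketch, assuming the values for the n traffic zones are given as two flat lists of equal length:

```python
import math


def rmse(actual, predicted):
    """Root mean square error over n paired observations."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(y - y_hat) ** 2 for y, y_hat in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def mae(actual, predicted):
    """Mean absolute error over n paired observations."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(y - y_hat) for y, y_hat in zip(actual, predicted)) / len(actual)
```

Because RMSE squares each error before averaging, it penalizes large deviations more heavily than MAE, which is why the two metrics are reported together.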

Several prediction models are used for comparison: HA, ARIMA, LSTM, ConvLSTM, and STDN (Spatiotemporal Dynamic Network). These methods can be divided into three categories: (1) traditional statistics-based time series prediction models (HA, ARIMA); (2) a time series prediction model based on deep learning (LSTM); (3) deep learning networks for traffic flow prediction based on spatiotemporal feature mining (ConvLSTM, STDN). The details of these comparison methods are described below.

4.3. Comparison Experiments and Analysis

The test results on the two datasets are listed in Table 2. The MAE and RMSE on TaxiNYC are higher than those on BikeNYC, as the data scale of TaxiNYC is larger than that of BikeNYC. In both metrics, the statistical time series analysis methods HA and ARIMA have significantly larger prediction deviations than the LSTM method on both datasets. This indicates that manually extracted temporal features cannot precisely capture the nonlinear temporal correlations in traffic data.

Table 2.

Comparison results conducted on BikeNYC and TaxiNYC datasets.

We take inflow data as an example. On the BikeNYC dataset, the ConvLSTM method has a lower RMSE and MAE than LSTM by 1.22% and 2.01%, respectively. On the TaxiNYC dataset, ConvLSTM outperforms LSTM with 7.59% lower RMSE and 5.61% lower MAE. This suggests that spatial and temporal correlations are more effective than temporal correlations alone, emphasizing the significance of modeling spatial dependencies between regions.

On the BikeNYC dataset, we take the inflow data as an example, where our proposed approach outperforms ConvLSTM by 18.59% and 14.22%, and STDN by 9.49% and 2.17%, when it comes to capturing spatial and short-term/long-term temporal correlations. On the TaxiNYC dataset, our proposed method can achieve lower RMSE and MAE compared to ConvLSTM by 10.35% and 20.79%, and compared to STDN by 3.25% and 6.64%.
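The percentages quoted in this comparison read most naturally as relative error reductions, that is, the drop in a metric divided by the baseline value. That interpretation is an assumption; under it, the computation is the short helper below, whose name and example values are hypothetical and not taken from Table 2.

```python
def relative_reduction(baseline_error, model_error):
    """Percentage by which `model_error` is lower than `baseline_error`."""
    if baseline_error <= 0:
        raise ValueError("baseline error must be positive")
    return 100.0 * (baseline_error - model_error) / baseline_error


# Hypothetical values for illustration only: a baseline RMSE of 10.0 and a
# model RMSE of 8.0 correspond to a 20% relative reduction.
example = relative_reduction(10.0, 8.0)
```

A positive result means the model has the lower error; a negative result would mean the baseline performs better on that metric.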

It is noteworthy that STDN with an attention mechanism outperforms the ConvLSTM model. This observation confirms that the attention mechanism can be used to enhance the capability of spatiotemporal feature extraction. Our approach is designed with a multi-head position attention module to extract spatial features, a multi-head time attention module to extract temporal features, and a bidirectional attention module to extract both temporal and spatial features. This allows our model to have superior spatiotemporal prediction capability compared to the baseline models.

The suboptimal performance of ConvLSTM may be attributed to its incapacity to completely exploit the potential spatiotemporal correlations in traffic flow data. Therefore, in order to improve the prediction accuracy, STDN makes an effort to capture spatial correlations as well as short- and long-duration temporal correlations via multi-perspective and long-term attention processes. However, the prediction accuracy is lower than that of the MRWG BiAtten due to the omitted effects of other events, including accidents in traffic flow.

Our approach utilizes a random walk method based on a multi-graph attention mechanism to encode the accident data as spatial features to depict traffic congestion, and performs much better than the networks without the accident-encoding structure. As indicated in Table 3, the role of accident data in traffic flow prediction is evaluated via RMSE. The right two columns indicate whether the accident data module is considered; the majority of the aforementioned techniques perform better when the accident data are taken into account, particularly for models which take spatial correlations into account. It is seen that the proposed model can achieve the best improvement since the accident data are incorporated via the random walk method based on a multi-graph attention mechanism. However, LSTM, due to its focus only on the temporal features, performs poorly in extracting spatial relationships from external features, indicating that accident data can be used to extract useful local semantic information variations and apply them to traffic flow prediction.

Table 3.

Analysis of the role of the accident module.

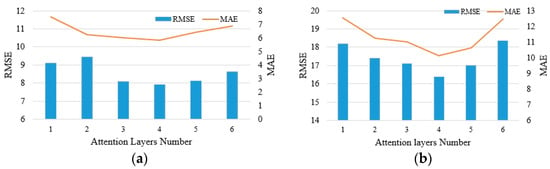

4.4. Sensitivity Analysis

The sensitivity of the proposed MRWG BiAtten model is analyzed. Figure 3a demonstrates that the RMSE error first drops then rises with the increase in attention layers performed on the BikeNYC inflow dataset, and the MAE error has a similar tendency. Therefore, moderately increasing the network depth can increase the prediction accuracy. However, it could face overfitting or other issues if the network is too deep, which can explain the reason that the RMSE error increases with more than four attention layers. Figure 3b shows that the model also behaves similarly on the TaxiNYC inflow dataset.

Figure 3.

Attention layer analysis for MRWG BiAtten. (a) Results of adding more attention layers on BikeNYC dataset. (b) Results of adding more attention layers on TaxiNYC dataset.

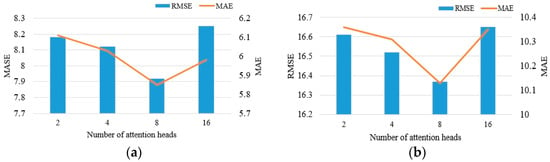

We have also explored the impact of the number of attention heads. Figure 4 depicts the RMSE and MAE values on the BikeNYC and TaxiNYC datasets as the number of attention heads varies. The results indicate that, when the number of attention heads is fewer than 8, both RMSE and MAE values gradually decrease as the head count increases. However, once the count exceeds 8, RMSE and MAE values begin to rise. This suggests that appropriately increasing the number of attention heads can enhance the performance, whereas exceeding a certain number may lead to overfitting.

Figure 4.

Attention head analysis for MRWG BiAtten. (a) Results of adding attention heads on the BikeNYC dataset. (b) Results of adding attention heads on the TaxiNYC dataset.

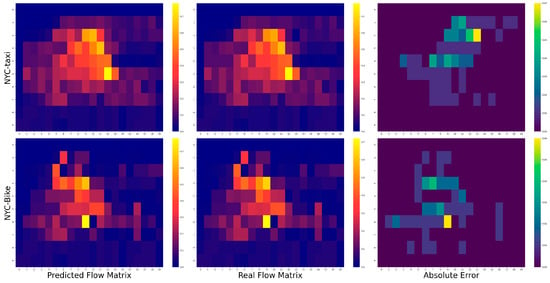

Eventually, the best prediction performance is achieved when all components are combined together. As displayed in Figure 5, high prediction accuracy can be obtained in most regions with high proportion, indicating that the model performs satisfactorily in traffic flow prediction.

Figure 5.

Flow matrix at the latest timestamp on the two datasets.

There are two main reasons that the MRWG BiAtten model works better than the alternative methods: (1) The MRWG BiAtten model uses the position attention mechanism to capture both global and local spatial correlations in the data and the temporal attention modules to model the interdependencies between short-term and long-term temporal features, while bidirectional attention mechanisms are applied to integrate spatiotemporal features, designating the entire traffic data tensor as the input. (2) The MRWG BiAtten model addresses the impact of abnormal traffic events by designing a random walk mechanism based on the multi-graph attention mechanism to account for the fluctuations in the local and global traffic flow of the city region network when abnormal traffic events occur in the future. This could be beneficial for making accurate short-term traffic flow predictions in the event of traffic accidents and congestion.

5. Conclusions

This paper proposes a traffic flow-prediction method based on a multiple random walk on graphs and bidirectional attention mechanism within an encoder–decoder framework, focusing on the impact of spatial and time correlations under traffic accidents and congestion. It also utilizes a spatiotemporal bidirectional attention mechanism to capture the interaction between spatial correlations and temporal correlations in historical, current, and future demand representations. On the practical function level, the MRWG BiAtten model allows us to address the impact of abnormal events in traffic system modeling. Experiments on the TaxiNYC and BikeNYC datasets demonstrate the superiority in traffic flow-prediction efficiency of our MRWG BiAtten model in abnormal events, especially short-term traffic disruptions. When compared with ConvLSTM and STDN, it is confirmed that a location attention mechanism and a time attention mechanism are useful for capturing global and local spatiotemporal information in the data. Overall, the research results of this paper can facilitate the development and renewal of ITS, and make substantial contributions to ameliorating traffic congestion dilemmas in fast developing countries both domestically and internationally. Future work will continue to consider other impacts on traffic situations, such as traffic control, while it is also worthwhile to investigate how to lower the number of parameters to enhance model prediction speed.

Author Contributions

Methodology, S.Y.; Validation, S.Y.; Writing—review & editing, Y.Z.; Supervision, Y.Z. and Z.W.; Funding acquisition, Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Key R&D Program of China (No. 2022YFB2403805) and in part by the Shenzhen Basic Program (No. JCYJ20200109114839874).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. The TaxiNYC data can be found here: [https://www.nyc.gov/site/tlc/about/tlc-trip-record-data.page]. The BikeNYC data can be found here: [https://citibikenyc.com/system-data]. The accident data can be found here: [https://opendata.cityofnewyork.us/]. All sources were accessed on 5 May 2024.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhang, J.; Wang, F.-Y.; Wang, K.; Lin, W.-H.; Xu, X.; Chen, C. Data driven intelligent transportation systems: A survey. IEEE Trans. Intell. Transp. Syst. 2011, 12, 1624–1639.

- Zhu, L.; Yu, F.R.; Wang, Y.; Ning, B.; Tang, T. Big data analytics in intelligent transportation systems: A survey. IEEE Trans. Intell. Transp. Syst. 2019, 20, 383–398.

- Zheng, J.; Ni, L.M. Time-dependent trajectory regression on road networks via multi-task learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 14–18 July 2013; pp. 1048–1055.

- Wang, Z.; Lv, C.; Wang, F.-Y. A New Era of Intelligent Vehicles and Intelligent Transportation Systems: Digital Twins and Parallel Intelligence. IEEE Trans. Intell. Veh. 2023, 8, 2619–2627.

- Lippi, M.; Bertini, M.; Frasconi, P. Short-term traffic flow forecasting: An experimental comparison of time-series analysis and supervised learning. IEEE Trans. Intell. Transp. Syst. 2013, 14, 871–882.

- Zhang, J.; Zheng, Y.; Qi, D.; Li, R.; Yi, X. DNN-based prediction model for spatio-temporal data. In Proceedings of the SIGSPATIAL’16: 24th ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems, Burlingame, CA, USA, 31 October–3 November 2016; pp. 1–4.

- Veres, M.; Moussa, M. Deep learning for intelligent transportation systems: A survey of emerging trends. IEEE Trans. Intell. Transp. Syst. 2020, 21, 3152–3168.

- Williams, B.M.; Hoel, L.A. Modeling and forecasting vehicular traffic flow as a seasonal ARIMA process: Theoretical basis and empirical results. J. Transp. Eng. 2003, 129, 664–672.

- Wu, C.-H.; Ho, J.-M.; Lee, D.T. Travel-time prediction with support vector regression. IEEE Trans. Intell. Transp. Syst. 2004, 5, 276–281.

- Nikovski, D.; Nishiuma, N.; Goto, Y.; Kumazawa, H. Univariate short-term prediction of road travel times. In Proceedings of the 2005 IEEE Intelligent Transportation Systems, 2005, Vienna, Austria, 16 September 2005; pp. 1074–1079.

- Shekhar, S.; Williams, B.M. Adaptive seasonal time series models for forecasting short-term traffic flow. Transp. Res. Rec. J. Transp. Res. Board 2007, 2024, 116–125.

- Li, X.; Pan, G.; Wu, Z.; Qi, G.; Li, S.; Zhang, D.; Zhang, W.; Wang, Z. Prediction of urban human mobility using large-scale taxi traces and its applications. Front. Comput. Sci. 2012, 6, 111–121.

- Moreira-Matias, L.; Gama, J.; Ferreira, M.; Mendes-Moreira, J.; Damas, L. Predicting taxi-passenger demand using streaming data. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1393–1402.

- Chen, X.; He, Z.; Wang, J. Spatial-temporal traffic speed patterns discovery and incomplete data recovery via SVD-combined tensor decomposition. Transp. Res. Part C Emerg. Technol. 2018, 86, 59–77.

- Ma, X.; Tao, Z.; Wang, Y.; Yu, H.; Wang, Y. Long short-term memory neural network for traffic speed prediction using remote microwave sensor data. Transp. Res. Part C Emerg. Technol. 2015, 54, 187–197.

- Cui, Z.; Ke, R.; Pu, Z.; Wang, Y. Deep bidirectional and unidirectional LSTM recurrent neural network for network-wide traffic speed prediction. arXiv 2018, arXiv:1801.02143.

- Van Den Oord, A.; Dieleman, S.; Zen, H.; Simonyan, K.; Vinyals, O.; Graves, A.; Kalchbrenner, N.; Senior, A.; Kavukcuoglu, K. WaveNet: A generative model for raw audio. arXiv 2016, arXiv:1609.03499.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Shi, X.; Chen, Z.; Wang, H.; Yeung, D.-Y.; Wong, W.-K.; Woo, W.-C. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. In Proceedings of the NIPS’15: 28th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 802–810.

- Zhang, J.; Zheng, Y.; Qi, D. Deep spatio-temporal residual networks for citywide crowd flows prediction. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 1655–1661.

- Yao, H.; Wu, F.; Ke, J.; Tang, X.; Jia, Y.; Lu, S.; Gong, P.; Ye, J.; Chuxing, D.; Li, Z. Deep multi-view spatial-temporal network for taxi demand prediction. In Proceedings of the AAAI’18: AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 2588–2595.

- Geng, X.; Li, Y.; Wang, L.; Zhang, L.; Yang, Q.; Ye, J.; Liu, Y. Spatiotemporal multi-graph convolution network for ride-hailing demand forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 3656–3663.

- Zhang, C.; Yu, J.J.Q.; Liu, Y. Spatial-temporal graph attention networks: A deep learning approach for traffic forecasting. IEEE Access 2019, 7, 166246–166256.

- Cui, Z.; Henrickson, K.; Ke, R.; Wang, Y. Traffic graph convolutional recurrent neural network: A deep learning framework for network-scale traffic learning and forecasting. IEEE Trans. Intell. Transp. Syst. 2020, 21, 4883–4894.

- Liu, T.; Jiang, A.; Zhou, J.; Li, M.; Kwan, H.K. GraphSAGE-based dynamic spatial–temporal graph convolutional network for traffic prediction. IEEE Trans. Intell. Transp. Syst. 2023, 24, 11210–11224.

- Deng, D.; Shahabi, C.; Demiryurek, U.; Zhu, L.; Yu, R.; Liu, Y. Latent space model for road networks to predict time-varying traffic. In Proceedings of the KDD’16: The 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1525–1534.

- Pan, B.; Demiryurek, U.; Shahabi, C. Utilizing real-world transportation data for accurate traffic prediction. In Proceedings of the 2012 IEEE 12th International Conference on Data Mining, Brussels, Belgium, 10–13 December 2012; pp. 595–604.

- Castro-Neto, M.; Jeong, Y.S.; Jeong, M.K.; Han, L.D. Online-SVR for short-term traffic flow prediction under typical and atypical traffic conditions. Expert Syst. Appl. 2009, 36, 6164–6173.

- Guo, F.; Krishnan, R.; Polak, J.W. Short-term traffic prediction under normal and abnormal traffic conditions on urban roads. In Proceedings of the Transportation Research Board 91st Annual Meeting, Washington, DC, USA, 22–26 January 2012; pp. 1–17.

- Chen, X.M.; Zhang, S.; Li, L. Multi-model ensemble for short-term traffic flow prediction under normal and abnormal conditions. IET Intell. Transp. Syst. 2018, 13, 260–268.

- Yu, R.; Li, Y.; Shahabi, C.; Demiryurek, U.; Liu, Y. Deep learning: A generic approach for extreme condition traffic forecasting. In Proceedings of the 2017 SIAM International Conference on Data Mining, Houston, TX, USA, 27–29 April 2017; pp. 777–785.

- Jiang, R.; Song, X.; Huang, D.; Song, X.; Xia, T.; Cai, Z.; Wang, Z.; Kim, K.S.; Shibasaki, R. Deepurbanevent: A system for predicting citywide crowd dynamics at big events. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 2114–2122.

- Liu, Z.; Zhang, R.; Wang, C.; Xiao, Z.; Jiang, H. Spatial-Temporal Conv-Sequence Learning With Accident Encoding for Traffic Flow Prediction. IEEE Trans. Netw. Sci. Eng. 2022, 9, 1765–1775.

- Huang, Z.; Wang, D.; Yin, Y.; Li, X. A spatiotemporal bidirectional attention-based ride-hailing demand prediction model: A case study in Beijing during COVID-19. IEEE Trans. Intell. Transp. Syst. 2021, 23, 25115–25126.

- Luo, D.; Zhao, D.; Cao, Z.; Wu, M.; Liu, L.; Ma, H. M3AN: Multitask Multirange Multisubgraph Attention Network for Condition-Aware Traffic Prediction. IEEE Trans. Intell. Transp. Syst. 2023, 24, 218–232.

- Pan, Z.; Zhang, W.; Liang, Y.; Zhang, W.; Yu, Y.; Zhang, J.; Zheng, Y. Spatio-temporal meta learning for urban traffic prediction. IEEE Trans. Knowl. Data Eng. 2022, 34, 1462–1476.

- Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

- Seo, M.; Kembhavi, A.; Farhadi, A.; Hajishirzi, H. Bidirectional attention flow for machine comprehension. arXiv 2016, arXiv:1611.01603.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).