Abstract

To address the complex structures and low accuracy of current citrus pest identification models, a lightweight pest identification model is proposed. First, a parametric rectified linear unit (PReLU) activation function was introduced to avoid neuron death. Second, the model’s attention to pest characteristics was strengthened by incorporating an improved mixed attention mechanism. Third, the network structure of the original model was adjusted to reduce architectural complexity. Finally, transfer learning was employed to obtain the SCHNet model. The experimental results indicated that the proposed model achieved an accuracy of 94.48% with a compact size of 3.84 MB. Compared with the original ShuffleNet V2 network, the SCHNet model improved accuracy by 3.12 percentage points while reducing the model size by 22.7%. The SCHNet model thus exhibited excellent classification performance and enabled the accurate identification of citrus pests.

1. Introduction

Citrus, the most extensively cultivated fruit tree variety in China, is predominantly distributed in the southern regions of the country owing to its high profitability. In 2021, the annual citrus production in China reached 57.3216 million tons, representing roughly one third of the global total output [1,2]. Due to the excessive application of pesticides, expansion of citrus cultivation areas, and global climate change, the incidence and spread of citrus pests and diseases are worsening. China may encounter new challenges in controlling citrus pests.

Currently, traditional pest identification methods include manual identification [3] and instrument-based identification. Manual identification is highly subjective, inefficient, and labor-intensive [4,5,6], whereas instrument-based identification is susceptible to external environmental factors, hardware devices, and software systems. These shortcomings may delay the detection and treatment of pests, causing crop damage and reduced yields. Deep learning image recognition technology can effectively address these issues, facilitate the diagnosis and prevention of crop pests, and promote rapid advancements in agriculture [7,8,9].

Common deep learning networks can be classified into two categories: large networks (e.g., ResNet [10] and VggNet [11]) and lightweight networks (e.g., SqueezeNet [12], ShuffleNet [13], and MobileNet [14]). In research on large networks, Pan Chenlu et al. [15] introduced a G-ECA Layer structure into the DenseNet201 model to enhance its feature extraction capability. The new model achieved an accuracy of 83.52% in recognizing five categories of images of rice diseases and insect pests. In addition, Cen Xiao et al. [16] integrated the SeparableConv architecture with depthwise separable convolutional layers based on the Xception network, achieving complete decoupling of spatial and cross-channel correlations. This model improved effectiveness without increasing network complexity and achieved an identification accuracy of 81.9% in recognizing four citrus diseases and pests, such as woodlice and fruit flies. Zeba et al. [17] proposed an ensemble-based model using transfer learning, in which several combinations of pretrained models were tested. The ensemble comprising VGG16, VGG19, and ResNet50, followed by a voting classifier, yielded the most promising results, achieving an accuracy of approximately 82.5%. Su Hong et al. [18] used an R-CNN model with a 33-layer ResNet backbone to identify citrus huanglongbing, red spider infestation, and canker disease. Although the backbone had relatively few convolutional layers, the model still achieved high recognition accuracy.

The studies discussed above faced two main challenges: model complexity and suboptimal recognition accuracy. Lightweight networks, which combine low computational demands and robust learning capacity with modest memory usage and adaptability, have therefore become increasingly preferred for pest identification tasks. Gan et al. [19] enhanced EfficientNet by integrating a coordinate attention mechanism and a hybrid training approach that combines data augmentation with the Adam optimization algorithm, which improved the model’s generalization capability. Despite these improvements, the model attained a modest accuracy of 69.45% with 5.38 M parameters. Zhang Pengcheng et al. [20] augmented MobileNet V2 with the efficient channel attention (ECA) mechanism, which improved the network’s capability for cross-channel interaction and feature extraction. Their model achieved a classification accuracy of 93.63% for citrus pests with 3.5 M parameters. Setiawan et al. [21] proposed an efficient training framework for small MobileNetV2 models that leveraged dynamic learning rate adjustment, CutMix augmentation, layer freezing, and sparse regularization, achieving an accuracy of 71.32% with 4.2 M parameters. However, models with reduced parameter counts did not yield high accuracy. Zhou et al. [22] proposed a GE-ShuffleNet convolutional neural network model for rice disease identification; the model reached an accuracy of 96.6%, but its size was 5.879 M. Liu et al. [23] proposed a training model based on bidirectional encoder representations from transformers (BERT) that achieved an accuracy of 94.23% on a purpose-built dataset. However, none of the above models combines a low parameter count with high accuracy.

Considering the issues highlighted in the aforementioned research, this study focused on optimizing the ShuffleNet V2 lightweight convolutional neural network model. The objective was to enhance its structure to develop a streamlined architecture capable of achieving high recognition rates for pest identification.

2. Materials and Methods

2.1. Data Set Collection and Processing

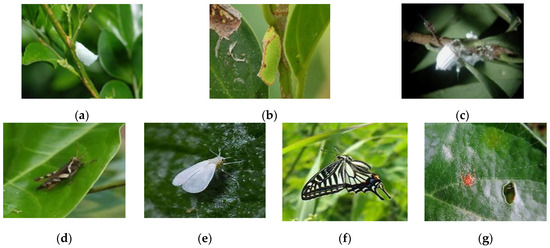

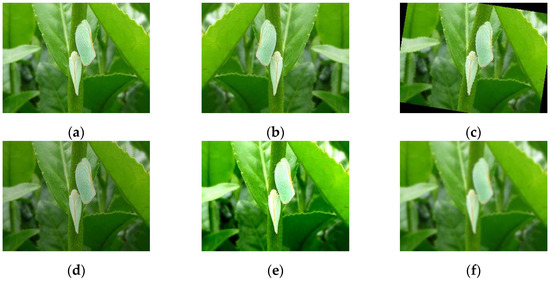

The research dataset comprised images collected from citrus orchards at various locations around Guilin and from online sources. The study focused on seven common citrus pests: Lawana imitata, Geisha distinctissma, Icerya purchasi, Xenocatantops prachycerus, Dialeurodes citri, Papilio xuthus, and Panonychus citri. These pests are depicted in Figure 1. Among them, the adults and nymphs of the white moth Lawana imitata [24] and of Icerya purchasi [25] cluster on hidden branches and trunks to suck sap, which weakens the tree; the honeydew they discharge also induces sooty mold and impairs photosynthesis. Panonychus citri, also known as the citrus whole-clawed mite, has a strong reproductive ability and an obvious alternation of generations and occurs throughout the year in some citrus production areas, mainly as adult mites and nymphs that suck sap from citrus leaves and fruits, which seriously affects citrus yield and quality [26]. The damage caused by these three pests is the most serious.

To enhance model stability and mitigate overfitting, the original dataset was augmented using techniques such as flipping, brightness adjustment, and Gaussian blur, as demonstrated in Figure 2. As a result, the dataset expanded to 7089 images. This augmentation improved the model’s accuracy, robustness, and reliability. The dataset was then divided into a training set and a test set: for each pest type, 80% of the images were allocated to the training set and the remaining 20% formed the test set. The training and test sets contained 5676 and 1413 images, respectively. Table 1 lists the distribution of these sets.

Figure 1.

Pest images. (a) Lawana imitata (b) Geisha distinctissma (c) Icerya purchasi (d) Xenocatantops prachycerus (e) Dialeurodes citri (f) Papilio xuthus (g) Panonychus citri.

Figure 2.

Augmented images. (a) Original (b) Horizontal flip (c) Flip (d) Brightness adjustment (e) Contrast and brightness adjustment (f) Gaussian blur.

Table 1.

Data set.

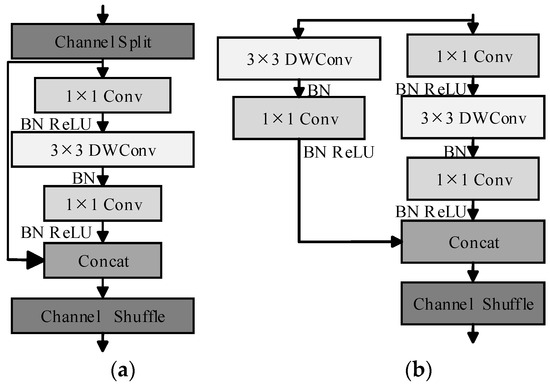
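The per-class 80/20 split described in this section can be sketched as follows. This is a minimal illustration using only the Python standard library, not the authors' code: the function name `stratified_split`, the seed, and the toy file names are assumptions made for the example.

```python
import random
from collections import defaultdict

def stratified_split(samples, train_fraction=0.8, seed=0):
    """Split (path, label) pairs so every class keeps the same train/test ratio."""
    by_class = defaultdict(list)
    for path, label in samples:
        by_class[label].append(path)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for label in sorted(by_class):
        paths = by_class[label]
        rng.shuffle(paths)
        cut = round(len(paths) * train_fraction)
        train += [(p, label) for p in paths[:cut]]
        test += [(p, label) for p in paths[cut:]]
    return train, test

# Hypothetical toy data: 7 pest classes with 100 placeholder file names each.
samples = [(f"class{c}/img_{i}.jpg", c) for c in range(7) for i in range(100)]
train_set, test_set = stratified_split(samples)
print(len(train_set), len(test_set))
```

Splitting within each class, rather than over the pooled images, keeps rare pest types represented in both subsets. Rounding per class is also why the reported totals (5676 and 1413 of 7089) need not be exactly 80% and 20%.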

2.2. ShuffleNet V2 Model

The ShuffleNet V2 network developed by Ma et al. [13] is a lightweight model that builds upon the ShuffleNet V1 architecture. Its design follows four guidelines on factors that significantly affect network speed. (1) When the input and output channels of a convolutional layer are equal, the memory access cost is minimized and the model runs fastest. (2) Excessive group convolution increases the memory access cost, resulting in a slower model. (3) The fewer the branches in the network, the faster it runs. (4) Element-wise (point-by-point) operations slow the model and should be kept to a minimum.

The ShuffleNet V2 comprises two main components: the basic unit and the downsampling unit, as depicted in Figure 3a,b, respectively. In the basic unit, the input image features are divided evenly into two groups under a channel split operation. The right branch sequentially traverses the 1 × 1 convolution, 3 × 3 depth-wise convolution, and a 1 × 1 convolution, while the left branch remains unprocessed. Subsequently, the left and right branches are concatenated, and channel shuffling is performed to enhance the information exchange between different groups. In the downsampling unit, the image features can directly enter both branches. The right branch undergoes sequential processing through a 1 × 1 convolution, 3 × 3 depth-wise convolution with a stride of 2, and a 1 × 1 convolution, and the left branch first undergoes a 3 × 3 depth-wise convolution with a stride of 2 and then a 1 × 1 convolution. Subsequently, the left and right branches are concatenated and channel shuffling enhances information exchange between different groups.

Figure 3.

Basic module of ShuffleNet V2. (a) Basic unit (b) downsampling unit.

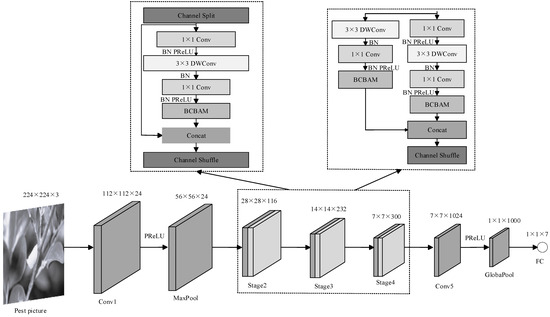
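The channel split, concatenation, and channel shuffle steps of the basic unit can be illustrated on a plain list of channel labels. This is a simplified sketch: `right_branch` is a hypothetical stand-in for the 1 × 1, 3 × 3 depth-wise, and 1 × 1 convolutions, and real implementations operate on tensors rather than lists.

```python
def channel_shuffle(channels, groups=2):
    """Interleave channels drawn from `groups` equally sized groups.

    Equivalent to reshaping to (groups, n), transposing, and flattening.
    """
    if len(channels) % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    per_group = len(channels) // groups
    return [channels[g * per_group + i]
            for i in range(per_group)
            for g in range(groups)]

def basic_unit(channels, right_branch):
    """Channel split -> process right half -> concat -> channel shuffle."""
    half = len(channels) // 2
    left, right = channels[:half], channels[half:]
    return channel_shuffle(left + right_branch(right), groups=2)

labels = ["a0", "a1", "a2", "b0", "b1", "b2"]
print(channel_shuffle(labels))  # ['a0', 'b0', 'a1', 'b1', 'a2', 'b2']
```

After the shuffle, each half of the next unit's channel split contains channels from both branches, which is how information is exchanged between the groups.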

2.3. Improved SCHNet Model

The dataset created for this study faced challenges, such as pest blending with the background, complex backgrounds in pest images, and variations in pest sizes. Therefore, ShuffleNet V2 1.0× was selected as the backbone network and was adapted accordingly. The SCHNet architecture is illustrated in Figure 4. In this figure, Conv, Conv1, and Conv5 represent the convolution operations, Channel split denotes the channel separation, DWConv represents the depth-wise convolution, Concat indicates the channel concatenation, Channel Shuffle represents the channel shuffling, and MaxPool denotes the max pooling. Stage2, Stage3, and Stage4 comprise the enhanced basic and downsampling units stacked together. GlobalPool represents the global average pooling. FC represents the fully connected layer, PReLU denotes the activation function, and BCBAM denotes the improved CBAM attention mechanism module.

Figure 4.

SCHNet structure.

2.3.1. Parametric Linear Rectification Function

ReLU was selected as the activation function for the convolutional layers in the ShuffleNet structure because of its two primary benefits: overcoming gradient vanishing issues and accelerating training. However, ReLU has drawbacks. During training, ReLU neurons may become inactive when negative values or large gradients pass through them, thereby preventing the corresponding parameters from being updated. To address this limitation, a parametric linear rectifier function (PReLU) was adopted as a replacement. Initially proposed by He et al. [27] in 2015, PReLU introduces a learnable parameter α on the basis of ReLU. Consequently, when the input is negative, the output becomes α times the input value instead of simply being 0. This adjustment mitigates the dead neuron problem associated with ReLU, as even negative inputs yield nonzero outputs with a controllable slope. This slope can be learned during training, thereby enhancing the performance of the model. The PReLU activation function is represented by Equation (1).
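Equation (1) does not survive in this text. Based on the definitions that follow and on the standard PReLU formulation, it presumably takes the form below, with χ_i the input on channel i and α_i the slope for negative inputs; the typesetting is a reconstruction rather than the authors' original.

```latex
\mathrm{PReLU}(\chi_i) =
\begin{cases}
\chi_i, & \chi_i > 0 \\
\alpha_i \chi_i, & \chi_i \le 0
\end{cases}
\tag{1}
```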

where α is initialized as a random number following a normal distribution between 0 and 1 and i represents a specific channel. When αi = 0, the PReLU(χ) function reduces to the ReLU function. When αi is a small fixed positive value, the PReLU(χ) function becomes the Leaky ReLU function. When αi is a learnable parameter, PReLU(χ) is the parametric ReLU function.
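The three cases above, and the reason a nonzero slope avoids dead neurons, can be checked with a scalar sketch. The function names and the slope values 0.01 and 0.25 are illustrative assumptions, not values reported in this study.

```python
def prelu(x, alpha):
    """Return x for positive inputs and alpha * x otherwise."""
    return x if x > 0 else alpha * x

def prelu_grad(x, alpha):
    """Derivative of prelu with respect to x (taken as alpha at x = 0)."""
    return 1.0 if x > 0 else alpha

inputs = [-2.0, -0.5, 0.0, 1.5]
relu_like = [prelu(x, 0.0) for x in inputs]    # alpha = 0 behaves as ReLU
leaky_like = [prelu(x, 0.01) for x in inputs]  # small fixed alpha: Leaky ReLU
learned = [prelu(x, 0.25) for x in inputs]     # alpha treated as a learned value

# With alpha = 0 the gradient for negative inputs is zero, so the weights feeding
# the neuron stop updating; any nonzero alpha keeps a gradient flowing.
print(prelu_grad(-2.0, 0.0), prelu_grad(-2.0, 0.25))
```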

2.3.2. Improved Mixed Attention Mechanism

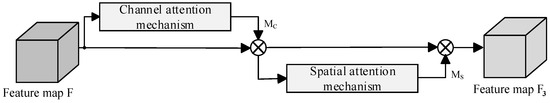

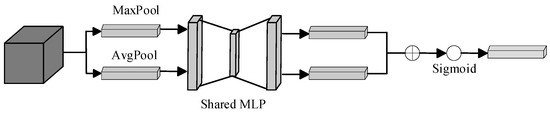

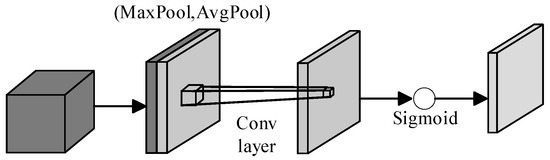

The convolutional block attention module (CBAM), also known as a mixed attention mechanism [28], was inserted into the above base block. As shown in Figure 5, CBAM is a serial structure: the image feature map F output by the convolutional layer first passes through the channel attention module to obtain F2, which then passes through the spatial attention module to obtain F3, as given in Equation (2). The structures of the channel and spatial attention mechanisms are depicted in Figure 6 and Figure 7, respectively. However, the multiplication operations on the pest feature maps consume additional computational resources and increase the computational complexity of the model, especially if the network is deep or the feature map is large. In the serial connection of CBAM, the modules calculate their weights independently and in sequence, resulting in higher computational costs and memory usage. Moreover, each module can attend only to the features produced by the preceding module and disregards those of the subsequent modules.
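Equation (2) is missing from this text. From the description above and the standard CBAM formulation, it presumably reads as follows; the typesetting is a reconstruction.

```latex
\begin{aligned}
F_2 &= M_C(F) \otimes F \\
F_3 &= M_S(F_2) \otimes F_2
\end{aligned}
\tag{2}
```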

where MC(F) represents the output weight resulting from F passing through the channel attention module and MS(F2) represents the output weight after F2 passes through the spatial attention module. F2 is an intermediate variable, and ⊗ represents the operator for weighted multiplication of the feature map.

Figure 5.

Structure of mixed attention mechanism.

Figure 6.

Channel attention mechanism.

Figure 7.

Spatial attention mechanism.

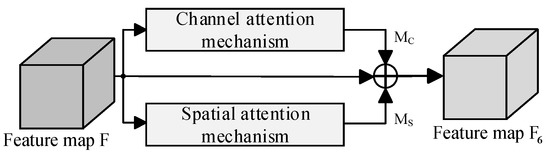

To address these issues, the serial connection of the mixed attention mechanism was changed to a parallel connection. In addition, the feature maps are combined by summation rather than multiplication before the output, which enhances information integration and facilitates the extraction of features with greater representational power. In a parallel connection, multiple modules can compute their weights concurrently, and the addition of feature maps helps alleviate gradient vanishing, thereby promoting better training of deep neural networks. The proposed BCBAM attention mechanism is illustrated in Figure 8 and its functional expression is presented in Equation (3).
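Equation (3) is also missing from this text. One reading consistent with the parallel structure described above and the symbols defined below is sketched here. The output symbol F_out and the exact placement of the weighting are assumptions, not the authors' confirmed formulation.

```latex
\begin{aligned}
F_4 &= M_C(F) \otimes F \\
F_5 &= M_S(F) \otimes F \\
F_{\mathrm{out}} &= F_4 \oplus F_5
\end{aligned}
\tag{3}
```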

where MS(F) denotes the output weight after F passes through the spatial attention module. F4 and F5 are intermediate variables, and ⊕ represents the operator for the weighted addition of the feature map.

Figure 8.

Structure of BCBAM attention mechanism.
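The difference between the serial and parallel arrangements can be made concrete with a toy sketch on nested lists (channels × height × width). The attention functions here are deliberately simplified stand-ins: a sigmoid of the mean replaces the pooling, shared MLP, and convolution of the real modules. The parallel form follows the assumed reading of the structure, so this illustrates the data flow only.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def channel_weights(f):
    """One weight per channel: sigmoid of that channel's spatial mean (stand-in for M_C)."""
    return [sigmoid(sum(map(sum, ch)) / (len(ch) * len(ch[0]))) for ch in f]

def spatial_weights(f):
    """One weight per position: sigmoid of the mean over channels (stand-in for M_S)."""
    h, w = len(f[0]), len(f[0][0])
    return [[sigmoid(sum(ch[i][j] for ch in f) / len(f)) for j in range(w)]
            for i in range(h)]

def apply_channel(f):
    mc = channel_weights(f)
    return [[[mc[c] * v for v in row] for row in ch] for c, ch in enumerate(f)]

def apply_spatial(f):
    ms = spatial_weights(f)
    return [[[ms[i][j] * v for j, v in enumerate(row)]
             for i, row in enumerate(ch)] for ch in f]

def serial_attention(f):
    """CBAM-style: the spatial weights are computed from the channel-refined map."""
    return apply_spatial(apply_channel(f))

def parallel_attention(f):
    """Parallel form: both branches read the same input and are summed."""
    f4, f5 = apply_channel(f), apply_spatial(f)
    return [[[a + b for a, b in zip(r4, r5)] for r4, r5 in zip(c4, c5)]
            for c4, c5 in zip(f4, f5)]

feature = [[[1.0, 1.0], [1.0, 1.0]] for _ in range(2)]  # 2 channels of 2x2 ones
serial_out = serial_attention(feature)
parallel_out = parallel_attention(feature)
```

In the serial version the second module cannot start until the first has finished, whereas the two branches of the parallel version depend only on the shared input F.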

2.3.3. Adjusting Network Architecture

The ShuffleNet V2 1× network incorporated the BCBAM attention mechanism and the PReLU activation function to enhance its performance. However, these additions increased the complexity of the model. To balance performance against a lightweight architecture, this study modified the Conv5 structural layer by changing its stride from 1 to 2 and setting its dilation rate to 2, thereby implementing dilated convolution. Dilated convolution [29] expands the receptive field to improve network performance. In addition, the number of output channels of Stage4 was reduced from the original 464 to 300. The experimental results confirmed the effectiveness of these architectural adjustments.
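The effect of these adjustments on feature-map size and weight count can be estimated with the usual convolution arithmetic. The sketch below is illustrative: the 3 × 3 kernel, the padding, and the 7 × 7 input are example values, and the 1024 output channels of Conv5 are an assumption taken from the standard ShuffleNet V2 1× configuration rather than from this study.

```python
def effective_kernel(kernel, dilation):
    """Span covered by a dilated kernel along one axis."""
    return dilation * (kernel - 1) + 1

def conv_output_size(size, kernel, stride=1, padding=0, dilation=1):
    """Output length along one axis for a strided, dilated convolution."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1

def pointwise_weights(in_channels, out_channels):
    """Weight count of a 1x1 convolution without bias."""
    return in_channels * out_channels

# A 3x3 kernel with dilation 2 covers a 5x5 window with the same 9 weights.
print(effective_kernel(3, 2))

# Illustrative 7x7 feature map: stride 2 roughly halves each spatial dimension.
print(conv_output_size(7, 3, stride=1, padding=2, dilation=2))
print(conv_output_size(7, 3, stride=2, padding=2, dilation=2))

# Assumed 1024 output channels: narrowing the input from 464 to 300 channels
# removes this many weights from a 1x1 convolution.
saved = pointwise_weights(464, 1024) - pointwise_weights(300, 1024)
print(saved)
```

Dilation enlarges the receptive field without adding weights, while the larger stride and narrower Stage4 output reduce the computation and parameters of the layers that follow.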

2.3.4. Transfer Learning

Transfer learning [30] involves learning features from a pretrained network model and transferring them to one’s own network model, thereby reducing training steps, shortening training time, and decreasing overfitting. Transfer learning is effective in addressing the challenges posed by images of insufficient size or quality within the datasets. Specifically, certain categories of the dataset featured a limited number of images or images with intricate backgrounds, necessitating up to 200 training iterations, which significantly extended the training duration. Consequently, transfer learning emerged as a pivotal component in this study.

2.4. Experimental Setup and Parameters

The experiments in this study were conducted using Python 3.9 and the PyTorch deep learning framework. The operating system was 64-bit Windows 10 Professional Edition running on an Intel(R) Core(TM) i5-10400 CPU @ 2.90 GHz processor (Intel, Santa Clara, CA, USA), coupled with an NVIDIA GeForce RTX 3060 GPU (NVIDIA, Santa Clara, CA, USA). A stochastic gradient descent (SGD) optimizer was utilized during training, with a cross-entropy loss function, a learning rate of 0.01, 200 iterations, and a batch size of 32.

3. Results and Discussion

3.1. Evaluation Metrics

The performance metrics evaluated in this study for pest identification included accuracy, precision, recall, and the F1 score. Furthermore, the complexity of the model was assessed based on the number of model parameters and the volume of floating-point operations.

Accuracy refers to the proportion of correctly predicted samples among all the samples, as shown in Equation (4).

Precision refers to the proportion of samples that were correctly predicted as positive among all samples predicted as positive, as shown in Equation (5).

Recall refers to the proportion of actual positive samples that were correctly predicted as positive, as indicated by Equation (6).

The F1 score is the harmonic mean of precision and recall, as shown in Equation (7).
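Equations (4) to (7) are missing from this text. The standard definitions that match the descriptions above are reproduced here in reconstructed typesetting:

```latex
\begin{align}
\mathrm{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN} \tag{4} \\
\mathrm{Precision} &= \frac{TP}{TP + FP} \tag{5} \\
\mathrm{Recall} &= \frac{TP}{TP + FN} \tag{6} \\
F1 &= \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{7}
\end{align}
```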

where TP represents the number of actual positive samples that were correctly predicted as positive, TN represents the number of actual negative samples correctly predicted as negative, FP represents the number of actual negative samples incorrectly predicted as positive, and FN represents the number of actual positive samples incorrectly predicted as negative.
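For a multi-class problem such as this one, TP, FP, and FN are counted per class from the confusion matrix. The sketch below assumes rows are actual classes and columns are predicted classes; the 3 × 3 matrix is made-up example data, and the study does not state how the per-class values were averaged.

```python
def classification_metrics(cm):
    """Return overall accuracy and per-class (precision, recall, F1).

    cm[r][c] counts samples of actual class r predicted as class c.
    """
    n = len(cm)
    total = sum(map(sum, cm))
    per_class = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                        # actual k, predicted otherwise
        fp = sum(cm[r][k] for r in range(n)) - tp   # predicted k, actually otherwise
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        per_class.append((precision, recall, f1))
    accuracy = sum(cm[k][k] for k in range(n)) / total
    return accuracy, per_class

# Hypothetical confusion matrix for three classes with ten samples each.
cm = [[8, 1, 1],
      [2, 7, 1],
      [0, 1, 9]]
accuracy, per_class = classification_metrics(cm)
print(accuracy, per_class)
```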

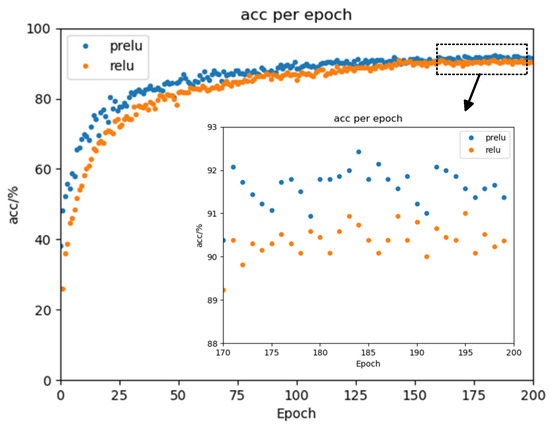

3.2. Replacing the ReLU Activation Function

In this study, the ReLU activation function utilized in the basic unit, downsampling unit, Conv1 structure layer, and Conv5 structure layer was substituted with the PReLU activation function. The experimental findings indicated that the PReLU activation function outperformed the ReLU function in our network model. Figure 9 illustrates the superior performance of the PReLU function in terms of accuracy, demonstrating a consistent and rapid improvement. This suggested that the PReLU function effectively mitigated the issues related to nonupdatable weights caused by inputs in the hard saturation zone, thereby more efficiently preventing “neuron death”. The analysis of the results presented in Table 2 suggested that the PReLU activation function achieved higher accuracy, precision, and recall rates of 92.42%, 92.32%, and 92.25%, respectively. These rates were 1.06%, 0.95%, and 1.11% higher than those achieved by the ReLU activation function, respectively. These findings further validated the suitability of the PReLU activation function in our model.

Figure 9.

Accuracy comparison.

Table 2.

Comparison of activation functions based on experimental results.

3.3. Impact of the Attention Mechanism on Model Performance

To assess the effect of integrating the BCBAM attention mechanism on the performance of our model, we conducted comparative experiments under identical conditions. We added SE, CBAM, and BCBAM attention mechanisms to the original ShuffleNet V2 model, labeled Schemes 1, 2, and 3, respectively, as shown in Table 3. The results indicated that Scheme 3, incorporating the BCBAM attention mechanism, achieved superior accuracy, precision, recall, and F1 scores compared with Schemes 1 and 2. Notably, all three schemes had an equal number of parameters. Although the floating-point operations for Schemes 2 and 3 were marginally higher than those for Scheme 1, Scheme 3 demonstrated the best performance across the evaluated metrics, suggesting a slight superiority of the BCBAM attention mechanism module over the SE and CBAM attention mechanisms in our model.

Table 3.

Comparison results of attention mechanisms.

3.4. Ablation Study

To evaluate the impact of each enhancement on the experimental outcomes, we conducted an ablation study, the details of which are presented in Table 4. In this table, the symbol “✓” indicates the inclusion of improvements to the ShuffleNet V2 network, whereas “✗” denotes no improvements. The integration of the BCBAM attention mechanism into the original ShuffleNet V2 model increased accuracy by 2.41% and the F1 score by 2.59%. However, this integration also led to increases in the number of parameters, floating-point operations, and model size by 0.2 × 10⁶, 1.48 × 10⁶, and 0.81 MB, respectively, thereby increasing the complexity of the network. The application of transfer learning methods did not change the number of parameters, floating-point operations, or model size but resulted in improvements of 0.78% in accuracy and 0.87% in the F1 score. Substituting the ReLU activation function with the PReLU function in the original ShuffleNet V2 model led to an increase of 1.06% in accuracy and 1.04% in the F1 score. Although the number of parameters and model size remained unchanged, the floating-point operations increased by 2 × 10⁶. When combining the BCBAM attention mechanism, transfer learning methods, and PReLU activation function with the original ShuffleNet V2 model, the accuracy improved by 2.48% and the F1 score by 2.6%. These enhancements, however, also increased the model complexity. Following the architectural adjustments, there was a notable decrease in the number of parameters, floating-point operations, and model size.

Table 4.

Ablation experiments.

The SCHNet recognition model proposed in this study achieved an accuracy of 94.48% and an F1 score of 94.38%, which are 3.12 and 3.13 percentage points higher, respectively, than those of the original ShuffleNet V2 model. The model comprised 1.97 × 10⁶ parameters, executed 121.11 × 10⁶ floating-point operations, and had a size of 3.84 MB. Compared to the original ShuffleNet V2 model, the parameters decreased by 0.31 × 10⁶, floating-point operations by 31.6 × 10⁶, and model size by 1.13 MB, corresponding to reductions of 13.6%, 20.7%, and 22.7%, respectively. In summary, the introduction of the BCBAM attention mechanism notably enhanced the model performance, and the architectural adjustments effectively reduced the structural complexity without compromising the accuracy. The SCHNet recognition model in this study is therefore feasible owing to its high precision and lightweight design.

Abbreviations used in Table 4: TL, transfer learning; AAd, architectural adjustment; Acc, accuracy; Par, parameters; MS, model size; ShuNet, ShuffleNet V2.
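The percentage reductions quoted above follow from the reported absolute values. A short check, using only figures stated in the text (the dictionary keys are illustrative names):

```python
# Reported SCHNet values and the reported decreases relative to ShuffleNet V2.
schnet = {"parameters_M": 1.97, "flops_M": 121.11, "size_MB": 3.84}
decrease = {"parameters_M": 0.31, "flops_M": 31.6, "size_MB": 1.13}

reductions = {}
for key in schnet:
    baseline = schnet[key] + decrease[key]   # implied ShuffleNet V2 value
    reductions[key] = round(decrease[key] / baseline * 100, 1)

print(reductions)  # {'parameters_M': 13.6, 'flops_M': 20.7, 'size_MB': 22.7}
```

The implied baseline values are 2.28 × 10⁶ parameters, 152.71 × 10⁶ floating-point operations, and 4.97 MB.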

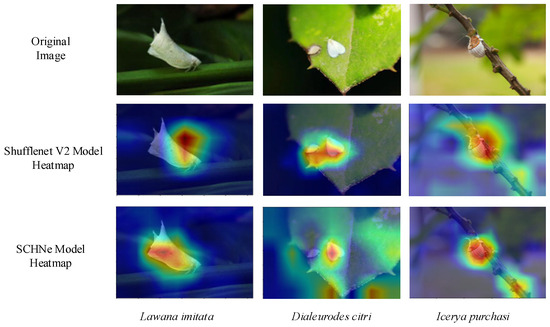

3.5. Heatmaps

To provide a concrete representation of the focal points in our model, we employed Grad-CAM [31] to visualize class activation maps for pest detection. These visualizations were compared with those generated by the original model. The heatmaps in Figure 10 suggested that the ShuffleNet V2 model may display a tendency to either shift its focus areas for pests or cover a wider area. Conversely, the SCHNet model introduced in this study demonstrated a more concentrated focus on pests with fewer notable positional shifts.

Figure 10.

Heat maps.
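For reference, the standard Grad-CAM formulation behind such heat maps is given below. Here A^k is the k-th feature map of the chosen convolutional layer, y^c is the score for class c, and Z is the number of spatial positions. The weight α_k^c is Grad-CAM's own notation and is unrelated to the PReLU slope α used earlier; which layer was visualized in this study is not stated.

```latex
\alpha_k^c = \frac{1}{Z} \sum_{i} \sum_{j} \frac{\partial y^c}{\partial A_{ij}^k},
\qquad
L^c_{\text{Grad-CAM}} = \mathrm{ReLU}\!\left( \sum_k \alpha_k^c A^k \right)
```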

3.6. Comparative Experiments with Different Network Models

This study comprehensively assessed the efficacy of various models in identifying citrus pests and diseases. It selected high-performance networks, including AlexNet, ResNet50, and EfficientNet_b2, from a pool of eight models for detailed comparison. The experimental results are outlined in Table 5. Although the SqueezeNet1_0 model had fewer parameters than the SCHNet model, it was outperformed by SCHNet in terms of floating-point operations. SqueezeNet1_0 recorded an accuracy of 79.97%, a precision of 80.35%, a recall of 78.94%, and an F1 score of 79.64%, which are 14.51, 14.05, 15.41, and 14.74 percentage points lower, respectively, than those of SCHNet. Similarly, the other models also lagged behind SCHNet in these critical performance metrics. These findings underscore the dual advantages of the SCHNet model in enhancing performance and managing network complexity in pest identification tasks.

Table 5.

Comparative experimental results of different models.

Table 6 shows that the large networks from the cited studies have more parameters. The best of them reaches an accuracy of 95.3%, which is 0.82 percentage points higher than that of the SCHNet model, but its parameter count is too high for deployment on mobile devices. Among the cited lightweight networks, the model of Zhang Pengcheng et al. [20] combines high accuracy with a low parameter count. Compared with the model in this article, however, its accuracy is 0.85 percentage points lower and its parameter count is 1.53 M higher. This further illustrates that the SCHNet model compares favorably with the cited lightweight models in terms of both accuracy and parameter count.

Table 6.

Comparison of neural network models and citation models.

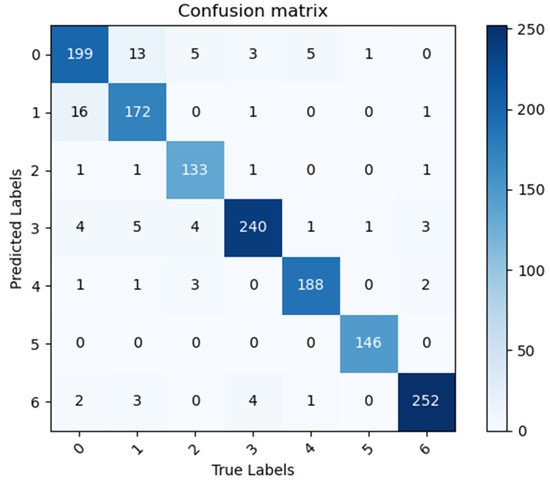

Moreover, to illustrate the remarkable classification performance of the SCHNet model, this study employed the visualization analysis of the confusion matrix to demonstrate its ability to distinctly classify various types of citrus pest images. Each column in the confusion matrix represented the predicted category and each row corresponded to the actual category. The examination of Figure 11 reveals the proficiency of the SCHNet model in extracting features from each type of pest image and its efficient classification capabilities.

Figure 11.

Confusion matrix. 0: Lawana imitata; 1: Geisha distinctissma; 2: Icerya purchasi; 3: Xenocatantops prachycerus; 4: Dialeurodes citri; 5: Papilio xuthus; 6: Panonychus citri.

4. Conclusions

Deep learning has been widely applied in the field of agriculture. Particularly in the cultivation of citrus, its impact is notable for tasks such as detecting crop diseases, insect pests, and the level of fruit maturity. This study involves adjustments and optimizations to the ShuffleNet network architecture to more effectively identify and classify insect pests during the citrus-growing process. The dataset was also expanded to enhance the model’s ability to recognize insect pests under different environmental conditions. Several key improvements were made to the original ShuffleNet network, including changing the activation functions, incorporating an enhanced attention mechanism module, and adjusting the network structure. These improvements significantly increased the accuracy of the model and achieved a more lightweight design. Moreover, by changing the training strategy through transfer learning, not only was the training time reduced but the costs were also lowered. Experiments with comparison tests, ablation studies, and heatmap validations have proven that these improvements indeed enhance the performance of the network. Ultimately, the improved ShuffleNet network model in this paper has a better overall performance with a recognition accuracy of 94.48% and a model size of 3.84 MB, which can be used as a reference for citrus pest recognition and classification techniques.

While prioritizing lightweight computations, the SCHNet pest identification model achieved a high identification rate. The subsequent phase will focus on deploying the model within a WeChat mini-program or mobile app.

Author Contributions

Conceptualization, Y.-N.Y. and C.-L.X.; methodology, C.-L.X. and J.-C.Y.; software, J.-C.Y. and C.-L.X.; validation, Y.-B.M.; formal analysis, S.-Q.D.; investigation, Z.-H.W.; resources, R.-F.Y.; data curation, Y.-B.M.; writing—original draft preparation, C.-L.X.; writing—review and editing, C.-L.X. and J.-C.Y.; visualization, C.-L.X. and J.-C.Y.; supervision, Y.-N.Y.; project administration, C.-L.X.; funding acquisition, Y.-N.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (grant number 42061059), Guilin Technology Application and Promotion Plan (grant number 20210226-2), and China-ASEAN Huawei Artificial Intelligence Innovation Center 2022 subsidy project.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Deng, X.X. Thinking on some problems in the development of fruit industry in China. J. Fruit Sci. 2021, 38, 121–127. (In Chinese) [Google Scholar]

- Qi, C.J.; Gu, Y.M.; Zeng, Y. Research progress of citrus industry economy in China. J. Huazhong Agric. Univ. 2021, 40, 58–69. (In Chinese) [Google Scholar]

- Zhai, Z.Y.; Cao, Y.F.; Xu, H.L.; Yuan, P.S.; Wang, H.Y. Review on key technologies of crop pest identification. Trans. Chin. Soc. Agric. Mach. 2021, 52, 1–18. (In Chinese) [Google Scholar]

- Cai, Y.H.; Guo, J.W.; Li, Y.C.; Chen, F.Y. Citrus disease field image dataset and deep learning model testing. Cent. South Agric. Sci. Technol. 2023, 44, 235–237. (In Chinese) [Google Scholar]

- Huang, L.S.; Luo, Y.W.; Yang, X.D.; Yang, G.J.; Wang, D.Y. Crop disease recognition based on attention mechanism and multi-scale residual network. Trans. Chin. Soc. Agric. Mach. 2021, 52, 264–271. (In Chinese) [Google Scholar]

- Chen, J.; Chen, L.Y.; Wang, S.S.; Zhao, H.Y.; Wen, C.J. Image recognition of garden pests based on improved residual network. Trans. Chin. Soc. Agric. Mach. 2019, 50, 187–195. (In Chinese) [Google Scholar]

- Song, Y.Q.; Xie, X.; Liu, Z.; Zou, X.B. Crop pests and diseases recognition method based on multi-level EESP model. Trans. Chin. Soc. Agric. Eng. 2020, 51, 196–202. (In Chinese) [Google Scholar]

- Wang, M.H.; Wu, Z.X.; Zhou, Z.G. Research on Fine-grain recognition of crop pests and diseases based on improved CBAM. Trans. Chin. Soc. Agric. Mach. 2021, 52, 239–247. (In Chinese) [Google Scholar]

- Ye, Z.H.; Zhao, M.X.; Jia, L. Research on crop disease image recognition with complex background. Trans. Chin. Soc. Agric. Mach. 2021, 52, 118–124+147. (In Chinese) [Google Scholar]

- He, K.M.; Zhang, X.Y.; Ren, S.Q.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 2015 International Conference on Learning Representations, International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015. [Google Scholar]

- Iandola, F.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size. arXiv 2016, arXiv:1602.07360. Available online: https://arxiv.org/abs/1602.07360 (accessed on 24 February 2016).

- Zhang, X.Y.; Zhou, X.Y.; Lin, M.X.; Sun, J. Shufflenet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar]

- Sandler, M.; Howard, A.; Zhu, M.L.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Pan, C.L.; Zhang, Z.H.; Gui, W.H.; Ma, J.J.; Yan, C.X.; Zhang, X.M. Identification of rice pests and diseases by combining ECA mechanism with DenseNet201. Smart Agric. 2023, 5, 45–55. (In Chinese)

- Cen, X. Application of image recognition technology based on deep learning in citrus disease and insect recognition. Equip. Manuf. Technol. 2023, 96–99. (In Chinese)

- Zeba, A.; Sarfaraz, M. Exploring Deep Ensemble Model for Insect and Pest Detection from Images. Procedia Comput. Sci. 2023, 218, 2328–2337.

- Su, H.; Wen, G.; Xie, W.; Wei, M.; Wang, X. Research on Citrus Pest and Disease Recognition Method in Guangxi Based on Regional Convolutional Neural Network Mode. Southwest China J. Agric. Sci. 2020, 33, 805–810.

- Gan, Y.; Guo, Q.W.; Wang, C.T.; Liang, W.J.; Xiao, D.Q.; Wu, H.L. Crop pest identification based on improved EfficientNet model. Trans. Chin. Soc. Agric. Eng. 2022, 38, 203–211. (In Chinese)

- Zhang, P.C.; Yu, Y.H.; Chen, C.W.; Zheng, W.Y.; Li, S.J. Classification and recognition method of citrus pests based on improved MobileNetV2. J. Huazhong Agric. Univ. 2023, 42, 161–168. (In Chinese)

- Adhi, S.; Novanto, Y.; Cahya, R.W. Large scale pest classification using efficient Convolutional Neural Network with augmentation and regularizers. Comput. Electron. Agric. 2022, 200, 107204.

- Zhou, Y.; Fu, C.J.; Zhai, Y.T.; Li, J.; Jin, Z.Q.; Xu, Y.L. Identification of Rice Leaf Disease Using Improved ShuffleNet V2. Comput. Mater. Contin. 2023, 75, 4501–4517.

- Liu, Y.F.; Wei, S.Q.; Huang, H.J.; Lai, Q.; Li, M.S.; Guan, L.X. Naming entity recognition of citrus pests and diseases based on the BERT-BiLSTM-CRF model. Expert Syst. Appl. 2023, 234, 121103.

- Liu, C.Q. Occurrence and control technology of citrus white moth waxhopper. Plant Dr. 2009, 22, 25.

- Fu, S.Q.; Zhou, X.W.; Huang, J.J. Effect of different agents on the control of citrus psyllid. China Agric. Ext. 2020, 36, 69–70.

- Zhang, X.Q.; Kong, X.Y.; Yang, C.; Liu, W.Q.; Yuan, Y.Y.; Zhou, Z.W.; Xia, B.; Xin, T.R. Cloning of fatty acid binding protein gene and response to starvation stress in the citrus alligator mite. J. Appl. Entomol. 2023, 60, 1–10.

- He, K.M.; Zhang, X.Y.; Ren, S.Q.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015.

- Ren, Z.Z.; Liang, K.; Wang, Z.Y.; Zhang, Q.; Guo, Y.X.; Guo, J.Q. Classification of wheat plaques by improved CBAM and MobileNet V2 algorithm. J. Nanjing Agric. Univ. 2023, 1–11. Available online: https://link.cnki.net/urlid/32.1148.S.20231019.1117.002 (accessed on 20 May 2024). (In Chinese).

- Yu, F.; Koltun, V. Multi-Scale Context Aggregation by Dilated Convolutions. arXiv 2015, arXiv:1511.07122. Available online: https://arxiv.org/abs/1511.07122 (accessed on 23 November 2015).

- Ganin, Y.; Lempitsky, V. Unsupervised domain adaptation by backpropagation. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).