Vehicle Activity Dataset: A Multimodal Dataset to Understand Vehicle Emissions with Road Scenes for Eco-Routing

Abstract

1. Introduction

2. Related Work

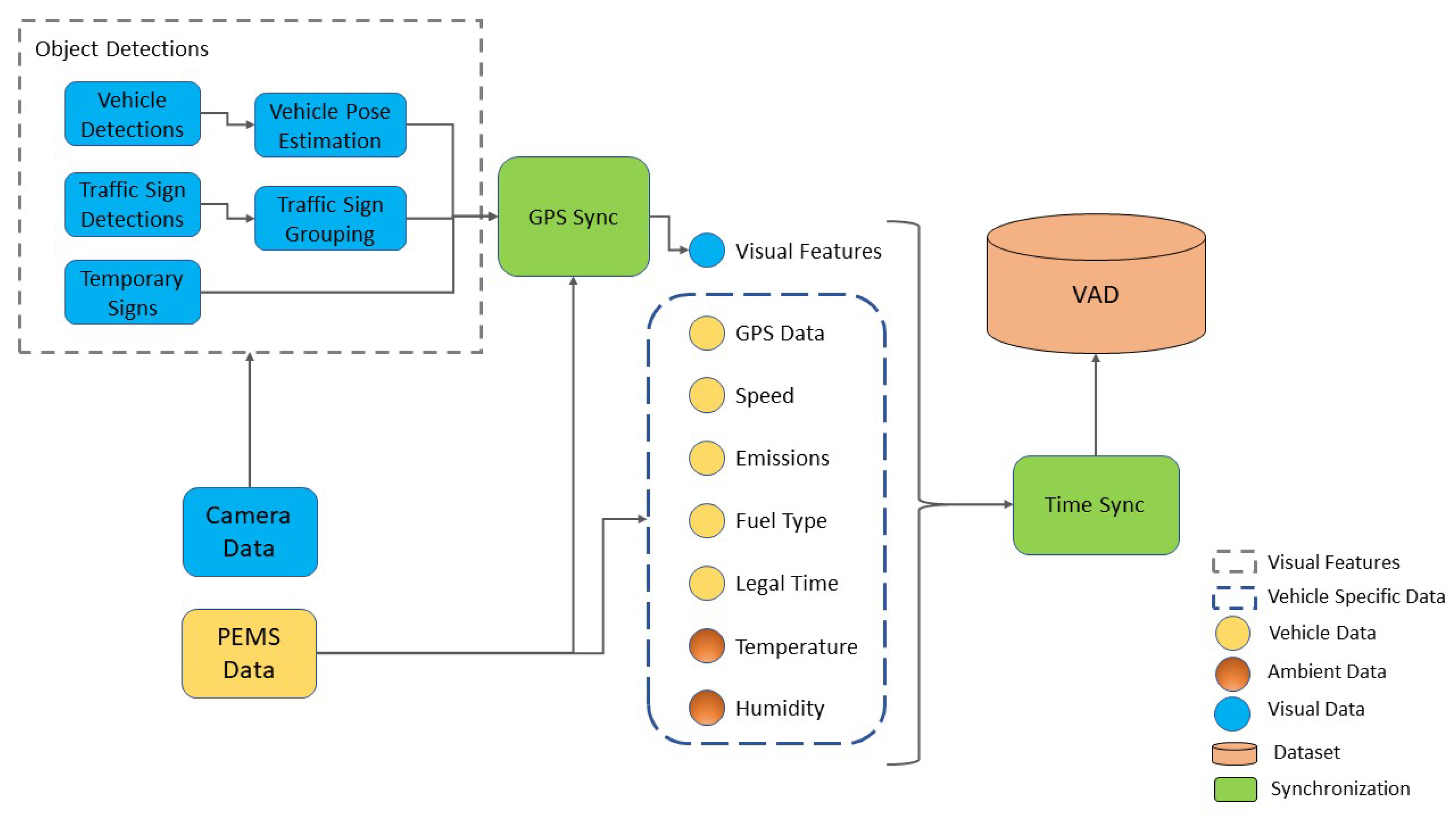

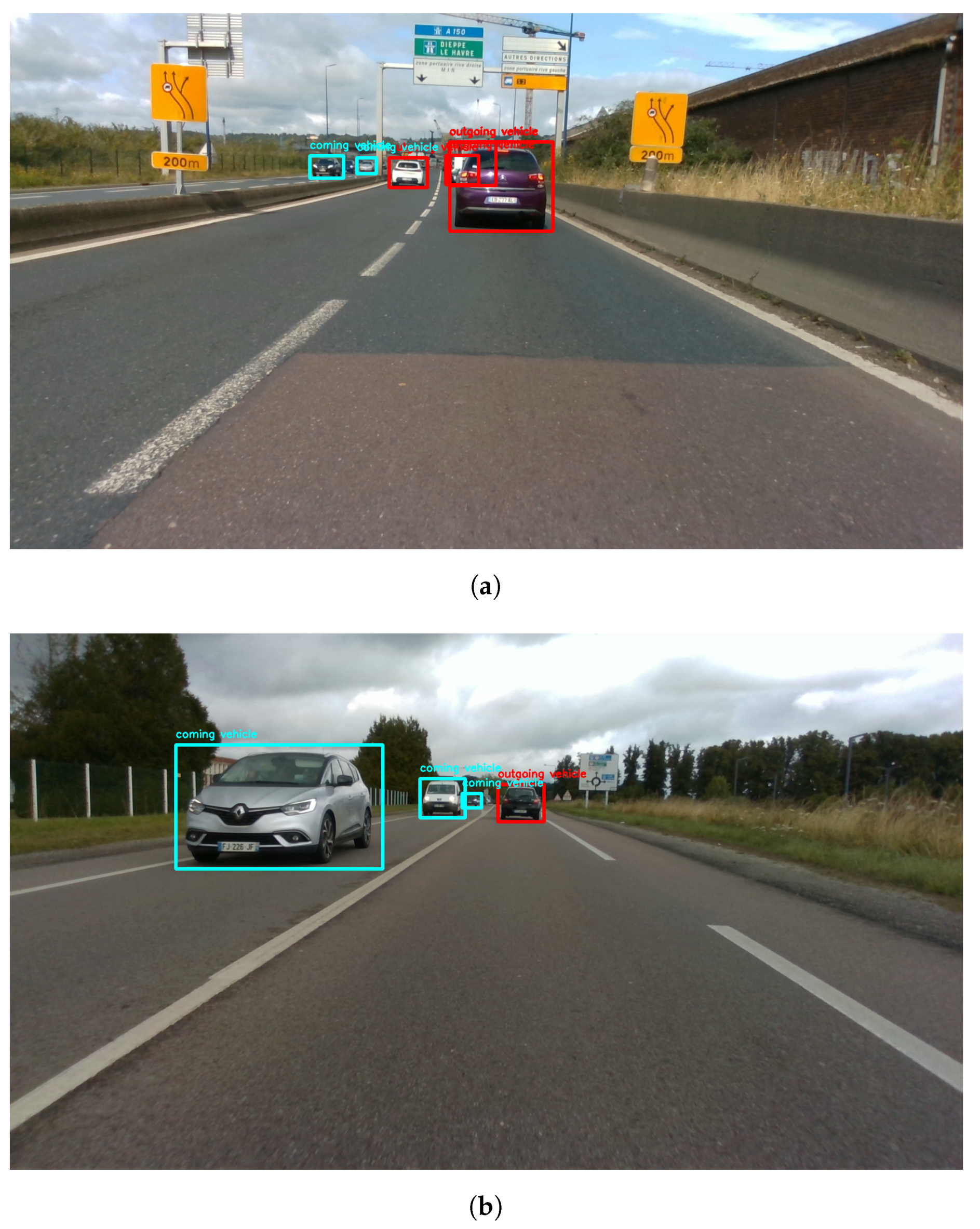

3. Vehicle Activity Dataset

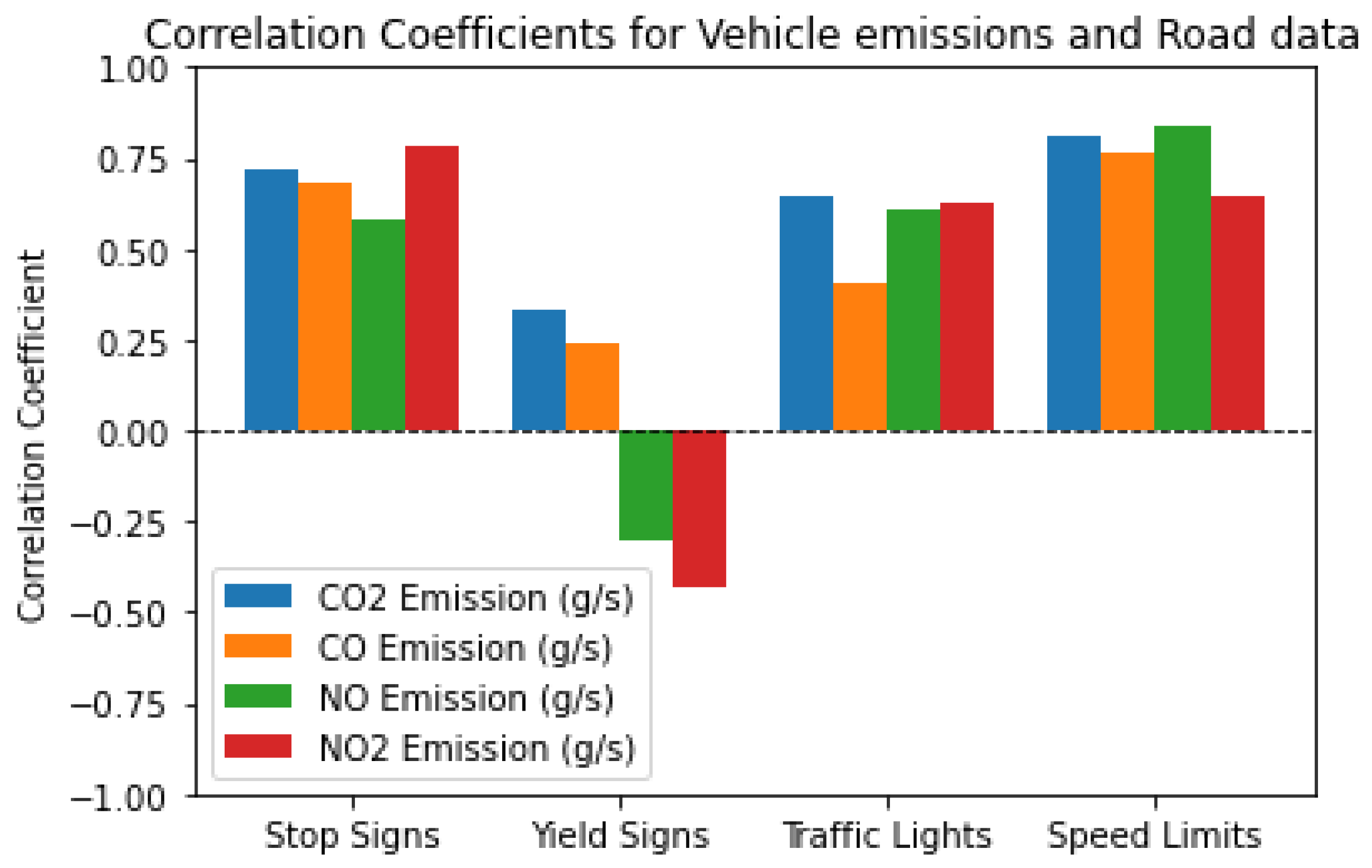

4. Experimental Results and Analysis

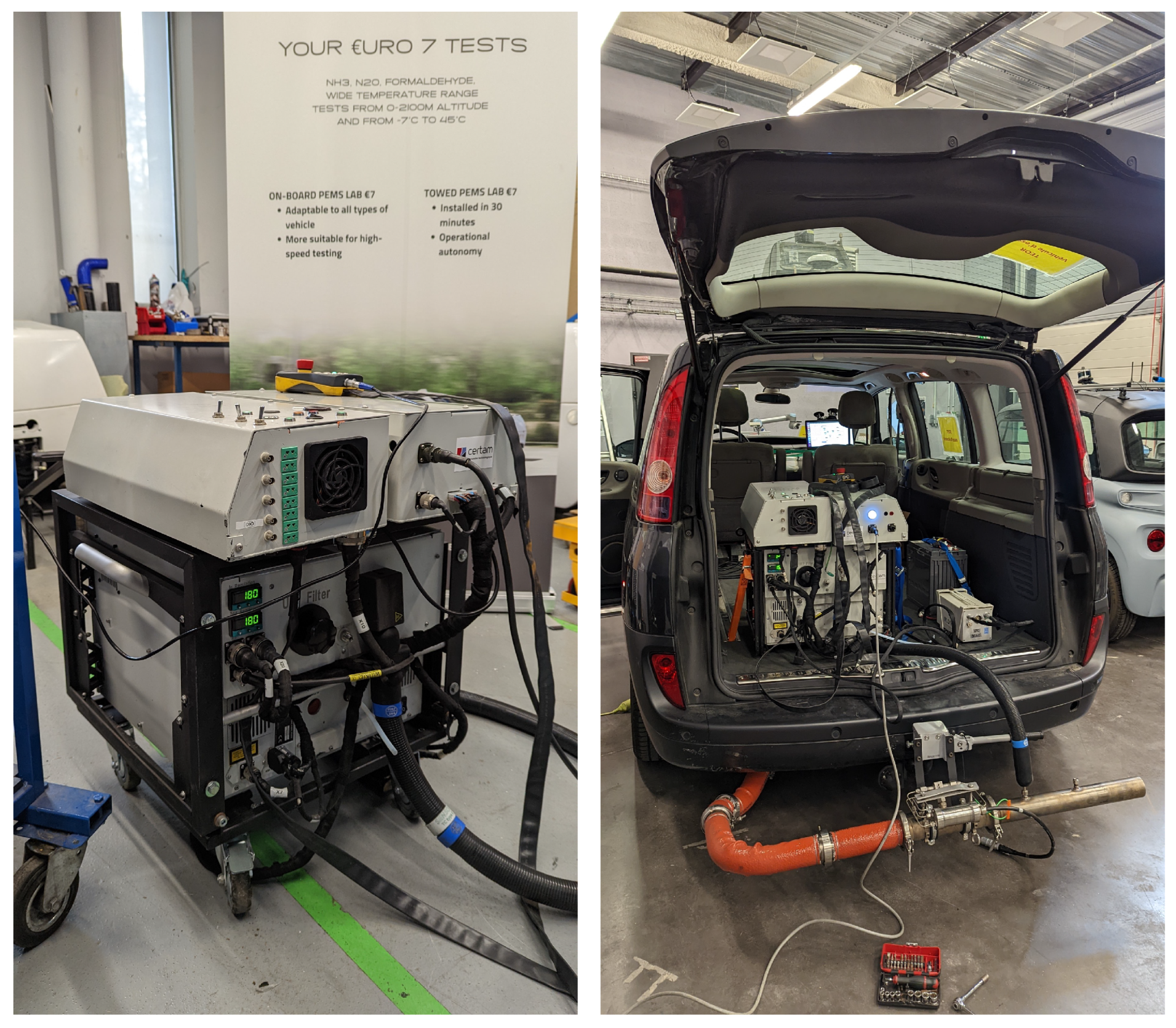

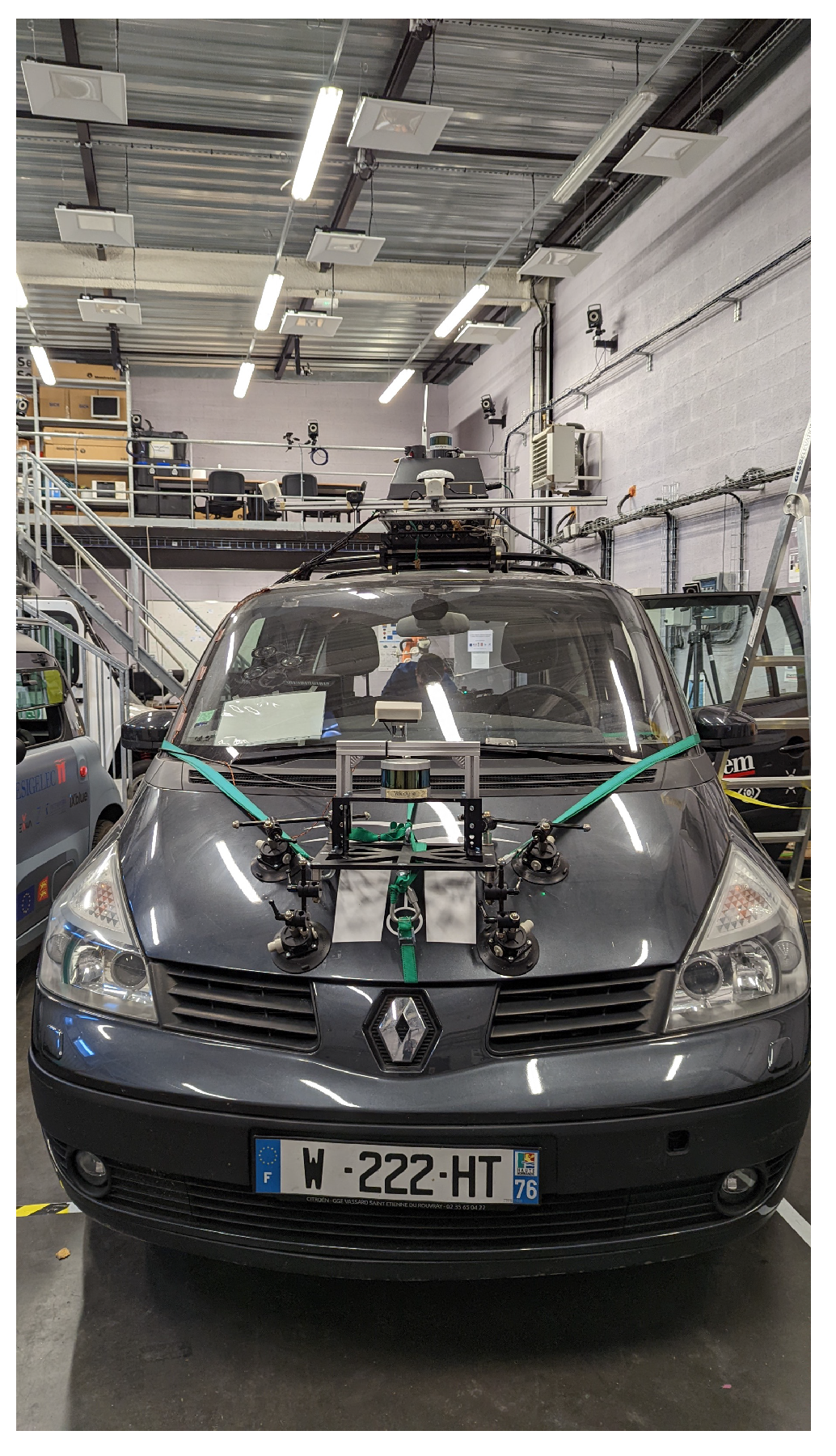

4.1. Data Collection

4.2. Road Data Extraction

4.2.1. Traffic Density Detection

4.2.2. Traffic Light Detection

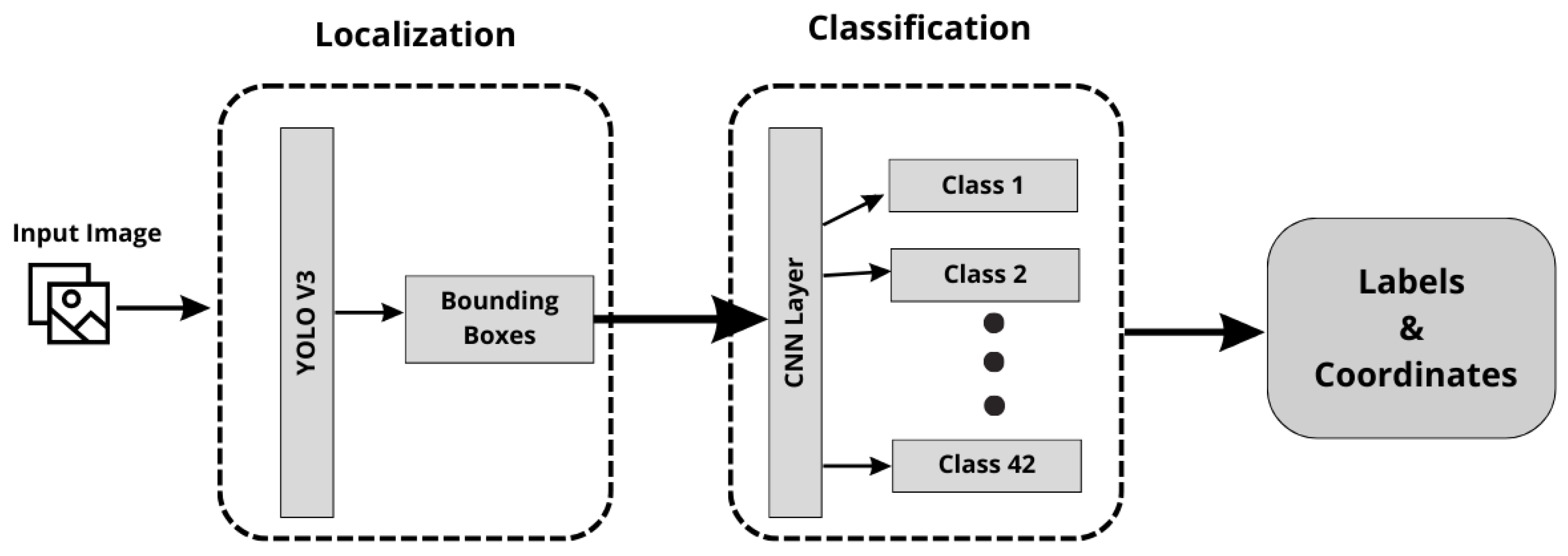

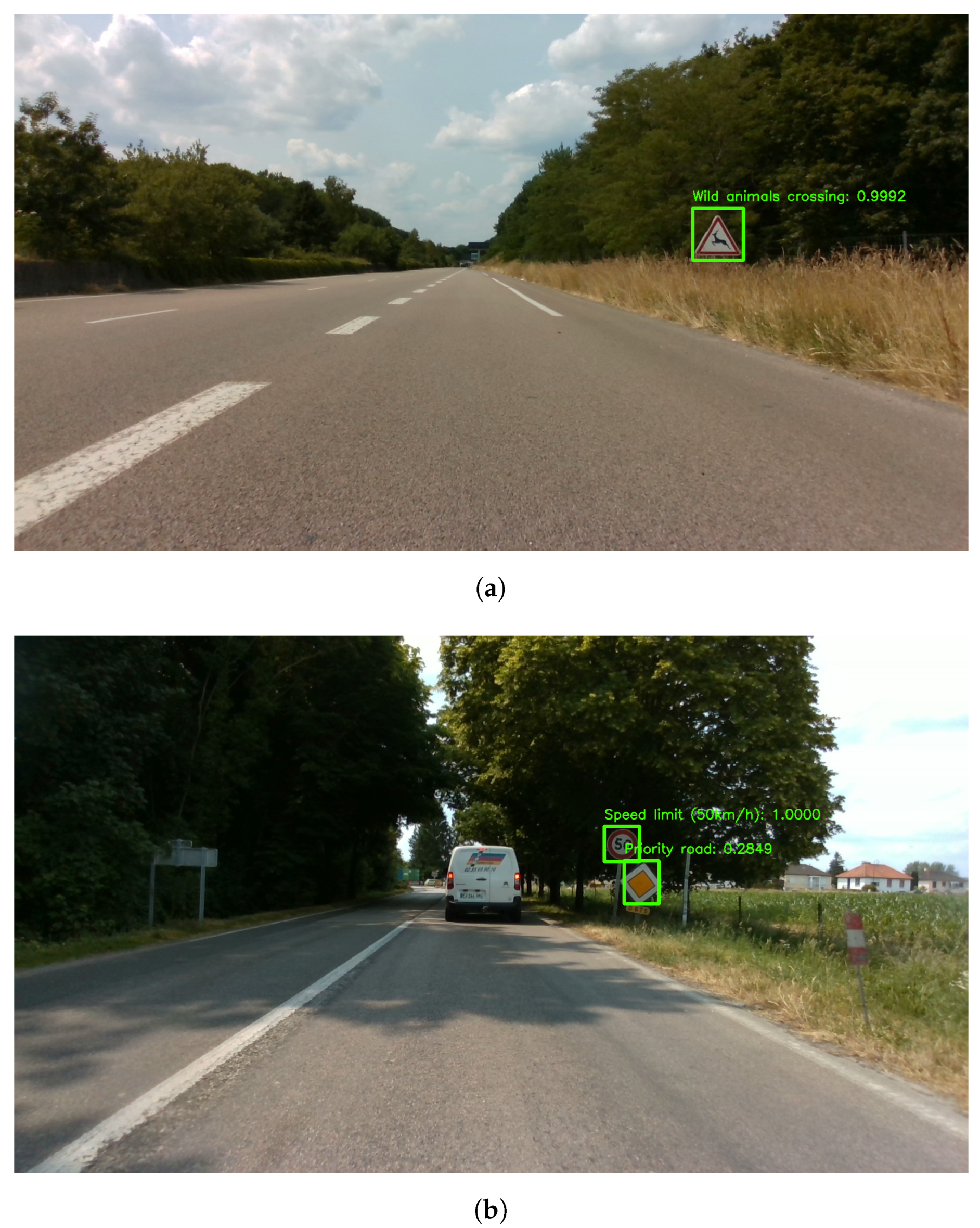

4.2.3. Traffic Signs Detection

4.3. Data Synchronization

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| VAD | Vehicle Activity Dataset |

| ICT | Information and Communication Technologies |

| ITS | Intelligent Transportation Systems |

| EV | Electric Vehicle |

| CNN | Convolutional Neural Networks |

| COCO | Common Objects in Context |

| CRIANN | Centre Régional Informatique et d’Applications Numériques de Normandie (Regional Center for Computer Science and Digital Applications of Normandy) |

| UN | United Nations |

| GHG | Greenhouse Gases |

| VRP | Vehicle Routing Problems |

| AI | Artificial Intelligence |

| PEMS | Portable Emissions Measurement Systems |

| ESRORAD | Esigelec Engineering High School and Segula technologies ROad and RAilway Dataset |

| CERTAM | Centre Régional d’Innovation et de Transfert Technologique (Regional Center for Innovation and Technology Transfer) |

| FPS | Frames Per Second |

| GPS | Global Positioning System |

| GTA | Grand Theft Auto |

| IMU | Inertial Measurement Unit |

| KITTI | Karlsruhe Institute of Technology & Toyota Technological Institute at Chicago vision benchmark suite |

| RGB | Red Green Blue |

| LIDAR | Light Detection and Ranging |

| mAP | Mean Average Precision |

| AP | Average Precision |

| MOT | Multi-Object Tracking |

| NUScenes | NuTonomy Scenes |

| SORT | Simple Online and Realtime Tracking |

| SOTA | State Of The Art |

| SYNTHIA | SYNTHetic Collection of Imagery and Annotations |

| YOLO | You Only Look Once |


| Fuel Type | Variables | Data Size | Minimum | Maximum | Mean | STD |
|---|---|---|---|---|---|---|
| Diesel | CO (g/s) | 28,972 (Rows) | 6.51 × 10 | 16.359 | 3.102 | 3.009 |
| Diesel | CO (g/s) | 28,972 (Rows) | 3.38 × 10 | 0.368 | 1.3 × 10 | 0.01 |
| Diesel | O (g/s) | 28,972 (Rows) | 0.0002 | 17.398 | 3.818 | 2.389 |
| Diesel | NO (g/s) | 28,972 (Rows) | 2.74 × 10 | 0.124 | 0.014 | 0.018 |
| Diesel | NO (g/s) | 28,972 (Rows) | 2.01 × 10 | 0.0198 | 0.0025 | 0.0032 |
| Diesel | Vehicle Speed (km/h) | 28,972 (Rows) | 0 | 136.899 | 62.876 | 39.623 |
| Diesel | Ambient Pressure (kPa) | 28,972 (Rows) | 994 | 1025 | 1007.672 | 35.875 |
| Diesel | Ambient Humidity (%) | 28,972 (Rows) | 29.2 | 100.7 | 67.917 | 15.910 |
| Diesel | Ambient Temperature (K) | 28,972 (Rows) | 290.75 | 301.85 | 293.917 | 2.471 |

| Route | Date (DD/MM/YYYY) | Source | Destination | Distance (km) | Time (min) | Number of Images | PEMS Data |
|---|---|---|---|---|---|---|---|
| Eco-route | 16/06/2023 | ESIGELEC | Bosgouet | 27 | 28 | 6785 | 1334 |
| Eco-route | 16/06/2023 | Bosgouet | ESIGELEC | 26 | 24 | 5087 | 1125 |
| Fastest route | 16/06/2023 | ESIGELEC | Bosgouet | 24 | 27 | 6109 | 1278 |
| Fastest route | 16/06/2023 | Bosgouet | ESIGELEC | 29 | 23 | 4955 | 1752 |
| Eco-route | 24/07/2023 | ESIGELEC | Yvetot | 44 | 46 | 11,224 | 3211 |
| Eco-route | 24/07/2023 | Yvetot | ESIGELEC | 46 | 43 | 11,379 | 2545 |
| Fastest route | 24/07/2023 | ESIGELEC | Yvetot | 43 | 44 | 11,139 | 3132 |
| Fastest route | 24/07/2023 | Yvetot | ESIGELEC | 42 | 39 | 10,144 | 2195 |
| Eco-route | 25/07/2023 | ESIGELEC | Saint-Saens | 42 | 55 | 12,664 | 3819 |
| Eco-route | 25/07/2023 | Saint-Saens | ESIGELEC | 43 | 52 | 12,162 | 3322 |
| Fastest route | 25/07/2023 | ESIGELEC | Saint-Saens | 39 | 48 | 12,001 | 3052 |
| Fastest route | 25/07/2023 | Saint-Saens | ESIGELEC | 39 | 46 | 11,586 | 2984 |
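The trip table above can be turned into a few derived quantities, such as mean speed and the effective image and PEMS sampling rates per trip. The following is a minimal sketch, not the authors' processing code: the names `TRIPS`, `trip_rates`, and `totals_by_route` are hypothetical, and it assumes that the "Time" column is the full recording duration of each trip and that "PEMS Data" is a row count.

```python
from collections import defaultdict

# Rows copied from the trip table above (hypothetical tuple layout):
# (route, source, destination, distance_km, time_min, n_images, n_pems_rows)
TRIPS = [
    ("Eco-route", "ESIGELEC", "Bosgouet", 27, 28, 6785, 1334),
    ("Eco-route", "Bosgouet", "ESIGELEC", 26, 24, 5087, 1125),
    ("Fastest route", "ESIGELEC", "Bosgouet", 24, 27, 6109, 1278),
    ("Fastest route", "Bosgouet", "ESIGELEC", 29, 23, 4955, 1752),
    ("Eco-route", "ESIGELEC", "Yvetot", 44, 46, 11224, 3211),
    ("Eco-route", "Yvetot", "ESIGELEC", 46, 43, 11379, 2545),
    ("Fastest route", "ESIGELEC", "Yvetot", 43, 44, 11139, 3132),
    ("Fastest route", "Yvetot", "ESIGELEC", 42, 39, 10144, 2195),
    ("Eco-route", "ESIGELEC", "Saint-Saens", 42, 55, 12664, 3819),
    ("Eco-route", "Saint-Saens", "ESIGELEC", 43, 52, 12162, 3322),
    ("Fastest route", "ESIGELEC", "Saint-Saens", 39, 48, 12001, 3052),
    ("Fastest route", "Saint-Saens", "ESIGELEC", 39, 46, 11586, 2984),
]


def trip_rates(distance_km, time_min, n_images, n_pems_rows):
    """Derive mean speed and per-second sampling rates for one trip.

    Assumes time_min covers the whole recording (an assumption, see lead-in).
    """
    seconds = time_min * 60
    return {
        "mean_speed_kmh": distance_km / (time_min / 60),
        "images_per_s": n_images / seconds,
        "pems_rows_per_s": n_pems_rows / seconds,
    }


def totals_by_route(trips):
    """Sum distance, time, images and PEMS rows for each route type."""
    totals = defaultdict(
        lambda: {"distance_km": 0, "time_min": 0, "n_images": 0, "n_pems_rows": 0}
    )
    for route, _src, _dst, dist, minutes, images, pems in trips:
        totals[route]["distance_km"] += dist
        totals[route]["time_min"] += minutes
        totals[route]["n_images"] += images
        totals[route]["n_pems_rows"] += pems
    return dict(totals)


if __name__ == "__main__":
    for route, src, dst, *numbers in TRIPS:
        rates = trip_rates(*numbers)
        print(f"{route:13s} {src} -> {dst}: "
              f"{rates['mean_speed_kmh']:.1f} km/h, "
              f"{rates['images_per_s']:.2f} images/s, "
              f"{rates['pems_rows_per_s']:.2f} PEMS rows/s")
    print(totals_by_route(TRIPS))
```

Under these assumptions, the per-trip rates give a quick consistency check between the camera and PEMS streams before any synchronization step, and the per-route totals allow a like-for-like comparison of eco-routes and fastest routes.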

| Group | Class | Number of Detections |
|---|---|---|
| Traffic Signs | Speed Limit | 757 |
| Traffic Signs | Stop Sign | 206 |
| Traffic Signs | Animal Crossing | 95 |
| Traffic Signs | Bicycle Crossing | 209 |
| Traffic Signs | Zebra Crossing | 4846 |
| Traffic Signs | Yield Sign | 982 |
| Traffic Signs | Roundabout Sign | 664 |
| Traffic Signs | School Zone | 21 |
| Temporary Signs | Road work | 192 |
| Traffic Lights | Red and Green | 1089 |
| Traffic Density | Ongoing | 34,891 |
| Traffic Density | Incoming | 21,058 |
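The detection counts in the table above are strongly imbalanced across classes, which matters if they are used to train or evaluate a detector. The sketch below only illustrates the arithmetic: `DETECTIONS`, `group_totals`, and `class_shares` are hypothetical names, and the counts are copied from the table rather than read from the dataset files.

```python
# Detection counts copied from the table above: {group: {class: count}}
DETECTIONS = {
    "Traffic Signs": {
        "Speed Limit": 757,
        "Stop Sign": 206,
        "Animal Crossing": 95,
        "Bicycle Crossing": 209,
        "Zebra Crossing": 4846,
        "Yield Sign": 982,
        "Roundabout Sign": 664,
        "School Zone": 21,
    },
    "Temporary Signs": {"Road work": 192},
    "Traffic Lights": {"Red and Green": 1089},
    "Traffic Density": {"Ongoing": 34891, "Incoming": 21058},
}


def group_totals(detections):
    """Total number of detections per group."""
    return {group: sum(classes.values()) for group, classes in detections.items()}


def class_shares(detections, group):
    """Fraction of a group's detections that falls in each of its classes."""
    counts = detections[group]
    total = sum(counts.values())
    return {name: count / total for name, count in counts.items()}


if __name__ == "__main__":
    print(group_totals(DETECTIONS))
    # Print the traffic-sign classes from most to least frequent.
    shares = class_shares(DETECTIONS, "Traffic Signs")
    for name, share in sorted(shares.items(), key=lambda kv: -kv[1]):
        print(f"{name:17s} {share:.1%}")
```

Within the traffic-sign group, zebra crossings account for roughly 62% of the detections and school zones for well under 1%, so per-class metrics are likely to be more informative than a single aggregate score.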


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jendoubi, F.; Pradeep, V.; Khemmar, R.; Berradia, T.; Rossi, R.; Sibbille, B.; Fourre, J.; Ohayon, A.; Jouni, M. Vehicle Activity Dataset: A Multimodal Dataset to Understand Vehicle Emissions with Road Scenes for Eco-Routing. Appl. Sci. 2024, 14, 338. https://doi.org/10.3390/app14010338
