Abstract

In this paper, based on the idea of “step-by-step accuracy”, a novel multiscale feature-based infrared ship-detection method (MSFISD) is proposed. The proposed method can achieve efficient and effective infrared ship detection in complex scenarios, which may provide assistance in applications such as night surveillance. First, candidate regions (CRs) are extracted from the whole image by extracting the sea–sky line and region of interest (ROI). The real sea–sky line is extracted based on the gradient features enhanced by large-scale gradient operators. The coarse segmentation results are obtained by the optimization method and are then refined by incorporating the edge features of the ship to reduce false alarms and obtain the CRs. Second, by analyzing the shape features of ships, the feature quantity is established, and the ships in CRs are finally accurately segmented. Experimental results demonstrate that compared with the other five methods, the proposed method has higher detection accuracy with a lower false-alarm rate and performs better in complex sea scenarios.

1. Introduction

Night vision imaging technology serves as a critical component in a wide range of contemporary optics-based applications, including night surveillance, assisted driving, and underwater detection. Its significance stems from its ability to enable effective visual perception in low-light environments, for example, in nighttime marine scenarios where the detection of ships is undertaken. The precise identification of ships in sea scenarios is of great significance for marine resource monitoring and coastguard enforcement, among other applications [1,2]. Infrared cameras, known for their advantages of long detection ranges and all-weather functionality, are widely utilized in various applications. However, the presence of buildings, reefs, islands, and sea clutter in complex sea surface scenarios, as well as limited resolution, poses significant challenges to the task of ship detection. Infrared image processing plays a paramount role in night vision imaging technology, enabling the extraction of valuable information from infrared images. It employs sophisticated algorithms and techniques to enhance image quality, detect objects, and facilitate object recognition [3,4,5].

In recent years, researchers have explored various methods for target detection in complex sea scenarios. Existing methods can be broadly divided into four categories: background-suppression based [6,7,8,9], active-contour based [10,11], feature-analysis based [12,13,14], and deep-learning based [15,16,17]. The background-suppression-based method assumes that the grayscale distribution of the sea background is stable, so that the sea background can be modeled and estimated using different statistical models. The foreground image is then obtained by subtracting the background estimate from the original image. However, this method is not suitable for complex sea scenarios with a large amount of sea-clutter interference. The active-contour-based method establishes an energy functional model, first obtains the initial contour of the target, and then iterates until the contour converges to the real boundary. This method introduces the contour features of the ship to assist segmentation. However, in complex sea surface backgrounds, disturbances such as islands, reefs, and clutter have contour characteristics similar to those of the target, which may lead to false alarms; in addition, the selection of the initial contour and the number of iterations also affect the detection accuracy.

The key to the feature-analysis method is to select suitable features: the features of the target itself should be prominent and the difference between the target and the background noticeable, while the difficulty and computational cost of feature extraction must also be taken into account. In recent years, the deep-learning method has been widely studied, and many detection algorithms have been proposed. Such methods face the problem of insufficient samples, and the number of network layers and the parameter settings directly affect the final detection accuracy and efficiency. In addition, a common issue in the existing methods is that it is hard to ensure the efficiency and real-time performance of target detection in global images; this remains an ongoing research challenge.

In view of the above problems, this paper proposes an infrared ship-detection method based on the idea of “step-by-step accuracy”, while considering various characteristics of the sea background and ships. First, CRs are extracted from the whole image by extracting the sea–sky line and ROI, and then, the final ship target extraction is accomplished by incorporating the shape features of the ship. The main purpose of this study is to improve the performance of infrared ship detection in complex scenarios, ensuring both effectiveness and high processing efficiency. The rest of this paper is organized as follows. In Section 2, the details of the proposed sea–sky line extraction and feature-based target extraction method are described. In Section 3, the experimental results, including the comparisons between the proposed method and the other five methods, are presented. Finally, the conclusion is presented in Section 4.

2. Materials and Methods

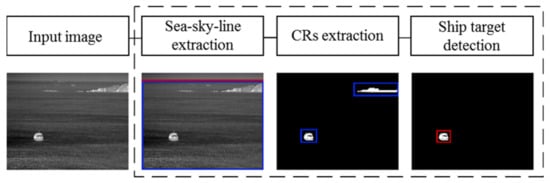

The detection framework of the proposed method is illustrated in Figure 1. We first extract the sea–sky line from the input image and then extract the CRs in the region below the sea–sky line. Finally, taking into account the distinct differences in shape features between ships and interferences such as islands and clutter, a set of feature-based thresholds is adopted to separate targets from CRs.

Figure 1.

Framework of the proposed method. The red line shows the position of the extracted sea–sky line, and the blue bounding boxes represent CRs where ships might exist.

2.1. Multiscale Gradient Feature-Based Sea–Sky Line Extraction

In the images captured by forward-looking infrared cameras, ships are typically located below the sea–sky line. Therefore, the extraction of the sea–sky line in the image can exclude the interference from the sky and shore background and simultaneously reduce the area to be detected from the global image to the area below the sea–sky line. The sea surface exhibits a different radiation intensity and depth of field from the sky and shore background. As a result, the grayscale difference between the areas above and below the sea–sky line in the infrared image is distinct and can be characterized using gradient features. However, due to the low spatial resolution of infrared images, neighboring pixels exhibit a certain degree of correlation. Consequently, the sea–sky line may not be sufficiently prominent when calculating the gradient image using a small-scale gradient operator. In our method, interlacing in both rows and columns is used to increase the scale of gradient calculations, which can be described as Equation (1):

where gradx(i, j), grady(i, j) represent the gradient value in the horizontal and vertical direction, respectively. f(i, j) is the intensity value of the pixel (i, j) in the input image.
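As a rough illustration of the large-scale gradient idea, the following minimal sketch computes gradients between pixels that are several columns or rows apart instead of between immediate neighbours. The exact form of Equation (1) is not reproduced here, so the absolute-difference form, the assignment of d1 to the horizontal direction and d2 to the vertical direction, and the function name `large_scale_gradients` are all assumptions made for illustration.

```python
def large_scale_gradients(img, d1=3, d2=4):
    """Return (gradx, grady) for a 2-D list of gray values.

    Assumed form (not taken from the paper's Equation (1)):
      gradx(i, j) = |f(i, j + d1) - f(i, j)|   (pixels d1 columns apart)
      grady(i, j) = |f(i + d2, j) - f(i, j)|   (pixels d2 rows apart)
    Pixels without a partner inside the image keep a gradient of 0.
    """
    h, w = len(img), len(img[0])
    gradx = [[0] * w for _ in range(h)]
    grady = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            if j + d1 < w:
                gradx[i][j] = abs(img[i][j + d1] - img[i][j])
            if i + d2 < h:
                grady[i][j] = abs(img[i + d2][j] - img[i][j])
    return gradx, grady


# Toy image: a dark "sky" (10) above a brighter "sea" (50).
toy = [[10] * 5 for _ in range(3)] + [[50] * 5 for _ in range(3)]
gx, gy = large_scale_gradients(toy, d1=1, d2=2)
```

With a larger spacing, a gradual transition that spans several correlated pixels still produces a large difference, which is the motivation given above for enlarging the operator scale.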

Figure 2 presents the large-scale gradient maps, for which d1 and d2 are set to 3 and 4, respectively. It is evident that the sea–sky lines in the vertical gradient images are significantly enhanced, appearing as long, approximately horizontal straight lines that traverse the entire image. Consequently, the vertical gradient images can be accumulated along the horizontal direction to highlight the position of the sea–sky line, which can be represented as Equation (2):

where width represents the number of the columns in the original image.

Figure 2.

Large-scale gradient map. (a) Original image. (b) Horizontal gradient map. (c) Vertical gradient map.

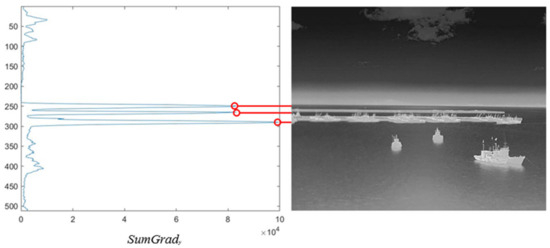

According to Equation (2), the results of SumGrady are presented in Figure 3, in which three local maximum values are highlighted. These results show that when there is more interference near the actual sea–sky line, the value of SumGrady at the location of the real sea–sky line may not be the highest but is still one of the three peaks. Therefore, SumGrady can serve as an effective basis for extracting the candidate positions of the sea–sky line. The extraction process for Pos(i), the candidate position of the sea–sky line, is shown in Figure 4.
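The row-wise accumulation and the selection of candidate positions can be sketched as follows. The accumulation follows the description of Equation (2) (summing the vertical gradient over all columns of a row). The peak-picking rule is only a stand-in for the flowchart in Figure 4, which is not reproduced in the text: taking the three strongest local maxima is an assumption, and the function names are hypothetical.

```python
def sum_grad_y(grady):
    """Accumulate the vertical gradient along each row (one value per row)."""
    return [sum(row) for row in grady]


def candidate_rows(sum_grady, k=3):
    """Return the row indices of the k strongest local maxima, in row order.

    Assumption: a local maximum is a row whose accumulated gradient is
    greater than the row above it and not smaller than the row below it.
    """
    peaks = [
        i for i in range(1, len(sum_grady) - 1)
        if sum_grady[i] > sum_grady[i - 1] and sum_grady[i] >= sum_grady[i + 1]
    ]
    peaks.sort(key=lambda i: sum_grady[i], reverse=True)
    return sorted(peaks[:k])


profile = [0, 5, 1, 9, 2, 7, 3, 4, 0]
candidates = candidate_rows(profile)  # rows 1, 3 and 5 hold the three largest peaks
```

Keeping several candidates rather than only the global maximum reflects the observation above that the real sea–sky line may not produce the highest peak.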

Figure 3.

Result of SumGrady.

Figure 4.

Flowchart of the candidate positions for extraction of the sea–sky line.

The candidate positions derived from the previous processes may contain both cloud and clutter disturbances as well as the real sea–sky line, and so, further screening is necessary. As illustrated in Figure 2, cloud interference in the original image typically exists in the top area, while clutter interference occurs in the bottom area, with the real sea–sky line located in between. The grayscale changes more dramatically above the clouds and below the sea clutter, while it changes relatively gently above and below the sea–sky line. Therefore, a criterion for discriminating the sea–sky line can be proposed: the gradient values above the real sea–sky line are smaller, while the gradient values below it are larger. In contrast, the gradient values are larger above the cloud and smaller below it. Additionally, the gradient values are larger both above and below the sea clutter.

We select the areas above and below the candidate locations within the ω-rows range to calculate the gradient sum. Specifically, g1 and g2 denote the sum of the horizontal gradient, while g3 and g4 represent the sum of the vertical gradient, which can be written as Equations (3) and (4):

Then, two feature quantities for measuring the gradient features of the area near the candidate positions are defined as follows:

where f1 and f2 represent the ratio of the horizontal gradient sum of the region below and above the candidate location and the ratio of the vertical gradient sum, respectively. Larger values of f1 and f2 indicate greater gradient differences between the regions above and below the candidate positions. According to the aforementioned criterion, the calculated f1 and f2 at the real sea–sky line position should be the largest of all candidate positions, which can be represented as Equation (6):
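A minimal sketch of this screening step is given below, under several stated assumptions. The text defines f1 and f2 as below-to-above ratios of the horizontal and vertical gradient sums within ω rows of a candidate, but Equations (3)–(6) are not reproduced here, so the exact row ranges, the small constant `eps` that guards against division by zero, and the use of the product f1 · f2 as a single score are illustrative choices rather than the authors' formulation.

```python
def band_sum(grad, top, bottom):
    """Sum gradient values over rows top..bottom-1, clipped to the image."""
    top, bottom = max(top, 0), min(bottom, len(grad))
    return sum(sum(grad[i]) for i in range(top, bottom))


def select_sea_sky_line(gradx, grady, candidates, omega=5, eps=1e-6):
    """Pick the candidate row with the largest below/above gradient contrast."""
    best_row, best_score = None, float("-inf")
    for row in candidates:
        gx_above = band_sum(gradx, row - omega, row)
        gx_below = band_sum(gradx, row, row + omega)  # assumption: band starts at the candidate row
        gy_above = band_sum(grady, row - omega, row)
        gy_below = band_sum(grady, row, row + omega)
        f1 = gx_below / (gx_above + eps)  # horizontal-gradient ratio
        f2 = gy_below / (gy_above + eps)  # vertical-gradient ratio
        score = f1 * f2                   # assumption: combine both ratios by product
        if score > best_score:
            best_row, best_score = row, score
    return best_row


# Toy gradients: calm rows (1) above row 3, busy rows (10) in rows 3..6, calm below.
grad = [[v, v] for v in [1, 1, 1, 10, 10, 10, 10, 1, 1, 1]]
chosen = select_sea_sky_line(grad, grad, candidates=[3, 7], omega=2)
```

In the toy example, row 3 has small gradients above and large gradients below, matching the criterion stated for the real sea–sky line, whereas row 7 shows the opposite pattern.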

2.2. Candidate-Region Extraction Based on Grayscale and Edge Features

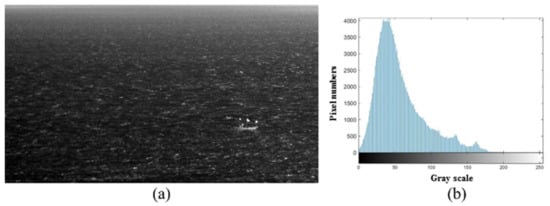

The images of the sea surface area excluding the sky and land are shown in Figure 5a, where the sea surface background occupies most of the pixels in the image and the ship target area is relatively small. There is a significant difference in grayscale distribution between the ship and the background. As is shown in Figure 5b, the histogram of the image of the sea surface area exhibits the distinct characteristics of a unimodal distribution, and the pixels of the sea surface background occupy most of the area.

Figure 5.

(a) Image and (b) histogram of the sea surface area.

The maximum entropy method [18] calculates the segmentation threshold based on the intra-class grayscale probability of pixels. It can determine a reasonable segmentation threshold for a histogram with a unimodal distribution and improves the segmentation when there is significant contrast between the target and the background. However, this method involves multiple logarithmic operations when calculating the entropy values of the foreground and background, resulting in a time complexity of O(N²). When the image has 256 gray levels, a total of 256² = 65,536 logarithmic operations are required. According to fractal theory, both entropy and correlation can be used to measure images; however, the time complexity of the latter is only O(N), meaning that for 256 gray levels, only 256 × 2 = 512 logarithmic operations are required. Therefore, correlation is employed here in place of entropy to determine the coarse segmentation [19].

Assuming that the sea surface area image has the number of rows H and the number of columns W and that the number of occurrences of the grayscale i in the histogram is hi, the probability pi of each grayscale can be calculated as:

Using the threshold T to split the image into background class B and foreground class O, the probability of foreground class and background class can be written as:

Supposing that X = {x0, x1, x2,…} is a set of discrete random variables where pi represents the probability of the variable xi, the correlation of X can be defined as

The sum of the correlations of the foreground and background after the segmentation, and the optimal segmentation threshold selection criterion, can be written as:

Therefore, a coarsely segmented image can be obtained by using the following Equation (13):
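To make the threshold selection concrete, the following minimal sketch implements a correlation-based criterion in the style of Yen et al. [19], where the correlation of a distribution is taken as C(X) = −ln Σ pᵢ² and the threshold maximizes the sum of the foreground and background correlations. This is assumed to match the criterion described above, but the paper's own equations are not reproduced here; the function names and the rule "foreground = gray value greater than T" (which presumes targets brighter than the sea) are illustrative assumptions.

```python
import math


def correlation_threshold(hist):
    """Return the gray level T maximizing the total correlation of both classes."""
    total = sum(hist)
    p = [h / total for h in hist]
    best_t, best_tc = 0, float("-inf")
    for t in range(len(p) - 1):
        pb = sum(p[: t + 1])   # probability of the background class (levels <= t)
        po = sum(p[t + 1:])    # probability of the foreground class (levels > t)
        if pb <= 0 or po <= 0:
            continue           # one class is empty; the criterion is undefined
        cb = -math.log(sum((pi / pb) ** 2 for pi in p[: t + 1]))
        co = -math.log(sum((pi / po) ** 2 for pi in p[t + 1:]))
        if cb + co > best_tc:
            best_t, best_tc = t, cb + co
    return best_t


def coarse_segment(img, t):
    """Binary map: 1 where the gray value exceeds the threshold, else 0."""
    return [[1 if v > t else 0 for v in row] for row in img]


hist = [5, 5, 5, 5, 0, 0, 1, 1]  # large dark background, few bright pixels
t = correlation_threshold(hist)
mask = coarse_segment([[0, 1, 6], [7, 2, 3]], t)
```

Only two logarithms are evaluated per candidate threshold, which is consistent with the roughly 2 × 256 logarithmic operations quoted above for 256 gray levels. The inner sums are recomputed for clarity; running sums would avoid that repeated work.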

Since the coarse segmentation based on Equations (11)–(13) only takes grayscale features into consideration and ignores spatial information, it may leave significant clutter and isolated noise in the segmented image, as shown in Figure 6b. Because ships in sea surface scenarios are generally closed connected regions with discernible edge contours, edge features can be adopted to further improve the segmentation results. Here, we choose the Roberts operator (Equation (14)), which computes the cross gradient and extracts the edge profile in the sea surface image; the edge feature image is calculated according to Equation (15):

Figure 6.

Process of extracting the CRs and the results. (a) Input preprocessed image. (b) Coarse-segmented image. (c) Binary edge feature map BWMC. (d) ROI map. (e) CRs map.

In order to remove false edges, the image IEdge needs to be segmented using a threshold, which is calculated by using Equation (16):

where Mean and Std represent the mean value and standard deviation, respectively. The mean value is used as the basis of the threshold, while the standard deviation is able to characterize the number of edges present in the image, and finally, a control parameter ρ is employed to achieve adaptive segmentation. The final binary edge feature image can be calculated as:
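The edge extraction and adaptive thresholding described above can be sketched as follows. The Roberts cross differences are standard, but combining them as |gx| + |gy| and forming the threshold as Mean + ρ · Std are assumptions, since Equations (15)–(17) are not reproduced in the text; the function names are hypothetical.

```python
import math


def roberts_edges(img):
    """Edge strength from the two Roberts cross differences (|gx| + |gy|)."""
    h, w = len(img), len(img[0])
    edge = [[0.0] * w for _ in range(h)]
    for i in range(h - 1):
        for j in range(w - 1):
            gx = img[i][j] - img[i + 1][j + 1]   # main-diagonal difference
            gy = img[i][j + 1] - img[i + 1][j]   # anti-diagonal difference
            edge[i][j] = abs(gx) + abs(gy)
    return edge


def binarize_edges(edge, rho=1.0):
    """Keep pixels whose edge strength exceeds Mean + rho * Std (assumed form)."""
    vals = [v for row in edge for v in row]
    mean = sum(vals) / len(vals)
    std = math.sqrt(sum((v - mean) ** 2 for v in vals) / len(vals))
    th = mean + rho * std
    return [[1 if v > th else 0 for v in row] for row in edge]


img = [[0, 0, 0, 0],
       [0, 9, 9, 0],
       [0, 9, 9, 0],
       [0, 0, 0, 0]]
bw_edge = binarize_edges(roberts_edges(img), rho=1.0)
```

A larger ρ raises the threshold and keeps only the strongest edges, which is how the control parameter trades off missed edges against false edges.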

On the basis of the above results, we define here edge intensity En as a feature quantity to measure the edge features in the local area: the window of r × r size is moved in steps of r/2 across the binary edge feature map BWEdge, from the top left to the bottom right, and the original image can be decomposed into image patches. Then, the number of non-zero pixels in each image block is counted as the edge intensity En according to Equation (19):

where BWn is the nth local image patch and #{} represents a counting operation.
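A minimal sketch of the sliding-window count is shown below. The window size r and the step of r/2 follow the description above; the handling of image borders (windows are only placed where they fit entirely inside the image) and the function name `edge_intensity` are assumptions.

```python
def edge_intensity(bw, r):
    """Count non-zero pixels in r x r windows moved in steps of r // 2.

    Returns a list of (top, left, count) tuples, scanned from the top left
    to the bottom right. Assumption: windows that would extend past the
    image border are not generated.
    """
    step = max(r // 2, 1)
    h, w = len(bw), len(bw[0])
    patches = []
    for top in range(0, max(h - r, 0) + 1, step):
        for left in range(0, max(w - r, 0) + 1, step):
            count = sum(
                1
                for i in range(top, min(top + r, h))
                for j in range(left, min(left + r, w))
                if bw[i][j] != 0
            )
            patches.append((top, left, count))
    return patches


bw = [[1, 1, 0, 0],
      [1, 1, 0, 0],
      [0, 0, 0, 0],
      [0, 0, 0, 0]]
patches = edge_intensity(bw, r=2)
```

Because consecutive windows overlap by half their size, an edge that straddles a window boundary is still fully covered by a neighbouring window.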

Compared with the isolated noise points and residual sea surface clutter, ships tend to exhibit distinct edge contour characteristics, that is, they have large values of En. Therefore, the value of the edge intensity can be used to determine whether there is a candidate target in the local image patch:

After judging the edge intensity of all local image patches, those marked as 1 are clustered and remapped into the image matrix to obtain ROI images:

The ROI images and the coarse-segmented images (based on Equation (13)) are then logically combined to obtain the final CRs:
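The remapping and combination steps can be illustrated with the sketch below. It assumes that a patch is kept when its edge intensity reaches a threshold (named `en_threshold` here, a hypothetical parameter), that kept patches are simply painted back into an image-sized mask without an explicit clustering step, and that "logically combined" means a pixel-wise AND; none of these details are confirmed by the equations, which are not reproduced in the text.

```python
def roi_mask(shape, patches, r, en_threshold):
    """Paint every patch whose edge intensity reaches the threshold into a mask.

    `patches` is a list of (top, left, count) tuples, where count is the
    number of edge pixels found in the r x r window at (top, left).
    """
    h, w = shape
    roi = [[0] * w for _ in range(h)]
    for top, left, count in patches:
        if count >= en_threshold:
            for i in range(top, min(top + r, h)):
                for j in range(left, min(left + r, w)):
                    roi[i][j] = 1
    return roi


def candidate_regions(roi, coarse):
    """Pixel-wise AND of the ROI mask and the coarse segmentation (assumed)."""
    return [[a & b for a, b in zip(r1, r2)] for r1, r2 in zip(roi, coarse)]


roi = roi_mask((2, 4), [(0, 0, 4), (0, 2, 0)], r=2, en_threshold=3)
coarse = [[1, 0, 1, 0],
          [1, 1, 1, 1]]
crs = candidate_regions(roi, coarse)
```

In this toy case, bright pixels in the right half of the coarse segmentation are discarded because no edge-rich patch supports them, which is the intended effect on clutter and isolated noise.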

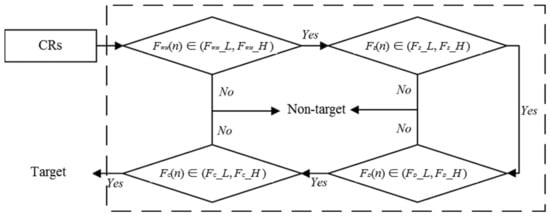

2.3. Ship Targets Extraction Based on Shape Features

In complex sea surface scenarios, apart from the ships, there might still exist a series of interferences in the extracted CRs, such as sea clutter, islands, reefs, and buildings along the shore, which can lead to false alarms. Therefore, it is necessary to distinguish all the CRs to accurately detect the real ship targets. Shape is one of the most important features of infrared targets, and ship targets tend to have more regular shapes in the sea surface scene. In contrast, interferences are more random and have varied shape characteristics. Here, we choose aspect ratio FWH, compactness Fz, duty cycle FD, and signal-to-clutter ratio FC as the shape characteristics to measure the ships:

where Width and Height are the width and height of the CR, Perimeter and Area represent its perimeter and area, Rectangle is the area of the bounding rectangle of the CR, and μT and μB are the average gray values of the target area and background area, respectively, with σB being the standard deviation of the background area, as shown in Figure 7.
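The four shape features can be computed from a binary candidate mask and its gray-level slice as in the sketch below. Since Equation (23) is not reproduced in the text, the formulas used here are assumptions based on common definitions: F_WH = Width / Height, F_z = Perimeter² / Area, F_D = Area / Rectangle, and F_C = |μT − μB| / σB. The perimeter is approximated by the number of region pixels that touch the outside, and the background is taken to be all non-target pixels of the slice.

```python
import math


def shape_features(mask, img):
    """Shape features of one candidate region.

    `mask` is a binary 2-D list (1 = target) and `img` the matching gray
    values. Requires at least one target pixel and one background pixel.
    """
    h, w = len(mask), len(mask[0])
    pixels = [(i, j) for i in range(h) for j in range(w) if mask[i][j]]
    rows = [i for i, _ in pixels]
    cols = [j for _, j in pixels]
    width = max(cols) - min(cols) + 1
    height = max(rows) - min(rows) + 1
    area = len(pixels)

    def on_boundary(i, j):
        # A region pixel is on the boundary if any 4-neighbour is outside
        # the image or outside the region.
        for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            ni, nj = i + di, j + dj
            if not (0 <= ni < h and 0 <= nj < w) or not mask[ni][nj]:
                return True
        return False

    perimeter = sum(1 for i, j in pixels if on_boundary(i, j))
    target = [img[i][j] for i, j in pixels]
    background = [img[i][j] for i in range(h) for j in range(w) if not mask[i][j]]
    mu_t = sum(target) / len(target)
    mu_b = sum(background) / len(background)
    sigma_b = math.sqrt(sum((v - mu_b) ** 2 for v in background) / len(background))
    return {
        "F_WH": width / height,
        "F_z": perimeter ** 2 / area,
        "F_D": area / (width * height),
        "F_C": abs(mu_t - mu_b) / sigma_b if sigma_b > 0 else float("inf"),
    }
```

For a solid rectangular region, F_D equals 1, while irregular clutter that fills only part of its bounding rectangle yields a smaller duty cycle.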

Figure 7.

Shape features of the ship.

The key to identifying ships using shape features is to establish an appropriate range of parameters that can accurately distinguish between targets and other disturbances on the sea surface. In order to ensure the effectiveness and stability of the parameter range, we utilize here the infrared offshore ship dataset provided by IRay Technology Co., Ltd. (Yantai, Shandong, China), which contains more than 1000 infrared images of ships. After screening and deduplication, a total of 300 ships in five categories—warships, cruise ships, cargo ships, fishing boats, and yachts—are selected as positive samples to calculate and count the feature quantities according to Equation (23). Some of the ship slices and their binary images are shown in Figure 8. The statistical results are shown in Table 1.

Figure 8.

Positive samples and binary slices of different categories of ships.

Table 1.

The statistical results of the shape features of the 300 ships.

It should be noted that the objects of this paper are tangible ships occupying a certain area, so CRs of less than 30 pixels will be excluded. After obtaining the statistical results, the constraint ranges of the feature quantities are set as follows:

The corresponding discriminant method is shown in Figure 9:

Figure 9.

Flow chart of ship discrimination.

To verify the effectiveness of the proposed constraint ranges and discriminant method, interfering objects in different sea surface scenarios are selected as negative samples, and their feature quantities are calculated and then evaluated according to the above method. The selected negative samples are shown in Figure 10, with the calculated feature quantities displayed in Table 2. For every negative sample, one or more of the feature quantities fall outside the constraint range, so the sample is classified as a non-target. This empirical analysis confirms that the proposed method based on constraint ranges and discriminant features can effectively distinguish between real ship targets and other interferences present on the sea surface.
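The discrimination rule described above amounts to a range check, sketched below. The 30-pixel minimum area comes from the text, and rejecting a candidate as soon as any feature leaves its range matches the behaviour reported for the negative samples. The numeric ranges in the example are hypothetical placeholders, not the values derived from Table 1, and the order of checks in Figure 9 is not reproduced here.

```python
def is_ship(features, ranges, area, min_area=30):
    """Return True only if the region is large enough and every feature is in range.

    `features` maps feature names to values, and `ranges` maps the same
    names to (low, high) bounds.
    """
    if area < min_area:
        return False  # regions smaller than 30 pixels are excluded
    return all(low <= features[name] <= high for name, (low, high) in ranges.items())


# Hypothetical ranges, for illustration only (not taken from the paper).
example_ranges = {"F_WH": (1.0, 8.0), "F_D": (0.3, 1.0)}
accepted = is_ship({"F_WH": 3.0, "F_D": 0.6}, example_ranges, area=120)
rejected = is_ship({"F_WH": 12.0, "F_D": 0.6}, example_ranges, area=120)
```

Because each candidate is judged once against fixed ranges, the check involves no iteration, in line with the single-determination property claimed for the method.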

Figure 10.

Eight kinds of interfering objects and their binary slices.

Table 2.

The feature quantities of the negative samples and judgment results.

3. Results

Here, different sea surface scene images were selected for testing: scenes 1–2 were collected using uncooled medium-wave thermal imagers, and scenes 3–6 were collected using cooled medium-wave thermal imagers, all at a resolution of 640 × 512 pixels. All the experiments were carried out in MATLAB R2018b on a computer with an Intel(R) Core(TM) i5 CPU and 8 GB of memory. To provide a comprehensive assessment, we compared our method with five other algorithms: top-hat, K-means clustering, fuzzy C-means clustering (FCM), mean shift, and grab-cut (GC). The results of all the tested algorithms are presented in Figure 11, allowing a visual comparison of the segmentation outcomes obtained by each algorithm on the tested sea surface scene images.

Figure 11.

Processed results of different algorithms. (a) Original image. (b) Top-hat. (c) K-means. (d) FCM. (e) Mean shift. (f) GC. (g) Our method. The red boxes show the detected targets, and the yellow boxes represent the false alarms.

Figure 11a shows the original infrared images in different scenarios. Interferences such as buildings, reefs, and sea clutter in the sea surface area can disturb the detection of the real ship targets. It can be seen from Figure 11b that top-hat detects all the real ship targets in the different scenarios but also produces a large number of false alarms. Moreover, depending on the size of the selected structural elements, some detected ship targets may appear incomplete. K-means, a clustering algorithm with strong spatial correlation that adopts the Euclidean distance between gray values, achieves relatively complete segmentation results but generates numerous false alarms in complex areas. Unlike K-means, FCM is a fuzzy clustering algorithm that introduces membership degrees to classify pixels. As shown in Figure 11d, FCM is highly sensitive to noise. While targets in relatively flat areas can be separated from the background, this method generates the highest number of false alarms among all the methods. By utilizing merge operations based on the grayscale features of the local area, mean shift can reduce false alarms caused by small-scale interferences. However, if the grayscale difference between the ship and the background is small, this method is prone to missed detections and sometimes fails to detect the real targets. GC, which combines the ideas of K-means clustering and background modeling, clusters pixels and determines whether they belong to the target by establishing background and foreground models with Gaussian mixture models. However, the candidate area must be selected manually, and all unselected areas are treated as background, so the final segmentation result depends on the box selection when only part of the sea surface area, including all the ships, is manually selected.
As shown in Figure 11f, the ships in the first scenario were extracted completely, while in other scenarios, some targets were absorbed into the background. All of the above comparison methods except top-hat require iterative calculation; as a result, their computational cost is high, and real-time processing cannot be achieved. In contrast, our method detects and segments all the targets and removes false alarms in the CRs with a single determination. Figure 11g gives the results of our method; the segmented ship targets exhibit complete shapes and contours, showing clear advantages over the other methods evaluated.

4. Discussion

In order to quantitatively evaluate the performance of each algorithm, two evaluation indicators, true positive rate (TPR) and false positive rate (FPR), are selected for comparison. TPR and FPR are calculated as follows:

where TP and FN represent the numbers of detected and undetected pixels of the real targets, respectively, FP is the number of background pixels misjudged as the target, and TN is the number of pixels correctly judged as background.
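For reference, the pixel-level indicators can be computed from a binary detection result and a binary ground-truth map as in the following minimal sketch. It uses the standard definitions TPR = TP / (TP + FN) and FPR = FP / (FP + TN), with TN counted as background pixels correctly left undetected; the function name and the convention of returning 0 when a denominator is empty are assumptions.

```python
def tpr_fpr(pred, truth):
    """Return (TPR, FPR) for two binary 2-D lists of equal size."""
    tp = fn = fp = tn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if t and p:
                tp += 1      # target pixel detected
            elif t and not p:
                fn += 1      # target pixel missed
            elif p:
                fp += 1      # background pixel misjudged as target
            else:
                tn += 1      # background pixel correctly rejected
    tpr = tp / (tp + fn) if tp + fn else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0
    return tpr, fpr


rates = tpr_fpr([[1, 1, 0, 0]], [[1, 0, 1, 0]])
```

In this small example, one of the two target pixels is detected and one of the two background pixels is misjudged, so both rates equal 0.5.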

To obtain these indicators, a reference image containing the ground truth labels for real targets and background pixels is manually annotated. The test results of each method are compared with the reference image, and the indicators are calculated by Equation (28). Table 3 presents the comparison results. By definition, the larger the TPR value and the smaller the FPR value, the better the performance of the detection algorithm; that is, the algorithm achieves high detection accuracy with a low false-alarm rate. The comparison results in Table 3 and Figure 12 demonstrate that top-hat performs well in scenarios 4–6, which have a relatively flat background, but shows a higher false-alarm rate in the more complex scenarios 1–3. The TPR values obtained by K-means and FCM are similar, both being close to 1, indicating that both methods can completely extract targets; however, both suffer from a high false-alarm rate, especially FCM. Mean shift has a small FPR across all the selected scenarios, but its TPR is also small, especially in scenarios 4 and 5, where targets are missed and the TPR values are close to 0. The GC algorithm shows unstable TPR and FPR values: although the detection rate is high in some scenarios, some targets are missed in others, where the TPR value is close to or equal to 0. This instability arises because manual selection is unavoidable for GC. In contrast, the proposed method consistently achieves favorable TPR and FPR values across different scenarios, indicating that it can accurately detect ships in both simple and complex sea surface scenarios and is robust to various disturbances.
In summary, our method has significant advantages over the other five methods in terms of both detection accuracy and false-alarm rate and can accomplish accurate infrared ship detection against a complex sea surface background. Moreover, the selected feature quantities are simple to calculate, so detection is highly efficient.

Table 3.

The comparison results of all the algorithms. Bold values represent the best results in each scenario.

Figure 12.

TPR and FPR curves of different scenarios.

5. Conclusions

In this paper, based on the idea of “step-by-step accuracy”, we propose a novel multiscale feature-based infrared ship-detection method (MSFISD). The proposed method can efficiently and effectively achieve infrared ship detection in complex scenarios, which may provide assistance in applications such as night surveillance or remote piloting. First, CRs are extracted from the whole image by extracting the sea–sky line and ROI and are refined by incorporating the edge features of the ship to reduce false alarms. Then, by analyzing the shape features, the ship targets are accurately extracted. Experimental results demonstrate that, compared with the other five methods, our method has a higher detection accuracy with a lower false-alarm rate and shows excellent detection performance. However, our method still has limitations. Firstly, in scenes with large-scale interferences, such as islands or bright and dark bands, it is challenging to accurately extract the sea–sky line. In these scenarios, large areas of islands or reefs may obscure parts of the sea–sky line in images, resulting in ocean boundaries with potentially intricate shapes. Secondly, under certain lighting conditions in which the sea surface exhibits severe fluctuations, it becomes difficult to distinguish clutter whose grayscale and shape resemble those of ships. In future work, we will extend the method of sea–sky line extraction to a more universal marine area extraction and improve both the single-frame and multi-frame detection algorithms to achieve more accurate, stable, and robust infrared ship detection.

Author Contributions

Conceptualization, D.L.; methodology, H.T.; software, H.T.; validation, J.T. and L.T.; formal analysis, M.W.; investigation, L.T.; resources, J.T.; data curation, M.W.; writing—original draft preparation, H.T.; writing—review and editing, D.L.; visualization, Z.T.; supervision, D.L.; project administration, L.W.; All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data is contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Prasad, D.; Rajan, D.; Rachmawati, L.; Rajabaly, E.; Quek, C. Video Processing from Electro-Optical Sensors for Object Detection and Tracking in a Maritime Environment: A Survey. IEEE Trans. Intell. Transp. Syst. 2016, 18, 1993–2016.

- Zhang, Z.; Shao, Y.; Tian, W.; Wei, Q.; Zhang, Y.; Zhang, Q. Application Potential of GF-4 Images for Dynamic Ship Monitoring. IEEE Geosci. Remote Sens. Lett. 2017, 14, 911–915.

- Bai, X.; Zhou, F.; Jin, T.; Xie, Y. Infrared Small Target Detection and Tracking under the Conditions of Dim Target Intensity and Clutter Background. Proc. SPIE 2007, 6786, 683–691.

- Wang, B.; Motai, Y.; Dong, L.; Xu, W. Detecting Infrared Maritime Targets Overwhelmed in Sun Glitters by Antijitter Spatiotemporal Saliency. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5159–5173.

- Li, Y.; Li, Z.; Zhu, Y.; Li, B.; Xiong, W.; Huang, Y. Thermal Infrared Small Ship Detection in Sea Clutter Based on Morphological Reconstruction and Multi-Feature Analysis. Appl. Sci. 2019, 9, 3786.

- Gao, C.; Deyu, M.; Yang, Y.; Wang, Y.; Zhou, X.; Hauptman, A. Infrared Patch-Image Model for Small Target Detection in a Single Image. IEEE Trans. Image Process. 2013, 22, 4996–5009.

- Zhou, A.; Xie, W.; Pei, J. Background Modeling in the Fourier Domain for Maritime Infrared Target Detection. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 2634–2649.

- Zhou, A.; Xie, W.; Pei, J. Background Modeling Combined with Multiple Features in the Fourier Domain for Maritime Infrared Target Detection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–15.

- Zhou, A.; Xie, W.; Pei, J. Maritime Infrared Target Detection Using a Dual-Mode Background Model. Remote Sens. 2023, 15, 2354.

- Fang, L.; Zhao, W.; Li, X.; Wang, X. A Convex Active Contour Model Driven by Local Entropy Energy with Applications to Infrared Ship Target Segmentation. Opt. Laser Technol. 2017, 96, 166–175.

- Fang, L.; Wang, X.; Wan, Y. Adaptable Active Contour Model with Applications to Infrared Ship Target Segmentation. J. Electron. Imaging 2016, 25, 041010.

- Liu, J.; Zhou, F.; Chen, X.; Bai, X.; Sun, C. Iterative Infrared Ship Target Segmentation Based on Multiple Features. Pattern Recognit. 2014, 47, 2839–2852.

- Liu, J.; Bai, X.; Sun, C.; Zhou, F.; Li, Y. Infrared Ship Target Segmentation through Integration of Multiple Feature Maps. Image Vis. Comput. 2016, 48, 14–25.

- Bai, X.; Chen, Z.; Zhang, Y.; Liu, J.; Lu, Y. Spatial Information Based FCM for Infrared Ship Target Segmentation. In Proceedings of the 2014 IEEE International Conference on Image Processing, ICIP 2014, Paris, France, 27–30 October 2014; Volume 46, pp. 5127–5131.

- Li, L.; Jiang, L.; Zhang, J.; Wang, S.; Chen, F. A Complete YOLO-Based Ship Detection Method for Thermal Infrared Remote Sensing Images under Complex Backgrounds. Remote Sens. 2022, 14, 1534.

- Han, Y.; Liao, J.; Lu, T.; Pu, T.; Peng, Z. KCPNet: Knowledge-Driven Context Perception Networks for Ship Detection in Infrared Imagery. IEEE Trans. Geosci. Remote Sens. 2022, 61, 1–19.

- Wu, T.; Li, B.; Luo, Y.; Wang, Y.; Xiao, C.; Liu, T.; Yang, J.; An, W.; Guo, Y. MTU-Net: Multi-Level TransUNet for Space-Based Infrared Tiny Ship Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–15.

- Kapur, J.N.; Sahoo, P.; Wong, A.K.C. A New Method for Gray-Level Picture Thresholding Using the Entropy of the Histogram. Comput. Vis. Graph. Image Process. 1980, 29, 273–285.

- Yen, J.-C.; Chang, F.-J.; Chang, S. New Criterion for Automatic Multilevel Thresholding. Image Process. IEEE Trans. 1995, 4, 370–378.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).