1. Introduction

Recently, color has played an important role in a large number of computer vision applications. Color information provides features that are invariant to scale, translation, and rotation changes, which are suitable for image segmentation [1], image classification [2,3], and image retrieval [4,5]. One of the most critical tasks in computer vision applications is image denoising, which involves recovering an image from a degraded noisy version. Various types of noise can affect digital color images, particularly impulse noise [6].

Impulse noise in digital images is a random variation in pixel intensity caused by short-duration, high-energy pulses. This type of noise can significantly degrade image quality and poses various challenges in real-world applications. For example, impulse noise in dashboard camera footage captured under low-light conditions [7] can lead to the misinterpretation of videos, making it difficult to accurately identify the vehicles involved in incidents. Additionally, impulse noise is a common type of noise in medical imaging (X-rays, MRIs, and CT scans) [8] that can cause details critical for diagnosis to be misinterpreted or lost.

Impulse noise commonly occurs during the acquisition or transmission of an image and is caused by imperfections in the device lens, malfunctioning camera photosensors, the aging of the storage material, errors during the compression process, and the electronic instability of the image signal [9]. Addressing impulse noise in real-world applications often involves various image processing techniques to restore image integrity. Impulse noise affects color digital images in such a way that the perturbed pixels differ significantly from their local neighborhood in the image domain.

Nature-inspired optimization algorithms have been widely applied in the image processing literature to address various challenges, including image quality evaluation [10], feature selection [11], and image reconstruction [12]. Of particular note is genetic programming [13], an evolutionary computing technique based on the principle of natural selection, which offers a flexible and adaptive methodology for image processing problems. As in other evolutionary algorithms, the main idea is to transform a population of individuals (programs) by applying genetic operations such as reproduction, mutation, and selection [14]. The adaptability and robustness of genetic programming make it well suited to the challenges associated with impulse noise removal, particularly for color digital images.

Outline and Contribution

This paper proposes a novel adaptive filter to remove impulse noise from color images. The contribution of this work is twofold. First, this is the first filter that removes noise from color images by exploiting the correlation among the image channels through the genetic programming paradigm. The filter is a two-stage solution comprising detection and correction processes. The detection stage decomposes the input color image into its red, green, and blue channels; it then uses a binary classification model to identify which channel values of each pixel are perturbed by noise. The correction stage modifies each pixel identified as noisy according to its perturbed channels and its neighborhood. Second, the detection stage of the proposed filter is an interpretable model that performs well under different conditions, as assessed with three impulse noise models: salt and pepper, uniform, and correlated. Experimental results show that the filter is the second closest to an equilibrium point in terms of performance balance, outperformed only by a filter using a black-box deep learning-based approach.

The remainder of this paper is organized as follows. Section 2 presents related works on color image denoising methods for impulse noise. Section 3 introduces some preliminary concepts for the problem of color image denoising. Section 4 describes the proposed adaptive filter and the evolutionary process used to train it. Section 5 presents the experimental results obtained by comparing the proposed filter with other filtering methods. Finally, Section 6 presents some concluding remarks.

2. Related Works

A large number of filters for impulse noise removal from color images have been proposed in the literature, e.g., [15]. Two types of filters, robust and adaptive, stand out in scenarios where images are degraded by complex noise patterns or variations in lighting and contrast. Robust filters treat noisy pixels as a violation of the spatial coherence of the image intensities. The best-known robust filter is the vector median filter (VMF) [16], a non-linear method that is still a reference in the field. The VMF orders the color input vectors within a predefined sliding window according to their relative magnitude differences. However, robust filters apply a correction procedure to every image pixel, even if the pixel is not noisy. Therefore, filtered images commonly present excessive smoothing and extensive blurring [9]. Other methods exploit the sparsity of the image in some transform domain, formulating noise removal as an optimization problem. For example, the generalized synthesis and analysis prior algorithm (GSAPA) proposed in [17] uses the split-Bregman technique to break the optimization problem with multiple regularization parameters down into relatively easy-to-solve subproblems. In [18], an extension of the mean-shift technique was introduced to effectively reduce Gaussian and impulsive noise in color digital images; using a novel similarity measure between pixels and a patch at the block’s center, the technique efficiently restores heavily disturbed images.

On the other hand, adaptive filters assume that only some image pixels are perturbed by noise. These filters adapt to various image characteristics and noise statistics using different approaches. One of these approaches is the fuzzy peer-group concept, which associates with a central pixel the set of neighboring pixels whose distance to it does not exceed a threshold based on fuzzy logic (see, for example, [19]). Under this approach, the fuzzy metric peer-group filter (FMPGF) [20] stands out due to its efficiency. Other approaches use fuzzy logic tools to measure magnitude differences, providing an adaptive framework to distinguish between noise and data. For example, the adaptive fuzzy filter proposed in [21] uses a noise-detection mechanism to select a small portion of the input image pixels and convolves them with a set of weighted kernels to create a layer of convolved feature vectors. The feature vectors are then fed into a fuzzy inference system, where fuzzy membership degrees and a reduced set of fuzzy rules play a crucial role in categorizing pixels as noise-free, associated with edges, or noisy. Another fuzzy inference rule-based filter for impulse noise is the noise adaptive fuzzy switching median (NAFSM) filter [22]. The NAFSM filter uses two-stage processing specialized for salt-and-pepper noise detection and removal, preserving strong edges through spatial averaging. A recent contribution in a similar vein is the adaptive fuzzy filter based on histogram estimation (AFHE) [23]. The AFHE filter dynamically adjusts the window size based on local noise densities using fuzzy-based criteria. Additionally, it incorporates an iterative procedure based on the Gaussian mean for further processing. Another recent development, presented in [24], is a spatio-temporal filter that uses fuzzy logic to eliminate impulse noise from videos. The distinctive feature of this filter is its ability to leverage spatial and temporal correlations as well as color components in a sequential process, allowing it to effectively handle high impulse noise levels, even up to 70%. Other adaptive approaches are based on more straightforward decisions, such as the decision-based algorithm for the removal of impulse noise (DBAIN) [25]. The DBAIN filter processes a noisy image by first detecting salt-and-pepper noise (its major decision) and then replacing the noisy pixel values with the median of their neighboring pixels. Comprehensive studies of adaptive filters for impulse noise removal from color images are presented in [15,26].

Machine learning-based techniques have also been applied to remove impulse noise from color images. For example, a support vector machine (SVM)-based filtering technique was proposed in [27]. Later, fuzzy c-means (FCM) clustering combined with a fuzzy support vector machine (FSVM) was introduced in [28]. Additionally, in [29], multiple neuro-fuzzy filters were trained with an artificial bee colony algorithm and combined with a decision tree algorithm to denoise corrupted images. Neural network-based denoising methods are another popular approach. For instance, a convolutional neural network-based denoising method mainly composed of a sparse block, a feature enhancement block, an attention block, and a reconstruction block was described in [30]. In [31], a convolutional autoencoder-based feature map domain was combined with low-rank models to improve denoising quality. Recently, a denoising network called DeQCANet was introduced in [32] for removing color random-valued impulse noise. This work implements a quaternion convolutional neural network, incorporating a novel quaternion map construction strategy to enhance color features across channels. The proposed denoising technique exhibits competitive performance compared to other well-established methods for denoising color images. Another recent development is the impulse detection convolutional neural network (IDCNN) introduced in [33], which uses a switching filtering technique: a deep neural network architecture detects noisy pixels, which are then restored through the fast adaptive mean filter. In [34], a neural network architecture was introduced to recognize images affected by random-valued impulse noise; it comprises a preprocessing stage and incorporates a pixel distortion detector, a cleaning mechanism, and a neural network complex for image recognition. An overview of deep learning techniques for image denoising can be found in [35]. While convolutional neural networks and deep learning-based approaches excel in performance, they are complex and opaque. Their intricate architectures and hierarchical representations make it challenging to decipher the exact decision-making process, limiting the transparency and understanding of how the denoising outcomes are achieved. Transparency is crucial for various reasons, especially in applications where interpretability is essential for user trust, accountability, and ethical considerations.

Alternative filtering techniques tackle image denoising through an evolutionary computing approach. For instance, in [36], Toledo et al. introduced a denoising method employing a genetic algorithm. In their method, an input noisy image is represented as an individual, and the individuals of the initial population are mutated versions of the image. The evolutionary process applied to the population allows for the continuous refinement and adaptation of the original noisy individual until an enhanced image is found. In a similar vein, in [37], we addressed image denoising by merging the output images of robust and adaptive filters into an evolving population for a genetic algorithm. In this context, genetic algorithms are frequently employed to fine-tune specific filter parameters, essentially framing denoising as an optimization task. On the other hand, genetic programming has emerged as a highly promising field for creating adaptive filters aimed at noise reduction; however, no existing filter uses genetic programming to remove impulse noise from color digital images. Nevertheless, a few works have addressed impulse noise removal from grayscale images, the most relevant being [38,39,40].

Petrovic and Crnojevic [38] used genetic programming to produce two binary classification models, applied in cascade, for identifying noisy pixels in grayscale images. While the first model identifies the majority of noisy pixels located within homogeneous regions of the image, the second is trained to specialize in complex cases of noisy pixels (e.g., those with amplitudes close to their local neighborhood). The classification models were trained using test images contaminated with a mixture of two impulse noise models: salt and pepper and uniform. Once the noisy pixels are detected, they are corrected using a conventional α-trimmed mean method as part of a two-stage filter. On the other hand, Majid et al. [39] used genetic programming to estimate the optimal value of every noisy pixel in a grayscale image. The estimation combines the useful information of local clean pixels within a small neighborhood using arithmetic operators. In their approach, Majid et al. used the directional derivative to detect pixels perturbed by salt-and-pepper and uniform noise. Their filter was tested using standard images perturbed with noise densities from 10 to 90%. Their experimental results showed that, compared to other approaches, their filter can restore noisy images while preserving edges and fine details, especially at high impulse noise densities. Khmag et al. [40] presented a filter based on a two-step switching scheme to remove both salt-and-pepper and additive white Gaussian noise. Their filter uses a patch-based approach, which decomposes a noisy image into a group of patches and then applies a clustering process to gather all patches with the same features and textures. They use genetic programming to generate an adaptive local filter to denoise each class of patches with different textural forms. The restoration process of this filter is performed by applying second-generation wavelet thresholding to the clustered patches. Other applications of genetic programming in image processing can be found in [41]. It is important to emphasize that although many of the techniques developed for monochrome images can be directly applied to color image denoising, processing the color channels independently is commonly inappropriate and leads to strong artifacts. To address this challenge, it is imperative to develop methodologies that effectively exploit the inherent correlation among color channels, ensuring more accurate and artifact-free denoising of color images.

4. Methodology

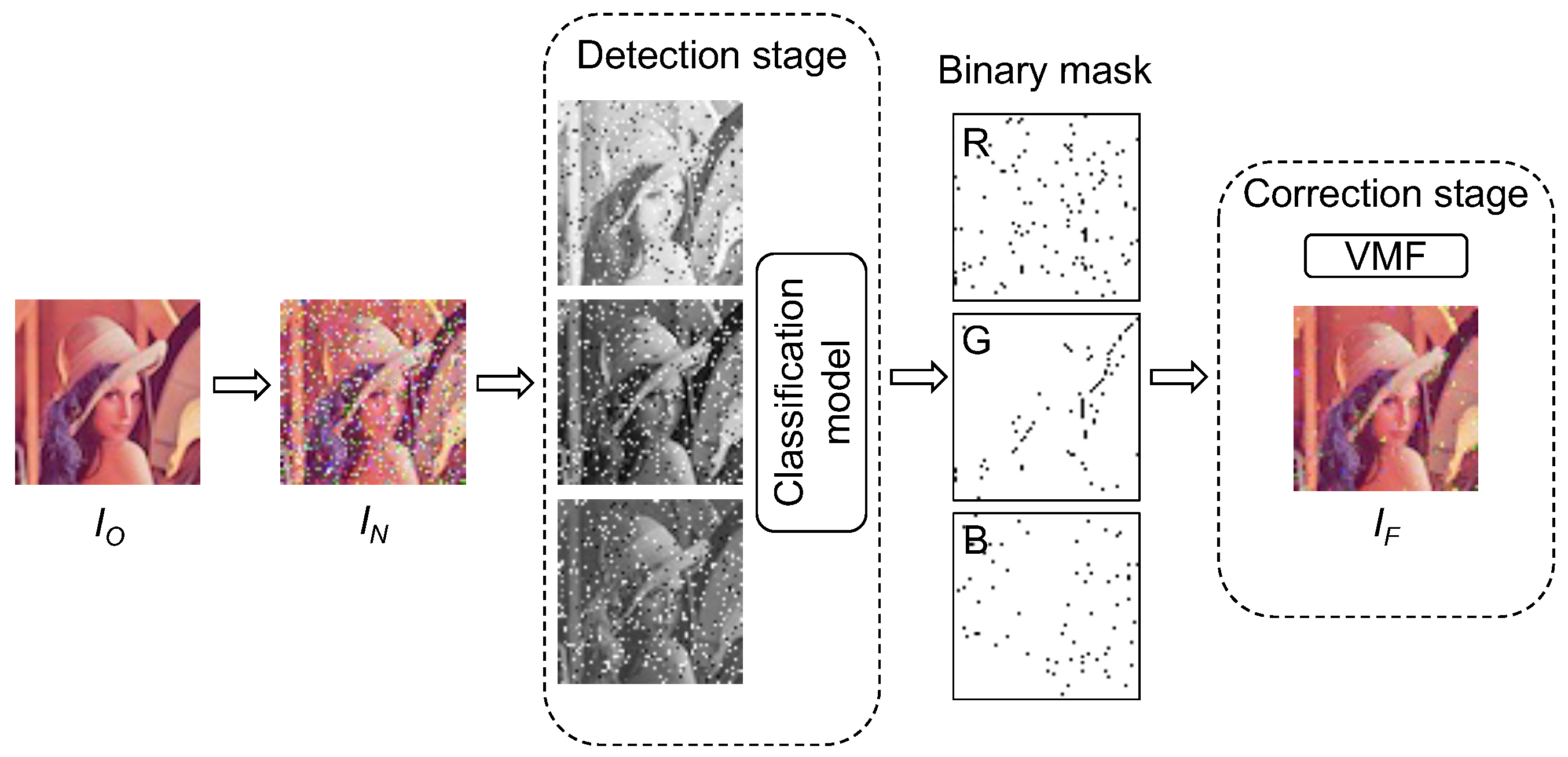

The proposed adaptive filter consists of two main stages for color image denoising: detection and correction. The detection stage takes as input a color image perturbed by impulse noise. In this stage, the color image is split into its R, G, and B channels so that each pixel is evaluated separately in each channel by a binary classification model. For each channel, the model produces a binary mask representing the set of pixels classified as noisy.

The correction stage uses a version of the vector median filter applied only to the identified noisy pixels. The correction of any pixel $p$ consists of modifying one or more of its RGB channel values according to the intensity values of its neighbors. In this context, the set of neighboring pixels of $p$, denoted as $N(p)$, is a $w \times w$ square window centered on $p$ (and including $p$) in the spatial domain of the image. Modifying the value of $p$ requires computing the sum of the absolute differences of the pixel intensities between $p$ and its neighborhood. For any pixel $q \in N(p)$, these aggregate differences are denoted as $d(q)$, such that

$$d(q) = \sum_{r \in N(p)} \sum_{k \in \{R, G, B\}} \left| q_k - r_k \right|,$$

where $q_k$ is the intensity of channel $k$ of pixel $q$. Let $D = \{\, d(q) : q \in N(p) \,\}$ be the set of these aggregate differences, computed between every pair of pixels in the neighborhood of $p$. Then, a noisy pixel $p$ is replaced (in one or more of its channels) with the pixel of $N(p)$ that attains the minimum value in $D$. If only one channel of $p$ is identified as noisy (and the other two are not), only the perturbed value of this channel is replaced. On the other hand, if at least two channels of $p$ are noisy, the pixel is replaced entirely. Since building the set $D$ requires constant time given a fixed $w \times w$ window, correcting the noisy pixels takes time linear in the number of image pixels. After the correction process, a new color image $\hat{I}$ is generated.

Figure 1 illustrates the two stages of the adaptive filter.
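The selective correction rule can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: images are nested lists of (R, G, B) integer tuples, the detection output is a mask of per-channel boolean triples, the window is a hypothetical `(2*half+1) x (2*half+1)` square clipped at the image border, and all helper names are illustrative.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]
Mask = List[List[Tuple[bool, bool, bool]]]


def l1_distance(a: Pixel, b: Pixel) -> int:
    """Sum of absolute channel differences between two RGB pixels."""
    return sum(abs(x - y) for x, y in zip(a, b))


def window(img: Image, r: int, c: int, half: int) -> List[Pixel]:
    """Pixels of the square window centered on (r, c), clipped at the image border."""
    rows, cols = len(img), len(img[0])
    return [
        img[i][j]
        for i in range(max(0, r - half), min(rows, r + half + 1))
        for j in range(max(0, c - half), min(cols, c + half + 1))
    ]


def vector_median(pixels: List[Pixel]) -> Pixel:
    """Window pixel whose aggregate L1 distance d(q) to all window pixels is minimal."""
    return min(pixels, key=lambda q: sum(l1_distance(q, s) for s in pixels))


def correct(img: Image, mask: Mask, half: int = 1) -> Image:
    """Correct only the pixels flagged in `mask`; neighborhoods are read from the input."""
    out = [list(row) for row in img]
    for r, row in enumerate(img):
        for c, pixel in enumerate(row):
            flags = mask[r][c]
            n_noisy = sum(flags)
            if n_noisy == 0:
                continue  # pixel classified as noise-free: left untouched
            vm = vector_median(window(img, r, c, half))
            if n_noisy == 1:
                # a single perturbed channel: replace only that channel value
                out[r][c] = tuple(vm[k] if flags[k] else pixel[k] for k in range(3))
            else:
                # two or more perturbed channels: replace the whole pixel
                out[r][c] = vm
    return out


clean = (10, 10, 10)
img = [[clean] * 3 for _ in range(3)]
img[1][1] = (255, 50, 10)  # only the red channel is an impulse
mask = [[(False, False, False)] * 3 for _ in range(3)]
mask[1][1] = (True, False, False)
print(correct(img, mask)[1][1])  # (10, 50, 10)
```

Reading neighborhoods from the input image rather than the partially corrected output keeps the result independent of the scan order; whether the original filter does the same is not stated in the text.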

4.1. Genetic Programming Design

We use genetic programming to generate the binary classification model for detecting noisy pixels in a color image. In a typical machine learning workflow, the training stage uses a dataset to produce the classification model, whereas the proposed evolutionary approach requires only a single image. This approach leverages the versatility and adaptability of genetic programming, allowing it to effectively learn complex noise patterns from a single training instance. This training image $I$ has a noisy version $\tilde{I}$ perturbed by the impulse noise models described in Section 3.1.

In this context, an individual is a classification model used to evaluate any pixel of an image $I$ and decide whether it is noisy. An individual is represented by a parse tree whose nodes are selected from a set of primitives consisting of functions and terminals. The internal nodes of the tree are taken from the set of functions F = {add(x,y), sub(x,y), mul(x,y), mydiv(x,y), mysigmoid(x)}. Four elements of this set denote the basic arithmetic operations of addition, subtraction, multiplication, and division. In particular, the division operator mydiv(x,y) is protected in the sense that it does not signal a “division by zero” error. The sigmoid function mysigmoid(x) guarantees that its results lie between 0 and 1.
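The function set F and the evaluation of a parse tree can be sketched as follows. The sketch assumes two conventions that the text does not fix: mydiv returns 1.0 when the denominator is zero (the convention of DEAP's symbolic-regression examples), and a channel is labeled noisy when the tree output, squashed by mysigmoid, exceeds a hypothetical threshold of 0.5. Trees are nested tuples `(function_name, child, ...)` with terminal names as leaves; the terminal values in the example are made up.

```python
import math
import operator
from typing import Callable, Dict, Tuple, Union

Tree = Union[str, Tuple]  # terminal name, or (function_name, child, ...)


def mydiv(x: float, y: float) -> float:
    """Protected division: returns 1.0 instead of raising on a zero denominator (assumed)."""
    return x / y if y != 0 else 1.0


def mysigmoid(x: float) -> float:
    """Logistic function mapping any real input into the range 0..1 without overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


FUNCTIONS: Dict[str, Callable[..., float]] = {
    "add": operator.add,
    "sub": operator.sub,
    "mul": operator.mul,
    "mydiv": mydiv,
    "mysigmoid": mysigmoid,
}


def evaluate(tree: Tree, terminals: Dict[str, float]) -> float:
    """Recursively evaluate a parse tree given the terminal values of one pixel channel."""
    if isinstance(tree, str):
        return terminals[tree]
    name, *children = tree
    return FUNCTIONS[name](*(evaluate(child, terminals) for child in children))


def is_noisy(tree: Tree, terminals: Dict[str, float], threshold: float = 0.5) -> bool:
    """Hypothetical decision rule: sigmoid-squashed tree output above `threshold`."""
    return mysigmoid(evaluate(tree, terminals)) > threshold


example = ("sub", "mu_dist", ("mydiv", "sd_dist", "pxc"))
values = {"mu_dist": 120.0, "sd_dist": 4.0, "pxc": 0.0}  # made-up terminal values
print(evaluate(example, values))  # 119.0, since mydiv(4.0, 0.0) falls back to 1.0
print(is_noisy(example, values))  # True
```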

Additionally, the leaves of the tree are taken from the set of terminals T = {pc_dist, mu_dist, median_dist, sd_dist, pxc}, which apply statistical operations to the set of neighboring pixels $N(p)$ of a pixel $p$ in image $I$. Given a fixed $w \times w$ window size, computing these statistics takes constant time. Table 1 describes the set of terminals. It should be noted that the set of primitives (functions and terminals) was selected mainly through preliminary experimental tests.
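The terminals are neighborhood statistics computed per channel. Because their exact definitions are given in Table 1, the following sketch only illustrates the kind of constant-time window statistics involved; the feature names and formulas are hypothetical stand-ins, not the definitions of pc_dist, mu_dist, median_dist, sd_dist, or pxc. A channel is a nested list of integers, and the window is a hypothetical `(2*half+1) x (2*half+1)` square clipped at the border, with the center excluded from the neighbor statistics.

```python
import statistics
from typing import Dict, List

Channel = List[List[int]]


def neighbors(channel: Channel, r: int, c: int, half: int = 1) -> List[int]:
    """Values of the square window around (r, c), excluding the center pixel itself."""
    rows, cols = len(channel), len(channel[0])
    return [
        channel[i][j]
        for i in range(max(0, r - half), min(rows, r + half + 1))
        for j in range(max(0, c - half), min(cols, c + half + 1))
        if (i, j) != (r, c)
    ]


def window_features(channel: Channel, r: int, c: int, half: int = 1) -> Dict[str, float]:
    """Hypothetical per-channel features; each costs O(1) for a fixed window size."""
    center = float(channel[r][c])
    vals = neighbors(channel, r, c, half)
    return {
        "center": center,
        "abs_diff_to_mean": abs(center - statistics.mean(vals)),
        "abs_diff_to_median": abs(center - statistics.median(vals)),
        "neighbor_std": statistics.pstdev(vals),
    }


red = [[10, 10, 10], [10, 255, 10], [10, 10, 10]]
print(window_features(red, 1, 1))
```

A large distance between the center value and the neighborhood mean or median, together with a small neighborhood spread, is the typical signature of an impulse.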

On the other hand, an individual’s fitness represents its ability to correctly detect noisy pixels in an image. For this purpose, an image $I$ is perturbed using the three impulse noise models described in Section 3.1, each with a density of 10%. The resulting noisy image $\tilde{I}$ is used as input for each individual of the initial population. Each individual independently evaluates each pixel $p$ of $\tilde{I}$ to decide whether $p$ is perturbed by noise. For a given individual, evaluating any pixel takes constant time (the tree and the window size are fixed); therefore, the detection process requires time linear in the number of pixels of $\tilde{I}$. The output generated by each individual is a binary mask indicating which pixels are identified as noisy in $\tilde{I}$. This binary mask is used in the correction stage to produce the filtered image $\hat{I}$, which is compared to $I$ via the image quality metrics PSNR and MAE described in Section 3.2. The results of these metrics are used to compute the individual’s fitness in the detection stage. Only these two metrics were chosen because of their low computational complexity.

Figure 2 illustrates this procedure.

4.2. The Proposed Evolutionary Algorithm

As part of the evolutionary process (also called training), the population of individuals produces offspring through crossover and mutation operators. The offspring have their fitness evaluated and compete for a place in the next generation. This process iterates until a certain number of generations is reached. This evolutionary process is described in Algorithm 1.

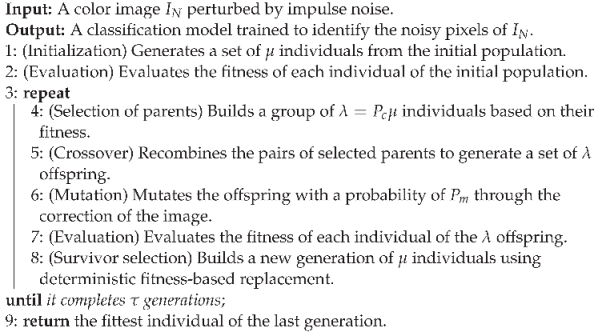

Algorithm 1: The evolutionary process of the detection stage.

Line 1 of Algorithm 1 generates an initial population of random individuals. To generate this population, we use the full method, one of the simplest and most popular methods for producing complete random trees. Each tree is generated recursively by randomly selecting primitives from the sets of functions and terminals: primitives from the set of functions F are selected as the internal nodes of the tree, whereas those from the set of terminals T are selected as the leaves. The maximum depth of each initial tree is constrained to three. Considering $P$ random trees of size $s$, generating the initial population requires $O(Ps)$ time.
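The full method can be sketched as below. The primitive names come from the sets F and T; representing trees as nested tuples, fixing the depth at exactly three (so that every leaf lies at depth three), and the population size of ten are assumptions made for illustration. In DEAP, the framework used in the experiments, the corresponding generator is `gp.genFull`.

```python
import random
from typing import Dict, List, Tuple, Union

Tree = Union[str, Tuple]

ARITY: Dict[str, int] = {"add": 2, "sub": 2, "mul": 2, "mydiv": 2, "mysigmoid": 1}
TERMINALS: List[str] = ["pc_dist", "mu_dist", "median_dist", "sd_dist", "pxc"]


def gen_full(depth: int, rng: random.Random) -> Tree:
    """Full method: functions at every internal level, terminals only at `depth`."""
    if depth == 0:
        return rng.choice(TERMINALS)
    name = rng.choice(sorted(ARITY))
    return (name,) + tuple(gen_full(depth - 1, rng) for _ in range(ARITY[name]))


def leaf_depths(tree: Tree, depth: int = 0) -> List[int]:
    """Depth of every leaf; all entries are equal for a tree built by the full method."""
    if isinstance(tree, str):
        return [depth]
    return [d for child in tree[1:] for d in leaf_depths(child, depth + 1)]


def size(tree: Tree) -> int:
    """Number of nodes in the tree."""
    return 1 if isinstance(tree, str) else 1 + sum(size(child) for child in tree[1:])


rng = random.Random(42)
population = [gen_full(3, rng) for _ in range(10)]  # hypothetical population size
print(population[0], size(population[0]))
```

Because mysigmoid is unary, full trees of depth three range from 4 nodes (a chain of unary functions) to 15 nodes (all binary functions), so the per-tree cost of generation is bounded by a constant.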

Lines 2 and 7 of Algorithm 1 evaluate the fitness of each individual of the population using the quality metrics MAE and PSNR described in Section 3.2. These metrics compare the filtered image $\hat{I}$, produced by the detection and correction stages according to each individual, with its noiseless version $I$ (see Figure 2). A lower MAE indicates better filter performance, as does a higher PSNR. Therefore, the fitness is defined so that maximizing it is equivalent to maximizing the ratio of the PSNR to the MAE (i.e., minimizing the ratio of the MAE to the PSNR). The fitness evaluation of all $P$ individuals of the population takes $O(Pn)$ time, where $n$ is the number of image pixels, because detection, correction, and both metrics run in time linear in $n$. Note that the SSIM and FSIM are not considered in the fitness evaluation because of their higher computational complexity.
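The fitness computation can be sketched as follows. Images are flattened into sequences of 8-bit channel values, the PSNR uses a peak value of 255, and the fitness is taken to be the ratio PSNR/MAE, which rewards a low MAE and a high PSNR simultaneously. The exact formulas of Section 3.2 and the authors' fitness expression may differ, so this is an assumption-laden sketch rather than the paper's code.

```python
import math
from typing import Sequence


def mae(reference: Sequence[float], filtered: Sequence[float]) -> float:
    """Mean absolute error over all channel values."""
    if len(reference) != len(filtered):
        raise ValueError("images must have the same number of values")
    return sum(abs(a - b) for a, b in zip(reference, filtered)) / len(reference)


def psnr(reference: Sequence[float], filtered: Sequence[float], peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    if len(reference) != len(filtered):
        raise ValueError("images must have the same number of values")
    mse = sum((a - b) ** 2 for a, b in zip(reference, filtered)) / len(reference)
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)


def fitness(reference: Sequence[float], filtered: Sequence[float]) -> float:
    """Assumed fitness PSNR/MAE: larger is better; a perfect restoration scores infinity."""
    error = mae(reference, filtered)
    return math.inf if error == 0 else psnr(reference, filtered) / error


ref = [0, 0, 0, 0]
print(mae(ref, [0, 0, 0, 10]), round(psnr(ref, [0, 0, 0, 10]), 2))  # 2.5 34.15
print(fitness(ref, [0, 0, 0, 5]) > fitness(ref, [0, 0, 0, 10]))  # True
```

Both metrics are a single pass over the pixel values, which is consistent with the linear-time fitness evaluation described above.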

Line 4 of Algorithm 1 randomly selects pairs of individuals with replacement until it generates a group of $cP$ individuals, where $c$ denotes the crossover ratio and $P$ the population size. Tournament selection is used: three individuals are randomly drawn from the population, and the fittest two are selected. Since each tournament has a fixed size, implementing the tournament selection requires $O(cP)$ time.
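Line 4 can be sketched with the standard library. The sketch follows the description above (tournaments of three individuals, keeping the fittest two, repeated with replacement across tournaments); the rounding of the group size and the function names are assumptions. DEAP's `tools.selTournament` implements the more common variant that returns a single winner per tournament.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def tournament_pair(population: Sequence[T], fitnesses: Sequence[float],
                    rng: random.Random, k: int = 3) -> Tuple[T, T]:
    """Draw k distinct individuals at random and return the fittest two."""
    contenders = rng.sample(range(len(population)), k)
    contenders.sort(key=lambda i: fitnesses[i], reverse=True)
    return population[contenders[0]], population[contenders[1]]


def select_parents(population: Sequence[T], fitnesses: Sequence[float],
                   crossover_ratio: float, rng: random.Random) -> List[Tuple[T, T]]:
    """Repeat tournaments to gather about crossover_ratio * len(population)
    individuals, grouped in pairs (an individual may be picked many times)."""
    n_pairs = max(1, int(crossover_ratio * len(population)) // 2)
    return [tournament_pair(population, fitnesses, rng) for _ in range(n_pairs)]


rng = random.Random(7)
pop = ["a", "b", "c", "d", "e"]
fit = [0.1, 0.5, 0.3, 0.9, 0.7]
print(select_parents(pop, fit, crossover_ratio=0.8, rng=rng))
```

Since each tournament keeps two of three contenders, the weakest individual of the population can never be selected, which gives a mild selection pressure.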

Line 5 of Algorithm 1 recombines the pairs of individuals selected in Line 4. A one-point crossover is used: a random vertex is chosen within each of two copies of the parent individuals, and the subtrees rooted at the selected vertices are exchanged between them. Recombining the entire population of $P$ trees of size $s$ requires $O(Ps)$ time.
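A one-point subtree crossover over tuple-encoded trees can be sketched as follows; because tuples are immutable, the parents are never modified, which mirrors the use of copies described above. Whether the root may be chosen as the crossover point is not stated in the text; this sketch allows it, whereas DEAP's `gp.cxOnePoint` excludes the root. Function names are illustrative.

```python
import random
from typing import Iterator, List, Tuple, Union

Tree = Union[str, tuple]
Path = Tuple[int, ...]


def paths(tree: Tree, prefix: Path = ()) -> Iterator[Path]:
    """Yield the path (sequence of child indices) to every vertex, root first."""
    yield prefix
    if not isinstance(tree, str):
        for i, child in enumerate(tree[1:], start=1):
            yield from paths(child, prefix + (i,))


def subtree(tree: Tree, path: Path) -> Tree:
    """Return the subtree rooted at `path`."""
    for i in path:
        tree = tree[i]
    return tree


def replace_at(tree: Tree, path: Path, new: Tree) -> Tree:
    """Return a copy of `tree` with the subtree at `path` replaced by `new`."""
    if not path:
        return new
    i = path[0]
    return tree[:i] + (replace_at(tree[i], path[1:], new),) + tree[i + 1:]


def one_point_crossover(a: Tree, b: Tree, rng: random.Random) -> Tuple[Tree, Tree]:
    """Swap the subtrees rooted at one random vertex of each parent."""
    pa = rng.choice(list(paths(a)))
    pb = rng.choice(list(paths(b)))
    return replace_at(a, pa, subtree(b, pb)), replace_at(b, pb, subtree(a, pa))


def leaves(tree: Tree) -> List[str]:
    """All terminal leaves of a tree, left to right."""
    return [tree] if isinstance(tree, str) else [x for c in tree[1:] for x in leaves(c)]


p1 = ("add", "mu_dist", ("mysigmoid", "pxc"))
p2 = ("mul", ("sub", "sd_dist", "pc_dist"), "median_dist")
c1, c2 = one_point_crossover(p1, p2, random.Random(3))
print(c1)
print(c2)
```

Swapping subtrees only redistributes material between the two offspring, so the combined multiset of leaves is always preserved.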

Line 6 of Algorithm 1 applies the uniform mutation operator to introduce diversity into the population. This operator takes an individual as input with probability $p_m$ and randomly selects a vertex of its tree, which is replaced with a new random subtree. Each subtree is generated using the full method in $O(s)$ time, where $s$ is the tree size. Thus, applying uniform mutation to the offspring takes $O(Ps)$ time, where $P$ is the population size.
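Uniform mutation can be sketched by reusing the full method to grow the replacement subtree. The maximum depth of the new subtree (`max_depth=2`), the tuple encoding, and the helper names are hypothetical, since the text does not specify them; DEAP provides the equivalent operator as `gp.mutUniform`.

```python
import random
from typing import Dict, Iterator, List, Tuple, Union

Tree = Union[str, tuple]
ARITY: Dict[str, int] = {"add": 2, "sub": 2, "mul": 2, "mydiv": 2, "mysigmoid": 1}
TERMINALS: List[str] = ["pc_dist", "mu_dist", "median_dist", "sd_dist", "pxc"]


def gen_full(depth: int, rng: random.Random) -> Tree:
    """Full method: functions at every internal level, terminals only at `depth`."""
    if depth == 0:
        return rng.choice(TERMINALS)
    name = rng.choice(sorted(ARITY))
    return (name,) + tuple(gen_full(depth - 1, rng) for _ in range(ARITY[name]))


def paths(tree: Tree, prefix: Tuple[int, ...] = ()) -> Iterator[Tuple[int, ...]]:
    """Yield the path to every vertex of the tree, root first."""
    yield prefix
    if not isinstance(tree, str):
        for i, child in enumerate(tree[1:], start=1):
            yield from paths(child, prefix + (i,))


def replace_at(tree: Tree, path: Tuple[int, ...], new: Tree) -> Tree:
    """Return a copy of `tree` with the subtree at `path` replaced by `new`."""
    if not path:
        return new
    i = path[0]
    return tree[:i] + (replace_at(tree[i], path[1:], new),) + tree[i + 1:]


def uniform_mutation(tree: Tree, p_m: float, rng: random.Random, max_depth: int = 2) -> Tree:
    """With probability p_m, replace a random vertex by a new full-method subtree."""
    if rng.random() >= p_m:
        return tree
    target = rng.choice(list(paths(tree)))
    return replace_at(tree, target, gen_full(rng.randint(0, max_depth), rng))


def is_valid(tree: Tree) -> bool:
    """Every leaf is a terminal and every function has the right number of children."""
    if isinstance(tree, str):
        return tree in TERMINALS
    name, *children = tree
    return len(children) == ARITY.get(name, -1) and all(is_valid(c) for c in children)


parent = ("add", "mu_dist", ("mysigmoid", "pxc"))
child = uniform_mutation(parent, p_m=1.0, rng=random.Random(5))
print(child, is_valid(child))
```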

Line 8 of Algorithm 1 generates a new population from the union of the individuals of the previous population and their offspring. A fitness-based replacement (implemented with a sorting algorithm) is used, guaranteeing the survival of the fittest individuals. The execution time of this step is $O(P \log P)$ for a population of size $P$.
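The replacement step of Line 8 amounts to a (μ + λ)-style truncation: parents and offspring compete together, and the best individuals survive. A minimal sketch, with hypothetical names and fitness supplied as a callable, is shown below; DEAP's `tools.selBest` performs the same truncation on a list of individuals.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def fitness_based_replacement(parents: List[T], offspring: List[T],
                              fitness: Callable[[T], float], size: int) -> List[T]:
    """Keep the `size` fittest individuals of parents + offspring (O(P log P) sort)."""
    pool = parents + offspring
    return sorted(pool, key=fitness, reverse=True)[:size]


scores = {"p1": 0.2, "p2": 0.8, "o1": 0.5, "o2": 0.9}
print(fitness_based_replacement(["p1", "p2"], ["o1", "o2"], scores.get, size=2))  # ['o2', 'p2']
```

Because the previous population is part of the pool, the best individual found so far can never be lost between generations.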

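Finally, Algorithm 1 iterates Lines 4–8 until $G$ generations are completed. Then, the algorithm returns the fittest individual of the last generation (Line 9). Since the fitness evaluation dominates the cost of each generation, the overall execution time of Algorithm 1 is $O(GPn)$, where $P$ is the population size and $n$ is the number of pixels of the training image.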

5. Experimental Results

Experiments were conducted to evaluate the performance of the proposed adaptive filter. The filter was implemented using scikit-image 0.18.3, a Python package that provides a collection of image processing algorithms. Additionally, the evolutionary process (described in Algorithm 1) and its variation operators were implemented using DEAP 1.2.2, an open-source evolutionary computation framework. The experiments were carried out on a Mac with a 3.6 GHz quad-core Intel Core i7 processor, 8 GB of RAM, and macOS 11.5.2.

5.1. Determination of Parameters

The classification model was trained using a 24-bit color version of the popular Lena image. This training image, denoted as $I$ in Section 4, was simultaneously contaminated by the three impulse noise models described in Section 3.1 with a total density of 30%, i.e., 10% for each model. Combining the impulse noise models in a single image provides insight into the algorithm’s ability to handle various noise types and densities, allowing the robustness, generalization capability, and potential practical utility of the proposed method to be evaluated.
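Contaminating a training image can be sketched as below for the two per-channel noise models whose form is standard. The formulation is an assumption, since it depends on the definitions in Section 3.1: each channel value is corrupted independently with probability equal to the density, salt-and-pepper noise sets it to 0 or 255, and uniform noise draws it from {0, ..., 255}. The correlated model is omitted for the same reason. The hypothetical function also returns the ground-truth per-channel mask, which is what a detector's predictions would be compared against.

```python
import random
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]
Mask = List[List[Tuple[bool, bool, bool]]]


def add_impulse_noise(img: Image, density: float, model: str,
                      rng: random.Random) -> Tuple[Image, Mask]:
    """Corrupt each channel value independently with probability `density`."""
    if model not in ("salt_and_pepper", "uniform"):
        raise ValueError(f"unsupported noise model: {model}")
    noisy: Image = []
    mask: Mask = []
    for row in img:
        noisy_row, mask_row = [], []
        for pixel in row:
            values, flags = [], []
            for v in pixel:
                hit = rng.random() < density
                if hit and model == "salt_and_pepper":
                    v = rng.choice((0, 255))  # extreme value
                elif hit:
                    v = rng.randint(0, 255)  # random value over the full range
                values.append(v)
                flags.append(hit)
            noisy_row.append(tuple(values))
            mask_row.append(tuple(flags))
        noisy.append(noisy_row)
        mask.append(mask_row)
    return noisy, mask


rng = random.Random(0)
image = [[(120, 80, 40)] * 4 for _ in range(4)]
# successive 10% passes, one per model (the correlated model is omitted here)
step1, m1 = add_impulse_noise(image, 0.10, "salt_and_pepper", rng)
step2, m2 = add_impulse_noise(step1, 0.10, "uniform", rng)
```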

On the other hand, Table 2 shows the evolutionary settings used by the genetic program during the training process. These settings were estimated through experimentation and validation: a set of preliminary trials was conducted to find the best parameters of Algorithm 1 by considering the tradeoff between running time and effectiveness. However, as with other evolutionary techniques, this approach is subject to the limitations expressed by the no free lunch (NFL) theorem: no single set of evolutionary settings will perform optimally on all possible color digital images.

After model training, a set of trees (representing the individuals of the last generation) was produced. Each of these trees comprises the set of primitives (functions and terminals) described in Section 4.1. The tree identified as the fittest individual is available in a PDF that can be downloaded from https://bitbucket.org/dfajardod/impulse_noise_gpfilter/src/ (accessed on 20 October 2023).

5.2. Benchmarks

Thirty benchmark color images were used as test images for the proposed adaptive filter (GP). Each of these images was perturbed by the three impulse noise models at four different densities (5%, 10%, 15%, and 20%), yielding a total of 360 noisy test images. These noise densities were selected to ensure a more equitable comparison among the filters, since certain filters have been specifically designed for higher noise densities, whereas others may perform more effectively at lower noise levels. By evaluating the filters over a common range of noise densities, we aim to conduct a comprehensive and balanced assessment. In this vein, the comparison encompassed a diversity of robust and adaptive filters, some of which are widely used in digital image processing, whereas others use advanced adaptive methods. Filters that use fuzzy logic tools to adapt to local features were included, such as the fuzzy metric peer-group filter (FMPGF) and the noise adaptive fuzzy switching median (NAFSM) filter. The decision-based algorithm for removing impulse noise (DBAIN), a filter designed for highly corrupted color images, was also used. Given their performance, the vector median filter (VMF) and the generalized synthesis and analysis prior algorithm (GSAPA) were also considered. Finally, a deep learning approach, the impulse detection convolutional neural network (IDCNN), was included. The performance metrics used to compare the quality of the images produced by these filters were the MAE, PSNR, SSIM, and FSIM (described in Section 3.2).

5.3. Performance of the Detection Stage

Experiments were conducted to measure the performance of the binary classification model implemented in the detection stage of the proposed GP filter. To this end, the ground-truth locations of the noisy pixels were recorded by comparing the 360 noisy test images with their noiseless originals. Then, the detection stage of the proposed GP filter was applied to classify pixels as noisy or noiseless. Finally, comparing the model predictions with the recorded ground truth yielded a confusion matrix containing the true positive (tp), true negative (tn), false positive (fp), and false negative (fn) predictions. The following performance metrics were computed from these predictions: accuracy, precision, recall, specificity, and F1 score.

Accuracy (Acc.) indicates the extent to which the predicted condition of the pixels agreed with their real condition, i.e., Acc = (tp + tn)/(tp + tn + fp + fn). Precision (Prec.) is the fraction of pixels classified as noisy that are actually noisy, computed as tp/(tp + fp). Recall (or sensitivity) is the fraction of noisy pixels that were correctly identified, calculated as tp/(tp + fn). Similarly, for noiseless pixels, specificity (Spec.) is calculated as tn/(tn + fp). Finally, the F1 score is the harmonic mean of precision and recall, i.e., F1 = 2·(Prec·Recall)/(Prec + Recall).
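These metrics can be computed from flattened ground-truth and predicted masks (for instance, one boolean per pixel channel) with a short sketch such as the following; the function name and the convention of returning 0.0 when a denominator is zero are assumptions.

```python
from typing import Dict, Sequence


def detection_metrics(truth: Sequence[bool], pred: Sequence[bool]) -> Dict[str, float]:
    """Confusion-matrix metrics for a binary noisy/noise-free classification."""
    if len(truth) != len(pred):
        raise ValueError("truth and pred must have the same length")
    tp = sum(t and p for t, p in zip(truth, pred))
    tn = sum(not t and not p for t, p in zip(truth, pred))
    fp = sum(not t and p for t, p in zip(truth, pred))
    fn = sum(t and not p for t, p in zip(truth, pred))

    def ratio(num: int, den: int) -> float:
        return num / den if den else 0.0  # convention for empty denominators

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "specificity": ratio(tn, tn + fp),
        "f1": 2 * precision * recall / (precision + recall) if precision + recall else 0.0,
    }


truth = [True, True, False, False, True]
pred = [True, False, False, True, True]
print(detection_metrics(truth, pred))
```

In this toy example tp = 2, tn = 1, fp = 1, and fn = 1, so precision and recall are both 2/3 and their harmonic mean, the F1 score, is also 2/3.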

Table 3 shows the average results of the performance metrics for the proposed binary classification model. As can be observed, the accuracy, precision, and F1 score of the model generally increased with the noise density of the image, whereas the recall (sensitivity) and specificity decreased. Note that the model is more effective at detecting noisy pixels than noiseless ones. Finally, although the model achieved a better balance between precision and recall for salt-and-pepper noise, better accuracy was obtained for uniform and correlated noise.

Since the correction stage of the proposed GP filter consists of a modified version of the VMF (see Section 4), the average performance comparisons shown in the following section allow us to determine the influence of the detection stage on the efficiency of the GP filter.

5.4. Performance of the Proposed Filter

Table 4 and Table 5 show the average results of the quality metrics obtained after filtering the test images to remove each of the impulse noise models: salt and pepper, uniform, and correlated. Table 4 presents the average values of the MAE and PSNR, whereas Table 5 shows those corresponding to the SSIM and FSIM.

As observed in Table 4 and Table 5, the proposed GP filter generally ranked second in average values across all quality metrics (MAE, PSNR, SSIM, and FSIM) for the uniform and correlated noise models, closely following the IDCNN filter. This suggests that the GP filter performed well overall compared to the other filters, particularly for these noise models. Regarding salt-and-pepper noise, the DBAIN filter excelled across all quality metrics, closely followed by the NAFSM and IDCNN filters. This behavior can be attributed to their specialization in detecting and removing this noise model. However, the competitiveness of the NAFSM and DBAIN filters declined for uniform and correlated noise. Furthermore, the differences in the magnitude of their average values for these noise models were substantial and became more pronounced as the noise density increased, revealing a specialization that might not generalize well to other noise types. The IDCNN filter, based on a deep learning model, exhibited robustness to variations in the scale, orientation, and lighting conditions of color images, demonstrating its adaptability to different image textures and noise models. The VMF, on the other hand, produced images with notably lower quality values. This is because it does not differentiate between pixels according to whether or how they are corrupted by noise, resulting in excessive blurring in images with large contrasting regions. Finally, the proposed GP filter generally outperformed the GSAPA and FMPGF filters across all noise models, demonstrating competitive performance and ranking consistently well compared to other filters.

The average results presented in

Table 4 and

Table 5 encompass a diverse set of 360 test color images with different features such as size, shape, color texture, light conditions, edge characteristics, etc. The complete set of resulting filtered images, along with their original sizes and denoised versions, is available in the

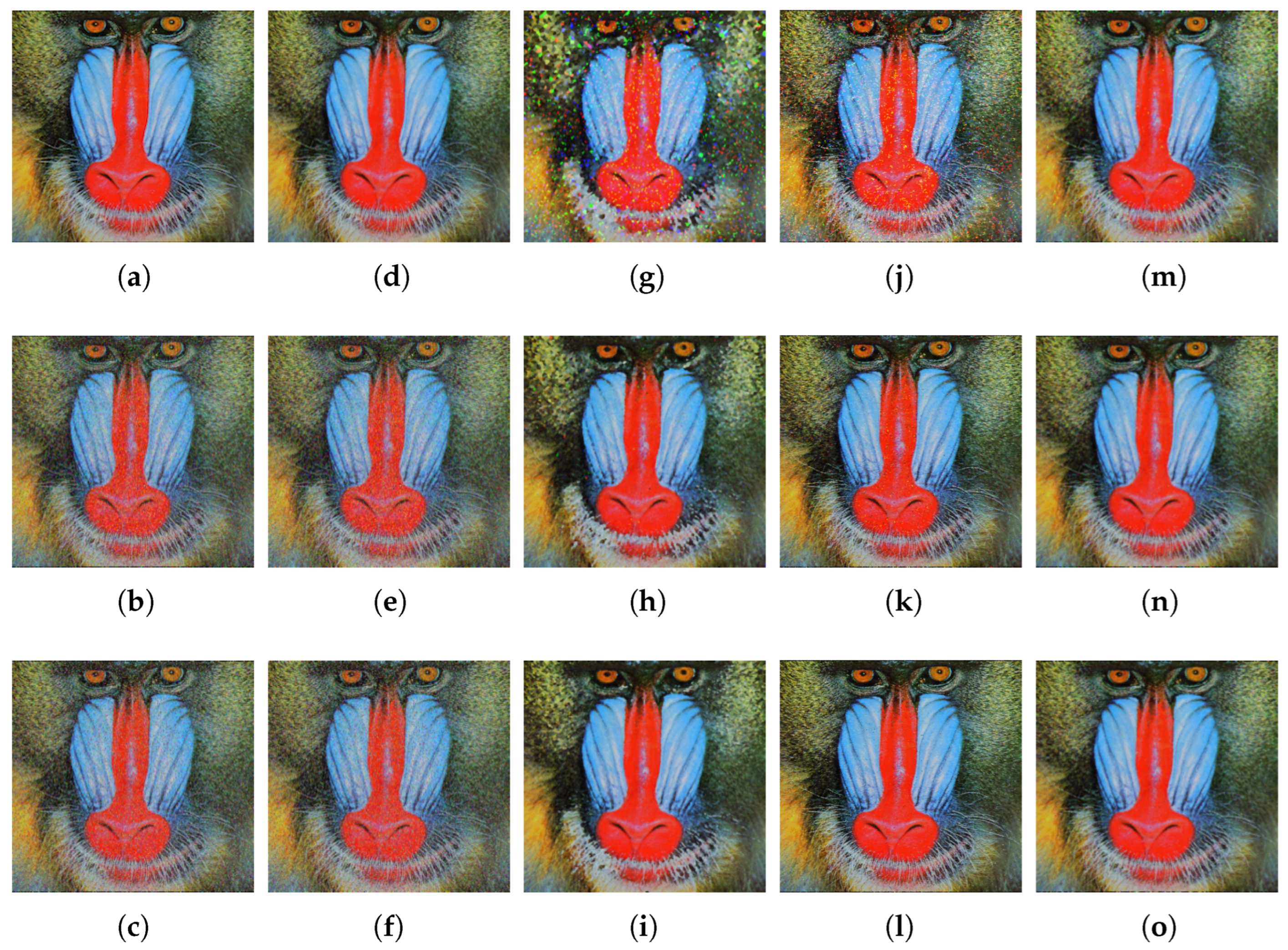

Supplementary Materials. However, four test images with different distinguishable characteristics were selected for illustrative purposes to visually analyze the filters’ efficiency: Baboon, Goldhill, Pepper, and Caps.

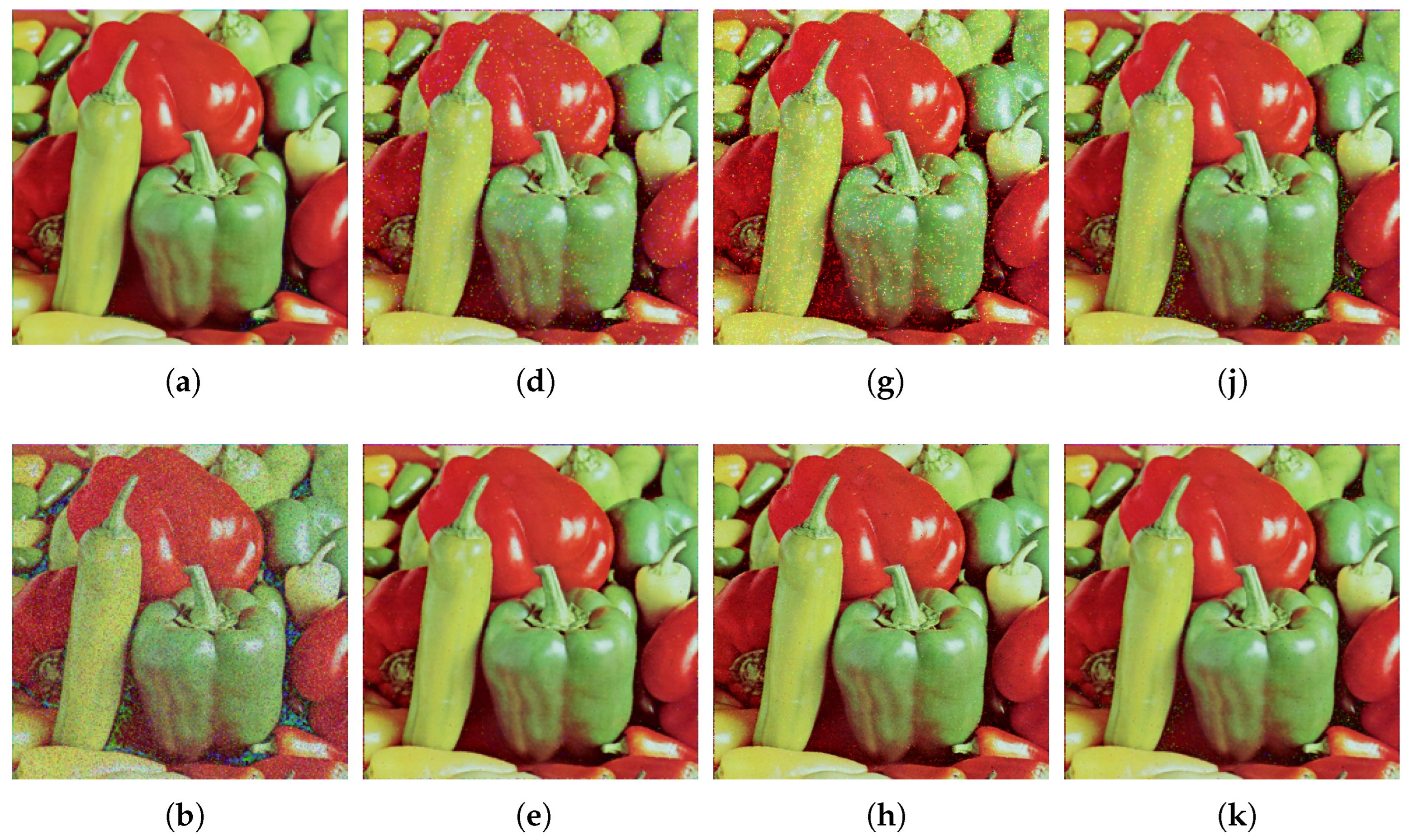

Figure 3 shows the visual results of some of the comparison filters applied to the Baboon image, considering the salt-and-pepper, uniform, and correlated noise models from top to bottom, respectively. Since the Baboon image has more pronounced and larger textured areas than the other test images, robust filters such as the VMF performed poorly on it; for this reason, the images produced by this filter are omitted from the figure. Among the filtered images, Figure 3a most closely resembles the image before noise was added. On the other hand, some filtered images show additional artifacts, e.g., Figure 3g. The IDCNN filter (Figure 3j–l) and the proposed GP filter (Figure 3m–o) excelled in noise removal while preserving image details across the three noise models.

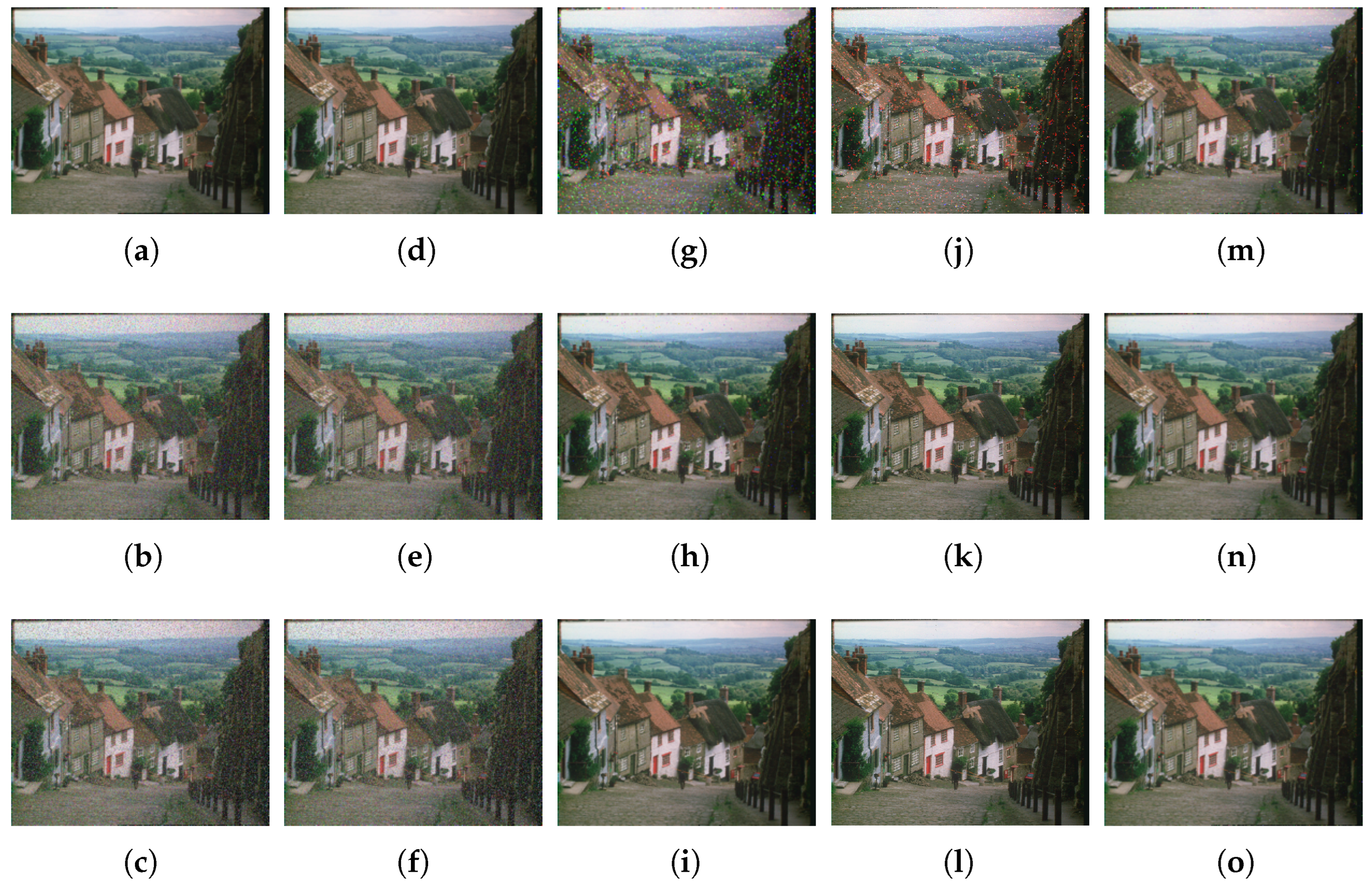

Figure 4 shows the images produced by some of the comparison filters applied to the Goldhill image, arranged by the three noise models from top to bottom. The Goldhill image has color tonalities similar to those of the Baboon image but contains a few smooth areas and complex geometric patterns, resulting in significant contrast differences. For this reason, the filtered images shown here exhibit visual behavior similar to that of the filtered Baboon images. As observed, the NAFSM filter performed best for salt-and-pepper noise, producing an image very close to the one before noise was added. Although the IDCNN filter (Figure 4j–l) excelled in noise removal while preserving image details for uniform and correlated noise, the proposed GP filter (Figure 4m–o) maintained consistent performance across all three noise models. In general, the proposed GP filter ranked second for uniform noise and achieved some of the best MAE values for correlated noise.

Figure 5 and Figure 6 show the filtered images produced by some of the filters applied to the Peppers and Caps images, respectively, including the VMF (which does not appear in Figure 3 and Figure 4). Unlike in the previous images, the VMF achieves very competitive results here across the three noise models, even producing some of the best images for uniform and correlated noise. This is because these images have large, smooth regions with clearly contrasting boundaries, where the VMF's indiscriminate smoothing causes little loss of detail. The VMF, IDCNN, and proposed GP filters were generally competitive on these test images for both the uniform and correlated noise models.

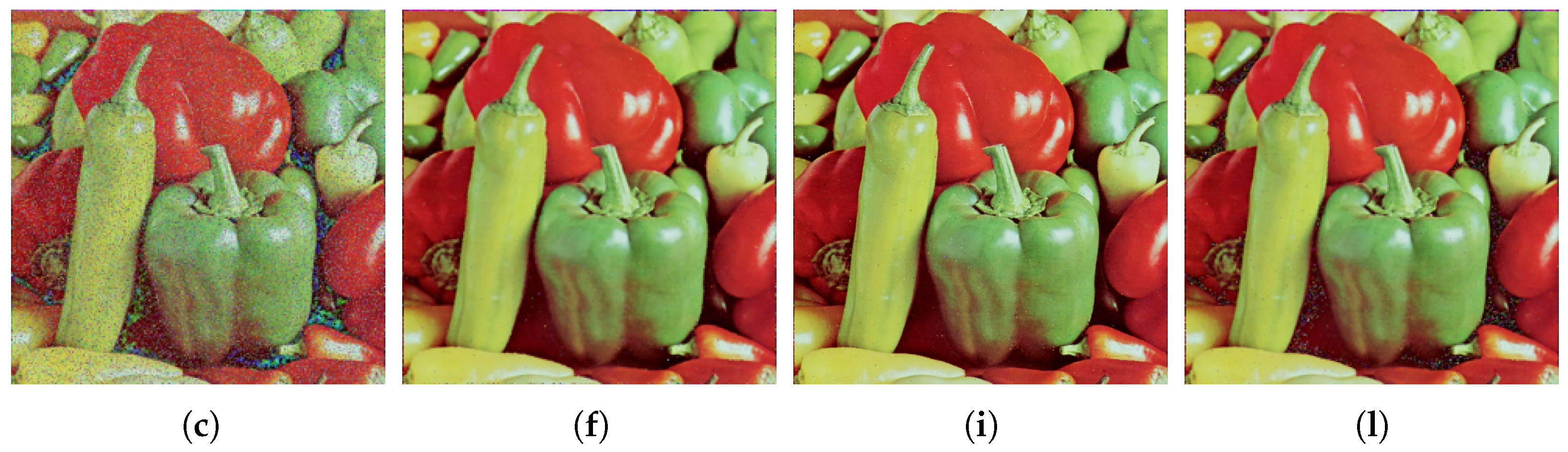

5.5. Discussion

In this context, overall performance depends on the capacity of a filter to simultaneously detect and remove any impulse noise model (salt-and-pepper, uniform, or correlated) without a preference or specialization. Therefore, the filtering process for each noise model can be seen as one objective function of a multi-objective problem, where each objective function depends on the image quality metric used. Since a lower MAE value means fewer lost details in the image, searching for the optimum performance with respect to the MAE is a multi-objective minimization problem. Conversely, the PSNR, SSIM, and FSIM each define a multi-objective maximization problem (see Section 3.2).
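Written out with notation introduced here for illustration (it is not taken from Section 3.2), the formulation for a set of comparison filters F and the noise models sp (salt-and-pepper), u (uniform), and c (correlated) at a fixed noise density reads:

```latex
\min_{f \in \mathcal{F}} \;
\mathbf{J}_{\mathrm{MAE}}(f) =
\left( \overline{\mathrm{MAE}}_{\mathrm{sp}}(f),\;
       \overline{\mathrm{MAE}}_{\mathrm{u}}(f),\;
       \overline{\mathrm{MAE}}_{\mathrm{c}}(f) \right),
\qquad
\max_{f \in \mathcal{F}} \;
\mathbf{J}_{Q}(f) =
\left( \overline{Q}_{\mathrm{sp}}(f),\;
       \overline{Q}_{\mathrm{u}}(f),\;
       \overline{Q}_{\mathrm{c}}(f) \right),
\quad Q \in \{\mathrm{PSNR}, \mathrm{SSIM}, \mathrm{FSIM}\}
```

where the overline denotes the average of a metric over all test images filtered by f under the given noise model. In the minimization case, a filter f dominates a filter g when each component of J(f) is less than or equal to the corresponding component of J(g) and at least one is strictly smaller; the inequalities are reversed for the maximization case.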

Figure 7, Figure 8, Figure 9 and Figure 10 illustrate the extent to which the performance of the comparison filters deviates from a hypothetical optimum, also known as an equilibrium point in the context of multi-objective optimization [45]. In this work, the equilibrium point represents the best values obtained simultaneously for each corresponding noise model.
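The equilibrium point can be computed directly from the averaged scores. The sketch below assumes that each filter is summarized by one averaged metric value per noise model and measures deviation with the Euclidean distance; the figures report these deviations graphically, so the choice of distance is our assumption, and the function names and test values are hypothetical.

```python
from math import sqrt
from typing import Dict, Tuple

Vector = Tuple[float, ...]  # one averaged metric value per noise model (sp, u, c)


def equilibrium_point(scores: Dict[str, Vector], minimize: bool) -> Vector:
    """Best value per noise model across all filters (the hypothetical optimum)."""
    pick = min if minimize else max
    columns = zip(*scores.values())  # group values by noise model
    return tuple(pick(col) for col in columns)


def distance_to_equilibrium(scores: Dict[str, Vector], minimize: bool) -> Dict[str, float]:
    """Euclidean distance of each filter's score vector from the equilibrium point."""
    ideal = equilibrium_point(scores, minimize)
    return {
        name: sqrt(sum((v - z) ** 2 for v, z in zip(vec, ideal)))
        for name, vec in scores.items()
    }
```

Use `minimize=True` for the MAE and `minimize=False` for the PSNR, SSIM, and FSIM. Note that the equilibrium point generally combines values from different filters, so no single filter needs to attain it.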

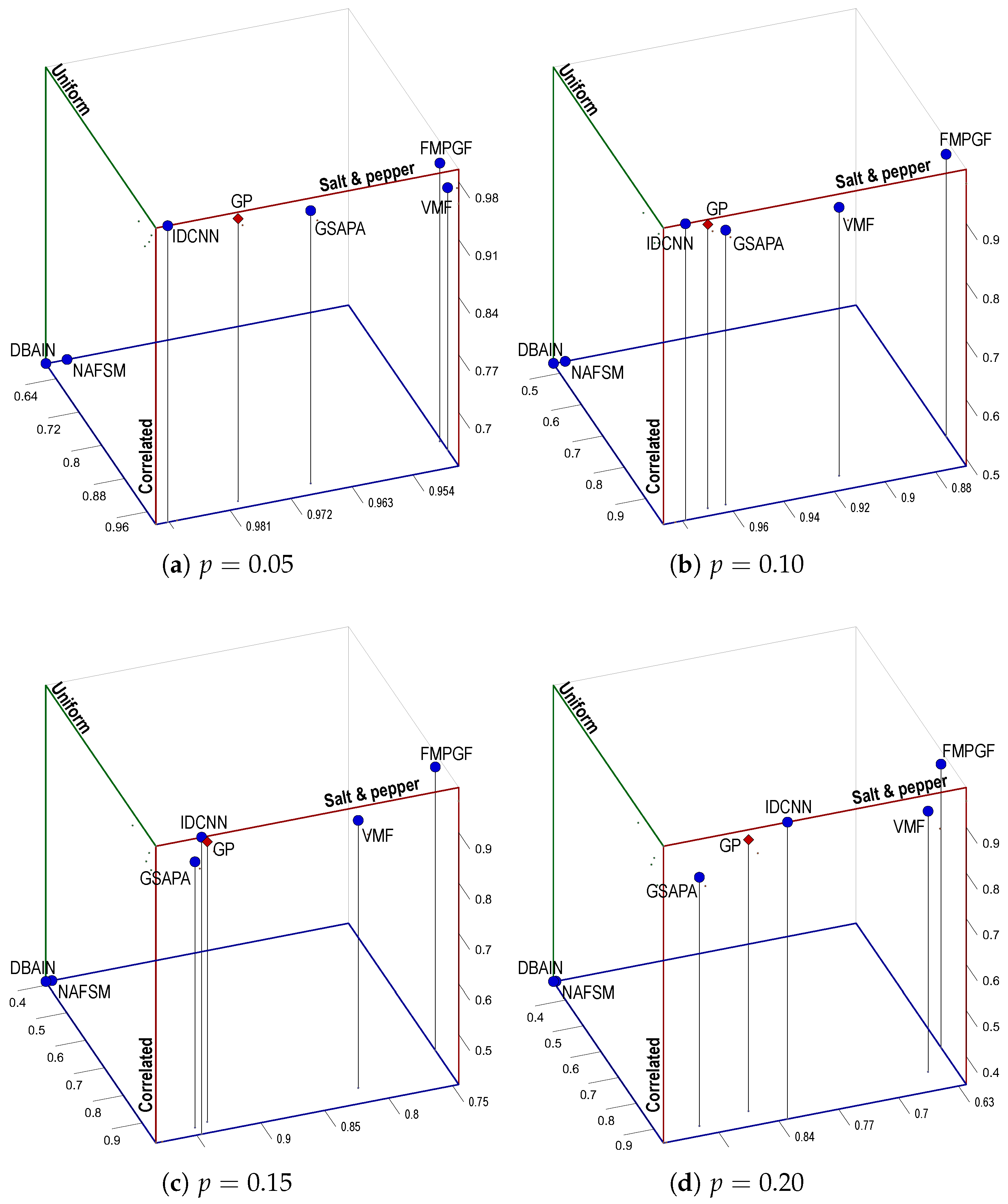

Figure 7 presents a three-dimensional Pareto front for the MAE average values, considering the complete set of test images with noise densities

and

. As can be observed, the MAE average values of the proposed GP filter (indicated by a red diamond) are among the closest to the equilibrium point for all noise densities, on par with the IDCNN and GSAPA filters. Given their specialization in salt-and-pepper noise, the NAFSM and DBAIN filters move away from the equilibrium point for the other two noise models as the noise density increases. The average values of these two filters lie at a two-dimensional extreme point opposite the equilibrium point, at

. Consistent with the results of Table 4, the NAFSM and DBAIN filters are the furthest from the optimum.
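Reading Figure 7 as a Pareto front amounts to asking which filters are not dominated by any other filter. A minimal sketch, assuming each filter is summarized by a vector of averaged metric values, one per noise model (function names and test values are hypothetical):

```python
from typing import Dict, List, Tuple

Vector = Tuple[float, ...]


def dominates(a: Vector, b: Vector, minimize: bool = True) -> bool:
    """True if `a` is at least as good as `b` in every objective and strictly better in one."""
    if not minimize:
        # Negating turns a maximization comparison into a minimization one.
        a, b = tuple(-x for x in a), tuple(-x for x in b)
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(scores: Dict[str, Vector], minimize: bool = True) -> List[str]:
    """Names of filters whose score vectors are not dominated by any other filter."""
    return [
        name
        for name, vec in scores.items()
        if not any(
            dominates(other, vec, minimize)
            for other_name, other in scores.items()
            if other_name != name
        )
    ]
```

With `minimize=False`, the same test applies to the PSNR, SSIM, and FSIM fronts in Figure 8, Figure 9 and Figure 10.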

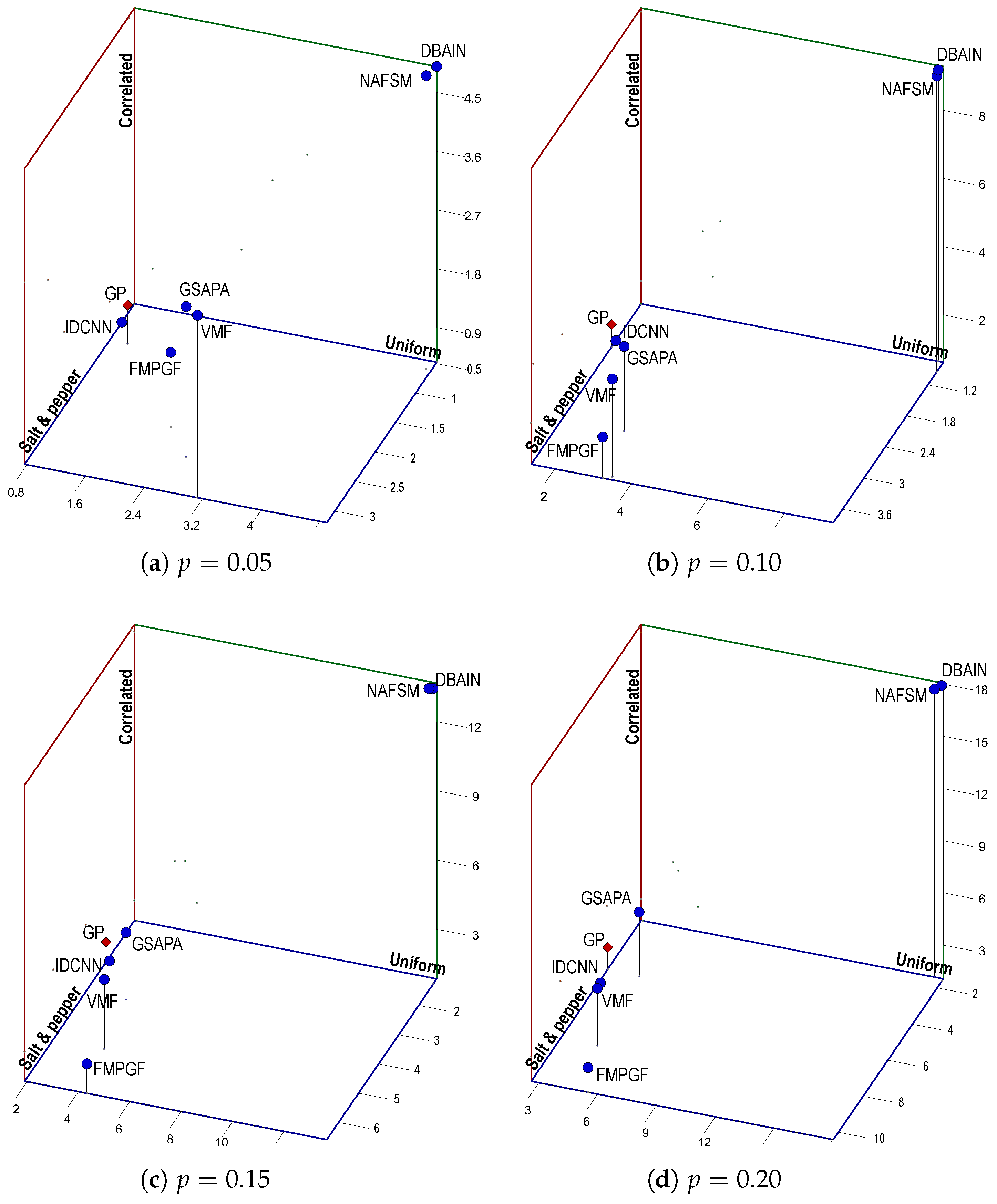

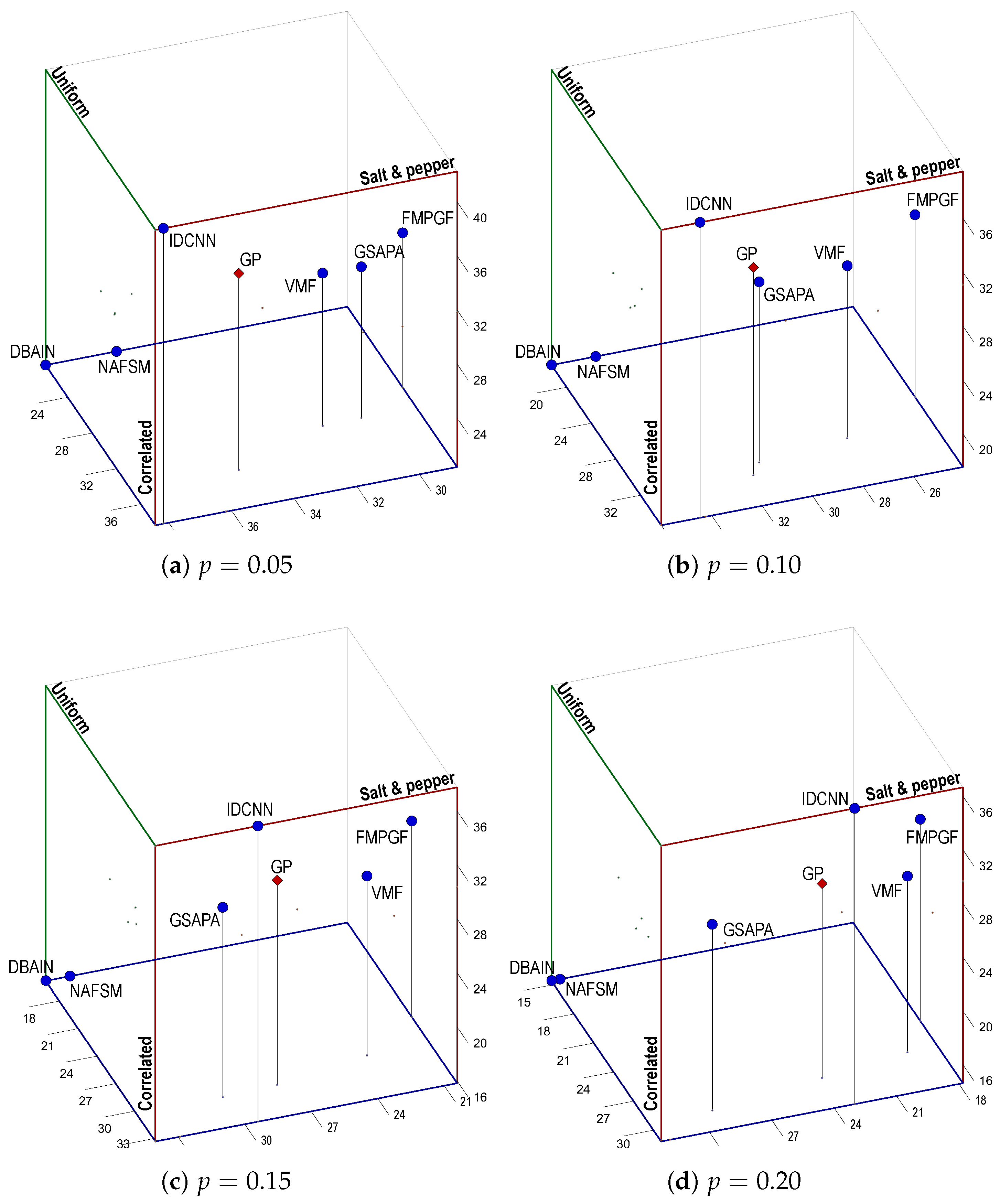

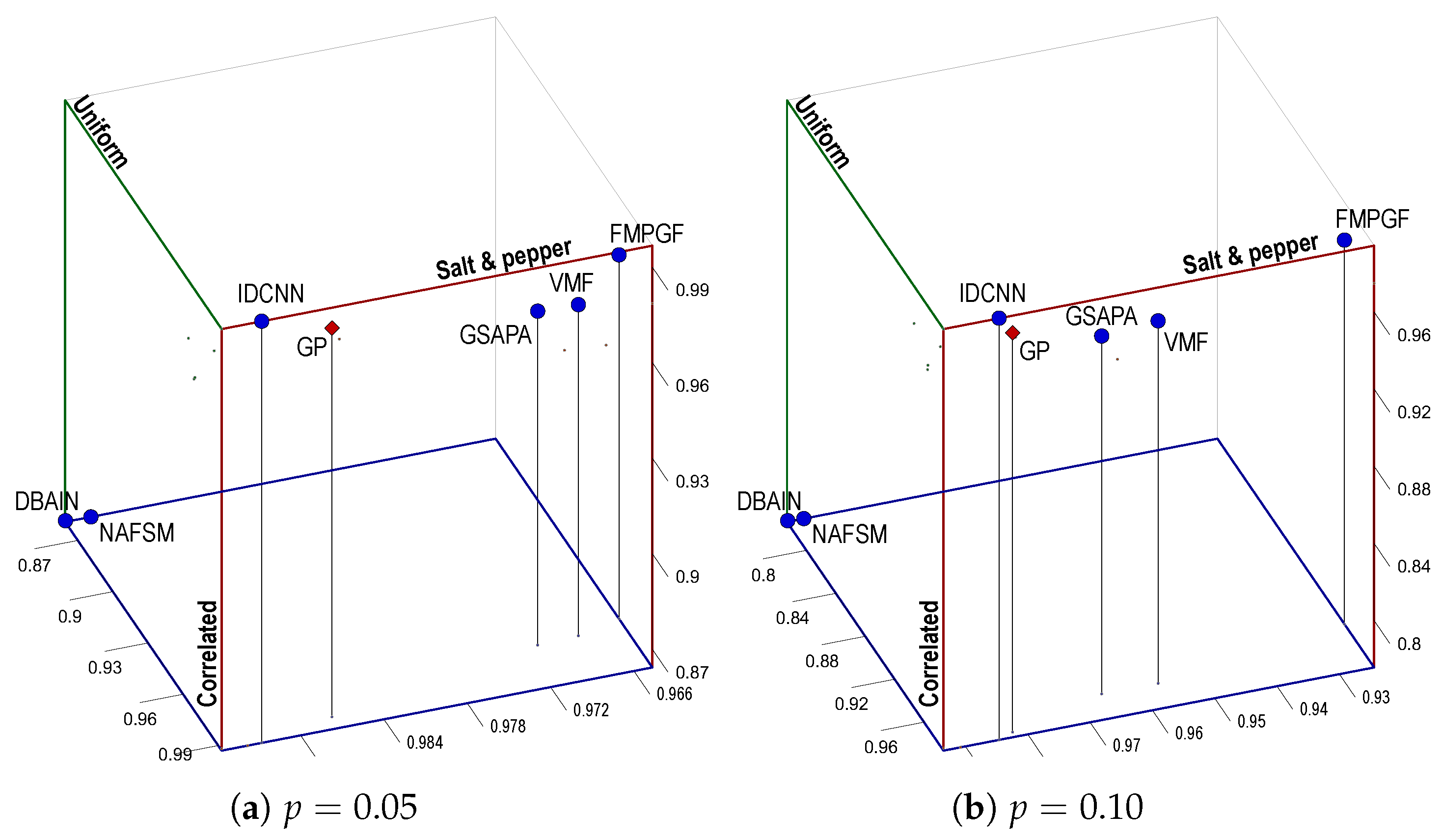

Similar to the behavior shown in Figure 7 but oriented in the opposite direction, Figure 8 depicts the three-dimensional Pareto front for the PSNR average values. For this metric, the IDCNN filter achieves the best average values for the multi-objective maximization problem, followed by the GP filter, for

and

. However, it is unclear which of the IDCNN, GP, and GSAPA filters has average values second closest to the equilibrium point for

and

. It is also hard to determine which of the filters yields the furthest average values from the optimum. Unlike in

Figure 7, the DBAIN is in a two-dimensional extreme point concerning the equilibrium point across all noise densities, followed by the NAFSM. Similarly to

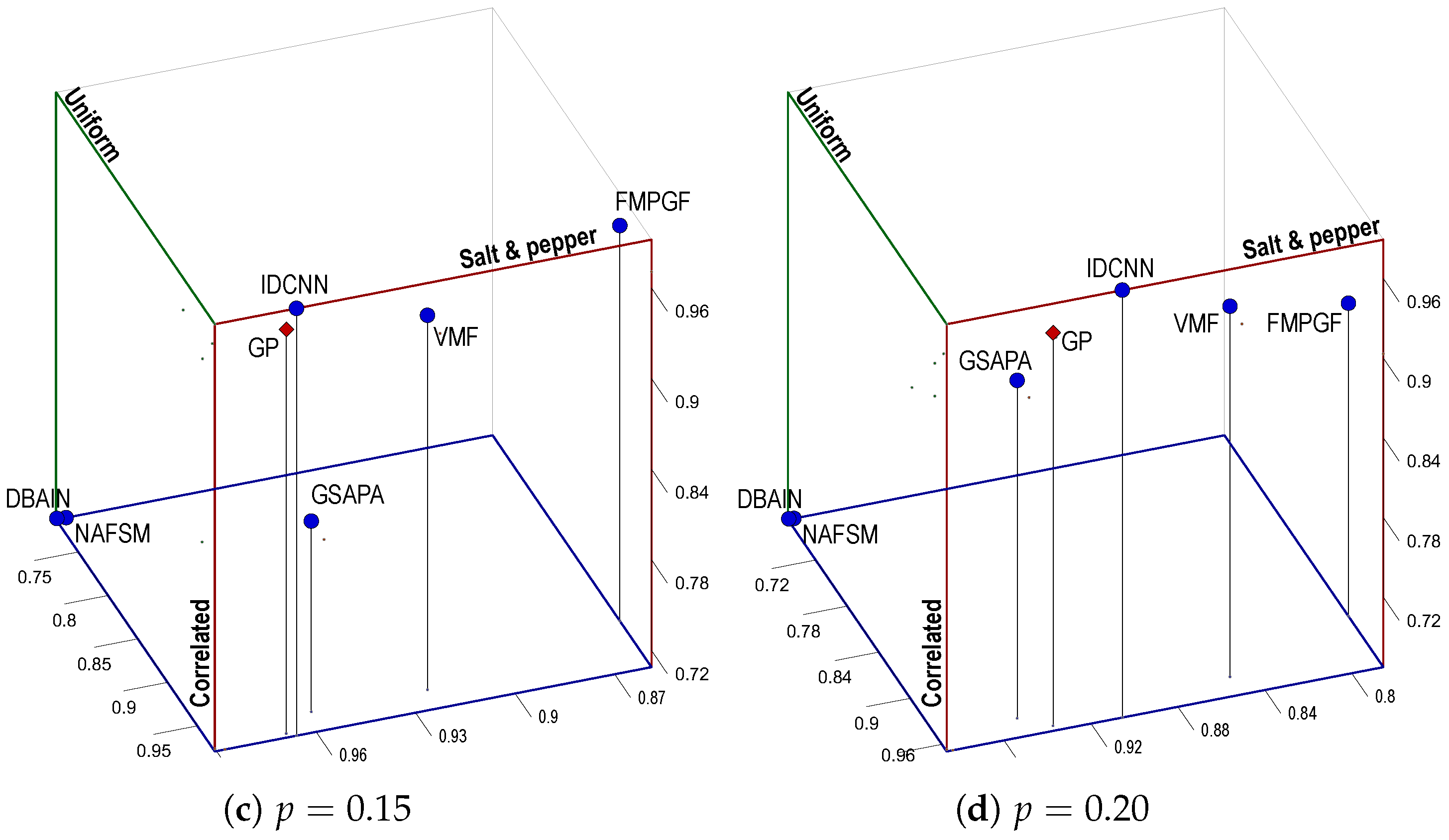

Figure 8, but with normalized values,

Figure 9 and

Figure 10 present the three-dimensional Pareto fronts for the SSIM and FSIM average values, respectively.

6. Conclusions and Future Work

This work presents an adaptive filter designed to remove three prevalent types of impulse noise, namely salt-and-pepper, uniform, and correlated noise, from color digital images. The proposed filter employs a two-step process: a binary classification model first identifies noisy pixels within the image, and a correction step tailored to the specific color channels then restores them. What distinguishes the proposed filter from most filters that use classification models for noise removal is its high interpretability. This interpretability results from the evolutionary approach taken during model training, a process rooted in the principles of the genetic programming paradigm. By evolving the model through an iterative genetic programming framework, the resulting filter not only achieves effective noise reduction but also provides insights into the decision-making process of the classification model. Another distinctive feature of the training is that it required only a single image purposefully contaminated by the impulse noise models. Using a single training image was particularly useful for reducing the computational complexity of training.
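The two-step detect-then-correct structure can be sketched as follows. This is not the proposed filter: the evolved GP classification model is replaced by a hand-written placeholder rule (`stand_in_classifier`, with an arbitrary threshold of 60), and the correction step simply takes the median of the non-flagged neighbors in the same channel. Both are hypothetical choices made only to show how detection gates correction when each color channel is processed independently.

```python
from statistics import median
from typing import Callable, List

Channel = List[List[int]]  # one color channel, values in 0..255


def neighbors(ch: Channel, r: int, c: int) -> List[int]:
    """8-connected neighbors of (r, c), clipped at the image border."""
    h, w = len(ch), len(ch[0])
    return [
        ch[rr][cc]
        for rr in range(max(0, r - 1), min(h, r + 2))
        for cc in range(max(0, c - 1), min(w, c + 2))
        if (rr, cc) != (r, c)
    ]


def stand_in_classifier(center: int, neigh: List[int]) -> bool:
    """HYPOTHETICAL placeholder for the evolved GP model: flag a value that
    deviates strongly from the median of its neighborhood."""
    return abs(center - median(neigh)) > 60


def denoise_channel(ch: Channel,
                    is_noisy: Callable[[int, List[int]], bool] = stand_in_classifier) -> Channel:
    """Detect-then-correct on one channel; assumes at least two pixels so
    every neighborhood is non-empty."""
    h, w = len(ch), len(ch[0])
    # Step 1: detection. Build a binary noise map for this channel.
    noisy = [[is_noisy(ch[r][c], neighbors(ch, r, c)) for c in range(w)] for r in range(h)]
    # Step 2: correction. Replace only flagged values, using the median of the
    # neighbors that were NOT flagged (fall back to all neighbors if none are clean).
    out = [row[:] for row in ch]
    for r in range(h):
        for c in range(w):
            if noisy[r][c]:
                clean = [
                    ch[rr][cc]
                    for rr in range(max(0, r - 1), min(h, r + 2))
                    for cc in range(max(0, c - 1), min(w, c + 2))
                    if (rr, cc) != (r, c) and not noisy[rr][cc]
                ]
                out[r][c] = int(median(clean or neighbors(ch, r, c)))
    return out
```

Because only flagged values are changed, an isolated impulse in a flat region is corrected, while a clean two-column edge passes through unchanged, in contrast to a filter that modifies every pixel.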

An experimental study was conducted to evaluate the performance of the proposed adaptive filter, comparing it with other filters using four image quality metrics (PSNR, MAE, SSIM, and FSIM). The experimental results show that the quality metric values of most comparison filters vary depending on the noise model and the image characteristics. In contrast, the proposed filter, called GP in Table 4 and Table 5, consistently achieved good performance values, second only to the IDCNN, which uses a deep learning-based approach. When performance is assessed as a multi-objective minimization/maximization problem, as shown in Figure 7, Figure 8, Figure 9 and Figure 10, the proposed filter is one of the closest to the equilibrium point across all images and noise models used in the experiment, on par with the IDCNN and GSAPA filters.

Future work will focus on the integration of alternative machine learning techniques for the identification and correction of noisy pixels in color digital images. An intriguing avenue of exploration would be predicting the correlation of color channels to facilitate improved pixel replacement strategies within the image. This innovative approach holds promise for further enhancing the robustness and adaptability of the proposed adaptive filter in diverse real-world scenarios. It would also be interesting to explore how the genetic programming algorithm can be fine-tuned or adapted according to user preferences and specific requirements for noise removal in color images. Finally, optimizing the evolutionary settings of the genetic programming algorithm by employing other metaheuristic algorithms or ensemble approaches is also a topic left for future research.