A Safe Admittance Boundary Algorithm for Rehabilitation Robot Based on Space Classification Model

Abstract

1. Introduction

- (1) An algorithm model is proposed that identifies the range of the safe space from the density of neighboring points in the point cloud.

- (2) The workspace of the robot’s end-effector degrees of freedom is restricted in the robot control layer to a reasonable region that follows the shape of the identified safe space.

- (3) The algorithm can improve the safety of human-robot interaction (HRI) for collaborative robots used in upper-limb rehabilitation.
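Contribution (1) relies on the density of neighboring points. The following is a minimal sketch. It assumes that "density" means the number of other points within a fixed radius and that a point counts as part of the safe space once that count reaches a threshold. The names `neighbor_counts`, `classify_by_density`, `radius` and `min_neighbors` are hypothetical. The brute-force O(n²) search stands in for whatever spatial index the authors actually use.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def neighbor_counts(points: Sequence[Point], radius: float) -> List[int]:
    """For each point, count the other points within `radius` (brute force, O(n^2))."""
    r2 = radius * radius
    counts = [0] * len(points)
    for i in range(len(points)):
        px, py, pz = points[i]
        for j in range(i + 1, len(points)):
            qx, qy, qz = points[j]
            # Compare squared distances to avoid a square root per pair
            if (px - qx) ** 2 + (py - qy) ** 2 + (pz - qz) ** 2 <= r2:
                counts[i] += 1
                counts[j] += 1
    return counts


def classify_by_density(points: Sequence[Point], radius: float, min_neighbors: int) -> List[bool]:
    """Hypothetical rule: True (inside the safe space) when a point has at least
    `min_neighbors` neighbors within `radius`; sparse points are rejected."""
    return [c >= min_neighbors for c in neighbor_counts(points, radius)]


if __name__ == "__main__":
    grid = [(i * 0.1, j * 0.1, k * 0.1) for i in range(3) for j in range(3) for k in range(3)]
    cloud = grid + [(5.0, 5.0, 5.0)]  # one isolated outlier
    labels = classify_by_density(cloud, radius=0.11, min_neighbors=2)
    print(sum(labels), labels[-1])  # 27 False
```

In the demo, every point of the 3 × 3 × 3 grid (spacing 0.1) has at least three axis-aligned neighbors within 0.11, so all 27 points are kept. The isolated outlier has no neighbors and is rejected.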

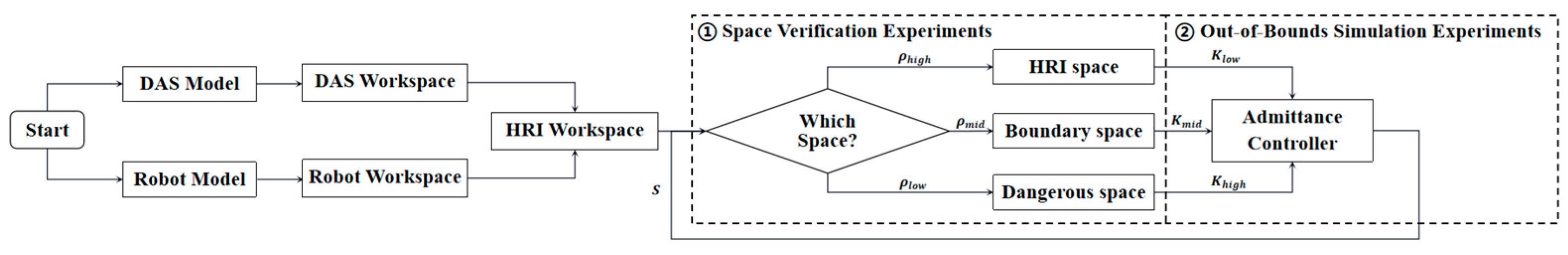

2. Safe Admittance Boundary Algorithm

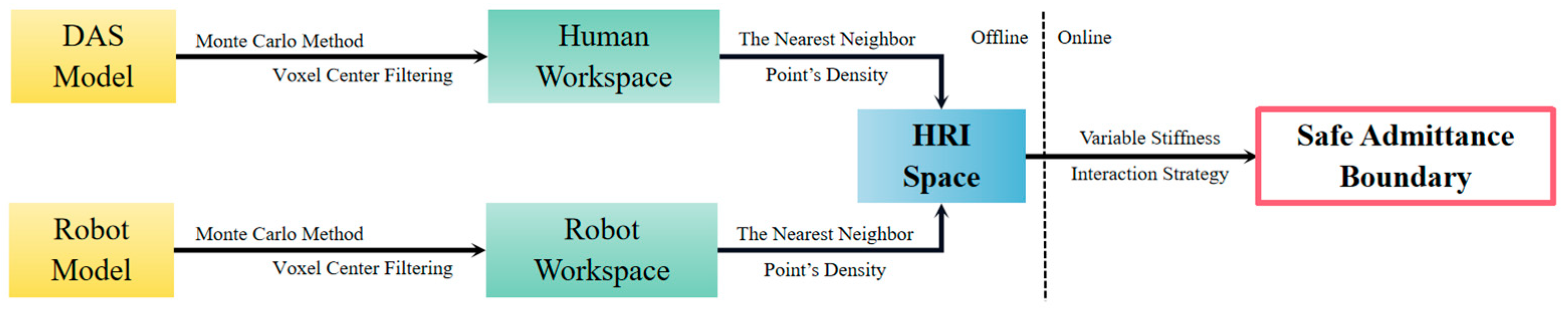

2.1. Overview of the Algorithm

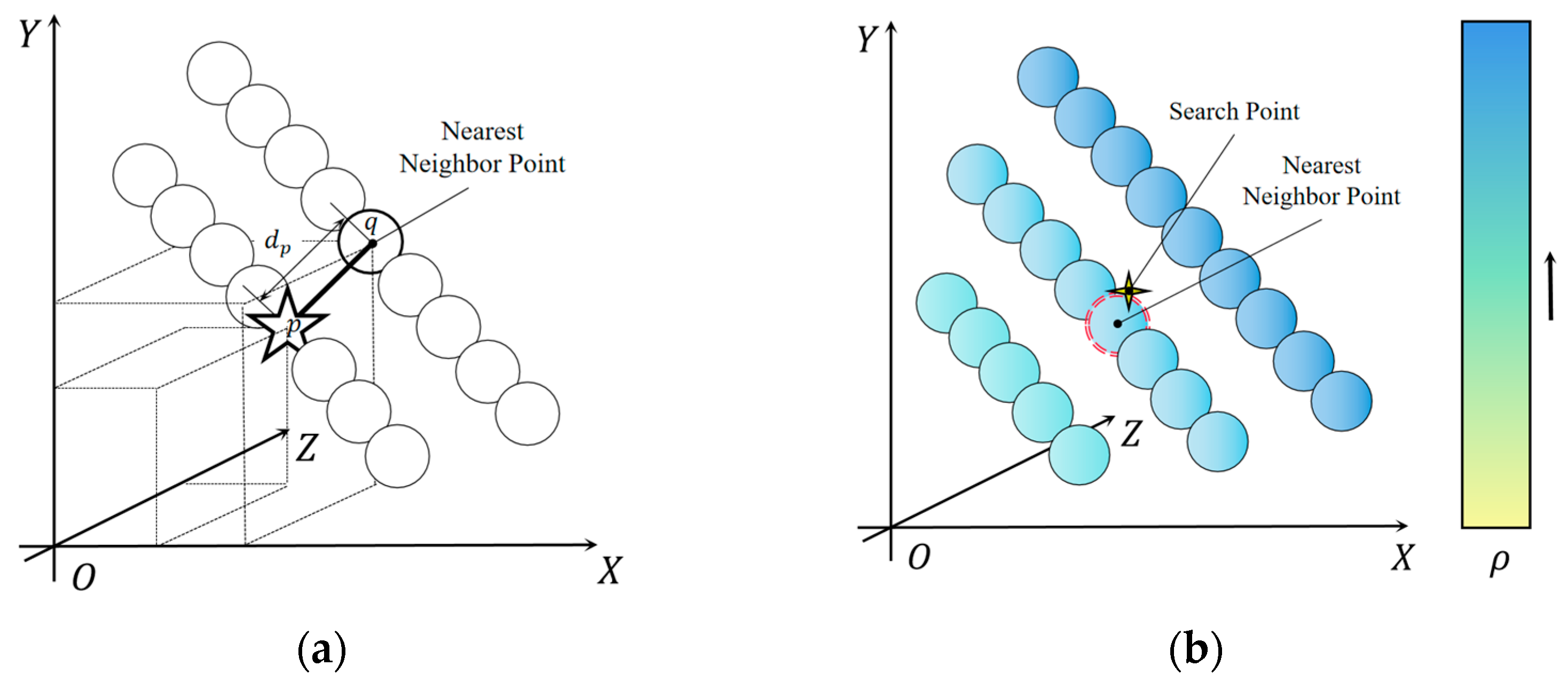

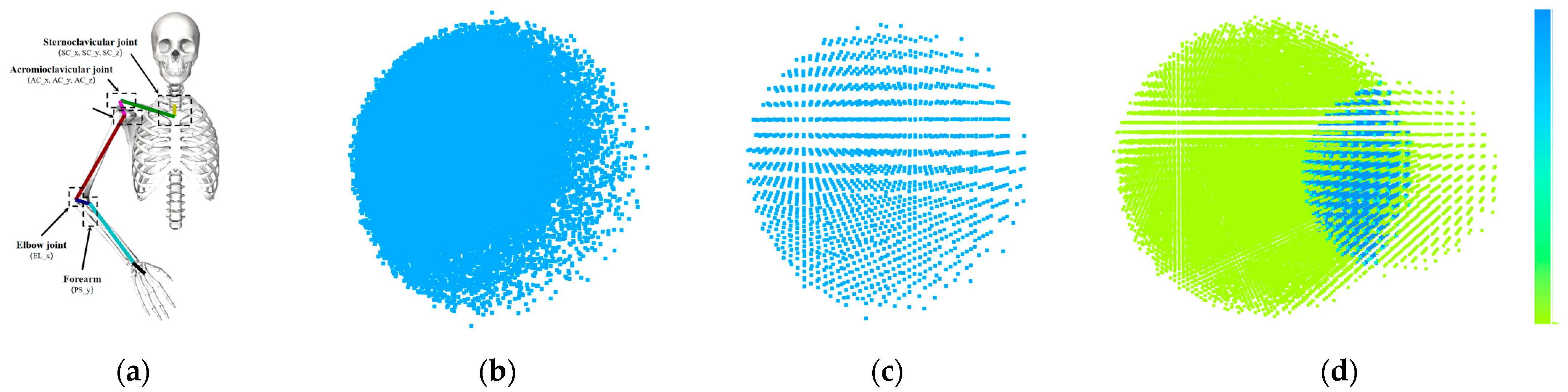

2.2. Point Cloud Isodensification

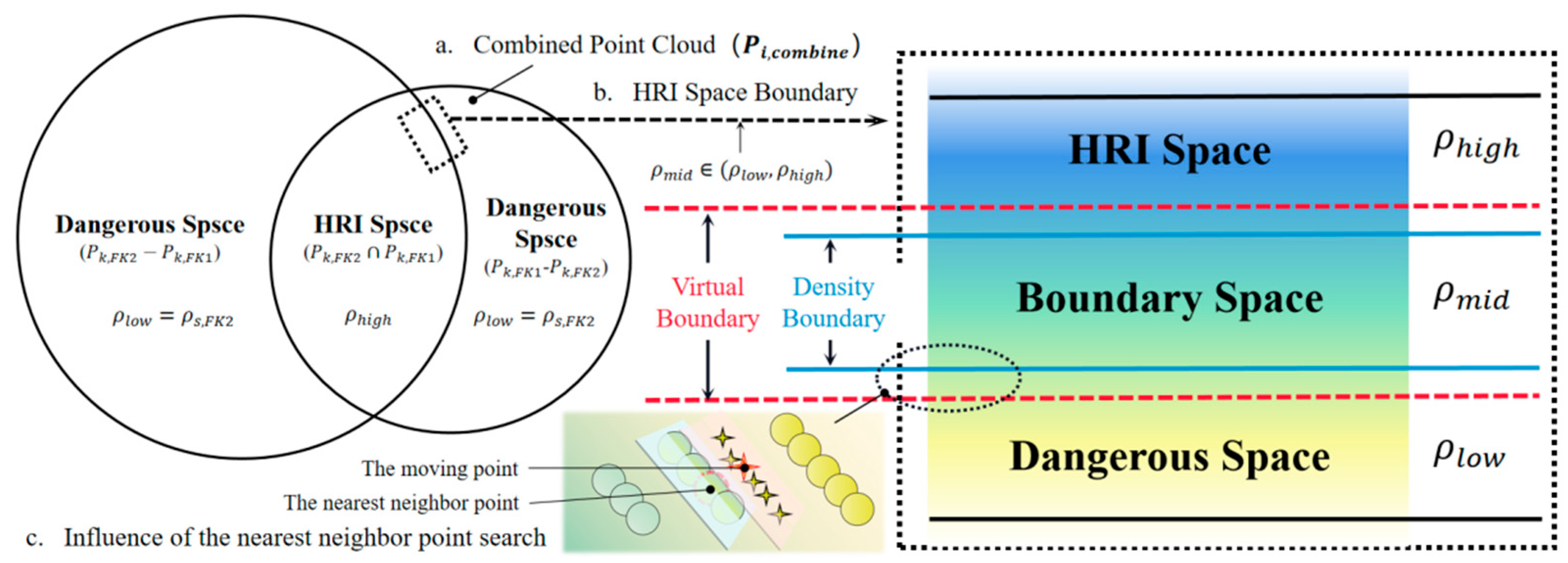
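A neighbor-count criterion is sensitive to how unevenly the raw point cloud is sampled. Suppose isodensification aims to give the cloud a roughly uniform point density. One common way to achieve this is voxel-grid downsampling, sketched below: the points in each occupied cubic voxel of side `voxel_size` are replaced by their centroid. This is a swapped-in standard technique and not necessarily the procedure of Section 2.2. It only thins dense regions and does not fill sparse ones. The function name is hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def voxel_downsample(points: Sequence[Point], voxel_size: float) -> List[Point]:
    """Voxel-grid downsampling: keep one centroid per occupied voxel."""
    buckets: Dict[Tuple[int, int, int], List[Point]] = defaultdict(list)
    for p in points:
        # Integer voxel index along each axis
        key = (math.floor(p[0] / voxel_size),
               math.floor(p[1] / voxel_size),
               math.floor(p[2] / voxel_size))
        buckets[key].append(p)
    out: List[Point] = []
    for key in sorted(buckets):  # sorted only to make the output order deterministic
        group = buckets[key]
        n = len(group)
        out.append((sum(p[0] for p in group) / n,
                    sum(p[1] for p in group) / n,
                    sum(p[2] for p in group) / n))
    return out
```

Two points that share a voxel collapse into their centroid, while a point alone in its voxel is returned unchanged.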

2.3. Space Classification Model

Algorithm 1: Space classification algorithm
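The results table that lists voxel-grid side lengths reports an average judgment time, which suggests that the classified space is queried on a voxel grid at run time. Under that assumption, a minimal sketch stores the integer indices of the voxels that contain safe points in a hash set. The end-effector position is then judged by a single set lookup. `voxel_key`, `build_safe_voxels` and `is_inside_safe_space` are hypothetical names, not the authors' Algorithm 1.

```python
import math
from typing import Iterable, Set, Tuple

Point = Tuple[float, float, float]
VoxelKey = Tuple[int, int, int]


def voxel_key(p: Point, voxel_size: float) -> VoxelKey:
    """Integer index of the cubic voxel containing point p."""
    return (math.floor(p[0] / voxel_size),
            math.floor(p[1] / voxel_size),
            math.floor(p[2] / voxel_size))


def build_safe_voxels(safe_points: Iterable[Point], voxel_size: float) -> Set[VoxelKey]:
    """Offline step: remember every voxel that contains at least one safe point."""
    return {voxel_key(p, voxel_size) for p in safe_points}


def is_inside_safe_space(position: Point, safe_voxels: Set[VoxelKey], voxel_size: float) -> bool:
    """Online step: judge the end-effector position with one average-O(1) set lookup."""
    return voxel_key(position, voxel_size) in safe_voxels
```

A hash-set lookup costs O(1) on average regardless of how many safe voxels are stored. The voxel side length trades spatial resolution at the boundary against the number of voxels that must be stored.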

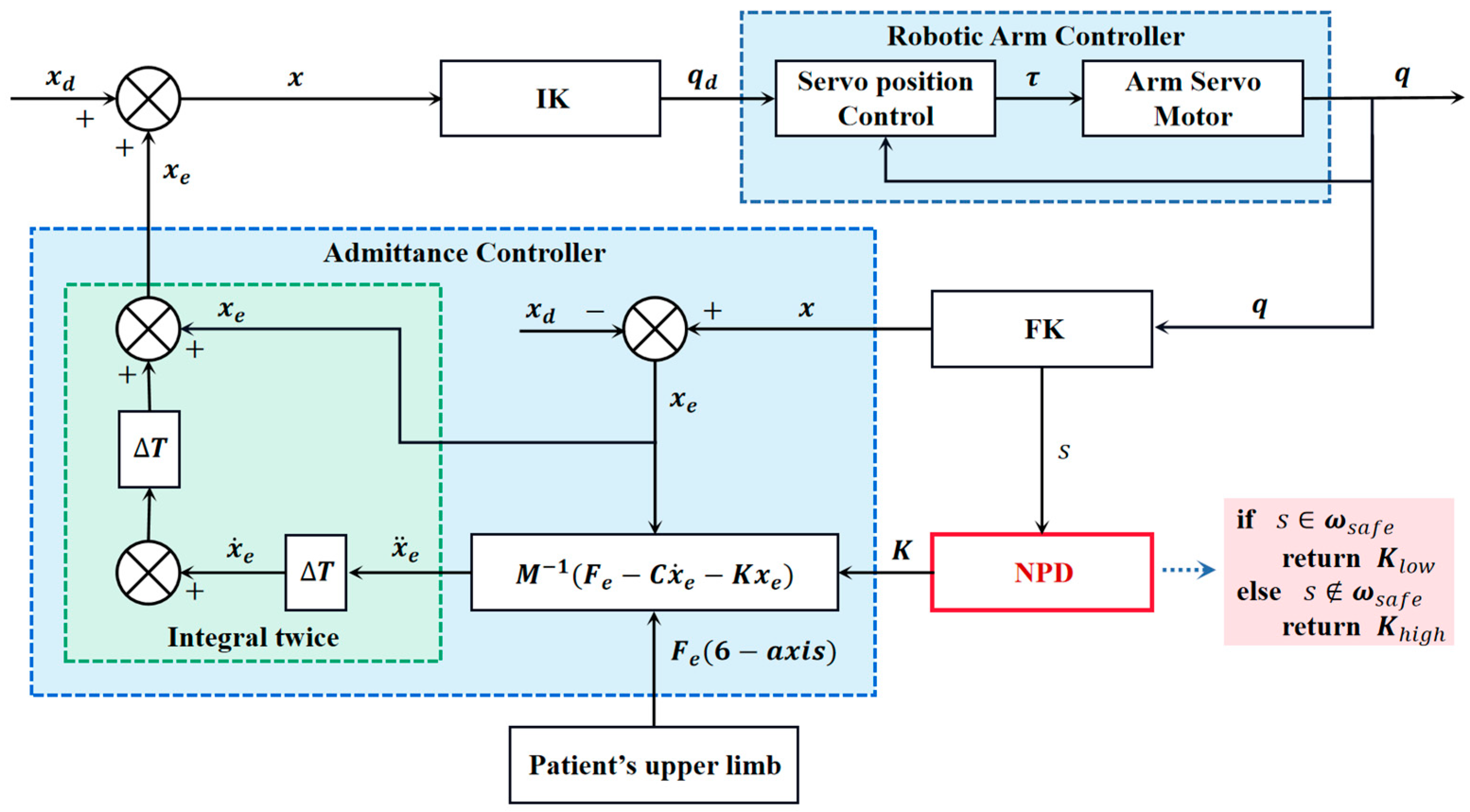

2.4. Variable Stiffness Admittance Strategy

Algorithm 2: Variable stiffness admittance strategy algorithm
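Admittance control maps the measured interaction force to motion through a virtual mass-damper-spring model, M*a + B*v + K*(x - x_b) = F. The following is a hedged one-dimensional sketch of a variable-stiffness strategy. It assumes that the virtual stiffness is zero inside the safe space and grows as the end-effector moves past a boundary x_b, and it integrates the model with semi-implicit Euler. The linear stiffness schedule and all parameter names (`k_max`, `margin`, `mass`, `damping`, `dt`) are hypothetical and are not taken from Algorithm 2.

```python
from dataclasses import dataclass


@dataclass
class AdmittanceState:
    x: float  # end-effector position along one axis (m)
    v: float  # end-effector velocity (m/s)


def stiffness(d: float, k_max: float, margin: float) -> float:
    """Hypothetical schedule: 0 inside the safe space (d <= 0), rising linearly
    to k_max over `margin` metres beyond the boundary, then constant."""
    if d <= 0.0:
        return 0.0
    return k_max * min(d / margin, 1.0)


def admittance_step(state: AdmittanceState, force: float, boundary: float,
                    mass: float, damping: float, k_max: float, margin: float,
                    dt: float) -> AdmittanceState:
    """One semi-implicit Euler step of mass*a + damping*v + K(d)*d = force,
    where d = x - boundary is the penetration past an upper boundary."""
    d = state.x - boundary
    spring = stiffness(d, k_max, margin) * d  # zero while inside the safe space
    a = (force - damping * state.v - spring) / mass
    v = state.v + a * dt  # update velocity first ...
    x = state.x + v * dt  # ... then position with the new velocity
    return AdmittanceState(x, v)
```

Consider a constant 5 N push with mass = 2 kg, damping = 20 N·s/m, k_max = 2000 N/m and a 0.01 m margin. The spring balances the push where 200000·d² = 5, so the end-effector settles about 5 mm past a boundary at 0.1 m. Without the spring, it would keep drifting at F/B = 0.25 m/s.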

3. Experiments

3.1. Experimental Settings

3.1.1. Space Verification Experimental Settings

3.1.2. Out-of-Bounds Simulation Experimental Settings

3.2. Results

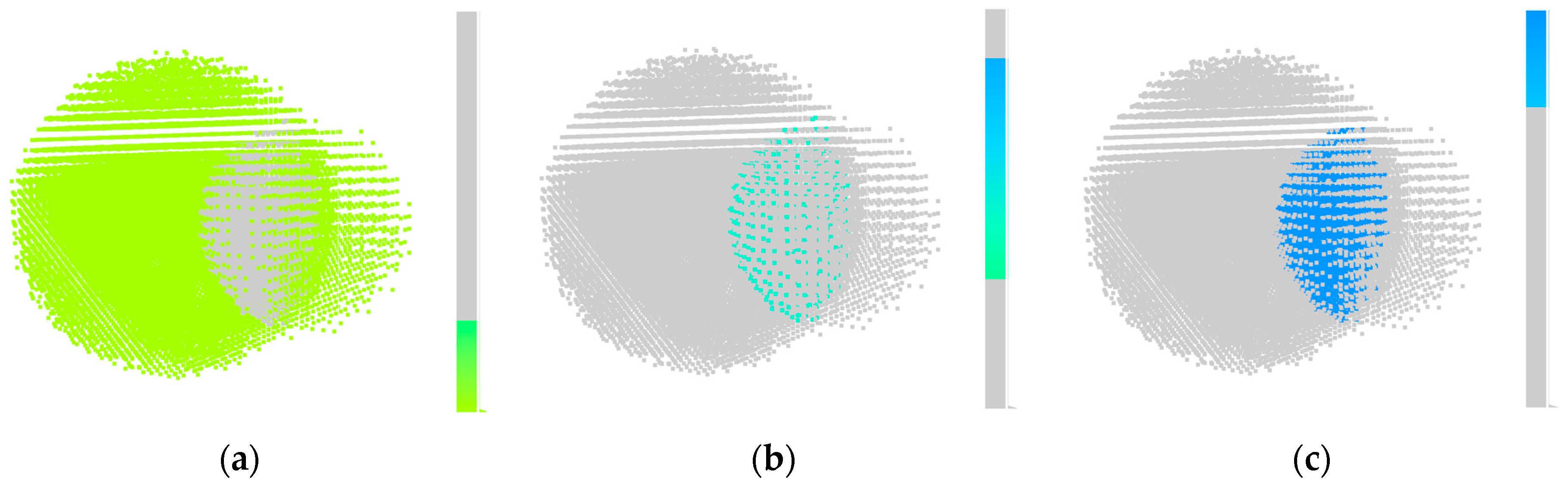

3.2.1. Space Verification Experiments

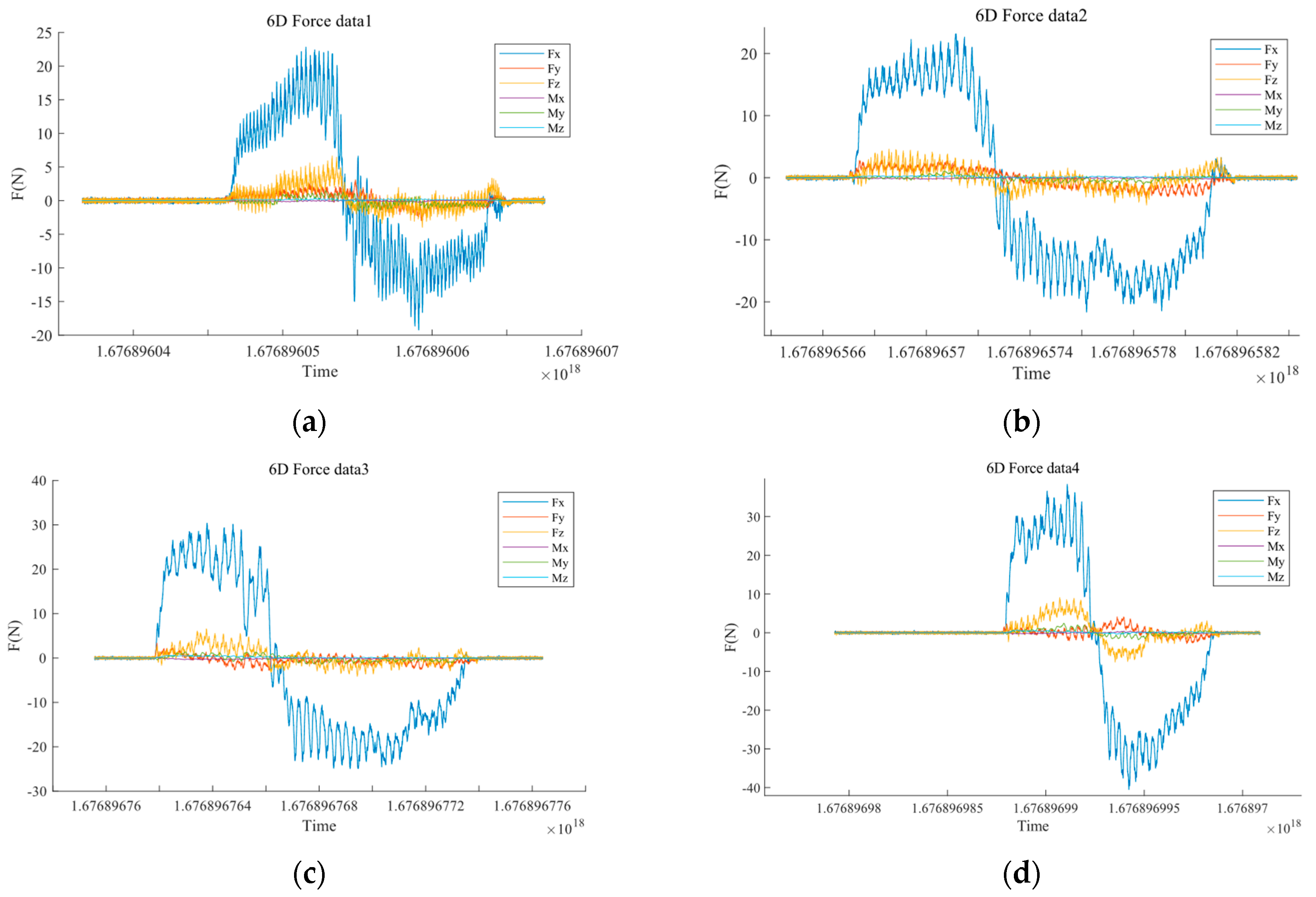

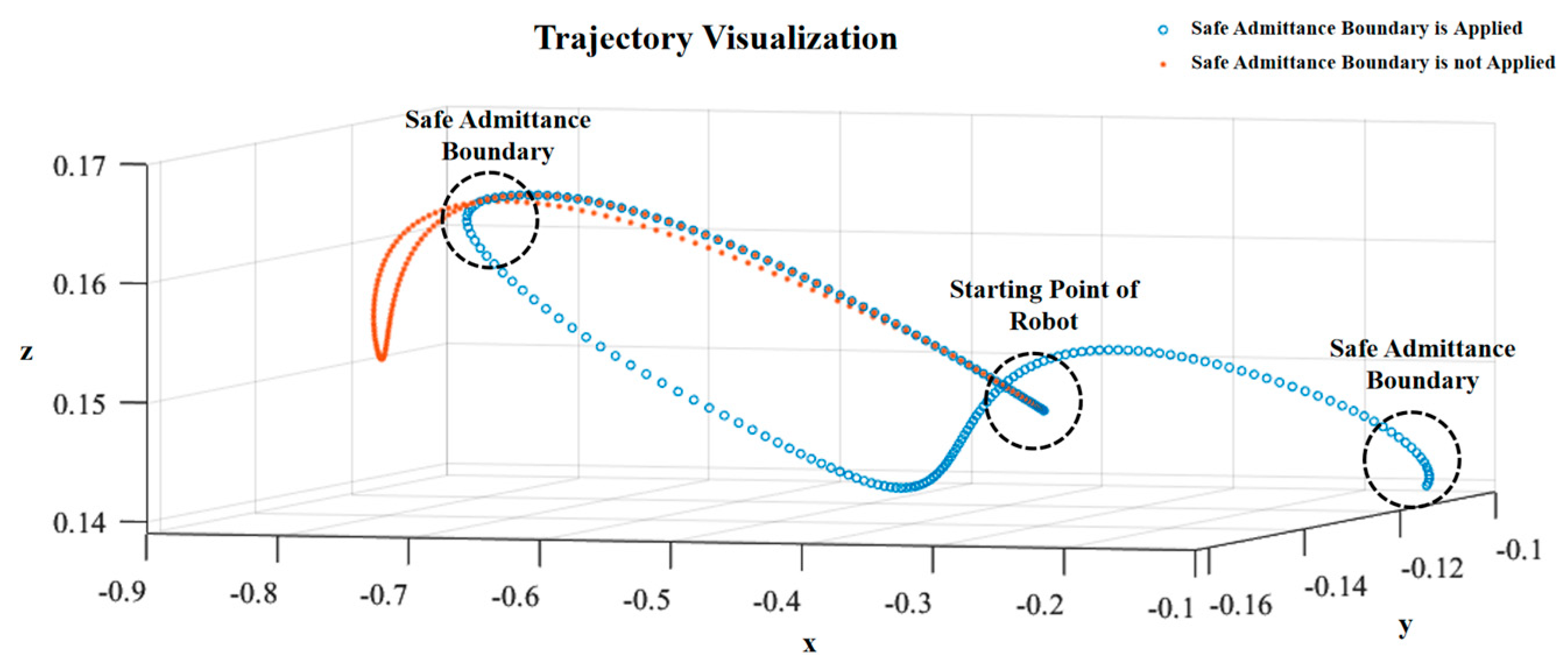

3.2.2. Out-of-Bounds Simulation Experiments

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Side Length of the Voxel Grid (m) | Average Judgment Time (ms) | Success Rate (%) |
|---|---|---|
|  | 11.60 | 99.00 |
|  | 9.98 | 98.80 |
|  | 5.72 | 98.40 |

| Tester Number | Number of Experiments | Maximum Response Time (ms) | Average Response Time (ms) |
|---|---|---|---|
| 1 | 4 | 165.63 | 161.54 |
| 2 | 4 | 162.59 | 159.64 |
| 3 | 4 | 170.84 | 161.91 |
| 4 | 4 | 167.12 | 162.80 |
| 5 | 4 | 161.91 | 158.44 |
| Average | 4 | 165.62 | 160.87 |
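The Average row of the response-time table is the column-wise arithmetic mean over the five testers, and it can be checked directly. Note that the averaged maximum (165.62 ms) is lower than the single largest maximum response time (170.84 ms, tester 3).

```python
from statistics import mean

# Per-tester values in ms, copied from the response-time table (testers 1 to 5)
max_response_ms = [165.63, 162.59, 170.84, 167.12, 161.91]
avg_response_ms = [161.54, 159.64, 161.91, 162.80, 158.44]

# The "Average" row is the mean of each column, rounded to two decimals
mean_of_max = round(mean(max_response_ms), 2)
mean_of_avg = round(mean(avg_response_ms), 2)
worst_case = max(max_response_ms)

print(mean_of_max, mean_of_avg, worst_case)  # 165.62 160.87 170.84
```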

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tao, Y.; Ji, Y.; Han, D.; Gao, H.; Wang, T. A Safe Admittance Boundary Algorithm for Rehabilitation Robot Based on Space Classification Model. Appl. Sci. 2023, 13, 5816. https://doi.org/10.3390/app13095816


Chicago/Turabian Style: Tao, Yong, Yuanlong Ji, Dongming Han, He Gao, and Tianmiao Wang. 2023. "A Safe Admittance Boundary Algorithm for Rehabilitation Robot Based on Space Classification Model" Applied Sciences 13, no. 9: 5816. https://doi.org/10.3390/app13095816
