A Novel Spatiotemporal Analysis Framework for Air Pollution Episode Association in Puli, Taiwan

Abstract

1. Introduction

2. Literature Review

2.1. Air-Pollution Spatiotemporal Analysis

2.2. Video Analysis

3. Proposed Methods

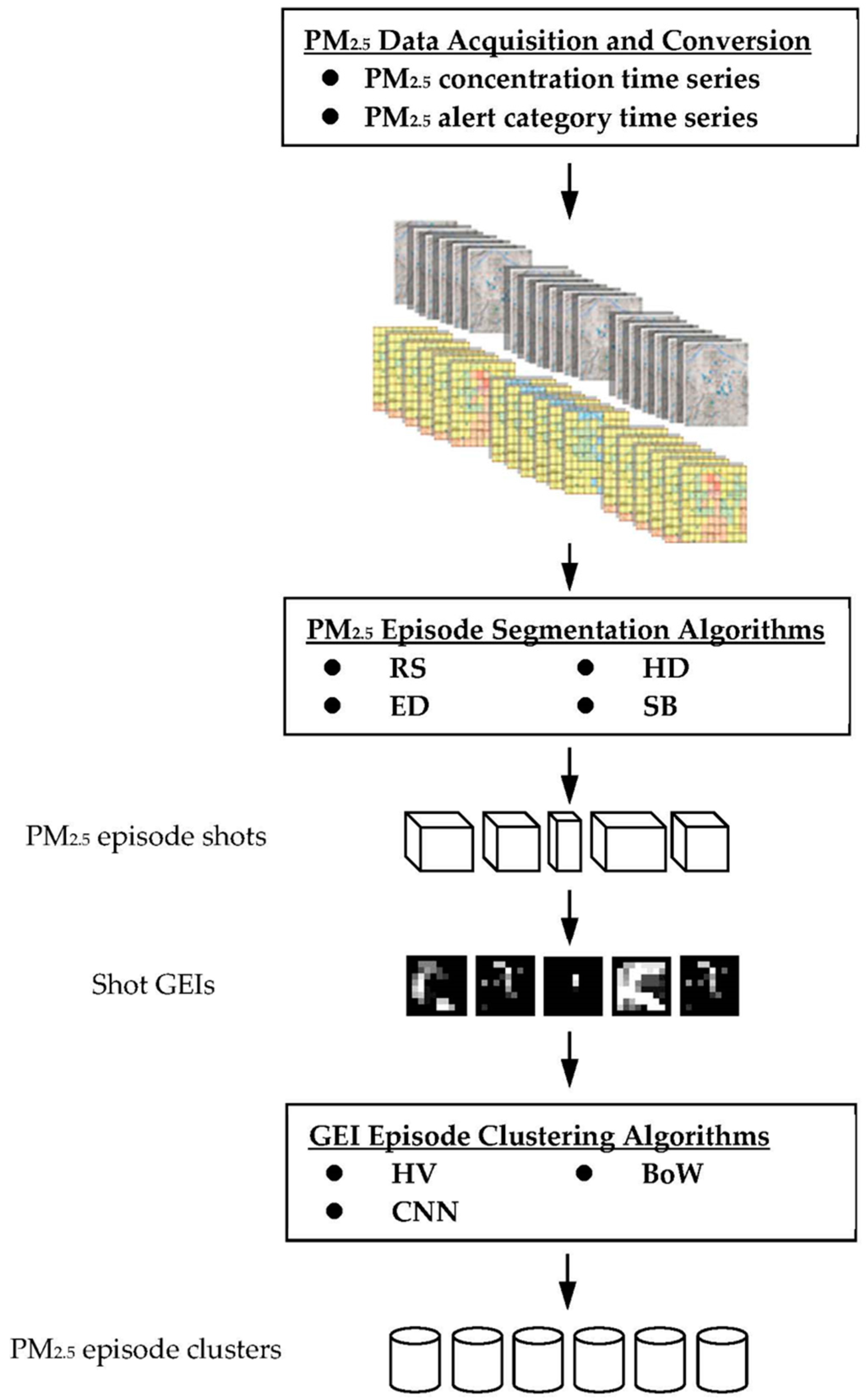

3.1. Framework Architecture

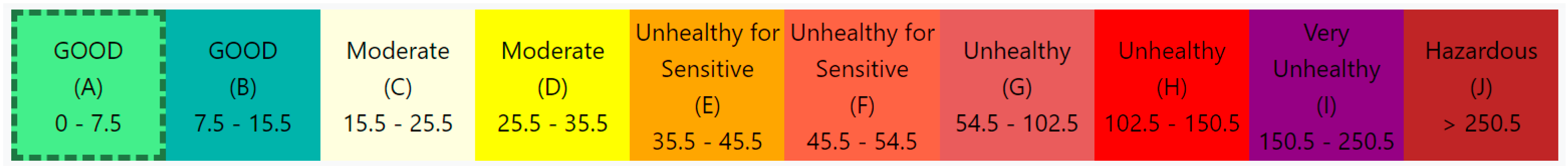

3.2. Air-Quality Data Acquisition and Conversion

3.3. Pollution Episode Segmentation

- (1)

- Raster Difference (RS). The RS method reserves all of the n × n grid values in a raster-scan order and calculates the difference of the corresponding grid values between different frames within a time window. The sum of the raster differences over the entire frame is compared to a threshold for shot detection.

- (2)

- Histogram Difference (HD). Instead of reserving the raster sequence of grid values, the HD method constructs a histogram by counting the number of grids having each level of the 10 alert categories. The 10 occurrence numbers on the histogram are used as the feature vector to calculate the difference between different frames within a time window. As compared to the RS method, the HD method is less sensitive to rotation and translation of the same pollution patterns and is more likely to extract complete shots of various episodes.

- (3)

- Edge Difference (ED). The ED method applies the Sobel edge detectors, which are commonly used in the field of image processing, to extract the boundaries between adjacent grids having significantly distinct PM2.5 alert categories. The implementation of the ED method is motivated by the assumption that the edge orientation of the pollution region changes abruptly at the transition to the next episode. The four 3 × 3 Sobel edge filters, as depicted in Figure 3, for extracting vertical, horizontal, and two diagonal edges are employed to convolute the frame image. The response at each pixel to the four filters is summed up to obtain 64 edge response values as the feature vector, which is used to calculate the difference between different frames.

- (4)

- Statistics Based (SB). The SB method extracts the properties of the alert category map and detects the cutting frames, which are assumed to have large variations in these properties as compared to the frames in the next shot. In particular, we use six moment statistics; three are non-positional and the others are positional. Let the position of the n × n grids be resolved as a two-dimensional array, and the grid value at position [x, y] is denoted as g[x, y]. The three non-positional moments are as follows:where is the mean of g[x, y] over all n × n grids.

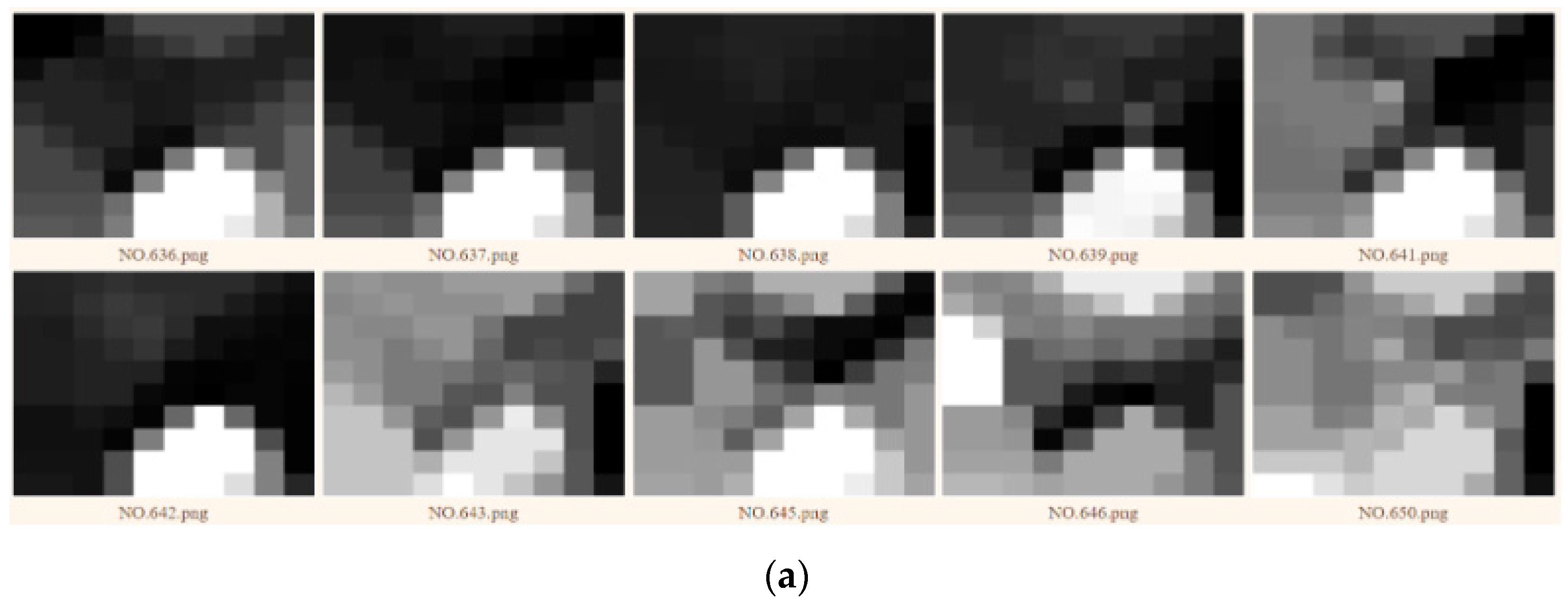

3.4. Pollution Episode Clustering

- (1)

- HV. Following the same fashion as our HD method, a histogram is constructed by counting the number of grids having each level of the 10 alert categories. The 10 occurrence numbers on the histogram are used as the feature vector for conducting the k-means clustering method. To evaluate the quality of the clustering result, several different numbers of clusters are specified when conducting k-means clustering.

- (2)

- BoW. BoW was originally proposed for document retrieval by using keywords, and it has been extended for image retrieval by replacing the keywords with visual words [20]. We further extend this idea for GEI image clustering. We associate Sobel edge filters with visual words. The GEI is divided into four quadrants. Every quadrant is processed by the four 3 × 3 Sobel filters for extracting horizontal, vertical, and diagonal edges. The response values for the same filter within every quadrant are summed up to simulate the occurrence count of the corresponding visual word. As such, we obtain a 4(quadrant) × 4(filter) = 16 visual-word histogram. Furthermore, the histogram is used as the feature vector for the k-means clustering method.

- (3)

- CNN. In contrast to HV and BoW, which learn prespecified features designed by human experts, CNN automatically learns the representative features for the designated task. In particular, we apply VGG-19 [26] by using Keras. VGG-19 was trained with one million images selected from the ImageNet dataset for classification into 1000 generic classes. VGG-19 has 16 convolutional layers with a large number of filters, followed by three fully connected layers. We fed VGG-19 with all GEIs and summed up the collected softmax values for each classification class. The top 20 softmax-value classes are used to construct features for GEI clustering. Further, each GEI obtains a 20-dimensional feature vector, and the k-means clustering method is applied to the feature vectors to generate the clustering result.

4. Experimental Results and Comparative Performances

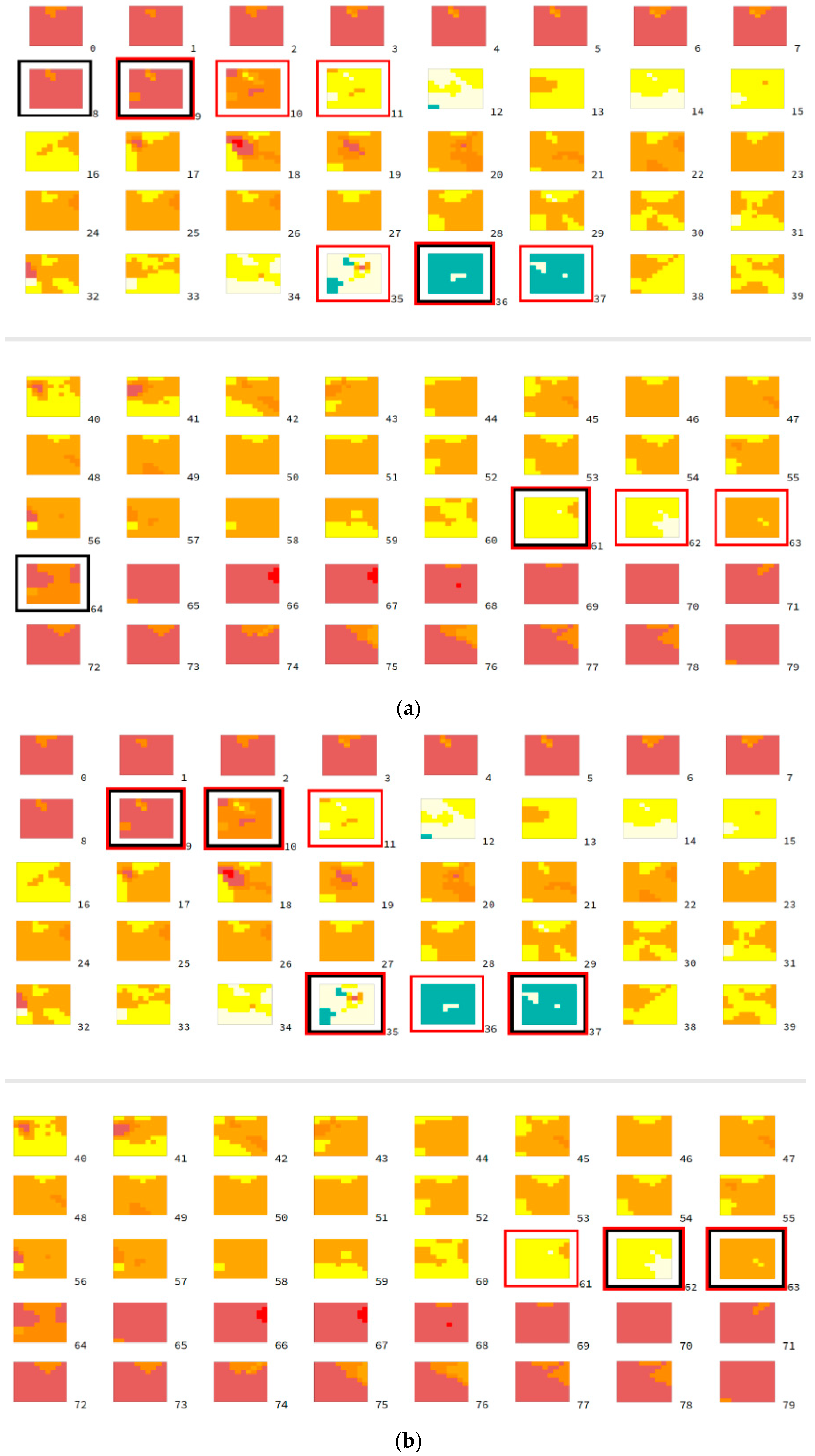

4.1. Pollution Episode Segmentation

4.2. Pollution Episode Clustering

5. Conclusions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- United Nations Department of Economic and Social Affairs. The 2030 Agenda for Sustainable Development. Available online: https://sdgs.un.org/goals (accessed on 5 April 2023).

- WHO Media Centre. Ambient (Outdoor) Air Pollution. 2022. Available online: https://www.who.int/en/news-room/fact-sheets/detail/ambient-(outdoor)-air-quality-and-health (accessed on 25 April 2023).

- Singh, N.; Murari, V.; Kumar, M.; Barman, S.C.; Banerjee, T. Fine particulates over South Asia: Review and meta-analysis of PM2.5 source apportionment through receptor model. Environ. Pollut. 2017, 223, 121–136. [Google Scholar] [CrossRef]

- Valerino, M.; Ratnaparkhi, A.; Ghoroi, C.; Bergin, M. Seasonal photovoltaic soiling: Analysis of size and composition of deposited particulate matter. Sol. Energy 2021, 227, 44–55. [Google Scholar] [CrossRef]

- Liang, C.S.; Duan, F.K.; He, K.B.; Ma, Y.L. Review on recent progress in observations, source identifications and countermeasures of PM2.5. Environ. Int. 2016, 86, 150–170. [Google Scholar] [CrossRef]

- Fontes, T.; Li, P.; Barros, N.; Zhao, P. Trends of PM2.5 concentrations in China: A long term approach. J. Environ. Manag. 2017, 196, 719–732. [Google Scholar] [CrossRef]

- Yin, P.Y.; Yen, A.Y.; Chao, S.E.; Day, R.F.; Bhanu, B. A Machine Learning-based Ensemble Framework for Forecasting PM2.5 Concentrations in Puli, Taiwan. Appl. Sci. 2022, 12, 2484. [Google Scholar] [CrossRef]

- Lung, S.C.C.; Wang, W.C.V.; Wen, T.Y.J.; Liu, C.H.; Hu, S.C. A versatile low-cost sensing device for assessing PM2.5 spatiotemporal variation and quantifying source contribution. Sci. Total Environ. 2020, 716, 137145. [Google Scholar] [CrossRef]

- Liu, X.J.; Xia, S.Y.; Yang, Y.; Wu, J.F.; Zhou, Y.N.; Ren, Y.W. Spatiotemporal dynamics and impacts of socioeconomic and natural conditions on PM2.5 in the Yangtze River Economic Belt. Environ. Pollut. 2020, 263, 114569. [Google Scholar] [CrossRef]

- Yan, D.; Lei, Y.; Shi, Y.; Zhu, Q.; Li, L.; Zhang, Z. Evolution of the spatiotemporal pattern of PM2.5 concentrations in China–A case study from the Beijing-Tianjin-Hebei region. Atmos. Environ. 2018, 183, 225–233. [Google Scholar] [CrossRef]

- Cao, R.; Li, B.; Wang, Z.; Peng, Z.; Tao, S.; Lou, S. Using a distributed air sensor network to investigate the spatiotemporal patterns of PM2.5 concentrations. Environ. Pollut. 2020, 264, 114549. [Google Scholar] [CrossRef]

- Song, R.; Yang, L.; Liu, M.; Li, C.; Yang, Y. Spatiotemporal Distribution of Air Pollution Characteristics in Jiangsu Province, China. Adv. Meteorol. 2019, 2019, 5907673. [Google Scholar] [CrossRef]

- Yang, D.; Chen, Y.; Miao, C.; Liu, D. Spatiotemporal variation of PM2.5 concentrations and its relationship to urbanization in the Yangtze river delta region. China Atmos. Pollut. Res. 2020, 11, 491–498. [Google Scholar] [CrossRef]

- Jiang, Z.; Jolley, M.D.; Fu, T.M.; Palmer, P.I.; Ma, Y.; Tian, H.; Li, J.; Yang, X. Spatiotemporal and probability variations of surface PM2.5 over China between 2013 and 2019 and the associated changes in health risks: An integrative observation and model analysis. Sci. Total Environ. 2020, 723, 137896. [Google Scholar] [CrossRef] [PubMed]

- Yu, H.L.; Wang, C.H. Retrospective prediction of intra-urban spatiotemporal distribution of PM2.5 in Taipei. Atmos. Environ. 2010, 44, 3053–3065. [Google Scholar]

- Lyu, Y.; Ju, Q.; Lv, F.; Feng, J.; Pang, X.; Li, X. Spatiotemporal variations of air pollutants and ozone prediction using machine learning algorithms in the Beijing-Tianjin-Hebei region from 2014 to 2021. Environ. Pollut. 2022, 306, 119420. [Google Scholar] [CrossRef] [PubMed]

- Chicas, S.D.; Valladarez, J.G.; Omine, K. Spatiotemporal distribution, trend, forecast, and influencing factors of transboundary and local air pollutants in Nagasaki Prefecture, Japan. Sci. Rep. 2023, 13, 851. [Google Scholar] [CrossRef]

- Koprinska, I.; Carrato, S. Temporal video segmentation: A survey. Signal Process. Image Commun. 2001, 16, 477–500. [Google Scholar] [CrossRef]

- Cotsaces, C.; Nikolaidis, N.; Pitas, I. Video Shot Boundary Detection and Condensed Representation: A Review. IEEE Signal Process. Mag. 2006, 23, 28–37. [Google Scholar] [CrossRef]

- Abdulhussain, S.H.; Ramli, A.R.; Saripan, M.I.; Mahmmod, B.M.; Al-Haddad, S.A.R.; Jassim, W.A. Methods and Challenges in Shot Boundary Detection: A Review. Entropy 2018, 20, 214. [Google Scholar] [CrossRef]

- Han, J.; Bhanu, B. Individual recognition using gait energy image. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 316–322. [Google Scholar] [CrossRef]

- Lee, F.F.; Fergus, R.; Torralba, A. Recognizing and learning object categories: Year 2007. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007. [Google Scholar]

- Rosenblatt, F. The perceptron: A probabilistic model for information storage and organization in the brain. Psychol. Rev. 1958, 65, 386. [Google Scholar] [CrossRef]

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507. [Google Scholar] [CrossRef] [PubMed]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2015, arXiv:1409.1556. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Yin, P.Y.; Tsai, C.C.; Day, R.F.; Tung, C.Y.; Bhanu, B. Ensemble learning of model hyperparameters and spatiotemporal data for calibration of low-cost PM2.5 sensors. Math. Biosci. Eng. 2019, 16, 6858–6873. [Google Scholar] [CrossRef] [PubMed]

- Rousseeuw, P.J. Silhouettes: A graphical aid to the interpretation and validation of cluster analysis. J. Comput. Appl. Math. 1987, 20, 53–65. [Google Scholar] [CrossRef]

- Calinski, T.; Harabasz, J. A dendrite method for cluster analysis. Commun. Stat. 1974, 3, 1–27. [Google Scholar]

- Davies, D.L.; Bouldin, D.W. A Cluster Separation Measure. IEEE Trans. Pattern Anal. Mach. Intell. 1979, 1, 224–227. [Google Scholar] [CrossRef]

| Precision | Recall | F1-Score | |

|---|---|---|---|

| RS | 0.7225909 | 0.74270123 | 0.732508063 |

| HD | 0.6956363 | 0.78076398 | 0.735745954 |

| ED | 0.55856833 | 0.702592087 | 0.622356495 |

| SB | 0.6792861 | 0.68540246 | 0.682330574 |

| K | SC | CHI | DBI |

|---|---|---|---|

| 2 | 0.501591138 | 600.3940942 | 1.108177263 |

| 3 | 0.647946873 | 1004.078699 | 1.352115359 |

| 4 | 0.692444416 | 1254.325130 | 1.805470173 |

| 5 | 0.699810907 | 1283.911012 | 1.857432596 |

| 6 | 0.621794592 | 1407.539037 | 1.537950880 |

| 7 | 0.617850347 | 1414.988043 | 1.664281480 |

| 8 | 0.567976152 | 1390.361033 | 1.288370949 |

| 9 | 0.563132577 | 1366.345924 | 1.295097528 |

| K | SC | CHI | DBI |

|---|---|---|---|

| 2 | 0.301451198 | 265.2936270 | 0.837995961 |

| 3 | 0.374914193 | 276.7063533 | 0.893594959 |

| 4 | 0.352490637 | 267.9174494 | 0.966339562 |

| 5 | 0.361700044 | 295.2575573 | 1.107479698 |

| 6 | 0.282343080 | 305.9140231 | 1.074090025 |

| 7 | 0.277204879 | 305.1391090 | 1.059764619 |

| 8 | 0.283878016 | 292.5594372 | 1.020158004 |

| 9 | 0.257572259 | 285.0925611 | 0.984416778 |

| K | SC | CHI | DBI |

|---|---|---|---|

| 2 | 0.426706271 | 681.1978051 | 1.142826628 |

| 3 | 0.312079422 | 566.5341183 | 0.861172038 |

| 4 | 0.268289956 | 493.324105 | 0.817149345 |

| 5 | 0.278307506 | 469.2196243 | 0.898008358 |

| 6 | 0.271767518 | 428.1691441 | 0.758196849 |

| 7 | 0.264814784 | 400.385274 | 0.77981305 |

| 8 | 0.245515484 | 379.3548626 | 0.778609268 |

| 9 | 0.230716353 | 359.9879666 | 0.774757245 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yin, P.-Y. A Novel Spatiotemporal Analysis Framework for Air Pollution Episode Association in Puli, Taiwan. Appl. Sci. 2023, 13, 5808. https://doi.org/10.3390/app13095808

Yin P-Y. A Novel Spatiotemporal Analysis Framework for Air Pollution Episode Association in Puli, Taiwan. Applied Sciences. 2023; 13(9):5808. https://doi.org/10.3390/app13095808

Chicago/Turabian StyleYin, Peng-Yeng. 2023. "A Novel Spatiotemporal Analysis Framework for Air Pollution Episode Association in Puli, Taiwan" Applied Sciences 13, no. 9: 5808. https://doi.org/10.3390/app13095808

APA StyleYin, P.-Y. (2023). A Novel Spatiotemporal Analysis Framework for Air Pollution Episode Association in Puli, Taiwan. Applied Sciences, 13(9), 5808. https://doi.org/10.3390/app13095808