Abstract

The cleaning and maintenance of large-scale façades is a high-risk industry. Although existing wall-climbing robots can replace humans who work on façade surfaces, it is difficult for them to operate on façade protrusions due to a lack of perception of the surrounding environment. To address this problem, this paper proposes a binocular vision-based method to assist wall-climbing robots in performing autonomous rust removal and painting. The method recognizes façade protrusions through binocular vision, compares the recognition results with an established dimension database to obtain accurate information on the protrusions and then obtains parameters from the process database to guide the operation. Finally, the robot inspects the operation results and dynamically adjusts the process parameters according to the finished results, realizing closed-loop feedback for intelligent operation. The experimental results show that the You Only Look Once version 5 (YOLOv5) recognition algorithm achieves 99.63% accuracy for façade protrusion recognition and that the histogram comparison method achieves 93.33% accuracy for the detection of the rust removal effect. The absolute error of the Canny edge detection algorithm is less than 3 mm and the average relative error is less than 2%. This paper establishes a vision-based façade operation process with good inspection performance, which provides an effective vision solution for the automated operation of wall-climbing robots on the façade.

1. Introduction

The cleaning and maintenance of large façades, such as ships and tanks, is a high-risk industry, and currently, the rust removal and painting of large façades are mostly carried out by hand with high-pressure water guns and spray guns [1,2]. There are many drawbacks to manual work, the most prominent of which is safety. The person operating the high-pressure water gun at heights runs the risk of falling. In addition, large amounts of toluene in the paint can cause serious damage to the operator’s health. Wall-climbing robots are therefore beginning to gradually replace risky manual work at heights.

The use of wall-climbing robots reduces the risk of manual work to some extent. In addition to improving safety, they can also increase task efficiency and reduce the labor costs. G. Fang et al. reviewed the characteristics, research fields and designs of wall-climbing robots in the last decade, and many institutions have studied designs of mechanical structures to improve wall-climbing robots [3]. Wall-climbing robots have been developed using negative pressure adsorption, magnetic adsorption and bionic adsorption, all of which have good climbing capabilities. They have also been designed to climb and move on corresponding large façades in complex environments, such as ships and oil tanks.

Developments in convolutional neural networks and image-processing technologies have driven the automated application of wall-climbing robots, making machine vision increasingly important [4,5]. The application of machine vision not only enables efficient and highly accurate product manufacturing in factory production but also allows robots to replace humans in hazardous environments for inspection and operational tasks. Surface corrosion inspection and quality inspection problems for large façades, such as ships and storage tanks, are also increasingly being addressed by wall-climbing robots, and much research has been carried out regarding this problem. This includes the mechanical design of robots to work in complex environments, such as ships and tanks; inspection systems to enable autonomous robotic inspection; and rust removal inspection algorithms to solve façade operation problems.

Real-time ranging based on binocular vision has also been a focus of computer vision development in recent years. With the development of computer vision theory, binocular stereo-vision measurement is playing an increasingly important role in industrial measurement [6]. Because the accuracy with which a robot’s operating parameters are matched depends on the accuracy of the ranging, accurate ranging is a requirement of this work. In dimensional calculations, a binocular camera eliminates the scale uncertainty of a monocular camera, allowing for more accurate dimensional calculations. Therefore, binocular vision is chosen to implement the recognition and ranging functions in this paper.

In order to solve the problem of automated operation and effect inspection for the cleaning and maintenance of large façades on ships and storage tanks, this paper proposes a method based on binocular vision that can operate on façade protrusions and can assist wall-climbing robots in autonomous rust removal and painting. Combined with binocular vision, a closed-loop intelligent operation can be achieved that comprises protrusion recognition, dimension parameter matching, process parameter matching, real-time operation effect inspection and the dynamic adjustment of the operation parameters. The main contributions of this research are as follows:

- A method of operating on façade protrusions is proposed in combination with a database that provides a parameter reference for façade operation and increases the reliability and adaptability of the operation on different façade protrusions.

- Based on the results of the operational effect inspection, a solution for the adjustment of operational parameters is proposed, which enables dynamic parameter adjustment and ensures the quality of the façade operation.

The rest of the paper is organized as follows. Section 2 reviews related work on inspection by wall-climbing robots and provides a comparison with this research. Section 3 presents the overall system of the wall-climbing robot. Section 4 describes the process of recognizing and operating on façade protrusions. Section 5 discusses approaches to operation effect inspection and dynamic parameter adjustment. Section 6 shows the results of the experiments. Section 7 contains the conclusions and future work.

2. Related Works

Many studies have been carried out on the structural design and adsorption principles of wall-climbing robots: S. Choudhary et al. designed a lightweight wall-climbing robot for glass cleaning, which uses a centrifugal impeller to generate a low-pressure zone for good adhesion on the wall surface [7]. W. K. Huang et al. proposed a bionic crawling modular wall-climbing robot based on a modular design and inspired by leech peristalsis and internal soft-bone connection [8]. Environments that require large façade cleanings, such as ships and oil tanks, are more complex and have higher demands regarding robot adaptability and wall-climbing capabilities [9]. H. F. Fang et al. proposed a magnetic adsorption wall-climbing robot, realizing reliable adsorption and flexible walking on ships through a multitrack-integrated mobile wall-climbing robot [10]. T. Guo et al. designed a negative pressure adsorption underwater wall-climbing robot with good adaptability to various surface conditions and suitability for large loads by exploiting the Bernoulli negative pressure effect [11]. J. Hu et al. presented a magnetic crawler wall-climbing robot with a high payload capacity on a convex surface, which increases the load capacity through a load dispersal mechanism and uses a flexible connection scheme for the robot’s body to achieve curved movement [12]. S. Hong et al. proposed a magnetically adherent quadrupedal wall-climbing robot with permanent magnet adsorption on the foot and a model predictive control framework to enable rapid movement over hulls and tanks [13].

The essential aspect of a wall-climbing robot’s inspection system is the inspection algorithm, and there is more need for rust detection on, for example, the large façades of ships and storage tanks. Many rust-detection algorithms have developed rapidly in recent years, mainly including image processing and machine learning. Image processing can calculate and analyze changes in color and surface dimensions due to hull rusting. It is widely used in rust detection for its small computation requirements and convenience. X. Huang proposed an image processing-based method to identify the degree of rusting in vibration dampers [14]. Y. Tian et al. demonstrated an approach for identifying rust as a key technology in the rust-removal process, which is designed to be robust to variations in the conditions of rusted areas caused by the presence of working tools or rust powders in the images [15]. J. Yang proposed a rust segmentation method based on the Gaussian mixture model and super-pixel segmentation, which classified the rust degree by determining the rust probability through rust segmentation, the hue saturation value (HSV) and the color space feature distribution of the input image [16]. Compared to image processing, machine learning has higher accuracy and greater computation abilities. Z. M. Guo proposed a new robust and faster region-based convolutional neural network (faster R-CNN) model with the feature enhancement mechanism for the rust detection of transmission line fitting [17]. A. R. M. Forkan used a deep learning method based on convolutional neural networks for structure identification and corrosion feature extraction [18]. The above studies show that computer vision-based rust detection algorithms are widely used and meet requirements.

Because of developments in detection algorithms and image-processing technology, wall-climbing robots combined with vision can perform façade inspection tasks. A. V. Le et al. proposed a water-blasting framework with an adhesion mechanism of permanent magnetism, which is able to evaluate the severity of the corrosion region by using a benchmarking algorithm [19]. J. Li proposed an intelligent inspection robotic system based on deep learning to achieve weld seam identification and tracking, and the average time taken for identifying and calculating weld paths was about 180 ms [20]. K. Zhang et al. proposed a weld-descaling robot based on three-line laser-structured light vision recognition, capable of identifying cross and t-shaped welds for descaling under various working conditions [21]. J. Li et al. presented a robotic system for the inspection of welds in spherical tanks, using deep learning to identify welds, and an improved Fleury algorithm to plan the shortest path to follow the welds [22]. X. Zhang et al. designed a multiple sensors integration wall-climbing robot for magnetic particle testing, which cooperatively controlled multiple components and sensors to achieve efficient surface defect detection [23].

A number of wall-climbing robots with autonomous inspection capabilities are being proposed for the automated cleaning and maintenance of large façades on ships and tanks, with rust removal and weld seam inspection being more widely studied. Wall-climbing robots can enhance operational efficiency, reduce labor costs and automate façade cleaning and maintenance operations. M. Martin-Abadal et al. used a deep learning method to identify pipes and valves in underwater scenes, achieving an accuracy of 97.2% [24]. D. Shang et al. used the YOLOv5 algorithm to recognize equipment, such as diesel engines and pumps, in the engine room of a ship, achieving an accuracy of 100% and 95.91%, respectively [25].

In summary, the existing wall-climbing robots for the cleaning and maintenance of large façades on ships and tanks are mainly working on surfaces without protrusion. Although research exists on the recognition of such protrusions, it has not been applied to large outdoor façades, and there is a lack of corresponding cleaning and maintenance operations. When working on façades, such protrusions can cause significant interference with a robot’s detection and movement, and they are also necessary targets for inspection and operation, making the recognition and inspection of façade protrusions important. In order to solve the problem, this paper proposes a method based on binocular vision that can recognize and inspect the operation effects of façade protrusions. By adding parameter adjustment to the workflow, the robot is able to adjust the operating parameters from the results of the operation effect inspection, allowing the dynamic adjustment of the operation. In addition, a database of common façade protrusions is collected to provide guidance to the wall-climbing robot.

3. System Overview

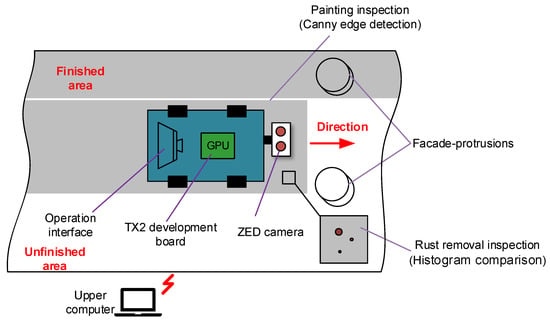

The system is designed to enable the wall-climbing robot to recognize protrusions on the façade, inspect operation effects and adjust parameters during rust removal and painting operations. The system is based on binocular vision, as shown in Figure 1.

Figure 1. Schematic diagram of wall-climbing robot façade operation.

The system consists of two parts: hardware and software.

The hardware mainly includes the Nvidia Jetson TX2 embedded development board, ZED binocular camera, operation interface, upper computer and mobile platform mechanical body. The ZED camera is fixed onto the chassis support of the climbing robot to ensure stability and is connected to the Nvidia Jetson TX2 through a USB3.0 data cable.

The software part mainly involves installing the Compute Unified Device Architecture (CUDA), Open-Source Computer Vision Library (OpenCV), My Structured Query Language (MySQL), ZED SDK and a deep learning framework.

The wall-climbing robot façade operation system mainly includes four functions: (1) database function, (2) recognition and parameter-matching function, (3) effect inspection function and (4) parameter adjustment function. The specific implementation of these four functions is as follows:

- Database function: The database includes the dimension database and the process database. The dimension database guides the recognition of façade protrusions, and the process database guides rust removal and painting operations.

- Recognition and parameter-matching function: This function realizes the recognition and distance measurement of a façade protrusion and matches the results with the database to obtain dimensions and process parameters to guide robot operation.

- Effect inspection function: After the wall-climbing robot completes the operation on protrusions, it inspects the operation effect.

- Parameter adjustment function: When the inspection result of the operation effect is unqualified, the wall-climbing robot adjusts the corresponding parameters for two different operations—rust removal and painting.

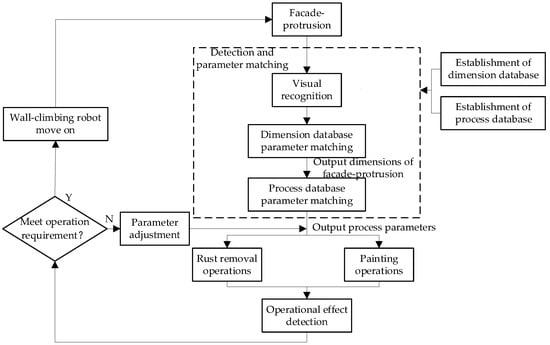

The overall system includes visual recognition, the matching of dimensional parameters, the selection of process parameters and real-time inspection of the operation effect. The flow chart of the wall-climbing robot’s overall system in this paper is shown in Figure 2.

Figure 2. Overall system of façade protrusion detection and operation for a wall-climbing robot.

The workflow outlined in this article consists mainly of the following steps. Firstly, the dimension database and process database are established, which mainly include the categories, standard dimensions and process parameters of the façade protrusions. Secondly, the wall-climbing robot uses its visual system to recognize the protrusions on the façade and measure their distance, obtaining the categories and dimensions of the protrusions. Then, the information obtained from vision is compared with the information in the dimension database to obtain the standard size of the protrusions. This result is then compared with the information in the process database to obtain the corresponding process parameters. After obtaining the process parameters, painting and rust removal operations can be carried out. Finally, the visual system is used to inspect the results of the operation, and the process parameters are adjusted based on whether the results are qualified, thus achieving the automated operation of the wall-climbing robot on façade protrusions.

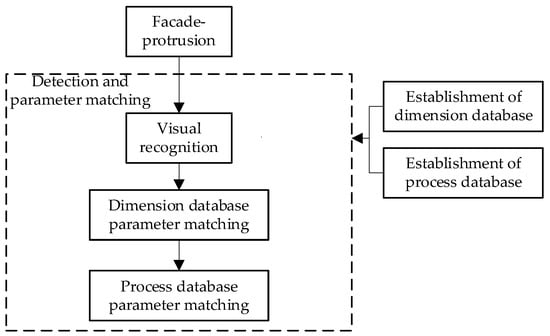

4. Recognition and Operation of Façade Protrusions

When wall-climbing robots work on a façade, protrusions are also objects that need to be worked on, so it is necessary to identify these protrusions and operate on them. A wall-climbing robot obtains the category information of the façade protrusion using the YOLOv5 visual recognition algorithm as it moves. Then, the wall-climbing robot obtains depth information and the contour size of the façade protrusion using binocular vision as it operates. The results are then compared with a pre-provided dimension database to produce accurate data on the category and dimensional parameters. The dimensional information of the façade protrusion is matched with the process database to obtain the corresponding process parameters to guide the operation. The flow chart of façade protrusion recognition and parameter matching is shown in Figure 3.

Figure 3. Flow chart of façade protrusion recognition and parameter matching.

4.1. Establishment of Database

The robot uses a MySQL database, which allows for the unified management and control of data, greatly improving the integrity and security of the data. In addition, MySQL is an open-source database and provides interfaces for connecting from multiple programming languages. This makes it well suited to the embedded development of wall-climbing robots.

The database in this paper consists of a dimension database and a process database. The dimension database allows the wall-climbing robot to compare its own recognition results with the pre-provided data in the dimension database to produce accurate data category information and dimensional information. The process database provides appropriate operating parameters for different façade protrusions and guides the robot in its automated operations.

In this paper, façade protrusions include protrusions on the surfaces of large ships and oil storage tanks. The cleaning and maintenance of façade protrusions is the focus of this paper, and the categories of façade protrusions are classified through the research of a number of references, national industry standards and information provided by collaborating organizations. The main categories of façade protrusions are pipes, flanges, fasteners, fire protection accessories and instrument housings. The above façade protrusions categories are entered into the dimension database.

The process parameters required in this paper mainly include those for rust removal and painting. The rust removal operations of robots are implemented by using high-pressure water guns. The process parameters for the high-pressure water gun rust-removal method are mainly liquid pressure, the distance between the gun and façade protrusion and gun movement speed. The process parameters for painting are mainly liquid pressure, nozzle diameter, spray gun movement speed, the distance between the spray gun and the façade protrusion and the painting width.
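As one way to picture the records behind these two parameter sets, the sketch below models them as Python dataclasses. The class names, field names, units and example values are illustrative assumptions and do not reproduce the schema of the MySQL database used in this paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RustRemovalParams:
    """One rust-removal record (hypothetical field names and units)."""
    liquid_pressure_mpa: float  # liquid pressure of the high-pressure water gun
    gun_distance_mm: float      # distance between the gun and the façade protrusion
    gun_speed_mm_s: float       # gun movement speed


@dataclass(frozen=True)
class PaintingParams:
    """One painting record (hypothetical field names and units)."""
    liquid_pressure_mpa: float  # liquid pressure of the spray gun
    nozzle_diameter_mm: float   # nozzle diameter
    gun_speed_mm_s: float       # spray gun movement speed
    gun_distance_mm: float      # distance between the spray gun and the protrusion
    painting_width_mm: float    # painting width


# Placeholder values only; they are not taken from the process database.
example_rust = RustRemovalParams(30.0, 150.0, 50.0)
example_paint = PaintingParams(12.0, 0.4, 300.0, 250.0, 200.0)
```

Keeping the two operations in separate record types mirrors the text, where painting carries two parameters (nozzle diameter and painting width) that rust removal does not.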

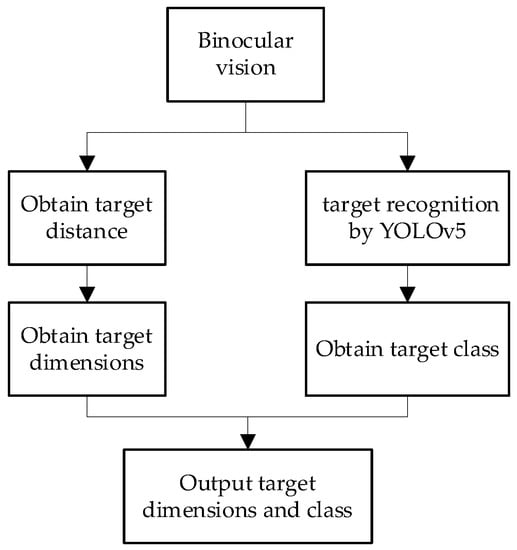

4.2. Target Recognition Based on Binocular Vision

The recognition of binocular vision consists of two main parts: one is the recognition of categories and the other is the recognition of dimensions. The flow chart of façade protrusion visual recognition is shown in Figure 4.

Figure 4. Flow chart of façade protrusion visual recognition.

The algorithm used to recognize façade protrusions is YOLOv5, which is based on a convolutional neural network (CNN). In the field of vision, in addition to CNNs, vision transformers (ViT) and graph neural networks (GNN) can also be used for visual tasks. In this study, the recognition algorithm and the effect inspection algorithm must be ported to the wall-climbing robot. The TX2 processor installed on the robot has limited performance, yet the application requires real-time detection, and the high computational cost of ViT and GNN is not conducive to real-time recognition on the robot in this paper. YOLOv5 has fast recognition speed, mature hardware deployment and good compatibility with the TX2, so YOLOv5 was chosen as the recognition algorithm for façade protrusions.

The YOLO detection algorithm was first proposed by Redmon J. et al. in 2016 [26]. YOLOv5 combines a large number of previous research techniques and implements appropriate innovative algorithms to achieve a balance of speed and accuracy [27]. YOLOv5 is slightly inferior to YOLOv4 in terms of performance but is far superior to YOLOv4 in terms of flexibility and speed, which is a strong advantage for the rapid deployment of the model; therefore, YOLOv5 was used to recognize the target category.

YOLOv5 includes five different network structures: YOLOv5n, YOLOv5s, YOLOv5m, YOLOv5l and YOLOv5x. Considering the processor performance and the real-time requirements, YOLOv5s was adopted, as it performs best on devices with limited computing resources, such as mobile devices. Compared to other network structures, it has fewer layers and lower computational complexity, while also meeting the recognition accuracy requirements of this study. Considering the size of the graphics memory, the batch size is set to 8 and the training image resolution is set to 640 × 640. The number of epochs is set to 500, the learning rate is set to 0.005 and the learning rate attenuation parameter is set to 0.25.

To obtain the target size, binocular vision is used for the measurement and calculation of the façade protrusion distance. The binocular vision distance calculation principle is based on the similarity of triangles, and the depth information can be calculated by the triangle similarity principle [28].
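The triangle similarity relation can be sketched in a few lines of Python. The sketch assumes a rectified stereo pair with focal length f in pixels, baseline B and disparity d, so that depth is Z = f·B/d. The function names and the numbers in the example are hypothetical and are not calibration values from this paper.

```python
def depth_from_disparity(focal_px: float, baseline_mm: float, disparity_px: float) -> float:
    """Depth Z = f * B / d from similar triangles (rectified stereo pair assumed)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive to give a finite depth")
    return focal_px * baseline_mm / disparity_px


def size_from_pixels(extent_px: float, depth_mm: float, focal_px: float) -> float:
    """Back-project an image extent (pixels) to a physical size at the given depth."""
    return extent_px * depth_mm / focal_px


# Hypothetical numbers: f = 700 px, B = 120 mm, d = 84 px, object extent 140 px
depth_mm = depth_from_disparity(700.0, 120.0, 84.0)
width_mm = size_from_pixels(140.0, depth_mm, 700.0)
```

With these example inputs the depth is 1000 mm and the back-projected width is 200 mm. Because depth is inversely proportional to disparity, a fixed disparity error produces a larger depth error at longer range.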

Stereo matching is a key step in binocular distance measurement: it finds the best-matching pixel in the left and right images taken by the binocular camera and computes the difference between the horizontal coordinates of the two pixels to obtain the parallax (disparity). The main algorithms studied were global stereo matching with graph cut and belief propagation, local stereo matching based on the census algorithm, and semi-global block matching (SGBM) [29].
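To make the matching step concrete, the following sketch searches one rectified scanline for the best match using a sum of absolute differences (SAD) over a small window. It is a deliberately simplified local matcher written for illustration, not the SGBM algorithm: it has no census cost, no semi-global aggregation and no sub-pixel refinement. The function name and the sample rows are assumptions.

```python
def match_disparity(left_row, right_row, x, half_window=1, max_disparity=8):
    """Disparity at column x of the left scanline, found by SAD block matching."""
    if x - half_window < 0 or x + half_window >= len(left_row):
        raise ValueError("matching window must fit inside the scanline")
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        xr = x - d  # candidate column in the right image
        if xr - half_window < 0:
            break  # window would leave the right image
        cost = sum(
            abs(left_row[x + o] - right_row[xr + o])
            for o in range(-half_window, half_window + 1)
        )
        if cost < best_cost:  # strict '<' keeps the smallest disparity on ties
            best_cost, best_d = cost, d
    return best_d


# Hypothetical scanlines: the bright feature appears 3 px further left in the right image.
right = [10, 10, 10, 50, 90, 50, 10, 10, 10, 10, 10, 10]
left = [10, 10, 10, 10, 10, 10, 50, 90, 50, 10, 10, 10]
disparity = match_disparity(left, right, x=7)
```

For the sample rows the window centred on the peak matches with zero cost at a shift of three pixels, so the returned disparity is 3.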

The SGBM stereo matching algorithm is used because of its fast computational speed while ensuring a certain level of accuracy. The implementation of the SGBM algorithm is divided into four steps: image pre-processing, cost calculation, dynamic programming and post-processing parallax refinement [30]. After the above process, SGBM is able to achieve a better parallax effect.

4.3. Matching of Dimensional Parameters

Dimensional parameter matching works as follows: the robot compares the dimension of the target object obtained by vision with all dimensions stored under that object’s category in the dimension database and outputs the stored dimension with the smallest difference as the final dimension. The matching relies on the dimension database and on the façade protrusion profile dimensions obtained by the vision system. The key idea is to compare the dimensional information obtained by binocular vision with the data in dimension database D = {d1, d2, ..., dn} to obtain accurate dimensional information.

The specific steps of the dimensional data matching are as follows: firstly, according to the recognized façade protrusion category z, the corresponding dimensions di of the façade protrusion are obtained from the database. Then, the dimension h obtained by binocular vision is compared with each dimension di stored under that category to find the difference, and the dimension with the smallest difference is selected as the final result. The pseudocode for dimensional parameter matching is as follows (Algorithm 1):

| | Algorithm 1: Dimensional parameter-matching algorithm |
| --- | --- |
| | Input: façade protrusion category z, dimensional information obtained by binocular vision h, dimension database D |
| | Output: dimension of the façade protrusion s |
| 1: | k ← \|d1 − h\| // Initial difference |
| 2: | b ← 0 // Difference between visually obtained dimension and dimension parameter in the database |
| 3: | s ← d1 // Start from the first standard dimension so that s is valid when d1 is the closest |
| 4: | for each di do // Obtain the minimum difference through loop comparison |
| 5: | b ← \|di − h\| |
| 6: | if (b < k) then |
| 7: | k ← b |
| 8: | s ← di // Take the standard dimension with the smallest difference as the final dimension |
| 9: | end if |
| 10: | end for |
| 11: | return s |
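The nearest-dimension selection of Algorithm 1 can be sketched in Python as follows. The dictionary layout of the dimension database, the function name and the example diameters are assumptions made for illustration. The sketch also covers the case in which the first stored dimension is the closest one.

```python
def match_dimension(category, measured, dimension_db):
    """Return the stored dimension of `category` closest to the measured value."""
    candidates = dimension_db.get(category, [])
    if not candidates:
        raise ValueError(f"no dimensions stored for category {category!r}")
    # min() keeps the first candidate on ties, like the strict '<' in Algorithm 1
    return min(candidates, key=lambda d: abs(d - measured))


# Placeholder outer diameters in mm; not the contents of Table 1.
dimension_db = {"pipe": [21.3, 26.9, 33.7, 42.4, 48.3]}

matched = match_dimension("pipe", 34.5, dimension_db)        # closest to 33.7
matched_first = match_dimension("pipe", 20.0, dimension_db)  # closest to 21.3
```

Snapping the measured value to a tabulated dimension means that small ranging errors do not propagate into the process parameter lookup, provided the error stays below half the spacing between neighbouring standard sizes.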

The main categories of façade protrusions are pipes, flanges, fasteners, fire protection accessories and instrument housings. For the establishment of the dimension database in this paper, certain pipes and fittings were selected as objects. The dimensional parameters are derived from the GB/T 5312-1999 and GB/T 8163-1999 dimension tables, and the characteristic dimensions include the nominal diameter, steel pipe outer diameter and steel pipe wall thickness. Taking the dimensional data of the steel pipe as an example, the dimension database of the steel pipe is shown in Table 1.

Table 1. Dimensional parameters of different types of steel tubes as façade protrusion.

4.4. Matching of Process Parameters

Process parameter matching works as follows: the robot uses the object dimensions and depth information obtained in the previous steps to filter the process parameters for the operation to be performed and thereby obtains the required process parameters. Because the wall-climbing robot operates dynamically and the process parameters must be adjusted for different operations, the process parameters are obtained by matching the dimensions and depth information from the previous steps against the process parameter database.

The process parameters are matched in the following steps: firstly, the process parameter table for the current operation is selected, B for rust removal or C for painting. Then, all process parameters for the façade protrusion are obtained based on the previously obtained dimension, {B1|b11, b21,...bi1..., bm1}. Finally, the process parameters for the current distance to the façade protrusion are selected, {B2|b12, b22, …bj2…, bm2}, in order to complete the dynamic operation. The process parameter-matching pseudocode is as follows (Algorithm 2):

| | Algorithm 2: Process parameter-matching algorithm |
| --- | --- |
| | Input: dimension of the façade protrusion s, measuring distance m, process parameter database B |
| | Output: process parameters P |
| 1: | L ← 0 // Storage of filtered process parameters |
| 2: | if (select the rust removal function) then |
| 3: | for each bi1 do // Filter data according to dimension |
| 4: | if bi1 = s then |
| 5: | L ← {bi2, bi3 … bin} |
| 6: | end if |
| 7: | end for |
| 8: | for each bj2 in L do // Filter data in L according to distance |
| 9: | if bj2 = m then |
| 10: | P ← {bj3, bj4 … bjn} // Obtain process parameters |
| 11: | end if |
| 12: | end for |
| 13: | else if (select the paint function) then |
| 14: | for each ci1 do // Filter data according to dimension |
| 15: | if ci1 = s then |
| 16: | L ← {ci2, ci3 … cin} |
| 17: | end if |
| 18: | end for |
| 19: | for each cj2 in L do // Filter data in L according to distance |
| 20: | if cj2 = m then |
| 21: | P ← {cj3, cj4 … cjn} // Obtain process parameters |
| 22: | end if |
| 23: | end for |
| 24: | end if |
| 25: | return P |
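The two-stage filter of Algorithm 2 can be sketched in Python as shown below. The row layout, key names and parameter values are hypothetical. The sketch keeps the exact-equality tests of the pseudocode, which assumes that the measured distance has already been rounded to one of the tabulated distances.

```python
def match_process_parameters(operation, dimension, distance, process_db):
    """Select process parameters by operation, then dimension, then distance."""
    rows = process_db[operation]  # table B (rust removal) or table C (painting)
    by_dimension = [r for r in rows if r["dimension"] == dimension]
    for row in by_dimension:
        if row["distance"] == distance:
            return row["params"]
    return None  # no record for this dimension and distance


# Placeholder records; the values are not taken from Tables 2 and 3.
process_db = {
    "rust_removal": [
        {"dimension": 33.7, "distance": 150, "params": {"pressure": 30, "speed": 50}},
        {"dimension": 33.7, "distance": 200, "params": {"pressure": 35, "speed": 40}},
    ],
    "painting": [
        {"dimension": 33.7, "distance": 250, "params": {"pressure": 12, "width": 200}},
    ],
}

selected = match_process_parameters("rust_removal", 33.7, 200, process_db)
```

Returning `None` when no record matches makes the missing-entry case explicit, whereas the pseudocode leaves P unset in that situation.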

The process parameters for rust removal operation such as liquid pressure, distance from the gun, gun movement speed and spraying widths are shown in Table 2.

Table 2. Database of process parameters for rust removal.

The process parameters for painting operations, such as liquid pressure, distance from the gun, gun movement speed and painting widths, are shown in Table 3.

Table 3. Database of process parameters for painting.

The range of swing angles for the gun needs to be determined for each type of operation. The swing angles include the pitch swing angle and the horizontal swing angle. The pitch swing angle range requires that the height of the façade protrusion can be covered, and the horizontal swing angle range is designed to cover the façade protrusion width with an additional 10% of the operation range. Depending on the dimensions of the façade protrusion and the distance between the façade protrusion and the gun, the database of swing angles is created. Using a façade protrusion with a diameter of 20 cm and a height of 20 cm as an example, the swing angle is calculated for different distances. The parameters of swing angles are shown in Table 4.

Table 4. Database of gun swing angles at different distances.
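One possible way to compute such swing angles is sketched below. It assumes that the gun pivots at a point facing the centre of the protrusion, that the swing is symmetric about that centre line, and that the 10% margin is applied to the protrusion width. These geometric assumptions and the function name are illustrative, so the results need not match the values in Table 4.

```python
import math


def swing_angles(width_mm, height_mm, distance_mm, margin=0.10):
    """Total horizontal and pitch swing angles in degrees (symmetric swing assumed)."""
    if distance_mm <= 0:
        raise ValueError("distance must be positive")
    covered_width = width_mm * (1.0 + margin)  # width plus the extra operating margin
    horizontal = 2.0 * math.degrees(math.atan(covered_width / (2.0 * distance_mm)))
    pitch = 2.0 * math.degrees(math.atan(height_mm / (2.0 * distance_mm)))
    return horizontal, pitch


# Protrusion of 20 cm diameter and 20 cm height, gun at a hypothetical 500 mm distance
horizontal_deg, pitch_deg = swing_angles(200.0, 200.0, 500.0)
```

For this example the horizontal angle is roughly 24.8° and the pitch angle roughly 22.6°. Both angles shrink as the gun moves away from the protrusion, which is why the swing angles are tabulated per distance.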

5. Operation Effect Inspection and Feedback Adjustment

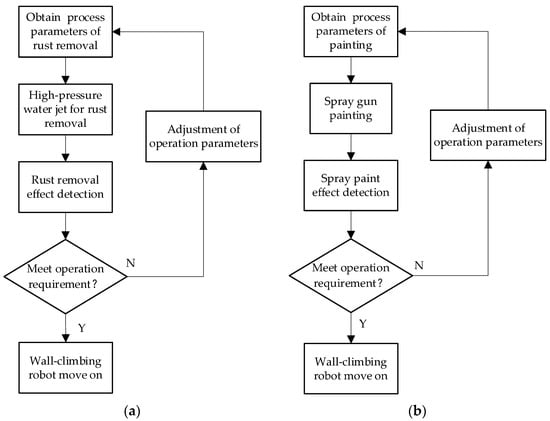

The main purpose of the operation effect inspection is to determine whether the operation meets the requirements by detecting the missed painting distance and the effect of rust removal (e.g., incomplete rust removal due to low liquid pressure). When the operation does not meet the requirements, the process parameters are adjusted, and the robot starts operation and inspection again until the operation meets the requirements. The flow chart of the façade operation and inspection and process parameters adjustment is shown in Figure 5.

Figure 5. Flow chart of façade operation, detection and process parameters adjustment: (a) inspection of the effect of rust removal operation and parameters adjustment; (b) inspection of the effect of painting operation and parameters adjustment.
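The operate, inspect and adjust cycle described above can be sketched as a simple loop. The three callbacks, the parameter dictionary and the `max_rounds` safety limit are hypothetical additions for illustration; the text itself only states that the cycle repeats until the operation meets the requirements.

```python
def run_closed_loop(operate, inspect, adjust, params, max_rounds=5):
    """Repeat operate -> inspect -> adjust until the inspection passes."""
    for round_no in range(1, max_rounds + 1):
        operate(params)
        if inspect():
            return params, round_no  # qualified: keep the parameters that worked
        params = adjust(params)      # unqualified: change parameters and retry
    raise RuntimeError("operation still unqualified after max_rounds attempts")


# Stub callbacks standing in for the robot: rust removal passes once pressure reaches 30.
state = {"pressure": 0}


def operate(p):
    state["pressure"] = p["pressure"]


def inspect():
    return state["pressure"] >= 30


def adjust(p):
    return {**p, "pressure": p["pressure"] + 5}


final_params, rounds = run_closed_loop(operate, inspect, adjust, {"pressure": 20})
```

In this stub run the pressure is raised from 20 to 25 and then to 30, so the loop ends on the third round. A bounded number of rounds is a design choice that prevents the robot from cycling indefinitely on a surface that cannot be brought to a qualified state.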

5.1. Rust Removal Inspection Algorithm

For façade protrusions, the brightness of an image with incomplete rust removal differs obviously from that of an image with complete rust removal. Compared with the manual comparison of sample photographs, histogram-based image calculation and processing is less costly and faster. Therefore, the histogram comparison method is used to inspect the effect of the rust removal, using characteristic pictures of qualified rust removal as a benchmark.

Histograms can count many features of an image, such as grey-scale values, gradients and orientation. The grey-scale histogram describes the number of pixels corresponding to each grey value in the image and is used to express the frequency of occurrence of each grey value in the image.
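As a small illustration of the grey-scale histogram, the sketch below counts how many pixels take each grey value in an image stored as nested lists. The function name and the tiny sample image are assumptions made for the example.

```python
def gray_histogram(pixels, bins=256):
    """Count the pixels at each grey value (integer values in 0..bins-1 expected)."""
    hist = [0] * bins
    for row in pixels:
        for value in row:
            if not 0 <= value < bins:
                raise ValueError(f"grey value {value} is outside 0..{bins - 1}")
            hist[value] += 1
    return hist


# Hypothetical 2 x 3 grey-scale image
image = [[0, 0, 255],
         [128, 255, 255]]
hist = gray_histogram(image)
```

The histogram entries sum to the number of pixels, so dividing each entry by that total gives the frequency of occurrence of each grey value.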

For two digital images, the histogram is calculated and compared using a defined criterion. If the histogram statistics of the two images are similar, then the number of pixels corresponding to the individual grey values of the two images is also similar and the two images can be considered to be very close to each other [31].

In this paper, the chi-square comparison method was used for the histogram comparison. The chi-square comparison method is derived from the chi-square test and is used to express the extent to which the measured value of an actual sample deviates from the theoretically calculated value. Based on this, the histogram comparison method was defined as Equation (1) [32]:

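$$d(H_1, H_2) = \sum_{I} \frac{\left(H_1(I) - H_2(I)\right)^2}{H_1(I)} \tag{1}$$

where $H_1$ is the histogram of the actual gray values, $H_2$ is the histogram of the gray values for complete rust removal, and $d(H_1, H_2)$ is the rust removal comparison value.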

The size of the chi-square value depends on the extent of deviation between the actual value and the theoretical value. The larger the chi-square value, the less similar the two images are, and the closer the chi-square value is to 0, the more similar they are. A chi-square value of 0 means that H1 = H2, at which point the similarity is the highest.
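The comparison can be sketched in Python as follows. The sketch assumes the chi-square form that divides each squared bin difference by the corresponding bin of the actual histogram and skips empty bins, which is the form used by OpenCV's chi-square histogram comparison. The qualification threshold is a hypothetical parameter, since no threshold value is stated in this section.

```python
def chi_square_distance(h_actual, h_reference):
    """Chi-square distance between two histograms; 0 means they are identical."""
    if len(h_actual) != len(h_reference):
        raise ValueError("histograms must have the same number of bins")
    total = 0.0
    for a, r in zip(h_actual, h_reference):
        if a > 0:  # skip empty bins to avoid division by zero
            total += (a - r) ** 2 / a
    return total


def rust_removal_qualified(h_actual, h_reference, threshold):
    """Qualified when the distance to the benchmark histogram is within the threshold."""
    return chi_square_distance(h_actual, h_reference) <= threshold


same = chi_square_distance([4, 6], [4, 6])       # identical histograms
different = chi_square_distance([4, 6], [2, 9])  # (2**2)/4 + (3**2)/6
```

For the two sample pairs the distances are 0.0 and 2.5. Because the denominator uses only the first histogram, the measure is not symmetric, so the order of the actual and benchmark histograms should be kept fixed across inspections.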

5.2. Painting Inspection Algorithm

When painting, wall-climbing robots can leave areas of missed painting, and the color of a missed area can be obviously different from that of the painted area [33]. Edge detection can, therefore, be used to extract the outline of the missed painting area and calculate the missed painting distance, which can then be used to offset the spray gun by the corresponding distance for dynamic adjustment of the painting operation.

The Canny edge detection algorithm is a multi-stage edge detection algorithm that, like the Marr edge detection method, smooths the image first and then takes derivatives. It is widely used in the field of computer vision due to advantages such as high detection accuracy and speed. Therefore, in this paper, the Canny edge detection algorithm is used to detect the edge lines of the missed painting area.

After the edge detection of the image, the curve of the missed painting area can be obtained and the maximum pixel distance in the horizontal swing direction of the gun in the missed painting area can be obtained using image processing techniques.

Converting dimensions in the image into real-world dimensions requires the pixel equivalent of the image, i.e., the actual physical size represented by one pixel. The pixel equivalent is equal to the actual size of the real object divided by the pixel size of the object in the image, as shown in Equation (2) [34]:

$$k = \frac{L}{N} \tag{2}$$

where $L$ is the actual size of the object, $N$ is the pixel size of the object in the image and $k$ is the pixel equivalent.

The pixel equivalent can be calculated by fixing the camera imaging distance and measuring the pixel size of a standard-size object in the image. In this paper, the pixel equivalent is obtained by averaging several measurements of a standard-size block with micron-level accuracy, which serves as the calibration object.

The distance of the missed spray area can therefore be obtained by multiplying the pixel distance by the pixel equivalent, as shown in Equation (3):

$$g = d_{\max} \cdot k \tag{3}$$

where $g$ is the actual distance of the missed spray, $d_{\max}$ is the maximum pixel distance of the missed spray and $k$ is the pixel equivalent.
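The calibration and conversion steps can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function names, the 50 mm block size and the pixel counts are hypothetical, and `k`, `d_max` and `g` denote the pixel equivalent, the maximum pixel distance and the actual missed spray distance.

```python
def pixel_equivalent(actual_size_mm, pixel_sizes):
    """Average physical size of one pixel (mm per pixel) over repeated measurements."""
    ratios = [actual_size_mm / n for n in pixel_sizes]
    return sum(ratios) / len(ratios)


def missed_distance_mm(d_max_pixels, k):
    """Actual missed spray distance: pixel distance times pixel equivalent."""
    return d_max_pixels * k


# Hypothetical calibration: a 50 mm standard block measured three times
# at a fixed imaging distance, giving slightly different pixel sizes.
k = pixel_equivalent(50.0, [249, 250, 251])  # roughly 0.2 mm per pixel

# Hypothetical inspection result: the widest missed row spans 500 pixels.
g = missed_distance_mm(500, k)               # roughly 100 mm
print(k, g)
```

Because the pixel equivalent is only valid for the imaging distance used during calibration, the same camera-to-surface distance has to be kept during inspection.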

5.3. Parameter Adjustment

Wall-climbing robots can be affected by the environment, process parameter settings, structural accuracy and other factors during operation, resulting in the operation effect not meeting the expected requirements. In order to enable the wall-climbing robots to work continuously and efficiently under unmanned conditions, it is necessary to inspect the operation effect in real time and to make dynamic adjustments to the process parameters.

The wall-climbing robot proposed in this paper has two main functions, namely rust removal and painting, with process parameters such as liquid pressure, gun swing angle and gun movement speed.

For the rust removal operation, inspection errors and process parameter errors can result in insufficient liquid pressure and rust not being removed. The effect inspection of img_r returns the gray value of the current image and feeds the wall-climbing robot appropriate pressure parameters based on that gray value, allowing the robot to work continuously on the façade protrusions as it moves.

For the painting operation, the uniformity of the painting thickness and the accuracy of the painting path are important indicators. Due to the robot's movement errors, missed painting can occur, and the swing angle may need to be adjusted so that the spray covers the entire façade protrusion. The effect inspection of img_p returns the distance and position of the missed painting, and the wall-climbing robot dynamically adjusts the swing angle of the spray gun through the control motor. The pseudocode for parameter adjustment of rust removal and painting is given in Algorithm 3 and Algorithm 4, respectively:

| | Algorithm 3: Dynamic adjustment of process parameters for rust removal |
| --- | --- |
| | Input: image of rust removal img_r, threshold of rust removal thres |
| | Output: process parameters P, control command for the robot cmd |
| 1: | v ← 0 // rust removal comparison value |
| 2: | Convert img_r to gray image gray |
| 3: | Obtain v according to Equation (1) |
| 4: | cmd ← 0 |
| 5: | if v > thres then |
| 6: | Increase the liquid pressure in P |
| 7: | Reduce the gun movement speed in P |
| 8: | else |
| 9: | Send a command cmd for the robot to move on |
| 10: | end if |
| 11: | return process parameters P, control command cmd |
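The decision logic of Algorithm 3 can be sketched in Python as below. This is an illustrative sketch under stated assumptions, not the authors' code: the comparison value `v` is assumed to have been computed already, the `ProcessParams` fields, their units and the step sizes are hypothetical, and `cmd = 1` is assumed to mean "move on" while `cmd = 0` means "stay and rework".

```python
from dataclasses import dataclass


@dataclass
class ProcessParams:
    pressure_mpa: float    # liquid pressure (unit assumed for illustration)
    gun_speed_mm_s: float  # gun movement speed (unit assumed for illustration)


def adjust_rust_removal(v, thres, params, pressure_step=5.0, speed_step=10.0):
    """Return (params, cmd) following the branch structure of Algorithm 3."""
    if v > thres:
        # Rust removal judged incomplete: raise pressure, slow the gun, do not move on.
        adjusted = ProcessParams(
            pressure_mpa=params.pressure_mpa + pressure_step,
            gun_speed_mm_s=max(params.gun_speed_mm_s - speed_step, 0.0),
        )
        return adjusted, 0
    # Rust removal judged complete: keep the parameters and let the robot move on.
    return params, 1


params = ProcessParams(pressure_mpa=100.0, gun_speed_mm_s=50.0)
reworked, cmd_rework = adjust_rust_removal(v=1.8, thres=1.2, params=params)
unchanged, cmd_move = adjust_rust_removal(v=0.6, thres=1.2, params=params)
print(reworked, cmd_rework)
print(unchanged, cmd_move)
```

Returning a new parameter object instead of mutating the input keeps the previous settings available, which can be convenient if an adjustment has to be rolled back.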

| | Algorithm 4: Dynamic adjustment of process parameters for painting |
| --- | --- |
| | Input: image of painting img_p |
| | Output: process parameters P, control command for the robot cmd |
| 1: | Init edge // edge image by Canny edge detection |
| 2: | Init LINE // pixel distance for missed spray on each line of edge |
| 3: | cmd ← 0 |
| 4: | g ← 0 // actual distance of the missed spray |
| 5: | dmax ← 0 // maximum pixel distance of the missed spray |
| 6: | Convert img_p into edge by Canny edge detection |
| 7: | LINE ← pixel distance of the missed spray on each line of edge |
| 8: | for each line in LINE do // obtain the maximum distance through cyclic comparison |
| 9: | if line > dmax then |
| 10: | dmax ← line |
| 11: | end if |
| 12: | end for |
| 13: | Obtain g according to Equation (3) |
| 14: | if g > 0 mm then // adjust process parameters if missed spray exists |
| 15: | Increase the gun swing angle in P |
| 16: | else |
| 17: | Send a command cmd for the robot to move on |
| 18: | end if |
| 19: | return process parameters P, control command cmd |
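Algorithm 4 can be sketched in the same way. The sketch below assumes the Canny step has already produced a binary edge image (a list of rows of 0/1 values) and measures, on each row, the distance between the first and last edge pixel; this per-row rule, the angle step and the `cmd` convention (1 = move on, 0 = rework) are assumptions for illustration.

```python
def row_gap_pixels(row):
    """Pixel distance between the first and last edge pixel in one image row."""
    cols = [x for x, value in enumerate(row) if value]
    return cols[-1] - cols[0] if len(cols) >= 2 else 0


def adjust_painting(edge, k, swing_angle_deg, angle_step_deg=2.0):
    """Return (swing_angle_deg, cmd, g_mm) following the branch structure of Algorithm 4."""
    # Maximum missed-spray pixel distance over all rows of the edge image.
    d_max = max((row_gap_pixels(row) for row in edge), default=0)
    g_mm = d_max * k  # pixel distance times pixel equivalent
    if g_mm > 0:
        # Missed spray exists: widen the swing angle and do not move on.
        return swing_angle_deg + angle_step_deg, 0, g_mm
    return swing_angle_deg, 1, g_mm


# Hypothetical 3 x 7 edge image: 1 marks an edge pixel of the missed area.
edge = [
    [0, 1, 0, 0, 1, 0, 0],
    [0, 1, 0, 0, 0, 0, 1],
    [0, 0, 1, 0, 1, 0, 0],
]
angle, cmd, g = adjust_painting(edge, k=0.5, swing_angle_deg=30.0)
print(angle, cmd, g)  # 32.0 0 2.5
```

Taking the maximum with a generator replaces the explicit comparison loop of lines 8 to 12 without changing the result.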

6. Experimental Results and Analysis

6.1. Experimental Platform Construction and Preparation

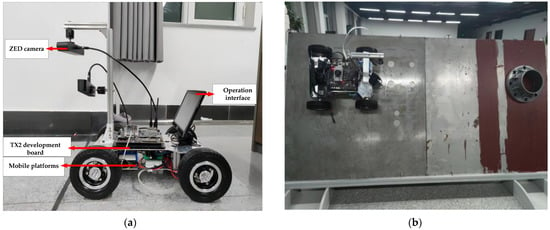

As shown in Figure 6, the experimental prototype is built around the NVIDIA Jetson TX2 module, which supports CUDA for high-performance image processing and was chosen for its performance, portability and suitability for the working environment. A ZED binocular camera was selected because it can carry out high-quality 3D video capture and can also be used for motion tracking, 3D mapping and other tasks. During the operation of the system, data transmission between devices adopts protocols with low energy consumption and latency [35].

Figure 6. Experimental platform construction: (a) experimental prototype in a simulated façade environment; (b) hardware components of the experimental prototype.

Before the camera can be used for recognition, the binocular camera must be calibrated and rectified. The internal and external parameters of the camera are calibrated and solved using corner point detection, the distortion parameters are obtained using least squares and, finally, the Bouguet algorithm is used to achieve stereo rectification.

6.2. Façade Protrusion Recognition

(1) Classification

The recognition algorithm uses the YOLOv5 convolutional neural network. A total of 1000 samples were collected and used to train the model, and the training parameters were adjusted. After 500 rounds of training, the model was evaluated and achieved an average accuracy of 99.63%.

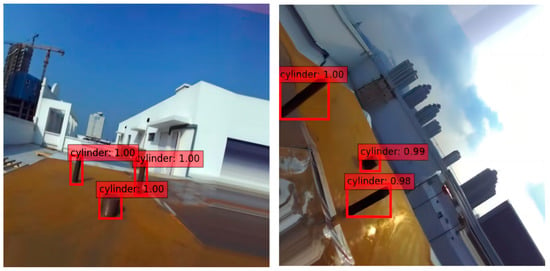

The recognition accuracy of the model was tested under different weather conditions, and the recognition results included the category of the target object, the candidate frame, the coordinate value and the confidence level. The recognition results are shown in Figure 7. The confidence level of the model in recognizing cylindrical objects under different lighting conditions and at different orientations exceeded 0.9.

Figure 7. Recognition results in different weather conditions and orientations.

(2) Distance measurement

The SGBM algorithm was applied to stereo matching to obtain depth images. After rectifying and matching the images of the 800 mm column section, a depth image was obtained, as shown in Figure 8, and the experimentally measured depths are shown in Table 5.

Figure 8. Depth image of a columnar target.

Table 5. Depth measurement results of a columnar target at different distances.

Table 5 shows that the depth error increases as the distance increases. The depth error at 1000 mm is 1.579%, which is sufficient to meet operational requirements.
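The growth of the depth error with distance is consistent with the standard geometry of a rectified stereo pair, where depth is $Z = fB/d$ for focal length $f$ (in pixels), baseline $B$ and disparity $d$, so that a fixed disparity error produces a depth error proportional to $Z^2$. The sketch below illustrates this relation only; it does not implement SGBM, and the focal length, baseline and disparity error are hypothetical values, not the parameters of the camera used here.

```python
def depth_mm(focal_px, baseline_mm, disparity_px):
    """Depth of a point in a rectified stereo pair: Z = f * B / d."""
    return focal_px * baseline_mm / disparity_px


def depth_error_mm(focal_px, baseline_mm, z_mm, disparity_error_px):
    """First-order depth error caused by a disparity error: Z^2 / (f * B) * delta_d."""
    return z_mm ** 2 / (focal_px * baseline_mm) * disparity_error_px


FOCAL_PX = 700.0         # hypothetical focal length in pixels
BASELINE_MM = 120.0      # hypothetical distance between the two lenses
DISPARITY_ERR_PX = 0.25  # hypothetical stereo matching error

z_near = depth_mm(FOCAL_PX, BASELINE_MM, disparity_px=168.0)  # 500 mm
z_far = depth_mm(FOCAL_PX, BASELINE_MM, disparity_px=84.0)    # 1000 mm
err_near = depth_error_mm(FOCAL_PX, BASELINE_MM, z_near, DISPARITY_ERR_PX)
err_far = depth_error_mm(FOCAL_PX, BASELINE_MM, z_far, DISPARITY_ERR_PX)
print(z_near, err_near)
print(z_far, err_far)
```

Under these assumptions, doubling the distance roughly quadruples the absolute depth error and doubles the relative error, which matches the trend reported in Table 5.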

6.3. Operation Effect Inspection

(1) Rust removal inspection

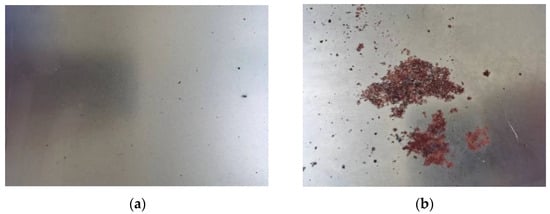

A total of 200 pictures were taken to simulate façade operation scenes with different degrees of rust removal. In accordance with the technical acceptance index for rust removal on large Chinese ships, such as the Sa2.5 grade in GB 8923, the individual pictures were assessed by comparing the sample pictures, as shown in Figure 9. The samples were divided into two categories—qualified and unqualified. Of the samples, there were 42 pictures in the qualified category and 158 pictures in the unqualified category.

Figure 9. Comparison of complete and incomplete rust removal images: (a) complete rust removal image; (b) incomplete rust removal image.

The images of complete rust removal were compared with 200 samples and the comparison value was recorded. The results showed that the comparison values of the images of complete rust removal were all less than 1, and the comparison values of the images of incomplete rust removal were all greater than 1.5. Based on these results, the threshold value of comparison was set at 1.2. The rust removal was complete when the comparison value was less than or equal to the threshold value, and the rust removal was incomplete when the comparison value was greater than the threshold value.

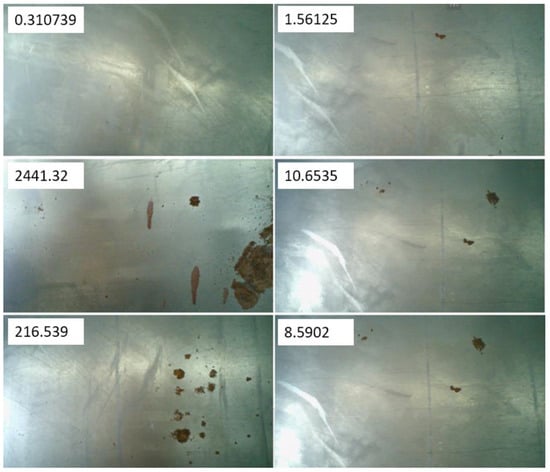

After defining the threshold value of comparison, the inspection algorithm was applied to the wall-climbing robot to inspect the effect of rust removal. A total of 51 images were captured by the binocular vision inspection system and the comparison value for each image was displayed in the top left corner, with some of the inspection results shown in Figure 10.

Figure 10. Inspection under different rust removal effects.

The evaluation indicators obtained from the inspection are shown in Table 6. The data show that the rust removal effect inspection has an accuracy of 98%, while the precision is only 93.33%. This is because the binocular inspection system lost edge information when processing the images and judged pictures that were actually unqualified for rust removal as qualified, which lowered the precision. To avoid this problem, the images of the façade surface can be fully captured by controlling the motion path of the wall-climbing robot in the actual work process.

Table 6. Performance evaluation indicators of rust removal effect inspection.
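The two reported rates behave like accuracy and precision computed from a confusion matrix, where "qualified" is treated as the positive class. The sketch below shows the calculation. The counts are hypothetical: they are one split of 51 images chosen only because it reproduces the reported percentages, and they are not the entries of Table 6.

```python
def accuracy(tp, fp, tn, fn):
    """Share of all images that were judged correctly."""
    return (tp + tn) / (tp + fp + tn + fn)


def precision(tp, fp):
    """Share of images judged qualified that really are qualified."""
    return tp / (tp + fp)


# Hypothetical counts for 51 inspected images (not taken from Table 6):
# one unqualified image is wrongly judged as qualified (a false positive).
tp, fp, tn, fn = 14, 1, 36, 0

acc = accuracy(tp, fp, tn, fn)
prec = precision(tp, fp)
print(f"accuracy = {acc:.2%}, precision = {prec:.2%}")
# accuracy = 98.04%, precision = 93.33%
```

With these assumed counts, a single false positive barely changes the accuracy but lowers the precision noticeably, because few images fall in the qualified class.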

(2) Painting inspection

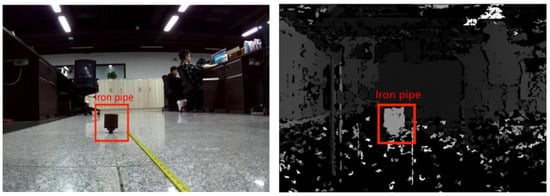

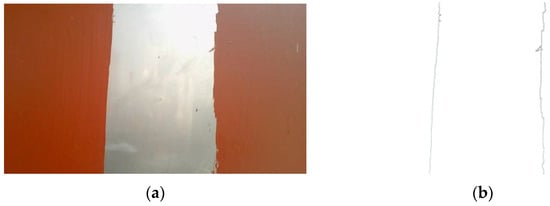

After simulating a painting operation scene on the façade, the binocular vision inspection system acquired 12 images of the missed painting area, using Canny edge detection to extract the curves on both sides of the missed painting area, and then measured the actual maximum distance of the missed painting area.

As shown in Figure 11, the Canny edge detection clearly extracts the outline of the missed painting area, providing pixel distance information for the subsequent calculation of the actual distance.

Figure 11. Process of inspecting missed painted areas: (a) image of painting to be tested; (b) Canny edge detection result.

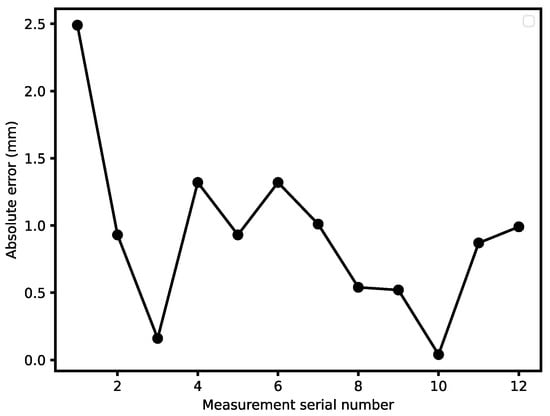

On the basis of the edge image obtained by Canny edge detection, the pixel distance between the edge points on each row of the edge image was calculated iteratively, the maximum row pixel distance was then obtained by comparing the rows and, finally, it was multiplied by the pixel equivalent to obtain the actual distance of the missed painting area. In the experiment, a missed painting area with an actual distance of 100 mm was measured in 12 groups. The experimental data are recorded in Table 7 and the error curve is plotted in Figure 12.

Table 7. Measurement results of missed painting distances.

Figure 12. Measurement error curve of missed painting distance.

According to Figure 12, the absolute error of the binocular inspection system for the measurement of the missed painting distance is less than 3 mm, and the average relative error is less than 2%, which meets actual engineering requirements.

7. Conclusions and Future Works

This paper proposes a binocular vision-based method for rust removal and painting on the large-scale façades of ships and tanks to address the problems of cleaning and maintenance. We designed a three-part process that includes detection, operation and feedback adjustment on façades. The results of the visual recognition are compared with the dimension database to increase the reliability of the recognition. Operation parameters are selected from the process database according to the type of protrusion, enabling the robot to operate appropriately in environments that contain different protrusions. The operation effect is inspected using image processing, and the robot receives the inspection results and decides whether to adjust the operation parameters, which realizes dynamic parameter adjustment and improves the operation effect. Experimental results show that the accuracy of façade protrusion recognition reaches 99.63% and the accuracy of the rust removal effect inspection reaches 93.33%. The absolute error of the missed painting distance measurement is less than 3 mm, and the average relative error is less than 2%.

As mentioned in the related works, no similar application has been found for assisting wall-climbing robots in recognizing façade protrusions. However, compared with applications of visual recognition in non-façade operations, such as pipes and ship cabins, the method proposed in this article achieves the same level of accuracy. In addition, compared with the recognition accuracy of the rust removal effect reported in the field of façade operations, the inspection accuracy of the proposed method is within the normal range and meets the actual operational requirements of wall-climbing robots. The innovation of this paper is that a single vision system accomplishes both façade protrusion recognition and operation effect inspection, which offers more functions and higher integration.

The proposed method still has some shortcomings. For example, measuring the missed painting distance is computationally expensive when the missed painting area has a complex, irregular shape. In addition, since the operation parameters depend on the result of façade protrusion recognition, the robot takes a long time to adjust the operation parameters when the recognition result is wrong.

In future research, depending on the relevant problems in future experiments, the following directions could be investigated:

- The algorithm of missed painting distance measurement could be improved in order to reduce the computation.

- A method could be designed to remedy the errors in façade protrusion recognition, which would improve the fault tolerance of robot operation.

- The vision detection system proposed in this paper could be combined with path planning to improve the intelligence of a wall-climbing robot on a façade.

Author Contributions

Conceptualization and administration, M.Z.; writing, Y.M. and Z.L.; data sources, J.H.; review and editing, Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key R&D Program of China (Grant number 2018YFB1309400).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available from the author Ming Zhong upon reasonable request.

Acknowledgments

The authors would like to thank Binyang Xu and Dahai Yu who participated in the experiments.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Wang, Z.; Fang, S.; Zhu, F.; Wang, W.; Li, Y.; Chen, T.; Wang, J. Design of hull ultra high-pressure water ejector derusting system. IOP Conf. Ser. Earth Environ. Sci. 2019, 300, 032016.

2. Zhang, F.; Sun, X.; Li, Z.; Mohsin, I.; Wei, Y.; He, K. Influence of Processing Parameters on Coating Removal for High Pressure Water Jet Technology Based on Wall-Climbing Robot. Appl. Sci. 2020, 10, 1862.

3. Fang, G.; Cheng, J. Advances in Climbing Robots for Vertical Structures in the Past Decade: A Review. Biomimetics 2023, 8, 47.

4. Zhou, Q.; Li, X. Visual Positioning of Distant Wall-Climbing Robots Using Convolutional Neural Networks. J. Intell. Robot. Syst. 2020, 98, 603–613.

5. Hong, K.L.; Wang, H.G.; Yuan, B.B. Inspection-Nerf: Rendering Multi-Type Local Images for Dam Surface Inspection Task Using Climbing Robot and Neural Radiance Field. Buildings 2023, 13, 213.

6. Jiang, J.; Liu, L.; Fu, R.; Yan, Y.; Shao, W. Non-horizontal binocular vision ranging method based on pixels. Opt. Quantum Electron. 2020, 52, 223.

7. Choudhary, S.; Chede, B.; Ahmad, S.; Ansari, M.M.; Bramhankar, S.; Donode, M. Wall Climbing Glass Cleaner Robot. Int. J. Innov. Eng. Sci. 2021, 6, 1–3.

8. Huang, W.K.; Hu, W.; Zou, T.; Xiao, J.L.; Lu, P.W.; Li, H.Q. A Modular Cooperative Wall-Climbing Robot Based on Internal Soft Bone. Sensors 2021, 21, 7538.

9. Song, C.; Cui, W. Review of Underwater Ship Hull Cleaning Technologies. J. Mar. Sci. Appl. 2020, 19, 415–429.

10. Fang, H.F.; Liu, T.P.; Chen, J.; Zhou, Y.K. Halbach Square Array Structure for Optimizing Magnetic Adsorption. J. Nanoelectron. Optoelectron. 2021, 16, 1769–1779.

11. Guo, T.; Deng, Z.D.; Liu, X.; Song, D.; Yang, H. Development of a new hull adsorptive underwater climbing robot using the Bernoulli negative pressure effect. Ocean Eng. 2022, 243, 8–19.

12. Hu, J.; Han, X.; Tao, Y.; Feng, S. A magnetic crawler wall-climbing robot with capacity of high payload on the convex surface. Robot. Auton. Syst. 2022, 148, 103907.

13. Hong, S.; Um, Y.; Park, J.; Park, H.W. Agile and versatile climbing on ferromagnetic surfaces with a quadrupedal robot. Sci. Robot. 2022, 7, eadd1017.

14. Huang, X.; Zhang, X.; Zhang, Y.; Zhao, L. A Method of Identifying Rust Status of Dampers Based on Image Processing. IEEE Trans. Instrum. Meas. 2020, 69, 5407–5417.

15. Tian, Y.; Zhang, G.T.; Morimoto, K.; Ma, S.G. Automated Rust Removal: Rust Detection and Visual Servo Control. Autom. Constr. 2022, 134, 104043.

16. Yang, J.; Luo, Y.; Zhou, Z.; Li, N.; Fu, R.; Zhang, X.; Ding, N. Automatic Rust Segmentation Using Gaussian Mixture Model and Superpixel Segmentation. In Proceedings of the 2022 International Conference on High Performance Big Data and Intelligent Systems (HDIS), Tianjin, China, 10–11 December 2022; pp. 71–78.

17. Guo, Z.M.; Tian, Y.Y.; Mao, W.D. A Robust Faster R-CNN Model with Feature Enhancement for Rust Detection of Transmission Line Fitting. Sensors 2022, 22, 7961.

18. Forkan, A.R.M.; Kang, Y.-B.; Jayaraman, P.P.; Liao, K.; Kaul, R.; Morgan, G.; Ranjan, R.; Sinha, S. CorrDetector: A framework for structural corrosion detection from drone images using ensemble deep learning. Expert Syst. Appl. 2022, 193, 116461.

19. Le, A.V.; Kyaw, P.T.; Veerajagadheswar, P.; Muthugala, M.A.V.J.; Elara, M.R.; Kumar, M.; Khanh Nhan, N.H. Reinforcement learning-based optimal complete water-blasting for autonomous ship hull corrosion cleaning system. Ocean Eng. 2021, 220, 108477.

20. Li, J.; Li, B.B.; Dong, L.J.; Wang, X.S.; Tian, M.Q. Weld Seam Identification and Tracking of Inspection Robot Based on Deep Learning Network. Drones 2022, 6, 216.

21. Zhang, K.; Chen, Y.; Gui, H.; Li, D.; Li, Z. Identification of the deviation of seam tracking and weld cross type for the derusting of ship hulls using a wall-climbing robot based on three-line laser structural light. J. Manuf. Process. 2018, 35, 295–306.

22. Li, J.; Jin, S.; Wang, C.; Xue, J.; Wang, X. Weld line recognition and path planning with spherical tank inspection robots. J. Field Robot. 2021, 39, 131–152.

23. Zhang, X.; Zhang, X.; Zhang, M.; Sun, L.; Li, M. Optimization Design and Flexible Detection Method of Wall-Climbing Robot System with Multiple Sensors Integration for Magnetic Particle Testing. Sensors 2020, 20, 4582.

24. Martin-Abadal, M.; Piñar-Molina, M.; Martorell-Torres, A.; Oliver-Codina, G.; Gonzalez-Cid, Y. Underwater Pipe and Valve 3D Recognition Using Deep Learning Segmentation. J. Mar. Sci. Eng. 2020, 9, 5.

25. Shang, D.; Zhang, J.; Zhou, K.; Wang, T.; Qi, J. Research on the Application of Visual Recognition in the Engine Room of Intelligent Ships. Sensors 2022, 22, 7261.

26. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

27. Yu, L.; Qian, M.; Chen, Q.; Sun, F.; Pan, J. An Improved YOLOv5 Model: Application to Mixed Impurities Detection for Walnut Kernels. Foods 2023, 12, 624.

28. Li, S.K.; Yang, X. The Research of Binocular Vision Ranging System Based on LabVIEW. In Proceedings of the 2nd International Conference on Materials Science, Resource and Environmental Engineering (MSREE 2017), Wuhan, China, 27–29 October 2017; AIP Publishing LLC: Melville, NY, USA, 2017.

29. Wang, L.; Miao, Y.; Han, Y.; Li, H.; Zhang, M.; Peng, C. Extraction of 3D distribution of potato plant CWSI based on thermal infrared image and binocular stereovision system. Front. Plant Sci. 2022, 13, 1104390.

30. Hong, P.N.; Ahn, C.W. Stereo Matching Methods for Imperfectly Rectified Stereo Images. Symmetry 2019, 11, 570.

31. Bhandari, A.K.; Maurya, S.; Meena, A.K. MFO-based thresholded and weighted histogram scheme for brightness preserving image enhancement. IET Image Process. 2019, 13, 896–909.

32. Pan, I.H.; Liu, K.-C.; Liu, C.-L. Chi-Square Detection for PVD Steganography. In Proceedings of the 2020 International Symposium on Computer, Consumer and Control (IS3C), Taichung City, Taiwan, 13–16 November 2020; pp. 30–33.

33. Kavipriya, K.; Lurdu, L.; Bhuvaneswari, K.; Velkannan, V.; Anitha, N.; Rajendran, S.; Lacnjevac, C. Influence of a paint coating on the corrosion of hull plates made of mild steel in natural seawater. Zast. Mater. 2022, 63, 353–362.

34. Dwivedi, R.; Gangwar, S.; Saha, S.; Jaiswal, V.K.; Mehrotra, R.; Jewariya, M.; Mona, G.; Sharma, R.; Sharma, P. Estimation of Error in Distance, Length, and Angular Measurements Using CCD Pixel Counting Technique. Mapan 2021, 36, 313–318.

35. Ahmad, A.; Ullah, A.; Feng, C.; Khan, M.; Ashraf, S.; Adnan, M.; Nazir, S.; Khan, H.U. Towards an Improved Energy Efficient and End-to-End Secure Protocol for IoT Healthcare Applications. Secur. Commun. Netw. 2020, 2020, 8867792.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).