Contactless Real-Time Eye Gaze-Mapping System Based on Simple Siamese Networks

Abstract

1. Introduction

2. Related Work

2.1. Gaze Estimation

2.2. Facial Detection, Alignment, and Recognition

3. Eye Gaze-Mapping System

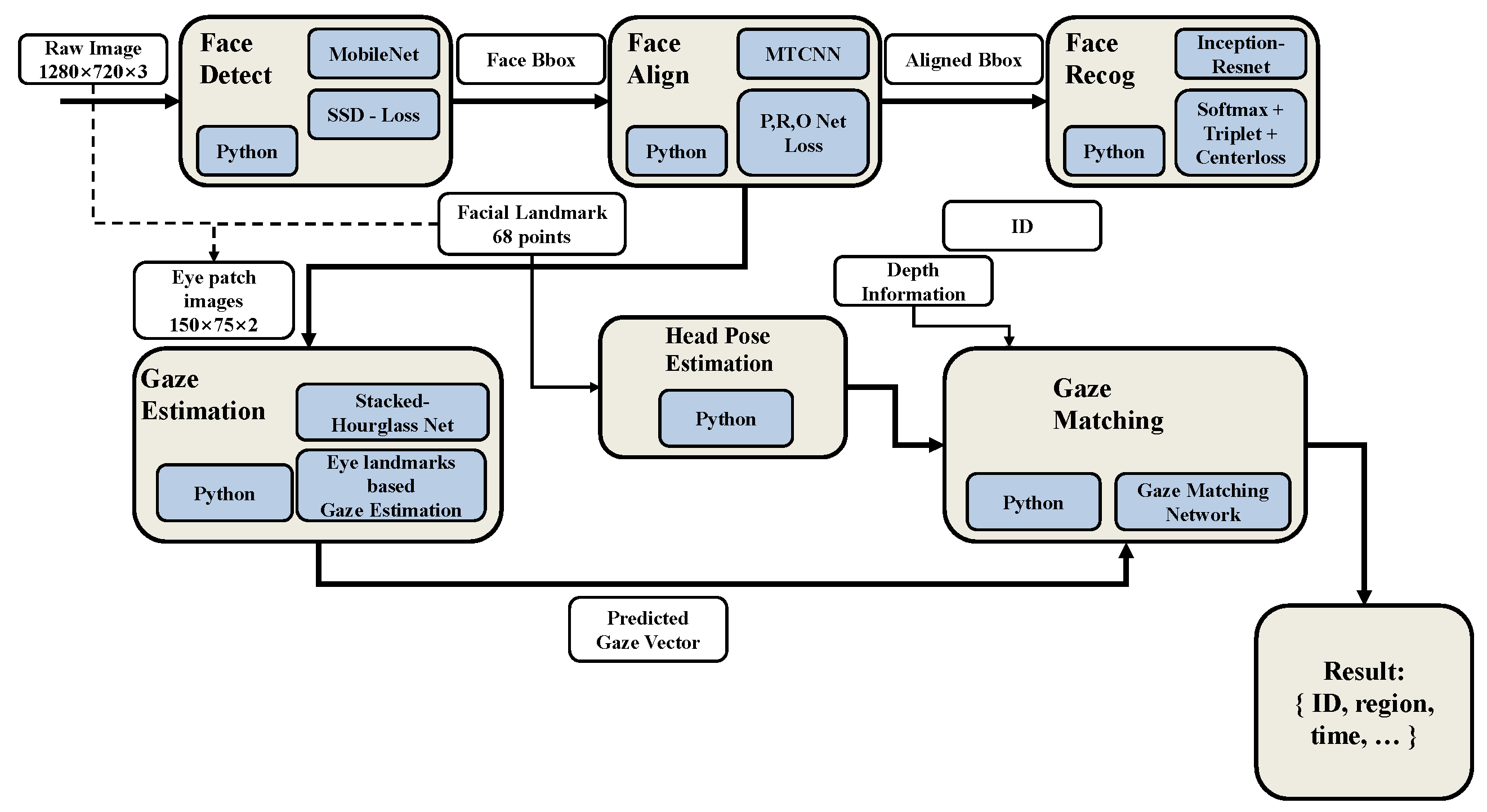

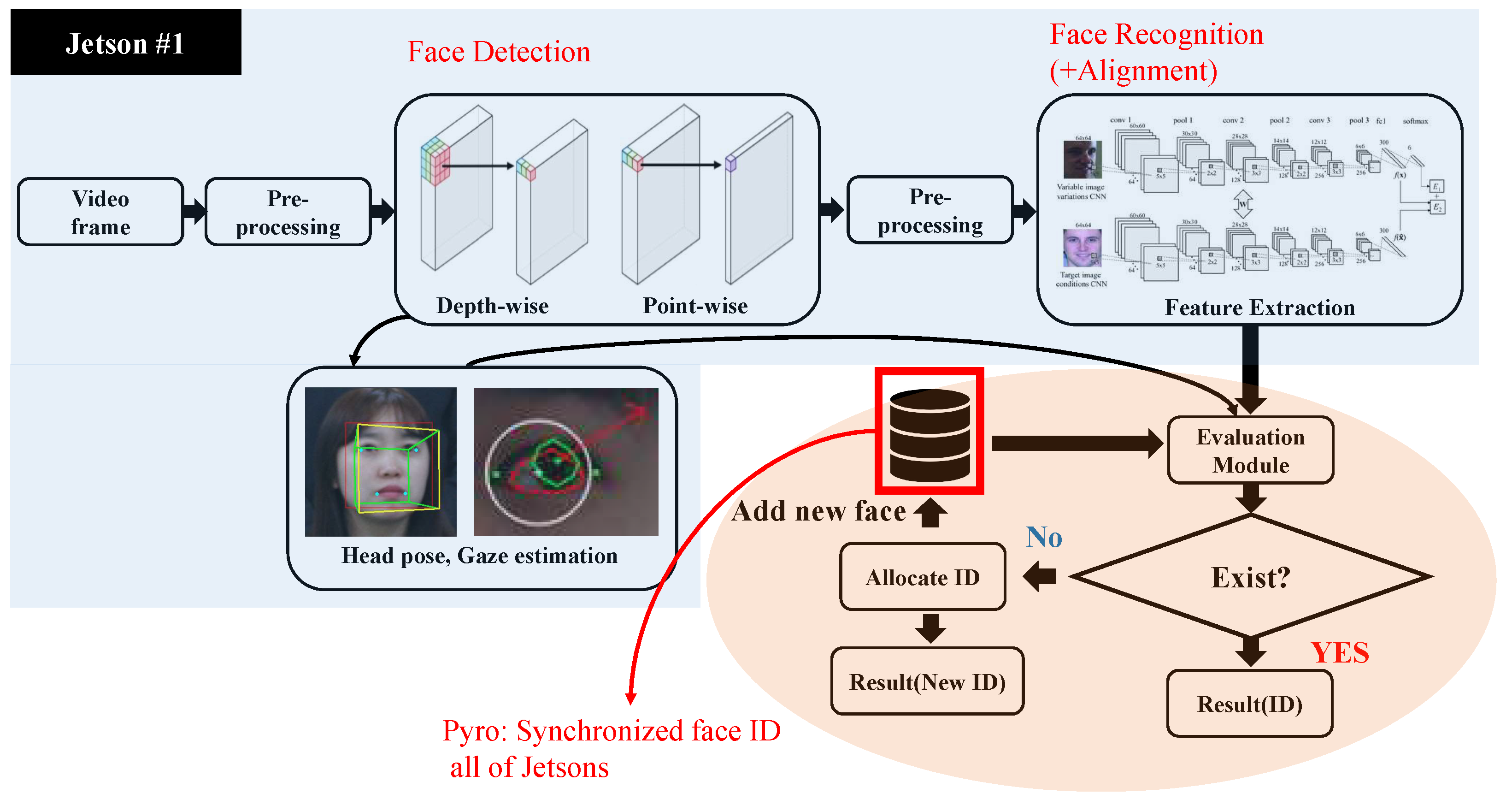

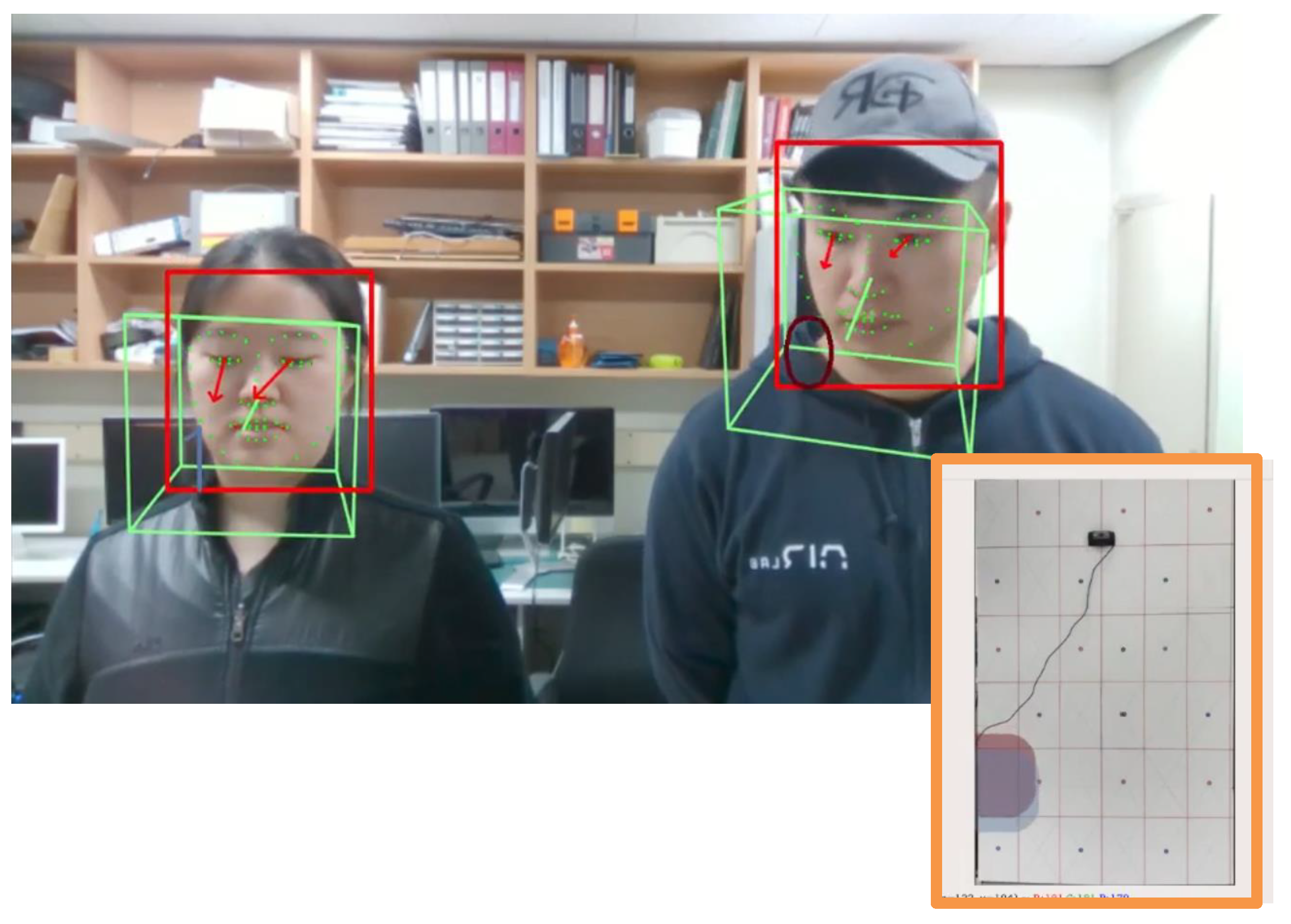

3.1. Facial Detection, Alignment, and Recognition

3.2. Gaze Estimation and Head-Pose Estimation

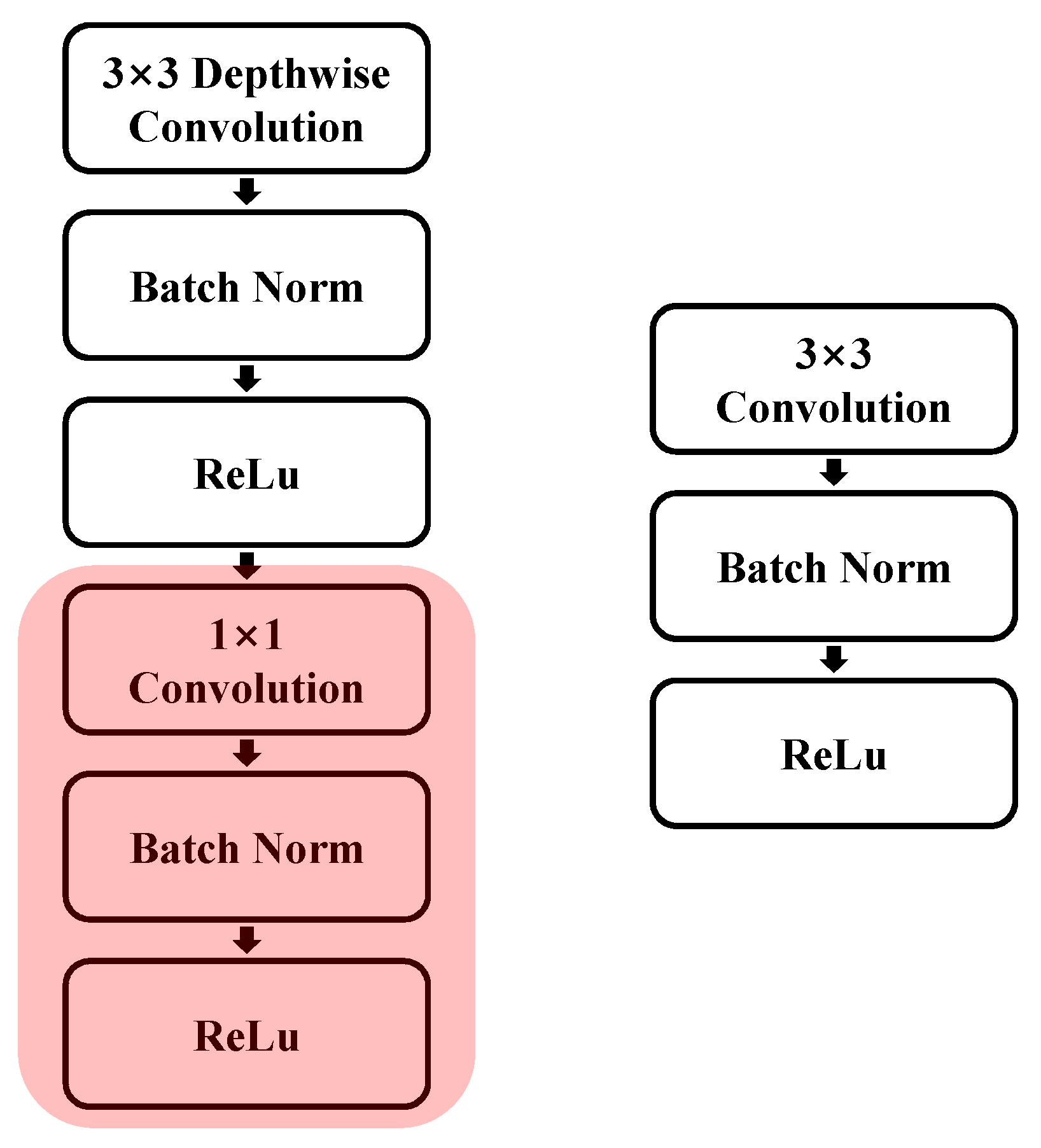

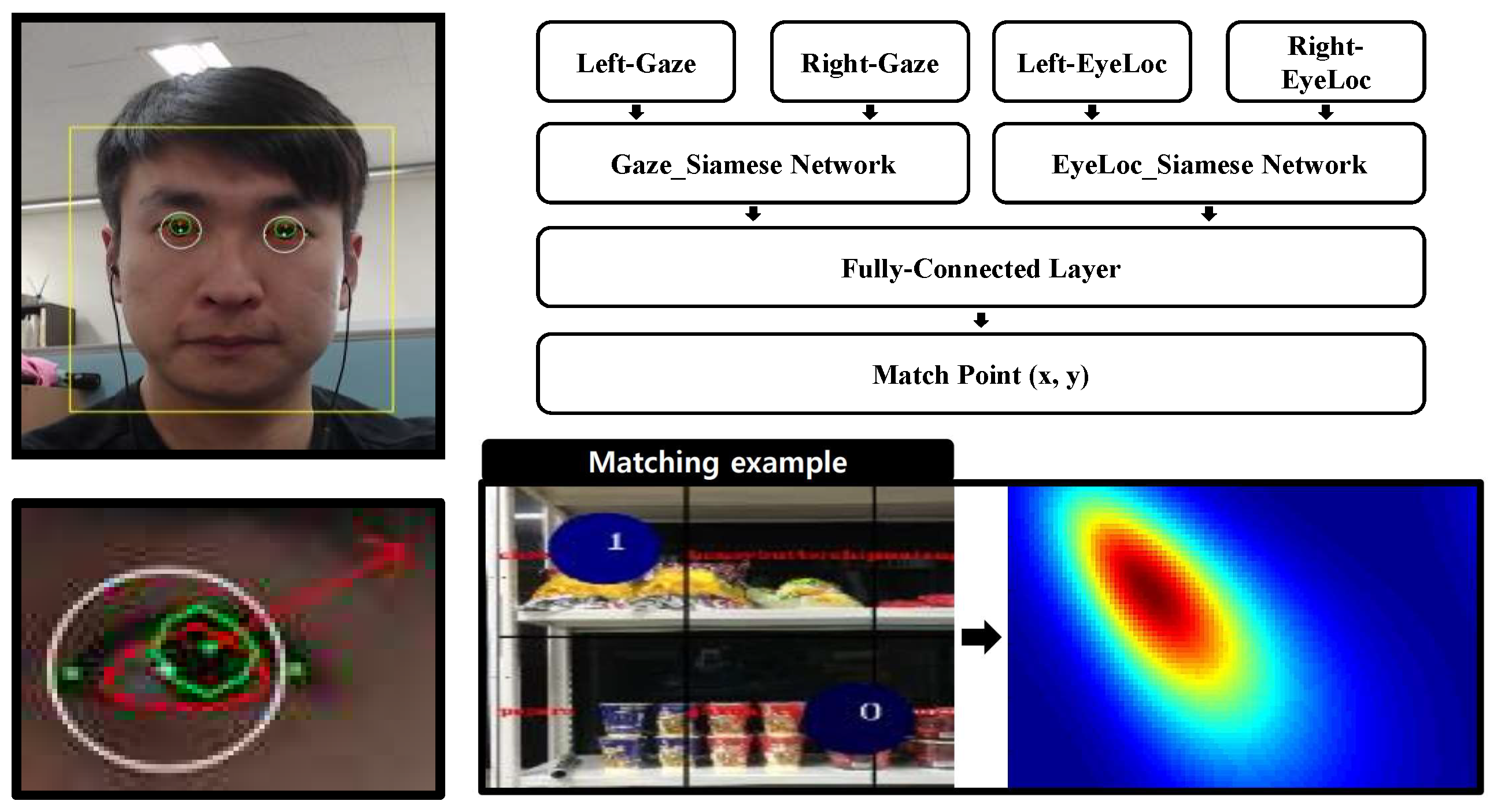

3.3. Gaze-Matching Network

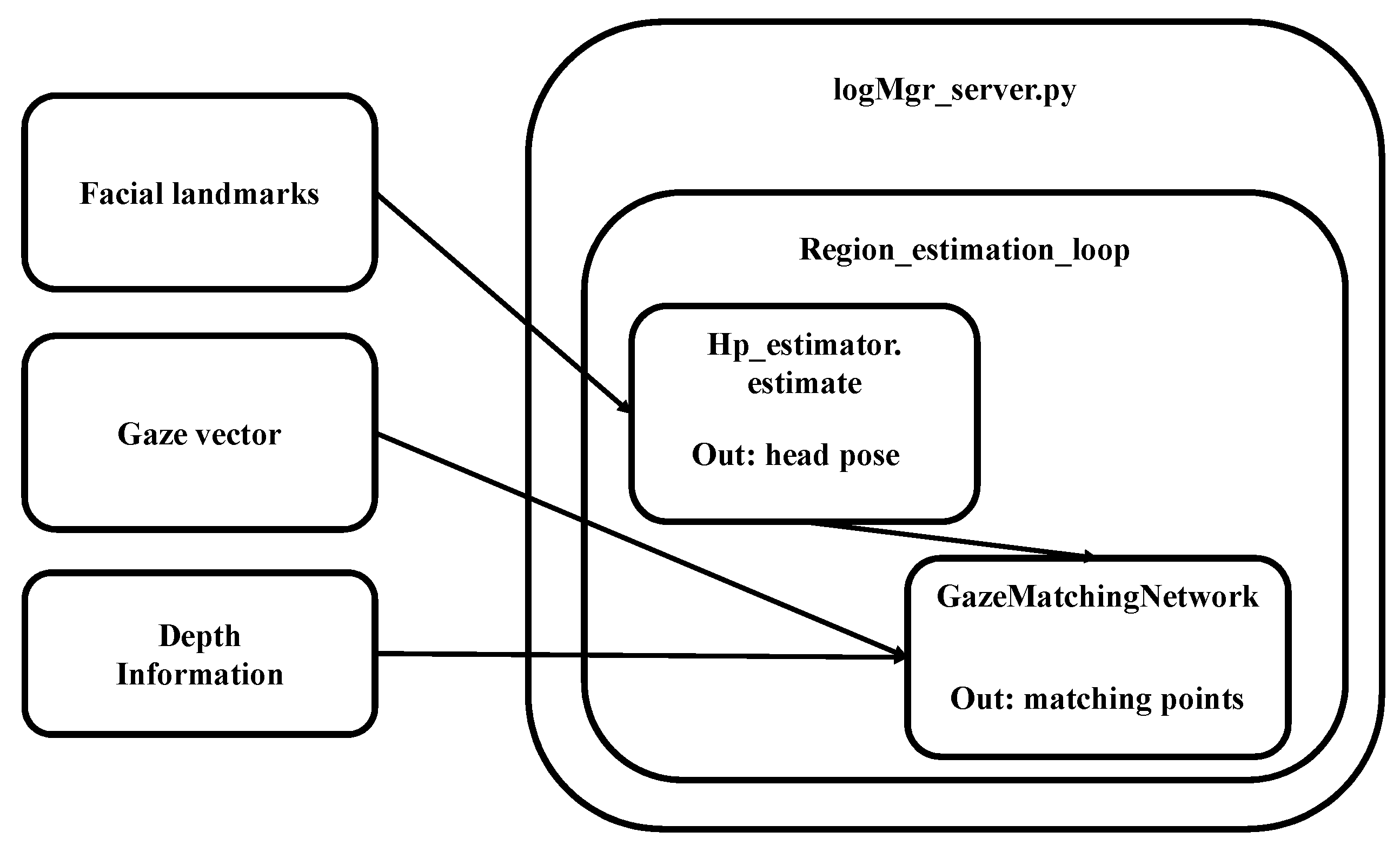

- The head-pose estimator extracted the head-pose vector from information collected from the Jetson TX2, such as the bounding box and facial landmarks.

- The gaze-matching network estimated the gazed-at area within the image by combining the outputs of several modules: the head-pose vector, the gaze vector, the bounding box, and the landmarks.

- The logging and visualization modules recorded each module's events in the database, visualized the logged data, and transmitted the images. As a result, the matching-point coordinates were obtained in every loop, and inference with the gaze-matching network was performed in the region-estimation loop during logging.
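The per-frame data flow described in the list above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. `FrameOutputs`, `match_gaze_to_plane`, `EventLog`, and `run_loop` are hypothetical names. The learned Siamese gaze-matching network is replaced by a plain geometric stand-in that intersects the gaze ray with a planar shelf at `z = plane_z` in camera coordinates. The in-memory log stands in for the database.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Point2 = Tuple[float, float]


@dataclass
class FrameOutputs:
    """Hypothetical container for one frame's upstream module outputs."""
    eye_position: Vec3                # 3D eye position in camera coordinates (m)
    gaze_vector: Vec3                 # gaze direction from the gaze estimator
    head_pose: Vec3                   # (yaw, pitch, roll) from the head-pose estimator
    bbox: Tuple[int, int, int, int]   # face bounding box (x, y, w, h) in pixels


def match_gaze_to_plane(eye: Vec3, gaze: Vec3, plane_z: float) -> Optional[Point2]:
    """Geometric stand-in for the gaze-matching network (NOT the paper's model):
    intersect the ray eye + t * gaze (t > 0) with the plane z = plane_z."""
    if abs(gaze[2]) < 1e-9:
        return None                   # ray is parallel to the shelf plane
    t = (plane_z - eye[2]) / gaze[2]
    if t <= 0:
        return None                   # shelf lies behind the gaze direction
    return (eye[0] + t * gaze[0], eye[1] + t * gaze[1])


@dataclass
class EventLog:
    """In-memory stand-in for the logging module's database."""
    records: List[dict] = field(default_factory=list)

    def log(self, frame_id: int, module: str, payload) -> None:
        self.records.append({"frame": frame_id, "module": module, "payload": payload})


def run_loop(frames: List[FrameOutputs], plane_z: float, log: EventLog) -> List[Optional[Point2]]:
    """One pass of the region-estimation loop: log each module's output and
    obtain a matching point for every frame."""
    points: List[Optional[Point2]] = []
    for i, f in enumerate(frames):
        log.log(i, "head_pose", f.head_pose)
        log.log(i, "gaze", f.gaze_vector)
        # A learned matcher would also consume head_pose, bbox, and landmarks;
        # the geometric stand-in only needs the eye position and gaze direction.
        point = match_gaze_to_plane(f.eye_position, f.gaze_vector, plane_z)
        log.log(i, "gaze_matching", point)
        points.append(point)
    return points


if __name__ == "__main__":
    frames = [
        FrameOutputs((0.0, 0.0, 0.0), (0.5, 0.0, 1.0), (10.0, 0.0, 0.0), (100, 80, 64, 64)),
        FrameOutputs((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), (0.0, 0.0, 0.0), (100, 80, 64, 64)),
    ]
    log = EventLog()
    print(run_loop(frames, plane_z=1.0, log=log))  # [(0.5, 0.0), None]
    print(len(log.records))                         # 6
```

Keeping the loop separate from the matcher means a real deployment could swap `match_gaze_to_plane` for a network inference call, and `EventLog` for a database writer, without changing the loop itself.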

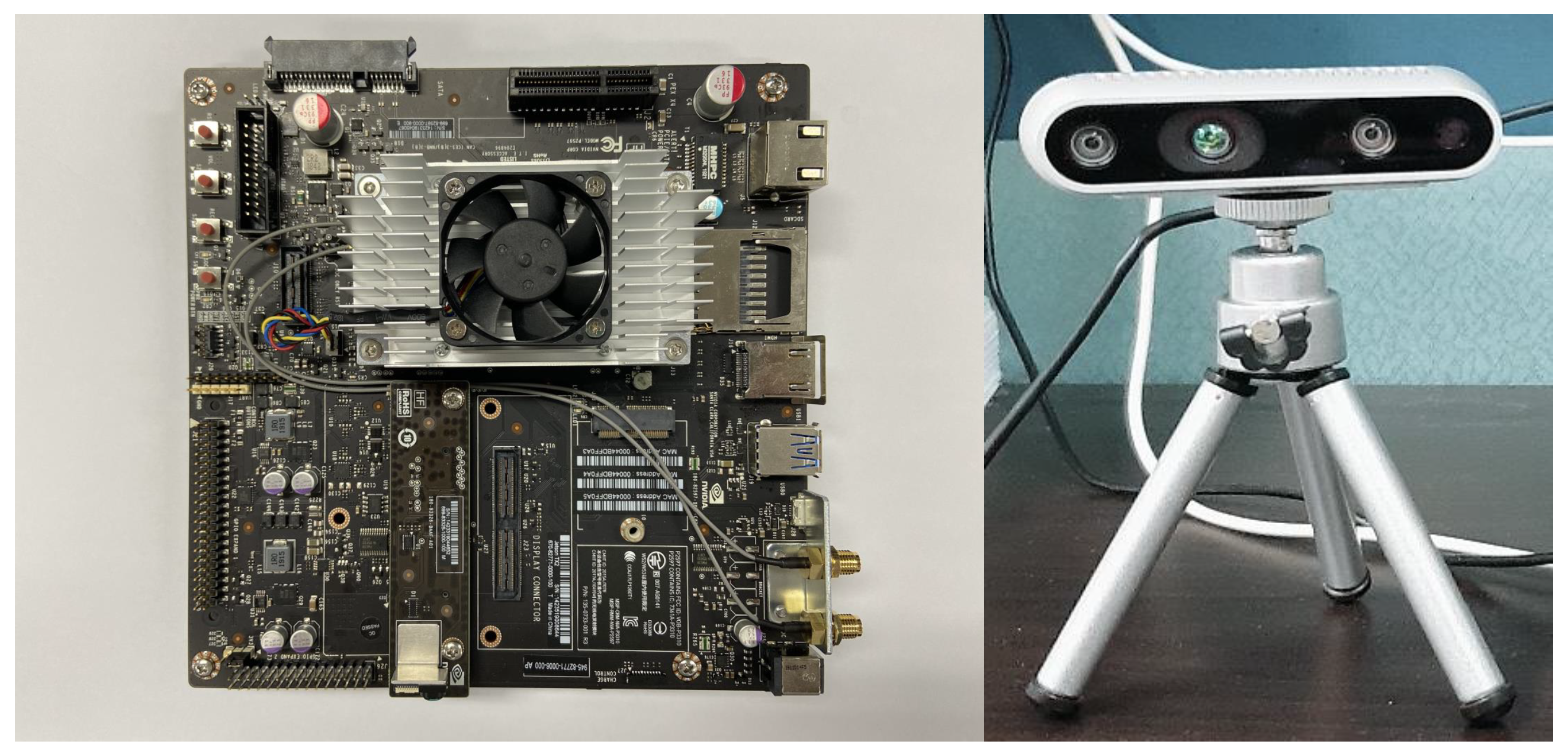

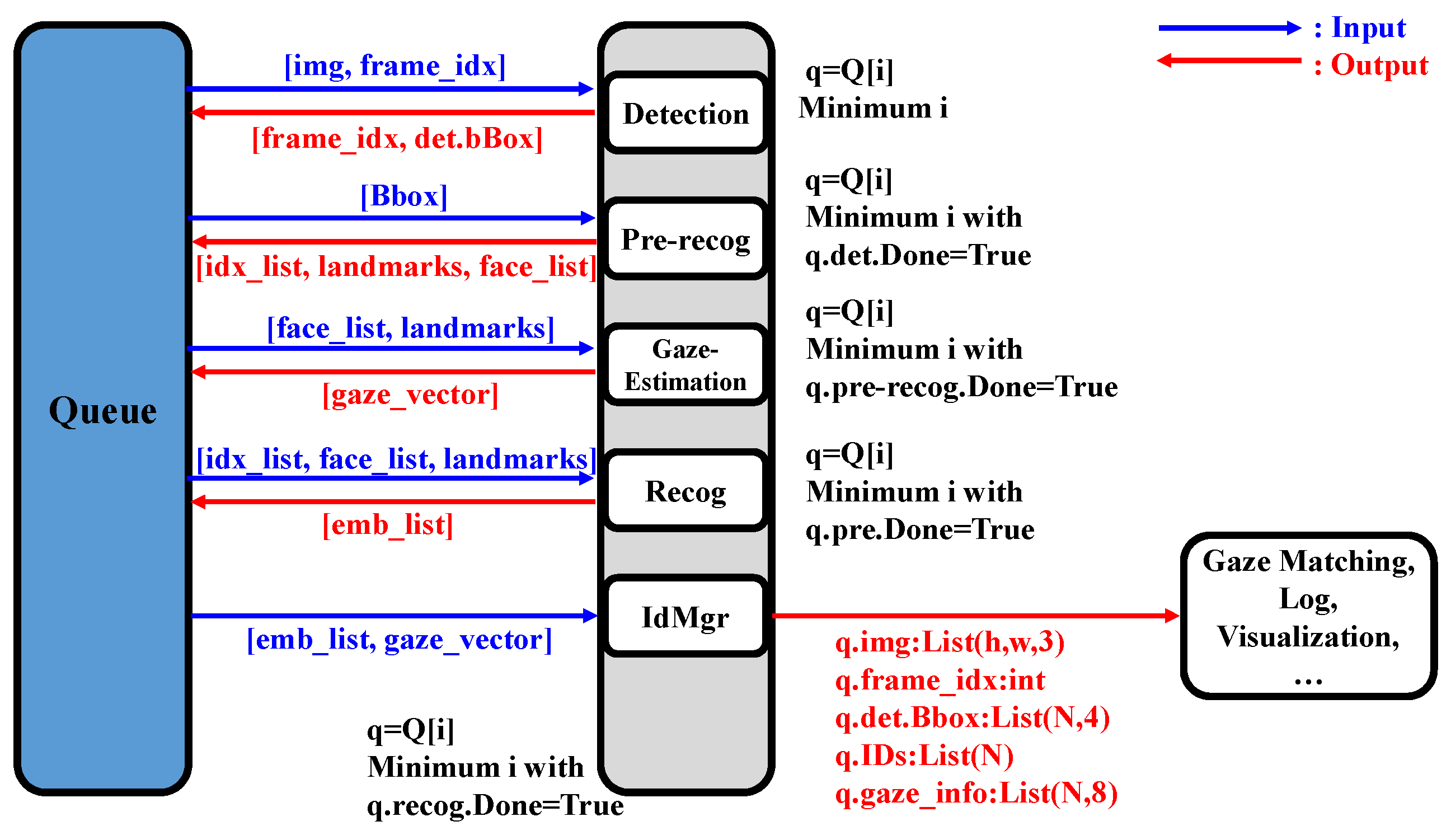

3.4. Gaze-Mapping System Parallelization and Optimization

4. Experiments

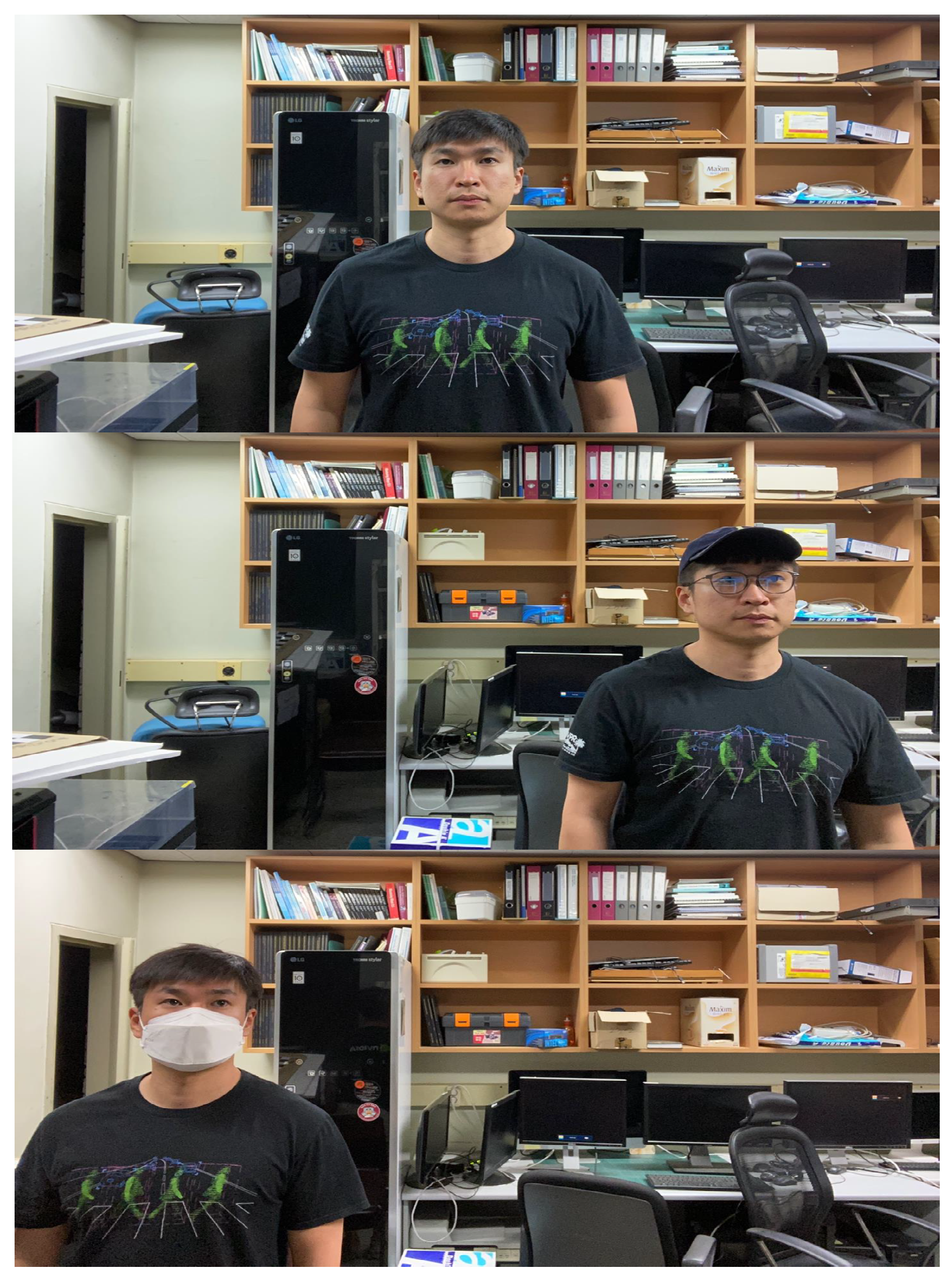

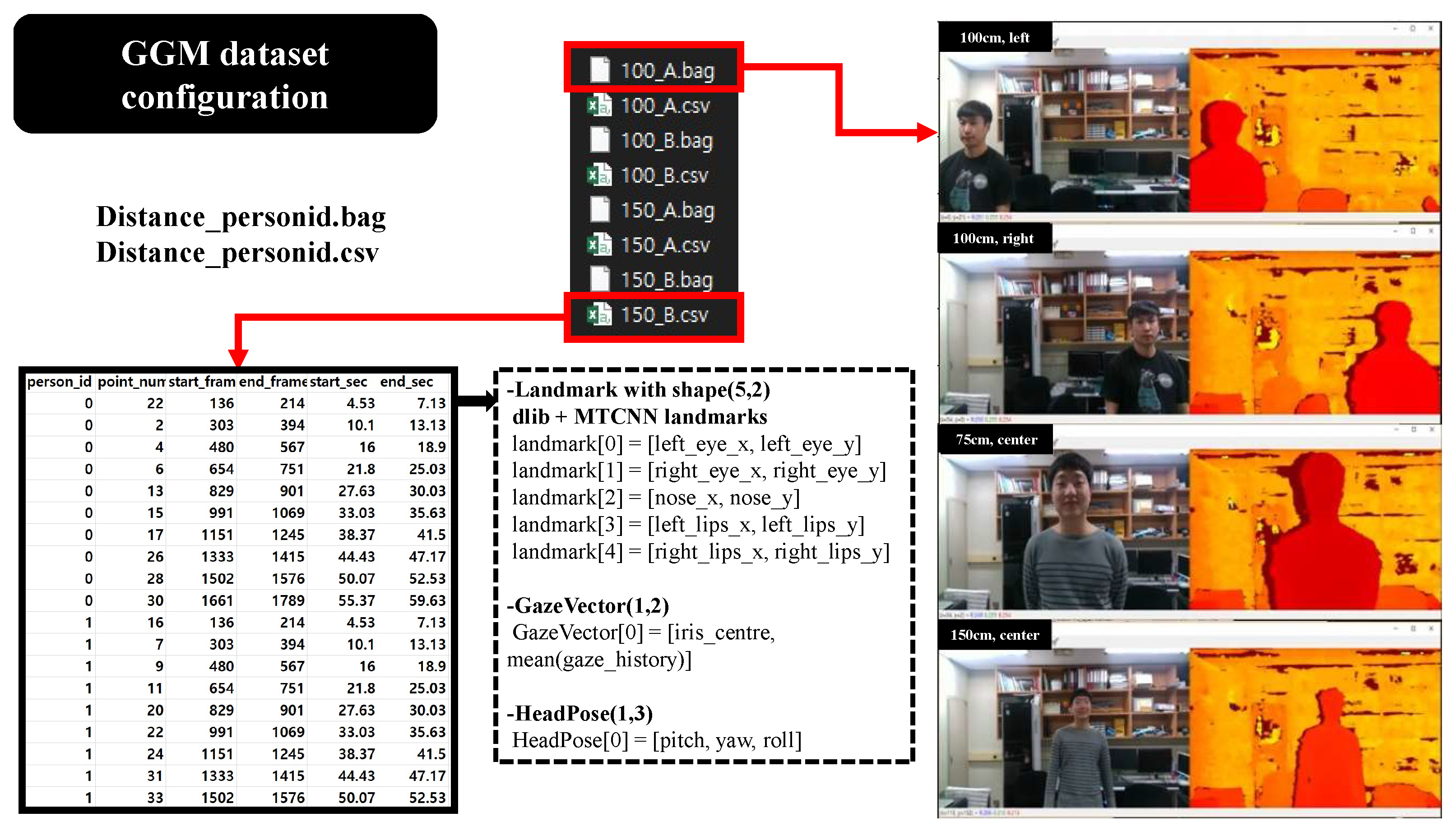

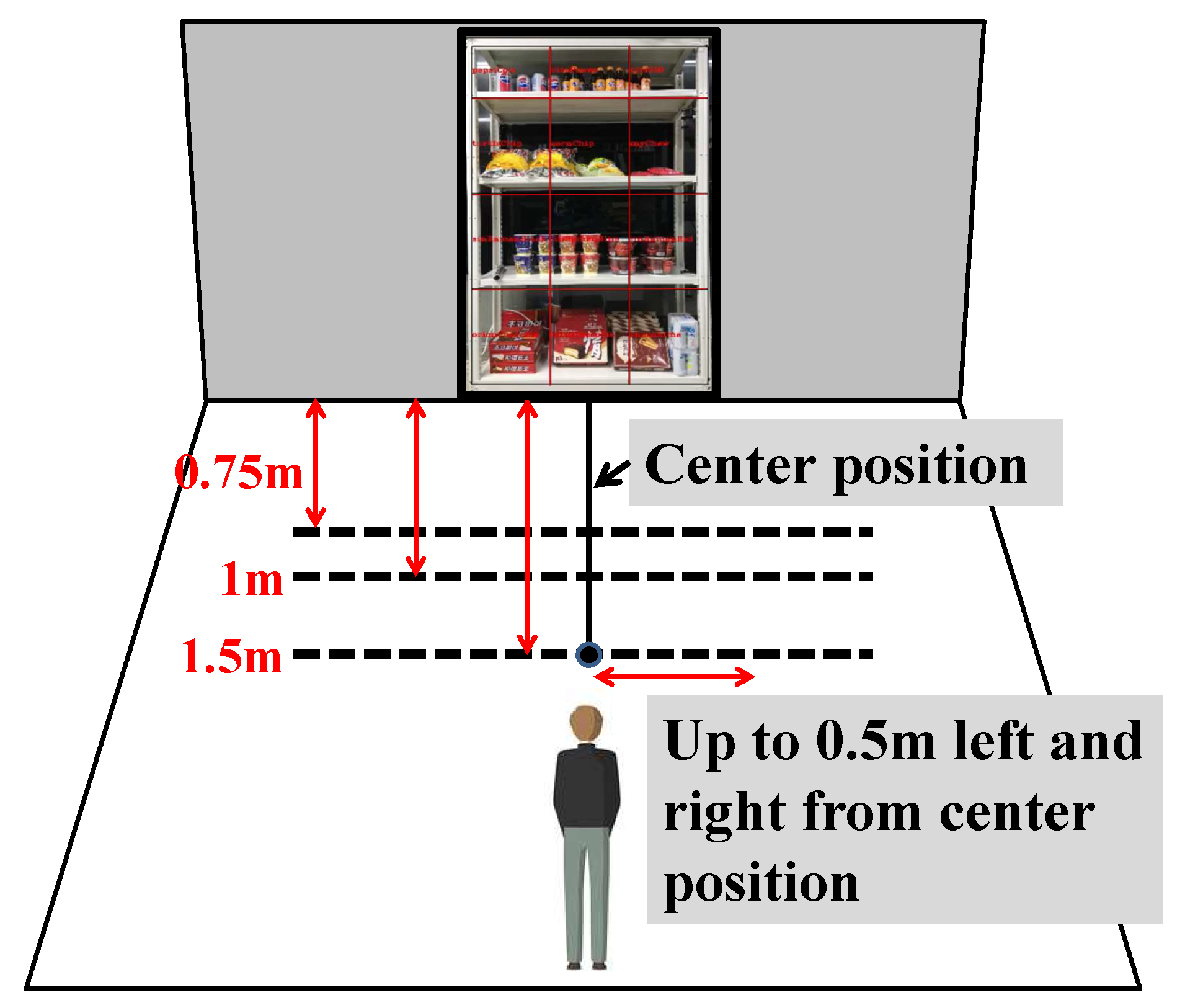

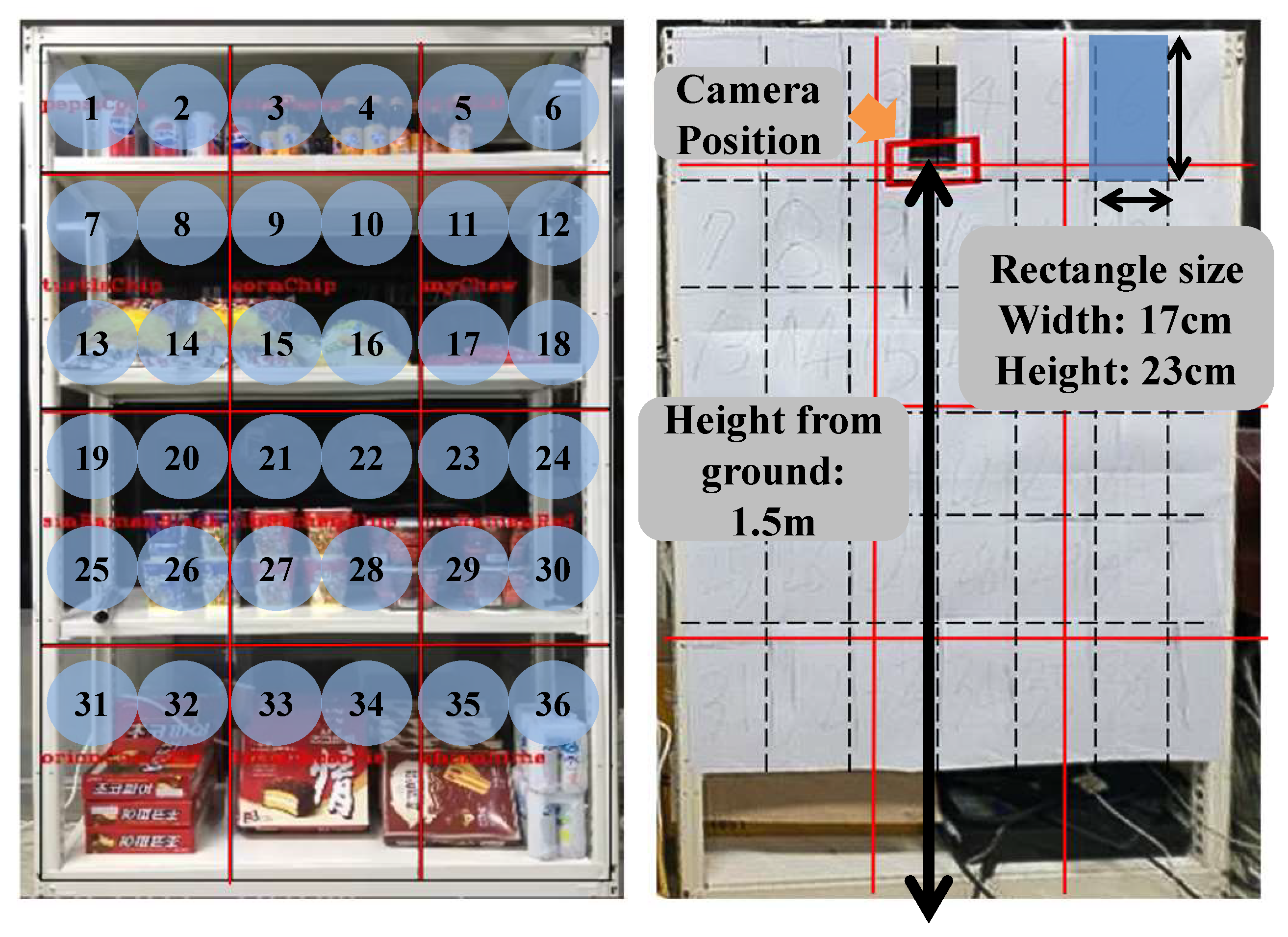

4.1. GGM Dataset

4.2. Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| User Case | Conditions |
|---|---|
| Common conditions | 1. A/B/C/D sub-cases (total points per user: 80 = 10 points × 4 sub-cases × 2 trials) |
| | A: No accessories; stared at the front of the shelves |
| | B: No accessories; 30° side view |
| | C: Wore accessories (glasses, hats, masks); stared at the front of the shelves |
| | D: Wore accessories (glasses, hats, masks); stared 30° to the left and right |
| | 2. Complied with the grid setting (6 × 6 grid shelf) |
| Different conditions (CASE 1–3) | 1. The two users must differ in height (user heights: 155 cm and 175 cm, ±5 cm) |
| | 2. The two users must differ in height (user heights: 165 cm and 185 cm, ±5 cm) |
| | 3. Turn the head as far as possible and stare |
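The 6 × 6 grid condition suggests that each estimated matching point is scored against a target shelf cell. The sketch below shows one way to do that. Several parts of it are assumptions, because the scoring criterion is not spelled out here: the shelf dimensions, the top-left origin, and the rule that a trial counts as correct when the predicted point lands in the target cell. `point_to_cell` and `hit_rate` are hypothetical helpers.

```python
from typing import Optional, Sequence, Tuple

Cell = Tuple[int, int]


def point_to_cell(x: float, y: float, width: float, height: float,
                  rows: int = 6, cols: int = 6) -> Optional[Cell]:
    """Map a point on the shelf face to a (row, col) cell of a rows x cols grid.
    Assumed convention: origin at the shelf's top-left corner, x to the right,
    y downward, in the same units as width and height."""
    if not (0.0 <= x < width and 0.0 <= y < height):
        return None  # the gaze point fell outside the shelf
    col = min(int(x / width * cols), cols - 1)   # clamp guards against float rounding
    row = min(int(y / height * rows), rows - 1)
    return row, col


def hit_rate(predicted: Sequence[Tuple[float, float]], targets: Sequence[Cell],
             width: float, height: float) -> float:
    """Fraction of trials whose predicted point lands in the target cell."""
    hits = sum(1 for (x, y), target in zip(predicted, targets)
               if point_to_cell(x, y, width, height) == target)
    return hits / len(targets)
```

For a hypothetical 1.2 m × 1.2 m shelf, the point (0.25, 0.95) falls in cell (4, 1). A point outside the shelf maps to `None` and therefore counts as a miss.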

| Test Definition | User Case | No. of Trials | Accuracy (%) |
|---|---|---|---|
| 2 users stared at 0.75 m | CASE 1 | 80 | 93.75 |
| 2 users stared at 0.75 m | CASE 2 | 80 | 90.00 |
| 2 users stared at 1 m | CASE 1 | 80 | 96.25 |
| 2 users stared at 1 m | CASE 2 | 80 | 91.25 |
| 2 users stared at 1.5 m | CASE 1 | 80 | 96.25 |
| 2 users stared at 1.5 m | CASE 2 | 80 | 91.25 |
| 1 user stared at 0.75 m | CASE 3 | 40 | 95.00 |
| 1 user stared at 1 m | CASE 3 | 40 | 92.50 |
| 1 user stared at 1.5 m | CASE 3 | 40 | 90.00 |
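Accuracy is the fraction of correct trials, so each row can be converted back into a count of correct trials. For example, 93.75% of 80 trials is 75. The counts can then be pooled per user case. The sketch below does this for the values transcribed from the table above. Pooling across distances is an illustrative calculation, not a figure reported by the authors.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (test definition, user case, no. of trials, reported accuracy in %), from the table above
RESULTS: List[Tuple[str, str, int, float]] = [
    ("2 users, 0.75 m", "CASE 1", 80, 93.75),
    ("2 users, 0.75 m", "CASE 2", 80, 90.00),
    ("2 users, 1 m", "CASE 1", 80, 96.25),
    ("2 users, 1 m", "CASE 2", 80, 91.25),
    ("2 users, 1.5 m", "CASE 1", 80, 96.25),
    ("2 users, 1.5 m", "CASE 2", 80, 91.25),
    ("1 user, 0.75 m", "CASE 3", 40, 95.00),
    ("1 user, 1 m", "CASE 3", 40, 92.50),
    ("1 user, 1.5 m", "CASE 3", 40, 90.00),
]


def correct_trials(trials: int, accuracy_pct: float) -> int:
    """Invert accuracy = correct / trials * 100."""
    return round(trials * accuracy_pct / 100)


def pooled_by_case(rows: List[Tuple[str, str, int, float]]) -> Dict[str, Tuple[int, int, float]]:
    """Sum correct and total trials per user case; return (correct, total, accuracy %)."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for _, case, n, acc in rows:
        correct[case] += correct_trials(n, acc)
        total[case] += n
    return {c: (correct[c], total[c], 100 * correct[c] / total[c]) for c in correct}


if __name__ == "__main__":
    for case, (c, n, acc) in pooled_by_case(RESULTS).items():
        print(f"{case}: {c}/{n} = {acc:.2f}%")
```

Every reported percentage corresponds to a whole number of correct trials. Pooled this way, the per-case results are:

- CASE 1: 229 of 240 correct (about 95.4%)
- CASE 2: 218 of 240 correct (about 90.8%)
- CASE 3: 111 of 120 correct (92.5%)

Across all 600 trials, 558 are correct (93.0%).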

| Hardware | Jetson AGX Xavier | Jetson TX2 |
|---|---|---|
| CPU (ARM) | 8-core Carmel ARM CPU @ 2.26 GHz | 6-core Denver and A57 @ 2 GHz |
| GPU | 512-core Volta @ 1.37 GHz | 256-core Pascal @ 1.3 GHz |
| Memory | 16 GB 256-bit LPDDR4x @ 2133 MHz | 8 GB 128-bit LPDDR4 |
| Speed | 10 fps | 7.5 fps |
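The reported speeds translate directly into per-frame processing budgets, since frame time is the reciprocal of frame rate:

$$
t_{\text{frame}} = \frac{1}{f}, \qquad
t_{\text{Xavier}} = \frac{1}{10\ \text{fps}} = 100\ \text{ms}, \qquad
t_{\text{TX2}} = \frac{1}{7.5\ \text{fps}} \approx 133.3\ \text{ms}, \qquad
\frac{10\ \text{fps}}{7.5\ \text{fps}} \approx 1.33
$$

In this setup, the Jetson AGX Xavier therefore processes about 1.33 times as many frames per second as the Jetson TX2.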

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ahn, H.; Jeon, J.; Ko, D.; Gwak, J.; Jeon, M. Contactless Real-Time Eye Gaze-Mapping System Based on Simple Siamese Networks. Appl. Sci. 2023, 13, 5374. https://doi.org/10.3390/app13095374

Ahn H, Jeon J, Ko D, Gwak J, Jeon M. Contactless Real-Time Eye Gaze-Mapping System Based on Simple Siamese Networks. Applied Sciences. 2023; 13(9):5374. https://doi.org/10.3390/app13095374

Chicago/Turabian Style: Ahn, Hoyeon, Jiwon Jeon, Donghwuy Ko, Jeonghwan Gwak, and Moongu Jeon. 2023. "Contactless Real-Time Eye Gaze-Mapping System Based on Simple Siamese Networks" Applied Sciences 13, no. 9: 5374. https://doi.org/10.3390/app13095374

APA Style: Ahn, H., Jeon, J., Ko, D., Gwak, J., & Jeon, M. (2023). Contactless Real-Time Eye Gaze-Mapping System Based on Simple Siamese Networks. Applied Sciences, 13(9), 5374. https://doi.org/10.3390/app13095374