1. Introduction

The majority of classification algorithms treat classes or categories of images independently, in terms of both visual and semantic aspects [1]. In contrast, human beings use semantic relationships when classifying images into their respective categories [2]. For instance, it might seem unreasonable to distinguish “tree” from “vegetation” since a “tree” is a kind of “vegetation”. Generally, human beings use features to distinguish different kinds of objects. For example, the NDVI (normalized difference vegetation index) is an essential feature for distinguishing between vegetation and water, while shapes can discriminate between broad-shaped leaves and needle-shaped leaves. Most classification algorithms have achieved good performance on easy image classification datasets such as Caltech 256 [3] and Caltech 101 [4]; however, they neglect the concept of semantics [5], which leads to poor results on fine-grained images [6]. An ontology is a hierarchical structure of a particular domain that consists of all classes or categories as well as relationships such as “is-a” and “kind-of”. It captures semantic relationships between classes or categories in a manner that is close to human perception.
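To make the “is-a” structure concrete, the following minimal sketch stores a toy ontology as a child-to-parent mapping and walks it upwards. The class names and the `IS_A`, `hypernyms`, and `is_a` identifiers are illustrative assumptions, not the ontology used in this study.

```python
# Hypothetical toy ontology: each class maps to its direct hypernym ("is-a" parent).
IS_A = {
    "tree": "vegetation",
    "shrub": "vegetation",
    "vegetation": "land cover",
    "water": "land cover",
}


def hypernyms(label):
    """Return all ancestors of `label`, nearest first."""
    chain = []
    while label in IS_A:
        label = IS_A[label]
        chain.append(label)
    return chain


def is_a(child, parent):
    """True if `parent` is reachable from `child` by following is-a links."""
    return parent in hypernyms(child)


print(hypernyms("tree"))           # ancestors of "tree"
print(is_a("tree", "vegetation"))  # a tree is a kind of vegetation
print(is_a("water", "vegetation"))
```

A flat classifier sees “tree” and “vegetation” as unrelated labels, whereas this structure records that one is a specialization of the other.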

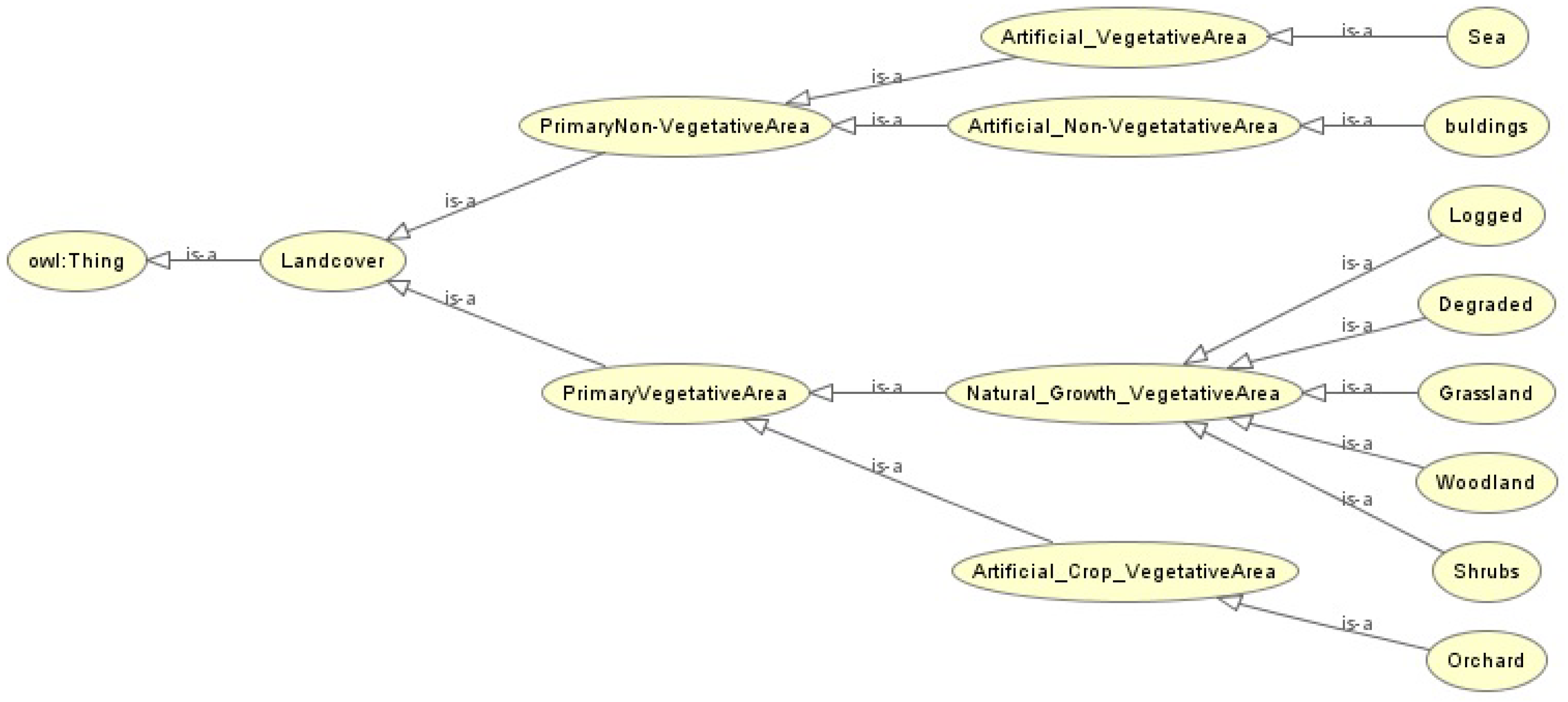

The adoption of ontologies in image classification algorithms incorporates semantics, thus leading to increases in image classification accuracy. Traditional ontology-based algorithms suffer heavily from error propagation because they place a classifier at every ontological node to discriminate between that node's subcategories. Such errors are caused by intra-class variations of super-categories. Previous uses of ontologies in classification focused on improving speed rather than accuracy. Ontologies revolve around the use of semantic relationships, and data are expressed more at the semantic level, thus accounting for better classification.

This study proposes an image classification model based on ontology and an ensemble stack of the Xception, VGG16, and ResNet50 models. These models generate a set of features that are used by merged classifiers driven by taxonomic relationships in an ontology to improve image classification accuracy. The three pre-existing models, Xception, VGG16, and ResNet50, have been adopted in this study via transfer learning because they have innately dissimilar architectures that abstract different information from the images used for classification [7].

Potential applications of ontological bagging in forestry include species classification. Information relating to forest tree species plays a critical role in ecology and forest management [8]. Our proposed ontological bagging model can be employed to improve the accuracy of species classification in forestry. Vegetation is an important part of an ecosystem because it provides oxygen and a suitable place for human beings to live [9]. Information concerning vegetation is therefore critical, and our proposed model can be used to classify vegetation into different types and categories. Our model can also be used to classify fruits into their respective categories. Fruit classification plays an important role in many industrial applications, including supermarkets and factories. Its importance can also be seen for people with special dietary requirements, who can be assisted in selecting categories of fruits [10].

The contribution of the study is summarised as follows:

We integrate semantic ontologies and aggregate outputs from hypernym–hyponym classifiers to increase image category distinction and also eliminate error propagation problems, hence increasing image classification accuracy.

We propose a new approach to image classification that uses an ensemble of Xception, ResNet50, and VGG16, whereby features obtained from Xception, ResNet50, and VGG16 are integrated together to produce all possible features, which are, in turn, used by an ontological bagging algorithm for subsequent classification.
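The feature integration step can be pictured as follows. This is a minimal sketch only: the three extractor functions are hypothetical stand-ins for the pretrained Xception, ResNet50, and VGG16 backbones, and plain concatenation is an assumed way of integrating their outputs, since the fusion operator is not specified in this section.

```python
# Hypothetical stand-ins for pretrained backbones. Each takes a flat list of
# pixel intensities and returns a short feature vector.
def xception_features(pixels):
    return [sum(pixels) / len(pixels), max(pixels)]


def resnet50_features(pixels):
    return [min(pixels), max(pixels) - min(pixels)]


def vgg16_features(pixels):
    return [float(len(pixels))]


def fuse_features(pixels, extractors):
    """Concatenate the feature vectors from every extractor (assumed fusion)."""
    fused = []
    for extract in extractors:
        fused.extend(extract(pixels))
    return fused


extractors = [xception_features, resnet50_features, vgg16_features]
sample = [0.1, 0.5, 0.9, 0.3]
fused = fuse_features(sample, extractors)
print(len(fused))  # one slot per feature contributed by each extractor
```

The fused vector is what a downstream classifier would consume; with real backbones, each stand-in would be replaced by a forward pass through the corresponding network without its classification head.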

The rest of the paper is structured as follows.

Section 2 discusses related work.

Section 3 discusses the dataset used for the study.

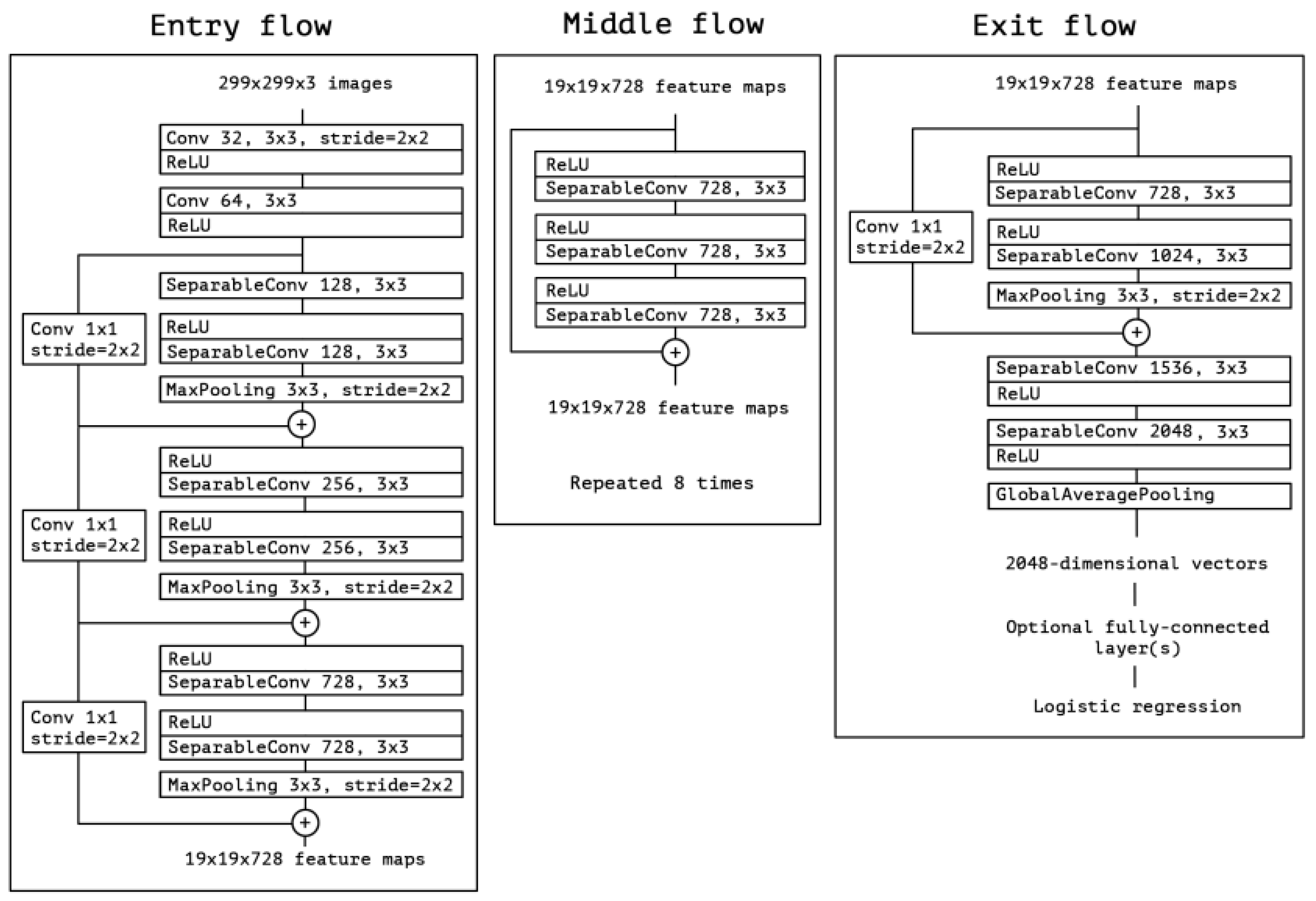

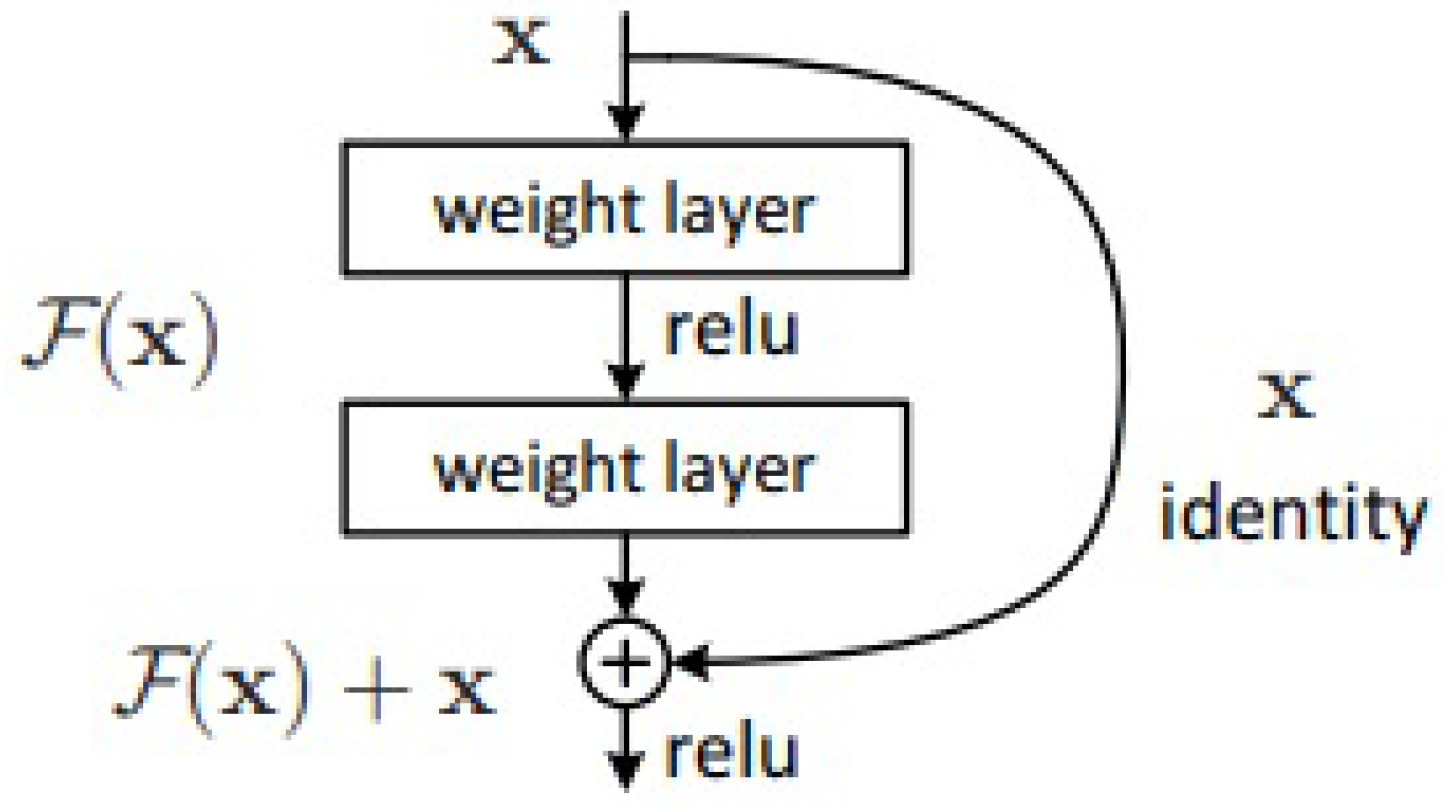

Section 4 describes the deep learning architectures.

Section 5 describes the ontological bagging algorithm used in the study.

Section 6 describes the proposed algorithm.

Section 7 outlines the experimental setup.

Section 8 describes the experimental results.

Section 9 discusses the results obtained from the experiment.

Section 10 concludes the paper.

2. Related Works

Image classification has received much attention in the fields of computer vision and image processing [11,12,13,14,15,16]. A study [11] developed a model that harmonized ontology and HMAX features to perform image classification using merged classifiers. The basic idea behind the model was to exploit ontological relationships that exist between image classes or categories. For better discrimination between classes, the procedure involved training visual feature classifiers and merging outputs of hypernym–hyponym classifiers. The model included three components: (1) feature extraction, (2) ontology building, and (3) image classification. The visual features were obtained from the training dataset, and ontology building was carried out by mainly following the process of concept extraction and relationship generation. Visual features extracted from the training set and the ontology were used to perform image classification using a linear support-vector machine (SVM) classifier. The model achieved an accuracy of 0.63, while the baseline method without ontology obtained an accuracy of 0.59. However, as noted by [12], HMAX does not perform very well in terms of feature extraction over a limited dataset. To circumvent this shortcoming, the proposed model in this study has adopted an ensemble of CNNs to generate features for subsequent image classification.

Another study [1] proposed an ontological random forest algorithm for forest image classification. The algorithm’s basic idea was that the semantic relationships between categories determined the splitting of the decision tree. Multiple-instance learning was used to provide a learning platform for generating hierarchical features that were then used to capture visual dissimilarities at various concept levels. Semantic splitting was used to build decision trees, and semantic relationships were used to learn hierarchical weak features. The experimental results showed that the approach not only outperformed state-of-the-art approaches but was also capable of identifying semantic features at different concept levels. The drawback of this study was that feature generation was heavily dependent on weak attribute learning. To solve this problem, the proposed study used an ensemble deep learning approach to generate all plausible features for subsequent image classification.

An algorithm that automatically builds image classification trees was proposed in [17]. A set of categories was recursively divided into two minimally confused subsets, and the method achieved 5–20-fold speedups over other methods. Other authors [18] used lexical semantic networks to integrate knowledge about inter-category relationships into the learning process of visual appearance. A semantic hierarchy of discriminative classifiers was used for object detection. The challenge encountered was that object recognition was marred by the fact that the algorithm did not support weak attribute reasoning. To overcome this challenge, the proposed study incorporated the bagging algorithm because it has the ability to learn weak attributes.

A new formalism that incorporated hierarchy and exclusion (HEX) graphs to perform object classification by exploiting the rich structure of real-world labels was introduced in [19]. The formalism has the ability to capture semantic relationships between any two labels on the same object. Results obtained from the model showed an improvement in object classification as a result of exploiting label relationships. However, the major limitation of the approach is that it is too general in nature and is limited to domains with hierarchical and exclusion relationships.

A study [20] developed a deep learning model for multiple-instance learning (MIL) frameworks, whose goal was to perform vision tasks such as classification and image annotation. In the model, each image object uses two instance sets of object proposals and text annotations to perform vision tasks. The main merit of the model is its ability to learn relationships between objects and annotation proposals. The study contributed extensively to solving computer vision tasks, and it performed well both in image classification and image auto-annotation. However, a shortcoming of the model was that it required fine-tuning on the dataset, which is time-consuming.

A unified CNN-RNN model for multi-class image classification was proposed in [21]. The classification process consisted of learning semantic redundancy and the co-occurrence dependency in an end-to-end way. The model has the ability to obtain semantic level dependency and image label relevance by learning the joint image label embedding. The model could also be trained from scratch to integrate both pieces of information in a unified framework. The results obtained show better classification performance than the state-of-the-art multi-label classification model. A shortcoming of the model was that it failed to make predictions on small objects that have little covariance dependency with other, larger objects.

Considering that microscopic imaging technology is rapidly advancing, bio-image-based approaches to protein subcellular localization have sparked a lot of interest. However, there are few techniques for predicting protein location, with the majority of them relying on automatic single-label classification. Therefore, a study [22] developed an artificial intelligence (AI)-based stacked ensemble approach for the prediction of protein subcellular localization in confocal microscopy images. The ensemble approach was built by stacking ResNet152, DenseNet169, and VGG16 as base learners, and their predictions were integrated and fed as input to the meta-learner. The model was implemented on an image dataset obtained from Human Protein Atlas Image Classification on Kaggle and attained precision, F1-score, and recall of 0.71, 0.72, and 0.70, respectively. The main difficulty encountered in the study was a huge imbalance of images in the image categories, as some classes had very few images, which were insufficient to train the model. In our study, we used a data augmentation technique to balance the number of images across categories.

The evolution of AI applications has significantly increased the utilization of smart imaging devices. Convolutional neural networks (CNNs) are widely used in image classification because they do not rely on handcrafted features. However, fruit classification in the horticulture field suffers from the significant disadvantage of requiring an expert with extensive knowledge and experience. To address this issue, a study [23] developed a MobileNetV2-based deep learning technique for fruit image classification that did not require the intervention of experts. The model used 26,149 images of 40 different fruit types from a Kaggle public dataset and achieved 99% accuracy. The model could be improved using a larger variety of fruits for broader fruit classification.

The idea of annotating images has received a significant amount of attention due to the sharp increase in volumes of images. In the area of agriculture, a study [24] proposed a deep learning repetitive annotation approach for recognizing a variety of fruits and classifying the ripeness of oil palm fruit. The model was implemented on 3500 fruit images and achieved an accuracy of 98.7% for classifying oil palm fruit and 99.5% for recognizing a variety of fruits. CNNs are also used in agriculture for seed classification to overcome the inherent limitations of traditional machine-learning approaches in extracting features and information from image data. Ref. [25] created a deep CNN based on MobileNetV2 with a simple architecture for seed classification. The model was applied to a seed dataset with 14 different seed classes and achieved an accuracy of 95% and 98% on testing and training, respectively. However, future research will need to compare various CNN architectures to determine the best model for solving the problem at hand.

Recent trends have shown that image collection has significantly increased, thereby stimulating further research in image classification and annotation. A technique based on bag of visual words (BoVW), which relies on ontology, has been widely used in this area. However, problems relating to ambiguities between image categories have posed challenges with regard to image classification and annotation. A study in [26] proposed a hierarchical max pooling (HMAX) model based on ontology to classify images of animals into their respective categories. The contribution of their model was the exploitation of semantic relationships between image categories as a way of eliminating the problem of ambiguity between image categories. The model performed well, achieving an accuracy of 80%. However, HMAX is not a desirable technique for producing features; hence, our study has used an ensemble of CNNs for feature production.

CNNs have been widely employed to solve image classification problems, owing to their power in extracting features and their continuous breakthroughs in the field of image recognition. However, they suffer from a huge overhead, requiring a lot of time for the training process. To alleviate this challenge, a study [27] developed a hybrid of deep learning and the random forest algorithm to solve an image classification problem. The CNN is used solely for feature extraction, and the classification process is handled by the random forest algorithm. Random forest (RF) has the advantages of fast training speed and high classification accuracy. The model was effective, producing a low error rate of 9.18%. That study did not carry out a comparative assessment against other baseline classifiers, which our study addresses.

A supervised deep-learning approach based on a stacked auto-encoder was used in [28] for the classification of forest areas. The study used unmanned aerial vehicle (UAV) datasets because they have been found to be quite useful for forest feature identification due to their relatively high spatial resolution. Through cross-validation, the model achieved an accuracy of 93%. However, one significant limitation of deep learning is that it requires more computing power than other machine learning algorithms.

3. Dataset

Given the scarcity of publicly available forest-type image datasets [29], we downloaded 35 images for each class from the internet [30,31]. Considering that the obtained image dataset was too limited for the proposed model, the geometric transformation data augmentation technique from the scikit-learn library in Python was employed to produce 65 more images for each class in the training dataset and 9 more images in the testing dataset. Hence, the resulting dataset constituted a total of 952 images, from which 800 were set aside for training and 152 were reserved for testing.

Table 1 shows the corresponding forest-type image dataset distribution.

Data augmentation is a technique that artificially increases the image dataset by creating additional modified copies of already existing data.
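As an illustration of what geometric augmentation does, the sketch below applies three simple transformations to a tiny image stored as a nested list. The choice of flips and a 90-degree rotation is an assumption made for illustration; the study's actual transformations and parameter values are those in Table 2, which are not reproduced here.

```python
def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def vertical_flip(image):
    """Mirror the image top to bottom."""
    return [row[:] for row in image[::-1]]


def rotate_90_clockwise(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(column) for column in zip(*image[::-1])]


def augment(image):
    """Return modified copies of `image`; the original is left unchanged."""
    return [horizontal_flip(image), vertical_flip(image), rotate_90_clockwise(image)]


tiny = [[1, 2],
        [3, 4]]
for copy in augment(tiny):
    print(copy)
```

Each call yields extra training samples that share the label of the source image, which is how a small per-class collection can be expanded.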

Table 2 depicts the parameter configuration used in this study to perform data augmentation, where the first column represents the set of geometric properties that require fine-tuning, and the second column represents the set value for each geometric property. In this study, class labels were used as categorical data, and the label-encoder function from the scikit-learn library in Python was employed to convert string categorical data into numerical values. Class labels in this study represent image categories such as woodlands, shrubs, sea, orchards, logged forests, grassland, degraded land, and buildings. The class labels were transformed into distinct numerical values between 0 and 7. As presented in Table 3, the first column represents the transformed numerical values, and the second column represents the corresponding class labels. Since images were of different sizes, the resize function from scikit-learn was employed to resize all images to 226 × 226 pixels. For each category, 19 images were set aside for testing, and 100 images were reserved for training.
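The label transformation can be sketched without any library. scikit-learn's `LabelEncoder` assigns integers following the sorted order of the class names, and the helper below mimics that behaviour. The `fit_label_encoder` name is hypothetical, and the resulting mapping is an assumption about Table 3, which is not reproduced in this section.

```python
CLASS_NAMES = [
    "woodlands", "shrubs", "sea", "orchards",
    "logged forests", "grassland", "degraded land", "buildings",
]


def fit_label_encoder(labels):
    """Map each distinct label to an integer, in sorted order of the labels."""
    classes = sorted(set(labels))
    return {name: index for index, name in enumerate(classes)}


encoding = fit_label_encoder(CLASS_NAMES)
for name, index in encoding.items():
    print(index, name)
```

Under this assumed ordering, class 1 is degraded land and class 3 is logged forests, which agrees with the way those two classes are described in the experimental results.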

Figure 1 shows a sample of the images used in the study.

8. Experimental Results

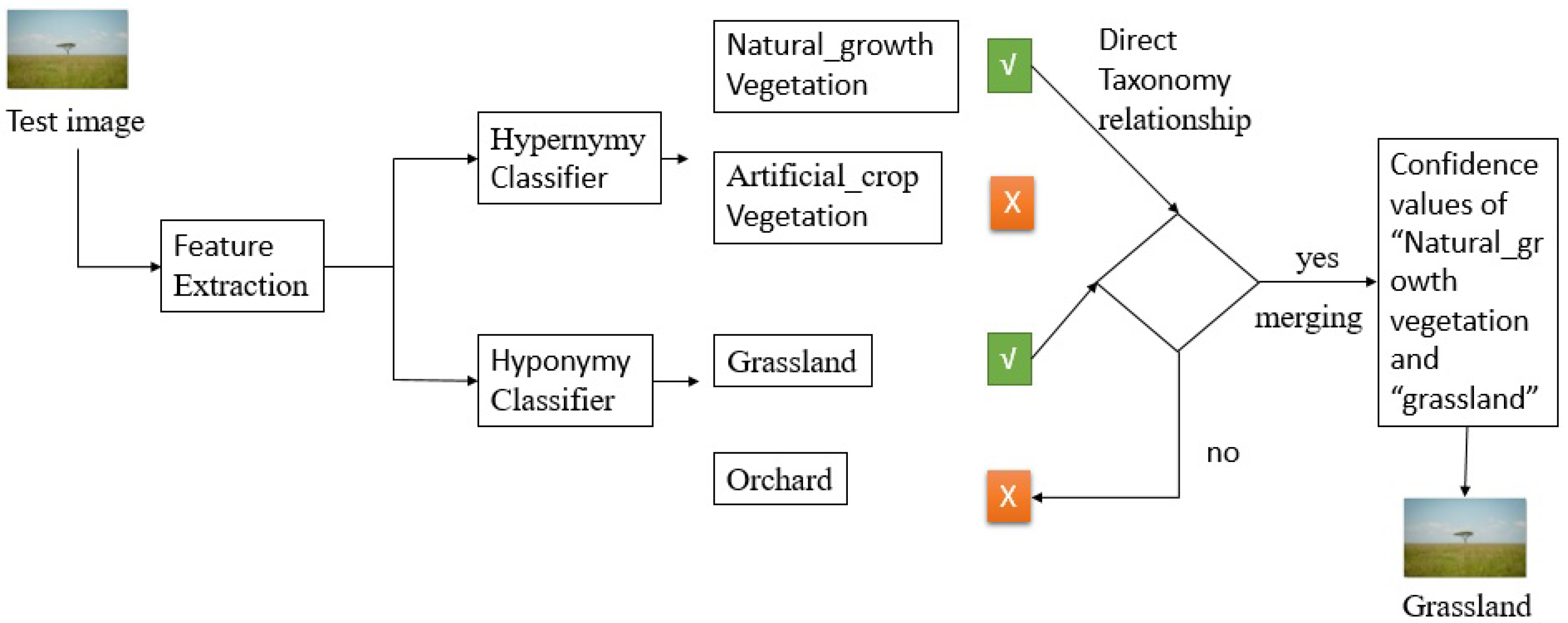

The aim of this study is to assess the effect of ontologies in the image classification task. With that in mind, the proposed ontology-based forest-type image classification model was compared against other baseline models such as random forest (RF), K-nearest neighbor (KNN), SVM, and Gaussian naive Bayes. The features extracted using an ensemble of deep learning models were used to train the classifiers based on an ontology that describes taxonomic relationships between image classes. For a particular test image, the classification task was performed by both the hypernym and hyponym classifiers, which ran in parallel. First, the test image was assigned to the hyponym and hypernym classifiers with the highest confidence values. If there was a direct relationship between the hypernym and hyponym classifiers, their confidence values were merged, and the test image was assigned to the best hyponym classifier. If there was no relationship between the classifiers, the next best hyponym classifier was considered, and the same process was repeated.
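The merging procedure described above can be sketched as follows. This is an illustrative reading rather than the study's implementation: the `forest` and `non-forest` hypernyms, the confidence values, the use of addition to merge confidences, and the fallback when no hyponym matches are all assumptions.

```python
# Hypothetical ontology fragment: hyponym class -> direct hypernym.
HYPERNYM_OF = {
    "woodlands": "forest",
    "logged forests": "forest",
    "grassland": "non-forest",
    "sea": "non-forest",
}


def classify(hyponym_scores, hypernym_scores, hypernym_of):
    """Pick the best hyponym that is a direct child of the best hypernym."""
    best_hypernym = max(hypernym_scores, key=hypernym_scores.get)
    ranked = sorted(hyponym_scores, key=hyponym_scores.get, reverse=True)
    for hyponym in ranked:
        if hypernym_of.get(hyponym) == best_hypernym:
            # Assumed merge rule: add the two confidence values.
            merged = hyponym_scores[hyponym] + hypernym_scores[best_hypernym]
            return hyponym, merged
    # Assumed fallback: no related pair found, keep the top hyponym unmerged.
    return ranked[0], hyponym_scores[ranked[0]]


hyponym_scores = {"grassland": 0.45, "woodlands": 0.40, "logged forests": 0.10, "sea": 0.05}
hypernym_scores = {"forest": 0.70, "non-forest": 0.30}
label, confidence = classify(hyponym_scores, hypernym_scores, HYPERNYM_OF)
print(label)
```

In this toy case the top hyponym, grassland, is not a child of the top hypernym, so the next best hyponym is considered and the image is assigned to woodlands.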

The results presented in Table 5 show that the ontological bagging algorithm based on linear SVM outperformed other models with respect to RMSE and accuracy. The high accuracy is attributed to the ability of the model to suppress the error propagation of hierarchical classifiers.

The results were further presented in terms of the confusion matrix and ROC curves.

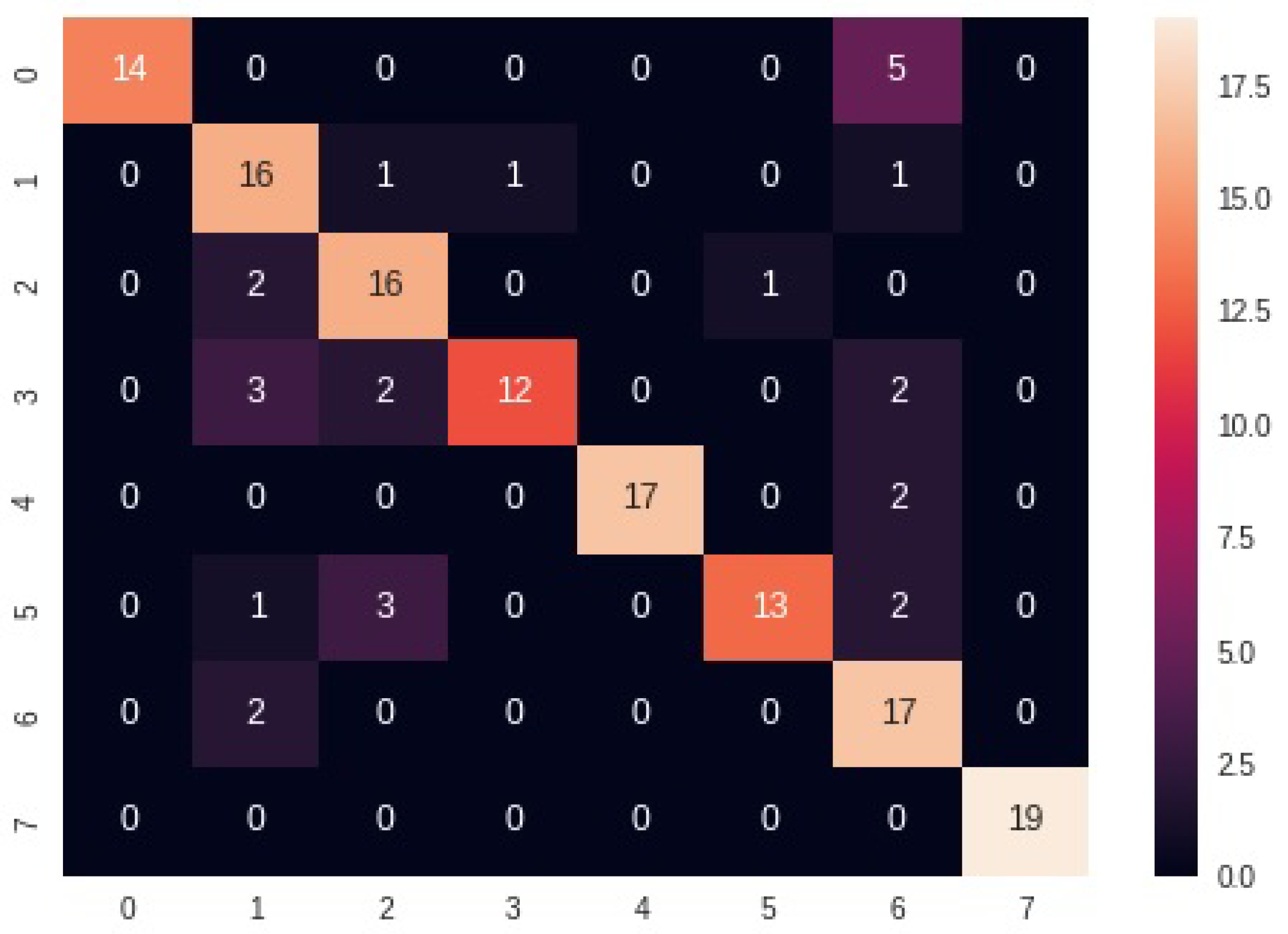

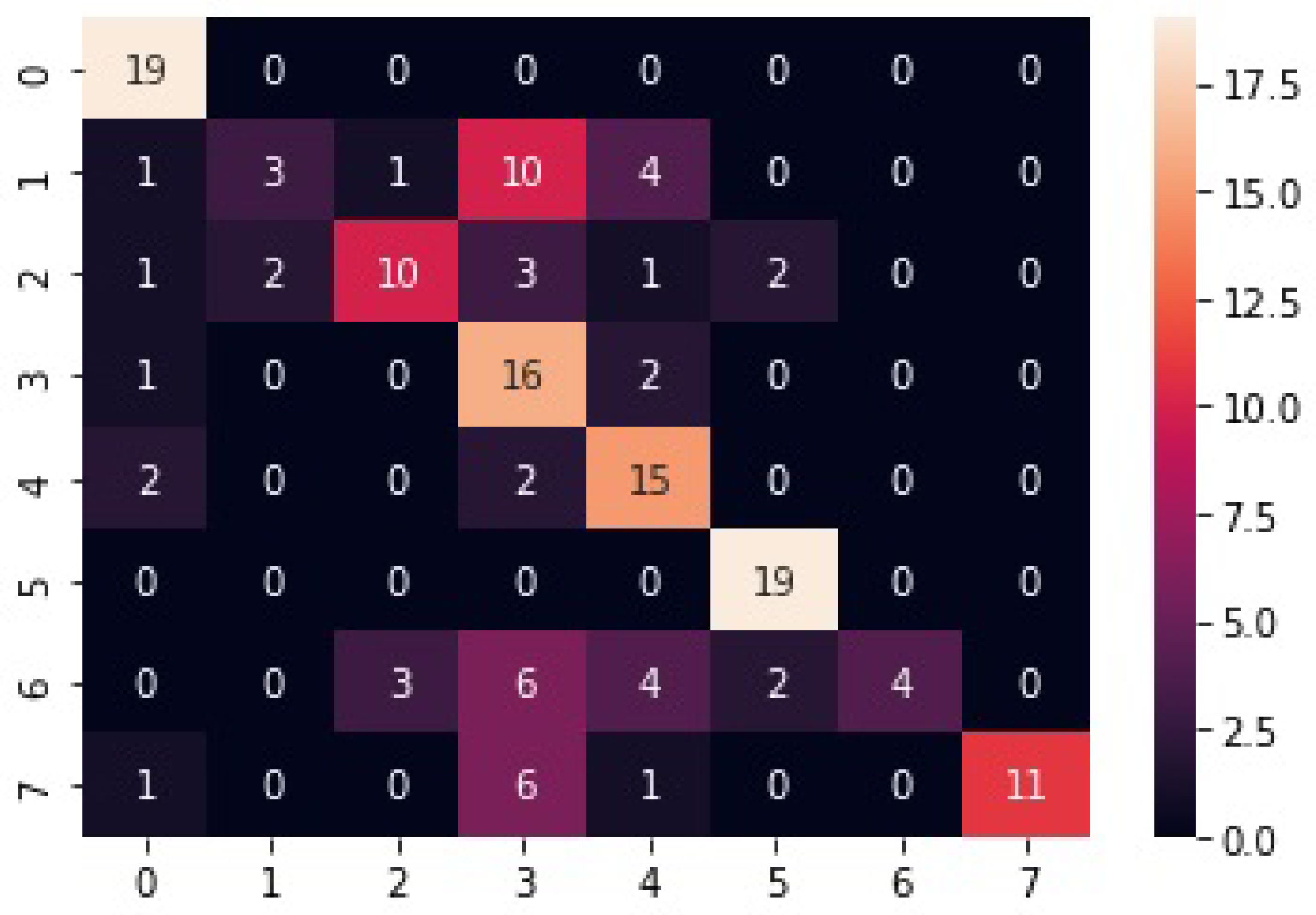

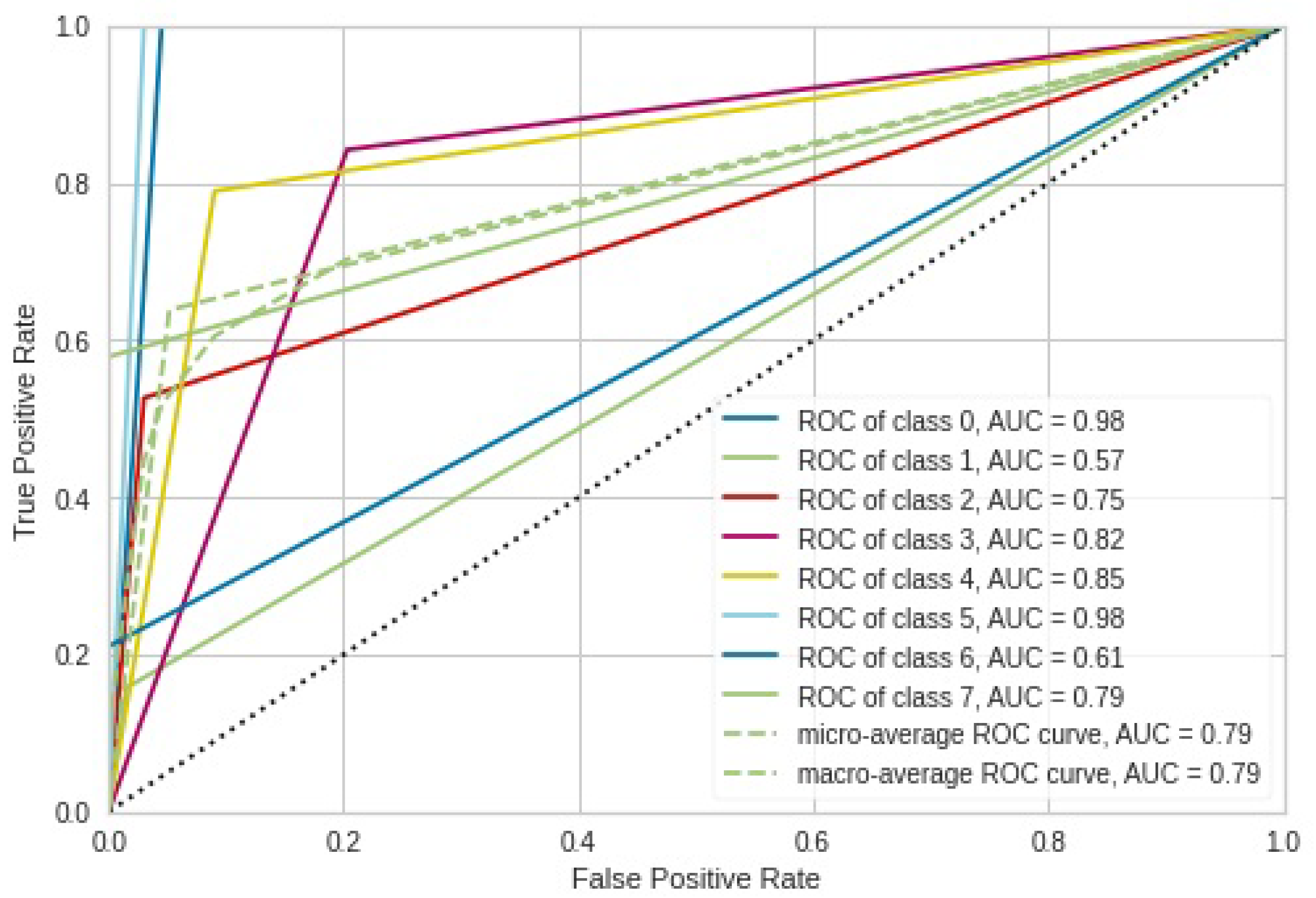

The confusion matrix for the kNN model is illustrated in

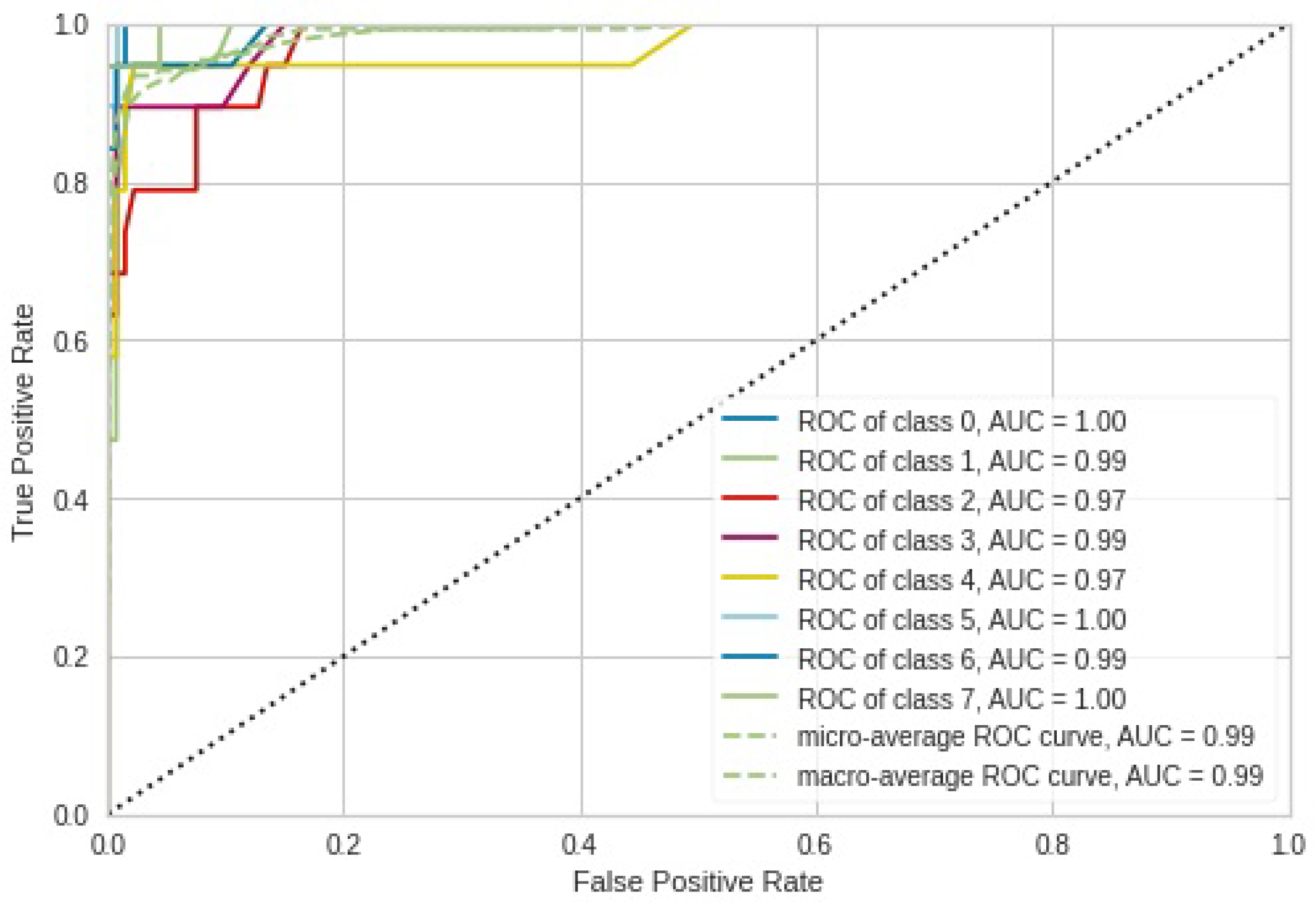

Figure 7. It is shown that the kNN model absolutely managed to correctly classify all nineteen images for class 9. Similarly, the model correctly classified seventeen test images for classes 4 and 5, but class 5 received twelve more test images from classes 0, 1, 3, 4, and 5. The kNN performed poorly in classes 3 and 5, misclassifying seven and six test images into classes 1 and 2, respectively. The associated ROC curve for the kNN model in

Figure 8 produced a perfect match for classes 7 and 4 by having an ROC AUC value of 1.0. In the corresponding confusion matrix of kNN, class 4 did not receive any false-positive test images, though two test images were misclassified into class 6.

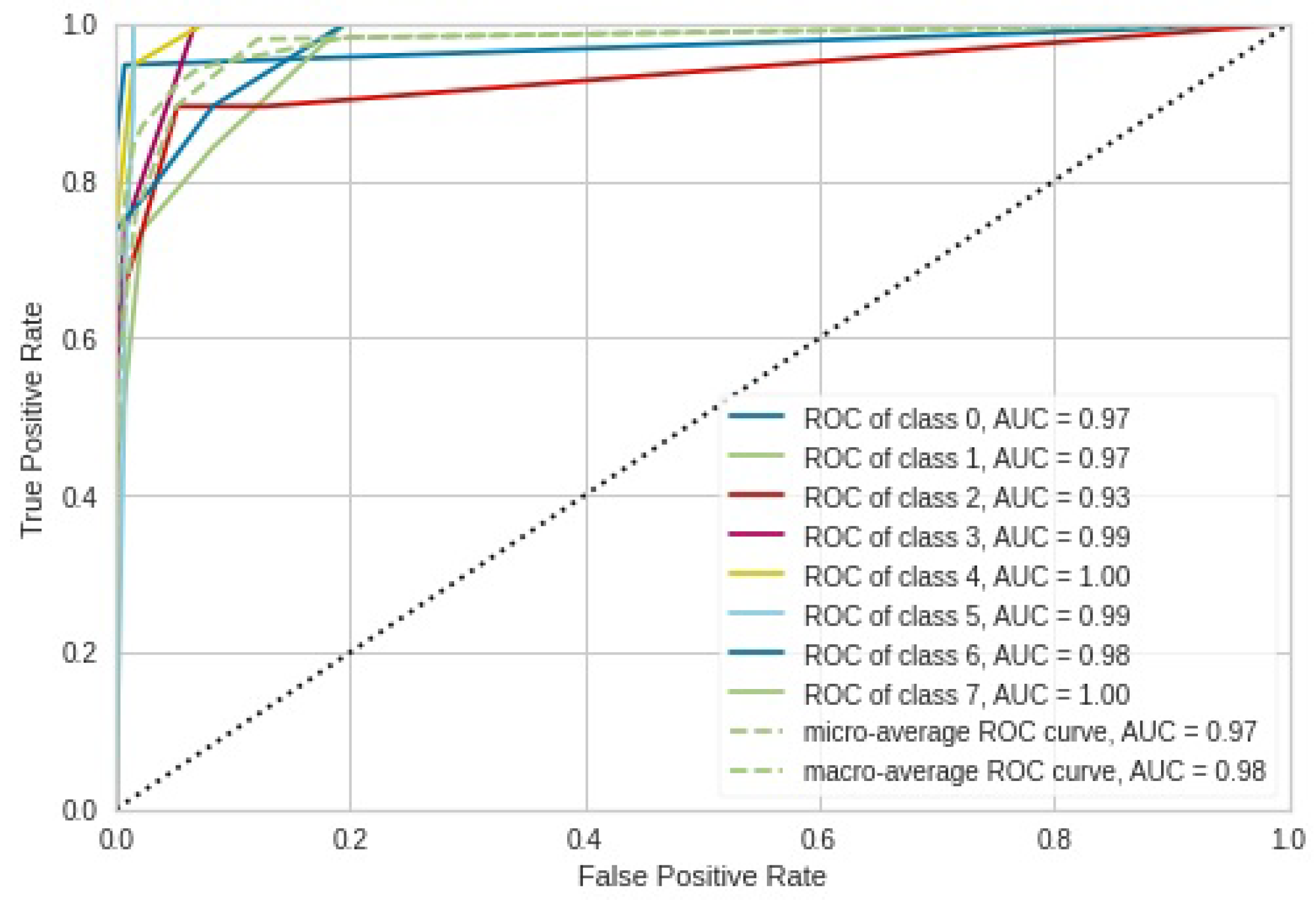

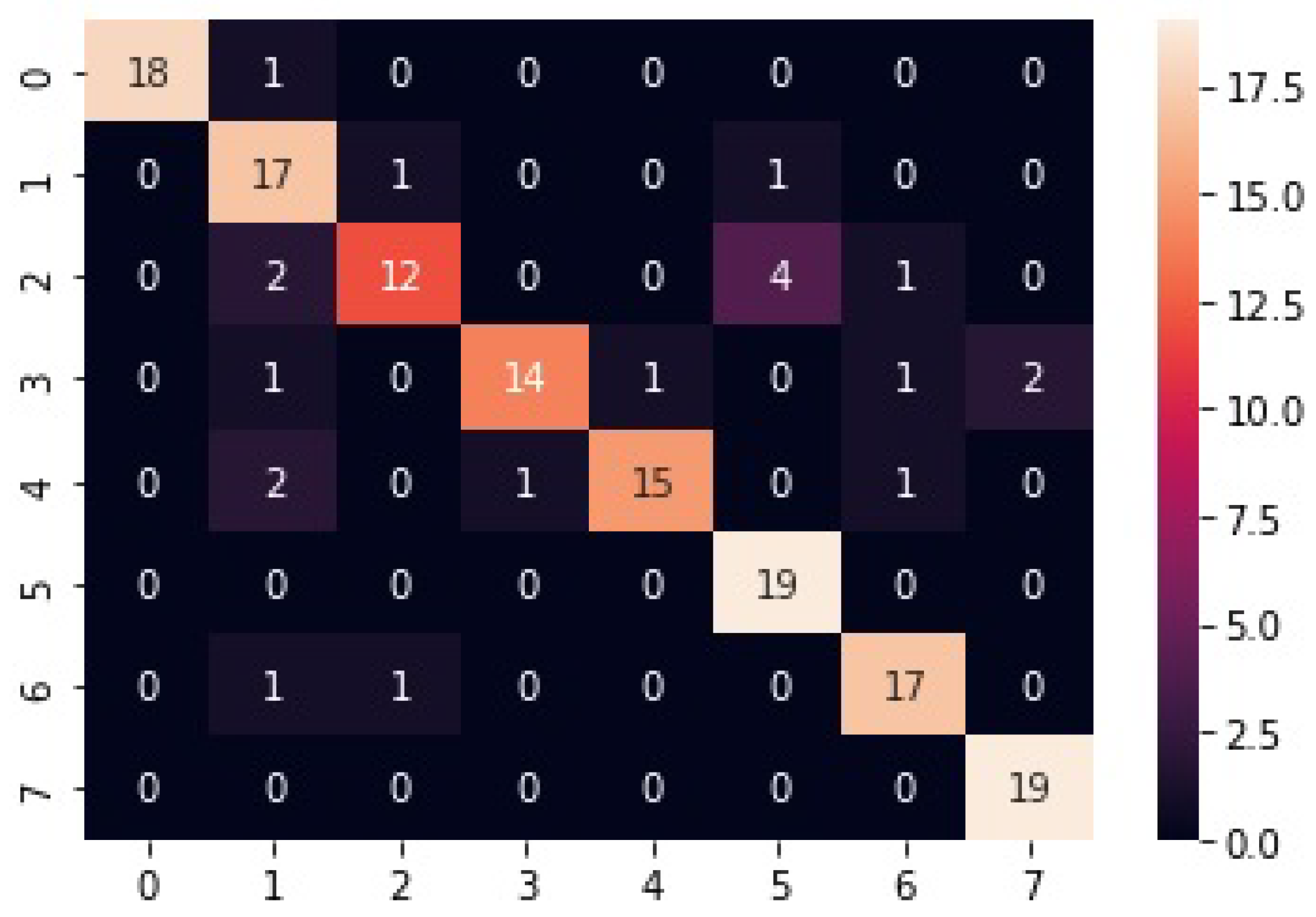

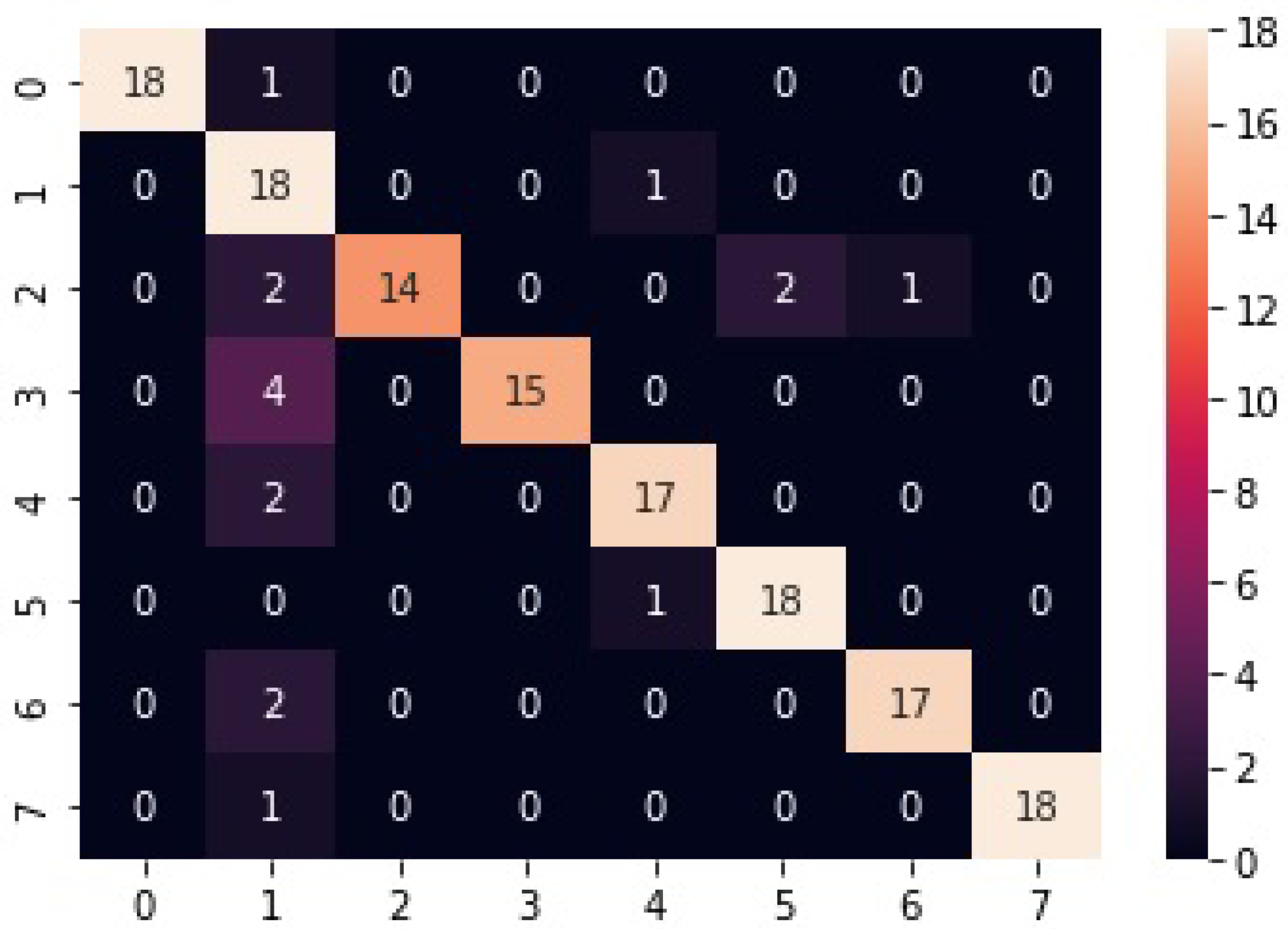

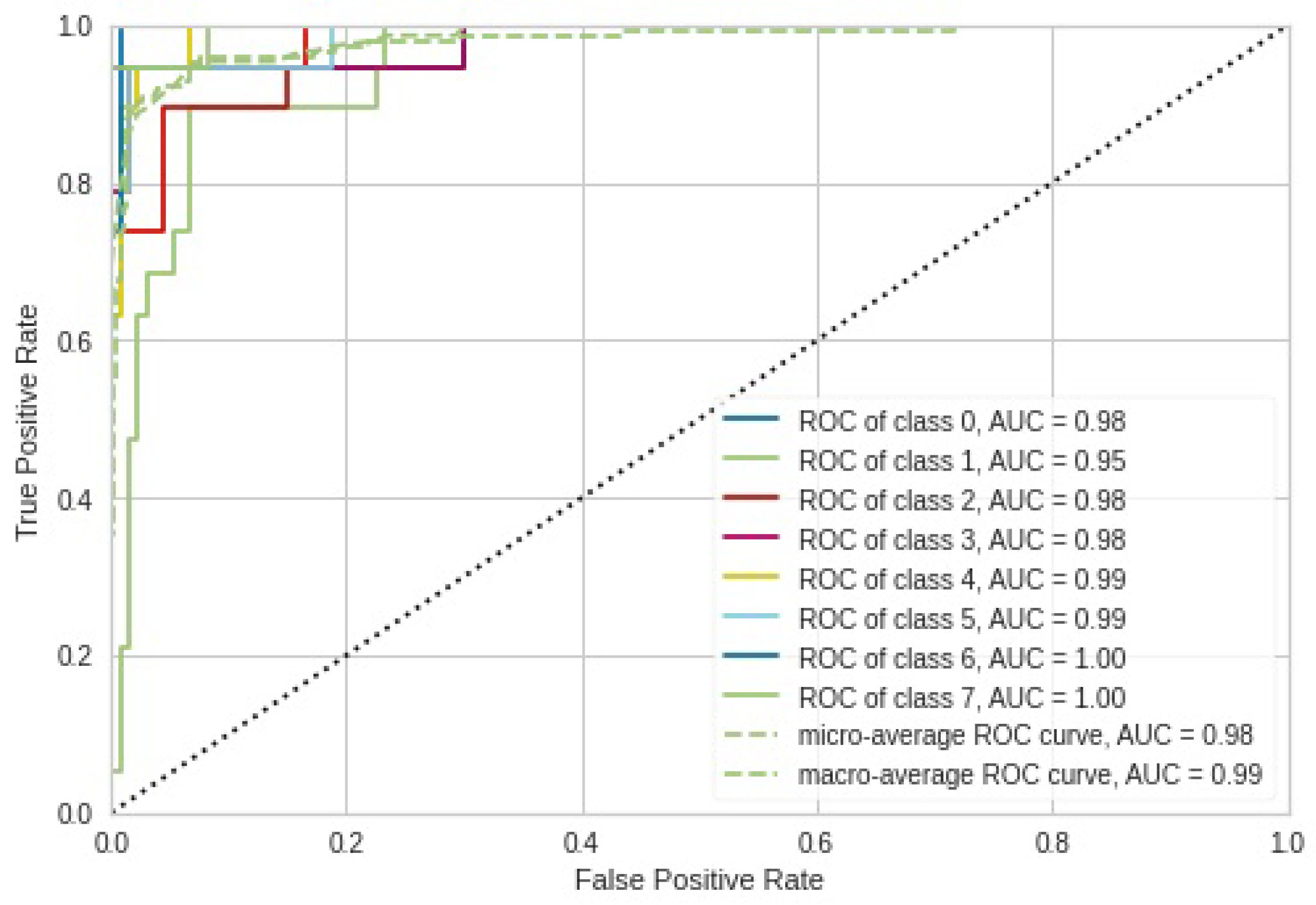

The confusion matrix of our ontological bagging approach in Figure 9 shows that it provides a better alternative for image classification, as evidenced by its ability to correctly classify all nineteen test images for classes 0, 4, 5, 6, and 7, despite the fact that class 5 received two additional test images from class 2. Only one test image was misclassified for classes 1 and 3. The corresponding ROC AUC curve (Figure 10) of our model produced a perfect match for classes 0, 3, 4, 5, and 7, i.e., the model managed to precisely distinguish between positive classes and negative classes. Of all the classes, the model performed the worst for class 2, and this is consistent with the corresponding results from the confusion matrix, where four false-negative test images were recorded. Class 3 obtained a perfect match because one false-positive test image and one false-negative test image canceled each other out. In contrast to the confusion matrix results, class 5 did not produce a perfect match because there was an imbalance between false positives and false negatives, as the class received more false-positive test images than false-negative test images.
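The false-positive and false-negative counts discussed for each class can be read directly off a confusion matrix. The sketch below assumes rows are true classes and columns are predicted classes, and it uses a made-up three-class matrix rather than the values in Figure 9.

```python
def per_class_errors(matrix):
    """Return true positives, false positives, and false negatives per class.

    Assumes matrix[true_class][predicted_class] holds the image count.
    """
    size = len(matrix)
    errors = {}
    for cls in range(size):
        true_positive = matrix[cls][cls]
        # Images of this class that were predicted as something else.
        false_negative = sum(matrix[cls]) - true_positive
        # Images of other classes that were predicted as this class.
        false_positive = sum(matrix[row][cls] for row in range(size)) - true_positive
        errors[cls] = {"tp": true_positive, "fp": false_positive, "fn": false_negative}
    return errors


# Hypothetical matrix with nineteen test images per class.
toy_matrix = [
    [19, 0, 0],
    [0, 17, 2],
    [1, 0, 18],
]
errors = per_class_errors(toy_matrix)
print(errors)
```

A class can therefore have every one of its own images classified correctly (no false negatives) and still attract false positives from other classes, which is the situation described for class 5.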

As illustrated in Figure 11, the RF-based model correctly classified all nineteen test images for classes 5 and 7. Only one test image for class 0 was misclassified into class 1. The RF model performed the worst for class 2, where seven test images were misclassified into other classes. The ROC AUC curves for the RF-based classifier presented in Figure 12 produced perfect matches for classes 0, 5, and 7. These results are also consistent with the corresponding confusion matrix results in Figure 11. All the classes have ROC AUC values that are greater than 0.9, implying that the model performed well.

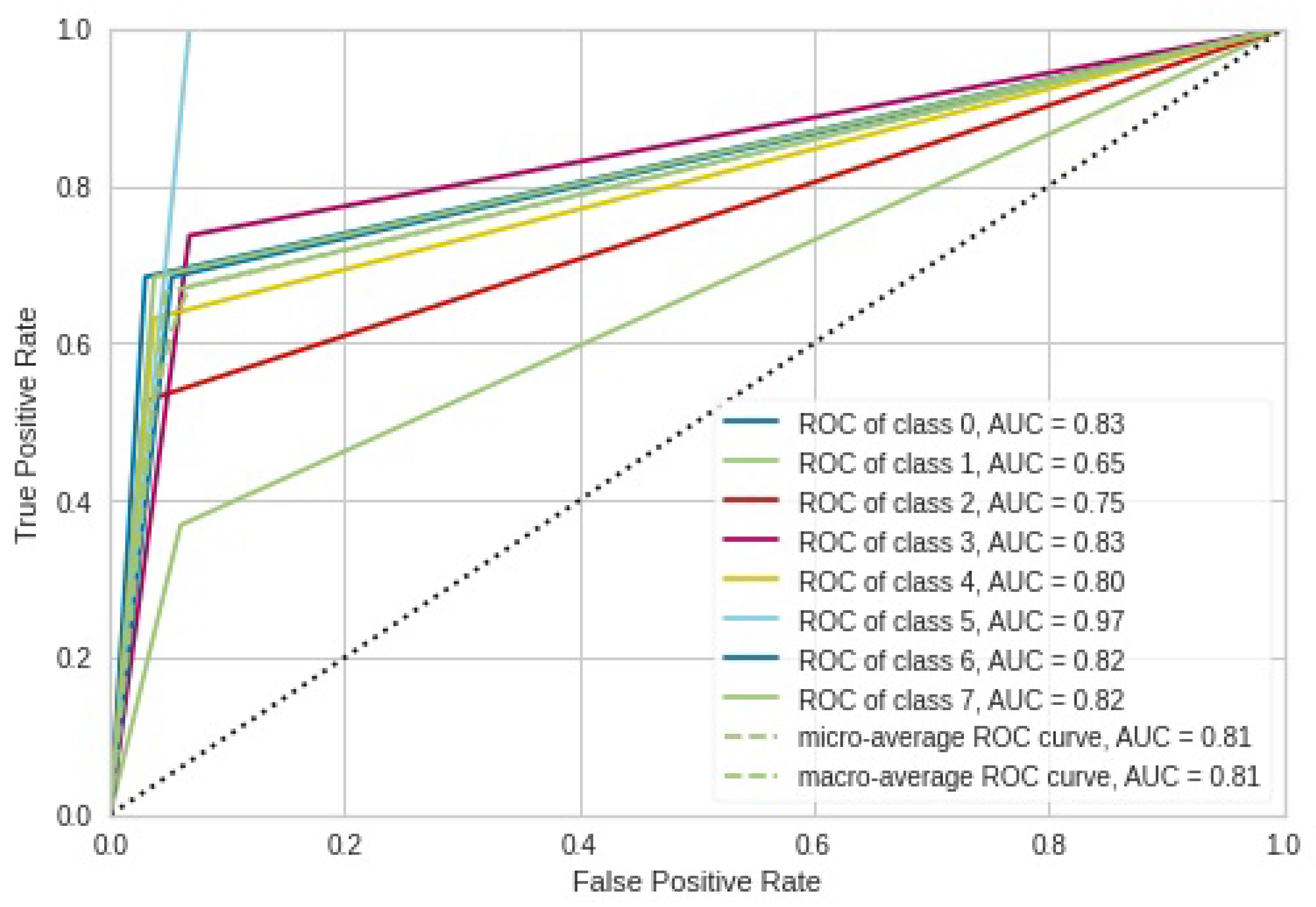

Overall, as shown in Figure 13, the decision-tree-based model registered the worst performance compared to the other models for all the classes, except class 5, where all nineteen test images were correctly classified despite the fact that the same class received nine more test images from other classes. The corresponding ROC AUC curves for the decision tree in Figure 14 show that class 5, with an ROC AUC of 0.96, performed the best, and class 2, with an ROC AUC of 0.75, performed the worst. This is also in line with the results obtained from the corresponding confusion matrix.

The confusion matrix of the SVM-based model presented in Figure 15 shows that two to three test images out of nineteen were misclassified for classes 0, 1, 4, 5, 6, and 7. Class 2 had the worst performance with regard to the number of misclassified test images; five test images were misclassified into classes 1, 5, and 6. The associated ROC AUC curves of the SVM-based model in Figure 16 show that class 1, with an ROC AUC value of 0.95, performed the worst, as it registered fourteen false-positive test images.

The GaussianNB-based model presented in Figure 17 correctly classified all nineteen test images for class 0 and class 5, despite class 5 receiving four extra test images, two from class 2 and two from class 6, and class 0 receiving six extra test images from classes 1, 2, 3, 4, and 7. However, GaussianNB under-performed in classes 1 and 6, misclassifying 16 and 15 test images, respectively. Most of the test images for class 1 were misclassified into class 3, implying that most degraded land images were mistakenly viewed as logged forest images. With reference to the ROC AUC curves presented in Figure 18, the GaussianNB-based model performed best for classes 0 and 5 and performed extremely poorly for class 2. These findings are also consistent with the confusion matrix results presented in Figure 17.

Table 6 shows that our proposed ontological bagging approach outperformed other classifiers in terms of accuracy, RMSE, and ROC AUC. The results also demonstrate that our model has the strongest predictive power, as it correctly classified 146 out of 152 test images, followed by SVM, which correctly classified 135 test images. Our model registered the lowest RMSE of 0.532, implying that the model’s predictions are much closer to the actual values than those of the other models. Alongside RF, our ontological bagging algorithm recorded the highest ROC AUC value of 0.99, meaning that the model separated classes well compared to the other models. GaussianNB performed the worst out of all the classifiers in terms of ROC AUC and accuracy and misclassified 55 test images into the wrong classes. The strong performance of our model is attributed to the adoption of semantic relationships between image categories for the classification process; additionally, the bagging concept helped to minimize the error propagation of classifiers.
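The counts quoted above translate into accuracy values by simple division over the 152 test images. The short sketch below does that arithmetic and also shows the general RMSE formula on made-up label vectors. How the study's RMSE of 0.532 was computed is not detailed in this section, so the `rmse` helper is only the standard definition, not a reproduction of that figure.

```python
import math

TOTAL_TEST_IMAGES = 152  # 8 classes with 19 test images each

# Correctly classified test images, taken from the counts reported in the text.
correct_counts = {
    "ontological bagging": 146,
    "SVM": 135,
    "GaussianNB": TOTAL_TEST_IMAGES - 55,  # 55 images were misclassified
}

accuracy = {model: count / TOTAL_TEST_IMAGES for model, count in correct_counts.items()}


def rmse(y_true, y_pred):
    """Root mean squared error between two equal-length numeric sequences."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


for model, value in accuracy.items():
    print(f"{model}: {value:.3f}")
```

These ratios give roughly 0.961 for ontological bagging, 0.888 for SVM, and 0.638 for GaussianNB.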

9. Discussion

The evaluation of image classification results is of paramount importance in order to determine the most suitable model for a given application. Classification performance is dependent on the types of images used and the domain application. Images are generally categorized into remote sensing images, natural images, medical images, and synthetic images; therefore, the performance of image classification approaches varies according to the type of images used. It is possible that a particular algorithm produces good results in remote sensing images but poor results in synthetic images. For this study, image classifications based on an ontology with deep learning were obtained for natural forest images. The classes used for the study were grassland, orchards, bare land, degraded forest, woodlands, sea, buildings, and shrubs. The results presented in Table 5 show that the ontological bagging algorithm based on linear SVM outclassed other models with respect to RMSE and accuracy. The high accuracy is attributed to the ability of the model to suppress the error propagation of hierarchical classifiers.

As presented in Table 7, our ontology-based model outperformed other models such as [11], which used ontology and an HMAX model to classify bird images into categories; ref. [1] for classifying vehicles into their respective categories; ref. [35] based on ontology and a CNN to classify natural images from an ImageNet dataset; and [36] for natural image classification through the transfer learning of images obtained from the Caltech-101 image dataset. However, the ontology-based classification model presented in [37] for classifying objects in urban and peri-urban areas slightly outperformed our model, with a classification score of 98%. A hybrid model of deep learning and SVM designed in [38] to perform image classification on the Fashion-MNIST, Cifar10, Cifar100, and Animal10 datasets also attained a classification accuracy of 99%. The reason could be attributed to the nature and quality of the image dataset generated by the data augmentation process used in the study.