LHDNN: Maintaining High Precision and Low Latency Inference of Deep Neural Networks on Encrypted Data

Abstract

1. Introduction

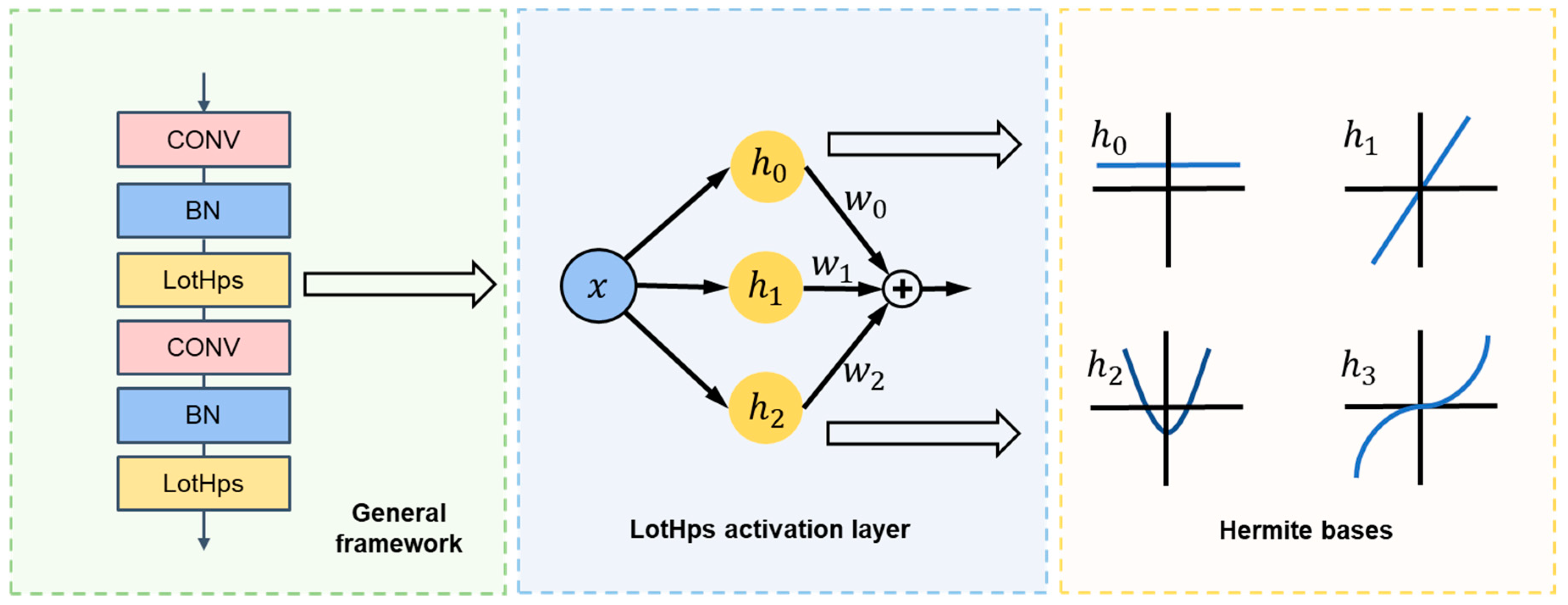

- We propose a low-degree Hermite deep neural network framework, called LHDNN, which uses a set of low-degree trainable Hermite polynomials (LotHps) as the activation layers of a DNN. In addition, LHDNN couples LotHps with a novel weight initialization and regularization module, which makes training more stable and improves the model's generalization ability.

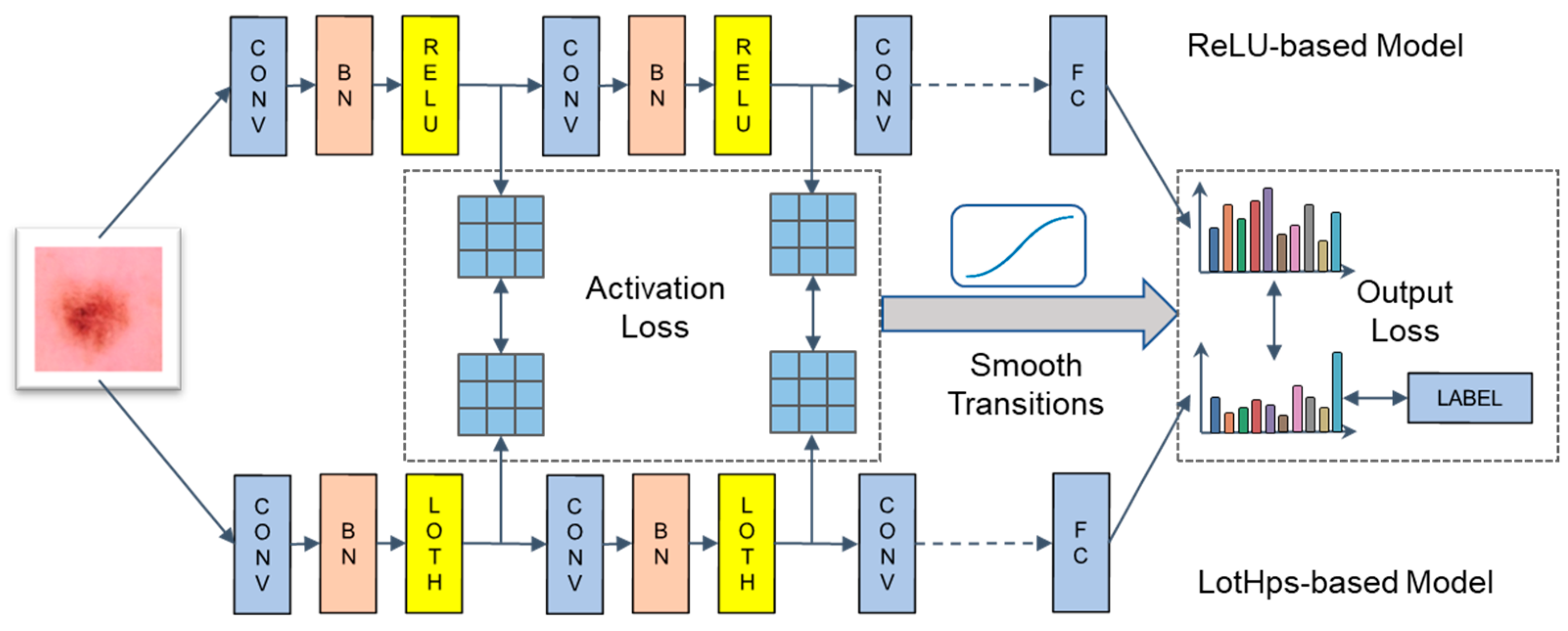

- We propose a variable-weighted difference training (VDT) strategy that uses the original ReLU-based model to guide the training of the LotHps-based model, thereby improving the accuracy of the LotHps-based model.

- Extensive experiments on the benchmark datasets MNIST, Skin-Cancer, and CIFAR-10 validate the advantages of LHDNN in both inference speed and accuracy on encrypted data.
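The contributions above describe LotHps as trainable low-degree Hermite polynomials used as activation layers, and VDT as using the ReLU-based model to guide the training of the LotHps-based model. The Python sketch below illustrates both ideas under assumptions the text does not fix: it uses the probabilists' Hermite polynomials He_n, a degree-2 example, and a VDT-style loss whose per-layer weights and linearly decaying schedule are hypothetical. `hermite_basis`, `HermiteActivation`, and `vdt_loss` are illustrative names, not the authors' implementation.

```python
def hermite_basis(x, degree):
    """Probabilists' Hermite polynomials He_0..He_degree evaluated at x,
    using the recurrence He_{n+1}(x) = x * He_n(x) - n * He_{n-1}(x)."""
    basis = [1.0, x]
    for n in range(1, degree):
        basis.append(x * basis[n] - n * basis[n - 1])
    return basis[: degree + 1]


class HermiteActivation:
    """Hypothetical LotHps-style layer: f(x) = sum_i c_i * He_i(x), with trainable c_i.
    It needs only additions and multiplications, so it can be evaluated under CKKS."""

    def __init__(self, coeffs):
        self.coeffs = list(coeffs)

    def forward(self, x):
        basis = hermite_basis(x, len(self.coeffs) - 1)
        return sum(c * h for c, h in zip(self.coeffs, basis))

    def grad_coeffs(self, x, upstream):
        # df/dc_i = He_i(x), so the coefficients are trained like ordinary weights.
        return [upstream * h for h in hermite_basis(x, len(self.coeffs) - 1)]


def vdt_loss(task_loss, relu_layers, herm_layers, layer_weights, epoch, total_epochs):
    """Hypothetical VDT-style objective: the task loss plus a weighted mean squared
    difference between matched layer outputs of the ReLU model (guide) and the
    LotHps model. The difference term decays linearly over training (assumed)."""
    decay = 1.0 - epoch / total_epochs
    penalty = 0.0
    for w, r, h in zip(layer_weights, relu_layers, herm_layers):
        penalty += w * sum((a - b) ** 2 for a, b in zip(r, h)) / len(r)
    return task_loss + decay * penalty


act = HermiteActivation([0.0, 0.5, 0.25])  # f(x) = 0.5 * x + 0.25 * (x^2 - 1)
print(act.forward(2.0))                     # 1.75
print(vdt_loss(1.0, [[1.0, 2.0]], [[1.0, 0.0]], [0.5], epoch=0, total_epochs=10))  # 2.0
```

Because each activation is a fixed-degree polynomial, its degree (rather than the data) determines how many ciphertext levels it consumes, which is why keeping the degree low matters for encrypted inference.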

2. Related Work

3. Preliminaries

3.1. Fully Homomorphic Encryption

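The CKKS scheme [23] consists of the following algorithms. Here $R = \mathbb{Z}[X]/(X^N + 1)$ with $N = M/2$, $R_q = R/qR$, and $q_\ell$ is the ciphertext modulus at level $\ell$ ($0 \le \ell \le L$); $\mathcal{HWT}(h)$ samples a polynomial with coefficients in $\{0, \pm 1\}$ and exactly $h$ nonzero coefficients, $\mathcal{ZO}(\rho)$ samples each coefficient as $0$ with probability $1 - \rho$ and as $\pm 1$ with probability $\rho/2$ each, and $\mathcal{DG}(\sigma^2)$ samples each coefficient from a discrete Gaussian with variance $\sigma^2$.

- $\mathsf{KeyGen}(1^\lambda)$: Given the security parameter $\lambda$, choose a power-of-two $M = M(\lambda, q_L)$, an integer $h = h(\lambda, q_L)$, an integer $P = P(\lambda, q_L)$, and a real number $\sigma = \sigma(\lambda, q_L)$.
  - Sample $s \leftarrow \mathcal{HWT}(h)$, output $\mathrm{sk} = (1, s)$.
  - Sample $a \leftarrow R_{q_L}$ and $e \leftarrow \mathcal{DG}(\sigma^2)$, output $\mathrm{pk} = (b, a) \in R_{q_L}^2$, where $b = -as + e \pmod{q_L}$.
  - Sample $a' \leftarrow R_{P \cdot q_L}$ and $e' \leftarrow \mathcal{DG}(\sigma^2)$, output $\mathrm{evk} = (b', a') \in R_{P \cdot q_L}^2$, where $b' = -a's + e' + P s^2 \pmod{P \cdot q_L}$.

- $\mathsf{Enc}_{\mathrm{pk}}(m)$: For $m \in R$, sample $v \leftarrow \mathcal{ZO}(0.5)$ and $e_0, e_1 \leftarrow \mathcal{DG}(\sigma^2)$, output $c = v \cdot \mathrm{pk} + (m + e_0, e_1) \pmod{q_L}$.

- $\mathsf{Dec}_{\mathrm{sk}}(c)$: For $c = (b, a) \in R_{q_\ell}^2$, output $m' = b + a \cdot s \pmod{q_\ell}$.

- $\mathsf{Add}(c_1, c_2)$: Given two ciphertexts $c_1, c_2 \in R_{q_\ell}^2$, output $c_{\mathrm{add}} = c_1 + c_2 \pmod{q_\ell}$.

- $\mathsf{Mult}_{\mathrm{evk}}(c_1, c_2)$: Given two ciphertexts $c_1 = (b_1, a_1)$ and $c_2 = (b_2, a_2) \in R_{q_\ell}^2$, let $(d_0, d_1, d_2) = (b_1 b_2,\ a_1 b_2 + a_2 b_1,\ a_1 a_2) \pmod{q_\ell}$, output $c_{\mathrm{mult}} = (d_0, d_1) + \lfloor P^{-1} \cdot d_2 \cdot \mathrm{evk} \rceil \pmod{q_\ell}$.

- $\mathsf{Rescale}_{\ell \to \ell'}(c)$: Given a ciphertext $c \in R_{q_\ell}^2$ at level $\ell$, output $c' = \lfloor (q_{\ell'}/q_\ell) \cdot c \rceil \pmod{q_{\ell'}}$.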
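To make the algorithms above concrete, the following toy Python sketch implements key generation, encryption, decryption, addition, multiplication with relinearization, and rescaling over $R = \mathbb{Z}[X]/(X^N+1)$. It is a minimal illustration under stated assumptions, not a usable or secure CKKS implementation: the parameters (`N`, `DELTA`, `Q0`, `L`, `P`, `H`, `SIGMA`) are hypothetical toy values, the modulus chain is taken as $q_\ell = \Delta^\ell q_0$, the discrete Gaussian is replaced by a rounded Gaussian, and values are placed directly in the polynomial coefficients instead of going through the canonical embedding.

```python
import random

# Toy, INSECURE parameters chosen only for illustration (hypothetical values).
N = 8            # ring dimension: R = Z[X]/(X^N + 1)
DELTA = 2 ** 20  # scaling factor; also the per-level factor of the modulus chain
Q0 = 2 ** 30     # base modulus q_0
L = 2            # top level
P = 2 ** 70      # special modulus for the evaluation key (P >= q_L)
H = 4            # Hamming weight of the secret key
SIGMA = 3.2      # standard deviation of the error distribution

def q(level):
    """Modulus chain q_l = DELTA^l * q_0."""
    return DELTA ** level * Q0

def poly_mul(a, b, mod):
    """Negacyclic product in Z_mod[X]/(X^N + 1)."""
    res = [0] * N
    for i in range(N):
        for j in range(N):
            if i + j < N:
                res[i + j] += a[i] * b[j]
            else:
                res[i + j - N] -= a[i] * b[j]  # X^N = -1
    return [x % mod for x in res]

def poly_add(a, b, mod):
    return [(x + y) % mod for x, y in zip(a, b)]

def hwt(h):
    """HWT(h): h random positions set to +1 or -1, the rest 0."""
    s = [0] * N
    for i in random.sample(range(N), h):
        s[i] = random.choice((-1, 1))
    return s

def zo():
    """ZO(0.5): 0 with probability 1/2, +1 or -1 with probability 1/4 each."""
    return [random.choice((-1, 0, 0, 1)) for _ in range(N)]

def dg():
    """Rounded Gaussian, used here as a stand-in for DG(sigma^2)."""
    return [round(random.gauss(0, SIGMA)) for _ in range(N)]

def keygen():
    s = hwt(H)
    a, e = [random.randrange(q(L)) for _ in range(N)], dg()
    pk = (poly_add(poly_mul([-v for v in a], s, q(L)), e, q(L)), a)  # b = -a*s + e
    big = P * q(L)
    a2, e2 = [random.randrange(big) for _ in range(N)], dg()
    neg_a2s, s_sq = poly_mul([-v for v in a2], s, big), poly_mul(s, s, big)
    b2 = [(u + w + P * z) % big for u, w, z in zip(neg_a2s, e2, s_sq)]  # -a's + e' + P*s^2
    return s, pk, (b2, a2)

def encrypt(pk, values):
    m = [round(DELTA * val) for val in values]  # coefficient encoding (no canonical embedding)
    v, e0, e1 = zo(), dg(), dg()
    b = poly_add(poly_add(poly_mul(v, pk[0], q(L)), m, q(L)), e0, q(L))
    a = poly_add(poly_mul(v, pk[1], q(L)), e1, q(L))
    return (b, a, L)

def decrypt(s, ct):
    b, a, lvl = ct
    mod = q(lvl)
    raw = poly_add(b, poly_mul(a, s, mod), mod)
    return [(x - mod if x > mod // 2 else x) / DELTA for x in raw]  # centre, then unscale

def add(c1, c2):
    lvl = c1[2]
    return (poly_add(c1[0], c2[0], q(lvl)), poly_add(c1[1], c2[1], q(lvl)), lvl)

def mult(evk, c1, c2):
    (b1, a1, lvl), (b2, a2, _) = c1, c2
    mod, big = q(lvl), P * q(lvl)
    d0 = poly_mul(b1, b2, mod)
    d1 = poly_add(poly_mul(a1, b2, mod), poly_mul(a2, b1, mod), mod)
    d2 = poly_mul(a1, a2, mod)
    # Relinearization: add round(P^-1 * d2 * evk), reduced modulo q_l.
    k0 = [((x + P // 2) // P) % mod for x in poly_mul(d2, evk[0], big)]
    k1 = [((x + P // 2) // P) % mod for x in poly_mul(d2, evk[1], big)]
    return (poly_add(d0, k0, mod), poly_add(d1, k1, mod), lvl)

def rescale(ct):
    """Level l -> l - 1: multiply by q_{l-1}/q_l = 1/DELTA and round."""
    b, a, lvl = ct
    mod = q(lvl - 1)
    return ([((x + DELTA // 2) // DELTA) % mod for x in b],
            [((x + DELTA // 2) // DELTA) % mod for x in a], lvl - 1)

random.seed(0)
sk, pk, evk = keygen()
x = [0.5, -0.25, 0.1, 0, 0, 0, 0, 0.3]
y = [1.0, 0.5, 0, 0, 0, 0, 0, 0]
cx, cy = encrypt(pk, x), encrypt(pk, y)
print(decrypt(sk, add(cx, cy)))                 # approximately x + y
print(decrypt(sk, rescale(mult(evk, cx, cy))))  # approximately [0.35, 0, -0.025, 0.05, 0, 0, 0, 0.3]
```

The product is taken in $R$, so $x \cdot y$ is the negacyclic polynomial product; rescaling after `mult` brings the scale back from $\Delta^2$ to $\Delta$ and consumes one level, which is exactly the level budget that bootstrapping later restores.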

3.2. Bootstrapping of CKKS

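- ModRaise: If a ciphertext $\mathrm{ct} \in R_q^2$ encrypts the plaintext $m$, then $\langle \mathrm{ct}, \mathrm{sk} \rangle = t = q \cdot I + m \pmod{Q}$, where $I \in R$ has small coefficients (bounded by $K = O(\sqrt{h})$ with high probability), and $h$ is the Hamming weight of sk, i.e., its number of nonzero coefficients. The purpose of ModRaise is to raise the ciphertext modulus from $q$ to a much larger modulus $Q \gg q$, so that enough levels are available for the remaining steps.

- CoeffToSlot: Removing $q \cdot I$ requires a modular reduction of the coefficients of $t$, but this reduction must be approximated with homomorphic additions and multiplications, which act on the values in the slots rather than on the coefficients. CoeffToSlot therefore moves the coefficients into the slots, which is equivalent to homomorphically evaluating the decoding map. Writing the encoding as $z = U \cdot t$ with $U = [U_0 \mid U_1]$, where $U_0 = (\zeta_j^{\,i})_{0 \le j < N/2,\ 0 \le i < N/2}$, $U_1 = (\zeta_j^{\,i})_{0 \le j < N/2,\ N/2 \le i < N}$, and $\zeta_j = \zeta^{5^j}$ for a primitive $2N$-th root of unity $\zeta$, CoeffToSlot homomorphically computes $z'_k = \frac{1}{N}\left(\bar{U}_k^{T} \cdot z + U_k^{T} \cdot \bar{z}\right)$ for $k = 0, 1$, which yields two ciphertexts encrypting the vectors $z'_0 = (t_0, \dots, t_{N/2-1})$ and $z'_1 = (t_{N/2}, \dots, t_{N-1})$.

- EvalMod: The goal of EvalMod is to homomorphically evaluate the modular reduction $[t]_q = t \bmod q$ in each slot. Modular reduction is not a polynomial function, but it is periodic: for $t = q \cdot I + m$ with $|m| \ll q$, we have $\sin(2\pi t/q) = \sin(2\pi m/q) \approx 2\pi m/q$, so $[t]_q \approx \frac{q}{2\pi} \sin\left(\frac{2\pi t}{q}\right)$. A Taylor polynomial is then used to approximate the sine and cosine of the reduced angle $2\pi t/(2^r q)$, and applying the double-angle formulas $\sin 2\theta = 2 \sin\theta \cos\theta$ and $\cos 2\theta = \cos^2\theta - \sin^2\theta$ $r$ times recovers $\sin(2\pi t/q)$, giving the final approximation polynomial while reducing the calculation cost [25].

- SlotToCoeff: SlotToCoeff is the inverse of CoeffToSlot: it moves the values in the slots back into the coefficients of the polynomial. That is, given the two ciphertexts produced by EvalMod, which encrypt $z''_0 \approx ([t_0]_q, \dots, [t_{N/2-1}]_q)$ and $z''_1 \approx ([t_{N/2}]_q, \dots, [t_{N-1}]_q)$, it homomorphically computes the linear transformation $z = U_0 \cdot z''_0 + U_1 \cdot z''_1$, which is again an encoding of $m$, now under a larger ciphertext modulus.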
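The EvalMod step can be checked numerically in plaintext. The Python sketch below evaluates $\frac{q}{2\pi}\sin(2\pi t/q)$ using only a truncated Taylor series of sine and cosine at the reduced angle followed by $r$ double-angle steps; in real bootstrapping the same polynomial is evaluated on encrypted slots. The function names and the choices `r = 6` and `terms = 8` are hypothetical, and [25] formulates this step with the complex exponential, which is equivalent to the (cos, sin) pair used here.

```python
import math


def taylor_sin_cos(theta, terms=8):
    """Truncated Taylor series of sin and cos around 0 (accurate for small |theta|)."""
    s, c = 0.0, 0.0
    for k in range(terms):
        s += (-1) ** k * theta ** (2 * k + 1) / math.factorial(2 * k + 1)
        c += (-1) ** k * theta ** (2 * k) / math.factorial(2 * k)
    return s, c


def eval_mod(t, q, r=6, terms=8):
    """Approximate [t]_q for t = q*I + m with |m| << q, as EvalMod does per slot."""
    theta = 2 * math.pi * t / (2 ** r * q)  # reduced angle 2*pi*t / (2^r * q)
    s, c = taylor_sin_cos(theta, terms)
    for _ in range(r):                      # double-angle steps: theta -> 2*theta
        s, c = 2 * s * c, c * c - s * s
    return q / (2 * math.pi) * s            # (q / 2pi) * sin(2*pi*t / q), approximately m


q = 1024.0
for t in (5 * q + 3, 2 * q - 4):            # t = q*I + m after ModRaise
    print(t, eval_mod(t, q))                # approximately 3 and -4
```

The result differs from $m$ by about $(2\pi)^2 m^3 / (6q^2)$, the error of replacing $\sin x$ by $x$, which is why the approximation is only accurate when $|m| \ll q$.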

4. The Proposed Method

4.1. Low-Order Trainable Hermite Polynomials (LotHps) Activation Layer

4.2. Weight Initialization and Regularization Module

4.3. Variable-Weighted Difference Training (VDT) Strategy

5. Implementation Details

5.1. DataSets

- MNIST [29]: The MNIST dataset consists of single-channel (grayscale) images of the 10 handwritten digits 0–9, each 28 × 28 pixels. It contains 60,000 images in the training set and 10,000 images in the test set, 70,000 images in total.

- Skin-Cancer [30]: The Skin-Cancer dataset consists of 10,015 medical images of different types of skin cancer, belonging to seven categories. We resized all images to 32 × 32 pixels and split the dataset into a training set and a test set at an 8:2 ratio. Because the classes are severely imbalanced, we applied data augmentation and resampling to the training set.

- CIFAR-10 [31]: The CIFAR-10 dataset consists of 60,000 color images of 10 object classes, each 32 × 32 pixels, with 50,000 images in the training set and 10,000 images in the test set. The training set is augmented with random rotation and random cropping.
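The Skin-Cancer preprocessing above (an 8:2 split, then resampling of the imbalanced training data) can be sketched in Python as follows. The text does not say how the split or the resampling was done, so this sketch assumes a per-class (stratified) split and random oversampling of every class up to the size of the largest one; `stratified_split` and `oversample` are hypothetical helpers that operate on sample indices only.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_ratio=0.8, seed=0):
    """Split sample indices into train/test, keeping each class at train_ratio."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    train, test = [], []
    for idxs in by_class.values():
        rng.shuffle(idxs)
        cut = round(len(idxs) * train_ratio)
        train += idxs[:cut]
        test += idxs[cut:]
    return train, test


def oversample(indices, labels, seed=0):
    """Randomly duplicate training indices until every class matches the largest one."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i in indices:
        by_class[labels[i]].append(i)
    target = max(len(v) for v in by_class.values())
    out = []
    for idxs in by_class.values():
        out += idxs + [rng.choice(idxs) for _ in range(target - len(idxs))]
    rng.shuffle(out)
    return out


labels = [0] * 80 + [1] * 15 + [2] * 5      # toy imbalanced labels
train, test = stratified_split(labels)
balanced = oversample(train, labels)
print(len(train), len(test), len(balanced))  # 80 20 192
```

Resampling only the training indices keeps the test set untouched, so the reported test accuracy still reflects the original class distribution.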

5.2. Model Architecture

5.3. Approximation Interval of Weight Initialization

5.4. Security Parameter Setting

5.5. Inference Optimization

6. Evaluation

6.1. Plaintext Training

6.1.1. MNIST Dataset

6.1.2. Skin-Cancer Dataset

6.1.3. CIFAR-10 Dataset

6.2. Ciphertext Inference

6.2.1. Analysis and Comparison of Ciphertext Inference Results

6.2.2. Analysis of Decryption Error

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 1–74.

- [2] Liu, X.; Zheng, Y.; Yuan, X.; Yi, X. Securely outsourcing neural network inference to the cloud with lightweight techniques. IEEE Trans. Dependable Secur. Comput. 2022, 20, 620–636.

- [3] Boulemtafes, A.; Derhab, A.; Challal, Y. A review of privacy-preserving techniques for deep learning. Neurocomputing 2020, 384, 21–45.

- [4] Gentry, C. Fully homomorphic encryption using ideal lattices. In Proceedings of the Forty-First Annual ACM Symposium on Theory of Computing, Bethesda, MD, USA, 31 May–2 June 2009; pp. 169–178.

- [5] Falcetta, A.; Roveri, M. Privacy-preserving deep learning with homomorphic encryption: An introduction. IEEE Comput. Intell. Mag. 2022, 17, 14–25.

- [6] Li, M.; Chow, S.S.M.; Hu, S.; Yan, Y.; Shen, C.; Wang, Q. Optimizing Privacy-Preserving Outsourced Convolutional Neural Network Predictions. IEEE Trans. Dependable Secur. Comput. 2022, 19, 1592–1604.

- [7] Wang, J.; He, D.; Castiglione, A.; Gupta, B.B.; Karuppiah, M.; Wu, L. PCNNCEC: Efficient and privacy-preserving convolutional neural network inference based on cloud-edge-client collaboration. IEEE Trans. Netw. Sci. Eng. 2022.

- [8] Zhang, Q.; Xin, C.; Wu, H. SecureTrain: An Approximation-Free and Computationally Efficient Framework for Privacy-Preserved Neural Network Training. IEEE Trans. Netw. Sci. Eng. 2022, 9, 187–202.

- [9] Dowlin, N.; Gilad-Bachrach, R.; Laine, K.; Lauter, K.; Naehrig, M.; Wernsing, J. CryptoNets: Applying Neural Networks to Encrypted Data with High Throughput and Accuracy. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016.

- [10] Chabanne, H.; De Wargny, A.; Milgram, J.; Morel, C.; Prouff, E. Privacy-preserving classification on deep neural network. Cryptol. Eprint Arch. 2017, 2017, 35.

- [11] Mohassel, P.; Zhang, Y. SecureML: A system for scalable privacy-preserving machine learning. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2017; pp. 19–38.

- [12] Hesamifard, E.; Takabi, H.; Ghasemi, M. Deep Neural Networks Classification over Encrypted Data. In Proceedings of the 9th ACM Conference on Data and Application Security and Privacy (CODASPY), Richardson, TX, USA, 25–27 March 2019; pp. 97–108.

- [13] Lee, J.; Lee, E.; Lee, J.-W.; Kim, Y.; Kim, Y.-S.; No, J.-S. Precise approximation of convolutional neural networks for homomorphically encrypted data. arXiv 2021, arXiv:2105.10879.

- [14] Lee, J.-W.; Kang, H.; Lee, Y.; Choi, W.; Eom, J.; Deryabin, M.; Lee, E.; Lee, J.; Yoo, D.; Kim, Y.-S.; et al. Privacy-Preserving Machine Learning With Fully Homomorphic Encryption for Deep Neural Network. IEEE Access 2022, 10, 30039–30054.

- [15] Bourse, F.; Minelli, M.; Minihold, M.; Paillier, P. Fast homomorphic evaluation of deep discretized neural networks. In Proceedings of the Annual International Cryptology Conference, Santa Barbara, CA, USA, 19–23 August 2018; pp. 483–512.

- [16] Sanyal, A.; Kusner, M.; Gascon, A.; Kanade, V. TAPAS: Tricks to accelerate (encrypted) prediction as a service. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 4490–4499.

- [17] Lou, Q.; Jiang, L. SHE: A Fast and Accurate Deep Neural Network for Encrypted Data. In Proceedings of the 33rd Conference on Neural Information Processing Systems (NeurIPS), Vancouver, BC, Canada, 8–14 December 2019.

- [18] Folkerts, L.; Gouert, C.; Tsoutsos, N.G. REDsec: Running Encrypted DNNs in Seconds. IACR Cryptol. Eprint Arch. 2021, 2021, 1100.

- [19] Meftah, S.; Tan, B.H.M.; Mun, C.F.; Aung, K.M.M.; Veeravalli, B.; Chandrasekhar, V. DOReN: Toward Efficient Deep Convolutional Neural Networks with Fully Homomorphic Encryption. IEEE Trans. Inf. Forensics Secur. 2021, 16, 3740–3752.

- [20] Meftah, S.; Tan, B.H.M.; Aung, K.M.M.; Yuxiao, L.; Jie, L.; Veeravalli, B. Towards high performance homomorphic encryption for inference tasks on CPU: An MPI approach. Future Gener. Comput. Syst. 2022, 134, 13–21.

- [21] Alsaedi, E.M.; Farhan, A.K.; Falah, M.W.; Oleiwi, B.K. Classification of Encrypted Data Using Deep Learning and Legendre Polynomials. In Proceedings of the ICR’22 International Conference on Innovations in Computing Research, Athens, Greece, 29–31 August 2022; pp. 331–345.

- [22] Yagyu, K.; Takeuchi, R.; Nishigaki, M.; Ohki, T. Improving Classification Accuracy by Optimizing Activation Function for Convolutional Neural Network on Homomorphic Encryption. In Advances on Broad-Band Wireless Computing, Communication and Applications: Proceedings of the 17th International Conference on Broad-Band Wireless Computing, Communication and Applications (BWCCA-2022), Tirana, Albania, 27–29 October 2022; pp. 102–113.

- [23] Cheon, J.H.; Kim, A.; Kim, M.; Song, Y. Homomorphic encryption for arithmetic of approximate numbers. In Proceedings of the International Conference on the Theory and Application of Cryptology and Information Security, Hong Kong, China, 3–7 December 2017; pp. 409–437.

- [24] Cheon, J.H.; Han, K.; Kim, A.; Kim, M.; Song, Y. A full RNS variant of approximate homomorphic encryption. In Proceedings of the International Conference on Selected Areas in Cryptography, Calgary, AB, Canada, 15–17 August 2018; pp. 347–368.

- [25] Cheon, J.H.; Han, K.; Kim, A.; Kim, M.; Song, Y. Bootstrapping for approximate homomorphic encryption. In Proceedings of the Annual International Conference on the Theory and Applications of Cryptographic Techniques, Tel Aviv, Israel, 29 April–3 May 2018; pp. 360–384.

- [26] Ma, L.; Khorasani, K. Constructive feedforward neural networks using Hermite polynomial activation functions. IEEE Trans. Neural Netw. 2005, 16, 821–833.

- [27] Bottou, L. Stochastic Gradient Descent Tricks. In Neural Networks: Tricks of the Trade; Springer: Cham, Switzerland, 2012; pp. 421–436.

- [28] Baruch, M.; Drucker, N.; Greenberg, L.; Moshkowich, G. A methodology for training homomorphic encryption friendly neural networks. In Proceedings of the International Conference on Applied Cryptography and Network Security, Rome, Italy, 20–23 June 2022; pp. 536–553.

- [29] LeCun, Y.; Cortes, C.; Burges, C. The MNIST Database of Handwritten Digits. 1998. Available online: http://yann.lecun.com/exdb/mnist/ (accessed on 18 October 2022).

- [30] Tschandl, P.; Rosendahl, C.; Kittler, H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci. Data 2018, 5, 180161.

- [31] Krizhevsky, A.; Nair, V.; Hinton, G. CIFAR-10; Canadian Institute for Advanced Research: Toronto, ON, Canada, 2010.

- [32] Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

- [33] He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- [34] Aharoni, E.; Adir, A.; Baruch, M.; Drucker, N.; Ezov, G.; Farkash, A.; Greenberg, L.; Masalha, R.; Moshkowich, G.; Murik, D.; et al. HeLayers: A Tile Tensors Framework for Large Neural Networks on Encrypted Data. arXiv 2020, arXiv:2011.01805.

- [35] Garimella, K.; Jha, N.K.; Reagen, B. Sisyphus: A cautionary tale of using low-degree polynomial activations in privacy-preserving deep learning. arXiv 2021, arXiv:2107.12342.

- [36] Wang, Z.; Li, P.; Hou, R.; Li, Z.; Cao, J.; Wang, X.; Meng, D. HE-Booster: An Efficient Polynomial Arithmetic Acceleration on GPUs for Fully Homomorphic Encryption. IEEE Trans. Parallel Distrib. Syst. 2023, 34, 1067–1081.

- [37] Chou, E.; Beal, J.; Levy, D.; Yeung, S.; Haque, A.; Fei-Fei, L. Faster CryptoNets: Leveraging sparsity for real-world encrypted inference. arXiv 2018, arXiv:1811.09953.

- [38] Lee, E.; Lee, J.-W.; Lee, J.; Kim, Y.-S.; Kim, Y.; No, J.-S.; Choi, W. Low-complexity deep convolutional neural networks on fully homomorphic encryption using multiplexed parallel convolutions. In Proceedings of the International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; pp. 12403–12422.

- [39] Al Badawi, A.; Jin, C.; Lin, J.; Mun, C.F.; Jie, S.J.; Tan, B.H.M.; Nan, X.; Aung, K.M.M.; Chandrasekhar, V.R. Towards the AlexNet moment for homomorphic encryption: HCNN, the first homomorphic CNN on encrypted data with GPUs. IEEE Trans. Emerg. Top. Comput. 2020, 9, 1330–1343.

| Dataset | Input Shape | Model | Params (MB) | Multiplicative Depth |
|---|---|---|---|---|
| MNIST | 1 × 28 × 28 | CNN-6 | 0.57 | 5 |
| Skin-Cancer | 3 × 32 × 32 | AlexNet | 88.74 | 7 |
| CIFAR-10 | 3 × 32 × 32 | ResNet-20 | 1.04 | 19 |

| Model | Integer Precision | Fractional Precision | Evaluation Level | Degree | Bootstrapping Level |
|---|---|---|---|---|---|
| CNN-6 | 128 | 10 | 50 | 14 | - |
| AlexNet | 128 | 10 | 39 | 20 | - |
| ResNet-20 | 128 | 12 | 48 | 9 | 13 |

| Model | Batch Size | Tile Shape | Packing Mode |
|---|---|---|---|
| CNN-6 | 64 | F-W-H-C-B | |
| AlexNet | 8 | C-W-H-F-B | |
| ResNet-20 | 16 | C-W-H-F-B | |

| Dataset | Method | Model | Replacement Accuracy (%) | Original Accuracy (%) | Accuracy Difference |
|---|---|---|---|---|---|
| MNIST | CryptoNets [9] | C-A-P-A-F | 98.95 | 99.28 | −0.33 |
| | PPCN [10] | [C-B-A-C-B-A-P]\*3-F-F | 99.30 | 99.59 | −0.29 |
| | CryptoDL [12] | [[C-B-A]\*2-P-C-B-A]\*2-F-F | 99.52 | 99.56 | −0.04 |
| | Approx-ReLU [21] | [C-B-A-C-B-A-P]\*2-F-A-F | 99.54 | 99.57 | −0.03 |
| | QuaiL [35] | LeNet-5 | 99.26 | 99.32 | −0.06 |
| | LHDNN | [C-B-A-C-B-A-P]\*2-F-A-F | 99.62 | 99.57 | 0.05 |
| Skin-Cancer | CryptoNets [9] | AlexNet | 67.26 | 81.14 | −13.88 |
| | Approx-ReLU [21] | AlexNet | 75.85 | 81.14 | −5.29 |
| | LHDNN (no VDT) | AlexNet | 78.08 | 81.14 | −3.06 |
| | LHDNN | AlexNet | 81.10 | 81.14 | −0.04 |
| CIFAR-10 | CryptoDL [12] | CNN-10 | 91.50 | 94.20 | −2.70 |
| | PACN [13] | VGG-16 | 91.87 | 91.99 | −0.12 |
| | QuaiL [35] | VGG-11 | 82.85 | 90.46 | −7.61 |
| | QuaiL [35] | ResNet-18 | 85.72 | 93.21 | −7.49 |
| | BDGM [28] | AlexNet | 87.20 | 90.10 | −2.90 |
| | LHDNN (no VDT) | ResNet-20 | 88.97 | 91.58 | −2.61 |
| | LHDNN | ResNet-20 | 91.54 | 91.58 | −0.04 |

| Metric | Dataset | Model | Context Initialization | Model Encoding | Input Encryption | Output Decryption | Inference |
|---|---|---|---|---|---|---|---|
| Time | MNIST (b = 64) | CNN-6 | 1.39 | 0.11 | 0.41 | 0.0045 | 142.62 |
| | Skin-Cancer (b = 8) | AlexNet | 2.97 | 2.70 | 0.75 | 0.0049 | 244.90 |
| | CIFAR-10 (b = 16) | ResNet-20 | 8.18 | 0.53 | 0.18 | 0.0233 | 1027.96 |
| Memory | MNIST (b = 64) | CNN-6 | 1.67 | 0.0021 | 2.26 | 0.0005 | 19.12 |
| | Skin-Cancer (b = 8) | AlexNet | 5.13 | 0.1735 | 3.94 | 0.0005 | 71.91 |
| | CIFAR-10 (b = 16) | ResNet-20 | 11.62 | 0.0035 | 1.05 | 0.0002 | 83.13 |

| Dataset | Method | Model | Accuracy (%) | Amortized Time | Memory Usage | Security Level (bits) |
|---|---|---|---|---|---|---|
| MNIST | CryptoNets [9] | CNN-4 | 98.95 | 249.6 | N/A | 80 |
| | FCryptoNets [37] | CNN-4 | 98.71 | 39.1 | N/A | 128 |
| | CryptoDL [12] | CNN-4 | 99.25 | 148.9 | N/A | 80 |
| | HCNN-CPU [39] | CNN-3 | 99.00 | 90.32 | N/A | 76 |
| | LHDNN | CNN-6 | 99.62 | 2.23 | 6.91G | 128 |
| Skin-Cancer | Approx-ReLU [21] | AlexNet | 75.85 | 45.68 | 32.89G | 128 |
| | LHDNN | AlexNet | 81.10 | 30.61 | 24.58G | 128 |
| CIFAR-10 | FCryptoNets [37] | CNN-8 | 75.99 | 22372 | N/A | 128 |
| | CryptoDL [12] | CNN-9 | 91.50 | 11686 | N/A | 80 |
| | PACN [14] | ResNet-20 | 92.43 ± 2.65 | 10602 | 172G | 111.6 |
| | LDCN [38] | ResNet-20 | 91.31 | 79.46 | N/A | 128 |
| | LHDNN | ResNet-20 | 92.28 ± 2.58 | 64.25 | 34.63G | 128 |
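The text does not define the amortized time, but the LHDNN entries agree with dividing the batch inference time from the timing table by the batch size $b$ (MNIST $b = 64$, Skin-Cancer $b = 8$, CIFAR-10 $b = 16$), which suggests the following reading:

$$
t_{\text{amortized}} = \frac{t_{\text{inference}}}{b}:\qquad
\frac{142.62}{64} \approx 2.23,\qquad
\frac{244.90}{8} \approx 30.61,\qquad
\frac{1027.96}{16} \approx 64.25
$$

Under this reading, larger batches amortize the fixed cost of each homomorphic operation over more packed samples, which is one reason the MNIST batch of 64 reaches the lowest per-image time.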

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qian, J.; Zhang, P.; Zhu, H.; Liu, M.; Wang, J.; Ma, X. LHDNN: Maintaining High Precision and Low Latency Inference of Deep Neural Networks on Encrypted Data. Appl. Sci. 2023, 13, 4815. https://doi.org/10.3390/app13084815
