1. Introduction

Color is essential information for understanding the world around us, as the human eye is significantly more sensitive to color than to shades of gray. Color images therefore provide richer visual information. Image colorization refers to assigning colors to each pixel of a grayscale image. It is now widely applied in fields such as artistic creation [1], remote sensing [2,3], medical imaging [4], comics [5], and infrared imaging [6]. Infrared radiation is emitted by objects and can be captured by infrared cameras to produce infrared images. Infrared imaging technology originated in the military field, where it was used in products such as aiming devices and guidance heads. As the technology has matured, its application scenarios have gradually expanded, and infrared cameras are now used in agriculture [7], medicine [8], the electric power industry [9], and other fields. Infrared thermal imaging supports various types of detection, such as equipment failure detection and material defect detection [10], and is widely used in the steel industry. Infrared imaging captures information that is invisible to the human eye, which makes it valuable. However, infrared images captured at night are grayscale images that lack accuracy and detail, which limits their interpretability and usability and makes post-processing difficult. For example, when detecting dim infrared targets in complex marine environments, background residue and incomplete targets are common problems [11]; infrared image colorization can help separate targets from the background and thereby improve detection accuracy. Because of the potential of infrared images in these applications, infrared image colorization has attracted considerable attention.

There are two main categories of traditional colorization methods: user-assisted techniques based on manual painting and reference example-based methods. The former method relies on manually applying partial colorization to the image, which can be subject to human subjective bias and ultimately affect the quality of the coloring results. The latter relies heavily on the reference image used, and using an inappropriate example can lead to colorization that is grossly mismatched with the actual colors in the image. Additionally, the complexity and unique characteristics of infrared images make it difficult for these methods to fully leverage the information within the image, resulting in low-quality colorization results. Therefore, these two traditional methods are not well-suited for the task of infrared image colorization.

In recent years, deep learning has been widely applied in computer vision. It largely removes the complex human–machine interaction that the two traditional approaches require: the network learns to perform the coloring task autonomously, and deep neural networks have achieved good results in image colorization [12,13,14,15].

Colorizing infrared images is more challenging than colorizing grayscale images because both luminance and chromaticity must be estimated. Berg et al. [16] proposed two methods to estimate color information for infrared images: the first estimates luminance and chromaticity jointly, while the second first predicts luminance and then predicts chromaticity using grayscale image colorization. However, the lack of paired NIR and RGB datasets limited their evaluation to traffic scenes. Nyberg et al. [17] addressed this issue by using unsupervised learning with CycleGAN to generate corresponding images, but the approach suffered from distortion. Xian et al. [18] tackled the modality gap between infrared and visible images by generating grayscale images as auxiliary information and applying a point-by-point transformation to single-channel infrared images.

Traditional grayscale colorization methods have complicated workflows and cannot be applied directly to NIR image colorization. Owing to their distinctive structure and adversarial training mechanism, GANs have been widely applied to infrared image colorization [19,20,21,22]. Wei et al. [19] proposed an improved Dual GAN architecture that uses two deep networks to learn the translation between NIR and RGB images without requiring paired or labeled images. Xu et al. [20] proposed a DenseUnet generative adversarial network for colorizing near-infrared facial images: DenseNet extracts facial features effectively by increasing network depth, while U-Net preserves important facial details through skip connections. With an improved network structure and loss constraints, this method reduces facial shape distortion and enriches facial detail in near-infrared facial images. Although converting infrared images to color images has progressed, semantic encoding entanglement and geometric distortion remain unresolved. Luo et al. [21] therefore proposed PearlGAN, a top-down generative adversarial network with attention and gradient alignment. By introducing an attention-guided module and an attention loss, and adding a gradient alignment loss, they reduced the ambiguity of semantic encoding and improved the edge consistency between input and output images. However, because existing methods understand image features incompletely and acquire limited information, color leakage and loss of detail persist. Li et al. [22] introduced a multi-scale attention mechanism into infrared and visible image fusion, extracting attention maps from multi-scale features of both image types and adding them to a GAN to preserve more details. Liu et al. [23] also addressed this issue with a deep network for infrared and visible image fusion that consists of a feature learning module and a fusion learning mechanism, together with an edge-guided attention mechanism on multi-scale features that directs attention to common structures during fusion. However, because the network is trained with edge attention, its intermediate features tend to focus on texture details during image reconstruction, which makes it less effective when the source image contains substantial noise.

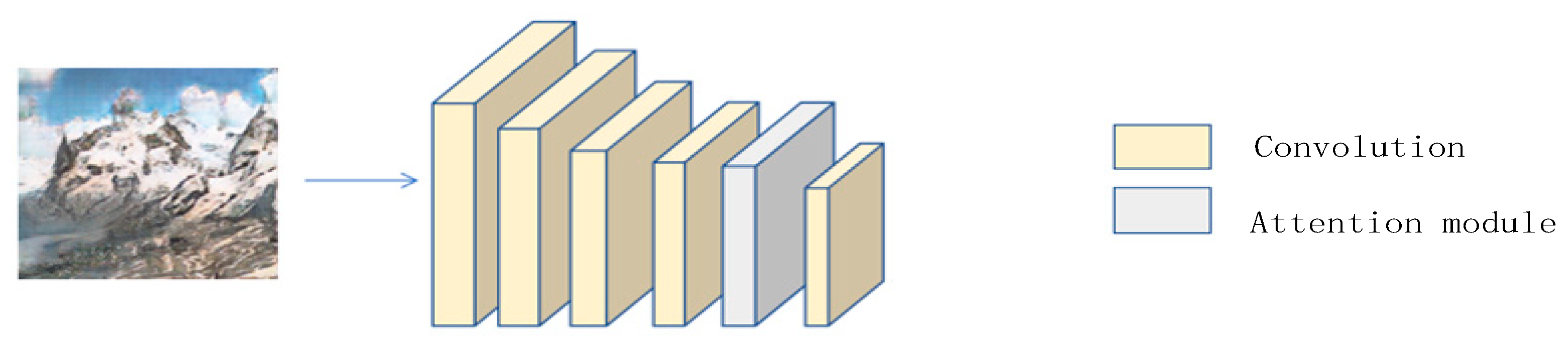

Building on these methods, this paper proposes an infrared image colorization algorithm based on a CGAN with multi-scale feature fusion and an attention mechanism. CGAN is an extension of GAN [24] that controls the image generation process by adding conditional constraints, resulting in better image quality and more detailed output. We improve the CGAN generator by adding to its U-Net a multi-scale convolution module with three types of convolution that fuses features of different scales. This enhances the network's feature extraction ability, speeds up learning, improves semantic understanding, and addresses color leakage and blurring during colorization. We add to the discriminator an attention module containing both channel attention and spatial attention, which filters the feature layers from a channel perspective and selects important regions of the feature map. This lets the network focus on useful features and ignore unnecessary ones, and also improves the discriminator's effectiveness and efficiency. Combining the improved generator and discriminator yields a new network with multi-scale feature fusion and attention. Finally, we tested the proposed method on a near-infrared image dataset, which combines the advantages of infrared and visible images: it preserves more of the details, edges, and texture features of visible-light images while retaining the benefits of infrared images.

2. Related Work

2.1. Generative Adversarial Network

GAN is inspired by game theory, specifically the two-player zero-sum game: the generator (G) and the discriminator (D) are trained against each other, so training G and D is a game between the two networks [25].

The generator produces data that resemble the real data, and the discriminator scores these data: the higher the score, the closer the discriminator considers the image to be to a real image. The generator therefore learns to produce images that are as close to the real data as possible, so that the discriminator gives them high scores. The discriminator, in turn, learns to separate real data from generated data as well as possible, giving generated data a low score (as close to 0 as possible) and real data a high score (as close to 1 as possible).

The network structure of GAN is shown in Figure 1.

The input noise z passes through the generator G, which produces generated data G(z). The generator wants to fool the discriminator and obtain a high score; that is, it wants D(G(z)) to be as close to 1 as possible. The discriminator D distinguishes real from fake: it gives the generated data a low score and the real data a high score, so it wants D(G(z)) to be as close to 0 as possible and D(x) to be as close to 1 as possible, thereby correctly separating real and generated data.

The ultimate goal of training is a well-performing generator whose outputs are so close to the real data that the discriminator can no longer tell them apart.

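The objective function of GAN is as follows [25]:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right] \quad (1)$$

where z is noise and x is real data.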

In Equation (1), the discriminator aims to distinguish real data from generated data as well as possible. It scores each input image: the closer the score is to 1, the more likely the discriminator considers the input to be real data; the closer the score is to 0, the more likely it considers the input to be generated data. The discriminator therefore maximizes both log D(x) and log(1 − D(G(z))). The generator aims to produce images close to real ones so that the discriminator gives them a high score; it wants D(G(z)) to be close to 1, i.e., it minimizes log(1 − D(G(z))). In other words, the generator G tries to make V(D, G) as small as possible, while the discriminator D tries to make it as large as possible.
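To make the two roles in Equation (1) concrete, the sketch below estimates V(D, G) from a batch of discriminator outputs by replacing each expectation with a sample mean. The function name and the sample scores are illustrative assumptions, not part of the method. A discriminator that scores real data near 1 and generated data near 0 pushes V towards its maximum of 0, while a fully confused discriminator (D = 0.5 everywhere) gives V = −log 4, the value at the equilibrium analysed in [25].

```python
import math


def gan_value(d_real, d_fake):
    """Sample-mean estimate of V(D, G) from Equation (1).

    d_real: discriminator outputs D(x) on real samples, each in (0, 1).
    d_fake: discriminator outputs D(G(z)) on generated samples, each in (0, 1).
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# A confident, correct discriminator drives V towards 0 (D maximizes V) ...
print(gan_value([0.99, 0.98], [0.01, 0.02]))
# ... while a fully confused discriminator gives -log 4.
print(gan_value([0.5, 0.5], [0.5, 0.5]), -math.log(4))
```

The generator only influences the second term, which is why, in the minimax game, it works to push D(G(z)) upwards.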

As GANs have developed, many variants have been proposed, such as DCGAN, CGAN, and CycleGAN. In this paper, we build on and improve CGAN.

2.2. Conditional Generative Adversarial Network

CGAN was proposed by Mirza et al. in 2014 [24] because the generation direction of the original GAN cannot be controlled and its training is unstable. For example, if the training dataset contains very different categories such as flowers, birds, and trees, the type of output cannot be controlled at test time. Researchers therefore began adding prior conditions to GAN to control what it generates. CGAN adds conditional information to both the generative and discriminative models; this information can be anything, such as class labels. In [24], the auxiliary information is denoted y. CGAN broadens the applications of GAN; for example, it can convert input text into images, with the output content controlled by the added conditions. The network structure and training procedure are the same as for GAN; only the inputs change: in the generator, the auxiliary information is input together with the noise, and in the discriminator, it is input together with the real data.

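The objective function of CGAN is essentially the same as that of GAN, except that the condition y is also supplied as input to both networks [24]:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x \mid y)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z \mid y))\right)\right] \quad (2)$$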
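In image-to-image colorization the condition y is naturally the input infrared image itself. A common way to feed "the auxiliary information together with the data" to the discriminator, used for example in pix2pix-style CGANs, is to concatenate y and the image along the channel axis. The sketch below shows that wiring for a 1-channel infrared condition and a 3-channel color image; treating this as the paper's exact input layout is an assumption, and `conditional_d_input` is a hypothetical helper.

```python
def conditional_d_input(condition, image):
    """Build the discriminator input for D(x | y) by channel-wise concatenation.

    condition: the infrared image y as a list of H x W channels (one channel here).
    image: a real or generated color image x as a list of H x W channels (RGB).
    """
    h, w = len(condition[0]), len(condition[0][0])
    for ch in image:
        if len(ch) != h or len(ch[0]) != w:
            raise ValueError("condition and image must share the same spatial size")
    return condition + image  # (1 + 3) channels for an IR / RGB pair


ir = [[[0.2, 0.4], [0.6, 0.8]]]                      # 1 x 2 x 2 infrared condition
rgb = [[[0.1, 0.2], [0.3, 0.4]] for _ in range(3)]   # 3 x 2 x 2 color image
d_in = conditional_d_input(ir, rgb)
print(len(d_in))  # 4 channels
```

Because the same condition is paired with both real and generated images, the discriminator judges whether a colorization is plausible for that specific infrared input, not merely whether it looks like some color image.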

The CGAN network structure is shown in Table 1.

The structure of the discriminator is shown in Table 2.

3. Improved Image Colorization Generator Network

Because current colorization techniques still suffer from color leakage and semantic errors, this paper improves the generator of the generative adversarial network by incorporating a multi-scale convolution module that aggregates features of various scales.

3.1. U-Net Generation Network

U-Net is a model originally proposed for semantic segmentation [26]. It is named for its U-shaped structure, which is symmetric: the left side performs downsampling for feature extraction, and the right side performs upsampling for feature reconstruction. Because infrared images usually contain weak edge information, U-Net is better able to preserve these details during colorization, resulting in more accurate images. Compared with VGG-16 and ResNet, U-Net has fewer parameters and trains faster. Moreover, some infrared colorization tasks involve a large imbalance in the number of pixels of different colors, and the U-Net structure handles such imbalanced data better.

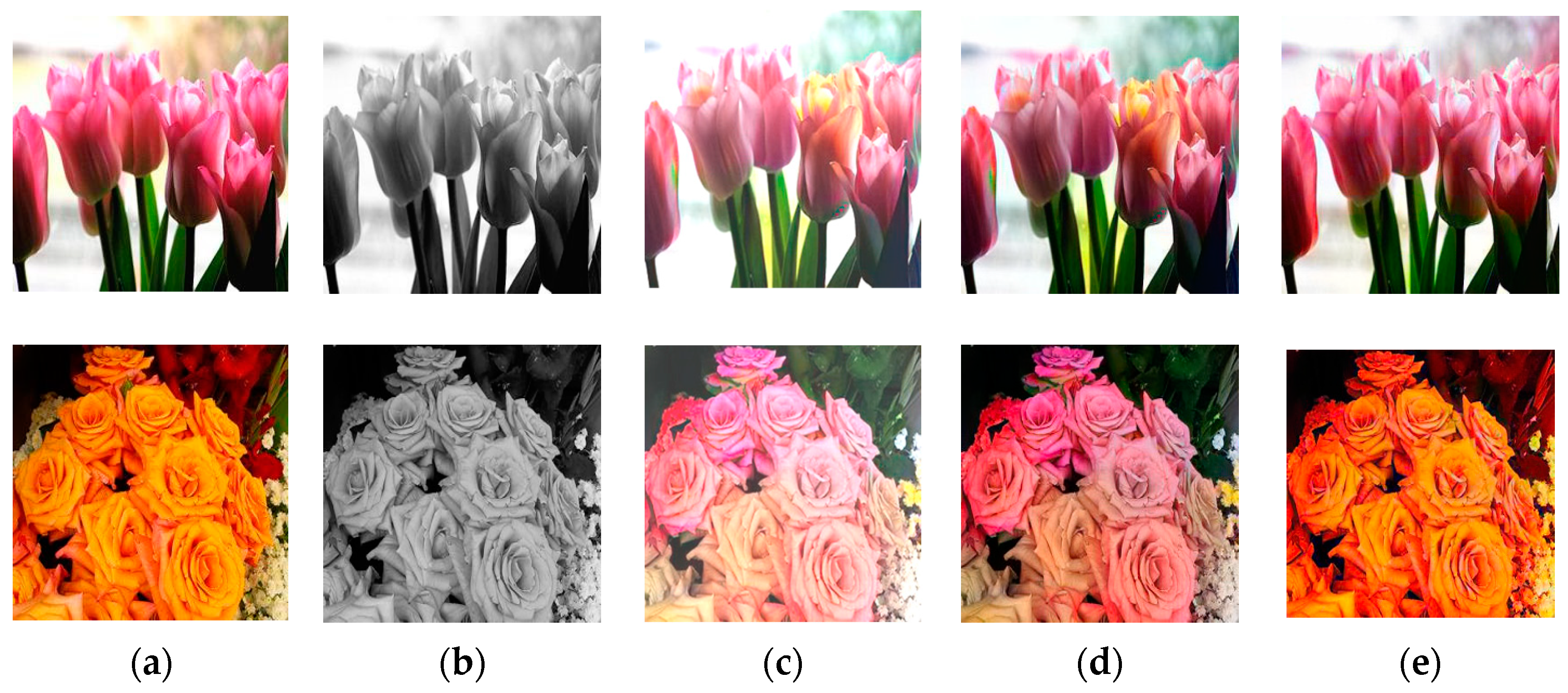

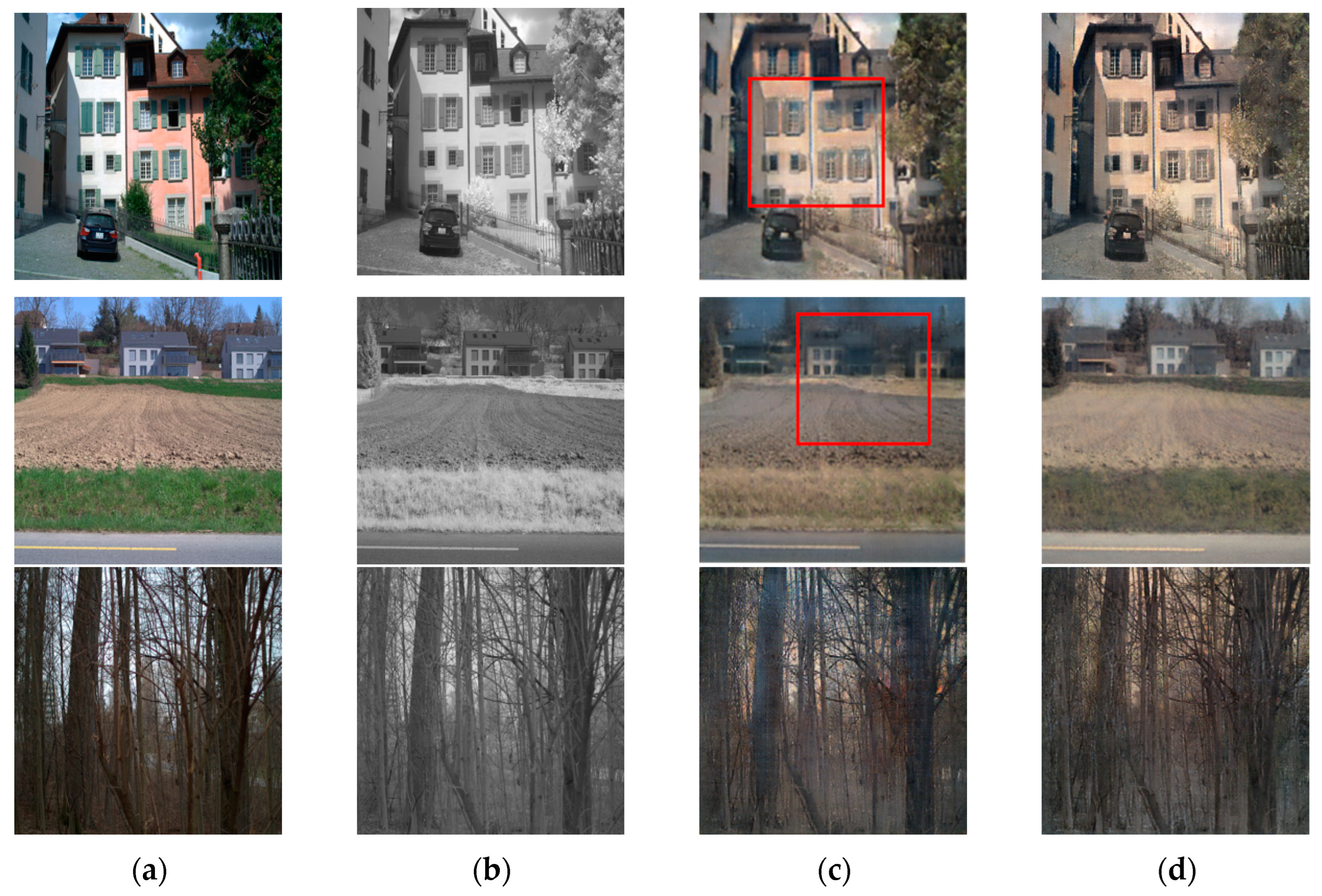

We compared U-Net with two other networks for colorizing near-infrared images. As shown in Figure 2, VGG-16 produces images with low saturation and color distortion. ResNet improves on the results of VGG-16 but still cannot fully restore the colors of the original image. The images colorized by U-Net are the closest to the real images and have clearer object edges. We therefore chose U-Net as the generator and improved it.

As the number of convolutions increases, each convolutional layer loses some information from the previous layer's feature map, which can significantly degrade the reconstructed image during upsampling. U-Net addresses this with skip connections that concatenate low-level and high-level feature maps, so the merged feature maps contain both kinds of information. For U-Net, information at every scale is important, because capturing multi-scale features improves feature extraction. The U-Net structure is shown in Figure 3.

3.2. Adding a Multi-Scale Feature Fusion Module to Improve the Generative Network U-Net

Existing infrared image colorization algorithms still suffer from color leakage and unclear semantics, leading to loss of detail, which is caused by insufficient feature extraction. A GAN consists of a generator G and a discriminator D, and because G generates the data directly, the features G extracts during downsampling directly affect the quality of the reconstructed image. To enhance the understanding of the image's semantic features, this paper therefore adds a multi-scale feature fusion module to the generator. The improved network expands the receptive field through atrous (dilated) convolution and uses group convolution so that the network learns to understand features even when it sees only a subset of the channels. Shift convolution permutes the channels, giving the network a deeper understanding of the features. Finally, the features are fused to improve the feature extraction ability of the whole generator.

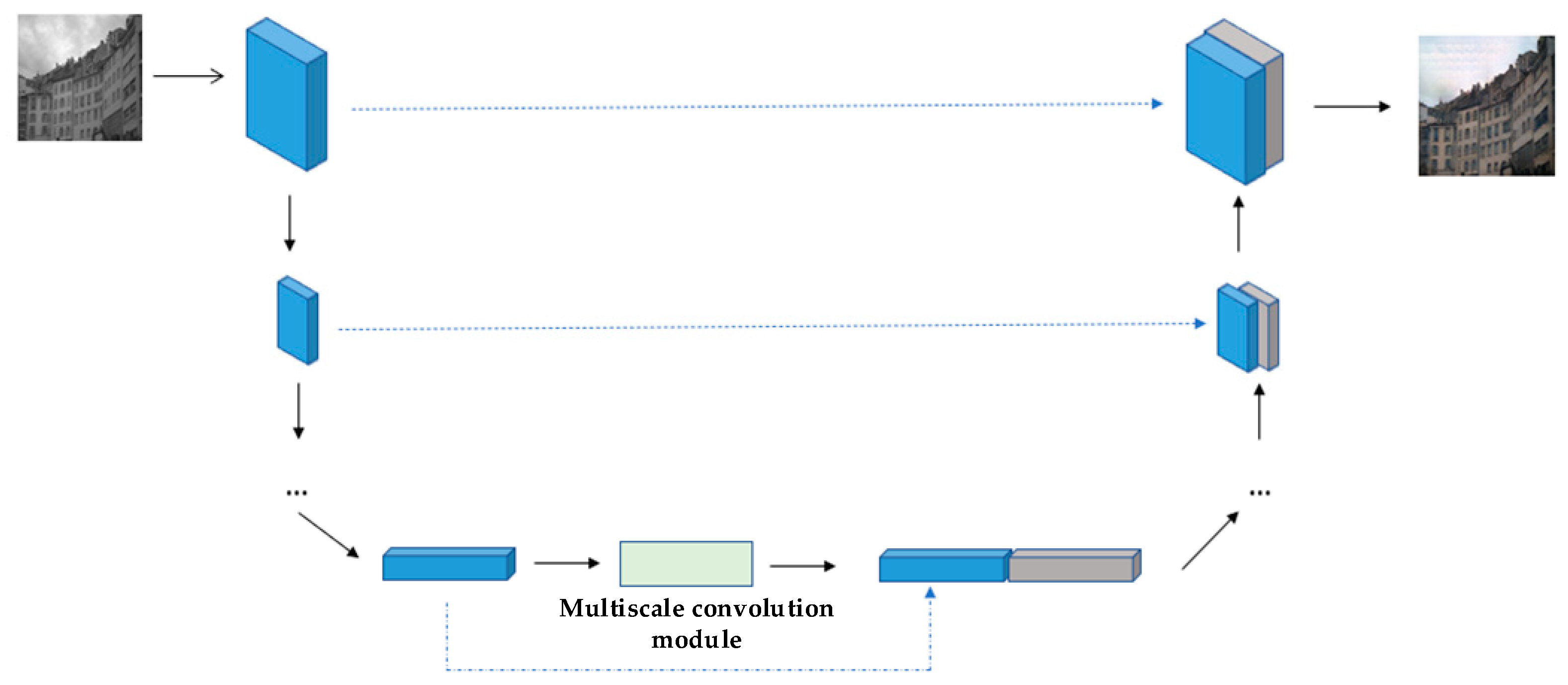

This subsection improves U-Net by adding multi-scale feature extraction to enhance the performance of the generator. The improved network structure is shown in Figure 4.

The original U-Net contains 8 convolutional layers; each convolution uses kernel size k = 4, stride s = 2, and padding p = 1, so each convolution halves the size of the feature map during downsampling. The number of channels increases from 1 at the input to 64, then doubles with each layer up to 512, after which it stays at 512. Upsampling likewise performs 8 transposed convolutions with k = 4, s = 2, and p = 1.
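The halving and doubling of the feature-map size follow from the standard output-size formulas for convolution and transposed convolution. The sketch below traces the encoder and decoder sizes under two assumptions not stated in the text: a 256 × 256 input (the usual pix2pix setting) and the channel schedule 64, 128, 256, 512, 512, 512, 512, 512 implied by "doubling up to 512, then held".

```python
def conv_out(n, k=4, s=2, p=1):
    """Spatial size after a convolution: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * p - k) // s + 1


def deconv_out(n, k=4, s=2, p=1):
    """Spatial size after a transposed convolution (no output padding): (n - 1)s - 2p + k."""
    return (n - 1) * s - 2 * p + k


# Assumed channel schedule: 1 -> 64, doubling, then held at 512.
CHANNELS = [64, 128, 256, 512, 512, 512, 512, 512]


def encoder_shapes(size=256):
    """(channels, height, width) after each of the 8 downsampling convolutions."""
    shapes = []
    for c in CHANNELS:
        size = conv_out(size)
        shapes.append((c, size, size))
    return shapes


def decoder_sizes(size=1, steps=8):
    """Spatial size after each of the 8 transposed convolutions."""
    sizes = []
    for _ in range(steps):
        size = deconv_out(size)
        sizes.append(size)
    return sizes


print(encoder_shapes())  # ends at (512, 1, 1): the bottleneck
print(decoder_sizes())   # ends at 256: back to the input resolution
```

With k = 4, s = 2, p = 1 each convolution maps n to n/2 and each transposed convolution maps n to 2n, so the decoder exactly mirrors the encoder, which is what lets the skip connections concatenate feature maps of matching size.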

In order to effectively prevent color leakage and deepen the network’s understanding of semantic features, the multi-scale feature extraction module is added after the last layer of U-Net downsampling to fuse the features.

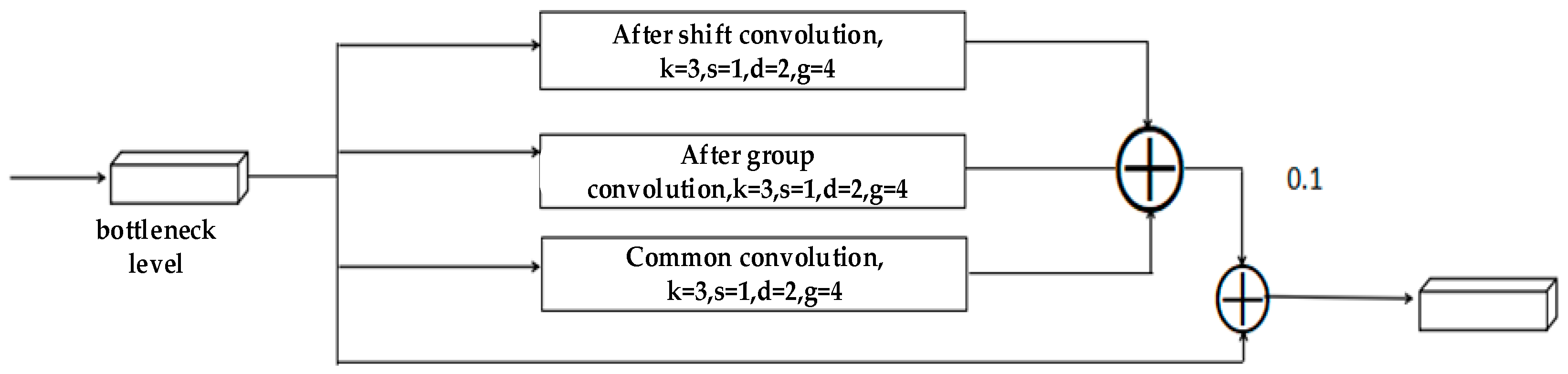

The multi-scale convolution module is shown in Figure 5.

The U-Net encoder consists of eight downsampling convolutional layers without pooling layers and uses the LeakyReLU activation function. The multi-scale convolution module is added after the U-Net bottleneck layer and contains three parallel convolution branches. The first branch uses shift convolution: it splits the feature map into two parts, A and B, swaps them to form the BA combination, and then applies a group convolution with g = 4 and a dilated convolution with d = 2, which enlarges the receptive field of the 3 × 3 kernel to 5 × 5. The second branch is a group convolution with four groups, each convolved separately, and the third branch is an ordinary convolution. The outputs of the three branches are summed, multiplied by a factor of 0.1, and added to the module input; this amounts to an additional scaled residual connection, and the module output is then passed to the transposed convolutions of the decoder.
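The data flow of the module (channel shift, three parallel branches, and the 0.1-scaled residual sum) can be sketched without any deep learning library. In the sketch below the three learned convolutions are replaced by hypothetical placeholder callables, so it only illustrates how the branches are combined, not how they are trained; a real implementation would use convolutions configured with four groups and dilation 2 as described above.

```python
def shift_channels(x):
    """Shift step: split the channels into halves A and B and reorder them as B, A."""
    half = len(x) // 2
    return x[half:] + x[:half]


def multi_scale_block(x, shift_branch, group_branch, plain_branch, scale=0.1):
    """Fuse three parallel branches with a scaled residual: y = x + scale * (b1 + b2 + b3).

    x is a list of channels, each a flat list of activations. The three branch
    callables stand in for the learned convolutions and must preserve the shape.
    """
    b1 = shift_branch(shift_channels(x))  # shift -> group conv (g = 4) + dilated conv (d = 2)
    b2 = group_branch(x)                  # group convolution with four groups
    b3 = plain_branch(x)                  # ordinary convolution
    return [
        [xi + scale * (p + q + r) for xi, p, q, r in zip(cx, c1, c2, c3)]
        for cx, c1, c2, c3 in zip(x, b1, b2, b3)
    ]


def identity(t):
    """Hypothetical placeholder for a trained convolution branch."""
    return t


x = [[1.0, 2.0], [3.0, 4.0]]  # two channels, two positions each
print(multi_scale_block(x, identity, identity, identity))
```

The small factor of 0.1 keeps the module close to an identity mapping at the start of training, so adding it after the bottleneck does not disrupt the features the decoder receives.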

Group convolution speeds up learning by reducing the number of parameters, and it processes different groups of channels independently. Whereas an ordinary convolution extracts a single kind of feature across all channels, group convolution divides the feature layers into several parts, extracts different features from each, and aggregates them. Atrous convolution enlarges the receptive field of the network without the resolution loss caused by pooling or strided convolution, and at the same time captures multi-scale contextual information, which enhances the network's feature extraction ability.
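The two claims above can be checked with simple arithmetic. A k × k convolution with g groups connects each output channel to only c_in/g input channels, so it has g times fewer weights; a k × k kernel with dilation d spans k + (k − 1)(d − 1) pixels per side. The 512-channel width used below is an assumption about the bottleneck, chosen only for illustration.

```python
def conv_params(k, c_in, c_out, groups=1, bias=False):
    """Weights in a k x k convolution; each group maps c_in/g inputs to c_out/g outputs."""
    assert c_in % groups == 0 and c_out % groups == 0
    weights = k * k * (c_in // groups) * c_out
    return weights + (c_out if bias else 0)


def effective_kernel(k, d):
    """Extent covered by a k x k kernel with dilation d: k + (k - 1)(d - 1)."""
    return k + (k - 1) * (d - 1)


print(conv_params(3, 512, 512))            # ordinary 3 x 3 convolution
print(conv_params(3, 512, 512, groups=4))  # same layer split into 4 groups
print(effective_kernel(3, 2))              # 3 x 3 kernel with d = 2 spans 5 x 5
```

The dilated kernel still has only 3 × 3 = 9 weights per channel pair, so it widens the context seen by the first branch at no extra parameter cost.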

4. Improved Image Colorization Discriminator Network

Training a generative adversarial network is a game between the generator and the discriminator. Improving the discriminator's ability to distinguish images also drives the generator to improve, so this section modifies the discriminator.

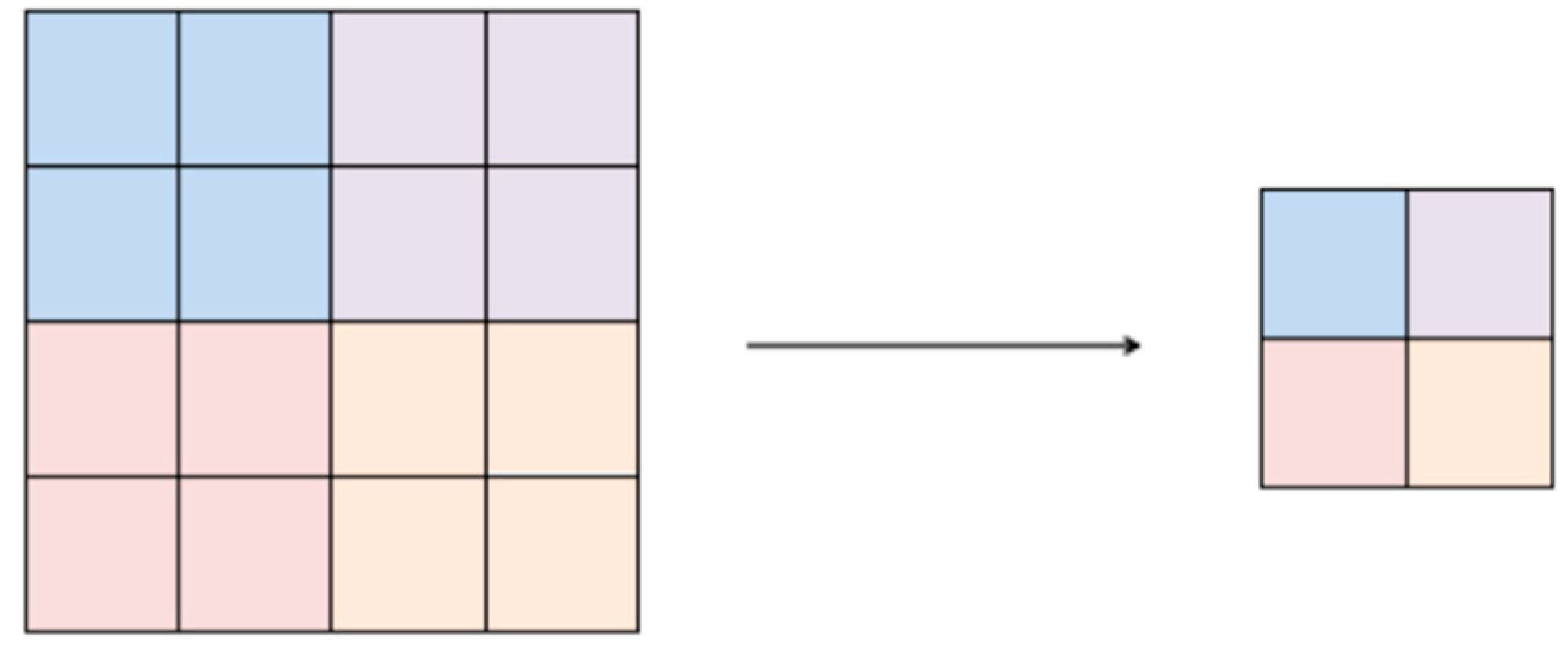

4.1. Discriminator Network PatchGAN

PatchGAN is a commonly used GAN discriminator. In the original GAN, the discriminator outputs a single score, computed from the entire image, to judge whether the generated image is real or fake. PatchGAN is fully convolutional: instead of a single value, it convolves the input image layer by layer and maps it to an N × N matrix. This matrix replaces the single score of the original GAN with separate evaluations of N × N regions, which is what "Patch" refers to. Whereas the original GAN evaluates the image with a single value, PatchGAN produces many values, one per region, and therefore attends to more detail. It was demonstrated in [27] that N can be much smaller than the size of the whole image while still giving good results; a smaller N also reduces the number of parameters, and the discriminator can be applied to images of arbitrary size. The patch-scoring scheme of PatchGAN is shown in Figure 6.
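Two numbers characterize a PatchGAN: the size of the output score map and the size of the input patch that each score "sees" (its receptive field). The sketch below computes both for a hypothetical five-layer configuration modelled on the 70 × 70 pix2pix PatchGAN (4 × 4 kernels, strides 2, 2, 2, 1, 1, padding 1) and a 256 × 256 input; these layer settings are assumptions, not values given in this paper.

```python
# Hypothetical (kernel, stride, padding) per layer, modelled on the 70 x 70 pix2pix PatchGAN.
LAYERS = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 1), (4, 1, 1)]


def receptive_field(layers):
    """Input patch size seen by one output element, walking backwards: r = (r - 1)s + k."""
    r = 1
    for k, s, _ in reversed(layers):
        r = (r - 1) * s + k
    return r


def output_size(n, layers):
    """Side length of the score map for an n x n input."""
    for k, s, p in layers:
        n = (n + 2 * p - k) // s + 1
    return n


print(receptive_field(LAYERS))   # 70: each score judges a 70 x 70 patch
print(output_size(256, LAYERS))  # 30: a 30 x 30 matrix of patch scores
```

Because the patches overlap and every layer is convolutional, the same weights score every region, and a larger input simply yields a larger score map.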

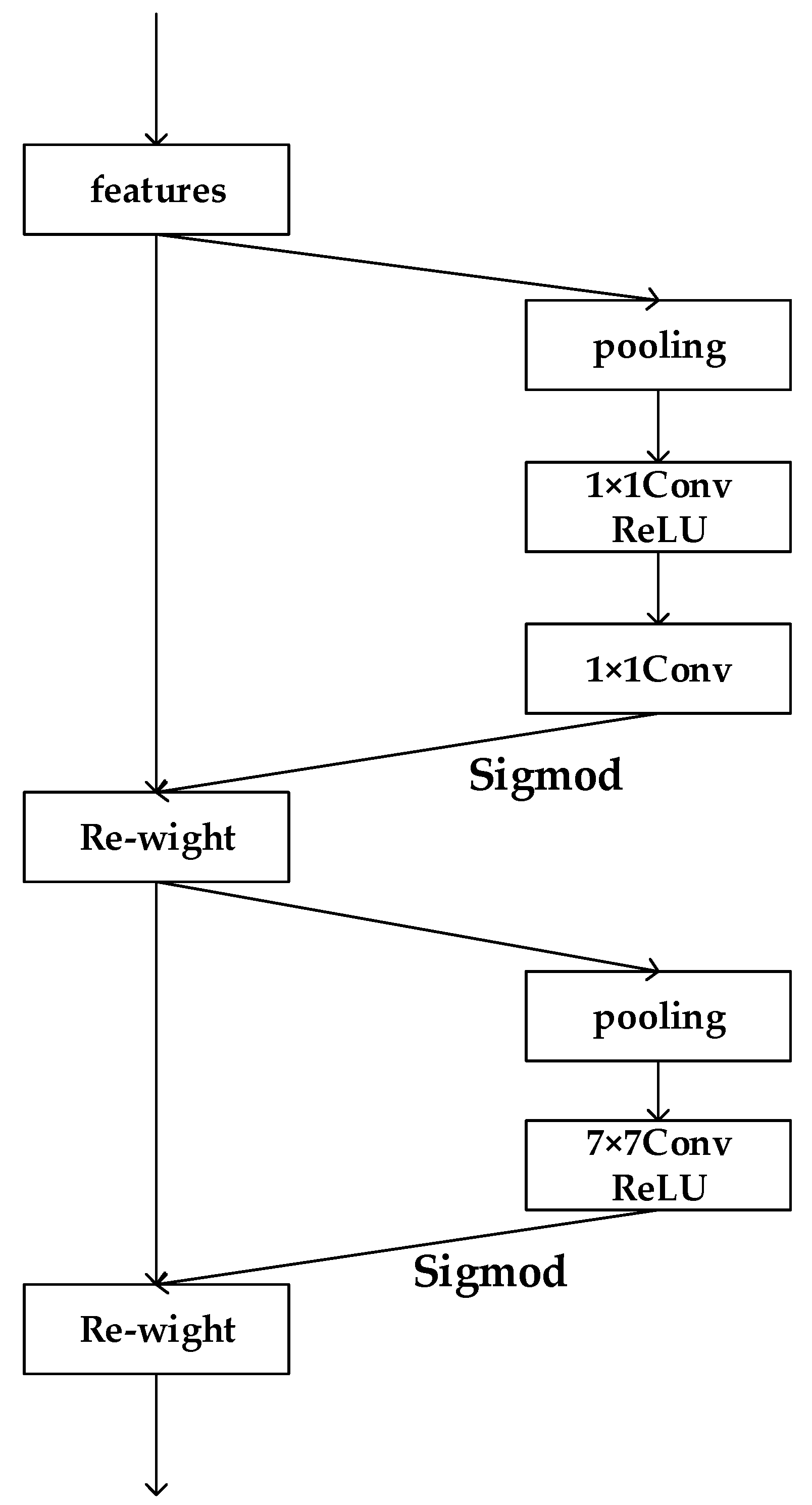

4.2. Attention Mechanism

The Convolutional Block Attention Module (CBAM) is a simple and effective attention module [28] consisting of two parts: channel attention and spatial attention. CBAM computes attention weights along these two dimensions and uses them to reweight the input features, so that the feature map emphasizes important features. Each channel corresponds to a feature, and channel attention lets the network focus on meaningful features. Not all regions of a feature map contain useful information, and different regions differ in importance; spatial attention therefore lets the network find the important regions to focus on.

The structure of channel attention is shown in Figure 7.

CBAM operates in two steps: it first computes the channel attention Mc and then the spatial attention Ms. As shown in Figure 8, F denotes the input feature map. F is processed by max pooling and average pooling; both pooled descriptors pass through a shared fully connected layer and are summed, and the sigmoid function then yields the channel attention Mc. Multiplying F by Mc gives a refined feature map F′. F′ is again processed by max pooling and average pooling, this time along the channel axis; the pooled maps are concatenated, and a convolution followed by a sigmoid yields the spatial attention Ms. Finally, Ms is multiplied with F′ to obtain the output features of the attention module. The whole process can be formulated as follows:

$$F' = M_c(F) \otimes F, \qquad F'' = M_s(F') \otimes F'$$

$$M_c(F) = \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big), \qquad M_s(F') = \sigma\big(f^{7 \times 7}([\mathrm{AvgPool}(F'); \mathrm{MaxPool}(F')])\big)$$

where ⊗ denotes element-wise multiplication (broadcast over the missing dimensions), σ is the sigmoid function, and f^{7×7} is a convolution with a 7 × 7 kernel.
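The two CBAM steps can be written out directly on a small feature map stored as nested lists (C × H × W). The sketch below is a plain-Python illustration of the formulas above, not the trained module: the MLP weights `w1`, `w2` and the 2 × 7 × 7 spatial kernel are supplied by the caller, and zero padding is assumed so that the spatial map keeps the H × W size.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def channel_attention(feat, w1, w2):
    """Mc = sigmoid(MLP(AvgPool(F)) + MLP(MaxPool(F))), one weight per channel.

    feat is C x H x W; w1 (hidden x C) and w2 (C x hidden) are the shared MLP,
    i.e. the two 1 x 1 convolutions with a ReLU in between.
    """
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feat]
    mx = [max(map(max, ch)) for ch in feat]

    def mlp(v):
        hidden = [max(0.0, sum(w * x for w, x in zip(row, v))) for row in w1]  # ReLU
        return [sum(w * h for w, h in zip(row, hidden)) for row in w2]

    return [sigmoid(a + m) for a, m in zip(mlp(avg), mlp(mx))]


def spatial_attention(feat, kernel):
    """Ms = sigmoid(conv([AvgPool(F); MaxPool(F)])), pooling along the channel axis.

    kernel is 2 x k x k (one k x k slice per pooled map); zero padding keeps H x W.
    """
    c, h, w = len(feat), len(feat[0]), len(feat[0][0])
    avg = [[sum(feat[ch][i][j] for ch in range(c)) / c for j in range(w)] for i in range(h)]
    mx = [[max(feat[ch][i][j] for ch in range(c)) for j in range(w)] for i in range(h)]
    pooled, k = (avg, mx), len(kernel[0])
    pad = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0.0
            for p in range(2):
                for u in range(k):
                    for v in range(k):
                        y, x = i + u - pad, j + v - pad
                        if 0 <= y < h and 0 <= x < w:
                            s += kernel[p][u][v] * pooled[p][y][x]
            out[i][j] = sigmoid(s)
    return out


def cbam(feat, w1, w2, kernel):
    """F' = Mc(F) * F, then F'' = Ms(F') * F' (element-wise, broadcast)."""
    mc = channel_attention(feat, w1, w2)
    f1 = [[[mc[c] * val for val in row] for row in ch] for c, ch in enumerate(feat)]
    ms = spatial_attention(f1, kernel)
    return [[[ms[i][j] * val for j, val in enumerate(row)] for i, row in enumerate(ch)]
            for ch in f1]


feat = [[[1.0, 2.0], [3.0, 4.0]], [[0.5, 0.5], [0.5, 0.5]]]  # C = 2, H = W = 2
zero_kernel = [[[0.0] * 7 for _ in range(7)] for _ in range(2)]
out = cbam(feat, w1=[[0.0, 0.0]], w2=[[0.0], [0.0]], kernel=zero_kernel)
```

With all weights at zero, every attention value is sigmoid(0) = 0.5, so the output is simply 0.25 times the input; after training, the weights make Mc and Ms depart from 0.5 so that informative channels and regions are scaled up relative to the rest.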

4.3. Improved Discriminative Network PatchGAN with Added Attention Module

A GAN consists of two modules, the generator and the discriminator. In this paper, we first improved the generator by adding a multi-scale feature fusion module to the U-Net generator, which improves its feature extraction ability and the colorization of infrared images. In a GAN, the generator and the discriminator improve each other through adversarial training: when the generator becomes stronger, the discriminator must also improve its discriminative ability so as not to be fooled.

In this section, we therefore improve the discriminator by adding an attention module to the PatchGAN discriminator network. Features entering the module pass first through channel attention and then through spatial attention. The channel attention weights are multiplied with the input features to obtain a new feature map that emphasizes informative channels and suppresses unimportant ones, filtering the feature layers from a channel perspective. The spatial attention module then selects the important regions of the feature map. The attention module lets PatchGAN focus on useful features and ignore unnecessary ones, and it also improves the network's learning efficiency.

PatchGAN consists of five convolutional layers with LeakyReLU activations. The attention module, consisting of channel attention and spatial attention in series, is inserted after the fourth convolution. The structure of the improved network is shown in Figure 9. The channel attention module first applies average pooling and max pooling, followed by a 1 × 1 convolution with a ReLU activation and then another 1 × 1 convolution with a sigmoid activation. The result is fed to the spatial attention module, which applies a convolution with a 7 × 7 kernel followed by a sigmoid activation, as shown in Figure 10.
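The attention module adds very few weights compared with the convolution it follows, which is consistent with the claim that it improves efficiency without enlarging the discriminator much. The sketch below counts weights (biases ignored) under assumptions not stated in the paper: the fourth PatchGAN convolution maps 256 to 512 channels with a 4 × 4 kernel, as in pix2pix, and the channel-attention MLP uses the reduction ratio r = 16 suggested in the CBAM paper.

```python
def conv_weights(k, c_in, c_out):
    """Weights of a k x k convolution without bias."""
    return k * k * c_in * c_out


def cbam_weights(channels, reduction=16, spatial_kernel=7):
    """Channel branch: 1x1 conv C -> C/r and 1x1 conv C/r -> C; spatial branch: k x k conv 2 -> 1."""
    hidden = channels // reduction
    channel_branch = conv_weights(1, channels, hidden) + conv_weights(1, hidden, channels)
    spatial_branch = conv_weights(spatial_kernel, 2, 1)
    return channel_branch + spatial_branch


conv4 = conv_weights(4, 256, 512)  # assumed fourth PatchGAN convolution (256 -> 512)
attn = cbam_weights(512)
print(conv4, attn, f"{attn / conv4:.1%}")  # 2097152 32866 1.6%
```

Under these assumptions the attention module adds less than 2% of the weights of a single discriminator layer, so its cost is dominated by the pooling and element-wise products rather than by extra parameters.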

Finally, both the generator and the discriminator are improved to form the new network. The improvement has two parts: the first adds a multi-scale feature fusion module to the generator to improve the network's semantic understanding, and the second adds an attention mechanism to the discriminator so that the network focuses on useful features and discriminates better. Both networks gain feature extraction ability, which is intended to alleviate semantic ambiguity and color leakage in infrared image colorization; the method is then validated experimentally on the NIR image dataset.

6. Discussion

In this paper, we proposed a method for infrared image colorization that uses a CGAN network with multi-scale feature fusion in the generator and an attention mechanism in the discriminator. Prior work has also used multi-scale feature modules and attention mechanisms to improve networks [22,23,31]. For example, Ref. [31] proposed a multi-scale residual attention network (MsRAN); Ref. [22] integrated multi-scale attention mechanisms into the generator and discriminator of a GAN to fuse infrared and visible images (AttentionFGAN); and Ref. [23] proposed a deep network that combines a feature learning module and a fusion learning mechanism for infrared and visible image fusion.

The main direction for improving infrared image colorization is to focus the network's attention on the most important areas of the image and to retain more texture information. We also used multi-scale feature modules and attention mechanism modules, but, unlike previous studies, we chose to improve the CGAN network. CGAN is better suited than GAN to tasks that require generating images under specified conditions. Infrared image colorization requires generating color images, and images produced by an unconditional GAN generator may have unnatural colors, blur, and distortion. The CGAN generator instead produces a color image corresponding to the input infrared image, and using the infrared image as conditional information helps the generator produce more accurate colors, which mitigates these issues. We added the multi-scale feature module to the generator and the attention mechanism to the discriminator, rather than adding both modules to the same network. Our goal was to exploit the adversarial game of GANs, letting the generator and discriminator, each improved in a different way, compete with and promote each other.

We selected a dataset containing many images with fine texture details, such as buildings with tightly arranged windows and dense trees. However, because we focused on making object edges sharper and clearer to reduce color leakage and edge blur, the color of the sky in the background may deviate in some images. In future work, we will consider pre-processing the images before inputting them into the CGAN network to improve image quality and color restoration. Post-processing such as denoising, smoothing, and contrast enhancement could also be applied to the generated color images to improve their quality and realism. The quality and quantity of the dataset are also crucial for effective infrared image colorization, so future research could collect more high-quality infrared image datasets and conduct more in-depth studies based on them.

7. Conclusions

In our study of infrared image colorization, we identified problems of existing networks such as color leakage and semantic ambiguity. This paper addresses these problems by improving both the generator and the discriminator. Specifically, we added a multi-scale feature fusion module after the bottleneck layer of the U-Net generator, which enhances the network's understanding of features and improves its semantic recognition. For the discriminator, we added an attention mechanism module that lets the network focus on useful features and improves its discrimination ability. We also paid attention to dataset selection, choosing scene datasets that are most likely to occur in practical infrared applications and near-infrared image datasets that retain more detailed features for testing. In comparative experiments, our network with attention and multi-scale feature fusion achieved 5% and 13% improvements in PSNR and SSIM, respectively, over the Pix2pixCGAN network, indicating that our improvements effectively alleviate color leakage and semantic ambiguity in the original network. This study focuses solely on infrared images; future research could consider infrared videos, which require greater attention to continuity between frames to avoid color jumping.
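For reference, PSNR compares a colorized image with its ground truth through the mean squared error, PSNR = 10 log10(MAX² / MSE), with MAX = 255 for 8-bit images. The sketch below assumes the images are given as flat, equally long sequences of pixel values; it is a generic definition, not the exact evaluation script used in the experiments.

```python
import math


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences."""
    if len(reference) != len(test):
        raise ValueError("inputs must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# An off-by-one error on every pixel gives MSE = 1, i.e. PSNR = 20 log10(255).
print(psnr([0, 10, 200], [1, 11, 201]))
```

Because PSNR is logarithmic in the MSE, a relative gain in PSNR corresponds to a multiplicative reduction in pixel error, whereas SSIM additionally accounts for local structure, luminance, and contrast.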