CNN Attention Enhanced ViT Network for Occluded Person Re-Identification

Abstract

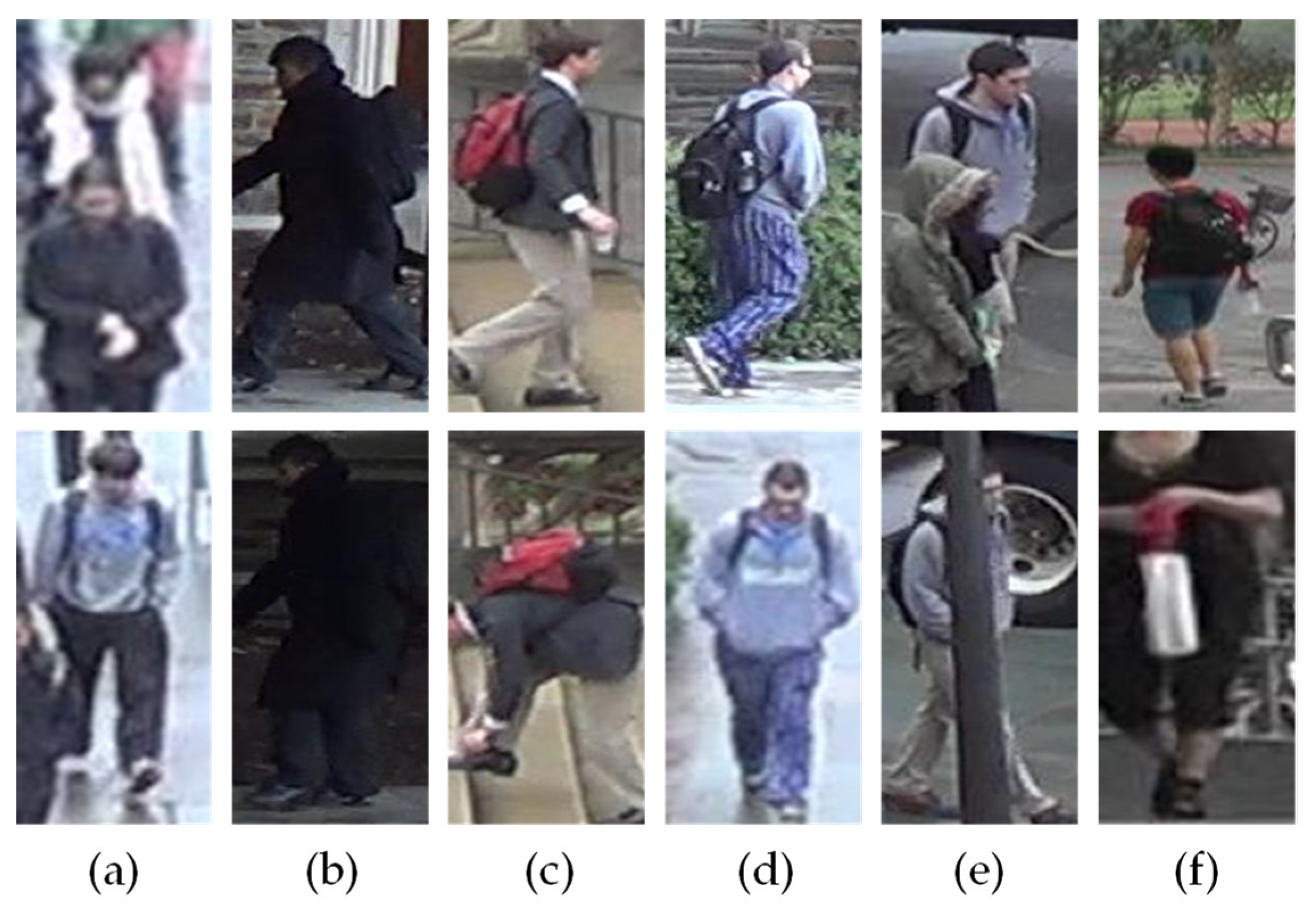

1. Introduction

- We propose a ViT-based person ReID framework, AET-Net, to address the occlusion problem.

- A Spatial Attention Enhanced Module (SAEM) is designed to strengthen the ViT patch-embedding structure so that the model attends more strongly to important local features of the image.

- A Multi-Feature Training Module (MFTM) optimizes the model with multiple losses, which also keeps the model from becoming over-reliant on the attention features.

- AET-Net achieves the highest mAP among the compared methods on the occluded dataset (Occluded-Duke) and competitive performance on the non-occluded datasets (Market-1501 and DukeMTMC-ReID).

2. Related Work

2.1. Occluded Person ReID Based on Deep Learning

2.1.1. Partial Models

2.1.2. Attention Models

2.2. Transformer in Computer Vision

3. Method

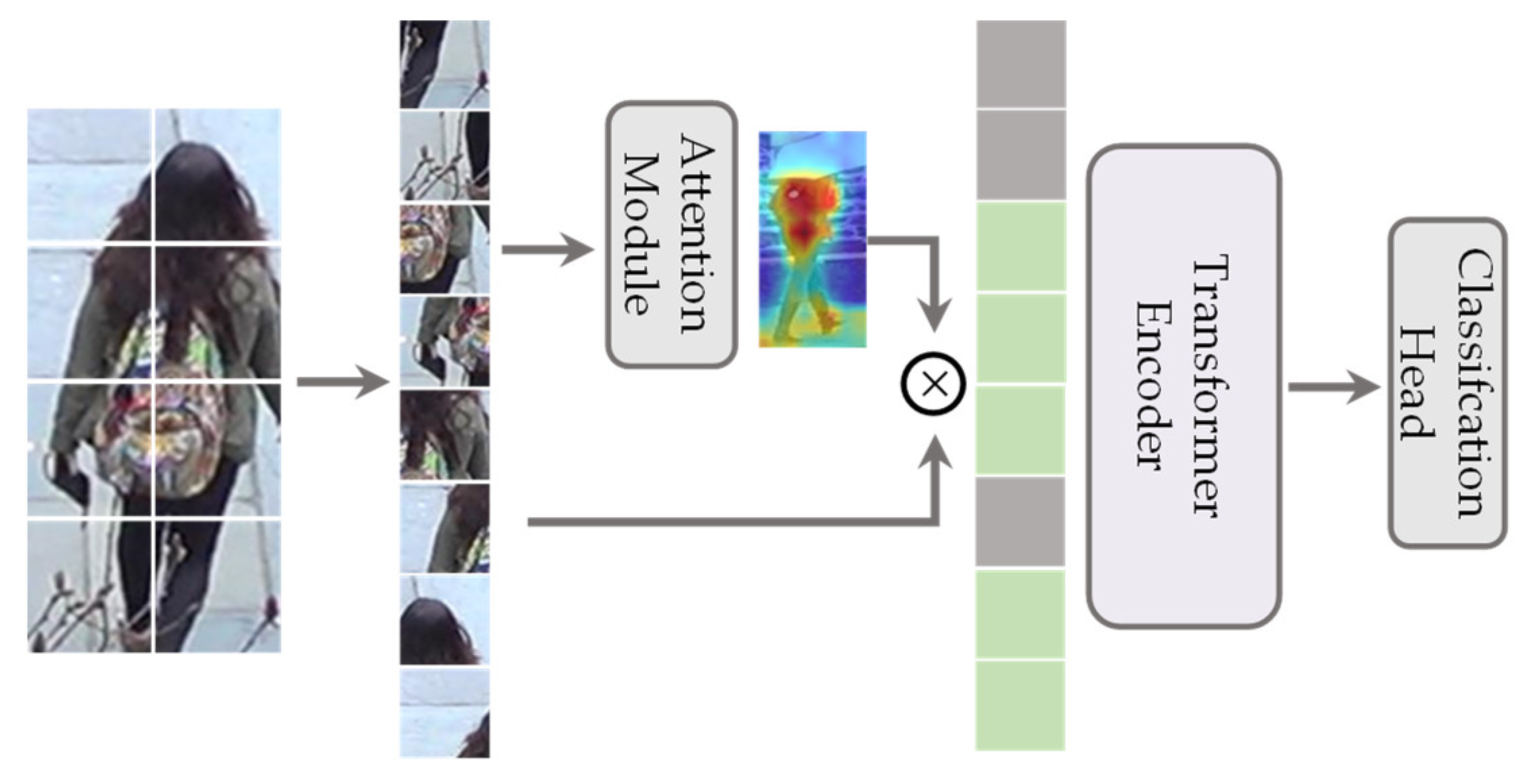

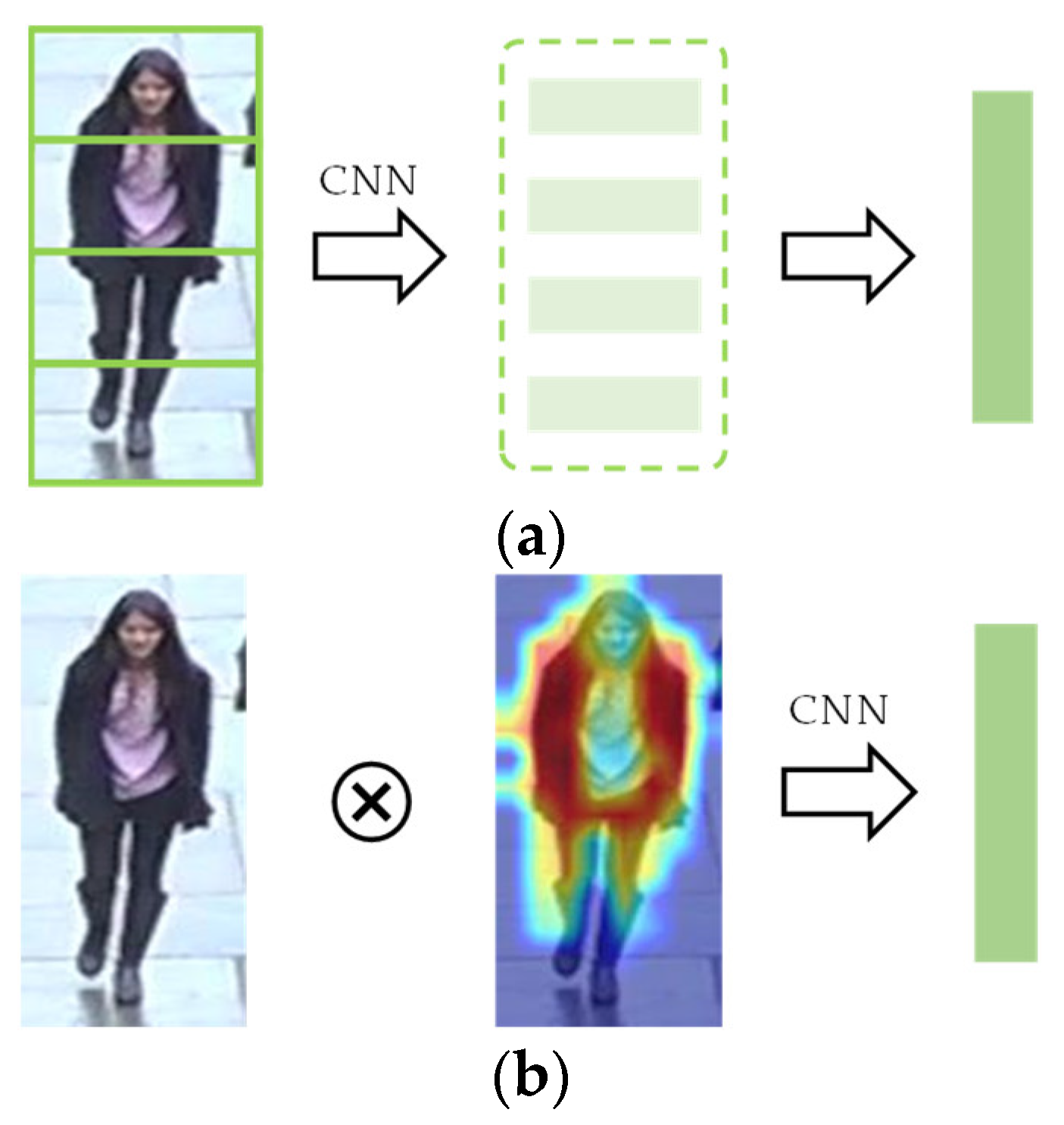

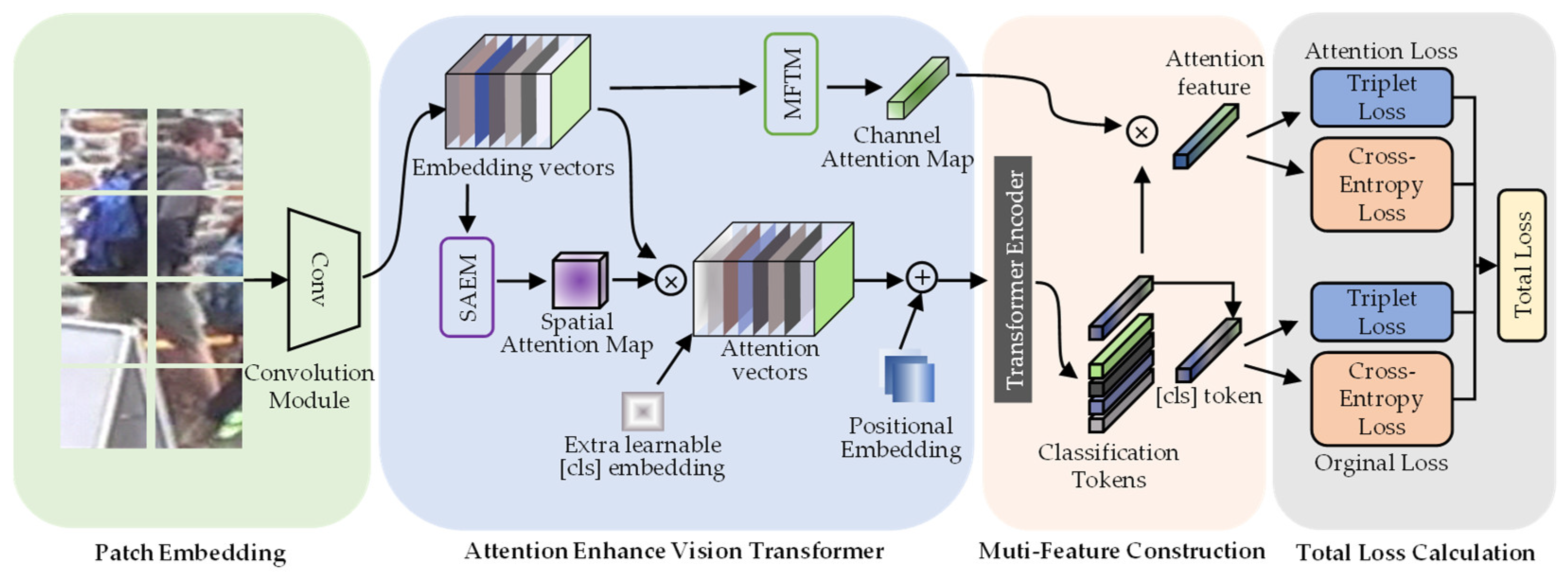

3.1. Feature Extraction Backbone

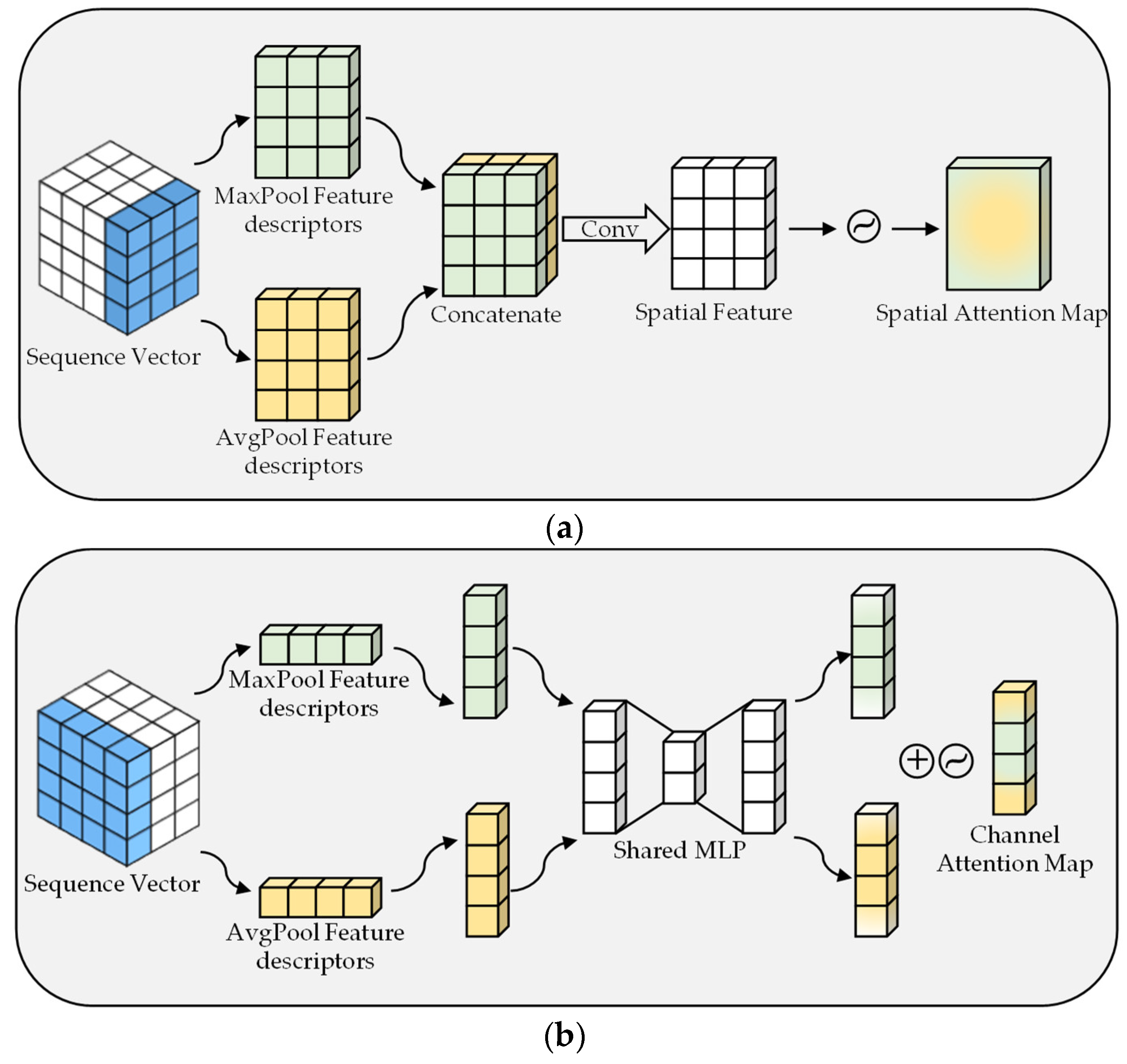
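To make the patch-embedding step that SAEM builds on concrete, the following is a minimal NumPy sketch of standard ViT [39] tokenization: the image is cut into non-overlapping P × P patches, each patch is flattened and linearly projected, a class token is prepended, and position embeddings are added. The function names, the 256 × 128 input size, P = 16 and D = 768 are illustrative assumptions (common ViT-Base settings for person ReID), not values stated in this section, and the random arrays stand in for learned parameters.

```python
import numpy as np


def patch_embed(image, patch_size, weight, bias):
    """Split an (H, W, C) image into non-overlapping patches and project each one.

    weight: (patch_size * patch_size * C, D) projection matrix.
    bias:   (D,) projection bias.
    Returns an (N, D) array with N = (H // patch_size) * (W // patch_size).
    """
    h, w, c = image.shape
    if h % patch_size or w % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")
    gh, gw = h // patch_size, w // patch_size
    # (H, W, C) -> (gh, P, gw, P, C) -> (gh, gw, P, P, C) -> (gh * gw, P * P * C)
    patches = image.reshape(gh, patch_size, gw, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4).reshape(gh * gw, -1)
    return patches @ weight + bias


def vit_tokens(image, patch_size, weight, bias, cls_token, pos_embed):
    """Prepend the class token to the patch tokens and add position embeddings."""
    tokens = patch_embed(image, patch_size, weight, bias)
    tokens = np.vstack([cls_token[None, :], tokens])
    return tokens + pos_embed


# Illustrative sizes (assumed): 256 x 128 RGB input, 16 x 16 patches, D = 768.
rng = np.random.default_rng(0)
H, W, C, P, D = 256, 128, 3, 16, 768
img = rng.standard_normal((H, W, C))
proj_w = rng.standard_normal((P * P * C, D)) * 0.02   # stands in for learned weights
proj_b = np.zeros(D)
cls_tok = np.zeros(D)
num_patches = (H // P) * (W // P)
pos = rng.standard_normal((num_patches + 1, D)) * 0.02
tokens = vit_tokens(img, P, proj_w, proj_b, cls_tok, pos)
print(tokens.shape)  # (129, 768): 1 class token + 16 * 8 patch tokens
```

The reshape/transpose is equivalent to a convolution whose kernel size and stride both equal P, which is how patch embedding is usually implemented in deep-learning frameworks.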

3.2. Spatial Attention Enhancement Module
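One plausible way to add CNN-style spatial attention to the patch embedding is a CBAM-style [46] spatial attention map computed over the grid of patch features. The sketch below assumes that design (channel-wise average and max pooling, a k × k convolution, then a sigmoid gate); the function name `spatial_attention`, the 7 × 7 kernel, and applying it to the D × (H/P) × (W/P) token grid are assumptions for illustration, not the exact SAEM structure.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def spatial_attention(feat, kernel, bias=0.0):
    """CBAM-style spatial attention on a (C, H, W) feature map.

    kernel: (2, k, k) weights with k odd, mixing the channel-average map and
    the channel-max map into one (H, W) attention map ("same" padding).
    Returns the reweighted feature map and the attention map.
    """
    _, h, w = feat.shape
    k = kernel.shape[-1]
    pad = k // 2
    # Squeeze the channel axis two ways -> (2, H, W)
    pooled = np.stack([feat.mean(axis=0), feat.max(axis=0)])
    padded = np.pad(pooled, ((0, 0), (pad, pad), (pad, pad)))
    logits = np.full((h, w), bias, dtype=float)
    for dy in range(k):
        for dx in range(k):
            # Cross-correlation, which is what CNN "convolution" layers compute
            logits += np.einsum("c,chw->hw", kernel[:, dy, dx],
                                padded[:, dy:dy + h, dx:dx + w])
    attn = sigmoid(logits)                  # one weight in (0, 1) per location
    return feat * attn[None, :, :], attn


# Assumed placement: patch tokens (without the class token) reshaped to a
# D x (H/P) x (W/P) grid, e.g. 768 x 16 x 8 for a 256 x 128 input with P = 16.
rng = np.random.default_rng(0)
grid = rng.standard_normal((768, 16, 8))
out, attn = spatial_attention(grid, rng.standard_normal((2, 7, 7)) * 0.1)
print(out.shape, attn.shape)  # (768, 16, 8) (16, 8)
```

After gating, the grid can be flattened back into (H/P · W/P) tokens of dimension D. Sharing one attention weight across all D channels at each location is what makes this a spatial, rather than channel, attention.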

3.3. Multi-Feature Training Module

3.4. Loss Functions

3.4.1. Softmax Cross-Entropy Loss
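The ID loss treats each training identity as a class: $\mathcal{L}_{ID} = -\frac{1}{B}\sum_{i=1}^{B}\log\frac{\exp(z_{i,y_i})}{\sum_{k=1}^{K}\exp(z_{i,k})}$ for a batch of B logit vectors $z_i$ over K identities with labels $y_i$. Below is a minimal NumPy sketch of that formula using the log-sum-exp shift for numerical stability. The function name is hypothetical, and the sketch omits label smoothing, which baselines such as BoT [52] often add but which this section does not confirm.

```python
import numpy as np


def softmax_cross_entropy(logits, labels):
    """Mean softmax cross-entropy (ID loss) over a mini-batch.

    logits: (B, K) identity scores from the classifier head.
    labels: (B,) integer identity indices in [0, K).
    """
    # Subtracting the row maximum leaves the softmax unchanged but avoids overflow.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()


# With all-zero logits every identity gets probability 1/K, so the loss is log K.
print(softmax_cross_entropy(np.zeros((4, 751)), np.array([0, 5, 9, 750])))
```

For K = 751 (the number of Market-1501 training identities) the uniform-prediction loss is log 751, about 6.62, which is a useful sanity check for the value at the start of training.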

3.4.2. Triplet Loss
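Triplet loss pulls features of the same identity together and pushes different identities apart by at least a margin m: $\mathcal{L}_{tri} = \max(d(a,p) - d(a,n) + m,\ 0)$ for an anchor a, positive p and negative n. The sketch below assumes the batch-hard mining variant common in ReID (hardest positive and hardest negative per anchor within a P × K mini-batch), Euclidean distance, and m = 0.3; the mining strategy, distance and margin are assumptions, since this section does not specify them.

```python
import numpy as np


def batch_hard_triplet_loss(features, labels, margin=0.3):
    """Batch-hard triplet loss with Euclidean distances.

    For every anchor, take its farthest same-identity sample (hardest positive)
    and its closest different-identity sample (hardest negative) in the batch,
    then apply the hinge max(d_ap - d_an + margin, 0).
    Assumes each identity appears at least twice and at least two identities
    are present (P x K sampling).
    """
    sq = (features ** 2).sum(axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * features @ features.T
    dist = np.sqrt(np.clip(d2, 0.0, None))        # clip tiny negatives from rounding
    same = labels[:, None] == labels[None, :]
    hardest_pos = np.where(same, dist, -np.inf).max(axis=1)
    hardest_neg = np.where(same, np.inf, dist).min(axis=1)
    return np.maximum(hardest_pos - hardest_neg + margin, 0.0).mean()


feats = np.array([[0.0, 0.0], [0.0, 1.0], [10.0, 0.0], [10.0, 1.0]])
ids = np.array([0, 0, 1, 1])
print(batch_hard_triplet_loss(feats, ids))              # well separated -> 0.0
print(batch_hard_triplet_loss(np.zeros((4, 2)), ids))   # collapsed features -> margin
```

The collapsed case shows why the loss complements the ID loss: when all features coincide, every anchor violates the margin, so the gradient pushes identities apart in the embedding space itself rather than only in classifier space.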

3.4.3. Total Loss
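As a hedged illustration only: if, as the MFTM description suggests, several feature vectors $f_1, \dots, f_M$ (for example the global class-token feature and the attention-enhanced feature) are each supervised by both the softmax cross-entropy loss $\mathcal{L}_{ID}$ and the triplet loss $\mathcal{L}_{tri}$ with equal weights, the total objective would take the form below. The symbols $f_j$ and $M$ and the equal weighting are assumptions, not the paper's stated equation.

$$
\mathcal{L}_{total} = \sum_{j=1}^{M} \left( \mathcal{L}_{ID}(f_j) + \mathcal{L}_{tri}(f_j) \right)
$$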

4. Results and Discussion

4.1. Datasets and Evaluation Protocols

4.1.1. Datasets

4.1.2. Evaluation Protocols
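Results are reported as mAP and Rank-1 accuracy. The following minimal NumPy sketch computes both metrics under the usual Market-1501/DukeMTMC protocol, in which gallery images of the query identity taken by the query's own camera are excluded before ranking. The function name and array layout are assumptions, and the sketch ignores the extra junk/distractor labels that some official evaluation scripts also filter.

```python
import numpy as np


def evaluate(dist, q_pids, g_pids, q_camids, g_camids):
    """Rank-1 accuracy and mAP for a (Q, G) query-gallery distance matrix.

    Smaller distance means more similar. For each query, gallery images with
    the same identity AND the same camera are removed before ranking, and
    queries left without any true match are skipped.
    """
    rank1_hits, aps = [], []
    for i in range(dist.shape[0]):
        order = np.argsort(dist[i], kind="stable")          # best match first
        same_id = g_pids[order] == q_pids[i]
        same_cam = g_camids[order] == q_camids[i]
        matches = same_id[~(same_id & same_cam)]             # drop same-camera matches
        if not matches.any():
            continue
        rank1_hits.append(matches[0])                        # is the top-1 correct?
        hit_ranks = np.flatnonzero(matches) + 1              # 1-based ranks of true matches
        precisions = np.arange(1, len(hit_ranks) + 1) / hit_ranks
        aps.append(precisions.mean())                        # average precision
    return float(np.mean(rank1_hits)), float(np.mean(aps))


# Toy example: 2 queries, 4 gallery images.
dist = np.array([[0.1, 0.2, 0.05, 0.3],
                 [0.4, 0.1, 0.2, 0.3]])
rank1, mAP = evaluate(dist,
                      q_pids=np.array([1, 2]), g_pids=np.array([1, 2, 1, 3]),
                      q_camids=np.array([0, 0]), g_camids=np.array([1, 1, 0, 1]))
print(f"Rank-1 = {rank1:.3f}, mAP = {mAP:.3f}")
```

Rank-1 only checks whether the single closest valid gallery image is correct, whereas mAP averages precision over every true match, so mAP is the stricter measure when a person appears in many gallery images.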

4.2. Implementation Details

4.3. Comparison with State-of-the-Art Methods

4.3.1. Results for the Non-Occluded Dataset

4.3.2. Results for the Occluded Dataset

4.4. Ablation Study and Visualization

4.4.1. Transformer Architecture

4.4.2. Spatial Attention Enhancement Module

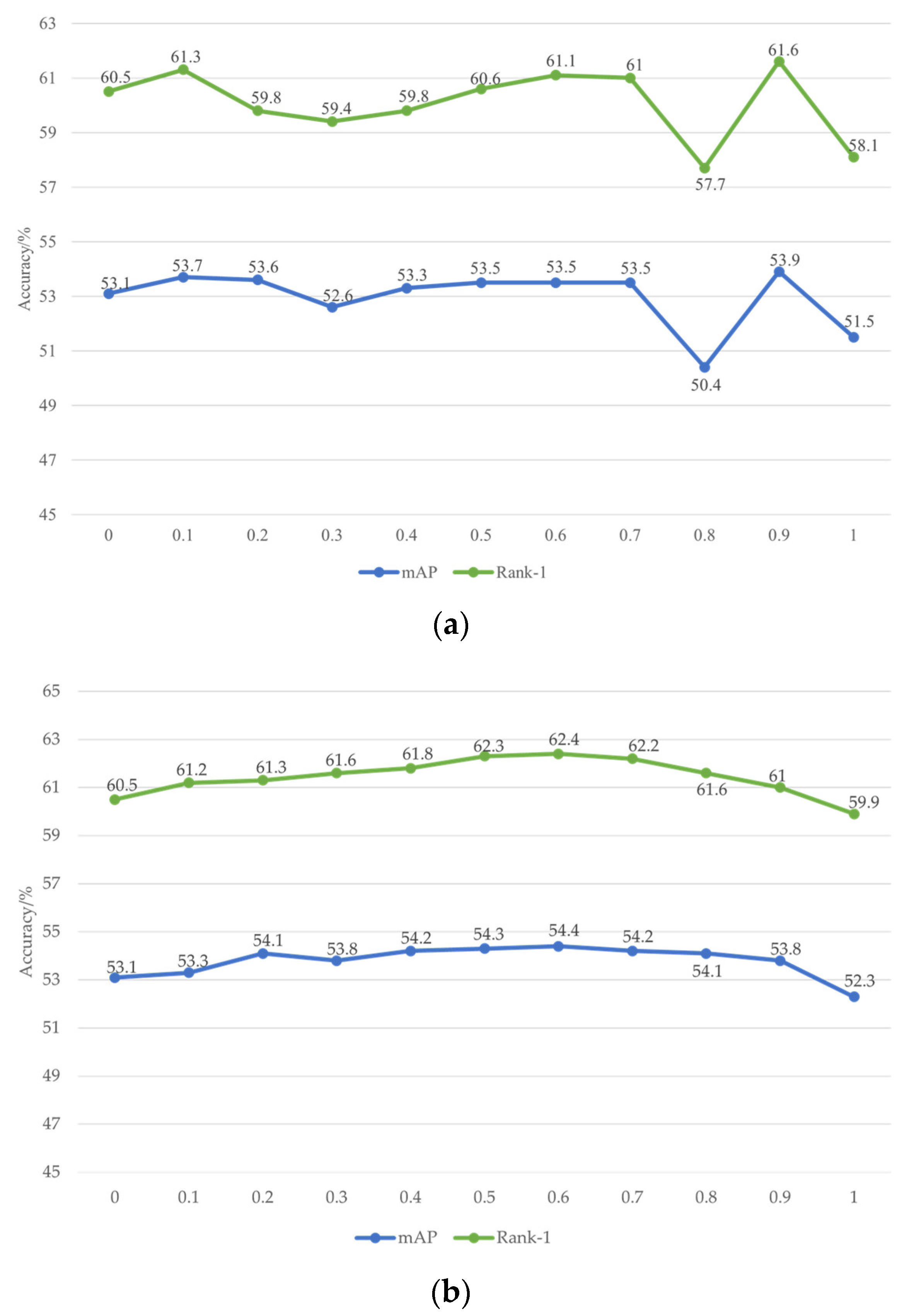

4.4.3. Multi-Feature Training Module

4.4.4. Integration of the Two Modules

4.4.5. Inference Costs

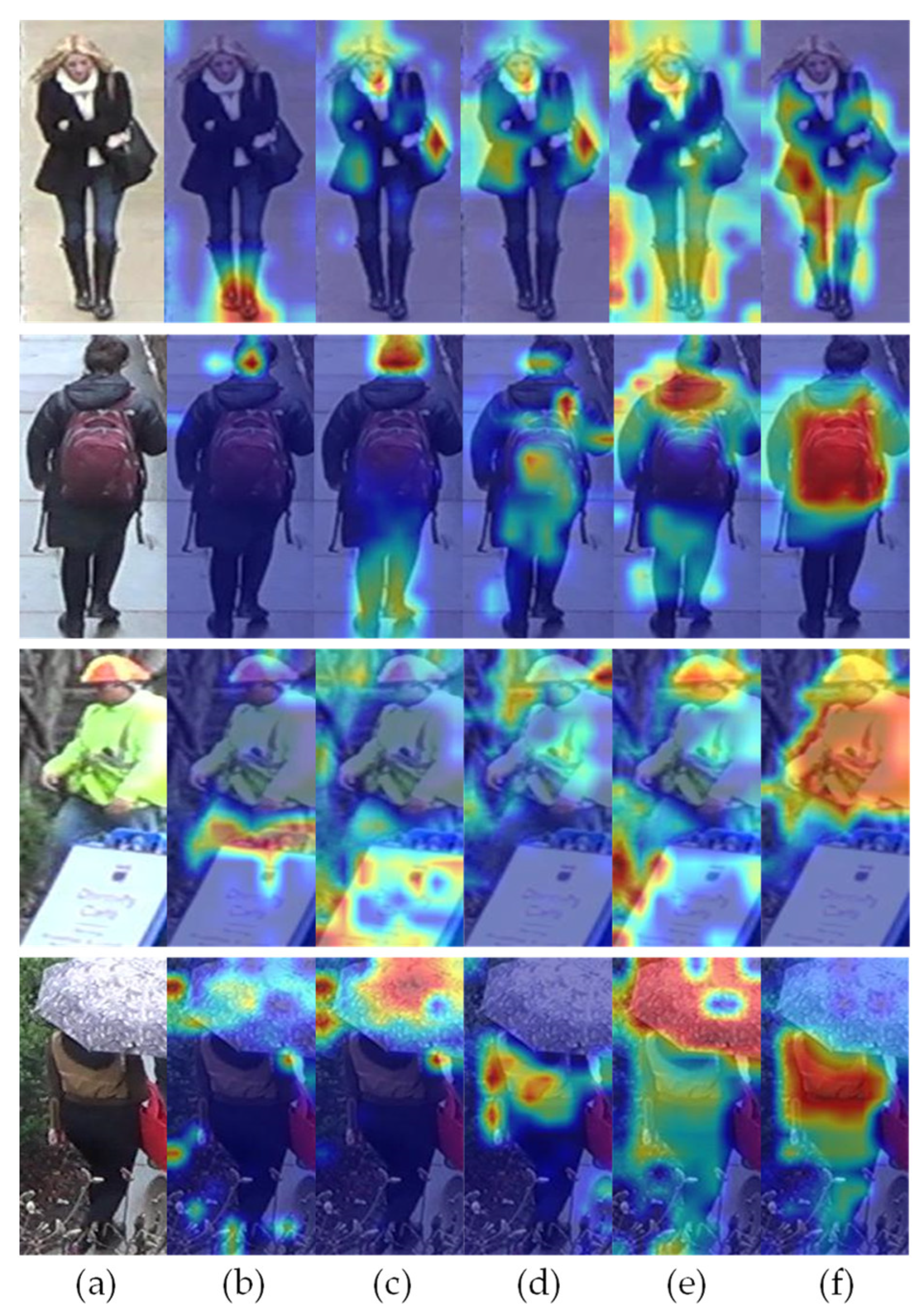

4.4.6. Visualization

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Ye, M.; Shen, J.; Lin, G. Deep learning for person re-identification: A survey and outlook. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 2872–2893.

- [2] Wang, G.A.; Yang, S.; Liu, H. High-order information matters: Learning relation and topology for occluded person re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 6449–6458.

- [3] Wang, P.; Ding, C.; Shao, Z.; Hong, Z.; Zhang, S.; Tao, D. Quality-aware part models for occluded person re-identification. IEEE Trans. Multimed. 2022.

- [4] Huang, H.; Li, D.; Zhang, Z. Adversarial occluded samples for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5098–5107.

- [5] Jia, M.; Cheng, X.; Lu, S. Learning disentangled representation implicitly via transformer for occluded person re-identification. IEEE Trans. Multimed. 2022.

- [6] Jia, M.; Cheng, X.; Zhai, Y. Matching on sets: Conquer occluded person re-identification without alignment. Proc. AAAI Conf. Artif. Intell. 2021, 35, 1673–1681.

- [7] Miao, J.; Wu, Y.; Liu, P. Pose-guided feature alignment for occluded person re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 542–551.

- [8] Li, Y.; He, J.; Zhang, T. Diverse part discovery: Occluded person re-identification with part-aware transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2898–2907.

- [9] He, L.; Wang, Y.; Liu, W. Foreground-aware pyramid reconstruction for alignment-free occluded person re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8450–8459.

- [10] Wang, H.; Lu, J.; Pang, F. Bi-directional Style Adaptation Network for Person Re-Identification. IEEE Sens. J. 2022, 22, 12339–12347.

- [11] Hu, Z.; Hou, W.; Liu, X. Deep Batch Active Learning and Knowledge Distillation for Person Re-Identification. IEEE Sens. J. 2022, 22, 14347–14355.

- [12] Wu, Y.; Lin, Y.; Dong, X. Exploit the unknown gradually: One-shot video-based person re-identification by stepwise learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5177–5186.

- [13] Wu, Y.; Lin, Y.; Dong, X. Progressive learning for person re-identification with one example. IEEE Trans. Image Process. 2019, 28, 2872–2881.

- [14] Lin, Y.; Wu, Y.; Yan, C. Unsupervised person re-identification via cross-camera similarity exploration. IEEE Trans. Image Process. 2020, 29, 5481–5490.

- [15] Cai, H.; Wang, Z.; Cheng, J. Multi-scale body-part mask guided attention for person re-identification. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–17 June 2019; pp. 1555–1564.

- [16] Song, C.; Huang, Y.; Ouyang, W. Mask-guided contrastive attention model for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1179–1188.

- [17] Liu, M.; Yan, X.; Wang, C. Segmentation mask-guided person image generation. Appl. Intell. 2020, 51, 1161–1176.

- [18] Zhao, H.; Tian, M.; Sun, S. Spindle Net: Person re-identification with human body region guided feature decomposition and fusion. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1077–1085.

- [19] Su, C.; Li, J.; Zhang, S. Pose-driven deep convolutional model for person re-identification. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3960–3969.

- [20] Kalayeh, M.M.; Basaran, E.; Gökmen, M. Human semantic parsing for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1062–1071.

- [21] Zhu, K.; Guo, H.; Liu, Z. Identity-guided human semantic parsing for person re-identification. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 346–363.

- [22] Sun, Y.; Zheng, L.; Yang, Y. Beyond part models: Person retrieval with refined part pooling (and a strong convolutional baseline). In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 480–496.

- [23] Wang, G.; Yuan, Y.; Chen, X. Learning discriminative features with multiple granularities for person re-identification. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Republic of Korea, 22–26 October 2018; pp. 274–282.

- [24] He, S.; Luo, H.; Wang, P. TransReID: Transformer-based object re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 15013–15022.

- [25] Zhang, Z.; Lan, C.; Zeng, W. Relation-aware global attention for person re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3186–3195.

- [26] Chen, B.; Deng, W.; Hu, J. Mixed high-order attention network for person re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 371–381.

- [27] Vaswani, A.; Shazeer, N.; Parmar, N. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- [28] Galassi, A.; Lippi, M.; Torroni, P. Attention in natural language processing. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 4291–4308.

- [29] Zhao, J.; Wang, H.; Zhou, Y. Spatial-Channel Enhanced Transformer for Visible-Infrared Person Re-Identification. IEEE Trans. Multimed. 2022.

- [30] Khan, S.; Naseer, M.; Hayat, M. Transformers in vision: A survey. ACM Comput. Surv. 2022, 54, 1–41.

- [31] Liu, Y.; Zhang, Y.; Wang, Y. A survey of visual transformers. arXiv 2021, arXiv:2111.06091.

- [32] Zhang, S.; Zhang, Q.; Yang, Y. Person re-identification in aerial imagery. IEEE Trans. Multimed. 2020, 23, 281–291.

- [33] Yang, W.; Huang, H.; Zhang, Z. Towards rich feature discovery with class activation maps augmentation for person re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1389–1398.

- [34] Zhao, L.; Li, X.; Zhuang, Y. Deeply-learned part-aligned representations for person re-identification. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3219–3228.

- [35] He, L.; Liang, J.; Li, H. Deep spatial feature reconstruction for partial person re-identification: Alignment-free approach. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7073–7082.

- [36] Zhang, X.; Luo, H.; Fan, X. AlignedReID: Surpassing human-level performance in person re-identification. arXiv 2017, arXiv:1711.08184.

- [37] Guo, M.H.; Xu, T.X.; Liu, J.J. Attention mechanisms in computer vision: A survey. Comput. Vis. Media 2022, 8, 331–368.

- [38] Li, W.; Zhu, X.; Gong, S. Harmonious attention network for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2285–2294.

- [39] Dosovitskiy, A.; Beyer, L.; Kolesnikov, A. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- [40] Wu, B.; Xu, C.; Dai, X. Visual transformers: Token-based image representation and processing for computer vision. arXiv 2020, arXiv:2006.03677.

- [41] Srinivas, A.; Lin, T.-Y.; Parmar, N. Bottleneck transformers for visual recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 16519–16529.

- [42] Liu, Z.; Lin, Y.; Cao, Y. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022.

- [43] Touvron, H.; Cord, M.; Douze, M. Training data-efficient image transformers & distillation through attention. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 10347–10357.

- [44] D’Ascoli, S.; Touvron, H.; Leavitt, M.L. ConViT: Improving vision transformers with soft convolutional inductive biases. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 2286–2296.

- [45] Zhang, Q.; Yang, Y.B. ResT: An efficient transformer for visual recognition. Adv. Neural Inf. Process. Syst. 2021, 34, 15475–15485.

- [46] Woo, S.; Park, J.; Lee, J.Y. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- [47] Xu, Y.; Jiang, Z.; Men, A. Multi-view feature fusion for person re-identification. Knowl. Based Syst. 2021, 229, 107344.

- [48] Zheng, L.; Shen, L.; Tian, L. Scalable person re-identification: A benchmark. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1116–1124.

- [49] Zheng, Z.; Zheng, L.; Yang, Y. Unlabeled samples generated by GAN improve the person re-identification baseline in vitro. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3754–3762.

- [50] Wang, X.; Doretto, G.; Sebastian, T. Shape and appearance context modeling. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–21 October 2007; pp. 1–8.

- [51] Miao, J.; Wu, Y.; Yang, Y. Identifying visible parts via pose estimation for occluded person re-identification. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 4624–4634.

- [52] Luo, H.; Gu, Y.; Liao, X. Bag of tricks and a strong baseline for deep person re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

| Dataset | IDs/Images | Train (IDs/Images) | Query (IDs/Images) | Gallery (IDs/Images) |
|---|---|---|---|---|
| Market-1501 | 1501/32,668 | 751/12,936 | 750/3368 | 750/19,732 |
| DukeMTMC-ReID | 1404/36,411 | 702/16,522 | 702/2228 | 1110/17,661 |
| Occluded-Duke | 1404/35,489 | 702/15,618 | 519/2210 | 1110/17,661 |

| Type | Model | DukeMTMC-ReID mAP/% | DukeMTMC-ReID Rank-1/% | Market-1501 mAP/% | Market-1501 Rank-1/% |
|---|---|---|---|---|---|
| Partial Models | Spindle [18] | - | - | - | 76.9 |
| | Part Aligned [34] | - | - | 63.4 | 81.0 |
| | PDC [19] | - | - | 63.4 | 84.1 |
| | DSR [35] | - | - | 64.2 | 83.6 |
| | MGCAM [16] | - | - | 74.3 | 83.8 |
| | AlignedReID [36] | - | - | 79.3 | 91.8 |
| | PGFA [7] | 65.5 | 82.6 | 76.8 | 91.2 |
| | PGFA-PE [51] | 72.6 | 86.2 | 81.3 | 92.7 |
| | PCB [22] | 66.1 | 81.8 | 77.4 | 92.3 |
| | PCB+RPP [22] | 69.2 | 83.3 | 81.6 | 93.8 |
| | HOReID [2] | 75.6 | 86.9 | 84.9 | 94.2 |
| | FPR [9] | 78.4 | 88.6 | 86.6 | 95.4 |
| | MGN [23] | 78.4 | 88.7 | 86.9 | 95.7 |
| Attention Models | HACNN [38] | 63.8 | 80.5 | 82.8 | 93.8 |
| | MHN-6 [26] | 77.2 | 89.1 | 85.0 | 95.1 |
| | CAM [33] | 72.9 | 85.8 | 84.5 | 94.7 |
| | PAT [8] | 78.2 | 88.8 | 88.0 | 95.4 |
| | DRL-Net [5] | 76.6 | 88.1 | 86.9 | 94.7 |
| | TransReID [24] | 79.3 | 88.8 | 86.8 | 94.7 |
| Ours | AET-Net | 80.1 | 89.5 | 87.5 | 94.8 |

| Type | Model | Occluded-Duke mAP/% | Occluded-Duke Rank-1/% |
|---|---|---|---|
| Partial Models | Part Aligned [34] | 20.2 | 28.8 |
| | DSR [35] | 30.4 | 40.8 |
| | Ad-Occluded [4] | 32.2 | 44.5 |
| | PGFA [7] | 37.3 | 51.4 |
| | PCB [22] | 33.7 | 42.6 |
| | HOReID [2] | 43.8 | 55.1 |
| | PGFA-PE [51] | 43.5 | 56.6 |
| Attention Models | HACNN [38] | 26.0 | 34.4 |
| | DRL-Net [5] | 50.8 | 65.0 |
| | ISP [21] | 52.3 | 62.8 |
| | PAT [8] | 53.6 | 64.5 |
| | TransReID [24] | 53.1 | 60.5 |
| Ours | AET-Net | 54.5 | 64.5 |

| Type | Model | Market-1501 mAP/% | Market-1501 Rank-1/% | Occluded-Duke mAP/% | Occluded-Duke Rank-1/% |
|---|---|---|---|---|---|
| CNN-Based | HOReID [2] | 84.9 | 94.2 | 43.8 | 55.1 |
| | HACNN [38] | 82.8 | 93.8 | 26.0 | 34.4 |
| Transformer | ViT-based (baseline) | 86.8 | 94.7 | 53.1 | 60.5 |

| Index | SAEM / MFTM components (√ = enabled) | Market-1501 mAP/% | Market-1501 Rank-1/% | Occluded-Duke mAP/% | Occluded-Duke Rank-1/% |
|---|---|---|---|---|---|
| 1 | - | 86.8 | 94.7 | 53.1 | 60.5 |
| 2 | √ | 86.4 | 94.5 | 51.5 | 58.1 |
| 3 | √ √ | 87.2 | 94.7 | 53.9 | 61.6 |
| 4 | √ | 87.0 | 94.1 | 52.3 | 59.9 |
| 5 | √ √ | 87.5 | 94.8 | 54.4 | 62.4 |
| 6 | √ √ | 87.4 | 94.7 | 53.5 | 61.2 |
| 7 | √ √ √ | 87.5 | 94.8 | 54.5 | 64.5 |

| Model | Inference Time | FLOPs | Parameters |
|---|---|---|---|
| ResNet50 [52] | 1x | 1x | 1x |
| Baseline | 0.4338x | 2.7105x | 3.6411x |
| TransReID + JPM | 0.4595x | 2.9401x | 3.9427x |
| Baseline + SAEM | 0.4212x | 2.7105x | 3.6411x |
| Baseline + MFTM | 0.3955x | 2.7106x | 3.6442x |
| Baseline + SAEM + MFTM | 0.4159x | 2.7106x | 3.6442x |
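Because every cost in the inference-cost table is expressed as a multiple of ResNet50, the overhead of each variant relative to the ViT baseline follows from simple ratios of the reported numbers. The short Python calculation below uses only the values from that table (the last row, with both SAEM and MFTM, is labeled "Baseline + SAEM + MFTM" here); the dictionary layout and function name are illustrative.

```python
# Costs copied from the inference-cost table, each expressed as a multiple of ResNet50.
costs = {
    "Baseline":               {"time": 0.4338, "flops": 2.7105, "params": 3.6411},
    "TransReID + JPM":        {"time": 0.4595, "flops": 2.9401, "params": 3.9427},
    "Baseline + SAEM + MFTM": {"time": 0.4159, "flops": 2.7106, "params": 3.6442},
}


def overhead_vs_baseline(model, metric):
    """Percentage change of a cost metric relative to the ViT baseline."""
    base = costs["Baseline"][metric]
    return 100.0 * (costs[model][metric] - base) / base


for model in ("TransReID + JPM", "Baseline + SAEM + MFTM"):
    for metric in ("time", "flops", "params"):
        print(f"{model:<24} {metric:<7} {overhead_vs_baseline(model, metric):+.3f}%")
```

Dividing two rows cancels the common ResNet50 unit, so the percentages do not depend on the ResNet50 reference values. With these numbers, adding SAEM and MFTM increases parameters by about 0.09% and leaves FLOPs essentially unchanged relative to the baseline, whereas TransReID + JPM adds about 8.3% parameters and 8.5% FLOPs.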

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

How to Cite

Wang, J.; Li, P.; Zhao, R.; Zhou, R.; Han, Y. CNN Attention Enhanced ViT Network for Occluded Person Re-Identification. Appl. Sci. 2023, 13, 3707. https://doi.org/10.3390/app13063707

Wang J, Li P, Zhao R, Zhou R, Han Y. CNN Attention Enhanced ViT Network for Occluded Person Re-Identification. Applied Sciences. 2023; 13(6):3707. https://doi.org/10.3390/app13063707

Chicago/Turabian Style: Wang, Jing, Peitong Li, Rongfeng Zhao, Ruyan Zhou, and Yanling Han. 2023. "CNN Attention Enhanced ViT Network for Occluded Person Re-Identification" Applied Sciences 13, no. 6: 3707. https://doi.org/10.3390/app13063707

APA Style: Wang, J., Li, P., Zhao, R., Zhou, R., & Han, Y. (2023). CNN Attention Enhanced ViT Network for Occluded Person Re-Identification. Applied Sciences, 13(6), 3707. https://doi.org/10.3390/app13063707