AWEncoder: Adversarial Watermarking Pre-Trained Encoders in Contrastive Learning

Abstract

1. Introduction

- We propose a novel adversarial watermarking strategy that operates in the embedding space of pre-trained encoders and is more effective for watermark verification than previous black-box watermarking methods.

- Unlike conventional watermarking methods, which assume that the downstream task of the watermarked model is the same as the original one, the proposed method requires no a priori knowledge of the downstream task. As a result, ownership can be verified under both white-box and stricter black-box settings, which makes the method more applicable in practice than previous ones.

2. Preliminaries

2.1. Contrastive Learning

2.1.1. SimCLR [4]

2.1.2. MoCo v2 [3]

2.2. Problem and Threats

3. Proposed Method

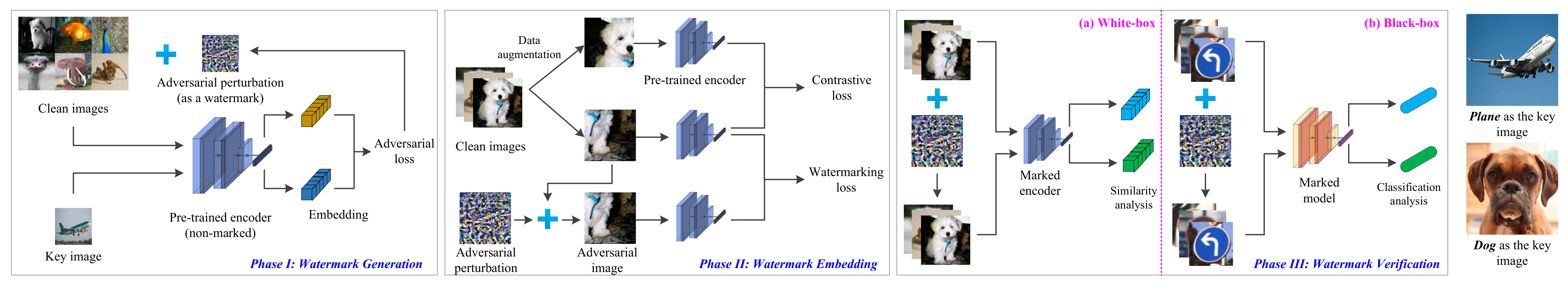

3.1. Watermark Generation

3.2. Watermark Embedding

3.3. Watermark Verification

4. Experimental Results and Analysis

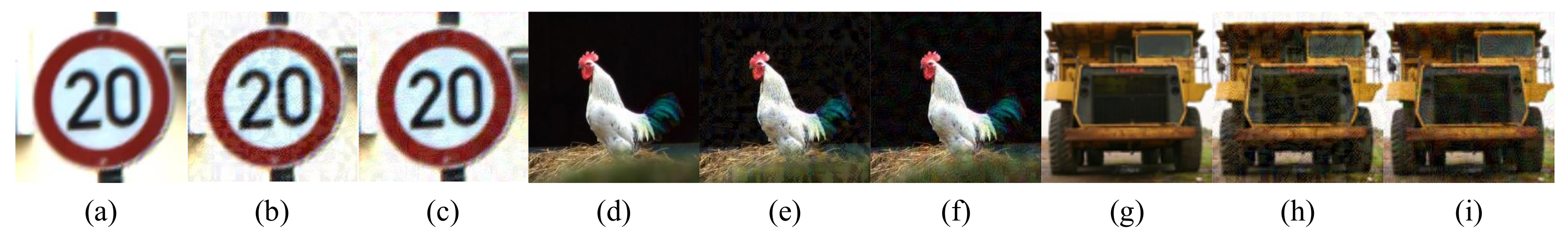

4.1. Effectiveness

4.2. Uniqueness

4.3. Robustness

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Jing, L.; Tian, Y. Self-supervised visual feature learning with deep neural networks: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 4037–4058.

2. Grill, J.-B.; Strub, F.; Altché, F.; Tallec, C.; Richemond, P.; Buchatskaya, E.; Doersch, C.; Avila Pires, B.; Guo, Z.; Gheshlaghi Azar, M.; et al. Bootstrap your own latent: A new approach to self-supervised learning. Proc. Neural Inf. Process. Syst. 2020, 33, 21271–21284.

3. Chen, X.; Fan, H.; Girshick, R.; He, K. Improved baselines with momentum contrastive learning. arXiv 2020, arXiv:2003.04297.

4. Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the 37th International Conference on Machine Learning, Virtual, 13–18 July 2020; pp. 1597–1607.

5. He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9726–9735.

6. Orekondy, T.; Schiele, B.; Fritz, M. Knockoff Nets: Stealing functionality of black-box models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4949–4958.

7. Chandrasekaran, V.; Chaudhuri, K.; Giacomelli, I.; Jha, S.; Yan, S. Exploring connections between active learning and model extraction. In Proceedings of the 29th USENIX Conference on Security Symposium, Boston, MA, USA, 12–14 August 2020; pp. 1309–1326.

8. Fan, L.; Ng, K.W.; Chan, C.S. Rethinking deep neural network ownership verification: Embedding passports to defeat ambiguity attacks. Proc. Neural Inf. Process. Syst. 2019, 32, 4714–4723.

9. Zhang, J.; Chen, D.; Liao, J.; Fang, H.; Zhang, W.; Zhou, W.; Cui, H.; Yu, N. Model watermarking for image processing networks. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12805–12812.

10. Chen, J.; Wang, J.; Peng, T.; Sun, Y.; Cheng, P.; Ji, S.; Ma, X.; Li, B.; Song, D. Copy, Right? A testing framework for copyright protection of deep learning models. arXiv 2021, arXiv:2112.05588.

11. Wu, H.; Liu, G.; Yao, Y.; Zhang, X. Watermarking neural networks with watermarked images. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 2591–2601.

12. Uchida, Y.; Nagai, Y.; Sakazawa, S.; Satoh, S. Embedding watermarks into deep neural networks. In Proceedings of the ACM International Conference on Multimedia Retrieval, Bucharest, Romania, 6–9 June 2017; pp. 269–277.

13. Namba, R.; Sakuma, J. Robust watermarking of neural network with exponential weighting. In Proceedings of the ACM Asia Conference on Computer and Communications Security, Auckland, New Zealand, 9–12 July 2019; pp. 228–240.

14. Wang, J.; Wu, H.; Zhang, X.; Yao, Y. Watermarking in deep neural networks via error back-propagation. In Proceedings of the IS&T Electronic Imaging, Media Watermarking, Security and Forensics, Burlingame, CA, USA, 26–30 January 2020; pp. 1–8.

15. Rouhani, B.D.; Chen, H.; Koushanfar, F. Deepsigns: An end-to-end watermarking framework for ownership protection of deep neural networks. In Proceedings of the International Conference on Architectural Support for Programming Languages and Operating Systems, Providence, RI, USA, 13–17 April 2019; pp. 485–497.

16. Zhao, X.; Yao, Y.; Wu, H.; Zhang, X. Structural watermarking to deep neural networks via network channel pruning. In Proceedings of the IEEE Workshop on Information Forensics and Security, Montpellier, France, 7–10 December 2021; pp. 1–6.

17. Adi, Y.; Baum, C.; Cisse, M.; Pinkas, B.; Keshet, J. Turning your weakness into a strength: Watermarking deep neural networks by backdooring. In Proceedings of the 27th USENIX Security Symposium, Baltimore, MD, USA, 15–17 August 2018; pp. 1615–1631.

18. Gu, T.; Liu, K.; Dolan-Gavitt, B.; Garg, S. Badnets: Evaluating backdooring attacks on deep neural networks. IEEE Access 2019, 7, 47230–47244.

19. Jia, J.; Liu, Y.; Gong, N.Z. Badencoder: Backdoor attacks to pre-trained encoders in self-supervised learning. arXiv 2021, arXiv:2108.00352.

20. Merrer, E.L.; Perez, P.; Trédan, G. Adversarial frontier stitching for remote neural network watermarking. Neural Comput. Appl. 2020, 32, 9233–9244.

21. Moosavi-Dezfooli, S.-M.; Fawzi, A.; Fawzi, O.; Frossard, P. Universal adversarial perturbations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1765–1773.

22. Liu, K.; Dolan-Gavitt, B.; Garg, S. Fine-pruning: Defending against backdooring attacks on deep neural networks. In Proceedings of the International Symposium on Research in Attacks, Intrusions, and Defenses, Crete, Greece, 10–12 September 2018; pp. 273–294.

23. Li, H.; Kadav, A.; Durdanovic, I.; Samet, H.; Graf, H.P. Pruning filters for efficient convnets. arXiv 2016, arXiv:1608.08710.

24. Jia, H.; Choquette-Choo, C.A.; Chandrasekaran, V.; Papernot, N. Entangled watermarks as a defense against model extraction. In Proceedings of the 30th USENIX Security Symposium, Virtual, 11–13 August 2021; pp. 1937–1954.

25. Zhang, J.; Gu, Z.; Jang, J.; Wu, H.; Stoecklin, M.P.; Huang, H.; Molloy, I. Protecting intellectual property of deep neural networks with watermarking. In Proceedings of the ACM Asia Conference on Computer and Communications Security, Melbourne, VI, Australia, 10–14 July 2018; pp. 159–172.

26. Wu, Y.; Qiu, H.; Zhang, T.; Li, J.; Qiu, M. Watermarking pre-trained encoders in contrastive learning. arXiv 2022, arXiv:2201.08217.

27. Cong, T.; He, X.; Zhang, Y. SSLGuard: A watermarking scheme for self-supervised learning pre-trained encoders. arXiv 2022, arXiv:2201.11692.

28. Chen, X.; Liu, C.; Li, B.; Lu, K.; Song, D. Targeted backdoor attacks on deep learning systems using data poisoning. arXiv 2017, arXiv:1712.05526.

29. Zhang, H.; Yu, Y.; Jiao, J.; Xing, E.; Ghaoui, L.E.; Jordan, M. Theoretically principled trade-off between robustness and accuracy. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 7472–7482.

30. Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images; Personal communication; University of Toronto: Toronto, ON, Canada, 2009.

31. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

32. Coates, A.; Ng, A.; Lee, H. An analysis of single-layer networks in unsupervised feature learning. In Proceedings of the 14th International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 11–13 June 2011; pp. 215–223.

33. Stallkamp, J.; Schlipsing, M.; Salmen, J.; Igel, C. The German traffic sign recognition benchmark: A multi-class classification competition. In Proceedings of the International Joint Conference on Neural Networks, San Jose, CA, USA, 31 July–5 August 2011; pp. 1453–1460.

34. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

| Pre-Trained Dataset | Encoder | Downstream Dataset | Method | Accuracy CE | Accuracy WE | CE | WE | \|CE-WE\| |
|---|---|---|---|---|---|---|---|---|
| ImageNet | SimCLR (ResNet-18) | ImageNet | WPE | 74.5% | 73.4% | 25.5% | 86.4% | 60.9% |
| ImageNet | SimCLR (ResNet-18) | ImageNet | AWEncoder | 74.5% | 71.1% | 30.5% | 96.1% | 65.6% |
| ImageNet | SimCLR (ResNet-18) | GTSRB | WPE | 77.8% | 77.5% | 31.7% | 90.4% | 58.7% |
| ImageNet | SimCLR (ResNet-18) | GTSRB | AWEncoder | 77.8% | 75.5% | 16.8% | 88.9% | 72.1% |
| ImageNet | SimCLR (ResNet-18) | STL-10 | WPE | 64.7% | 64.1% | 27.3% | 91.6% | 64.3% |
| ImageNet | SimCLR (ResNet-18) | STL-10 | AWEncoder | 64.7% | 61.2% | 13.7% | 93.9% | 80.2% |
| CIFAR-10 | MoCo v2 (ResNet-50) | ImageNet | WPE | 73.0% | 72.9% | 19.3% | 80.1% | 60.8% |
| CIFAR-10 | MoCo v2 (ResNet-50) | ImageNet | AWEncoder | 73.0% | 70.5% | 30.9% | 94.9% | 64.0% |
| CIFAR-10 | MoCo v2 (ResNet-50) | GTSRB | WPE | 84.5% | 83.7% | 14.9% | 84.2% | 69.3% |
| CIFAR-10 | MoCo v2 (ResNet-50) | GTSRB | AWEncoder | 84.5% | 84.0% | 12.8% | 90.2% | 77.4% |
| CIFAR-10 | MoCo v2 (ResNet-50) | STL-10 | WPE | 70.5% | 68.9% | 11.5% | 80.9% | 69.4% |
| CIFAR-10 | MoCo v2 (ResNet-50) | STL-10 | AWEncoder | 70.5% | 68.2% | 17.6% | 92.7% | 75.1% |
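
The last column of the table above follows directly from the two columns before it: it is the absolute difference between the CE and WE values. The short Python check below recomputes it for the SimCLR rows. It is only an illustrative sketch. The helper name `gap` is hypothetical, and reading CE and WE as the clean and watermarked encoders is an assumption, since this excerpt does not define them.

```python
# Rows copied from the SimCLR (ImageNet pre-training) block of the table above:
# (downstream dataset, method, CE %, WE %, reported |CE-WE| %)
rows = [
    ("ImageNet", "WPE", 25.5, 86.4, 60.9),
    ("ImageNet", "AWEncoder", 30.5, 96.1, 65.6),
    ("GTSRB", "WPE", 31.7, 90.4, 58.7),
    ("GTSRB", "AWEncoder", 16.8, 88.9, 72.1),
    ("STL-10", "WPE", 27.3, 91.6, 64.3),
    ("STL-10", "AWEncoder", 13.7, 93.9, 80.2),
]


def gap(ce: float, we: float) -> float:
    """Absolute CE/WE difference in percentage points, rounded to one decimal."""
    return round(abs(ce - we), 1)


for dataset, method, ce, we, reported in rows:
    computed = gap(ce, we)
    status = "matches" if computed == reported else "differs from"
    print(f"{dataset:9s} {method:10s} |CE-WE| = {computed:5.1f} ({status} the table)")
```

Under the stated assumption, a larger gap means the two encoders respond more differently to the watermark, which is the quantity the table uses to compare WPE and AWEncoder.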

| Model | CE/WE | Correct Watermark | Incorrect Watermark |
|---|---|---|---|
| SimCLR | CE | 0.14 | 0.11 |
| SimCLR | WE | 0.89 | 0.27 |
| MoCo v2 | CE | 0.26 | 0.25 |
| MoCo v2 | WE | 0.98 | 0.33 |

| Downstream Dataset | Setting | SimCLR WE | | MoCo v2 WE | |
|---|---|---|---|---|---|
| GTSRB | Plane 1 | 88.9% | 0.89 | 90.2% | 0.98 |
| GTSRB | Plane 2 | 87.8% | 0.89 | 89.6% | 0.97 |
| GTSRB | Plane 3 | 87.7% | 0.88 | 90.0% | 0.98 |
| GTSRB | Dog | 87.4% | 0.86 | 89.5% | 0.97 |
| GTSRB | Cat | 88.0% | 0.88 | 90.1% | 0.98 |

| Downstream Dataset | Encoder | Setting | SimCLR (ImageNet) | | MoCo v2 (CIFAR-10) | |
|---|---|---|---|---|---|---|
| GTSRB | Pre-trained encoder | Plane, = 15 | 88.9% | 0.89 | 90.2% | 0.98 |
| GTSRB | Pre-trained encoder | Plane, = 20 | 19.4% | 0.17 | 24.6% | 0.25 |
| GTSRB | Pre-trained encoder | Dog, = 15 | 29.4% | 0.22 | 30.6% | 0.27 |
| GTSRB | Surrogate encoder | Plane, = 15 | 33.3% | 0.27 | 37.1% | 0.31 |

| Pruning Ratio | SimCLR CE | SimCLR WE | MoCo v2 CE | MoCo v2 WE |
|---|---|---|---|---|
| - | 0.14 | 0.89 | 0.26 | 0.98 |
| 0.2 | 0.16 | 0.88 | 0.28 | 0.96 |
| 0.4 | 0.20 | 0.84 | 0.29 | 0.92 |
| 0.6 | 0.25 | 0.76 | 0.33 | 0.85 |
| 0.8 | 0.28 | 0.70 | 0.35 | 0.80 |

| Fine-Tuning | SimCLR CE | SimCLR WE | MoCo v2 CE | MoCo v2 WE |
|---|---|---|---|---|
| - | 0.14 | 0.89 | 0.26 | 0.98 |
| FTAL | 0.17 | 0.74 | 0.30 | 0.84 |
| RTAL | 0.24 | 0.68 | 0.34 | 0.76 |

| Encoder | Downstream Dataset | Pruning Ratio | Method | Accuracy CE | Accuracy WE | CE | WE | \|CE-WE\| |
|---|---|---|---|---|---|---|---|---|
| SimCLR (ImageNet) | GTSRB | 0.2 | WPE | 79.8% | 80.3% | 30.8% | 84.5% | 53.7% |
| SimCLR (ImageNet) | GTSRB | 0.2 | AWEncoder | 79.8% | 78.0% | 19.9% | 85.4% | 65.5% |
| SimCLR (ImageNet) | GTSRB | 0.4 | WPE | 77.9% | 77.2% | 29.6% | 79.2% | 49.6% |
| SimCLR (ImageNet) | GTSRB | 0.4 | AWEncoder | 77.9% | 76.3% | 19.5% | 80.8% | 61.3% |
| SimCLR (ImageNet) | GTSRB | 0.6 | WPE | 71.2% | 70.5% | 27.9% | 68.0% | 40.1% |
| SimCLR (ImageNet) | GTSRB | 0.6 | AWEncoder | 71.2% | 68.5% | 20.5% | 79.6% | 59.1% |
| SimCLR (ImageNet) | GTSRB | 0.8 | WPE | 64.9% | 64.8% | 27.5% | 65.7% | 38.2% |
| SimCLR (ImageNet) | GTSRB | 0.8 | AWEncoder | 64.9% | 63.8% | 22.1% | 78.4% | 56.3% |
| MoCo v2 (CIFAR-10) | GTSRB | 0.2 | WPE | 84.0% | 83.6% | 15.6% | 81.3% | 65.7% |
| MoCo v2 (CIFAR-10) | GTSRB | 0.2 | AWEncoder | 84.0% | 82.9% | 14.8% | 85.4% | 70.6% |
| MoCo v2 (CIFAR-10) | GTSRB | 0.4 | WPE | 76.9% | 78.8% | 19.4% | 74.2% | 54.8% |
| MoCo v2 (CIFAR-10) | GTSRB | 0.4 | AWEncoder | 76.9% | 77.5% | 16.3% | 80.1% | 63.8% |
| MoCo v2 (CIFAR-10) | GTSRB | 0.6 | WPE | 71.0% | 72.1% | 17.5% | 61.9% | 44.4% |
| MoCo v2 (CIFAR-10) | GTSRB | 0.6 | AWEncoder | 71.0% | 70.0% | 23.4% | 76.3% | 52.9% |
| MoCo v2 (CIFAR-10) | GTSRB | 0.8 | WPE | 64.6% | 65.7% | 18.4% | 53.7% | 35.3% |
| MoCo v2 (CIFAR-10) | GTSRB | 0.8 | AWEncoder | 64.6% | 62.6% | 22.1% | 73.3% | 51.2% |

| Encoder | Fine-Tuning | Downstream Dataset | Method | Accuracy CE | Accuracy WE | CE | WE | \|CE-WE\| |
|---|---|---|---|---|---|---|---|---|
| SimCLR (ImageNet) | FTAL | ImageNet | WPE | 74.8% | 74.3% | 22.3% | 74.0% | 51.7% |
| SimCLR (ImageNet) | FTAL | ImageNet | AWEncoder | 74.8% | 72.1% | 30.6% | 92.4% | 61.8% |
| SimCLR (ImageNet) | FTAL | GTSRB | WPE | 65.7% | 66.2% | 32.8% | 61.7% | 28.9% |
| SimCLR (ImageNet) | FTAL | GTSRB | AWEncoder | 65.7% | 63.2% | 23.9% | 82.4% | 58.5% |
| SimCLR (ImageNet) | FTAL | STL-10 | WPE | 63.4% | 62.7% | 31.0% | 86.7% | 55.7% |
| SimCLR (ImageNet) | FTAL | STL-10 | AWEncoder | 63.4% | 60.8% | 14.6% | 89.3% | 74.7% |
| SimCLR (ImageNet) | RTAL | ImageNet | WPE | 94.5% | 94.3% | 21.6% | 60.5% | 38.9% |
| SimCLR (ImageNet) | RTAL | ImageNet | AWEncoder | 94.5% | 92.7% | 39.8% | 81.4% | 41.6% |
| SimCLR (ImageNet) | RTAL | GTSRB | WPE | 98.5% | 98.9% | 29.7% | 55.0% | 25.3% |
| SimCLR (ImageNet) | RTAL | GTSRB | AWEncoder | 98.5% | 97.6% | 37.6% | 78.4% | 40.8% |
| SimCLR (ImageNet) | RTAL | STL-10 | WPE | 83.1% | 82.2% | 24.6% | 50.3% | 25.7% |
| SimCLR (ImageNet) | RTAL | STL-10 | AWEncoder | 83.1% | 82.7% | 34.1% | 80.0% | 45.9% |
| MoCo v2 (CIFAR-10) | FTAL | ImageNet | WPE | 78.3% | 77.2% | 18.2% | 77.6% | 59.4% |
| MoCo v2 (CIFAR-10) | FTAL | ImageNet | AWEncoder | 78.3% | 76.9% | 30.1% | 90.9% | 60.8% |
| MoCo v2 (CIFAR-10) | FTAL | GTSRB | WPE | 89.1% | 87.5% | 15.9% | 55.8% | 39.9% |
| MoCo v2 (CIFAR-10) | FTAL | GTSRB | AWEncoder | 89.1% | 87.7% | 19.3% | 85.8% | 66.5% |
| MoCo v2 (CIFAR-10) | FTAL | STL-10 | WPE | 71.3% | 70.5% | 13.2% | 67.9% | 54.7% |
| MoCo v2 (CIFAR-10) | FTAL | STL-10 | AWEncoder | 71.3% | 70.1% | 18.8% | 81.1% | 62.3% |
| MoCo v2 (CIFAR-10) | RTAL | ImageNet | WPE | 97.1% | 96.6% | 22.3% | 61.8% | 39.5% |
| MoCo v2 (CIFAR-10) | RTAL | ImageNet | AWEncoder | 97.1% | 96.9% | 35.2% | 75.8% | 40.6% |
| MoCo v2 (CIFAR-10) | RTAL | GTSRB | WPE | 98.0% | 97.3% | 16.0% | 50.7% | 34.7% |
| MoCo v2 (CIFAR-10) | RTAL | GTSRB | AWEncoder | 98.0% | 97.1% | 33.8% | 82.1% | 48.3% |
| MoCo v2 (CIFAR-10) | RTAL | STL-10 | WPE | 89.2% | 87.5% | 18.7% | 43.5% | 24.8% |
| MoCo v2 (CIFAR-10) | RTAL | STL-10 | AWEncoder | 89.2% | 85.2% | 34.1% | 75.2% | 41.1% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, T.; Wu, H.; Lu, X.; Han, G.; Sun, G. AWEncoder: Adversarial Watermarking Pre-Trained Encoders in Contrastive Learning. Appl. Sci. 2023, 13, 3531. https://doi.org/10.3390/app13063531
