Abstract

As the volume of data generated by information systems continues to increase, machine learning (ML) techniques have become essential for the extraction of meaningful insights. However, the sheer volume of data often causes these techniques to become sluggish. To overcome this, feature selection is a vital step in the pre-processing of data. In this paper, we introduce a novel K-nearest neighbor (KNN)-based wrapper system for feature selection that leverages the iterative improvement ability of the weighted superposition attraction (WSA). We evaluate the performance of WSA against seven well-known metaheuristic algorithms, i.e., differential evolution (DE), genetic algorithm (GA), particle swarm optimization (PSO), flower pollination algorithm (FPA), symbiotic organisms search (SOS), marine predators’ algorithm (MPA) and manta ray foraging optimization (MRFO). Our extensive numerical experiments demonstrate that WSA is highly effective for feature selection, achieving a decrease of up to 99% in the number of features for large datasets without sacrificing classification accuracy. In fact, WSA-KNN outperforms traditional ML methods by about 18% and ensemble ML algorithms by 9%. Moreover, WSA-KNN achieves comparable or slightly better solutions when compared with neural networks hybridized with metaheuristics. These findings highlight the importance and potential of WSA for feature selection in modern-day data processing systems.

1. Introduction

With technology across all walks of life developing rapidly to expand the I/O bandwidth of intelligent systems to provide more utility, all these endless bit streams are becoming a hassle to manage. Storing heaps of data is a task that can still be managed easily; however, mining relevant data for the current intent and application has become increasingly daunting with the evolution of larger datasets. This is exactly where feature selection comes into play. Feature selection is the technique and process that aims to reduce the complexity and dimension of the existing dataset under observation by increasingly focusing on relevant subset tiers of information. This helps eliminate chunks of data considered redundant, i.e., repetitive, and data that are considered irrelevant, i.e., unrelated to the preferred feature/variable/attribute of choice. Feature selection has become particularly useful in machine learning (ML) applications since it can effectively reduce the database dimensions, thereby eliminating a lot of noise (useless filler data from the external environment which is not considered important to the current task) to make it highly optimized and easy to interpret by both the system and ML experts. This, in turn, would help in lowering the model training times, saving transmission bandwidths by a pre-processing screening of data inputs, reducing the burden on resources while raising efficiency and helping to safeguard against problems of dimensionality [1].

Conventional feature selection approaches are based on the wrapper, filter and embedded methods, with the preference of the method being dependent on the evaluation metrics under consideration and allowed error percentage bracket. The wrapper method is the most computationally intensive of them all, with the wrapper dividing the database into several feature subsets based on classes to train an ML model. It then scores the results based on the number of errors generated in the subset under evaluation, defined as the error rate of the subset. The subset with the least error rate is declared the most optimized one for the given problem and is hence chosen. The filter method reduces the complexity of operations undertaken by the wrapper, but at the expense of classification accuracy. A filter defines the score of a feature subset based on its relation to other features using the common information or traits. The subsets can then be sorted according to the desired attributes depending on the score or weight they have received from the system. In large-scale applications, a filter method usually acts as a pre-wrapper stage to improve the speed of the wrapper while reducing the dimensional scale of the input stream. Embedded methods often involve regression coefficients in their operation and carry out their feature selection stages as a part of the model generation process, and they are a blend of both wrappers and filters [2].

Liu et al. [3] proposed the Deep Neural Pursuit (DNP) algorithm, which selects a subset of high-dimensional features to alleviate overfitting and uses multiple dropouts to calculate gradients with low variance. GRACES, developed by Chen et al. [4], employs graph convolutional networks for feature selection. Both DNP and GRACES have been shown to outperform other methods in both synthetic and real-world biological datasets. Constantinopoulos et al. [5] proposed a Bayesian approach to mixture learning that simultaneously optimizes the number of components, feature saliency, and mixture model parameters. Li et al. [6] proposed Deep Feature Screening (DeepFS), a nonparametric approach that combines deep neural networks and feature screening for ultra-high-dimensional data with a small number of samples. Yamada et al. [7] presented a novel approach for high-dimensional feature selection using feature-wise kernelized Lasso, which efficiently captures nonlinear input–output dependencies. Gui et al. [8] introduced Attention-based Feature Selection (AFS), a novel neural-network-based feature selection architecture that achieves the best accuracy and stability compared to several state-of-the-art feature selection algorithms on various datasets, including those with small samples. Peng et al. [9] proposed a feature selection method based on mutual information, called minimal-redundancy–maximal-relevance criterion (mRMR), which selects a compact set of superior features at low cost and leads to promising improvements in feature selection and classification accuracy. Chen et al. [10] presented a kernel-based feature selection method that employs measures of independence to find the subset of covariates that is maximally predictive of the response.

However, the conventional approaches are prone to failure against new challenges of the complex and present developing world. Newer models require a more flexible and efficient algorithm, and this is the domain where metaheuristic algorithms have been appreciated by the ML researchers. These optimization algorithms offer superiority based on ease and versatility, and they mainly are one of four types, i.e., evolution-based, swarm intelligence, human-based and physics-based algorithms. The evolution-based algorithms include differential evolution (DE) [11] and the genetic algorithm (GA) [12] which have roots in the principles of natural selection, growth, mutation, etc. The swarm intelligence algorithms include a vast array of techniques inspired by colonies and swarms of natural species in the world and are constructed in similar ways of knowledge sharing and collaboration. They are further divided into sub-categories, such as insect (ant, bee, butterfly, dragonfly, firefly, glow-worm, etc.) and animal (lion, wolf, bat, monkey, emperor penguin, whale, hawk, etc.). Particle swarm optimization (PSO) is perhaps the most popular swarm intelligence algorithm [13]. Human-behavior-based algorithms include cultural evolution and teaching–learning-based optimization algorithms. Lastly, inspired by the laws of physics, physics-based algorithms are developed upon various phenomena, such as gravitational search and atom search optimization algorithms. In essence, there is no ‘one size fits all’ in complicated ML applications, and a schematic must be designed to select several of these techniques to fit the current problem [14]. This can lead to the creation of newer methods as a blend of these techniques or research of different phenomena in pursuit of a better model [15].

To provide a solution for better feature selection, several research efforts involving metaheuristics have generated commendable propositions. There is a plethora of literature available on the applications of metaheuristics to deal with feature selection problems. However, in context of the present study, the literature search is restricted to those algorithms considered in this paper. Thus, only the most recent and relevant literature on DE, GA, PSO, flower pollination algorithm (FPA), symbiotic organisms search (SOS), marine predators’ algorithm (MPA) and manta ray foraging optimization (MRFO) is included in this paper.

Lee and Hung [16] presented a DE solution to analyze a brushless DC motor for faults with an advanced feature selection approach based on a distance discriminant system for categorizing similarities. The features were subsequently classified using a back-propagation neural network and linear discriminant analysis and were later optimized using the DE algorithm. Hancer et al. [17] proposed a new filter feature selection criterion that would not rely on mutual redundancy and would rank features by their proposed ReliefF and Fisher scores combined with weightage to a classification task’s class and a feature’s mutual relevance. It would then lead to two new DE-based filter approaches discussed in their paper as a breakthrough. Zhang et al. [18] came up with a multi-objective DE-based solution to feature selection while tackling the issues with three embedded operators into a new approach, called binary differential evolution with self-learning. Gokulnath and Shantharajah [19] presented a GA-based function built on a support vector machine (SVM) to diagnose heart disease in an optimized manner compared to the existing techniques. In addition, Sayed et al. [20] developed a nested GA-based technique useful to select features in a cancer microarray. The data were first fed into a t-test as a pre-processing stage to a dual-nested GA (outer and inner stages), and the proposed technique had passed five-fold cross-validation for a colon cancer data trial. Yu et al. [21] paired GA with PSO to develop a hybrid algorithm with higher search capabilities and better efficiency in feature selection. Rashid et al. [22] endeavored to integrate Fast Fourier transform with GA and PSO to deal with applications of functional magnetic resonance imaging used to select features from high-dimensional images of brain scans. Using particle swarms to emulate bird and fish swarm behavior has also been an interesting concept to integrate into ML to search for candidate solutions. Sakri et al. [23] successfully utilized PSO in conjunction with naive Bayes, K-nearest neighbor (KNN) and fast decision tree learner classifiers for accurate breast cancer recurrence prediction. Almomani [24] combined PSO with the gray wolf optimizer (GWO), firefly algorithm (FFA) and GA for feature selection in a network intrusion detection system to monitor erratic network traffic. The proposed approach could employ both wrappers and filters designed for the considered algorithms and select features with higher accuracies. Sharkawy et al. [25] suggested the application of PSO to identify the type and dimension of particles suspended in transformer oil with the aid of partial discharge patterns. For generalized feature selection, Tawhid and Ibrahim [26] integrated PSO with FPA to propose a hybrid binary solution, called BPSOFPA. The algorithm had both the S-shaped and V-shaped transfer functions, with the V-shaped function being highly successful in enhancing the selection performance of PSO and FPA. Majidpour and Gharehchopogh [27] also formulated an improved FPA by blending it into the AdaBoost algorithm to demonstrate feature selection solutions in classifying text documents and observed that the hybrid approach would have higher detection accuracy than a standalone AdaBoost.

A marine predator’s relationship with its prey can also form the basis of an algorithm’s design for global searches. Yousri et al. [28] derived a fractional-order comprehensive learning MPA, having its foundation on a standard MPA, while fixing the lack of iterations inherent in the algorithm with the addition of fractional calculus. Sahlol et al. [29] developed a modified MPA to analyze COVID-19 X-ray images using fractional-order calculus techniques hybridized with MPA to improve accuracy against the existing convolutional neural network (CNN) technique. Proposing a different add-on to MPA, Abd Elminaam et al. [30] suggested the use of KNN to form a hybrid MPA-KNN for efficient feature selection purposes, with the trial being on medical datasets. Another attempt involving a different marine creature includes the work of Hassan et al. [31], dealing with an improved binary version of the MRFO. The proposed approach would help in solving binary problems and network intrusion detection issues by a random forest classifier. Ghosh et al. [32] compared the effectiveness and efficiency of S-shaped and V-shaped functions for binary MRFO for feature selection. Some researchers have also been interested in mutualism-based cooperative interactions between organisms, and Mohmmadzadeh and Gharehchopogh [33] proposed an efficient binary chaotic SOS algorithm for solving feature selection problems. With two additional operators, the authors effectively dealt with the commensalism and mutualism stages as binary attributes on the foundations of a chaotic function. The proposed system could also utilize S-shaped and V-shaped transfer functions. Han et al. [34] proposed a binary SOS with an adaptive S-shaped transfer function for solving feature selection problems with high accuracy.

All of the above-mentioned algorithms excel in solving certain problems in particular and have their own pros and cons in a given scenario. This paper proposes the application of a newly developed optimization technique called the weighted superposition attraction (WSA) algorithm for efficiently solving feature selection problems. It is a new-generation swarm-intelligence-based metaheuristic algorithm that can deal with continuous optimization challenges, with its particles generating a superposition leading to solution vectors [35]. This algorithm derives its inspiration from the principles of particulate superposition in physics, and the unique proposition has been observed to be quite effective in testing real-valued and dynamic optimization problems. Baykasoğlu and Şenol [36] also proved WSA to be superior to other metaheuristic algorithms since it would not require any S or V-shaped transfer function to convert values to binary, and it also adopts a step sizing function for population diversity. Baykasoğlu and Şenol [36] further developed WSA to solve combinatorial problems by integrating a random walk procedure and opposition-based learning into WSA, creating cWSA. The cWSA has a much more diverse form, with enhanced performance and capabilities over the regular algorithm. The WSA can also be enhanced to solve dynamic binary optimization problems, having the unique capability that is missing in most of the metaheuristic algorithms, since the research focus has heavily been based on real values. Baykasoğlu and Ozsoydan [37] achieved this capability by using the binary properties of WSA, known as bWSA, with excellent results. The WSA has proven itself in a variety of different application problems. Baykasoğlu et al. [38] observed it to be particularly useful in field pattern recognition while improving fuzzy c-means clustering by integrating a quantum-enhanced version of WSA. 
The original fuzzy c-means clustering algorithm had been unable to escape the local optima at times, and WSA had overcome the challenge easily. It can also be extended to macro-level structures, with Adil and Cengiz [39] performing 2D and 3D truss design optimizations using WSA. The adopted algorithm could deal with five truss design problems with 200 elements and generate a sheet for optimal weight while including statistical measures, such as standard deviations and analysis counts in comparison to other considered algorithms.

This paper extends those studies by applying WSA to feature selection problems as the pinnacle of future advancements in the field. To the best of the authors’ knowledge, no work on feature selection using WSA has been carried out so far. In this paper, the performance of WSA is compared with that of seven other metaheuristics, i.e., DE, GA, PSO, FPA, SOS, MPA and MRFO. Traditional metaheuristics, such as DE, GA and PSO, have already been applied to solve almost all classes of optimization problems. Thus, the comparison of WSA against them would provide a useful benchmark for traditional metaheuristics. On the other hand, FPA, SOS, MPA and MRFO are powerful metaheuristics that have been developed within the last five years. Comparison with these algorithms would therefore establish WSA’s standing among its recent peers. Thus, the results derived from this paper would provide valuable insights into the effectiveness of WSA for feature selection and its potential for future applications in the field. A variety of datasets have been considered to evaluate the performance of the algorithms under the consideration of classification accuracy, fitness, average features selected, convergence and computational time. The rest of the paper is arranged as follows: the algorithms adopted in this study are discussed in Section 2, followed by the experimental results and their discussion in Section 3. Section 4 details the conclusions drawn based on this study.

2. Methodology

2.1. Wrapper Method for Feature Selection

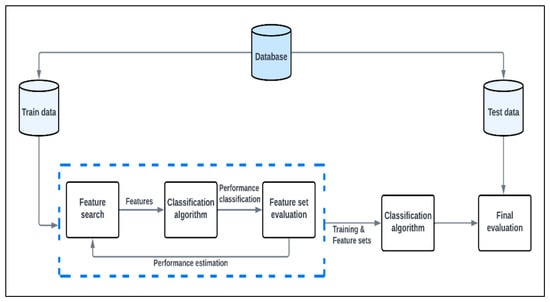

For feature selection, a wrapper method is employed in this paper. To accomplish the task, wrapper techniques use a learning algorithm that applies a search strategy to explore the space of feasible feature subsets, ranking them according to the quality of their performance in a specific algorithm. In most cases, wrapper approaches outperform filter methods since the feature selection process is tailored to the specific classification algorithm being employed. Wrapper methods, on the other hand, are prohibitively time- and resource-intensive for high-dimensional data since they require the evaluation of each feature set using the classifier algorithm. Figure 1 exhibits the framework of the wrapper-based feature selection approach. It can be noticed from Figure 1 that the database was initially split into dedicated training and testing sets. In this paper, an 80:20 split was considered for train and test sets. The train set was then deployed in the wrapper-based feature selection framework where the metaheuristic algorithm would act as the optimizer to optimize a composite function (Equation (1)). The framework starts by selecting a subset of the features and building a classification model using the specified ML algorithm. The performance of the model is then evaluated, and based on the derived results, the optimizer feeds a revised set of features to build a new classification model. This process of iterative classification model building, model performance measurement and the feeding of revised features to the classification algorithm continues until a pre-specified termination criterion is met. In this paper, the maximum iteration limit was considered as the termination criterion. The final classification model is then tested on the independent test data, and its performance is recorded. In this paper, KNN was considered as the classification algorithm. The KNN method employs a set of K neighbors to determine how an object should be categorized. A positive integer value of K is pre-decided before running the algorithm. To classify a record, the Euclidean distances between the unclassified record and the classified records are determined and ranked, and the record is assigned the majority class among its K nearest neighbors.

Figure 1. Wrapper-based feature selection framework.
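The evaluation step inside the wrapper loop can be sketched as follows. This is a minimal, dependency-free illustration rather than the implementation used in this paper: the function names `knn_predict` and `subset_error`, the binary `mask` encoding of a feature subset and the use of a separate validation split are assumptions introduced here.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k=5):
    """Classify `query` by majority vote among its k nearest training records."""
    # Rank the classified records by Euclidean distance to the query.
    ranked = sorted(zip(train_X, train_y), key=lambda rec: math.dist(rec[0], query))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


def subset_error(mask, train_X, train_y, valid_X, valid_y, k=5):
    """Error rate of KNN when only the features with mask[j] == 1 are used."""
    cols = [j for j, keep in enumerate(mask) if keep]
    if not cols:
        return 1.0  # assumption: an empty subset is treated as the worst case
    project = lambda row: [row[j] for j in cols]
    reduced_train = [project(row) for row in train_X]
    wrong = sum(
        knn_predict(reduced_train, train_y, project(q), k) != y
        for q, y in zip(valid_X, valid_y)
    )
    return wrong / len(valid_X)
```

An optimizer would call `subset_error` once per candidate mask and feed the result into the fitness function, which is why the wrapper approach becomes expensive as the number of candidate subsets grows.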

2.2. Fitness Function

The effectiveness of an optimizer is evaluated by its corresponding fitness function. The fitness function in feature selection is dependent on the classification error rate and number of features used for classification. It is deemed to be a good solution if the selected feature subset reduces the classification error rate and number of features chosen. The following fitness function is considered in this paper [40]:

$$\text{Fitness} = \alpha\,E + (1 - \alpha)\,\frac{|S|}{|F|} \qquad (1)$$

where $E$ is the classification error computed by the classifier, $|S|$ is the reduced number of features in the new subset, $|F|$ is the total number of features in the dataset, and $\alpha \in [0, 1]$ is a factor corresponding to the importance of the classification performance and the length of the reduced subset.
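As a small worked illustration, the fitness can be computed as a weighted sum of the classification error and the fraction of features retained. The sketch assumes this commonly used weighted-sum form; the function name `fitness` and the default weight `alpha=0.99` are hypothetical choices made here, not values reported in this paper.

```python
def fitness(error_rate, n_selected, n_total, alpha=0.99):
    """Weighted sum of classification error and relative subset size.

    alpha weights the classification error; (1 - alpha) weights the
    fraction of features kept. Lower values are better.
    """
    return alpha * error_rate + (1.0 - alpha) * (n_selected / n_total)


# Two subsets with the same error: the smaller subset gets the lower fitness.
small = fitness(error_rate=0.10, n_selected=5, n_total=50)
large = fitness(error_rate=0.10, n_selected=10, n_total=50)
```

With a weight close to 1, the error term dominates, so the subset size acts mainly as a tie-breaker between subsets of similar accuracy.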

2.3. Metaheuristics

As discussed in the previous section, the main focus of this paper is based on the application of the WSA algorithm to solve feature selection problems. To demonstrate the performance capability of WSA in feature selection problems, it is compared with seven other algorithms. All these algorithms considered in this paper are briefly introduced hereunder.

2.3.1. Differential Evolution

DE is a population-based search algorithm in continuous space, initially propounded by Storn and Price [11]. It starts by initializing a population of search agents. The search agents move about in the search space using pre-defined mathematical rules to adjust their positions with respect to the position of the existing agents. The new position of any agent is only accepted if it provides an improvement over the existing ones. This process of improvement is carried out iteratively over generations until the termination criterion is met. The pseudocode for DE can be generally represented, as shown in Algorithm 1.

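| Algorithm 1: Differential evolution |
|---|
| Objective function $f(x)$ |
| Initialize population: $G = 0$; initialize all NP individuals |
| WHILE the termination criterion is not met |
| FOR $i \leftarrow 1$ to NP |
| GENERATE three individuals $x_{r_1}$, $x_{r_2}$, $x_{r_3}$ randomly based on the condition that $r_1 \neq r_2 \neq r_3 \neq i$ |
| MUTATION: form the donor vector using the formula $v_i = x_{r_1} + F\,(x_{r_2} - x_{r_3})$ |
| CROSSOVER: the trial vector $u_i$ is developed either from the elements of the target vector $x_i$ or the elements of the donor vector $v_i$ as follows: $u_{i,j} = v_{i,j}$ if $rand_j \le CR$ or $j = j_{rand}$; otherwise $u_{i,j} = x_{i,j}$ |
| where $i = 1, \ldots, NP$, $j = 1, \ldots, D$, $F$ is the scale factor, CR is the crossover rate, $rand_j$ is a random number generated for each $j$, and $j_{rand}$ is a random integer to ensure that $u_i \neq x_i$ in all cases |
| EVALUATE: if $f(u_i) \le f(x_i)$, replace the individual $x_i$ with the trial vector $u_i$ |
| END |
| END |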
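The mutation, crossover and greedy replacement steps described above can be sketched in a few lines for a continuous minimization problem. This is an illustrative sketch under stated assumptions, not the configuration used in this paper: the function name `differential_evolution`, the parameter values (`F=0.5`, `CR=0.9`), the clipping of trial vectors to the bounds and the fixed generation count are all choices made here.

```python
import random


def differential_evolution(f, bounds, np_size=20, F=0.5, CR=0.9,
                           generations=100, seed=0):
    """Minimize f over a box using the classic rand/1/bin DE scheme."""
    rng = random.Random(seed)
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(np_size)]
    cost = [f(x) for x in pop]
    for _ in range(generations):
        for i in range(np_size):
            # Three distinct individuals, all different from the target i.
            r1, r2, r3 = rng.sample([k for k in range(np_size) if k != i], 3)
            # Mutation: donor vector built from a scaled difference.
            donor = [pop[r1][j] + F * (pop[r2][j] - pop[r3][j]) for j in range(dim)]
            # Binomial crossover; j_rand guarantees one donor component.
            j_rand = rng.randrange(dim)
            trial = [donor[j] if (rng.random() <= CR or j == j_rand) else pop[i][j]
                     for j in range(dim)]
            # Assumption: trial vectors are clipped back into the search box.
            trial = [min(max(t, lo), hi) for t, (lo, hi) in zip(trial, bounds)]
            # Greedy selection: accept the trial only if it is not worse.
            trial_cost = f(trial)
            if trial_cost <= cost[i]:
                pop[i], cost[i] = trial, trial_cost
    best = min(range(np_size), key=cost.__getitem__)
    return pop[best], cost[best]
```

For feature selection, the continuous vector would still need to be mapped to a binary mask (for example through a threshold or a transfer function) before the classifier is evaluated.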

2.3.2. Genetic Algorithm

The GA is one of the earliest metaheuristic algorithms, initially introduced by Bremermann in 1958 [30]. It was inspired by Charles Darwin’s theory of natural selection. The algorithm was later made famous by Holland [12]. Like other population-based algorithms, the GA starts with an initialized population of candidate solutions (individuals), whose chromosomes act as the parents of the population. The performance of the individuals is then evaluated using the fitness function. Based on these fitness values, a selection mechanism chooses the parents, and a new population is produced through crossover, mutation and reproduction. The algorithm iterates until the optimal solution is reached. In this paper, a roulette-wheel-based selection method is considered for GA. In the roulette wheel selection method, the fitness of each individual chromosome is used to determine the probability that it would be selected for reproduction. The higher the fitness of an individual, the greater the probability that it is chosen for reproduction, since this probability is proportional to the fitness of the individual.

Let $f_k$ be the fitness value of chromosome $k$. The selection probability $p_k$ is calculated using the following expression:

$$p_k = \frac{f_k}{\sum_{i=1}^{n} f_i} \qquad (2)$$

where $f_i$ is the fitness value of the $i$th individual and $n$ is the total number of individuals.

The pseudocode for GA (roulette wheel) can be represented as shown in Algorithm 2.

| Algorithm 2: Genetic algorithm (roulette wheel) |
|---|
| Objective function |
| Initialize population: G = 0; initialize all NP individuals |
| WHILE Iterations < Maximum Iterations |
| FOR i ← 1 to NP |
| sum += fitness of this individual |
| END |
| FOR all members of population |
| probability = sum of probabilities + (fitness/sum) |
| sum of probabilities = probability |
| END |
| number = random between 0 and 1 |
| FOR all members of population |
| IF number > probability but less than next probability, select this individual |
| END |
| Iterations = Iterations + NP |
| G = G + 1 |
| END |
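The roulette wheel step can be illustrated with a short sketch that builds the cumulative selection probabilities and draws one parent. The function names `selection_probabilities` and `roulette_select` are hypothetical, and the sketch assumes non-negative fitness values where larger is better; a minimization fitness would first have to be transformed.

```python
import random
from itertools import accumulate


def selection_probabilities(fitness_values):
    """Probability of each individual, proportional to its fitness."""
    total = sum(fitness_values)
    return [value / total for value in fitness_values]


def roulette_select(fitness_values, rng=random):
    """Return the index of one individual chosen by roulette wheel selection."""
    cumulative = list(accumulate(selection_probabilities(fitness_values)))
    number = rng.random()  # random number in [0, 1)
    for index, threshold in enumerate(cumulative):
        if number < threshold:
            return index
    return len(fitness_values) - 1  # guard against floating-point round-off
```

Calling `roulette_select` twice yields the two parents for one crossover; individuals with larger fitness occupy a wider slice of the wheel and are therefore drawn more often.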

Similar to roulette wheel, tournament selection is another parent selection approach in the GA. Unlike the roulette wheel, tournament selection is usually restricted to two chromosomes. However, selection between more than two chromosomes can be carried out by adjusting the tournament size. The tournament selection approach can be represented using the pseudocode provided in Algorithm 3 [41].

| Algorithm 3: Genetic algorithm (tournament selection) |
|---|
| Objective function |
| Initialize population; initialize all individuals |
| WHILE the termination criterion is not met |
| FOR each individual to be replaced |
| WHILE need to generate more offspring |
| IF fewer individuals than the tournament size remain in the population THEN |
| Refill: move all individuals from the temporary population to population |
| END IF |
| Sample individuals, up to the tournament size, without replacement from population |
| Select the winner from the tournament |
| Move the sampled individuals into temporary population |
| Return the winner |
| END |
| END |
| END |
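The sampling-without-replacement scheme with a refill step can be sketched as below. This is one plausible reading of the procedure rather than the exact routine of [41]: the function name `tournament_without_replacement`, the tournament size parameter `k` and the assumption that larger fitness wins are introduced here for illustration.

```python
import random


def tournament_without_replacement(population, fitness, n_offspring, k=2, rng=random):
    """Pick n_offspring tournament winners, sampling contestants without replacement."""
    if k > len(population):
        raise ValueError("tournament size cannot exceed the population size")
    pool = list(population)   # individuals still available for sampling
    temporary = []            # individuals that already took part
    winners = []
    while len(winners) < n_offspring:
        if len(pool) < k:
            # Refill: move everything from the temporary population back.
            pool.extend(temporary)
            temporary.clear()
        # Sample k contestants without replacement from the pool.
        contestants = [pool.pop(rng.randrange(len(pool))) for _ in range(k)]
        winners.append(max(contestants, key=fitness))
        temporary.extend(contestants)
    return winners
```

Because contestants leave the pool until the next refill, every individual takes part in a tournament before anyone competes a second time, which reduces the sampling variance compared with drawing contestants with replacement.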

2.3.3. Particle Swarm Optimization

The PSO is a metaheuristic optimization algorithm inspired by the swarm behavior observed in flocks of birds and schools of fish [13]. The vicinity that any single bird can observe is limited to a given range, but a swarm of many birds allows every member to be cognizant of a larger area of the fitness function. Every particle in the swarm has a fitness value, a velocity and an associated position. Every particle also keeps track of its own best fitness value, and a global best fitness value is maintained for the swarm [42,43]. The mathematical model for PSO is represented using Equation (3).

Here, $x_i^t$ denotes the position of the $i$th particle in the $t$th iteration.

When a particle considers only its topological neighbors as its population, the best value found is referred to as the local best. Once the two best values are obtained, the particle updates its velocity and location using the following equations [44]:

$$v_i^{t+1} = v_i^t + c_1 r_1 \left(p_i^t - x_i^t\right) + c_2 r_2 \left(g^t - x_i^t\right) \qquad (4)$$

$$x_i^{t+1} = x_i^t + v_i^{t+1} \qquad (5)$$

where $v_i^t$ is the velocity of the $i$th particle in the $t$th iteration, $p_i^t$ is the best position of the $i$th particle in the $t$th iteration, $g^t$ is the best position obtained so far among all particles until the $t$th iteration, $r_1$ and $r_2$ are random numbers in the range [0, 1], and $c_1$ and $c_2$ are the user-defined parameters.

The pseudocode for PSO can be represented as shown in Algorithm 4.

| Algorithm 4: Particle swarm optimization | |||

| Objective function Initialize population FOR | |||

| FOR | |||

| If then | |||

| END | |||

| FOR | |||

| IF THEN | |||

| ELSE IF THEN | |||

| IF THEN | |||

| IF THEN | |||

| END | |||

| END | |||
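The velocity and position updates, together with the bookkeeping of personal and global bests, can be sketched as follows. The sketch is illustrative only: the function name `pso`, the acceleration values `c1 = c2 = 2.0`, the velocity clamp at half of each variable's range and the clipping of positions to the bounds are assumptions made here, not settings taken from this paper.

```python
import random


def pso(f, bounds, n_particles=20, iterations=100, c1=2.0, c2=2.0, seed=0):
    """Minimize f with a basic particle swarm (no inertia weight)."""
    rng = random.Random(seed)
    dim = len(bounds)
    # Assumption: velocities are clamped to half the range of each variable.
    v_max = [0.5 * (hi - lo) for lo, hi in bounds]
    x = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    v = [[0.0] * dim for _ in range(n_particles)]
    p_best = [xi[:] for xi in x]
    p_cost = [f(xi) for xi in x]
    g = min(range(n_particles), key=p_cost.__getitem__)
    g_best, g_cost = p_best[g][:], p_cost[g]
    for _ in range(iterations):
        for i in range(n_particles):
            for j, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                # Velocity update: pull towards personal and global bests.
                vel = (v[i][j] + c1 * r1 * (p_best[i][j] - x[i][j])
                       + c2 * r2 * (g_best[j] - x[i][j]))
                v[i][j] = max(-v_max[j], min(v_max[j], vel))
                # Position update, kept inside the search bounds.
                x[i][j] = max(lo, min(hi, x[i][j] + v[i][j]))
            cost = f(x[i])
            if cost < p_cost[i]:
                p_best[i], p_cost[i] = x[i][:], cost
                if cost < g_cost:
                    g_best, g_cost = x[i][:], cost
    return g_best, g_cost
```

Without an inertia weight the swarm relies on the velocity clamp to stay stable, which is why the clamp is made explicit in the sketch.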

2.3.4. Flower Pollination Algorithm

The FPA was inspired by the flower pollination process [45]. The general rules for this algorithm are stated in the following four steps:

- (a) Biotic pollination is treated as global pollination, with pollinators obeying Levy flights.

- (b) Abiotic pollination, on the other hand, is treated as local pollination.

- (c) Flower constancy is treated as the probability of reproduction.

- (d) A switch probability controls the choice between local and global pollination.

As a part of the global pollination process, insects and other pollinators transport flower pollen across vast distances. Therefore, the strongest individuals are the ones to be pollinated and produce offspring, denoted as $g_*$. The global pollination plus flower constancy can be modeled as shown in Equation (6):

$$x_i^{t+1} = x_i^t + L\,\left(g_* - x_i^t\right) \qquad (6)$$

where $x_i^t$ denotes the pollen or solution vector $x_i$ at iteration $t$, $g_*$ refers to the current best solution, and $L$ is a step size representing the pollination strength. A Levy flight is a useful tool for simulating insect flight since it can cover large distances in discrete increments, like those that insects can take. The traits of pollinators are based on the Levy flight probability distribution. The Levy distribution can be approximately modeled as shown in Equation (7):

$$L \sim \frac{\lambda\,\Gamma(\lambda)\,\sin(\pi\lambda/2)}{\pi}\,\frac{1}{s^{1+\lambda}}, \qquad s \gg s_0 > 0 \qquad (7)$$

where $\Gamma(\lambda)$ is the standard gamma function and $s$ is the step length.

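The local pollination plus flower constancy can be modeled as shown in Equation (8):

$$x_i^{t+1} = x_i^t + \epsilon\,\left(x_j^t - x_k^t\right) \qquad (8)$$

where $x_j^t$ and $x_k^t$ are pollen from two different flowers of the same species, and $\epsilon$ is drawn from a uniform distribution over [0, 1]. The pseudocode for FPA is described in Algorithm 5.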

| Algorithm 5: Flower pollination algorithm |
|---|
| Objective function |
| Initialize population |
| Find the best solution in the initial population |
| Define a switch probability |
| FOR each iteration |
| FOR each flower in the population |
| IF a random number is smaller than the switch probability |
| Draw d-dimensional step vector L |
| Global phase: Equation (6) |
| ELSE |
| Draw $\epsilon$ in Equation (8) from a uniform distribution [0, 1] |
| Local phase: Equation (8) |
| END |
| Assess new solutions |
| If the new solutions are better than the old solutions, update the population |
| END |
| Find the current best solution |
| END |
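The switch between global and local pollination can be sketched as below for a continuous minimization problem. Several details are assumptions made for this illustration and are not taken from this paper: the function names `levy_step` and `flower_pollination`, the use of Mantegna's algorithm to draw Levy-distributed steps, the exponent `lam=1.5`, the switch probability `p=0.8`, the direction of the global step (towards the current best) and the clipping to the bounds.

```python
import math
import random


def levy_step(rng, lam=1.5):
    """One heavy-tailed step drawn with Mantegna's algorithm (an assumption)."""
    sigma = (math.gamma(1 + lam) * math.sin(math.pi * lam / 2)
             / (math.gamma((1 + lam) / 2) * lam * 2 ** ((lam - 1) / 2))) ** (1 / lam)
    u = rng.gauss(0.0, sigma)
    v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / lam)


def flower_pollination(f, bounds, n_flowers=20, iterations=200, p=0.8, seed=0):
    """Minimize f by alternating global (Levy) and local pollination."""
    rng = random.Random(seed)
    clip = lambda x: [min(max(xi, lo), hi) for xi, (lo, hi) in zip(x, bounds)]
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_flowers)]
    cost = [f(x) for x in pop]
    best = min(range(n_flowers), key=cost.__getitem__)
    g_best, g_cost = pop[best][:], cost[best]
    for _ in range(iterations):
        for i in range(n_flowers):
            if rng.random() < p:
                # Global pollination: Levy-sized step relative to the best flower.
                cand = [x + levy_step(rng) * (g - x) for x, g in zip(pop[i], g_best)]
            else:
                # Local pollination: scaled difference of two random flowers.
                eps = rng.random()
                j, k = rng.sample(range(n_flowers), 2)
                cand = [x + eps * (a - b) for x, a, b in zip(pop[i], pop[j], pop[k])]
            cand = clip(cand)
            cand_cost = f(cand)
            if cand_cost < cost[i]:  # keep only improvements
                pop[i], cost[i] = cand, cand_cost
                if cand_cost < g_cost:
                    g_best, g_cost = cand[:], cand_cost
    return g_best, g_cost
```

The occasional very long Levy steps provide exploration, while the local phase refines solutions using differences between existing flowers.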

2.3.5. Symbiotic Organisms Search

The SOS is used to simulate the interaction of living things in an ecosystem. Organisms do not live in isolation but depend on each other for survival and sustenance [46]. This reliance on each other for survival is called symbiosis. There are three major dependencies in nature, i.e., mutualism, commensalism and parasitism [47]. The SOS algorithm starts with a population in which a group of individuals is generated by random chance in the search area. Each organism is associated with a fitness value reflecting a degree of adaptation to the optimization objective [48]. In mutualism, two different species depend on each other such that both are benefited, for example, an oxpecker and a cow; the oxpecker benefits from feeding on ticks on the cow, while the cow benefits from the removal of the parasites [47]. In SOS, $x_i$ is the $i$th member in the ecosystem, while $x_j$ is a randomly selected member in the ecosystem that interacts with $x_i$. The mutual interaction aims to increase the survival ability of both the organisms. The equations to simulate the interactions are stated as below:

$$x_i^{new} = x_i + rand(0, 1) \times \left(x_{best} - MV \times BF_1\right)$$

$$x_j^{new} = x_j + rand(0, 1) \times \left(x_{best} - MV \times BF_2\right)$$

where $MV = (x_i + x_j)/2$, $rand(0, 1)$ is a vector of random numbers, $x_{best}$ is the fittest organism in the ecosystem, MV is a mutual vector, and $BF_1$ and $BF_2$ are the benefit factors. These factors state the benefit achieved by each organism, i.e., whether an organism partially or fully benefits from the interaction.

In commensalism, one organism benefits while the other is neutral or unaffected. In SOS, $x_j$ is randomly selected to interact with $x_i$. In this case, $x_i$ tries to benefit from $x_j$, but $x_j$ does not benefit from $x_i$. The interaction is modeled as shown in the equation below; the organism $x_i$ is only updated if its new fitness is better than the pre-interaction fitness.

$$x_i^{new} = x_i + rand(-1, 1) \times \left(x_{best} - x_j\right)$$

The term $(x_{best} - x_j)$, where $x_{best}$ is the fittest organism in the ecosystem, reflects the beneficial advantage, where $x_j$ helps $x_i$ to increase its survival ability to a higher degree.

In parasitism, one of the individuals benefits while the other is harmed. Such a parasitic relationship is noticed in worms inside the gastrointestinal tract of human beings or any other animal; the host is severely affected while the parasite benefits. In the parasitism phase, there is a fight for existence between the parasite and the host. If the host can develop immunity against the parasite, the parasite will not be able to live in the ecosystem; if the parasite has a better fitness value, it will kill the host [47]. Assuming that a parasite vector and a host vector are both randomly selected, each tries to outdo the other so that the more resilient vector survives in the ecosystem. The procedure for the SOS algorithm is highlighted in Algorithm 6.

| Algorithm 6: Symbiotic organisms search |
|---|
| Objective function |
| Initialize population |
| FOR each iteration |
| Mutual interaction phase |
| Commensalism interaction phase |
| Parasitic interaction phase |
| Update the current best solution |
| END |
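The mutualism and commensalism updates can be sketched as two small functions that return candidate positions; whether a candidate replaces the current organism is then decided by comparing fitness values. The function names `mutualism` and `commensalism` are hypothetical, and drawing each benefit factor at random from {1, 2} follows a common SOS convention that is assumed here rather than stated in this paper.

```python
import random


def mutualism(x_i, x_j, x_best, rng=random):
    """Candidate positions of both organisms after a mutualism interaction."""
    # Mutual vector: the midpoint of the two interacting organisms.
    mutual = [(a + b) / 2 for a, b in zip(x_i, x_j)]
    # Assumption: each benefit factor is 1 (partial) or 2 (full benefit).
    bf1, bf2 = rng.choice((1, 2)), rng.choice((1, 2))
    new_i = [a + rng.random() * (g - m * bf1) for a, g, m in zip(x_i, x_best, mutual)]
    new_j = [b + rng.random() * (g - m * bf2) for b, g, m in zip(x_j, x_best, mutual)]
    return new_i, new_j


def commensalism(x_i, x_j, x_best, rng=random):
    """Candidate position of x_i, which benefits from x_j without affecting it."""
    return [a + rng.uniform(-1, 1) * (g - b) for a, b, g in zip(x_i, x_j, x_best)]
```

In a full loop, each candidate would be evaluated with the fitness function and kept only if it improves on the organism it was derived from.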

2.3.6. Marine Predators’ Algorithm

The MPA is a population-based metaheuristic formulated by drawing inspiration from marine predators. For the first trial, the initial solution is randomly spread over a search area, as depicted by the following equation [49]:

$$X_0 = X_{min} + rand\,\left(X_{max} - X_{min}\right)$$

where $X_{min}$ and $X_{max}$, respectively, represent the minimum and maximum boundary for the variables, and rand is a random value vector within the range of 0 and 1.

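Based on the theory of survival of the fittest, the fittest solution is selected as the top predator and used to construct a matrix known as the elite [49]. The rows of the elite matrix guide the search for prey based on the information on the position of the prey.

$$Elite = \begin{bmatrix} X^{I}_{1,1} & X^{I}_{1,2} & \cdots & X^{I}_{1,d} \\ X^{I}_{2,1} & X^{I}_{2,2} & \cdots & X^{I}_{2,d} \\ \vdots & \vdots & \ddots & \vdots \\ X^{I}_{n,1} & X^{I}_{n,2} & \cdots & X^{I}_{n,d} \end{bmatrix}_{n \times d}$$

where $X^{I}$ denotes the top predator vector, which is duplicated $n$ times to make the elite matrix, $n$ stands for the number of search agents, and $d$ represents the number of dimensions. After each iteration, the elite is updated if a better predator replaces the top predator. The predators move into new positions based on the prey matrix [30,50], which is given below:

$$Prey = \begin{bmatrix} X_{1,1} & X_{1,2} & \cdots & X_{1,d} \\ X_{2,1} & X_{2,2} & \cdots & X_{2,d} \\ \vdots & \vdots & \ddots & \vdots \\ X_{n,1} & X_{n,2} & \cdots & X_{n,d} \end{bmatrix}_{n \times d}$$

where $X_{i,j}$ represents the $j$th dimension of the $i$th prey.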

The entire optimization process is directly associated with the elite and prey matrices. It is divided into three key phases based on the velocity ratio between predator and prey, and it emulates the entire life of the predator and prey [51].

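Phase 1: The prey moves faster than the predator (i.e., high-velocity ratio) [51]. This phase covers the first third of the iterations, and the mathematical model is:

$$stepsize_i = R_B \otimes (Elite_i - R_B \otimes Prey_i), \quad i = 1, \dots, n$$

$$Prey_i = Prey_i + P \cdot R \otimes stepsize_i$$

where $R_B$ is a vector consisting of random numbers (normally distributed, representing Brownian motion), $\otimes$ denotes entry-wise multiplication, $R$ is a random number vector uniformly distributed in the range [0, 1], and $P$ is a constant with a value of 0.5.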

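Phase 2: Both predator and prey move at the same speed (i.e., unit velocity ratio). This can be modeled as shown below [51]:

If $\frac{1}{3} Max\_Iter < Iter < \frac{2}{3} Max\_Iter$

For the first half of the population:

$$stepsize_i = R_L \otimes (Elite_i - R_L \otimes Prey_i), \quad i = 1, \dots, n/2$$

$$Prey_i = Prey_i + P \cdot R \otimes stepsize_i$$

For the second half of the population:

$$stepsize_i = R_B \otimes (R_B \otimes Elite_i - Prey_i), \quad i = n/2, \dots, n$$

$$Prey_i = Elite_i + P \cdot CF \otimes stepsize_i$$

where $Iter$ and $Max\_Iter$ are the current and maximum iteration counts, $R_L$ denotes a vector of random numbers representing the Levy movement, $R_B$ is the vector of Brownian random numbers, $R$ is a uniform random vector in [0, 1], $P = 0.5$, and $CF = \left(1 - \frac{Iter}{Max\_Iter}\right)^{2\,Iter/Max\_Iter}$ is an adaptive parameter to control the predator's step size.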

Phase 3: Predators move faster than prey (i.e., in low-velocity ratio). It is the last stage of the process of optimization, and it is associated with high exploitation ability [40].

The procedure for MPA is highlighted in Algorithm 7.

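| Algorithm 7: Marine predators algorithm |
| --- |
| Objective function |
| Initialize population |
| Compute the fitness values, elite matrix and memory saving |
| FOR t = 1: max generation |
| IF t is within the first third of the generations (Phase 1) |
| Update the prey using the Phase 1 model |
| ELSE IF t is within the second third of the generations (Phase 2) |
| The first half of the population is updated by the Levy-based model |
| The second half of the population is updated by the Brownian-based model |
| ELSE IF t is within the last third of the generations (Phase 3) |
| Update the prey using the Phase 3 model |
| END IF |
| Accomplish elite update and memory saving |
| Update the current best solution |
| END |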
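The phase switching in Algorithm 7 can be illustrated with a short Python sketch. It follows the three-phase update as given in the original MPA formulation [49], but it is a simplification and not the code used in this study: the fish aggregating device (FAD) perturbation of the original algorithm is omitted, the Levy steps are drawn with Mantegna's method (a common choice, assumed here), and the `sphere` objective, bounds and population size are hypothetical.

```python
import math
import numpy as np

def sphere(x):
    """Toy objective (an assumption for this sketch)."""
    return float(np.sum(x ** 2))

def levy(shape, rng, beta=1.5):
    """Levy-distributed steps via Mantegna's method (assumed generator)."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    u = rng.normal(0.0, sigma, shape)
    v = rng.normal(0.0, 1.0, shape)
    return u / np.abs(v) ** (1 / beta)

def mpa_step(prey, elite, it, max_it, rng, P=0.5):
    """Return candidate prey positions for iteration `it` (0-based)."""
    n, d = prey.shape
    R = rng.random((n, d))           # uniform random numbers in [0, 1)
    RB = rng.normal(size=(n, d))     # Brownian motion
    RL = levy((n, d), rng)           # Levy motion
    CF = (1 - it / max_it) ** (2 * it / max_it)
    new = prey.copy()
    if it < max_it / 3:              # Phase 1: prey faster than predator
        new = prey + P * R * (RB * (elite - RB * prey))
    elif it < 2 * max_it / 3:        # Phase 2: unit velocity ratio
        h = n // 2
        new[:h] = prey[:h] + P * R[:h] * (RL[:h] * (elite[:h] - RL[:h] * prey[:h]))
        new[h:] = elite[h:] + P * CF * (RB[h:] * (RB[h:] * elite[h:] - prey[h:]))
    else:                            # Phase 3: predator faster than prey
        new = elite + P * CF * (RL * (RL * elite - prey))
    return new

rng = np.random.default_rng(1)
lower, upper, max_it = -5.0, 5.0, 30
prey = rng.uniform(lower, upper, size=(8, 3))
fit = np.array([sphere(x) for x in prey])
initial_best = fit.min()
for it in range(max_it):
    # Elite matrix: the top predator duplicated n times.
    elite = np.tile(prey[np.argmin(fit)], (len(prey), 1))
    cand = np.clip(mpa_step(prey, elite, it, max_it, rng), lower, upper)
    cand_fit = np.array([sphere(x) for x in cand])
    improved = cand_fit < fit        # memory saving: keep the better position
    prey[improved], fit[improved] = cand[improved], cand_fit[improved]
final_best = fit.min()
```

The memory-saving step keeps the previous position whenever a candidate is worse, so the best fitness never deteriorates between iterations.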

2.3.7. Manta Ray Foraging Optimization

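MRFO (Algorithm 8) emulates three foraging behaviors of manta rays to update the solution position: chain, cyclone and somersault foraging [52]. The mathematical model for each foraging behavior is outlined below:

Chain foraging: The foraging chain is formed when manta rays line up head-to-tail. In every iteration, each individual is updated using the best solution and the individual in front of it [53]. This is represented by the following mathematical model:

$$x_i^d(t+1) = \begin{cases} x_i^d(t) + r\,(x_{best}^d(t) - x_i^d(t)) + \alpha\,(x_{best}^d(t) - x_i^d(t)), & i = 1 \\ x_i^d(t) + r\,(x_{i-1}^d(t) - x_i^d(t)) + \alpha\,(x_{best}^d(t) - x_i^d(t)), & i = 2, \dots, N \end{cases}$$

$$\alpha = 2r\sqrt{|\log(r)|}$$

where $N$ represents the size of the population, $r$ is a random vector in [0, 1], $x_i^d(t)$ is the $i$th individual's position in the $d$th dimension at the $t$th iteration, $\alpha$ is the weight coefficient, and $x_{best}^d(t)$ is the plankton with the highest concentration (the best solution obtained so far).

Cyclone foraging: Once manta rays spot food, they form a long foraging chain and swim towards the food in a spiral, in which each manta ray follows the one in front of it [53]. The cyclone foraging behavior is represented by the following mathematical equation:

$$x_i^d(t+1) = \begin{cases} x_{best}^d + r\,(x_{best}^d(t) - x_i^d(t)) + \beta\,(x_{best}^d(t) - x_i^d(t)), & i = 1 \\ x_{best}^d + r\,(x_{i-1}^d(t) - x_i^d(t)) + \beta\,(x_{best}^d(t) - x_i^d(t)), & i = 2, \dots, N \end{cases}$$

$$\beta = 2 e^{r_1 \frac{T - t + 1}{T}} \sin(2\pi r_1)$$

where $\beta$ and $T$ represent the weight factor and the maximum number of iterations, respectively, and $r_1$ is a random value in [0, 1].

The exploration ability of the algorithm can be improved by using a random position, instead of the best solution, as the reference position:

$$x_{rand}^d = Lb^d + r\,(Ub^d - Lb^d)$$

$$x_i^d(t+1) = \begin{cases} x_{rand}^d + r\,(x_{rand}^d - x_i^d(t)) + \beta\,(x_{rand}^d - x_i^d(t)), & i = 1 \\ x_{rand}^d + r\,(x_{i-1}^d(t) - x_i^d(t)) + \beta\,(x_{rand}^d - x_i^d(t)), & i = 2, \dots, N \end{cases}$$

where $x_{rand}^d$ is a random position in the search space, and $Ub^d$ and $Lb^d$ are the upper and lower limits of the $d$th dimension, respectively.

Somersault foraging: The position of the food is regarded as a pivot; every individual swims towards and around the pivot and then somersaults to a new position. The corresponding mathematical equation is given below [54]:

$$x_i^d(t+1) = x_i^d(t) + S\,(r_2\,x_{best}^d - r_3\,x_i^d(t)), \quad i = 1, \dots, N$$

where the somersault factor is represented by $S$, and $r_2$ and $r_3$ are random numbers in [0, 1].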

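| Algorithm 8: Manta ray foraging |
| --- |
| Objective function |
| Initialize population and maximum iterations |
| Compute the fitness of each individual and obtain the best solution |
| FOR each iteration |
| IF $rand < 0.5$ THEN use cyclone foraging |
| IF $t/T < rand$ THEN |
| Update the position around a random reference position (exploration) |
| ELSE |
| Update the position around the best solution |
| END IF |
| ELSE use chain foraging |
| END IF |
| Calculate the fitness of the individuals using $f(x_i(t+1))$ |
| IF $f(x_i(t+1)) < f(x_{best})$ THEN $x_{best} = x_i(t+1)$ |
| For somersault foraging, update the position of each individual |
| Calculate the fitness of the individuals using $f(x_i(t+1))$ |
| IF $f(x_i(t+1)) < f(x_{best})$ THEN $x_{best} = x_i(t+1)$ |
| END |
| Return the current best solution |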
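The control flow of Algorithm 8 can be sketched as follows. This is a minimal illustration for continuous minimization based on the standard MRFO update rules [52], not the code used in this study; the `sphere` objective, the bounds, the somersault factor `S = 2.0` and all helper names are assumptions made for the example.

```python
import numpy as np

def sphere(x):
    """Toy objective (an assumption for this sketch)."""
    return float(np.sum(x ** 2))

def forage(pop, best, t, T, lower, upper, rng):
    """Chain or cyclone foraging for every individual at iteration t (1-based)."""
    n, d = pop.shape
    new = np.empty_like(pop)
    for i in range(n):
        r = np.maximum(rng.random(d), 1e-12)  # guard against log(0)
        if rng.random() < 0.5:                # cyclone foraging
            r1 = rng.random()
            beta = 2 * np.exp(r1 * (T - t + 1) / T) * np.sin(2 * np.pi * r1)
            if t / T < rng.random():          # exploration: random reference
                ref = lower + rng.random(d) * (upper - lower)
            else:                             # exploitation: best as reference
                ref = best
            front = ref if i == 0 else pop[i - 1]
            new[i] = ref + r * (front - pop[i]) + beta * (ref - pop[i])
        else:                                 # chain foraging
            alpha = 2 * r * np.sqrt(np.abs(np.log(r)))
            front = best if i == 0 else pop[i - 1]
            new[i] = pop[i] + r * (front - pop[i]) + alpha * (best - pop[i])
    return np.clip(new, lower, upper)

def somersault(pop, best, lower, upper, rng, S=2.0):
    """Somersault around the best solution (one r2, r3 pair per individual)."""
    r2 = rng.random((len(pop), 1))
    r3 = rng.random((len(pop), 1))
    return np.clip(pop + S * (r2 * best - r3 * pop), lower, upper)

def track_best(pop, best, best_fit, objective):
    """Replace the stored best solution only if the population improves on it."""
    fits = np.array([objective(x) for x in pop])
    k = int(np.argmin(fits))
    if fits[k] < best_fit:
        return pop[k].copy(), float(fits[k])
    return best, best_fit

rng = np.random.default_rng(2)
lower, upper, T = -5.0, 5.0, 30
pop = rng.uniform(lower, upper, size=(10, 4))
best, best_fit = track_best(pop, pop[0].copy(), np.inf, sphere)
start_best = best_fit
for t in range(1, T + 1):
    pop = forage(pop, best, t, T, lower, upper, rng)
    best, best_fit = track_best(pop, best, best_fit, sphere)
    pop = somersault(pop, best, lower, upper, rng)
    best, best_fit = track_best(pop, best, best_fit, sphere)
```

Early iterations satisfy `t / T < rand` more often, so the random reference position is favored at the start of the search and the best solution is favored towards the end.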

2.3.8. Weighted Superposition Attraction Algorithm

The WSA algorithm was introduced to solve unconstrained global optimization problems [55]. WSA is based on the principle of superposition, combined with the attracted movement of agents that is observed in several other metaheuristics, such as PSO. It models and simulates changing superpositions, caused by the dynamic instability of the system, together with the attracted movements of agents [56]. The steps involved in this algorithm are stated in Algorithm 9.

During the optimization process, the position of the $i$th search agent in the $j$th dimension at the $t$th iteration is updated toward the best position using the step length at the $t$th iteration and the search direction of the $i$th search agent in the $j$th dimension at the $t$th iteration.

The step length is updated by a function of a random number in the range [0, 1], the iteration number and two user-defined parameters. It is adjusted as the search progresses via a proportional rule.

| Algorithm 9: Weighted superposition attraction algorithm |
| --- |
| Objective function |
| Initialize population and maximum iterations |
| Compute the fitness of each individual and obtain the best solutions |
| FOR each iteration |
| Rank the solutions by fitness |
| Determine the target point towards which the solutions move in this iteration |
| Evaluate the fitness value of the target |
| Determine the search direction for the solutions |
| Move each solution toward its determined direction |
| Update the fitness of the solutions |
| END |
| Return the current best solution |
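The steps listed in Algorithm 9 (rank, build a target, choose a direction, move) can be illustrated with the simplified Python sketch below. It should be read as a loose illustration rather than the WSA implementation used in this study: the rank-based weights `rank ** (-tau)`, the normalized weighted average used as the target, the probabilistic acceptance rule, the move proportional to `|x|`, the form of the step length update and every parameter value (`tau`, `phi`, `lam`, initial `sl`) are assumptions made for the example.

```python
import numpy as np

def sphere(x):
    """Toy objective (an assumption for this sketch)."""
    return float(np.sum(x ** 2))

def wsa_iteration(pop, fit, objective, sl, lower, upper, rng, tau=0.8):
    """One simplified WSA-style iteration; updates pop and fit in place."""
    n, d = pop.shape
    # Rank the solutions (rank 1 = best) and derive rank-based weights.
    order = np.argsort(fit)
    ranks = np.empty(n)
    ranks[order] = np.arange(1, n + 1)
    weights = ranks ** (-tau)
    # Target point: weighted superposition of all solutions (assumed form).
    target = (weights[:, None] * pop).sum(axis=0) / weights.sum()
    target_fit = objective(target)
    for i in range(n):
        # Move toward a better target; otherwise do so only with some probability.
        toward = target_fit <= fit[i] or rng.random() < np.exp(fit[i] - target_fit)
        if toward:
            direction = np.sign(target - pop[i])
        else:
            direction = rng.choice([-1.0, 1.0], size=d)
        pop[i] = np.clip(pop[i] + sl * direction * np.abs(pop[i]), lower, upper)
        fit[i] = objective(pop[i])
    return target_fit

def next_step_length(sl, t, rng, phi=0.001, lam=0.75):
    """Proportional step length update (assumed form and parameter values)."""
    delta = np.exp(-t / (t + 1)) * phi * sl
    return sl - delta if rng.random() <= lam else sl + delta

rng = np.random.default_rng(3)
lower, upper = -5.0, 5.0
pop = rng.uniform(lower, upper, size=(10, 4))
fit = np.array([sphere(x) for x in pop])
start_best = float(fit.min())
best_fit = start_best
sl = 0.05
for t in range(1, 51):
    target_fit = wsa_iteration(pop, fit, sphere, sl, lower, upper, rng)
    best_fit = min(best_fit, target_fit, float(fit.min()))  # best-so-far record
    sl = next_step_length(sl, t, rng)
```

Unlike the greedy acceptance used in the SOS and MPA sketches, the agents here always move, so the best-so-far value is tracked separately from the population.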

3. Results and Discussion

The problem addressed in this paper is the need for efficient feature selection methods in modern data processing systems. As the volume of data generated by information systems increases, ML techniques are often employed to extract meaningful information. However, the large size of these datasets may lead to slow performance, making it necessary to remove redundant features from the data during pre-processing. Feature selection is therefore a crucial step, but it is a challenging task due to the complexity and size of the datasets. To address this problem, this paper proposes the use of the WSA metaheuristic for feature selection and compares its performance with that of seven other metaheuristics.

3.1. Dataset Description

In this paper, nine different datasets of varying complexity and domain are considered, as shown in Table 1. The number of features ranges from 8 to 4000, whereas the number of data points or instances ranges from 62 to 1000. Thus, both low- and high-dimensional feature sets as well as small- and large-scale datasets are considered here. The optimization objective of this paper is described in Equation (1), i.e., an optimal combination of a lower number of features and higher classification accuracy (or lower classification error) is desired.
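A wrapper objective of this kind can be sketched as a weighted sum of the KNN classification error and the fraction of selected features. The sketch below is only an illustration: Equation (1) is not reproduced here, so the weighted-sum form, the weight `alpha = 0.99`, the value `k = 5`, the hold-out split and the synthetic data are all assumptions, and the KNN classifier is a minimal NumPy version for binary labels.

```python
import numpy as np

def knn_error(X_train, y_train, X_test, y_test, k=5):
    """Error rate of a plain k-nearest-neighbor classifier (binary labels 0/1)."""
    d2 = ((X_test[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=2)
    nearest = np.argsort(d2, axis=1)[:, :k]
    pred = (y_train[nearest].mean(axis=1) >= 0.5).astype(int)  # majority vote
    return float(np.mean(pred != y_test))

def wrapper_fitness(mask, X_train, y_train, X_test, y_test, alpha=0.99, k=5):
    """Lower is better: alpha * error + (1 - alpha) * selected / total."""
    mask = np.asarray(mask, dtype=bool)
    if not mask.any():
        return 1.0  # no feature selected: treated as the worst case
    err = knn_error(X_train[:, mask], y_train, X_test[:, mask], y_test, k)
    return alpha * err + (1 - alpha) * mask.sum() / mask.size

# Synthetic data (illustrative only): feature 0 separates the classes,
# the remaining five features are pure noise.
rng = np.random.default_rng(0)
y = np.arange(60) % 2
X = rng.normal(size=(60, 6))
X[:, 0] = 4.0 * y - 2.0 + rng.normal(scale=0.1, size=60)
X_train, y_train, X_test, y_test = X[:40], y[:40], X[40:], y[40:]

f_one = wrapper_fitness([1, 0, 0, 0, 0, 0], X_train, y_train, X_test, y_test)
f_all = wrapper_fitness([1, 1, 1, 1, 1, 1], X_train, y_train, X_test, y_test)
```

With this form, a subset that keeps only the informative feature scores better than the full feature set, because the full set always pays the complete feature-count penalty `1 - alpha`.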

Table 1.

Description of the binary datasets.

3.2. Metaheuristic Parameters

It is well known that the tuning parameters of metaheuristics largely influence the outcome of these algorithms. Thus, it is necessary to select these parameters carefully. The tuning parameters for all the algorithms considered in this paper are provided in Table 2. Each algorithm was independently run 20 times to account for its stochastic nature. In each run, the number of search agents was limited to 30 and the iteration limit was set to 200, giving 30 × 200 = 6000 function evaluations per run. Fixing the number of function evaluations allows a meaningful comparison between the algorithms under consideration.

Table 2.

Description of tuning parameters used in each algorithm.

3.3. Performance of the Metaheuristic on the Real-World Datasets

The best fitness value obtained among the 20 independent runs of each algorithm on each of the nine datasets is reported in Table 3. The average rank of each algorithm, based on its relative performance across all nine datasets, was calculated and is also provided in Table 3. Bold values indicate the best solutions achieved. It can be observed from this table that WSA achieved the best solution in six out of nine datasets. Based on the best fitness value, the algorithms can be ranked as WSA > MPA > GA (T) > MRFO > GA (R) > FPA > PSO > DE > SOS.

Table 3.

Best fitness function value achieved by each algorithm for each dataset.

The mean best fitness and standard deviation values of 20 independent runs for each algorithm are reported in Table 4. A mean best fitness close to the best fitness reported in Table 3 indicates that an algorithm is consistently able to achieve 'good' results in repeated trials. Moreover, a low standard deviation means low variability across the repeated trials. Thus, for a 'high'-performing algorithm, it is desired that the mean best fitness be close to its best fitness and be accompanied by a low standard deviation. Such an algorithm can be deemed more robust in terms of reproducibility. In terms of the mean best fitness and standard deviation, the algorithms can be ranked as WSA > MPA > MRFO > FPA > GA (R) > GA (T) > DE > PSO > SOS.
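The summary statistics and the average rank used in these comparisons can be computed as in the sketch below. The numbers are made up purely to demonstrate the calculation and are not results from this study; the use of the sample standard deviation (`ddof=1`) and the ordinal handling of ties are assumptions.

```python
import numpy as np

def summarize_runs(best_per_run):
    """Best, mean and sample standard deviation of the final fitness over runs."""
    runs = np.asarray(best_per_run, dtype=float)
    return {"best": float(runs.min()),
            "mean": float(runs.mean()),
            "std": float(runs.std(ddof=1))}

def average_ranks(scores):
    """scores[dataset, algorithm] -> mean rank per algorithm (1 = lowest score).

    Ties are broken by column order (an assumption of this sketch).
    """
    scores = np.asarray(scores, dtype=float)
    ranks = np.argsort(np.argsort(scores, axis=1), axis=1) + 1
    return ranks.mean(axis=0)

# Illustrative values only: three runs of one algorithm on one dataset.
summary = summarize_runs([0.2, 0.1, 0.3])

# Illustrative values only: two datasets (rows) and three algorithms (columns).
avg_rank = average_ranks([[0.10, 0.20, 0.30],
                          [0.05, 0.15, 0.10]])
```

An algorithm whose `mean` lies close to its `best` and whose `std` is small reproduces its best result reliably, which is the notion of robustness described above.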

Table 4.

Mean (and standard deviation) of best fitness value achieved by each algorithm for each dataset on repeated trials.

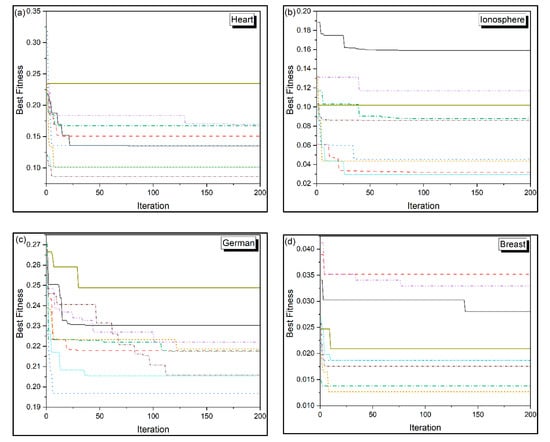

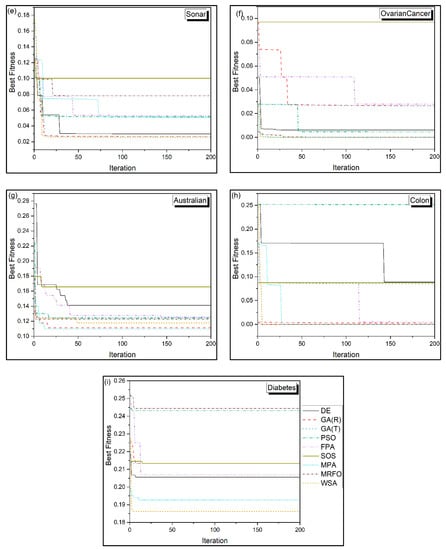

The convergence curves of all the algorithms on all the datasets under consideration are shown in Figure 2. WSA and MPA converge quite rapidly, whereas FPA and PSO are slightly sluggish. The convergence trends of FPA and PSO are very similar to each other, and a notable similarity is likewise observed between the trends of WSA and MPA.

Figure 2.

Convergence of each algorithm for different datasets (a) Heart (b) Ionosphere (c) German (d) Breast (e) Sonar (f) Ovarian cancer (g) Australian (h) Colon (i) Diabetes.

Table 5 presents the best classification accuracy for each dataset as obtained by each of the algorithms. An ideal classification accuracy of 1 would indicate that the algorithm correctly classifies 100% of the instances. With respect to the best classification accuracy, the considered algorithms can be ranked as WSA > GA (T) > GA (R) > DE > MPA ≈ MRFO > PSO > FPA > SOS. For datasets with large numbers of features, such as the Ovarian Cancer dataset (with 4000 features), it is interesting to note that all the algorithms are able to achieve a 100% classification rate. The mean best classification accuracy and standard deviation of 20 independent runs are provided in Table 6. Based on this table, the algorithms can be ranked as WSA > MPA > MRFO > GA (T) > GA (R) > FPA > DE > PSO > SOS.

Table 5.

Best classification accuracy achieved by each algorithm for each dataset.

Table 6.

Mean (and standard deviation) of best classification accuracy achieved by each algorithm for each dataset in repeated trials.

Computational time is one of the key factors to be considered when identifying appropriate algorithms for a given task, because in real-life conditions the number of instances may run into the millions, implying a huge computational burden. The best computational time for all the algorithms is reported in Table 7, which ranks the considered algorithms as FPA > DE > WSA > PSO > MRFO > MPA > GA (T) > GA (R) > SOS. Similarly, the mean and standard deviation of the computational time over 20 independent trials are provided in Table 8, which ranks these algorithms as FPA > DE > PSO > WSA > MRFO > MPA > GA (T) > GA (R) > SOS. The fastest algorithm (FPA) is, on average, 3.2 times faster than the slowest algorithm (SOS), which underlines the need for computationally efficient algorithms. So far, with respect to optimal solutions and classification accuracy, WSA outranks the other considered algorithms. In terms of average computational time, however, it is ranked fourth: it is about 2.7 times faster than SOS but takes approximately 19% more time than FPA.
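The two speed-up figures quoted above are mutually consistent, as the short check below shows. It uses only the rounded ratios stated in the text (3.2 and 2.7), so the result is approximate; the variable names are introduced here for illustration.

```python
# Rounded ratios quoted in the text (time of SOS divided by time of the algorithm).
sos_over_fpa = 3.2   # FPA is about 3.2 times faster than SOS
sos_over_wsa = 2.7   # WSA is about 2.7 times faster than SOS

# Time of WSA relative to FPA follows from the two ratios.
wsa_over_fpa = sos_over_fpa / sos_over_wsa
extra_percent = (wsa_over_fpa - 1) * 100

print(f"WSA takes about {extra_percent:.1f}% more time than FPA")
```

The ratio 3.2 / 2.7 is roughly 1.19, which matches the statement that WSA needs approximately 19% more time than FPA.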

Table 7.

Best computational time achieved by each algorithm for each dataset.

Table 8.

Mean (and standard deviation) of computational time achieved by each algorithm for each dataset in repeated trials.

The primary task of the feature selection process is to eliminate redundant features from further analyses. The lowest numbers of features selected by the algorithms for each dataset are depicted in Table 9. For large-feature datasets, such as Ovarian Cancer, the classical algorithms, such as DE and GA, reduce the features by approximately half and two-thirds, respectively. However, algorithms such as SOS, MPA and WSA are able to achieve 99% or more reduction in features. When this is taken into account in conjunction with the fact that these algorithms clock a 100% classification rate on this dataset, this outcome appears even more remarkable. For all the datasets combined, the algorithms are ranked in terms of the best feature reduction as MPA > WSA > SOS > GA (T) > GA (R) > PSO > FPA > MRFO > DE. In terms of repeated trials of 20 independent evaluations (Table 10), the algorithms are ranked as SOS > MPA > GA (T) > GA (R) > WSA > PSO > FPA > DE > MRFO.

Table 9.

Least features selected by each algorithm for each dataset.

Table 10.

Mean (and standard deviation) of features selected by each algorithm for each dataset on repeated trials.

3.4. Comparison with the State of the Art

In this section, WSA is compared with existing solutions from the literature. A neural network trained with PSO (PSO-NN) [59], random forest (RF) [60], the Vote method [61], support vector machine (SVM) [62], decision tree (DT) [63] and a genetic algorithm with fuzzy logic (AGAFL) [64] are compared with the current WSA-KNN. From Table 11, it is observed that, despite the additional sophistication of neural networks over KNN, the WSA-KNN solution is on par with PSO-NN. Moreover, the current method is about 2% better than AGAFL. Compared to traditional ML algorithms such as DT and SVM, WSA-KNN improves the accuracy by as much as 18%. An improvement of about 9% in accuracy is seen even over ensemble ML algorithms such as RF.

Table 11.

Comparison of accuracy of various algorithms on Heart dataset.

4. Conclusions

In this paper, the WSA algorithm was applied to solve feature selection problems. Its performance was compared with several other algorithms, such as DE, GA, PSO, FPA, SOS, MPA and MRFO. Nine different datasets of varying complexity and domain were considered to analyze the performance of the algorithms. The algorithms were compared on varied criteria, such as fitness value, convergence, classification accuracy, number of features selected and computational time. Based on the exhaustive analysis, it can be concluded that:

- WSA > MPA > GA (T) > MRFO > GA (R) > FPA > PSO > DE > SOS is the order of the algorithms under consideration with respect to the best fitness value. In contrast, when these algorithms are compared based on mean best fitness and standard deviation, the order of their performance is WSA > MPA > MRFO > FPA > GA (R) > GA (T) > DE > PSO > SOS.

- The convergence of WSA and MPA is found to be superior to other algorithms.

- WSA > GA (T) > GA (R) > DE > MPA ≈ MRFO > PSO > FPA > SOS is the ranking order of the algorithms with respect to the highest classification accuracy. On the other hand, in terms of mean best classification accuracy and standard deviation, WSA > MPA > MRFO > GA (T) > GA (R) > FPA > DE > PSO > SOS is the order of the algorithms.

- FPA and DE are noticed to be computationally faster than the other algorithms, and thus, based on the best computation time, the algorithms can be ranked as FPA > DE > WSA > PSO > MRFO > MPA > GA (T) > GA (R) > SOS. In terms of mean computational time and standard deviation, the ranking of these algorithms is FPA > DE > PSO > WSA > MRFO > MPA > GA (T) > GA (R) > SOS.

- With respect to the lowest number of features selected, the ranking is MPA > WSA > SOS > GA (T) > GA (R) > PSO > FPA > MRFO > DE, whereas for the mean and standard deviation of the number of features selected, the ranking is derived as SOS > MPA > GA (T) > GA (R) > WSA > PSO > FPA > DE > MRFO.

In general, it is possible to conclude from the study that WSA is quite suitable for solving feature selection problems. It is observed that for big-feature datasets, a decrease of as much as 99% in the number of features can be obtained with the WSA algorithm without sacrificing the classification accuracy. The future scope of this research includes extending the algorithm to handle high-dimensional data and exploring its performance on other classification problems. The algorithm can also be hybridized with other optimization techniques to improve its accuracy and efficiency. Finally, the research highlights the need for developing universal algorithms that can address various aspects of data processing.

Author Contributions

Conceptualization, R.Č. and K.K.; data curation, N.G. and R.S.; formal analysis, N.G. and R.S.; methodology, N.G., R.S., R.Č., S.C. and K.K.; software, K.K.; supervision, R.Č. and S.C.; validation, N.G. and R.S.; visualization, K.K.; writing—original draft, N.G., R.S. and S.C.; writing—review and editing, R.Č., S.C. and K.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available through email upon request to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Too, J.; Mafarja, M.; Mirjalili, S. Spatial bound whale optimization algorithm: An efficient high-dimensional feature selection approach. Neural Comput. Appl. 2021, 33, 16229–16250.

2. Mukherjee, S.; Dutta, S.; Mitra, S.; Pati, S.K.; Ansari, F.; Baranwal, A. Ensemble Method of Feature Selection Using Filter and Wrapper Techniques with Evolutionary Learning. In Emerging Technologies in Data Mining and Information Security: Proceedings of IEMIS 2022; Springer: Berlin/Heidelberg, Germany, 2022; Volume 2, pp. 745–755.

3. Liu, B.; Wei, Y.; Zhang, Y.; Yang, Q. Deep Neural Networks for High Dimension, Low Sample Size Data. In Proceedings of the International Joint Conference on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017; pp. 2287–2293.

4. Chen, C.; Weiss, S.T.; Liu, Y.-Y. Graph Convolutional Network-based Feature Selection for High-dimensional and Low-sample Size Data. arXiv 2022, arXiv:2211.14144.

5. Constantinopoulos, C.; Titsias, M.K.; Likas, A. Bayesian feature and model selection for Gaussian mixture models. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 1013–1018.

6. Li, K.; Wang, F.; Yang, L. Deep feature screening: Feature selection for ultra high-dimensional data via deep neural networks. arXiv 2022, arXiv:2204.01682.

7. Yamada, M.; Jitkrittum, W.; Sigal, L.; Xing, E.P.; Sugiyama, M. High-dimensional feature selection by feature-wise kernelized lasso. Neural Comput. 2014, 26, 185–207.

8. Gui, N.; Ge, D.; Hu, Z. AFS: An attention-based mechanism for supervised feature selection. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 3705–3713.

9. Peng, H.; Long, F.; Ding, C. Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1226–1238.

10. Chen, J.; Stern, M.; Wainwright, M.J.; Jordan, M.I. Kernel feature selection via conditional covariance minimization. Adv. Neural Inf. Process. Syst. 2017, 30, 6949–6958.

11. Storn, R.; Price, K. Differential evolution—A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

12. Sastry, K.; Goldberg, D.; Kendall, G. Genetic algorithms. In Search Methodologies; Springer: Boston, MA, USA, 2005; pp. 97–125.

13. Bansal, J.C. Particle swarm optimization. In Evolutionary and Swarm Intelligence Algorithms; Springer: Berlin/Heidelberg, Germany, 2019; pp. 11–23.

14. Singh, A.; Sharma, A.; Rajput, S.; Mondal, A.K.; Bose, A.; Ram, M. Parameter extraction of solar module using the sooty tern optimization algorithm. Electronics 2022, 11, 564.

15. Janaki, M.; Geethalakshmi, S.N. A Review of Swarm Intelligence-Based Feature Selection Methods and Its Application. In Soft Computing for Security Applications: Proceedings of ICSCS 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 435–447.

16. Lee, C.-Y.; Hung, C.-H. Feature ranking and differential evolution for feature selection in brushless DC motor fault diagnosis. Symmetry 2021, 13, 1291.

17. Hancer, E.; Xue, B.; Zhang, M. Differential evolution for filter feature selection based on information theory and feature ranking. Knowl.-Based Syst. 2018, 140, 103–119.

18. Zhang, Y.; Gong, D.-W.; Gao, X.-Z.; Tian, T.; Sun, X.-Y. Binary differential evolution with self-learning for multi-objective feature selection. Inf. Sci. 2020, 507, 67–85.

19. Gokulnath, C.B.; Shantharajah, S.P. An optimized feature selection based on genetic approach and support vector machine for heart disease. Clust. Comput. 2019, 22, 14777–14787.

20. Sayed, S.; Nassef, M.; Badr, A.; Farag, I. A nested genetic algorithm for feature selection in high-dimensional cancer microarray datasets. Expert Syst. Appl. 2019, 121, 233–243.

21. Yu, X.; Aouari, A.; Mansour, R.F.; Su, S. A Hybrid Algorithm Based on PSO and GA for Feature Selection. J. Cybersecur. 2021, 3, 117.

22. Rashid, M.; Singh, H.; Goyal, V. Efficient feature selection technique based on fast Fourier transform with PSO-GA for functional magnetic resonance imaging. In Proceedings of the 2nd International Conference on Computation, Automation and Knowledge Management (ICCAKM), Dubai, United Arab Emirates, 19–21 January 2021; pp. 238–242.

23. Sakri, S.B.; Rashid, N.B.A.; Zain, Z.M. Particle swarm optimization feature selection for breast cancer recurrence prediction. IEEE Access 2018, 6, 29637–29647.

24. Almomani, O. A feature selection model for network intrusion detection system based on PSO, GWO, FFA and GA algorithms. Symmetry 2020, 12, 1046.

25. Sharkawy, R.M.; Ibrahim, K.; Salama, M.M.A.; Bartnikas, R. Particle swarm optimization feature selection for the classification of conducting particles in transformer oil. IEEE Trans. Dielectr. Electr. Insul. 2011, 18, 1897–1907.

26. Tawhid, M.A.; Ibrahim, A.M. Hybrid binary particle swarm optimization and flower pollination algorithm based on rough set approach for feature selection problem. In Nature-Inspired Computation in Data Mining and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2020; pp. 249–273.

27. Majidpour, H.; Soleimanian Gharehchopogh, F. An improved flower pollination algorithm with AdaBoost algorithm for feature selection in text documents classification. J. Adv. Comput. Res. 2018, 9, 29–40.

28. Yousri, D.; Abd Elaziz, M.; Oliva, D.; Abraham, A.; Alotaibi, M.A.; Hossain, M.A. Fractional-order comprehensive learning marine predators algorithm for global optimization and feature selection. Knowl.-Based Syst. 2022, 235, 107603.

29. Sahlol, A.T.; Yousri, D.; Ewees, A.A.; Al-Qaness, M.A.A.; Damasevicius, R.; Elaziz, M.A. COVID-19 image classification using deep features and fractional-order marine predators algorithm. Sci. Rep. 2020, 10, 1–15.

30. Abd Elminaam, D.S.; Nabil, A.; Ibraheem, S.A.; Houssein, E.H. An efficient marine predators algorithm for feature selection. IEEE Access 2021, 9, 60136–60153.

31. Hassan, I.H.; Mohammed, A.; Masama, M.A.; Ali, Y.S.; Abdulrahim, A. An Improved Binary Manta Ray Foraging Optimization Algorithm based feature selection and Random Forest Classifier for Network Intrusion Detection. Intell. Syst. Appl. 2022, 16, 200114.

32. Ghosh, K.K.; Guha, R.; Bera, S.K.; Kumar, N.; Sarkar, R. S-Shaped versus V-shaped transfer functions for binary Manta ray foraging optimization in feature selection problem. Neural Comput. Appl. 2021, 33, 11027–11041.

33. Mohmmadzadeh, H.; Gharehchopogh, F.S. An efficient binary chaotic symbiotic organisms search algorithm approaches for feature selection problems. J. Supercomput. 2021, 77, 9102–9144.

34. Han, C.; Zhou, G.; Zhou, Y. Binary symbiotic organism search algorithm for feature selection and analysis. IEEE Access 2019, 7, 166833–166859.

35. Baykasoğlu, A.; Ozsoydan, F.B.; Senol, M.E. Weighted superposition attraction algorithm for binary optimization problems. Oper. Res. 2020, 20, 2555–2581.

36. Baykasoğlu, A.; Şenol, M.E. Weighted superposition attraction algorithm for combinatorial optimization. Expert Syst. Appl. 2019, 138, 112792.

37. Baykasoğlu, A.; Ozsoydan, F.B. Dynamic optimization in binary search spaces via weighted superposition attraction algorithm. Expert Syst. Appl. 2018, 96, 157–174.

38. Baykasoğlu, A.; Gölcük, İ.; Özsoydan, F.B. Improving fuzzy c-means clustering via quantum-enhanced weighted superposition attraction algorithm. Hacet. J. Math. Stat. 2018, 48, 859–882.

39. Adil, B.; Cengiz, B. Optimal design of truss structures using weighted superposition attraction algorithm. Eng. Comput. 2020, 36, 965–979.

40. Too, J.; Liang, G.; Chen, H. Memory-Based Harris hawk optimization with learning agents: A feature selection approach. Eng. Comput. 2022, 38 (Suppl. 5), 4457–4478.

41. Fang, Y.; Li, J. A Review of Tournament Selection in Genetic Programming. In International Symposium on Intelligence Computation and Applications; Springer: Berlin/Heidelberg, Germany, 2010; pp. 181–192.

42. Du, K.-L.; Swamy, M.N.S. Particle swarm optimization. In Search and Optimization by Metaheuristics; Springer: Berlin/Heidelberg, Germany, 2016; pp. 153–173.

43. Singh, A.; Sharma, A.; Rajput, S.; Bose, A.; Hu, X. An investigation on hybrid particle swarm optimization algorithms for parameter optimization of PV cells. Electronics 2022, 11, 909.

44. Wang, D.; Tan, D.; Liu, L. Particle swarm optimization algorithm: An overview. Soft Comput. 2018, 22, 387–408.

45. Yang, X.-S. Flower pollination algorithm for global optimization. In International Conference on Unconventional Computing and Natural Computation; Springer: Berlin/Heidelberg, Germany, 2012; pp. 240–249.

46. Ezugwu, A.E.; Prayogo, D. Symbiotic organisms search algorithm: Theory, recent advances and applications. Expert Syst. Appl. 2019, 119, 184–209.

47. Abdullahi, M.; Ngadi, M.A.; Dishing, S.I.; Abdulhamid, S.M.; Usman, M.J. A survey of symbiotic organisms search algorithms and applications. Neural Comput. Appl. 2020, 32, 547–566.

48. Cheng, M.-Y.; Prayogo, D. Symbiotic organisms search: A new metaheuristic optimization algorithm. Comput. Struct. 2014, 139, 98–112.

49. Faramarzi, A.; Heidarinejad, M.; Mirjalili, S.; Gandomi, A.H. Marine Predators Algorithm: A nature-inspired metaheuristic. Expert Syst. Appl. 2020, 152, 113377.

50. Jangir, P.; Buch, H.; Mirjalili, S.; Manoharan, P. MOMPA: Multi-objective marine predator algorithm for solving multi-objective optimization problems. Evol. Intell. 2023, 16, 169–195.

51. Al-Qaness, M.A.A.; Ewees, A.A.; Fan, H.; Abualigah, L.; Abd Elaziz, M. Marine predators algorithm for forecasting confirmed cases of COVID-19 in Italy, USA, Iran and Korea. Int. J. Environ. Res. Public Health 2020, 17, 3520.

52. Zhao, W.; Zhang, Z.; Wang, L. Manta ray foraging optimization: An effective bio-inspired optimizer for engineering applications. Eng. Appl. Artif. Intell. 2020, 87, 103300.

53. Houssein, E.H.; Emam, M.M.; Ali, A.A. Improved manta ray foraging optimization for multi-level thresholding using COVID-19 CT images. Neural Comput. Appl. 2021, 33, 16899–16919.

54. Tang, A.; Zhou, H.; Han, T.; Xie, L. A modified manta ray foraging optimization for global optimization problems. IEEE Access 2021, 9, 128702–128721.

55. Baykasoğlu, A.; Akpinar, Ş. Weighted Superposition Attraction (WSA): A swarm intelligence algorithm for optimization problems—Part 1: Unconstrained optimization. Appl. Soft Comput. 2017, 56, 520–540.

56. Baykasoğlu, A.; Akpinar, Ş. Weighted Superposition Attraction (WSA): A swarm intelligence algorithm for optimization problems—Part 2: Constrained optimization. Appl. Soft Comput. 2015, 37, 396–415.

57. Conrads, T.P.; Fusaro, V.A.; Ross, S.; Johann, D.; Rajapakse, V.; Hitt, B.A.; Steinberg, S.M.; Kohn, E.C.; Fishman, D.A.; Whitely, G.; et al. High-Resolution Serum Proteomic Features for Ovarian Cancer Detection. Endocr.-Relat. Cancer 2004, 11, 163–178.

58. Alon, U.; Barkai, N.; Notterman, D.A.; Gish, K.; Ybarra, S.; Mack, D.; Levine, A.J. Broad patterns of gene expression revealed by clustering analysis of tumor and normal colon tissues probed by oligonucleotide arrays. Proc. Natl. Acad. Sci. USA 1999, 96, 6745–6750.

59. Feshki, M.G.; Shijani, O.S. Improving the heart disease diagnosis by evolutionary algorithm of PSO and Feed Forward Neural Network. In Proceedings of the 2016 Artificial Intelligence and Robotics (IRANOPEN), Qazvin, Iran, 9 April 2016; pp. 48–53.

60. Chicco, D.; Jurman, G. Machine learning can predict survival of patients with heart failure from serum creatinine and ejection fraction alone. BMC Med. Inform. Decis. Mak. 2020, 20, 16.

61. Amin, M.S.; Chiam, Y.K.; Varathan, K.D. Identification of significant features and data mining techniques in predicting heart disease. Telemat. Inform. 2019, 36, 82–93.

62. Pouriyeh, S.; Vahid, S.; Sannino, G.; De Pietro, G.; Arabnia, H.; Gutierrez, J. A comprehensive investigation and comparison of machine learning techniques in the domain of heart disease. In Proceedings of the IEEE Symposium on Computers and Communications (ISCC), Heraklion, Greece, 3–6 July 2017; pp. 204–207.

63. Maji, S.; Arora, S. Decision tree algorithms for prediction of heart disease. In Information and Communication Technology for Competitive Strategies: Proceedings of the Third International Conference on ICTCS, Amman, Jordan, 11–13 October 2017; pp. 447–454.

64. Reddy, G.T.; Reddy, M.P.K.; Lakshmanna, K.; Rajput, D.S.; Kaluri, R.; Srivastava, G. Hybrid genetic algorithm and a fuzzy logic classifier for heart disease diagnosis. Evol. Intell. 2020, 13, 185–196.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).