Abstract

To minimize damage in the event of a fire, the ignition point must be detected and dealt with before the fire spreads. However, methods that detect fire by heat or flame lead to greater damage, because they can detect a fire only after it has spread. Therefore, this study proposes a Swin Transformer-based object detection model using explainable meta-learning mining. The proposed method merges the Swin Transformer and the YOLOv3 model and applies meta-learning to build an explainable object detection model. For efficient learning with a small amount of data, it applies Few-Shot Learning. To find the causes of the object detection results, Grad-CAM is used as an explainable visualization method. The model detects small smoke objects in fire image data and classifies them according to the color of the smoke generated when a fire breaks out. Accordingly, it is possible to predict and classify the risk of fire occurrence and thereby minimize the damage caused by fire. In this study, performance is evaluated with Mean Average Precision (mAP) in two ways. First, the performance of the proposed object detection model is evaluated. Second, the performance of the proposed method is compared with that of a conventional object detection method. In addition, accuracy is compared using the confusion matrix, and suitability for real-time object detection is judged using frames per second (FPS). According to the evaluation results, the proposed method supports accurate, real-time monitoring and analysis.

1. Introduction

Fires are often caused by human negligence, such as discarded cigarette butts, garbage incineration, and cooking, as well as by dry climates. According to the National Fire Information System [1] of the National Fire Administration, carelessness was the most common cause of fires from January 2021 to December 2021, followed by electrical, mechanical, and chemical factors. In general, people detect fire through their eyes, ears, nose, and skin. In addition, a fire in a building is detected through a fire alarm. However, since these methods detect a fire only after it has broken out, significant deaths, injuries, and property damage can occur [2]. Therefore, to reduce damage, it is necessary to detect and respond to fires before they grow into large-scale fires. CCTV installation is expanding for safety against crimes, disasters, and calamities. CCTV footage can be used as evidence to resolve diverse accidents or events [3]. However, human monitoring of numerous CCTV devices is problematic in terms of accuracy, efficiency, and time cost. Accordingly, an effective method is needed to accurately analyze the data generated by numerous CCTV devices and to make predictions in real time.

Object detection predicts a set of bounding boxes and category labels for each object of interest in image data [4]. Among object detection methods, YOLO is designed as a one-stage method, so it has a fast detection speed but relatively low accuracy. This makes it suitable for real-time processing [5]. In CNN-based models, the locality of the convolution operation limits the capacity to extract global context information [6]. Therefore, a method is needed that overcomes this limit and improves prediction accuracy. The Vision Transformer (ViT) model processes and classifies patches of an image. It makes it possible to attend globally to the dependencies among image feature patches. Additionally, it can maintain the spatial information needed for object detection through multi-head self-attention learning [7]. Since the method can extract more context information and learn feature representations that can be distinguished, accuracy can be improved. The Vision Transformer model, however, requires a large amount of training data. In reality, not enough open data are available for training a ViT model. For this reason, a method is needed that lets a model learn sufficiently from a small quantity of data. Meta-learning makes this possible. There are a variety of meta-learning methods, including Few-Shot Learning, hyper-parameter optimization, and meta-expression.

Generally, a deep learning model is categorized as a black box model [8]. In a black box model, the inputs and outputs can be observed, but it is difficult to determine the grounds, causes, and reasons behind the internal processing. Since the process by which the model reaches its result cannot be validated, the reliability of the result is low. To solve this problem, explainable artificial intelligence (AI) models were developed. Such a model is a white box model that adds explainability, making it possible to understand not only the inputs and outputs but also how the final result was reached [9]. This study proposes a Swin Transformer-based object detection model using explainable meta-learning mining. The proposed method merges the Swin Transformer, a type of vision transformer, with the YOLOv3 model and applies meta-learning to build an explainable object detection model. Based on the feature pyramid network of YOLOv3, it considers the objects in an image at multiple scales. To improve the detection accuracy for small objects, the method considers various patch sizes through the Swin Transformer model and thus supports more detailed learning. As a Few-Shot Learning method for efficient learning with small quantities of data and for preventing information loss, it detects and classifies objects by using a metric-based technique. The contributions of the proposed method are as follows:

- General transformers can lose information on local image structures such as lines and edges when an image is split into patches, because they ignore the modeling of these local structures. The proposed method is therefore based on the Swin Transformer in order to address this information loss.

- Image data contain objects of various sizes, and all of them need to be detected. Therefore, the combination of YOLOv3 and the Swin Transformer makes it possible to detect objects of various sizes, including small objects that are hard for YOLOv3 to detect.

- Grad-CAM as an explainable visualization technique is used to keep data at high resolution, to reduce noise, and to find the causes of classification accurately.

This paper is composed of the following: in Section 2, the trends of vision transformer-based object detection technology and meta-learning technology are described; in Section 3, the proposed Swin Transformer-based object detection model using explainable meta-learning mining is described; in Section 4, the explainable risk prediction result from the Swin Transformer-based object detection and its performance evaluation are described; in Section 5, the conclusion of this study is drawn.

2. Related Work

2.1. Object Detection Technology Based on Vision Transformer

Image data include various objects, and their information can be understood easily by people. Deep learning models for image data analysis have been researched steadily [10]. Among these models, an object detection algorithm is used to detect objects of interest in an image. It first generates a bounding box to detect an object. Since a bounding box makes it possible to localize objects in particular areas of large image data, it helps a model learn efficiently [11]. Object detection algorithms are classified into one-stage and two-stage models. A one-stage model executes classification and region proposal at the same time to produce outcomes. That is, the objects and their positions in an image are inspected once for prediction. Since the model extracts features with convolution layers directly after receiving an image, it features fast detection but lower accuracy. In contrast, a two-stage model carries out region proposal and classification sequentially to produce outcomes. Although its speed is relatively slow, the model has high accuracy. The YOLO series, one of the most popular families of object detection algorithms, is applied to real-time object detection [12]. In particular, YOLO models and Vision Transformer-based object detection models have been studied in various ways. Vision Transformer (ViT) uses a sequence of image patch embeddings as an input and makes it possible to classify images in detail using pre-trained visual representations [13]. Figure 1 shows the architecture of the Vision Transformer-based object detection model.

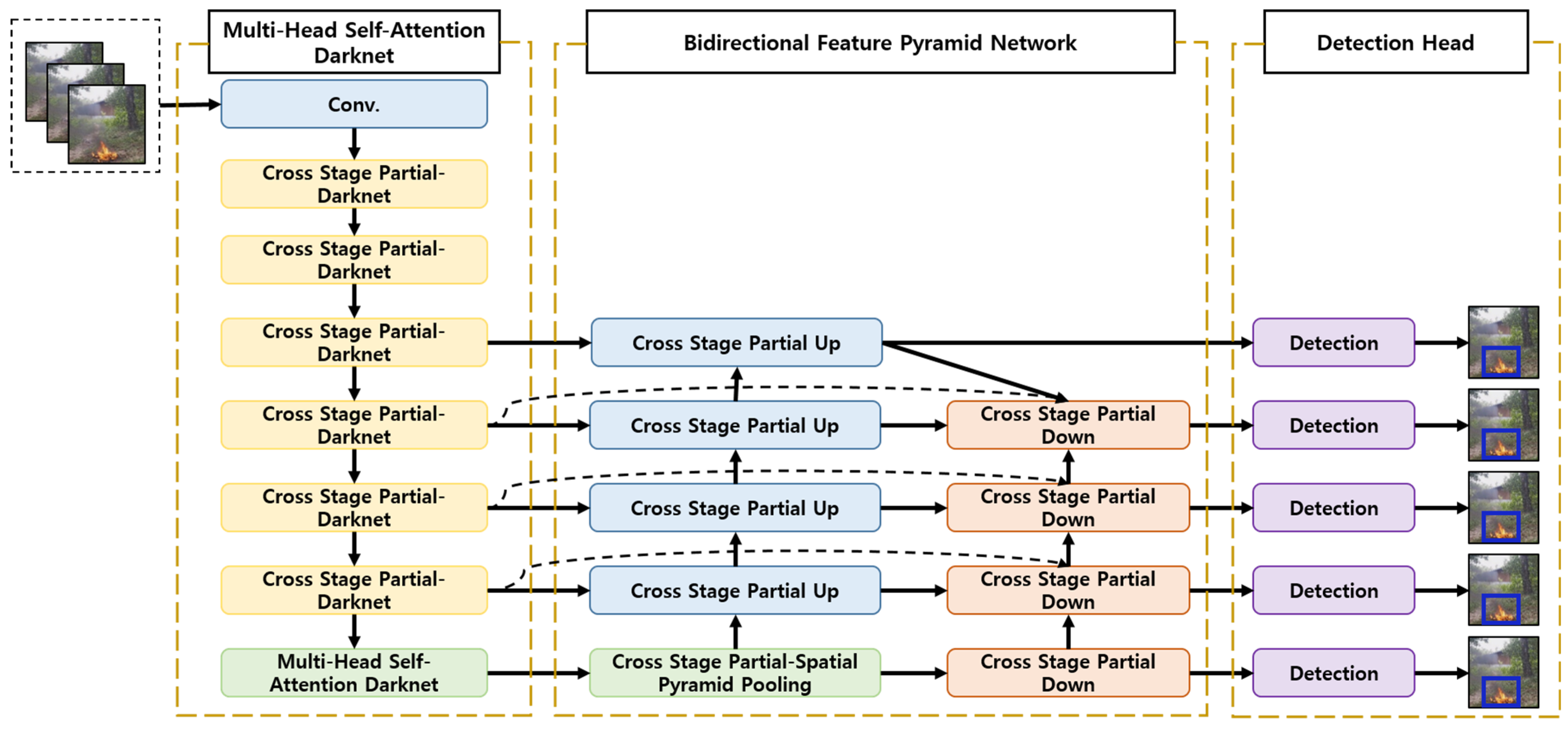

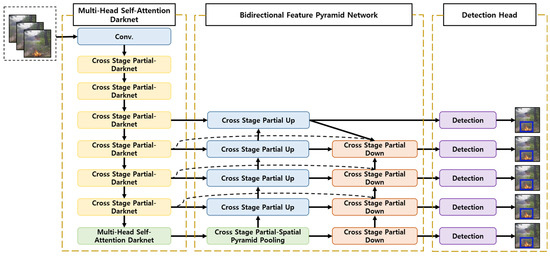

Figure 1.

The architecture of the Vision Transformer-based object detection model [14].

As shown in Figure 1, Multi-Head Self-Attention-Darknet is used as the backbone network, so that features are extracted from the input image by integrating multi-head self-attention with Cross Stage Partial-Darknet (CSP-Darknet). Multi-Head Self-Attention-Darknet improves the mobility of learned features and extracts features for the detector that capture long-range context information. The objective of the Bidirectional Feature Pyramid Network (BiFPN) is to aggregate the features extracted at different backbone levels for each detector level. To fuse many features without adding much cost, the BiFPN adds an extra edge from an input node to an output node at the same level. When low-level and high-level features are simply combined, feature inconsistency and performance deterioration can occur, so weights are learned to reflect the various input features. In addition, for better features in the middle layers, the Spatial Pyramid Pooling module is used in the path-aggregation neck, and Cross Stage Partial connections are applied for feature processing. The detection head predicts boxes at five scales. For example, Y. Fang et al. [15] proposed the YOLOS model. Whereas ViT uses one [CLS] token for image classification, the proposed model replaces it with 100 [DET] tokens for object detection. Likewise, the image classification loss of ViT is replaced by a bipartite matching loss. YOLOS is designed to verify the versatility and transferability of pre-trained standard transformers not only in high-performance object detection but also in hard-to-recognize object detection. Z. Zhang et al. [14] proposed ViT-YOLO, which is based on a one-stage detector. Its framework integrates CSP-Darknet and multi-head self-attention for feature extraction. In this way, it is possible to retain sufficient global context information and extract differentiated features for object detection. In addition, its architecture is connected to BiFPN in order to combine features at different scales effectively. Since ViT-YOLO brings in more context information, it can learn feature representations that identify small objects.

2.2. Trends in Meta-Learning Technology

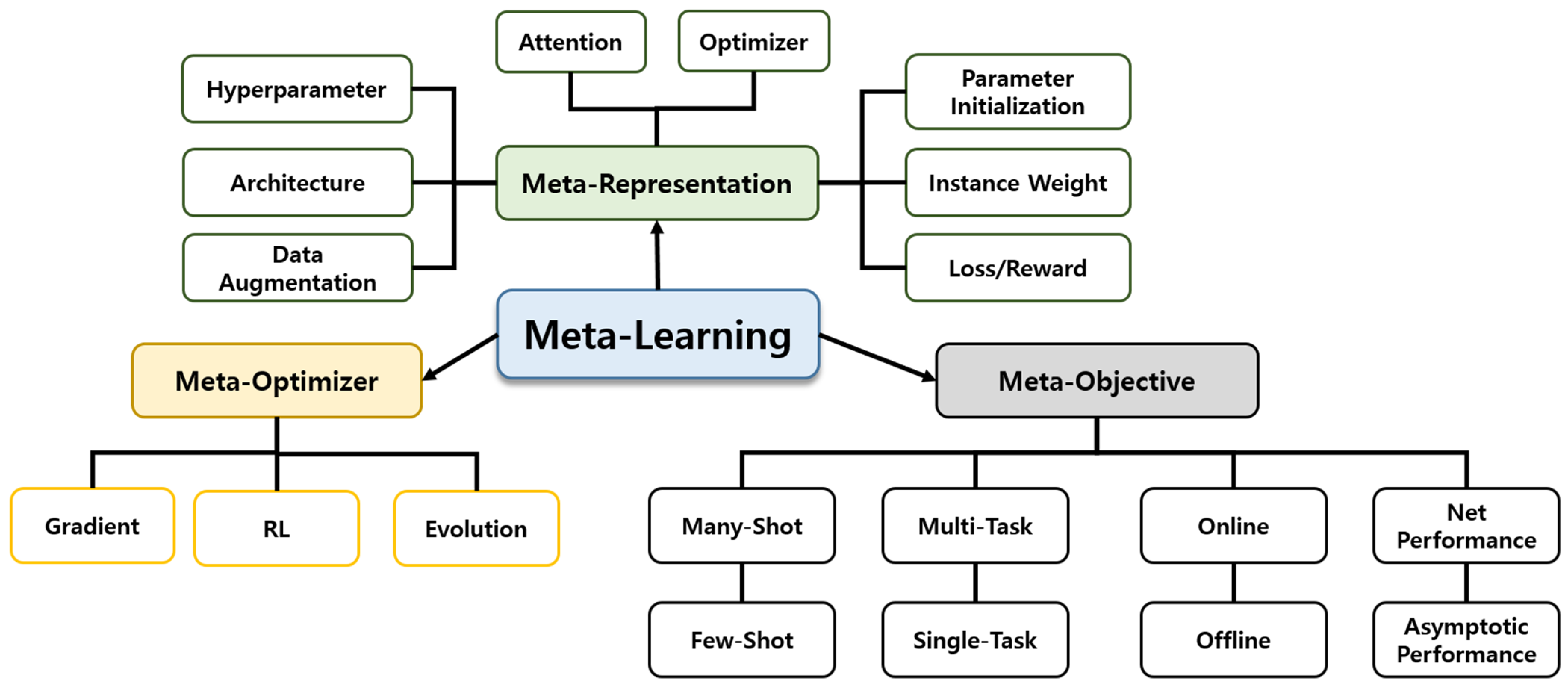

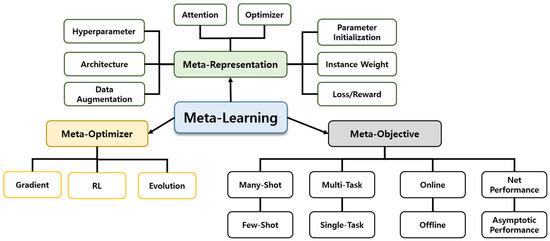

Meta-learning refers to learning to learn. It offers an opportunity to improve a deep learning algorithm in terms of generalization, data, or computational bottlenecks. Meta-learning is categorized along three axes: meta-expressions, meta-optimization, and meta-objectives [16]. Meta-expression is the choice of meta-knowledge for meta-learning, which ranges from the parameters of an initial model to readable program code. Meta-optimization is the choice of the optimization approach used at the outer level during meta-learning. Different approaches can be used, such as gradient descent, reinforcement learning, and evolutionary search [17]. A meta-objective is the goal of meta-learning, which is determined by the task distribution and the choice of data flow between the inner and outer levels. A user can configure meta-learning for different purposes such as Few-Shot Learning and adversarial attack. At present, meta-learning is being researched in diverse areas such as computer vision and reinforcement learning [18]. The most successful applications of meta-learning are few-shot image recognition, unsupervised learning, data efficiency, self-directed reinforcement learning (RL), hyper-parameter optimization, and neural architecture search (NAS) [19]. Figure 2 shows the trend structure of meta-learning technology.

Figure 2.

Meta-learning technology trend diagram.

For example, W. Yu et al. [20] proposed MetaFormer by applying meta-learning to the transformer. The proposed method replaces the attention module of the transformer with a spatial pooling operator to generate PoolFormer, a derivative model of the transformer that executes token mixing. In tests, the proposed method performed well in image classification, object detection, instance segmentation, and semantic segmentation. D. Qi et al. [21] proposed a method of using a vision transformer as a learner for Few-Shot Learning. The proposed approach extends self-supervised learning to few-shot recognition. It consists of two steps. In the first step, a feature encoder is trained on unlabeled data through a masked auto-encoder. In the second step, the pre-trained ViT model is fine-tuned through supervised learning with very few labeled samples. At this time, to improve the generalization of fine-tuning, Parametric Instance Discrimination (PID) normalization and Base-Novel (BN) normalization are carried out. Accordingly, the performance of few-shot recognition is improved.

2.3. Fire Prediction Technology Using Object Detection

The National Fire Information System [1] of the National Fire Agency is a nationwide system that informs the public of fire damage and promotes fire prevention by providing statistical information on everything from fire occurrence to evacuation and fire suppression. It helps each institution establish fire prevention policies by providing real-time fire risk information to the public and related agencies through real-time anomaly detection and monitoring functions. Accordingly, the risk of fire can be recognized in advance and used as data for fire prevention and response. However, it is inefficient because of the high cost of manpower for real-time monitoring and for reporting when a flame appears, and because of device malfunctions. To solve this problem, an accurate and effective fire prediction technology using deep learning is needed. F. Saeed et al. [22] proposed a machine learning-based approach for multimedia surveillance in fire emergencies. It predicts fires efficiently by using sensor data such as smoke, heat, and gas to train an Adaboost-MLP model. A CNN model then detects the fire immediately when it occurs. However, sensor malfunctions can reduce the prediction performance. Z. Tang et al. [23] proposed a deep learning-based wildfire object detection model for aerial image data. The authors create a publicly shared labeled dataset from data collected by aircraft. They also present a coarse-to-fine framework for automatically detecting rare, small, irregular-shaped wildfires. The coarse detector adaptively selects sub-areas that are likely to contain objects of interest, and the fine detector scrutinizes only those sub-areas rather than the entire area. Accordingly, the learning time for prediction can be reduced and accuracy can be improved. However, the method has the disadvantage that it can be used only when a flame is detected. Additionally, S. Mohana Kumar et al. [24] proposed a forest fire prediction method using image processing and machine learning. It aims to predict forest fires from an input stream of images. The proposed method uses image processing, background removal, and special wavelet analysis. An SVM model is used to classify candidate areas as real fire or non-fire. Fast fire detection is possible by using a Faster R-CNN object detection model, which applies convolution operations to the full image. However, the larger the amount of data, the slower the training speed.

3. Swin Transformer-Based Object Detection Model Using Explainable Meta-Learning Mining

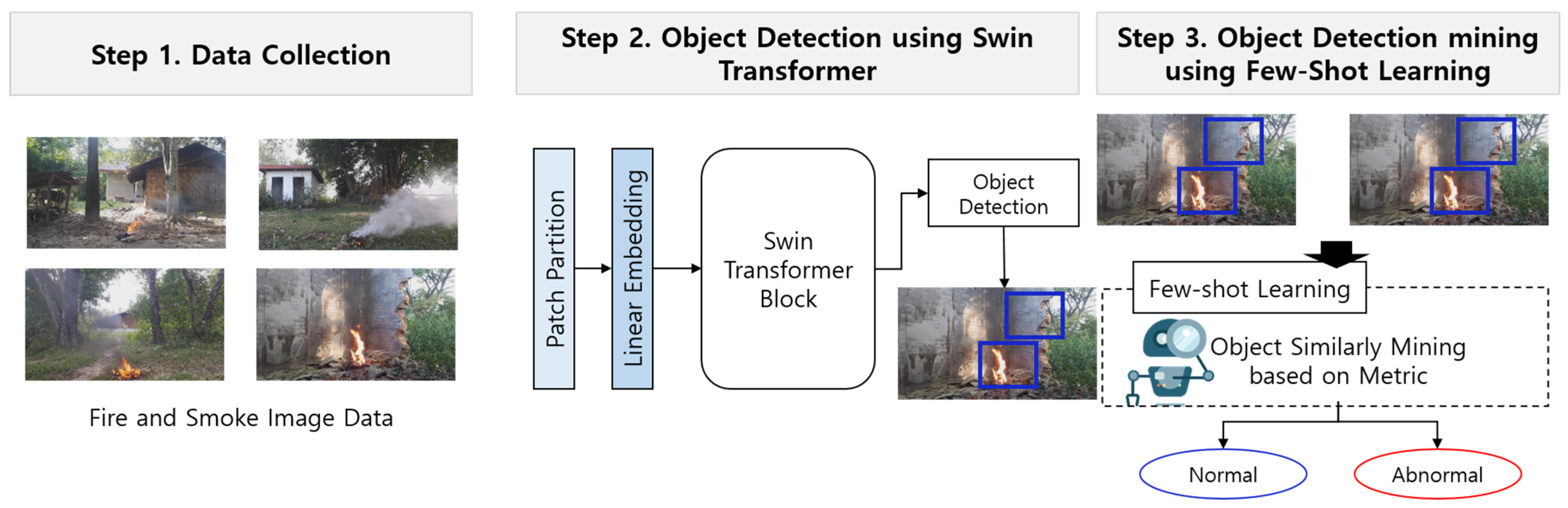

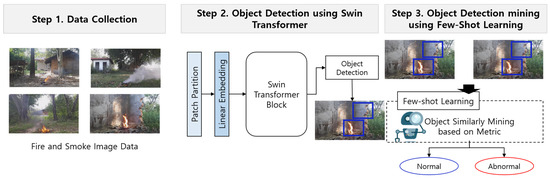

Recently, building fires have caused a great deal of property damage. Since a smoke detector can detect a fire only when smoke reaches the detector, it is highly likely that the fire is already under way by the time it is detected. Additionally, since flame detectors can only detect flames, a detection means that a large fire is already occurring. If a fire can be extinguished in the early stage when smoke is first generated, the damage it causes can be reduced. In addition, if the initial ignition point is detected before a large fire develops in industrial facilities, homes, or nature, damage can be minimized. Therefore, a method capable of quickly detecting smoke generation is needed. This means that such sites need to be monitored continuously. Even when they are monitored with CCTV or other devices, accurate management is difficult because of a shortage of monitoring staff. Therefore, this study proposes a Swin Transformer-based object detection model using explainable meta-learning mining. Based on an object detection algorithm, the proposed method detects fire-fighting facilities in buildings. The detected result is then classified as normal or abnormal. The classification model is the Swin Transformer. It has a very large number of parameters and requires a large amount of data for effective learning. To solve the problem of large data requirements, meta-learning is applied. For the reliability and interpretability of the model, explainable visualization and object detection scores are applied. Figure 3 shows the process of the proposed Swin Transformer-based object detection model using explainable meta-learning mining.

Figure 3.

The process of the proposed Swin Transformer-based object detection model using explainable meta-learning mining.

In Figure 3, the process consists of three steps. In the first step, fire prediction image data are collected. In the second step, meta-learning is applied to the vision transformer model. Specifically, Few-Shot Learning is used to reduce the amount of data needed for learning and to capture features more accurately. In the last step, objects are detected by the meta-learning-based ViT model and are then classified as normal or abnormal. In this way, it is possible to monitor fire-fighting facilities continuously and prevent risks in everyday life. In addition, the JSON file of meta information, such as data location information and class information, is restructured to resemble the JSON file format of the COCO dataset.
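The restructuring of the meta information into a COCO-like JSON layout can be illustrated with a minimal sketch. The input record layout, the file name, and the numeric category ids below are hypothetical, since the original annotation schema of the dataset is not described here; only the output keys (`images`, `annotations`, `categories`, and the `[x, y, width, height]` box convention) follow the public COCO detection format.

```python
import json

# Class names follow the fire-scene classes named in the text;
# the numeric ids assigned to them are an assumption.
CATEGORIES = ["black smoke", "gray smoke", "white smoke", "fire"]


def to_coco(records):
    """Convert simple per-image records into a COCO-style dictionary.

    Each record is a hypothetical dict of the form
    {"file_name": str, "width": int, "height": int,
     "objects": [{"label": str, "box": [x_min, y_min, x_max, y_max]}]}.
    """
    name_to_id = {name: i + 1 for i, name in enumerate(CATEGORIES)}
    coco = {
        "images": [],
        "annotations": [],
        "categories": [{"id": i, "name": n} for n, i in name_to_id.items()],
    }
    ann_id = 1
    for image_id, rec in enumerate(records, start=1):
        coco["images"].append({
            "id": image_id,
            "file_name": rec["file_name"],
            "width": rec["width"],
            "height": rec["height"],
        })
        for obj in rec["objects"]:
            x_min, y_min, x_max, y_max = obj["box"]
            w, h = x_max - x_min, y_max - y_min
            coco["annotations"].append({
                "id": ann_id,
                "image_id": image_id,
                "category_id": name_to_id[obj["label"]],
                "bbox": [x_min, y_min, w, h],  # COCO stores [x, y, width, height]
                "area": w * h,
                "iscrowd": 0,
            })
            ann_id += 1
    return coco


example = [{
    "file_name": "scene_0001.jpg",  # hypothetical file name
    "width": 1920,
    "height": 1080,
    "objects": [{"label": "white smoke", "box": [100, 200, 400, 500]}],
}]
coco = to_coco(example)
coco_json = json.dumps(coco, indent=2)
```

Keeping the annotations in this layout means that standard COCO-style evaluation tooling can, in principle, be reused for the mAP computation without a custom loader.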

3.1. Collect and Preprocess Risk Prediction Data

The data used in this paper are fire prediction image data provided by AI Hub [25]. These data are images of the smoke that appears before a fire breaks out. They are classified into three types: fire scenes, similar scenes, and unrelated scenes. Fire scenes are divided into black smoke, gray smoke, white smoke, and fire (flame) classes. Similar scenes are composed of classes such as fog, lighting, sunlight, and leaves. Unrelated scenes include objects unrelated to smoke generation. In this paper, a fire is predicted from the color of the smoke. Therefore, 13,159 training data points and 22,000 validation data points from the fire scenes are used. All images have the same size of 1920 × 1080. White smoke appears at the beginning of a fire, and gray smoke and black smoke appear over time. Figure 4 shows fire prediction image data.

Figure 4.

Fire prediction image data. (a) flames are visible (b) almost no flame (c,d) flame is detected by the eye.

In Figure 4a, flames are visible, but not much smoke appears. In Figure 4b, there is almost no flame, but a lot of white smoke is generated, which indicates the beginning of a fire. In Figure 4c,d, the flame is visible to the eye, and gray and white smoke is generated, which indicates that the fire has been burning for a long time. Therefore, to minimize damage to property and human life, a plan for quick fire response is required.

3.2. Object Detection Based on Swin Transformer

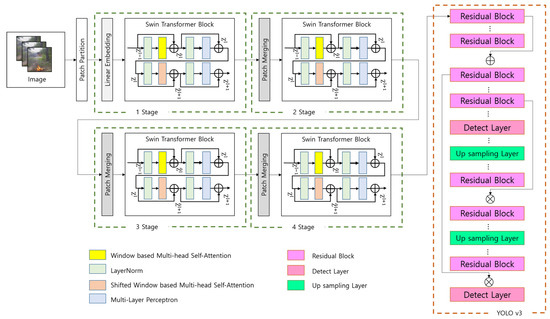

An object detection algorithm performs detection through the classification of objects and through localization, which finds their position information. A general CNN considers all areas of an image through a sliding window. Although this is simple, the network is executed multiple times, and thus it is slow [26]. Therefore, there is a speed limit when detecting objects in large amounts of data. The one-stage object detection method conducts region proposal and classification at the same time, so it is fast. The two-stage object detection method processes region proposal and classification sequentially, and thus it has high accuracy even though it is slower than the one-stage approach. YOLO, as an object detection algorithm, scans the entire image once. Because of its high speed, it can detect objects in real time. The algorithm, however, has a low recognition rate for small objects. In practice, image data include not only large objects but also small ones. Accordingly, a method is needed that improves the recognition and detection rates for objects of various sizes. When ViT is applied to YOLO for object detection, good performance is obtained [27]. However, when the standard ViT tokenizes an input image, it splits the image into non-overlapping patches. This approach ignores the modeling of local image structures such as lines and edges, so information loss can occur. Therefore, this study uses the Swin Transformer for object detection. The Swin Transformer is a model in which the transformer structure is applied to object detection. Through shifted windows, it carries out representation learning with a hierarchical transformer [28]. For object detection at various scales, the method uses a feature pyramid structure. In addition, YOLOv3 is used as the detection model. YOLOv3 applies the sigmoid function to every class in the last layer in order to make a binary classification for each class. However, it has low accuracy for small objects. To improve the efficiency of object detection, YOLOv3 is combined with the Swin Transformer. Figure 5 shows the architecture of the Swin Transformer-based object detection model.

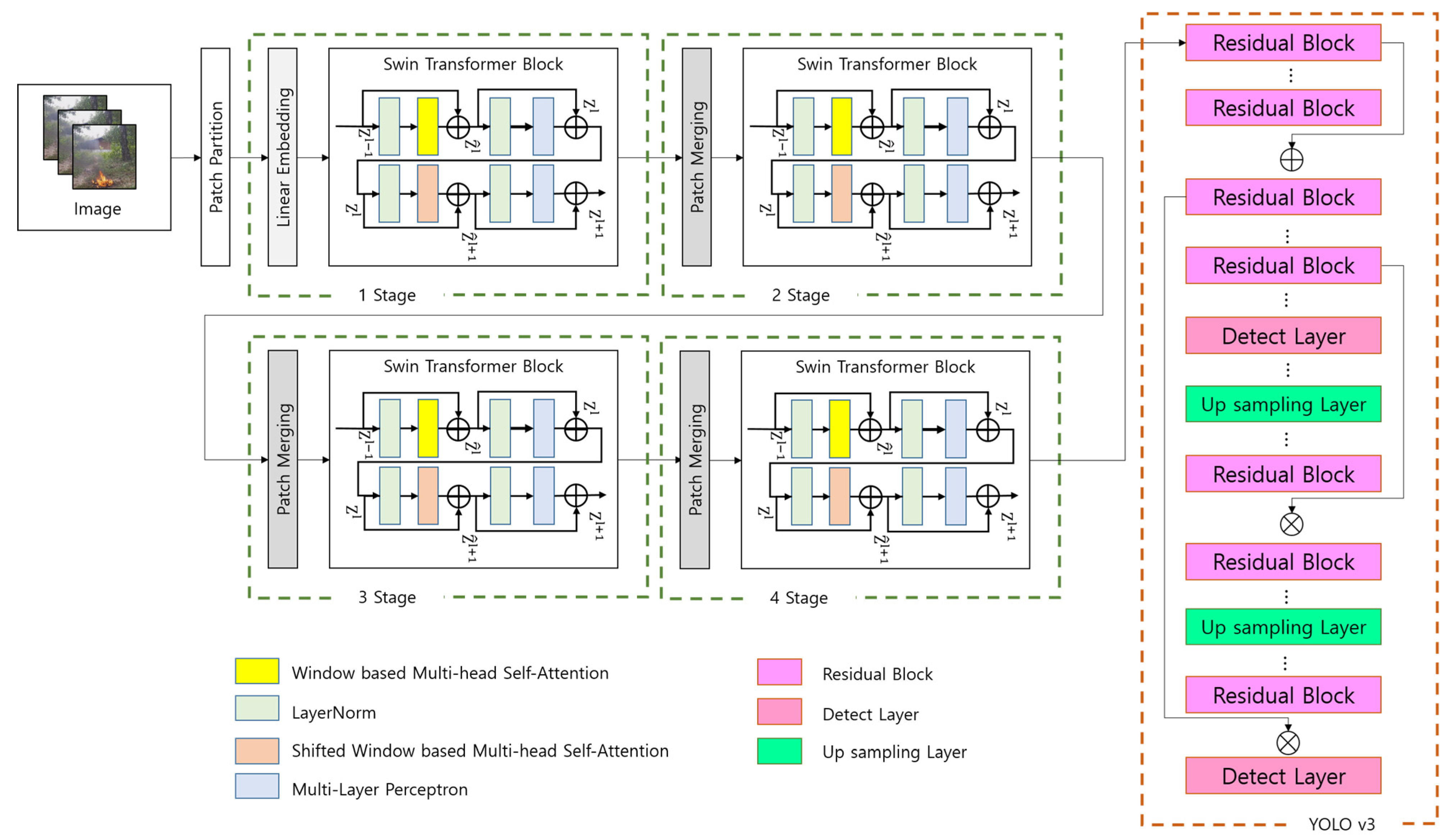

Figure 5.

The architecture of the Swin Transformer-based object detection model.

In Figure 5, the architecture consists of the Swin Transformer section and the YOLOv3 section. The Swin Transformer splits the input image data into patches and merges them so that the patch size increases gradually from small to large. Window-Multi-Head Self-Attention executes the self-attention operation only between patches within the current window. Because adjacent pixels are highly associated, this reduces the cost of the operation. Shifted-Window-Multi-Head Self-Attention shifts the windows and applies a mask to prevent the self-attention operation between patches that were not adjacent before the shift. This is because it is meaningless to execute the self-attention operation with a patch that has been moved to the opposite side of the image by the shift. After the masked operation, the original positions are restored, which makes it possible to represent the connectivity between windows. The object detector is based on YOLOv3. DarkNet53 is used as its backbone network. Initial, intermediate, and final feature maps are used in the feature pyramid network. In the feature pyramid network, the feature maps are brought to the same size through up-sampling and are concatenated. Binary classification is then performed through the sigmoid function. As a result, in the combination of YOLOv3 and the Swin Transformer, the Swin Transformer makes it possible to detect objects of various sizes, including small ones that YOLOv3 can hardly detect. Nevertheless, the model is heavy because it has many parameters. To solve this problem and design a relatively light model, Few-Shot Learning is applied.
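The window partition, cyclic shift, and masking steps described above can be illustrated with a small NumPy sketch. It is not the implementation used in this study; the function names, the 8 × 8 feature map, the window size of 4, the shift of 2, and the mask value of -100 are assumptions chosen for illustration, following the commonly published formulation of shifted-window attention.

```python
import numpy as np


def window_partition(x, window_size):
    """Split an (H, W, C) feature map into non-overlapping windows.

    Returns an array of shape (num_windows, window_size * window_size, C).
    H and W are assumed to be multiples of window_size.
    """
    h, w, c = x.shape
    x = x.reshape(h // window_size, window_size, w // window_size, window_size, c)
    x = x.transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, window_size * window_size, c)


def shifted_window_mask(h, w, window_size, shift):
    """Build an additive attention mask for windows taken after a cyclic shift.

    Tokens that come from different regions of the original image (and were
    only brought into the same window by the shift) get a large negative
    value, so that softmax attention between them is suppressed.
    """
    region = np.zeros((h, w, 1), dtype=np.int64)
    slices = (slice(0, -window_size),
              slice(-window_size, -shift),
              slice(-shift, None))
    label = 0
    for hs in slices:
        for ws in slices:
            region[hs, ws, :] = label  # one integer label per image region
            label += 1
    ids = window_partition(region, window_size)[..., 0]  # (num_windows, tokens)
    same_region = ids[:, :, None] == ids[:, None, :]
    return np.where(same_region, 0.0, -100.0)


# Toy 8 x 8 single-channel feature map whose values are the flat pixel indices.
x = np.arange(8 * 8).reshape(8, 8, 1)
windows = window_partition(x, window_size=4)

# Cyclic shift toward the top-left by 2 pixels, as done before shifted-window attention.
shifted = np.roll(x, shift=(-2, -2), axis=(0, 1))
mask = shifted_window_mask(8, 8, window_size=4, shift=2)
```

In this toy setting the first window contains tokens from a single region, so its mask is all zeros, while the other windows mix regions and therefore contain masked pairs. After attention, the shift would be reversed with `np.roll(..., shift=(2, 2), axis=(0, 1))`.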

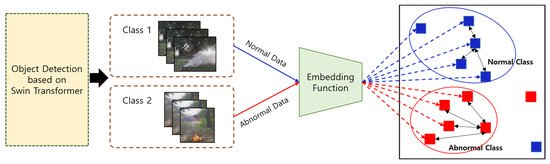

3.3. Object Detection Mining Using Meta-Learning

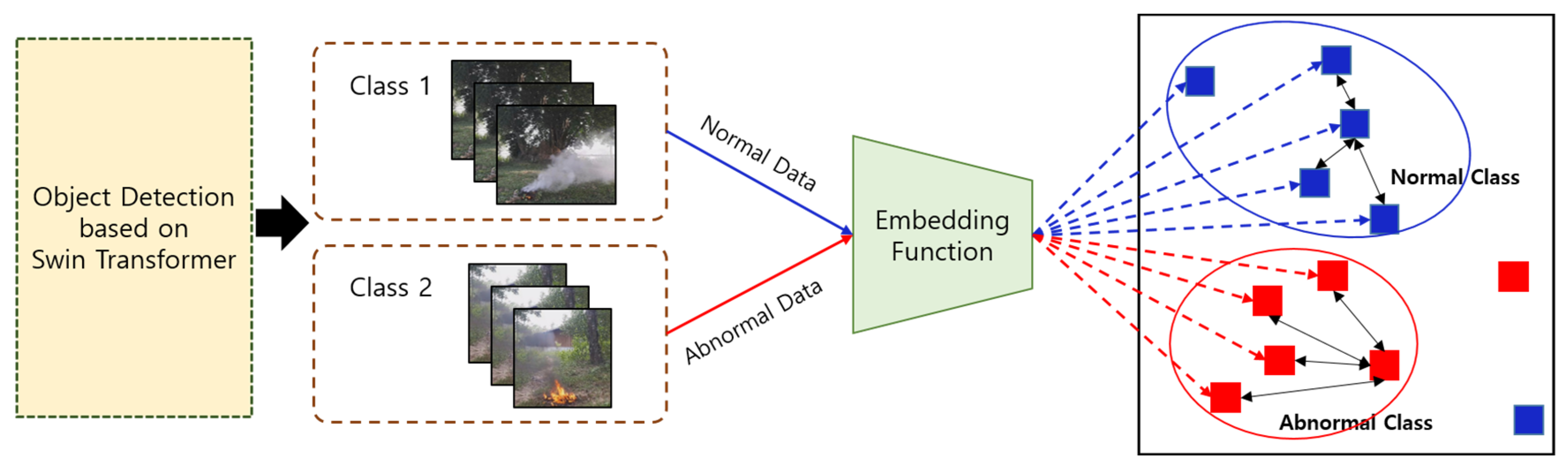

The Vision Transformer model uses the transformer structure as it is, so it scales well. In transfer learning, it uses fewer computational resources than convolutional neural networks [29]. However, it needs a very large quantity of data for learning, because it has few inductive biases, that is, built-in assumptions used to predict the output for input data that the model encounters for the first time. Therefore, an approach is needed that learns efficiently from a small amount of data and prevents information loss. For this reason, meta-learning, which achieves effective results with small quantities of data, is applied [30]. In particular, Few-Shot Learning, one of the meta-learning methods, supports model learning with a small amount of image data. It uses a training dataset composed of images and labels as the support set and the dataset used to evaluate the model as the query set [31]. Few-Shot Learning is classified into data-based and model-based approaches. The data-based approach either applies transformations to the given support set or creates data for model learning through a GAN [32]. It is simple and intuitive. The support set, however, carries no clear information on the object of interest. The model-based approach either lets a model learn the similarity between feature vectors, so that the model can identify whether images belong to the same class or to different classes, or prevents the model from overfitting to small quantities of data. One model-based approach is the metric-based method, which learns a similarity or distance between images. Figure 6 shows the structure of the Few-Shot Learning-based object detection mining.

Figure 6.

The structure of the Few-Shot Learning-based object detection mining.
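The split into a support set and a query set can be made concrete with a short episode-sampling sketch. The N-way K-shot episode protocol, the function name `sample_episode`, and the toy label list are assumptions for illustration; the exact sampling procedure used in this study is not specified.

```python
import random
from collections import defaultdict


def sample_episode(labels, n_way, k_shot, n_query, seed=None):
    """Sample one N-way K-shot episode from a list of class labels.

    Returns (support, query): two lists of dataset indices. For each of the
    n_way sampled classes, k_shot indices go to the support set and
    n_query different indices go to the query set.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    # Only classes with enough examples can take part in an episode.
    eligible = [c for c, idxs in by_class.items() if len(idxs) >= k_shot + n_query]
    classes = rng.sample(eligible, n_way)
    support, query = [], []
    for c in classes:
        picked = rng.sample(by_class[c], k_shot + n_query)
        support.extend(picked[:k_shot])
        query.extend(picked[k_shot:])
    return support, query


# Toy label list using the smoke classes named in the text.
labels = ["white smoke"] * 10 + ["gray smoke"] * 10 + ["black smoke"] * 10
support, query = sample_episode(labels, n_way=2, k_shot=3, n_query=4, seed=0)
```

Because the support and query indices of a class are drawn without replacement from the same pool, no image appears in both sets within an episode.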

For prediction, the class of the support set that has the highest similarity to the query data is used. To let a model learn similarities, a large amount of labeled data can be used. For example, to learn similarities between dog and cat images, ImageNet data can be used. Here, a class to be predicted is called a novel class (target domain), and the labeled data used for learning are called the base class (source domain). Few-Shot Learning is possible even if only a small amount of novel class data is available [33]. Therefore, this study builds a distance-based feature extractor and improves the efficiency of object detection.
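The metric-based prediction rule described above, in which a query is assigned to the most similar support class, can be sketched as follows. This sketch uses nearest class means with squared Euclidean distance (a prototypical-network-style rule), which is one common metric-based choice and is not necessarily the one used in this study. The two-dimensional feature vectors stand in for the output of a feature extractor and are made up for illustration.

```python
import numpy as np


def class_prototypes(support_features, support_labels):
    """Average the support feature vectors of each class into one prototype."""
    classes = np.unique(support_labels)
    protos = np.stack([
        support_features[support_labels == c].mean(axis=0) for c in classes
    ])
    return classes, protos


def classify_queries(query_features, classes, protos):
    """Assign each query to the class whose prototype is closest."""
    diff = query_features[:, None, :] - protos[None, :, :]
    dist = (diff ** 2).sum(axis=-1)  # squared Euclidean distance, (queries, classes)
    return classes[dist.argmin(axis=1)]


# Hypothetical 2-D features for a 2-way 2-shot support set.
support_features = np.array([[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0]])
support_labels = np.array(["white smoke", "white smoke", "black smoke", "black smoke"])
query_features = np.array([[0.2, 0.4], [9.0, 10.0]])

classes, protos = class_prototypes(support_features, support_labels)
pred = classify_queries(query_features, classes, protos)
```

A cosine similarity could be substituted for the distance without changing the structure: only the line that computes `dist` and the use of `argmin` (which would become `argmax`) would differ.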

4. Results and Performance Evaluation

4.1. Swin Transformer-Based Explainable Object Detection Using Meta-Learning Mining

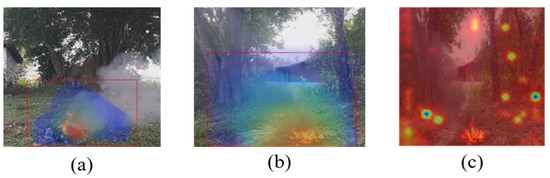

Explainable AI techniques differ depending on the scope of the explanation. A global technique explains all results that a model predicts [34]. Since it covers all prediction results of a model, its explanatory power is broad. The technique, however, is less feasible and has weak explanatory power for the features of individual prediction results. A local technique explains particular decisions and prediction results, so its scope is small. Therefore, the technique is relatively feasible and has strong explanatory power for a small number of prediction results [35]. In addition, an explainable AI approach can be applied universally, regardless of the model. This study applies explainable AI to object detection and visualization. First, when the target detection score is extracted for explainable object detection, the detected box can differ from the actual target. For this reason, the detection score is multiplied by the overlap score between the correct target and the detected box. Based on the final score, the proposed object detection model determines the level of prediction reliability for an object and the accuracy of its position. Second, Grad-CAM combines feature maps using gradient signals, which does not require any changes to the network. It assigns importance using the gradient information flowing into a specific layer of the CNN. Therefore, it is possible to overcome the restrictions on model structure and to explain the structure and results of various models. Figure 7 shows the results drawn from the vision transformer-based explainable object detection using meta-learning mining.

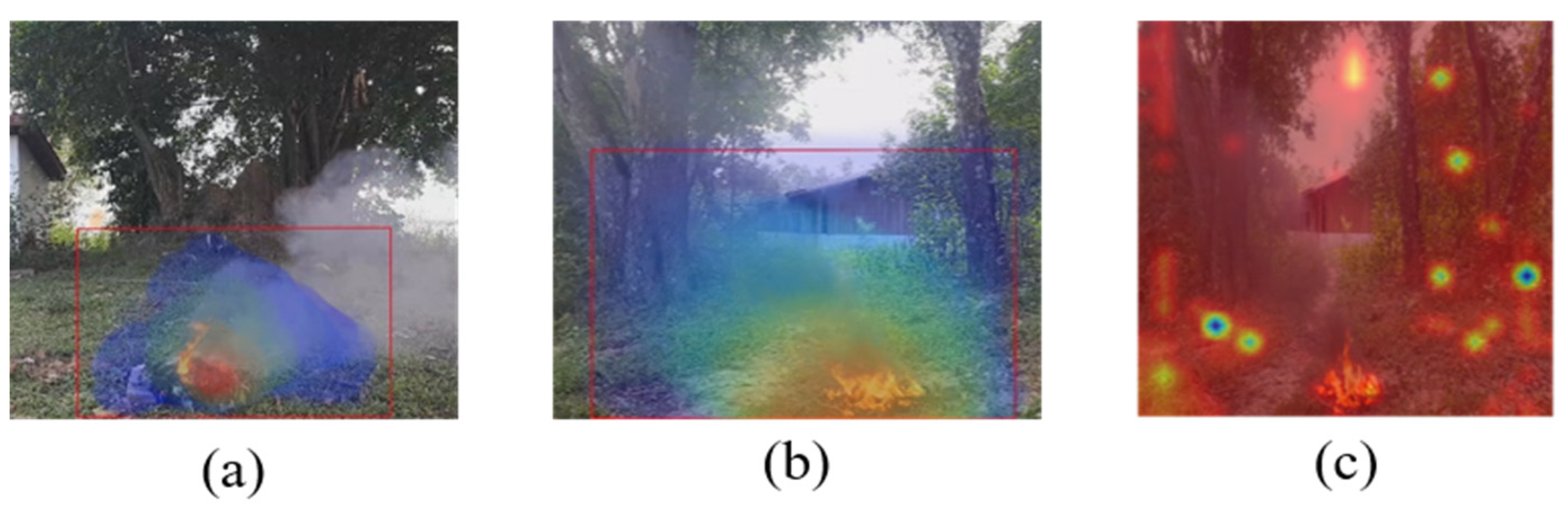

Figure 7.

The results drawn from the Vision Transformer-based explainable object detection using meta-learning mining. (a,b) Grad-CAM normally captures the highest importance in fire prediction as fire. (c) incorrectly detected results.

Figure 7a,b shows that Grad-CAM correctly assigns the highest importance to the fire in fire prediction. The low-importance regions correspond to the smoke occurring around the fire. However, there is a disadvantage in that smoke far from the fire could not be detected. In addition, Figure 7c shows an incorrectly detected result. The entire scene was detected rather than the fire region. It is judged that the model focused more on other objects. Thus, the generalization performance for the dataset is poor.
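The two explainability components of this section, the overlap-weighted detection score and the gradient-weighted combination of feature maps, can be sketched in NumPy as follows. The sketch assumes that the overlap score is the intersection over union (IoU) of the detected and target boxes and that the activations and gradients of the chosen layer have already been extracted as arrays of shape (C, H, W); both are assumptions, and all function names and numbers are illustrative.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Combine feature maps into a heat map, Grad-CAM style.

    activations, gradients: arrays of shape (C, H, W) taken from one layer,
    where gradients are those of the class score w.r.t. the activations.
    """
    weights = gradients.mean(axis=(1, 2))              # one importance weight per channel
    cam = np.tensordot(weights, activations, axes=1)   # weighted sum over channels, (H, W)
    cam = np.maximum(cam, 0.0)                         # keep only positive evidence (ReLU)
    peak = cam.max()
    return cam / peak if peak > 0 else cam             # scale to [0, 1] for display


def iou(box_a, box_b):
    """Intersection over union of two [x_min, y_min, x_max, y_max] boxes."""
    x1, y1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    x2, y2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def reliability_score(confidence, detected_box, target_box):
    """Detection confidence multiplied by the overlap with the target box."""
    return confidence * iou(detected_box, target_box)


# Toy layer output with two channels on a 2 x 2 grid.
acts = np.zeros((2, 2, 2))
acts[0, 0, 0] = 2.0
acts[1, 1, 1] = 4.0
grads = np.ones((2, 2, 2))
grads[1] = -1.0  # the second channel counts against the class
cam = grad_cam(acts, grads)

score = reliability_score(0.7, [0, 0, 2, 2], [1, 1, 3, 3])
```

In the toy example the second channel receives a negative weight, so its activation is removed by the ReLU and only the location highlighted by the first channel remains in the heat map.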

4.2. Performance Evaluation

In this paper, 35,159 fire prediction image data points provided by AI Hub are used for performance evaluation. To prevent overfitting, the data are split into a training dataset (50%), a validation dataset (10%), and a test dataset (40%). The implementation environment consists of an AMD Ryzen 9 5900X 12-Core Processor (3.70 GHz), 96 GB of RAM, an NVIDIA GeForce RTX 3090, and Python 3.6, among others. For the performance evaluation of the proposed method, its object detection accuracy is compared. Average Precision (AP), which is used to compare the performance of object detectors, is the area under the precision-recall curve (AUC) [36]. Generally, a precision-recall curve zigzags up and down. When such curves cross each other, it is hard to compare different detectors in the same plot. Therefore, AP, which summarizes the curve as a single value, is suitable for comparing different detectors [37]. In this study, APs for multiple classes are needed. Thus, the AP value for each class is calculated, and their mean is taken as the Mean Average Precision (mAP). Each AP value is obtained by interpolating all points. Equation (1) presents the interpolation of all points:

$$AP = \sum_{n} (r_{n+1} - r_n)\, p_{\mathrm{interp}}(r_{n+1}), \qquad p_{\mathrm{interp}}(r_{n+1}) = \max_{\tilde{r} \ge r_{n+1}} p(\tilde{r}) \qquad (1)$$

Here, $r_n$ is the n-th recall level, and $p(\tilde{r})$ is the precision measured at recall $\tilde{r}$.

The higher the mAP value, the higher the accuracy; the lower the value, the lower the accuracy. Equation (2) presents mAP:

$$mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i \qquad (2)$$

Here, $AP_i$ represents the AP value of the i-th class, and $N$ is the total number of classes to evaluate.
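The all-point interpolation and the class-wise mean described above can be sketched in a few lines of Python. The function names and the precision-recall values are made up for illustration and are not results from this study; the interpolation follows the usual convention of replacing each precision by the maximum precision at any higher recall.

```python
def average_precision(recalls, precisions):
    """All-point interpolated AP.

    recalls must be sorted in ascending order, with precisions[i] measured
    at recalls[i].
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Interpolation: each precision becomes the maximum precision to its right.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum the rectangle areas between consecutive recall levels.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(ap_per_class):
    """Mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Hypothetical precision-recall points for one class.
ap_example = average_precision([0.5, 1.0], [1.0, 0.5])

# Hypothetical per-class AP values.
map_example = mean_average_precision({"white smoke": 0.5, "black smoke": 1.0})
```

For the toy curve, the area is 0.5 × 1.0 for the first recall interval plus 0.5 × 0.5 for the second, which gives an AP of 0.75.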

For performance evaluation, firstly, the performance of the proposed method is evaluated. Standard Swin Transformer, Swin Transformer + YOLO, and Swin Transformer + YOLO + Few-Shot Learning are evaluated in terms of performance. Table 1 shows the results of the performance evaluation for each method [38].

Table 1.

The results of the performance evaluation for each method.

In the evaluation results of Table 1, the mAP result of the standard Swin Transformer is evaluated as 46.5. Additionally, in the case of Swin Transformer + YOLO, the mAP is 50.54, which is higher than standard Swin Transformer [39]. This is considered to be because Swin Transformer + YOLO can detect object information and pixel information in various scales. In addition, in the case of the proposed Swin Transformer + YOLO + Few-Shot Learning (ours), the extracted mAP was the highest at 51.2.

Secondly, the proposed method is compared with conventional object detection methods in order to evaluate its relative performance. The network used in the proposed method has a feature pyramid structure, so the comparison targets object detection models based on a feature pyramid structure. The models used for comparison are YOLACT, YOLACT++, and YOLOS. Table 2 shows the results of the performance comparison between object detection algorithms.

Table 2.

The results of the performance comparison between object detection algorithms.

Table 2 compares the mAP of the YOLACT, YOLACT++, and YOLOS models with that of the proposed model. The YOLACT and YOLACT++ models have the advantage of real-time detection [42]. In this comparison, the mAP of the proposed method is higher than that of the compared models by about 17. Therefore, the proposed method makes it possible to predict and monitor fire occurrence in real time.

In addition, accuracy and F-measure are computed from the confusion matrix to evaluate the classification results of each model. Equation (3) shows the accuracy based on the confusion matrix.

Additionally, Equation (4) shows the F-measure.

In Equations (3) and (4), TP (True Positive) is the case where the predicted value is true and the actual value is true. TN (True Negative) is the case where the predicted value is false and the actual value is false. FP (False Positive) is the case where the predicted value is true but the actual value is false. FN (False Negative) is the case where the predicted value is false but the actual value is true. Table 3 shows the performance evaluation results based on the confusion matrix.
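
The two measures can be computed directly from the four counts. The sketch below assumes the standard definitions: accuracy is (TP + TN) / (TP + TN + FP + FN), and the F-measure is the harmonic mean of precision and recall, which simplifies to 2TP / (2TP + FP + FN). The function names and the counts in the example are hypothetical and do not come from Table 3.

```python
def accuracy(tp, tn, fp, fn):
    """Share of all predictions that are correct."""
    total = tp + tn + fp + fn
    return (tp + tn) / total if total else 0.0


def f_measure(tp, fp, fn):
    """Harmonic mean of precision and recall, written in terms of counts."""
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0


# Made-up confusion-matrix counts for a single model.
tp, tn, fp, fn = 40, 45, 5, 10
print("accuracy:", accuracy(tp, tn, fp, fn))
print("F-measure:", f_measure(tp, fp, fn))
```

Note that TN does not appear in the F-measure, so it is less affected than accuracy when negative samples dominate the data.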

Table 3.

The performance evaluation results based on the confusion matrix.

According to Table 3, the proposed method performs about 2–4% better than the other methods. YOLACT and YOLACT++ also detect smoke in occluded parts through Mask R-CNN. YOLOS loses information while splitting images into patches, so its accuracy is low. In contrast, the proposed method performs better than the other methods because it detects objects at various scales and minimizes information loss during patch construction.

The last evaluation measures FPS to assess the suitability of the proposed method for real-time detection. In object detection, FPS (frames per second) is the number of frames processed per second. Table 4 shows the FPS performance evaluation results.
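
As an illustration of how such a rate can be measured, the sketch below times a detector over a list of frames and divides the frame count by the elapsed time. It is not the measurement procedure used in this study: `measure_fps`, `dummy_detect`, and the warm-up count are hypothetical, and the dummy detector only sleeps for one millisecond in place of real inference.

```python
import time


def measure_fps(detect, frames, warmup=2):
    """Return frames processed per second by `detect` over `frames`."""
    # Run a few frames first so one-time setup cost is not timed.
    for frame in frames[:warmup]:
        detect(frame)

    start = time.perf_counter()
    for frame in frames:
        detect(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else float("inf")


def dummy_detect(frame):
    """Stand-in for a real detector; sleeps instead of running a model."""
    time.sleep(0.001)
    return []


fps = measure_fps(dummy_detect, list(range(20)))
print(f"{fps:.1f} frames per second")
print("meets the 30 fps threshold:", fps >= 30)
```

With a real model, the measured value depends on the hardware, the input resolution, and the batch size, so FPS figures are only comparable when these are held fixed.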

Table 4.

The FPS performance evaluation results.

In Table 4, the proposed method does not achieve the highest FPS. However, video at 30 fps is generally perceived as smooth and uninterrupted, and the proposed method runs above 30 fps, so it can be judged suitable for real-time detection.

5. Conclusions

This study proposed a Swin Transformer-based object detection model using explainable meta-learning mining. The proposed method consists of three steps. In the first step, fire prediction data were collected. In the second step, Swin Transformer-based object detection was carried out. A conventional vision transformer ignores local image structures such as lines and edges when it transforms images into patches, which causes information loss. To solve this problem, the shifted window of the Swin Transformer was applied. The feature pyramid network of YOLOv3 was also used to detect objects of various sizes. However, YOLOv3 alone has difficulty detecting small objects, so it was combined with the Swin Transformer in order to detect objects at various scales. In the third step, meta-learning-based object detection mining was applied. This approach learns efficiently from a small amount of data and prevents information loss. Few-Shot Learning, one of the meta-learning methods, was used; it generated a distance-based feature extractor and improved object detection. To find the cause of the object classification results of the proposed method, explainable visualization was applied. With Grad-CAM, it was possible to keep a high resolution, reduce noise, and easily find the cause of a classification. The performance of the proposed method was evaluated step by step. In addition, the proposed and existing methods were compared in terms of mAP and of the accuracy and F-measure derived from the confusion matrix. In these evaluations, the proposed method outperformed the existing methods, because it overcomes the disadvantages of the existing vision transformer and can detect even small areas. In addition, the FPS of the proposed method was measured at above 30 fps. Therefore, the proposed method makes it possible to monitor CCTV continuously and detect abnormalities effectively. However, generalization across data was not considered, so the method is limited in the data to which it can be applied. To address this, future research will improve generalization performance by adjusting parameters for various datasets and combining models.

Author Contributions

Conceptualization, J.-W.B.; methodology, J.-W.B. and K.C.; validation, J.-W.B. and K.C.; writing—original draft preparation, J.-W.B. and K.C.; writing—review and editing, J.-W.B. and K.C.; visualization, J.-W.B. and K.C.; supervision, K.C.; project administration, K.C.; funding acquisition, K.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Kyonggi University Research Grant 2022.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. National Fire Information System. Available online: https://nfds.go.kr/ (accessed on 5 November 2022).

2. Sharma, R.; Rani, S.; Memon, I. A smart approach for fire prediction under uncertain conditions using machine learning. Multimed. Tools Appl. 2020, 79, 28155–28168.

3. Bui, K.H.N.; Yi, H.; Cho, J. A multi-class multi-movement vehicle counting framework for traffic analysis in complex areas using cctv systems. Energies 2020, 13, 2036.

4. Zhao, Z.Q.; Zheng, P.; Xu, S.T.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

5. Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of Yolo algorithm developments. Procedia Comput. Sci. 2022, 199, 1066–1073.

6. Ji, Y.; Zhang, H.; Zhang, Z.; Liu, M. CNN-based encoder-decoder networks for salient object detection: A comprehensive review and recent advances. Inf. Sci. 2021, 546, 835–857.

7. Arnab, A.; Dehghani, M.; Heigold, G.; Sun, C.; Lučić, M.; Schmid, C. Vivit: A video vision transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021.

8. von Eschenbach, W.J. Transparency and the black box problem: Why we do not trust AI. Philos. Technol. 2020, 34, 1607–1622.

9. Minh, D.; Wang, H.X.; Li, Y.F.; Nguyen, T.N. Explainable artificial intelligence: A comprehensive review. Artif. Intell. Rev. 2022, 55, 3503–3568.

10. Jiao, L.; Zhang, F.; Liu, F.; Yang, S.; Li, L.; Feng, Z.; Qu, R. A survey of deep learning-based object detection. IEEE Access 2019, 7, 128837–128868.

11. Dai, X.; Chen, Y.; Xiao, B.; Chen, D.; Liu, M.; Yuan, L.; Zhang, L. Dynamic head: Unifying object detection heads with attentions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7373–7382.

12. Cheng, L.; Li, J.; Duan, P.; Wang, M. A small attentional YOLO model for landslide detection from satellite remote sensing images. Landslides 2021, 18, 2751–2765.

13. Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S.; Shah, M. Transformers in vision: A survey. ACM Comput. Surv. 2022, 54, 1–41.

14. Fang, Y.; Liao, B.; Wang, X.; Fang, J.; Qi, J.; Wu, R.; Niu, J.; Liu, W. You only look at one sequence: Rethinking transformer in vision through object detection. Adv. Neural Inf. Process. Syst. 2021, 34, 26183–26197.

15. Zhang, Z.; Lu, X.; Cao, G.; Yang, Y.; Jiao, L.; Liu, F. ViT-YOLO: Transformer-based YOLO for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021.

16. Hospedales, T.; Antoniou, A.; Micaelli, P.; Storkey, A. Meta-learning in neural networks: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 5149–5169.

17. Rusu, A.A.; Rao, D.; Sygnowski, J.; Vinyals, O.; Pascanu, R.; Osindero, S.; Hadsell, R. Meta-learning with latent embedding optimization. arXiv 2018, arXiv:1807.05960.

18. Gupta, A.; Eysenbach, B.; Finn, C.; Levine, S. Unsupervised meta-learning for reinforcement learning. arXiv 2018, arXiv:1806.04640.

19. Yao, H.; Wu, X.; Tao, Z.; Li, Y.; Ding, B.; Li, R.; Li, Z. Automated relational meta-learning. arXiv 2020, arXiv:2001.00745.

20. Yu, W.; Luo, M.; Zhou, P.; Si, C.; Zhou, Y.; Wang, X.; Feng, J.; Yan, S. Metaformer is actually what you need for vision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

21. Zhu, H.; Cai, X.; Dou, J.; Gao, Z.; Zhang, L. Multi-level adaptive few-shot learning network combined with vision transformer. J. Ambient Intell. Humaniz. Comput. 2022, 2022, 1–15.

22. Saeed, F.; Paul, A.; Hong, W.H.; Seo, H. Machine learning based approach for multimedia surveillance during fire emergencies. Multimed. Tools Appl. 2020, 79, 16201–16217.

23. Tang, Z.; Liu, X.; Chen, H.; Hupy, J.; Yang, B. Deep learning based wildfire event object detection from 4K aerial images acquired by UAS. AI 2020, 1, 166–179.

24. Mohana Kumar, S.; Sowmya, B.J.; Priyanka, S.; Ruchita Sharma, S.T. Forest Fire Prediction Using Image Processing and Machine Learning. Nat. Volatiles Essent. 2021, 8, 13116–13134.

25. AI Hub. Available online: https://aihub.or.kr/ (accessed on 5 September 2022).

26. Lee, Y.H.; Kim, Y. Comparison of CNN and YOLO for Object Detection. J. Semicond. Disp. Technol. 2020, 19, 85–92.

27. Dai, Y.; Liu, W.; Wang, H.; Xie, W.; Long, K. YOLO-Former: Marrying YOLO and Transformer for Foreign Object Detection. IEEE Trans. Instrum. Meas. 2020, 71, 5026114.

28. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021.

29. Fan, H.; Xiong, B.; Mangalam, K.; Li, Y.; Yan, Z.; Malik, J.; Feichtenhofer, C. Multiscale vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021.

30. Wang, J.X. Meta-learning in natural and artificial intelligence. Curr. Opin. Behav. Sci. 2021, 38, 90–95.

31. Wang, Y.; Yao, Q.; Kwok, J.T.; Ni, L.M. Generalizing from a few examples: A survey on few-shot learning. ACM Comput. Surv. 2020, 53, 1–34.

32. Wang, M.; Ning, H.; Liu, H. Object detection based on few-shot learning via instance-level feature correlation and aggregation. Appl. Intell. 2022, 53, 351–368.

33. Jiang, W.; Huang, K.; Geng, J.; Deng, X. Multi-scale metric learning for few-shot learning. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 1091–1102.

34. Onchis, D.M.; Gillich, G.R. Stable and explainable deep learning damage prediction for prismatic cantilever steel beam. Comput. Ind. 2021, 125, 103359.

35. Gulum, M.A.; Trombley, C.M.; Kantardzic, M. A review of explainable deep learning cancer detection models in medical imaging. Appl. Sci. 2021, 11, 4573.

36. Chen, K.; Lin, W.; Li, J.; See, J.; Wang, J.; Zou, J. AP-loss for accurate one-stage object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3782–3798.

37. Xu, M.; Zhang, Z.; Hu, H.; Wang, J.; Wang, L.; Wei, F.; Bai, X.; Liu, Z. End-to-end semi-supervised object detection with soft teacher. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021.

38. Yoo, H.; Chung, K. Deep learning-based evolutionary recommendation model for heterogeneous big data integration. KSII Trans. Internet Inf. Syst. 2020, 14, 3730–3744.

39. Yoo, H.; Park, R.C.; Chung, K. IoT-Based Health Big-Data Process Technologies: A Survey. KSII Trans. Internet Inf. Syst. 2021, 15, 974–992.

40. Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. Yolact: Real-time instance segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

41. Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. Yolact++: Better real-time instance segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 1108–1121.

42. Kim, J.C.; Chung, K. Neural-Network based Adaptive Context Prediction Model for Ambient Intelligence. J. Ambient Intell. Humaniz. Comput. 2020, 11, 1451–1458.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).