Large-Scale Identification and Analysis of Factors Impacting Simple Bug Resolution Times in Open Source Software Repositories

Abstract

1. Introduction

- (A)

- Time factors

- (1)

- Is the time it takes to fix a bug affected by the day of the week in which the bug was written?

- (2)

- Is the time it takes to fix a bug affected by the time of the day in which it was written?

- (B)

- Author factors

- (1)

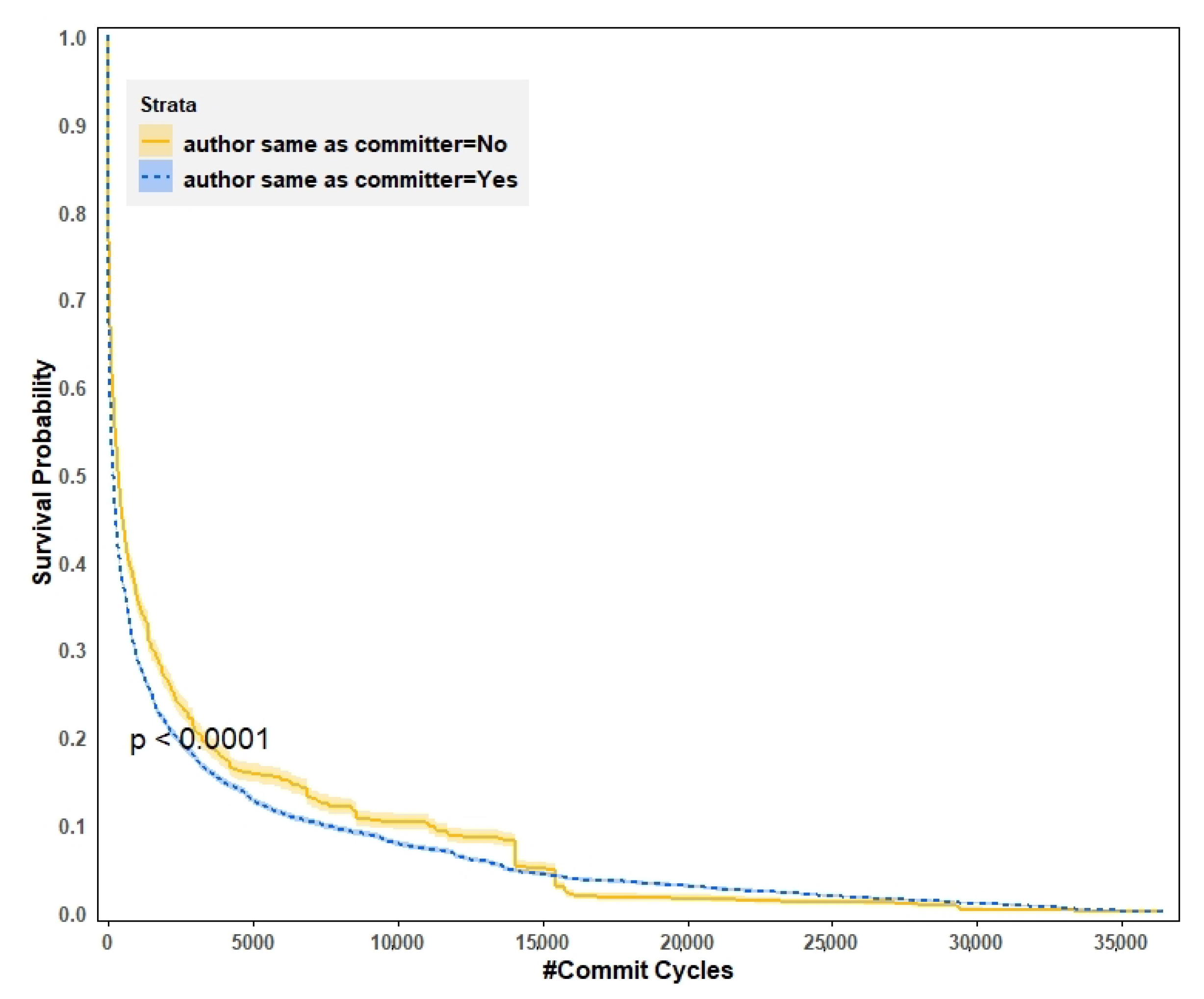

- Is the time it takes to fix a bug different if the buggy code is authored and committed by different users?

- (2)

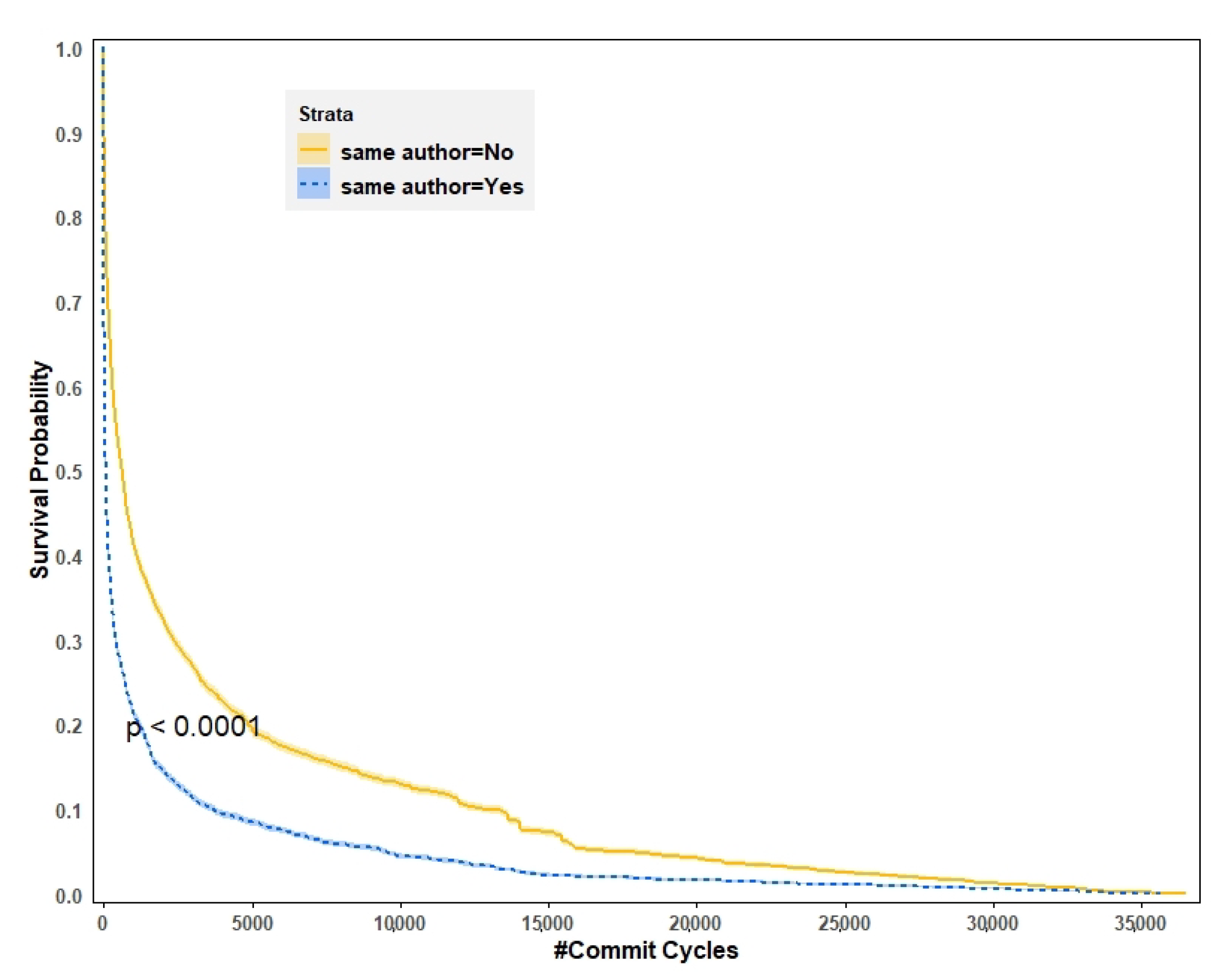

- Is the time it takes to fix a bug different if the same user authored both the initial buggy code and the fix code?

- (3)

- Is the time it takes to fix a bug affected by the amount of active users in the project?

2. Related Works

3. Materials and Methods

3.1. Materials

3.1.1. The ManySStuBs4J Dataset

- Bug Type: The type of bug, one of the 16 possible bug patterns;

- Fix Commit Hash: Identifier of the commit fixing the bug;

- Bug File Path: Path of the fixed file;

- Git Diff: The changes made when the bug was fixed, along with the original buggy code [21];

- Project Name: The project identifier, in the format repository_owner.repository_name;

- Bug Line Number: The line number where the bug existed in the buggy version of the file;

- Fix Code: The code that fixed the bug.
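The Project Name field is enough to reconstruct the `<project git link>` passed to `git clone` in Listing 1. The Python sketch below assumes that every project is hosted on GitHub at `https://github.com/<owner>/<repo>.git`. The helper `clone_url` and the example project names are hypothetical and are not part of ManySStuBs4J or of the authors' tooling. The helper splits on the first dot only, because GitHub owner names cannot contain dots while repository names can.

```python
def clone_url(project_name: str) -> str:
    """Map 'repository_owner.repository_name' to an HTTPS clone URL on GitHub (assumed host)."""
    # Split on the first dot only: GitHub owner names cannot contain dots,
    # but repository names can (e.g. "owner.repo.with.dots").
    owner, sep, repo = project_name.partition(".")
    if not sep or not owner or not repo:
        raise ValueError(f"unexpected project name: {project_name!r}")
    return f"https://github.com/{owner}/{repo}.git"


# Hypothetical project name, used only for illustration.
print(clone_url("example-owner.example-repo"))
# https://github.com/example-owner/example-repo.git
```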

3.1.2. Traversal Algorithm

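Listing 1. Git commands used in the traversal algorithm.

```shell
# 1. Clone the project repository into a local folder
git clone <project git link> <foldername>
# 2. Print author email, author date, committer email, and committer date (one per line)
git log -1 --format='%ae%n%ai%n%ce%n%ci' --date=iso <commit hash>
# 3. List hashes of commits that changed the number of occurrences of the buggy code
git log --source --pretty='%H' -S '<buggy code>' -- <Bug File Path>
# 4. Show a candidate bug-introducing commit, limited to the buggy file
git show <possible initial commit hash> -- <file path to buggy code>
```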
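Command 2 in Listing 1 returns the author and committer of a commit, which research question (B)(1) requires. The following is a minimal Python sketch of running and parsing that command, assuming a local clone produced by command 1. It asks git for the four fields one per line (via the `%n` placeholder) so that dates, which contain spaces, cannot run into the e-mail addresses. The names `LOG_FORMAT`, `CommitIdentity`, `parse_log_fields`, and `commit_identity` are hypothetical. Comparing e-mail addresses case-insensitively to decide whether author and committer are the same user is also our assumption, not a rule stated in the paper.

```python
import subprocess
from dataclasses import dataclass

# One field per line, so that dates (which contain spaces) never merge with e-mails.
LOG_FORMAT = "%ae%n%ai%n%ce%n%ci"


@dataclass
class CommitIdentity:
    author_email: str
    author_date: str
    committer_email: str
    committer_date: str

    @property
    def same_author_and_committer(self) -> bool:
        # Assumption: e-mail addresses are compared case-insensitively.
        return self.author_email.lower() == self.committer_email.lower()


def parse_log_fields(output: str) -> CommitIdentity:
    """Parse the four lines printed by `git log -1 --format=LOG_FORMAT <hash>`."""
    lines = output.rstrip("\n").split("\n")
    if len(lines) != 4:
        raise ValueError(f"expected 4 lines, got {len(lines)}")
    return CommitIdentity(*lines)


def commit_identity(repo_dir: str, commit_hash: str) -> CommitIdentity:
    """Run git inside a local clone and parse author/committer metadata."""
    result = subprocess.run(
        ["git", "-C", repo_dir, "log", "-1", f"--format={LOG_FORMAT}", commit_hash],
        capture_output=True, text=True, check=True,
    )
    return parse_log_fields(result.stdout)
```

Keeping the parser separate from the subprocess call lets it be checked on captured output without a repository at hand.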

- Maximum date: Latest date of the project found in our dataset, padded by one month;

- Minimum date: Earliest date of the project found in our dataset, padded by one month;

- Project time frame: The time span between the minimum and maximum dates;

- Total commits: The total number of commits made during the project time frame;

- Active committers: The number of active committers during the project time frame;

- Active authors: The number of active authors during the project time frame;

- Commit rate per minute: Commits made per minute calculated as outlined in Equation (1).
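Equation (1) is not reproduced in this excerpt. Assuming it divides the total number of commits by the project time frame expressed in minutes, the project-level metrics above can be sketched in Python as follows. The 30-day stand-in for "one month" of padding and all function names are our own assumptions.

```python
from datetime import datetime, timedelta, timezone

# Assumption: "padded by one month" is approximated as 30 days on each side.
PADDING = timedelta(days=30)


def project_time_frame_minutes(earliest: datetime, latest: datetime,
                               padding: timedelta = PADDING) -> float:
    """Minutes between the padded minimum date and the padded maximum date."""
    minimum_date = earliest - padding
    maximum_date = latest + padding
    return (maximum_date - minimum_date).total_seconds() / 60


def commit_rate_per_minute(total_commits: int, earliest: datetime, latest: datetime,
                           padding: timedelta = PADDING) -> float:
    """Assumed form of Equation (1): total commits / project time frame in minutes."""
    minutes = project_time_frame_minutes(earliest, latest, padding)
    if minutes <= 0:
        raise ValueError("project time frame must be positive")
    return total_commits / minutes


# Hypothetical project active from 1 to 11 March 2020 with 1008 commits.
first = datetime(2020, 3, 1, tzinfo=timezone.utc)
last = datetime(2020, 3, 11, tzinfo=timezone.utc)
print(project_time_frame_minutes(first, last))  # 100800.0 (10 days + 2 x 30 days)
print(commit_rate_per_minute(1008, first, last))  # 0.01
```

In this toy example the padding accounts for most of the time frame, which is worth keeping in mind when comparing commit rates of short-lived projects.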

- The bug type;

- The email of the code author who fixed the bug;

- The time and date the code author fixed the bug;

- The email of the code author who introduced the bug;

- The time and date the code author introduced the bug;

- Range of line numbers where the code that introduced the bug was located;

- The total number of commits made in the project;

- The number of active committers in the project;

- The number of active authors in the project;

- Latest date of the project;

- Earliest date of the project;

- Number of minutes it took to fix a bug;

- Project time frame;

- Commit rate per minute;

- Number of commit cycles it took to fix each bug.
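Several of these fields are derived from the two commit timestamps. The sketch below shows one way to compute the number of minutes it took to fix a bug and to flag when the bug was introduced. It assumes timestamps in the format git prints for `%ai`/`%ci` (for example `2020-01-04 10:00:00 +0000`). The 09:00 to 17:00 work-hours window, the evaluation in each timestamp's own UTC offset, and all names are hypothetical choices for illustration. The paper's exact definitions are not given in this excerpt.

```python
from datetime import datetime

# Layout of git's %ai / %ci output, e.g. "2020-01-04 10:00:00 +0000".
GIT_DATE_FORMAT = "%Y-%m-%d %H:%M:%S %z"

# Hypothetical work-hours window (local time of the commit); not the paper's definition.
WORK_START_HOUR, WORK_END_HOUR = 9, 17


def parse_git_date(text: str) -> datetime:
    """Parse a git ISO-like timestamp into a timezone-aware datetime."""
    return datetime.strptime(text.strip(), GIT_DATE_FORMAT)


def minutes_to_fix(introduced: str, fixed: str) -> float:
    """Elapsed minutes between the bug-introducing and the bug-fixing commit."""
    return (parse_git_date(fixed) - parse_git_date(introduced)).total_seconds() / 60


def introduced_on_weekend(introduced: str) -> bool:
    # weekday(): Monday = 0 ... Saturday = 5, Sunday = 6, in the recorded UTC offset.
    return parse_git_date(introduced).weekday() >= 5


def introduced_during_work_hours(introduced: str) -> bool:
    return WORK_START_HOUR <= parse_git_date(introduced).hour < WORK_END_HOUR


intro, fix = "2020-01-04 10:00:00 +0000", "2020-01-04 12:30:00 +0000"
print(minutes_to_fix(intro, fix))  # 150.0
print(introduced_on_weekend(intro))  # True (4 January 2020 was a Saturday)
print(introduced_during_work_hours(intro))  # True
```

Because the parsed datetimes are timezone-aware, the elapsed time is correct even when the two commits were recorded with different UTC offsets.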

3.1.3. Limitations of Data

3.2. Methods

3.2.1. Non-Parametric Tests

3.2.2. Survival Analysis
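As a minimal illustration of the survival-analysis setup, the following Python sketch computes a Kaplan–Meier (product-limit) estimate of the probability that a bug is still unfixed after time t. It is written from scratch and is not the software the authors used. The `observed` flag is included only to show where censoring would enter the estimate. Whether any observations were censored in the study is not stated in this excerpt.

```python
from collections import Counter


def kaplan_meier(durations, observed):
    """Product-limit estimate S(t) = prod over event times t_i <= t of (1 - d_i / n_i).

    durations: time until the fix (e.g. minutes) or until censoring.
    observed:  True if the fix was observed, False if the bug was censored.
    Returns (t_i, S(t_i)) pairs at each distinct time with at least one fix.
    """
    if len(durations) != len(observed):
        raise ValueError("durations and observed must have the same length")
    fixes = Counter(t for t, seen in zip(durations, observed) if seen)  # d_i
    leaving = Counter(durations)  # bugs leaving the risk set at each time
    at_risk = len(durations)  # n_i before the first time point
    survival = 1.0
    curve = []
    for t in sorted(leaving):
        d = fixes.get(t, 0)
        if d:
            survival *= 1 - d / at_risk
            curve.append((t, survival))
        at_risk -= leaving[t]  # fixed and censored bugs both leave after time t
    return curve


# Toy data: four bugs, one of them censored at t = 2.
for t, s in kaplan_meier([1, 2, 2, 3], [True, True, False, True]):
    print(t, round(s, 3))
# prints: 1 0.75 / 2 0.5 / 3 0.0
```

To compare two groups (for example, same-author and different-author fixes), the function is called once per group and the resulting step curves are plotted together.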

4. Results

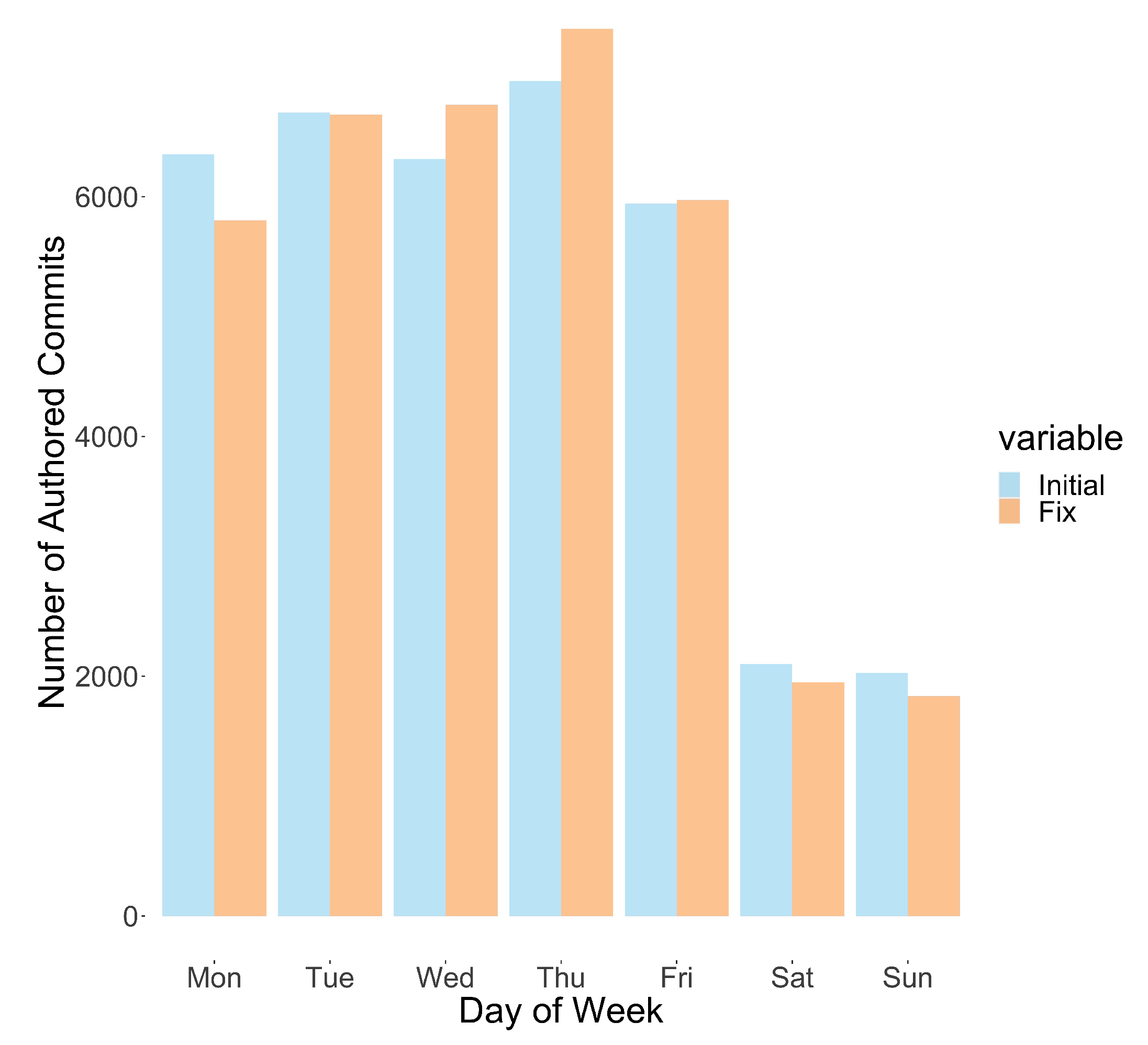

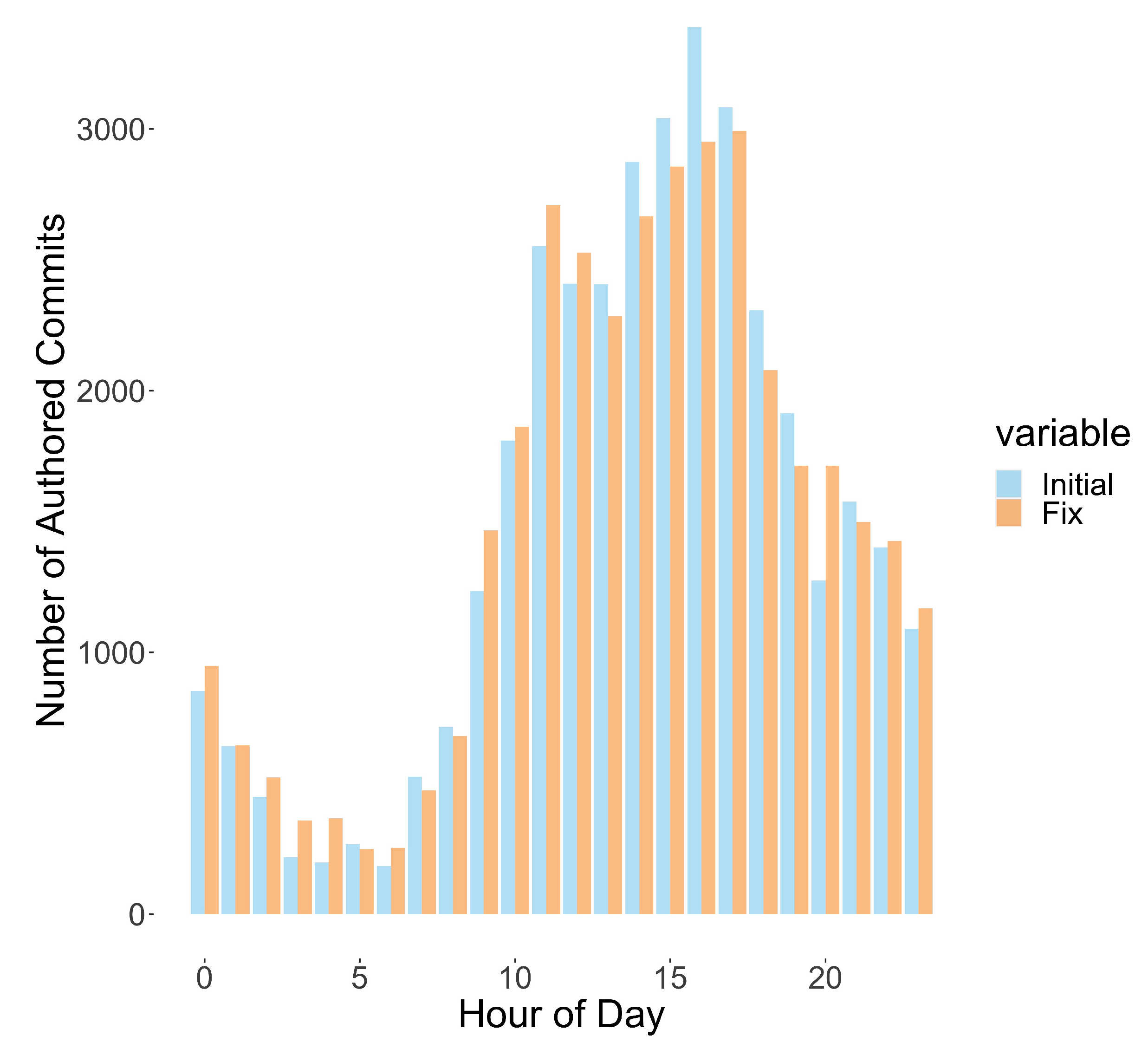

4.1. Descriptive Statistics

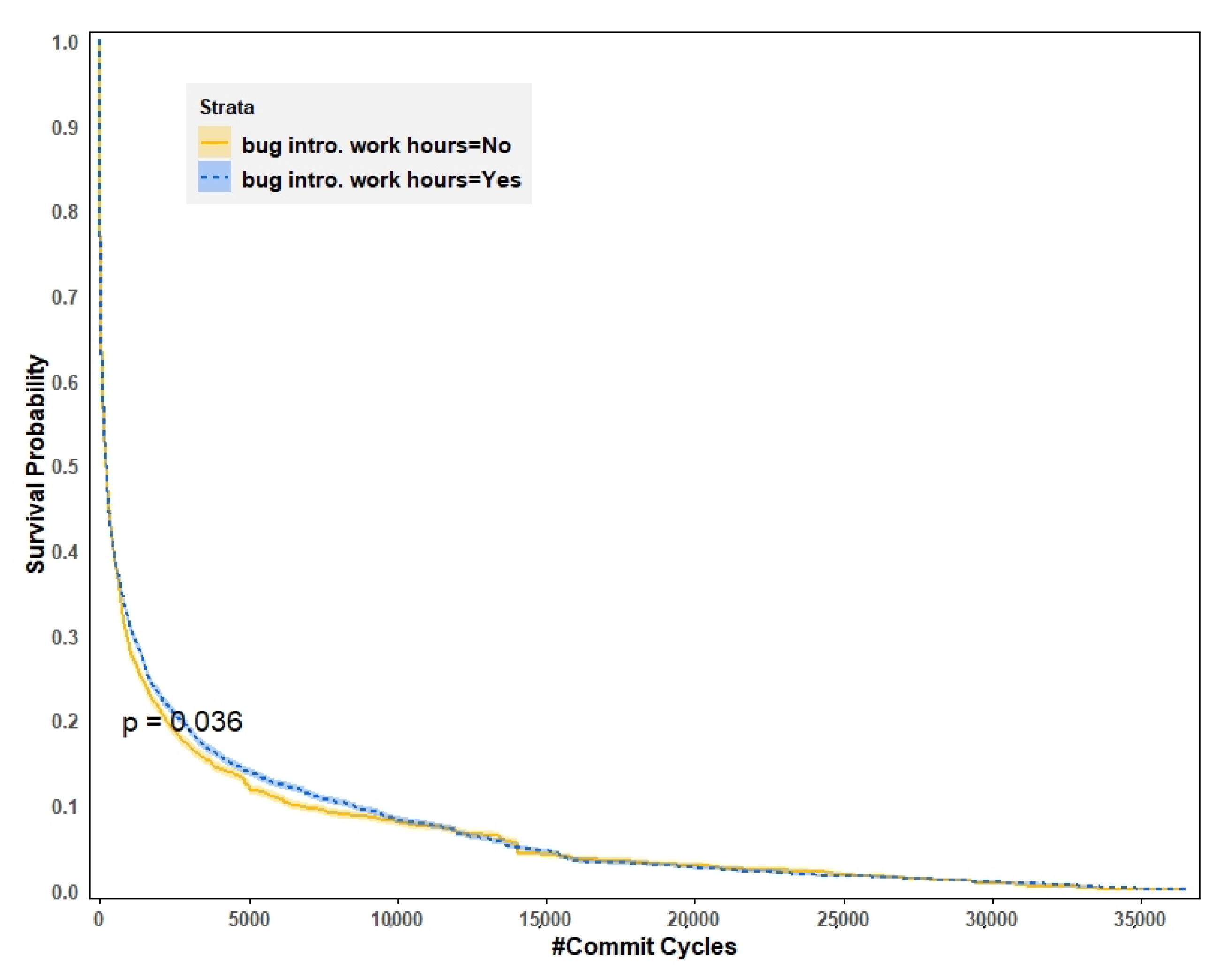

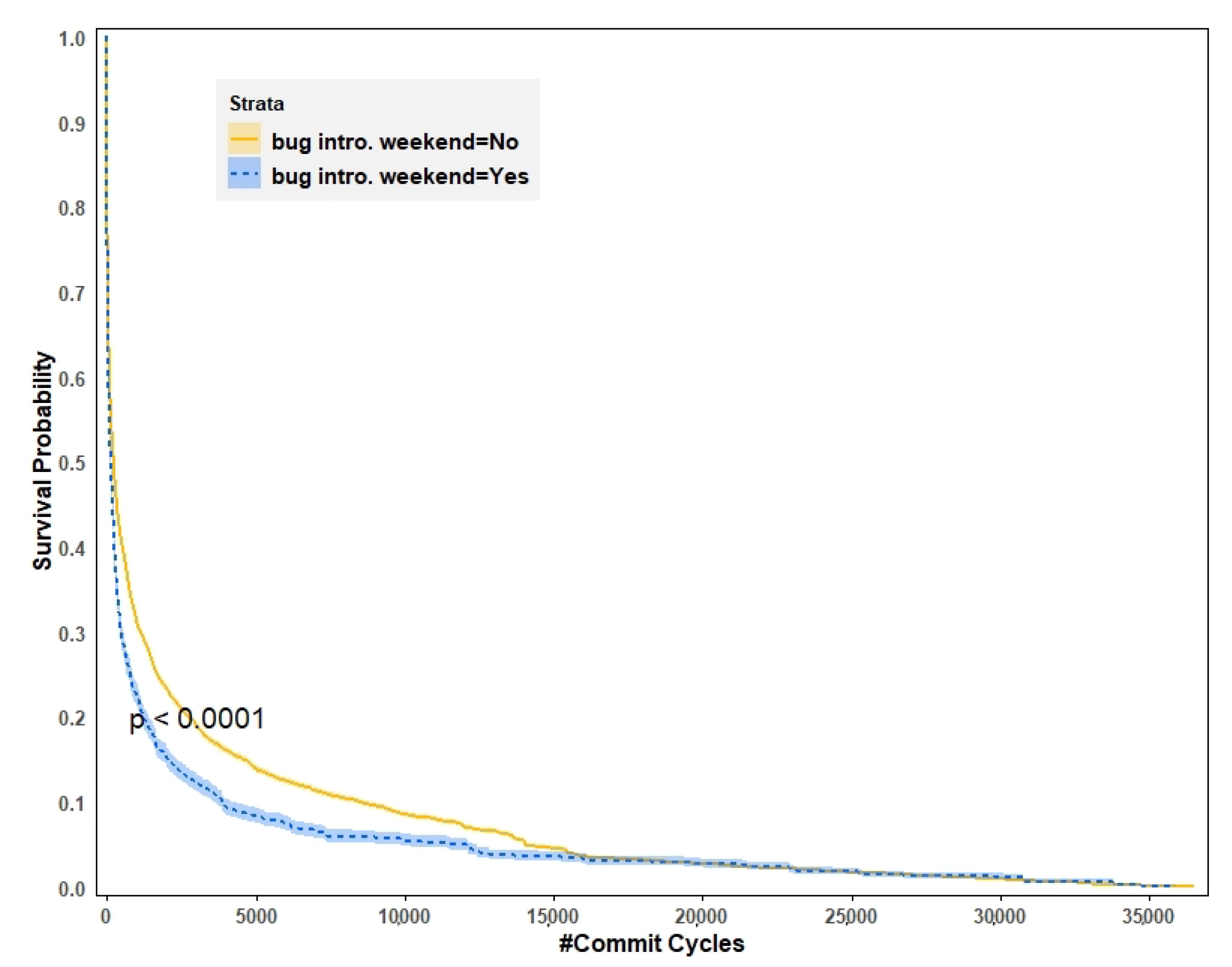

4.2. KM Curves

4.2.1. Bugs Introduced during Work Hours

4.2.2. Bugs Introduced during Weekend

4.2.3. Bugs Introduced and Fixed by the Same Developer

4.2.4. Buggy Code Authored and Committed by the Same Developer

4.3. Cox Proportional-Hazards Model

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| K-M | Kaplan–Meier |
| HR | Hazard Ratio |
| Cox PH | Cox Proportional-Hazards |
| SSTUB | Simple Stupid Bugs |

References

1. Llaguno, M. 2017 Coverity Scan Report. Available online: https://www.synopsys.com/blogs/software-security/2017-coverity-scan-report-open-source-security/ (accessed on 23 April 2021).

2. Chen, C.; Cui, B.; Ma, J.; Wu, R.; Guo, J.; Liu, W. A systematic review of fuzzing techniques. Comput. Secur. 2018, 75, 118–137.

3. Dinella, E.; Dai, H.; Li, Z.; Naik, M.; Song, L.; Wang, K. Hoppity: Learning Graph Transformations to Detect and Fix Bugs in Programs. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

4. Sidiroglou-Douskos, S.; Lahtinen, E.; Rittenhouse, N.; Piselli, P.; Long, F.; Kim, D.; Rinard, M. Targeted Automatic Integer Overflow Discovery Using Goal-Directed Conditional Branch Enforcement. In Proceedings of the Twentieth International Conference on Architectural Support for Programming Languages and Operating Systems, ASPLOS ’15, Istanbul, Turkey, 14–18 March 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 473–486.

5. Nulab. Backlog. 2021. Available online: https://backlog.com/bug-tracking-software/ (accessed on 23 April 2021).

6. Mozilla Foundation. Bugzilla. 2021. Available online: https://www.bugzilla.org/ (accessed on 23 April 2021).

7. Kroah-Hartman, G.; Pakki, A. Linux-NFS Archive on lore.kernel.org. 2021. Available online: https://lore.kernel.org/linux-nfs/YH%2FfM%2FTsbmcZzwnX@kroah.com/ (accessed on 23 April 2021).

8. Padhye, R.; Mani, S.; Sinha, V.S. A study of external community contribution to open source projects on GitHub. In Proceedings of the 11th Working Conference on Mining Software Repositories, Hyderabad, India, 31 May–7 June 2014; pp. 332–335.

9. Marks, L.; Zou, Y.; Hassan, A.E. Studying the Fix-Time for Bugs in Large Open Source Projects. In Proceedings of the 7th International Conference on Predictive Models in Software Engineering, Promise ’11, Banff, AB, Canada, 20–21 September 2011; Association for Computing Machinery: New York, NY, USA, 2011.

10. Francalanci, C.; Merlo, F. Empirical analysis of the bug fixing process in open source projects. In Proceedings of the IFIP International Conference on Open Source Systems, Milan, Italy, 7–10 September 2008; Springer: Berlin/Heidelberg, Germany, 2008; pp. 187–196.

11. Kim, S.; Whitehead, E.J., Jr. How long did it take to fix bugs? In Proceedings of the 2006 International Workshop on Mining Software Repositories, Shanghai, China, 22–23 May 2006; pp. 173–174.

12. Ray, B.; Hellendoorn, V.; Godhane, S.; Tu, Z.; Bacchelli, A.; Devanbu, P. On the “Naturalness” of Buggy Code. arXiv 2015, arXiv:1506.01159.

13. Osman, H.; Lungu, M.; Nierstrasz, O. Mining frequent bug-fix code changes. In Proceedings of the 2014 Software Evolution Week—IEEE Conference on Software Maintenance, Reengineering, and Reverse Engineering (CSMR-WCRE), Antwerp, Belgium, 3–6 February 2014; pp. 343–347.

14. Rodriguez Perez, G.; Zaidman, A.; Serebrenik, A.; Robles, G.; González-Barahona, J. What if a bug has a different origin? Making sense of bugs without an explicit bug introducing change. In Proceedings of the 12th ACM/IEEE International Symposium on Empirical Software Engineering and Measurement, ESEM ’18, Oulu, Finland, 11–12 October 2018; Association for Computing Machinery, Inc.: New York, NY, USA, 2018.

15. Peruma, A.; Newman, C.D. On the Distribution of “Simple Stupid Bugs” in Unit Test Files: An Exploratory Study. arXiv 2021, arXiv:2103.09388.

16. Pan, K.; Kim, S.; Whitehead, E.J. Toward an Understanding of Bug Fix Patterns. Empir. Softw. Eng. 2009, 14, 286–315.

17. Kim, S.; Zimmermann, T.; Pan, K.; Whitehead, E.J., Jr. Automatic Identification of Bug-Introducing Changes. In Proceedings of the 21st IEEE/ACM International Conference on Automated Software Engineering (ASE’06), Tokyo, Japan, 18–22 September 2006; pp. 81–90.

18. Cohen, J. Statistical Power Analysis for the Behavioral Sciences; Academic Press: Cambridge, MA, USA, 2013.

19. Karampatsis, R.; Sutton, C. ManySStuBs4J Dataset. Zenodo 2020.

20. Karampatsis, R.M.; Sutton, C. How often do single-statement bugs occur? The ManySStuBs4J dataset. In Proceedings of the 17th International Conference on Mining Software Repositories, Seoul, Republic of Korea, 29–30 June 2020; pp. 573–577.

21. Chacon, S.; Long, J. Git-Diff Documentation. 2021. Available online: https://git-scm.com/docs/git-diff (accessed on 23 April 2021).

22. Chacon, S.; Long, J. Git-Log Documentation. 2021. Available online: https://git-scm.com/docs/git-log (accessed on 23 April 2021).

23. McKnight, P.E.; Najab, J. Mann-Whitney U Test. Corsini Encycl. Psychol. 2010.

24. Nachar, N. The Mann-Whitney U: A Test for Assessing Whether Two Independent Samples Come from the Same Distribution. Tutorials Quant. Methods Psychol. 2008, 4, 13–20.

25. Fritz, C.; Morris, P.E.; Richler, J.J. Effect size estimates: Current use, calculations, and interpretation. J. Exp. Psychol. Gen. 2012, 141, 2–18.

26. Conover, W.J. Practical Nonparametric Statistics; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 1999.

27. Sentas, P.; Angelis, L.; Stamelos, I. A statistical framework for analyzing the duration of software projects. Empir. Softw. Eng. 2008, 13, 147–184.

28. Aman, H.; Amasaki, S.; Yokogawa, T.; Kawahara, M. A survival analysis of source files modified by new developers. In Proceedings of the International Conference on Product-Focused Software Process Improvement, Innsbruck, Austria, 29 November–1 December 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 80–88.

29. Canfora, G.; Ceccarelli, M.; Cerulo, L.; Di Penta, M. How long does a bug survive? An empirical study. In Proceedings of the 2011 18th Working Conference on Reverse Engineering, Limerick, Ireland, 17–20 October 2011; pp. 191–200.

30. Efron, B. Logistic regression, survival analysis, and the Kaplan-Meier curve. J. Am. Stat. Assoc. 1988, 83, 414–425.

31. Samoladas, I.; Angelis, L.; Stamelos, I. Survival analysis on the duration of open source projects. Inf. Softw. Technol. 2010, 52, 902–922.

32. Bhattacharya, P.; Ulanova, L.; Neamtiu, I.; Koduru, S.C. An empirical analysis of bug reports and bug fixing in open source android apps. In Proceedings of the 2013 17th European Conference on Software Maintenance and Reengineering, Genova, Italy, 5–8 March 2013; pp. 133–143.

33. Lamkanfi, A.; Demeyer, S. Filtering bug reports for fix-time analysis. In Proceedings of the 2012 16th European Conference on Software Maintenance and Reengineering, Szeged, Hungary, 27–30 March 2012; pp. 379–384.

34. Vijayakumar, K.; Bhuvaneswari, V. How much effort needed to fix the bug? A data mining approach for effort estimation and analysing of bug report attributes in Firefox. In Proceedings of the 2014 International Conference on Intelligent Computing Applications, Coimbatore, India, 6–7 March 2014; pp. 335–339.

35. Ali, R.H.; Parlett-Pelleriti, C.; Linstead, E. Cheating Death: A Statistical Survival Analysis of Publicly Available Python Projects. In Proceedings of the 17th International Conference on Mining Software Repositories, Seoul, Republic of Korea, 29–30 June 2020; pp. 6–10.

36. Liu, Z.; Li, M.; Hua, Q.; Li, Y.; Wang, G. Identification of an eight-lncRNA prognostic model for breast cancer using WGCNA network analysis and a Cox-proportional hazards model based on L1-penalized estimation. Int. J. Mol. Med. 2019, 44, 1333–1343.

37. Bird, C.; Rigby, P.C.; Barr, E.T.; Hamilton, D.J.; German, D.M.; Devanbu, P. The promises and perils of mining git. In Proceedings of the 2009 6th IEEE International Working Conference on Mining Software Repositories, Vancouver, BC, Canada, 16–17 May 2009; pp. 1–10.

38. Tao, Y.; Dang, Y.; Xie, T.; Zhang, D.; Kim, S. How do software engineers understand code changes? An exploratory study in industry. In Proceedings of the ACM SIGSOFT 20th International Symposium on the Foundations of Software Engineering, Cary, NC, USA, 11–16 November 2012; pp. 1–11.

39. Stack Overflow. Developer Survey Results 2019. Available online: insights.stackoverflow.com/survey/2019 (accessed on 23 April 2021).

40. Zhang, H.; Gong, L.; Versteeg, S. Predicting bug-fixing time: An empirical study of commercial software projects. In Proceedings of the 2013 35th International Conference on Software Engineering (ICSE), San Francisco, CA, USA, 18–26 May 2013; pp. 1042–1051.

41. Hu, H.; Zhang, H.; Xuan, J.; Sun, W. Effective bug triage based on historical bug-fix information. In Proceedings of the 2014 IEEE 25th International Symposium on Software Reliability Engineering, Naples, Italy, 3–6 November 2014; pp. 122–132.

| Group | Count | Mean | Standard Deviation | Median |
|---|---|---|---|---|
| Research Questions (A) | | | | |
| Initial Buggy Commit on Weekday | 32,276 | 7611.25 | 26,156.3 | 330.392661 |
| Initial Buggy Commit on Weekend | 4131 | 3458.431 | 13,390.04 | 160.079725 |
| Initial Buggy Commit during Work Hours | 22,800 | 6396.583 | 21,265.25 | 287.917833 |
| Initial Buggy Commit outside Work Hours | 13,607 | 8385.787 | 30,359.9 | 329.574957 |
| Research Questions (B) | | | | |
| Same Author Bug Fix | 18,201 | 2489.816 | 9565.716 | 72.961409 |
| Different Author Bug Fix | 18,206 | 11,788.99 | 33,501.36 | 987.887367 |
| Same User is Author and Committer | 31,359 | 7651.337 | 26,528.25 | 280.848463 |
| Different User is Author and Committer | 5048 | 3963.789 | 12,250.26 | 431.233376 |
| More than 25 Committers | 34,688 | 7485.949 | 25,635.98 | 344.932187 |
| Fewer than 25 Committers | 1719 | 159.916 | 434.1329 | 21.776339 |
| More than 40 Authors | 34,534 | 7504.619 | 25,690.44 | 343.853362 |
| Fewer than 40 Authors | 1873 | 418.0325 | 1404.526 | 25.125066 |

| Group | Mann–Whitney U Test Statistic | Asymptotic Sig. (2-Sided Test) | Effect Size |
|---|---|---|---|
| Research Questions (A) | | | |
| Initial Buggy Commit on Weekday – Initial Buggy Commit on Weekend | 58,954,258 | 0.0635463 | |
| Initial Buggy Commit during Work Hours – Initial Buggy Commit outside Work Hours | 151,804,794 | 0.0179076 | |
| Research Questions (B) | | | |
| Same Author Bug Fix – Different Author Bug Fix | 95,107,772 | 0.3688948 | |
| Same User is Author and Committer – Different User is Author and Committer | 73,798,999 | 0.0404673 | |
| More than 25 Committers – Fewer than 25 Committers | 43,675,871 | 0.17079872 | |
| More than 40 Authors – Fewer than 40 Authors | 45,870,631 | 0.16006322 | |
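The Effect Size column is empty in this table. For the Mann–Whitney U test, one common choice discussed by Fritz et al. (reference 25) is r = |Z|/√N, where Z is the normal approximation of U and N is the combined sample size. The following from-scratch Python sketch computes U, a two-sided asymptotic p-value with the standard tie correction, and r. It illustrates the technique only and does not recompute the values reported here. Statistical packages differ in which group's U they report and in whether they apply a continuity correction, so small differences from other software are expected. The function names are hypothetical.

```python
import math
from collections import Counter


def average_ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def mann_whitney(x, y):
    """Return (U for x, two-sided asymptotic p-value, effect size r = |Z| / sqrt(N))."""
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    pooled = list(x) + list(y)
    n = n1 + n2
    ranks = average_ranks(pooled)
    u = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    # Variance of U under H0, corrected for ties (t = size of each tie group).
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    var_u = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    if var_u <= 0:
        raise ValueError("all observations are tied")
    z = (u - n1 * n2 / 2) / math.sqrt(var_u)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    r = abs(z) / math.sqrt(n)
    return u, p, r


u, p, r = mann_whitney([1, 2, 3], [4, 5, 6])
print(u, round(p, 4), round(r, 3))
```

By Cohen's conventions (reference 18), r values of about 0.1, 0.3, and 0.5 are usually read as small, medium, and large effects.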

| Group | Independent-Samples Hodges–Lehmann Median Difference Estimate | 95% Confidence Interval |
|---|---|---|
| Research Questions (A) | | |
| Initial Buggy Commit on Weekday – Initial Buggy Commit on Weekend | 35.330461 | (24.620914, 48.102542) |
| Initial Buggy Commit during Work Hours – Initial Buggy Commit outside Work Hours | 1.320736 | (0.272528, 3.068727) |
| Research Questions (B) | | |
| Same Author Bug Fix – Different Author Bug Fix | 543.990805 | (506.822835, 578.456770) |
| Same User is Author and Committer – Different User is Author and Committer | 14.924 | (9.481, 23.603) |
| More than 25 Committers – Fewer than 25 Committers | −262.823369 | (−304.192357, −226.864087) |
| More than 40 Authors – Fewer than 40 Authors | −225.802457 | (−261.235513, −193.823217) |
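The independent-samples Hodges–Lehmann estimate is the median of all pairwise differences between the two groups. A direct Python sketch of the point estimate follows. Confidence intervals, which are usually derived from order statistics of the same differences, are omitted. The sketch enumerates every pair, so its cost is quadratic in the sample sizes. For groups as large as those above (for example, 32,276 × 4131 ≈ 1.3 × 10^8 pairs), a faster algorithm would be preferable. The sign follows the argument order (first group minus second group), and the function name is hypothetical.

```python
from statistics import median


def hodges_lehmann(x, y):
    """Two-sample Hodges–Lehmann shift estimate: median of all differences x_i - y_j."""
    if not x or not y:
        raise ValueError("both samples must be non-empty")
    return median(xi - yj for xi in x for yj in y)


print(hodges_lehmann([10, 20], [1, 2]))  # 13.5
```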

| Attribute | Value | N | Hazard Ratio |
|---|---|---|---|
| same author | No | 13,795 | reference (1) |
| | Yes | 17,958 | 1.71 (1.67–1.74) *** |
| bug introduced during work hours | No | 11,726 | reference (1) |
| | Yes | 20,027 | 0.96 (0.94–0.98) *** |
| bug introduced on weekend | No | 27,839 | reference (1) |
| | Yes | 3914 | 1.12 (1.08–1.15) *** |
| code authors > 40 | No | 1546 | reference (1) |
| | Yes | 30,207 | 0.57 (0.53–0.61) *** |
| code committers > 25 | No | 692 | reference (1) |
| | Yes | 31,061 | 0.60 (0.54–0.62) *** |
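The hazard ratios above follow from the definition of the Cox model. Take a single binary attribute x, where x = 1 is the "Yes" group and x = 0 the "No" reference group. Writing h_0(t) for the baseline hazard and β for the coefficient (our notation, not necessarily the paper's), the model and its hazard ratio are:

$$
h(t \mid x) = h_0(t)\, e^{\beta x},
\qquad
\mathrm{HR} = \frac{h(t \mid x = 1)}{h(t \mid x = 0)} = e^{\beta}
$$

Here the event is the bug fix. An HR above 1 therefore means that, at any time t, still-unfixed bugs in the "Yes" group are fixed at a higher instantaneous rate than those in the reference group. An HR below 1 means a lower rate. Both readings hold only under the proportional-hazards assumption and with any other covariates in the model held fixed. For example, the same-author ratio of 1.71 corresponds to β = ln 1.71 ≈ 0.54. The ratio of 0.57 for projects with more than 40 code authors indicates slower fixes than in projects with fewer authors.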

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Eiroa-Lledo, E.; Ali, R.H.; Pinto, G.; Anderson, J.; Linstead, E. Large-Scale Identification and Analysis of Factors Impacting Simple Bug Resolution Times in Open Source Software Repositories. Appl. Sci. 2023, 13, 3150. https://doi.org/10.3390/app13053150

Eiroa-Lledo E, Ali RH, Pinto G, Anderson J, Linstead E. Large-Scale Identification and Analysis of Factors Impacting Simple Bug Resolution Times in Open Source Software Repositories. Applied Sciences. 2023; 13(5):3150. https://doi.org/10.3390/app13053150

Chicago/Turabian Style: Eiroa-Lledo, Elia, Rao Hamza Ali, Gabriela Pinto, Jillian Anderson, and Erik Linstead. 2023. "Large-Scale Identification and Analysis of Factors Impacting Simple Bug Resolution Times in Open Source Software Repositories" Applied Sciences 13, no. 5: 3150. https://doi.org/10.3390/app13053150

APA Style: Eiroa-Lledo, E., Ali, R. H., Pinto, G., Anderson, J., & Linstead, E. (2023). Large-Scale Identification and Analysis of Factors Impacting Simple Bug Resolution Times in Open Source Software Repositories. Applied Sciences, 13(5), 3150. https://doi.org/10.3390/app13053150