1. Introduction

There are millions of deaf and mute (D-M) people who heavily rely on sign language to communicate with others. They are often faced with the challenge of establishing effective communication when others are unable to understand or use sign language. In general, people with no physical impairment do not make an effort to learn sign language. This creates a scenario where it is difficult to establish effective communication between the D-M and non-deaf and mute (ND-M). To overcome this problem, a system is needed that provides a platform where the D-M and the ND-M can communicate with each other.

This work focuses on the use of information and communication technology (ICT) to provide a platform on which such a communication system can be developed. The research covers the development of a software application that is interfaced with hardware and uses different algorithms to address some of the problems discussed above. This is expected to be a step towards improving the integration of the D-M in society. The development presented in this manuscript uses existing sign language datasets to establish automated communication. The proposed system is designed around commercial-off-the-shelf (COTS) hardware integrated with a software application that uses machine learning (ML) algorithms to provide full-duplex communication.

The use of ML is providing a growing number of solutions to complex problems in diverse areas related to human impairment. ML is already applied to establish effective communication systems so that the D-M and the ND-M can communicate. Researchers are using existing algorithms, as well as creating new algorithms, to enhance the existing systems. ML algorithms are not only being deployed to solve existing problems, but these algorithms are also employed to increase efficiency by reducing processing time, creating consistent datasets, and improving quality.

This research is a continuation of previously published work [1]. In this manuscript, the capability of the previous system is significantly enhanced. New features include a combined sign language dataset built from American Sign Language (ASL), Pakistani Sign Language (PSL) and Spanish Sign Language (SSL). The online versions of these datasets are enhanced by including more images of better quality and variations of individual gestures. The updated system now includes a language detection feature and an improved user profile, which contains more information such as right/left hand, speed and accuracy of the user’s hand gestures, the user’s default sign language dataset, etc. The proposed system is further validated using new experiments. A comparison of the three sign languages is also carried out. The literature review is extended to help improve the proposed system. The software application is updated to include the features mentioned here. Some new features, e.g., detecting gestures when the user is holding or wearing other objects such as a wristwatch or ring, are also added. The database is updated with new images, and the algorithm is further improved to exploit the new features.

In this manuscript, limitations of the existing systems are studied, and a new two-way communication system for the D-M and the ND-M is presented. The system is based on a newly created dataset that combines ASL, PSL, and SSL, with a focus on overcoming limitations in existing systems. Within this research, the three individually available datasets are further enhanced to improve their quality. The details of the proposed system are presented in this manuscript and validated using a series of experiments. The acquired hand gesture data is processed using a Convolutional Neural Network (CNN), and further processing, discussed later, is implemented using supervised ML.

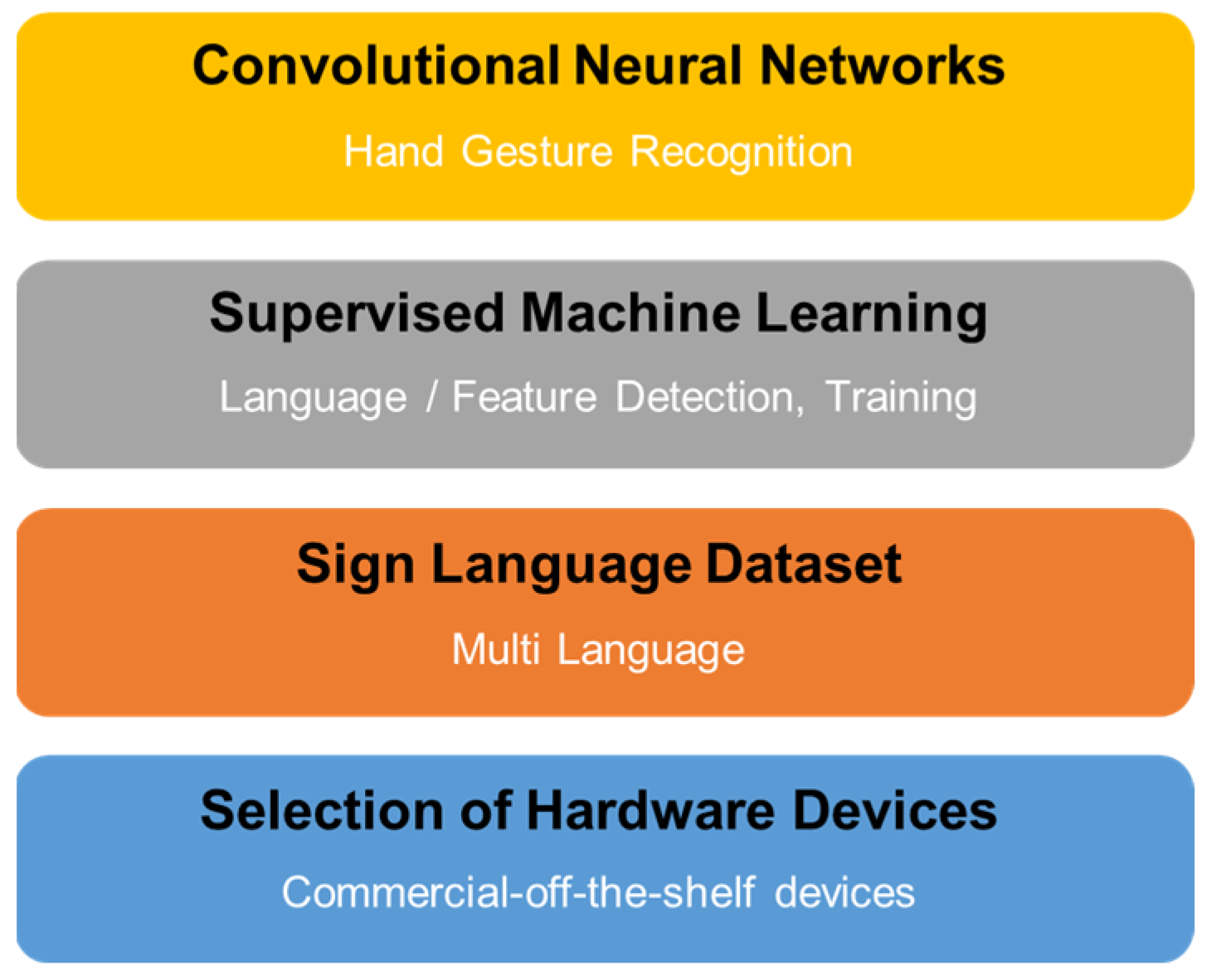

Figure 1 shows the scope of this work, which encompasses four main building blocks: CNN, supervised ML algorithms, selection and processing of sign language datasets, and a review of hardware tools for hand gesture acquisition.

A summary of the section-wise layout of the manuscript is outlined here.

Section 2 provides a detailed review of existing systems as part of the related work to understand the current state of research, novel features, limitations of existing systems, and prototypes.

Section 3 presents the research methodology through 4 steps. The next sections, i.e., 4 and 5, provide the details of the proposed communication system and its validation through experimental setups. The results of these experiments are presented in Section 5. A discussion section is presented in Section 6, followed by the last Section 7, which covers conclusions and future work.

2. Related Work

This section is a review of recent research work carried out for the development of communication systems involving the D-M. The review includes the novel features presented by the authors as well as the limitations of their proposed systems.

2.1. System Level Review

In the manuscript [2], the authors used a Leap Motion Device (LMD) to acquire hand gesture signals. The D-M can communicate with others using hand gestures while the ND-M are provided with an Android application that converts speech to text. The accuracy of gesture detection is 95%. The authors in [3] presented a system that converts hand gestures from the D-M into text. The system also displays a gesture image for the text input. A portable device is designed by the authors in [4]. The D-M can carry this device to acquire and convert hand gestures into speech. In [5], a contactless hand gesture recognition system is presented where the gesture is acquired using an LMD. Long Short-Term Memory (LSTM) recurrent neural networks were used for gesture detection.

The authors used an LMD for hand gesture recognition for the D-M in [6]. They defined a criterion for hand gesture recognition and used different methods such as Gaussian Mixture Model (GMM), Hidden Markov Model (HMM), and Dynamic Time Warping (DTW) for image data processing. In the manuscript [7], the authors acquired sign language gestures using a camera. Various neural network algorithms were compared to process the image data and calculate the accuracy of the proposed system. The authors in [8] developed a learning system for the D-M people. They used Augmented Reality to convert the acquired gesture into a 3D model. They also developed a glove using Flex sensors.

The manuscript [9] is a survey paper where the authors collected and reviewed data to understand different reasons for hearing loss and the age when it is detected. A prototype of a glove using flex sensors is designed by the authors in [10]. They designed a system where hand gestures are acquired and then converted into text and voice. The researchers in [11] reviewed and compared several techniques for hand gesture recognition and listed the novel features of different algorithms, and they also highlighted their limitations. This survey provides a good insight into different algorithms, including their pros and cons. In [12], an automated ASL-based hand gesture recognition system is presented, where hand gestures are first detected and then converted into text. An automated sign language detection system is proposed by the authors in [13], where they designed two-way communication using an ASL dataset.

The Bangla Sign Language (BSL) dataset is applied in [14], where the authors processed the hand gesture data using CNN, reporting an accuracy exceeding 98%. In [15], the authors developed a mobile phone application where a customized interface for individual users is proposed. This application provides support for multiple languages. An Arabic Sign Language (ArSL) based system is presented by the authors in [16]. The detected ArSL hand gestures are converted into voice. The authors of [16] also surveyed different hand gesture detection techniques.

A CNN-based hand gesture recognition system is presented in [17]. The authors reported a hand gesture recognition accuracy exceeding 93%. In [18], the researchers developed a glove that was used for translating ASL and reported an accuracy of 95%. A portable hand gesture recognition prototype is presented in [19]. The authors of this manuscript used a deep learning algorithm to process image data, which is then converted to speech. In [20], the authors developed a prototype of a smart glove for detecting sign language, and when a gesture is recognized, a recorded audio message is played. This system is designed to detect Indian Sign Language (ISL) data. Another CNN-based hand gesture detection prototype is presented in [21]. The authors claim that the proposed prototype detects ISL with training accuracy exceeding 99% by using more than 3000 images.

In [22], the authors used the ASL dataset, where the hand gestures are detected using CNN. They reported an accuracy exceeding 95%. The authors in [23] developed a prototype using a flex sensors-based glove and interfaced it with Arduino. The prototype converts the hand gesture data into text. The authors used K-Nearest Neighbours (KNN) algorithm for image data processing. A PC-based image detection system is presented in [24]. The authors used the Principal Component Analysis (PCA) algorithm. In [25], an automated Sign Language Interpreter (SLI) prototype, which is based on a custom-made glove connected to Arduino, is presented. The authors reported a hand gesture detection accuracy of 93%. A CNN-based prototype for hand gesture detection is presented in [26]. This prototype also provides a training option and support for multiple languages.

The authors developed a mobile application in [27] for the D-M. The application works in offline mode and uses ASL and Filipino Sign Language (FPL) datasets. A PC-based prototype is presented by the authors in [28] for detecting hand images using sensors. An Android-based application is presented in [29], where visual feedback is provided to the user. The authors in this paper also compared different technologies. In [30], the authors carried out a survey to understand the problems and challenges faced by the D-M. They highlighted the importance and impact of hand gesture recognition systems and prototypes in providing a platform for the D-M.

Table 1 is a summary of the undertaken review. The details include hardware, software, features, and limitations of the designed prototypes and products. The last column also includes information related to Sign Language Dataset (SLD).

2.2. Sign Language Dataset Review

In [14], the authors used the BSL dataset, containing more than 2500 images, to validate their prototype. The authors in [16] used a new method to detect an ArSL dataset. In [31], the authors used a word-level ASL dataset. Their selection of the dataset is based on the number of people who can use this sign language. Argentinian Sign Language (ArgSL) is processed in [32], where they used videos as input. The researchers used Turkish Sign Language (TSL) [33] and the CNN algorithm to process the dataset.

Chinese language speakers are the single largest group as compared to speakers of all other languages. The authors in [34] used Chinese Sign Language (CSL) with hundreds of categories within the dataset. Another word-level ASL dataset is used in [35]. In [36], a large-scale ASL dataset is presented and processed. Russian Sign Language (RSL) is used as input in [37]. In [38], the authors processed ArSL. A prototype was developed based on Arduino and a glove with sensors connected.

Table 2 is a summary of the different sign language datasets reviewed. This review is focused on the size of the datasets, how many people can use this dataset, and its complexity.

2.3. Machine Learning Algorithm Review

In this section, a review of some machine learning algorithms is presented. A new dataset was created for supervised machine learning in [39,40]. The dataset is used to suggest a solution to the faults found during electronic product manufacturing. The algorithms are implemented in LabVIEW [41]. The authors in [42] reviewed machine learning algorithms and used them to determine the performance of soldering stations.

In [43], the authors review machine learning algorithms. Their findings highlight the limitations of some algorithms. The authors used Scikit, a Python [44] toolkit, to implement a machine-learning algorithm in [45].

2.4. Related Work Conclusions and Research Gap

In this section, a further summary is presented of the prototypes and systems reviewed. From this conclusion, the research gap, which forms the basis of the research work carried out in this manuscript, is highlighted.

The majority of the work reviewed here is unidirectional, i.e., it covers only the detection and conversion of hand gesture data into text and/or voice. This is a limitation because the ND-M are unable to communicate with the D-M. Another limitation is the input sign language dataset, which in most cases is a single dataset. This means that people who do not know that particular sign language are unable to benefit from the system. Some prototypes designed using gloves and sensors require maintenance and calibration, which makes it difficult to maintain consistency.

The system proposed in this manuscript provides a full-duplex system where both the D-M and the ND-M can communicate with each other. This system uses multiple sign language datasets, provides training, is easy to use, and has a low cost. Systems based on commercial-off-the-shelf (COTS) devices like LMDs require considerably less effort in converting the prototype into a product. Affordability is also important, so low initial cost and no operational cost are essential features.

3. Research Methodology

In this section, the research methodology is presented to explain the several steps which were carried out in this research.

Figure 2 shows the block diagram with the steps and relevant details.

3.1. Design Research

In this step, two tasks are performed. The first task is to list the activities that are within the research scope. The scope of this work is to implement a two-way communication system where the D-M and ND-M can communicate with each other, without the need to learn sign language, through sign language interpretation and conversion to audio. The second task is to select the areas in which existing work is reviewed, which in this case include deep learning algorithms, ML algorithms, and image-capturing devices. These details are provided in Section 2, literature review.

3.2. Conduct Research

In this second step, an in-depth review of the selected categories is carried out. The details are presented in Section 2. In this manuscript, some existing products and prototypes, which are developed to facilitate D-M communication, are reviewed. For this review, both the software and hardware aspects are considered, including the development platform, i.e., PC or Android, and hardware devices to capture hand gesture data. The literature review also covers how the existing systems are designed, whether based on COTS or custom-made. In the case of custom-made devices such as sensor-based gloves, the calibration and build process are also reviewed. The next step is to select and review sign language datasets, machine learning algorithms, and deep learning algorithms. Within the review, the software applications are also considered, and LabVIEW is selected for software development. The final activity is to define experimental setups so that the proposed system can be validated. A detailed review is conducted in order to define how the different requirements for the proposed system can be mapped to the experiments and then validated. It is important to have an optimum number of experiments for validation as a means of avoiding repetition and duplication. The conclusion of the literature review is necessary to determine which parameters to use for implementing the proposed system.

3.3. Design Implementation

In this step, the tasks related to the implementation of the proposed system are considered. The features of the system are selected based on the conclusions drawn from the literature review. The design is implemented through five tasks, as listed in Figure 2. In the first task, the sign language data is collected and enhanced, i.e., some images are updated, and new images are added. The sign language datasets are selected, and information is stored in the database. The software, including the algorithm implementation, is developed in LabVIEW. After completing the software development, hardware deployment, and integration tasks, the user manual and other documentation work are completed.

3.4. Validation and Conclusions

This is the final step, where the complete system validation is carried out. The validation activities include testing the updated language dataset, system response, processing times, and accuracy. The validated system is then tested for performance through different experimental setups, and the results are presented.

4. Proposed Communication System for D-M

In this section, the details of the proposed system are presented, including some key features.

4.1. Communication System Novel Features

Figure 3 shows the novel features of the proposed system, which provides full-duplex communication between the D-M and the ND-M. This low-cost system is user-friendly and easy to install, with no operational cost to the end user. There is a training option available, which allows the features to be customized for individual users. The ML-based algorithm provides a continuous improvement option where new and higher-quality data, i.e., hand gesture images, can replace an existing image. The proposed system supports American Sign Language, Pakistani Sign Language, and Spanish Sign Language. More languages can be added. Hand gesture data is acquired using an LMD, which is a COTS device and, unlike a glove, does not require maintenance or calibration. The image data is processed using the CNN algorithm.

4.2. Communication System Block Details

Figure 4 shows the implementation of the proposed full-duplex communication system between the D-M and the ND-M. The D-M person is provided with an interface where the hand gestures are acquired using an LMD connected to the PC-based system. The LMD captures hand gestures as images and forwards them to the PC, where they are further processed. The details of the processing are discussed later. The system supports multiple sign language datasets, i.e., ASL, PSL, and SSL. The system is reconfigurable, and more datasets can be added in the future. The acquired image data is processed through multiple stages and is then converted into a voice message.

The ND-M person can listen to the voice message. The speech or voice data is generated through the PC sound card output. In response, the ND-M person records a voice message which is acquired by the PC using sound card input. The voice message is then processed by the software application and converted into text and hand gesture images. The D-M person is then able to read the text or see the images. Similarly, an ND-M person can also initiate a conversation.

The proposed system is low-cost, user-friendly, and does not require any special training. These are the main features that make it easy and attractive for people to use this system. The selection of ASL, PSL, and SSL is based on the availability and size of the datasets and the number of people using these sign languages. A small dataset means the size of the training dataset will also be small; hence the trained system will be less effective or accurate, while a large dataset results in higher accuracy but a slower response. Considering this, medium-sized datasets are selected.

The system provides a training mode where the user can check the accuracy of the system while undergoing training. In this mode, the hand gestures of the D-M person are processed, and the detected results are displayed without going through the text to speech to text conversion. The D-M person can vary hand gestures to check for detection accuracy. In this mode, the system also updates the database by replacing existing images with higher-quality images. The ML-based process compares the newly acquired images with the stored images and then decides between replacing the existing image with the new one or storing the new image along with the existing image or not storing the new image at all. Having more image files means an improved dataset which will increase accuracy, but this also means the system will require more processing time. It is important to have a balance between these parameters. This decision is taken by the ML-based implementation.
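The three-way decision described above (replace, store alongside, or discard) can be illustrated with a small rule-based sketch. The manuscript does not specify how its ML-based process makes this decision, so everything here is an assumption for illustration: the function name `decide_storage`, the quality and similarity scores in the range 0 to 1, the similarity threshold, and the per-gesture image cap that stands in for the processing-time constraint.

```python
def decide_storage(new_quality, stored_quality, similarity,
                   similar_threshold=0.9, n_stored=1, max_images=20):
    """Return 'replace', 'add', or 'discard' for a newly acquired gesture image.

    All inputs are hypothetical scores in [0, 1]; the thresholds are
    illustrative and are not taken from the manuscript.
    """
    if similarity >= similar_threshold:
        # Near-duplicate of the stored image: keep only the better one.
        return "replace" if new_quality > stored_quality else "discard"
    # A genuinely different variation of the gesture: store it alongside
    # the existing image, unless the cap (a proxy for processing time) is hit.
    return "add" if n_stored < max_images else "discard"


print(decide_storage(0.8, 0.6, 0.95))               # better near-duplicate
print(decide_storage(0.5, 0.6, 0.95))               # worse near-duplicate
print(decide_storage(0.5, 0.6, 0.40))               # new variation
print(decide_storage(0.5, 0.6, 0.40, n_stored=20))  # new variation, cap reached
```

The cap makes the accuracy versus processing-time trade-off explicit: raising `max_images` keeps more variations per gesture at the cost of more comparisons per detection.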

The ML algorithm also reviews the user-stored data, which helps to increase processing speed and accuracy. For example, if a user profile shows that the user only understands SSL, then the process will bypass the language detection step for this particular user. Similarly, the user can update the profile by adding more sign language datasets and other parameters. The system also maintains a performance record, both for the individual user and the overall system, using the stored user data and new data acquired through the training and normal modes.
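As a minimal sketch of the profile-based shortcut described above, the snippet below skips language detection when a profile lists exactly one supported sign language. The `UserProfile` fields and the helper names are hypothetical; the manuscript lists the kind of information stored in a profile but not its data structure.

```python
from dataclasses import dataclass, field

SUPPORTED = ("ASL", "PSL", "SSL")


@dataclass
class UserProfile:
    # Hypothetical fields, loosely based on the profile contents listed in the text.
    name: str
    dominant_hand: str = "right"
    languages: list = field(default_factory=list)


def languages_to_search(profile):
    """Datasets to match against: the user's known languages, else all of them."""
    known = [lang for lang in profile.languages if lang in SUPPORTED]
    return known or list(SUPPORTED)


def needs_language_detection(profile):
    """Detection is bypassed only when exactly one dataset remains."""
    return len(languages_to_search(profile)) != 1


ssl_user = UserProfile("user_a", languages=["SSL"])
new_user = UserProfile("user_b")
print(needs_language_detection(ssl_user))  # False
print(needs_language_detection(new_user))  # True
```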

Figure 5 shows the ML-based implementation. The block diagram shows the input, output, and hidden layers displaying various activities. The data is fed through the input layer, as shown by ‘1’. The input data includes both the hand gesture image data and the user data, which is stored as part of the user profile. In ‘2’, the user profile data is processed. The user can update the profile to reflect any changes. The next step, ‘3’, is the training mode. This step is used when the training mode is selected. In normal mode, the ML algorithm can use some options from this step. The language detection is done in step ‘4’. Currently, there are three datasets, but more can be added. The user-generated gestures are acquired by the proposed system and compared with the existing dataset, which is a combination of ASL, PSL and SSL. The system checks up to five gestures and then selects the language based on how many gestures match the individual datasets of ASL, PSL or SSL. The acquired image goes through initialization in step ‘5’. In step ‘6’, the image goes through initial processing, where some features are detected and extracted. The CNN algorithm is applied to further process the image data in ‘7’. The next two steps, i.e., ‘8’ and ‘9’, are for data storage. The results are generated through the output layer as marked by step ‘10’.
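The language detection in step ‘4’ can be sketched as a simple vote over up to five gestures. This is an illustrative assumption rather than the manuscript's implementation: the function `detect_language`, the per-language match scores, and the rule that ambiguous gestures cast no vote are all hypothetical.

```python
from collections import Counter


def detect_language(gesture_matches, max_gestures=5):
    """Pick a sign language by majority vote over the first few gestures.

    gesture_matches: one dict per gesture, mapping a language name to a
    hypothetical match score against that language's dataset.
    Returns the winning language, or None if the vote is undecided.
    """
    votes = Counter()
    for scores in gesture_matches[:max_gestures]:
        best = max(scores.values())
        winners = [lang for lang, s in scores.items() if s == best]
        if len(winners) == 1:  # a gesture shared by several languages casts no vote
            votes[winners[0]] += 1
    ranked = votes.most_common()
    if not ranked or (len(ranked) > 1 and ranked[0][1] == ranked[1][1]):
        return None
    return ranked[0][0]


matches = [
    {"ASL": 0.90, "PSL": 0.90, "SSL": 0.90},  # identical gesture in all three
    {"ASL": 0.40, "PSL": 0.95, "SSL": 0.50},
    {"ASL": 0.30, "PSL": 0.90, "SSL": 0.60},
    {"ASL": 0.80, "PSL": 0.70, "SSL": 0.20},
]
print(detect_language(matches))      # PSL
print(detect_language(matches[:1]))  # None
```

The second call shows why more than one gesture is checked: a single gesture that looks the same in all three languages cannot identify the language on its own.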

Figure 6 shows some graphical results of hand gesture image processing using the ML algorithm. The figure shows the original hand gesture and its extracted Red, Green and Blue (RGB) planes. The images in the third row show the feature and edge extraction of the original image for the particular gesture. The results of convolution using kernel size 3 are also presented here. The graphical results of detecting fingers using a region of interest and the pixel count of bright objects, i.e., the borders, are also shown.
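To make the processing steps in Figure 6 concrete, the following sketch splits a tiny synthetic image into its RGB planes, convolves one plane with a 3×3 kernel, and counts the bright pixels in the result. It is written in plain Python for illustration only; the actual system is implemented in LabVIEW, and the Laplacian-style edge kernel and the brightness threshold are assumptions, not values from the manuscript.

```python
def split_rgb(image):
    """Split an H x W image of (r, g, b) tuples into three H x W planes."""
    return tuple([[px[c] for px in row] for row in image] for c in range(3))


def convolve3x3(plane, kernel):
    """'Valid' 2-D convolution of one plane with a 3x3 kernel (no padding)."""
    h, w = len(plane), len(plane[0])
    out = []
    for y in range(h - 2):
        row = []
        for x in range(w - 2):
            acc = 0.0
            for ky in range(3):
                for kx in range(3):
                    # The kernel is flipped, as in a true convolution.
                    acc += kernel[2 - ky][2 - kx] * plane[y + ky][x + kx]
            row.append(acc)
        out.append(row)
    return out


# Assumed edge-detection kernel; its entries sum to zero, so flat regions give 0.
EDGE_KERNEL = [[-1, -1, -1],
               [-1,  8, -1],
               [-1, -1, -1]]

# Synthetic 6x6 image: a white 2x2 square on a black background.
image = [[(1.0, 1.0, 1.0) if 2 <= y < 4 and 2 <= x < 4 else (0.0, 0.0, 0.0)
          for x in range(6)] for y in range(6)]

red, green, blue = split_rgb(image)
edges = convolve3x3(red, EDGE_KERNEL)
bright_pixels = sum(1 for row in edges for value in row if value > 0)
print(len(edges), len(edges[0]), bright_pixels)  # 4 4 4
```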

5. Experimental Setup and Results

In this section, the proposed system is validated using 8 experiments that are specifically defined for this research. It is important to validate the key features and understand the limitations of the system. This is achieved using the experiments presented in this section.

5.1. System Validation Criteria

The communication system is validated through two stages. The first stage is to select the sign language dataset, which in this case are ASL, PSL, and SSL datasets. The second stage is to select a subset of these datasets. It is important to use a known input dataset and a predicted output result. This will help in understanding the system accuracy and limitations of the proposed system. Once the system is validated using these criteria, the full dataset is applied to further determine the performance of the system.

A known set of images is therefore used for the initial validation. A study of how sign language datasets are created and validated is outside the scope of this work.

To validate the proposed communication system, the ASL dataset images are used [46]. These are colored images of hand gestures. The SSL dataset used for validation is available online [47]. The PSL dataset is created by recording images of letters and numbers. A reference to PSL is also available from [48].

Letters and numbers from the three sign language datasets are used for validating the communication system. For this research work, 70% of the hand gesture images from the datasets are used for training, while the remaining 30% are used for testing. A 70/30 split is a commonly used ratio for training and testing, although a different ratio can also be used. As an example, approximately 1800 images of letters and 700 images of numbers from ASL are used, which are then split in this ratio.
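The 70/30 split can be sketched as follows, using the approximate ASL letter count quoted above. The file names are placeholders and the helper `split_dataset` is hypothetical; the manuscript does not state how images are assigned to the two subsets, so a seeded random shuffle is assumed here.

```python
import random


def split_dataset(items, train_fraction=0.7, seed=0):
    """Shuffle the items reproducibly and split them into (train, test) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Placeholder names standing in for roughly 1800 ASL letter images.
letter_images = [f"asl_letter_{i:04d}.png" for i in range(1800)]
train, test = split_dataset(letter_images)
print(len(train), len(test))  # 1260 540
```

Applying the same function to the roughly 700 ASL number images gives 490 training and 210 test images.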

Figure 7,

Figure 8 and

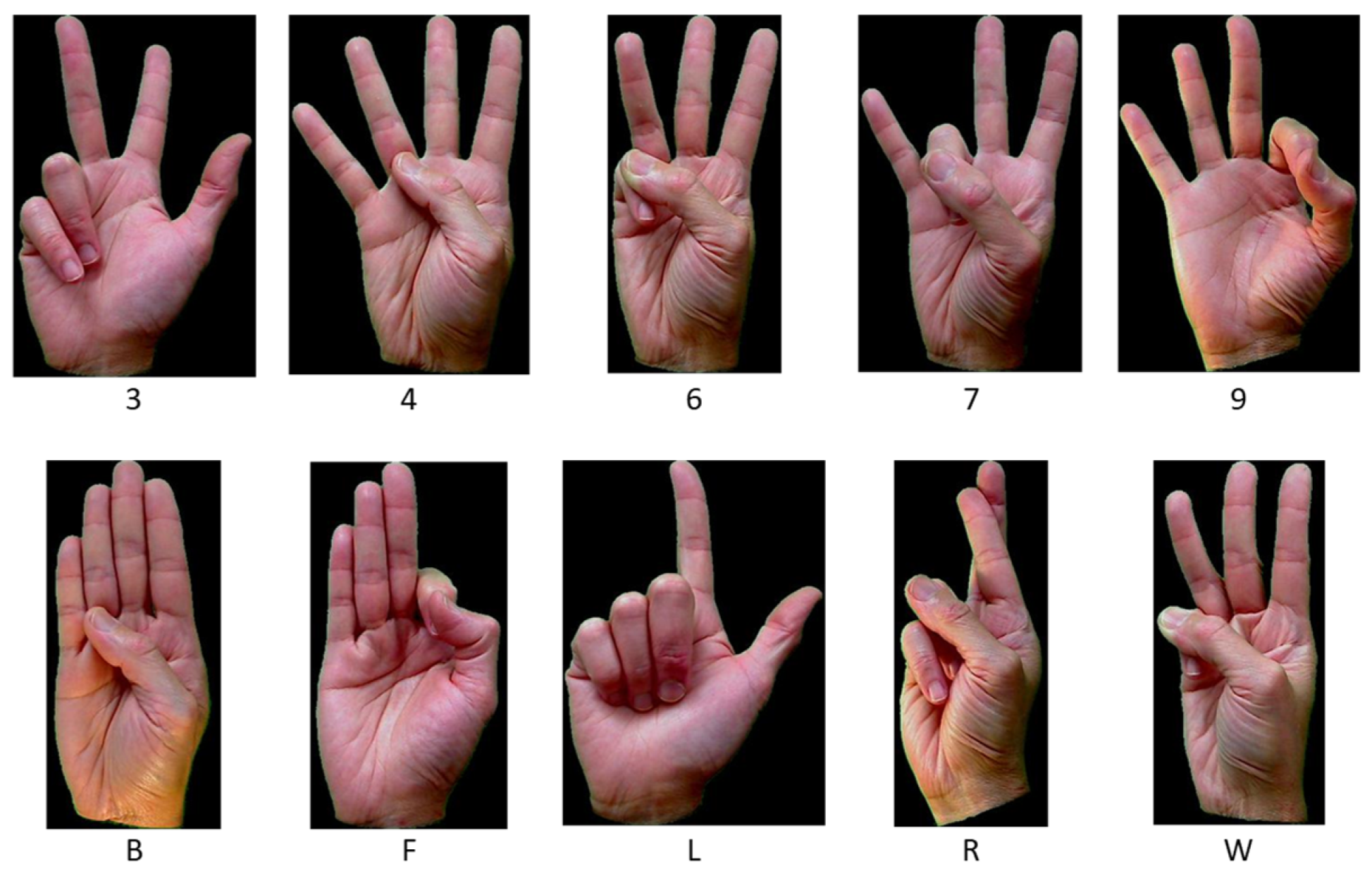

Figure 9 show subsets of ASL, PSL, and SSL dataset images.

Figure 7 shows five numbers and letters from ASL, while in

Figure 8, eight letters from PSL are shown. In

Figure 9, six letters from SSL are shown. The dataset used by the proposed system includes approximately 6000 hand gesture images from the three individual datasets.

5.2. Experiment 1—Proposed Communication System Accuracy

In this experiment, the accuracy of the detection of hand gestures is presented. The formula for accuracy is presented in Equation (1). The accuracy is presented in percentages in Table 3. The accuracy values are also used to determine the performance of the algorithm.

In this experiment, the repeatability of the algorithm is evaluated. The system is validated by processing the gestures of all letters and numbers. For validation, the most accurate gesture detected by the system is selected and processed. The results are presented in Table 3. The accuracy is listed for letters and numbers of the three individual sign language datasets. The table also lists the size of subsets used for the training and testing of the system. It is also observed that the accuracy of the proposed system depends on the accuracy of the hand gestures, i.e., how accurately the gesture is made.

5.3. Experiment 2—Processing Individual Hand Gestures

In this section, hand gesture detection results are presented. In Figure 10, three letters from the PSL dataset are randomly selected for this experiment and processed through the proposed system. The hand gesture detection algorithm acquires the hand gesture and compares it with the images from the dataset stored in the database. The results show that the detection can exceed 96%. Depending on the input hand gesture, more than one image from the database can be matched, and the one with the highest percentage is selected.
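Selecting the stored image with the highest match percentage can be sketched in a few lines. The function `best_match`, the optional `min_score` cut-off, and the example labels and percentages are hypothetical and are not results from the manuscript.

```python
def best_match(match_scores, min_score=0.0):
    """Return (label, score) for the highest-scoring candidate, or None.

    match_scores: dict mapping a stored gesture label to a match percentage.
    min_score: optional cut-off below which no match is reported (an assumption).
    """
    if not match_scores:
        return None
    label = max(match_scores, key=match_scores.get)
    score = match_scores[label]
    return (label, score) if score >= min_score else None


# Hypothetical candidates returned by the database comparison.
candidates = {"letter_1": 96.4, "letter_2": 71.0, "letter_3": 55.2}
print(best_match(candidates))                # ('letter_1', 96.4)
print(best_match(candidates, min_score=99))  # None
```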

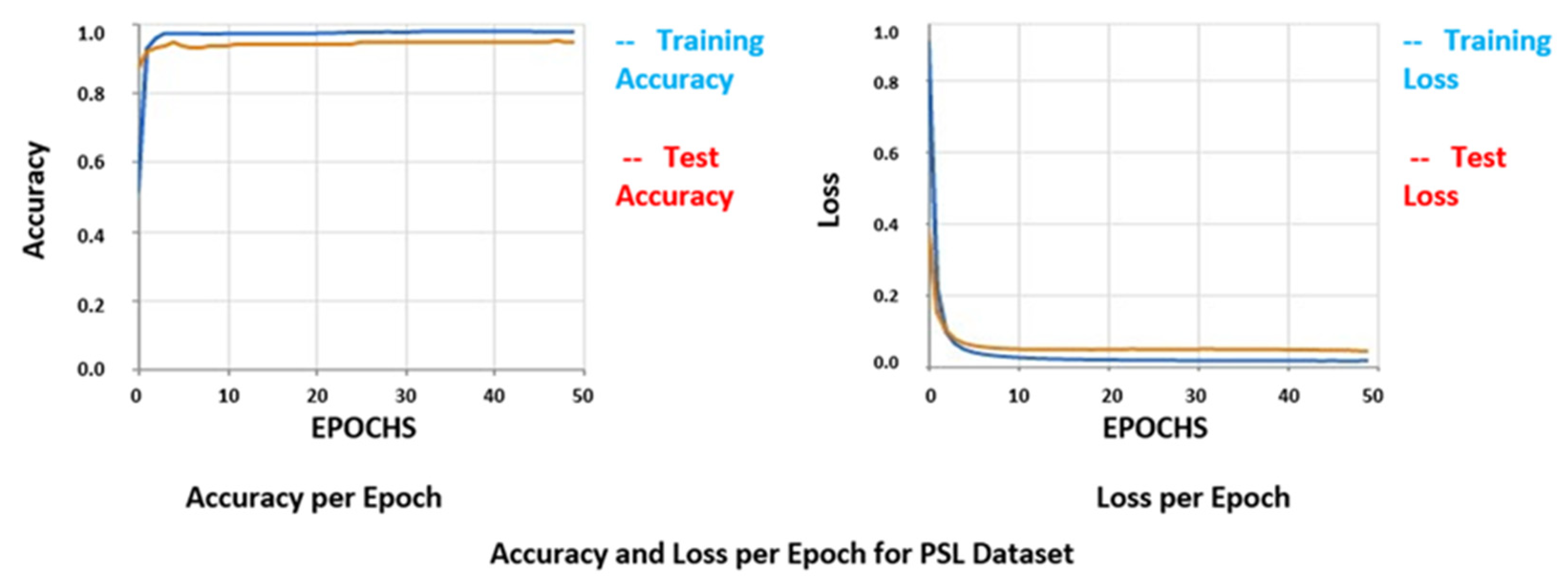

Figure 11 shows the accuracy and loss graphs for the PSL dataset. The graphs include both actual and test results.

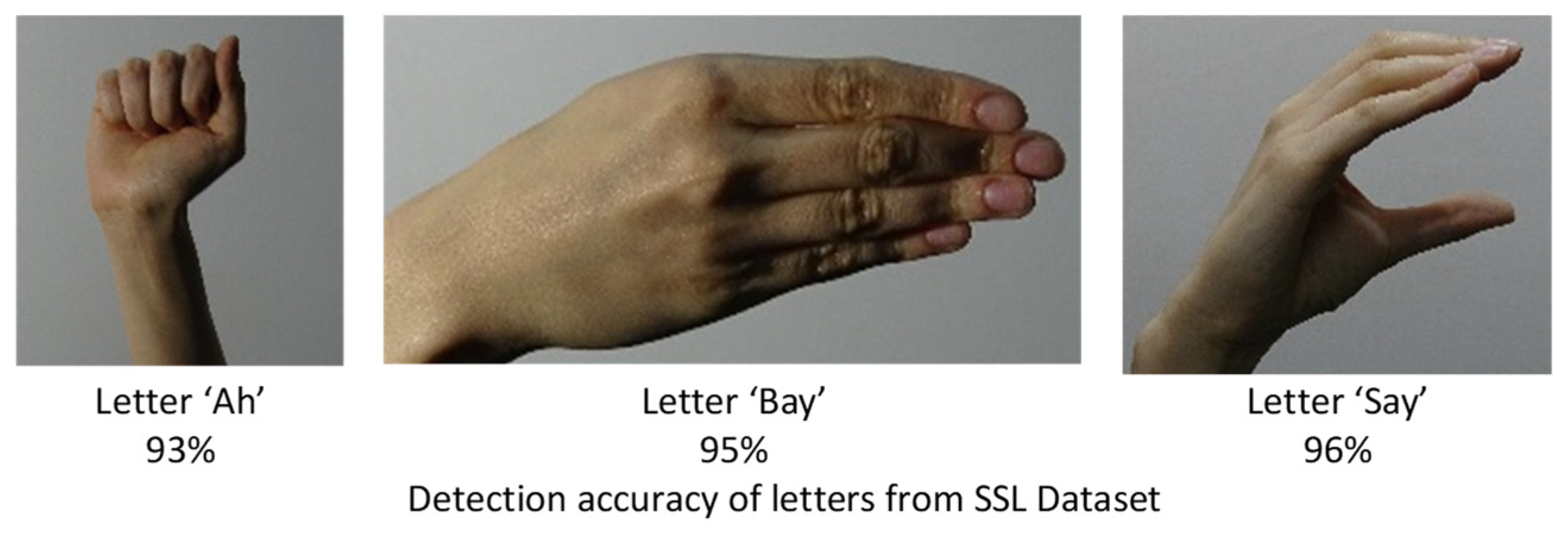

Figure 12 shows the hand gesture detection results for the SSL dataset. The results are encouraging and show high accuracy. In this figure, three random gestures are selected from SSL, and the detection accuracy is shown. It is observed that the accuracy of the captured hand gesture is high when it is unique among the stored hand gesture images. As an example, if two different hand gesture images look similar, then the detection accuracy of these will be slightly lower.

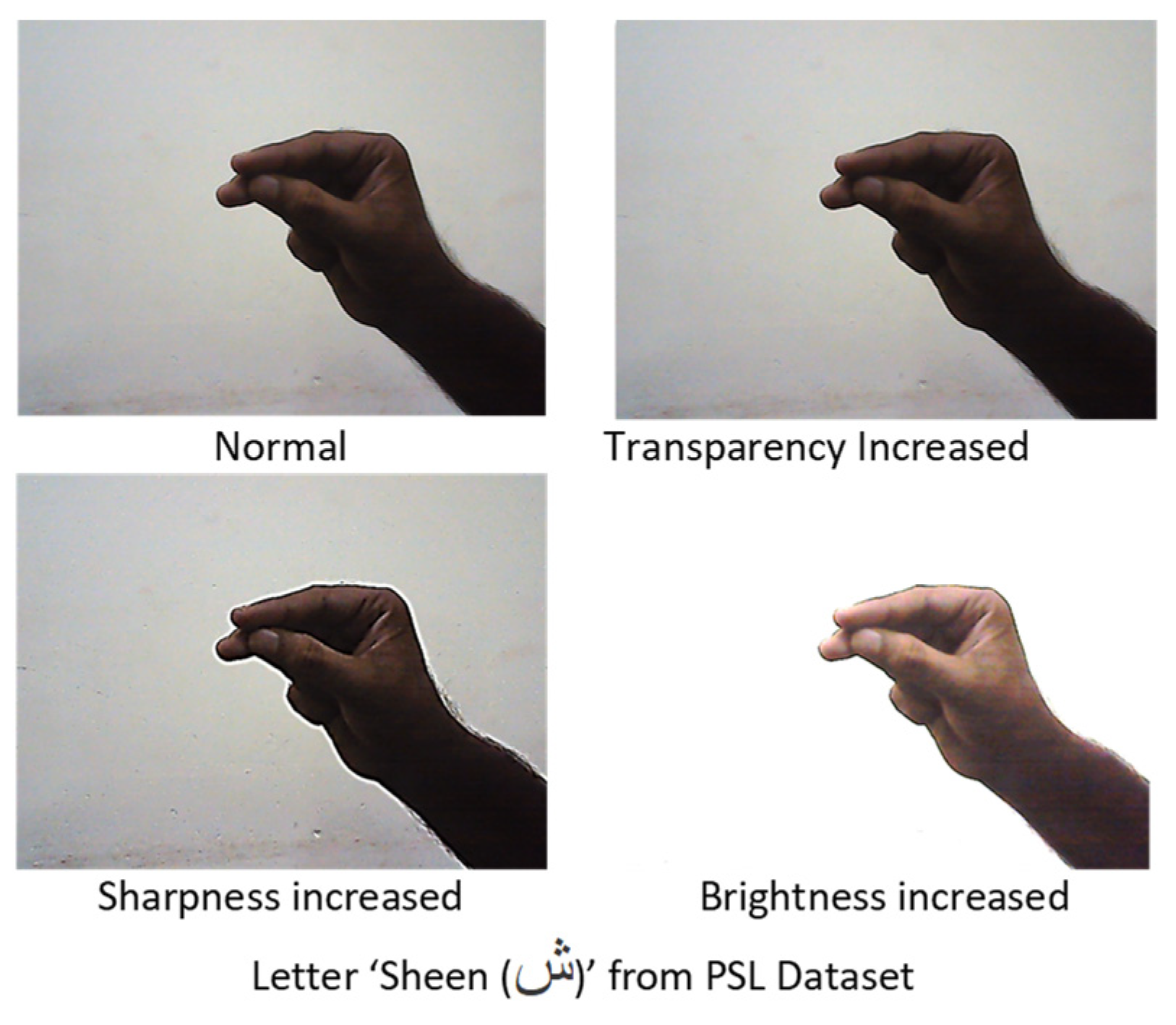

5.4. Experiment 3—Processing Hand Gestures Image Quality

In this section, the quality of the hand gesture image is varied, and the image is then processed through the proposed system. Figure 13 shows the four variants of a letter from the PSL dataset. All the images are correctly detected, thus confirming that the proposed system is able to detect the correct gesture for varying image quality. This is an important result since the acquired image quality can vary due to background color, sharpness, background texture, etc.

5.5. Experiment 4—Processing Variations in Same Hand Gestures

In this section, the variation in hand gestures is processed through the proposed communication system. In Figure 14, Figure 15 and Figure 16, two variants of letters from the PSL dataset are processed, and the detection accuracy is calculated. In this experiment, three letters from PSL are selected, and the hand gestures of these letters are varied slightly and then processed through the system. The results show that the accuracy is between 80% and 100% for the first letter in Figure 14. This variation is due to the distance between the hand and the LMD. A part of the index finger of the image on the left is outside the camera vision.

A similar experiment is repeated in Figure 15, where the accuracy is 100% due to minimal variation between the images. The results shown in Figure 16 are similar to those in Figure 14. Again, the low accuracy for the image on the right side in Figure 16 is due to the distance of the hand from the LMD as well as the variation in the gesture.

In Figure 17, a letter from the ASL dataset is shown with a detection accuracy exceeding 90%. The accuracy for the two images is high for the letter selected from the ASL dataset, mainly because the distance between the hand and camera for both images is similar, and the variation between the two gestures is minimal.

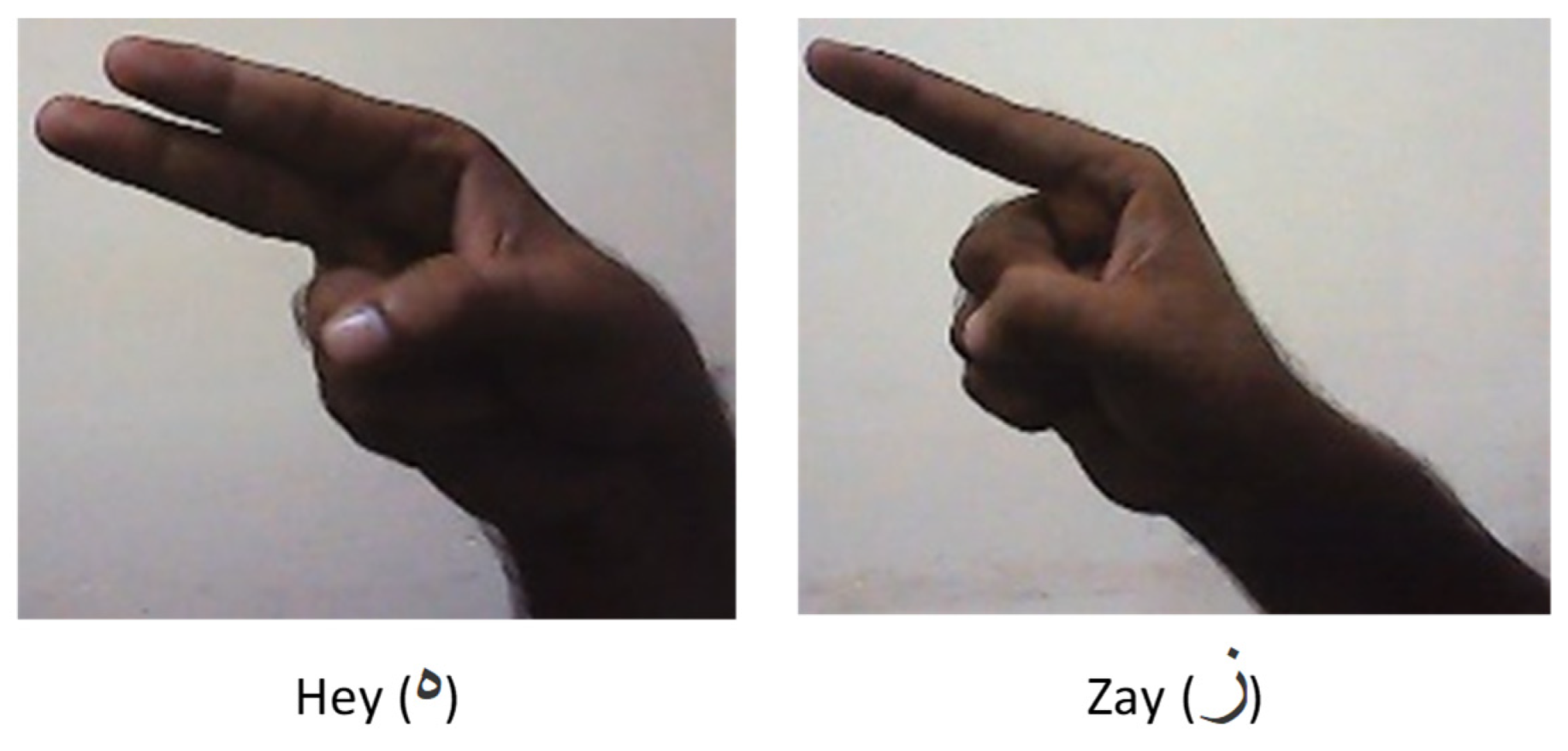

5.6. Experiment 5—Processing Similar Hand Gestures

Some hand gestures are similar, although not identical, and consequently, there is a possibility of wrong detection. In this section, results of similar hand gesture detection are presented.

Figure 18 and Figure 19 show the detection results of two sets of letters from the PSL dataset. In Figure 18, two hand gestures from the PSL dataset are compared. There is a significant difference between the two gestures, as there is only one finger used for the gesture on the right but two fingers for the gesture on the left. The system, in this instance, is able to correctly detect the two letters. The experiment is repeated for two more letters from PSL in Figure 19, which are different, and the system is able to detect these letters correctly.

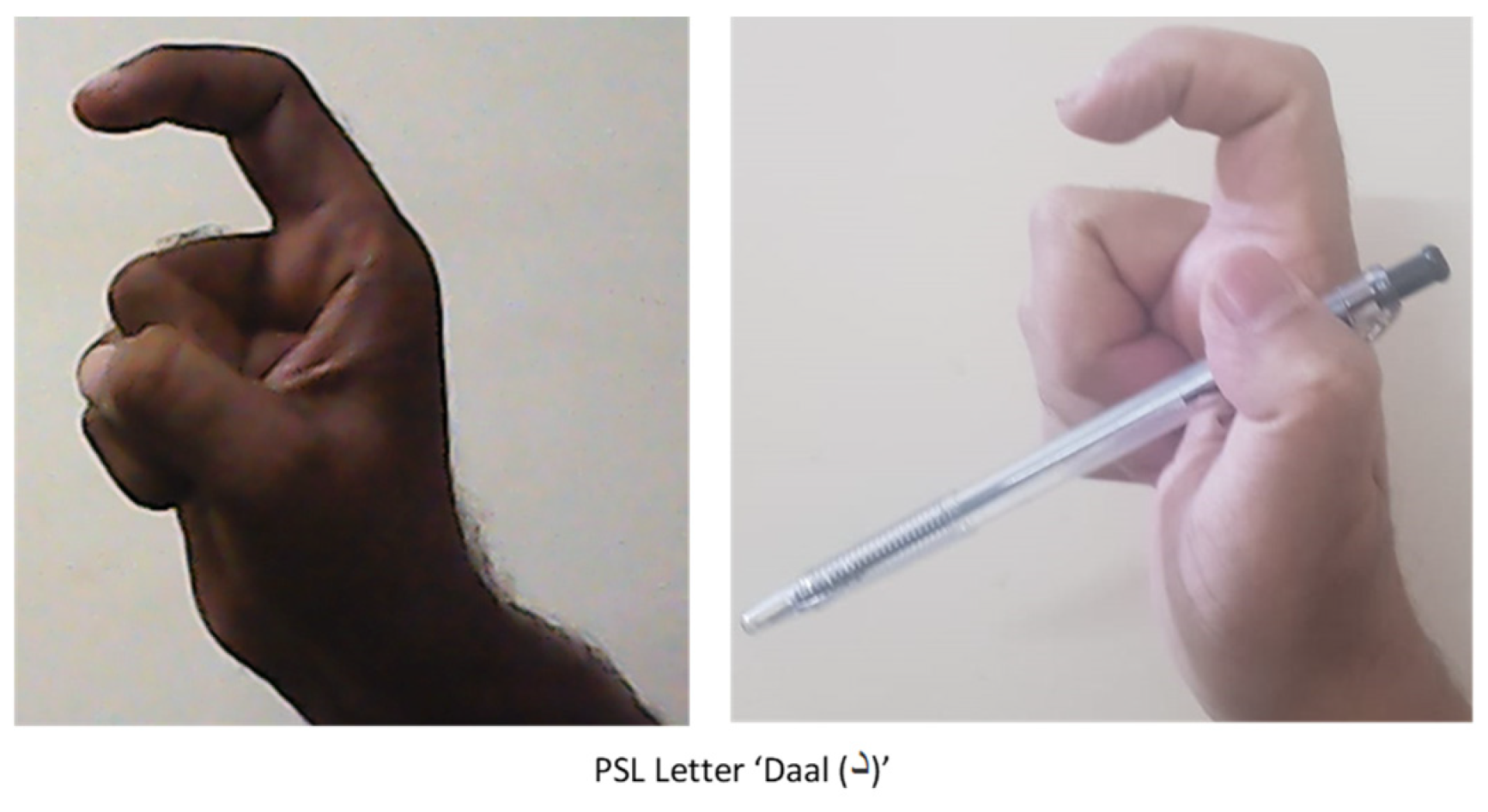

5.7. Experiment 6—Detecting Other Objects

This experiment focuses on other objects that can be visible while detecting hand gestures. The proposed system has a unique feature where these objects are also detected, and their information is ignored in some cases so that the correct hand gesture can be recognized.

Figure 20, Figure 21 and Figure 22 show images with and without other objects. A unique feature of this system is validated using this experiment. Here the user is wearing a watch in Figure 20 and Figure 21 and a ring in Figure 22. It is observed that the system detection accuracy can vary if these objects are also captured with a hand gesture. Therefore, the images of objects like watches, rings, etc., are also stored in the database, enabling the proposed system to detect these objects and ignore them.

Figure 23 shows another example of an object captured with a hand gesture. The proposed system is unable to correctly detect the hand gesture here. In this experiment, the object, which is a pen, is detected by the system, but the system is unable to detect the hand gesture correctly. This is due to the shape of the object and also how the user is holding it.

5.8. Experiment 7—Algorithm Performance

In this section, the performance of the algorithm on the PSL dataset is evaluated and presented using a confusion matrix. The matrix shows the results for each letter compared with all other letters, and it is used to evaluate the performance of the algorithm and to highlight any errors. The confusion matrix for letters within the PSL dataset is shown in Figure 24 and Figure 25. This is an important metric for validating this type of system.
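As a minimal sketch of how such a matrix can be built and read, the following Python computes a confusion matrix, per-class recall, and overall accuracy. The class names and predictions are hypothetical toy values, not results from the PSL dataset, and the helper names are illustrative only.

```python
def confusion_matrix(true_labels, predicted_labels, classes):
    """Rows are true classes, columns are predicted classes."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for t, p in zip(true_labels, predicted_labels):
        matrix[index[t]][index[p]] += 1
    return matrix


def per_class_recall(matrix):
    """Fraction of samples of each true class that were predicted correctly."""
    return [row[i] / sum(row) if sum(row) else 0.0
            for i, row in enumerate(matrix)]


def overall_accuracy(matrix):
    """Correct predictions (the diagonal) divided by all predictions."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total if total else 0.0


# Hypothetical toy data: placeholder letters, not real PSL results.
classes = ["A", "B", "C"]
y_true = ["A", "A", "B", "B", "C", "C"]
y_pred = ["A", "B", "B", "B", "C", "A"]

cm = confusion_matrix(y_true, y_pred, classes)
recall = per_class_recall(cm)
accuracy = overall_accuracy(cm)
```

Off-diagonal entries show which letters are confused with which, which is the information needed to spot pairs of similar gestures.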

5.9. Experiment 8—Creating a New Dataset

In this experiment, the similarities between the three sign language datasets are presented. Figure 26 shows three letters, one each from ASL, SSL, and PSL, that have similar hand gestures. This approach can be used to create a new sign language dataset that combines multiple sign languages, and the figure provides insight into that process. In the normal validation process, a confusion matrix is created in which each letter within the dataset is compared with the rest of the letters, and accuracy is calculated. The figure shows a scenario where the gestures taken from ASL, PSL, and SSL are identical. In this case, the system is unable to detect the correct input sign language based on just one hand gesture.
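The ambiguity described above can be illustrated with a small sketch. It assumes, purely for illustration, that each dataset is represented as a set of gesture identifiers and that candidate languages are narrowed by set intersection. The identifiers and the `candidate_languages` helper are hypothetical, and this is not the supervised ML method the system uses for sign language detection.

```python
# Hypothetical gesture identifiers. "g_shared" stands for a gesture that
# looks identical in all three datasets, as in the Figure 26 scenario.
GESTURES_BY_LANGUAGE = {
    "ASL": {"g_shared", "g_asl_only"},
    "PSL": {"g_shared", "g_psl_only"},
    "SSL": {"g_shared", "g_ssl_only"},
}


def candidate_languages(observed_gestures):
    """Return the languages whose dataset contains every observed gesture."""
    candidates = set(GESTURES_BY_LANGUAGE)
    for gesture in observed_gestures:
        candidates &= {
            language
            for language, gestures in GESTURES_BY_LANGUAGE.items()
            if gesture in gestures
        }
    return candidates


one_gesture = candidate_languages(["g_shared"])
two_gestures = candidate_languages(["g_shared", "g_psl_only"])
```

With only the shared gesture, all three languages remain candidates. Adding a second, language-specific gesture narrows the result to a single language, which is why one gesture alone is not enough in this scenario.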

6. Discussion

In this manuscript, a full-duplex communication system for the D-M and the ND-M is presented. The scope of this work includes ML, CNN, the use of multiple sign language datasets, and COTS hardware devices. The system presented in this manuscript is the continuation of previously published work [1]. The system uses three sign language datasets combined into one. This is a unique feature, as the system automatically detects the sign language in use. An extensive review of the existing systems is carried out and discussed in the related work section, including a tabular summary of the review that lists some of the features of the existing systems. This also includes an analysis of some available datasets of different sign languages, followed by an evaluation of some machine learning techniques.

The proposed system is developed, implemented, and then validated using a four-stage research methodology. The key features of the proposed system include the implementation of CNN, ML, text-to-speech-to-text, a training interface, and a database storing the sign language datasets and user profiles. The system acquires hand gesture data using a COTS LMD device, and then the data is processed using the CNN algorithm. Using a COTS device means the system has a stable hardware interface to capture hand gesture images and does not require extensive calibration, which is normally needed when custom-made devices like a glove with sensors are used.

The system is validated through a series of experiments, and the limitations of some features are also defined. The experiments cover hand gesture detection accuracy, processing of individual hand gesture data, evaluation of hand gestures with varying image quality, detection of variations in the same gesture, identification and processing of different hand gestures that look similar, detection of hand gestures with visible objects such as a watch or ring, and, finally, automatic identification of a sign language dataset. The performance of the system is also evaluated using a confusion matrix.

7. Conclusions and Future Work

The system presented in this manuscript provides a communication platform for the D-M and the ND-M to communicate with each other without the need to learn sign language. A unique feature of this work is the creation of a new dataset that combines three existing sign language datasets. The proposed system automatically detects the hand gestures of a D-M person and converts them into a voice for an ND-M person after going through various processing stages. The system is reliable, easy to use, and based on a COTS LMD device to acquire hand gesture data which is then processed using CNN. Apart from CNN, a supervised ML algorithm is also applied for processing and automatically detecting sign language.

The system provides an audio interface for the ND-M and a hand gesture capture interface for the D-M. The system currently recognizes the ASL, PSL, and SSL datasets, and the total number of images is approximately 6000. The system includes a training mode that can help individuals review how accurately the system is detecting their hand gestures. Users can create their own profiles, which the system can use when processing hand gestures. The proposed system is validated through a series of experiments using the combined dataset of ASL, PSL, and SSL, and the results show an accuracy exceeding 95%. The results are encouraging and repeatable. Another key feature of this system is that the combined dataset can be used by millions of people who use English, Urdu, and Spanish sign languages. The proposed system requires installation and can be upgraded. There is no annual licensing; hence, there is a low initial expense and no recurring cost. Updating the database improves the system’s performance and accuracy.

In the future, more sign language datasets can be processed and added. Dataset size can also be increased based on the recommendations from ML-based algorithms. Datasets can also be improved by adding videos and other types of data, including word-level hand gestures. Further work can compare different sign languages, identify the similarities between them, and then combine them to create larger datasets. The proposed communication system can be further customized for individual users by adding more training features.

Author Contributions

Conceptualization, M.I.S. and S.N.; methodology, formal analysis, programming, and validation, M.I.S.; data curation, S.N.; writing, M.I.S., A.S., S.N., M.-A.L.-N. and E.N.-B.; visualization and software architecture and development, review of machine learning algorithm, A.S.; supervision, M.-A.L.-N. and E.N.-B. All authors have read and agreed to the published version of the manuscript.

Funding

This research has been partially funded by Universidad de Málaga, Málaga, Spain.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No data is available.

Acknowledgments

The authors would like to acknowledge the support provided by Universidad de Málaga, Málaga, Spain.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Imran Saleem, M.; Siddiqui, A.A.; Noor, S.; Luque-Nieto, M.Á. A Novel Machine Learning Based Two-Way Communication System for Deaf and Mute. Appl. Sci. 2022, 13, 453.

- Saleem, M.I.; Otero, P.; Noor, S.; Aftab, R. Full Duplex Smart System for Deaf Dumb and Normal People. In Proceedings of the 2020 Global Conference on Wireless and Optical Technologies, GCWOT, Malaga, Spain, 6–8 October 2020.

- Sood, A.; Mishra, A. AAWAAZ: A communication system for deaf and dumb. In Proceedings of the 2016 5th International Conference on Reliability, Infocom Technologies and Optimization, ICRITO 2016: Trends and Future Directions, Noida, India, 7–9 September 2016; IEEE: Noida, India, 2016; pp. 620–624.

- Boppana, L.; Ahamed, R.; Rane, H.; Kodali, R.K. Assistive sign language converter for deaf and dumb. In Proceedings of the 2019 IEEE International Congress on Cybermatics: 2019 International Conference on Internet of Things (iThings) and IEEE Green Computing and Communications (GreenCom) and IEEE Cyber, Physical and Social Computing (CPSCom) and IEEE Smart Data (SmartData), iThings/GreenCom/CPSCom/SmartData, Atlanta, GA, USA, 14–17 July 2019; pp. 302–307.

- Ameur, S.; Ben Khalifa, A.; Bouhlel, M.S. A novel hybrid bidirectional unidirectional LSTM network for dynamic hand gesture recognition with Leap Motion. Entertain. Comput. 2020, 35, 100373.

- Ameur, S.; Ben Khalifa, A.; Bouhlel, M.S. Chronological pattern indexing: An efficient feature extraction method for hand gesture recognition with Leap Motion. J. Vis. Commun. Image Represent. 2020, 70, 102842.

- Suharjito, R.A.; Wiryana, F.; Ariesta, M.C.; Kusuma, G.P. Sign Language Recognition Application Systems for Deaf-Mute People: A Review Based on Input-Process-Output. Procedia Comput. Sci. 2017, 116, 441–448.

- Deb, S.; Suraksha Bhattacharya, P. Augmented Sign Language Modeling (ASLM) with interaction design on smartphone-An assistive learning and communication tool for inclusive classroom. Procedia Comput. Sci. 2018, 125, 492–500.

- Bhadauria, R.S.; Nair, S.; Pal, D.K. A survey of deaf mutes. Med. J. Armed Forces India 2007, 63, 29–32.

- Patwary, A.S.; Zaohar, Z.; Sornaly, A.A.; Khan, R. Speaking System for Deaf and Mute People with Flex Sensors. In Proceedings of the 2022 6th International Conference on Trends in Electronics and Informatics, ICOEI 2022, Tirunelveli, India, 28–30 April 2022; pp. 168–173.

- Asthana, O.; Bhakuni, P.; Srivastava, P.; Singh, S.; Jindal, K. Sign Language Recognition Based on Gesture Recognition/Holistic Features Recognition: A Review of Techniques. In Proceedings of the 2nd International Conference on Innovative Practices in Technology and Management, ICIPTM 2022, Tirunelveli, India, 23–25 February 2022; pp. 713–718.

- Farhan, Y.; Madi, A.A.; Ryahi, A.; Derwich, F. American Sign Language: Detection and Automatic Text Generation. In Proceedings of the 2022 2nd International Conference on Innovative Research in Applied Science, Engineering and Technology, IRASET 2022, Meknes, Morocco, 3–4 March 2022; pp. 1–6.

- Bisht, D.; Kojage, M.; Shukla, M.; Patil, Y.P.; Bagade, P. Smart Communication System Using Sign Language Interpretation. In Proceedings of the Conference of Open Innovation Association, FRUCT. FRUCT Oy, Moscow, Russia, 27–29 April 2022; pp. 12–20.

- Billah, A.R.; Fahad, F.I.; Raaz, S.R.; Saha, A.; Eity, Q.N. Recognition of Bangla Sign Language Characters and Digits Using CNN. In Proceedings of the 2022 International Conference on Innovations in Science, Engineering and Technology, ICISET 2022, Dubai, United Arab Emirates, 16–18 February 2022; pp. 239–244.

- Jamdar, V.; Garje, Y.; Khedekar, T.; Waghmare, S.; Dhore, M.L. Inner Voice-An Effortless Way of Communication for the Physically Challenged Deaf Mute People. In Proceedings of the 2021 1st IEEE International Conference on Artificial Intelligence and Machine Vision, AIMV 2021, Gandhinagar, India, 24–26 September 2021; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2021.

- Ben Hamouda, S.; Gabsi, W. Arabic sign Language Recognition: Towards a Dual Way Communication System between Deaf and Non-Deaf People. In Proceedings of the 22nd IEEE/ACIS International Conference on Software Engineering, Artificial Intelligence, Networking and Parallel/Distributed Computing, SNPD 2021-Fall, Gandhinagar, India, 24–26 September 2021; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2021; pp. 37–42.

- Shareef, S.K.; Haritha IS, L.; Prasanna, Y.L.; Kumar, G.K. Deep Learning Based Hand Gesture Translation System. In Proceedings of the 5th International Conference on Trends in Electronics and Informatics, ICOEI 2021, Tirunelveli, India, 3–5 June 2021; pp. 1531–1534.

- Illahi AA, C.; Betito MF, M.; Chen CC, F.; Navarro CV, A.; Or IV, L. Development of a Sign Language Glove Translator Using Microcontroller and Android Technology for Deaf-Mute. In Proceedings of the 2021 IEEE 13th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management, HNICEM 2021, Manila, Philippines, 28–30 November 2021; pp. 1–5.

- Janeera, D.A.; Raja, K.M.; Pravin UK, R.; Kumar, M.K. Neural Network based Real Time Sign Language Interpreter for Virtual Meet. In Proceedings of the 5th International Conference on Computing Methodologies and Communication, ICCMC 2021, Erode, India, 8–10 April 2021; pp. 1593–1597.

- Snehaa, A.; Suryaprakash, S.; Sandeep, A.; Monikapriya, S.; Mathankumar, M.; Thirumoorthi, P. Smart Audio Glove for Deaf and Dumb Impaired. In Proceedings of the 2021 International Conference on Advancements in Electrical, Electronics, Communication, Computing and Automation, ICAECA 2021, Coimbatore, India, 8–9 October 2021.

- Vanaja, S.; Preetha, R.; Sudha, S. Hand Gesture Recognition for Deaf and Dumb Using CNN Technique. In Proceedings of the 6th International Conference on Communication and Electronics Systems, ICCES 2021, Coimbatore, India, 8–10 July 2021.

- Rishi, K.; Prarthana, A.; Pravena, K.S.; Sasikala, S.; Arunkumar, S. Two-Way Sign Language Conversion for Assisting Deaf-Mutes Using Neural Network. In Proceedings of the 8th International Conference on Advanced Computing and Communication Systems, ICACCS 2022, Coimbatore, India, 25–26 March 2022; pp. 642–646.

- Rosero-Montalvo, P.D.; Godoy-Trujillo, P.; Flores-Bosmediano, E.; Carrascal-Garcia, J.; Otero-Potosi, S.; Benitez-Pereira, H.; Peluffo-Ordonez, D.H. Sign Language Recognition Based on Intelligent Glove Using Machine Learning Techniques. In Proceedings of the 2018 IEEE 3rd Ecuador Technical Chapters Meeting, ETCM 2018, Cuenca, Ecuador, 15–19 October 2018; pp. 5–9.

- Soni, N.S.; Nagmode, M.S.; Komati, R.D. Online hand gesture recognition & classification for deaf & dumb. In Proceedings of the International Conference on Inventive Computation Technologies, ICICT 2016, Coimbatore, India, 26–27 August 2016.

- Anupama, H.S.; Usha, B.A.; Madhushankar, S.; Vivek, V.; Kulkarni, Y. Automated sign language interpreter using data gloves. In Proceedings of the 2021 International Conference on Artificial Intelligence and Smart Systems (ICAIS), Coimbatore, India, 25–27 March 2021; pp. 472–476.

- Gupta, A.M.; Koltharkar, S.S.; Patel, H.D.; Naik, S. DRISHYAM: An Interpreter for Deaf and Mute using Single Shot Detector Model. In Proceedings of the 8th International Conference on Advanced Computing and Communication Systems, ICACCS 2022, Coimbatore, India, 25–26 March 2022; pp. 365–371.

- Samonte, M.J.C.; Gazmin, R.A.; Soriano, J.D.S.; Valencia, M.N.O. BridgeApp: An Assistive Mobile Communication Application for the Deaf and Mute. In Proceedings of the ICTC 2019-10th International Conference on ICT Convergence: ICT Convergence Leading the Autonomous Future, Jeju, Republic of Korea, 16–18 October 2019; pp. 1310–1315.

- Lan, S.; Ye, L.; Zhang, K. Attention-Augmented Electromagnetic Representation of Sign Language for Human-Computer Interaction in Deaf-and-Mute Community. In Proceedings of the 2021 IEEE USNC-URSI Radio Science Meeting (Joint with AP-S Symposium), USNC-URSI 2021-Proceedings, Singapore, 4–10 December 2021; pp. 47–48.

- Sobhan, M.; Chowdhury, M.Z.; Ahsan, I.; Mahmud, H.; Hasan, M.K. A Communication Aid System for Deaf and Mute using Vibrotactile and Visual Feedback. In Proceedings of the 2019 International Seminar on Application for Technology of Information and Communication: Industry 4.0: Retrospect, Prospect, and Challenges, iSemantic 2019, Semarang, Indonesia, 21–22 September 2019; pp. 184–190.

- Chakrabarti, S. State of deaf children in West Bengal, India: What can be done to improve outcome. Int. J. Pediatr. Otorhinolaryngol. 2018, 110, 37–42.

- Li, D.; Rodriguez, C.; Yu, X.; Li, H. Word-level deep sign language recognition from video: A new large-scale dataset and methods comparison. In Proceedings of the 2020 IEEE Winter Conference on Applications of Computer Vision, WACV 2020, Snowmass Village, CO, USA, 1–5 March 2020; pp. 1448–1458.

- Ronchetti, F.; Quiroga, F.; Estrebou, C.A.; Lanzarini, L.C.; Rosete, A. LSA64: An Argentinian Sign Language Dataset; Congreso Argentino de Ciencias de La Computacion (CACIC): San Luis, Argentina, 2016; pp. 794–803.

- Sincan, O.M.; Keles, H.Y. AUTSL: A large scale multi-modal Turkish sign language dataset and baseline methods. IEEE Access 2020, 8, 181340–181355.

- Huang, J.; Zhou, W.; Li, H.; Li, W. Attention-Based 3D-CNNs for Large-Vocabulary Sign Language Recognition. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 2822–2832.

- Tavella, F.; Schlegel, V.; Romeo, M.; Galata, A.; Cangelosi, A. WLASL-LEX: A Dataset for Recognising Phonological Properties in American Sign Language. arXiv 2022, arXiv:2203.06096.

- Joze, H.R.V.; Koller, O. MS-ASL: A Large-Scale Data Set and Benchmark for Understanding American Sign Language. In Proceedings of the 30th British Machine Vision Conference 2019, BMVC 2019, Cardiff, UK, 9–12 September 2019.

- Kagirov, I.; Ivanko, D.; Ryumin, D.; Axyonov, A.; Karpov, A. TheRuSLan: Database of Russian Sign Language. In Proceedings of the LREC 2020—12th International Conference on Language Resources and Evaluation, Conference Proceedings, Marseille, France, 11–16 May 2020; pp. 6079–6085.

- Kumari, M.; Singh, V. Real-time glove and android application for visual and audible Arabic sign language translation. Procedia Comput. Sci. 2019, 163, 450–459.

- Siddiqui, A.; Zia MY, I.; Otero, P. A universal machine-learning-based automated testing system for consumer electronic products. Electronics 2021, 10, 136.

- Siddiqui, A.; Zia MY, I.; Otero, P. A Novel Process to Setup Electronic Products Test Sites Based on Figure of Merit and Machine Learning. IEEE Access 2021, 9, 80582–80602.

- Engineer Ambitiously—NI. Available online: https://www.ni.com/en-gb.html (accessed on 2 January 2023).

- Martinek, P.; Krammer, O. Analysing machine learning techniques for predicting the hole-filling in pin-in-paste technology. Comput. Ind. Eng. 2019, 136, 187–194.

- Sapkota, S.; Mehdy, A.N.; Reese, S.; Mehrpouyan, H. Falcon: Framework for anomaly detection in industrial control systems. Electronics 2020, 9, 1192.

- Welcome to Python. Available online: https://www.python.org/ (accessed on 1 January 2023).

- Dorochowicz, A.; Kurowski, A.; Kostek, B. Employing Subjective Tests and Deep Learning for Discovering the Relationship between Personality Types and Preferred Music Genres. Electronics 2020, 9, 2016.

- Kaggle Dataset. Available online: https://www.kaggle.com/datasets/alexalex1211/aslamerican-sign-language (accessed on 3 January 2022).

- Kaggle Dataset. Available online: https://www.kaggle.com/datasets/kirlelea/spanish-sign-language-alphabet-static (accessed on 3 January 2022).

- Kaggle Dataset. Available online: https://www.kaggle.com/datasets/hazrat/urdu-speech-dataset (accessed on 3 January 2022).

Figure 1. Research scope.

Figure 2. Research methodology.

Figure 3. Novel features of the full duplex communication system.

Figure 4. Block diagram of the communication system.

Figure 5. Machine learning algorithm.

Figure 6. Examples of processed images using the ML algorithm.

Figure 7. A subset of the American sign language dataset.

Figure 8. A subset of the Pakistani sign language dataset.

Figure 9. A subset of the Spanish sign language dataset.

Figure 10. Processing hand gestures (PSL dataset).

Figure 11. Hand gesture detection results for PSL dataset.

Figure 12. Processing hand gestures (SSL dataset).

Figure 13. Processing images of different quality for the same hand gesture.

Figure 14. Processing variation in same hand gesture scenario 1.

Figure 15. Processing variation in same hand gesture scenario 2.

Figure 16. Processing variation in same hand gesture scenario 3.

Figure 17. Processing variation in same hand gesture scenario 4.

Figure 18. Processing similar gestures (PSL dataset) scenario 1.

Figure 19. Processing similar gestures (PSL dataset) scenario 2.

Figure 20. Detecting other objects—wristwatch scenario 1.

Figure 21. Detecting other objects—wristwatch scenario 2.

Figure 22. Detecting other objects—ring.

Figure 23. Detecting other objects—pen.

Figure 24. Confusion matrix of letters within PSL dataset (set 1).

Figure 25. Confusion matrix of letters within PSL dataset (set 2).

Figure 26. Similarities between different sign languages.

Table 1. Summary of related work review.

| References | Hardware/Software | Summary |
|---|---|---|
| [2] | LMD, Android app | D-M to ND-M to D-M, Single SLD |
| [3] | PC, Harris algorithm | D-M to ND-M to D-M, Single SLD |
| [4] | Raspberry Pi | D-M to ND-M, Single SLD |
| [5] | LMD, LSTM Neural networks | D-M to ND-M, Single SLD |
| [6] | LMD, (GMM, HMM, DTW) | D-M to ND-M, Single SLD |
| [7] | PC, NN algorithms | D-M to ND-M, Single SLD |
| [8] | PC, Arduino, Gloves, Flex sensors | Calibration required for the custom-made product, D-M to ND-M, Single SLD |
| [10] | Arduino, Gloves, Sensors | Calibration required for the custom-made product, D-M to ND-M, Single SLD |
| [12] | PC, TensorFlow | D-M to ND-M, Single SLD |
| [13] | PC, Various ML algorithms | D-M to ND-M to D-M, Single SLD |
| [14] | PC, TensorFlow, CNN | D-M to ND-M, Single SLD |
| [15] | Android | D-M to ND-M, Multi SLD |
| [16] | Android | D-M to ND-M, Single SLD |
| [17] | PC, CNN | D-M to ND-M, Single SLD |
| [18] | Arduino, Gloves, Flex sensors | Calibration required for the custom-made product, D-M to ND-M, Single SLD |
| [19] | PC, NN | D-M to ND-M, Single SLD |
| [20] | Gloves, Flex sensors, Voice recorder, Matlab | D-M to ND-M, Single SLD |
| [21] | PC, CNN | D-M to ND-M, Single SLD |
| [22] | CNN | D-M to ND-M to D-M, Single SLD |
| [23] | Gloves, Sensors, Arduino, KNN | D-M to ND-M, Single SLD |
| [24] | PC-based, PCA | D-M to ND-M, Single SLD |
| [25] | Arduino, Gloves, Sensors, KNN | Calibration required for the custom-made product, D-M to ND-M, Single SLD |
| [26] | PC-based, CNN | D-M to ND-M, Single SLD |
| [27] | Android application | D-M to ND-M, Multi SLD |
| [28] | PC, CNN | D-M to ND-M, Single SLD |
| [29] | Android | D-M to ND-M to D-M, Single SLD |

Table 2. Summary of sign language datasets reviewed.

| References | Sign Language Dataset | Dataset Size |
|---|---|---|
| [14] | Bangladesh Sign Language | 2660 images |
| [16] | Arabic Sign Language | 50 signs |
| [31] | Word-level American Sign Language | More than 2000 words |
| [32] | Argentinian Sign Language | More than 3200 videos |
| [33] | Turkish Sign Language | 226 signs |
| [34] | Chinese Sign Language | 500 categories |
| [35] | Word-level American Sign Language | More than 10,000 videos |
| [36] | American Sign Language | 10,000 signs and 25,000 videos |
| [37] | Russian Sign Language | 164 lexical units |
| [38] | Arabic Sign Language | Not provided |

Table 3. Accuracy of detection.

| Dataset | % Accuracy (Letters) | % Accuracy (Numbers) | Train Dataset (70%) | Test Dataset (30%) |
|---|---|---|---|---|
| ASL | 98.5 | 98.9 | 1750 | 750 |
| PSL | 93.1 | 92.7 | 1015 | 435 |
| SSL | 94.3 | 93.8 | 1330 | 570 |
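The figures in Table 3 can be cross-checked with a short calculation. The sketch below uses only the counts and letter accuracies from the table. The test-size weighting of the accuracies is an assumption made here for illustration; the manuscript does not state how its overall accuracy figure is aggregated.

```python
# (train, test) image counts copied from Table 3.
splits = {"ASL": (1750, 750), "PSL": (1015, 435), "SSL": (1330, 570)}
# Letter accuracies (%) copied from Table 3.
letter_accuracy = {"ASL": 98.5, "PSL": 93.1, "SSL": 94.3}

# Images per dataset and the fraction used for training.
totals = {name: train + test for name, (train, test) in splits.items()}
train_share = {name: train / totals[name]
               for name, (train, _) in splits.items()}
grand_total = sum(totals.values())

# Assumption: weight each dataset's accuracy by its test-set size.
test_total = sum(test for _, test in splits.values())
weighted_letter_accuracy = sum(
    letter_accuracy[name] * splits[name][1] for name in splits
) / test_total

print(totals, grand_total)
print({name: round(share, 2) for name, share in train_share.items()})
print(round(weighted_letter_accuracy, 1))
```

The counts sum to 5850 images, each dataset is split 70/30, and the test-size-weighted letter accuracy comes out at about 95.8%.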

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).