Joint Task Offloading, Resource Allocation, and Load-Balancing Optimization in Multi-UAV-Aided MEC Systems

Abstract

1. Introduction

- An efficient load-balancing algorithm is introduced for optimizing the load among GBSs, in which mobile users are redistributed to the most appropriate GBSs according to their location, task size, and required CPU cycles. In addition, UAVs serve as MEC servers that provide computation and communication resources by hovering over overcrowded areas where the GBS servers are still overloaded.

- Task offloading, load balancing, and resource allocation are jointly optimized for multi-tier UAV-aided MEC systems by formulating an integer programming problem whose primary objective is to minimize the system cost.

- A novel form of deep reinforcement learning is introduced in which the application task’s requirements represent the system state and the offloading decision is used to define the action. The solution is then derived using an efficient distributed deep reinforcement-learning-based algorithm.

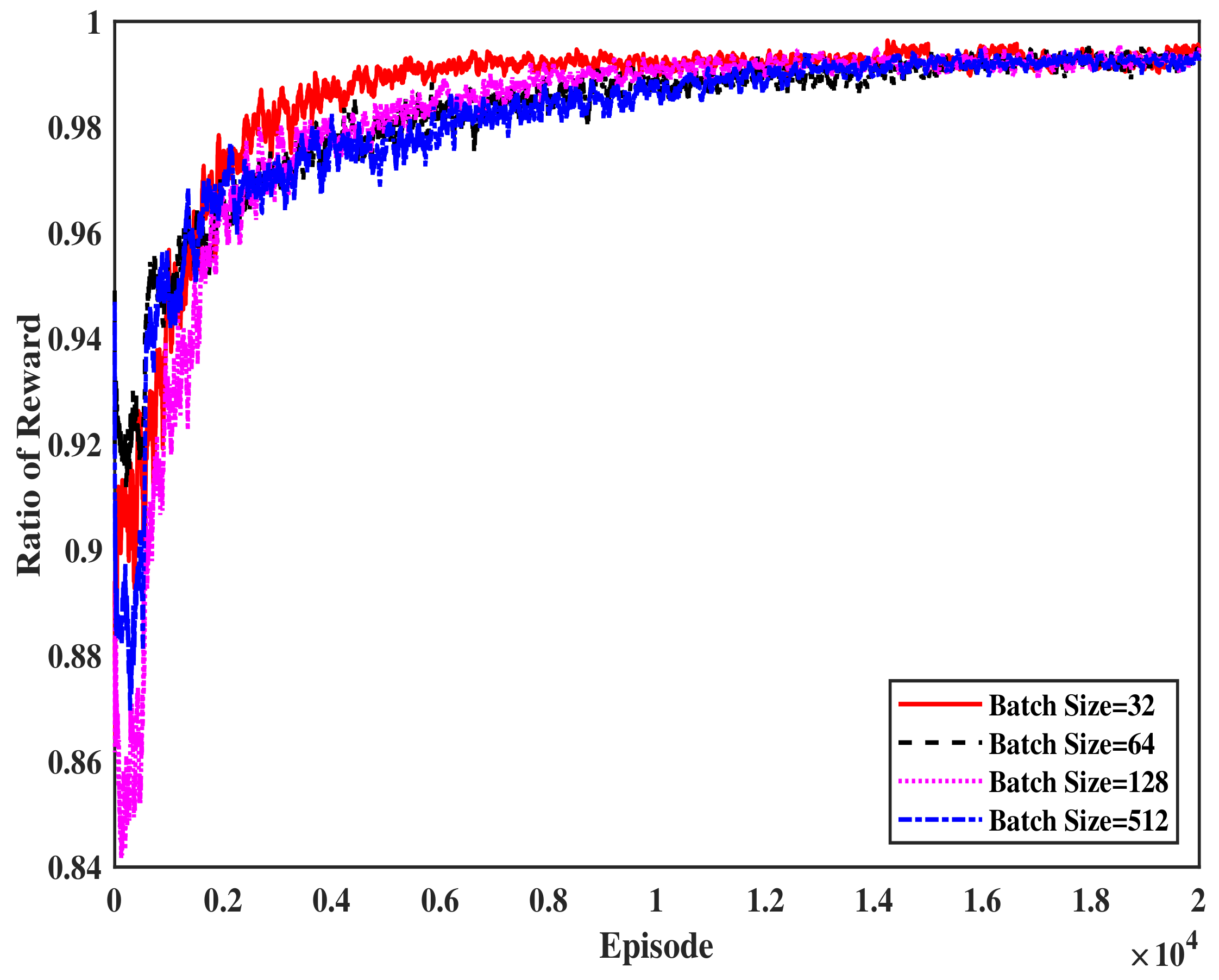

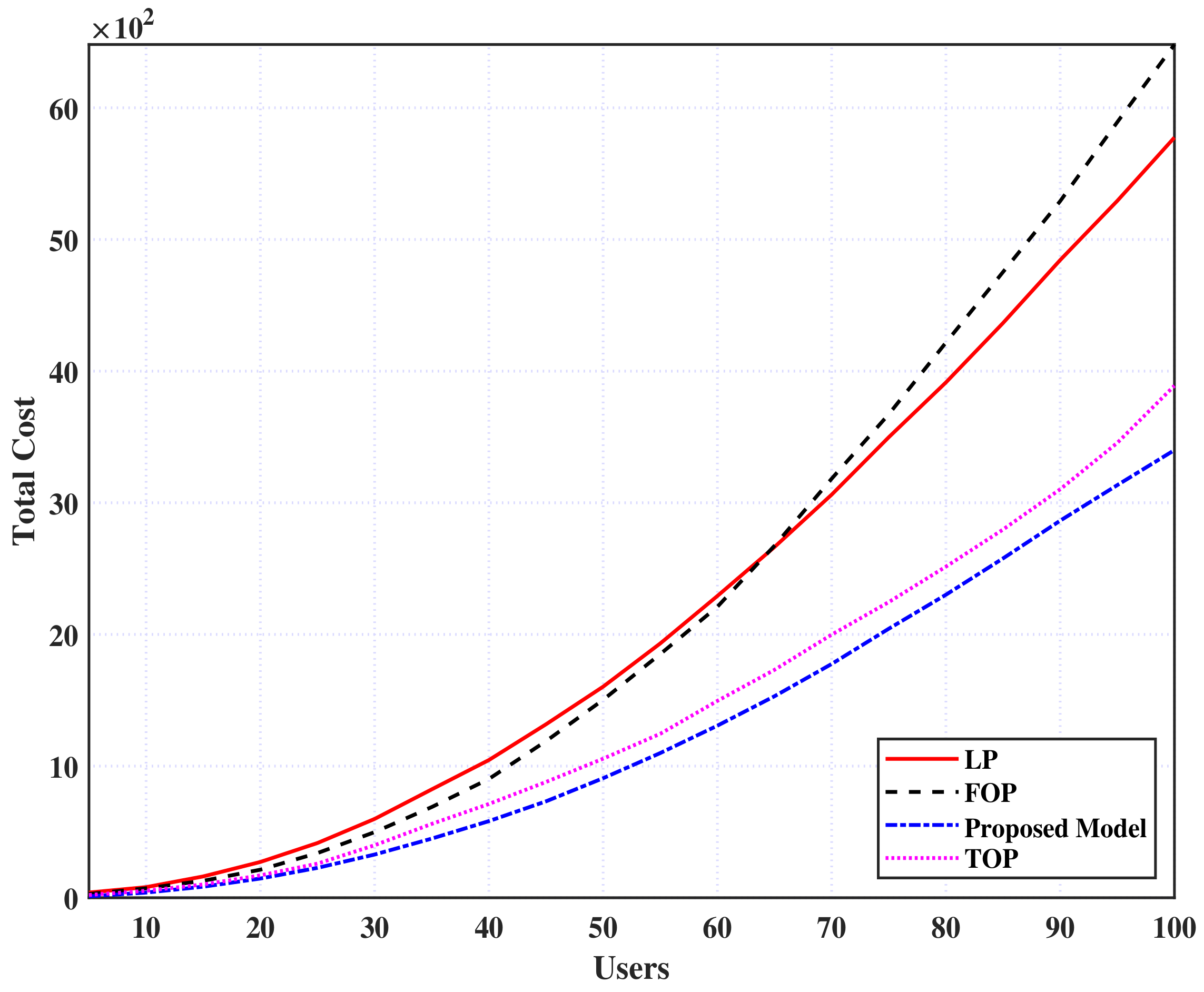

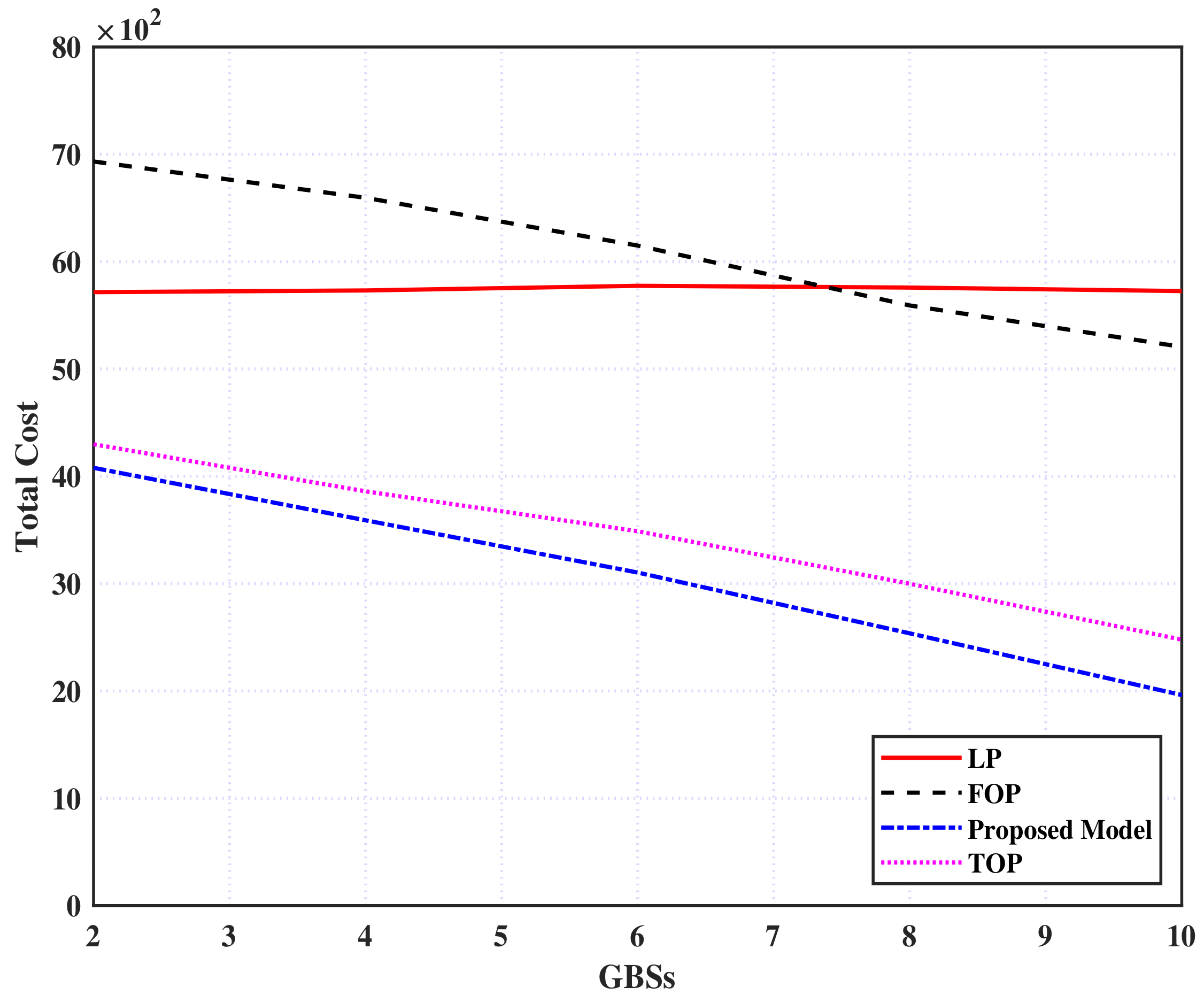

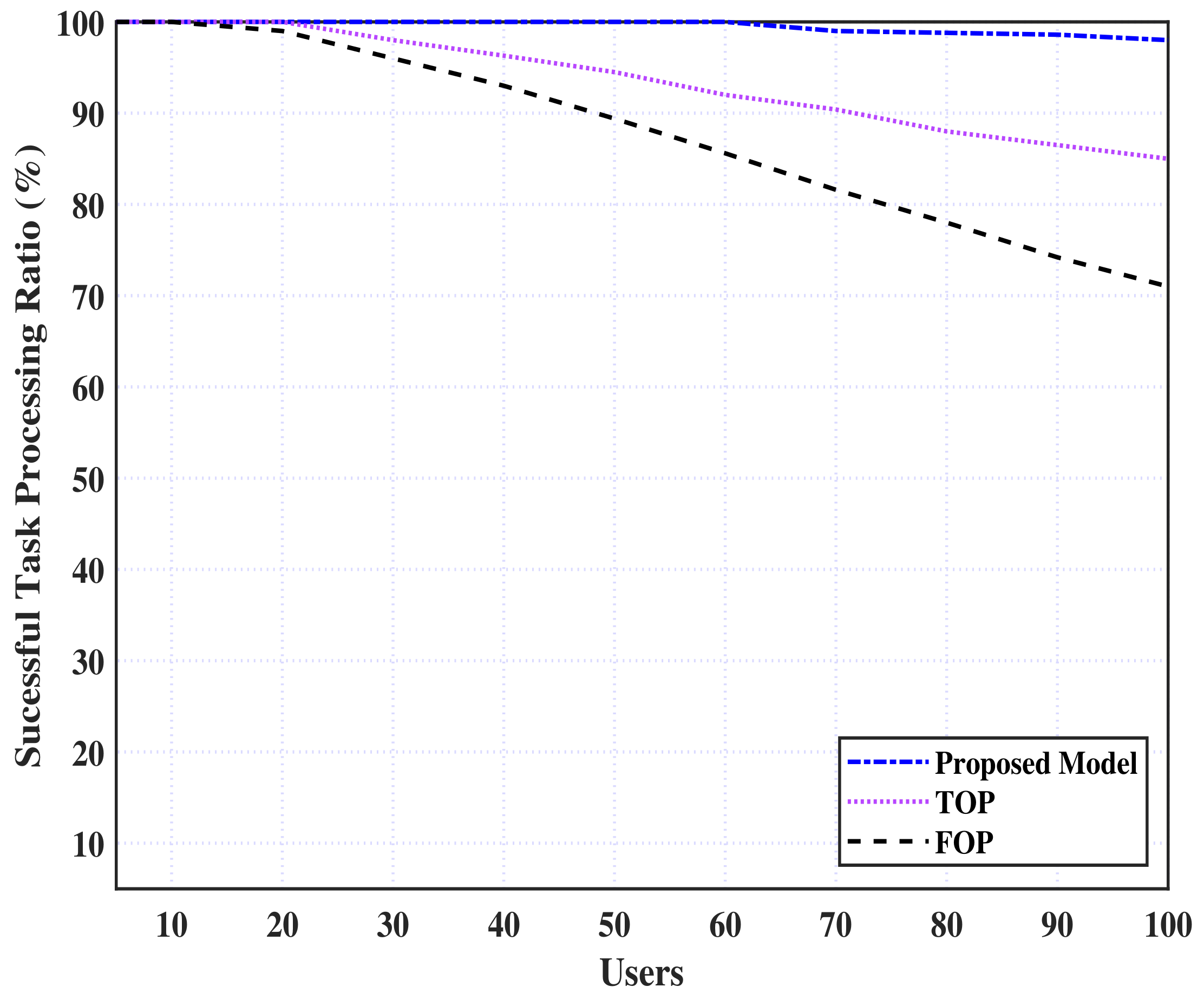

- Simulation findings demonstrate that the proposed model not only exhibits a fast and effective convergence performance but also significantly decreases the system costs compared with a benchmark approach.

2. Related Work

3. System Model and Problem Formulation

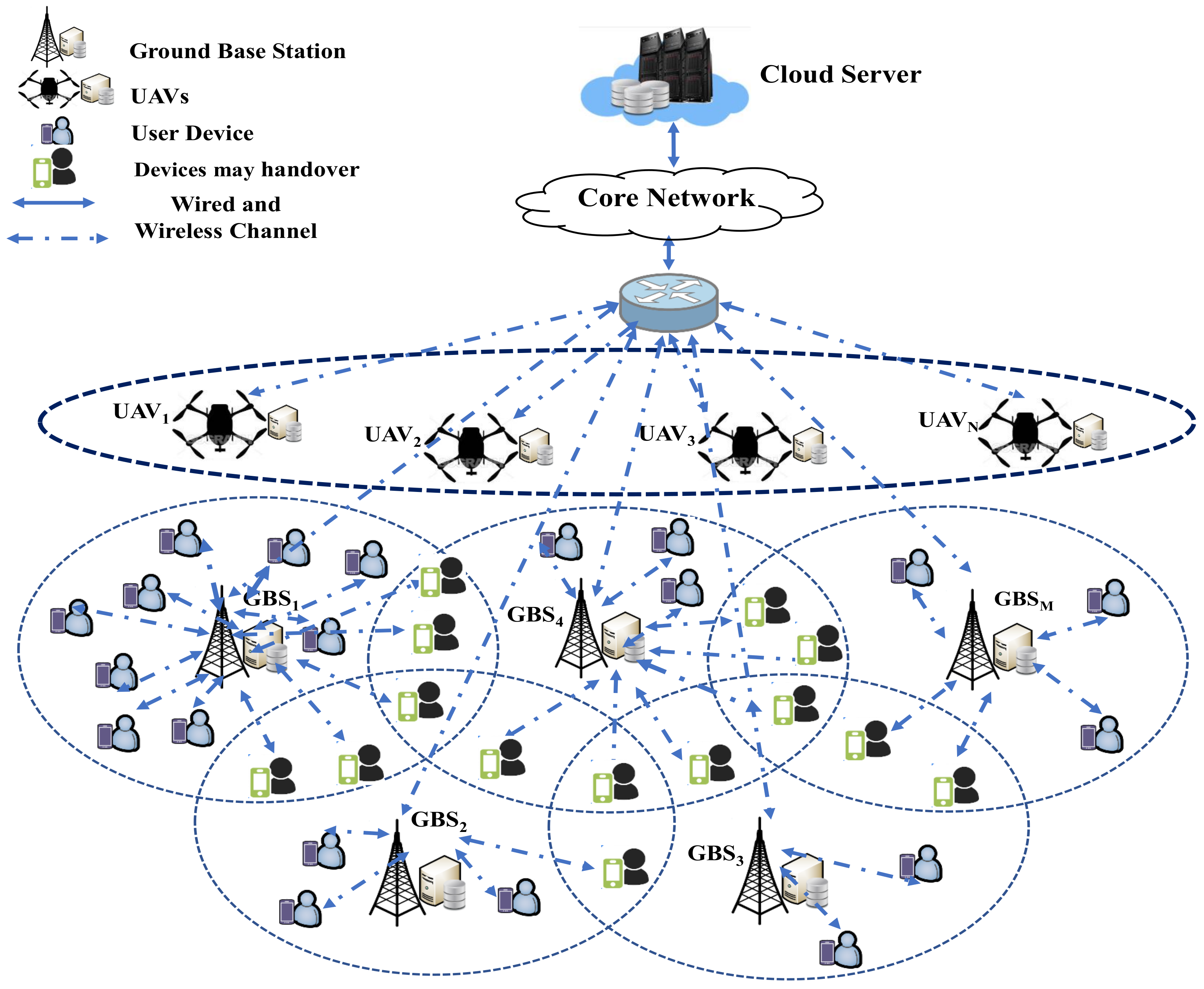

3.1. System Model

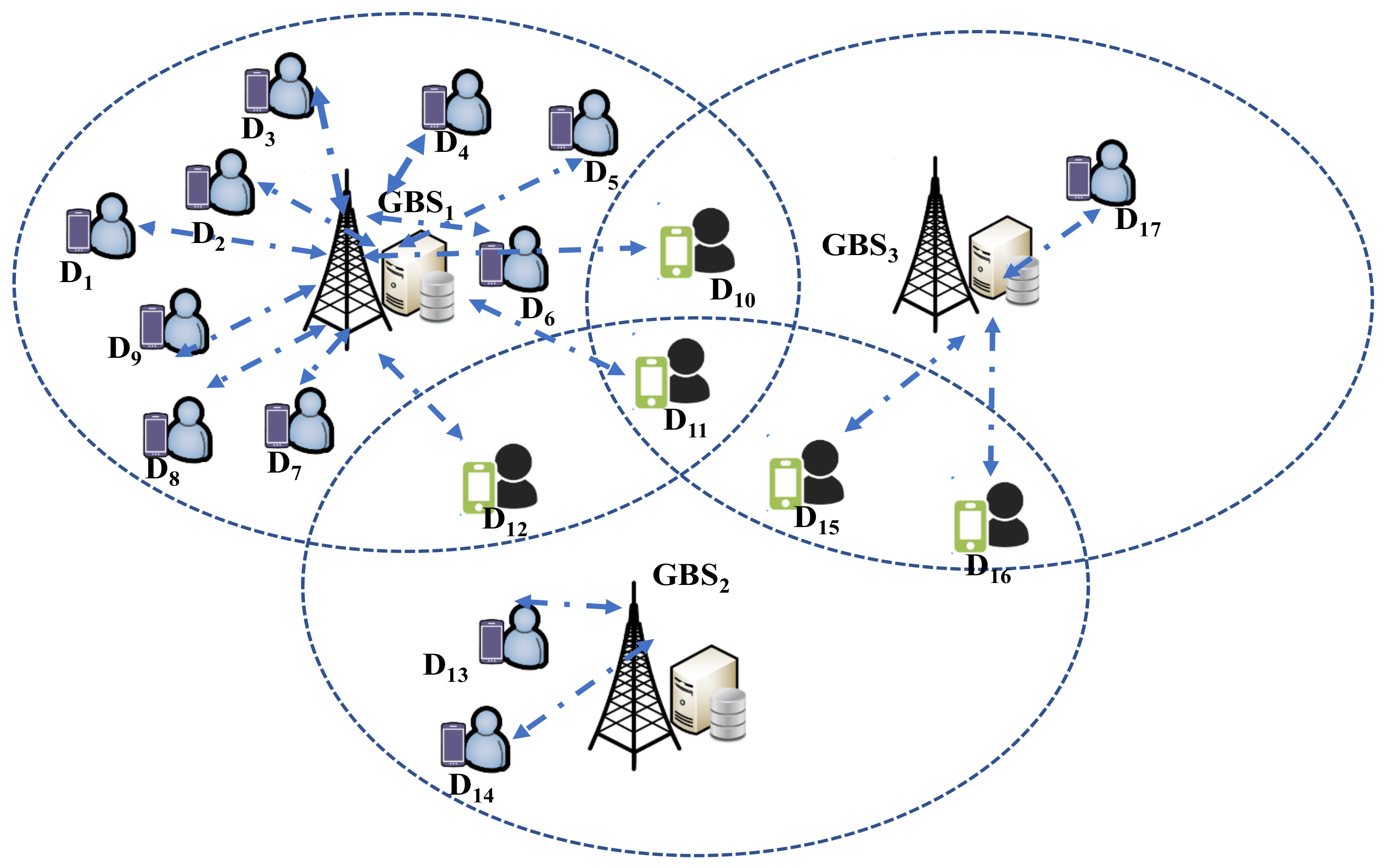

3.1.1. Load Balancing

Algorithm 1. GBSs Balancing Load
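As a rough illustration of the redistribution idea behind Algorithm 1 (not the authors' listing), the sketch below assigns each user to the GBS with the smallest estimated upload plus computation time and sends users that no GBS can absorb to a UAV. The `Gbs` and `User` classes, the per-GBS capacity limit, the heaviest-task-first ordering, and all numeric values are assumptions made for this example only.

```python
from dataclasses import dataclass


@dataclass
class Gbs:
    name: str
    cpu_hz: float      # assumed server speed in cycles per second
    capacity: int      # assumed maximum number of users served
    load: int = 0      # users currently assigned


@dataclass
class User:
    name: str
    data_bits: float             # task size to upload
    cycles: float                # CPU cycles the task needs
    rate_bps: dict[str, float]   # reachable GBS -> uplink rate (proxy for location)


def estimated_time(user: User, gbs: Gbs) -> float:
    """Upload time plus computation time of one task on one GBS."""
    return user.data_bits / user.rate_bps[gbs.name] + user.cycles / gbs.cpu_hz


def balance(users: list[User], stations: list[Gbs]) -> dict[str, str]:
    """Greedy pass: heaviest tasks first, each to its fastest GBS with room."""
    assignment: dict[str, str] = {}
    for user in sorted(users, key=lambda u: u.cycles, reverse=True):
        free = [g for g in stations
                if g.load < g.capacity and g.name in user.rate_bps]
        if not free:
            assignment[user.name] = "UAV"  # overflow handled by a hovering UAV
            continue
        best = min(free, key=lambda g: estimated_time(user, g))
        best.load += 1
        assignment[user.name] = best.name
    return assignment


# Illustrative values only: two GBSs with room for one user each, three users.
stations = [Gbs("GBS-A", cpu_hz=10e9, capacity=1),
            Gbs("GBS-B", cpu_hz=5e9, capacity=1)]
rates = {"GBS-A": 10e6, "GBS-B": 10e6}
users = [User("u1", 120e6, 25e9, rates),
         User("u2", 120e6, 20e9, rates),
         User("u3", 120e6, 10e9, rates)]
result = balance(users, stations)
print(result)
```

A greedy pass is only one possible design; it is easy to follow but does not guarantee a globally optimal assignment.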

3.1.2. Communication Model

3.1.3. Computation Model

3.1.4. Problem Formulation

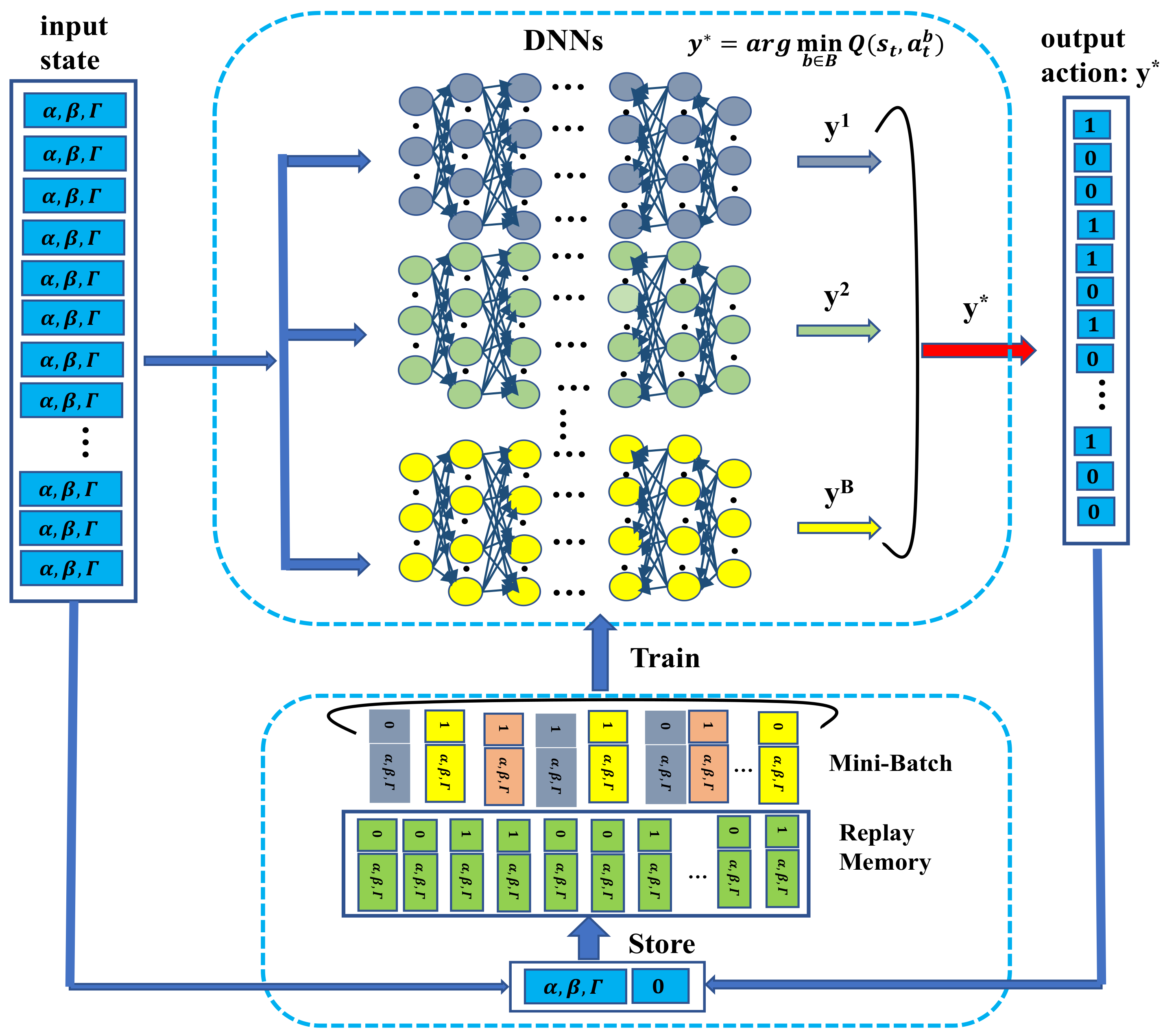

4. Deep Reinforcement Learning-Based Approach for Solving the Problem

4.1. Reinforcement Learning Introduction

4.2. Reinforcement Learning Key Elements

- State: In our study, the state space S is defined by the computational requirements of the intensive application.

- Action: The offloading decision specifies the action space A; in each state, an action is selected by following the policy.

- Reward: The reward value is given by the objective function in Equation (11) of our problem formulation. The objective function value at time t is therefore computed under the policy from the current state and the selected action. The same procedure is then repeated as the time index increases. Because the objective is the system cost, the policy is chosen so that the total reward is minimized.
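The three elements above can be sketched with a very small tabular learner. This is a simplified stand-in, not the distributed deep reinforcement-learning algorithm of this paper: the two task classes, the `cost` table that takes the place of Equation (11), and the hyperparameters are all hypothetical.

```python
import random

# Hypothetical task classes standing in for the computational requirements.
STATES = ["small", "large"]
ACTIONS = [0, 1]  # offloading decision: 0 = run locally, 1 = offload


def cost(state: str, action: int) -> float:
    """Placeholder for the objective of Equation (11); values are made up."""
    table = {("small", 0): 1.0, ("small", 1): 2.0,
             ("large", 0): 9.0, ("large", 1): 3.0}
    return table[(state, action)]


def train(episodes: int = 500, alpha: float = 0.5,
          epsilon: float = 0.2, seed: int = 0) -> dict:
    """One-step value learning: estimate the cost of each (state, action)."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(episodes):
        s = rng.choice(STATES)                      # observe a task
        if rng.random() < epsilon:                  # explore
            a = rng.choice(ACTIONS)
        else:                                       # exploit: lowest estimated cost
            a = min(ACTIONS, key=lambda x: q[(s, x)])
        q[(s, a)] += alpha * (cost(s, a) - q[(s, a)])
    return q


def policy(q: dict, state: str) -> int:
    """Greedy policy: pick the action with the lowest learned cost."""
    return min(ACTIONS, key=lambda a: q[(state, a)])


q = train()
print({s: policy(q, s) for s in STATES})
```

Since the reward here is a cost, the learner takes the minimum over actions rather than the maximum used in the usual reward-maximizing formulation.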

4.3. Distributed Deep Reinforcement Learning-Based Algorithm

Algorithm 2. Distributed Deep Reinforcement Learning-Based Algorithm

5. Performance Evaluation and Discussion

5.1. Experiment Setup

5.2. Experiment Results and Discussion

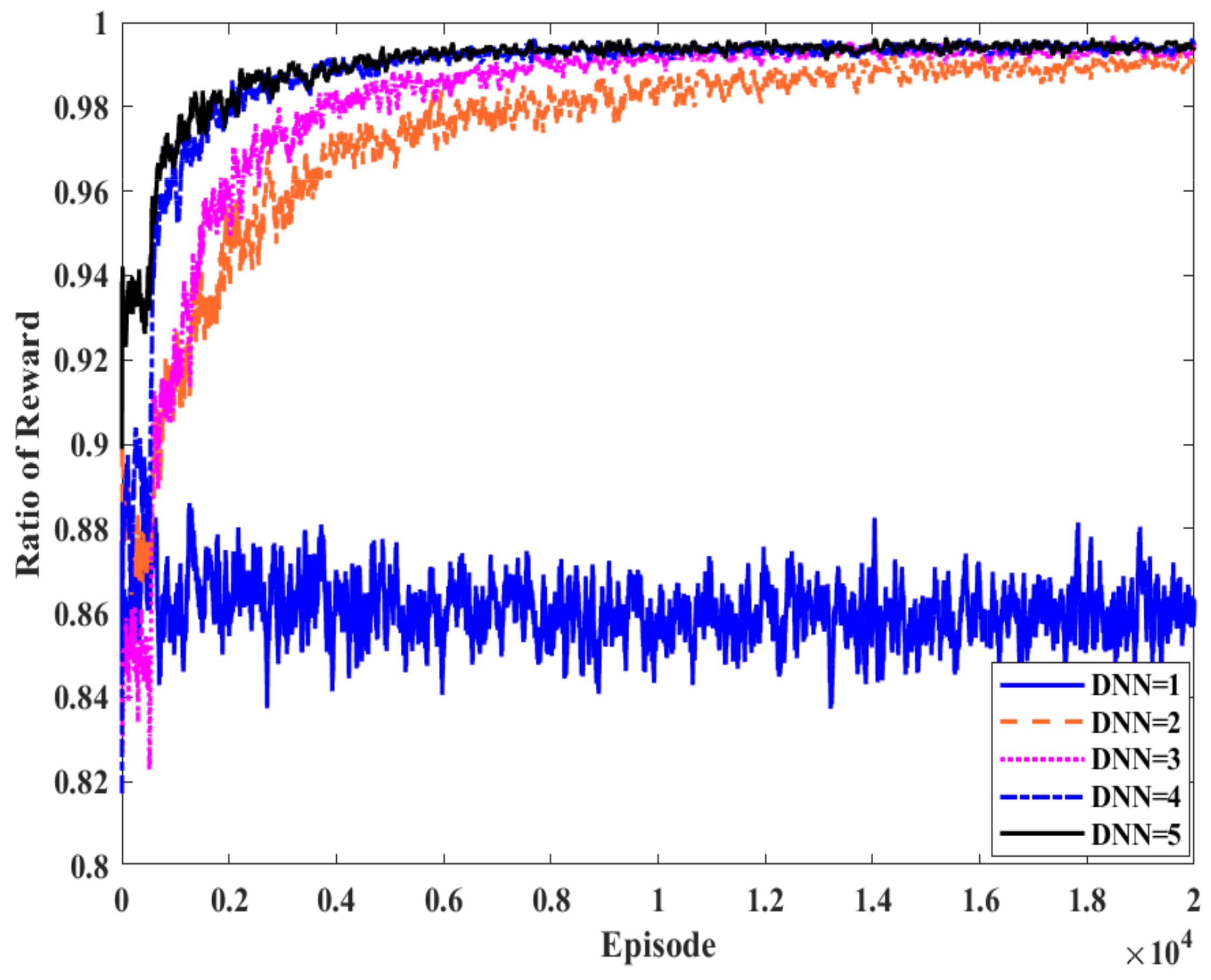

5.2.1. Convergence Performance of System

5.2.2. System Performance

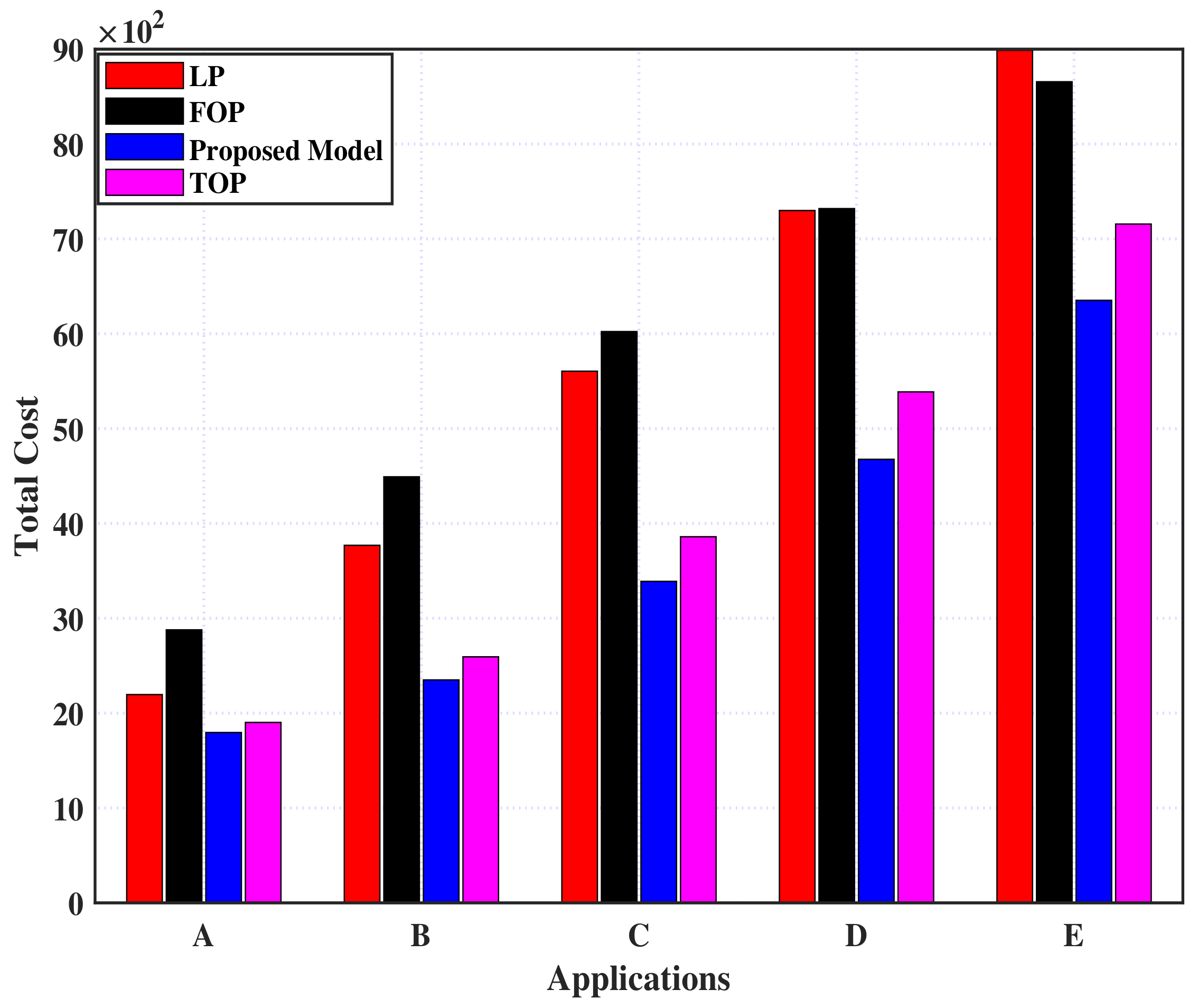

- Local Policy (LP): In this policy, there is no offloading. The application’s tasks are carried out locally on the device resources.

- Full Offloading Policy (FOP): This policy involves offloading the application’s tasks to GBSs for remote processing.

- Proposed Model Policy: In this policy, the application’s tasks are processed according to the offloading decision produced by our proposed model, which minimizes the total overhead of the system.

- Task Offloading Policy (TOP [47]): This policy handles the mobile users’ application tasks based on the model proposed in [47], in which each mobile user sends its application’s tasks to the GBS it is connected to, without taking the selection of GBSs into consideration.
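To make the difference between these policies concrete, the following sketch evaluates LP, FOP, and a cost-minimizing decision on a toy cost model. The per-task costs and the congestion factor are invented for illustration, an exhaustive search stands in for the proposed model, and TOP is left out because GBS selection is not modeled here.

```python
from itertools import product

# (local_cost, offload_cost) per task; purely illustrative numbers.
TASKS = [(4.0, 2.0), (1.0, 3.0), (6.0, 2.5)]
CONGESTION = 0.5  # assumed penalty: offloaded tasks share the edge server


def total_cost(decision: tuple) -> float:
    """System cost of a 0/1 decision vector (1 = offload the task)."""
    offloaded = sum(decision)
    factor = 1.0 + CONGESTION * max(offloaded - 1, 0)
    return sum(off * factor if d else loc
               for d, (loc, off) in zip(decision, TASKS))


local_policy = (0,) * len(TASKS)      # LP: nothing is offloaded
full_offloading = (1,) * len(TASKS)   # FOP: everything is offloaded
# Stand-in for the proposed model: search all decisions for the lowest cost.
best = min(product((0, 1), repeat=len(TASKS)), key=total_cost)

for name, decision in [("LP", local_policy), ("FOP", full_offloading),
                       ("best", best)]:
    print(name, decision, total_cost(decision))
```

With a shared server, neither extreme is the cheapest in this toy setting, which is the intuition behind making the offloading decision per task.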

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Asghari, P.; Rahmani, A.M.; Javadi, H.H.S. Internet of Things applications: A systematic review. Comput. Netw. 2019, 148, 241–261.

2. Paramonov, A.; Muthanna, A.; Aboulola, O.I.; Elgendy, I.A.; Alharbey, R.; Tonkikh, E.; Koucheryavy, A. Beyond 5G network architecture study: Fractal properties of access network. Appl. Sci. 2020, 10, 7191.

3. Fagroud, F.Z.; Ajallouda, L.; Lahmar, E.H.B.; Toumi, H.; Zellou, A.; El Filali, S. A Brief Survey on Internet of Things (IoT). In Proceedings of the International Conference on Digital Technologies and Applications, Fez, Morocco, 29–30 January 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 335–344.

4. Kim, Y.G.; Kong, J.; Chung, S.W. A survey on recent OS-level energy management techniques for mobile processing units. IEEE Trans. Parallel Distrib. Syst. 2018, 29, 2388–2401.

5. Kumar, K.; Liu, J.; Lu, Y.H.; Bhargava, B. A survey of computation offloading for mobile systems. Mob. Netw. Appl. 2013, 18, 129–140.

6. Khayyat, M.; Elgendy, I.A.; Muthanna, A.; Alshahrani, A.S.; Alharbi, S.; Koucheryavy, A. Advanced deep learning-based computational offloading for multilevel vehicular edge-cloud computing networks. IEEE Access 2020, 8, 137052–137062.

7. Kalra, V.; Rahi, S.; Tanwar, P.; Sharma, M.S. A Tour Towards the Security Issues of Mobile Cloud Computing: A Survey. In Emerging Technologies for Computing, Communication and Smart Cities; Springer: Berlin/Heidelberg, Germany, 2022; pp. 577–589.

8. Chakraborty, A.; Mukherjee, A.; Bhattacharyya, S.; Singh, S.K.; De, D. Multi-criterial Offloading Decision Making in Green Mobile Cloud Computing. In Green Mobile Cloud Computing; Springer: Berlin/Heidelberg, Germany, 2022; pp. 71–105.

9. Othman, M.; Madani, S.A.; Khan, S.U. A survey of mobile cloud computing application models. IEEE Commun. Surv. Tutor. 2013, 16, 393–413.

10. Noor, T.H.; Zeadally, S.; Alfazi, A.; Sheng, Q.Z. Mobile cloud computing: Challenges and future research directions. J. Netw. Comput. Appl. 2018, 115, 70–85.

11. Elgendy, I.A.; Zhang, W.Z.; Liu, C.Y.; Hsu, C.H. An efficient and secured framework for mobile cloud computing. IEEE Trans. Cloud Comput. 2018, 9, 79–87.

12. Mach, P.; Becvar, Z. Mobile edge computing: A survey on architecture and computation offloading. IEEE Commun. Surv. Tutor. 2017, 19, 1628–1656.

13. Elgendy, I.A.; Yadav, R. Survey on mobile edge-cloud computing: A taxonomy on computation offloading approaches. In Security and Privacy Preserving for IoT and 5G Networks; Springer: Berlin/Heidelberg, Germany, 2022; pp. 117–158.

14. Zhang, S.; Zhang, H.; Di, B.; Song, L. Joint trajectory and power optimization for UAV sensing over cellular networks. IEEE Commun. Lett. 2018, 22, 2382–2385.

15. Alzenad, M.; El-Keyi, A.; Lagum, F.; Yanikomeroglu, H. 3-D placement of an unmanned aerial vehicle base station (UAV-BS) for energy-efficient maximal coverage. IEEE Wirel. Commun. Lett. 2017, 6, 434–437.

16. Motlagh, N.H.; Bagaa, M.; Taleb, T. UAV-based IoT platform: A crowd surveillance use case. IEEE Commun. Mag. 2017, 55, 128–134.

17. Mao, Y.; You, C.; Zhang, J.; Huang, K.; Letaief, K.B. A survey on mobile edge computing: The communication perspective. IEEE Commun. Surv. Tutor. 2017, 19, 2322–2358.

18. Liu, L.; Chang, Z.; Guo, X.; Ristaniemi, T. Multi-objective optimization for computation offloading in mobile-edge computing. In Proceedings of the 2017 IEEE Symposium on Computers and Communications (ISCC), Heraklion, Greece, 3–6 July 2017; pp. 832–837.

19. Alhelaly, S.; Muthanna, A.; Elgendy, I.A. Optimizing Task Offloading Energy in Multi-User Multi-UAV-Enabled Mobile Edge-Cloud Computing Systems. Appl. Sci. 2022, 12, 6566.

20. Pham, Q.V.; Fang, F.; Ha, V.N.; Piran, M.J.; Le, M.; Le, L.B.; Hwang, W.J.; Ding, Z. A survey of multi-access edge computing in 5G and beyond: Fundamentals, technology integration, and state-of-the-art. IEEE Access 2020, 8, 116974–117017.

21. Chen, J.; Chen, S.; Luo, S.; Wang, Q.; Cao, B.; Li, X. An intelligent task offloading algorithm (iTOA) for UAV edge computing network. Digit. Commun. Netw. 2020, 6, 433–443.

22. Qi, W.; Sun, H.; Yu, L.; Xiao, S.; Jiang, H. Task Offloading Strategy Based on Mobile Edge Computing in UAV Network. Entropy 2022, 24, 736.

23. Yu, Z.; Gong, Y.; Gong, S.; Guo, Y. Joint task offloading and resource allocation in UAV-enabled mobile edge computing. IEEE Internet Things J. 2020, 7, 3147–3159.

24. Zhu, S.; Gui, L.; Zhao, D.; Cheng, N.; Zhang, Q.; Lang, X. Learning-based computation offloading approaches in UAVs-assisted edge computing. IEEE Trans. Veh. Technol. 2021, 70, 928–944.

25. Zhao, N.; Ye, Z.; Pei, Y.; Liang, Y.C.; Niyato, D. Multi-Agent Deep Reinforcement Learning for Task Offloading in UAV-assisted Mobile Edge Computing. IEEE Trans. Wirel. Commun. 2022, 21, 6949–6960.

26. Sacco, A.; Esposito, F.; Marchetto, G.; Montuschi, P. Sustainable task offloading in UAV networks via multi-agent reinforcement learning. IEEE Trans. Veh. Technol. 2021, 70, 5003–5015.

27. He, X.; Jin, R.; Dai, H. Multi-hop task offloading with on-the-fly computation for multi-UAV remote edge computing. IEEE Trans. Commun. 2021, 70, 1332–1344.

28. Luo, Y.; Ding, W.; Zhang, B. Optimization of task scheduling and dynamic service strategy for multi-UAV-enabled mobile-edge computing system. IEEE Trans. Cogn. Commun. Netw. 2021, 7, 970–984.

29. Yang, L.; Yao, H.; Wang, J.; Jiang, C.; Benslimane, A.; Liu, Y. Multi-UAV-enabled load-balance mobile-edge computing for IoT networks. IEEE Internet Things J. 2020, 7, 6898–6908.

30. Li, W.T.; Zhao, M.; Wu, Y.H.; Yu, J.J.; Bao, L.Y.; Yang, H.; Liu, D. Collaborative offloading for UAV-enabled time-sensitive MEC networks. EURASIP J. Wirel. Commun. Netw. 2021, 2021, 1.

31. Zhou, Y.; Ge, H.; Ma, B.; Zhang, S.; Huang, J. Collaborative task offloading and resource allocation with hybrid energy supply for UAV-assisted multi-clouds. J. Cloud Comput. 2022, 11, 42.

32. He, Y.; Zhai, D.; Huang, F.; Wang, D.; Tang, X.; Zhang, R. Joint task offloading, resource allocation, and security assurance for mobile edge computing-enabled UAV-assisted VANETs. Remote Sens. 2021, 13, 1547.

33. Munawar, S.; Ali, Z.; Waqas, M.; Tu, S.; Hassan, S.A.; Abbas, G. Cooperative Computational Offloading in Mobile Edge Computing for Vehicles: A Model-based DNN Approach. IEEE Trans. Veh. Technol. 2022, 1–16.

34. Mohamed, H.; Al-Masri, E.; Kotevska, O.; Souri, A. A Multi-Objective Approach for Optimizing Edge-Based Resource Allocation Using TOPSIS. Electronics 2022, 11, 2888.

35. Chen, J.; Cao, X.; Yang, P.; Xiao, M.; Ren, S.; Zhao, Z.; Wu, D.O. Deep Reinforcement Learning Based Resource Allocation in Multi-UAV-Aided MEC Networks. IEEE Trans. Commun. 2022, 71, 296–309.

36. Xu, D.; Xu, D. Cooperative task offloading and resource allocation for UAV-enabled mobile edge computing systems. Comput. Netw. 2023, 223, 109574.

37. Chai, F.; Zhang, Q.; Yao, H.; Xin, X.; Gao, R.; Guizani, M. Joint Multi-task Offloading and Resource Allocation for Mobile Edge Computing Systems in Satellite IoT. IEEE Trans. Veh. Technol. 2023, 1–15.

38. Banerjee, A.; Sufian, A.; Paul, K.K.; Gupta, S.K. EDTP: Energy and delay optimized trajectory planning for UAV-IoT environment. Comput. Netw. 2022, 202, 108623.

39. Chen, X.; Jiao, L.; Li, W.; Fu, X. Efficient multi-user computation offloading for mobile-edge cloud computing. IEEE/ACM Trans. Netw. 2015, 24, 2795–2808.

40. Zhang, W.Z.; Elgendy, I.A.; Hammad, M.; Iliyasu, A.M.; Du, X.; Guizani, M.; Abd El-Latif, A.A. Secure and optimized load balancing for multitier IoT and edge-cloud computing systems. IEEE Internet Things J. 2020, 8, 8119–8132.

41. Elgendy, I.A.; Zhang, W.; Tian, Y.C.; Li, K. Resource allocation and computation offloading with data security for mobile edge computing. Future Gener. Comput. Syst. 2019, 100, 531–541.

42. Deb, S.; Monogioudis, P. Learning-based uplink interference management in 4G LTE cellular systems. IEEE/ACM Trans. Netw. 2014, 23, 398–411.

43. Dinh, T.Q.; Tang, J.; La, Q.D.; Quek, T.Q. Offloading in mobile edge computing: Task allocation and computational frequency scaling. IEEE Trans. Commun. 2017, 65, 3571–3584.

44. Fooladivanda, D.; Rosenberg, C. Joint resource allocation and user association for heterogeneous wireless cellular networks. IEEE Trans. Wirel. Commun. 2012, 12, 248–257.

45. Ong, H.Y.; Chavez, K.; Hong, A. Distributed deep Q-learning. arXiv 2015, arXiv:1508.04186.

46. Chen, M.H.; Liang, B.; Dong, M. Joint offloading decision and resource allocation for multi-user multi-task mobile cloud. In Proceedings of the 2016 IEEE International Conference on Communications (ICC), Kuala Lumpur, Malaysia, 22–27 May 2016; pp. 1–6.

47. Huang, L.; Feng, X.; Zhang, L.; Qian, L.; Wu, Y. Multi-server multi-user multi-task computation offloading for mobile edge computing networks. Sensors 2019, 19, 1446.

48. Almutairi, J.; Aldossary, M.; Alharbi, H.A.; Yosuf, B.A.; Elmirghani, J.M. Delay-Optimal Task Offloading for UAV-Enabled Edge-Cloud Computing Systems. IEEE Access 2022, 10, 51575–51586.

| Reference | Objective | Proposed Approach | Application | Tier Environment (Two/Multiple) | Weakness |
|---|---|---|---|---|---|
| [21] | Minimize Average Service Latency | An intelligent task-offloading approach | UAV Edge-Cloud Network | ✓ | |
| [22] | Maximize Offloading Revenue | A two-stage Stackelberg game model | UAV Edge-Cloud Network | ✓ | |
| [23] | Minimize IoT Devices’ Service Delay and UAVs’ Energy Consumption | An innovative UAV-enabled MEC model | IoT Devices Network | ✓ | |
| [24] | Minimize Average Mission Response Time | A multi-agent reinforcement learning approach | IoT Devices Network | ✓ | Load-balancing issue is not addressed. |
| [25] | Minimize Execution Delays and Energy Consumption | A collaborative multi-agent deep learning-based framework | IoT Devices Network | ✓ | |
| [26] | Minimize User’s Latency and UAV Energy Consumption | A distributed multi-agent reinforcement learning-based technique | UAV Edge-Cloud Network | ✓ | |
| [27] | Maximize Network Computing Rate | A multi-hop task offloading scheme | IoT Devices Network | ✓ | |
| [28] | Minimize User Energy Consumption | A two-layer task scheduling and dynamic service optimization strategy | IoT Devices Network | ✓ | |
| [29] | Optimize Average Slow-Down for Offloaded Tasks | A multi-UAV-enabled load-balancing scheme | IoT Devices Network | ✓ | |
| [30] | Reduce Ground Nodes’ Energy Consumption | A collaborative multi-task computation and cache offloading scheme | IoT Devices Network | ✓ | |
| [31] | Minimize UAVs’ Power Consumption under Stability Queue Constraint | A collaborative task offloading and resource allocation scheme | IoT Devices Network | ✓ | |
| [32] | Minimize Task Delay | An MEC-enabled UAV-assisted vehicular ad hoc network architecture | Vehicular Edge Computing Network | ✓ | Energy consumption is not considered. |
| [33] | Minimize Task Delay and Increase Service Performance | An integrated model of task offloading, RSUs’ cooperation, and tasks’ division | Vehicular Edge Computing Network | ✓ | |
| [34] | Minimize Energy Consumption and Promote Processing Computations, Network Bandwidth, and Task Execution Time | A multi-tiered edge-based resource allocation optimization framework | IoT Devices Network | ✓ | |
| [35] | Decrease the Energy Consumption and System Latency | A resource allocation strategy for multi-UAV-aided MEC networks | UAV-Aided MEC Network | ✓ | |
| [36] | Minimize Overall Energy Consumption of Mobile Devices and UAVs | A cooperative task offloading and resource allocation approach for UAV-aided MEC systems | UAV-Aided MEC Network | ✓ | |
| [37] | Minimize the Total Cost of All Tasks | A multi-task offloading and resource allocation approach | MEC Systems in Satellite IoT | ✓ | |
| [38] | Optimize Energy Consumption and Transition Times between Hovering Points | An energy and delay-optimized trajectory planning framework | UAV-Aided MEC Network | ✓ | Load-balancing issue is not addressed. |

| IoT Devices | Data Size (MB) | CPU Cycles (Gigacycles) | Estimated Upload Time (s) | Estimated Computation Time (s) | Best GBS |
|---|---|---|---|---|---|
| D | 15 | 15 | GBS | GBS | GBS |
| | | | GBS | GBS | |
| D | 20 | 10 | GBS | GBS | GBS |
| | | | GBS | GBS | |
| | | | GBS | GBS | |
| D | 15 | 20 | GBS | GBS | GBS |
| | | | GBS | GBS | |
| D | 10 | 15 | GBS | GBS | GBS |
| | | | GBS | GBS | |
| D | 15 | 25 | GBS | GBS | GBS |
| | | | GBS | GBS | |

| Application | Label | CPU Cycles/Byte |
|---|---|---|
| Health Monitoring | A | 500 |
| Automatic Number Plate Reading | B | 960 |
| x264 CBR encode | C | 1900 |
| Traffic Management | D | 5900 |
| Augmented Reality | E | 12,000 |
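The table gives each application's computational intensity, so the CPU cycles a task requires follow from multiplying its data size in bytes by the cycles-per-byte value. The short sketch below works this out; the helper name `required_gigacycles`, the convention 1 MB = 10^6 bytes, and the 15 MB input are assumptions chosen for illustration.

```python
# Cycles-per-byte values copied from the application table above.
CYCLES_PER_BYTE = {"A": 500, "B": 960, "C": 1900, "D": 5900, "E": 12_000}

BYTES_PER_MB = 1_000_000  # assumption: decimal megabytes


def required_gigacycles(label: str, data_mb: float) -> float:
    """CPU demand of a task in gigacycles: bytes times cycles per byte."""
    return data_mb * BYTES_PER_MB * CYCLES_PER_BYTE[label] / 1e9


# Example: the same 15 MB input under two different applications.
print(required_gigacycles("A", 15))  # health monitoring
print(required_gigacycles("E", 15))  # augmented reality
```

The same input size therefore leads to very different CPU demand depending on the application, which is why the offloading decision depends on the task type as well as on the data size.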


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Elgendy, I.A.; Meshoul, S.; Hammad, M. Joint Task Offloading, Resource Allocation, and Load-Balancing Optimization in Multi-UAV-Aided MEC Systems. Appl. Sci. 2023, 13, 2625. https://doi.org/10.3390/app13042625
