Deep Analysis of Student Body Activities to Detect Engagement State in E-Learning Sessions

Abstract

1. Introduction

Contributions and Novelty

- The new method supports future work on customizing interactive e-learning systems.

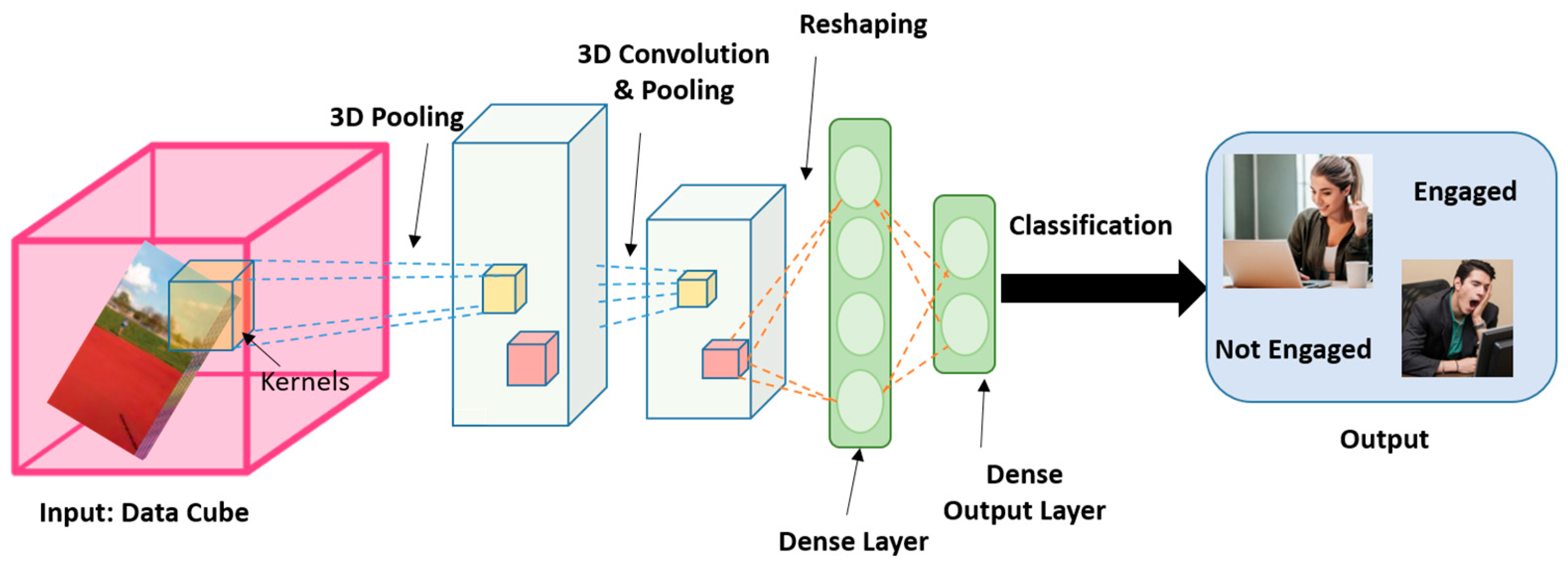

- This work presents a novel approach to automatic engagement-level detection that uses a deep 3D CNN to learn the spatiotemporal attributes of micro- and macro-body actions from video input. The 3D model learns the required gesture and appearance features directly from students' micro- and macro-body gestures.

- In this study, we address the significance of learning the spatiotemporal features of micro- and macro-body activities. The main academic contribution is a deep 3D CNN model trained on realistic datasets that outperforms previous works. This work also contributes to emerging educational technology trends: the proposed model can extend existing interactive e-learning systems with an additional indicator of learner performance based on the level of engagement.

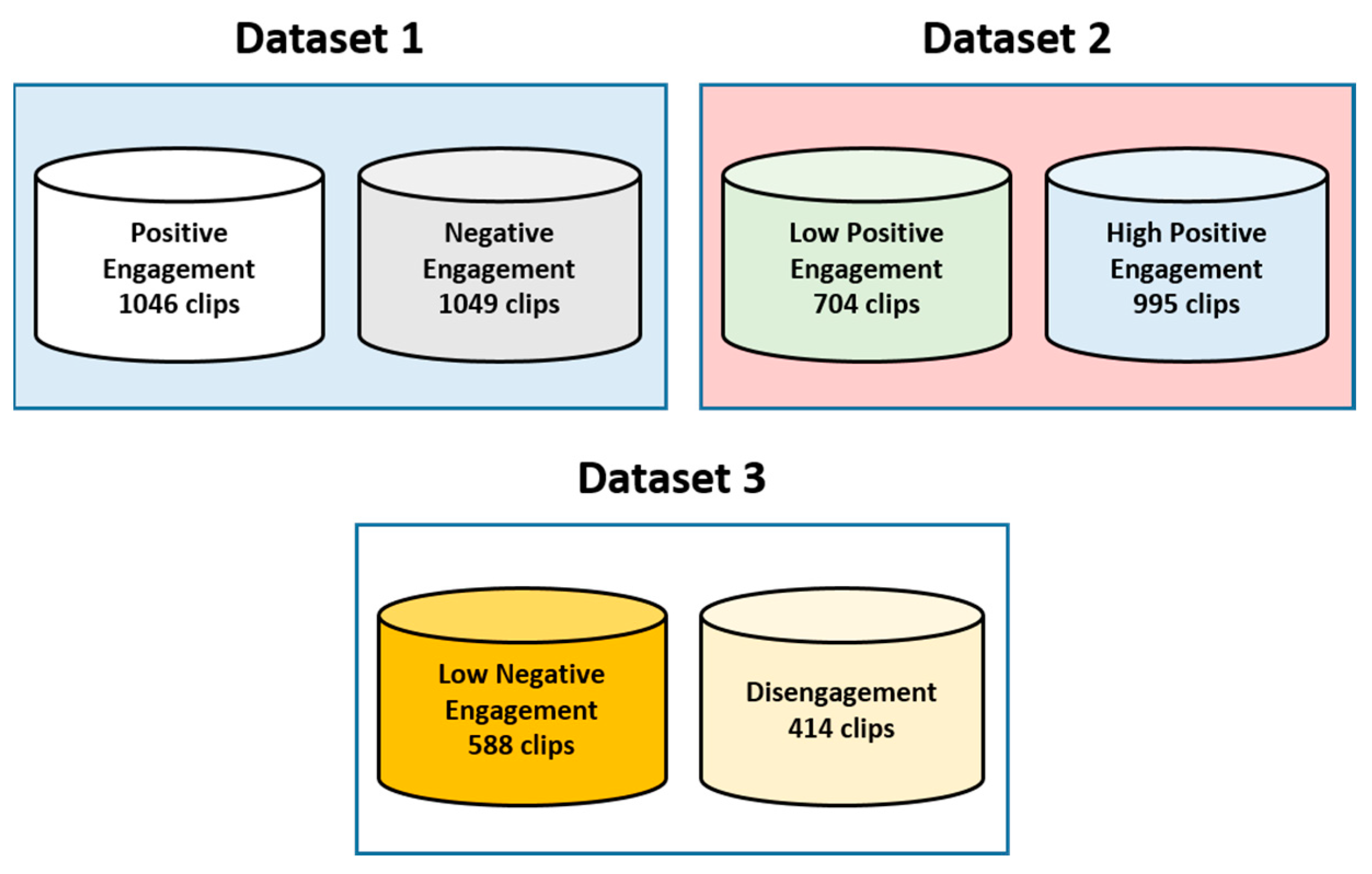

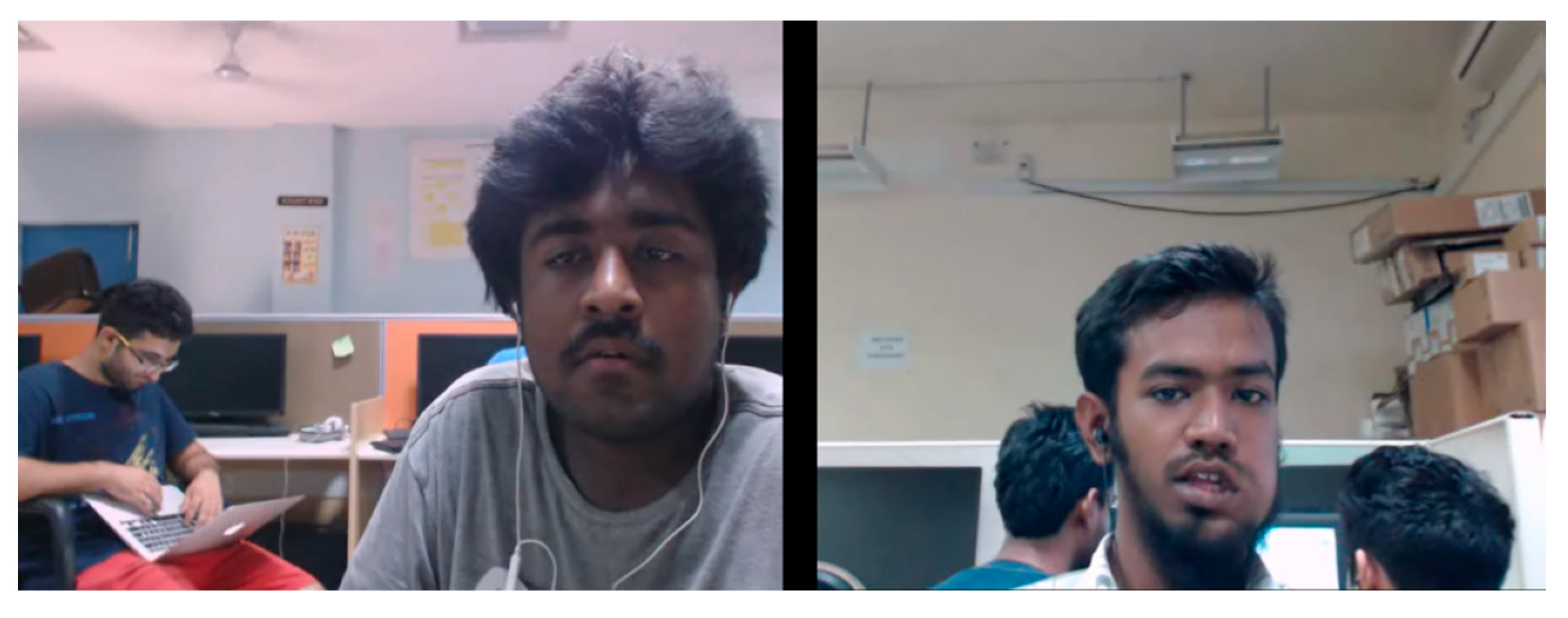

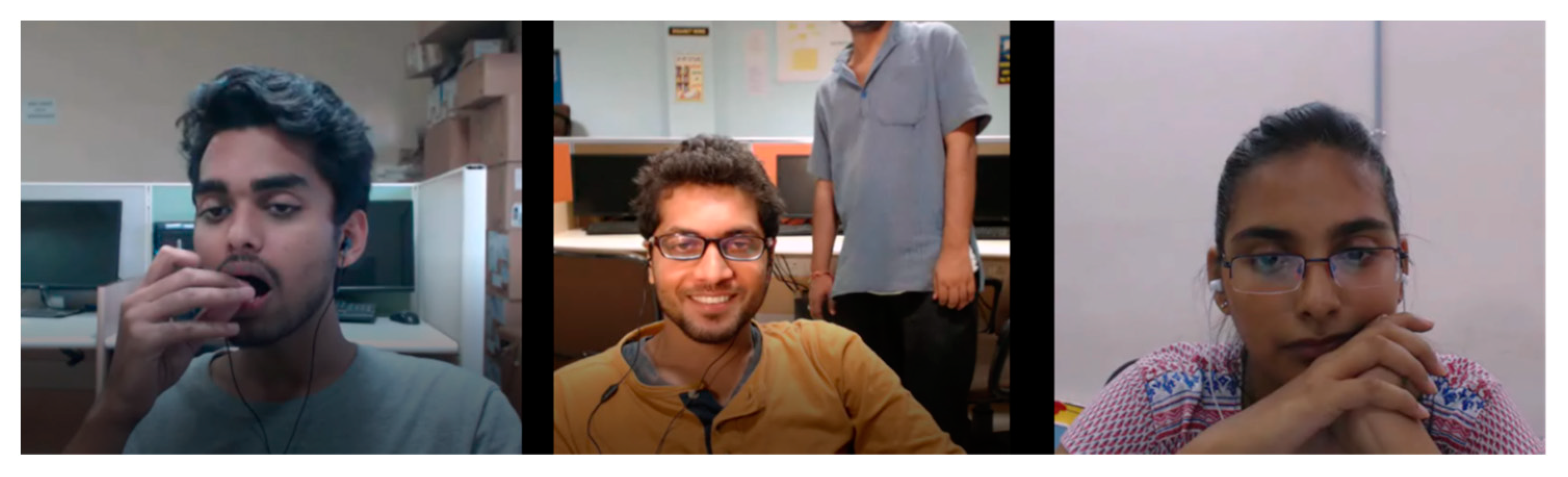

- We collect and process two new versions of our original dataset (Dataset 1), referred to as Dataset 2 and Dataset 3. The data were recorded by real students in real scenarios in an uncontrolled environment, which poses many challenges related to the recording settings (features from the dataset are available from the corresponding author on reasonable request).

- We implement two new prediction models, PM2 and PM3, trained on Dataset 2 and Dataset 3, respectively, to measure engagement levels more precisely.

- We empirically identify the model architectures that yield the highest performance.

- We assess the performance of the proposed models through four experiments.
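To make "learning spatiotemporal features" concrete, the following minimal sketch implements a single-channel 3D convolution (strictly, cross-correlation, as in most deep learning libraries) with stride 1 and no padding over a clip indexed as (frame, row, column). The toy clip, the two-frame kernel and the function names are illustrative assumptions, not the authors' implementation; a trained 3D CNN learns many such kernels and stacks them with pooling layers (five convolution and five max-pooling layers per the parameter table).

```python
from typing import List

# A single-channel clip or feature volume, indexed as volume[t][y][x].
Volume = List[List[List[float]]]


def conv3d_valid(clip: Volume, kernel: Volume, bias: float = 0.0) -> Volume:
    """3D cross-correlation with stride 1 and no padding.

    Unlike a 2D convolution applied frame by frame, the kernel spans several
    consecutive frames, so each output mixes appearance and motion cues.
    """
    T, H, W = len(clip), len(clip[0]), len(clip[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out: Volume = []
    for t in range(T - kt + 1):
        plane = []
        for y in range(H - kh + 1):
            row = []
            for x in range(W - kw + 1):
                acc = bias
                # Sum over the kernel's temporal and spatial extent.
                for dt in range(kt):
                    for dy in range(kh):
                        for dx in range(kw):
                            acc += clip[t + dt][y + dy][x + dx] * kernel[dt][dy][dx]
                row.append(acc)
            plane.append(row)
        out.append(plane)
    return out


def relu(volume: Volume) -> Volume:
    """Element-wise ReLU over a 3D feature volume."""
    return [[[max(0.0, v) for v in row] for row in plane] for plane in volume]


# Toy clip: 4 frames of 3x3 pixels; a bright pixel in the middle row
# moves one column to the right per frame (wrapping around).
clip = [
    [[1.0 if (x == t % 3 and y == 1) else 0.0 for x in range(3)] for y in range(3)]
    for t in range(4)
]

# Hypothetical 2x1x1 temporal-difference kernel: fires where a pixel
# becomes brighter from one frame to the next, i.e., it detects motion.
motion_kernel = [[[-1.0]], [[1.0]]]

features = relu(conv3d_valid(clip, motion_kernel))
print(len(features), len(features[0]), len(features[0][0]))  # 3 3 3
```

A kernel that spans a single frame cannot respond to this moving pixel in the same way, because it never sees two frames at once; this is the intuition behind preferring 3D over frame-based 2D analysis.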

2. Related Work

2.1. Facial Features vs. Body Activity for Affect Recognition

2.2. Frame-Based Feature Extraction vs. Video-Based Feature Extraction

2.3. Current Approach

3. New Video Dataset

4. Dataset Collection and Preparation Methodology

- Examination and inspection of unintentional, natural body activities, both micro and macro.

- Examination of how frequently these body activities occur as time progresses.
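Tracking how often body activities occur as a session progresses amounts to a windowed count. The sketch below assumes a hypothetical annotation format of (timestamp in seconds, activity label) pairs, made-up label names and a 30 s window; the paper does not specify its annotation format or window length.

```python
from collections import Counter
from typing import Dict, List, Tuple


def activity_frequency(events: List[Tuple[float, str]], window_s: float) -> Dict[int, Counter]:
    """Count each activity label per fixed-length time window.

    events: (timestamp in seconds, activity label) pairs (hypothetical format).
    Returns {window_index: Counter(label -> occurrences)}.
    """
    if window_s <= 0:
        raise ValueError("window_s must be positive")
    bins: Dict[int, Counter] = {}
    for timestamp, label in events:
        index = int(timestamp // window_s)  # which window the event falls into
        bins.setdefault(index, Counter())[label] += 1
    return bins


# Hypothetical annotations for one recorded session.
events = [
    (2.0, "head_scratch"),
    (14.5, "yawn"),
    (31.0, "yawn"),
    (33.2, "lean_back"),
    (58.9, "yawn"),
]
window = 30.0
freq = activity_frequency(events, window_s=window)
for index in sorted(freq):
    start, end = index * window, (index + 1) * window
    print(f"{start:.0f}-{end:.0f}s", dict(freq[index]))
```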

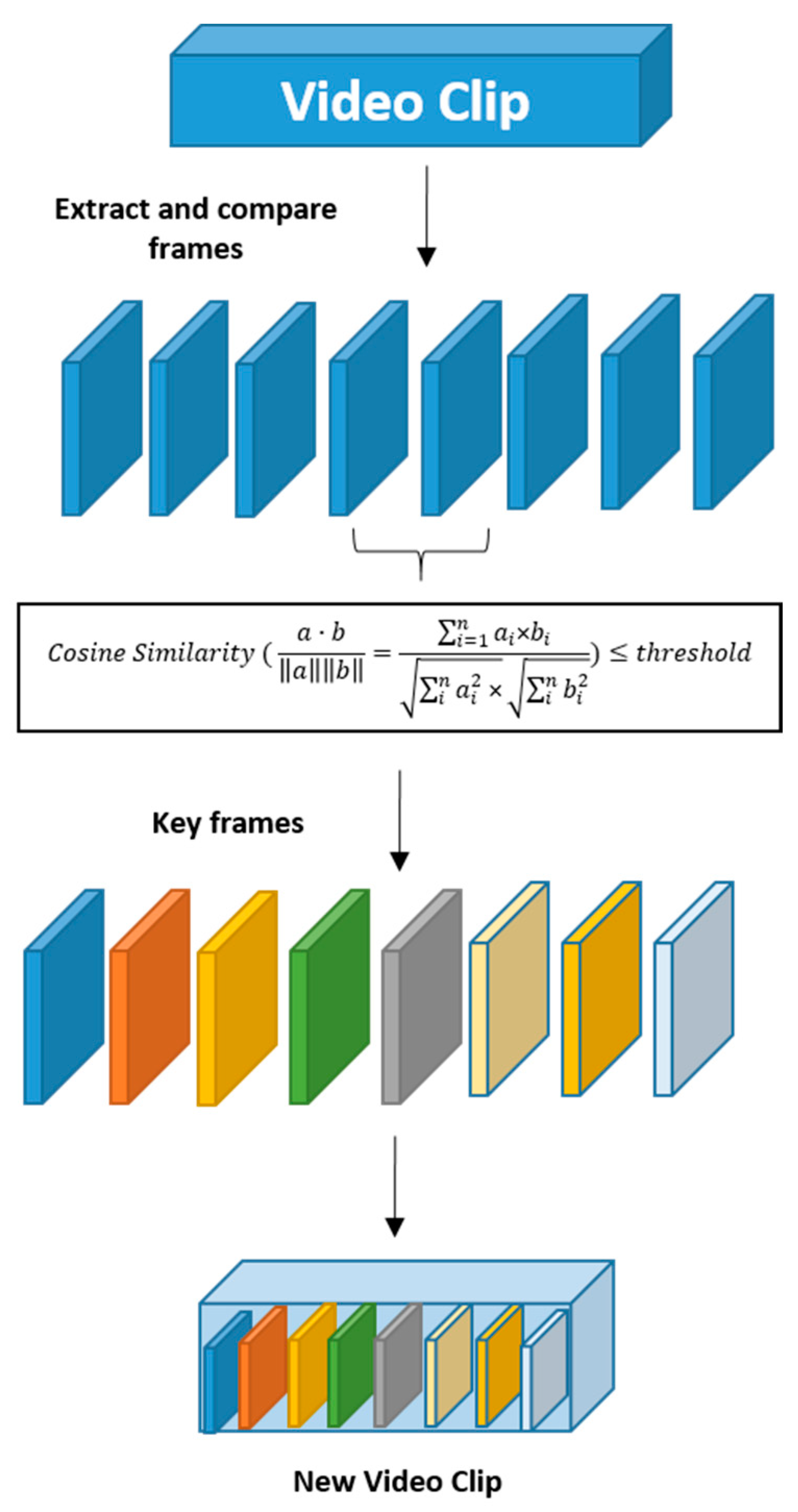

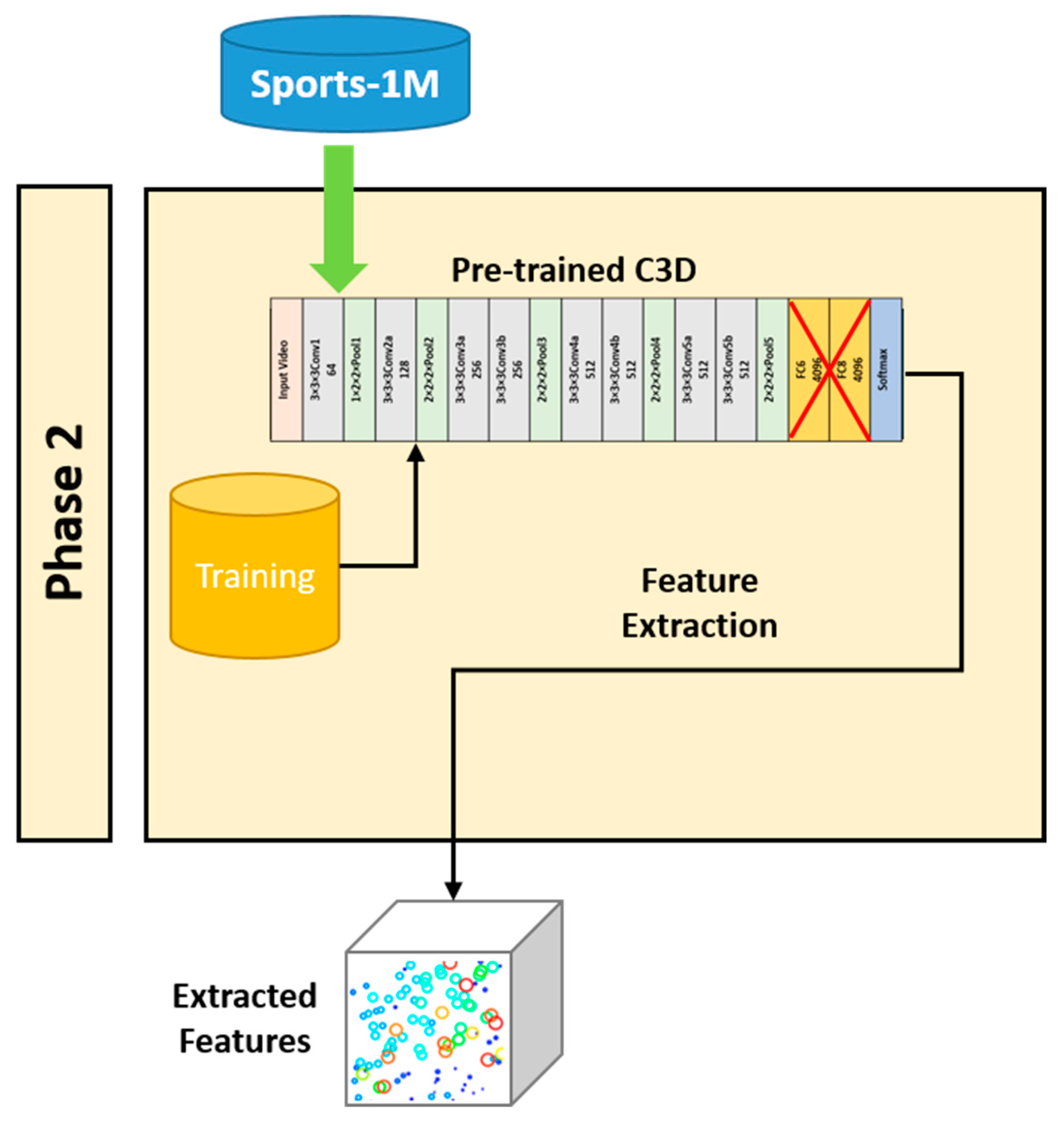

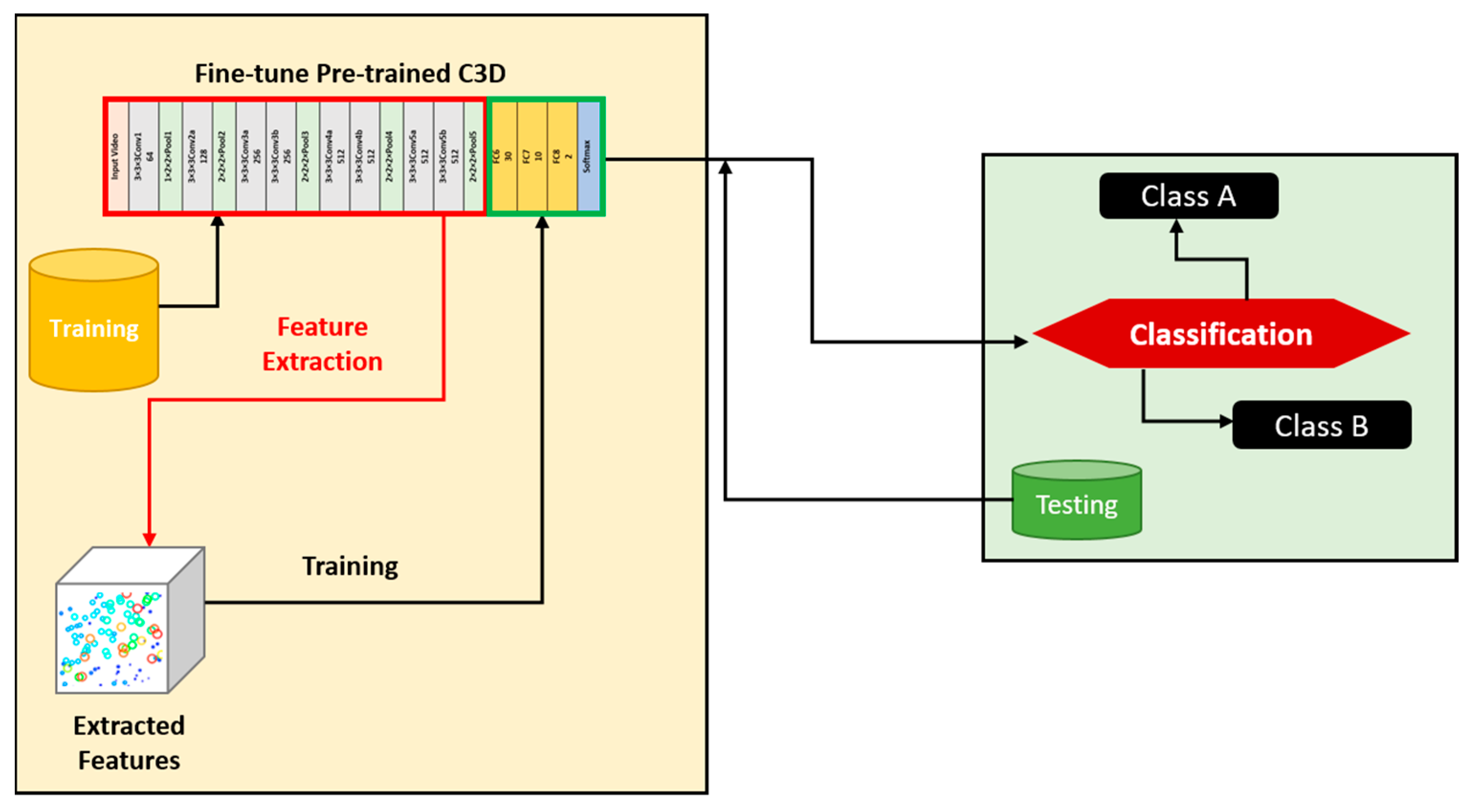

5. Spatiotemporal Feature Extraction

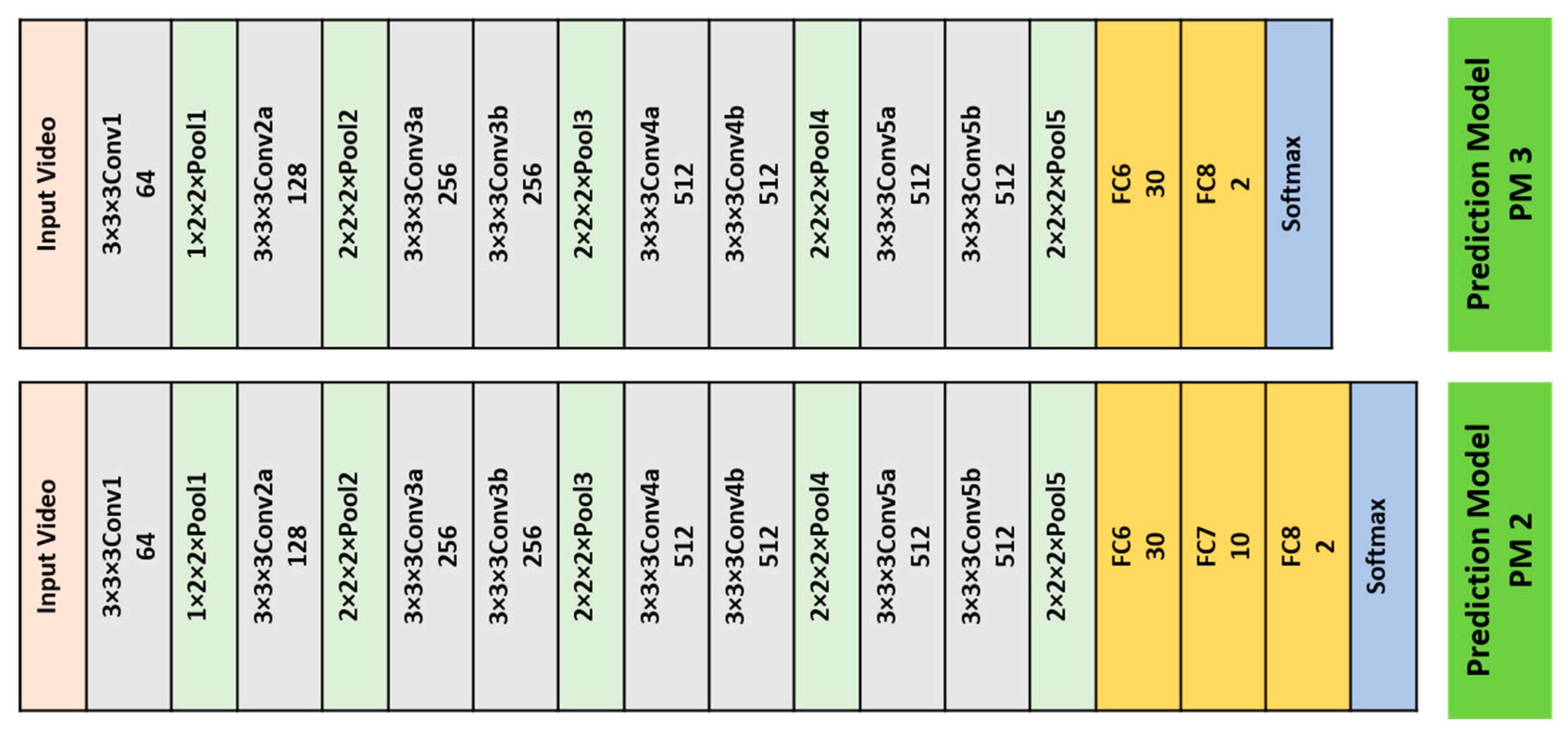

6. Prediction Model Generation

7. Experimentation and Evaluation

- Experiment 1: Evaluate strategies for splitting the dataset into training and testing sets. The goal of this experiment was to select the split that leads to the best prediction-model performance.

- Experiment 2: Evaluate different 3D CNN architectures for prediction model generation. The aim of this experiment was to explore the 3D CNN architectures used in this study, evaluate them, and compare their performance.

- Experiment 3: Validate the contribution of the proposed method by comparing our results with state-of-the-art methods.

- Experiment 4: Evaluate the performance of the proposed model on an unseen dataset.

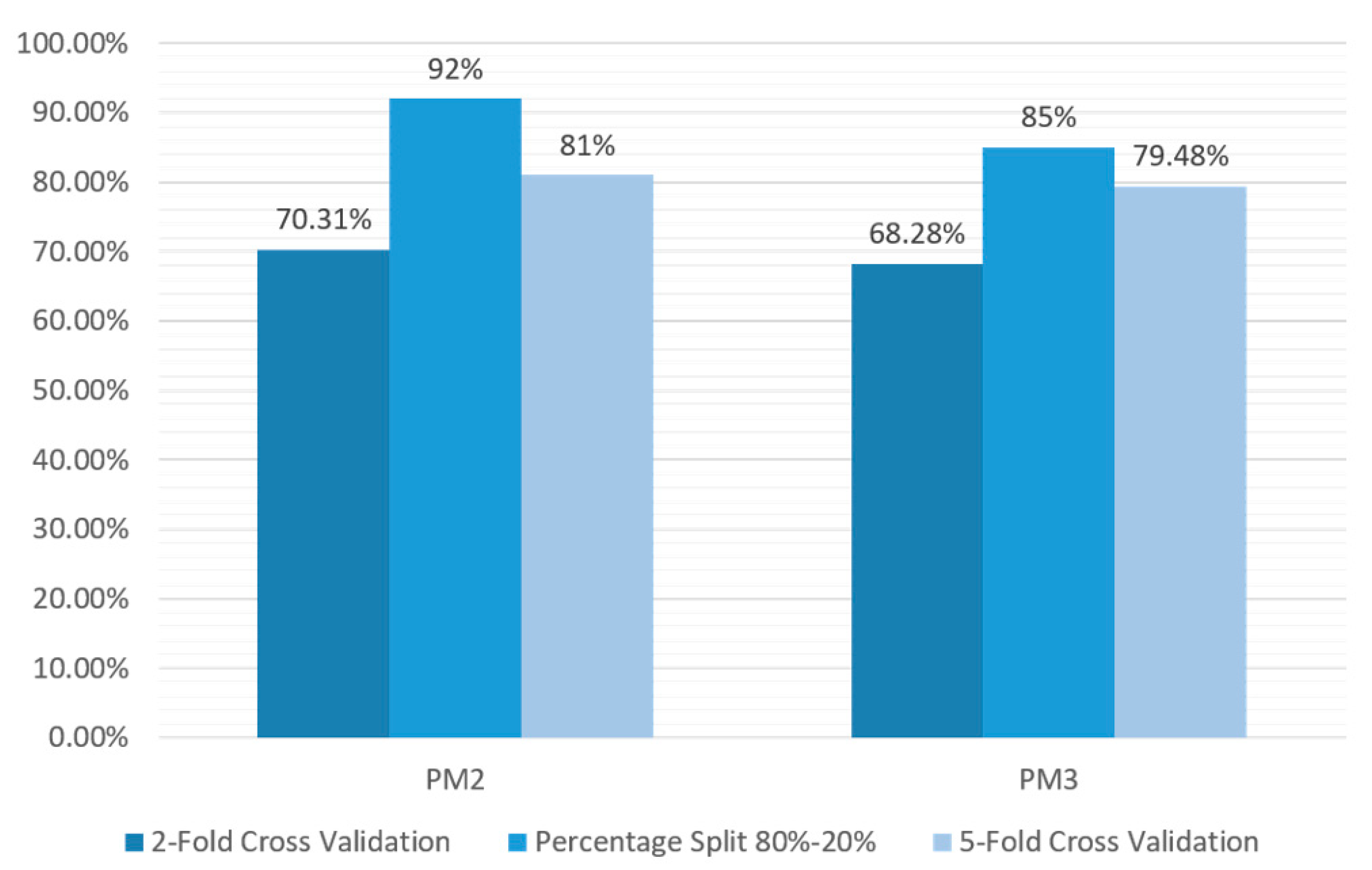

7.1. Experiment 1

- Cross-validation: In this procedure, the training set was split into k smaller sets (folds). The model was trained using k − 1 of the folds as training data and then validated on the remaining fold; this was repeated k times so that each fold served once as the validation set.

- Percentage split: In this approach, the data were split once into training and testing sets according to a predefined percentage.
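The two protocols can be contrasted at the level of sample indices. This is a minimal sketch: the function names, the seeded shuffle and the rounding of the split point are assumptions, and the paper does not state whether samples were shuffled or whether folds were stratified by class.

```python
import random
from typing import Iterator, List, Tuple


def percentage_split(n: int, train_fraction: float, seed: int = 0) -> Tuple[List[int], List[int]]:
    """Shuffle n sample indices once and cut them into train/test parts."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    cut = int(round(n * train_fraction))
    return indices[:cut], indices[cut:]


def k_fold_splits(n: int, k: int, seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train, validation) index lists; each fold validates exactly once."""
    if not 2 <= k <= n:
        raise ValueError("k must satisfy 2 <= k <= n")
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    # Fold sizes differ by at most one sample.
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]  # the other k - 1 folds
        yield train, validation
        start += size


train_idx, test_idx = percentage_split(995, train_fraction=0.8)
print(len(train_idx), len(test_idx))  # 796 199
for fold, (train, validation) in enumerate(k_fold_splits(10, k=5)):
    print(fold, len(train), len(validation))
```

A percentage split trains one model, whereas k-fold cross-validation trains k models on overlapping data and typically reports an aggregate of the k validation scores.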

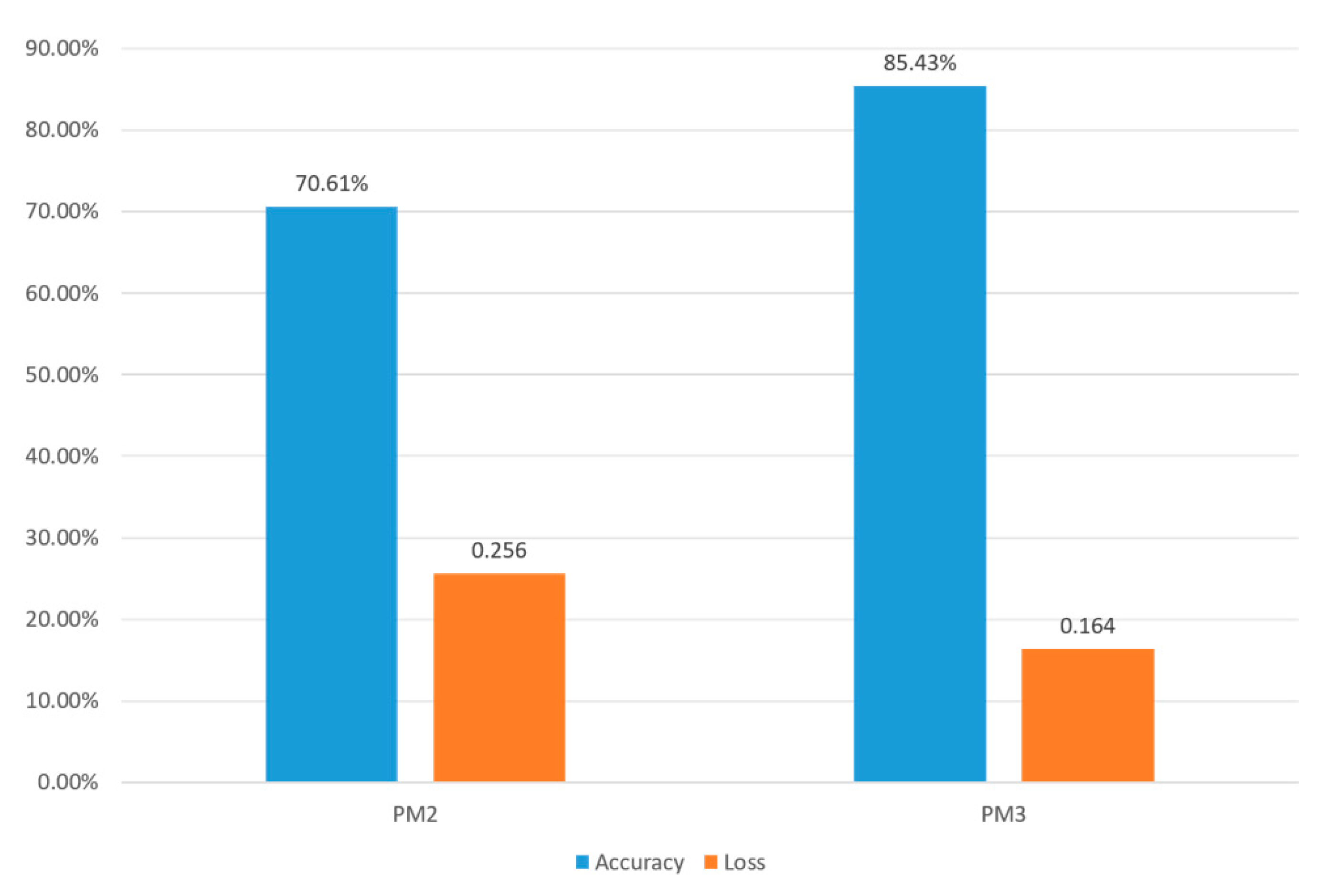

7.2. Experiment 2

7.3. Experiment 3

7.4. Experiment 4

8. Discussion

9. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ricardo, M.L.; Iglesias, M.J. Analyzing learners’ experience in e-learning based scenarios using intelligent alerting systems: Awakening of new and improved solutions. In Proceedings of the 13th Iberian Conference on Information Systems and Technologies (CISTI 2018), Cáceres, Spain, 13–16 June 2018; pp. 1–3. [Google Scholar]

- Tao, J.; Tan, T. Affective Computing: A Review. In Affective Computing and Intelligent Interaction; Tao, J., Tan, T., Picard, R.W., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; Volume 3784, pp. 981–995. [Google Scholar]

- Shen, L.; Wang, M.; Shen, R. Affective e-learning: Using “emotional” data to improve learning in pervasive learning environment. J. Educ. Technol. Soc. 2009, 12, 176–189. [Google Scholar]

- Moubayed, A.; Injadat, M.; Shami, A.; Lutfiyya, H. Relationship between student engagement and performance in e-learning environment using association rules. In Proceedings of the IEEE World Engineering Education Conference (EDUNINE 2018), Buenos Aires, Argentina, 11–14 March 2018; pp. 1–6. [Google Scholar]

- Monkaresi, H.; Bosch, N.; Calvo, R.A.; D’Mello, S.K. Automated detection of engagement using video-based estimation of facial expressions and heart rate. IEEE Trans. Affect. Comput. 2017, 8, 15–28. [Google Scholar] [CrossRef]

- Jang, M.; Park, C.; Yang, H.S.; Kim, Y.H.; Cho, Y.J.; Lee, D.W.; Cho, H.K.; Kim, Y.A.; Chae, K.; Ahn, B.K. Building an automated engagement recognizer based on video analysis. In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction (HRI 14), Bielefeld, Germany, 3–6 March 2014; pp. 182–183. [Google Scholar] [CrossRef]

- Li, J.; Ngai, G.; Leong, H.V.; Chan, S.C. Multimodal human attention detection for reading from facial expression, eye gaze, and mouse dynamics. SIGAPP Appl. Comput. Rev. 2016, 16, 37–49. [Google Scholar] [CrossRef]

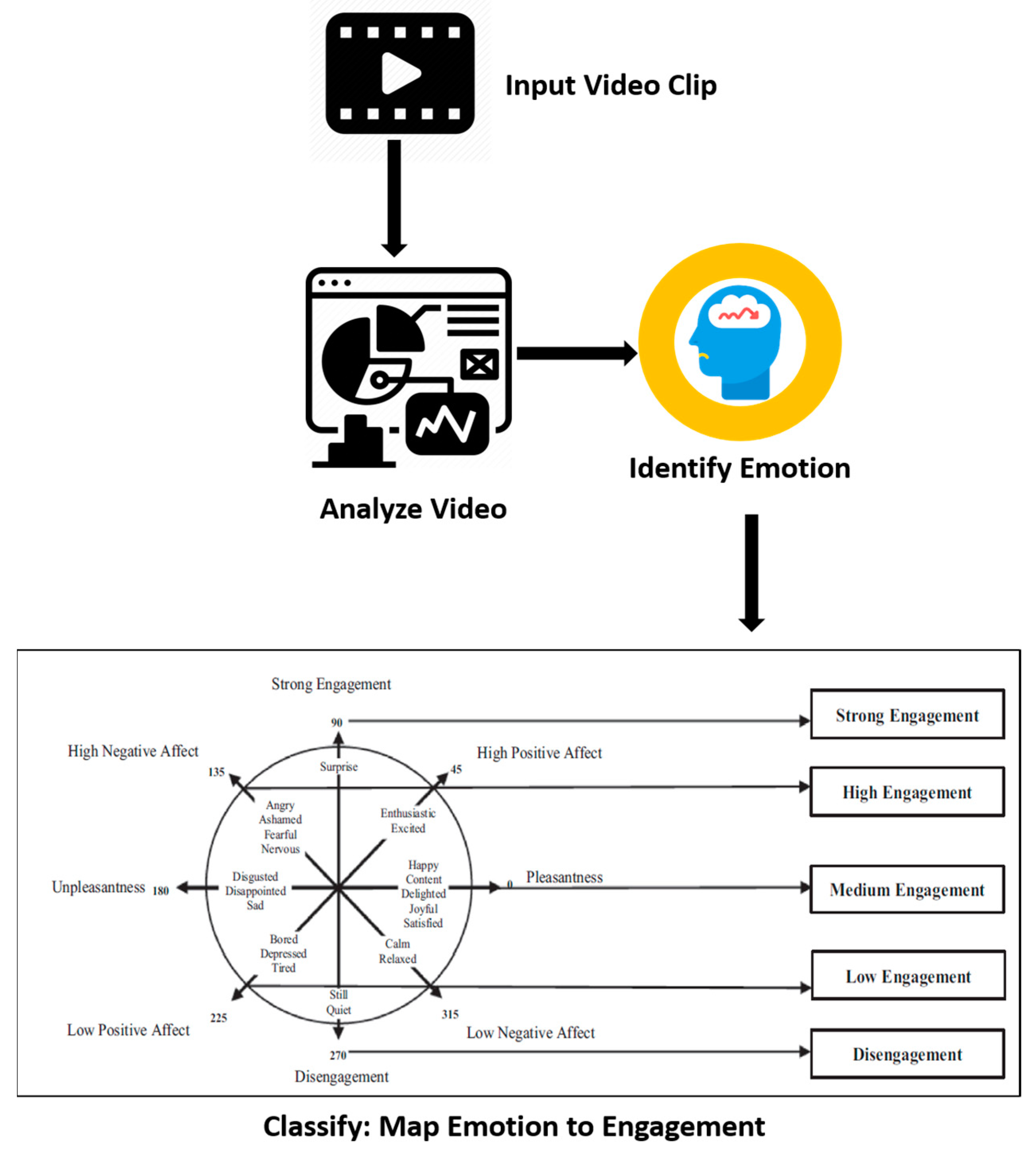

- Altuwairqi, K.; Jarraya, S.; Allinjawi, A.; Hammami, M. A new emotion–based affective model to detect student’s engagement. J. King Saud Univ. Comput. Inf. Sci. 2018, 33, 99–109. [Google Scholar] [CrossRef]

- Khenkar, S.; Jarraya, S.K. Engagement detection based on analysing micro body gestures using 3d cnn. Comput. Mater. Contin. CMC 2022, 70, 2655–2677. [Google Scholar]

- Psaltis, A.; Apostolakis, K.C.; Dimitropoulos, K.; Daras, P. Multimodal student engagement recognition in prosocial games. IEEE Trans. Games 2018, 10, 292–303. [Google Scholar] [CrossRef]

- Shen, J.; Yang, H.; Li, J.; Zhiyong, C. Assessing learning engagement based on facial expression recognition in MOOC’s scenario. Multimed. Syst. 2022, 28, 469–478. [Google Scholar] [CrossRef] [PubMed]

- Li, Y.Y.; Hung, Y.P. Feature fusion of face and body for engagement intensity detection. In Proceedings of the IEEE International Conference on Image Processing (ICIP 2019), Taipei, Taiwan, 22–29 September 2019; pp. 3312–3316. [Google Scholar]

- Dhall, A.; Kaur, A.; Goecke, R.; Gedeon, T. Emotiw2018: Audio-video, student engagement and group-level affect prediction. In Proceedings of the 20th ACM International Conference on Multimodal Interaction (ICMI 18), Boulder, CO, USA, 16–20 October 2018; pp. 653–656. [Google Scholar] [CrossRef]

- Dash, S.; Dewan, M.A.; Murshed, M.; Lin, F.; Abdullah, M.; Das, A. A two-stage algorithm for engagement detection in online learning. In Proceedings of the International Conference on Sustainable Technologies for Industry 4.0 (STI), Dhaka, Bangladesh, 24–25 December 2019; pp. 1–4. [Google Scholar]

- Gupta, A.; Jaiswal, R.; Adhikari, S.; Balasubramanian, V. DAISEE: Dataset for affective states in e-learning environments. arXiv 2016, arXiv:1609.01885. [Google Scholar]

- Dewan, M.; Murshed, M.; Lin, F. Engagement detection in online learning: A review. Smart Learn. Environ. 2019, 6, 1–20. [Google Scholar] [CrossRef]

- Chen, L.; Nugent, C.D. Human Activity Recognition and Behaviour Analysis, 1st ed.; Springer International Publishing: Berlin/Heidelberg, Germany, 2019. [Google Scholar]

- Darwin, C. The Expression of the Emotions in Man and Animals; John Murray: London, UK, 1872. [Google Scholar]

- Kleinsmith, A.; Bianchi-Berthouze, N. Affective body expression perception and recognition: A survey. IEEE Trans. Affect. Comput. 2013, 4, 15–33. [Google Scholar] [CrossRef]

- Argyle, M. Bodily Communication; Routledge: Oxfordshire, UK, 1988. [Google Scholar]

- Stock, J.; Righart, R.; Gelder, B. Body expressions influence recognition of emotions in the face and voice. Emotion 2007, 7, 487–499. [Google Scholar] [CrossRef] [PubMed]

- Ekman, P.; Friesen, W.V. Detecting deception from the body or face. J. Personal. Soc. Psychol. 1974, 29, 288–298. [Google Scholar] [CrossRef]

- Behera, A.; Matthew, P.; Keidel, A.; Vangorp, P.; Fang, H.; Canning, S. Associating facial expressions and upper-body gestures with learning tasks for enhancing intelligent tutoring systems. Int. J. Artif. Intell. Educ. 2020, 30, 236–270. [Google Scholar] [CrossRef]

- Riemer, V.; Frommel, J.; Layher, G.; Neumann, H.; Schrader, C. Identifying features of bodily expression as indicators of emotional experience during multimedia learning. Front. Psychol. 2017, 8, 1303. [Google Scholar] [CrossRef] [PubMed]

- Ekman, P. Lie catching and microexpressions. Philos. Decept. 2009, 1, 5. [Google Scholar]

- Tran, D.; Bourdev, L.D.; Fergus, L.; Paluri, M. C3D: Generic features for video analysis. arXiv 2014, arXiv:1412.0767. [Google Scholar]

- Whitehill, J.; Serpell, Z.Y.; Lin, A.F.; Movellan, J.R. The faces of engagement: Automatic recognition of student engagement from facial expressions. IEEE Trans. Affect. Comput. 2014, 5, 86–98. [Google Scholar] [CrossRef]

- Nezami, O.M.; Dras, M.L.; Richards, D.; Wan, S.; Paris, C. Automatic recognition of student engagement using deep learning and facial expression. In Proceedings of the 19th Joint European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases (ECML PKDD), Wurzburg, Germany, 16–20 September 2020; pp. 273–289. [Google Scholar]

- Dewan, M.A.; Lin, F.; Wen, D.; Murshed, M.; Uddin, Z. A deep learning approach to detecting engagement of online learners. In Proceedings of the 2018 IEEE SmartWorld, Ubiquitous Intelligence Computing, Advanced Trusted Computing, Scalable Computing Communications, Cloud Big Data Computing, Internet of People and Smart City Innovation (SmartWorld/SCALCOM/UIC/ATC/CBDCom/IOP/SCI), Los Alamitos, CA, USA, 8–12 October 2018; pp. 1895–1902. [Google Scholar]

| Class Name | Total | Training | Testing |
|---|---|---|---|
| High Positive Engagement | 995 | 796 | 199 |
| Low Positive Engagement | 704 | 563 | 141 |

| Class Name | Total | Training | Testing |
|---|---|---|---|
| Low Negative Engagement | 588 | 528 | 60 |
| Disengagement | 414 | 373 | 41 |
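A quick arithmetic check on the two split tables: training plus testing equals the total for every class, and the resulting shares show that the first two classes are split at roughly 80/20 while the last two are closer to 90/10. The counts below are copied from the tables; only the ratios are computed.

```python
# Per-class counts copied from the split tables: (total, training, testing).
splits = {
    "High Positive Engagement": (995, 796, 199),
    "Low Positive Engagement": (704, 563, 141),
    "Low Negative Engagement": (588, 528, 60),
    "Disengagement": (414, 373, 41),
}

for name, (total, train, test) in splits.items():
    # Every sample should land in exactly one of the two subsets.
    assert train + test == total, name
    print(f"{name:<26} train {train / total:6.1%}  test {test / total:6.1%}")
```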

| Parameter | PM2 | PM3 |
|---|---|---|
| Optimizer | Adam | Adam |
| Learning rate | 0.0003 | 0.0003 |
| Fully connected layers | 3 | 2 |
| Convolution layers | 5 | 5 |
| Max pooling layers | 5 | 5 |
| Training dataset | Dataset 2 | Dataset 3 |
| Training epochs | 15 | 10 |
| Trainable parameters | 245,852 | 246,122 |
| Non-trainable parameters | 27,655,936 | 27,655,936 |
| Total parameters | 27,901,788 | 27,902,058 |
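The parameter table is internally consistent (245,852 + 27,655,936 = 27,901,788 and 246,122 + 27,655,936 = 27,902,058), and only the trainable parameters are updated by the optimizer. Both models use Adam with a learning rate of 0.0003. The sketch below shows the Adam update rule in plain Python; β1 = 0.9, β2 = 0.999 and ε = 1e-8 are the commonly used defaults from Kingma and Ba, assumed here because the table does not list them, and the quadratic toy objective is purely illustrative.

```python
import math
from typing import List, Tuple


def adam_step(
    params: List[float],
    grads: List[float],
    m: List[float],
    v: List[float],
    t: int,
    lr: float = 3e-4,      # learning rate from the parameter table
    beta1: float = 0.9,    # assumed default
    beta2: float = 0.999,  # assumed default
    eps: float = 1e-8,     # assumed default
) -> Tuple[List[float], List[float], List[float]]:
    """One Adam update at step t (1-based); returns new params, m and v."""
    new_p, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(params, grads, m, v):
        m_i = beta1 * m_i + (1 - beta1) * g      # first-moment estimate
        v_i = beta2 * v_i + (1 - beta2) * g * g  # second-moment estimate
        m_hat = m_i / (1 - beta1 ** t)           # bias corrections
        v_hat = v_i / (1 - beta2 ** t)
        new_p.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(m_i)
        new_v.append(v_i)
    return new_p, new_m, new_v


# Toy objective f(w) = (w - 1)^2, starting from w = 0.
w, m, v = [0.0], [0.0], [0.0]
for step in range(1, 101):
    grad = [2.0 * (w[0] - 1.0)]
    w, m, v = adam_step(w, grad, m, v, step)
print(w[0])
```

Because Adam normalizes the step by the gradient's running magnitude, each update moves a parameter by at most about the learning rate, so 0.0003 bounds how far weights drift per step.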

| Prediction Model | Cross-Validation (k = 2) | Cross-Validation (k = 5) | Percentage Split |
|---|---|---|---|
| PM2 | 70.31% | 81% | 92% |
| PM3 | 68.28% | 79.48% | 85% |

| Prediction Model | FC Layers | Optimizer | Activation Function | Accuracy |
|---|---|---|---|---|
| PM2 | 1 | SGD | Sigmoid | 43.05% |
| PM2 | 2 | Adam | Softmax | 86% |
| PM2 | 3 | Adam | Softmax | 92% |
| PM3 | 1 | SGD | Sigmoid | 25.46% |
| PM3 | 2 | Adam | Softmax | 85% |
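The weakest configurations in the architecture table pair SGD with a sigmoid output, and the strongest pair Adam with a softmax output. The table changes the optimizer, output activation and number of FC layers together, so it does not isolate the effect of any one of them. One property that favors softmax, assuming each clip carries exactly one of the four engagement labels from the split tables, is that it yields a single probability distribution over mutually exclusive classes, whereas independent sigmoids do not sum to one. The logits below are hypothetical.

```python
import math
from typing import List

CLASSES = [
    "High Positive Engagement",
    "Low Positive Engagement",
    "Low Negative Engagement",
    "Disengagement",
]


def softmax(logits: List[float]) -> List[float]:
    """Probabilities over mutually exclusive classes (they sum to 1)."""
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function; each class is scored independently."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


logits = [2.0, 1.0, 0.1, -1.0]  # hypothetical final-layer outputs for one clip
p_soft = softmax(logits)
p_sig = [sigmoid(z) for z in logits]
predicted = CLASSES[max(range(len(CLASSES)), key=p_soft.__getitem__)]

print([round(p, 3) for p in p_soft], sum(p_soft))
print([round(p, 3) for p in p_sig], sum(p_sig))
print(predicted)
```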

| Work | Year | Prediction Model | Analysis |
|---|---|---|---|
| Whitehill, J., et al. [27] | 2014 | SVM | 2D |
| Li, J., et al. [7] | 2016 | SVM | 2D |
| Monkaresi, H., et al. [5] | 2017 | Naive Bayes | 2D |
| Psaltis, A., et al. [10] | 2018 | ANN | 2D |
| Nezami, O.M., et al. [28] | 2020 | CNN, VGGnet | 2D |
| Dewan, M.A., et al. [29] | 2018 | DBN | 2D |
| Shen, J., et al. [11] | 2021 | CNN | 2D |
| PM2 | 2023 | 3D CNN | 3D |
| PM3 | 2023 | 3D CNN | 3D |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Khenkar, S.G.; Jarraya, S.K.; Allinjawi, A.; Alkhuraiji, S.; Abuzinadah, N.; Kateb, F.A. Deep Analysis of Student Body Activities to Detect Engagement State in E-Learning Sessions. Appl. Sci. 2023, 13, 2591. https://doi.org/10.3390/app13042591
