SAViP: Semantic-Aware Vulnerability Prediction for Binary Programs with Neural Networks

Abstract

1. Introduction

- We propose SAViP, a semantic-aware model for vulnerability prediction that utilizes the semantic, statistical, and structural features of binary programs.

- To better extract semantic information from instructions, we introduce the pre-training task of the RoBERTa model to assembly language and further employ the pre-trained model to generate semantic embeddings for the instructions.

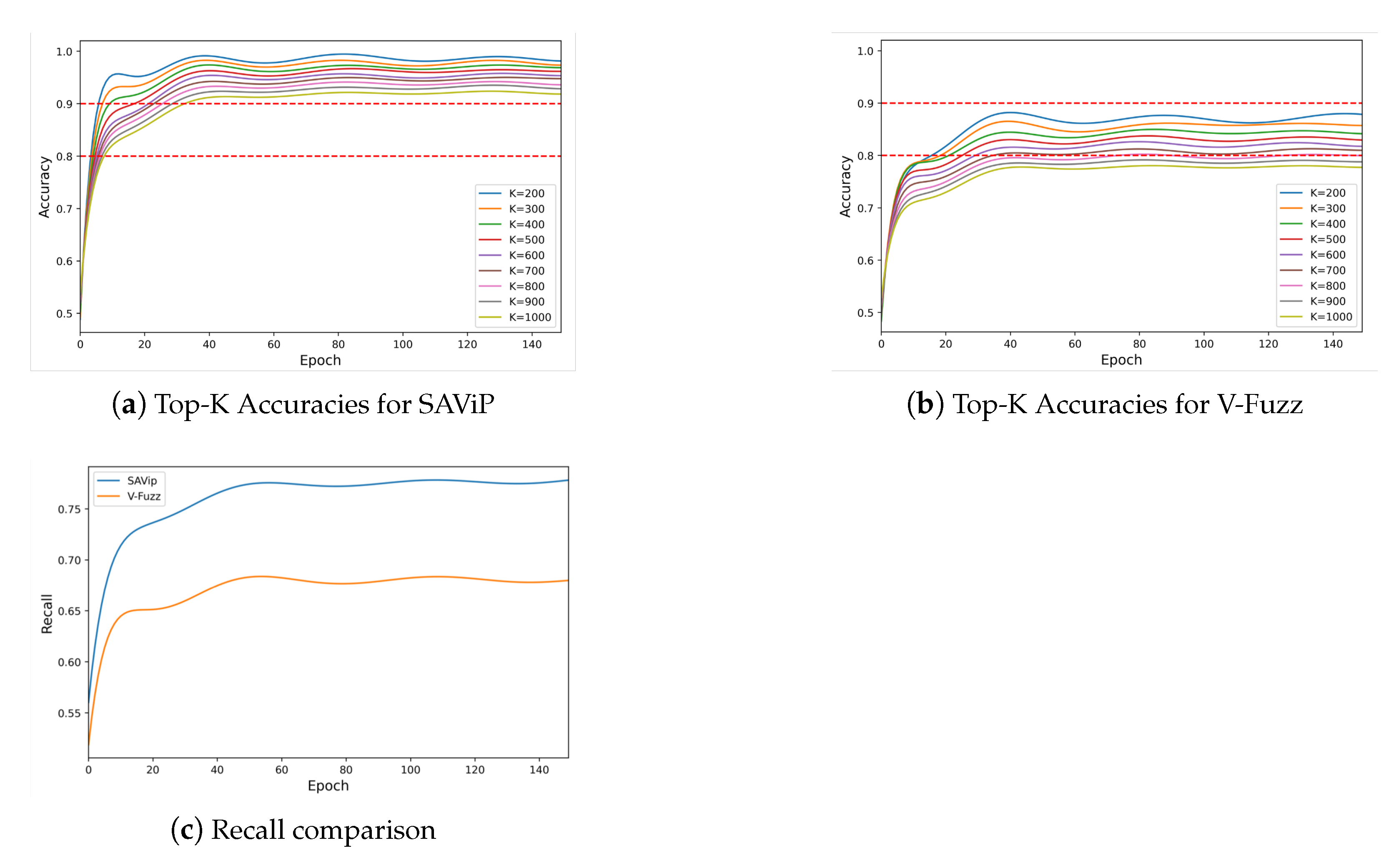

- Experiments show that SAViP significantly improves on the previous state-of-the-art V-Fuzz (recall +10%) and achieves new state-of-the-art results for vulnerability prediction.
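As a rough illustration of the masked-language-model pre-training mentioned in the contributions above, the following sketch applies RoBERTa-style dynamic masking to a tokenized instruction sequence. In dynamic masking, a fresh mask pattern is drawn every time a sequence is fed to the model. The whitespace-level tokens, the `<mask>` token name, the 15% masking rate, and the 80/10/10 replacement split are the standard BERT/RoBERTa defaults and are assumptions here, not settings confirmed by this paper; `dynamic_mask` is a hypothetical helper.

```python
import random

MASK = "<mask>"  # assumed mask token name


def dynamic_mask(tokens, vocab, rng, mask_prob=0.15):
    """Return (masked_tokens, labels) for one pass over a token sequence.

    labels[i] holds the original token at positions selected for prediction
    and None elsewhere. Calling this again draws a new mask pattern, which is
    what makes the masking "dynamic".
    """
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)
            r = rng.random()
            if r < 0.8:
                masked.append(MASK)               # 80%: replace with mask token
            elif r < 0.9:
                masked.append(rng.choice(vocab))  # 10%: replace with random token
            else:
                masked.append(tok)                # 10%: keep the original token
        else:
            labels.append(None)
            masked.append(tok)
    return masked, labels


# Hypothetical tokenized instructions; the real tokenizer may differ.
tokens = ["mov", "eax", "[ebp+8]", "add", "eax", "1", "ret"]
vocab = sorted(set(tokens))
rng = random.Random(0)
for epoch in range(2):
    print(dynamic_mask(tokens, vocab, rng))
```

Because the mask is drawn per call rather than once during preprocessing, the model sees different masked positions for the same instruction sequence across epochs.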

2. Related Work

2.1. Vulnerability Prediction

2.2. Intermediate Representation

2.3. Pre-Training Language Model

2.4. Graph Neural Network (GNN)

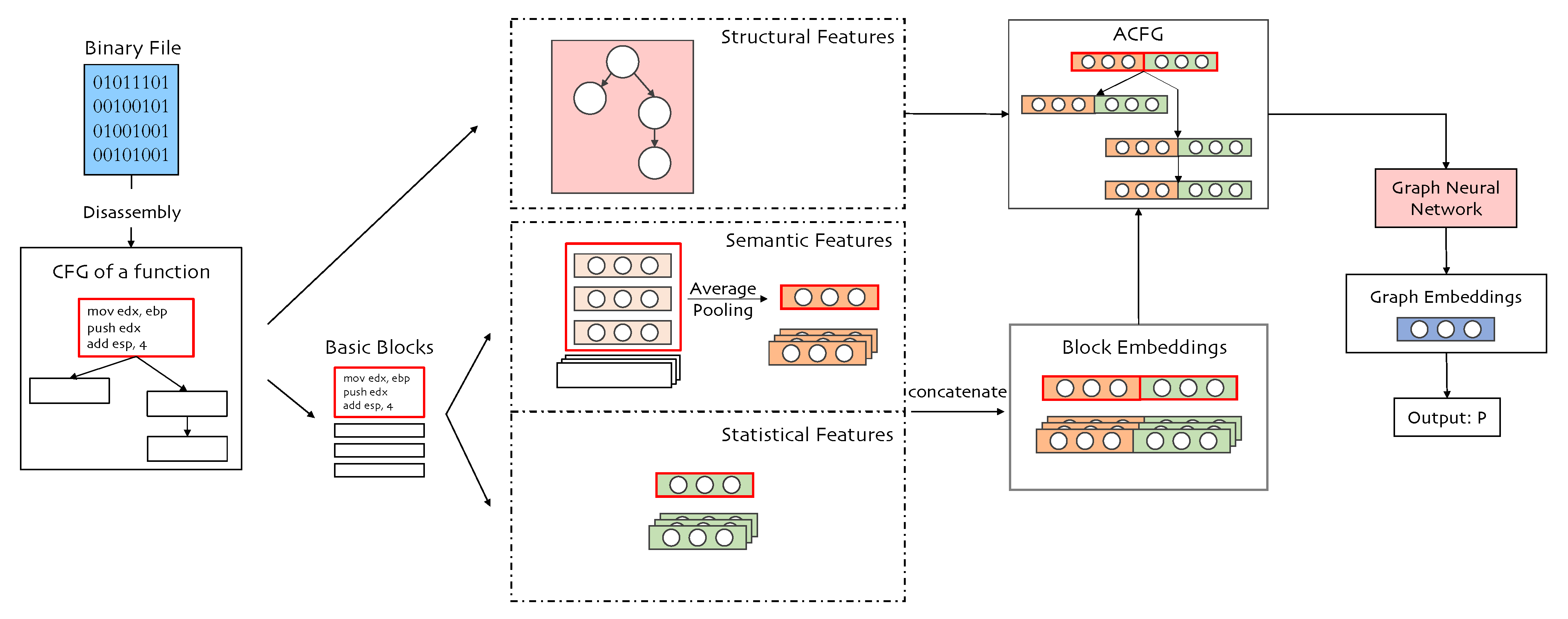

3. Vulnerability Prediction Model

3.1. Overview

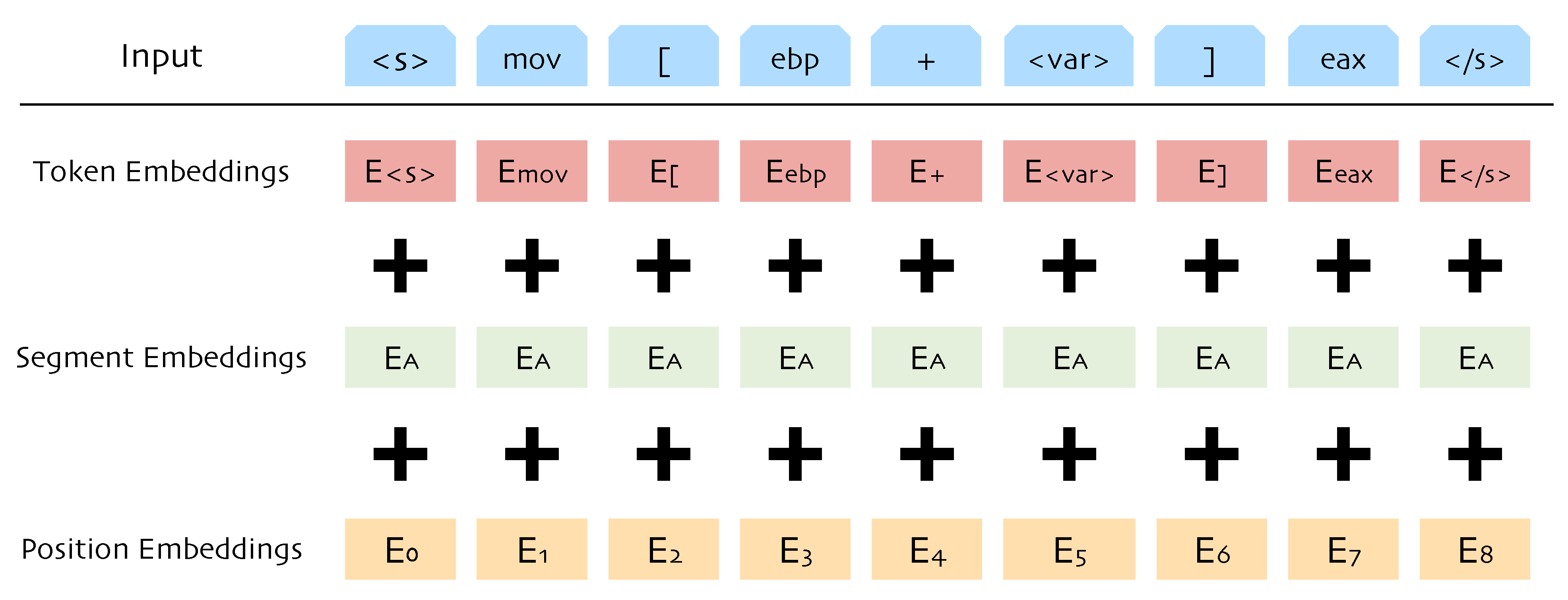

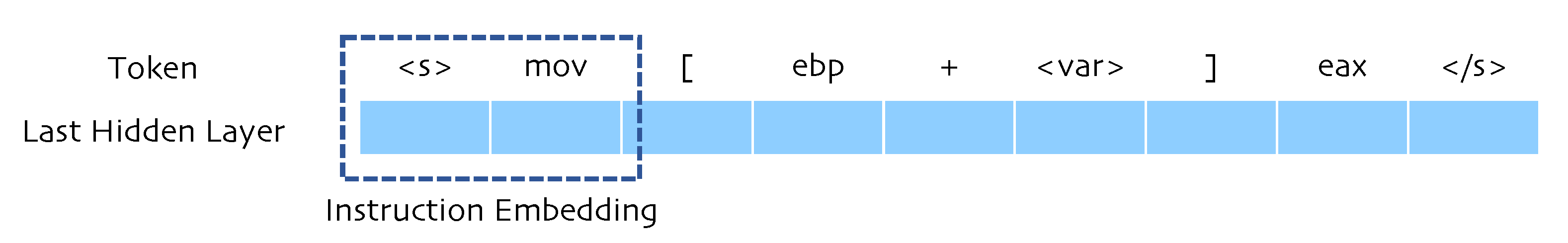

3.2. Semantic Features

3.2.1. Tokenization

3.2.2. Pre-Training

3.2.3. Dynamic Masking

3.2.4. Outputs

3.3. Statistical Features

3.4. Structural Features

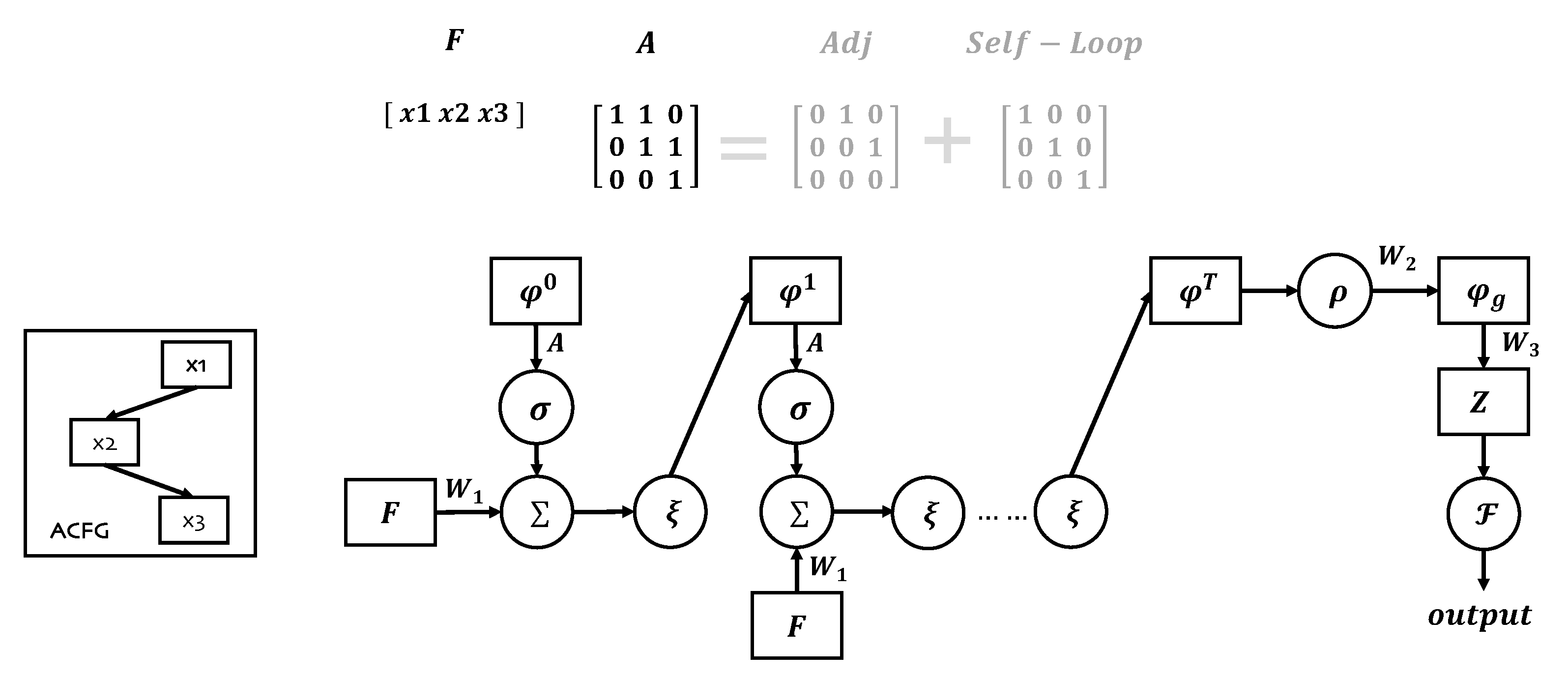

3.4.1. Model Structure

3.4.2. Model Training

4. Evaluation

4.1. Dataset

4.2. Environment

4.3. Evaluation Metrics

4.4. Model Performance

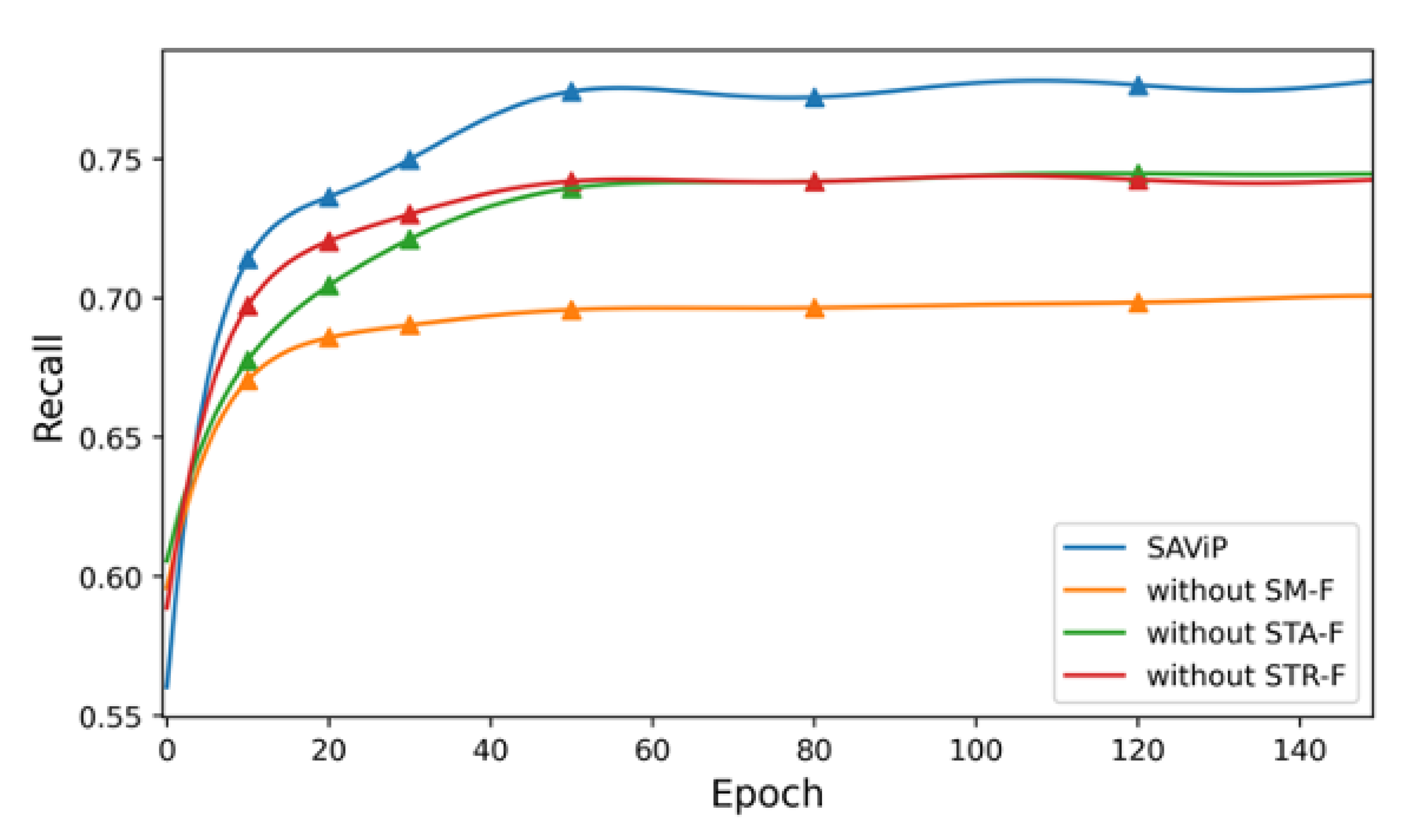

4.5. Ablation Study

4.6. Parameter Analysis

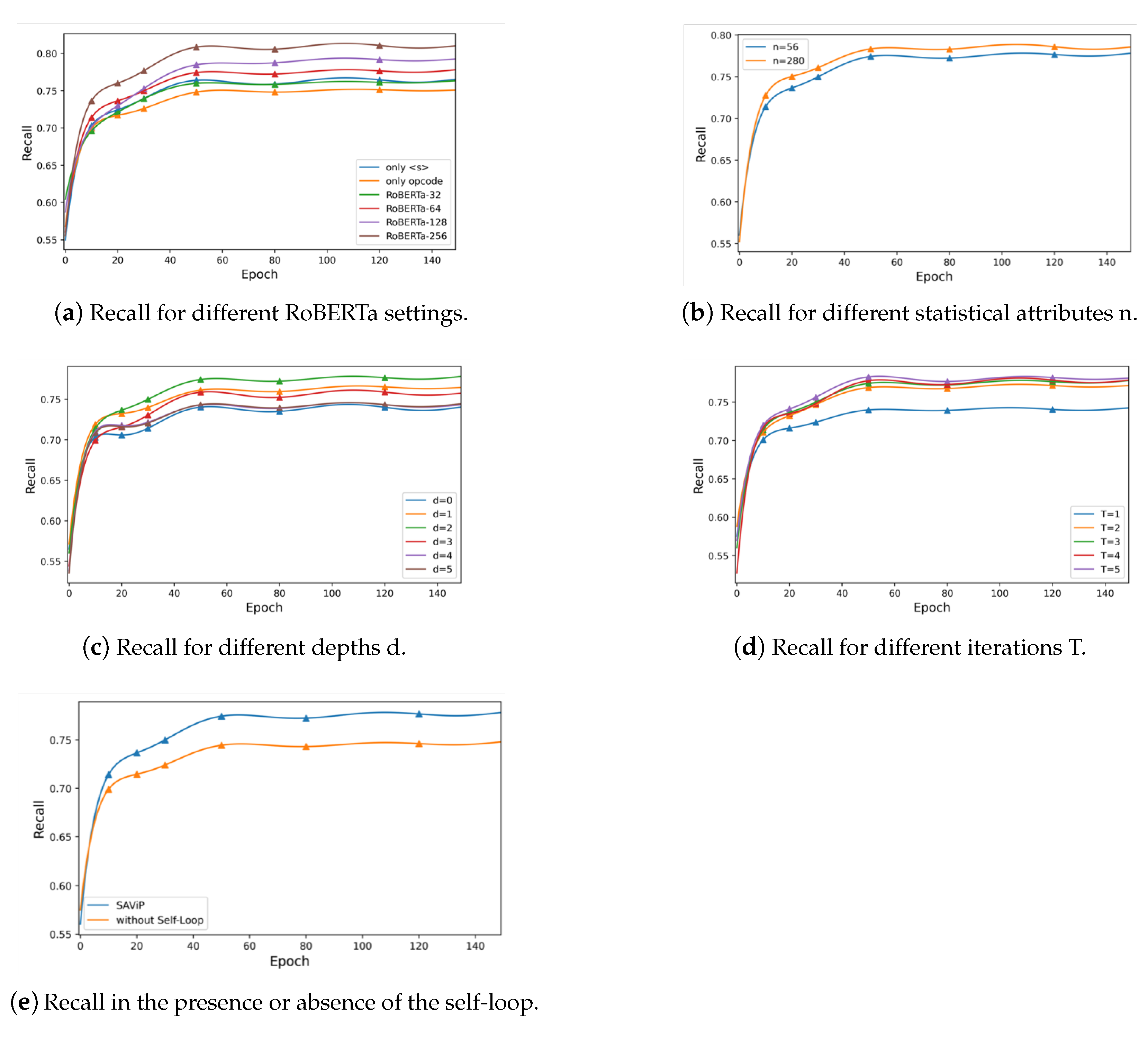

4.6.1. Semantic Features

4.6.2. Statistical Features

4.6.3. Structural Features

- Depth. Table 7 (Depth) and Figure 10c show the influence of the neural network depth d in the model. Varying the number of fully connected layers d from 0 to 5, we observe that the recall reaches its maximum when d is 2. We believe that an excessively deep network structure results in feature dispersion, which leads to worse results. Therefore, we choose 2 as the final depth of the network in the GNN.

- Iteration. In the GNN, we need to perform a certain number of iterations T to optimize the parameters. We set T to 1, 2, 3, 4, and 5 to observe the impact of the number of iterations on the network. Table 7 (Iteration) and Figure 10d show the differences in recall across these settings. The recall values obtained for T ≥ 3 are very similar, but the larger T is, the greater the time cost. We infer that the functions in our dataset are not large enough to require more iterations; three hops are sufficient for the basic blocks to collect the information of their neighbors. Therefore, we set T to 3 as the number of iterations of the GNN.

- Self-Loop. To ensure that each block embedding keeps its own information while collecting information from the network, we add a self-loop to the ACFG before the GNN starts. Table 7 (Self-Loop) and Figure 10e show the effect of this step on the results. After adding the self-loop, the recall increases by 3.7% (from 0.7415 to 0.7785 on TEST-DATA). This shows that adding a self-loop improves the ability of the GNN to extract structural features.
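To make the roles of the three parameters above concrete, the following sketch shows how the depth d, the iteration count T, and the self-loop could enter a structure2vec-style graph embedding of the kind described by Dai et al. and Xu et al. in the reference list. It is a minimal pure-Python illustration under assumed shapes and update rules, not the paper's implementation; all names (`gnn_embed`, `add_self_loops`, `w1`, `w2`, `fc_layers`) are hypothetical.

```python
import math


def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def add_self_loops(neighbors):
    """Return a new neighbor list in which every block is its own neighbor."""
    return [sorted(set(ns) | {v}) for v, ns in enumerate(neighbors)]


def sigma(vec, fc_layers):
    """Apply d = len(fc_layers) fully connected layers with ReLU in between.

    With d = 0 the aggregated vector is passed through unchanged.
    """
    for i, layer in enumerate(fc_layers):
        if i > 0:
            vec = [max(0.0, a) for a in vec]
        vec = matvec(layer, vec)
    return vec


def gnn_embed(features, neighbors, w1, fc_layers, w2, T=3, self_loop=True):
    """Embed one attributed graph.

    features[v]  : feature vector of basic block v
    neighbors[v] : indices of the blocks whose embeddings block v aggregates
    w1, w2       : projection matrices (lists of rows)
    T            : number of message-passing iterations (hops)
    """
    if self_loop:
        neighbors = add_self_loops(neighbors)
    n, p = len(features), len(w1)
    mu = [[0.0] * p for _ in range(n)]  # block embeddings start at zero
    for _ in range(T):
        new_mu = []
        for v in range(n):
            # Sum the current embeddings of the neighbors of block v.
            agg = [sum(mu[u][k] for u in neighbors[v]) for k in range(p)]
            agg = sigma(agg, fc_layers)
            proj = matvec(w1, features[v])
            new_mu.append([math.tanh(a + b) for a, b in zip(proj, agg)])
        mu = new_mu
    # Graph embedding: sum of all block embeddings, then a final projection.
    total = [sum(mu[v][k] for v in range(n)) for k in range(p)]
    return matvec(w2, total)
```

In this formulation each iteration lets a block see one hop further, so T = 3 gathers information from blocks up to three hops away, and the self-loop simply feeds a block's previous embedding back into its own aggregation. A practical implementation would use a tensor library and learn `w1`, `fc_layers`, and `w2` by backpropagation.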

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- SonarQube. Available online: https://www.sonarqube.org/ (accessed on 7 January 2022).

- DeepScan. Available online: https://deepscan.io/ (accessed on 7 January 2022).

- Reshift Security. Available online: https://www.reshiftsecurity.com/ (accessed on 7 January 2022).

- Wang, S.; Liu, T.; Tan, L. Automatically Learning Semantic Features for Defect Prediction. In Proceedings of the 2016 IEEE/ACM 38th International Conference on Software Engineering, Austin, TX, USA, 14–22 May 2016; pp. 297–308.

- Li, Z.; Zou, D.; Xu, S.; Ou, X.; Jin, H.; Wang, S.; Deng, Z.; Zhong, Y. VulDeePecker: A deep learning-based system for vulnerability detection. In Proceedings of the 25th Network and Distributed System Security Symposium, San Diego, CA, USA, 18–21 February 2018; pp. 1–15.

- Wang, S.; Liu, T.; Nam, J.; Tan, L. Deep Semantic Feature Learning for Software Defect Prediction. IEEE Trans. Softw. Eng. 2020, 46, 1267–1293.

- Luo, Z.; Wang, P.; Wang, B.; Tang, Y.; Xie, W.; Zhou, X.; Liu, D.; Lu, K. VulHawk: Cross-architecture Vulnerability Detection with Entropy-based Binary Code Search. In Proceedings of the 2023 Network and Distributed System Security Symposium, San Diego, CA, USA, February 2023.

- Zheng, J.; Pang, J.; Zhang, X.; Zhou, X.; Li, M.; Wang, J. Recurrent Neural Network Based Binary Code Vulnerability Detection. In Proceedings of the 2019 2nd International Conference on Algorithms, Computing and Artificial Intelligence, Hong Kong, China, 20–22 December 2019; pp. 160–165.

- Han, W.; Pang, J.; Zhou, X.; Zhu, D. Binary vulnerability mining technology based on neural network feature fusion. In Proceedings of the 2022 5th International Conference on Advanced Electronic Materials, Computers and Software Engineering (AEMCSE), Wuhan, China, 22–24 April 2022; pp. 257–261.

- Duan, B.; Zhou, X.; Wu, X. Improve vulnerability prediction performance using self-attention mechanism and convolutional neural network. In Proceedings of the International Conference on Neural Networks, Information, and Communication Engineering (NNICE), Guangzhou, China, June 2022.

- Tian, J.; Xing, W.; Li, Z. BVDetector: A program slice-based binary code vulnerability intelligent detection system. Inf. Softw. Technol. 2020, 123, 106289.

- Li, Y.; Ji, S.; Lyu, C.; Chen, Y.; Chen, J.; Gu, Q. V-Fuzz: Vulnerability-Oriented Evolutionary Fuzzing. arXiv 2019, arXiv:1901.01142.

- Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient estimation of word representations in vector space. arXiv 2013, arXiv:1301.3781.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D. Roberta: A robustly optimized bert pretraining approach. arXiv 2019, arXiv:1907.11692.

- Intel. Available online: https://software.intel.com/en-us/articles/intel-sdm (accessed on 7 January 2022).

- Feng, Q.; Zhou, R.; Xu, C.; Cheng, Y.; Testa, B.; Yin, H. Scalable graph-based bug search for firmware images. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, New York, NY, USA, 24–28 October 2016; pp. 480–491.

- Dai, D.H.; Dai, B.; Song, L. Discriminative embeddings of latent variable models for structured data. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 2702–2711.

- Software Assurance Reference Dataset. Available online: https://samate.nist.gov/SRD/testsuite.php (accessed on 7 January 2022).

- Zou, D.; Wang, S.; Xu, S.; Li, Z.; Jin, H. μVulDeePecker: A Deep Learning-Based System for Multiclass Vulnerability Detection. IEEE Trans. Dependable Secur. Comput. 2021, 18, 2224–2236.

- LLVM Compiler Infrastructure. Available online: https://llvm.org/docs/LangRef.html (accessed on 7 January 2022).

- Zaremba, W.; Sutskever, I.; Vinyals, O. Recurrent neural network regularization. arXiv 2014, arXiv:1409.2329.

- Cho, K.; Van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv 2014, arXiv:1406.1078.

- Rawat, S.; Jain, V.; Kumar, A.; Cojocar, L.; Giuffrida, C.; Bos, H. VUzzer: Application-aware Evolutionary Fuzzing. In Proceedings of the 25th Network and Distributed System Security Symposium, San Diego, CA, USA, 26 February–1 March 2017; Volume 17, pp. 1–14.

- Zhang, G.; Zhou, X.; Luo, Y.; Wu, X.; Min, E. Ptfuzz: Guided fuzzing with processor trace feedback. IEEE Access 2018, 6, 37302–37313.

- Song, C.; Zhou, X.; Yin, Q.; He, X.; Zhang, H.; Lu, K. P-fuzz: A parallel grey-box fuzzing framework. Appl. Sci. 2019, 9, 5100.

- Xu, K.; Hu, W.; Leskovec, J.; Jegelka, S. How powerful are graph neural networks? arXiv 2018, arXiv:1810.00826.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4 December 2017; pp. 5998–6008.

- Kumar, S.; Chaudhary, S.; Kumar, S.; Yadav, R.K. Node Classification in Complex Networks using Network Embedding Techniques. In Proceedings of the 2020 5th International Conference on Communication and Electronics Systems, Coimbatore, India, 10–12 June 2020; pp. 369–374.

- Mithe, S.; Potika, K. A unified framework on node classification using graph convolutional networks. In Proceedings of the 2020 Second International Conference on Transdisciplinary AI, Irvine, CA, USA, 21–23 September 2020; pp. 67–74.

- Deylami, H.A.; Asadpour, M. Link prediction in social networks using hierarchical community detection. In Proceedings of the 2015 7th Conference on Information and Knowledge Technology, Urmia, Iran, 26–28 May 2015; pp. 1–5.

- Abbasi, F.; Talat, R.; Muzammal, M. An Ensemble Framework for Link Prediction in Signed Graph. In Proceedings of the 2019 22nd International Multitopic Conference, Islamabad, Pakistan, 29–30 November 2019; pp. 1–6.

- Ting, Y.; Yan, C.; Xiang-wei, M. Personalized Recommendation System Based on Web Log Mining and Weighted Bipartite Graph. In Proceedings of the 2013 International Conference on Computational and Information Sciences, Shiyang, China, 21–23 June 2013; pp. 587–590.

- Suzuki, T.; Oyama, S.; Kurihara, M. A Framework for Recommendation Algorithms Using Knowledge Graph and Random Walk Methods. In Proceedings of the 2020 IEEE International Conference on Big Data, Atlanta, GA, USA, 10–13 December 2020; pp. 3085–3087.

- Perozzi, B.; Al-Rfou, R.; Skiena, S. Deepwalk: Online learning of social representations. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 24–27 August 2014.

- Grover, A.; Leskovec, J. node2vec: Scalable feature learning for networks. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016.

- Cao, S.; Lu, W.; Xu, Q. Grarep: Learning graph representations with global structural information. In Proceedings of the 24th ACM International on Conference on Information and Knowledge Management, Melbourne, Australia, 18–23 October 2015.

- Hex-Rays. Available online: https://www.hex-rays.com/products/ida/ (accessed on 7 January 2022).

- Networkx. Available online: https://networkx.org/ (accessed on 7 January 2022).

- Xu, X.; Liu, C.; Feng, Q.; Yin, H.; Song, L.; Song, D. Neural network-based graph embedding for cross-platform binary code similarity detection. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 363–376.

- Han, W.; Joe, B.; Lee, B.; Song, C.; Shin, I. Enhancing memory error detection for large-scale applications and fuzz testing. In Proceedings of the 25th Network and Distributed System Security Symposium, San Diego, CA, USA, 18–21 February 2018; pp. 1–47.

| Type | Content | Num |
|---|---|---|
| Instructions | Num of each instruction in Table 2 | 43 |
| Operands | Num of void operands | 8 |
| | Num of general registers | |
| | Num of direct memory references | |
| | Num of memory references using register contents | |
| | Num of memory references using register contents with displacement | |
| | Num of immediate operands | |
| | Num of operands accessing immediate far addresses | |
| | Num of operands accessing immediate near addresses | |
| Strings | Num of strings “malloc” | 5 |
| | Num of strings “calloc” | |
| | Num of strings “free” | |
| | Num of strings “memcpy” | |
| | Num of strings “memset” | |
| Total | | 56 |

| Type | Detail | Num |
|---|---|---|
| Data Transfer Instructions | mov push pop | 3 |
| Binary Arithmetic Instructions | adcx adox add adc sub sbb imul mul idiv div inc dec neg cmp | 14 |
| Logical Instructions | and or xor not | 4 |
| Control Transfer Instructions | jmp je jz jne jnz ja jnbe jae jnb jb jnae jbe jg jnle jge jnl jl jnge jle jng call leave | 22 |
| Total | | 43 |
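The two tables above define a 56-dimensional statistical vector: 43 instruction counts, 8 operand-type counts, and 5 string counts. A minimal sketch of how such a vector could be assembled is shown below. The input format (a list of `(mnemonic, operand_types)` pairs plus a list of referenced strings), the operand-type labels, and the function name `statistical_features` are all hypothetical; the disassembler that would produce this input is outside the sketch, and the second string is assumed to be `calloc`.

```python
from collections import Counter

# The 43 instructions listed in the instruction table, in table order.
INSTRUCTIONS = (
    "mov push pop "
    "adcx adox add adc sub sbb imul mul idiv div inc dec neg cmp "
    "and or xor not "
    "jmp je jz jne jnz ja jnbe jae jnb jb jnae jbe jg jnle jge jnl "
    "jl jnge jle jng call leave"
).split()

# Hypothetical labels for the 8 operand categories in the feature table.
OPERAND_TYPES = [
    "void",   # void operand
    "reg",    # general register
    "mem",    # direct memory reference
    "phrase", # memory reference using register contents
    "displ",  # memory reference using register contents with displacement
    "imm",    # immediate operand
    "far",    # immediate far address
    "near",   # immediate near address
]

# The 5 strings counted in the feature table ("calloc" is assumed).
STRINGS = ["malloc", "calloc", "free", "memcpy", "memset"]


def statistical_features(instructions, strings):
    """Build the 56-dimensional count vector for one code unit.

    instructions: list of (mnemonic, [operand_type, ...]) pairs
    strings     : list of strings referenced by the code unit
    """
    mnemonics = Counter(m for m, _ in instructions)
    operands = Counter(t for _, types in instructions for t in types)
    referenced = Counter(strings)
    return (
        [mnemonics[m] for m in INSTRUCTIONS]
        + [operands[t] for t in OPERAND_TYPES]
        + [referenced[s] for s in STRINGS]
    )


# Hypothetical usage on three instructions.
sample = [("mov", ["reg", "imm"]), ("call", ["near"]), ("mov", ["reg", "displ"])]
vector = statistical_features(sample, ["malloc", "free", "free"])
print(len(vector))
```

Mnemonics, operand types, and strings outside the three fixed lists are ignored, which keeps the vector length constant across inputs.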

| CWE ID | Type | #Secure | #Vulnerable | Total |
|---|---|---|---|---|
| 121 | Stack Based Buffer Overflow | 7947 | 9353 | 17,300 |
| 122 | Heap Based Buffer Overflow | 10,090 | 11,049 | 21,139 |
| 124 | Buffer Under Write | 3524 | 3894 | 7418 |
| 126 | Buffer Over Read | 2678 | 2672 | 5350 |
| 127 | Buffer Under Read | 3524 | 3894 | 7418 |
| 134 | Uncontrolled Format String | 11,120 | 8100 | 19,220 |
| 190 | Integer Overflow | 9300 | 5324 | 14,624 |
| 401 | Memory Leak | 5100 | 1884 | 6984 |
| 415 | Double Free | 2810 | 1786 | 4596 |
| 416 | Use After Free | 1432 | 544 | 1976 |
| 590 | Free Memory Not On The Heap | 3819 | 5058 | 8877 |
| 761 | Free Pointer Not At Start | 1104 | 910 | 2014 |
| Total | | 62,448 | 54,468 | 116,916 |

| Dataset | #Secure | #Vulnerable | Total |
|---|---|---|---|
| ALL-DATA | 62,448 | 54,468 | 116,916 |
| TRAIN-DATA | 18,000 | 18,000 | 36,000 |
| DEV-DATA | 2000 | 2000 | 4000 |
| TEST-DATA | 2000 | 2000 | 4000 |

| Model | Recall (DEV) | Recall (TEST) | GAP | Time (s) |
|---|---|---|---|---|
| SAViP | 0.782 | 0.7785 | 0 | 18.04 |
| without SM-F | 0.701 | 0.6905 | −8.8% | 11.86 |
| without STA-F | 0.7515 | 0.7445 | −3.4% | 16.56 |
| without STR-F | 0.745 | 0.7325 | −4.6% | 13.015 |
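The GAP column in the ablation table appears to be the absolute difference in test recall relative to the full SAViP model, expressed in percentage points, rather than a relative change. The short check below reproduces the three values under that reading; the function name `gap_points` is hypothetical, and the recall values are copied from the table.

```python
def gap_points(full_recall, ablated_recall):
    """Absolute recall difference in percentage points, rounded to one decimal."""
    return round((ablated_recall - full_recall) * 100, 1)


full = 0.7785  # test recall of the full SAViP model
ablations = {
    "without SM-F": 0.6905,
    "without STA-F": 0.7445,
    "without STR-F": 0.7325,
}
gaps = {name: gap_points(full, recall) for name, recall in ablations.items()}
print(gaps)
```

Under a relative reading the first row would instead be about −11.3% (0.088 / 0.7785), which does not match the table, so the percentage-point interpretation seems to be the intended one.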

| Model | Semantic Content | Word Embedding Size | Recall (DEV) | Recall (TEST) | Time (s) |
|---|---|---|---|---|---|
| RoBERTa-s | | 64 | 0.7655 | 0.7585 | 16.145 |
| RoBERTa-o | opcode | 64 | 0.7515 | 0.751 | 16.12 |
| RoBERTa-32 | + opcode | 32 | 0.763 | 0.7575 | 13.41 |
| RoBERTa-64(SAViP) | + opcode | 64 | 0.782 | 0.7785 | 18.04 |
| RoBERTa-128 | + opcode | 128 | 0.791 | 0.7845 | 22.685 |
| RoBERTa-256 | + opcode | 256 | 0.813 | 0.8065 | 36.415 |

| Parameter | Value | Recall (DEV) | Recall (TEST) | Time (s) |
|---|---|---|---|---|
| Statistical Size | 56 | 0.782 | 0.7785 | 18.04 |
| | 280 | 0.789 | 0.7845 | 27.535 |
| Depth | 0 | 0.743 | 0.7405 | 13.66 |
| | 1 | 0.766 | 0.7665 | 16.865 |
| | 2 | 0.782 | 0.7785 | 18.04 |
| | 3 | 0.769 | 0.767 | 19.89 |
| | 4 | 0.753 | 0.752 | 21.475 |
| | 5 | 0.75 | 0.742 | 22.3 |
| Iteration | 1 | 0.7425 | 0.74 | 14.895 |
| | 2 | 0.7665 | 0.7615 | 16.45 |
| | 3 | 0.782 | 0.7785 | 18.04 |
| | 4 | 0.7725 | 0.775 | 19.305 |
| | 5 | 0.778 | 0.7775 | 21.03 |
| Self-Loop | True | 0.782 | 0.7785 | 18.04 |
| | False | 0.744 | 0.7415 | 14.355 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, X.; Duan, B.; Wu, X.; Wang, P. SAViP: Semantic-Aware Vulnerability Prediction for Binary Programs with Neural Networks. Appl. Sci. 2023, 13, 2271. https://doi.org/10.3390/app13042271
