1. Introduction

Manufacturing is a complex process for producing goods: raw materials are transformed into finished products with specific functions or values that fulfill customers' needs. Automated production is widely used in contemporary manufacturing systems. Even in this highly industrialized era, however, workers are still required to perform certain tasks that may not easily be accomplished by machines [1]. With the advancement of science and technology, workers have opportunities to use sophisticated digital tools to accomplish their tasks more easily. One technological advancement that facilitates the progress of industrialization is augmented reality (AR) [2].

AR is a technology that superimposes computer-generated images onto a real environment [3]. It is characterized by combining virtual objects with a real environment and allowing real-time human interaction with those virtual objects [4,5]. AR can be realized in different ways; however, the most common approach is to use AR devices such as AR glasses [6]. A set of AR glasses is a transparent display that can present 2D and/or 3D images in the user's visual field. The images do not block the view of the real world, so users can see both what actually exists and the virtual images generated by the AR device. When incorporated into the real world, the displayed images can assist and supplement the user; they may also be independent objects serving special purposes.

AR devices have been applied for various purposes. They have been used for guidance in wire assembly tasks [7], product planning and design [8,9], logistics [10,11], maintenance and repair [12,13], assembly and disassembly tasks [14,15,16,17], and training and practice in both dentistry [18] and surgery [19,20], among others. Empirical studies have shown the advantages of AR in training automotive service technicians [21,22] and medical students [19].

Assembly tasks are fundamental in both manufacturing and maintenance operations [14]. They may include aligning and fastening two or more parts together [23,24]. Disassembly tasks, on the other hand, involve separating and removing parts in order to repair or replace them, and they are fundamental in maintenance operations. Both assembly and disassembly are performed following specific standard operating procedures (SOPs) [25]. In mass production, assembly is normally accomplished using automatic or semi-automatic machines unless automation is technically infeasible. Small-lot assembly and many disassembly tasks, by contrast, are commonly performed manually. The SOPs for assembly and disassembly tasks are established by engineers and designers so that workers can follow them to perform the tasks successfully. Experienced and skilled workers who are very familiar with the SOPs may perform the tasks without reading them. Novice or inexperienced workers, however, need to read the procedures, either step by step or occasionally, to complete their tasks.

Traditionally, hard copies of SOPs, such as printed manuals, are provided at workplaces, and inexperienced workers may read them step by step to accomplish their tasks. The procedures may also be presented on a computer monitor. Both printed manuals and video presentations of job instructions have been used for a long time. Presenting an SOP on an AR device is a relatively new approach to supporting workers, and several studies have discussed the use of AR devices in workplaces [26,27,28]. The literature, however, has shown inconsistent results regarding the use of AR devices to convey job instructions for assembly and disassembly tasks. For example, Tang et al. [29] showed that using an AR device in assembly tasks reduced the error rate by 82%. Several other authors, such as Fiorentino et al. [30], Wang et al. [16], and Masood and Egger [31], have shown that an AR device may improve worker efficiency and reduce costs in both assembly and disassembly tasks. In contrast to these findings, other studies [32,33] have shown that performance when wearing a set of AR glasses was not significantly better than performance without such a device. There are even reports that AR devices could cause safety and health problems for workers, including musculoskeletal problems [34] and motion sickness [35]. More studies are therefore required to determine the benefits and applicability of AR technology in assisting workers with assembly and disassembly tasks.

The purpose of this study was to compare the use of an AR device to convey job instructions with traditional job instructions, including a paper manual and a computer monitor display, for assembly and disassembly tasks as performed in common maintenance and repair services. In addition to the time to complete the task, the quality of task performance was assessed in terms of the number of errors made by the participants. We hypothesized that using an AR device to present job instructions would improve the efficiency and/or the quality of task performance. The usability of the types of job instruction was also analyzed and discussed.

2. Materials and Methods

In this section, we introduce the participants, hand dexterity test, steps of assembly and disassembly tasks, types of job instruction, and experimental design and data analyses of this study.

2.1. Participants

A total of 21 participants, including 11 males and 10 females, were recruited. All the participants were college students without computer assembly and disassembly experience. Fifteen (nine males and six females) of them were right-handed. The other six (four females and two males) were left-handed. A Landolt C chart and a color book were used to examine the visual acuity and color deficiency of the participants. The mean (±SD) of the age and decimal visual acuity (both eyes) were 20.70 (±1.34) yrs and 0.91 (±0.27), respectively, for females and 19.91 (±2.16) yrs and 0.95 (±0.22), respectively, for males. The stature and body weight of female participants were 158.91 (±5.53) cm and 56.30 (±12.22) kg, respectively, and 172.55 (±5.84) cm and 66.73 (±12.56) kg, respectively, for male participants. None of the participants had color deficiency. Each participant received a cash reward of TWD 600 for their participation in the experiment.

2.2. Hand Dexterity Tests

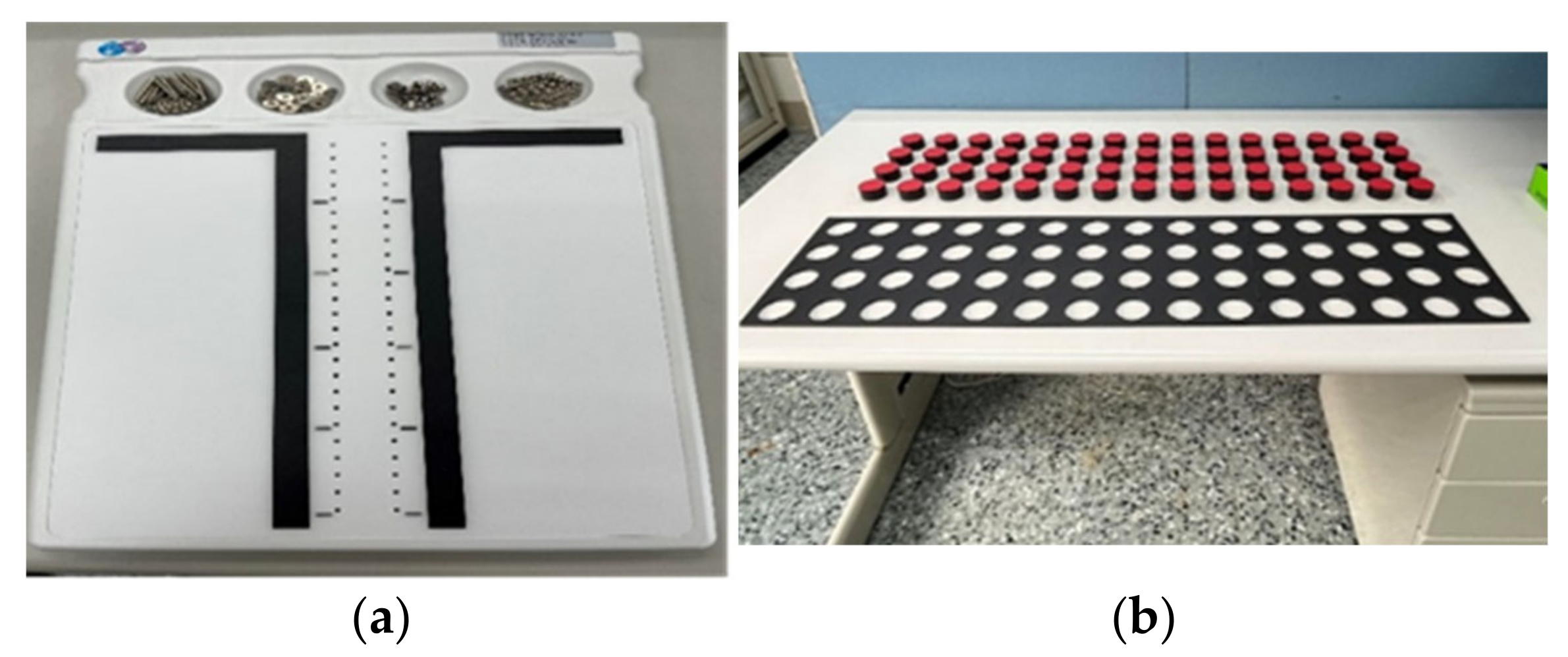

We measured the hand dexterity of the participants using both the Purdue Pegboard Test (PPT) and the Minnesota Dexterity Test (MDT) (see Figure 1). The test kits were placed on a table 75 cm high. The PPT required the seated participant to place as many pegs as possible into the holes in 30 s, first with each hand separately and then with both hands together, followed by assembling as many pegs, washers, and collars as possible in 1 min [36,37,38,39]. We recorded the average number, over two trials, of pegs placed with the dominant hand in 30 s and of pegs, washers, and collars assembled with both hands in 1 min. The MDT included a turning test and a placing test [40,41]. The participants stood in front of the table to perform these tests. We recorded the average time of two trials to complete the placing test using the dominant hand, and the time to complete the turning test using both hands.

2.3. Assembly and Disassembly Tasks

A desktop computer (ASUS®, ASD760, Taipei, Taiwan; see Figure 2) was placed on a table 75 cm above the floor for the assembly and disassembly tasks. In computer maintenance and repair services, typically only some of the components are disassembled and reassembled. In this study, we asked our participants to disassemble and assemble the following components: a fan unit, a power supply, a DVD drive, and a motherboard (see Figure 3).

The steps of the assembly and disassembly tasks are shown in Table 1.

For each assembly and disassembly task, a total of 20 screws needed to be tightened or unscrewed and 4 cable connectors needed to be plugged or unplugged.

2.4. Job Instructions

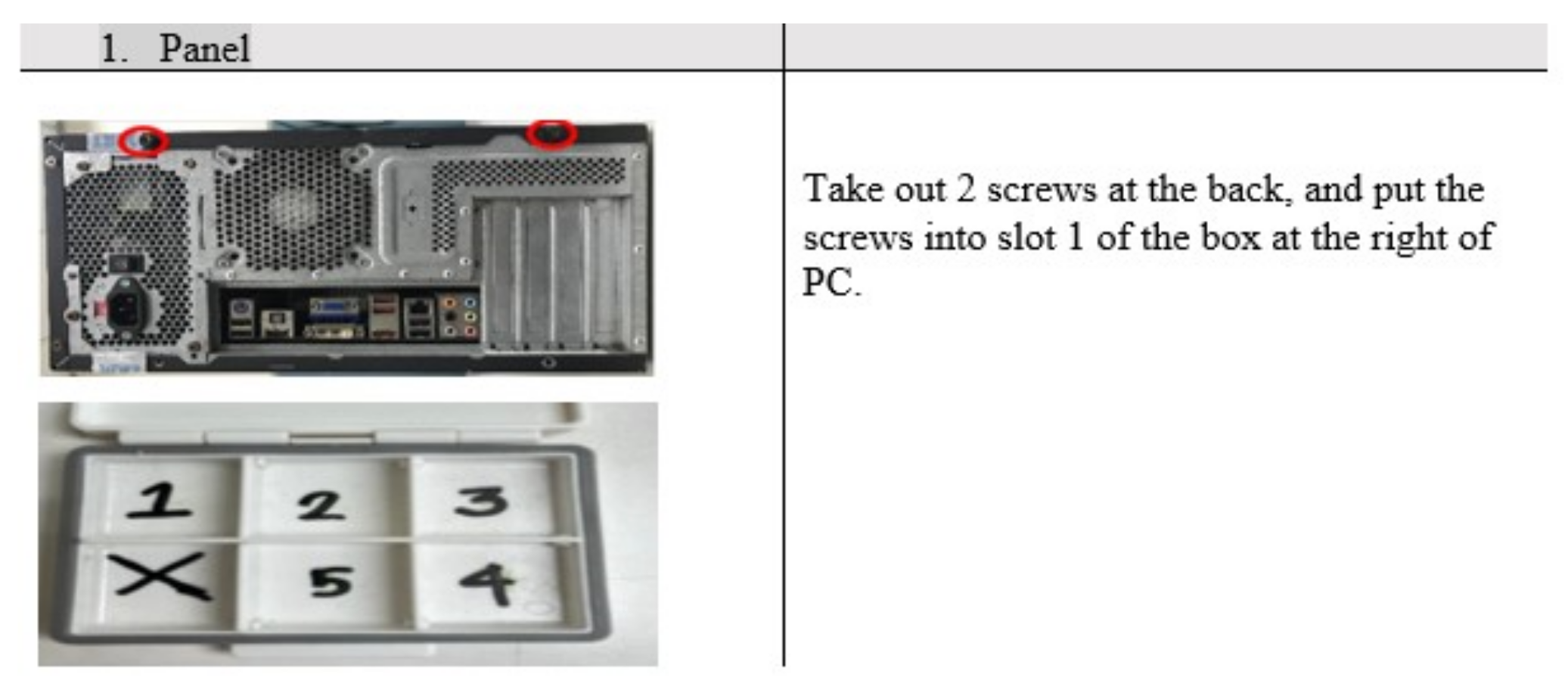

The job instructions, i.e., the steps for assembling and disassembling the computer components, were presented in three different ways: a printed manual, a video displayed on a laptop computer, and an AR display. None of these job instructions included vocal explanations.

For the printed manual, Chinese versions of the disassembly and assembly steps were printed as two 6-page manuals. Each step was illustrated with text accompanied by a photo highlighting the locations of the screws and cable connections (see Figure 4). For the video display on a laptop computer (Acer® Swift 3, SF314-42, New Taipei, Taiwan), the steps were shown in a video without text explanations (see Figure 5). The participant could click to pause, rewind, and resume the video to see what he or she needed to do. The screw locations and cable connections were not highlighted in this condition because they were obvious in the video.

For the AR display condition, a set of smart glasses (HoloLens 2, Microsoft® Inc., Redmond, WA, USA) was adopted as the AR device for presenting the task instructions (see Figure 6). This device has see-through holographic lenses, so users can see both the virtual instructions and the components in the computer. The Dynamics 365 Guides app (Microsoft® Inc., Redmond, WA, USA) on the HoloLens 2 was used to present the job instructions. The text (in Chinese) of each step of both the assembly and disassembly tasks was entered into the Dynamics 365 Guides app. Virtual circles and squares were added using the AR toolkit to highlight the locations of the screws and cable connections, respectively (see Figure 7), to provide supplemental information. When a cable needed to be plugged or unplugged, a virtual hand appeared to highlight the cable (dis)connection operation (see Figure 8). Virtual hand(s) also appeared when a component needed to be pushed or pulled (see Figure 9 and Figure 10). The virtual hand(s) disappeared after the participant had followed the instruction. After completing a step, the participant could tap a virtual arrow at the top right corner of the instruction with his or her index finger to go to the next step.

All the participants could see and read the steps of assembly and disassembly tasks presented by the three types of job instruction without difficulty.

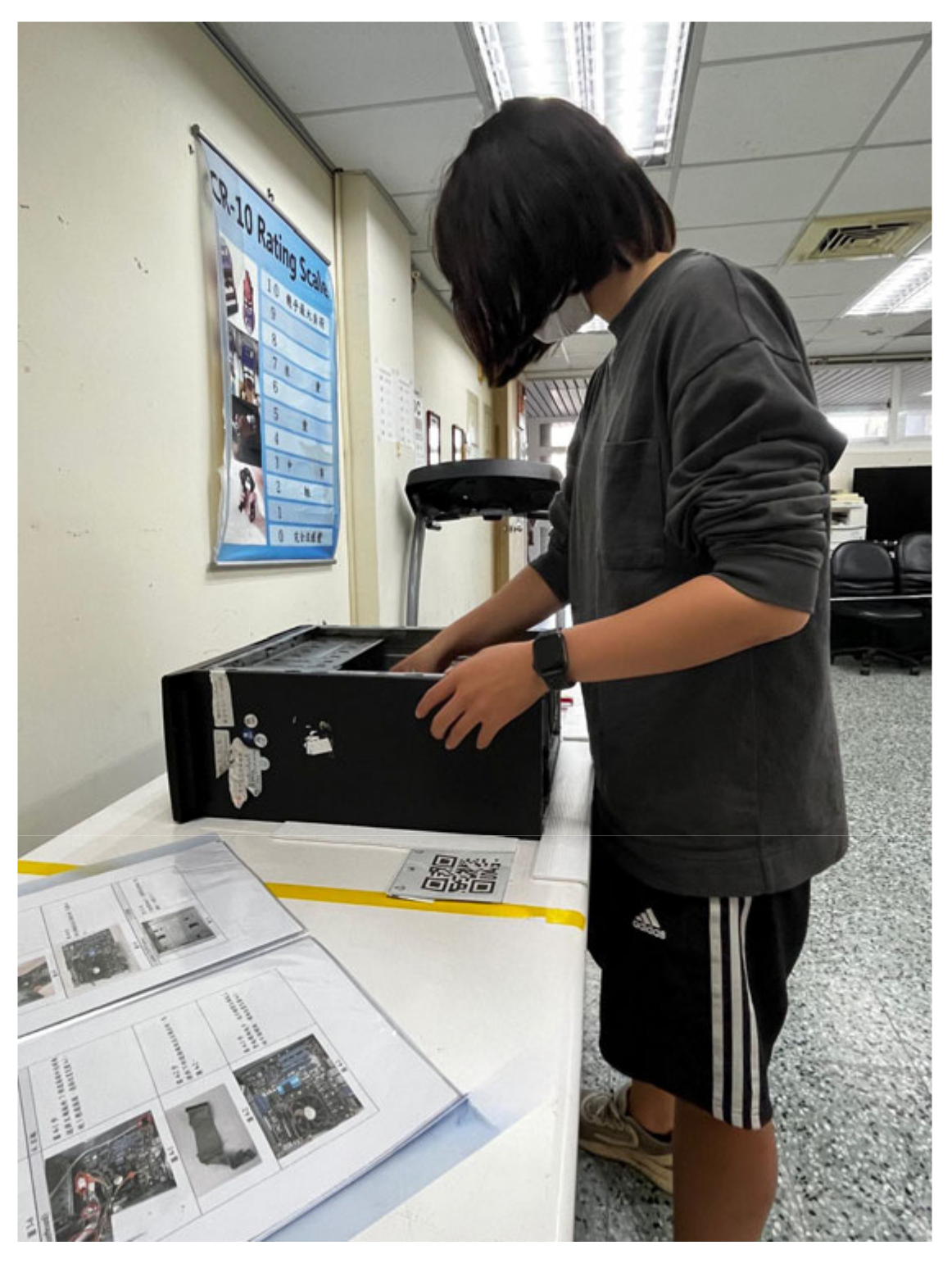

2.5. Procedure

Before the experiment, an experimenter explained the purposes and procedures of the experiment. The components, cables and connectors, and screws were displayed, and the participants were required to be able to identify them. Each participant completed six trials (treatments) comprising three types of instruction (printed manual, video display, and AR display) and two tasks (assembly and disassembly). For the assembly task, the experimenter placed the components at their assigned locations on the table before each trial. For the disassembly task, the location for each disassembled component was marked with colored tape. Before each trial, the participant was told to follow the instructions to complete the task. For the printed manual and video display conditions, the printed manual or laptop computer was placed to the left of the computer being worked on (see Figure 11 and Figure 12). For the AR condition, an experimenter activated the virtual manual. The participant put on the HoloLens 2 headset and practiced using hand gestures to interact with the virtual manual. Once the participant felt he or she could use the device without difficulty, he or she started the task upon hearing a vocal signal (see Figure 13). The trial ended when the participant completed the last step. The time to complete the task was recorded using a stopwatch.
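The six treatments and the per-participant random ordering stated in Section 2.6 can be sketched as follows. This is an illustration under assumptions: the paper does not say how the orders were generated, so the uniform shuffle with Python's `random` module, the seed, and the function name `trial_schedule` are all hypothetical.

```python
import itertools
import random

# The six treatments described in the text: 3 instruction types x 2 tasks.
INSTRUCTIONS = ("printed manual", "video display", "AR display")
TASKS = ("assembly", "disassembly")


def trial_schedule(n_participants: int, seed: int) -> dict[int, list[tuple[str, str]]]:
    """Return an independently shuffled order of all six treatments per participant.

    Assumption (hypothetical): each participant's order is a uniform random
    permutation drawn with Python's `random` module; the paper does not state
    how its randomization was carried out.
    """
    rng = random.Random(seed)  # seeded so the schedule can be reproduced
    treatments = list(itertools.product(INSTRUCTIONS, TASKS))
    schedule = {}
    for participant in range(1, n_participants + 1):
        order = treatments.copy()
        rng.shuffle(order)  # in-place shuffle of this participant's own copy
        schedule[participant] = order
    return schedule


if __name__ == "__main__":
    schedule = trial_schedule(21, seed=2024)  # arbitrary seed
    print(sum(len(order) for order in schedule.values()))  # 126 trials in total
    for trial_number, (instruction, task) in enumerate(schedule[1], start=1):
        print(trial_number, instruction, task)
```

Because every participant receives all six treatments, the 21 × 6 = 126 trials reported in the text follow directly; the 4 h minimum break between trials would be handled when booking sessions and is not modeled here.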

An error was recorded when the participant tightened or unscrewed the wrong screw or a screw in the wrong hole, plugged or unplugged the wrong cable or into the wrong slot, or picked up a screw from, or put a screw into, the wrong grid compartment of the container. In addition, in the assembly task the participant was required to tighten each screw sufficiently and to press each cable connector firmly into its slot. An error was also recorded in the assembly task if the participant did not tighten a screw sufficiently or did not press a cable connector firmly into its slot (which could result in a missed connection). An experimenter observed the participant during each trial; she recorded the number of errors without telling the participant.

After completing each trial, the participant filled out the System Usability Scale (SUS) [42,43] to rate the usability of the job instruction type. The SUS comprises 10 questions (see Appendix A), each rated on a 5-point Likert scale (1 = strongly disagree to 5 = strongly agree). After completing one trial, the participant took a break of at least 4 h before the next trial to avoid effects of fatigue on the dependent variables.

2.6. Experimental Design and Data Analyses

A completely randomized block design experiment was performed. The order of the trials was randomized for each participant. A total of 126 trials (21 participants × 3 job instructions × 2 tasks) were performed. The results of the PPT and MDT were recorded as indicators of the participants' hand dexterity. The dependent variables were the time to complete the task and the SUS ratings. For the SUS, the score of each question ranged from 1 to 5, and the total score was calculated following Brooke [42]. In addition to the total score, the individual scores of question 3 (ease of use), question 4 (need for support from a technical person to use the system), and question 10 (need to learn many things before using the system) were also analyzed.
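Brooke's scoring rule converts the ten 1-to-5 ratings into a 0-to-100 total. A minimal Python sketch follows, assuming the items are supplied in the standard SUS order (odd items positively worded, even items negatively worded), which matches the descriptions of questions 3, 4, and 10 above; the function name `sus_score` is hypothetical.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Compute the 0-100 System Usability Scale score (Brooke's scoring).

    responses: ten ratings on a 1-5 Likert scale, item 1 first (assumed
    standard SUS item order). Odd-numbered items contribute (rating - 1);
    even-numbered items contribute (5 - rating). The summed contributions
    (0-40) are multiplied by 2.5.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item ratings")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if not 1 <= rating <= 5:
            raise ValueError(f"item {item_number}: rating {rating} is outside 1-5")
        # Positively worded (odd) items vs. negatively worded (even) items.
        total += (rating - 1) if item_number % 2 == 1 else (5 - rating)
    return total * 2.5


if __name__ == "__main__":
    # A hypothetical respondent who agrees with every odd item and disagrees
    # with every even item.
    print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))  # 75.0
```

Note that the individual-item analyses of questions 3, 4, and 10 presumably use the raw 1-to-5 ratings, so a higher score on question 4 or 10 indicates worse usability even though it would lower the total SUS score.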

Descriptive statistics were computed. Analyses of variance (ANOVAs) were performed to determine the significance of the factors affecting the dependent variables. Duncan’s multiple range tests were performed when a main effect reached significance at α = 0.05. Pearson’s correlation coefficients were calculated between the hand dexterity scores and the time to complete the task. Statistical analyses were performed using SPSS® 20 (IBM®, Armonk, NY, USA).
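The correlation analysis can be illustrated with a from-scratch Pearson coefficient and the usual t statistic for testing ρ = 0 with n − 2 degrees of freedom. This is a sketch, not the SPSS procedure used in the study; the function names are hypothetical, and it assumes one pair of values (dexterity score, completion time) per participant within a given task and instruction condition. Obtaining a p-value additionally requires the t distribution; for example, `scipy.stats.pearsonr` returns both r and a p-value.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between paired samples x and y."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    n = len(x)
    if n < 3:
        raise ValueError("need at least three pairs")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r: float, n: int) -> float:
    """t statistic for H0: rho = 0 with n - 2 degrees of freedom (requires |r| < 1)."""
    return r * math.sqrt((n - 2) / (1 - r * r))


if __name__ == "__main__":
    # Hypothetical values, not study data: higher dexterity scores paired
    # with shorter completion times give a negative coefficient.
    dexterity = [1, 2, 3, 4]
    time_s = [8, 6, 4, 2]
    print(pearson_r(dexterity, time_s))  # -1.0
```

The sign convention matters when reading the results: a pegboard count rises with dexterity (expected negative r with completion time), whereas an MDT time falls with dexterity (expected positive r).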

4. Discussion

The order of the assembly/disassembly tasks and the types of job instruction was randomized for each participant. It was therefore reasonable to assume that learning effects were distributed equally across the tasks and the types of job instruction in this study.

The time to complete the task was adopted as the main variable indicating the performance of the participants. For these inexperienced participants, the assembly task took significantly (p < 0.05) longer to complete than the disassembly task. This was not surprising, as disassembly is generally easier and less time-consuming than assembly. Our data showed that disassembling the four components required only 60% of the time needed to assemble them. Gender was also a significant (p < 0.05) factor affecting the time to complete the assembly/disassembly tasks: male participants completed their tasks faster than female participants, requiring only 90% of the time of their female counterparts. This gender difference might be attributed to differences between males and females in the motor skills used to tighten and loosen the screws. For job instructions, the participants using the AR display took significantly (p < 0.05) longer than those in the video display and printed manual conditions. On average, participants using the AR display spent 118 s and 87 s longer than those using the video display and the printed manual, respectively, to perform the assembly and disassembly tasks. This was consistent with findings in the literature [32,33] that wearing an AR device might not lead to better efficiency than not wearing one and watching a wall-mounted monitor instead. It was, however, inconsistent with the findings of other studies [16,30,31]. All the participants were familiar with clicking a mouse to activate a continue button on a laptop display and with flipping the pages of a printed manual. In contrast, none of them were familiar with tapping the “next page” button on the virtual instruction displayed by the HoloLens 2. This unfamiliarity might have increased the time spent accessing the job instructions. The virtual hand(s) highlighting the push/pull movements were one of the innovations of the job instructions we created for the tasks using the Dynamics 365 Guides app on the HoloLens 2. They clearly indicated what the participants should do with their hand(s). However, the virtual hand(s) might also have drawn the participants' attention and distracted them, causing them to respond more slowly.

In addition to the time to complete the task, the number of errors made by the participants was also an important variable for assessing task performance. No errors were made in the disassembly task. In the assembly task, however, there were 28, 13, and 7 errors in the printed manual, video display, and AR display conditions, respectively. Most of the errors occurred while the participant was assembling the power supply. This component has three cables: two needed to be plugged into two slots on the motherboard, and the third into the DVD drive. The most common error was that the participant simply inserted a connector into its slot without pressing it firmly. An assembly trial was counted as failed if any cable connector was not properly connected. The numbers of failed assemblies for the printed manual, video display, and AR display conditions were 14, 8, and 5, respectively, out of 21. Job instructions via the AR display thus appeared to lead to fewer failed assemblies, which suggests better task quality. This advantage might be attributed to the virtual hand(s) highlighting the hand movements to be performed. Our results on errors associated with the AR device were consistent with the findings of Tang et al. [29].
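The reported counts can be expressed as per-trial rates to make the three conditions easier to compare. The Python sketch below is purely descriptive arithmetic on the numbers given above, assuming each condition comprised 21 assembly trials (one per participant); it does not test whether the differences are statistically significant, and the names are hypothetical.

```python
# Counts reported in the text for the assembly task
# (assumed 21 trials per condition, one per participant).
N_TRIALS = 21
ERRORS = {"printed manual": 28, "video display": 13, "AR display": 7}
FAILED_ASSEMBLIES = {"printed manual": 14, "video display": 8, "AR display": 5}


def summarize(errors: dict[str, int], failed: dict[str, int], n: int) -> dict[str, tuple[float, float]]:
    """Return (mean errors per trial, proportion of failed assemblies) per condition."""
    return {cond: (errors[cond] / n, failed[cond] / n) for cond in errors}


if __name__ == "__main__":
    summary = summarize(ERRORS, FAILED_ASSEMBLIES, N_TRIALS)
    for cond, (mean_errors, failure_rate) in summary.items():
        print(f"{cond}: {mean_errors:.2f} errors/trial, {failure_rate:.1%} failed")
```

Running it prints 1.33 errors per trial and 66.7% failed for the printed manual, 0.62 and 38.1% for the video display, and 0.33 and 23.8% for the AR display.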

The hand dexterity of the participants was measured using both the PPT and the MDT. The PPTd score was negatively correlated with the time to complete the assembly task for both the video (r = −0.47, p < 0.05) and AR instructions (r = −0.52, p < 0.05). This was reasonable because a high PPT score implies high hand dexterity, which should be associated with less time to complete the task. The correlation coefficients between the PPT scores and the time to complete the tasks in the other task/job instruction conditions were, however, not significant. For the MDT results, both MDTd (r = 0.43, p < 0.05) and MDTt (r = 0.46, p < 0.05) were positively correlated with the time to complete the tasks with video-displayed job instructions. This was also reasonable because a short MDT time implies high hand dexterity, which should be associated with less time to complete the task.

Usability is a core item in assessing a device or a system for human use. It has been defined as “the effectiveness, efficiency, and satisfaction with which specified users can achieve goals in particular environments” [

44]. We used the SUS scores [

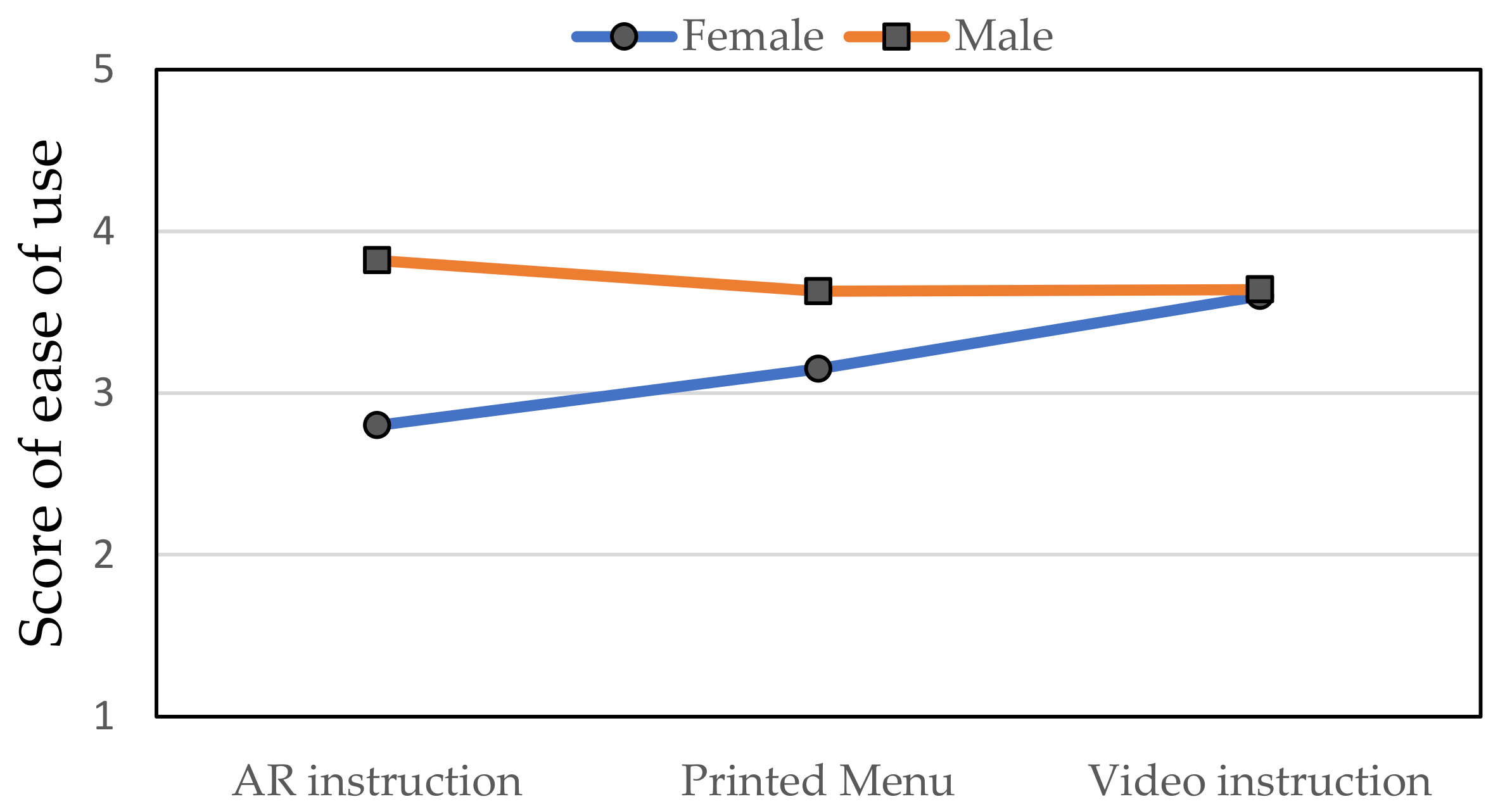

42] to assess the usability of the type of job instructions for male and female participants. In addition to the total score, we specifically analyzed the individual score of questions 3, 4, and 10, which assess the ease of use, need support from a technical person, and need to learn, respectively. The score of ease of use of the SUS results showed that both gender and gender × job instruction interactions were significant (

p < 0.05).

Figure 14 shows that the difference in ease-of-use scores between males and females was largest for the AR instruction condition, followed by the printed manual condition. For the video display condition, there was no difference between the two genders. This suggests that the AR instructions were easier to use for males than for females. For the question on the need for support from a technical person to use the system, female participants gave significantly higher scores than male participants, implying that females needed support from a technical person more than males did. Regarding the need to learn, the score for the AR instructions was significantly higher than those for the printed manual and video instructions. This was consistent with what actually happened: all the participants needed detailed instructions from the experimenter on how to use the HoloLens 2 to access the job instructions, as well as practice. For the other two types of job instruction, only simple verbal instructions were required.

This study has limitations. There were essential differences among the three types of job instruction. The information displayed in the printed manual and on the AR display was static, whereas that in the video was dynamic. For the printed manual, the participants needed to turn pages three times to read all six pages. For the AR display, the participants needed to tap the arrow button 12 and 11 times to advance to the next step in the assembly and disassembly tasks, respectively. For the video display, the number of mouse clicks to pause and play the video depended on the participant; a participant could watch multiple steps and then click once to pause, which might have reduced his or her task time. These differences might be the main reasons that the AR display was the most time-consuming and the video display the most efficient. In fact, dynamic information such as video can also be presented via the HoloLens 2, and future research could compare static and dynamic job instructions presented on an AR device. Another limitation was that vocal information was not included in the job instructions. Vocal instructions could be added to both the video and AR displays, and future research could examine the effects of vocal information in job instructions on the performance of assembly and disassembly tasks. In addition, only four components were considered in the assembly and disassembly tasks; the assembly and disassembly of all the components in a computer could be investigated in the future using both AR and other types of job instruction. Finally, many other AR devices exist, and only the HoloLens 2 was used in the AR instruction condition, so our results may not generalize to other AR devices.