Abstract

To avoid the manual labeling required by semi-supervised tree species classification, this paper proposes a pixel-level self-supervised learning model, M-SSL (multisource self-supervised learning). M-SSL exploits abundant multisource remote sensing images together with self-supervised learning methods. Features were extracted from hyperspectral images (HSI) and multispectral images (MSI) by combining generative learning methods with contrastive learning methods. Two multisource encoders were proposed: MAAE (multisource AAE encoder) and MVAE (multisource VAE encoder). Each sets up pretext tasks that extract multisource features as a form of data augmentation. A depth-wise cross attention module (DCAM) then weights the features to enhance the effective ones. Finally, a joint self-supervised method outputs the tree species classification map, trading off the supply of negative samples against the amount of computation. The M-SSL model learns more representative features for downstream tasks. Through feature cross-fusion, the low-dimensional information of the data is learned simultaneously in a unified network. On three tree species datasets, the classification accuracy reached 78%. The proposed method obtains high-quality features and is well suited to label-free tree species classification.

1. Introduction

Because forests are widely distributed and cover large areas, building large-scale labeled datasets is highly laborious [1]. Increasing attention has been paid to real-time forest monitoring and protection [2,3,4,5,6,7,8]. In particular, there is an urgent need to identify the distribution of tree species from unlabeled samples over large areas. Supervised models depend on labeled samples, and this strongly hinders the application of deep learning to tree species classification. Many researchers have studied how to cope with unlabeled samples in deep learning [9,10,11]. They have developed alternatives to supervised learning, such as unsupervised, semi-supervised, weakly supervised, and self-learning methods [12,13]. Among deep learning methods, self-supervised learning (SSL) has recently attracted significant attention in computer vision and remote sensing [14]. By extracting representative features from unlabeled data, some SSL algorithms have outperformed supervised methods on many problems, and such features help classify pixels from multisource data efficiently. Self-supervised learning has recently been applied to tree species classification. Researchers combined LiDAR and RGB images in a self-supervised RetinaNet to classify tree species [6], and a similar model was proposed for urban tree species classification with multisource images [15]. Although self-supervised learning has succeeded in computer vision, its potential in forest remote sensing has not yet been realized. To our knowledge, no study has yet classified tree species with self-supervised learning on multisource remote sensing images, especially HJ-1A and Sentinel-2 images.
Self-supervised tree species classification for multisource forest remote sensing images therefore remains a challenge.

Self-supervised learning can extract effective information from unlabeled samples [16,17,18,19,20]. Tree species can therefore be classified using features that self-supervised methods extract from multisource remote sensing images. Self-supervised learning methods can be organized into three categories: generative, contrastive, and joint methods.

Generative learning aims to map the training data to a particular distribution [21]. By modeling the input distribution, it yields useful feature extractors, or generators of fake samples that resemble the real data. For HSI/MSI classification, the stacked sparse autoencoder (SSAE) uses an autoencoder to extract sparse spectral features and multiscale spatial features. 3D convolutional autoencoders (3DCAE) and CapsNet assisted by a GAN (TripleGAN) have also been applied [22,23].

Contrastive learning aims to distinguish between different samples. The model is trained by comparing samples, and the trained discriminator is then employed in image classification tasks [24]. Augmented views of the same input are embedded close to each other, while embeddings of different inputs are pushed apart. Pulling together features generated in different ways from the same class tends to cluster their representations in the feature space. The computation cost is also lower than that of generative learning methods. Contrastive methods often combine a cascade network with a contrastive loss [25], and they differ mainly in how they collect negative samples. SimCLR [26] trains on large numbers of samples at different scales to obtain a contrastive loss that is more representative of the image domain. MoCo [27] treats contrastive learning as dictionary lookup. It builds a dynamic dictionary from a queue and a moving-average (momentum) encoder, so that a large and consistent dictionary supports contrastive unsupervised learning. SimSiam [28] dispenses with negative samples altogether, which also removes the need for large batches.
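MoCo's dynamic dictionary can be pictured as a fixed-size first-in, first-out queue of key embeddings that serve as negatives: each new batch of keys overwrites the oldest entries. The NumPy sketch below is illustrative only. The class name `NegativeQueue` and its random unit-vector initialization are our assumptions, not MoCo's reference code.

```python
import numpy as np


class NegativeQueue:
    """Hypothetical FIFO dictionary of key embeddings used as negatives (MoCo-style sketch)."""

    def __init__(self, dim: int, size: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        q = rng.standard_normal((size, dim))
        # Assumption: start from random unit vectors as initial keys.
        self.keys = q / np.linalg.norm(q, axis=1, keepdims=True)
        self.ptr = 0  # index of the oldest entry, i.e. the next slot to overwrite

    def enqueue(self, new_keys: np.ndarray) -> None:
        """Overwrite the oldest entries with a new batch of keys, wrapping around the buffer."""
        n = new_keys.shape[0]
        idx = (self.ptr + np.arange(n)) % self.keys.shape[0]
        self.keys[idx] = new_keys
        self.ptr = (self.ptr + n) % self.keys.shape[0]
```

In a training loop, the keys produced by the momentum encoder for the current batch would be enqueued after the loss is computed. The whole `keys` array then serves as the negative set for the next batch.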

Joint methods combine these two approaches: they generate negative samples and use a discriminator to distinguish them from the input samples. Prototypical contrastive learning (PCL) combines a clustering algorithm with contrastive learning and achieves desirable performance [29]. ContrastNet [30] builds on both PCL and MoCo and shows strong self-supervised learning capabilities on ordinary images.

However, these joint self-supervised methods are difficult to apply directly to multisource images for tree species classification. The distributions of HSI and MSI differ greatly, so it is difficult to integrate features from the two sources in self-supervised learning. In this paper, we explore the usefulness of multisource images for feature learning, rather than learning from a single source. HSI and MSI provide different features of the same sample, and these serve as augmentations for joint learning in tree species classification. In addition, a discriminative learning method reduces the training computation during feature fusion.

Based on the autoencoder mechanism, the MAAE and MVAE modules are proposed for data augmentation. The different distributions generated by MAAE and MVAE provide more features for tree species classification. MAAE and MVAE each learn features from the HSI and MSI data, producing four feature sets: h-AAE, m-AAE, h-VAE, and m-VAE. These are then fused in the downstream task of M-SSL to output the tree species classification map. M-SSL learns only from the features produced by the pretext task. M-SSL is described in detail below.

2. Materials and Methods

2.1. Study Area

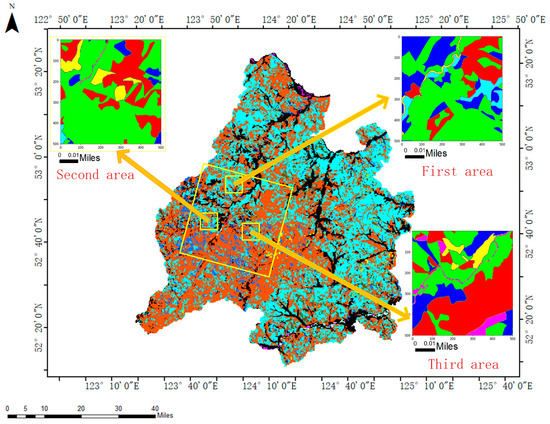

The study area is the Tahe Forestry Bureau (Figure 1), located in the Daxing'an Mountains in northwestern Heilongjiang Province, China (123° to 125° E, 52° to 53° N). The area has a 173 km border and a total area of 14,420 km². Its climate is cold-temperate continental with strong seasonal contrasts: short, hot, humid summers and long, dry, cold winters. The mean annual temperature is −2.4 °C, and the mean annual precipitation is 463.2 mm, falling mainly in July and August. Forest, with a growing stock of 53.4 million m³, covers 81% of the total area. Dominant tree species include birch, larch, spruce, Mongolian pine, willow, and poplar [31].

Figure 1.

Map of the study area.

2.2. Data

We used HSI from HJ-1A and MSI from Sentinel-2 for tree species classification. HSI was obtained from the China Centre for Resources Satellite Data and Application, and MSI from the United States Geological Survey (USGS). The images are shown in Figure 1. Both datasets were acquired between August and September 2016.

The spatial resolution of Sentinel-2A imagery is 10 m [32]. The HJ-1A satellite carries a hyperspectral imager with 115 bands at a spatial resolution of 100 m, which has not yielded high accuracy in previous tree species classification studies [33]. Fusing it with the higher-resolution Sentinel-2A image was therefore necessary to improve classification accuracy. To bridge the gap left by the relatively low resolution of the HSI, the HJ-1A HSI was upsampled to 10 m by bilinear interpolation in ENVI 5.1 software. After this resampling, the HSI and MSI of the study area share the same 10 m × 10 m pixel size. The labels of the dominant tree species were obtained from the second-class forest inventory data surveyed in 2018.
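The 100 m to 10 m resampling is an integer upsampling by a factor of 10. The authors used ENVI 5.1. The NumPy function below is a hypothetical stand-in that shows what bilinear interpolation computes. It uses the pixel-centre (half-pixel) convention and clamps at the borders, and ENVI's exact edge handling may differ.

```python
import numpy as np


def bilinear_upsample(cube: np.ndarray, factor: int) -> np.ndarray:
    """Upsample an (H, W, B) image cube by an integer factor with bilinear interpolation."""
    h, w, _ = cube.shape
    # Map output pixel centres back to input coordinates (half-pixel convention), clamped to the image.
    ys = np.clip((np.arange(h * factor) + 0.5) / factor - 0.5, 0, h - 1)
    xs = np.clip((np.arange(w * factor) + 0.5) / factor - 0.5, 0, w - 1)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy = (ys - y0)[:, None, None]  # vertical interpolation weights, shape (H', 1, 1)
    wx = (xs - x0)[None, :, None]  # horizontal interpolation weights, shape (1, W', 1)
    # Interpolate along x on the two neighbouring rows, then along y between them.
    top = cube[y0][:, x0] * (1 - wx) + cube[y0][:, x1] * wx
    bottom = cube[y1][:, x0] * (1 - wx) + cube[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy
```

For example, `bilinear_upsample(hsi, 10)` would turn a 50 × 50 × 115 cube at 100 m into a 500 × 500 × 115 cube on a 10 m grid (the variable `hsi` is hypothetical).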

Because the forest area is large, the subarea containing the most tree species was selected for the experiment. The HSI and MSI subsets measure 500 × 500 × 115 and 500 × 500 × 13, respectively, with a pixel size of 10 m. Birch, larch, spruce, Mongolian pine, willow, and poplar were studied as the dominant species. Three datasets were set up for the experiment; their sample counts are presented in Figure 2 and Table 1.

Figure 2.

Map of three tree species datasets from the study area.

Table 1.

Tree species samples from three datasets of the study area.

2.3. Classification Models

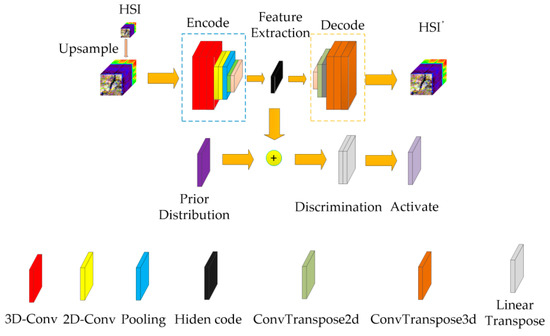

2.3.1. Feature Extraction Based on MVAE

An autoencoder (AE) consists of an encoder network, which maps an input x to a latent code, and a decoder network, which maps the latent code back to a reconstruction x′. The aim of an AE is to make x and x′ as similar as possible (e.g., as measured by mean squared error). When the latent code has more dimensions than the input, a sparsity constraint is imposed. Because AEs are computationally demanding, the variational autoencoder (VAE) was proposed to reduce the computation by optimizing the distribution of the latent codes. KL divergence and the reparameterization trick constrain the latent codes to a simple distribution, so that random samples from it can be decoded [34]. Here the VAE is used for data augmentation of multisource remote sensing images. Based on the VAE model, MVAE (composed of h-VAE and m-VAE) is designed to extract features from both sources. In h-MVAE, the dimension of the HSI is first reduced by PCA, as shown in Figure 3. The image is then segmented into a × a × c patches by a sliding window, where a denotes the window size and c the number of channels after preprocessing. The encoder uses mixed convolutions: multiple 3D and 2D convolutional layers extract the spatial and spectral information. Mixed ConvTranspose3d and ConvTranspose2d layers form the decoder. To reduce the risk of overfitting, h-MVAE applies a batch normalization layer after each convolution. Every linear layer except the output layer is followed by a Smish activation [35]. To keep the reconstruction the same size as the input, an average pooling layer fixes the shape of the feature map.
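The preprocessing described above has two steps: reducing the HSI bands with PCA, then cutting an a × a × c window around every pixel. It can be sketched in NumPy as follows. The number of retained components (`n_components`) is not given in the text and is a hypothetical parameter. Reflect padding at the image borders is also our assumption.

```python
import numpy as np


def pca_reduce(cube: np.ndarray, n_components: int) -> np.ndarray:
    """Project an (H, W, B) cube onto its first principal components, giving (H, W, n_components)."""
    h, w, b = cube.shape
    x = cube.reshape(-1, b).astype(float)  # one row per pixel (astype makes a copy)
    x -= x.mean(axis=0)                    # centre each band
    # The right singular vectors of the centred data are the principal axes, sorted by variance.
    _, _, vt = np.linalg.svd(x, full_matrices=False)
    return (x @ vt[:n_components].T).reshape(h, w, n_components)


def extract_patches(cube: np.ndarray, a: int) -> np.ndarray:
    """Return one a x a x c window per pixel, in row-major pixel order (borders reflect-padded)."""
    r = a // 2
    # Pad r before and a - 1 - r after, so that both odd and even window sizes fit.
    padded = np.pad(cube, ((r, a - 1 - r), (r, a - 1 - r), (0, 0)), mode="reflect")
    h, w, _ = cube.shape
    return np.stack([padded[i:i + a, j:j + a] for i in range(h) for j in range(w)])
```

For a full 500 × 500 scene with a = 21, the patch array is large. In practice the windows would be generated in batches rather than all at once.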

Figure 3.

Feature extraction with MVAE for HSI (h-MVAE).

As in the original VAE algorithm, the encoder outputs two variables, μ and ν. The latent code z is then calculated with the reparameterization trick (Equation (1)), where γ denotes a random value drawn from the normal distribution.
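Equation (1) was lost in extraction. The sketch below shows the standard VAE reparameterization, z = μ + σ·γ with γ drawn from a standard normal distribution. It assumes that ν is the log-variance, so σ = exp(ν/2). If ν is instead the standard deviation itself, the scale factor would simply be ν.

```python
import numpy as np


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * gamma with gamma ~ N(0, I).

    Assumption: the encoder's second output (nu in the text) is the log-variance.
    """
    gamma = rng.standard_normal(np.shape(mu))  # noise drawn independently of the network
    return mu + np.exp(0.5 * log_var) * gamma
```

Because the randomness is isolated in γ, gradients can flow through μ and ν during training.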

The loss function of the VAE module consists of the distribution loss of the latent codes and the reconstruction loss, as represented by Equation (2). Here L_Z denotes the latent-code loss, L_re the reconstruction loss, and N the number of samples. L_Z is the KL divergence between the distribution of the latent codes and the normal distribution. In Equation (3), the input image I has shape a × a × c, and the decoder output has the same shape as I.

The total loss L is given by Equation (4) as the sum of the latent-code loss and the reconstruction loss.
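Equations (2) to (4) are not reproduced here. The sketch below assumes the usual VAE form. L_Z is the closed-form KL divergence between a diagonal Gaussian and N(0, I). L_re is the squared error summed over each a × a × c patch. Both are averaged over the N samples, and the total is L = L_Z + L_re. The exact normalization used in the paper may differ.

```python
import numpy as np


def vae_loss(x, x_rec, mu, log_var):
    """Return (L, L_Z, L_re) for a batch, each averaged over the N samples.

    x, x_rec: (N, a, a, c) input patches and reconstructions.
    mu, log_var: (N, d) latent statistics (log_var assumed to be the log-variance).
    """
    n = x.shape[0]
    # KL(N(mu, sigma^2) || N(0, I)), summed over latent dimensions.
    l_z = -0.5 * np.sum(1 + log_var - mu**2 - np.exp(log_var)) / n
    # Squared reconstruction error, summed over each a x a x c patch.
    l_re = np.sum((x - x_rec) ** 2) / n
    return l_z + l_re, l_z, l_re
```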

In MVAE, features are extracted from the MSI as a pretext task in the same way as from the HSI. The only difference is that the HSI is preprocessed first. The HSI and MSI are used as inputs to compute the corresponding losses. After the VAE module is trained, all patches are passed through the trained VAE, which provides data augmentation for the downstream tree species classification task.

2.3.2. Feature Extraction Based on MAAE

Although adversarial methods combine the advantages of generative and contrastive methods, their convergence may be unstable. Building on the adversarial idea, adversarial autoencoders (AAE) impose adversarial constraints on the latent codes. A generative adversarial network (GAN) is trained with the latent codes and with samples drawn randomly from the normal distribution, which drives the latent codes toward the desired distribution. The distribution of latent codes produced by the AAE differs from that produced by the VAE.

As in MVAE, the MAAE module first reduces the dimension of the HSI with PCA. Figure 4 shows how features are extracted from the HSI by the MAAE module. The encoder outputs the latent code directly and acts as the GAN generator, and the decoder is similar to that of MVAE. In h-MAAE, the latent code is both the output of the generator and an input of the discriminator. The discriminator's other input is a random variable drawn from the normal distribution.

Figure 4.

Feature extraction with MAAE for HSI (h-MAAE).

Training MAAE consists of a reconstruction phase and a regularization phase. In the reconstruction phase, the loss is computed by Equation (5), where the reconstruction loss function is defined in the same way as in Equation (3). In the regularization phase, the discriminator and the encoder are optimized in turn. To stabilize GAN training, the Wasserstein GAN (WGAN) loss is used to optimize the discriminator and the encoder. The discriminator loss and the encoder (generator) loss are given by Equations (6) and (7), respectively. The generated sample distribution is the distribution of the latent codes, the real samples are drawn from the normal distribution, and x denotes a random sample taken from these two distributions.
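Equations (6) and (7) were lost in extraction. The description of x as a random sample taken from the generated and real distributions suggests the gradient-penalty variant (WGAN-GP), so the sketch assumes that form. The critic loss is E[D(fake)] − E[D(real)] + λ·E[(‖∇D(x̂)‖ − 1)²], where x̂ interpolates between real and fake codes. The generator loss is −E[D(fake)]. λ = 10 is the WGAN-GP default, not a value reported here. To stay framework-free, the critic D and its input gradient are passed in as functions; in PyTorch, autograd would supply the gradient.

```python
import numpy as np


def critic_loss(d, grad_d, z_fake, z_real, rng, lam=10.0):
    """WGAN-GP critic loss (assumed form): E[D(fake)] - E[D(real)] + lam * gradient penalty.

    d: maps (N, k) codes to (N,) scores; grad_d: returns dD/dx as an (N, k) array.
    z_fake: latent codes from the encoder; z_real: samples from the normal prior.
    """
    eps = rng.uniform(size=(z_fake.shape[0], 1))
    x_hat = eps * z_real + (1 - eps) * z_fake  # random points between real and fake codes
    gp = np.mean((np.linalg.norm(grad_d(x_hat), axis=1) - 1.0) ** 2)
    return np.mean(d(z_fake)) - np.mean(d(z_real)) + lam * gp


def generator_loss(d, z_fake):
    """WGAN generator (encoder) loss: -E[D(fake)]."""
    return -np.mean(d(z_fake))
```

A linear critic D(z) = w·z with ‖w‖ = 1 has a gradient norm of exactly 1, so its penalty term vanishes. This makes it a convenient sanity check.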

Similarly, the HSI and MSI are each pre-trained with the AAE to produce two encoded feature sets. Images from the two sources are mapped into the same subspace to capture their correlation. The extracted features are fed to the downstream tree classification task as data augmentation.

2.3.3. M-SSL Model for Tree Classification Based on Multisource Images

The proposed M-SSL comprises two pre-trained models and a contrastive network. The two pre-trained modules are trained on the HSI and MSI, respectively, and extract their features. M-SSL is described in detail below.

M-SSL, which is proposed for multisource remote sensing imagery, applies ContrastNet. Because ContrastNet deals only with low-dimensional vectors, it reduces the need for deep networks. M-SSL is split into two parts to reduce the computational cost of each component, with fixed-length vectors (e.g., 1024-d) used for image coding, feature extraction, and contrastive learning. After the pretext modules are trained, features are extracted from the HSI and MSI inputs, respectively. Training on a small proportion of samples then yields satisfactory classification performance.

The downstream task performs tree species classification using the features extracted from the multisource images in the pretext task. In this paper, the multisource self-supervised learning model (M-SSL) for tree species classification is built on PCL. The training set is represented as X = {x_1, x_2, …, x_n}, where n denotes the number of training samples. The goal of PCL is to find an optimal embedding function f_θ that transforms X into V = {v_1, v_2, …, v_n}, with v_i = f_θ(x_i). The objective function is the InfoNCE loss:

$$\mathcal{L}_{\mathrm{InfoNCE}} = \sum_{i=1}^{n} -\log \frac{\exp\left(v_i \cdot v_i' / \tau\right)}{\sum_{j=0}^{r} \exp\left(v_i \cdot v_j' / \tau\right)} \tag{8}$$

Here v_i denotes the embedding of input sample x_i and v_i′ its positive embedding. The terms v_j′ (j = 0, …, r) comprise the positive embedding and the r negative embeddings of other instances, and τ denotes the temperature hyperparameter. The embeddings v_i′ are obtained as v_i′ = f_θ′(x_i), where f_θ′ is the moving-average (momentum) encoder.

When the InfoNCE loss in Equation (8) is minimized, each query embedding moves closer to its positive embedding and farther from the r negative embeddings. However, because the original loss uses a fixed temperature τ, it imposes the same concentration on every embedding. In addition, some of the negative embeddings against which each query is compared are not representative.
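For a single query, the InfoNCE objective reduces to a softmax cross-entropy in which the positive key is the correct "class" among the r + 1 candidates. A minimal NumPy sketch, assuming L2-normalised embeddings so that dot products are cosine similarities:

```python
import numpy as np


def info_nce(v, v_pos, v_neg, tau=0.01):
    """InfoNCE loss for one query.

    v: (d,) query embedding; v_pos: (d,) positive key; v_neg: (r, d) negative keys.
    Embeddings are assumed to be L2-normalised.
    """
    logits = np.concatenate([[v @ v_pos], v_neg @ v]) / tau  # positive key at index 0
    logits -= logits.max()                                   # numerical stability
    return -(logits[0] - np.log(np.sum(np.exp(logits))))
```

When the positive is indistinguishable from the negatives, the loss equals log(r + 1). When the positive clearly dominates, the loss approaches 0. The small τ = 0.01 used later in the experiments sharpens this contrast.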

PCL therefore proposed a new loss function, ProtoNCE [36], which builds on the InfoNCE loss and is given in Equations (9) and (10). In it, the cluster prototype replaces the positive momentum embedding, and the fixed temperature τ is replaced by a concentration estimated for each prototype.
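The sketch below assumes PCL's published formulation of ProtoNCE. The instance-wise InfoNCE term is kept. A prototype term is added and averaged over the M clusterings (here one per value of K). In the prototype term, the similarity to each prototype is divided by that prototype's own concentration φ. For brevity the sketch normalizes over all prototypes, whereas PCL samples r negative prototypes. The helper names are hypothetical.

```python
import numpy as np


def _nce(logits, target):
    """Cross-entropy -log softmax(logits)[target], computed stably."""
    logits = logits - logits.max()
    return -(logits[target] - np.log(np.exp(logits).sum()))


def proto_nce(v, v_pos, v_neg, tau, clusterings):
    """ProtoNCE for one query: instance InfoNCE term plus mean prototype term over M clusterings.

    clusterings: list of (prototypes (k, d), phi (k,), assigned prototype index) tuples.
    """
    instance = _nce(np.concatenate([[v @ v_pos], v_neg @ v]) / tau, 0)
    proto = np.mean([_nce(c @ v / phi, s) for c, phi, s in clusterings])
    return instance + proto
```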

The detailed M-SSL procedure is given in Algorithm 1. M-SSL uses two encoders: a query encoder and a momentum encoder. The query encoder maps each input sample to its query embedding, and the momentum encoder maps the same sample to its momentum embedding. Both encoders receive the same sample, but each copy is given a different data augmentation. For example, one view is obtained by geometric transformation and the other by random cropping. The query encoder ε and the momentum encoder are related by Equation (11). In Algorithm 1, K-Means denotes the k-means clustering algorithm, which is commonly run on GPUs, and the prototype set consists of the cluster centroids [37]. Equation (12) estimates the concentration of each cluster c from the momentum-encoder embeddings assigned to it. In that equation, α is a smoothing parameter that prevents small clusters from receiving an overly large concentration; its default value is 10. Adam is used to update the parameters of the query encoder.
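Equations (11) and (12) were lost in extraction. The sketch below assumes the standard MoCo momentum update, θ′ ← m·θ′ + (1 − m)·θ. It also assumes PCL's concentration estimate, φ = Σ‖v′_z − c‖₂ / (Z·log(Z + α)), where Z is the number of momentum embeddings assigned to prototype c. The momentum coefficient m is not reported in the text; 0.999 (MoCo's default) is only a placeholder. Parameters are represented as a dict of arrays for illustration.

```python
import numpy as np


def concentration(members, centroid, alpha=10.0):
    """Concentration of one prototype (assumed PCL form): sum of distances / (Z * log(Z + alpha))."""
    z = members.shape[0]  # number of momentum embeddings in this cluster
    dist = np.linalg.norm(members - centroid, axis=1).sum()
    return dist / (z * np.log(z + alpha))


def momentum_update(theta_k, theta_q, m=0.999):
    """Momentum-encoder update theta_k <- m * theta_k + (1 - m) * theta_q, one array per parameter name."""
    return {name: m * theta_k[name] + (1 - m) * theta_q[name] for name in theta_k}
```

The α term in the denominator grows only slowly with Z. A cluster with very few members therefore gets a concentration that is not inflated by its small sample count.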

Algorithm 1. M-SSL model pseudocode.

1: Loop 200 epochs:

⋮

15: End.

The proposed M-SSL resembles the original ContrastNet model but differs in several ways. First, M-SSL takes features extracted from both HSI and MSI as input rather than a single-source dataset. Second, M-SSL concentrates on fusing the two kinds of features rather than directly on the "contrast" part of the structure. Third, DCAM [38] is applied to the two feature types to emphasize discriminative characteristics and suppress ineffective ones. Through its attention mechanism, DCAM encourages feature interaction, and its depthwise correlation layer further strengthens the correlation used to integrate the HSI and MSI features.
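The idea of letting HSI features attend to MSI features, and vice versa, can be illustrated with plain bidirectional scaled dot-product cross-attention. This is a generic stand-in, not the DCAM of [38]. Its depthwise correlation layer is not reproduced, and the tokenization (each feature vector reshaped into tokens) is a hypothetical choice.

```python
import numpy as np


def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def cross_attend(queries, context):
    """Scaled dot-product attention: each query token takes a weighted average of context tokens."""
    d = queries.shape[-1]
    weights = softmax(queries @ context.T / np.sqrt(d))  # (n_q, n_ctx); each row sums to 1
    return weights @ context


def cross_fuse(h_tokens, m_tokens):
    """Hypothetical bidirectional fusion: residual cross-attention each way, concatenated on features."""
    h_fused = h_tokens + cross_attend(h_tokens, m_tokens)  # HSI tokens enriched with MSI context
    m_fused = m_tokens + cross_attend(m_tokens, h_tokens)  # MSI tokens enriched with HSI context
    return np.concatenate([h_fused, m_fused], axis=-1)
```

With, say, 16 tokens of 64 dimensions per source, `cross_fuse` returns 16 tokens of 128 dimensions. The concatenation assumes both sources yield the same number of tokens.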

The overall structure of M-SSL is shown in Figure 5. Expectation maximization (EM) is used to train the multisource self-supervised network. In the E-step, DCAM cross-fuses the VAE features of HSI and MSI and feeds them to the momentum encoder, and the prototypes and their concentrations are then calculated. In the M-step, the loss is calculated from the updated features and variables. The query encoder and the momentum encoder are then updated by backpropagation and Equations (11) and (12). As in the pretext task, each convolution operation in the multisource network consists of a convolution layer followed by a batch normalization layer. After DCAM cross-fusion, the 512 HSI and 512 MSI AAE and VAE features are reshaped into 4 × 4 × 64 blocks. First, 128 feature maps are extracted, with the spatial information fully retained. The feature maps are then fed into the self-supervised network. Because feature extraction has already been performed, M-SSL's downstream tree species classification only needs to handle feature fusion and contrastive learning. M-SSL adopts a projection head. Its output is used for training, and the features before the head are used for testing. The projection head g(·) is a nonlinear transformation, and the embedded variable g(z) is the transformation of the contrastive feature z, as shown in Equation (13).

During training, the projection g(z) supervises the update of the M-SSL parameters. During testing, z is used for the downstream tree species classification task. In this way, the extracted contrastive features retain more of the original information. At test time, only the query encoder extracts features, which saves considerable testing time for the multisource self-supervised network in Figure 5. Finally, the features that M-SSL learns from the multisource images are fed into a Softmax classifier, which outputs the tree species classification map. Detailed experimental settings are given in the next section.
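A projection head of this kind is usually a small MLP. The sketch below assumes a two-layer ReLU MLP whose output is L2-normalised before it enters the contrastive loss (a SimCLR-style choice, not confirmed by the text). The 64-d sizes follow the experimental settings. Only g(z) would feed the loss, while z itself goes to the classifier.

```python
import numpy as np


def projection_head(z, w1, b1, w2, b2):
    """Assumed two-layer MLP g(z) = W2 relu(W1 z + b1) + b2, L2-normalised for the contrastive loss.

    z: (N, d_in) contrastive features; w1: (d_in, d_hidden); w2: (d_hidden, d_out).
    """
    h = np.maximum(0.0, z @ w1 + b1)  # ReLU hidden layer
    y = h @ w2 + b2
    return y / np.linalg.norm(y, axis=-1, keepdims=True)
```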

Figure 5.

The architecture of the M-SSL for tree species classification.

3. Experimental Results and Analysis

To verify the effectiveness of the proposed multisource fusion classification method, the three tree species datasets described in Section 2.2 are used as experimental data, and classical methods are selected for comparison. The training and test samples are described in Section 2.2. Softmax and linear discriminant analysis (LDA) are used as classifiers.

3.1. Experimental Settings

Parameter settings are essential to model performance. For a fair comparison, the ground truth has the same resolution for all data, even though the source images differ in resolution. The experiments were implemented with PyTorch in Python 3. In M-SSL, the input is the output of the pre-trained pretext models.

MVAE consists of h-VAE and m-VAE; similarly, MAAE consists of h-AAE and m-AAE. Tables 2 and 3 show the encoder and decoder structures of the AAE for HSI, and Tables 4 and 5 show those of the AAE for MSI. The only difference is the additional dimensionality reduction applied to the HSI. In MAAE and MVAE, Conv3d layers are followed by Smish activations [39], owing to the effectiveness of the Smish activation function. The latent code dimension is 128, and the feature extracted by the pooling layer of the AAE has 1024 dimensions. During training, the encoder, decoder, generator, and discriminator are optimized with Adam. The encoder and decoder use a learning rate of 0.001 and a weight decay of 0.0005. The generator uses a learning rate of 0.0001 and the discriminator a learning rate of 0.00005, both without weight decay. The batch size is 128. The MAAE model was trained for 30 epochs, and only the encoder parameters were kept.
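The Smish activation is commonly defined as f(x) = x·tanh(ln(1 + sigmoid(x))). Below is a NumPy version, followed by the MAAE hyperparameters above collected into a configuration dict. The values come from the text; the dict layout and key names are our own.

```python
import numpy as np


def smish(x):
    """Smish activation: x * tanh(ln(1 + sigmoid(x)))."""
    sigmoid = 1.0 / (1.0 + np.exp(-x))
    return x * np.tanh(np.log1p(sigmoid))


# MAAE training settings reported in the text; key names are hypothetical.
MAAE_CONFIG = {
    "latent_dim": 128,
    "feature_dim": 1024,
    "batch_size": 128,
    "epochs": 30,
    "encoder_decoder": {"lr": 1e-3, "weight_decay": 5e-4},
    "generator": {"lr": 1e-4, "weight_decay": 0.0},
    "discriminator": {"lr": 5e-5, "weight_decay": 0.0},
}
```

For large positive x, sigmoid(x) tends to 1 and tanh(ln 2) = 0.6, so Smish behaves like 0.6·x. For large negative x it decays smoothly toward 0.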

Table 2.

The shape of layers in the h-AAE model for encoding.

Table 3.

The shape of layers in the h-AAE model for decoding.

Table 4.

The shape of layers in the m-AAE model for encoding.

Table 5.

The shape of layers in the m-AAE model for decoding.

Tables 6 and 7 show the encoder and decoder structures of MVAE for HSI (h-VAE), and Tables 8 and 9 show those for MSI (m-VAE). As in the AAE module, the latent code dimension is 128, and the feature extracted by the pooling layer of the VAE has 1024 dimensions. The optimizers are configured as in the AAE module, except that the encoder and decoder use a learning rate of 0.002 and a weight decay of 0.0003. The HSI and MSI latent codes are calculated by their respective VAE encoders, with a batch size of 64. The MVAE module was trained for 50 epochs, and the encoder parameters for HSI and MSI were saved separately.

Table 6.

The shape of layers in the h-VAE model for encoding.

Table 7.

The shape of layers in the h-VAE model for decoding.

Table 8.

The shape of layers in the m-VAE model for encoding.

Table 9.

The shape of layers in the m-VAE model for decoding.

Most encoders in the self-supervised network share the structure shown in Table 10. The DCAM module fuses the HSI and MSI inputs, both of which are 1024-dimensional. The contrastive features and the projection features are both 64-dimensional. In M-SSL, the self-supervised network is trained with Adam, with a learning rate of 0.003 and a weight decay of 0.001. The number of negative samples is r = 640, the batch size is 128, τ = 0.01 in Equation (8), and the cluster numbers are K = [2000, 2500, 3000]. M-SSL was trained for 50 epochs with the loss of Equation (8) and for 200 epochs with the loss of Equation (10). The learning rate is multiplied by 0.1 between epochs 100 and 130.
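The sentence on the learning rate is ambiguous. The sketch below reads it as a step schedule that multiplies the rate by 0.1 at epoch 100 and again at epoch 130. That reading is an assumption, as are the dict layout and key names. The numeric values are those reported above.

```python
# M-SSL downstream settings reported in the text; key names are hypothetical.
MSSL_CONFIG = {
    "feature_dim": 1024,
    "embed_dim": 64,
    "lr": 0.003,
    "weight_decay": 0.001,
    "num_negatives": 640,
    "batch_size": 128,
    "tau": 0.01,
    "num_clusters": [2000, 2500, 3000],
    "epochs": 200,
}


def step_lr(epoch, base_lr=MSSL_CONFIG["lr"], milestones=(100, 130), gamma=0.1):
    """Learning rate at a given epoch: multiply by gamma once for every milestone already reached."""
    return base_lr * gamma ** sum(epoch >= m for m in milestones)
```

In PyTorch, the same schedule corresponds to `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[100, 130], gamma=0.1)`, stepped once per epoch.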

Table 10.

The shape of layers in the M-SSL model.

3.2. Comparative Experiment

In this study, the tree species classification results were evaluated by overall accuracy (OA), average accuracy (AA), and the Kappa coefficient. The comparison models include linear discriminant analysis (LDA) [38], a deep convolutional neural network (1D-CNN) [39], and supervised deep feature extraction (S-CNN) [40]. In addition, M-SSL was compared with three unsupervised models: VAE, AAE, and the contrastive unsupervised feature learning method (CUFL) [30].
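OA, AA, and Kappa can all be computed from one confusion matrix. OA is the fraction of correctly classified samples. AA is the mean of the per-class accuracies. Kappa corrects OA for chance agreement. The NumPy sketch below assumes integer class labels starting at 0 and that every class occurs in the reference labels.

```python
import numpy as np


def accuracy_metrics(y_true, y_pred, n_classes):
    """Return (OA, AA, Kappa) from reference labels y_true and predicted labels y_pred."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    cm = np.zeros((n_classes, n_classes), dtype=float)
    np.add.at(cm, (y_true, y_pred), 1)                      # rows: reference, columns: prediction
    total = cm.sum()
    oa = np.trace(cm) / total
    aa = np.mean(np.diag(cm) / cm.sum(axis=1))              # per-class accuracy, then mean
    pe = np.sum(cm.sum(axis=0) * cm.sum(axis=1)) / total**2  # expected chance agreement
    kappa = (oa - pe) / (1 - pe)
    return oa, aa, kappa
```

For instance, reference labels [0, 0, 1, 1] and predictions [0, 1, 1, 1] give OA = AA = 0.75 but Kappa = 0.5, because half of the agreement is expected by chance.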

For all models, the reported tree species classification results are averages over three runs. To verify M-SSL's ability to learn useful features, tree species classification was performed as a downstream task. A Softmax classifier was used to highlight the effectiveness of the extracted features, which come mainly from the self-supervised network. Tables 11, 12, and 13 present the classification results of the seven models on the three tree species datasets. The supervised and unsupervised models were tested, and their results are listed next.

Table 11.

The classification accuracy of models for dataset (1).

Table 12.

The classification accuracy of models for dataset (2).

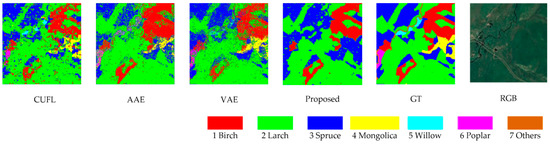

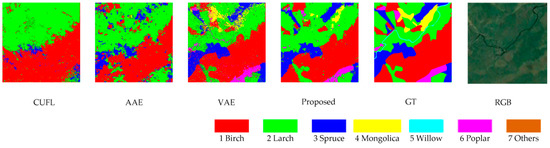

Table 11 and Figure 6 show the classification results for dataset (1). M-SSL achieved the best performance, with an OA close to 80% on dataset (1). The tree species in dataset (3) are more complex and therefore harder to classify, but M-SSL still reached 76%, higher than the other models.

Figure 6.

Tree classification maps of models for dataset (1).

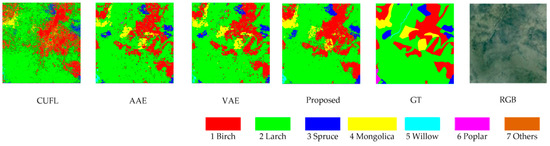

Table 12 and Figure 7 show the classification results for dataset (2). As with dataset (1), M-SSL performed best on larch, and its results for the other five tree species also exceeded those of the other models. For dataset (3), Table 13 and Figure 8 show that the average accuracy of M-SSL is about 74%, better than that of AAE. Considering overall classification performance, the experimental results demonstrate the feasibility of M-SSL for multisource datasets. Because M-SSL is designed specifically for multisource datasets, it merits further study. Although the model works without label information, it is slightly weaker than supervised learning methods. In addition, the best result of the traditional LDA method is 68%, which indicates that traditional machine learning methods are slightly inferior to deep learning models for this task.

Figure 7.

Tree classification maps of models for dataset (2).

Table 13.

The classification accuracy of models for dataset (3).

Figure 8.

Tree classification maps of models for dataset (3).

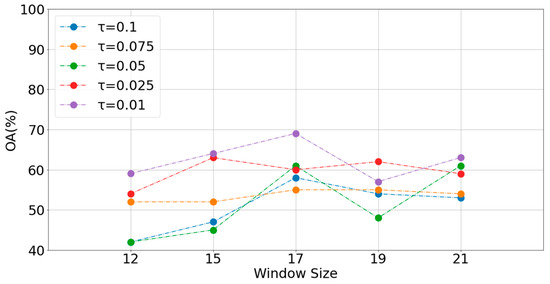

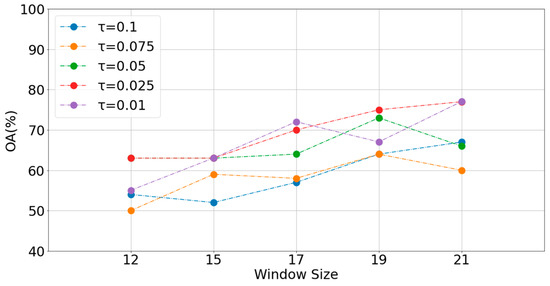

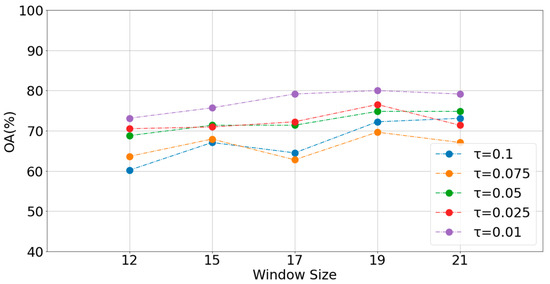

3.3. Analysis of Parameters

The performance of M-SSL is affected by several critical parameters, such as window size and temperature. In the experiment, the temperature τ was set to 0.01, 0.025, 0.05, 0.075, and 0.1, and the sliding window size to 12, 15, 17, 19, and 21. The OA on the three datasets of Section 2.2 was recorded for each parameter setting. Figures 9, 10, and 11 show that, with these parameters, the results obtained by combining MAAE and MVAE features are significantly better than those from either alone. The value of τ affects only the features of the self-supervised contrastive model, so the features of MVAE and MAAE do not depend on τ. Figure 11 shows that M-SSL achieved its best tree classification results at τ = 0.01.

Figure 9.

OA of MAAE for different values of τ and window sizes; τ denotes the temperature in Equation (8).

Figure 10.

OA of MVAE for different values of τ and window sizes.

Figure 11.

OA of M-SSL for different values of τ and window sizes.

In addition, the feature-learning performance of MVAE and MAAE improves as the window size increases, so window size may be one of the parameters that affect the results. Changing it, however, has little impact on learning the contrastive features. More valuable information can be obtained from the multisource dataset, whereas the added spatial information contributes little. These results indicate that the multisource self-supervised model is robust and insensitive to its parameters.

4. Discussion

Supervised learning methods have been applied to ground-object classification based on feature fusion of multisource remote sensing images. However, their reliance on labels limits the application of artificial intelligence to forest monitoring. Self-supervised learning has attracted extensive attention because it requires no human annotation [9,10,11]. PCL combines a clustering algorithm with contrastive learning and performs well, but it cannot be applied directly to hyperspectral and multispectral data [29]. ContrastNet demonstrated strong self-supervised learning on ordinary images, but its reliance on a single data source greatly limits the features available for each sample [30]. In contrast, M-SSL fuses HJ-1A and Sentinel-2 image data to enhance the sample features. The M-SSL model combines the advantages of AAE and VAE. The generator uses generative learning, and the discriminator uses the WGAN loss, which gives the model the ability to extract discriminative features. The experiments led to the following conclusions:

- Like traditional deep learning methods, the proposed self-supervised model extracts and fuses features from the two data sources. Their differences in spectral and structural information make HSI and MSI an implicit form of augmentation. Training the network with HSI and MSI as multimodal features yields better representations than the other methods.

- By combining generative and contrastive learning in joint learning, M-SSL extracts two types of features from the multisource datasets in the pretext task and fine-tunes parameters only in the downstream task. The shared features of multisource tree species images learned during pretext training make the downstream task more robust and stable.

- The comparison results show that feature learning based on MAAE or MVAE better integrates discriminative features and removes some redundant features. Treating pixels of the same tree species as positive samples provides richer information and makes tree species classification more accurate.
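The joint generative and contrastive learning described in the points above can be illustrated with a minimal NumPy sketch built on three assumptions:

- The generative term is a VAE-style loss: reconstruction error plus the KL divergence to N(0, I).
- The contrastive term is an InfoNCE loss in the style of contrastive predictive coding [36]. The HSI and MSI embeddings of the same pixel form the positive pair, and the other pixels in the batch act as negatives.
- A hypothetical weight `lam` balances the two terms.

The function names and the weighting are illustrative assumptions, not the M-SSL implementation. In practice, these terms would be computed inside an autodiff framework so that gradients flow to the encoders.

```python
import numpy as np

def l2_normalize(v: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    # Project each embedding (row) onto the unit sphere.
    return v / (np.linalg.norm(v, axis=1, keepdims=True) + eps)

def info_nce(z_hsi: np.ndarray, z_msi: np.ndarray, temperature: float = 0.1) -> float:
    """InfoNCE where row i of z_hsi and row i of z_msi come from the same pixel.

    Every other row in the batch serves as a negative for that pixel.
    """
    a, b = l2_normalize(z_hsi), l2_normalize(z_msi)
    logits = a @ b.T / temperature                    # (N, N) scaled cosine similarities
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_prob)))         # positives lie on the diagonal

def vae_term(x, x_recon, mu, log_var) -> float:
    # Squared reconstruction error plus closed-form KL(N(mu, sigma^2) || N(0, I)).
    recon = np.mean(np.sum((x - x_recon) ** 2, axis=1))
    kl = np.mean(-0.5 * np.sum(1 + log_var - mu ** 2 - np.exp(log_var), axis=1))
    return float(recon + kl)

def joint_loss(x, x_recon, mu, log_var, z_hsi, z_msi, lam: float = 1.0) -> float:
    # Generative term plus a weighted contrastive term; lam is a hypothetical weight.
    return vae_term(x, x_recon, mu, log_var) + lam * info_nce(z_hsi, z_msi)
```

When all embeddings in a batch are identical, the contrastive term equals log N, because the positive cannot be told apart from the N - 1 negatives. It falls toward zero as same-pixel HSI and MSI embeddings align and the other pixels move apart.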

Although M-SSL uses negative samples at different granularities to reduce the computation required for negative samples, its learning process is based on the MoCo model, which keeps it complex. In future work, other methods, such as the self-distillation method DINO or Barlow Twins, could be tried to reduce the computational complexity. In addition, the spatial resolution of the datasets is 10 m; in the future, we will further explore self-supervised methods for tree species classification with higher-spatial-resolution datasets.
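To make the complexity argument concrete, the sketch below contrasts two ingredients of MoCo [27] with the Barlow Twins objective, which needs neither negatives nor a queue. The two MoCo ingredients are a momentum-updated key encoder and a FIFO queue of past keys used as negatives. The sketch is a NumPy illustration with the following assumptions:

- Encoder parameters are lists of arrays, and embeddings are (batch, dim) arrays.
- `m=0.999` follows the MoCo default, and `lambd=5e-3` follows the Barlow Twins paper.
- The helper names are hypothetical, and this is not the M-SSL training code.

```python
import numpy as np
from collections import deque

def momentum_update(key_params, query_params, m: float = 0.999):
    """MoCo-style exponential moving average: the key encoder slowly tracks the query encoder."""
    return [m * k + (1.0 - m) * q for k, q in zip(key_params, query_params)]

class NegativeQueue:
    """FIFO dictionary of past key embeddings used as negatives (MoCo-style)."""

    def __init__(self, max_size: int):
        self.items = deque(maxlen=max_size)  # oldest keys are dropped automatically

    def enqueue(self, keys):
        self.items.extend(keys)

    def as_array(self) -> np.ndarray:
        return np.stack(list(self.items))

def barlow_twins_loss(z_a: np.ndarray, z_b: np.ndarray, lambd: float = 5e-3, eps: float = 1e-9) -> float:
    """Barlow Twins: push the cross-correlation matrix of two views toward the identity.

    No negative samples or queue are needed.
    """
    n = z_a.shape[0]
    a = (z_a - z_a.mean(0)) / (z_a.std(0) + eps)   # standardize each feature over the batch
    b = (z_b - z_b.mean(0)) / (z_b.std(0) + eps)
    c = a.T @ b / n                                # (D, D) cross-correlation matrix
    on_diag = np.sum((np.diag(c) - 1.0) ** 2)      # invariance term
    off_diag = np.sum(c ** 2) - np.sum(np.diag(c) ** 2)  # redundancy-reduction term
    return float(on_diag + lambd * off_diag)
```

The design difference is where the cost lies. A queue-based contrastive loss compares every query against K stored negatives, so its cost grows with the queue length. Barlow Twins instead builds a D x D cross-correlation matrix, so its cost depends on the embedding dimension rather than on the number of negatives.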

5. Conclusions

In this study, a deep self-supervised learning model named M-SSL, based on encoder and contrastive methods, is proposed for multisource remote sensing datasets. It combines representation learning with self-supervised discrimination to extract features for tree species classification from HSI and MSI more effectively than other methods. Inspired by encoder and generator methods, the features extracted by the MVAE and MAAE models, respectively, in the HSI and MSI pretext tasks are discriminated and fused by DCAM modules so that effective information is enhanced. The fused features from the different modules are then used to contrastively learn multisource characteristics, with negative samples filtered through clustering to reduce the computational cost of the contrastive learning method. The experimental accuracy of M-SSL is close to 80%, which is 5% higher than that of other advanced methods. M-SSL can learn more representative information, which shows that deep self-supervised learning still has great potential in forest classification, especially for multisource remote sensing datasets.
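The conclusion states that negative samples are filtered through clustering to save computation, but the exact rule is not given in this section. One plausible reading, sketched below purely as an assumption, has three steps:

1. Cluster the batch embeddings once with k-means.
2. Treat pixels in the anchor's own cluster as likely belonging to the same species, so they are not used as negatives.
3. Cap the number of negatives drawn from the remaining clusters.

`kmeans`, `negatives_for_anchor`, `k`, and `max_neg` are hypothetical names and parameters, not taken from M-SSL.

```python
import numpy as np

def kmeans(x, k: int, n_iter: int = 20, seed: int = 0):
    """Plain Lloyd's k-means; returns (centroids, labels)."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    centroids = x[rng.choice(len(x), size=k, replace=False)].copy()

    def assign(c):
        # (N, k) squared Euclidean distances -> index of the nearest centroid.
        d = ((x[:, None, :] - c[None, :, :]) ** 2).sum(axis=-1)
        return d.argmin(axis=1)

    for _ in range(n_iter):
        labels = assign(centroids)
        for j in range(k):
            members = x[labels == j]
            if len(members):  # keep the old centroid if a cluster becomes empty
                centroids[j] = members.mean(axis=0)
    return centroids, assign(centroids)

def negatives_for_anchor(labels: np.ndarray, anchor_idx: int, max_neg: int, rng) -> np.ndarray:
    """Hypothetical rule: negatives come only from clusters other than the anchor's."""
    candidates = np.flatnonzero(labels != labels[anchor_idx])
    if len(candidates) > max_neg:  # cap the number of negatives to bound computation
        candidates = rng.choice(candidates, size=max_neg, replace=False)
    return candidates
```

Clustering is run once per batch, and the resulting labels are reused for every anchor. The cost of the contrastive term is then governed by `max_neg` rather than by the full batch size.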

Author Contributions

X.W.: Conceptualization, methodology, software, data curation, funding acquisition, writing—original draft, writing—review and editing; N.Y.: methodology, writing—review and editing, supervision; E.L.: writing—review and editing, supervision; W.G.: writing—review and editing, supervision; J.Z.: funding acquisition, supervision; S.Z.: supervision; G.S.: supervision; J.W.: supervision. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Basic Scientific Research Project of Heilongjiang Provincial Universities (grant numbers 145109219 and 135409422). All the work was conducted at the Forestry Intelligent Equipment Engineering Research Center.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available because they are still being used in an ongoing project.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Van Engelen, J.E.; Hoos, H.H. A survey on semi-supervised learning. Mach. Learn. 2020, 109, 373–440.

2. Sharma, R.C.; Hara, K. Self-Supervised Learning of Satellite-Derived Vegetation Indices for Clustering and Visualization of Vegetation Types. J. Imaging 2021, 7, 30.

3. Saheer, L.B.; Shahawy, M. Self-Supervised Approach for Urban Tree Recognition on Aerial Images. In Proceedings of the IFIP International Conference on Artificial Intelligence Applications and Innovations, Hersonissos, Greece, 25–27 June 2021; Springer: Cham, Switzerland, 2021; pp. 476–486.

4. Weinstein, B.G.; Marconi, S.; Bohlman, S.; Zare, A.; White, E. Individual tree-crown detection in RGB imagery using semi-supervised deep learning neural networks. Remote Sens. 2019, 11, 1309.

5. Cat Tuong, T.T.; Tani, H.; Wang, X.; Thang, N.Q. Semi-supervised classification and landscape metrics for mapping and spatial pattern change analysis of tropical forest types in Thua Thien Hue province, Vietnam. Forests 2019, 10, 673.

6. Wan, H.; Tang, Y.; Jing, L.; Li, H.; Qiu, F.; Wu, W. Tree Species Classification of Forest Stands Using Multisource Remote Sensing Data. Remote Sens. 2021, 13, 144.

7. Li, Y.; Shao, Z.; Huang, X.; Cai, B.; Peng, S. Meta-FSEO: A Meta-Learning Fast Adaptation with Self-Supervised Embedding Optimization for Few-Shot Remote Sensing Scene Classification. Remote Sens. 2021, 13, 2776.

8. Zhao, Z.; Luo, Z.; Li, J.; Chen, C.; Piao, Y. When Self-Supervised Learning Meets Scene Classification: Remote Sensing Scene Classification Based on a Multitask Learning Framework. Remote Sens. 2020, 12, 3276.

9. Illarionova, S.; Trekin, A.; Ignatiev, V.; Oseledets, I. Tree Species Mapping on Sentinel-2 Satellite Imagery with Weakly Supervised Classification and Object-Wise Sampling. Forests 2021, 12, 1413.

10. Dong, H.; Ma, W.; Wu, Y.; Zhang, J.; Jiao, L. Self-Supervised Representation Learning for Remote Sensing Image Change Detection Based on Temporal Prediction. Remote Sens. 2020, 12, 1868.

11. Weis, M.A.; Pede, L.; Lüddecke, T.; Ecker, A.S. Self-supervised Representation Learning of Neuronal Morphologies. arXiv 2021, arXiv:2112.12482.

12. Van Horn, G.; Cole, E.; Beery, S.; Wilber, K.; Belongie, S.; Aodha, O.M. Benchmarking Representation Learning for Natural World Image Collections. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 12884–12893.

13. Liu, B.; Gao, K.; Yu, A.; Ding, L.; Qiu, C.; Li, J. ES2FL: Ensemble Self-Supervised Feature Learning for Small Sample Classification of Hyperspectral Images. Remote Sens. 2022, 14, 4236.

14. Liu, C.; Sun, H.; Xu, Y.; Kuang, G. Multi-Source Remote Sensing Pretraining Based on Contrastive Self-Supervised Learning. Remote Sens. 2022, 14, 4632.

15. Monowar, M.M.; Hamid, M.A.; Ohi, A.Q.; Alassafi, M.O.; Mridha, M.F. AutoRet: A Self-Supervised Spatial Recurrent Network for Content-Based Image Retrieval. Sensors 2022, 22, 2188.

16. Wang, J.; Wang, Y.; Liu, H. Hybrid Variability Aware Network (HVANet): A self-supervised deep framework for label-free SAR image change detection. Remote Sens. 2022, 14, 734.

17. Tao, B.; Chen, X.; Tong, X.; Jiang, D.; Chen, B. Self-supervised monocular depth estimation based on channel attention. Photonics 2022, 9, 434.

18. Gao, H.; Zhao, Y.; Guo, P.; Sun, Z.; Chen, X.; Tang, Y. Cycle and Self-Supervised Consistency Training for Adapting Semantic Segmentation of Aerial Images. Remote Sens. 2022, 14, 1527.

19. Liu, B.; Yu, H.; Du, J.; Wu, Y.; Li, Y.; Zhu, Z.; Wang, Z. Specific Emitter Identification Based on Self-Supervised Contrast Learning. Electronics 2022, 11, 2907.

20. Cui, X.Z.; Feng, Q.; Wang, S.Z.; Zhang, J.-H. Monocular depth estimation with self-supervised learning for vineyard unmanned agricultural vehicle. Sensors 2022, 22, 721.

21. Wang, X.; Zhang, R.; Shen, C.; Kong, T.; Li, L. Dense Contrastive Learning for Self-Supervised Visual Pre-Training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 3024–3033.

22. Mei, S.; Ji, J.; Geng, Y.; Zhang, Z.; Li, X.; Du, Q. Unsupervised spatial–spectral feature learning by 3D convolutional autoencoder for hyperspectral classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6808–6820.

23. Wang, X.; Tan, K.; Du, Q.; Chen, Y.; Du, P. Caps-TripleGAN: GAN-assisted CapsNet for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7232–7245.

24. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

25. Koch, G.; Zemel, R.; Salakhutdinov, R. Siamese Neural Networks for One-Shot Image Recognition. In Proceedings of the ICML Deep Learning Workshop, Lille, France, 10–11 June 2015; p. 2.

26. Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A Simple Framework for Contrastive Learning of Visual Representations. In Proceedings of the International Conference on Machine Learning PMLR, Online, 13–18 July 2020; pp. 1597–1607.

27. He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum Contrast for Unsupervised Visual Representation Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–14 June 2020; pp. 9729–9738.

28. Chen, X.; He, K. Exploring Simple Siamese Representation Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15750–15758.

29. Li, J.; Zhou, P.; Xiong, C.; Hoi, S.C.H. Prototypical contrastive learning of unsupervised representations. arXiv 2020, arXiv:2005.04966.

30. Cao, Z.; Li, X.; Feng, Y.; Chen, S.; Xia, C.; Zhao, L. ContrastNet: Unsupervised feature learning by autoencoder and prototypical contrastive learning for hyperspectral imagery classification. Neurocomputing 2021, 460, 71–83.

31. Wang, L.; Fan, W.Y. Identification of forest dominant tree species group based on hyperspectral remote sensing data. Northeast. For. Univ. 2015, 43, 134–137.

32. Drusch, M.; Del Bello, U.; Carlier, S.; Colin, O.; Fernandez, V.; Gascon, F.; Hoersch, B.; Isola, C.; Laberinti, P.; Martimort, P.; et al. Sentinel-2: ESA’s optical high-resolution mission for GMES operational services. Remote Sens. Environ. 2012, 120, 25–36.

33. Johannessen, J.A. Potential Contribution to Earth System Science: Oceans and Cryosphere. In Proceedings of the EGU General Assembly Conference Abstracts, Vienna, Austria, 3–8 April 2010; p. 15625.

34. Vahdat, A.; Kautz, J. NVAE: A deep hierarchical variational autoencoder. Adv. Neural Inf. Process. Syst. 2020, 33, 19667–19679.

35. Wang, X.; Ren, H.; Wang, A. Smish: A Novel Activation Function for Deep Learning Methods. Electronics 2022, 11, 540.

36. Oord, A.; Li, Y.; Vinyals, O. Representation learning with contrastive predictive coding. arXiv 2018, arXiv:1807.03748.

37. Johnson, J.; Douze, M.; Jégou, H. Billion-scale similarity search with GPUs. IEEE Trans. Big Data 2019, 7, 535–547.

38. Gao, Y.; Li, W.; Zhang, M.; Wang, J. Hyperspectral and multispectral classification for coastal wetland using depthwise feature interaction network. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–15.

39. Hu, P.; Peng, D.; Sang, Y.; Xiang, Y. Multi-view linear discriminant analysis network. IEEE Trans. Image Process. 2019, 28, 5352–5365.

40. Sharma, P.; Berwal, Y.P.S.; Ghai, W. Performance analysis of deep learning CNN models for disease detection in plants using image segmentation. Inf. Process. Agric. 2020, 7, 566–574.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).