Short-Term Power Prediction of Wind Turbine Applying Machine Learning and Digital Filter

Abstract

1. Introduction

- The training-set output was smoothed with a Savitzky-Golay (SG) filter, and the deep LSTM network trained on the smoothed data overfitted less and generalized better.

- The optimal configuration parameters of the digital filter were determined. With this configuration, noise is filtered out effectively while the original features are retained, so the prediction model does not overfit to learned noise.

- More accurate multi-step predictions were achieved by combining machine learning with digital filtering, which can improve the timeliness of the prediction information.

2. Methodology Model

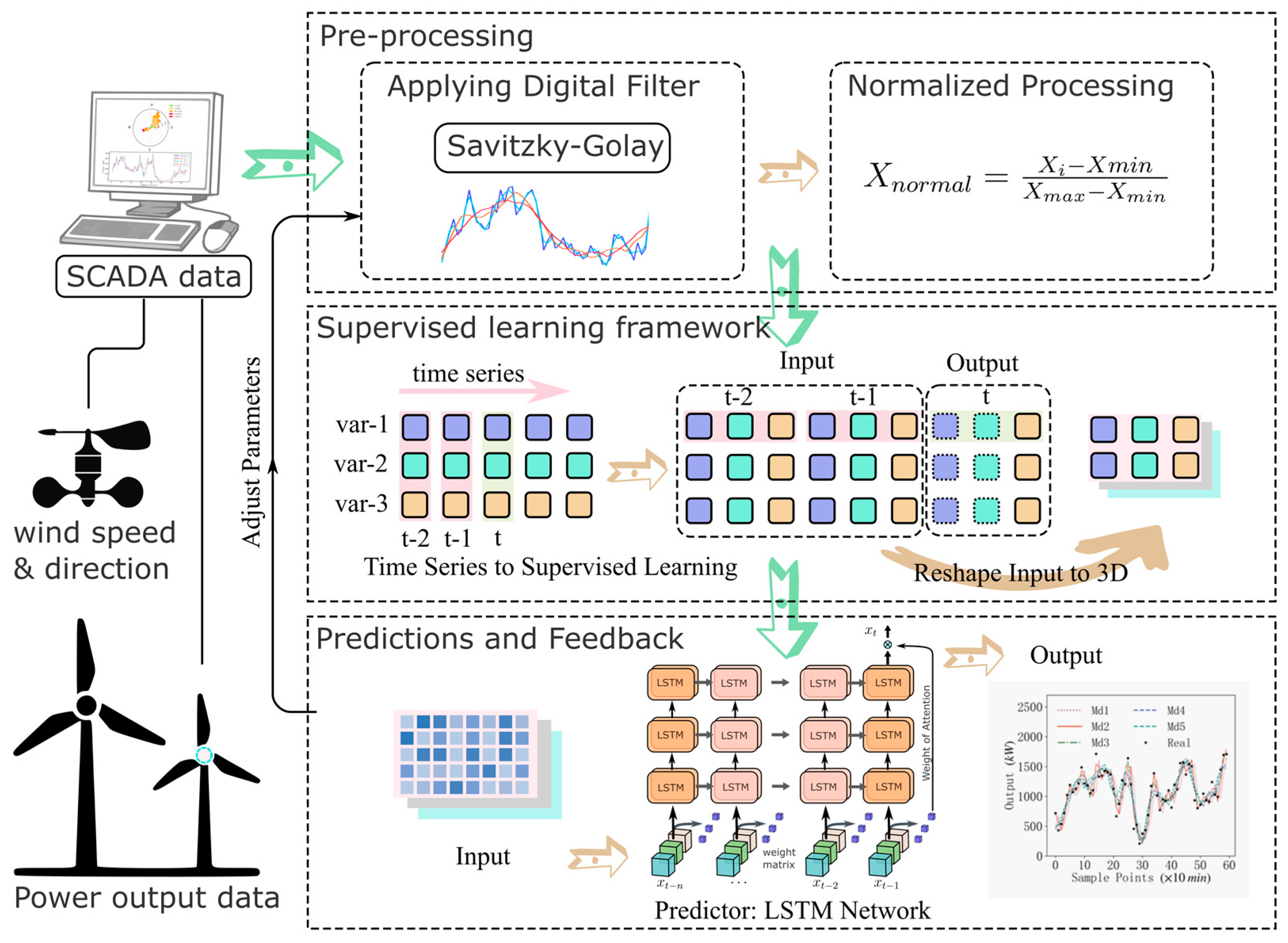

2.1. Overall Data Flow

2.2. Digital Filter
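A Savitzky-Golay (SG) filter fits a polynomial of order N by least squares to each frame of 2M + 1 consecutive samples and replaces the centre sample with the fitted value, so it suppresses noise while reproducing any polynomial trend of order N or lower exactly. The NumPy sketch below derives the weights directly from that definition. It assumes that N and M in the result tables denote the polynomial order and the frame half-width (so Md2, with N = 2 and M = 2, is a 5-sample frame); the function names are illustrative, and fitting one polynomial to the first and last frame for the edge samples is one common choice, not necessarily the authors'.

```python
import numpy as np


def savgol_weights(half_width: int, polyorder: int) -> np.ndarray:
    """Least-squares smoothing weights for a centred frame of 2*half_width + 1 samples."""
    frame = 2 * half_width + 1
    if half_width < 1 or polyorder >= frame:
        raise ValueError("need half_width >= 1 and polyorder < frame length")
    z = np.arange(-half_width, half_width + 1)
    # Column k of the design matrix holds z**k, k = 0..polyorder
    A = np.vander(z, polyorder + 1, increasing=True)
    # Row 0 of the pseudo-inverse maps a frame to the intercept, i.e. the fitted value at z = 0
    return np.linalg.pinv(A)[0]


def savgol_smooth(x, half_width: int, polyorder: int) -> np.ndarray:
    """Smooth a 1-D signal with an SG filter of order `polyorder` and frame 2*half_width + 1."""
    x = np.asarray(x, dtype=float)
    frame = 2 * half_width + 1
    if x.size < frame:
        raise ValueError("signal is shorter than one frame")
    w = savgol_weights(half_width, polyorder)
    y = np.empty_like(x)
    # Interior samples: weighted sum over each centred frame (w reversed so convolve acts as correlation)
    y[half_width:-half_width] = np.convolve(x, w[::-1], mode="valid")
    # Edge samples: evaluate one polynomial fitted to the first / last full frame
    t = np.arange(frame)
    head = np.polyfit(t, x[:frame], polyorder)
    tail = np.polyfit(t, x[-frame:], polyorder)
    y[:half_width] = np.polyval(head, t[:half_width])
    y[-half_width:] = np.polyval(tail, t[-half_width:])
    return y
```

`savgol_weights(2, 2) * 35` is approximately `[-3, 12, 17, 12, -3]`, the classic 5-point quadratic weights. With N = 2 and M = 1 the quadratic passes through all three samples of each frame, so that setting leaves the signal unchanged.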

2.3. Method of Prediction

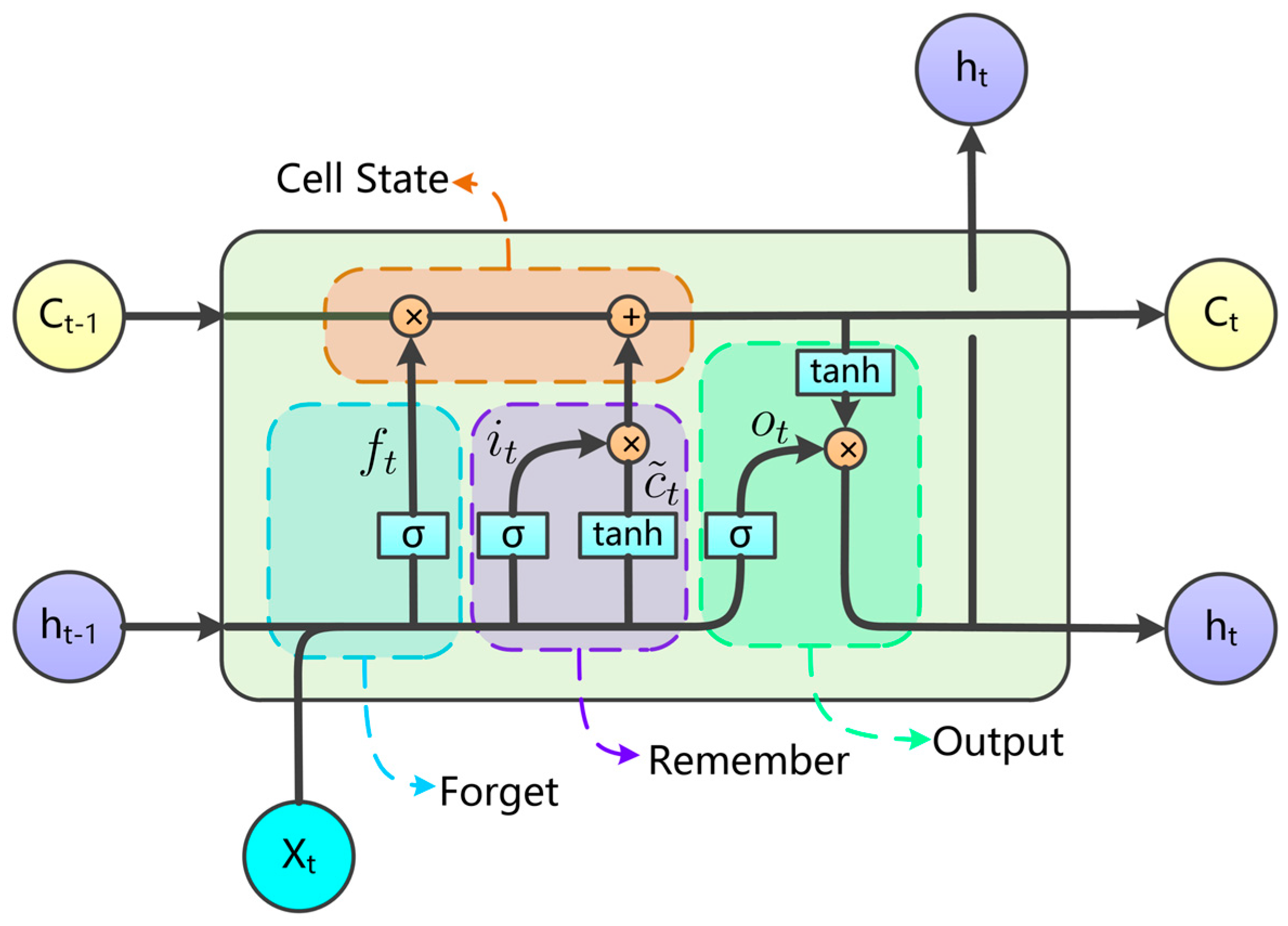

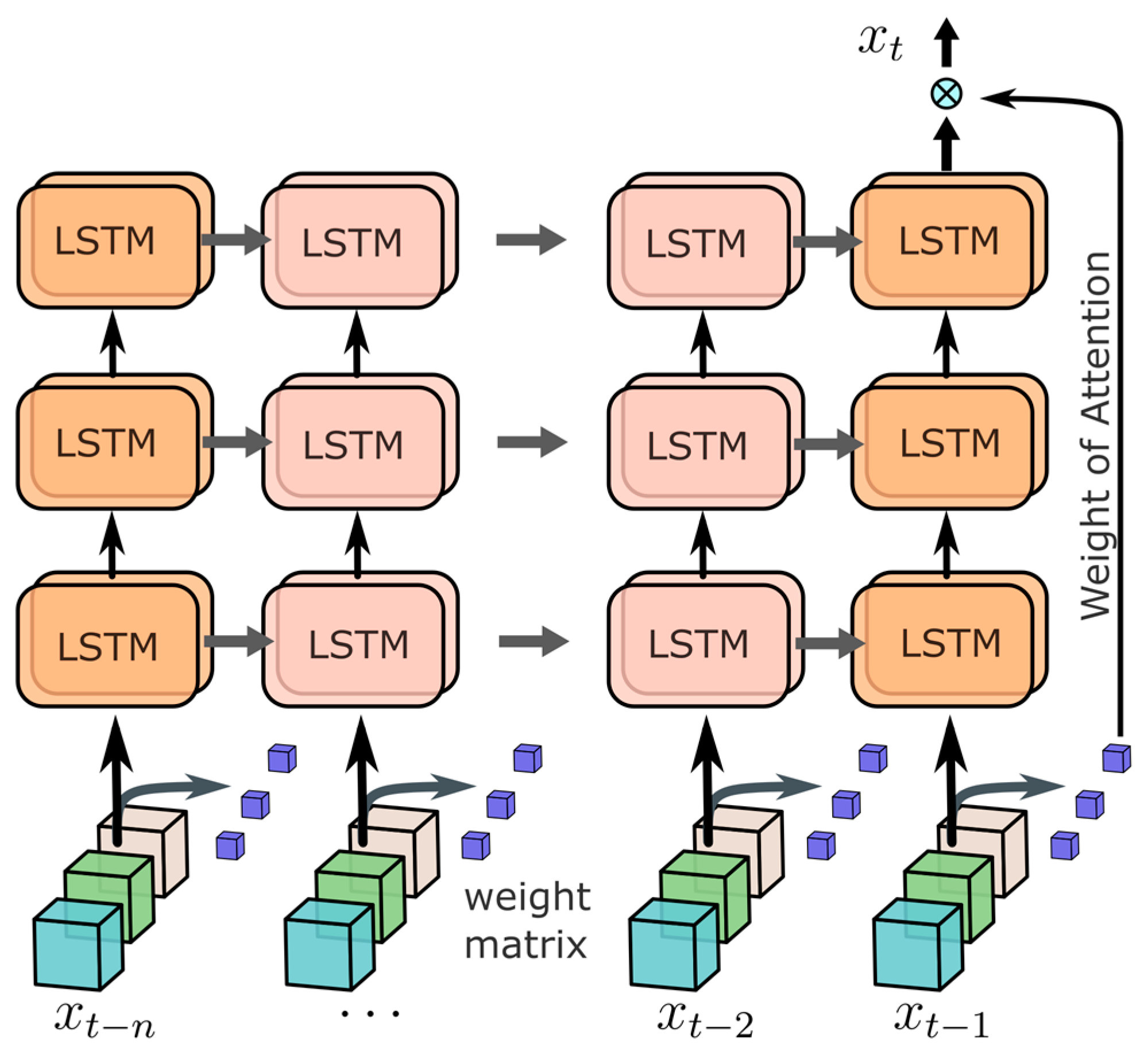

2.3.1. Neural Network Units

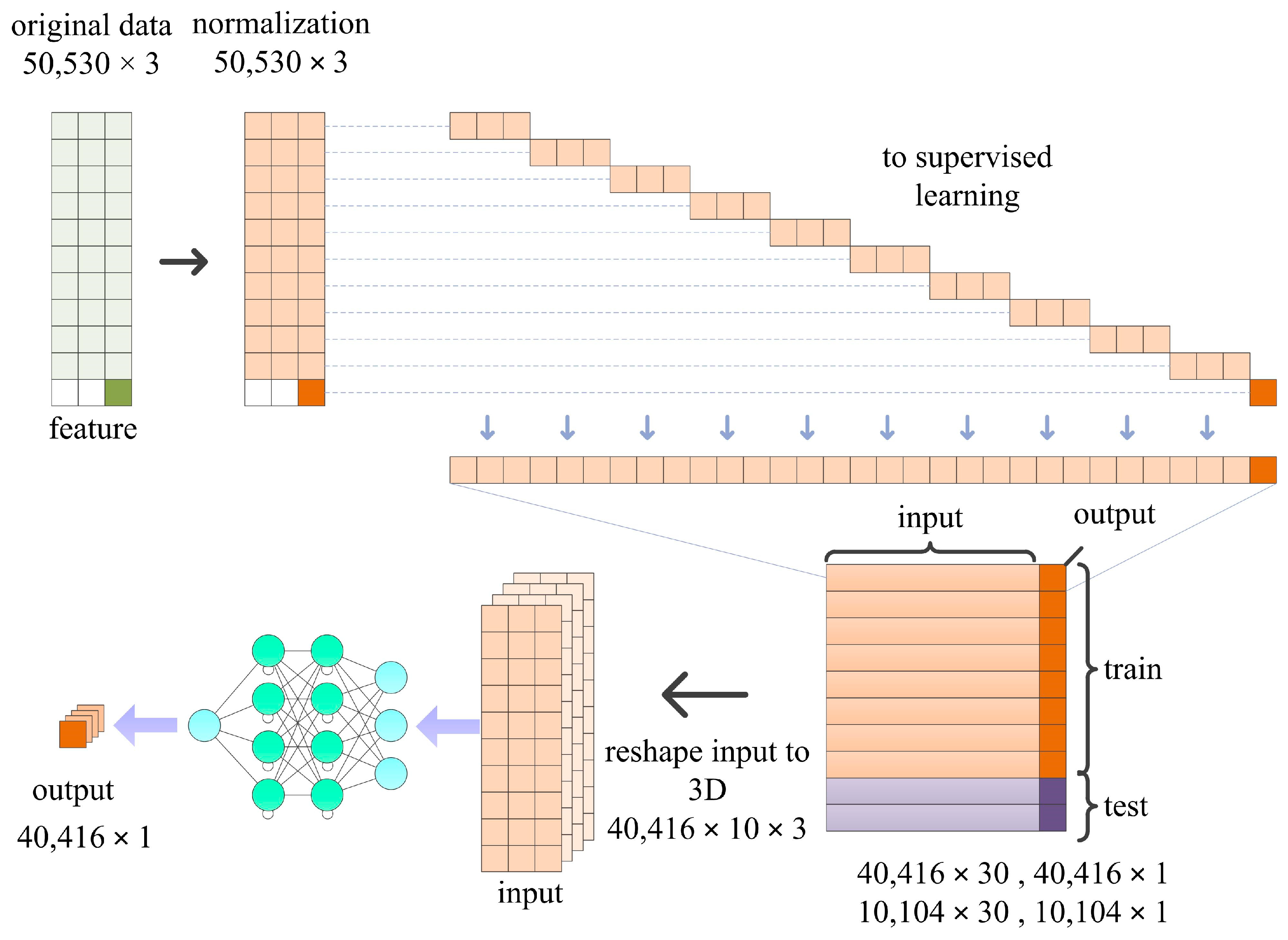
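As a reference for the recurrent units compared in this paper, one time step of the standard LSTM unit (input, forget and output gates plus a candidate cell state) can be sketched in NumPy as below. This is the textbook formulation, assumed rather than taken from the paper; the weight layout and the gate order i, f, g, o are arbitrary choices for the sketch.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One step of a standard LSTM unit.

    Shapes: x_t (d,), h_prev and c_prev (n,), W (d, 4n), U (n, 4n), b (4n,).
    The four gate blocks are stacked in the (arbitrary) order i, f, g, o.
    """
    z = x_t @ W + h_prev @ U + b
    i, f, g, o = np.split(z, 4)
    i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)  # input, forget and output gates
    g = np.tanh(g)                                 # candidate cell state
    c_t = f * c_prev + i * g                       # keep part of the old memory, add new content
    h_t = o * np.tanh(c_t)                         # exposed hidden state
    return h_t, c_t


def lstm_forward(X, W, U, b):
    """Run the unit over a (T, d) sequence and return all hidden states, shape (T, n)."""
    n = U.shape[0]
    h, c = np.zeros(n), np.zeros(n)
    states = []
    for x_t in X:
        h, c = lstm_step(x_t, h, c, W, U, b)
        states.append(h)
    return np.stack(states)
```

With d = 3 inputs and n = 64 units, W, U and b together hold 3 × 256 + 64 × 256 + 256 = 17,408 values, which matches the parameter count listed for lstm_1 in the model summary table.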

2.3.2. Time Series Forecasting to Supervised Learning
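Recasting a time series as a supervised-learning problem usually means sliding a fixed-length window over the record: each window of past samples becomes one input and the sample(s) that follow become its target. The sketch below assumes a look-back of 10 steps over 3 input features, matching the input shape (None, 10, 3) in the model summary table; the function name and the choice of the last column as the power target are hypothetical. Setting `n_out` above 1 produces multi-step targets, e.g. six steps for the 10 to 60 min horizons if the data are sampled every 10 min (an assumption).

```python
import numpy as np


def to_supervised(data, n_in: int = 10, n_out: int = 1, target_col: int = -1):
    """Turn a (T, features) series into (samples, n_in, features) inputs and (samples, n_out) targets."""
    data = np.asarray(data, dtype=float)
    if data.ndim == 1:
        data = data[:, None]  # treat a 1-D series as a single feature
    n_samples = len(data) - n_in - n_out + 1
    if n_samples < 1:
        raise ValueError("series too short for the requested window")
    # Input i covers rows i .. i+n_in-1; its target is the next n_out values of target_col
    X = np.stack([data[i:i + n_in] for i in range(n_samples)])
    y = np.stack([data[i + n_in:i + n_in + n_out, target_col] for i in range(n_samples)])
    return X, y
```

For a 20-row, 3-column record, `to_supervised(series)` returns `X` of shape (10, 10, 3) and `y` of shape (10, 1); with `n_out=6` the shapes become (5, 10, 3) and (5, 6).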

3. Time Series Forecasting Experiments

3.1. Data Description

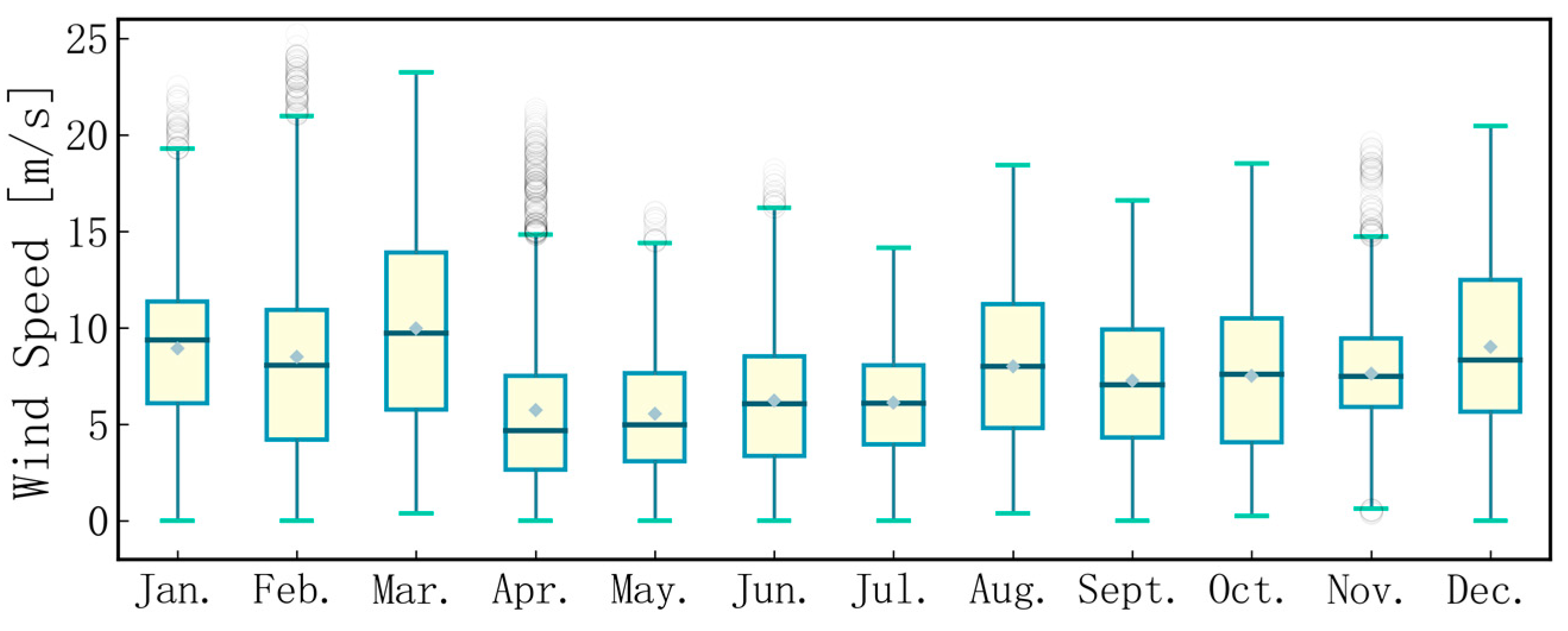

3.1.1. General Overview

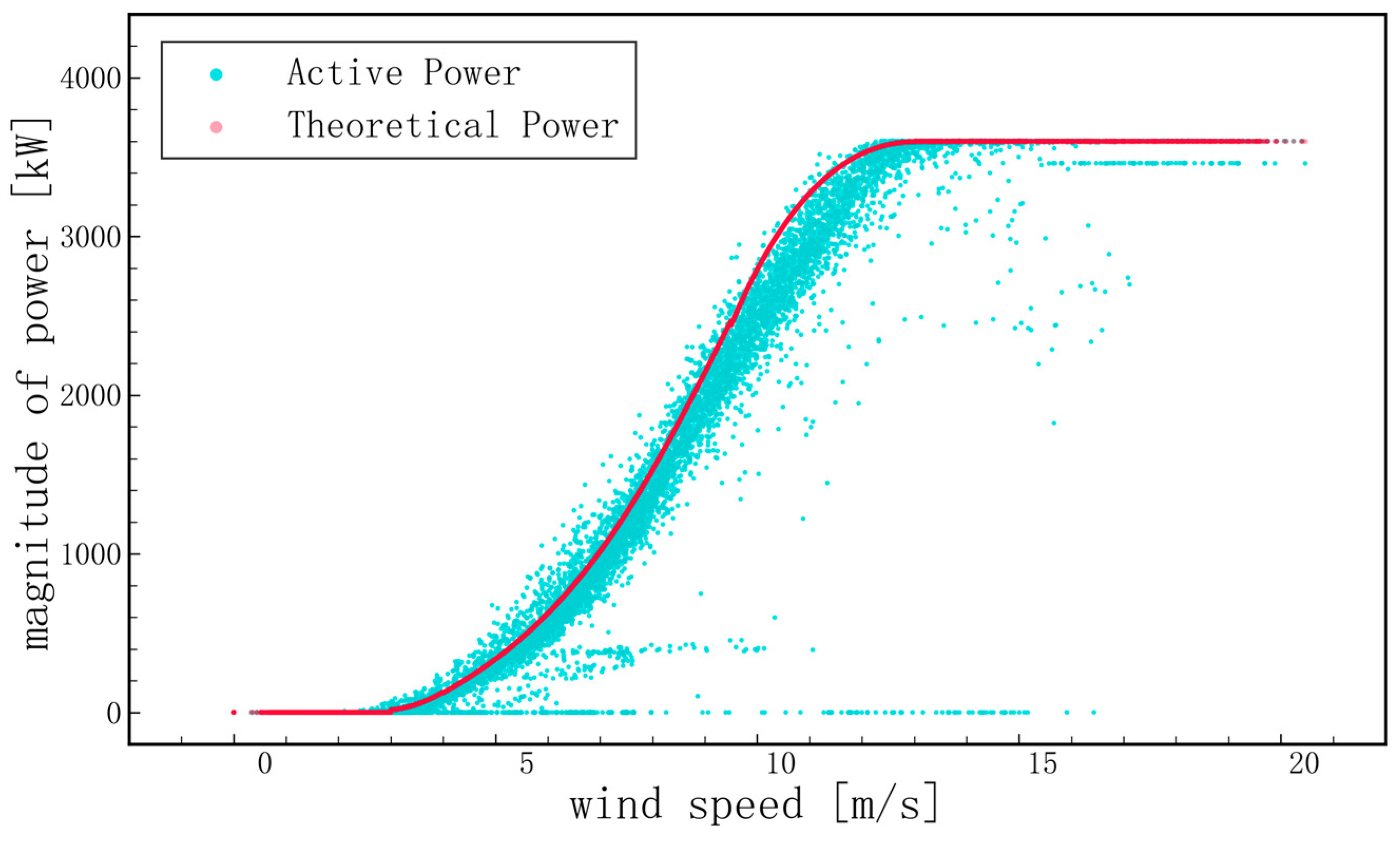

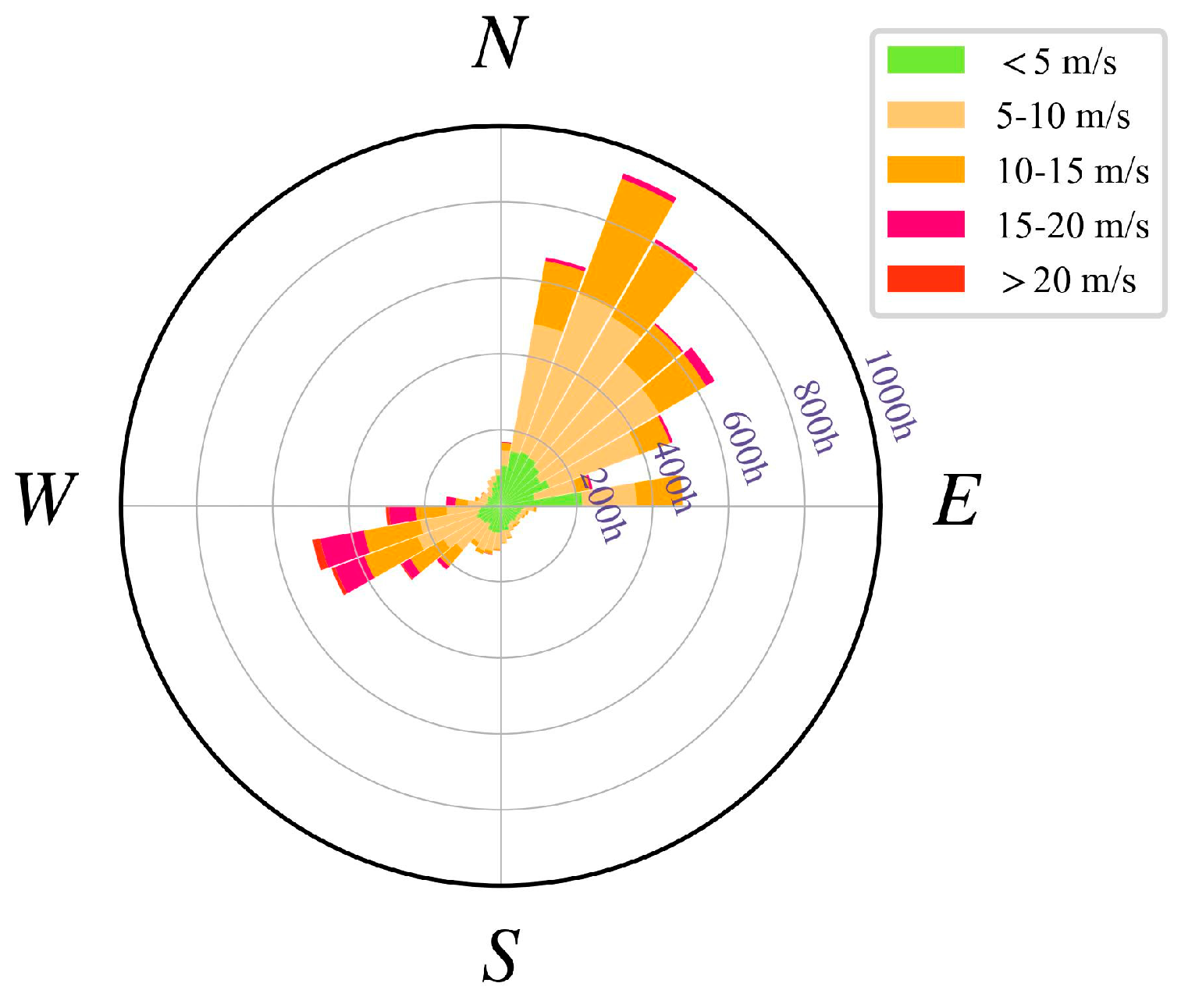

3.1.2. Wind Speed and Power

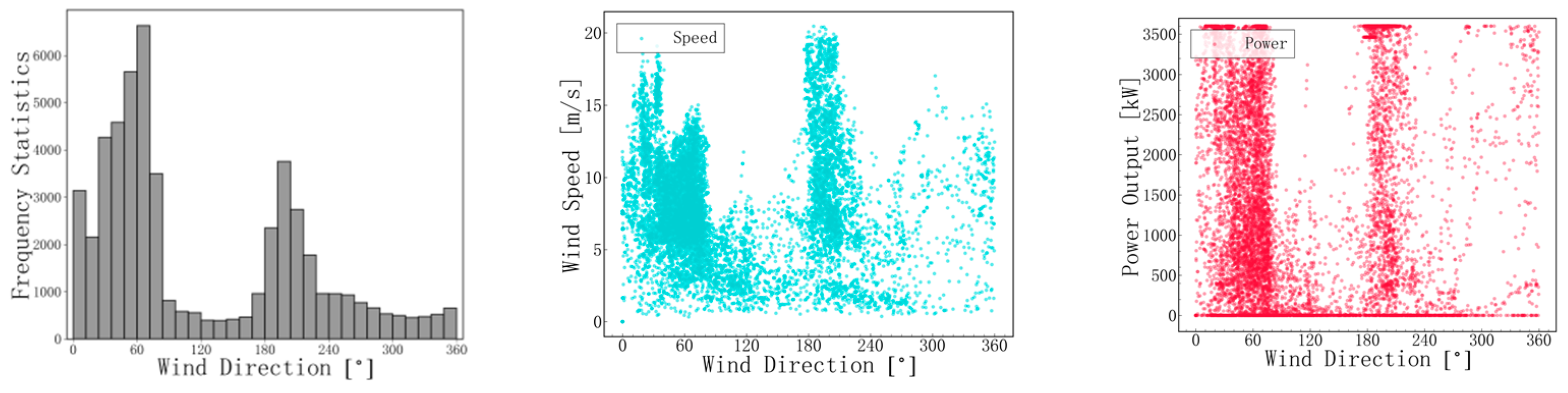

3.1.3. Effect of Wind Direction

3.2. Evaluation Standard

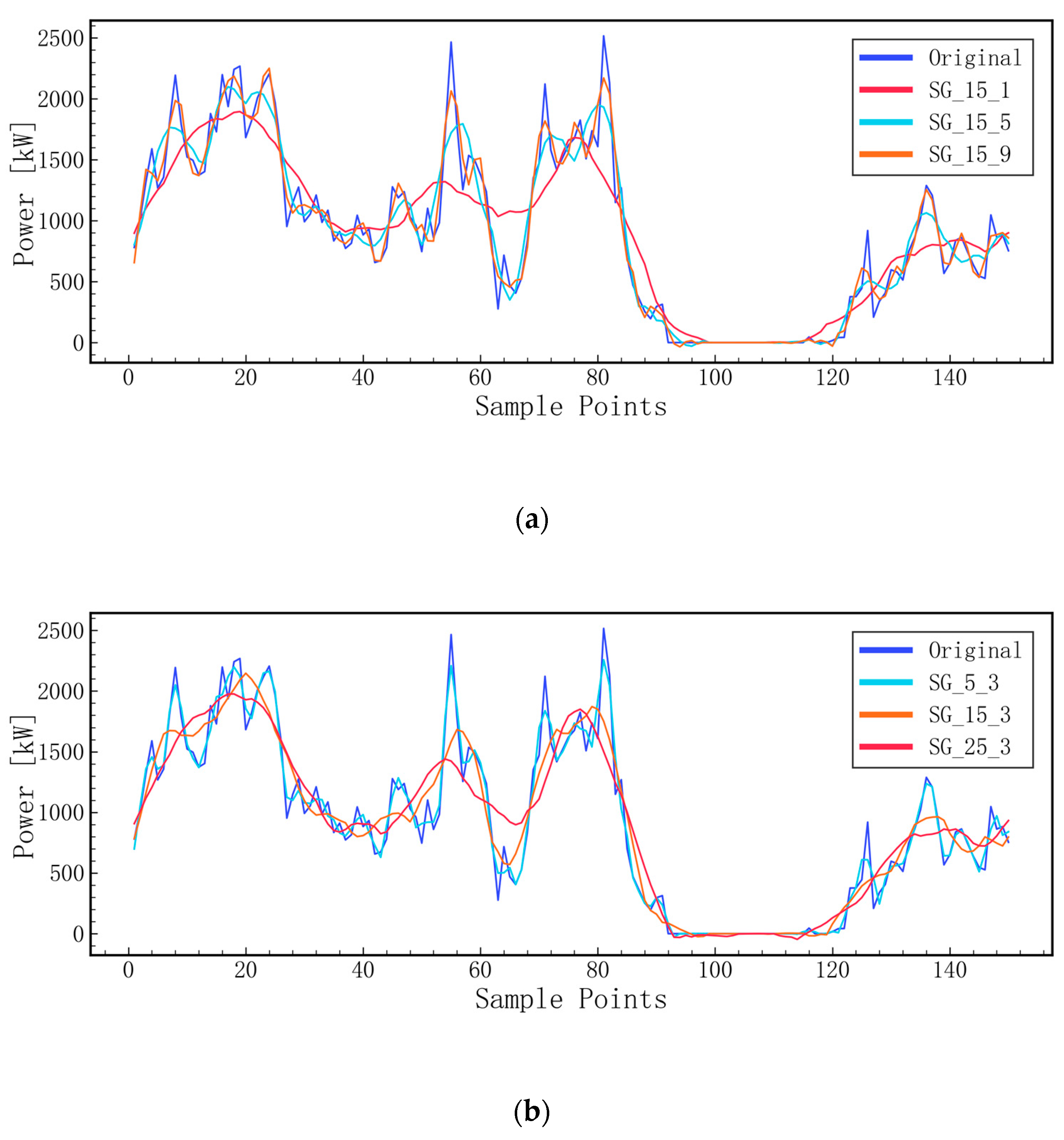
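The result tables report MSE, RMSE, MAE, SMAPE and R², and the multi-step table adds normalized RMSE and MAE (nRMSE, nMAE). Below is a sketch of these metrics using their common textbook definitions. Two points are assumptions: SMAPE has several published variants (this one divides by the mean of |y| and |ŷ| and counts 0/0 terms, such as idle-turbine samples, as zero error), and normalizing by the 0–3500 kW output range is inferred from the conclusions rather than stated for the nRMSE and nMAE columns.

```python
import numpy as np


def evaluate(y_true, y_pred, capacity: float = 3500.0) -> dict:
    """Error metrics for a forecast; `capacity` normalizes RMSE/MAE (assumed 0-3500 kW range)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_pred - y_true
    mse = float(np.mean(err ** 2))
    rmse = float(np.sqrt(mse))
    mae = float(np.mean(np.abs(err)))
    # Symmetric MAPE; terms where both values are 0 count as zero error instead of 0/0
    denom = (np.abs(y_true) + np.abs(y_pred)) / 2.0
    ratio = np.divide(np.abs(err), denom, out=np.zeros_like(denom), where=denom > 0)
    smape = float(100.0 * np.mean(ratio))
    # Coefficient of determination: 1 - SS_res / SS_tot
    r2 = float(1.0 - np.sum(err ** 2) / np.sum((y_true - y_true.mean()) ** 2))
    return {"MSE": mse, "RMSE": rmse, "MAE": mae, "SMAPE/%": smape, "R2": r2,
            "nRMSE": rmse / capacity, "nMAE": mae / capacity}
```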

3.3. Pre-Processing of Signals

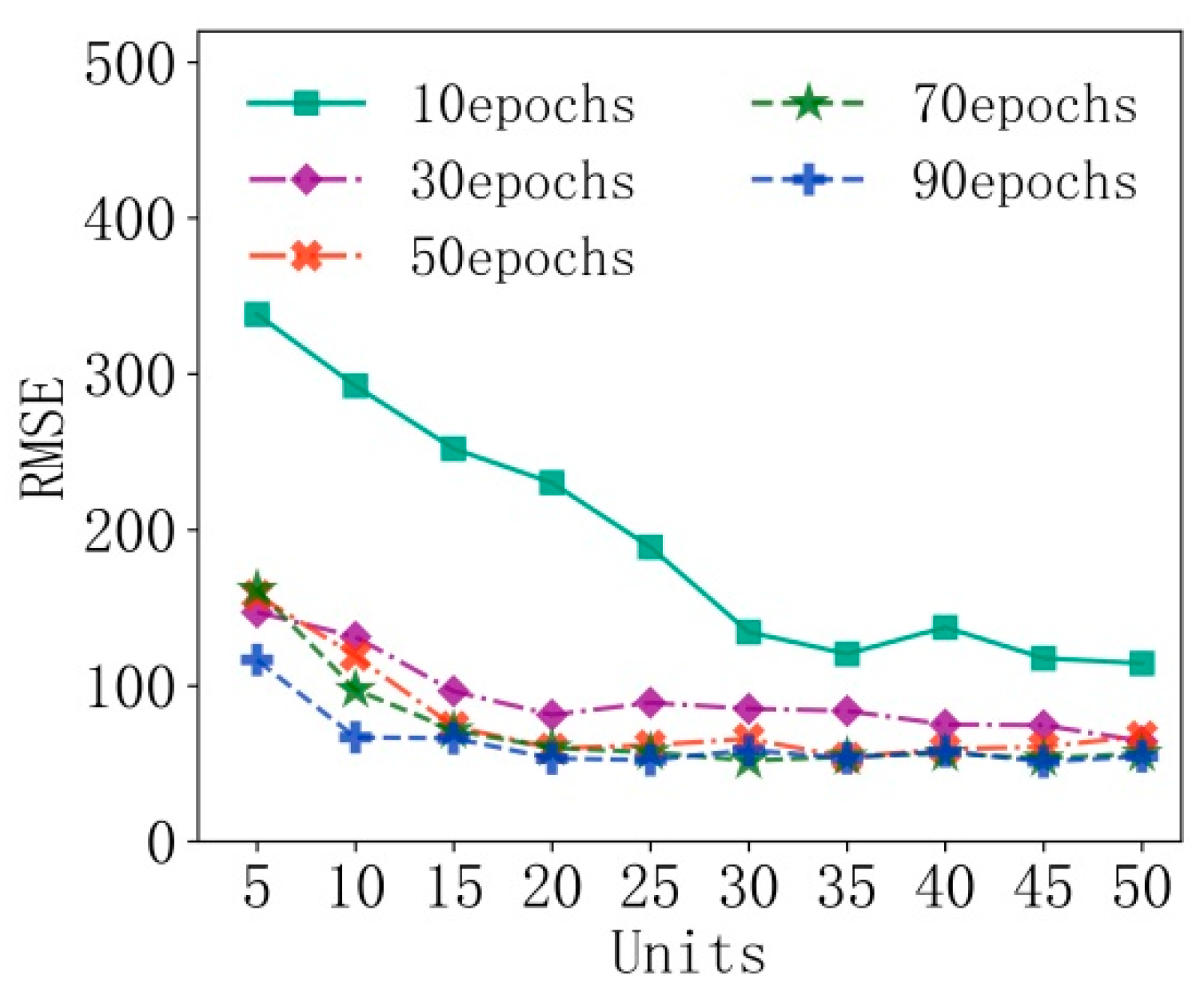

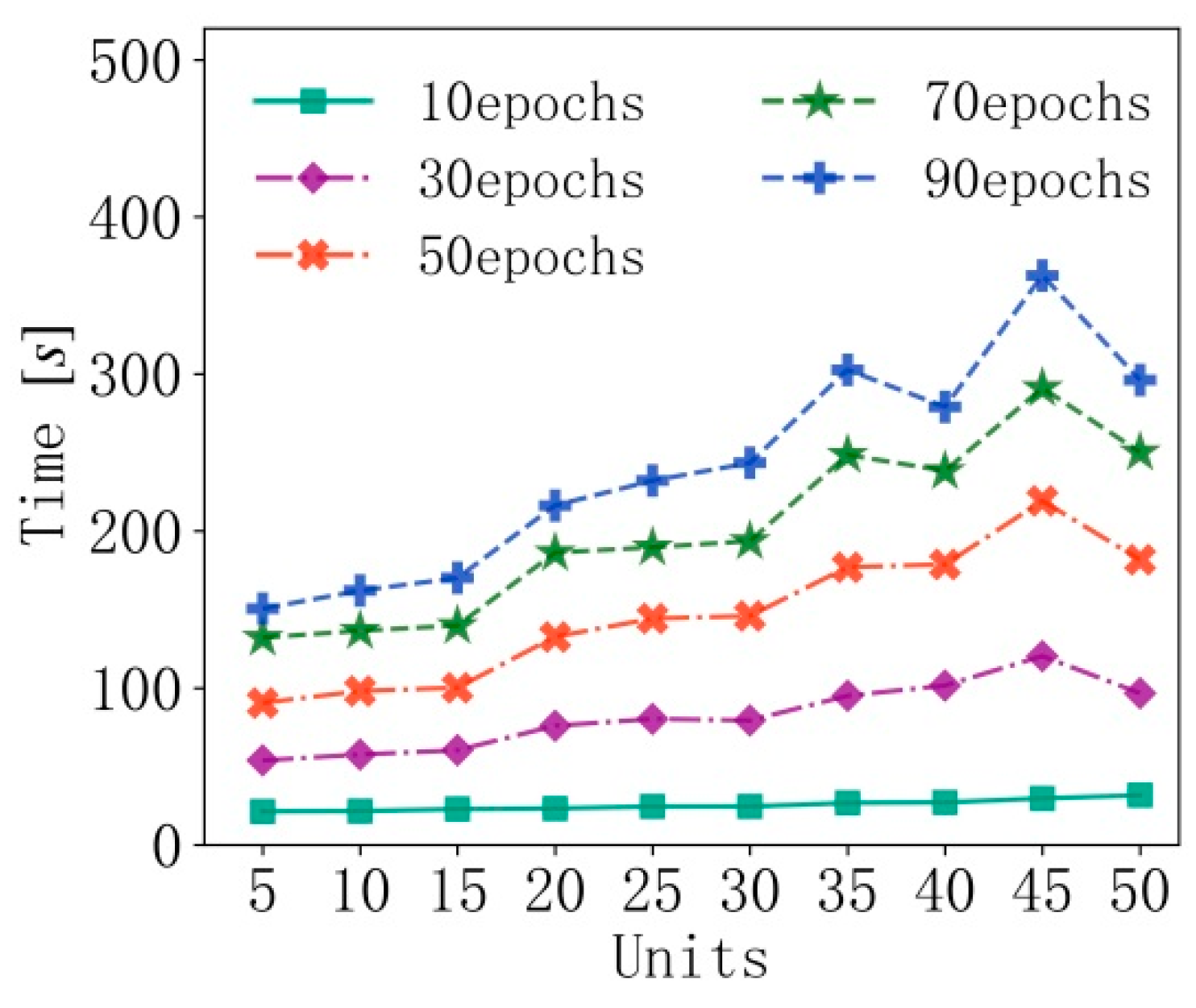
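The first contribution states that only the training-set output was smoothed, so the network learns from filtered power while evaluation still uses measured values. A minimal sketch of that split, assuming a chronological 80/20 train/test split (the ratio and the function names are hypothetical) and the second-order, 5-sample SG filter that the conclusions identify as best, hard-coded with its classic weights. For brevity the two samples at each end are left unfiltered, which is a simplification.

```python
import numpy as np

# Classic 5-point quadratic Savitzky-Golay weights (polynomial order 2, frame length 5)
SG5_WEIGHTS = np.array([-3.0, 12.0, 17.0, 12.0, -3.0]) / 35.0


def smooth_sg5(y) -> np.ndarray:
    """Apply the 5-point quadratic SG filter; the two samples at each end are left as-is."""
    y = np.asarray(y, dtype=float)
    if y.size < 5:
        raise ValueError("need at least 5 samples")
    out = y.copy()
    # The kernel is symmetric, so convolution equals the sliding weighted sum
    out[2:-2] = np.convolve(y, SG5_WEIGHTS, mode="valid")
    return out


def split_and_smooth(X, y, train_frac: float = 0.8):
    """Chronological split; only the training targets are smoothed, test targets stay raw."""
    n_train = int(len(y) * train_frac)
    X_train, X_test = X[:n_train], X[n_train:]
    y_train = smooth_sg5(y[:n_train])              # the network is fitted to filtered power
    y_test = np.asarray(y[n_train:], dtype=float)  # evaluation uses the measured power
    return X_train, y_train, X_test, y_test
```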

3.4. Improved LSTM Network Model

3.5. Presentation of Prediction Results and Analysis

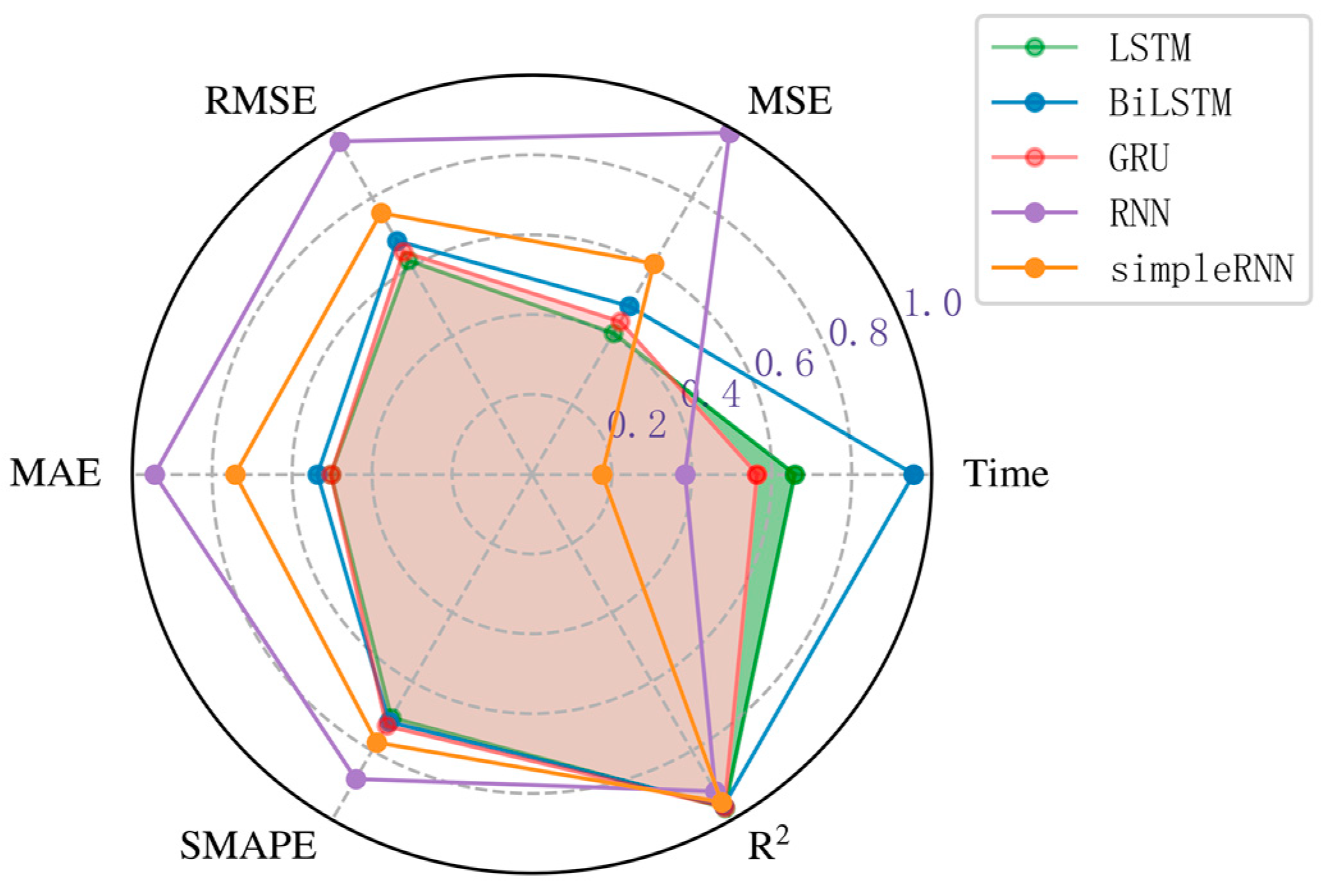

3.5.1. Comprehensive Comparison of Models

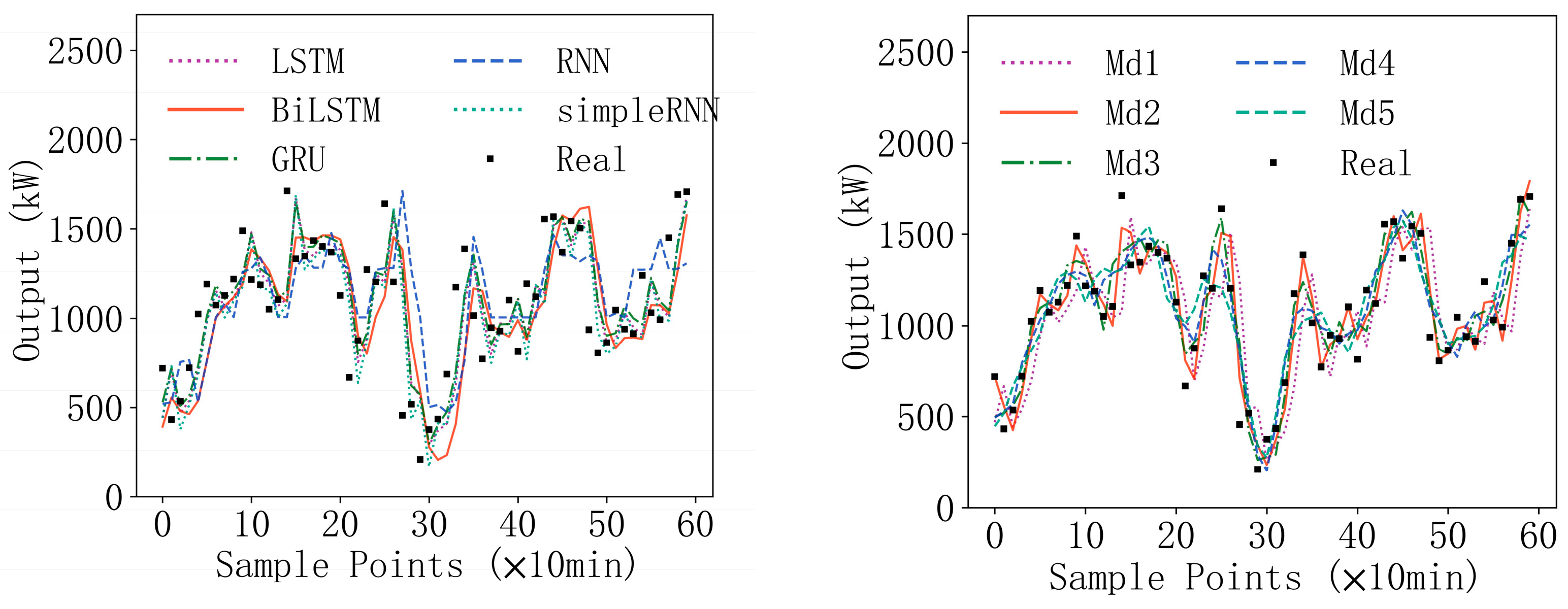

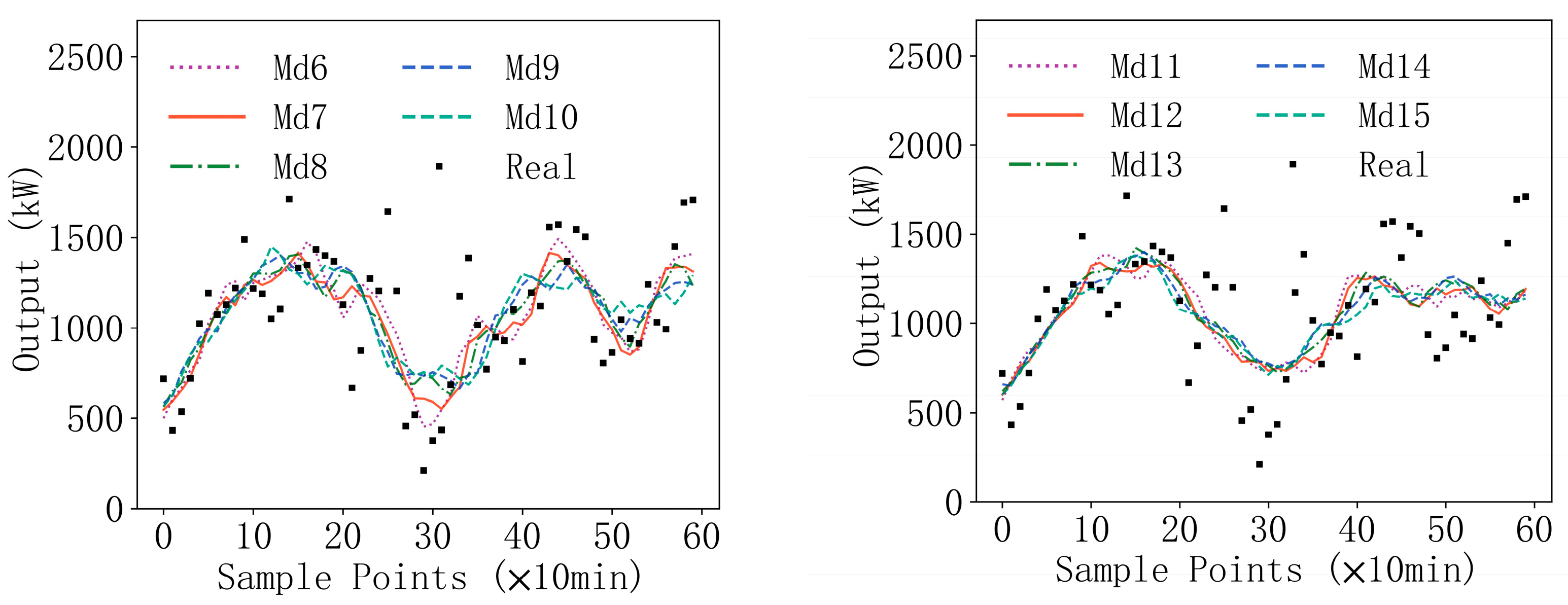

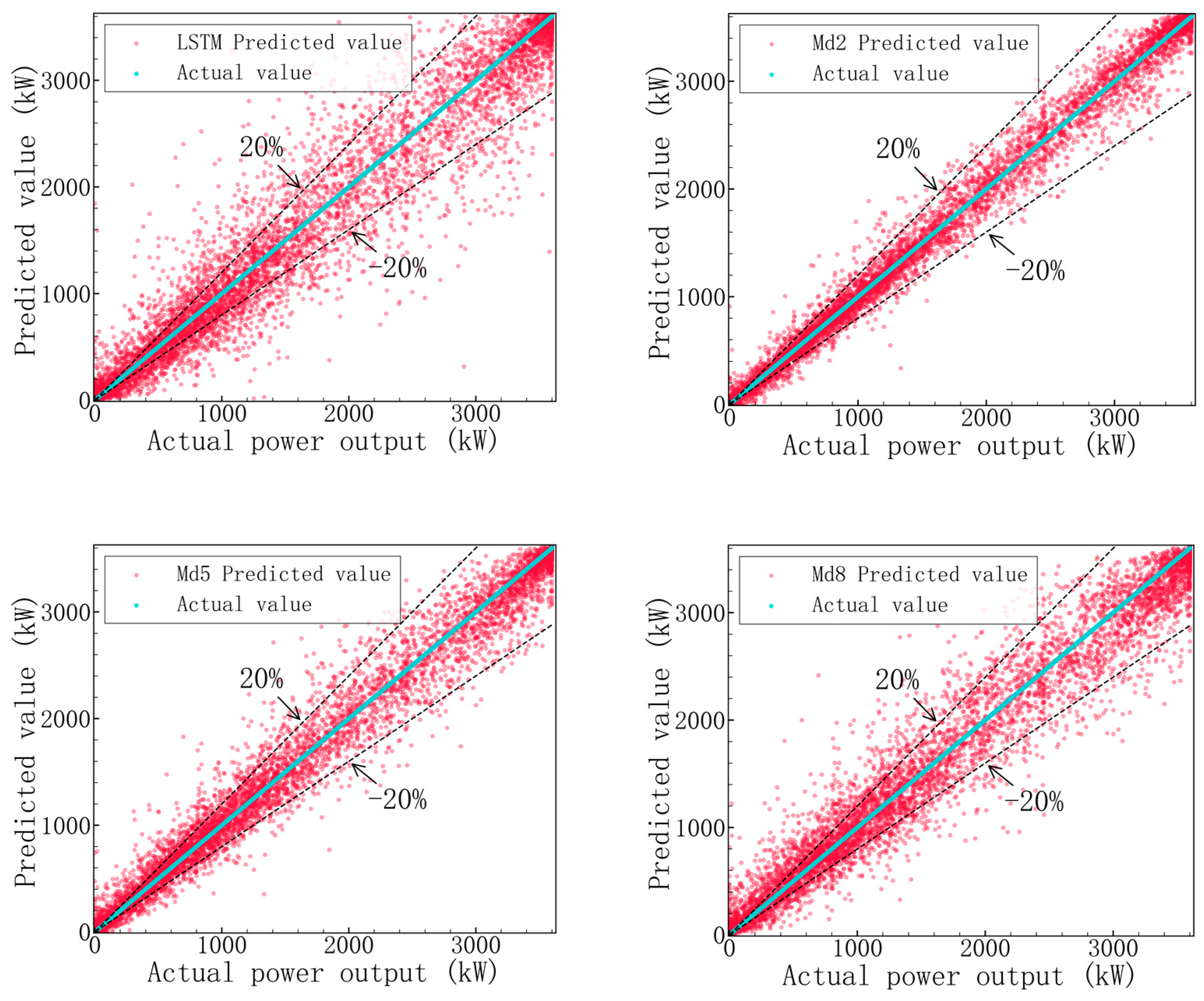

3.5.2. Single-Step Prediction

3.5.3. Multi-Step Prediction

4. Summary and Conclusions

- For the second-order SG filter, a frame length of 5 gave the greatest improvement in signal quality.

- Training on the filtered data reduced the single-step RMSE from 247.40 to 115.52 kW, a reduction equal to about 3.8% of the output range (0–3500 kW). The method improves the model’s generalization performance on the test set.

- In multi-step prediction, this method has the lowest nRMSE at every step, with values of 0.0485, 0.0772, 0.0932, 0.1092, 0.1168, and 0.1299 for horizons of 10 to 60 min. The coefficient of determination (R²) remains 0.91 at the fifth step (50 min). The method is effective in improving the timeliness of the prediction.
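The 3.8% figure in the second conclusion expresses the RMSE reduction as a share of the 0–3500 kW output range, not relative to the baseline RMSE. The arithmetic, using the LSTM and Md2 values from the single-step tables:

```python
rmse_lstm = 247.40     # plain LSTM, single-step test RMSE (kW)
rmse_sg_lstm = 115.52  # LSTM trained on SG-filtered targets (Md2)
output_range = 3500.0  # 0-3500 kW

drop = rmse_lstm - rmse_sg_lstm          # absolute reduction in kW
share_of_range = drop / output_range     # the "3.8%" in the conclusions
relative_drop = drop / rmse_lstm         # reduction relative to the baseline RMSE
print(f"{drop:.2f} kW, {share_of_range:.1%} of range, {relative_drop:.1%} relative")
```

This prints `131.88 kW, 3.8% of range, 53.3% relative`, so the same improvement is roughly a halving of the baseline RMSE.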

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Layer (Type) | Output Shape | Param # | Connected to |
|---|---|---|---|
| input_1 (InputLayer) | (None, 10, 3) | 0 | |
| lstm_1 (LSTM) | (None, 10, 64) | 17,408 | input_1[0][0] |
| dropout_1 (Dropout) | (None, 10, 64) | 0 | lstm_1[0][0] |
| lstm_2 (LSTM) | (None, 10, 3) | 816 | dropout_1[0][0] |
| dense_1 (Dense) | (None, 10, 3) | 12 | input_1[0][0] |
| dropout_2 (Dropout) | (None, 10, 3) | 0 | lstm_2[0][0] |
| attention_vec (Permute) | (None, 10, 3) | 0 | dense_1[0][0] |
| multiply_1 (Multiply) | (None, 10, 3) | 0 | dropout_2[0][0], attention_vec[0][0] |
| flatten_1 (Flatten) | (None, 30) | 0 | multiply_1[0][0] |
| dense_2 (Dense) | (None, 1) | 31 | flatten_1[0][0] |
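Reading the "Connected to" column, the network passes the 10 × 3 input through two stacked LSTM layers (64 and 3 units, each followed by dropout), while a Dense layer applied to the raw input produces an attention vector that is multiplied element-wise with the second LSTM's output before flattening into a single-output Dense layer. The listed parameter counts are consistent with the Keras convention of one bias vector per LSTM gate, as this plain-Python check shows (the helper names are illustrative). PyTorch's `nn.LSTM` keeps two bias vectors, so the same 64-unit layer would report 256 more parameters there.

```python
def lstm_params(input_dim: int, units: int) -> int:
    """Keras-style LSTM count: 4 gates x (input kernel + recurrent kernel + one bias)."""
    return 4 * (units * (input_dim + units) + units)


def dense_params(input_dim: int, units: int) -> int:
    """Weights plus one bias per output unit."""
    return input_dim * units + units


# Trainable layers of the model summary: (name, parameter count)
layers = [
    ("lstm_1", lstm_params(3, 64)),    # 3 input features -> 64 units
    ("lstm_2", lstm_params(64, 3)),    # 64 -> 3 units
    ("dense_1", dense_params(3, 3)),   # attention scores computed from the raw inputs
    ("dense_2", dense_params(30, 1)),  # flattened (10 x 3) -> one power value
]
for name, count in layers:
    print(f"{name}: {count:,}")
print(f"total: {sum(c for _, c in layers):,}")
```

The four counts reproduce the table (17,408, 816, 12 and 31), for a total of 18,267 trainable parameters.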

| Model | MSE/kW² | RMSE/kW | MAE/kW | SMAPE/% | R² | Time/s |
|---|---|---|---|---|---|---|
| LSTM | 61,211.25 | 247.40 | 150.92 | 56.34 | 0.96 | 5262 |
| BiLSTM | 72,940.68 | 270.07 | 161.03 | 57.31 | 0.95 | 7639 |
| GRU | 66,200.80 | 257.29 | 150.82 | 58.27 | 0.96 | 4503 |
| RNN | 148,230.00 | 385.00 | 283.30 | 70.56 | 0.91 | 3064 |
| simpleRNN | 91,431.48 | 302.37 | 222.81 | 62.09 | 0.94 | 1402 |

| Model | RMSE/kW | MAE/kW | SMAPE/% | R² |
|---|---|---|---|---|
| LSTM | 247.40 | 150.92 | 56.34 | 0.96 |
| BiLSTM | 270.07 | 161.03 | 57.31 | 0.95 |
| GRU | 257.29 | 150.82 | 58.27 | 0.96 |
| RNN | 385.00 | 283.30 | 70.56 | 0.91 |
| simpleRNN | 302.37 | 222.81 | 62.09 | 0.94 |
| Md1 (N = 2, M = 1) | 281.25 | 169.78 | 39.60 | 0.96 |
| Md2 (N = 2, M = 2) | 115.52 | 70.76 | 32.27 | 0.99 |
| Md3 (N = 2, M = 3) | 153.50 | 104.54 | 36.81 | 0.99 |
| Md4 (N = 2, M = 4) | 170.99 | 103.11 | 34.70 | 0.98 |
| Md5 (N = 2, M = 5) | 187.61 | 116.96 | 36.09 | 0.98 |
| Md6 (N = 2, M = 6) | 202.78 | 122.27 | 38.39 | 0.98 |
| Md7 (N = 2, M = 7) | 215.09 | 130.58 | 38.24 | 0.97 |
| Md8 (N = 2, M = 8) | 226.97 | 139.54 | 37.13 | 0.97 |
| Md9 (N = 2, M = 9) | 236.80 | 144.91 | 38.96 | 0.97 |
| Md10 (N = 2, M = 10) | 248.04 | 151.02 | 40.06 | 0.97 |
| Md11 (N = 2, M = 11) | 258.54 | 160.96 | 39.03 | 0.96 |
| Md12 (N = 2, M = 12) | 266.47 | 161.88 | 40.73 | 0.96 |
| Md13 (N = 2, M = 13) | 273.91 | 169.60 | 40.80 | 0.96 |
| Md14 (N = 2, M = 14) | 282.26 | 182.46 | 40.31 | 0.95 |
| Md15 (N = 2, M = 15) | 285.25 | 175.56 | 40.97 | 0.95 |
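The conclusion that a frame length of 5 works best follows from the Md rows: the lowest RMSE occurs at M = 2, and if M is taken to be the frame half-width (frame length 2M + 1, an assumption consistent with that conclusion) this corresponds to 5 samples. The same rows show which settings beat the unfiltered LSTM baseline:

```python
# RMSE (kW) of the SG-filtered models Md1..Md15 (N = 2, M = 1..15) from the table above
rmse_by_m = [281.25, 115.52, 153.50, 170.99, 187.61, 202.78, 215.09, 226.97,
             236.80, 248.04, 258.54, 266.47, 273.91, 282.26, 285.25]
rmse_unfiltered_lstm = 247.40

best_m = min(range(1, len(rmse_by_m) + 1), key=lambda m: rmse_by_m[m - 1])
frame_length = 2 * best_m + 1  # assumption: M is the half-width of the frame
beats_baseline = [m for m, r in enumerate(rmse_by_m, start=1) if r < rmse_unfiltered_lstm]
print(best_m, frame_length, beats_baseline)
```

It reports M = 2 and a frame length of 5, and shows that M = 2 to 9 all beat the baseline RMSE of 247.40 kW, while M = 1 and M ≥ 10 do not.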

| Methods | Error | 10 min | 20 min | 30 min | 40 min | 50 min | 60 min |
|---|---|---|---|---|---|---|---|
| Linear Regression | nRMSE | 0.0614 | 0.0856 | 0.1014 | 0.1133 | 0.1236 | 0.1326 |
| | nMAE | 0.0358 | 0.0523 | 0.0632 | 0.0722 | 0.0800 | 0.0868 |
| | R² | 0.9727 | 0.9469 | 0.9255 | 0.9071 | 0.8894 | 0.8728 |
| K-Neighbors | nRMSE | 0.0789 | 0.1038 | 0.1220 | 0.1365 | 0.1498 | 0.1607 |
| | nMAE | 0.0469 | 0.0629 | 0.0746 | 0.0839 | 0.0928 | 0.1002 |
| | R² | 0.9550 | 0.9220 | 0.8922 | 0.8652 | 0.8375 | 0.8131 |
| Ridge | nRMSE | 0.0614 | 0.0856 | 0.1014 | 0.1133 | 0.1236 | 0.1325 |
| | nMAE | 0.0359 | 0.0523 | 0.0632 | 0.0722 | 0.0800 | 0.0868 |
| | R² | 0.9727 | 0.9469 | 0.9255 | 0.9071 | 0.8894 | 0.8728 |
| Random Forest | nRMSE | 0.0667 | 0.0924 | 0.1103 | 0.1239 | 0.1336 | 0.1445 |
| | nMAE | 0.0395 | 0.0571 | 0.0700 | 0.0798 | 0.0872 | 0.0958 |
| | R² | 0.9678 | 0.9382 | 0.9119 | 0.8888 | 0.8709 | 0.8489 |
| XGBoost | nRMSE | 0.0672 | 0.0946 | 0.1108 | 0.1245 | 0.1341 | 0.1457 |
| | nMAE | 0.0385 | 0.0557 | 0.0669 | 0.0765 | 0.0832 | 0.0914 |
| | R² | 0.9673 | 0.9352 | 0.9111 | 0.8878 | 0.8699 | 0.8464 |
| AdaBoost | nRMSE | 0.1545 | 0.1897 | 0.1963 | 0.2015 | 0.1977 | 0.1898 |
| | nMAE | 0.1320 | 0.1593 | 0.1638 | 0.1713 | 0.1648 | 0.1602 |
| | R² | 0.8272 | 0.7396 | 0.7211 | 0.7062 | 0.7171 | 0.7393 |
| SVR | nRMSE | 0.0767 | 0.0981 | 0.1134 | 0.1245 | 0.1340 | 0.1426 |
| | nMAE | 0.0606 | 0.0754 | 0.0861 | 0.0934 | 0.0997 | 0.1057 |
| | R² | 0.9574 | 0.9303 | 0.9069 | 0.8878 | 0.8700 | 0.8528 |
| MLP | nRMSE | 0.0631 | 0.0905 | 0.1033 | 0.1225 | 0.1260 | 0.1350 |
| | nMAE | 0.0378 | 0.0586 | 0.0633 | 0.0839 | 0.0790 | 0.0886 |
| | R² | 0.9712 | 0.9408 | 0.9228 | 0.8913 | 0.8850 | 0.8680 |
| RNN | nRMSE | 0.0719 | 0.1037 | 0.1178 | 0.1179 | 0.1306 | 0.1499 |
| | nMAE | 0.0466 | 0.0713 | 0.0843 | 0.0797 | 0.0901 | 0.1142 |
| | R² | 0.9626 | 0.9222 | 0.8995 | 0.8994 | 0.8766 | 0.8373 |
| LSTM | nRMSE | 0.0636 | 0.0878 | 0.1031 | 0.1154 | 0.1267 | 0.1366 |
| | nMAE | 0.0413 | 0.0561 | 0.0633 | 0.0741 | 0.0799 | 0.0853 |
| | R² | 0.9707 | 0.9442 | 0.9231 | 0.9037 | 0.8839 | 0.8650 |
| Seq2Seq | nRMSE | 0.1578 | 0.1706 | 0.1238 | 0.1426 | 0.1586 | 0.1608 |
| | nMAE | 0.1208 | 0.1288 | 0.0854 | 0.0894 | 0.1161 | 0.1226 |
| | R² | 0.8198 | 0.7894 | 0.8891 | 0.8527 | 0.8179 | 0.8128 |
| SG-LSTM | nRMSE | 0.0485 | 0.0772 | 0.0932 | 0.1092 | 0.1168 | 0.1299 |
| | nMAE | 0.0297 | 0.0482 | 0.0578 | 0.0741 | 0.0751 | 0.0872 |
| | R² | 0.9828 | 0.9563 | 0.9363 | 0.9126 | 0.9082 | 0.8763 |
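The claim that SG-LSTM has the smallest error at every step refers to nRMSE. The check below ranks the nRMSE rows of the multi-step table per horizon and reports how far SG-LSTM sits below the plain LSTM (variable names are illustrative):

```python
# nRMSE per horizon (10-60 min), copied from the multi-step table
nrmse = {
    "Linear Regression": [0.0614, 0.0856, 0.1014, 0.1133, 0.1236, 0.1326],
    "K-Neighbors":       [0.0789, 0.1038, 0.1220, 0.1365, 0.1498, 0.1607],
    "Ridge":             [0.0614, 0.0856, 0.1014, 0.1133, 0.1236, 0.1325],
    "Random Forest":     [0.0667, 0.0924, 0.1103, 0.1239, 0.1336, 0.1445],
    "XGBoost":           [0.0672, 0.0946, 0.1108, 0.1245, 0.1341, 0.1457],
    "AdaBoost":          [0.1545, 0.1897, 0.1963, 0.2015, 0.1977, 0.1898],
    "SVR":               [0.0767, 0.0981, 0.1134, 0.1245, 0.1340, 0.1426],
    "MLP":               [0.0631, 0.0905, 0.1033, 0.1225, 0.1260, 0.1350],
    "RNN":               [0.0719, 0.1037, 0.1178, 0.1179, 0.1306, 0.1499],
    "LSTM":              [0.0636, 0.0878, 0.1031, 0.1154, 0.1267, 0.1366],
    "Seq2Seq":           [0.1578, 0.1706, 0.1238, 0.1426, 0.1586, 0.1608],
    "SG-LSTM":           [0.0485, 0.0772, 0.0932, 0.1092, 0.1168, 0.1299],
}
horizons = [10, 20, 30, 40, 50, 60]

# Method with the lowest nRMSE at each horizon
lowest = [min(nrmse, key=lambda name: nrmse[name][k]) for k in range(len(horizons))]
# Relative nRMSE reduction of SG-LSTM versus the unfiltered LSTM
gains = [1 - nrmse["SG-LSTM"][k] / nrmse["LSTM"][k] for k in range(len(horizons))]
for minutes, best, gain in zip(horizons, lowest, gains):
    print(f"{minutes} min: lowest nRMSE = {best}, SG-LSTM vs LSTM: {gain:.1%} lower")
```

Every horizon reports SG-LSTM, and its advantage over the plain LSTM falls, though not monotonically, from about 23.7% at 10 min to about 4.9% at 60 min.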

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, S.; Zhang, Y.; Du, X.; Xu, T.; Wu, J. Short-Term Power Prediction of Wind Turbine Applying Machine Learning and Digital Filter. Appl. Sci. 2023, 13, 1751. https://doi.org/10.3390/app13031751


Chicago/Turabian Style: Liu, Shujun, Yaocong Zhang, Xiaoze Du, Tong Xu, and Jiangbo Wu. 2023. "Short-Term Power Prediction of Wind Turbine Applying Machine Learning and Digital Filter" Applied Sciences 13, no. 3: 1751. https://doi.org/10.3390/app13031751
