TeleFE: A New Tool for the Tele-Assessment of Executive Functions in Children

Abstract

1. Introduction

1.1. Tele-Assessment of Cognitive Functioning in Children

1.2. Executive Functions Assessment

1.3. Aims and Scope

2. Materials and Methods

2.1. Participants

2.2. Procedure

2.3. Measures

2.4. Statistical Analysis

3. Results

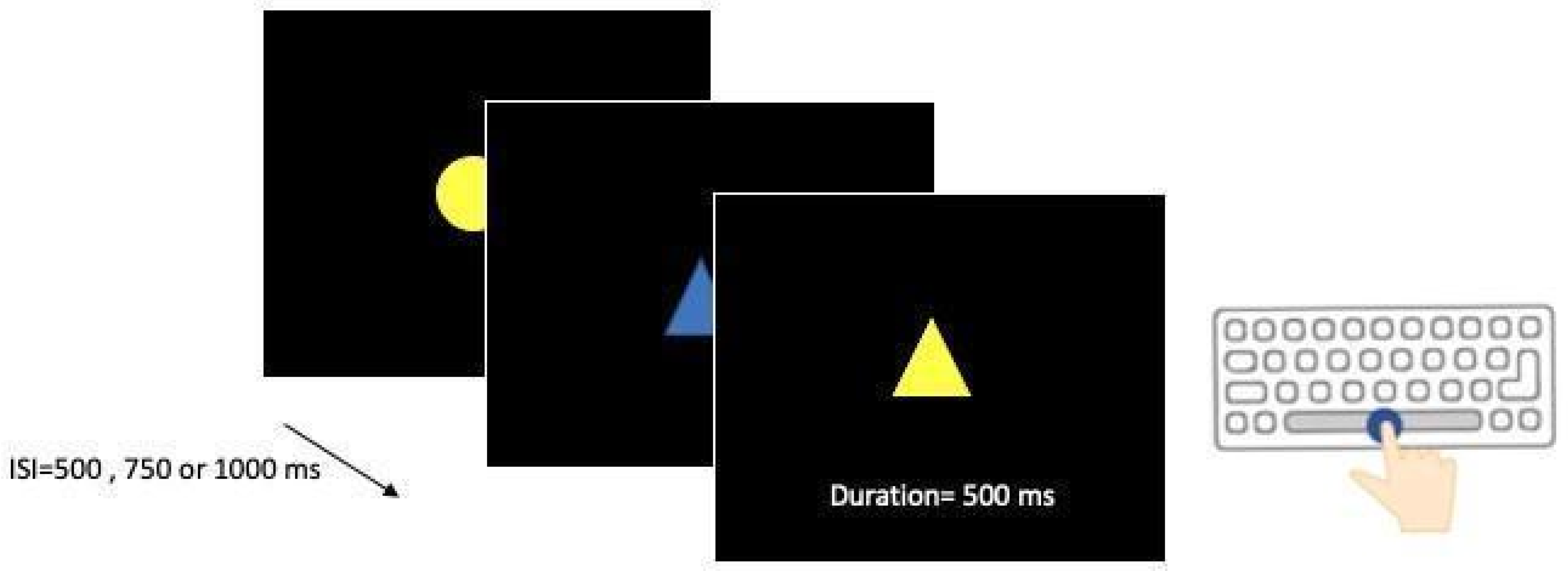

3.1. Basic Processes: Go/NoGo Task

3.2. Response Inhibition: Go/NoGo Task

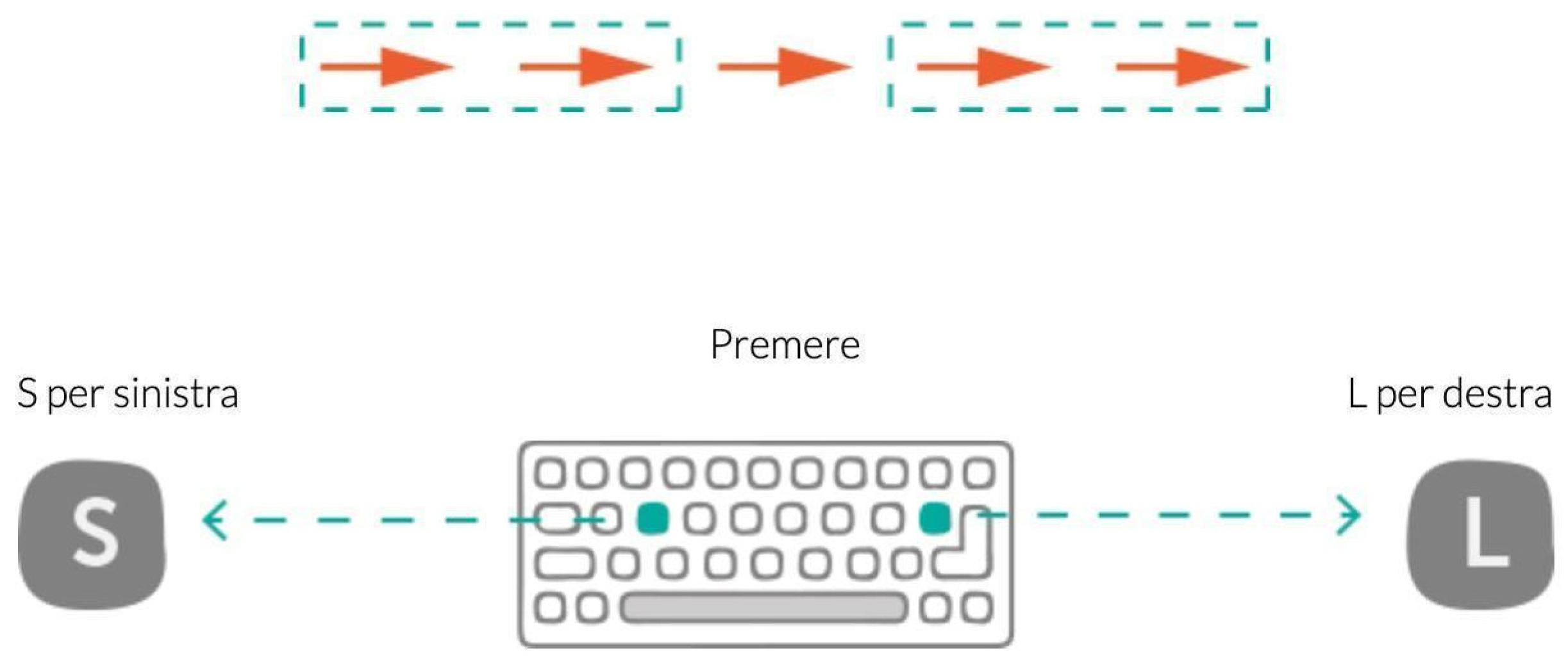

3.3. Interference Suppression: Flanker Task

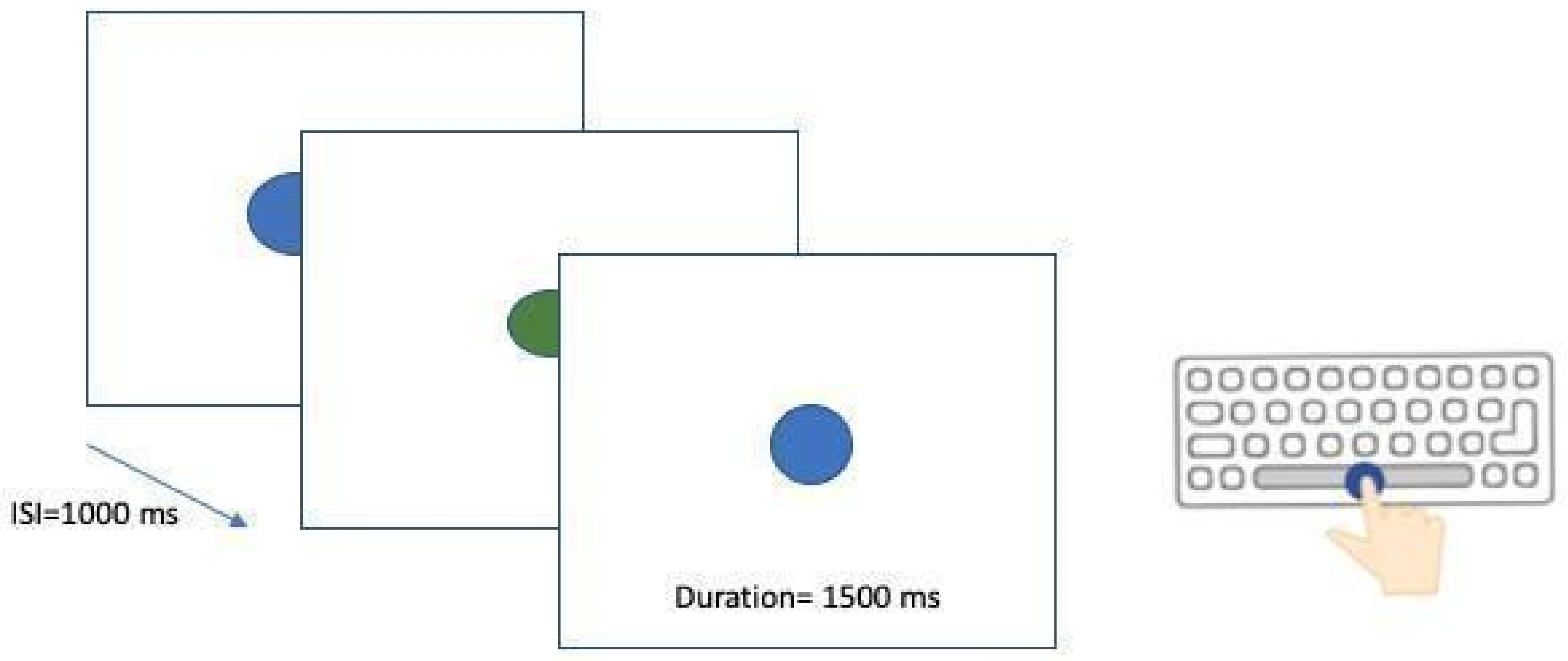

3.4. Updating: N-Back Task

3.5. Flexibility: Flanker Task

3.6. Daily Planning Test

3.7. Internal Consistency and Test-Retest Reliability
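Internal consistency is commonly summarized with Cronbach's alpha (Cronbach, 1951). For k items it is alpha = k/(k − 1) × (1 − sum of item variances / variance of the total score). This excerpt does not state which coefficient or item grouping TeleFE used. The following is therefore only a minimal sketch of the standard formula. The helper name `cronbach_alpha`, the items-by-participants input layout and the example scores are hypothetical.

```python
from statistics import pvariance
from typing import Sequence


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha; items[i][j] is participant j's score on item i (hypothetical layout)."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item must have one score per participant")
    # Total score per participant, summed across the k items.
    totals = [sum(item[j] for item in items) for j in range(n)]
    # Population variances throughout: the n vs. n - 1 factor cancels in the ratio.
    item_var_sum = sum(pvariance(item) for item in items)
    total_var = pvariance(totals)
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical item scores (3 items x 5 children), not TeleFE data.
example = [[3, 4, 2, 5, 4], [2, 4, 3, 5, 3], [3, 5, 2, 4, 4]]
print(f"alpha = {cronbach_alpha(example):.2f}")
```

Two limiting cases follow from the formula. Identical items give alpha = 1, because the total-score variance is k² times the single-item variance. Mutually uncorrelated items give alpha = 0, because the total-score variance then equals the sum of the item variances.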

3.8. Partial Correlations

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Measure | Class | N | Mean | SD | Min | Max | Skew | SE Skew | Kurt | SE Kurt | V.C. | Post hoc (p < 0.05) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Go CR | 1 | 70 | 128.06 | 9.88 | 100 | 140 | −1.37 | 0.29 | 1.16 | 0.57 | 0.08 | <4–8 |
| | 2 | 199 | 128.39 | 10.12 | 93 | 140 | −1.64 | 0.17 | 2.33 | 0.34 | 0.08 | <5–8 |
| | 3 | 220 | 131.33 | 8.26 | 98 | 140 | −1.70 | 0.16 | 2.97 | 0.33 | 0.06 | <4–8 |
| | 4 | 269 | 133.24 | 7.34 | 104 | 140 | −1.91 | 0.15 | 3.65 | 0.30 | 0.06 | >1, 3 |
| | 5 | 183 | 134.04 | 5.82 | 110 | 140 | −1.74 | 0.18 | 3.40 | 0.36 | 0.04 | >1–3 |
| | 6 | 88 | 135.52 | 4.36 | 121 | 140 | −1.41 | 0.26 | 1.47 | 0.51 | 0.03 | >1–3 |
| | 7 | 91 | 135.82 | 3.57 | 123 | 140 | −1.15 | 0.25 | 1.78 | 0.50 | 0.03 | >1–3 |
| | 8 | 89 | 136.19 | 4.70 | 118 | 140 | −1.96 | 0.26 | 4.30 | 0.51 | 0.03 | >1–3 |
| NoGo CR | 1 | 71 | 42.79 | 7.23 | 18 | 57 | −0.60 | 0.28 | 1.15 | 0.56 | 0.17 | <6–8 |
| | 2 | 203 | 41.37 | 8.84 | 18 | 59 | −0.75 | 0.17 | 0.01 | 0.34 | 0.21 | <4–8 |
| | 3 | 225 | 43.62 | 7.03 | 21 | 58 | −0.40 | 0.16 | −0.11 | 0.32 | 0.16 | <4–8 |
| | 4 | 275 | 45.91 | 6.97 | 24 | 59 | −0.66 | 0.15 | 0.03 | 0.29 | 0.15 | <7–8 |
| | 5 | 186 | 46.13 | 6.49 | 26 | 59 | −0.67 | 0.18 | 0.09 | 0.35 | 0.14 | <7–8 |
| | 6 | 91 | 47.87 | 6.18 | 33 | 59 | −0.57 | 0.25 | −0.35 | 0.50 | 0.13 | >1–3 |
| | 7 | 92 | 48.65 | 6.17 | 34 | 58 | −0.49 | 0.25 | −0.61 | 0.50 | 0.13 | >1–5 |
| | 8 | 90 | 50.17 | 6.09 | 32 | 59 | −1.12 | 0.25 | 1.13 | 0.50 | 0.12 | >1–5 |
| Go RT | 1 | 72 | 2135.54 | 211.70 | 1684 | 2603 | 0.24 | 0.28 | −0.38 | 0.56 | 0.10 | >3–8 |
| | 2 | 203 | 2015.38 | 208.53 | 1575 | 2556 | 0.38 | 0.17 | −0.32 | 0.34 | 0.10 | >4–8 |
| | 3 | 226 | 1921.12 | 189.02 | 1344 | 2422 | 0.20 | 0.16 | 0.21 | 0.32 | 0.10 | >4–8 |
| | 4 | 275 | 1814.62 | 187.77 | 1364 | 2320 | 0.38 | 0.15 | −0.19 | 0.29 | 0.10 | >5–8 |
| | 5 | 186 | 1751.87 | 186.35 | 1348 | 2310 | 0.54 | 0.18 | 0.24 | 0.35 | 0.11 | >7–8 |
| | 6 | 91 | 1684.89 | 151.58 | 1317 | 2040 | −0.06 | 0.25 | 0.06 | 0.50 | 0.09 | >8 |
| | 7 | 91 | 1644.63 | 143.13 | 1294 | 1954 | −0.13 | 0.25 | −0.54 | 0.50 | 0.09 | <1–5 |
| | 8 | 90 | 1575.43 | 147.55 | 1289 | 2049 | 0.66 | 0.25 | 0.49 | 0.50 | 0.09 | <1–6 |
| Congruent CR | 1 | 55 | 33.84 | 4.42 | 24 | 40 | −0.61 | 0.32 | −0.59 | 0.63 | 0.13 | <3–8 |
| | 2 | 171 | 35.01 | 4.40 | 24 | 40 | −0.84 | 0.19 | −0.29 | 0.37 | 0.13 | <3–8 |
| | 3 | 210 | 36.95 | 2.88 | 24 | 40 | −1.46 | 0.17 | 2.47 | 0.33 | 0.08 | <5–8 |
| | 4 | 264 | 37.78 | 2.85 | 27 | 40 | −1.97 | 0.15 | 3.52 | 0.30 | 0.08 | <7–8 |
| | 5 | 181 | 38.62 | 1.47 | 34 | 40 | −0.96 | 0.18 | 0.10 | 0.36 | 0.04 | >1–3 |
| | 6 | 86 | 38.98 | 1.26 | 35 | 40 | −1.38 | 0.26 | 1.48 | 0.51 | 0.03 | >1–3 |
| | 7 | 91 | 39.14 | 1.23 | 34 | 40 | −2.02 | 0.25 | 4.64 | 0.50 | 0.03 | >1–4 |
| | 8 | 87 | 39.30 | 0.93 | 36 | 40 | −1.26 | 0.26 | 1.09 | 0.51 | 0.02 | >1–4 |
| Incongruent CR | 1 | 55 | 34.24 | 6.67 | 13 | 40 | −1.59 | 0.32 | 2.16 | 0.63 | 0.19 | <5–8 |
| | 2 | 171 | 28.70 | 8.90 | 9 | 40 | −0.40 | 0.19 | −1.09 | 0.37 | 0.31 | <5–8 |
| | 3 | 216 | 32.13 | 7.33 | 4 | 40 | −1.17 | 0.17 | 0.72 | 0.33 | 0.23 | <5–8 |
| | 4 | 266 | 33.48 | 7.08 | 9 | 40 | −1.37 | 0.15 | 1.01 | 0.30 | 0.21 | <6–8 |
| | 5 | 184 | 35.43 | 5.20 | 14 | 40 | −1.85 | 0.18 | 3.18 | 0.36 | 0.15 | >1–3 |
| | 6 | 87 | 36.32 | 4.34 | 19 | 40 | −2.16 | 0.26 | 5.05 | 0.51 | 0.12 | >1–4 |
| | 7 | 89 | 38.12 | 2.15 | 30 | 40 | −1.69 | 0.26 | 3.01 | 0.51 | 0.06 | >1–4 |
| | 8 | 88 | 38.09 | 2.63 | 22 | 40 | −3.15 | 0.26 | 15.40 | 0.51 | 0.07 | >1–4 |
| Congruent RT | 1 | 55 | 1842.44 | 269.50 | 1216 | 2560 | 0.10 | 0.32 | 0.12 | 0.63 | 0.15 | >3–8 |
| | 2 | 171 | 1715.68 | 242.39 | 1118 | 2431 | 0.14 | 0.19 | 0.01 | 0.37 | 0.14 | >4–8 |
| | 3 | 215 | 1619.55 | 230.58 | 1099 | 2452 | 0.48 | 0.17 | 0.57 | 0.33 | 0.14 | >4–8 |
| | 4 | 266 | 1506.05 | 240.14 | 968 | 2389 | 0.54 | 0.15 | 0.44 | 0.30 | 0.16 | >5–8 |
| | 5 | 182 | 1400.63 | 254.71 | 814 | 2500 | 0.66 | 0.18 | 1.36 | 0.36 | 0.18 | >8 |
| | 6 | 86 | 1322.67 | 216.69 | 883 | 2221 | 0.82 | 0.26 | 2.62 | 0.51 | 0.16 | >8 |
| | 7 | 89 | 1303.29 | 247.49 | 911 | 2186 | 1.07 | 0.26 | 1.33 | 0.51 | 0.19 | <1–4 |
| | 8 | 87 | 1172.03 | 202.89 | 866 | 1743 | 0.83 | 0.26 | 0.28 | 0.51 | 0.17 | <1–7 |
| Incongruent RT | 1 | 55 | 2024.55 | 288.93 | 1230 | 2717 | −0.30 | 0.32 | 0.43 | 0.63 | 0.14 | >4–8 |
| | 2 | 171 | 1897.22 | 248.53 | 1208 | 2498 | −0.28 | 0.19 | −0.11 | 0.37 | 0.13 | >4–8 |
| | 3 | 216 | 1848.92 | 275.88 | 1169 | 2673 | 0.12 | 0.17 | −0.03 | 0.33 | 0.15 | >4–8 |
| | 4 | 268 | 1705.10 | 289.86 | 1023 | 2578 | 0.39 | 0.15 | 0.02 | 0.30 | 0.17 | >5–8 |
| | 5 | 186 | 1563.60 | 292.69 | 843 | 2404 | 0.21 | 0.18 | −0.18 | 0.35 | 0.19 | >7–8 |
| | 6 | 88 | 1453.17 | 226.50 | 964 | 2078 | 0.12 | 0.26 | −0.27 | 0.51 | 0.16 | >8 |
| | 7 | 91 | 1428.35 | 259.28 | 995 | 2246 | 0.74 | 0.25 | 0.32 | 0.50 | 0.18 | >8 |
| | 8 | 90 | 1263.11 | 230.78 | 855 | 1835 | 0.65 | 0.25 | −0.21 | 0.50 | 0.18 | <1–7 |
| 1-back | 1 | 66 | 136.88 | 10.93 | 102 | 154 | −1.18 | 0.29 | 1.96 | 0.58 | 0.08 | <3–8 |
| | 2 | 179 | 138.78 | 12.50 | 94 | 155 | −1.24 | 0.18 | 1.29 | 0.36 | 0.09 | <4–8 |
| | 3 | 230 | 143.49 | 9.32 | 105 | 154 | −1.92 | 0.16 | 4.36 | 0.32 | 0.06 | <4–8 |
| | 4 | 270 | 146.67 | 7.12 | 116 | 156 | −1.62 | 0.15 | 2.76 | 0.30 | 0.05 | <7–8 |
| | 5 | 188 | 148.64 | 4.93 | 126 | 155 | −1.37 | 0.18 | 2.27 | 0.35 | 0.03 | >1–3 |
| | 6 | 87 | 148.44 | 5.83 | 126 | 156 | −1.76 | 0.26 | 3.32 | 0.51 | 0.04 | >1–3 |
| | 7 | 91 | 148.44 | 6.71 | 115 | 156 | −2.57 | 0.25 | 8.10 | 0.50 | 0.05 | >1–4 |
| | 8 | 89 | 150.45 | 3.91 | 133 | 156 | −1.65 | 0.26 | 4.01 | 0.51 | 0.03 | >1–4 |
| 2-back | 1 | 64 | 117.80 | 7.83 | 96 | 137 | −0.55 | 0.30 | 0.80 | 0.59 | 0.07 | <4–8 |
| | 2 | 170 | 118.39 | 8.58 | 94 | 140 | −0.29 | 0.19 | 0.40 | 0.37 | 0.07 | <4–8 |
| | 3 | 224 | 121.45 | 9.29 | 96 | 144 | −0.46 | 0.16 | 0.22 | 0.32 | 0.08 | <4–8 |
| | 4 | 271 | 126.98 | 8.92 | 103 | 147 | −0.22 | 0.15 | −0.24 | 0.29 | 0.07 | <7–8 |
| | 5 | 187 | 128.39 | 7.93 | 106 | 148 | −0.11 | 0.18 | −0.08 | 0.35 | 0.06 | <8 |
| | 6 | 88 | 129.26 | 8.94 | 110 | 149 | 0.01 | 0.26 | −0.63 | 0.51 | 0.07 | >1–3 |
| | 7 | 90 | 130.62 | 8.71 | 103 | 154 | −0.12 | 0.25 | 0.77 | 0.50 | 0.07 | >1–4 |
| | 8 | 89 | 133.66 | 9.15 | 110 | 153 | −0.22 | 0.26 | −0.32 | 0.51 | 0.07 | >1–5 |
| Mixed Congruent CR | 1 | 46 | 26.83 | 3.36 | 20 | 32 | −0.55 | 0.35 | −0.51 | 0.69 | 0.13 | <5–8 |
| | 2 | 153 | 27.45 | 3.57 | 19 | 32 | −0.74 | 0.20 | −0.53 | 0.39 | 0.13 | <7–8 |
| | 3 | 201 | 28.10 | 3.07 | 19 | 32 | −0.97 | 0.17 | 0.42 | 0.34 | 0.11 | <5–8 |
| | 4 | 263 | 28.51 | 3.33 | 19 | 32 | −1.07 | 0.15 | 0.33 | 0.30 | 0.12 | <5, 7–8 |
| | 5 | 181 | 29.65 | 2.21 | 23 | 32 | −1.06 | 0.18 | 0.42 | 0.36 | 0.07 | >1, 3–4 |
| | 6 | 83 | 29.60 | 2.29 | 22 | 32 | −1.32 | 0.26 | 1.57 | 0.52 | 0.08 | >1, 3 |
| | 7 | 88 | 30.17 | 2.11 | 22 | 32 | −1.67 | 0.26 | 2.90 | 0.51 | 0.07 | >1–4 |
| | 8 | 87 | 30.76 | 1.32 | 26 | 32 | −1.22 | 0.26 | 1.40 | 0.51 | 0.04 | >1–4 |
| Mixed Incongruent CR | 1 | 46 | 15.85 | 4.97 | 2 | 29 | 0.06 | 0.35 | 0.80 | 0.69 | 0.31 | <5–8 |
| | 2 | 152 | 15.89 | 4.59 | 3 | 29 | −0.03 | 0.20 | 0.06 | 0.39 | 0.29 | <4–8 |
| | 3 | 201 | 18.94 | 4.74 | 7 | 31 | 0.07 | 0.17 | −0.39 | 0.34 | 0.25 | <4–8 |
| | 4 | 262 | 20.96 | 5.20 | 7 | 32 | −0.27 | 0.15 | −0.62 | 0.30 | 0.25 | <6–8 |
| | 5 | 183 | 22.43 | 4.92 | 8 | 32 | −0.51 | 0.18 | −0.26 | 0.36 | 0.22 | <7–8 |
| | 6 | 85 | 23.27 | 4.66 | 12 | 31 | −0.45 | 0.26 | −0.58 | 0.52 | 0.20 | >1–4 |
| | 7 | 90 | 25.17 | 4.00 | 13 | 32 | −0.86 | 0.25 | 1.00 | 0.50 | 0.16 | >1–5 |
| | 8 | 88 | 25.41 | 4.56 | 13 | 31 | −0.95 | 0.26 | 0.21 | 0.51 | 0.18 | >1–5 |
| Mixed Congruent RT | 1 | 45 | 1113.02 | 147.36 | 717 | 1361 | −0.65 | 0.35 | 0.36 | 0.69 | 0.13 | >6, 8 |
| | 2 | 153 | 1049.94 | 172.94 | 649 | 1499 | 0.22 | 0.20 | −0.40 | 0.39 | 0.16 | >8 |
| | 3 | 201 | 1051.84 | 164.63 | 573 | 1412 | −0.24 | 0.17 | −0.21 | 0.34 | 0.16 | >6, 8 |
| | 4 | 263 | 1030.94 | 163.86 | 559 | 1484 | −0.13 | 0.15 | −0.23 | 0.30 | 0.16 | >8 |
| | 5 | 184 | 997.37 | 183.77 | 467 | 1495 | −0.26 | 0.18 | 0.36 | 0.36 | 0.18 | - |
| | 6 | 85 | 969.01 | 149.37 | 602 | 1393 | 0.04 | 0.26 | 0.09 | 0.52 | 0.15 | <1, 3 |
| | 7 | 91 | 985.71 | 158.46 | 593 | 1363 | −0.16 | 0.25 | 0.12 | 0.50 | 0.16 | - |
| | 8 | 89 | 913.04 | 165.32 | 527 | 1395 | 0.08 | 0.26 | −0.06 | 0.51 | 0.18 | <1–4 |
| Mixed Incongruent RT | 1 | 45 | 1337.84 | 156.07 | 962 | 1692 | −0.45 | 0.35 | 0.45 | 0.69 | 0.12 | >8 |
| | 2 | 153 | 1244.58 | 211.46 | 675 | 1688 | −0.42 | 0.20 | −0.12 | 0.39 | 0.17 | >8 |
| | 3 | 200 | 1275.66 | 191.09 | 714 | 1644 | −0.63 | 0.17 | 0.26 | 0.34 | 0.15 | >5–8 |
| | 4 | 262 | 1258.15 | 180.18 | 744 | 1793 | −0.11 | 0.15 | 0.08 | 0.30 | 0.14 | >6–8 |
| | 5 | 184 | 1201.87 | 165.32 | 767 | 1597 | −0.13 | 0.18 | −0.32 | 0.36 | 0.14 | >8 |
| | 6 | 85 | 1179.25 | 149.66 | 773 | 1513 | −0.33 | 0.26 | 0.21 | 0.52 | 0.13 | <3–4 |
| | 7 | 91 | 1179.13 | 165.16 | 710 | 1547 | −0.23 | 0.25 | −0.20 | 0.50 | 0.14 | <3–4 |
| | 8 | 89 | 1089.13 | 175.09 | 575 | 1497 | −0.30 | 0.26 | 0.52 | 0.51 | 0.16 | <1–5 |
| DPT | 3 | 28 | 79.43 | 9.75 | 64 | 91 | −0.32 | 0.44 | −1.13 | 0.86 | 0.12 | <4, 6–8 |
| | 4 | 49 | 88.61 | 9.85 | 55 | 100 | −1.13 | 0.34 | 1.93 | 0.67 | 0.11 | >3 |
| | 5 | 29 | 88.52 | 6.76 | 73 | 100 | −0.03 | 0.43 | −0.25 | 0.85 | 0.08 | - |
| | 6 | 30 | 91.00 | 9.15 | 73 | 100 | −0.84 | 0.43 | −0.25 | 0.83 | 0.10 | >3 |
| | 7 | 24 | 90.25 | 9.16 | 73 | 100 | −0.63 | 0.47 | −0.58 | 0.92 | 0.10 | >3 |
| | 8 | 18 | 92.00 | 6.83 | 82 | 100 | −0.19 | 0.54 | −1.12 | 1.04 | 0.07 | >3 |
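The derived columns of the descriptive table can be cross-checked from N, Mean and SD alone. The sketch below makes two assumptions, both inferred from the reported values rather than stated in this excerpt:

- V.C. is the coefficient of variation (SD / Mean).
- The two SE columns use the usual standard-error formulas for sample skewness and excess kurtosis, as reported by packages such as SPSS.

The function names are hypothetical. For the Go CR row of Class 1 (N = 70, Mean = 128.06, SD = 9.88), these formulas give V.C. = 0.08, SE Skew = 0.29 and SE Kurt = 0.57 after rounding to two decimals.

```python
import math


def coefficient_of_variation(mean: float, sd: float) -> float:
    # Assumed meaning of "V.C.": standard deviation relative to the mean.
    return sd / mean


def se_skewness(n: int) -> float:
    # Standard error of the sample skewness for a sample of size n (n > 2).
    return math.sqrt(6 * n * (n - 1) / ((n - 2) * (n + 1) * (n + 3)))


def se_kurtosis(n: int) -> float:
    # Standard error of the sample excess kurtosis for a sample of size n (n > 3).
    return 2 * se_skewness(n) * math.sqrt((n * n - 1) / ((n - 3) * (n + 5)))


# (measure, class, N, mean, SD) taken from three rows of the descriptive table.
rows = [
    ("Go CR", 1, 70, 128.06, 9.88),
    ("Incongruent CR", 2, 171, 28.70, 8.90),
    ("DPT", 8, 18, 92.00, 6.83),
]

for measure, grade, n, mean, sd in rows:
    print(
        f"{measure}, class {grade}: V.C. = {coefficient_of_variation(mean, sd):.2f}, "
        f"SE skew = {se_skewness(n):.2f}, SE kurt = {se_kurtosis(n):.2f}"
    )
```

The two SE columns depend only on N. For that reason they are identical for every measure with the same group size, and they shrink as the group grows.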

| # | Measure | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | NoGo CR | — | | | | | | | | |
| | p | — | | | | | | | | |
| 2 | Incongruent CR | 0.23 | — | | | | | | | |
| | p | <0.001 | — | | | | | | | |
| 3 | Incongruent RT | 0.14 | −0.28 | — | | | | | | |
| | p | <0.001 | <0.001 | — | | | | | | |
| 4 | Mixed Incongruent CR | 0.22 | 0.42 | −0.30 | — | | | | | |
| | p | <0.001 | <0.001 | <0.001 | — | | | | | |
| 5 | Mixed Incongruent RT | 0.23 | 0.16 | 0.45 | −0.03 | — | | | | |
| | p | <0.001 | <0.001 | <0.001 | 0.385 | — | | | | |
| 6 | 1-back CR | 0.27 | 0.30 | −0.11 | 0.27 | 0.06 | — | | | |
| | p | <0.001 | <0.001 | <0.001 | <0.001 | 0.060 | — | | | |
| 7 | 2-back CR | 0.28 | 0.33 | −0.16 | 0.32 | 0.04 | 0.55 | — | | |
| | p | <0.001 | <0.001 | <0.001 | <0.001 | 0.154 | <0.001 | — | | |
| 8 | DPT | 0.16 | 0.28 | −0.20 | 0.17 | 0.07 | 0.17 | 0.17 | — | |
| | p | 0.039 | <0.001 | 0.008 | 0.032 | 0.343 | 0.031 | 0.031 | — | |
| 9 | QUFE Parents | 0.12 | 0.13 | −0.04 | 0.13 | −0.03 | 0.12 | 0.13 | 0.08 | — |
| | p | 0.003 | 0.003 | 0.319 | 0.003 | 0.534 | 0.006 | 0.002 | 0.332 | — |
| 10 | QUFE Teachers | 0.20 | 0.24 | 0.00 | 0.18 | 0.23 | 0.19 | 0.27 | 0.33 | 0.34 |
| | p | <0.001 | <0.001 | 0.919 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 |
| | Test-retest reliability | 0.43 | 0.38 | 0.64 | 0.52 | 0.67 | 0.42 | 0.61 | - | - |
| | p | 0.002 | 0.006 | <0.001 | <0.001 | <0.001 | 0.003 | <0.001 | | |
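The correlations above are reported under the heading Partial Correlations, but this excerpt does not say which variable was partialled out. The sketch below shows the first-order partial correlation for a single covariate z (age or school class would be a natural candidate, but that is an assumption):

r_xy·z = (r_xy − r_xz · r_yz) / √((1 − r_xz²)(1 − r_yz²))

It also checks the formula against the equivalent residual method: regress x and y on z and correlate the residuals. All function names and example scores are hypothetical, not TeleFE data.

```python
import math
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length samples."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_corr(x: Sequence[float], y: Sequence[float], z: Sequence[float]) -> float:
    """First-order partial correlation of x and y, controlling for z."""
    rxy, rxz, ryz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (rxy - rxz * ryz) / math.sqrt((1 - rxz ** 2) * (1 - ryz ** 2))


def residuals(v: Sequence[float], z: Sequence[float]) -> List[float]:
    """Residuals of an ordinary least-squares regression of v on z (with intercept)."""
    n = len(v)
    mv, mz = sum(v) / n, sum(z) / n
    slope = sum((a - mv) * (b - mz) for a, b in zip(v, z)) / sum((b - mz) ** 2 for b in z)
    return [a - (mv + slope * (b - mz)) for a, b in zip(v, z)]


# Hypothetical scores for six children; z stands in for the covariate.
z = [1, 2, 3, 4, 5, 6]
x = [2, 4, 5, 4, 5, 7]
y = [1, 3, 2, 5, 4, 6]

r_formula = partial_corr(x, y, z)
r_residual = pearson(residuals(x, z), residuals(y, z))
print(f"zero-order r = {pearson(x, y):.3f}, partial r = {r_formula:.3f}, via residuals = {r_residual:.3f}")
```

In this toy example, x and y are strongly correlated only because both rise with z. Once z is partialled out, their correlation drops close to zero. This is the kind of shared-covariate effect a partial correlation is meant to remove.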

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rivella, C.; Ruffini, C.; Bombonato, C.; Capodieci, A.; Frascari, A.; Marzocchi, G.M.; Mingozzi, A.; Pecini, C.; Traverso, L.; Usai, M.C.; et al. TeleFE: A New Tool for the Tele-Assessment of Executive Functions in Children. Appl. Sci. 2023, 13, 1728. https://doi.org/10.3390/app13031728


Chicago/Turabian Style: Rivella, Carlotta, Costanza Ruffini, Clara Bombonato, Agnese Capodieci, Andrea Frascari, Gian Marco Marzocchi, Alessandra Mingozzi, Chiara Pecini, Laura Traverso, Maria Carmen Usai, et al. 2023. "TeleFE: A New Tool for the Tele-Assessment of Executive Functions in Children." Applied Sciences 13, no. 3: 1728. https://doi.org/10.3390/app13031728

APA Style: Rivella, C., Ruffini, C., Bombonato, C., Capodieci, A., Frascari, A., Marzocchi, G. M., Mingozzi, A., Pecini, C., Traverso, L., Usai, M. C., & Viterbori, P. (2023). TeleFE: A New Tool for the Tele-Assessment of Executive Functions in Children. Applied Sciences, 13(3), 1728. https://doi.org/10.3390/app13031728